Abstract

Stated preference practitioners are increasingly relying on internet panels to gather data, but emerging evidence suggests potential limitations with respect to respondent and response quality in such panels. We identify groups of inattentive respondents who have failed to watch information videos provided in the survey to completion. Our results show that inattentive respondents have a higher cost sensitivity, are more likely to have a small scale parameter, and are more likely to ignore the non-cost attributes. These results are largely driven by respondents failing to watch the information video about the discrete choice experiment, attributes and levels, which underlines the importance of information provision and highlights possible implications of inattentiveness. We develop a modeling framework to simultaneously address preference, scale and attribute processing heterogeneity. We find that when we consider attribute non-attendance—scale differences disappear, which suggests that the type of heterogeneity detected in a model could be the result of un-modeled heterogeneity of a different kind. We discuss implications of our results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The purpose of many stated preference surveys is to derive willingness-to-pay or welfare measures to use in cost benefit analysis. Over the past two decades, stated preference practitioners have increasingly relied on internet panels to administer surveys and gather data (see Lindhjem and Navrud 2011b, for an overview). Respondents in such surveys are required to exert considerable effort to provide thoughtful and accurate answers to truthfully reveal their preferences. This has led several researchers to voice concerns regarding respondent and response quality in such panels (see e.g. Börger 2016; Börjesson and Fosgerau 2015; Gao et al. 2016b; Hess et al. 2013; Lindhjem and Navrud 2011a; Windle and Rolfe 2011; Baker et al. 2010; Heerwegh and Loosveldt 2008). These concerns range from speeding behavior and inattentiveness to respondents trying to qualify for panel membership or survey participation by acting dishonestly (fraudulently). These “low-quality” respondents have been identified through low-probability screening questions (Gao et al. 2016b; Jones et al. 2015b), “trap-questions” or “red herrings” (Baker and Downes-Le Guin 2007; Baker et al. 2010), and hidden speeding metrics (Börger 2016). Once identified, these respondents have commonly been excluded from the panel or analysis (Jones et al. 2015b; Baker et al. 2010). In this paper we argue that simply excluding these respondents from the sample is misguided and unnecessary,Footnote 1 and that inattentiveness or dishonesty can show itself as additional heterogeneity. As such, we make hypotheses regarding these respondents’ behavior and instead use models that are able to capture this.

We identify two main groups of respondents using a combination of information gathered by hidden speed metrics and follow-up questions. In the survey, we provided information about the good to be valued and the discrete choice experiment using two information videos. By measuring how long respondents stayed on the survey pages with the videos, we know that if they moved ahead with the survey in less time than it would take to watch the video, then they cannot have watched it to completion, and we classify them as inattentive. In the follow-up questions we asked respondents whether they watched the entire video to completion. By combining these two pieces of information we create a measure of dishonesty, or a willingness to deceive.Footnote 2 Inattentiveness is associated with speeding and dishonesty is likely associated with a fear of being disqualified from the survey coupled with social desirability bias. Since, the type of “trap question” used to identify dishonest respondents is distinctly different from those used in the literature to identify fraudulence, we provide a discussion of these approaches in Sect. 2. We hypothesize that inattentiveness is symptomatic of spending less effort throughout the survey. It is reasonable to assume that a respondent spending less effort also spends less time carefully deliberating on their choices. Indeed, looking at speeding behavior Börger (2016) found that respondents rushing through the survey had relatively more stochastic choices as seen from the econometrician’s point of view, which can partly be explained by less deliberation, and Gao et al. (2016b) find that respondents failing a trap question had larger variance in their willingness-to-pay estimates. Furthermore, when individuals face complex and difficult choices they tend to fall back on simplifying strategies and heuristics to reduce the cognitive burden of the choice and hence the effort necessary to make it (Gigerenzer and Gaissmaier 2011). One such strategy, which we test in this paper, is ignoring one or more of the attributes in the choice tasks, also known as attribute non-attendance (AN-A) (see e.g. Campbell et al. 2011; Hensher et al. 2005). We hypothesize that inattentive respondents are more likely to ignore attributes. For example, if you do not pay close attention throughout the survey, then it is possible that you also pay less attention to all of the attribute information provided in the choice tasks. Failing to consider the actual choice behavior of respondents can lead to biased estimates and wrong inferences drawn regarding preferences. Given this we identify and test the following two hypotheses: (i) inattentive respondents have a relatively more stochastic choice process, and (ii) inattentive respondents are more likely to ignore attributes on the choice cards. The majority of discussions relating to dishonest respondents deal with those who fraudulently tries to qualify for panel membership or survey participation (Baker et al. 2010). It is believed that these respondents are “in it for the money” or want their opinions heard (Baker et al. 2010), and as such they are a source of possibly lower quality answers. However, this is mostly related to ‘opt-in’ panels and surveys. In our case, where initial sampling to participate in the panel and survey was random, there is less information to form a priori hypotheses. That said, given the way we are identifying dishonest respondents in this paper—as a sub-group of inattentive respondents—we would expect that their behavior is either less or more extreme, relative to inattentive ones. As such we test the same hypotheses for dishonest respondents.

To test our hypotheses we develop a modeling framework that can jointly test both hypotheses. The model extends those used in Thiene et al. (2014) and Campbell et al. (2018) by using a mixed logit to capture preference heterogeneity, and Thiene et al. (2014) by inferring attribute non-attendance allowing for all possible AN-A patterns. The model allows us to capture possible scale differences and respondents ignoring attributes up to a probability. Our results show that inattentive respondents have a higher cost sensitivity, are more likely to have a small scale parameter, and are more likely to ignore the non-cost attributes. These results are largely driven by respondents failing to watch the information video about the discrete choice experiment, attributes and levels. While confirming previous results that inattention is linked to higher error-variance, we show that it might not be inattention in general that is the problem, but rather inattention at a crucial point in the survey, which led to a lack of information on how to complete the choice tasks. This suggests that proper information provision is crucial and can to some extent avoid respondents ignoring attributes. Furthermore, we find that inattentive respondents are more likely to attend to the cost attribute. This suggests a strategy involving focusing on the more familiar or self-explanatory attributes. In line with most results in the attribute non-attendance literature (see e.g. Colombo et al. 2013; Campbell et al. 2008; Hensher et al. 2005), we find that considering such behavior leads to substantially lower willingness-to-pay estimates.

The rest of the paper is outlined as follows: Section 2 provides background to the investigations undertaken in this paper, Sect. 3 outlines the theoretical framework, Sect. 4 introduces the empirical case study and data, Section 5 discusses the results and Sect. 6 concludes the paper.

2 Background

Internet panels are often divided into probability- and non-probability based, where the former recruits members using, for example, random digit dialing, while the latter uses recruitment campaigns, banner ads on web-pages and social media (Baker et al. 2010). While we use a probability based panel in this study, it is nonetheless important to recognize the steps taken to improve data quality in non-probability based internet panels since much of the research by both industry and academia deals with respondent and response quality in such panels (Baker et al. 2010). An initial concern relates to individuals who misrepresent themselves to qualify for either panel membership or survey participation. It is argued that these individuals are often members of multiple panels and seek to answer as many surveys as possible for the monetary reward. As such, they are neither interested in the topic of the survey nor to provide thoughtful answers. This can lead to lower data quality (Baker and Downes-Le Guin 2007). Furthermore, this suggests that non-probability panels might be plagued by self-selection issues to a larger extent relative to probability based panels where opting to be a member of the panel is not possible (see e.g. Lindhjem and Navrud 2011a, b, for a discussion). Self-selection issues aside, some survey companies and practitioners have included low-probability screening questions to filter out respondents who attempt to falsely qualify for survey participation (Baker et al. 2010; Jones et al. 2015b). These respondents are sometimes dropped in “real time” before even completing the survey, and if not, they are usually excluded prior to analysis (Baker and Downes-Le Guin 2007; Baker et al. 2010). A low-probability screening question is designed to be survey specific. For example, in a survey on food choice, the screening question can be of the form: “Please select the items you own/currently purchase from the list below”, where it is unlikely that someone would own/purchase more than one of the low-probability items on the list. Chandler and Paolacci (2017) find that people are indeed willing to lie to gain entry to surveys, but that they do not immediately perceive early questions as screening questions, and they do not try to strategically answer them unless it is clear that they are screening questions, for example, if the study is targeting a specific demographic. This type of fraudulent respondents is unlikely to be present in the current dataset for two main reasons: (i) we use data from a probability based internet panel (details in Sect. 4), and (ii) we used generic invitation e-mails when recruiting respondents to participate. That said, if respondents do perceive the follow-up questions as qualifiers, then they might have an incentive to be dishonest.

Researchers’ concern with inattentive respondents is often attributed to Krosnick (1991) and his ideas about survey satisficing behavior (Baker and Downes-Le Guin 2007). Respondents engaging in such behavior often have short completion times; non-differentiate on rating scales or straight-line, i.e always choose the same response in a battery of Likert scale questions; have high levels of item non-response; do “mental coin-flipping”; and choose a “don’t know” or opt-out response if available (Krosnick 1991; Baker and Downes-Le Guin 2007; Baker et al. 2010). This implies that respondents have not paid close attention to the questions and possibly given answers that are not a true reflection of their attitudes or preferences. The problem is that this might lead to lower quality data. In addition to the behaviors characterized as survey satisficing—a common way to identify inattentive respondents is to use trap questions (also called instructional manipulation checks (IMC), red herrings, validation-, and screening questions) (see e.g. Oppenheimer et al. 2009; Baker et al. 2010; Berinsky et al. 2014). Individuals failing these trap questions have not given a deliberately false answer, but missed a directive or answered incorrectly through carelessness. Questions of this type usually instruct respondents to give a particular response to a question or statement, for example, “For data quality purposes, please select ’strongly agree’ ” and can be placed in a battery of Likert scale questions (Baker et al. 2010), and sometimes respondents are even asked to ignore the response format (Oppenheimer et al. 2009). Failing a trap question like this is likely linked to survey satisficing and shorter response times (speeding), which is indicative of spending less effort in the survey. Some researchers suggest that respondents who fail trap questions and provide strong indications of survey satisficing behavior should be considered as candidates for deletion (Baker and Downes-Le Guin 2007; Heerwegh and Loosveldt 2008; Baker et al. 2010). For example, Heerwegh and Loosveldt (2008) excluded all respondents with completion times above 3 hours based on the likelihood that they were interrupted while completing the survey. However, they provided no additional information or justification for this choice. Downes-Le Guin et al. (2012) found that respondents who passed and failed the trap questions embedded in their survey showed no difference in terms of self-reported engagement scores, which suggests that the connection between inattentiveness and engagement might be weaker than initially thought.

Looking at the stated preference literature, fewer papers have been concerned with inattentive respondents. For example, Jones et al. (2015b) test whether inattentive respondents are more likely to violate the weak and strong axioms of revealed preference in a stated preference experiment on blueberries. Indeed, they find that respondents failing the screening metrics are more likely to violate the axioms of revealed preference, and that they provide answers that are less consistent. They suggest that these respondents are candidates for deletion in combination with additional metrics. In a different paper, Jones et al. (2015a) hypothesize that respondents who fail screening metrics are more likely to ignore attributes in the choice tasks and have a smaller scale parameter, i.e. a higher error variance. They test both independently and use an equality constrained latent class model to infer attribute non-attendance and a scaled mixed logit model to attempt to disentangle scale and preference heterogeneity. In both cases they find that removing inattentive respondents from the sample lead to better model fits. In the AN-A model they find that removing these respondents leads to a reduction in the percentage of respondents predicted to ignore all attributes, and the price attribute in particular. Running separate models on respondents passing and failing the screening metrics they find that respondents who fail have larger variance as measured by the scale parameter. While we test similar hypotheses, in this paper we use a state-of-the-art approach to identifying AN-A and estimate relative scale differences simultaneously, as well as retaining all respondents in the analysis. Gao et al. (2016b) use a single trap question to identify inattentive respondents and find that younger and low income respondents are more likely to fail the trap question, and suggest over-sampling this group to increase overall data quality. This finding is in line with Anduiza and Galais (2016) who find that respondents who pass and fail IMCs are significantly different on key demographic characteristics, and that respondents who fail are often younger, less educated and less motivated by the topic of the survey, which exacerbates their concern that eliminating these respondents could increase sample bias. Running separate models on respondents who fail and pass the trap question, Gao et al. (2016b) find that the model for the group that passed fit the data better and that the estimated willingness-to-pay had smaller variation. However, they do not recommend deletion of respondents who fail a single trap question, but that these respondents are candidates for deletion when seen in connection with other survey satisficing behaviors, e.g. speeding and straight-lining (Baker et al. 2010). In a different study Gao et al. (2016a), like Jones et al. (2015b), test the impact of inattentive respondents on adherence to the axioms of revealed preference. Running separate models for respondents who pass and fail the trap question, they find that respondents failing the question are more likely to violate the weak axiom of revealed preference, have higher mean willingness-to-pay and higher variance. They find that respondents failing the trap question are younger, have lower income, less likely to be Caucasian and less likely to have higher education. They do not recommend removal of these respondents since it can lead to sample bias, but like Gao et al. (2016b) they suggest over-sampling of respondents who fail the trap questions to increase overall data quality. Malone and Lusk (2018) use three different trap questions implemented in three different surveys that differ in complexity (difficulty). They find that the three questions trap different types of respondents, and that the most difficult trap question (a long text with directions) traps the most respondents. Interestingly, they find little connection between survey time and inattention and that in 2 of the surveys the mean completion time was was not different between the two groups of respondents. They find that estimated willingness-to-pay differ between respondents and that it could potentially bias policy relevant measures. They do not recommend deleting respondents who fail trap-questions, but rather support the idea of using an inattentive weighting scale as proposed by Berinsky et al. (2014), or give respondents who failed the question the option to revise their answer. If they still fail, they would be candidates for deletion.

3 Theoretical Framework and Model Specification

3.1 Background Notation

When analyzing discrete choice data we assume that respondents act according to random utility theory, and choose the alternative in each choice task that maximizes utility. This implies trading off between, and considering, all attributes and alternatives. We assume that a respondent’s utility can be described by one observable deterministic and one unobservable stochastic component. To introduce notation and develop the models, we assume that the utility of respondent n choosing alternative i in choice situation t can be expressed by Eq. 1:

where \(\beta \) is a vector of parameters to be estimated, \(x_{nit}\) is a vector of attributes and \(\varepsilon _{nit}\) is a type I extreme value distributed error term \(\pi ^2/6 s^2\), with \(var(\varepsilon _{nit}) = s^2\). Given these assumptions; the probability of the sequence of choices \(y_n = <i_{n1}, i_{n2},\ldots , i_{nT_n}>\) over the \(T_n\) choice situations faced by respondent n, can be expressed by the multinomial logit (MNL) model (McFadden 1974).

where \(\lambda \) is a scale parameter, which we assume equal to unity (an assumption we relax in the next section). While widely used, the MNL model assumes homogeneous preferences. We believe that this assumption is unlikely to hold and that it is better to allow for heterogeneous preferences by specifying distributions for the parameters. Let the joint density of the parameters \([\beta _{n1}, \beta _{n2},\ldots ,\beta _{nK}]\) be expressed as \(f(\theta _n|\Omega )\), where k indexes the attributes, \(\theta _n\) is a vector of random parameters and \(\Omega \) the parameters of the distributions. Then, we can write the unconditional probability as the integral over the logit formula for all possible values of \(\theta \). This model is often referred to as the mixed logit or random parameter logit model.

This integral does not have a closed form solution, but we approximate it using simulation. To test for the marginal impact of inattentiveness on the mean of the parameter distributions, we can specify \(\beta _n\) as: \(\beta _n = \bar{\beta } + \zeta d_{n} + \sigma \eta _n\), where \(\bar{\beta }\) is the mean, \(\zeta \) a vector of parameters to be estimated, \(d_n\) a vector of dummy variables indicating whether a respondent is inattentive, dishonest or both, \(\sigma \) the standard deviation and \(\eta _n\) a normally distributed variable.Footnote 3

3.2 The Latent Scale Class Model

In the standard models outlined above, the scale parameter \(\lambda \) and the vector of parameters \(\beta \) are not separately identified and the model estimates are in fact the product \(\lambda \beta \). Standard practice is to assume that the scale parameter is constant and equal to unity. However, if this assumption is violated, then we get confounded estimates of the preference part-worths (Swait and Louviere 1993). It is easy to see that this is the case given the inverse relationship between the scale parameter and error variance. For example, if the variance of the stochastic part of utility is large, then the scale parameter is small and the product \(\lambda \beta \) will be small. Assuming scale equal to unity implies that the error variance is equal across respondents and groups of respondents. One interpretation of scale, which we adopt here, is that it represents choice determinism, i.e. as error variance approaches infinity, scale limits to zero and choice resembles a purely random process as seen from the perspective of the econometrician (Swait and Louviere 1993; Magidson and Vermunt 2007). We hypothesize that inattentive respondents have a less deterministic choice process from the practitioner’s point of view, i.e. a smaller scale. The problem of scale heterogeneity can be particularly challenging in a latent class framework. Magidson and Vermunt (2007) point out that respondents with equal preference weights but different degrees of choice certainty might be incorrectly sorted into separate preference classes and suggest a scale extended (adjusted) latent class model, whereby subgroups within a class can differ with respect to choice certainty, i.e. error variance. Variations of the scale adjusted latent class model (Magidson and Vermunt 2007) have been successfully applied by e.g. Campbell et al. (2011) in the context of rural landscape improvement, Thiene et al. (2012) for forest conversion and biodiversity, Flynn et al. (2010) within health economics, and Mueller et al. (2010) in the context of wine labels.

We do recognize that subgroups of respondents might differ in scale, particularly that attentive and inattentive, as well as honest and dishonest, respondents have different error variances. However, our model departs from the traditional scale adjusted latent class model in two important ways: (1) we do not segment respondents into latent classes based on preferences, rather we segment them based on different scales and (2) we let preferences be described by a mixed logit model. This allow us to treat the scale parameter itself as latent and make probabilistic statements about the likelihood that a respondent belongs in a class with a relatively higher (lower) scale parameter. It explicitly allows us to test hypothesis 1 through the probability of being in a particular scale class. This implies that we assume a common underlying preference structure for both attentive and inattentive respondents, i.e. the mixed logit model , but allow them to differ in choice certainty. We operationalize this using an equality constraint on preferences. We call this model the latent scale class model. We write the unconditional (prior) probability that respondent n belongs to scale class s, \(s = <1, \ldots , S>\), as:

where \(\alpha _{s}\) is a class constant, \(\xi _s\) a vector of parameters to be estimated and \(d_n\) a vector of dummy variables indicating whether a respondent is classified as either inattentive, dishonest or both—with one parameter vector set to zero for identification. The unconditional choice probability can be expressed as in Eq. 5:

where \(\psi _{ns}\) is the unconditional probability relating to \(\mu _s\). We set scale equal to unity in one class for identification and use an exponential transformation to allow scale to be positive and freely estimated in the other classes, e.g. \(\mu _s = \exp (\lambda _s)\). Again the integral does not have an analytical solution, but is approximated using simulation. We recognize the contribution by Campbell et al. (2018) who also use a latent scale class model to accommodate different scales, however, our approach departs from theirs in that we assume that preference heterogeneity can be described by a mixed logit model as opposed to a latent class model.

3.3 Discrete Mixture Random Parameter Logit Model

A growing body of research shows that respondents in discrete choice experiments adopt a range of possible heuristics and simplifying strategies, for example ignoring one or more of the attributes in the choice tasks (Hensher et al. 2005; Hensher and Greene 2010; Campbell et al. 2011). This type of boundedly rational behavior is a deviation from random utility theory, and failing to take this into account can lead to wrong inferences with respect to preferences. We hypothesize that inattentive respondents have spent less effort on the choice tasks and as such are more likely to ignore attributes, a behavior known as attribute non-attendance (AN-A). To consider the full range of AN-A behavior we need to consider \(2^k\) possible combinations of attending to or ignoring attributes. We assume that non-attendance rates are independent across attributes and use a discrete mixture model to calculate the class probabilities (see e.g. Campbell et al. 2010; Hole 2011; Sandorf et al. 2017). Let the unconditional probability that respondent n attends to attribute k be denoted \(\pi ^1_{nk}\) and the probability that she ignores it \(\pi ^0_{nk} = 1 - \pi ^1_{nk}\). We estimate the probability of attendance according to Eq. 6:

where \(\alpha \) is a constant, \(\gamma \) a vector of parameters to be estimated and \(d_n\) a vector of dummy variables indicating inattentiveness or dishonesty. Letting \(k = \{1, 2, 3, 4\}\) index the attributes and \(q = <1, \ldots , Q>\) index all possible combinations of attending to or ignoring the attributes, we calculate the discrete mixing distribution (class membership probabilities) as follows:

We accommodate attribute non-attendance by restricting the parameter on the ignored attribute to zero in the likelihood function (Hensher et al. 2005). Specifically, we introduce a masking vector \(\delta _q\) taking on the value 1 for classes in which the corresponding value of \(\pi ^1_{nk}\) was used to determine class probability and 0 otherwise. Then we can write the unconditional choice probability as follows:

where \(\omega _{nq}\) is the unconditional probability relating to the masking vector \(\delta _q\) and \(\lambda \) assumed equal to unity. We allow preferences to follow continuous distributions, i.e. a mixed logit model, to minimize the risk of classifying respondents with low taste intensities as ignoring a particular attribute (Hess et al. 2013). The integral in Eq. 8 is approximated through simulation.

3.4 Latent Scale Class Discrete Mixture Random Parameter Logit Model

So far we have outlined models that consider two types of heterogeneity—either preference and scale heterogeneity, or preference and attribute processing heterogeneity. There is growing recognition that failing to appropriately consider different types of heterogeneity may bias estimates (Thiene et al. 2014; Campbell et al. 2018). Here we seek to develop a model that simultaneously disentangles preference, scale and attribute processing heterogeneity. Possibly the first paper to attempt to disentangle the three types of heterogeneity is Thiene et al. (2014). They use a finite mixture framework and allow for correlations between the different types of heterogeneity. Our model differ from theirs in three key aspects: (1) We use a mixed logit rather than a latent class model to capture preference heterogeneity, (2) we consider all possible combinations of attending to and ignoring attributes, and (3) we do not allow for correlations between the different types of heterogeneity. As such, we provide some extensions to their modeling approach. A recent contribution disentangling the three sources of heterogeneity is Campbell et al. (2018). Like Thiene et al. (2014), they use a latent class model to capture preference heterogeneity, but they do not consider attribute non-attendance—rather, they focus on a choice set generation heuristic that bear some similarities to elimination-by-aspects. As such—the methodological contribution of the present paper is to operationalize a model that allows for preference heterogeneity through a mixed logit model and the full \(2^k\) AN-A patterns.

Consider a respondent whose choice behavior is described by a class in which all but one attribute is ignored. Her choices are likely to be noisy since only the considered attribute is a predictor of choice. Hence, the product \(\lambda \beta \) will be small, however, her \(\beta \) might not be. Consequently, failing to consider scale heterogeneity in this model could lead to a possible downward bias in our estimates. We specify the probabilities of being in a particular scale- and AN-A class to be independent and functions of whether a given respondent is identified as inattentive or dishonest (i.e. Eqs. 4, 6).Footnote 4 By combining Eqs. 5 and 8 we get a model that allows us to consider all three types of heterogeneity: preference-, scale- and attribute processing. The unconditional choice probability can be expressed by Eq. 9:

This probability does not have closed form solution and hence we approximate it using simulation.

3.5 Conditional Estimates

We can use Bayes’ theorem to obtain the conditional class membership probabilities (Train 2009). These probabilities are conditional on a respondent’s sequence of choices, i.e. individual specific. We show the formula for calculating conditional probabilities from the attribute non-attendance models in Eq. 10. The approach for the latent scale class model is identical.

We can also obtain the conditional (i.e. individual specific) willingness-to-pay estimates using the same approach. Given our linear-in-the-parameters utility function we can define WTP as the negative ratio of the non-cost to the cost attribute, i.e. \(\upsilon = -{\beta _{k_{\mathrm {non\_cost}}}}/{\beta _{k_{\mathrm {cost}}}}\). Next, we can obtain the conditional WTP distributions by integrating over the distribution of WTP conditional on the sequence of choices and weighted by the conditional class probabilities as shown in Eq. 11. The mean of this distribution gives and indication as to where a given respondent lies on the willingness-to-pay distribution.

In the models that consider attribute non-attendance behavior we need to be aware that in the classes where either the non-cost or cost parameters were restricted to zero, WTP is not defined (i.e. in practice would be zero or infinity). We consider this explicitly by only calculating WTP for classes where both the non-cost and cost attributes were considered. Remember that we have \(2^4 = 16\) possible AN-A classes, and in half of these the parameter estimate on any attribute is restricted to zero. This leaves 4 classes where any given attribute and cost are considered. The willingness-to-pay for a non-cost attribute is then the weighted sum across these four classes, where the weights are the conditional probabilities of class membership as well as the conditional probability that the considered class is any of the four viable classes.

3.6 Model Estimation

All models are estimated in Ox v. 7.1 (Doornik 2009) using 1000 scrambled Halton draws (Bhat 2003). We ran each model multiple times with different starting vectors chosen at random to increase our certainty of reaching a global maximum, and selected the final models based on log-likelihood value and the AIC statistic. We assume that the estimated parameters for the size attributes follow log-normal distributions,Footnote 5 the parameters for the industry and habitat attributes follow normal distributions and the cost parameter follows a log-normal distribution with sign change. A log-normal distribution is by definition positive, and we force a sign change by multiplying the distribution by \(-\,1\). Since it is more appropriate to allow all parameters to follow distributions when inferring AN-A (Hess et al. 2013), we allow all parameters to follow distributions in all models to make clear the effect of adding additional layers of heterogeneity, i.e. scale and attribute non-attendance, on top of unobserved preference heterogeneity.

4 Empirical Case Study and Data

To test our hypotheses we use data from an online discrete choice experiment aimed at eliciting the Norwegian population’s preferences for increased cold-water coral protection (Sandorf et al. 2016).Footnote 6 These ecosystems are considered biodiversity hot-spots in the deep sea, and habitat for a large number of species (Fosså et al. 2002). Despite their apparent beneficial ecosystem services, they are under pressure from human activities, e.g. bottom trawling, hydrocarbon exploration and production, aquaculture and ocean dumping (Freiwald et al. 2004). In the DCE, each respondent made a sequence of 12 choices, each choice consisted of three alternatives described by four attributes. The selection of attributes was based on a review of the literature and expert interviews before the survey was tested in focus groups with experts and the general public (Aanesen et al. 2015). We used a Bayesian efficient design procedure where efficiency was determined based on minimizing the \(D_b\)-error (Scarpa and Rose 2008). The design was optimized for the multinomial logit model and updated based on pilot studies to obtain more precise priors. We show the attributes and corresponding levels in Table 1 and a sample choice card in Fig. 4.

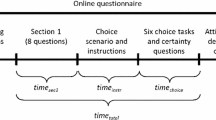

The survey took place in August 2014 and we used a sampling quota of 500 respondentsFootnote 7 from a probability based internet panel run by Norstat AS.Footnote 8 Respondents were sampled to be representative with respect to gender, age and geographic location. Invited respondents received a generic e-mail invitation to participate in a survey that would last 25 min and receive 50 reward points for participating.

In the invitation e-mail there was a link taking respondents to the beginning of the survey and a 9-min video presentation about cold-water corals. The video covered, among other things, current knowledge and status of the ecosystem, and protection legislation. Immediately following the video, respondents filled in the first part of the survey including some general questions about marine governance and a short quiz over the material covered (LaRiviere et al. 2014). Next, respondents watched a second video describing the discrete choice experiment, attributes and levels, before filling in the rest of the survey. We displayed each choice card on a separate screen and included a link on each page that would open a description of the attributes and levels in a separate browser window. This description contained the exact same information as covered in the video.

5 Results

5.1 The Identification of Inattentive and Dishonest Respondents

We identify inattentive and dishonest respondents using a combination of hidden speed metrics and follow-up questions. In Table 2 we show a summary of the definitions used and the size of the groups identified. Inattentive respondents are identified using hidden speed metrics. Specifically, we measured how long respondents stayed on the pages with the videos, and if they moved ahead with the survey in less time than it would take to watch the video to completion, we classify them as inattentive. Using this information we create three measures of inattentiveness: (i) move forward without watching the first video to completion, (ii) move forward without watching the second video to completion, and (iii) move forward without watching either video to completion. As such the final measure is stricter in terms of identifying inattentive respondents. The last two questions in the survey read: “Did you watch the entire video about cold-water corals?” and “Did you watch the entire video about the discrete choice experiment?”Footnote 9 Combining this information with the data on whether a respondent stayed on the pages with the videos long enough to have watched them, i.e. our measures of inattentiveness, we identify respondents giving a clearly incorrect answer to these follow-up questions. For example, a respondent who hasn’t watched video 1 might state that he did. We label these respondents dishonest. If a respondent perceives this question as a “qualifier” for survey completion and consequently compensation, then he would want to lie; or it could be a form of social desirability at play in that he wants to state that he did what was asked of him even though he did not.

In Table 3 we show summary statistics for all groups of respondents across relevant socio-demographic characteristics. We see that, on average, more men tended to not watch the videos to completion, but that when it comes to lying about it on the follow-up questions, there are no significant differences between men and women. In general, there are no differences between the groups in terms of age, except for weak significance at the 10 percent level between A2 and I2. Furthermore, we see that across all groupings we have significantly fewer respondents with higher education among those identified as either inattentive or dishonest, but that this does not translate into significantly different distributions of income. As part of the survey, after watching the first video, respondents filled in a multiple choice quiz with eight questions over the material covered in the video. We see that across all groupings, respondents classified as either inattentive or dishonest have fewer correct answers (one on the median).Footnote 10 However, how well a respondent did on the quiz is only weakly correlated with education (Table 4). Taking a closer look at the completion times we see that across all groupings, inattentive and dishonest respondents spend less time on both the survey as a whole and on the choice cards. The large difference for the survey as a whole is likely caused by the length of the videos, and the difference on the choice cards may be caused by additional speeding behavior.Footnote 11

In Table 4 we show the correlations between our measures of inattentiveness and dishonesty, and education; quiz score; and time spent on the survey and choice tasks. Perhaps of most interest are the measures entering the same models, i.e. I1 and I2, D1 and D2, and I and D. We see that the correlation between these variables range from 0.54 to 0.66, which suggests that they are moderately positively correlated. Furthermore, we see that there is a negative correlation between inattentiveness and score on the quiz, and that it is stronger for first video compared to the second video. This is expected since the first video came before the quiz and included all necessary information to obtain a perfect score. This is in line with the results reported in Table 3. Given that cold-water corals are unfamiliar to most people, prior knowledge about them is unlikely. We note that the correlation between dishonesty and the quiz score is weaker, which is most likely caused by dishonesty being a subset of inattentiveness (as defined here). Furthermore we note that the relationships between education and the measures of inattentiveness and dishonesty are weak and negative. This suggests that while there are differences between the groups in terms of education, the correlation is rather weak.

The preceding investigation into the measures of inattentiveness and dishonesty shows that they are linked to speeding behavior and to education, but that the correlations are relatively weak. While inattentive and dishonest respondents are faster, our measures are different from that of pure speeding behavior. In the following we present three sets of models using disaggregate and separate measures of inattentiveness and dishonesty, and models using aggregate measures in the same modeling framework. This allows us to explore the effect and impact of these respondents.

5.2 Estimation Results

In Table 5 we show the first set of models based on the definitions of inattentiveness outlined in Table 2. The models increase in complexity from left to right, where each new model adds a new layer. We start with the random parameter logit model (RPL, Eq. 3), which serves as a reference for comparison. Next, we introduce observed heterogeneity in the mean by adding interaction terms for inattentiveness. Third, we allow for possible differences in error variance using a latent scale class (LSC) model where we accommodate preference heterogeneity using an RPL model (Eq. 5). Fourth, we use a discrete mixture random parameter logit (DM-RPL) model to uncover attribute non-attendance (Eq. 8), and finally, we combine the two models to simultaneously capture preference, scale and processing heterogeneity in a model we call the latent scale class discrete mixture random parameter logit model (LSC-DM-RPL, Eq. 9).

From the RPL model, we see that, on average, people prefer increased protection of cold-water coral and that there is considerable heterogeneity in preferences as evident by the significant standard deviations. Interestingly, we observe that on average people do not prefer to protect areas that are important for the oil- and gas industry, but that they do prefer to protect areas that are important for the fisheries. Again we observe a large degree of preference heterogeneity. This result has been discussed in detail by Aanesen et al. (2015) and Sandorf et al. (2016), and is possibly a result of information provided in the videos, where respondents learned that the largest threat to cold-water corals is bottom trawling. Lastly, it seems that people care most about protecting reefs that are important habitat for fish.

In this paper we are concerned with the impact of inattentive respondents.Footnote 12 Our definitions of inattentiveness relate to whether or not a respondent watched either, both or none of the information videos to completion. The first video concerned information about the environmental good and the second—the discrete choice experiment. This allow us to explore whether inattention to different pieces of information affects results. In the second model we have interacted our measures with the means of the preference distributions to explore how our measures affect marginal utility. This model fits the data better, but the BIC statistic, which penalizes additional parameters heavily, suggests that the gain in fit does not outweigh the negative impact of estimating an additional 12 parameters. Nevertheless, we do gain some insights, the most important of which is that respondents who failed to watch the entire video about the discrete choice experiment have higher cost sensitivity, and a lower mean marginal utility of the size and habitat attributes. This indicates that these individuals are choosing alternatives that are cheaper or the status quo. Relatedly, not having received information about the attributes might lead to a focus on “self-explanatory” attributes such as cost, which translates into a higher sensitivity for this attribute. Furthermore, this result is supported by the attribute non-attendance models, which show that the probability of attendance is slightly higher for those that failed to watch the second video to completion. The evidence for respondents failing to watch the entire video about the environmental good is mixed, but they appear to prefer a small increase in protection and they have lower mean marginal utility for the industry and habitat attributes.

In Tables 7 and 8 in “Appendix B”, we show models estimated using our definition of “dishonesty” and the strictest definitions of inattentiveness and “dishonesty”, respectively. Looking at the RPL with observed heterogeneity reported in Table 7, we see that respondents who stated that they watched the first video when they did not have a higher cost sensitivity, and lower mean marginal utility for the habitat attribute. The latter effect is stronger for those stating that they watched the second video, when in fact they didn’t. Interestingly, it suggests that these “dishonest” individuals are somewhat different to those that are purely inattentive. Turning our attention to the same model reported in Table 8, we see that a similar pattern holds, but that the results are, to a large extent, driven by the strict definition of inattentiveness. This supports the results reported above, that it is not necessarily inattentiveness to, or unfamiliarity with, the environmental good that drives results, but rather when it relates to the discrete choice experiment itself.

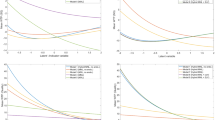

We hypothesized that inattentive respondents might have a less deterministic choice process as seen from the econometrician’s point of view. To test this we developed a latent scale class model, which allow us to make probabilistic statements about the likelihood that a given respondent is in a class with a relatively smaller or larger scale parameter. This model is similar to a latent class model, but we do not classify respondents based on different preferences (in fact we have imposed an equality constraint across classes), but rather based on different scale parameters. We see from Table 5 that the model allowing for different scale parameters fit the data better than the standard RPL model, and that at 4 additional parameters both the AIC and BIC statistic support this. In our preferred model we operate with two latent scale classes.Footnote 13 First, we note that the signs and significance of the estimates remain largely the same, but that several are larger compared to the RPL model. That said, any direct comparison is not possible due to different scaling of the models, but it is worth noting that considering scale differences within the model does lead to larger estimates. This is expected since we are separating out individuals with a very small scale parameter. In Scale Class (SC) 1, we have normalized the scale parameter to unity and in SC 2 we used an exponential transformation and let it be freely estimated. We are making the reader aware that the estimate for the scale parameter reported in Table 5 is the transformed estimate. The estimated scale parameter in SC 2 is 0.0726 and is significantly different from both zero and one,Footnote 14 which implies that the two classes describe different degrees of choice certainty and that SC 2 is characterized by an almost random choice process as seen from the econometrician’s point of view. This is in line with the results presented by Gao et al. (2016b) and Jones et al. (2015a). As the scale parameter approaches zero, the probability of choosing an alternative approaches 1 / J, where J is the number of alternatives, which implies that the probability of choosing any alternative is equal. Rather than reporting the covariates of the class probability function, we report the unconditional probabilities directly. On average, people are unlikely to be in SC2, but that people who failed to watch the first or second video are about twice as likely as those watched both, and those that failed to watch either to completion are about four times as likely as those who watched both with a predicted class probability of 40 %. Again, we see this result reflected in the models reported in Tables 7 and 8, where what appears to be driving the results are lying about watching the first video and inattentiveness. It is less clear now whether it is failure to watch the first or second video that drives results, but we note that the unconditional probability of being in SC2 is slightly higher for those failing to watch the video about the discrete choice experiment. This indicates that having less information is linked to a more stochastic choice process as seen from the econometrician’s point of view. Taken together this evidence suggests that inattentiveness, measured as not watching the videos, drives the results, with the effect of failing to watch the second video to completion being slightly higher. In Fig. 1a we have plotted the empirical distribution of conditional class membership probabilities for each of the groups. We see that those who did not watch one of the videos to completion are more likely to be in SC 2, but that those who watched neither to completion are much more likely to be in this class.

We hypothesized that inattentive respondents have put less effort into the choice tasks and as such are more likely to ignore one or more of the attributes. We use a combination of discrete and continuous mixture models to uncover the full set of possible AN-A strategies while considering preference heterogeneity using a RPL model. In Table 5 we see that the DM-RPL model represents a large improvement in model fit (186 log likelihood units), and that at 12 additional parameters this is supported by both the AIC and BIC statistic. Considering AN-A behavior leads to changes in some parameters compared to the RPL model, however, we note that a direct comparison of parameter estimates is inappropriate. Nonetheless, a few points are worth discussing. The standard deviation for size—small and the mean for industry—fisheries are now insignificant. This suggests that the heterogeneity we observed with regard to size—small was in part caused by respondents ignoring the attribute. On average, people are indifferent to protect areas that are important for the fisheries, but we do observe large heterogeneity associated with this attribute. An interesting observation is that the means of the parameter distributions are relatively larger than the corresponding standard deviations, except for the industry attributes, which was not the case in the RPL model. This suggests that there is a potential confounding between preference- and attribute processing heterogeneity (Hess et al. 2013).

In Table 5 we report the unconditional probabilities of attendance and the corresponding standard errors rather than the covariates of the probability functions. What we see is that inattentive respondents, by any measure, are less likely to attend to the non-cost attributes, but that they are more likely to attend to the cost attribute. We see that respondents failing to watch the video about the discrete choice experiment, where the attributes and levels were explained, are much less likely to attend to the non-cost attributes, with the exception of the industry attribute, and more likely to attend to the cost attribute. The results are strongest for those that failed to watch both videos to completion. Again, this underlines the importance of providing proper information about the attributes. These results suggest that respondents who lack such information are more likely to ignore attributes. If we look at the sensitivity analyses reported in Tables 7 and 8, we see that the results are somewhat similar with the drivers being lying about watching the first video and inattentiveness in the form of failing to watch both to completion. As such, our investigations provide partial support for the hypothesis that inattentive respondents are more likely to ignore attributes.

In Fig. 2 we have plotted the distributions of conditional probabilities of attendance for each attribute.Footnote 15 We see that inattentive respondents are less likely to attend to the non-cost attributes and more likely to attend to the cost attribute with those failing to watch both videos to completion much less likely to attend to the non-cost attributes. While the rates of attendance vary by sub-group, it is reassuring that irrespective of what group a respondent belongs to—they appear to attend to the cost attribute. The fact that inattentive respondents are more likely to attend to this attribute suggests that they focus on an attribute that might be familiar or self-explanatory, which supports the observations in the RPL model with observed heterogeneity. Given that inattentive respondents have not watched the entire information video about cold-water corals and/or the DCE and that they are less likely to have higher education, it seems a reasonable strategy to focus on an attribute that is familiar and self-explanatory.

In the last column of Table 5, we report the results from the model that simultaneously uncovers all three sources of heterogeneity. We see that the preference distributions are relatively similar to those uncovered in the other models, and we will not reiterate these results in detail. First, let us discuss the latent scale class model. We see that the estimated scale parameter is relatively large, which would indicate a relatively more deterministic choice process as seen from the econometricians point of view, however, the estimated parameter is not significantly different from 1. This indicates that both scale classes represent the same level of choice determinism. This is also evident by the fact that everyone is predicted to be in the same scale class.Footnote 16 In Fig. 1b we have plotted the distribution of conditional scale class probabilities by inatentiveness. What this indicates is that considering attribute non-attendance mitigates the observed scale differences between subgroups of respondents. This conjecture is further supported by the small increase in model fit. A likelihood ratio test fails to reject the null hypothesis that the final model has the same explanatory power as the attribute non-attendance model. This result is potentially important since it points to one possible source of scale heterogeneity. That said, the more heterogeneity we try to capture with our models, the more closely we tailor it to the data. We note that the predicted unconditional probabilities of attendance remain comparable across models. These results are mirrored in the models reported in Tables 7 and 8. In Fig. 3, we have plotted the distributions of conditional probabilities of attending to the attributes and they show the same pattern of results.

5.3 Willingness-to-Pay

In this section we take a closer look at the willingness-to-pay derived from each of the five models.Footnote 17 Rather than focusing on the unconditional estimates, we derive WTP conditional on a respondent’s sequence of choices. These conditional, i.e. individual specific, estimates gives an indication as to where the respondent lies on the willingness-to-pay distribution. In Table 6 we report the .05, .50, and .95 percentiles and the mean of the means of the conditional distributions. We calculate separate descriptive statistics of the conditional WTP estimates and report them separately for the various inattentiveness measures.

First, we see that the estimates from the RPL and RPL with observed heterogeneity are rather large and arguably unreasonable. The mean estimates are far above the highest cost level used in the discrete choice experiment and the .05 and .95 percentiles suggests a rather large spread. Although the mean is large, the median estimates are more in line with expectations, which suggests that the estimates are affected by distributional assumptions and outliers (more on this later). We see that in general inattentive respondents have lower WTP. This is expected given the evidence that inattentive respondents have a higher sensitivity to cost. Turning our attention to the LSC model, we see that the means, median and spread are even larger. This result is likely caused by the larger standard deviation of the cost parameter. When we consider respondents ignoring attributes in the choice tasks we observe large changes to willingness-to-pay. We see that they become substantially smaller and in line with expectations. The means of the WTP distributions are now also within the ranges given by the cost attribute. This result is consistent with the majority of papers exploring attribute non-attendance (see e.g. Hensher et al. 2005; Campbell et al. 2010). We observe only small changes in WTP between the DM-RPL and LSC-DM-RPL models, however, this is not surprising given how similar the models were. Turning our attention to the differences between attentive and inattentive respondents we see that, on average, inattentive respondents have lower WTP and narrower distributions of the conditional means compared to attentive ones. This is potentially connected to inattentive respondents’ greater propensity to ignore one or more of the attributes in the choice tasks as well as a higher cost sensitivity.

It is apparent that the estimates from the RPL, RPL with heterogeneity and the LSC are rather large and arguably unreasonable. We propose 3 possible reasons why these willingness-to-pay estimates are inflated. First, when calculating WTP from a random parameters logit model, the distribution of willingness-to-pay is the ratio of two independent distributions. Other authors show that this could lead to questionable signs and magnitudes of the resulting ratio distribution (Hensher and Greene 2011). One likely culprit is the choice of a log-normal distribution for the cost parameter. While the log-normal distribution is appealing from a statistical standpoint [i.e. existing moments of the willingness-to-pay distribution (Daly et al. 2012)] and a behavioral standpoint (all respondents have a negative sign for the cost coefficient), it can have support close to zero, which leads to rather large WTP estimates. In addition, both the distribution in the numerator and denominator are unbounded—potentially leading to large outliers. In this data, both of these issues are present. Indeed, from Table 5 we see that the small and significant estimate of the mean of the underlying normal distribution for the cost parameter combined with a large standard deviation does suggest support close to zero. Looking at the WTP estimates themselves, we see that the mean estimates from the RPL model are relatively large, but the median estimates are substantially smaller and more reasonable. Taken together with a visual inspection of the WTP distributions, it is reasonable to conclude that these factors contribute to the inflated estimates. Second, it can be the result of respondents ignoring one or more of the attributes. In fact we see that once we consider respondents ignoring attributes, the mean of the underlying normal distribution for cost increase by an order of magnitude suggesting that a smaller part of the cost distribution is close to zero. This is reflected in the willingness-to-pay estimates from the DM-RPL model, which are not only substantially smaller, but more reasonable. It also underlines the importance of considering the potential simplifying strategies that respondents use when participating in discrete choice experiments. This result conforms to the majority of findings in the literature, which suggests that considering attribute non-attendance leads to smaller WTP estimates (see e.g. Hensher et al. 2005; Campbell et al. 2010). A third point related to the LSC model is that when we consider potential scale differences between subgroups of respondents, the relative relationship between the mean and standard deviations of some attributes change. For example, in the LSC model, the standard deviation of cost becomes relatively larger compared to, for example, the RPL model. This indicates a larger variation in the denominator of the WTP distribution. Taken together with the relatively larger means in the distributions describing non-cost attributes—this can potentially explain the wider spread of conditional WTP.

6 Conclusion

In this paper we use data from an internet panel discrete choice experiment aimed at eliciting preferences for increased cold-water coral protection in Norway (Aanesen et al. 2015; Sandorf et al. 2016). We identified a group of inattentive respondents—respondents who failed to watch information videos about the environmental good and the discrete choice experiment to completion. Given recent interest in inattentive respondents, and the growing concerns that these respondents spend less effort answering the survey, we test two hypotheses in the context of a discrete choice experiment: inattentive respondents (a) have less deterministic choices as seen from the econometrician’s point of view, and (b) are more likely to ignore attributes in the choice tasks. We test both hypotheses separately and jointly in a modeling framework that can simultaneously address preference, scale and attribute processing heterogeneity.

We find partial support for both hypotheses. First, we find that inattentive respondents are more likely to be in a scale class characterized by a relatively smaller scale parameter, and they are more likely to ignore the non-cost attributes. This is similar to what was found by Jones et al. (2015b), however, their results were based on tests on different samples where inattentive respondents were excluded. That said, when we simultaneously test our hypotheses the differences in scale disappear. This suggests that what we observed in terms of scale heterogeneity between the groups of respondents was in fact non-modeled differences in the propensity to ignore attributes. This points to a possible source of differences in error variance between respondents and shows that failing to address sources of heterogeneity might lead the researcher to make the wrong inferences. This argument was also made by Campbell et al. (2018). Turning our attention to the willingness-to-pay estimates, we find that, in general, inattentive respondents have lower mean willingness-to-pay, which is driven by a higher cost sensitivity. This is in contrast to Gao et al. (2016b) who reported higher WTP. It is possible that this difference is caused by the identification of inattentiveness, which in our case is inattentiveness to crucial information in the discrete choice experiment. Furthermore, we find that considering AN-A leads to substantially lower WTP estimates that are more in line with expectations and means that are within the range of the cost attribute.

Interestingly, defining inattentiveness as failing to watch the video about the environmental good or the video about the discrete choice experiment reveals a potentially important result. It appears the differences in cost sensitivity, scale class membership and ignoring attributes are driven by a failure to watch the video about the discrete choice experiment where the attributes and levels are described. This suggests a focus on a familiar and self-explanatory attribute when making their choices. It also suggests that a failure to watch this video leads to uncertainty about how to complete the choice tasks, which explains the relatively more stochastic choice process as seen from a practitioner’s point of view. Crucially, this result suggests that stated preference practitioners need to pay close attention to information provision, and especially try to make sure that respondents understand how to answer the choice tasks. Furthermore, these results also emphasize the importance of including time-stamps in our surveys. In addition to being able to detect pure speeding behavior, we can use them as proxies for whether or not a respondent have received all the necessary information. Even in the cases where information is presented as text—time stamps can provide information on whether it is likely that respondents have read it. Moving forward, testing hypotheses in this direction might provide fruitful. In this data it appears that neither receiving information about the good to be valued nor the results of the quiz over this information was strongly correlated with failure to watching the second video. Future research can consider to include instrumental checks, e.g. a quiz or trial choice task, can help gauge whether or not a respondent understands how to answer a choice task. This will allow for a cleaner test of the results obtained in this paper.

Notes

Eliminating these respondents leads to a loss of the random sampling properties and can make out-of-sample aggregation invalid. Furthermore, choices made by respondents who missed a directive or failed a follow-up question still represents a choice and an expression of preferences.

We recognize that including inattentiveness or dishonesty in this manner may lead to endogeneity bias, however, we believe it is of interest to see how being classified as inattentive or dishonest might influence the mean of the preference distribution.

If these two probabilities are not independent, then the probability of being in a particular scale- and AN-A class is not the product of the two. Specifying both probabilities to be functions of inattentiveness might lead to poorer model fit, however, it does make the model more tractable and it is easier to interpret the key aspects of interest.

We also tried using normal distributions for these parameters, but this led to unreasonably large estimates for some attributes and numerical instability in the final models. This could be the result of an identification problem since the normal distribution covers zero. Consider a respondent that either ignores the attribute or is just indifferent. This respondent could fall into classes where the value is zero and where it is non-zero, but overlaps zero.

In total, 3462 potential respondents received the invitation to participate, 761 began the survey and 500 completed the survey.

Norstat AS was founded in 2003 and has the largest internet panel in Norway with 80,000 registered members. Panel members are recruited in two ways: (i) after a phone interview where respondents were recruited by random digit dialing (RDD) or (ii) RDD specifically to recruit members to the panel. Sampling to participate in any given survey once a person is a member of the panel depends on the survey. Respondents are compensated in points based on the length and type of survey. These points can be exchanged for gift cards or donated to charity. For example, 200 reward points can be exchanged for a NOK 200 gift certificate. This level of compensation is common for this type of survey in Norway.

We put these two questions at the very end so that they would not influence other results.

Sandorf et al. (2016) also discuss the connection between the quiz and watching the videos.

We are using Winsorized means to avoid the influence of extreme outliers in the distribution of time spent on the survey and the choice tasks. For example, Winsorized at the 90th percentile means that any value above the 90th is set to the 90th. If we include all observations, the difference between the groups is insignificant and in some cases opposite. This is purely driven by more people with extreme completion times (several days) in the groups identified as inattentive or dishonest.

We report the models for dishonesty in “Appendix B” and make reference to these models where appropriate.

During our model search we found that moving beyond two classes led to only marginal increase in the log-likelihood value and was not supported by the AIC or BIC statistics. Furthermore, moving beyond four classes resulted in numerical instability and convergence issues.

To test whether the scale parameter is different from zero, we use a standard t-test. We obtain the standard error of the transformed estimate, i.e. \(\exp (-2.6224)\), using the Delta method. Then we construct the test-statistic: \(t = \exp (-2.6224)/0.0267 = 0.0726/0.0267 = 2.7188\), which is higher than the critical value of 2.576 at the 1% level. Similarly, we can test whether it is different from one. We construct the test-statistic: \(t = (\exp (-2.6224) - 1)/0.0267 = (0.0726 - 1)/0.0267 = -34.7154\), which again is statistically significant at the 1% level.

These conditional probabilities are obtained by summing, for each individual, the conditional class membership probabilities over the classes where a particular attribute was attended to.

The fact that the scale parameters do not differ between classes might lead to a potential identification problem. It is very difficult for the model to predict class membership when there are no differences between classes. Furthermore, we observed evidence in this respect since the log-likelihood function appeared flat in the region of convergence for the parameters in the scale class probability function. This holds true for the models estimated and reported in “Appendix B” as well.

The WTP estimates reported here differ from those reported in Sandorf et al. (2016). Sandorf et al. (2016) used an RPL estimated in “willingness-to-pay space”, with non-cost attributes following normal distributions and cost following a log-normal distribution. The rather large estimates found here are likely caused by the log-normally distributed cost parameter with a mass close to zero, and the log-normal distribution for the size-small and size-large attribute levels. Considering respondents ignoring attributes leads to more reasonable WTP estimates.

References

Aanesen M, Armstrong C, Czajkowski M, Falk-Petersen J, Hanley N, Navrud S (2015) Willingness to pay for unfamiliar public goods: preserving cold-water coral in norway. Ecol Econ 112:53–67

Anduiza E, Galais C (2016) Answering without reading: IMCs and strong satisficing in online surveys. Int J Public Opin Res 29(3):497–519

Baker R, Blumberg SJ, Brick JM, Couper MP, Courtright M, Dennis JM, Dillman D, Frankel MR, Garland P, Groves RM, Kennedy C, Krosnick JA, Lavrakas PJ, Lee S, Link M, Piekarski L, Rao K, Thomas RK, Zahs D (2010) AAPOR report on online panels. Public Opin Q 74(4):711–781

Baker R, Downes-Le Guin T (2007) Separating the wheat from the chaff: ensuring data quality in internet samples. In: Proceedings of the fifth international conference of the association for survey computing: the challenges of a changing world, pp 157–166

Berinsky AJ, Margolis MF, Sances MW (2014) Separating the shirkers from the workers? Making sure respondents pay attention on self-administered surveys. Am J Pol Sci 58(3):739–753

Bhat CR (2003) Simulation estimation of mixed discrete choice models using randomized and scrambled Halton sequences. Trans Res Part B Methodol 37(9):837–855

Börger T (2016) Are fast responses more random? Testing the effect of response time on scale in an online choice experiment. Environ Resour Econ 65(2):389–413

Börjesson M, Fosgerau M (2015) Response time patterns in a stated choice experiment. J Choice Model 14:48–58

Campbell D, Doherty E, Hynes S, Rensburg TV (2010) Combining discrete and continuous mixing approaches to accommodate heterogeneity in price sensitivities in environmental choice analysis. Agric Econ 29:31–49

Campbell D, Hensher DA, Scarpa R (2011) Non-attendance to attributes in environmental choice analysis: a latent class specification. J Environ Plan Manag 54(8):1061–1076

Campbell D, Hutchinson WG, Scarpa R (2008) Incorporating discontinuous preferences into the analysis of discrete choice experiments. Environ Resour Econ 41(3):401–417

Campbell D, Mørkbak MR, Olsen SB (2018) The link between response time and preference, variance and processing heterogeneity in stated choice experiments. J Environ Econ Manag 88:18–34

Chandler JJ, Paolacci G (2017) Lie for a dime. Soc Psychol Personal Sci 8(5):500–508

Colombo S, Christie M, Hanley N (2013) What are the consequences of ignoring attributes in choice experiments? Implications for ecosystem service valuation. Ecol Econ 96:25–35

Daly A, Hess S, Train K (2012) Assuring finite moments for willingness to pay in random coefficient models. Transportation 39(1):19–31

Doornik JA (2009) An object-oriented matrix programming langue Ox 6. http://www.doornik.com

Downes-Le Guin T, Baker R, Mechling J, Ruylea E (2012) Myths and realities of respondent engagement in online surveys. Int J Mark Res 54(5):1–21

Flynn TN, Louviere JJ, Peters TJ, Coast J (2010) Using discrete choice experiments to understand preferences for quality of life. Variance-scale heterogeneity matters. Soc Sci Med 70(12):1957–1965

Fosså JH, Buhl-Mortensen P, Furevik DM (2002) The deep-water coral Lophelia pertusa in Norwegian waters: distribution and fishery impacts. Hydrobiologia 471(1):1–12

Freiwald A, Fosså JH, Grehan A, Koslow T, Roberts JM (2004) Cold-water coral reefs: out of sight—no longer out of mind. Tech. rep, UNEP-WCMC, Cambridge, UK

Gao Z, House LA, Bi X (2016a) Impact of satisficing behavior in online surveys on consumer preference and welfare estimates. Food Policy 64:26–36

Gao Z, House LA, Xie J (2016b) Online survey data quality and its implication for willingness-to-pay: a cross-country comparison. Can J Agric Econ 64(2):199–221

Gigerenzer G, Gaissmaier W (2011) Heuristic decision making. Ann Rev Psychol 62:451–482

Heerwegh D, Loosveldt G (2008) Face-to-face versus web surveying in a high-internet-coverage population: differences in response quality. Public Opin Q 72(5):836–846

Hensher DA, Greene WH (2010) Non-attendance and dual processing of common-metric attributes in choice analysis: a latent class specification. Empir Econ 39(2):413–426

Hensher DA, Greene WH (2011) Valuation of travel time savings in WTP and preference space in the presence of taste and scale heterogeneity. J Transp Econ Policy 45(3):505–525

Hensher DA, Rose J, Greene WH (2005) The implications on willingness to pay of respondents ignoring specific attributes. Transportation 32(3):203–222

Hess S, Stathopoulos A, Campbell D, O’Neill V, Caussade S (2013) It’s not that I don’t care, I just don’t care very much: confounding between attribute non-attendance and taste heterogeneity. Transportation 40(3):583–607

Hole AR (2011) A discrete choice model with endogenous attribute attendance. Econ Lett 110(3):203–205

Jones MS, House LA, Gao Z (2015a) Attribute non-attendance and satisficing behavior in online choice experiments. In: Proceedings in food system dynamics, pp 415–432

Jones MS, House LA, Gao Z (2015b) Respondent screening and revealed preference axioms: testing quarantining methods for enhanced data quality in web panel surveys. Public Opin Q 79(3):687–709

Krosnick J (1991) Response strategies for coping with the cognitive demands of attitude measures in surveys. Appl Cogn Psychol 5(3):213–236

LaRiviere J, Czajkowski M, Hanley N, Aanesen M, Falk-Petersen J, Tinch D (2014) The value of familiarity: effects of knowledge and objective signals on willingness to pay for a public good. J Environ Econ Manag 68(2):376–389

Lindhjem H, Navrud S (2011a) Are internet surveys an alternative to face-to-face interviews in contingent valuation? Ecol Econ 70(9):1628–1637

Lindhjem H, Navrud S (2011b) Using internet in stated preference surveys: a review and comparison of survey modes. Int Rev Environ Resour Econ 5:309–351

Magidson J, Vermunt JK (2007) Removing the scale factor confound in multinomial logit choice omodels to obtain better estimates of preference. In: Sawtooth software conference, pp 139–154

Malone T, Lusk JL (2018) Consequences of participant inattention with an application to carbon taxes for meat products. Ecol Econ 145:218–230

McFadden D (1974) Conditional logit analysis of qualitative choice behavior. In: Zarembka P (ed) Frontiers in econometrics. Academic Press, New York, pp 105–142

Mueller S, Lockshin L, Saltman Y, Blanford J (2010) Message on a bottle: the relative influence of wine back label information on wine choice. Food Qual Prefer 21(1):22–32

Oppenheimer DM, Meyvis T, Davidenko N (2009) Instructional manipulation checks: detecting satisficing to increase statistical power. J Exp Soc Psychol 45(4):867–872

Sandorf ED, Aanesen M, Navrud S (2016) Valuing unfamiliar and complex environmental goods: a comparison of valuation workshops and internet panel surveys with videos. Ecol Econ 129:50–61

Sandorf ED, Campbell D, Hanley N (2017) Disentangling the influence of knowledge on attribute non-attendance. J Choice Model 24:36–50

Scarpa R, Rose JM (2008) Design efficiency for non-market valuation with choice modelling: how to measure it, what to report and why*. Aust J Agric Resour Econ 52(3):253–282

Swait J, Louviere J (1993) The role of the scale parameter in the estimation and comparison of multinomial logit models. J Mark Res 30(3):305–314

Thiene M, Meyerhoff J, De Salvo M (2012) Scale and taste heterogeneity for forest biodiversity: models of serial nonparticipation and their effects. J Forest Econ 18(4):355–369

Thiene M, Scarpa R, Louviere JJ (2014) Addressing preference heterogeneity, multiple scales and attribute attendance with a correlated finite mixing model of tap water choice. Environ Resour Econ 62(3):637–656

Train KE (2009) Discrete choice methods with simulation, 2nd edn. Cambridge University Press, New York

Windle J, Rolfe J (2011) Comparing responses from internet and paper-based collection methods in more complex stated preference environmental valuation surveys. Econ Anal Policy 41(1):83–97

Acknowledgements

The author would like to thank Danny Campbell for good discussions and comments on an earlier version of the paper. In addition, Margrethe Aanesen and Claire Armstrong have provided feedback. The data was collected as part of the project “Habitat-Fisheries interactions - Valuation and bio-economic modeling of Cold Water Coral” funded by the Norwegian Research Council (Grant no. 216485). Funding from the Jan Wallander and Tom Hedelius foundation, and the Tore Browaldh foundation (Grant no. B2015-0490:1) is gratefully acknowledge. Part of this work was conducted while the author was working at UiT - The Arctic University of Norway. I would like to thank Danny Campbell, Margrethe Aanesen and Clair Armstrong for input on an earlier version, and an associate editor and two anonymous reviewers for valuable feedback. Any remaining errors are the sole responsibility of the author.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions