Abstract

Although the competencies expected of administrators have increased with the development of technology, studies on innovative approaches to training school administrators remain limited. This study aims to investigate the impact of simulation applications on the problem-solving and decision-making abilities of school administrators and prospective administrators. To achieve this objective, a pretest-posttest quasi-experimental study was conducted with experimental and control groups. The study employed a parallel mixed design, which combines quantitative and qualitative research approaches. The research group consisted of 61 school administrators and prospective administrators, with 30 participants in the experimental group and 31 in the control group. The Melbourne Decision Making Questionnaire I-II (MDMQ I-II) and the Problem Solving Inventory (PSI) were used as data collection instruments before and after the 6-week experimental process. In addition, a semi-structured interview form was administered to the experimental group participants following the application. The results of the data analysis indicated that the simulation application effectively enhanced the decision-making and problem-solving skills of the experimental group participants, while reducing the use of approaches that hinder the positive effects of these skills.

1 Introduction

Numerous applications supported by information and communication technologies (animation, simulation, video, multimedia, augmented reality, virtual reality) are prevalent in education. Previous research demonstrates the effectiveness of these tools and techniques in teaching abstract concepts (Pekdağ, 2010; Schrum & Levin, 2016; Hallinger et al., 2017). One such application is the simulation technique. Kelton (1999) defines simulation as “a technique based on transferring real situations to a computer environment using an appropriate software system and reliably imitating the real situation in this environment.” Through the simulation technique, real-life problems are transferred to a virtual environment, giving participants opportunities to solve the issues they encounter. This allows individuals using the simulation technique to observe the outcomes of their decisions and potential situations in advance (McClarty et al., 2012).

In recent years, there have been numerous studies examining the effectiveness and validity of simulation applications in learning (Faria, 2001; Faria et al., 2009; Hofstede et al., 2010; Veerson & Lawton, 2009). Additionally, several applications have been developed and research has been conducted on the use of simulations in various teaching areas (Militello et al., 2021; Palmunen et al., 2021; Volante et al., 2020). However, despite suggestions that the simulation technique can be effective for training school administrators and the need for further experimental research, there is a lack of sufficient research on this subject. Therefore, the primary objective of this study is to determine the extent to which the simulation technique is effective in training school administrators. Specifically, the literature lacks sufficient investigation into the impact of simulation-based training, utilizing scenarios based on real situations, on the problem-solving and decision-making skills of school administrators. To address this gap, this study examines the effect of the simulation technique on the decision-making and problem-solving skills of both current and prospective school administrators. Accordingly, a quasi-experimental design was adopted within the framework of a mixed research paradigm. The research utilized a simulation application developed by experts that focused on instructional leadership. This simulation application aimed to create a realistic school environment, personalize participants’ learning experiences, facilitate unlimited practice, and provide feedback on performance outcomes.

The Covid-19 pandemic, which has had a global impact, has underscored the significance of digital tools in education and training. Throughout the pandemic, in-person education was disrupted worldwide due to social distancing measures, necessitating the use of technological solutions to continue learning (Goldschmidt & Msn, 2020). In this educational setting, it is crucial that simulations both support individual learning and bring online learning as close as possible to real-life scenarios. The literature on school administrator training includes numerous studies on the content, theories, curriculum designs, and learning methods employed in these programs. However, as Wildman (2001) points out, despite the extensive research on school administrator training, few studies focus on evaluating the effectiveness of training programs and practices or offer a novel approach to the process (Showanasai & Hallinger, 2013).

The simulation technique provides school administrators with an opportunity to enhance their experience and professional development by engaging in problem-solving practice and developing effective decision-making skills. Furthermore, the scenarios presented in the simulation application closely resemble real-life challenges, allowing administrators to gain valuable experience in navigating similar situations in the future. This experience is risk-free, enabling administrators to tackle problems confidently and without hesitation. Through repeated engagement in individual learning within a safe environment, administrators can gain mastery over challenging situations. These features highlight the significance of simulations in professional development. Moreover, access to information without temporal or spatial constraints is highly desirable. Conducting this study through distance education emphasizes the potential advantages of simulation applications, such as cost-effectiveness and improved performance, free from the limitations of time and location. Accordingly, this study aimed to address the following six research questions:

1. Is there a significant difference observed between the pre-test and post-test scores of the decision-making skills of the participants in the experimental group who underwent simulation-supported education?

2. Is there a significant difference observed between the post-test scores of the decision-making skills of the participants in the experimental group who underwent simulation-supported education and the control group participants who received traditional education methods?

3. Is there a significant difference observed between the pre-test and post-test scores of the problem-solving skills of the participants in the experimental group who underwent simulation-supported education?

4. Is there a significant difference observed between the post-test scores of the problem-solving skills of the participants in the experimental group who underwent simulation-supported education and the participants in the control group who were exposed to traditional education methods?

5. How do the participants in the experimental group assess the effectiveness of the simulation application in enhancing the decision-making skills of school administrators?

6. How do the participants in the experimental group evaluate the impact of the simulation application on the development of problem-solving skills?

2 Theoretical framework

2.1 Purpose and application areas of simulations

According to Patrick (2002), simulation technique serves three main purposes. Firstly, it is used to test and plan new problems, situations, events, and so forth. Secondly, it provides training for situations that are dangerous and costly to replicate in real life. Lastly, it enables the assessment of learners in real-life scenarios. Simulation-based learning offers a realistic learning experience that closely resembles real-life applications. It effectively overcomes the limitations of learning in real-life situations and is an efficient method for developing complex skills (Chernikova et al., 2020). Simulations foster critical thinking, decision making, and problem-solving skills during the learning process, necessitating active learner engagement (Chernikova et al., 2020). By engaging in simulation-based learning, individuals not only acquire problem-solving skills but also gain an understanding of the various stages involved. They learn to identify problematic situations, define the elements of the problem, generate suitable solutions, and engage in decision-making processes.

Simulations may sometimes be mistaken for other types of visual digital resources, such as animations or digital games. However, distinct features set simulations apart from these resources. Ellington et al. (1981) presented a schematic representation of the relationship between simulations, games, and case studies, highlighting their differences (see Fig. 1). Simulations differ structurally from still pictures, illustrations, and games, as well as from animations, which combine still pictures and illustrations to create the illusion of movement for the viewer. Unlike animations, which merely present visual content without accepting user input, simulations allow users to interact with and influence the simulated structure (Renken et al., 2016). In addition, unlike games, simulations are slowly unfolding, step-based applications grounded in a particular social or physical reality. The aim of a simulation is not to win but to take on a real role, identify the issue, threat, or problem that arises in the scenario, and evaluate the results of one's decisions. The aim of a game, by contrast, is to win, and the outcome is not evaluated.

The relationship between games, simulations, and case studies (Ellington, Addinall and Percival, 1981)

2.2 Simulation applications in administrator and leader training

Simulations have been widely recognized as an effective method for training and enhancing the decision-making skills of managers (Staub & Bravender, 2014). There are various forms of simulations, including role-playing games and animation (Chance & Chance, 2000; Storey & Dambo, 2016; Veerson, 1991), which have long been utilized in the training of administrators. The first simulation program developed for training educational administrators was the “Jefferson Township Simulation” in 1957. Culbertson (1968) later updated it to reflect the evolving challenges faced by schools and managers. Another simulation called the “Monroe City Simulation” was created by the University Council for Educational Administration (UCEA) for educational administrator training (Engin & Miller, 1975). In 1993, UCEA introduced the Information Environment for School Leader Preparation (IESLP) simulation project, which incorporates web-based studies (Karabatak & Şengür, 2018; Young et al., 2009). Simulations have been highly praised in the literature for their ability to help participants identify organizational variables, make connections to decision-making, and foster a positive attitude towards management tools. Notably, the “Center of Things” simulation, developed by Vanderbilt researchers in 1980, focuses on improving and transforming school climate by identifying and fostering instructional leadership qualities in school administrators. The “Making Change Happen” simulation, by Hallinger et al., supports problem-solving skills among participants and has been tested for its effectiveness across different cultures (Young et al., 2009).

Showanasai et al. (2013) conducted a study to investigate the potential use of computer simulations as a research tool in the field of school leadership development. The research not only demonstrated the customization of specific simulations for collecting data on leadership development, but also highlighted the potential for training in various educational and training domains. In a subsequent study, Hallinger et al. (2017) adapted an existing online problem-based computer simulation, namely “Making Change Happen,” for Chinese school administrators. The study outlines the evaluation process used to assess the adaptation of a simulation designed for Western culture and curriculum to the Chinese cultural and curriculum context. Additionally, the study examines the response of Chinese administrators to the learning process facilitated by this innovative method. The findings suggest that Chinese school administrators responded positively to learning through an online computer simulation, emphasizing the potential of culturally relevant web-based simulations to enhance accessibility, flexibility, and convenience for both pre-service and in-service individuals.

The study conducted by Volante et al. (2020) aimed to investigate the effects of simulations on the decision-making capacities of educational administrators. Specifically, the researchers implemented computerized simulations that replicated the Chilean education system, and subsequently administered these simulations to school leaders. Both qualitative and quantitative methods were employed to assess the impact of the simulations. The results demonstrated a significant enhancement in the decision-making abilities of school leaders who experienced simulations closely aligned with the Chilean education system. As a result, this study concludes that computer-based simulations hold substantial promise as an accessible and supplementary training approach for educational administrators.

Militello et al. (2021) conducted a study to evaluate the efficacy of a virtual reality (VR) classroom experience in enhancing the skills of instructional leaders. Specifically, the study aimed to enhance school leaders’ capacity to observe and analyze classroom activities, thereby facilitating effective communication with teachers. The research comprised 75 school leaders and demonstrated that the VR application provided a pioneering approach to leadership development, particularly in terms of improving school leaders’ ability to identify aspects of academic discourse during classroom observations. Participants expressed the value of being able to practice classroom observations in a risk-free environment.

When conducting a literature review (Hallinger et al., 2017; Militello & Friend, 2013; Palmunen et al., 2021; Schrum & Levin, 2016), it becomes evident that there is a need for further empirical research on the effective use of technology in the training of school administrators. The objective of this study is to examine the advantages of integrating technology into school administrator training and establish a foundation for an education system that is more closely intertwined with technology. Previous research on simulation applications has demonstrated their potential to enhance administrators’ instructional leadership abilities and improve their skills, which are constantly evolving due to technological advancements.

3 Method

3.1 Research design

In this study, the aim is to examine the impact of simulation on the decision-making and problem-solving skills of school administrators. To achieve this objective, a mixed research method (Creswell & Plano Clark, 2015) was utilized, which involved gathering both quantitative and qualitative data. These data were carefully analyzed and the findings were synthesized. For this study, a convergent mixed design was chosen, which is a recognized approach in mixed research methods. This design ensures that the qualitative and quantitative stages of the research are treated independently. Equal importance is given to both qualitative and quantitative data, without favoring one over the other. Additionally, there is no prescribed sequence or order between the qualitative and quantitative stages. The data was collected simultaneously, and during the interpretation process, the data was combined and analyzed to derive meaningful conclusions.

3.2 Study participants

The study included a total of 61 school administrators and prospective administrators who were enrolled in the Department of Educational Administration at Firat University and were pursuing a non-thesis master’s degree. Of these participants, 30 were assigned to the experimental group and the remaining 31 to the control group. In forming the study group in accordance with the purpose of the research, priority was given to willingness to participate and the ability to use information and communication technologies effectively, in both the qualitative and quantitative study groups. Regardless of whether they had used a simulation application before, all participants were given preliminary information about the use of simulation. The sample was selected through purposive sampling, a non-probability technique.

The experimental group comprised 19 (63.3%) female and 11 (36.7%) male participants. In the control group, there were 23 (74.2%) female and 8 (25.8%) male participants. Among the participants in the experimental group, 12 (40.0%) individuals, including one school principal, held administrative positions, while 18 (60.0%) worked as teachers. In the control group, 12 (38.7%) participants, with one school principal among them, occupied managerial roles, while 19 (61.3%) were teachers. It was observed that 19 (63.3%) participants in the experimental group had managerial experience, whereas the control group had 13 (41.9%) participants with managerial experience. Lastly, when analyzing the age distribution of the participants, it was discovered that 3 (10.0%) individuals in the experimental group were 30 years old or younger, 18 (60.0%) fell within the 31–40 years age range, and 9 (30.0%) were 41 years old or older. In the control group, 4 (12.9%) participants were 30 years old or younger, 17 (54.8%) were aged between 31 and 40, and 10 (32.3%) were 41 years old or older.
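The percentages reported above follow directly from the counts and the group sizes (30 and 31). As a minimal sketch, the gender figures can be reproduced as follows; the function and variable names are illustrative and do not come from the study.

```python
def percent(count, group_size):
    """Return count as a percentage of group_size, rounded to one decimal."""
    return round(100 * count / group_size, 1)

# Counts taken from the participant description above.
experimental = {"female": 19, "male": 11}   # n = 30
control = {"female": 23, "male": 8}         # n = 31

exp_pct = {k: percent(v, sum(experimental.values())) for k, v in experimental.items()}
ctl_pct = {k: percent(v, sum(control.values())) for k, v in control.items()}

print(exp_pct)
print(ctl_pct)
```

The same helper applies to the role, experience, and age breakdowns.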

3.3 Simulation preparation process

Simulations, which form the basis of the research and training program, are virtual environments that replicate situations that may be fraught with risks or entail significant costs in real-life scenarios (Chernikova et al., 2020). Accordingly, a team of experts was convened to meticulously craft scenarios that closely mirror the specific problem situations being targeted, and which can be comprehensively addressed through rigorous planning.

The preparation process of the simulation application involves three distinct groups, each consisting of different people: the problem group, the design group, and the test group. The primary responsibility of the problem group is to identify the key issues that contribute to the development of the simulation and to formulate solution scenarios accordingly. The design group, in turn, is tasked with digitalizing the simulation scenario based on the problems and hypotheses identified by the problem group, in accordance with the intended simulation type. Lastly, the test group is responsible for running the simulation and evaluating its performance, with the aim of identifying any deficiencies or areas that require improvement (Karabatak & Şengür, 2018).

The simulation preparation process for this study was planned as shown in Table 1.

As seen in Table 1, the problems addressed in a simulation should be ones that can be encountered in real organizational life, and the main and sub-solution variables (hypotheses) of each problem should be determined in line with the opinions and information obtained. For this reason, the problem group in this research consisted of two experienced school administrators and two experts in the field of educational management. The researcher asked the previously selected school administrators to “write down an event they experienced regarding the problems they encountered most as a school administrator while managing the school.” The problem was identified from the answers received, and its main and sub-hypotheses were formulated. Then, sample scenarios involving current management problems were drafted, drawing on the opinions of the experienced school administrators and experts in the problem group. From these, the scenario that was easiest to understand and covered the most behavioral dimensions of instructional leadership was selected.

A design group of software experts was formed to build the simulation from the draft scenario’s problem, hypotheses, solution options, and score calculations, and the sample scenario was transferred to the digital environment. The scenario was presented on screen in both audio and text form, and users could enter the simulation as many times as they wished.

The last step in the preparation of the simulation involves the test group. To obtain expert opinion, the prepared simulation application was tested by two academicians in the field of educational management who were not involved in the problem and design processes, two academicians in the field of computer software, and four experienced school administrators who were encountering the simulation application for the first time. Based on the feedback received, deficiencies in the simulation were identified, corrections were made, and the simulation application was finalized. These three steps in the preparation of the simulation took 9 months.

3.4 Simulation steps

3.4.1 1st step: Simulation home interface

Participants are required to log in to the simulation application by entering their full name and student ID number (see Fig. 2).

3.4.2 2nd step: Simulation information interface

Participants are directed to an information screen that offers comprehensive details about the simulation application and presents symbolic expressions of the scores that can be achieved (see Fig. 3).

3.4.3 3rd step: Simulation start interface

After reviewing the information interface, participants click the “Next” button to start the problem scenario in the simulation. At this point, the audio features within the simulation are activated to capture the participants’ attention (see Fig. 4).

3.4.4 4th step: Functioning of simulation questions

In the simulation, participants encountered three problem statements related to the dimensions of instructional leadership behavior. Each problem situation offers four options, and participants were asked to select one of them (see Fig. 5).

Feedback bubbles were presented to clarify the score attributed to each option, as well as the underlying reasoning (see Fig. 6). If the option chosen by the participant was the most accurate one, the scenario continued. If it was not, the simulation paused until the participant identified the most suitable solution for the given problem. The purpose of this design is to direct participants towards the most correct option and promptly give them feedback on their errors.
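The pause-until-correct behavior described above can be sketched as a small loop. This is only an illustration under stated assumptions: the class names, scores, and feedback texts are hypothetical, and the actual application's internals are not described in the study.

```python
from dataclasses import dataclass

@dataclass
class Option:
    text: str
    score: int             # hypothetical points attached to the option
    feedback: str          # hypothetical text for the feedback bubble
    is_best: bool = False  # marks the most correct option

@dataclass
class Problem:
    statement: str
    options: list          # four Option objects per problem

def run_problem(problem, choices):
    """Replay a sequence of chosen option indices for one problem.

    Returns the (score, feedback) pairs shown after each pick and a flag
    that is True once the most correct option has been selected, which is
    the condition for the scenario to continue.
    """
    shown = []
    for index in choices:
        option = problem.options[index]
        shown.append((option.score, option.feedback))
        if option.is_best:
            return shown, True   # scenario continues to the next problem
    return shown, False          # simulation stays paused on this problem

# Hypothetical problem with four options; option 2 is the most correct.
demo = Problem(
    statement="Placeholder problem statement",
    options=[
        Option("Option A", 0, "Placeholder feedback A"),
        Option("Option B", 1, "Placeholder feedback B"),
        Option("Option C", 3, "Placeholder feedback C", is_best=True),
        Option("Option D", 0, "Placeholder feedback D"),
    ],
)

shown, may_continue = run_problem(demo, [0, 2])
```

In this sketch every pick produces feedback, including the wrong ones, which mirrors the stated aim of promptly informing participants about their errors.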

3.5 Simulation final interface

After successfully answering the problem statements presented in the simulation scenario, participants can view their final score at the end of the simulation (see Fig. 7).

The final scores were displayed on screen so that participants could see the gaps in their learning within the scenario. The scores were not given to participants as a grade. Participants could re-enter the simulation as often as they wished to retest themselves on the questions where they felt their knowledge was lacking. The simulation included one scenario lasting a total of 23 min.

3.6 Data collection tools

Quantitative and qualitative data collection tools were used in this study. These are shown in Table 2.

3.6.1 Melbourne decision making questionnaire (MDMQ I-II)

MDMQ I-II, originally developed by Mann et al. (1998) and later adapted into Turkish by Deniz (2004) through a validity and reliability study, comprises two sections:

Section I: This section consists of six items, three of which are direct items and three of which are reverse items. These items aim to assess the self-esteem (self-confidence) dimension in decision making.

Section II: This section comprises 22 items that measure the sub-dimensions of decision-making skills. These sub-dimensions include vigilance decision making (items 2, 4, 6, 8, 12, 16), hypervigilance decision making (items 3, 9, 11, 14, 17, 19), procrastination decision making (items 5, 7, 10, 18, 21), and buck-passing decision making (items 1, 13, 15, 20, 22) styles (Deniz, 2004).

Originally, MDMQ I-II had three response options. However, based on studies indicating that the reliability of a scale increases when more response options are available, the number of options was increased from three to five (Doğan et al., 2017).
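The item-to-sub-dimension mapping listed for Section II can be expressed as a lookup table. The sketch below assumes that responses are coded 1 to 5 and that a sub-dimension score is the simple sum of its items; neither the coding nor the aggregation rule is stated in the text, so both are assumptions.

```python
# Item numbers per sub-dimension, as listed for Section II (Deniz, 2004).
SUBSCALES = {
    "vigilance": [2, 4, 6, 8, 12, 16],
    "hypervigilance": [3, 9, 11, 14, 17, 19],
    "procrastination": [5, 7, 10, 18, 21],
    "buck_passing": [1, 13, 15, 20, 22],
}

def score_section_two(responses):
    """Sum the ratings per sub-dimension.

    `responses` maps item number (1..22) to a rating. The 1..5 coding and
    the use of sums rather than means are assumptions of this sketch.
    """
    if sorted(responses) != list(range(1, 23)):
        raise ValueError("expected ratings for items 1..22")
    return {
        name: sum(responses[item] for item in items)
        for name, items in SUBSCALES.items()
    }

# Hypothetical respondent who answers 3 on every item.
example_scores = score_section_two({item: 3 for item in range(1, 23)})
```

The four lists together cover items 1 to 22 exactly once, which is a quick consistency check on the mapping.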

In this study, the internal consistency coefficients of MDMQ I-II were calculated as 0.72 for self-esteem in decision making, 0.87 for vigilance (careful) decision making, 0.70 for hypervigilance (panic) decision making, 0.86 for procrastination decision making, and 0.77 for buck-passing (avoidant) decision making. Based on these values, it was concluded that the scale demonstrated satisfactory reliability.

3.6.2 Problem solving inventory (PSI)

In the present study, the Problem Solving Inventory (PSI), originally developed by Heppner and Petersen (1982) and subsequently adapted into Turkish by Şahin et al. (1993), was used to assess the problem-solving skills of school administrators. The PSI comprises a total of 35 items, with 21 items reflecting positive aspects and 14 items reflecting negative aspects. Conceptually, the inventory consists of six sub-dimensions, namely avoidant style (items 1, 2, 3, 4), monitoring (items 6, 7, 8), problem-solving confidence (items 5, 23, 24, 27, 28, 34), impulsive style (items 13, 14, 15, 17, 21, 25, 26, 30, 32), reflective style (items 18, 20, 31, 33, 35), and planfulness (items 10, 12, 16, 19).

PSI used in this study employs a Likert-type 6-point scale, with scores ranging from 1 to 6. Higher scores on the scale indicate lower levels of problem-solving skills.

To establish the reliability of the scale, Cronbach’s α internal consistency coefficients were computed for each sub-dimension, yielding the following results: avoidant style (α = 0.63), problem-solving confidence (α = 0.69), monitoring (α = 0.75), planfulness (α = 0.75), impulsive style (α = 0.53), and reflective style (α = 0.75). The overall scale had a Cronbach’s α coefficient of 0.82, on the basis of which it was concluded that the scale exhibited adequate reliability.
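For readers unfamiliar with the coefficient, Cronbach’s α for a sub-dimension with k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. A minimal standard-library sketch follows; the study itself used SPSS, and the data below are invented purely to exercise the function.

```python
from statistics import variance

def cronbach_alpha(rows):
    """Cronbach's alpha for one sub-dimension.

    `rows` holds one list of item scores per respondent; every list must
    have the same length (the number of items, k).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in rows]) for j in range(k)]
    # Sample variance of the respondents' total scores.
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Invented responses: three respondents, three perfectly consistent items.
demo_rows = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
demo_alpha = cronbach_alpha(demo_rows)
```

With perfectly consistent items the coefficient reaches its maximum of 1; less consistent items pull it down, as with the lowest sub-dimension value reported above.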

3.6.3 Interview form for the evaluation of the simulation application process

In this study, the interview technique, an essential tool for collecting qualitative data, was employed to gain insight into the perspectives of the school administrators and prospective administrators in the experimental group who participated in the simulation application. The aim was to supplement the findings obtained through quantitative analysis. Initially, the researcher conducted a thorough literature review pertaining to the research topic. Subsequently, a pool of 15 questions was formulated, aligning with the relevant research and addressing the research problem. Following feedback, the number of questions in the interview form was reduced from 15 to 9.

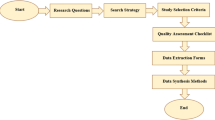

3.7 Research process

The research process consisted of three stages: the preparation process, the experimental process (application), and the post-application process. The research process is shown in Fig. 8.

The steps of the research process shown in Fig. 8 are explained as follows:

1. Preparation process: In this stage, the researcher planned the training process in line with the purpose of the study, planned the simulation application (for the educational leadership course), and made the necessary preparations.

2. Experimental process: In this stage, simulation-supported education was applied to the experimental group and traditional teaching to the control group. The prepared simulation application was introduced, the pre-tests were administered, and the simulation-supported education process was carried out. This phase lasted 6 weeks. Throughout the application, participants in the experimental group could use the simulation application as many times as they wanted. The control group was trained face-to-face using traditional methods in the educational leadership course for a period of 6 weeks.

3. Post-application process: In this stage, the researcher administered post-tests to the participants in the experimental and control groups. A semi-structured interview form was administered to the participants in the experimental group to evaluate the experimental research process and the simulation application.

3.8 Data analysis

3.8.1 Quantitative data analysis

In the analysis of the quantitative data of the study, the SPSS 26.0 package program was utilized for statistical procedures. Demographic data was analyzed using the percentage (%) and frequency (f) techniques.

3.8.2 Qualitative data analysis

A descriptive analysis was conducted on the qualitative data collected via the post-study interview form. Following the steps of descriptive analysis, the participants’ statements were coded, and the resulting codes were categorized and organized into relevant themes. The themes and categories were then refined and structured to ensure accurate representation of the data. Finally, the findings were interpreted and conclusions were drawn.

4 Findings

4.1 Quantitative findings

4.1.1 Comparison of pre-test and post-test scores on decision making skills in the experimental group

A pre-test and post-test were conducted to evaluate whether there was a substantial difference in the decision-making capabilities of the participants in the experimental group before and after the application. Given that the pre-test and post-test scores of the MDMQ I-II sub-dimensions showed a normal distribution, a dependent groups t-test was utilized to compare the scores. The results of the dependent groups t-test are presented in Table 3.

As seen in Table 3, the participants’ pre-test and post-test MDMQ I-II scores differ significantly in favor of the post-test for self-esteem in decision-making [t(29) = -2.70] and vigilance decision-making [t(29) = -2.31]. The effect-size calculations indicate a strong effect for the self-esteem in decision-making dimension (η² = 0.78) and a moderate effect for the vigilance decision-making dimension (η² = 0.56).

Conversely, for the dimensions of hypervigilance decision-making [t(29) = 2.62], procrastination decision-making [t(29) = 1.99], and buck-passing decision-making [t(29) = 3.67], a statistically significant difference was observed in favor of the pre-test. The effect size calculation revealed that the hypervigilance decision-making dimension displayed a weak effect size (η² = 0.33), whereas the procrastination decision-making (η² = 0.49) and buck-passing decision-making (η² = 0.58) dimensions each exhibited a moderate effect size.
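The dependent groups t-test used here can be sketched with the standard library alone. The sketch assumes differences are taken as pre-test minus post-test, which is consistent with the negative t values reported where post-test scores are higher; the study ran the test in SPSS, and the scores below are invented for illustration.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(pre, post):
    """Dependent-samples t statistic and degrees of freedom.

    Differences are computed as pre - post (an assumed sign convention),
    so t is negative when post-test scores are higher on average.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

# Invented scores for four participants.
t_demo, df_demo = paired_t([1, 2, 3, 4], [2, 4, 5, 5])
```

With 30 participants in the experimental group, the degrees of freedom are 30 − 1 = 29, matching the t(29) notation in the text.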

4.1.2 A comparative analysis of pre-test and post-test scores on decision-making skills between experimental and control groups

The pre-test and post-test scores of the school administrators and candidates in the experimental group were compared with those of the control group to determine whether there was a significant difference. Because the scores obtained from the MDMQ I-II exhibited a normal distribution, an independent samples t-test was performed. The results of the analysis are presented in Table 4.

As observed in Table 4, the t-test comparing the MDMQ I-II pre-test scores of the experimental and control groups revealed no significant difference in any dimension: self-esteem in decision making [t(59) = -0.148, p > .05], vigilance decision making [t(59) = -1.65, p > .05], hypervigilance decision making [t(59) = 0.797, p > .05], procrastination decision making [t(59) = 0.750, p > .05], and buck passing decision making [t(59) = 0.220, p > .05]. These findings indicate that participants in both groups had comparable levels of decision-making skills before the training process.

Table 5 presents the t-test results for comparing the post-test scores of participants in the experimental and control groups using the MDMQ I-II.

According to Table 5, the post-test scores of the experimental and control groups differed significantly in all dimensions of the MDMQ I-II except self-esteem in decision-making, for which no statistically significant difference was found [t(59) = 1.77, p > .05]. Significant differences were observed in the dimensions of vigilance decision-making [t(59) = 2.82], hypervigilance decision-making [t(59) = -2.42], procrastination decision-making [t(59) = -2.62], and buck-passing decision-making [t(59) = -1.93] (p < .05). The effect size was strong for vigilance decision-making (η2 = 0.72), hypervigilance decision-making (η2 = 0.61), and procrastination decision-making (η2 = 0.61), and moderate for buck-passing decision-making (η2 = 0.49).
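The between-group comparisons rest on an independent samples t-test. The sketch below shows the classic pooled-variance form, assuming equal variances and taking the difference as experimental minus control. The function name `independent_t` and the scores are hypothetical, chosen only to show the arithmetic.

```python
import math
import statistics


def independent_t(group_a, group_b):
    """Return (t, df) for a pooled-variance independent samples t-test."""
    n1, n2 = len(group_a), len(group_b)
    m1, m2 = statistics.mean(group_a), statistics.mean(group_b)
    v1, v2 = statistics.variance(group_a), statistics.variance(group_b)
    df = n1 + n2 - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n1 - 1) * v1 + (n2 - 1) * v2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Hypothetical scores (illustration only, not the study's data).
experimental = [12, 14, 13, 15, 16]
control = [10, 11, 12, 11, 13]
t, df = independent_t(experimental, control)
print(f"t({df}) = {t:.2f}")

# With 30 experimental and 31 control participants, df = 30 + 31 - 2 = 59.
print(30 + 31 - 2)
```

Under this sign convention, a positive t indicates a higher mean in the experimental group and a negative t a higher mean in the control group.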

Based on these findings, it can be argued that the experimental group, which was trained with simulation applications, displayed superior decision-making skills compared with the control group in every dimension except self-esteem in decision-making. These findings suggest that simulation applications are more effective than conventional teaching methods in imparting decision-making skills.

4.1.3 Comparison of problem-solving skill pre-test and post-test scores in the experimental group

A pre-test and post-test were conducted to evaluate the potential discrepancy in problem-solving capabilities among participants in the experimental group before and after the intervention. Since the pre-test and post-test scores for the sub-dimensions of PSI exhibited a normal distribution, a dependent t-test was utilized to compare the scores. The outcomes of the dependent t-test are delineated in Table 6.

As seen in Table 6, when analyzing the pre-test and post-test scores of the participants from the PSI, we observed statistically significant differences in all sub-dimensions, except for the impulsive style sub-dimension (avoidant style [t(29) = 2.97], problem-solving confidence [t(29)= -2.26], monitoring [t(29)= -2.03], planfulness [t(29)= -2.40], and reflective style [t(29)= -2.63]). Furthermore, when calculating the effect sizes of these differences, we found that the differences were strong for the avoidant style dimension (η2 = 0.64) and reflective style dimension (η2 = 0.60). The effect size was determined to be moderate for the problem-solving confidence dimension (η2 = 0.57), monitoring dimension (η2 = 0.54), and planfulness dimension (η2 = 0.57).

4.1.4 A comparative analysis of pre-test and post-test scores for experimental and control groups with regard to problem-solving skills

Since the PSI scores of the school administrators and candidates in the experimental and control groups exhibit a normal distribution, an independent samples t-test was performed to ascertain the presence of a significant difference between the pre-test and post-test scores. The findings of this analysis are presented in Table 7.

As shown in Table 7, the t-test results comparing the pre-test scores of the experimental group and the control group on the PSI indicate no significant differences in the sub-dimensions of avoidant style [t(59) = 0.36], problem-solving confidence [t(59) = 1.66], monitoring [t(59) = 0.07], planfulness [t(59) = 1.12], and reflective style [t(59) = 1.47]. However, there was a significant difference in favor of the experimental group in the impulsive style dimension [t(59) = 3.00]. These findings suggest that, except for the impulsive style dimension, the participants in both the experimental and control groups had similar levels of problem-solving skills before the training process.

Table 8 presents the t-test results for the comparison between the post-test scores of the participants belonging to the experimental and control groups.

As demonstrated in Table 8, the PSI post-test scores of the experimental and control groups differed significantly in all dimensions except the impulsive style sub-dimension (avoidant style [t(54) = -2.58], problem-solving confidence [t(59) = 4.08], monitoring [t(59) = 2.15], planfulness [t(59) = 4.48], and reflective style [t(59) = 3.16]) (p ≤ .05). The effect sizes calculated for these differences were strong for problem-solving confidence (η2 = 1.00), planfulness (η2 = 1.00), reflective style (η2 = 0.80), and avoidant style (η2 = 0.66), and moderate for monitoring (η2 = 0.55).

Based on the acquired findings, it can be posited that the problem-solving skills of the participants in the experimental group, who utilized simulation applications during the training, exhibited a higher proficiency level, except for the dimension of impulsive style in problem-solving, when compared to the control group. This finding suggests that the utilization of simulation applications is a more efficacious approach than traditional methods for teaching problem-solving skills.

4.2 Findings regarding the qualitative dimension

To investigate the viewpoints of participants in the experimental group regarding the influence of simulation-supported training on their decision-making skills, data was collected using the following inquiry: “To what extent did the application of simulation influence your decision-making skills? Please provide specific details about your experiences.” The gathered responses were subsequently categorized, as illustrated in Table 9.

As depicted in Table 9, the participants’ opinions can be classified into two distinct categories: positive effects and negative effects. Within the positive effects category, the most prominent viewpoint expressed by the participants was “gaining experience in decision making” (f = 7). Other positive effects cited by the participants include accelerating the decision-making process (f = 4), gaining a different perspective (f = 3), and making effective and reliable decisions (f = 2). The subcategories falling under the negative effects category include the limited number of scenarios (f = 2) and the simplicity of the scenarios (f = 1).
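The frequency counts reported for Table 9 can be reproduced with a simple tally of coded statements. The sketch below is an assumption about how such a tally might be kept, not the authors' actual procedure. The code labels and frequencies are taken from the paragraph above, while the data structure and variable names are illustrative.

```python
from collections import Counter

# One (category, code) entry per coded statement, using the frequencies
# reported for Table 9.
coded_statements = (
    [("positive", "gaining experience in decision making")] * 7
    + [("positive", "accelerating the decision-making process")] * 4
    + [("positive", "gaining a different perspective")] * 3
    + [("positive", "making effective and reliable decisions")] * 2
    + [("negative", "limited number of scenarios")] * 2
    + [("negative", "simplicity of the scenarios")] * 1
)

# Frequency of each code, and total frequency per category.
code_freq = Counter(code for _, code in coded_statements)
category_freq = Counter(category for category, _ in coded_statements)

for code, f in code_freq.most_common():
    print(f"{code}: f = {f}")
print(dict(category_freq))
```

Summing by category shows that positive views (f = 16) were coded far more often than negative ones (f = 3).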

To determine the viewpoints of participants in the experimental group regarding the influence of simulation-supported education on their problem-solving capabilities, the following inquiry was presented: “What effect did the utilization of simulation have on your problem-solving skills? Could you please share your experiences?” Subsequently, their responses were collected and classified, as presented in Table 10.

As illustrated in Table 10, the participants’ opinions have been classified into two categories: positive effects and negative effects. Among the positive effects, the most frequently expressed view (n = 7) was understanding the problem-solving process. Other positive views included gaining experience in problem-solving (n = 3), adopting a solution-oriented approach to problems (n = 3), recognizing the problem correctly (n = 2), and observing the outcomes of various approaches (n = 3). The negative effects concerned the limited number of questions (n = 4) and the simplicity of the questions (n = 1). These opinions are consistent with the findings derived from the quantitative research.

5 Discussion

In this study, the MDMQ I-II and the PSI were administered before and after the application to obtain findings on the participants’ decision-making and problem-solving skills, respectively. The post-test results for the decision-making dimensions indicate that the experimental group participants scored higher in the Vigilance Decision-Making dimension than the control group participants. Furthermore, the experimental group participants scored lower than the control group participants in the Hypervigilance, Procrastination, and Buck Passing Decision-Making dimensions, which were expected to decrease after the training. No significant difference between the groups was found in the self-esteem in decision-making sub-dimension, although the experimental group’s own scores in this dimension increased significantly from pre-test to post-test. Based on these results, it can be concluded that simulation-based instruction improves participants’ self-esteem in decision-making and Vigilance Decision-Making skills, and is effective in reducing negative tendencies such as Hypervigilance, Procrastination, and Buck Passing Decision-Making.

There is a limited body of research available regarding the impact of simulation applications on the decision-making abilities of school administrators. However, the existing studies in the literature support the aforementioned findings. For instance, Staub and Bravender (2014) conducted a study where decision-making simulations, developed by graduate students in a school administrator training program, were evaluated by practitioners and experienced school administrators. The practitioners assessed the simulations in two sessions and observed that the online simulations facilitated reflection and improvement in the decision-making skills of the graduate students. Consequently, the study concluded that simulation applications offer valuable insights into the decision-making processes of school administrators. Furthermore, the research emphasized the noteworthy benefits of decision-making simulations for inexperienced (novice) school administrators, addressing the existing gaps in their decision-making processes.

In the study conducted by Volante et al. (2020) to investigate the impact of simulations on the decision-making abilities of school administrators, computerized simulations were developed based on the Chilean education system. These simulations were then administered to school leaders using both qualitative and quantitative approaches. The findings of the study indicated that the decision-making skills of school administrators showed improvement as a result of the simulations. Furthermore, Shapira-Lishchinsky (2015) highlighted that simulations offer a promising platform for fostering ethical decision-making practices and enhancing the professional development of educational leaders.

In the post-test scores for problem-solving skill dimensions following the implementation process, a notable decrease was observed in the avoidant problem-solving sub-dimension among participants in the experimental group compared to those in the control group. Conversely, a significant difference was found in favor of the experimental group in the dimensions of problem-solving confidence, monitoring, planfulness, and reflective style, which are sub-dimensions of positive problem-solving skills. Based on these findings, it can be concluded that simulation-supported instruction enhanced the participants’ problem-solving skills in terms of self-confidence, evaluation, planning, and reflection, while effectively reducing their avoidance tendencies, which are negative aspects of problem-solving.

When conducting a review of previous research, it was discovered that only a limited number of studies have been conducted on the impact of simulation applications on the problem-solving skills of school administrators. However, these existing studies do provide support for the aforementioned research findings. For example, Hallinger et al. (2017) conducted research on the transfer of leadership knowledge from theory to practice in the context of Chinese education. They adapted an existing problem-based computer simulation, known as Making Change Happen™, for implementation in China. The findings of their study emphasized that this problem-based application offers an alternative strategy for enhancing the quality of principal education. Similarly, Bravender and Staub (2018) explored the implementation of mentoring programs that utilized problem-based simulations for inexperienced (novice) principals in select states in the USA. Their study concluded that problem-based simulation applications proved to be effective tools for identifying and resolving various issues within schools.

Data availability

Data will be available on request.

References

Bravender, M., & Staub, N. (2018). Using interactive, problem-based simulations in a mentoring program for novice school leaders. Education Leadership Review, 19(1), 77–91.

Chance, E. W., & Chance, P. L. (2000). The use of simulations in principal Preparation Programs: A Bridge between the Classroom and Field-based experiences. Toward the Year, 132–139.

Chernikova, O., Heitzmann, N., Stadler, M., Holzberger, D., Seidel, T., & Fischer, F. (2020). Simulation-based learning in higher education: A meta-analysis. Review of Educational Research, 90(4), 499–541.

Creswell, J. W., & Plano Clark, V. L. (2018). Designing and conducting mixed methods research (3rd ed.). SAGE.

Culbertson, J. A. (1968). Amendment to the revising and updating the Jefferson Township Simulation materials Contract. the Development and Testing of Gamed Simulations.

Deniz, M. E. (2004). Investigation of the relation between decision-making Self-Esteem, decisionmaking styles and Problem solving skills of University students. Eurasian Journal of Educational Research, 4(15), 23–35.

Doğan, V., Özkara, B. Y., Yılmaz, C., & Torlak, Ö. (2017). Examining the number of participation Level options: An inference on the optimal participation level number of options. Journal of Business Research, 9(1), 464–484.

Ellington, H. (1981). Games and simulations in Science Education. Nichols Publishing Company. PO Box 96, New York, NY 10024.

Engin, A. W., & Miller, J. N. (1975). The training of school psychologists through simulation: PSYCHSIM. Psychology in the Schools, 12(1), 40–42.

Faria, A. J. (2001). The changing nature of Business Simulation/Gaming Research: A brief history. Simulation and Gaming, 32(1), 97–110.

Faria, A. J., Hutchinson, D., Wellington, W. J., & Gold, S. (2009). Developments in Business Gaming: A review of the past 40 years. Simulation and Gaming, 40(4), 464–487.

Goldschmidt, K., & Msn, P. D. (2020). The COVID-19 pandemic: Technology use to support the wellbeing of children. Journal of Pediatric Nursing, 3–5.

Hallinger, P., Shaobing, T., & Jiafang, L. (2017). Learning to make change happen in Chinese schools: Adapting a problem-based computer simulation for developing school leaders. School Leadership & Management, 37(1–2), 162–187.

Hofstede, G. J., De Caluwé, L., & Peters, V. (2010). Why simulation games work-in search of the active substance: A synthesis. Simulation and Gaming, 41(6), 824–843.

Karabatak, S., & Şengür, D. (2018). An alternative method in Training School administrators: Simulations. Journal of Theoretical Educational Science, 11(4), 748–771.

Kelton, W. D. (1999). Designing Simulation Experiments. In Proceedings of the 31st conference on Winter simulation: Simulation—a bridge to the future- 1, 33–38.

Mann, L., Radford, M., Burnett, P., Ford, S., Bond, M., Leung, K., & Yang, K. S. (1998). Cross-cultural differences in self-reported decision-making style and confidence. International Journal of Psychology, 33(5), 325–335.

McClarty, K. L., Orr, A., Frey, P. M., Dolan, R. P., Vassileva, V., & McVay, A. (2012). A Literature Review of Gaming in Education. Pearson.

Militello, M., Tredway, L., Hodgkins, L., & Simon, K. (2021). Virtual reality Classroom simulations: How School leaders Improve Instructional Leadership Capacity. Journal of Educational Administration.

Palmunen, L. M., Lainema, T., & Pelto, E. (2021). Towards a manager’s Mental Model: Conceptual change through Business Simulation. The International Journal of Management Education, 19(2), 100460.

Patrick, S. (2002). Numerical Simulation of Electric Power Steering (EPS) System. KOYO Engineering Journal English Edition, 16, 52–56.

Pekdağ, B. (2010). Alternative ways in Chemistry Learning: Learning with Animation, Simulation, Video and Multimedia. Turkish Journal of Science Education, 7(2), 79–110.

Renken, M., Peffer, M., Otrel-Cass, K., Girault, I., & Chiocarriello, A. (2016). Simulations as scaffolds in science education. Bloomington, IN: Association for Educational Communications and Technology, in collaboration with Springer International

Şahin, N., Şahin, N. H., & Heppner, P. P. (1993). Psychometric properties of the problem solving inventory in a group of Turkish university students. Cognitive Therapy and Research, 17(4), 379–396.

Schrum, L., & Levin, B. B. (2016). Educational technologies and twenty-first century leadership for learning. International Journal of Leadership in Education, 19(1), 17–39.

Shapira-Lishchinsky, O. (2015). Simulation-based Constructivist Approach for Education leaders. Educational Management Administration and Leadership, 43(6), 972–988.

Showanasai, P., Lu, J., & Hallinger, P. (2013). Developing tools for research on school leadership development: An illustrative case of a computer simulation. Journal of Educational Administration.

Staub, N. A., & Bravender, M. (2014). The construction of simulations as an instructional activity for graduate students in an Education Leadership Program. Leadership and Research in Education, 1, 67–78.

Storey, V. A., & Dambo, N. J. (2016). Mixed-reality immersive environment as an instructional tool for building educational leadership capacity: Is this the future? In V. C. Wang (Ed.), Handbook of research on learning outcomes and opportunities in the Digital Age (pp. 744–768). IGI Global.

Anderson, M. E. (1991). Principals: How to Train, Recruit, Select, Induct, and Evaluate Leaders for America’s Schools. ERIC Clearinghouse on Educational Management College of Education University of Oregon, 1787 Agate Street, Eugene, OR 97403.

Anderson, P. H., & Lawton, L. (2009). The relationship between goal orientation and simulation performance with attitude change and perceived learning. In Developments in Business Simulation and Experiential Learning: Proceedings of the Annual ABSEL conference (Vol. 36).

Volante, P., Jeldres, R., Spero, K., Llorente, C., & Johanek, M. C. (2020). Simulations for the learning of decision making in educational leadership in the context of the Chilean school system. Research in Educational Administration and Leadership, 5(1), 1–41.

Wildman, L. (2001). Research on the preparation of school administrators, paper prepared for the Board of the National Council of Professors of Educational Administration, Bakersfield, CA, August.

Young, M. D., Crow, G. M., Murphy, J., & Ogawa, R. T. (Eds.). (2009). Handbook of research on the education of school leaders. Routledge.

Acknowledgements

The authors would like to thank all participating students.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK). This study was supported by Fırat University Scientific Research Projects Coordination (FÜBAP) Unit with project number EF.22.04.

Ethics declarations

Generative AI and AI-assisted technologies in the writing process

Not applicable.

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Atici, E.K., Turhan, M. The impact of simulation applications on the problem-solving and decision-making skills of school administrators and prospective administrators: An experimental study. Educ Inf Technol (2024). https://doi.org/10.1007/s10639-024-12866-x

DOI: https://doi.org/10.1007/s10639-024-12866-x