Abstract

Digital competence (DC) has become a key element for future teachers in the effort to guarantee a high-quality education system that responds to the needs of the 21st century. For this reason, numerous studies have tried to evaluate the DC of university students in education degrees, although very few have focused on the differences according to the academic year or the methodology used. This study aims to determine the level of DC among 1st and 4th year Primary Education undergraduates at two Spanish universities that employ different learning methods (face-to-face and online). The sample comprised 396 undergraduates who completed an online instrument (with a 10-point response scale) called the Higher Education Student Digital Competence Questionnaire (CDAES). The results reveal that students’ level of DC upon graduation is basic-intermediate and that the dimensions in which they are most proficient are ‘Digital Citizenship’ and ‘Innovation’. However, the tasks performed to justify this level are basic. The results also indicate that students’ DC improves as they progress in their degree and that the online method seems to be more effective in promoting this particular competency. We can therefore conclude that tasks specially designed to improve DC are included in teacher training degrees, particularly in the case of online courses, although we are unable to determine which specific practices or methodologies foster better outcomes. To clarify this, new empirical approaches that focus on these aspects are required, along with specific improvement actions or initiatives adapted to the needs of each individual group.

1 Introduction

The 21st century will go down in history for being the era that saw the dawn of the ‘Digital Society’. The fast, energetic spread of Information and Communication Technology (ICT) during this period has had a direct, progressive impact on the way in which people all over our rapidly-changing, increasingly digitised and, consequently, globalised world study, work, live together and interact (Basilotta et al., 2022; Silva & Morales, 2022). In the field of education, the swift growth of digital resources has called both the policies and infrastructure that underpin schools into question (Colomo et al., 2023; Fernández-Batanero et al., 2021). This in turn has prompted a gradual transformation in several fundamental aspects of the teaching-learning (T-L) process, including targets, the curriculum, paradigms, resources, contents, techniques, assessment and, most particularly, the relationship between teachers and their students (Cabero-Almenara et al., 2020; Gros & Silva, 2005). In this scenario, students are no longer mere recipients of contents, but rather active, independent subjects aware of their responsibility in terms of promoting less classical and more innovative T-L processes (Pertusa-Mirete, 2020), with the support of educational technologies (Garcés-Prettel et al., 2014).

Consequently, the extant literature emphasises the importance of preparing students (Romero-Tena et al., 2020), existing teachers or educational stakeholders (Fernández-Miravete & Prendes-Espinosa, 2022; Inamorato et al., 2023) and future teachers (Aguilar et al., 2022; Colomo et al., 2023; Girón-Escudero et al., 2019) to respond to the new ‘demands of the script’ in life, at school and in the labour market (Inamorato et al., 2023). In other words, previous studies underscore the need to train all those involved in teaching to become more digitally competent in order to enable them to transform and improve education (Basilotta et al., 2022). This approach has become particularly popular since the European Commission (2007, 2018) began arguing that ‘Digital Competence’ is a basic cross-cutting competence that citizens in general and educational stakeholders in particular (Cabero-Almenara et al., 2021) should develop in order to respond to the demands of an increasingly changeable and interconnected society and education system (Romero-García et al., 2020; Silva & Morales, 2022).

1.1 Areas or dimensions of digital competence

Digital Competence (DC) encompasses knowledge, skills, attitudes and awareness (Ferrari, 2012) that foster the ‘confident, critical and responsible use of, and engagement with, digital technologies for learning, at work, and for participation in society’ (European Commission, 2018). In this sense, DC in the educational field encompasses a set of skills, abilities, strategies and aptitudes that foster the fruitful, efficient, critical, responsible, safe, creative, autonomous, inclusive, ethical and effective integration of ICT and the digital media into teaching, learning, design, problem-solving, research, assessment and interaction practices in the school environment (Rodríguez-García et al., 2019; Romero-Tena et al., 2021). In sum, DC is a multidimensional, comprehensive competence that covers a range of different independent yet complementary areas (literacy, access to information, multimedia creation, collaboration, safety and ethics) (Gutiérrez-Castillo et al., 2017; Pozo-Sánchez et al., 2020) that may be improved or developed (Fernández-Miravete & Prendes-Espinosa, 2022) in order to both design virtual learning environments and participate in them (Gros & Silva, 2005).

Many different national and international standards and reference models have arisen in this scenario (Mattar et al., 2022) with the aim of establishing the priority areas or dimensions of digital competence that should be fostered among students and teachers (Basilotta et al., 2022; Jiménez-Hernández et al., 2020). The most important of these are outlined in Table 1.

These models and indicators have in turn prompted diverse studies aimed at developing instruments for assessing DC (Gabarda et al., 2020; Jiménez-Hernández et al., 2020), establishing six levels for measuring this construct: beginner (A1), explorer (A2), integrator (B1), expert (B2), leader (C1) and pioneer (C2) (Gutiérrez-Castillo et al., 2017; Romero-Tena et al., 2020). At the same time, a concerted effort has been made to develop training programmes to improve the DC of both students and teachers (Inamorato et al., 2023). Proficiency in the use of ICT is vital to improving education and reducing the digital divide, one of the greatest challenges in terms of ensuring, in accordance with the 2030 Agenda, ‘inclusive and equitable quality education’ and promoting ‘lifelong learning opportunities for all’ at all levels of the education system (Spanish Government, 2015, p. 28). Nevertheless, empirical research to date has focused mainly on DC in pre-university stages (Basilotta et al., 2022). Given that the empirical-academic activities carried out in the university environment constitute an ideal framework for acquiring DC (Romero-Tena et al., 2021) and that future teachers (i.e., undergraduate trainee teachers) are, from a holistic perspective, agents of change (García-Correa et al., 2022) with a pivotal role (Gao et al., 2024) in the transmission of DC (Moreno-Rodríguez et al., 2018), increasing attention is currently being paid to determining and responding to their digital needs.

1.2 Digital competence among primary education undergraduate students

In the university field, most empirical studies have assessed DC using self-reports (Pérez-Navío et al., 2021). Research carried out with Primary Education undergraduates has found, in general, that these trainee teachers consider themselves to be competent for engaging in basic activities: searches, presentation and organisation of content in different formats, security and online collaboration with educational professionals (Gallego-Arrufat et al., 2019; Moreno-Fernández et al., 2021; Silva & Morales, 2022). However, studies also highlight the existence of difficulties and a low level of training in terms of using new technologies or specific technical skills to create and manage contents, solve problems (Basilotta et al., 2022; Cabero-Almenara et al., 2021) or foster innovative educational practices (Moreno-Rodríguez et al., 2018; Røkenes & Krumsvik, 2014).

In this context, the extant literature also reports differences in digital skills in accordance with aspects such as gender, academic year and learning format. In the case of gender, the results are inconsistent. For example, Jiménez-Hernández et al. (2020) report a gender gap, with men, in general, scoring higher than women in all areas of DC and being effective in the use and management of information and digital security (Gallego-Arrufat et al., 2019). In contrast, Marimon-Martí et al. (2023) found that women scored higher than men in all dimensions, particularly in ‘advanced information search’ and ‘online collaboration’ (López-Belmonte et al., 2019; Pérez-Navío et al., 2021). Finally, Marín-Suelves et al. (2022) found no gender differences at all. The situation in relation to academic year is totally different, with all studies analysing this variable unanimously affirming that undergraduates’ technological competence increases as they progress in their degree (Marimon-Martí et al., 2023; Marín-Suelves et al., 2022; Romero-Tena et al., 2021).

The effects of learning format (face-to-face or online) have been less widely studied in this field. In a comparative study with a group of students who had to migrate during the pandemic from a face-to-face format to an online one, Romero-Tena et al. (2021) found that this abrupt change, with no previous preparation, had a considerable negative impact on participants’ competence level and academic development, with no differences being detected between those who had received specific training in digital material and those who had not. For their part, Álvarez-Herrero (2020) argue that students and teachers who have been trained in new technologies are better prepared and are more enthusiastic to cope with the challenges of a changing field in which emerging methodologies and technologies (gamification, blended learning, design thinking, etc.) often overlap and are transforming life in the classroom (Gao et al., 2024). The research community has, in general, begun to advocate for the need to design training plans that contemplate or incorporate activities designed to foster the practical, critical, intelligent and responsible use of DC, right from the very beginning (Romero-Tena et al., 2021; Silva & Morales, 2022; Wang et al., 2023). It is therefore important to analyse the differences and similarities in DC among students learning in a face-to-face format and those learning online.

1.3 General and specific aims

The general aim of the present study is to determine the level of DC among 1st and 4th year Primary Education undergraduates at two Spanish universities that employ different learning methods (face-to-face and online). The specific aims are as follows:

A1. To analyse the level of DC among a sample of Spanish undergraduate students.

A2. To compare the level of DC in accordance with factors such as gender, academic year and learning format.

2 Material and method

To achieve the aforementioned aims, the present study follows a quantitative, descriptive, comparative and correlational design.

2.1 Participants

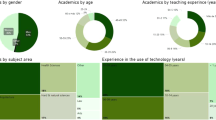

The questionnaire was completed by a representative sample of 396 students (22.5% men and 77.5% women) aged between 18 and 41 years (M = 20.81; SD = 2.33) from two public universities. The sample was recruited evenly across the different academic years and universities (Year 1: n = 117 at U1 and n = 116 at U2; Year 4: n = 79 at U1 and n = 84 at U2) (Table 2). In general, participants claimed to spend over 5 hours a week on their computers (83%). Most of those on face-to-face courses (61.7%) reported spending between 5 and 20 hours a week in front of a screen, whereas most of those on online courses (55%) reported spending over 20 hours a week on their computers.

2.2 Instruments

The data collection instrument used in the present study was a self-report questionnaire comprising a battery of sociodemographic questions and a scale adapted and validated with future Spanish teachers: the Higher Education Student Digital Competency Questionnaire (CDAES), developed by Gutiérrez-Castillo et al. (2017) on the basis of the indicators proposed by the ISTE (2023) and the INTEF (2017). The questionnaire comprises 44 items rated on a 10-point scale (1 = Completely incapable; 10 = Completely capable) and measures students’ digital performance in six dimensions encompassing a range of activities and situations designed to test their ability to effectively use ICT: (1) Technological literacy (effective use and application of ICT); (2) Information search and processing (positive aptitudes for searching, analysing, managing, assessing and transmitting information using ICT); (3) Critical thinking (digital capacity to define, plan, develop, apply and assess projects); (4) Communication and collaboration (aptitudes for collaborating and interacting on digital platforms); (5) Digital citizenship (ethical, safe and responsible use of ICT); and (6) Creativity and innovation (use of ICT tools as an innovative resource for changing and improving existing knowledge).

Consistent with the findings of Gutiérrez-Castillo et al. (2017), the internal consistency of the whole instrument was adequate (α = 0.96).
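For readers less familiar with this coefficient, the following minimal Python sketch shows how Cronbach’s α can be computed from a respondents-by-items score matrix. It is an illustration only and not the authors’ procedure (the study reports using IBM SPSS); the function name `cronbach_alpha` and the toy responses are assumptions introduced here.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    Hypothetical helper for illustration; not taken from the study.
    """
    k = len(responses[0])  # number of items
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Toy data (made up): three respondents answering three items identically,
# so the items are perfectly consistent with one another.
toy_responses = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
alpha = cronbach_alpha(toy_responses)
```

Values close to 1 indicate that the items vary together; the sketch does not guard against the degenerate case in which all respondents have the same total score.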

2.3 Procedure

The sample was recruited through lecturers teaching on the Primary Education undergraduate degree at the two universities, who, after being informed of the aims and procedure of the study, agreed to help disseminate the link to the Microsoft Forms questionnaire used to collect the data. The Microsoft Forms platform was chosen because the Ethics Committee at the University of the Basque Country (CEISH) had identified it as the one that best guaranteed respondents’ anonymity (M10_2023_178). Participation was voluntary and, before completing the questionnaire, respondents signed an informed consent form.

Participants’ responses were first downloaded, cleaned and processed using Microsoft Excel, before being exported to IBM SPSS Statistics 28.

2.4 Data analysis

To guarantee that the instrument was suitable for use in academic research, its reliability was analysed using Cronbach’s alpha (α). Next, to fulfil the study aims and answer the research questions, descriptive analyses were carried out of the dimensions and their indicators (means and standard deviations) and a correlation analysis was performed (Pearson). After the Kolmogorov-Smirnov test had determined that the data followed a normal distribution (p ≥ .05), several comparative analyses were carried out (Student’s t for independent samples) and the effect sizes were calculated using Cohen’s d, in accordance with the following criteria: d < 0.20 was considered to indicate no effect, d = 0.21–0.49 a small effect, d = 0.50–0.70 a moderate effect and d > 0.80 a large effect (Cohen, 2013).
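The effect-size step can be sketched in Python as follows. This is a hedged illustration rather than the authors’ code: it assumes Cohen’s d is computed with the pooled standard deviation (the text does not state which variant was used), and the names `cohens_d` and `effect_label` are hypothetical. Because the stated criteria leave d = 0.20 and the interval between 0.70 and 0.80 unassigned, the sketch assumes that d up to and including 0.20 counts as no effect and that values from 0.50 up to, but not including, 0.80 count as moderate.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled SD (assumption)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


def effect_label(d):
    """Map |d| to the bands listed in the text; boundary handling is assumed."""
    size = abs(d)
    if size <= 0.20:
        return "none"
    if size < 0.50:
        return "small"
    if size < 0.80:  # assumption: covers the unassigned 0.70-0.80 interval
        return "moderate"
    return "large"


# Toy scores (made up) for two small groups on a 10-point scale.
d_example = cohens_d([2, 4, 6], [1, 3, 5])
label_example = effect_label(d_example)
```

The sign of d depends only on the order in which the two groups are passed, which is why the label is based on the absolute value.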

3 Results

3.1 General digital competence in each dimension and indicator

Participants obtained a general DC score of 6.91 points, indicating a low-intermediate or ‘explorer’ level (Romero-Tena et al., 2020). The least developed areas were ‘Information search and processing’, ‘Technological literacy’ and ‘Critical thinking’. In contrast, the most developed areas (at an ‘integrator’ level) were ‘Communication and collaboration’, ‘Digital citizenship’ and ‘Creativity and innovation’ (Fig. 1).

Participants scored particularly highly in some basic aspects of the following sub-dimensions or indicators: safe, legal and responsible use of information and ICT (for example, respecting copyright) (Area-5.1: M = 7.36; SD = 1.64), understanding and use of operating systems and browsers (Area-1.1: M = 8.11; SD = 1.88), participation in and coordination of group activities (Area-4.4: M = 7.81; SD = 1.68) and creation of original resources for expressing oneself (Area-6.2: M = 7.68; SD = 1.95). In contrast, they had difficulties performing more complex tasks, such as offering constructive criticism and taking on a digital leadership role (Area-5.3: M = 6.72; SD = 1.88), researching problems and configuring systems (e.g., antivirus software, hard drives, etc.) to solve them (Area-1.3: M = 5.33; SD = 2.39) and configuring resources, programs and processes (e.g., software, hardware) to offer alternative solutions (Area-3.4: M = 5.13; SD = 2.21).

3.2 General digital competence in each dimension and indicator, by gender

In terms of gender, men generally scored better in all the different dimensions of DC, although the differences were not significant (p > .05). Specifically, the greatest differences (with men scoring higher) were found in ‘Critical thinking’, although the effect size was small (d = 0.37) (Fig. 2).

If we analyse the individual results for each indicator, we see that men were better at configuring systems and resources to offer alternative solutions (Area-3.4: p = .001; d = 0.70) and at resolving problems using different ICT systems and applications (Area-1.3: p = .001; d = 0.47). They were also better at studying the possibilities and limitations of ICT resources in order to make informed decisions (Area-3.3: p = .001; d = 0.34) and at organising, assessing, summing up and using information ethically with the help of ICT (Area-2.2: p = .001; d = 0.29), although again, the differences were small. For their part, women scored slightly higher for understanding and using multiple technological systems (devices, browsers, etc.) (Area-1.1), as well as for planning customised digital activities in order to solve problems (Area-3.2), again with fairly small differences.

3.3 General digital competence in each dimension and indicator, by academic year

The results for DC by academic year revealed that students in year 4 of their Primary Education teacher training degree performed better in all areas, attaining an intermediate or ‘integrator’ level, as opposed to the low-intermediate or ‘explorer’ level attained by 1st year students. Students in the last year of their degree scored significantly higher in all dimensions and indicators (p = .001), particularly ‘Communication and collaboration’, with a medium effect size (p = .001; d = 0.65). It is worth noting, however, that both groups had the most difficulty in the ‘Critical thinking’ dimension (Table 3).

In terms of the indicators measured, as shown in Table 3, both groups scored highest for understanding and using different types of ICT (devices, operating systems, etc.) (Area 1.1) and lowest for configuring different resources (software, hardware, etc.) to offer alternative solutions (Area 3.4) and solving problems in diverse systems and applications (Area 1.3). The results also revealed that inter-group differences (with 4th year students scoring higher) were greater in those indicators referring to the identification and definition of significant research problems and questions (Area 3.1: p = .001; d = 0.66), interaction using different media (Twitter, YouTube, etc.) and formats (video, audio, image, etc.) (Area 4.2: p = .001; d = 0.47) and collaboration in multidisciplinary teams to develop original projects using ICT (Area 4.4: p = .001; d = 0.41).

3.4 General digital competence in each dimension and indicator, by learning format

In terms of DC level by learning format, students on the online course scored significantly higher in all dimensions than their counterparts on the face-to-face course. Indeed, the online group achieved an intermediate or ‘integrator’ level in all areas, as opposed to the ‘explorer’ level attained by the face-to-face group. In general, the dimension in which both groups scored highest was ‘Creativity and innovation’ (p = .001; d = 1.01), and the dimension in which they scored lowest was ‘Critical thinking’, although with significant differences (p = .001; d = 1.18) (Table 4).

If we look carefully at the dimensions and indicators shown in Table 4, we see that the indicator for which both groups scored highest was the use of multiple ICT resources, with online students scoring significantly higher than their face-to-face counterparts (Area 1.1: p = .001; d = 0.75). Similar results were also found in relation to collaborating in the development of original group projects to solve problems (Area 4.4: p = .001; d = 0.74) and creating original basic contents, with online students scoring particularly highly for this indicator (Area 6.2: p = .001; d = 0.70). Nevertheless, the results also reveal a lower performance level (particularly among face-to-face students) in identification of trends and possibilities (Area 6.3: p = .001; d = 1.01) and the use of multiple ICT tools and resources to optimise work and offer alternative responses (Area 3.4: p = .001; d = 0.94). Moreover, both groups had difficulties resolving problems using different technological systems (decompressing a hard drive, configuring an email account, etc.) (Area 1.3: p = .001; d = 1.85).

4 Discussion

The present study aimed to determine the DC level of a sample of Spanish undergraduate trainee teachers on a Primary Education degree and to analyse the relationship between DC and factors such as gender, academic year and learning format.

4.1 Digital competence in each dimension and indicator

In relation to the first aim, the results reveal that, in general, participants had a low-intermediate (‘explorer’ or ‘integrator’ A2-B1) level in the different dimensions assessed using the CDAES questionnaire. In other words, the results point to a lack of training among undergraduate teacher training students. These findings are similar to those reported by Romero-Tena et al. (2020), who, using the same instrument, found that students on Pre-school and Primary Education teacher training degrees had (initially and with no specific training) a basic level of technological competence. Our results are also consistent with those reported by Basilotta et al. (2022) and Cabero-Almenara et al. (2021), who confirmed the existence of a notable gap between the training received and the expectations and demands of a digital society (Marín-Suelves et al., 2022). However, they contrast with the conclusions published by Pinto-Santos et al. (2020) and Marimon-Martí et al. (2023), who found that students had a high perception of their own DC.

Consistent with the observations of Silva and Morales (2022), respondents scored highest in the ‘Communication and collaboration’ and ‘Digital citizenship’ dimensions. This is also similar to the findings of Marín-Suelves et al. (2022), who, in a sample of 230 undergraduate and postgraduate Education students, found that participants scored highest for working in interconnected teams, communicating adequately in a variety of different formats, and having a high awareness of the importance of digital security. Our results also coincide with the conclusions drawn by Pinto-Santos et al. (2020) and Colomo et al. (2023), who highlighted the fact that future primary school teachers performed well in aspects linked to the safe, legal and responsible use of information, since they accepted as a basic principle in their teaching practice both the ethical and legal use of online contents and a commitment to lifelong learning in order to perfect their knowledge and keep abreast of new developments. Nevertheless, the extant literature points out that even though trainee teachers have a good knowledge of and are aware of these aspects (Gallego-Arrufat et al., 2019), they lack the specific skills necessary to apply them online or foster their use among learners (Romero-Tena et al., 2020). This may explain the lower scores obtained in the more complex activities in this study. Particularly striking are the scores obtained for ‘Creativity and innovation’, since the design and creation of teaching-learning content or spaces (Marimon-Martí et al., 2023), the use of emerging technologies and simulators (Colomo et al., 2023) and the establishment of innovative practices are usually tasks in which students have been found in previous studies to have serious gaps in their knowledge (Moreno-Rodríguez et al., 2018; Romero-Tena et al., 2020).

In contrast, the least developed areas were ‘Information search and processing’, ‘Technological literacy’ and, above all, ‘Critical thinking’. Although these findings are similar to those reported by Silva and Morales (2022), they differ from those found in a large number of other studies that observed that both information search and (especially) technological literacy (Colomo et al., 2023) are areas in which participants tend to score highest (Moreno-Fernández et al., 2021; Pérez-Navío et al., 2021). One possible explanation for this may be found in those studies that assert that although future teachers have a good level in simple or instrumental skills as applied to everyday life, they lack the ability to make use of more technical or specialist knowledge (Basilotta et al., 2022). The scores obtained in ‘Critical thinking’, however, highlight the difficulty experienced by trainee teachers when asked to identify problems or come up with alternative solutions to them using a variety of ICT resources (Girón-Escudero et al., 2019; Romero-Tena et al., 2020).

4.2 Digital competence in each dimension and indicator, by gender, academic year and learning format

In relation to the second aim, although the training received could be improved for both genders, academic years and learning formats, significant differences were found in relation to these variables that are worth highlighting.

In terms of gender, men scored higher in all dimensions, although the size of the male group was notably smaller than that of the female one and the differences observed were not significant, except in the case of ‘Critical thinking’ (d = 0.37). These results are similar to those reported by Jiménez-Hernández et al. (2020) and Pinto-Santos et al. (2020), who, unlike Marín-Suelves et al. (2022) and Marimon-Martí et al. (2023), confirmed the existence of a gender gap, observing that men were usually more effective in activities linked to information management, digital security, online collaboration and content creation (Gallego-Arrufat et al., 2019; Pérez-Navío et al., 2021).

In relation to academic year, 4th year students scored higher than their 1st year counterparts. Consistent with the findings of Gabarda et al. (2020), who compared 104 students from different years, our results confirm that the ‘Technological literacy’ (d = −0.61) and ‘Communication and collaboration’ (d = −0.65) dimensions were the ones in which students progressed most, thereby suggesting that they are the fields focused on most during the undergraduate degree course. The fostering of practices that require the use of ICT in the everyday life of the classroom is directly linked to the increase observed in students’ DC from one academic year to the next (Romero-García et al., 2020). Indeed, empirical research holds that common practices in teacher training degrees, such as online group projects, advanced searches for resources while respecting intellectual property, and even syllabus design may explain the gradual improvement of these skills (Marín-Suelves et al., 2022; Romero-Tena et al., 2020).

In relation to learning format, the results indicate that the face-to-face group scored significantly lower in all areas than their online counterparts, thereby confirming the association between DC and online teaching. Although this link has received scarce empirical attention to date, training that includes new technologies seems to be more effective in preparing future teachers for the digital world (Álvarez-Herrero, 2020). It is important to point out here that although one may be tempted to think that these results are linked to aspects such as the use of electronic devices during the T-L process itself, the use of an LMS (Learning Management System) to disseminate resources or the change from physical to digital content format, previous studies have found that it is in fact due to the establishment of different practices, methodologies and challenges that test and foster students’ DC, while at the same time fostering their contact with specific knowledge and skills linked to its different areas (Gutiérrez-Porlán & Serrano-Sánchez, 2016; Romero-Tena et al., 2020).

5 Conclusions

The results found in this study allow us to draw several conclusions. It is vital to include specific training in ICT in the initial training of future teachers, regardless of the learning format chosen, in order to promote and guarantee the development of their DC beyond everyday use. As the European Commission (2018) points out, innovative training actions and activities are required right from the initial teacher training, in order to ensure academic-personal training that is in keeping with the demands of today’s digital society. Having a basic-intermediate level of DC is no longer enough. It is therefore essential to provide, particularly in face-to-face degree courses, progressive and adapted training designed to guarantee that, upon graduation, students will have an ‘expert’ level. To this end, although some studies advocate prioritising communication and collaboration (Silva & Morales, 2022) or the creation of digital content (Jiménez-Hernández et al., 2020), the results presented here prompt the conclusion that it is important to work on all dimensions simultaneously. For example, we recommend including different activities, with an ever-increasing level of complexity, to enable trainee teachers to practically, effectively and intelligently develop their DC in each different subject. This would enable aspiring teachers to feel fully empowered and able to use ICT to guide their students in the T-L process, engaging in all the design, creation, collaboration, resolution and assessment activities required by their future profession.

In turn, for the effective integration of ICT in schools, i.e., to guarantee the support and improvement of educational practice, it is crucial to focus on its pedagogic function (Basilotta et al., 2022). This involves boosting the knowledge and critical thinking of future teachers to enable them to identify and understand the digital resources that best adapt to the content and methodologies used in the classroom; showing them, for example, different models, such as TPACK (Technological Pedagogical Content Knowledge), which enable them to explore their full potential (Garcés-Prettel et al., 2014). In addition, Gabarda et al. (2020) highlight the importance of incorporating ICT and DC into the teacher training curriculum, in order to enable changes to be made to both syllabuses and subjects, since the current content taught has remained more or less unchanged over recent decades (Silva & Morales, 2022). Nevertheless, no information has yet been gathered regarding these factors, a gap that future studies should seek to fill in order to enable a more comprehensive description of the situation.

The present study has certain limitations that should be taken into consideration when attempting to replicate or generalise the results reported here. The first is linked to the fact that DC was measured using a self-report instrument, although an effort was made to mitigate its inherent lack of objectivity by taking into consideration the scores for the individual indicators in each dimension. The second limitation is related to the fact that the study was carried out in only two Spanish universities, meaning that, although the sample was large enough to be representative of those two institutions, it was not large enough to be representative of Spain as a whole.

This said, the results ratify the need to continue working on this issue. Future studies should strive to recruit larger and more heterogeneous samples, and may also wish to use quantitative and, above all, qualitative techniques to analyse those factors that determine the DC of future teachers, with the aim of understanding the difficulties they perceive during their professional practice. In this sense, it is important to try to broaden our current knowledge of those educational methodologies and practices that are used and promoted in online courses and have a positive impact on DC. This will enable us to define innovative educational strategies that are adapted to the demands of today’s digital society and can be used in face-to-face teaching. It may also be a good idea to conduct comparative studies focused on variables such as university access pathways, knowledge area, type of university (public or private), and country or context, since the international literature has begun to warn of the key role such variables may play in DC.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Aguilar, Á. I., Colomo, E., Colomo, A., & Sánchez, E. (2022). COVID-19 y competencia digital: percepción del nivel en futuros profesionales de la educación. Hachetetepé Revista Científica de Educación y Comunicación, 24, 1–14. https://doi.org/10.25267/Hachetetepe.2022.i24.1102

Álvarez-Herrero, J. F. (2020, November 19). La competencia digital del alumnado universitario de educación ante el reto del cambio a modalidad de enseñanza online por la COVID-19. Estudio de caso sobre la efectividad de una formación previa [Oral Communication]. VII Iberoamerican Conference on Educational Innovation in the field of ICT and TAC, Las Palmas, Spain. https://accedacris.ulpgc.es/bitstream/10553/76543/2/La_competencia_digital_del_alumnado.pdf

Basilotta, V., Matarranz, M., Casado-Aranda, L. A., & Otto, A. (2022). Teachers’ digital competencies in higher education: A systematic literature review. International Journal of Educational Technology in Higher Education, 19(8), 1–16. https://doi.org/10.1186/s41239-021-00312-8

Cabero-Almenara, J., Barroso-Osuna, J., Palacios-Rodríguez, A., & Llorente-Cejudo, C. (2020). Marcos de competencias digitales para docentes universitarios: Su evaluación a través del coeficiente competencia experta. Revista Electrónica Interuniversitaria de Formación del Profesorado, 23(3), 17–34. https://doi.org/10.6018/reifop.414501

Cabero-Almenara, J., Guillén-Gámez, F. D., Ruiz-Palmero, J., & Palacios-Rodríguez, A. (2021). Digital competence of higher education professor according to DigCompEdu. Statistical research methods with ANOVA between fields of knowledge in different age ranges. Education and Information Technologies, 26, 4691–4708. https://doi.org/10.1007/s10639-021-10476-5

Cohen, J. (2013). Statistical power analysis for the behavioral sciences. Academic.

Colomo, E., Aguilar, Á. I., Cívico, A., & Colomo, A. (2023). Percepción de futuros docentes sobre su nivel de competencia digital. Revista Electrónica Interuniversitaria de Formación del Profesorado, 26(1), 27–39. https://doi.org/10.6018/reifop.542191

European Commission (2007). Un planteamiento europeo de la alfabetización mediática en el entorno digital. http://eur-lex.europa.eu/legalcontent/ES/TXT/?uri=URISERV:l24112

European Commission (2018). DigComp 2.1. Marco de Competencias Digitales para la Ciudadanía. https://www.aupex.org/centrodocumentacion/pub/DigCompEs.pdf

Fernández-Batanero, J. M., Román-Graván, P., Montenegro-Rueda, M., López-Meneses, E., & Fernández-Cerero, J. (2021). Digital teaching competence in higher education: A systematic review. Education Sciences, 11(11), 689. https://doi.org/10.3390/educsci11110689

Fernández-Miravete, Á. D., & Prendes-Espinosa, M. P. (2022). Evaluación del proceso de digitalización de un centro de enseñanza secundaria con la herramienta SELFIE. Contextos Educativos, 30, 99–116. https://doi.org/10.18172/con.5357

Ferrari, A. (2012). Digital competence in practice: An analysis of frameworks. European Commission, Joint Research Centre (JRC). https://op.europa.eu/es/publication-detail/-/publication/2547ebf4-bd21-46e8-88e9-f53c1b3b927f/language-en

Gabarda, V., Marín, D., & Romero, M. M. (2020). La competencia digital en la formación inicial docente. Percepción de los estudiantes de magisterio de la Universidad de Valencia. Ensayos: Revista de la Facultad de Educación de Albacete, 35(2). https://doi.org/10.18239/ensayos.v35i2.2176

Gallego-Arrufat, M. J., Torres-Hernández, N., & Pessoa, T. (2019). Competence of future teachers in the digital security area. Comunicar, 61, 57–67. https://doi.org/10.3916/c61-2019-05

Gao, Y., Wang, Q., & Wang, X. (2024). Exploring EFL university teachers’ beliefs in integrating ChatGPT and other large language models in language education: A study in China. Asia Pacific Journal of Education, 44(1), 29–44. https://doi.org/10.1080/02188791.2024.2305173

Garcés-Prettel, M., Cantillo, R. R., & Ávila, D. M. (2014). Transformación pedagógica mediada por tecnologías de la información y la comunicación (TIC). Saber Ciencia y Libertad, 9(2), 217–228. https://doi.org/10.18041/2382-3240/saber.2014v9n2.2352

García-Correa, M., Morales-González, M. J., & Gisbert, M. (2022). El desarrollo de la competencia digital docente en educación superior. Una revisión sistemática de la literatura. RiiTE Revista Interuniversitaria de Investigación en Tecnología Educativa, 13, 173–199. https://doi.org/10.6018/riite.543011

Girón-Escudero, V., Cózar-Gutiérrez, R., & González-Calero Somoza, J. A. (2019). Análisis de la autopercepción sobre el nivel de competencia digital docente en la formación inicial de maestros/as. Revista Electrónica Interuniversitaria de Formación del Profesorado, 22(3), 193–218. https://doi.org/10.6018/reifop.22.3.373421

Gros, B., & Silva, J. (2005). La formación del profesorado como docente en los espacios virtuales de aprendizaje. Revista Iberoamericana de Educación, 36(1), 1–13.

Gutiérrez-Castillo, J. J., Cabero-Almenara, J., & Estrada-Vidal, L. I. (2017). Diseño y validación de un instrumento de evaluación de la competencia digital del estudiante universitario. Revista Espacios, 38(10).

Gutiérrez-Porlán, I., & Serrano-Sánchez, J. (2016). Evaluation and development of digital competence in future primary school teachers at the University of Murcia. Journal of New Approaches in Educational Research, 5(1), 51–56. https://doi.org/10.7821/naer.2016.1.152

Inamorato, A., Chinkes, E., Carvalho, M. A., Solórzano, C. M., & Marroni, L. S. (2023). The digital competence of academics in higher education: Is the glass half empty or half full? International Journal of Educational Technology in Higher Education, 20(1), 9. https://doi.org/10.1186/s41239-022-00376-0

INTEF (2017). Marco Común de Competencia Digital Docente. Ministerio de Educación, Cultura y Deporte. https://aprende.intef.es/sites/default/files/2018-05/2017_1020_Marco-Com%C3%BAn-de-Competencia-Digital-Docente.pdf

ISTE (2023). Estándares ISTE: Estudiantes. https://www.iste.org/es/iste-standards

Jiménez-Hernández, D., González-Calatayud, V., Torres-Soto, A., Martínez Mayoral, A., & Morales, J. (2020). Digital competence of future secondary school teachers: Differences according to gender, age, and branch of knowledge. Sustainability, 12(22), 9473. https://doi.org/10.3390/su12229473

López-Belmonte, J., Pozo-Sánchez, S., Fuentes-Cabrera, A., & Romero-Rodríguez, J. M. (2019). Análisis del liderazgo electrónico y la competencia digital del profesorado de cooperativas educativas de Andalucía (España). Multidisciplinary Journal of Educational Research, 9(2), 194–223. https://doi.org/10.17583/remie.2019.4149

Marimon-Martí, M., Romeu, T., Usart, M., & Ojando, E. S. (2023). Análisis de la autopercepción de la competencia digital docente en la formación inicial de maestros y maestras. Revista De Investigación Educativa, 41(1), 51–67. https://doi.org/10.6018/rie.501151

Marín-Suelves, D., Gabarda-Méndez, V., & Ramón-Llin, J. A. (2022). Análisis de la competencia digital en el futuro profesorado a través de un diseño mixto. Revista de Educación a Distancia (RED), 22(70), 1–30. https://doi.org/10.6018/red.523071

Mattar, J., Cabral, C., & Cuque, L. M. (2022). Analysis and comparison of international digital competence frameworks for education. Education Sciences, 12(12), 932. https://doi.org/10.3390/educsci12120932

Moreno-Fernández, O., Núñez-Román, F., Solís-Espallargas, C., & Ferreras-Listán, M. (2021). La competencia digital del alumnado universitario de carreras vinculadas al ámbito educativo. In R. Gillain-Muñoz, H. Gonçalves-Pinto, I. Simoes-Dias, M. Odilia-Abreu, & D. Alves (Orgs.), Investigação, Práticas e Contextos em Educação (pp. 85–91). Polytechnic Institute of Leiria.

Moreno-Rodríguez, M. D., Gabarda-Méndez, V., & Rodríguez-Martín, A. M. (2018). Alfabetización informacional y competencia digital en estudiantes de Magisterio. Profesorado Revista de Currículum y Formación del Profesorado, 22(3), 253–270. https://doi.org/10.30827/profesorado.v22i3.8001

Pérez-Navío, E., Ocaña-Moral, M. T., & Martínez-Serrano, M. C. (2021). University graduate students and digital competence: Are future secondary school teachers digitally competent? Sustainability, 13(15), 8519. https://doi.org/10.3390/su13158519

Pertusa-Mirete, J. (2020). Metodologías activas: La necesaria actualización del sistema educativo y la práctica docente. Supervisión 21, 56, 1–21.

Pinto-Santos, A. R., Pérez, A., & Darder, A. (2020). Autopercepción de la competencia digital docente en la formación inicial del profesorado de educación infantil. Revista Espacios, 41(18), 29.

Pozo-Sánchez, S., López-Belmonte, J., Fernández-Cruz, M., & López-Núñez, J. A. (2020). Análisis correlacional de los factores incidentes en el nivel de competencia digital del profesorado. Revista Electrónica Interuniversitaria de Formación del Profesorado, 23(1), 143–159. https://doi.org/10.6018/reifop.396741

Redecker, C. (2017). European framework for the digital competence of educators: DigCompEdu. Publications Office of the European Union. https://doi.org/10.2760/159770

Rodríguez-García, A. M., Raso Sánchez, F., & Ruiz-Palmero, J. (2019). Competencia digital, educación superior y formación del profesorado: Un estudio de meta-análisis en la Web of Science. Pixel-Bit Revista de Medios y Educación, 54, 65–82. https://doi.org/10.12795/pixelbit.2019.i54.04

Røkenes, F. M., & Krumsvik, R. J. (2014). Development of student teachers’ digital competence in teacher education. A literature review. Nordic Journal of Digital Literacy, 9(4), 250–260. https://doi.org/10.18261/ISSN1891-943X-2014-04-0

Romero-García, C., Buzón-García, O., & de Paz-Lugo, P. (2020). Improving future teachers’ digital competence using active methodologies. Sustainability, 12(18), 7798. https://doi.org/10.3390/su12187798

Romero-Tena, R., Barragán-Sánchez, R., Llorente-Cejudo, C., & Palacios-Rodríguez, A. (2020). The challenge of initial training for early childhood teachers. A cross sectional study of their digital competences. Sustainability, 12(11), 4782. https://doi.org/10.3390/su12114782

Romero-Tena, R., Llorente-Cejudo, C., & Palacios-Rodríguez, A. (2021). Competencias digitales docentes desarrolladas por el alumnado del grado en Educación Infantil: Presencialidad vs virtualidad. Edutec. Revista Electrónica de Tecnología Educativa, (76), 109–125. https://doi.org/10.21556/edutec.2021.76.2071

Silva, J., & Morales, E. M. (2022). Assessing digital competence and its relationship with the socioeconomic level of Chilean university students. International Journal of Educational Technology in Higher Education, 19(1), 46. https://doi.org/10.1186/s41239-022-00346-6

Spanish Government (2015). Plan de acción para la implementación de la Agenda 2030. Hacia una Estrategia Española de Desarrollo Sostenible. https://www.mdsocialesa2030.gob.es/agenda2030/documentos/plan-accion-implementacion-a2030.pdf

UNESCO (2018). Marco de competencias de los docentes en materia de TIC UNESCO. https://unesdoc.unesco.org/ark:/48223/pf0000371024

Wang, Q., Sun, F., Wang, X., & Gao, Y. (2023). Exploring undergraduate students’ digital multitasking in class: An empirical study in China. Sustainability, 15(13), 10184. https://doi.org/10.3390/su151310184

Acknowledgements

Not applicable.

Funding

Not applicable.

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cepa-Rodríguez, E., Murgiondo, J.E. Digital competence among 1st and 4th year primary education undergraduate students: a comparative study of face-to-face and on-line teaching. Educ Inf Technol (2024). https://doi.org/10.1007/s10639-024-12828-3

DOI: https://doi.org/10.1007/s10639-024-12828-3