Abstract

The flexible, changing, and uncertain nature of present-day society requires its citizens to have new personal, professional, and social competences that go beyond the traditional knowledge-based academic skills imparted in higher education. This study aims to identify the factors associated with active methodologies that predict university students' learning achievements in a digital ecosystem, and thus to optimize the teaching–learning process. The teaching management tool Learning Analytics in Higher Education (LAHE) was applied to a non-probabilistic incidental sample of 200 students spread over five university course groups, enabling a personalized teaching–learning process tailored to the needs of each group and/or student. Using a pre-experimental design without a control group, decision trees based on educational data mining were used to analyse the predictive potential of the active methodologies employed and their effects on students' learning achievements. The criterion variable of the study was the final exam grade, and the explanatory variables included student characteristics, indicators of the teaching–learning process and non-cognitive factors. Results show that factors associated with active methodologies correctly predict a significant portion of the learning achieved by students. More specifically, the factors with the greatest impact on learning are those related to academic engagement and to students' continuous learning process.

1 Introduction

Different actors (civil society, companies, the European Union) are calling for an integrated vision of people in society that pivots on psycho-socio-political capabilities as a means of making a sustainable future possible at all levels (Amaya et al., 2021; Brandenburg et al., 2020).

The university must play a decisive role in this new scenario by providing global educational responses to citizens and professionals who will face global challenges (Massaro, 2022; Moscardini et al., 2022). Consequently, university education is fully immersed in a process of transformation in which many elements converge, including pedagogy, professional training, and knowledge transfer.

University education must make learning more effective, efficient, and attractive (Singh & Miah, 2020), on the assumption that technology is a driver of university transformation (Goh et al., 2020). Moreover, the institutional challenges that the university faces are far-reaching; the most important are jobs that require new professional competencies, mobility (both geographic and between specialties), and the rigid mindset of universities when adapting to new professional profiles (Benito Mundet et al., 2021). In addition, professionals must think critically and have the skills needed to gather data and interpret them in light of the changing contingencies of the environment and the new, mostly still undefined, needs of the firm, in order to make decisions that maximize value generation in their personal, professional, and social environments. Finally, the pandemic has accelerated the transformation of the university, showing in general that online teaching is not on a par with face-to-face teaching and that many universities are not strategically prepared to offer quality education under such contingencies (Gavesic, 2020).

Currently, the learning process has several critical success factors, such as new interdisciplinary concepts and new technological realities (Alé-Ruiz & Earle, 2020; Bonami et al., 2020). Hwang and Choi (2016) and Massaro (2022) go further, stating that the university must enable today's students to take on global leadership roles in the future.

It is therefore essential to identify the multiple personal and professional competencies demanded by the labour market, which are also essential for people to perform adequately in society as digital citizens capable of exercising civic leadership (Tenuto, 2021), and to include them in the teaching–learning process of university students in accordance with the lines of research suggested in the literature on virtual learning environments (Flavin & Bhandari, 2021).

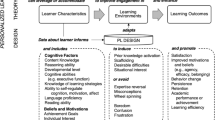

The main objective of this study is to identify the factors associated with active methodologies, implemented in a digital teaching–learning ecosystem, that predict the level of learning achieved by university students. For this purpose, the study uses the Learning Analytics in Higher Education (LAHE) teaching management tool, which was specifically designed for use in digital educational ecosystems.

This work has two fundamental axes, expressed as the following research questions:

- Q1: Is it possible to create a predictive model, based on the decision tree technique, with a good fit?
- Q2: What are the main factors, associated with active methodologies, that predict the level of learning of students in a digital teaching–learning ecosystem?

1.1 State of the art

The current teaching–learning process involves new elements and approaches, as well as new actors: companies, professionals, and society. In addition, university education must equip students with skills that enable them to generate personal, professional, and social value throughout their lives, skills whose benchmark must lie in employability and in the full exercise of digital citizenship. Consequently, any educational model, pedagogical framework or university syllabus must include aspects related not only to knowledge but also to the production of and access to distributed knowledge, meta-learning approaches, use of open educational resources, problem-based learning, gamification, active learning, digital portfolios, student and teacher mobility, flexible learning, and skills and values (Benito Mundet et al., 2021; Brandenburg et al., 2020; Fadel & Groff, 2019; Guàrdia et al., 2021; Hamzah et al., 2022; Hwang & Chien, 2022; Vânia et al., 2023). Any teaching management tool should therefore be able to support these aspects operationally.

1.1.1 Competences-Technology: Educational innovation and intelligent education

Competences-Technology is a core binomial in the current teaching–learning process. This binomial presents challenges and opportunities that arise from the combination of the digital era and education. We understand competence as the student's ability (which implies possessing the cognitive structure that supports it) to perform tasks and participate effectively and consciously in various situations of political, social, and cultural life, adapting to a given context; this requires mobilizing attitudes, skills, and knowledge, orchestrating and interrelating them. As for technology, we consider it a vehicle for competencies in the teaching–learning process (Hwang, 2014).

Combining these aspects, we assert that educational innovation entails making changes in the teaching–learning process to improve student learning outcomes. In line with Baumann et al. (2016), we consider that, to achieve these improvements, any educational innovation must be adopted in a holistic and inclusive manner. From this perspective, Ramirez and Valenzuela (2020) propose a general framework for the development of educational innovation with four categories: psycho-pedagogical studies, use and development of technology in education, educational management, and socio-cultural environment. These categories have been confirmed in subsequent work (Johnson et al., 2020).

Smart education is identified in the literature (Yoo et al., 2015) as the combination of learner-centred, personalized, adaptive, interactive, collaborative, context-dependent, and ubiquitous learning. These aspects are confirmed by Li and Wong (2021a) in their comprehensive review of smart learning over the period 2010–2018. Technology and competency-mediated smart education (Guàrdia et al., 2021) are not only at the epicentre of development and change in higher education but also key players in many of the challenges, associated pedagogical practices and trends in university education today. For their successful practical application, these must crystallize in a digital educational ecosystem, as described by Gros (2016).

1.1.2 Learner-focused learning—Personalised learning

There is extensive literature on the need to place the learner at the core of the teaching–learning process and on the impacts this entails. The learner's role is redefined as active and leading. The multiple facets of this Copernican turn are brought together under the umbrella concept of active learning, whose advantages stem from exposing students to complex realities and real problems that activate their knowledge, skills, energy, dedication, and commitment. Students and teachers perceive these advantages positively (Crisol-Moya et al., 2020), confirming that active learning is more about cooperation than competition.

There is also consensus that digital learning environments offer possibilities for immediate feedback, time-based student progress reports and short- and long-term reward mechanisms that motivate student progress and self-regulation of learning (Hernández Rivero et al., 2021; Lucieer et al., 2016; Theobald et al., 2020). Equally important is the generation and use of learning analytics, together with the associated tools for applying them in the management of the teaching–learning process, for example to identify students at risk of failure early, to meet students' personal learning needs, and to adapt teaching activities to changing realities (Tsai et al., 2021).

Personalized learning is another vector of change in university education (Li & Wong, 2021b), beyond the many definitions of the term and its pros and cons (Groff, 2017). Personalization focuses primarily on the use of technology as a vehicle for teaching and learning, and its main benefits are its impact on learner performance, increased motivation, academic engagement and satisfaction. Walkington and Bernacki (2020) describe the theoretical concepts and latest trends in personalized learning, and Cheung et al. (2021) report best practices, relevant case studies and key success factors.

The pairing of technology and personalized learning has several important impacts. The first is the increased use of automatic response systems (Li & Wong, 2020). These authors also highlight the symbiotic relationship of these systems with learning analytics and the measurement of learner cognition.

Learner cognition is the second impact of this pairing, and it is particularly enhanced by gamification strategies. Gamification, which is necessarily learner-centred, is perceived favourably by learners if it is properly designed (Pegalajar, 2021). Furthermore, authors such as Dascalu et al. (2016) refer to the positive effect of gamification on the development of skills that favour students' employability and later working life, such as creativity, problem solving, teamwork, discovery learning and decision making. The literature also reports positive contributions of gamification to academic engagement (Sánchez-Martín et al., 2017) and to the meaningfulness of learning (Fernández Gavira et al., 2018).

1.1.3 Academic engagement

In the strictly academic context, Christenson et al. (2012) equate academic engagement with the learner's involvement and active participation in the learning process. Dynamism was later introduced as a prominent characteristic of academic engagement (Picton et al., 2018). Academic engagement is thus an ongoing process that includes teaching practices, student behaviours and elements related to student achievement and satisfaction, both during their time at university and in their lifelong learning. It is also closely related to cognitive, affective, and motivational elements that teachers must assess, in terms of effectiveness and efficiency, in their teaching (Schnitzler et al., 2020).

Academic engagement is a multidimensional construct (Kahu, 2013; Rodríguez-Izquierdo, 2020) that changes according to the stages of the learner's life, their biopsychosocial development and the experience lived in the institution where they learn (Lam et al., 2016).

The literature also highlights two relevant findings: first, academic engagement has a strong impact on the academic results achieved by students (Chipchase et al., 2017); second, academic engagement is lower in non-face-to-face classes than in face-to-face classes (Farrell & Brunton, 2020).

2 Material and methods

2.1 Design, participants and data collection

Based on a quantitative paradigm, a pre-experimental design (Campbell & Stanley, 1963) without a control group was applied. From a population of undergraduate Social Sciences students during the 2020–21 academic year, a non-probabilistic accessibility sample of 200 students from the Universidad Francisco de Vitoria (Madrid) was obtained in the following subjects:

- Introduction to Business (n = 30)
- Business Organisation and Administration I (n = 69)
- Business Organisation and Administration II (n = 101, three groups from different degree programmes)

In all five groups, the teaching–learning process was conducted in face-to-face mode.

The information was collected using a computer tool for managing the teaching–learning process: the LAHE tool. All individual and group events and performances of the students were recorded in this tool. This information was extracted and a database with a total of 36 variables was generated:

- Final exam grade (criterion variable).
- General student and subject data: student assessment regime, type of student assessment, etc.
- Awards: total number of awards obtained, commitment award, regularity award, success award, personal growth award.
- Quizzes: number of quizzes completed by the student and grade for each quiz.
- Short tasks: score on each of the six short tasks in the course.
- Extensive assignment: score on the extensive course assignment and 360° peer feedback score.
- Attendance: percentage of attendance and of absences, both approved and unapproved.
- Supplementary assignments: number of assignments handed in and grades obtained.
- Expected grade: minimum and maximum grade expected by the student.
- Discretionary mark: extraordinary award achieved for outstanding performance.
- Grade obtained in the mid-term examination.

Missing values were assumed not to be distributed at random, so they were kept as a separate category in each variable. All quantitative variables were thus categorised into four groups, using the tertiles of their distribution:

- Non-response
- Low score (bottom third)
- Medium score (middle third)
- High score (top third)

Certain activities (awards, quizzes, etc.) were present only in some subjects. In these cases, an additional category was included in the corresponding variable to distinguish non-response (students who did not deliver the activity) from non-availability (students enrolled in subjects that did not include the activity).
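The categorisation rule above can be sketched in Python. This is a minimal illustration, not the authors' procedure: it assumes tertile cut points are computed only on observed scores with the standard library's `statistics.quantiles`, that a score equal to a cut point falls in the lower group, and that `None` marks a student who did not deliver the activity. The function name `categorise` and the label `Not available` are hypothetical.

```python
from statistics import quantiles

# Hypothetical labels: the study describes these categories but not their exact names.
NOT_AVAILABLE = "Not available"   # the student's subject did not include the activity
NON_RESPONSE = "Non-response"     # the activity existed but the student did not deliver it


def categorise(scores, activity_offered):
    """Map raw scores to Low/Medium/High tertiles plus two missing-data categories.

    scores: list of numbers, with None where the student has no score.
    activity_offered: list of bools, False where the student's subject lacked the activity.
    """
    # Tertile cut points are computed only from the scores actually observed.
    observed = [s for s, offered in zip(scores, activity_offered)
                if offered and s is not None]
    low_cut, high_cut = quantiles(observed, n=3)

    labels = []
    for score, offered in zip(scores, activity_offered):
        if not offered:
            labels.append(NOT_AVAILABLE)
        elif score is None:
            labels.append(NON_RESPONSE)
        elif score <= low_cut:
            labels.append("Low")
        elif score <= high_cut:
            labels.append("Medium")
        else:
            labels.append("High")
    return labels
```

For example, `categorise([10, 20, 30, 40, 50, 60, None, None], [True] * 7 + [False])` labels the six observed scores two per tertile and keeps the undelivered and unavailable cases apart. Keeping those two categories separate lets a decision tree treat not delivering an activity as informative, which is consistent with assuming that values are not missing at random.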

2.2 Learning Analytics in Higher Education (LAHE), a digital tool for teaching–learning management

The digital teaching management tool LAHE (V. 6.0) has been designed and implemented for application in the university teaching–learning process with active learning methodologies in digital ecosystems (Alé-Ruiz & Earle, 2020). It collects data from various sources and is flexible enough to meet a wide range of teaching needs. Its design is modular and scalable to accommodate future educational needs and functionalities.

Among the elements it allows teachers to define and use are:

- Independently configurable assessment regimes (continuous, extraordinary, personalised, etc.).
- Student type categories (delegate, academic waiver, etc.).
- Class modalities (seminar, gaming, guest lecturer, etc.).
- Exam modalities (ordinary, extraordinary, etc.).
- Challenges: independently configurable (problems, case studies, research proposals, etc.).
- Quizzes (individual, team, etc.). The tool interacts automatically with the Socrative platform.
- Short and long tasks: the tool randomly forms the desired number of teams and assigns a leader to each team.
- 360° peer assessment, configurable for any activity carried out by the learners.
- Rewards (“prizes”): independently configurable motivational elements, designed following the remuneration pattern used in companies. Those applied in this work are:
  (1) Commitment award: no more than X unapproved absences during the course.
  (2) Regularity award: average questionnaire grade ≥ X, with a minimum percentage of questionnaires completed and a grade ≥ Y on each questionnaire.
  (3) Success award: the Z top students in a summative assessment at different moments of the course.
  (4) Personal growth award: summative assessment mark ≥ X at different moments of the course, with Y% growth between moments (and a minimum mark ≥ Z at the first moment).
- Discretionary mark: awarded by the teacher for outstanding performance during the course.
- Attendance (attendance, approved absences, unapproved absences).
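The award rules listed above are parametric, since the thresholds X, Y and Z are not given. As a minimal Python sketch, assuming each rule is evaluated per student from data of the kind LAHE records, they could be written as boolean checks. The function names, the tie handling in the success award and the two-moment reading of the personal growth rule are assumptions for illustration, not the tool's actual implementation.

```python
# Hedged sketch of the four award rules; X, Y and Z are left open in the text,
# so every threshold is a parameter and all function names are hypothetical.


def commitment_award(unapproved_absences, max_unapproved):
    """(1) No more than X unapproved absences during the course."""
    return unapproved_absences <= max_unapproved


def regularity_award(completed_grades, quizzes_in_course,
                     min_average, min_share_completed, min_each):
    """(2) Average grade >= X, minimum share of quizzes completed, each grade >= Y."""
    if quizzes_in_course == 0 or not completed_grades:
        return False
    share_completed = len(completed_grades) / quizzes_in_course
    average = sum(completed_grades) / len(completed_grades)
    return (average >= min_average
            and share_completed >= min_share_completed
            and all(grade >= min_each for grade in completed_grades))


def success_award(marks_by_student, top_n):
    """(3) The Z students with the highest summative mark at one moment.

    Ties at the cut-off are broken arbitrarily here; the text does not say
    how LAHE handles them.
    """
    ranked = sorted(marks_by_student, key=marks_by_student.get, reverse=True)
    return set(ranked[:top_n])


def personal_growth_award(first_mark, later_mark, min_later, min_growth_pct, min_first):
    """(4) Later mark >= X, growth >= Y% since the first moment, first mark >= Z.

    This reads the rule as comparing two moments; LAHE may apply it across more.
    """
    if first_mark < min_first:
        return False
    # Growth is checked multiplicatively, which avoids dividing by a zero first mark.
    grew_enough = later_mark >= first_mark * (1 + min_growth_pct / 100)
    return later_mark >= min_later and grew_enough
```

For instance, `personal_growth_award(5.0, 6.0, 6.0, 10, 5.0)` is true because the later mark reaches the threshold and exceeds the first mark by more than 10%.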

The elements described above make up most digital university teaching ecosystems applied in active learning.

2.3 Data processing and analysis

An initial univariate exploration of the original (non-categorised) variables was carried out using the free software JASP (V.0.14.1.1) and Microsoft Excel.

After the relevant variables had been categorised, the decision tree was computed with the free specialised data mining software Weka (V.3.8.5). The J48 algorithm (Quinlan, 1992; Witten et al., 2017) was used because it is suitable for categorical variables and tends to produce simple, easily interpreted predictive models (Martínez-Abad et al., 2020). To reduce the size of the tree and obtain a parsimonious, easily interpretable model, a minimum of 10 cases per final leaf was set.
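To illustrate how J48 chooses the variables that split the sample, the following Python sketch computes the gain ratio criterion of C4.5, the algorithm that J48 implements, for one categorical predictor. It is a simplification, not Weka's code: real J48 also prunes the tree and applies further heuristics, and the names `gain_ratio` and `min_leaf` are illustrative. In Weka itself, the minimum leaf size corresponds to J48's `-M` option (the `minNumObj` property); the admissibility rule below, which requires at least two branches with `min_leaf` cases, is an assumption modelled on C4.5's convention.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((count / n) * log2(count / n) for count in Counter(labels).values())


def gain_ratio(feature, target, min_leaf=10):
    """C4.5-style gain ratio of splitting `target` on the categorical `feature`.

    Returns None when the split is not admissible: here at least two branches
    must each receive `min_leaf` cases (an assumed C4.5-like convention).
    """
    branches = {}
    for value, label in zip(feature, target):
        branches.setdefault(value, []).append(label)

    if sum(len(b) >= min_leaf for b in branches.values()) < 2:
        return None

    n = len(target)
    # Information gain: reduction in class entropy after the split.
    remainder = sum(len(b) / n * entropy(b) for b in branches.values())
    info_gain = entropy(target) - remainder
    # Split information penalises predictors with many small categories.
    split_info = entropy(feature)
    return info_gain / split_info if split_info > 0 else None
```

A predictor that separates two equally sized classes perfectly obtains a gain ratio of 1.0, while one unrelated to the outcome obtains 0.0; raising `min_leaf` above the branch sizes makes the split inadmissible, which is how a minimum leaf size keeps the tree small.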

3 Results

3.1 Descriptive exploration

The attendance rate is high (Fig. 1), which indicates the students' commitment. The proportion of students obtaining each award is also high: commitment (70.7%), regularity (81.2%), success (73.5%) and growth (73.5%). Despite this, a large proportion of absences are unapproved (mean = 18.2%, P75 = 17%); the mean exceeding the 75th percentile indicates a right-skewed distribution, with a minority of students accumulating many unapproved absences.

In addition, 15% of students receive an additional discretionary mark for particularly positive participation or attitude. These rewards can amount to 0.1 points (1.5% of students), 0.2 points (1.5%), 0.3 points (3.5%), 0.4 points (2%) or 0.5 points (6.5%) on the final mark, depending on the achievement.

Table 1 shows that the overall response rate to the questionnaires is above 80%, with little fluctuation between questionnaires (all above 70%). Students therefore achieve a high level of regularity. In terms of marks, most students (more than 75%) obtain 50 points or higher in almost all questionnaires, although the overall standard deviation is high. The completion rate of the mid-term and final exams is also high (above 80%), although these exams show lower average marks and higher variances.

3.2 Predictive model: Decision tree

The resulting model has 17 branches and 12 leaves. Its accuracy is 60.5%, compared with 31% for the base model. Since a small tree roughly doubles the baseline accuracy, the model can be considered parsimonious, with an acceptable overall fit.
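The comparison with the base model can be made concrete. Assuming the base model is Weka's ZeroR classifier, which always predicts the most frequent category (the text does not name it), its accuracy is simply the share of the majority class, and the model's improvement is the ratio of the two accuracies. A minimal Python sketch with hypothetical function names:

```python
from collections import Counter


def zero_r_accuracy(labels):
    """Accuracy of a ZeroR-style baseline that always predicts the most frequent class."""
    _, majority_count = Counter(labels).most_common(1)[0]
    return majority_count / len(labels)


def improvement_over_baseline(model_accuracy, baseline_accuracy):
    """How many times the model's accuracy exceeds the baseline's."""
    return model_accuracy / baseline_accuracy


# Figures reported in the text: model accuracy 60.5%, base model 31%.
ratio = improvement_over_baseline(0.605, 0.31)
print(f"{ratio:.2f}")  # prints 1.95
```

A ratio of about 1.95 is what "roughly doubles the baseline accuracy" means numerically.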

Figure 2 shows the resulting decision tree. Ellipses represent the predictor variables that split the sample, and rectangles represent the final leaves. Each leaf indicates the predicted category of the criterion variable (performance in the final exam: No performance; Low performance; Medium performance; High performance) for the students it contains, the percentage accuracy of this prediction and the number of students in that branch. For each of the four categories, the leaves with the highest accuracy are also highlighted.

The factor with the greatest impact on the final exam score is student regularity. It should be recalled that this factor, listed as a "prize", was awarded to students who reached a minimum average mark, both overall and on each questionnaire, and who had completed a minimum number of questionnaires in the course.

The model predicts that the approximately 10% of students who did not receive a regularity award (20% of the students whose subjects included this award) will not sit the final exam, although this prediction has low accuracy. By contrast, most students who obtained the award are predicted to achieve medium or high scores (69 of the 82 students on this branch). In this group, performance in the mid-term exam is the most relevant predictor: students with low mid-term scores are predicted to obtain low or medium final exam scores, depending on whether or not they completed all the questionnaires, and students with medium or high mid-term scores are predicted to reach the same level in the criterion variable. The accuracy of these predictions remains acceptable, at around 60%.

In subjects without a regularity award, the most discriminating variable is the discretionary mark. Students who obtain this reward are predicted to achieve high performance (with an accuracy of over 70%). It therefore seems that the detailed student monitoring enabled by the LAHE tool provides the teacher with reliable information on student performance, which is clearly related to the level of knowledge and competences the student is achieving. For students who do not receive a discretionary mark, the mid-term exam is again the best predictor of final performance, with a distribution similar to that of the group obtaining the regularity award. The main difference concerns students with low mid-term marks: those with a percentage of unapproved absences below 16% are predicted to achieve low performance, whereas those with more unapproved absences are predicted not to sit the final exam. Both predictions have high levels of accuracy; the prediction for the 19 students with fewer unapproved absences stands out, at almost 85%. Students who show these indicators during the course are therefore most likely to obtain a low level of performance.

The confusion matrix (Table 2) shows that predictions are mainly located on or near the main diagonal, with few cases at the extremes of the secondary diagonal. This indicates that, while correct predictions are achieved in a good proportion of the cases (61.8% of the total), incorrect predictions tend to fall in categories adjacent to the observed score. For example, 17 of the 46 students with high scores are misclassified, but 12 of these are predicted as medium performance, and only five are predicted as low scorers or no-shows.

For no-show students, although the accuracy of this prediction (its precision) is low (50%), the model detects most of them (25 of 31), and it predicts low scores for four of the six it misclassifies.

Table 3 presents the model's fit statistics. The relative error is almost 30% lower than that of the base model, and the area under the ROC curve is considerable (above 0.80 overall), although the Kappa statistic remains limited. Accuracy is markedly lower when predicting students who do not sit the exam, and highest when predicting students who obtain low marks in the exam.
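The fit statistics discussed above can be derived from a confusion matrix. The Python sketch below, with a hypothetical function name, computes overall accuracy, per-class precision and recall, and Cohen's kappa. It is a standard formulation rather than Weka's code, it assumes rows are observed classes and columns predicted classes, and the 2 × 2 matrix in the usage example is invented for illustration, not taken from Table 2.

```python
def metrics_from_confusion(matrix):
    """Accuracy, per-class precision/recall and Cohen's kappa from a square confusion matrix.

    Rows are observed classes and columns are predicted classes.
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    diagonal = [matrix[i][i] for i in range(k)]

    accuracy = sum(diagonal) / n
    # Precision: share of cases predicted as class j that truly belong to it.
    precision = [diagonal[j] / col_totals[j] if col_totals[j] else 0.0 for j in range(k)]
    # Recall: share of cases of class i that the model detects.
    recall = [diagonal[i] / row_totals[i] if row_totals[i] else 0.0 for i in range(k)]
    # Cohen's kappa corrects observed agreement for the agreement expected by chance.
    expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    # Kappa is undefined when chance agreement is total, so NaN is returned then.
    kappa = (accuracy - expected) / (1 - expected) if expected < 1 else float("nan")
    return accuracy, precision, recall, kappa


# Invented example: 50 students, two classes.
accuracy, precision, recall, kappa = metrics_from_confusion([[20, 5], [10, 15]])
```

Here accuracy is 0.70 but kappa is only about 0.40, because half of the agreement would be expected by chance; this is how a Kappa statistic can stay limited even when accuracy clearly beats the baseline. Applied to the no-show figures reported in the text, recall is 25/31 ≈ 0.81 while precision is 0.50, which is why the model detects most no-shows even though half of its no-show predictions are wrong.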

4 Discussion and conclusions

The results of this study show that the objective has been achieved. Regarding the first research question (Is it possible to create a predictive model, based on the decision tree technique, with a good fit?), we have shown that the decision tree technique can be used to predict students' academic success with a good fit.

Regarding the second research question (What are the main factors, associated with active methodologies, that predict the level of learning of students in a digital teaching–learning ecosystem?), the learner-focused digital educational ecosystem, made up of elements characteristic of active learning and adapted to a highly changing and volatile environment, has proven effective in achieving university student performance objectives as well as in developing skills. This digital ecosystem was implemented on the basis of the catalytic role of technology (Goh et al., 2020), the sheer magnitude of the challenges facing the university (Collie et al., 2017), and an orientation of the teaching–learning process that enhances efficiency while also making it attractive to the learner (Singh & Miah, 2020). The aim was not only to optimise student results but also to develop professional skills (Benito Mundet et al., 2021) by redefining the roles of the learner and the teacher (Bonami et al., 2020). This digital ecosystem has made it possible to incorporate the new elements, approaches and actors present in university education into the educational process (Fadel & Groff, 2019; Tharwat & Schenck, 2023) with positive results. In this sense, and in answer to the second research question, the analysis of the data collected with the LAHE teaching management tool shows a clear direct relationship between the academic results obtained by students and the development of other essential professional and personal skills of a more transversal nature, such as taking on a personal commitment and making a regular effort throughout the teaching–learning process.

Educational innovation (Guàrdia et al., 2021) and "smart education" (Hwang, 2014), understood as the conjunction of a digital ecosystem and an active learning methodology, provide a robust conceptual framework for practical implementation, in line with a holistic and inclusive vision of education (Baumann et al., 2016). Such innovation must be carried out within an academic framework that includes the characteristics of smart learning (Yoo et al., 2015), a comprehensive review of its elements (Li & Wong, 2021a), personalisation (Li & Wong, 2021b; Walkington & Bernacki, 2020), interactivity (Hernández Rivero et al., 2021; Zamora-Polo & Sánchez-Martín, 2019) and gamification strategies (Pegalajar, 2021). The data analysis shows that adapting the teaching–learning process to the socio-economic context, together with the collaboration and ubiquity that a digital ecosystem allows, contributes positively to both the outcome and the meaningfulness of university student learning (Crisol-Moya et al., 2020; Fernández Gavira et al., 2018). This positive contribution is quantitatively supported by the decision tree results, which highlight the factors of the teaching–learning process that can predict student learning with acceptable levels of accuracy.

Immediate feedback (Li & Wong, 2020), time-spaced learning progress reports and “prizes” (reward and motivational mechanisms) (Hernández Rivero et al., 2021) favour achievement, both in results and in the development of student skills, and have added value to student learning. In this regard, it is important to highlight that academic engagement (Christenson et al., 2012) is also favoured by the use of multiple academic activities with different timeframes, which draws on the dynamism (Kahu & Nelson, 2018; Picton et al., 2018) and multidimensionality (Gil-Fernández et al., 2023; Rodríguez-Izquierdo, 2020) of this educational concept. The results of this work reinforce this point, stressing the importance of monitoring and managing the teaching–learning process in order to detect its strengths and weaknesses early, at both the individual and the group level.

Real-time analysis of student learning data enables early identification of potential failures, allowing individual and group corrective action (Tsai et al., 2021). It also makes it possible to motivate students towards excellence. In this sense, the LAHE tool, as a complement to the learning management systems commonly used in higher education (e.g., Moodle), allows more detailed, personal and comprehensive monitoring of student learning (Alé-Ruiz & Earle, 2020).

Data availability

The datasets generated and/or analysed during the current study are not publicly available for privacy reasons but are available from the corresponding author on reasonable request.

References

Alé-Ruiz, R., & Earle, D. H. (2020). Una herramienta para la gestión y el gobierno integrales del aprehendizaje universitario en entornos active learning. (A tool for the integrated management and governance of university apprehension in Active Learning environments). Revista Interuniversitaria de Formación Del Profesorado. Continuación de La Antigua Revista de Escuelas Normales, (Inter-University Journal of Teacher Training. Continuation of the old Journal of Teacher Training Schools), 34(2), 37–60. https://doi.org/10.47553/rifop.v34i2.77913

Amaya, A., Cantú Cervantes, D., & Marreros Vázquez, J. G. (2021). Análisis de las competencias didácticas virtuales en la impartición de clases universitarias en línea, durante contingencia del COVID-19. (Analysis of virtual teaching skills in the delivery of online university classes during the COVID-19 contingency). Revista de Educación a Distancia (RED), 21(65), 20. https://doi.org/10.6018/red.426371

Baumann, T., Mantay, K., Swanger, A., Saganski, G., & Stepke, S. (2016). Education and innovation management: A contradiction? How to manage educational projects if innovation is crucial for success and innovation management is mostly unknown. Procedia - Social and Behavioral Sciences, 226, 243–251. https://doi.org/10.1016/j.sbspro.2016.06.185

Benito Mundet, H., Llop Escorihuela, E., Verdaguer Planas, M., Comas Matas, J., Lleonart Sitjar, A., Orts Alis, M., Amadó Codony, A., & Rostan Sánchez, C. (2021). Multidimensional research on university engagement using a mixed method approach. Educación XX1, 24(2), 65–96. https://doi.org/10.5944/educxx1.28561

Bonami, B., Piazentini, L., & Dala-Possa, A. (2020). Education, big data and artificial intelligence: mixed methods in digital platforms. Comunicar, 28(65), 43–52. https://doi.org/10.3916/C65-2020-04

Brandenburg, U., de Wit, H., Jones, E., Leask, B., & Drobner, A. (2020). Internationalisation in Higher Education for Society (IHES): Concept, current research, and examples of good practice. DAAD. Retrieved June 5, 2023, from https://bit.ly/371Wled

Campbell, D. T., & Stanley, J. (1963). Experimental and Quasi-Experimental Designs for Research. Wadsworth Publishing. Retrieved January 23, 2023, from https://bit.ly/2UVKGKX

Cheung, S. K. S., Wang, F. L., Kwok, L. F., & Poulova, P. (2021). In search of the good practices of personalized learning. Interactive Learning Environments, 29(2), 179–181. https://doi.org/10.1080/10494820.2021.1894830

Chipchase, L., Davidson, M., Blackstock, F., Bye, R., Colthier, P., Krupp, N., Dickson, W., Turner, D., & Williams, M. (2017). Conceptualising and measuring student disengagement in higher education: A synthesis of the literature. International Journal of Higher Education, 6(2), 31. https://doi.org/10.5430/ijhe.v6n2p31

Christenson, S. L., Wylie, C., & Reschly, A. L. (2012). Handbook of research on student engagement. Springer. https://doi.org/10.1007/978-1-4614-2018-7

Collie, R. J., Holliman, A. J., & Martin, A. J. (2017). Adaptability, engagement and academic achievement at university. Educational Psychology, 37(5), 632–647. https://doi.org/10.1080/01443410.2016.1231296

Crisol-Moya, E., Romero-López, M. A., & Caurcel-Cara, M. J. (2020). Active methodologies in higher education: Perception and opinion as evaluated by professors and their students in the teaching-learning process. Frontiers in Psychology, 11, 1–10. https://doi.org/10.3389/fpsyg.2020.01703

Dascalu, M. I., Tesila, B., & Nedelcu, R. A. (2016). Enhancing employability through e-Learning communities: From myth to reality. In Y. Li, M. Chang, M. Kravcik, E. Popescu, R. Huang, Kinshuk, & N. S. Chen (Eds.), State of the art and Future Directions of Smart learning. Lecture Notes in Educational Technology (pp. 309–313). Springer International Publishing. https://doi.org/10.1007/978-981-287-868-7_38

Fadel, C., & Groff, J. S. (2019). Four-dimensional education for sustainable societies. In J. W. Cook (Ed.), Sustainability, Human Well-Being, and the Future of Education (pp. 269–281). Palgrave Macmillan. https://doi.org/10.1007/978-3-319-78580-6_8

Farrell, O., & Brunton, J. (2020). A balancing act: A window into online student engagement experiences. International Journal of Educational Technology in Higher Education, 17(1), 25. https://doi.org/10.1186/s41239-020-00199-x

Fernández Gavira, J., Prieto Gallego, E., Alcaraz Rodríguez, V., Sánchez Oliver, A. J., & Grimaldi Puyana, M. (2018). Aprendizajes Significativos mediante la Gamificación a partir del Juego de Rol: “Las Aldeas de la Historia”. (Meaningful Learning through Gamification using the Role Playing Game: "The Villages of History"). Espiral-Cuadernos del Profesorado (Spiral. Teachers' Notebooks), 11(22), 69. https://doi.org/10.25115/ecp.v11i21.1919

Flavin, M., & Bhandari, A. (2021). What we talk about when we talk about virtual learning environments. The International Review of Research in Open and Distributed Learning, 22(4), 164–193. https://doi.org/10.19173/irrodl.v23i1.5806

Gavesic, D. (2020). COVID-19: The steep learning curve for online education. Monash University. Retrieved June 14, 2023, from https://bit.ly/46w54CN

Gil-Fernández, R., Calderón-Garrido, D., & Martín-Piñol, C. (2023). Exploring the effect of social media in personal learning environments in the university settings: Analysing experiences and detecting future challenges. Revista de Educación a Distancia (RED), 73(21), 1–24. https://doi.org/10.6018/red.526311

Goh, C. F., Hii, P. K., Tan, O. K., & Rasli, A. (2020). Why do University teachers use e-learning systems? The International Review of Research in Open and Distributed Learning, 21(2), 136–155. https://doi.org/10.19173/irrodl.v21i2.3720

Groff, J. S. (2017). The State of the Field & Future Directions. Center for Curriculum Redesign. Retrieved May 23, 2023, from https://bit.ly/2V1u0Ss

Gros, B. (2016). The design of smart educational environments. Smart Learning Environments, 3(1), 15. https://doi.org/10.1186/s40561-016-0039-x

Guàrdia, L., Clougher, D., Anderson, T., & Maina, M. (2021). IDEAS for transforming higher education: An overview of ongoing trends and challenges. The International Review of Research in Open and Distributed Learning, 22(2), 166–184. https://doi.org/10.19173/irrodl.v22i2.5206

Hamzah, H., Hamzah, M. I., & Zulkifli, H. (2022). Systematic literature review on the elements of metacognition-based Higher Order Thinking Skills (HOTS) teaching and learning modules. Sustainability, 14(2), 813. https://doi.org/10.3390/su14020813

Hernández Rivero, V. M., Santana Bonilla, P. J., & Sosa Alonso, J. J. (2021). Feedback y autorregulación del aprendizaje en educación superior. (Feedback and self-regulation of learning in higher education). Revista de Investigación Educativa (Journal of Educational Research), 39(1), 227–248. https://doi.org/10.6018/rie.423341

Hwang, G. J. (2014). Definition, framework and research issues of smart learning environments - a context-aware ubiquitous learning perspective. Smart Learning Environments, 1(1), 4. https://doi.org/10.1186/s40561-014-0004-5

Hwang, G. J., & Chien, S. Y. (2022). Definition, roles, and potential research issues of the metaverse in education: An artificial intelligence perspective. Computers and Education: Artificial Intelligence, 3, 100082. https://doi.org/10.1016/j.caeai.2022.100082

Hwang, J. H., & Choi, H. J. (2016). Influence of smart devices on the cognition and interest of underprivileged students in smart education. Indian Journal of Science and Technology, 9(44), 1–4. https://doi.org/10.17485/ijst/2016/v9i44/105171

Johnson, N., Veletsianos, G., & Seaman, J. (2020). U.S. Faculty and administrators’ experiences and approaches in the early weeks of the COVID-19 pandemic. Online Learning, 24(2), 6–21. https://doi.org/10.24059/olj.v24i2.2285

Kahu, E. R. (2013). Framing student engagement in higher education. Studies in Higher Education, 38(5), 758–773. https://doi.org/10.1080/03075079.2011.598505

Kahu, E. R., & Nelson, K. (2018). Student engagement in the educational interface: Understanding the mechanisms of student success. Higher Education Research & Development, 37(1), 58–71. https://doi.org/10.1080/07294360.2017.1344197

Lam, S., Jimerson, S., Shin, H., Cefai, C., Veiga, F. H., Hatzichristou, C., Polychroni, F., Kikas, E., Wong, B. P. H., Stanculescu, E., Basnett, J., Duck, R., Farrell, P., Liu, Y., Negovan, V., Nelson, B., Yang, H., & Zollneritsch, J. (2016). Cultural universality and specificity of student engagement in school: The results of an international study from 12 countries. British Journal of Educational Psychology, 86(1), 137–153. https://doi.org/10.1111/bjep.12079

Li, K. C., & Wong, B. T. M. (2020). The use of student response systems with learning analytics: A review of case studies (2008–2017). International Journal of Mobile Learning and Organisation, 14(1), 63–79. https://doi.org/10.1504/IJMLO.2020.103901

Li, K. C., & Wong, B. T. M. (2021a). Review of smart learning: Patterns and trends in research and practice. Australasian Journal of Educational Technology, 37(2), 189–204. https://doi.org/10.14742/ajet.6617

Li, K. C., & Wong, B. T. M. (2021b). Features and trends of personalised learning: A review of journal publications from 2001 to 2018. Interactive Learning Environments, 29(2), 182–195. https://doi.org/10.1080/10494820.2020.1811735

Lucieer, S. M., van der Geest, J. N., Elói-Santos, S. M., de Faria, R. M. D., Jonker, L., Visscher, C., Rikers, R. M. J. P., & Themmen, A. P. N. (2016). The development of self-regulated learning during the pre-clinical stage of medical school: A comparison between a lecture-based and a problem-based curriculum. Advances in Health Sciences Education, 21(1), 93–104. https://doi.org/10.1007/s10459-015-9613-1

Martínez-Abad, F., Gamazo, A., & Rodríguez-Conde, M. J. (2020). Educational data mining: Identification of factors associated with school effectiveness in PISA assessment. Studies in Educational Evaluation, 66, 100875. https://doi.org/10.1016/j.stueduc.2020.100875

Massaro, V. R. (2022). Global citizenship development in higher education institutions: A systematic review of the literature. Journal of Global Education and Research, 6(1), 98–114. https://doi.org/10.5038/2577-509X.6.1.1124

Moscardini, A. O., Strachan, R., & Vlasova, T. (2022). The role of universities in modern society. Studies in Higher Education, 47(4), 812–830. https://doi.org/10.1080/03075079.2020.1807493

Pegalajar, M. C. (2021). Implicaciones de la gamificación en Educación Superior: una revisión sistemática sobre la percepción del estudiante. (Implications of gamification in Higher Education: a systematic review on student perception). Revista de Investigación Educativa (Journal of Educational Research), 39(1), 169–188. https://doi.org/10.6018/rie.419481

Picton, C., Kahu, E. R., & Nelson, K. (2018). ‘Hardworking, determined and happy’: First-year students’ understanding and experience of success. Higher Education Research & Development, 37(6), 1260–1273. https://doi.org/10.1080/07294360.2018.1478803

Quinlan, R. (1992). C4.5: Programs for Machine Learning. Morgan Kaufmann Publishers Inc. Retrieved June 3, 2023, from https://bit.ly/3kCRhVs

Ramírez, S., & Valenzuela, J. (2020). Innovación educativa: tendencias globales de investigación e implicaciones prácticas (Education Innovation: Global research trends and practical implications). Octaedro. Retrieved May 21, 2023, from https://bit.ly/36KdBEB

Rodríguez-Izquierdo, R. M. (2020). Service learning and academic commitment in higher education. Revista De Psicodidáctica, 25(1), 45–51. https://doi.org/10.1016/j.psicod.2019.09.001

Sánchez-Martín, J., Cañada-Cañada, F., & Dávila-Acedo, M. A. (2017). Just a game? Gamifying a general science class at university. Thinking Skills and Creativity, 26, 51–59. https://doi.org/10.1016/j.tsc.2017.05.003

Schnitzler, K., Holzberger, D., & Seidel, T. (2020). Connecting judgment process and accuracy of student teachers: Differences in observation and student engagement cues to assess student characteristics. Frontiers in Education, 5, 1–28. https://doi.org/10.3389/feduc.2020.602470

Singh, H., & Miah, S. J. (2020). Smart education literature: A theoretical analysis. Education and Information Technologies, 25(4), 3299–3328. https://doi.org/10.1007/s10639-020-10116-4

Tenuto, P. L. (2021). Teaching in a global society: Considerations for university-based educational leadership. Journal of Global Education and Research, 5(1), 96–110. https://doi.org/10.5038/2577-509X.5.1.1027

Tharwat, A., & Schenck, W. (2023). A survey on active learning: State-of-the-art, practical challenges and research directions. Mathematics, 11(4), 820. https://doi.org/10.3390/math11040820

Theobald, E. J., Hill, M. J., Tran, E., Agrawal, S., Nicole Arroyo, E., Behling, S., Chambwe, N., Cintrón, D. L., Cooper, J. D., Dunster, G., Grummer, J. A., Hennessey, K., Hsiao, J., Iranon, N., Jones, L., Jordt, H., Keller, M., Lacey, M. E., Littlefield, C. E., … Freeman, S. (2020). Active learning narrows achievement gaps for underrepresented students in undergraduate science, technology, engineering, and math. Proceedings of the National Academy of Sciences of the United States of America, 117(12), 6476–6483. https://doi.org/10.1073/pnas.1916903117

Tsai, Y. S., Kovanović, V., & Gašević, D. (2021). Connecting the dots: An exploratory study on learning analytics adoption factors, experience, and priorities. The Internet and Higher Education, 50, 100794. https://doi.org/10.1016/j.iheduc.2021.100794

Vânia, C., Reses, G., & Soares, S. C. (2023). Active learning spaces design and assessment: A qualitative systematic literature review. Interactive Learning Environments, 31(1), 1–18. https://doi.org/10.1080/10494820.2022.2163263

Walkington, C., & Bernacki, M. L. (2020). Appraising research on personalized learning: Definitions, theoretical alignment, advancements, and future directions. Journal of Research on Technology in Education, 52(3), 235–252. https://doi.org/10.1080/15391523.2020.1747757

Witten, I. H., Frank, E., Hall, M. A., & Pal, C. J. (2017). Data Mining: Practical Machine Learning Tools and Techniques (4th ed.). Morgan Kaufmann. https://doi.org/10.1016/C2015-0-02071-8

Yoo, Y., Lee, H., Jo, I. H., & Park, Y. (2015). Educational dashboards for smart learning: Review of case studies. In G. Chen, V. Kumar, Kinshuk, R. Huang, & S. Kong (Eds.), Lecture Notes in Educational Technology (pp. 145–155). Springer International Publishing. https://doi.org/10.1007/978-3-662-44188-6_21

Zamora-Polo, F., & Sánchez-Martín, J. (2019). Teaching for a better world. Sustainability and sustainable development goals in the construction of a change-maker University. Sustainability, 11(15), 4224. https://doi.org/10.3390/su11154224

Ethics declarations

Disclosure statement

The authors report there are no competing interests to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Alé-Ruiz, R., Martínez-Abad, F. & del Moral-Marcos, M.T. Academic engagement and management of personalised active learning in higher education digital ecosystems. Educ Inf Technol (2023). https://doi.org/10.1007/s10639-023-12358-4

DOI: https://doi.org/10.1007/s10639-023-12358-4