Abstract

Although video-based flipped learning is a widely accepted pedagogical strategy, few attempts have been made to explore the design and integration of pre-class instructional videos into in-class activities to improve the effectiveness of flipped classrooms. This study investigated whether question-embedded pre-class videos, together with the opportunity to review these questions at the beginning of in-class sessions, affected student learning processes and outcomes. Seventy university students from two naturally constituted classes participated in the quasi-experimental study. The experiment adopted a pre-test/post-test, between-subjects design and lasted for six weeks, with the same instructional content for the experimental and control groups. Students’ age, sex, pre-experiment motivation, prior knowledge, and perceived knowledge were controlled for in the study. The results indicate that the educational intervention significantly strengthened learning performance, likely due to the increased regularity of engagement with pre-class materials. The intervention did not influence student satisfaction with the pre-class videos or the video viewing duration. Overall, the findings suggest that instructors should consider embedding questions in pre-class videos and reviewing them at the beginning of in-class sessions to facilitate student learning in video-based flipped classrooms.

1 Introduction

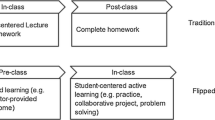

Flipped learning has been positively received by university students and instructors (Akparibo et al., 2021). There is growing evidence that flipped classrooms are more effective than traditional classrooms in reinforcing students’ academic performance (Hew et al., 2021; Torres-Martín et al., 2022), affective and attitudinal outcomes (Jeong et al., 2021; Tutal & Yazar, 2021), self-efficacy (Algarni & Lortie-Forgues, 2022), and deep learning (Shen & Chang, 2023), and in decreasing perceived course difficulty (Price & Walker, 2021) and assignment stress (Aydin & Demirer, 2022). To date, most studies have investigated the effectiveness of flipped learning by comparing student experiences in flipped classrooms with those in traditional classrooms. Empirical evidence in favour of flipped classrooms has been observed in both university (e.g. Ozudogru & Aksu, 2020; Wu et al., 2022) and K-12 education settings (e.g. Moghadam & Razavi, 2022; Yang & Chen, 2020).

In flipped classrooms, the adoption of instructional videos as pre-class learning materials is a prevalent pedagogical practice also known as video-based flipped learning (Liao & Wu, 2023; Yu & Gao, 2022). A recent systematic review and meta-analysis of 64 studies revealed that 62 studies used videos as pre-class instructional materials (Turan, 2023). Despite widespread discussion of the strengths of video-based flipped learning (e.g. Fathi et al., 2022; Jensen et al., 2018; Lee et al., 2021), an important question remains: how can pre-class videos be designed and integrated with in-class activities to improve the effectiveness of flipped classrooms? This question is important partly because pre-class videos play an instrumental role in video-based flipped learning environments (Bordes et al., 2021; Tse et al., 2019). More importantly, students’ pre- and in-class learning behaviours and achievement are closely correlated (Chen et al., 2023), and fostering an overall supportive learning environment that connects in-class and out-of-class contexts is critical for student success (Ranellucci et al., 2021; Song, 2020). While much attention has been paid to the online components of this blended learning mode, there has been less inquiry into the design of face-to-face aspects of the instruction (Buhl-Wiggers et al., 2023a, b). In this context, the present study aims to provide empirically supported suggestions for improving the effectiveness of video-based flipped classrooms.

2 Literature review

In flipped classrooms, students are provided with learning materials before class to gain exposure to them beforehand and participate in student-centred activities during class that often involve interaction and collaboration. Videos have been identified as the most common resource used in the pre-class stage of flipped learning (Londgren et al., 2021). Although pre-class learning materials can take many forms such as audio and readings, the adoption of videos is so widespread that flipped learning has often come to be identified with pre-recorded instructional videos (Amstelveen, 2018; Çakiroğlu et al., 2020; Hew & Lo, 2018). Bordes et al. (2021) discovered that online asynchronous videos were preferred over reading assignments as pre-class learning materials and that the consistent scheduling of videos prompted student preparation for in-class sessions. Pulukuri and Abrams (2021) found that individuals assigned videos for out-of-class preparation outperformed those who used textbook reading. The more helpful students perceived pre-class videos to be, the more satisfied they were with flipped learning, and the more knowledge they felt they acquired in flipped lessons (Das et al., 2019). Consequently, this study considers videos a vital learning resource during the pre-class stage of flipped learning.

Scaffolding the pre-class learning process is important in flipped classrooms, not only because engagement with pre-class learning materials lays the foundation for effective participation in synchronous in-class activities (Lee & Martin, 2020), but also because students can fail to identify key information in these materials during the pre-class preparation phase (Shibukawa & Taguchi, 2019). Furthermore, a polarisation effect is observed in flipped instruction: while some individuals tend to perform better, others struggle even more (Ficano, 2019; Stöhr et al., 2020; Wagner & Urhahne, 2021). A potentially effective strategy to scaffold pre-class learning is to redesign instructional videos by adding prompts and interactive elements (Mayer, 2021). Wehling et al. (2021) enriched pre-existing video lectures with multiple-choice questions and opportunities to access further information; however, their study did not evaluate the effectiveness of such endeavours. van Alten et al. (2020a) presented self-regulated learning prompts as forced open question stops in videos, aiming to support students’ self-regulated learning at the pre-class stage of flipped learning; however, their results indicated that embedding prompts within videos affected neither video-watching time nor academic achievement. To enhance the effectiveness of flipped learning, Awidi and Paynter (2019) contended that scholars should refine the subcomponents of the flipped design, particularly pre-class videos, and the structure of in-class sessions. This study accordingly aims to refine the design and utilisation of pre-class videos to maximise student learning in flipped classrooms.

Research has shown that students can achieve better performance when pre-class videos are supplemented with learning exercises and activities (Shahnama et al., 2021). However, not all supplementary exercises or activities enhance learning performance. For example, supporting the learning of pre-class videos with an instant messaging mobile application was found to have no effect on student achievement (Huang et al., 2022). Lai et al. (2021) proposed that embedded questions could be a promising instructional strategy because they promote interaction between videos and learners. Presumably, individuals tend to actively process and interact with the learning content when instructional videos are interpolated with questions (Gladys et al., 2022; Sauli et al., 2018). However, empirical studies have yielded mixed results on the effectiveness of these interventions. Sezer and Abay (2019) indicated that students taught using question-embedded videos outperformed those taught using the traditional method. However, their study did not disentangle the influence of embedded questions from the differences in instructional approaches. Kosmaca and Siiman (2023) and Chang (2021) reported that embedded questions led to improved achievement, whereas Deng and Gao (2023b) and Mar et al. (2017) did not observe a beneficial effect of question-embedded videos on overall post-test performance. This discrepancy in findings implies that additional research is warranted to determine strategies for the effective utilisation of question-embedded videos in flipped classrooms.

Empirical research indicates that guidance is the strongest predictor of positive learning experiences in flipped classrooms (Sointu et al., 2023). Tomas et al. (2019) suggested that additional instructor-led guidance and scaffolding may be required before learners participate in student-centred in-class activities because individuals who have trouble understanding pre-class materials can be reluctant to engage with these planned activities. Similarly, Guo (2019) recommends that instructors review pre-class instructional materials at the beginning of in-class sessions to help students activate prior knowledge and prepare for subsequent learning activities. Individuals watching pre-class videos with embedded questions may not fully understand the solutions to these questions, which may reduce the effectiveness of in-class sessions. Therefore, additional guidance may be required to diagnose and correct students’ misconceptions and enhance their understanding of pre-class learning materials. Flipped learning research has argued for the importance of aligning the content of pre-class videos and in-class sessions (Förster et al., 2022); however, empirical research on this subject is scarce. Unlike previous research, the educational intervention in the present study involved not only presenting multiple-choice questions in pre-class videos but also reviewing these questions at the beginning of in-class sessions.

While most past research has compared flipped and traditional instruction (Chen et al., 2019; Debbağ & Yıldız, 2021), the present study compares two flipped classrooms with the aim of exploring strategies to improve the effectiveness of video-based flipped instruction. In this study, effectiveness is operationally defined as the extent to which students’ learning processes are strengthened and learning outcomes are elevated as a result of participating in the intervention. This study defines learning processes as comprising two components: student engagement with pre-class videos and time spent watching pre-class videos. Students’ self-reports of engagement (Fidan, 2023) and observed viewing time (Cho et al., 2021a) are frequently used as indicators of active involvement in the learning process and are regarded as determinants of flipped learning success. Both indicators were used in this study because individuals may overestimate the amount of time spent watching pre-class videos (Scott et al., 2021). Learning outcomes are defined as comprising cognitive and affective parts – students’ learning performance and satisfaction with pre-class videos. The rationale is that motivational and emotional factors can mediate learning (Deng & Gao, 2023a). Moreover, students’ affective outcomes can be influenced by configurations of flipped classrooms (Ozudogru & Aksu, 2020) – they tend to experience greater enjoyment in flipped classrooms when the perceived value of pre-class videos is higher (Cho et al., 2021b). To address the research aim, this study proposes the following specific research question: Does interpolating pre-class instructional videos with multiple-choice questions and reviewing these questions at the beginning of in-class sessions affect learning processes (student engagement, video viewing time) and outcomes (learning performance, student satisfaction)?

3 Methodology

3.1 Research design

The quasi-experiment followed a pre-test/post-test between-subjects design. Students in the experimental and control groups were required to watch pre-class videos and participate in in-class learning activities. The difference between the two research conditions was that the experimental group watched videos interpolated with multiple-choice questions that were subsequently reviewed at the beginning of each in-class session. Notably, the questions embedded in the videos and reviewed in the classes were derived directly from the video content and did not introduce any supplementary information beyond what is already presented in the videos. Therefore, both the experimental and control groups had equal access to the learning content and materials.

The rationale behind this combined approach stems from previous research that has reported limited efficacy in using embedded questions to enhance learning performance (e.g. Mar et al., 2017). Our recent research also found that merely inserting interactive questions in pre-class videos did not sufficiently bolster learning performance in flipped classrooms (Deng & Gao, 2023b). Furthermore, it is not uncommon in educational experiments to introduce multiple differences between experimental and control groups (e.g. Jung et al., 2022; Yoon et al., 2021). For instance, in the study by Jung et al. (2022), a quasi-experimental design was employed, with the control group following a traditional flipped classroom approach and the experimental group engaging in a flipped classroom with additional activities before, during, and after class. Similarly, in a quasi-experiment conducted by Yoon et al. (2021), participants in the experimental group received self-regulated learning support but did not have access to the summary and reminders provided to the control group. Thus, there were at least two discrepancies between the experimental and control groups in their research: the instructor’s summary and reminders, and self-regulated learning support. In this context, our intervention adopts a two-step approach: embedding pre-class videos with questions and subsequently reviewing these questions at the beginning of the in-class sessions. The goal was to create an encompassing supportive learning environment for bridging the gap between in-class and out-of-class contexts, which is vital for student success in flipped classrooms (Ranellucci et al., 2021; Song, 2020).

3.2 Participants

The participants were sophomores at a Chinese university. Two parallel classes within the same undergraduate course were randomly selected and assigned to either the experimental or control condition. Both groups watched the same pre-class instructional videos, with the key distinction being the inclusion of pop-up questions in the videos and the subsequent review of these questions in the experimental condition. Most participants majored in foreign languages, mathematics, or political science. An a priori sample size calculation was conducted using G*Power, with the alpha level set at 0.05, the power set at 0.80, and the effect size set at large (Faul et al., 2009). The calculation results indicated that at least 68 students were required for the experiment. A total of 70 students participated in the study, 28 in the experimental group and 42 in the control group. The mean age of the participants was 20.41 years (SD = 0.67). Students did not receive monetary compensation for their participation.

3.3 Materials

The pre-class materials contained two versions of eleven 10-min instructional videos featuring presentation-slide designs. The videos comprised verbal explanations and stepwise demonstrations. The videos were obtained from a public online education platform. The design of the pre-class videos followed evidence-based multimedia design principles such as segmenting (i.e. longer videos were broken down into shorter, meaningful chunks) and coherence (i.e. only learning materials directly related to learning goals were presented). The questions were positioned during rather than after the videos, because students tended to prefer answering questions during the videos (Zolkwer et al., 2023).

A total of 26 multiple-choice questions were inserted into the pre-class videos watched by the experimental group, whereas no questions were presented in the videos for the control group. Each question had four options. A question would pop up on the screen when the progress bar reached a certain time node. Participants in the experimental group had to choose the correct option before continuing with the video. All the questions were pertinent to the information presented in the videos and appeared after the segment containing the presented information. A translated example of an embedded question is shown in Fig. 1.
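The pop-up behaviour described above can be pictured with a minimal sketch. The class and function names are hypothetical and are not part of the LMS used in the study; the sketch assumes that each question is tied to a single time node (in seconds) and that playback stays blocked until the correct option is chosen.

```python
from dataclasses import dataclass


@dataclass
class EmbeddedQuestion:
    time_s: float   # hypothetical time node (seconds) at which the question pops up
    options: tuple  # the four answer options
    correct: int    # index of the correct option


def pending_question(questions, position_s, solved):
    """Return the index of the first unsolved question whose time node has been reached."""
    for index, question in enumerate(questions):
        if question.time_s <= position_s and index not in solved:
            return index
    return None  # nothing blocks playback


def submit_answer(questions, index, choice, solved):
    """Record a correct answer; playback stays blocked after a wrong one."""
    if choice == questions[index].correct:
        solved.add(index)
        return True
    return False


# Illustrative question placed 60 seconds into a video (not taken from the study).
questions = [EmbeddedQuestion(60.0, ("A", "B", "C", "D"), correct=2)]
solved = set()
```

In this sketch, a player would call `pending_question` on every progress update and pause whenever it returns an index.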

As providing feedback on student answers tends to enhance self-efficacy beliefs and learning performance (Thai et al., 2023), this study provided not only the correct answers but also detailed feedback on the answers to each question (Fig. 2). To ensure validity, the questions and feedback were designed by an e-learning expert and checked by another expert specialising in instructional technology. TronClass proved to be a reliable learning management system (LMS) in a previous study (Chen, 2022) and was used for flipped learning in the current study. Pre-class videos were uploaded to the LMS six days before the in-class sessions for both research conditions. As the present study was conducted in the natural context of flipped classrooms, students had unlimited access to videos, embedded questions, and question feedback.

3.4 Procedures

The study received ethics clearance from an institutional review board before the experiment was conducted. The Modern Educational Technology course, which is intended to prepare students to design instructional resources, was held for 3 hours a week over 16 weeks; the intervention lasted 6 weeks. The course instructor had more than eight years of teaching experience in higher education and was the same in both research conditions.

The experimental procedure is illustrated in Fig. 3. As unfamiliarity with flipped learning can adversely affect its implementation (Singh et al., 2022), the instructor provided students with information on how to excel in flipped classrooms during Week 1. To ensure compliance with pre-class study (He et al., 2016), the instructor explained to students the importance of pre-class preparation for subsequent face-to-face instruction and monitored their weekly video progress through the LMS dashboard. Students were also told upfront that instructional content in both pre-class videos and in-class sessions would be assessed in the performance test in Week 6, which would constitute 15% of the course grade. In class, the students completed the pre-test in 20 min and the pre-experiment questionnaire in 10 min.

In the pre-class stage of flipped learning during Weeks 2 to 5, students watched instructional videos, practised the operation steps explained in the videos, and wrote down any questions they had. The difference was that the experimental group watched videos embedded with questions, whereas the videos viewed by the control group contained no such questions. For both research conditions, the ‘prevent skipping’ feature was turned on to prevent students from skipping one or more portions of a video while watching it for the first time. As learner control is crucial for video-based learning (Fyfield et al., 2022), students were given control over playback and pause while watching pre-class videos.

During the in-class sessions, the instructor helped the students consolidate and extend their knowledge through various learning activities. In the experimental condition, questions embedded in the pre-class videos were reviewed at the beginning of each in-class session. The instructor applied a questioning strategy to guide the students in reviewing the embedded questions (Wei et al., 2020). Specifically, the instructor called on students to answer questions and provide justifications for their chosen options. After the students attempted to justify their answers, the instructor did not directly point out whether the answer was correct, but rather randomly called on other students to comment on the answers provided. This is because students tend to value the instructor’s role as a moderator rather than as an information deliverer during in-class activities (McLean & Attardi, 2018). The instructor then provided verbal feedback on students’ answers, justifications, and comments. This process ensured that as many students as possible actively recalled the learning content and participated in the review process.

Under both research conditions, the instructor addressed students’ questions. Subsequently, students were instructed to download a task sheet from the LMS and complete it on their personal computers. Each task sheet contained at least three tasks. While the students worked to complete the task sheet, the instructor circulated the classroom, interacted with students to keep them on track, and provided scaffolding questions and feedback to address their immediate queries. Students were instructed to upload the completed task sheets to Padlet, a real-time collaborative web platform. After completing the tasks, students were called on to present their completed work to the class and verbally explain the operation procedures, and the instructor commented on the students’ work and explanations. Finally, the instructor summarised the key information that emerged from the pre-class videos and in-class sessions by drawing connections among the concepts.

In Week 6, the experimental and control groups completed the same post-experiment questionnaire and learning performance test. The performance test was a closed-book exam, during which students had no access to pre-class videos or other study materials. The students were required to complete the test within 50 min. To control for the potential influence of familiarity with the stimuli on test performance, the questions in the learning performance test were intentionally designed to be distinct from those presented in the pre-class videos and reviewed during the in-class sessions.

3.5 Measurements

Key variables were collected and measured using a pre-test, pre-experiment questionnaire, post-test, post-experiment questionnaire, and learning analytics. All items were presented to the students in Chinese.

The pre-test was designed to detect possible differences in prior knowledge between the experimental and control groups. The pre-test included 20 multiple-choice questions that were delivered to the participants in random order. Each correct answer was given 1 point, up to a maximum score of 20. Questions on the pre-test were relevant to the instructional content but differed from the questions embedded in the pre-class videos and those used in the post-test. The difficulty level of the pre-test was lower than that of the post-test because the students had little previous experience with the topic to be learned. A reliability test was not performed because the pre-test was not designed to assess a coherent construct (van Alten et al., 2020b). Indeed, a high Cronbach’s alpha value may be undesirable when a performance test contains items tested across a range of concepts (Taber, 2017).

The pre-experiment questionnaire assessed the students’ motivation, perceived knowledge, and demographic information. Motivation was measured to investigate whether participants in different research conditions were equally motivated to study the instructional content. A global item was used to measure the level of motivation of students on an 11-point Likert scale (i.e. ‘How motivated are you to learn about this topic’) from (0) not very much motivated to (10) very much motivated. Because a higher level of perceived knowledge can result in greater feelings of efficacy and ultimately shape one’s behaviour (Eija et al., 2017), perceived knowledge was measured to understand whether the two research conditions differed in the knowledge that students believed they held. The questionnaire used a global item addressing students’ perceived knowledge about the topic to be learned on an 11-point Likert scale (i.e. ‘How much do you know about this topic’), from (0) not very knowledgeable to (10) very knowledgeable. Additionally, the questionnaire asked participants to report their demographic information, including year of birth, sex, and study major. The year of birth was transformed into age for data analysis.

The post-test was designed to detect possible differences in learning performance between the two research conditions after the intervention was implemented. Students’ performance was evaluated based on their cognitive level of knowledge retention. The post-test consisted of 40 multiple-choice questions and 10 sequencing tasks. One point was given for each correct answer, with a total of 50 points for the post-test. The questions and options in the post-test were randomly ordered for each student. As with the pre-test, a reliability analysis was not performed because the post-test was not intended to measure a single underlying construct.

The post-experiment questionnaire evaluated students’ engagement with the instructional videos. The student engagement scale included 12 items adapted from the course engagement scale used in a previous study (Deng et al., 2020). Learners rated their level of engagement with the pre-class videos by scoring the 12 questions (e.g. ‘I often searched for further information when I encountered something in the instructional video that puzzled me’) on a 6-point Likert scale from (0) not agree at all to (6) totally agree. A high internal consistency of the student engagement scale was indicated by a Cronbach’s alpha value of 0.83 in this study. Responses to the 12 items were averaged to yield an aggregated engagement score, which was operationalised as an outcome variable in the data analysis. The post-experiment questionnaire measured student satisfaction with videos using two items adapted from Alqurashi (2018) on a 6-point Likert scale. Researchers averaged the items to obtain an aggregate satisfaction score. A Cronbach’s alpha value of 0.82 indicated good reliability of the construct.
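As an illustration of how such a scale can be summarised, the sketch below computes Cronbach’s alpha from the item variances and the variance of the summed scores, and averages items into an aggregate score. The response data are hypothetical and are not taken from the study; the study itself reports using SPSS.

```python
from statistics import mean, variance


def cronbach_alpha(responses):
    """responses: one list of item scores per respondent (all the same length)."""
    k = len(responses[0])
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def composite_score(row):
    """Average the item scores into one aggregate score per respondent."""
    return mean(row)


# Hypothetical responses from four students to three items (not study data).
responses = [[4, 5, 4], [2, 3, 2], [5, 5, 6], [3, 3, 3]]
alpha = cronbach_alpha(responses)
scores = [composite_score(row) for row in responses]
```

Alpha approaches 1 when respondents who score high on one item also score high on the others, which is why it is read as an index of internal consistency.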

The researchers collected learners’ viewing times of instructional videos from the LMS. Viewing time was measured in minutes. To ensure data accuracy, learners were asked to watch pre-class videos from the LMS and were not permitted to download these videos to their personal computers. The average amount of time each learner spent watching the videos was calculated and operationalised as the outcome variable in the data analysis.

4 Results

The predictor variable consisted of two independent groups, with different participants in each group. Outcome variables were measured on a continuous scale. IBM SPSS Statistics Version 29 was used to process and analyse the data. Prior to the analysis, the study variables were examined for outliers and assumptions of normality. First, we calculated the z-scores and inspected stem-and-leaf plots to identify outliers. Two participants were excluded from the control and experimental groups because they had very low post-test performance scores (z-score < −3.0), leaving 68 cases for analysis. Next, skewness and kurtosis statistics were calculated, and histograms were inspected to check for normality. The outcome variables exhibited univariate normality, with skewness and kurtosis statistics within the acceptable range of −2 to +2, indicating that parametric tests were appropriate for analysing the data (George & Mallery, 2016).
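The screening steps above can be sketched in a few lines. This is an illustration under stated assumptions rather than the SPSS procedure itself: the scores are hypothetical, and the skewness and kurtosis functions use the bias-adjusted sample formulas that statistical packages commonly report.

```python
from statistics import mean, stdev


def z_scores(values):
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def flag_outliers(values, cutoff=3.0):
    """Indices of cases whose absolute z-score exceeds the cutoff."""
    return [i for i, z in enumerate(z_scores(values)) if abs(z) > cutoff]


def skewness(values):
    n = len(values)
    return n / ((n - 1) * (n - 2)) * sum(z ** 3 for z in z_scores(values))


def excess_kurtosis(values):
    n = len(values)
    fourth = sum(z ** 4 for z in z_scores(values))
    return (n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * fourth
            - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))


# Hypothetical post-test scores (not study data); the last case is extreme.
scores = [40, 41, 39, 42, 38] * 3 + [40, 41, 39, 42, 5]
outliers = flag_outliers(scores)
kept = [v for i, v in enumerate(scores) if i not in outliers]
# Apply the -2 to +2 rule of thumb to the remaining cases.
normal_enough = -2 <= skewness(kept) <= 2 and -2 <= excess_kurtosis(kept) <= 2
```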

The researchers checked whether the participants in the two research conditions differed significantly in terms of demographic variables, including age and sex. Results were considered significant at a p-value < 0.05. Because the assumption of homogeneity of variance was not met for age, Welch’s t-test was conducted (West, 2021). The results revealed no significant differences in age between the experimental (M = 20.22, SD = 0.51) and control groups (M = 20.51, SD = 0.75), t(66) = 1.91, p = 0.06. A chi-square test of independence revealed no significant sex differences between the groups (χ²(1, N = 68) = 1.57, p = 0.21).
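For readers who want to see how Welch’s statistic is formed, the following sketch computes it from summary statistics. The means and standard deviations are the reported age values; the group sizes (41 and 27) are an assumption, because the post-exclusion group sizes are not stated in the text.

```python
from math import sqrt


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2
    t = (mean1 - mean2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Control group first, experimental group second.
# ASSUMPTION: n = 41 and n = 27 are illustrative group sizes, not reported values.
t, df = welch_t(20.51, 0.75, 41, 20.22, 0.51, 27)
```

Under these assumed group sizes, the sketch gives a t value of roughly 1.90 with about 66 degrees of freedom, which is close to the reported result.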

The researchers then checked whether the participants in the two research conditions differed in motivation, perceived knowledge, and prior knowledge of the topic to be learned. The experimental (M = 6.56, SD = 2.67) and control groups (M = 6.88, SD = 2.25) demonstrated no significant differences in motivation to study, t(66) = 0.54, p = 0.59. Similarly, there was no significant difference between the experimental (M = 5.22, SD = 1.93) and control groups (M = 4.27, SD = 2.09) in perceived knowledge, t(66) = − 1.90, p = 0.06, or between the experimental (M = 16.04, SD = 1.93) and control groups (M = 15.37, SD = 2.40) in prior knowledge, t(66) = − 1.12, p = 0.23. The absence of statistical differences in age, sex, motivation, prior knowledge, and perceived knowledge indicates that the effects of the educational intervention on learning performance could be analysed. As the level of prior knowledge and interest can affect the flipped learning experience (Chen et al., 2023), this study operationalised prior knowledge and pre-experiment motivation as covariates in analysis of covariance (ANCOVA).

4.1 What is the effect of the intervention on student engagement with pre-class videos?

An independent t-test was performed to investigate the differences in student engagement with pre-class videos between the experimental and control groups. The researchers found no statistically significant difference in the aggregated engagement scores between the groups, t(44) = − 0.87, p = 0.39, despite the fact that the experimental group (M = 4.64, SD = 0.83) demonstrated higher scores than the control group (M = 4.48, SD = 0.60; Table 1).

To take a closer look at the group differences in student engagement with the videos, a series of independent t-tests was performed to identify the relationship between the intervention and the scale values for each item. Results showed no statistically significant differences except for the item ‘I set aside a regular time each week to watch the instructional video’, for which the experimental group (M = 5.33, SD = 1.11) demonstrated significantly higher scores than the control group (M = 4.07, SD = 1.21), t(66) = − 4.34, p < 0.001.

The researchers further inspected group differences on this item when controlling for pre-experiment motivation. Levene’s test indicated that the variances could be assumed to be equal between the experimental and control groups for the item scores (p > 0.05). A customised model, including the main effects of intervention and motivation scores and the interaction term (intervention × motivation scores), indicated that the assumption of homogeneity of regression slopes was met, F(1, 64) = 0.24, p > 0.05. Once the assumptions were shown to have been met, a one-way ANCOVA was performed to determine the difference in this engagement item between the two groups when controlling for pre-experiment motivation. A significant effect of the covariate pre-experiment motivation on this item was observed, F(1, 65) = 6.21, p = 0.02. Despite this, there was still a significant effect of the intervention on students setting aside regular time to watch the video after controlling for the effect of pre-experiment motivation, F(1, 65) = 21.70, p < 0.001 (Table 2).

Researchers recommend using partial eta squared (ηp²) to enhance the comparability of effect sizes among experimental studies (Kahn, 2017; Richardson, 2011). The following equation was used to calculate the proportion of variance explained by the intervention, where SSEffect is the sum of squares of the intervention effect, and SSError is the sum of squares of the error associated with the effect:

$$\eta_p^2 = \frac{SS_{\text{Effect}}}{SS_{\text{Effect}} + SS_{\text{Error}}}$$

The partial-eta-squared value of 0.25 implied that approximately 25% of the unique variance in the item was ascribable to the intervention, corresponding to a large effect size (Cohen, 1988).
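When only an F statistic and its degrees of freedom are available, partial eta squared can be recovered from them, because F is the ratio of SSEffect and SSError after each is divided by its degrees of freedom. The following is a minimal sketch of that conversion, not the authors' actual procedure; the function name is hypothetical. It is applied to the reported intervention effect on the 'regular time' item, F(1, 65) = 21.70.

```python
def partial_eta_squared_from_f(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic.

    Uses the identity
    SS_effect / (SS_effect + SS_error) = (F * df_effect) / (F * df_effect + df_error).
    """
    if f_value < 0 or df_effect <= 0 or df_error <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Intervention effect on the 'regular time' item, as reported in the text.
eta_item = partial_eta_squared_from_f(21.70, 1, 65)
print(round(eta_item, 2))  # 0.25
```

The result agrees with the reported value of 0.25, which suggests the reported F statistic and effect size are mutually consistent.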

4.2 What is the effect of the intervention on students’ viewing time of pre-class videos?

An independent t-test was conducted to explore the differences in viewing times between the experimental and control groups. The results revealed no statistically significant differences in the viewing time of the instructional videos, t(66) = 0.91, p = 0.37, even though the experimental group (M = 17.57, SD = 6.78) demonstrated a shorter viewing time than the control group (M = 19.23, SD = 7.78) (Table 3).
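For readers who wish to see how such a t statistic follows from the reported means and standard deviations, the sketch below computes a pooled-variance (Student's) t from summary statistics. The group sizes are not stated in this section, so equal groups of 34 are assumed purely for illustration (only their total is implied by the 66 degrees of freedom); the function name is also hypothetical. Because of this assumption and of rounding in the reported values, the result should only approximate the reported t(66) = 0.91.

```python
import math


def pooled_t_from_summary(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Student's independent-samples t statistic (equal variances assumed)."""
    df = n_a + n_b - 2
    # Pooled variance weights each group's variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * sd_a**2 + (n_b - 1) * sd_b**2) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / std_err, df


# ASSUMPTION: 34 students per group (hypothetical; not reported in this section).
N_PER_GROUP = 34

# Control group entered first so that the sign matches the reported positive t.
t_stat, df = pooled_t_from_summary(19.23, 7.78, N_PER_GROUP, 17.57, 6.78, N_PER_GROUP)
print(f"t({df}) = {t_stat:.2f}")
```

Under any plausible split of the 68 students, the statistic stays well below the two-tailed critical value of about 2.00, in line with the non-significant result.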

4.3 What is the effect of the intervention on students’ post-test performance?

An ANCOVA was used to determine the associations between the intervention and post-test performance while controlling for pre-test scores. Prior to the analysis, Levene’s test was performed to assess whether the variances of the experimental and control groups were approximately equal for learning performance scores. The results suggested that the assumption of homogeneity was tenable, and the variances could thus be assumed to be equal between the two research conditions (p > 0.05). To test the assumption of homogeneity of the regression slopes, the researchers customised an ANCOVA model to inspect the interaction between the predictor variable and the covariate. The customised model included the main effects of the intervention and pre-test scores, and the interaction term (intervention × pre-test scores). The results show that the assumption of homogeneity of the regression slopes was met, F(1, 64) = 0.01, p > 0.05.

As all assumptions were met, an ANCOVA was conducted to determine the differences in learning performance between the experimental and control groups when controlling for the level of prior knowledge. The results of the ANCOVA are presented in Table 4. The covariate pre-test scores were significantly related to post-test performance, F(1, 65) = 6.13, p < 0.05. The intervention had a significant effect on post-test performance after controlling for the effect of pre-test scores, F(1, 65) = 7.64, p < 0.05. In other words, the intervention significantly improved the students’ academic performance.
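The two-step logic described above (first testing the intervention × pre-test interaction, then testing the intervention with the pre-test as covariate) can be sketched as a pair of nested-model F tests. The example below is an illustration only: the twelve scores are invented, the helper names are hypothetical, and the authors' own analysis was presumably run in a statistics package rather than with this code.

```python
def solve_linear_system(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def residual_ss(design, y):
    """Residual sum of squares of an ordinary least-squares fit."""
    k = len(design[0])
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(design, y)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    return sum((yi - sum(b * v for b, v in zip(beta, row))) ** 2
               for row, yi in zip(design, y))


def nested_f(y, reduced, full):
    """F test for the terms present in `full` but absent from `reduced`."""
    df_effect = len(full[0]) - len(reduced[0])
    df_error = len(y) - len(full[0])
    ss_error = residual_ss(full, y)
    ss_effect = residual_ss(reduced, y) - ss_error
    f_value = (ss_effect / df_effect) / (ss_error / df_error)
    return f_value, df_effect, df_error, ss_effect, ss_error


# INVENTED data for illustration: 0 = control, 1 = experimental.
group = [0] * 6 + [1] * 6
pre = [40, 50, 55, 60, 65, 70, 42, 48, 56, 61, 66, 72]
post = [52, 58, 63, 61, 70, 72, 60, 66, 69, 74, 75, 83]

covariate_only = [[1, x] for x in pre]
main_effects = [[1, g, x] for g, x in zip(group, pre)]
with_interaction = [[1, g, x, g * x] for g, x in zip(group, pre)]

# Step 1: homogeneity of regression slopes (interaction term should be negligible).
slopes = nested_f(post, main_effects, with_interaction)
# Step 2: ANCOVA test of the intervention, adjusting for the pre-test.
ancova = nested_f(post, covariate_only, main_effects)

print(f"slopes:  F({slopes[1]}, {slopes[2]}) = {slopes[0]:.2f}")
print(f"ancova:  F({ancova[1]}, {ancova[2]}) = {ancova[0]:.2f}")
```

The sketch stops at the F statistics; p values would come from an F distribution, for instance via `scipy.stats.f.sf(f_value, df_effect, df_error)`. Note that the error degrees of freedom drop by one when the interaction term is added, which mirrors the F(1, 64) and F(1, 65) pattern reported in the text.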

The calculation yielded a partial eta squared value of 0.11, indicating that approximately 11% of the unique variance in post-test performance was attributable to the intervention. Based on Cohen’s (1988) guidelines for the behavioural sciences, partial η² = 0.11 corresponds to a medium effect size.
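The effect-size labels used in this section follow the benchmarks commonly attributed to Cohen (1988) for eta squared: roughly 0.01 for a small, 0.06 for a medium, and 0.14 for a large effect. The sketch below encodes those cut-offs; the function name is hypothetical, and applying the benchmarks to partial eta squared is a convention rather than a strict rule.

```python
def label_effect_size(partial_eta_sq: float) -> str:
    """Label an effect using the conventional eta-squared benchmarks.

    ASSUMPTION: cut-offs of 0.01 (small), 0.06 (medium) and 0.14 (large),
    as commonly attributed to Cohen (1988).
    """
    if not 0.0 <= partial_eta_sq <= 1.0:
        raise ValueError("partial eta squared must lie between 0 and 1")
    if partial_eta_sq >= 0.14:
        return "large"
    if partial_eta_sq >= 0.06:
        return "medium"
    if partial_eta_sq >= 0.01:
        return "small"
    return "negligible"


print(label_effect_size(0.11))  # medium (post-test performance)
print(label_effect_size(0.25))  # large (regularity item)
```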

4.4 What is the effect of the intervention on students’ satisfaction with pre-class videos?

ANCOVA was adopted to examine the relationship between the intervention and students’ satisfaction with the pre-class videos while controlling for their pre-experiment motivation scores. The researchers checked the assumption of equal variance and homogeneity of the regression slopes before the analysis. Levene’s test indicated that the variances in student satisfaction could be assumed to be equal between the experimental and control groups (p > 0.05). A customised ANCOVA model, including the main effects of intervention and motivation scores and the interaction term (intervention × motivation scores), was used to inspect the interaction between the predictor variable and covariate. The assumption of homogeneity in the regression slopes was retained, F(1, 64) = 0.16, p > 0.05.

As all assumptions were satisfied, a one-way ANCOVA was implemented to assess the effect of the intervention on satisfaction with the pre-class videos, controlling for pre-experiment motivation. The results of the ANCOVA are presented in Table 5. The covariate motivation scores were significantly related to student satisfaction, F(1, 65) = 5.78, p < 0.05. In other words, the more motivated the students were, the more satisfied they were with the pre-class videos. After controlling for the effect of pre-experiment motivation scores, the effect of the intervention on student satisfaction was not statistically significant, F(1, 65) = 0.02, p > 0.05. The educational intervention therefore did not result in greater satisfaction with the pre-class videos.

5 Discussion

The results revealed that the intervention significantly improved post-test scores, and there was a medium effect size favouring the effectiveness of the intervention. Previous research has shown that embedding questions in instructional videos is more effective than priming students to read statements in presented materials (Griswold et al., 2017) or watching videos without any questions (van der Meij & Bӧckmann, 2020). It is noteworthy that the mere presence of questions in instructional videos may not always be sufficient to promote student learning. For example, Kestin and Miller (2022) recently found that solely incorporating embedded questions into educational videos had no effect on learning performance; however, combining embedded questions with enhanced visuals substantially contributed to learning gains. As this is one of the few studies that has examined the combined effect of inserting and reviewing embedded questions, its results indicate that interpolating pre-class videos with questions and subsequently reviewing these questions in face-to-face class sessions is beneficial for learning.

This study found that the intervention had a significant effect on students setting aside a regular time each week to watch the pre-class videos, and the intervention showed a large effect size relative to the control condition. In other words, the experimental group showed a tendency towards improved regularity of learning. One possible explanation for this outcome is that the intervention helps students stay involved in the learning process, motivating them to establish a regular learning pattern. Students tend to be more self-regulated and study consistently when they are actively involved in learning activities (Qiao et al., 2023; Tang & Hew, 2022). Incorporating interactive opportunities, such as providing frequent assessment and feedback, helps students maintain their attention and make progress towards their learning goals (Saqr & López-Pernas, 2021). When students watch a video without interpolated questions and are not given opportunities to review these questions in class, they may passively view the video without fully engaging with the materials or processing the content (Sun & Lin, 2022). In this case, they could lack the motivation to establish a consistent and regular learning routine.

The results of this study also corroborate previous findings that the regularity of engagement with pre-class learning materials on a weekly basis is positively associated with learning performance in flipped classrooms (Jovanović et al., 2019). In this study, students’ post-test performance and regularity of engaging with pre-class videos were positively correlated, r(68) = 0.27, p < 0.05. When students engage in regular, repeated practice of a skill or learning activity, they reinforce their knowledge, making it easier for them to recall and apply that knowledge in the future (Förster et al., 2022). Moreover, regularity helps solidify new information into long-term memory, enabling students to retain the information over a longer period of time (Theobald, 2021). This explains why it is important for students to maintain regular study schedules and to engage in consistent, focused practice to enhance learning performance. Empirical research supports this view by showing that individuals who make good use of study time and keep up with course materials tend to perform well academically (Neroni et al., 2019). For instance, Förster et al. (2022) observed that watching pre-class videos before attending in-class sessions was linked to superior learning performance and that the timing of video watching outweighed the frequency of video watching in determining knowledge retention. Aggregation of findings indicates that the mechanism of the effect of the intervention on learning performance may be its promotion of regularity of learning in flipped classrooms, which is known to enhance student success in traditional learning environments (Jovanović et al., 2021). In the present study, students in the experimental group may have worked on a more regular basis to acquire key information from viewing pre-class videos, and subsequently retrieved this information through the review activity and effectively combined it with the knowledge obtained during in-class sessions.
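The significance of the correlation reported above, r(68) = 0.27, can be checked by converting r to a t statistic with the same degrees of freedom. The sketch below is illustrative; the function name is hypothetical, and the critical value of 2.00 is an approximation taken from standard t tables (the two-tailed 5% value is 2.000 at df = 60 and slightly smaller at df = 68).

```python
import math


def t_from_r(r: float, df: int) -> float:
    """Convert a Pearson correlation to its t statistic with df = n - 2."""
    return r * math.sqrt(df / (1 - r**2))


# ASSUMPTION: approximate two-tailed critical t at alpha = .05 for df near 68.
CRITICAL_T = 2.00

t_stat = t_from_r(0.27, 68)
is_significant = abs(t_stat) > CRITICAL_T
print(f"t(68) = {t_stat:.2f}, significant at .05: {is_significant}")
```

The statistic exceeds the critical value, consistent with the reported p < 0.05, although the margin is modest for a correlation of this size.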

The present study indicated that the intervention had no impact on students’ viewing time for pre-class videos. In video-based learning environments, an increase in viewing time can be interpreted as a positive signal of a higher level of interaction with learning materials (Vural, 2013) and more active processing of video content (van der Meij & Bӧckmann, 2020). Simultaneously, the same indicator can be considered as indicating processing difficulty and the exertion of greater cognitive effort (Barut Tugtekin & Dursun, 2022). Recently, a learning analytics study showed that individuals who performed at an average level on the pre-test and whose performance was manifestly degraded at the end of the course exhibited the largest time ratio for skipping backwards, whereas those who scored the highest in the pre-test and post-test demonstrated only a small skipping-backwards time ratio, indicating that a longer viewing time may not necessarily imply academic success (Liao & Wu, 2023). Future research could include video interaction events (e.g. Yurum et al., 2023) to provide a more nuanced understanding of the impact of the intervention on learning processes.

The current study showed that the intervention did not affect students’ satisfaction with the pre-class videos. The adjusted mean score for satisfaction was approximately 4.8 on a 6-point scale for both the experimental and control groups. This result echoes the previous finding that both the question-present and question-absent groups reported a satisfaction mean score of 5.5 on a 7-point scale (van der Meij & Bӧckmann, 2020). This result is also in line with findings from cross-sectional studies showing that students tend to react positively to instructional videos interpolated with questions (Desai & Kulkarni, 2022; Palaigeorgiou & Papadopoulou, 2019). The aggregated findings suggest that learners report positive appraisals of videos containing embedded questions. The absence of an effect of the intervention on student satisfaction may be attributable to the overall positive appraisals of instructional content, which could overshadow the perceived benefits of the embedded questions (van der Meij & Bӧckmann, 2020). Based on these findings, our study provides several theoretical contributions and practical implications, which are discussed below.

5.1 Theoretical contributions

First, this study reinforces the view that boundary conditions should be considered a means of theory development in multimedia learning research (Deng & Gao, 2023a). It highlights that contextual factors in an authentic learning environment can mediate the efficacy of design principles observed under laboratory conditions (Fyfield et al., 2019). For example, while laboratory studies have shown that videos containing seductive details can decrease learning performance, the study by Maloy et al. (2019) reveals that such videos do not harm students’ actual or perceived learning in flipped classrooms. Although interpolating videos with questions has been shown to enhance student learning in experiments conducted in laboratory or artificial settings (Kestin & Miller, 2022; Tweissi, 2016; van der Meij & Bӧckmann, 2020), evidence regarding its effectiveness in flipped classrooms is not yet well-established. Flipped classroom observations and experiments have been conducted in ecologically valid settings; however, existing flipped classroom research often provides ‘little, if any, information on the design of the instructional videos’ (van der Meij & Dunkel, 2020, p. 2). This study represents an important step towards understanding how instructors can refine the design and utilisation of instructional videos to improve student learning. As videos form an instrumental part of flipped learning, future research should continue exploring effective interventions for optimising the use of video resources in flipped classrooms.

Second, our study contributes to the flipped learning literature by examining the combined effects of embedding and reviewing questions on learning processes and outcomes in flipped classrooms. The results demonstrate that embedding questions in pre-class videos and reviewing them during in-class sessions promotes consistent learner engagement and leads to improved learning performance. The possible mechanism of the effect of the intervention on performance could derive from students’ regular engagement with pre-class materials, which may have primed them to establish a more regular study pattern to make sense of the materials, leading to improved academic achievement (Jovanović et al., 2019). These findings reinforce previous evidence from experimental (van der Meij & Bӧckmann, 2020) and cross-sectional studies (Desai & Kulkarni, 2022) that the question-present and question-absent conditions demonstrate comparable satisfaction levels, indicating that embedded questions exert a limited influence on affective outcomes. The findings also advance our understanding of the specific advantages and boundary conditions of interpolated questions.

5.2 Practical implications

This study has important practical implications for designing video-based flipped classrooms. Despite the pervasive use of instructional videos as pre-class learning materials, there is a paucity of evidence-based studies that inform instructors on how to improve the effectiveness of video-based flipped learning (van der Meij & Dunkel, 2020). This study provides empirical evidence that students perform better and maintain a more consistent learning pattern when questions are embedded in pre-class videos and reviewed during in-class sessions. Although the researchers cannot dismiss the potential effectiveness of other instructional techniques, the findings strongly support the practice of incorporating questions into pre-class videos and reviewing them at the beginning of in-class sessions. Therefore, instructors can employ this technique to emphasise the most salient learning points. Moreover, the design of the educational intervention was thoroughly described, which will allow other researchers to replicate this study in different flipped classrooms. Not all interactive elements embedded in videos promote content learning (Cojean & Jamet, 2022). Future studies should continue to identify empirically validated teaching practices and their associated parameters. Such endeavours would enable more effective implementation of flipped classrooms, particularly for instructors who are not familiar with this teaching mode (Buhl-Wiggers et al., 2023b).

6 Limitations and future directions

First, like many other flipped learning studies, this study considers the use of videos as an essential part of pre-class learning activities. Despite the pervasive use of videos, pre-class activities in flipped classrooms can be structured in many ways. Future studies should explore and optimise the design of pre-class activities beyond watching instructional videos, such as peer coaching (Xu et al., 2021), game-based learning activities (Hwang & Chang, 2023), and the application of new technologies such as virtual reality (VR; Marks & Thomas, 2022) and the metaverse (Onu et al., 2023). For instance, the results of our study can be extended to the context of flipped classes based on VR technologies such as virtual laboratories. In that regard, future work could investigate whether embedding questions and cues in VR learning materials has an impact on student performance. Second, this study was based on a content area featuring procedural knowledge. Future research could replicate this study in other study areas and explore whether the effectiveness of the intervention is mediated by knowledge type (Liu et al., 2023). Third, this study evaluated learning performance at the cognitive level of knowledge retention. Future studies should focus on the development of higher cognitive levels of learning, such as knowledge application (e.g. Wang et al., 2023). Lastly, our study aimed to assess the combined effects of embedding and reviewing questions, and we did not investigate their individual effects. This approach was motivated by previous studies (e.g. Mar et al., 2017) that have reported mixed findings regarding the effectiveness of solely inserting questions in videos to enhance students’ performance. Additionally, our recent research (Deng & Gao, 2023b) has indicated that solely inserting questions in videos does not significantly improve students’ performance. Although investigating the combined effects of multiple instructional strategies is common in experiments conducted in flipped classrooms (e.g. Jung et al., 2022; Yoon et al., 2021) and broader educational settings (e.g. de Buisonjé et al., 2017; Marwaha et al., 2021; Motameni, 2018), isolating the individual effects of the explanatory variables would further determine whether the effectiveness of the intervention is attributable to presenting questions in videos, reviewing questions during class, or their combination. Future research could advance this line of inquiry by adding conditions in which students receive only the pre-class videos interpolated with questions or only the opportunity to review the questions in class.

7 Conclusions

To improve learning effectiveness, instructors commonly interpolate pre-class instructional videos with questions (Cornwell, 2021; Fidan, 2023; Gibson & Shelton, 2021; Gündüz & Akkoyunlu, 2019). However, studies of the effectiveness of this design practice have yielded inconsistent results in flipped classrooms. While some researchers have reported positive effects (e.g. Haagsman et al., 2020), others have found that the presence of questions has a limited contribution to flipped learning (e.g. Deng & Gao, 2023b; Mar et al., 2017). This may be because individuals who watched the question-embedded videos did not fully understand the solutions to these questions, and additional guidance is warranted to clarify students’ doubts and better prepare them for subsequent learning activities. In this vein, this study designed an educational intervention in which the experimental group watched pre-class videos interpolated with multiple-choice questions and was given the opportunity to review these questions at the beginning of each in-class session. The results showed that the intervention had a positive influence on learning performance, with a medium effect size. The intervention also improved the regularity of learning, and a large effect was observed. The experimental conditions did not affect student satisfaction with pre-class videos or the video viewing duration. The study findings have important implications for flipped learning literature, as well as for instructors and educational institutions seeking to enhance the effectiveness of video-based flipped classrooms.

Data availability

The datasets analysed during the current study are available from the corresponding author upon reasonable request.

Change history

23 December 2023

A Correction to this paper has been published: https://doi.org/10.1007/s10639-023-12432-x

References

Akparibo, R., Osei-Kwasi, H. A., & Asamane, E. A. (2021). Flipped learning in the context of postgraduate public health higher education: A qualitative study involving students and their tutors. International Journal of Educational Technology in Higher Education, 18, Article e58. https://doi.org/10.1186/s41239-021-00294-7

Algarni, B., & Lortie-Forgues, H. (2022). An evaluation of the impact of flipped-classroom teaching on mathematics proficiency and self-efficacy in Saudi Arabia. British Journal of Educational Technology, 54(1), 414–435. https://doi.org/10.1111/bjet.13250

Alqurashi, E. (2018). Predicting student satisfaction and perceived learning within online learning environments. Distance Education, 40(1), 133–148. https://doi.org/10.1080/01587919.2018.1553562

Amstelveen, R. (2018). Flipping a college mathematics classroom: An action research project. Education and Information Technologies, 24(2), 1337–1350. https://doi.org/10.1007/s10639-018-9834-z

Awidi, I. T., & Paynter, M. (2019). The impact of a flipped classroom approach on student learning experience. Computers & Education, 128, 269–283. https://doi.org/10.1016/j.compedu.2018.09.013

Aydin, B., & Demirer, V. (2022). Are flipped classrooms less stressful and more successful? An experimental study on college students. International Journal of Educational Technology in Higher Education, 19, Article e55. https://doi.org/10.1186/s41239-022-00360-8

Barut Tugtekin, E., & Dursun, O. O. (2022). Effect of animated and interactive video variations on learners’ motivation in distance education. Education and Information Technologies, 27, 3247–3276. https://doi.org/10.1007/s10639-021-10735-5

Bordes, S. J., Walker, D., Modica, L. J., Buckland, J., & Sobering, A. K. (2021). Towards the optimal use of video recordings to support the flipped classroom in medical school basic sciences education. Medical Education Online, 26(1), Article e1841406. https://doi.org/10.1080/10872981.2020.1841406

Buhl-Wiggers, J., Kjærgaard, A., & Munk, K. (2023a). A scoping review of experimental evidence on face-to-face components of blended learning in higher education. Studies in Higher Education, 48(1), 151–173. https://doi.org/10.1080/03075079.2022.2123911

Buhl-Wiggers, J., la Cour, L., Franck, M. S., & Kjærgaard, A. (2023b). Investigating effects of teachers in flipped classroom: A randomized controlled trial study of classroom level heterogeneity. International Journal of Educational Technology in Higher Education, 20(1), Article e26. https://doi.org/10.1186/s41239-023-00396-4

Çakiroğlu, Ü., Güven, O., & Saylan, E. (2020). Flipping the experimentation process: Influences on science process skills. Educational Technology Research and Development, 68(6), 3425–3448. https://doi.org/10.1007/s11423-020-09830-0

Chang, H. (2021). The effect of embedded interactive adjunct questions in instructional videos [Doctoral dissertation, The Pennsylvania State University]. University Park, Pennsylvania. https://www.proquest.com/openview/4896a7f284d0797e30f6b33384246da2/1?pq-origsite=gscholar&cbl=18750&diss=y

Chen, K. T. C. (2022). Speech-to-text recognition in university English as a foreign language learning. Education and Information Technologies, 27(7), 9857–9875. https://doi.org/10.1007/s10639-022-11016-5

Chen, Y.-T., Liou, S., & Chen, S.-M. (2019). Flipping the procedural knowledge learning – a case study of software learning. Interactive Learning Environments, 29(3), 428–441. https://doi.org/10.1080/10494820.2019.1579231

Chen, T., Luo, H., Wang, P., Yin, X., & Yang, J. (2023). The role of pre-class and in-class behaviors in predicting learning performance and experience in flipped classrooms. Heliyon, 9(4), Article e15234. https://doi.org/10.1016/j.heliyon.2023.e15234

Cho, M.-H., Park, S. W., & Lee, S.-E. (2021). Student characteristics and learning and teaching factors predicting affective and motivational outcomes in flipped college classrooms. Studies in Higher Education, 46(3), 509–522. https://doi.org/10.1080/03075079.2019.1643303

Cho, H. J., Zhao, K., Lee, C. R., Runshe, D., & Krousgrill, C. (2021). Active learning through flipped classroom in mechanical engineering: Improving students' perception of learning and performance. International Journal of STEM Education, 8(1), Article e46. https://doi.org/10.1186/s40594-021-00302-2

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Lawrence Erlbaum Associates.

Cojean, S., & Jamet, E. (2022). Does an interactive table of contents promote learning from videos? A study of consultation strategies and learning outcomes. British Journal of Educational Technology, 53, 269–285. https://doi.org/10.1111/bjet.13164

Cornwell, P. (2021). Interactive videos and “in-class” activities in a flipped remote dynamics class. 2021 ASEE Virtual Annual Conference Content Access, Online. https://peer.asee.org/37371

Das, A., Lam, T. K., Thomas, S., Richardson, J., Kam, B. H., Lau, K. H., & Nkhoma, M. Z. (2019). Flipped classroom pedagogy: Using pre-class videos in an undergraduate business information systems management course. Education and Training, 61(6), 756–774. https://doi.org/10.1108/ET-06-2018-0133

de Buisonjé, D. R., Ritter, S. M., de Bruin, S., ter Horst, J.M.-L., & Meeldijk, A. (2017). Facilitating creative idea selection: The combined effects of self-affirmation, promotion focus and positive affect. Creativity Research Journal, 29(2), 174–181. https://doi.org/10.1080/10400419.2017.1303308

Debbağ, M., & Yıldız, S. (2021). Effect of the flipped classroom model on academic achievement and motivation in teacher education. Education and Information Technologies, 26(3), 3057–3076. https://doi.org/10.1007/s10639-020-10395-x

Deng, R., & Gao, Y. (2023a). A review of eye tracking research on video-based learning. Education and Information Technologies, 28, 7671–7702. https://doi.org/10.1007/s10639-022-11486-7

Deng, R., & Gao, Y. (2023b). Effects of embedded questions in pre-class videos on learner perceptions, video engagement, and learning performance in flipped classrooms. Active Learning in Higher Education. Advance online publication. https://doi.org/10.1177/14697874231167098

Deng, R., Benckendorff, P., & Gannaway, D. (2020). Learner engagement in MOOCs: Scale development and validation. British Journal of Educational Technology, 51(1), 245–262. https://doi.org/10.1111/bjet.12810

Desai, T. S., & Kulkarni, D. C. (2022). Assessment of interactive video to enhance learning experience: A case study. Journal of Engineering Education Transformations, 35, 74–80.

Eija, Y., Eila, J., & Pongsakdi, N. (2017). Primary school student teachers’ perceived and actual knowledge in biology. Center for Educational Policy Studies Journal, 7(4), 125–146. https://doi.org/10.25656/01:15226

Fathi, J., Rahimi, M., & Liu, G. Z. (2022). A preliminary study on flipping an English as a foreign language collaborative writing course with video clips: Its impact on writing skills and writing motivation. Journal of Computer Assisted Learning, 39(2), 659–675. https://doi.org/10.1111/jcal.12772

Faul, F., Erdfelder, E., Buchner, A., & Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160. https://doi.org/10.3758/BRM.41.4.1149

Ficano, C. K. C. (2019). Identifying differential benefits from a flipped-group pedagogy in introductory microeconomics. International Review of Economics Education, 30, Article e100143. https://doi.org/10.1016/j.iree.2018.07.002

Fidan, M. (2023). The effects of microlearning-supported flipped classroom on pre-service teachers’ learning performance, motivation and engagement. Education and Information Technologies. Advance online publication. https://doi.org/10.1007/s10639-023-11639-2

Förster, M., Maur, A., Weiser, C., & Winkel, K. (2022). Pre-class video watching fosters achievement and knowledge retention in a flipped classroom. Computers & Education, 179, Article e104399. https://doi.org/10.1016/j.compedu.2021.104399

Fyfield, M., Henderson, M., Heinrich, E., & Redmond, P. (2019). Videos in higher education: Making the most of a good thing. Australasian Journal of Educational Technology, 35(5), 1–7. https://doi.org/10.14742/ajet.5930

Fyfield, M., Henderson, M., & Phillips, M. (2022). Improving instructional video design: A systematic review. Australasian Journal of Educational Technology, 38(3), 155–183. https://doi.org/10.14742/ajet.7296

George, D., & Mallery, M. (2016). IBM SPSS statistics 23 step-by-step: A simple guide and reference. Routledge.

Gibson, J. P., & Shelton, K. (2021). Introductory biology students’ opinions on the pivot to crisis distance education in response to the COVID-19 pandemic. Journal of College Science Teaching, 51(1), 12–18. https://www.jstor.org/stable/27133136

Gladys, M. J., Rogers, L., Sharafutdinova, G., Barnham, N., Nichols, P., & Dastoor, P. C. (2022). Driving course engagement through multimodal strategic technologies. International Journal of Innovation in Science and Mathematics Education, 30(3), 19–31. https://www.jstor.org/stable/27133136

Griswold, L. A., Overson, C. E., & Benassi, V. A. (2017). Embedding questions during online lecture capture to promote learning and transfer of knowledge. American Journal of Occupational Therapy, 71(3), 1–7. https://doi.org/10.5014/ajot.2017.023374

Gündüz, A. Y., & Akkoyunlu, B. (2019). Student views on the use of flipped learning in higher education: A pilot study. Education and Information Technologies, 24(4), 2391–2401. https://doi.org/10.1007/s10639-019-09881-8

Guo, J. (2019). The use of an extended flipped classroom model in improving students’ learning in an undergraduate course. Journal of Computing in Higher Education, 31(2), 362–390. https://doi.org/10.1007/s12528-019-09224-z

Haagsman, M. E., Scager, K., Boonstra, J., & Koster, M. C. (2020). Pop-up questions within educational videos: Effects on students’ learning. Journal of Science Education and Technology, 29(6), 713–724. https://doi.org/10.1007/s10956-020-09847-3

He, W., Holton, A., Farkas, G., & Warschauer, M. (2016). The effects of flipped instruction on out-of-class study time, exam performance, and student perceptions. Learning and Instruction, 45, 61–71. https://doi.org/10.1016/j.learninstruc.2016.07.001

Hew, K. F., Bai, S., Huang, W., Dawson, P., Du, J., Huang, G., Jia, C., & Thankrit, K. (2021). On the use of flipped classroom across various disciplines: Insights from a second-order meta-analysis. Australasian Journal of Educational Technology, 37(2), 132–151. https://doi.org/10.14742/ajet.6475

Hew, K. F., & Lo, C. K. (2018). Flipped classroom improves student learning in health professions education: A meta-analysis. BMC Medical Education, 18(1), Article e38. https://doi.org/10.1186/s12909-018-1144-z

Huang, L., Wang, K., Li, S., & Guo, J. (2022). Using WeChat as an educational tool in MOOC-based flipped classroom: What can we learn from students' learning experience? Frontiers in Psychology, 13, Article e1098585. https://doi.org/10.3389/fpsyg.2022.1098585

Hwang, G.-J., & Chang, C.-Y. (2023). Facilitating decision-making performances in nursing treatments: A contextual digital game-based flipped learning approach. Interactive Learning Environments, 31(1), 156–171. https://doi.org/10.1080/10494820.2020.1765391

Jensen, J. L., Holt, E. A., Sowards, J. B., Heath Ogden, T., & West, R. E. (2018). Investigating strategies for pre-class content learning in a flipped classroom. Journal of Science Education and Technology, 27(6), 523–535. https://doi.org/10.1007/s10956-018-9740-6

Jeong, J. S., González-Gómez, D., & Cañada-Cañada, F. (2021). How does a flipped classroom course affect the affective domain toward science course? Interactive Learning Environments, 29(5), 707–719. https://doi.org/10.1080/10494820.2019.1636079

Jovanović, J., Mirriahi, N., Gašević, D., Dawson, S., & Pardo, A. (2019). Predictive power of regularity of pre-class activities in a flipped classroom. Computers & Education, 134, 156–168. https://doi.org/10.1016/j.compedu.2019.02.011

Jovanović, J., Saqr, M., Joksimović, S., & Gašević, D. (2021). Students matter the most in learning analytics: The effects of internal and instructional conditions in predicting academic success. Computers & Education, 172, Article e104251. https://doi.org/10.1016/j.compedu.2021.104251

Jung, H., Park, S. W., Kim, H. S., & Park, J. (2022). The effects of the regulated learning-supported flipped classroom on student performance. Journal of Computing in Higher Education, 34(1), 132–153. https://doi.org/10.1007/s12528-021-09284-0

Kahn, A. S. (2017). Eta squared. In M. Allen (Ed.), The SAGE encyclopedia of communication research methods (pp. 447–448). SAGE.

Kestin, G., & Miller, K. (2022). Harnessing active engagement in educational videos: Enhanced visuals and embedded questions. Physical Review Physics Education Research, 18(1), Article e010148. https://doi.org/10.1103/PhysRevPhysEducRes.18.010148

Kosmaca, J., & Siiman, L. A. (2023). Collaboration and feeling of flow with an online interactive H5P video experiment on viscosity. Physics Education, 58(1), Article e015010. https://doi.org/10.1088/1361-6552/ac9ae0

Lai, H.-M., Hsieh, P.-J., Uden, L., & Yang, C.-H. (2021). A multilevel investigation of factors influencing university students’ behavioral engagement in flipped classrooms. Computers & Education, 175, Article e104318. https://doi.org/10.1016/j.compedu.2021.104318

Lee, G.-G., Jeon, Y.-E., & Hong, H.-G. (2021). The effects of cooperative flipped learning on science achievement and motivation in high school students. International Journal of Science Education, 43(9), 1381–1407. https://doi.org/10.1080/09500693.2021.1917788

Lee, Y., & Martin, K. I. (2020). The flipped classroom in ESL teacher education: An example from CALL. Education and Information Technologies, 25(4), 2605–2633. https://doi.org/10.1007/s10639-019-10082-6

Liao, C.-H., & Wu, J.-Y. (2023). Learning analytics on video-viewing engagement in a flipped statistics course: Relating external video-viewing patterns to internal motivational dynamics and performance. Computers & Education, 197, Article e104754. https://doi.org/10.1016/j.compedu.2023.104754

Liu, C., Liu, H., & Tan, Z. (2023). Choosing optimal means of knowledge visualization based on eye tracking for online education. Education and Information Technologies, Advance Online Publication. https://doi.org/10.1007/s10639-023-11815-4

Londgren, M. F., Baillie, S., Roberts, J. N., & Sonea, I. M. (2021). A survey to establish the extent of flipped classroom use prior to clinical skills laboratory teaching and determine potential benefits, challenges, and possibilities. Journal of Veterinary Medical Education, 48(4), 463–469. https://doi.org/10.3138/jvme-2019-0137

Maloy, J., Fries, L., Laski, F., & Ramirez, G. (2019). Seductive details in the flipped classroom: The impact of interesting but educationally irrelevant information on student learning and motivation. CBE—Life Sciences Education, 18(3), Article e42. https://doi.org/10.1187/cbe.19-01-0004

Mar, C., Sohoni, S., & Craig, S. D. (2017). The effect of embedded questions in programming education. 2017 IEEE Frontiers in Education Conference, Indianapolis, US.

Marks, B., & Thomas, J. (2022). Adoption of virtual reality technology in higher education: An evaluation of five teaching semesters in a purpose-designed laboratory. Education and Information Technologies, 27(1), 1287–1305. https://doi.org/10.1007/s10639-021-10653-6

Marwaha, A., Zakeri, M., Sansgiry, S. S., & Salim, S. (2021). Combined effect of different teaching strategies on student performance in a large-enrollment undergraduate health sciences course. Advances in Physiology Education, 45(3), 454–460. https://doi.org/10.1152/advan.00030.2021

Mayer, R. E. (2021). Evidence-based principles for how to design effective instructional videos. Journal of Applied Research in Memory and Cognition, 10(2), 229–240. https://doi.org/10.1016/j.jarmac.2021.03.007

McLean, S., & Attardi, S. M. (2018). Sage or guide? Student perceptions of the role of the instructor in a flipped classroom. Active Learning in Higher Education, 24(1), 49–61. https://doi.org/10.1177/1469787418793725

Moghadam, S. N., & Razavi, M. R. (2022). The effect of the Flipped Learning method on academic performance and creativity of primary school students. European Review of Applied Psychology, 72(5), Article e100811. https://doi.org/10.1016/j.erap.2022.100811

Motameni, R. (2018). The combined impact of the flipped classroom, collaborative learning, on students’ learning of key marketing concepts. Journal of University Teaching and Learning Practice, 15(3), 47–65. https://doi.org/10.53761/1.15.3.4

Neroni, J., Meijs, C., Gijselaers, H. J. M., Kirschner, P. A., & de Groot, R. H. M. (2019). Learning strategies and academic performance in distance education. Learning and Individual Differences, 73, 1–7. https://doi.org/10.1016/j.lindif.2019.04.007

Onu, P., Pradhan, A., & Mbohwa, C. (2023). Potential to use metaverse for future teaching and learning. Education and Information Technologies, 1–32. https://doi.org/10.1007/s10639-023-12167-9

Ozudogru, M., & Aksu, M. (2020). Pre-service teachers’ achievement and perceptions of the classroom environment in flipped learning and traditional instruction classes. Australasian Journal of Educational Technology, 36(4), 27–43. https://doi.org/10.14742/ajet.5115

Palaigeorgiou, G., & Papadopoulou, A. (2019). Promoting self-paced learning in the elementary classroom with interactive video, an online course platform and tablets. Education and Information Technologies, 24(1), 805–823. https://doi.org/10.1007/s10639-018-9804-5

Price, C., & Walker, M. (2021). Improving the accessibility of foundation statistics for undergraduate business and management students using a flipped classroom. Studies in Higher Education, 46(2), 245–257. https://doi.org/10.1080/03075079.2019.1628204

Pulukuri, S., & Abrams, B. (2021). Improving learning outcomes and metacognitive monitoring: Replacing traditional textbook readings with question-embedded videos. Journal of Chemical Education, 98(7), 2156–2166. https://doi.org/10.1021/acs.jchemed.1c00237

Qiao, S., Yeung, S. S. S., Zainuddin, Z., Ng, D. T. K., & Chu, S. K. W. (2023). Examining the effects of mixed and non-digital gamification on students’ learning performance, cognitive engagement and course satisfaction. British Journal of Educational Technology, 54(1), 394–413. https://doi.org/10.1111/bjet.13249

Ranellucci, J., Robinson, K. A., Rosenberg, J. M., Lee, Y.-k., Roseth, C. J., & Linnenbrink-Garcia, L. (2021). Comparing the roles and correlates of emotions in class and during online video lectures in a flipped anatomy classroom. Contemporary Educational Psychology, 65, Article e101966. https://doi.org/10.1016/j.cedpsych.2021.101966

Richardson, J. T. E. (2011). Eta squared and partial eta squared as measures of effect size in educational research. Educational Research Review, 6(2), 135–147. https://doi.org/10.1016/j.edurev.2010.12.001

Saqr, M., & López-Pernas, S. (2021). The longitudinal trajectories of online engagement over a full program. Computers & Education, 175, Article e104325. https://doi.org/10.1016/j.compedu.2021.104325

Sauli, F., Cattaneo, A., & van der Meij, H. (2018). Hypervideo for educational purposes: A literature review on a multifaceted technological tool. Technology, Pedagogy and Education, 27(1), 115–134. https://doi.org/10.1080/1475939X.2017.1407357

Scott, J. M., Bohaty, B. S., & Gadbury-Amyot, C. C. (2021). Using learning management software data to compare students’ actual and self-reported viewing of video lectures. Journal of Dental Education, 85(10), 1674–1682.

Sezer, B., & Abay, E. (2019). Looking at the impact of the flipped classroom model in medical education. Scandinavian Journal of Educational Research, 63(6), 853–868. https://doi.org/10.1080/00313831.2018.1452292

Shahnama, M., Ghonsooly, B., & Shirvan, M. E. (2021). A meta-analysis of relative effectiveness of flipped learning in English as second/foreign language research. Educational Technology Research and Development, 69(3), 1355–1386. https://doi.org/10.1007/s11423-021-09996-1

Shen, D., & Chang, C.-S. (2023). Implementation of the flipped classroom approach for promoting college students’ deeper learning. Educational Technology Research and Development, Advance Online Publication. https://doi.org/10.1007/s11423-023-10186-4

Shibukawa, S., & Taguchi, M. (2019). Exploring the difficulty on students’ preparation and the effective instruction in the flipped classroom. Journal of Computing in Higher Education, 31(2), 311–339. https://doi.org/10.1007/s12528-019-09220-3

Singh, J. K. N., Jacob-John, J., Nagpal, S., & Inglis, S. (2022). Undergraduate international students’ challenges in a flipped classroom environment: An Australian perspective. Innovations in Education and Teaching International, 59(6), 724–735. https://doi.org/10.1080/14703297.2021.1948888

Sointu, E., Hyypia, M., Lambert, M. C., Hirsto, L., Saarelainen, M., & Valtonen, T. (2023). Preliminary evidence of key factors in successful flipping: Predicting positive student experiences in flipped classrooms. Higher Education, 85(3), 503–520. https://doi.org/10.1007/s10734-022-00848-2

Song, Y. (2020). How to flip the classroom in school students’ mathematics learning: Bridging in- and out-of-class activities via innovative strategies. Technology, Pedagogy and Education, 29(3), 327–345. https://doi.org/10.1080/1475939X.2020.1749721

Stöhr, C., Demazière, C., & Adawi, T. (2020). The polarizing effect of the online flipped classroom. Computers & Education, 147, Article e103789. https://doi.org/10.1016/j.compedu.2019.103789

Sun, J. C.-Y., & Lin, H.-S. (2022). Effects of integrating an interactive response system into flipped classroom instruction on students’ anti-phishing self-efficacy, collective efficacy, and sequential behavioral patterns. Computers & Education, 180, Article e104430. https://doi.org/10.1016/j.compedu.2022.104430

Taber, K. S. (2017). The use of Cronbach’s alpha when developing and reporting research instruments in science education. Research in Science Education, 48(6), 1273–1296. https://doi.org/10.1007/s11165-016-9602-2

Tang, Y., & Hew, K. F. (2022). Effects of using mobile instant messaging on student behavioral, emotional, and cognitive engagement: A quasi-experimental study. International Journal of Educational Technology in Higher Education, 19, Article e3. https://doi.org/10.1186/s41239-021-00306-6

Thai, N. T. T., De Wever, B., & Valcke, M. (2023). Feedback: An important key in the online environment of a flipped classroom setting. Interactive Learning Environments, 31, 924–937. https://doi.org/10.1080/10494820.2020.1815218

Theobald, M. (2021). Self-regulated learning training programs enhance university students’ academic performance, self-regulated learning strategies, and motivation: A meta-analysis. Contemporary Educational Psychology, 66, Article e101976. https://doi.org/10.1016/j.cedpsych.2021.101976

Tomas, L., Evans, N., Doyle, T., & Skamp, K. (2019). Are first year students ready for a flipped classroom? A case for a flipped learning continuum. International Journal of Educational Technology in Higher Education, 16, Article e5. https://doi.org/10.1186/s41239-019-0135-4

Torres-Martín, C., Acal, C., El-Homrani, M., & Mingorance-Estrada, Á. C. (2022). Implementation of the flipped classroom and its longitudinal impact on improving academic performance. Educational Technology Research and Development, 70(3), 909–929. https://doi.org/10.1007/s11423-022-10095-y

Tse, W. S., Choi, L. Y. A., & Tang, W. S. (2019). Effects of video-based flipped class instruction on subject reading motivation. British Journal of Educational Technology, 50(1), 385–398. https://doi.org/10.1111/bjet.12569

Turan, Z. (2023). Evaluating whether flipped classrooms improve student learning in science education: A systematic review and meta-analysis. Scandinavian Journal of Educational Research, 67(1), 1–19. https://doi.org/10.1080/00313831.2021.1983868

Tutal, Ö., & Yazar, T. (2021). Flipped classroom improves academic achievement, learning retention and attitude towards course: A meta-analysis. Asia Pacific Education Review, 22(4), 655–673. https://doi.org/10.1007/s12564-021-09706-9

Tweissi, A. (2016). The effects of embedded questions strategy in video among graduate students at a Middle Eastern university. Doctoral dissertation, Ohio University. http://rave.ohiolink.edu/etdc/view?acc_num=ohiou1477493805206092

van Alten, D. C. D., Phielix, C., Janssen, J., & Kester, L. (2020). Effects of self-regulated learning prompts in a flipped history classroom. Computers in Human Behavior, 108, Article e106318. https://doi.org/10.1016/j.chb.2020.106318

van Alten, D. C. D., Phielix, C., Janssen, J., & Kester, L. (2020). Self-regulated learning support in flipped learning videos enhances learning outcomes. Computers & Education, 158, Article e104000. https://doi.org/10.1016/j.compedu.2020.104000

van der Meij, H., & Böckmann, L. (2020). Effects of embedded questions in recorded lectures. Journal of Computing in Higher Education, 33, 235–254. https://doi.org/10.1007/s12528-020-09263-x

van der Meij, H., & Dunkel, P. (2020). Effects of a review video and practice in video-based statistics training. Computers & Education, 143, Article e103665. https://doi.org/10.1016/j.compedu.2019.103665

Vural, O. F. (2013). The impact of a question-embedded video-based learning tool on e-learning. Educational Sciences: Theory and Practice, 13(2), 1315–1323. https://eric.ed.gov/?id=EJ1017292

Wagner, M., & Urhahne, D. (2021). Disentangling the effects of flipped classroom instruction in EFL secondary education: When is it effective and for whom? Learning and Instruction, 75, Article e101490. https://doi.org/10.1016/j.learninstruc.2021.101490

Wang, Y., Wang, F., Mayer, R. E., Hu, X., & Gong, S. (2023). Benefits of prompting students to generate summaries during pauses in segmented multimedia lessons. Journal of Computer Assisted Learning, 39(4), 1259–1273. https://doi.org/10.1111/jcal.12797

Wehling, J., Volkenstein, S., Dazert, S., Wrobel, C., van Ackeren, K., Johannsen, K., & Dombrowski, T. (2021). Fast-track flipping: Flipped classroom framework development with open-source H5P interactive tools. BMC Medical Education, 21(1), Article e351. https://doi.org/10.1186/s12909-021-02784-8

Wei, X., Cheng, I. L., Chen, N.-S., Yang, X., Liu, Y., Dong, Y., Zhai, X., & Kinshuk. (2020). Effect of the flipped classroom on the mathematics performance of middle school students. Educational Technology Research and Development, 68(3), 1461–1484. https://doi.org/10.1007/s11423-020-09752-x

West, R. M. (2021). Best practice in statistics: Use the Welch t-test when testing the difference between two groups. Annals of Clinical Biochemistry, 58(4), 267–269. https://doi.org/10.1177/0004563221992088

Wu, Y. Y., Liu, S., Man, Q., Luo, F. L., Zheng, Y. X., Yang, S., Ming, X., & Zhang, F. Y. (2022). Application and evaluation of the flipped classroom based on micro-video class in pharmacology teaching. Frontiers in Public Health, 10, Article e838900. https://doi.org/10.3389/fpubh.2022.838900