Abstract

Pulsed-wave Doppler ultrasound is a widely used technique for monitoring pregnancies. As ultrasound equipment becomes more advanced, it becomes harder to train practitioners to perform the procedure proficiently, since training requires the presence of an expert, access to high-tech equipment, and several volunteer patients. Immersive environments such as mixed reality can help trainees in this regard because they can simulate real environments and objects. In this article, we propose a mixed reality application to facilitate training in pulsed-wave Doppler ultrasound for acquiring a spectrogram to measure blood velocity in the umbilical cord. The application simulates Doppler spectrograms while letting the trainee adjust parameters such as pulse repetition frequency, sampling depth, and beam-to-flow angle. This is achieved by combining an optimized user interface, 3D-printed objects tracked using image recognition, and data acquired from a gyroscope. The application was developed for Microsoft HoloLens as the archetype of mixed reality, and a 3D-printed abdomen was used to simulate a patient. The application aims to aid in both simulated and real-life ultrasound procedures. Expert feedback and user-testing results were collected to validate the purpose and use of the application. The study followed design science research to propose the application while contributing to the literature on leveraging immersive environments for medical training and practice. Based on the results, we conclude that mixed reality can be used efficiently in ultrasound training.

1 Introduction

Doppler ultrasound offers a noninvasive and safe method for measuring blood flow in a wide range of blood vessels, including those of the umbilical cord (Gill, 1985). In fetal blood vessels, Doppler assessment reduces perinatal mortality and morbidity in high-risk pregnancies (Alfirevic et al., 2017; Maulik et al., 2010). Furthermore, by measuring the mother's blood flow to the placenta, a Doppler ultrasound examination around pregnancy week 24 can identify women at high risk of developing preeclampsia, one of the most common complications in pregnancy (Brichant & Bonhomme, 2014). Moreover, proper use of ultrasound can improve prenatal care and increase the detection of fetuses with malnutrition, which causes death both during pregnancy and in the newborn period (Kim et al., 2017).

Pulsed-wave (PW) Doppler ultrasound is the preferred technique for monitoring blood flow in pregnancies and in the umbilical arteries because, compared to continuous-wave Doppler, it provides more precise data about a specific location (Dwivedi, 2018). Gestational age is required for most procedures during pregnancy, and a correct gestational age is the absolute basis for defining fetal growth abnormalities such as growth restriction (Cosmi et al., 2017). One form of growth restriction can be diagnosed by monitoring the diastolic blood flow in the umbilical cord, more specifically in the umbilical artery. Decreased diastolic flow indicates early placental insufficiency, while reversed flow may be a clinical emergency that can lead to fetal death within two weeks (Dwivedi, 2018). For ultrasound practitioners involved in antenatal care and ultrasound procedures, such as midwives, sonographers, radiologists, and gynecologists, proper training is therefore of high importance. It has been suggested that medical-technical equipment and training procedures have limited feasibility where resources are lacking or difficult to access (Dreier, 2012), owing to the limited technical knowledge of trainees and the high sensitivity of the equipment, which can lead to major errors in monitoring pregnancies. In addition, the need for an expert to be present during training, the need for access to high-tech equipment, and a lack of volunteer patients make it challenging to conduct competent ultrasound training (NTNU, 2014).

Several solutions have been proposed in this regard; for example, Palmer et al. (2015) used mobile augmented reality to simulate Doppler spectrograms. Mixed Reality (MR) technology, which includes augmented reality, has been advocated as a way to improve medical training procedures because it can simulate a variety of real-life environments and enable real-time communication between digital and physical objects (Flavián et al., 2019). Yeo and Romero (2020) also used digital simulation to design an ultrasound training environment, but with limited capabilities, such as 2D visualization and restricted movement of the ultrasound probe. The literature has so far overlooked how MR technology can be used to create an environment for training ultrasound practitioners to measure blood flow in the umbilical cord. This gap can be characterized as a case of application and neglect spotting (Sandberg & Alvesson, 2011). Hence, we pose the research question (RQ): How can mixed reality be used to create a pulsed-wave Doppler ultrasound training environment for measuring blood flow in the umbilical cord?

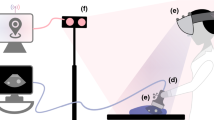

To answer this question, we followed the design science research method of Vaishnavi and Kuechler (2004) and created an MR application with Microsoft HoloLens as the archetype. The application aims to help ultrasound practitioners perform PW Doppler ultrasound, specifically to measure blood flow in the umbilical cord. Trainees can visualize the effect of Pulse Repetition Frequency (PRF), sampling depth, and beam-to-flow angle on the Doppler spectrogram. The user changes PRF and sampling depth explicitly through gesture-based input, while the beam-to-flow angle is controlled with a 3D-printed ultrasound probe that is tracked using image recognition and an Arduino equipped with a gyroscope sensor. Additionally, 3D printing was used to create a model of the abdomen that serves as a training aid. In this article, we report the design process of the application and the system. The paper also contributes to design science research in information systems and to human–computer interaction.

The main audience of the paper is medical education organizations responsible for training personnel in obstetric ultrasound, as well as the technology managers and developers who build tools for trainees. The paper points out the advantages of such a system and gives a detailed account of its development, allowing readers to deploy and experiment with similar systems to overcome the limitations of traditional ultrasound training mentioned earlier. Ultimately, the developed system aims to help ultrasound practitioners.

The rest of the paper is organized as follows. Section 2 reports on background and previous work, and Section 3 describes the methodology. Sections 4 and 5 present the extracted requirements and the design of the application, using a requirement-matching approach. The experimentation methodology and the results of the initial study are reported in Sections 6 and 7, followed by a discussion in Section 8.

2 Background and previous work

To justify the use of MR for ultrasound training, we reviewed previous systems intended to train ultrasound practitioners to perform PW ultrasound, identified their limitations, and drew inspiration from them for our MR application. We begin by briefly describing the capabilities and concepts of MR and then move on to previous research on ultrasound training.

2.1 Mixed reality technology

The term Mixed Reality was coined by Milgram and Kishino (1994), who defined it as anything lying between the two extremes of the Reality-Virtuality (RV) continuum. In recent years, the lines between the different kinds of MR technology have been blurring due to the emergence of market devices that try to redefine the terminology of the mixed reality ecosystem (Virtual Reality, Augmented Reality, etc.) (Milman, 2018). In this paper, we specifically use optical see-through MR, which can be defined as “Head Mounted Displays equipped with a see-through capability, with which computer-generated graphics can be optically superimposed, using half-silvered mirrors, onto directly viewed real-world scenes” (Milgram & Kishino, 1994, p. 3). This technology allows users to visualize and interact with digital objects without occluding their view of the real world. In this research, it is also important to optimize the extent of world knowledge in the system, since medical applications like ultrasound training require high precision and an understanding of both the surroundings and the underlying anatomy. This can be done using the concept of Tangible AR, which emphasizes physical object form and interactions (Billinghurst et al., 2008). The concept can be further understood through the term Pure Mixed Reality by Flavián et al. (2019), which describes an integration of digital objects into the real world so seamless that they are indistinguishable from real objects. Skarbez et al. (2021) extended the RV continuum into a three-dimensional taxonomy whose dimensions are immersion, coherence, and extent of world knowledge, as shown in Fig. 1. The authors introduced the concept of the mixed reality illusion, in which users feel “that they are in a place that blends real and virtual stimuli seamlessly and responds intelligently to user behavior” (Skarbez et al., 2021, p. 6). Creating a mixed reality illusion requires optimizing all three dimensions, as shown in Fig. 1.

Fig. 1 Three-dimensional taxonomy and the concept of the mixed reality illusion (Skarbez et al., 2021)

Among the hardware solutions currently available for creating an optimized mixed reality illusion, Microsoft HoloLens 2 is considered the flagship device, since it has considerable advantages over fully immersive (VR) displays and over other see-through devices such as Meta 2 and Magic Leap (Roy et al., 2019). Unlike fully immersive VR, HoloLens 2 does not obstruct the user’s vision, and compared to Magic Leap and Meta devices it offers better ergonomics in terms of field of view, weight balance, and mobility. The literature has advocated the use of MR devices such as HoloLens in health and medical research (Moro et al., 2021; Park et al., 2021), since they preserve the possibility of direct contact with the patient.

2.2 Training with digital technologies and XR

The role of digital technologies in training and education has been extensively studied in the literature. One of the earliest immersive displays was developed by Furness (1986) as a flight simulator for trainee pilots. Over the following years, the potential of these technologies in education and training has been studied in both industry and academia. Psotka (1995) argues that immersive environments “can be used for exploration and for training practical skills, technical skills, operations, maintenance and academic concerns” (p. 428). Zyda (2005) promoted the development of serious games, defined as a “mental contest, played with a computer in accordance with specific rules, that uses entertainment to further government or corporate training, education, health, public policy, and strategic communication objectives” (p. 2). Zyda identified visual simulation, spatial and immersive sound, immersion, and advanced user interfaces as key components for developing these systems. With the rapid development of the technology sector, a wider range of technologies has proved relevant to training and education. Punie et al. (2006) reviewed the role of information and communication technologies (ICT) in learning and noted that “it is difficult and maybe even impossible to imagine future learning environments that are not supported, in one way or the other, by ICT” (p. 5). They concluded that “educational achievements are positively influenced by ICT” (p. 19) and that ICTs can be used “as tools to support and improve the existing learning process and its administration more than their transformative potential” (p. 20).

Adelsberger et al. (2013) identified convergence as the major characteristic of ICTs that can be leveraged in education and training at the micro, meso, and macro levels, that is, the convergence of multimodal content (text, audio, and video), pedagogical curricula, and the services offered by institutions. Freina and Ott (2015) further pointed out that training with immersive environments “allows a direct feeling of objects and events that are physically out of our reach, it supports training in a safe environment avoiding potential real dangers” (p. 6). In medical training, immersive environments have been used to support communication among medical personnel, to simulate high-risk environments for medical trainees, and to practice diagnoses as well as therapeutic interventions (Freina & Ott, 2015; Pensieri & Pennacchini, 2014). Li et al. (2017) discussed the use of 3D head-mounted displays for surgical training in clinical medicine because of their simulation capabilities.

Research on the relevance of these technologies remains an important topic today. Recently, Wharrad et al. (2021) emphasized the relevance of digital technology-based education for healthcare students and professionals. The authors argued for reusable learning objects, which are characterized by short sessions on discrete topics assisted by digital technologies. Haleem et al. (2022) expanded on this and highlighted that these technologies have significantly changed the education system by taking on the roles of information co-creators, mentors, and assessors. According to the review by Barteit et al. (2021), MR can enhance teaching and learning by providing immersive experiences that may foster the teaching and learning of complex medical content. MR enables repeated practice without adverse effects on the patient in various medical disciplines. This may introduce new ways to learn complex medical content and alleviate financial, ethical, and supervisory constraints on the use of traditional medical learning materials, such as cadavers and other lab equipment. Furthermore, MR devices have also been used specifically in ultrasound applications; for example, Nguyen et al. (2022) developed an application using HoloLens and a portable ultrasound machine connected via a wireless network. More ultrasound applications based on these technologies are discussed in the next section.

2.3 Medical ultrasound and ultrasound training

We first summarize the basic ultrasound concepts described in the literature that are essential for developing an ultrasound training application. Ultrasound, also known as ultrasonography or sonography, is an imaging technique that uses short pulses of high-frequency sound waves (typically 2–15 MHz) to visualize anatomical structures (Varsou, 2019). Echoes from tissue have a high amplitude, whereas echoes from blood have a low amplitude because blood reflects ultrasound weakly. Thus, in a typical B-mode image, tissue appears bright while blood appears dark (Stoylen, 2020). PW Doppler can further be used to measure blood velocity in real time at a specific, user-selected depth along the beam. Within the sampled region, blood moves at a range of velocities, and the Doppler method uses spectral (frequency) analysis to display them (Stoylen, 2020). Several factors affect the Doppler spectrogram output; in this paper, we focus on blood velocity \(v\), beam-to-flow angle \(\theta\), PRF \(f_{PRF}\), and sampling depth \(z\). Blood velocity is the speed of the blood flow, the beam-to-flow angle is the angle between the ultrasound beam and the direction of blood flow, and the sampling depth is the distance from the transducer to the sampled region along the beam. To calculate the blood velocity \(v\) at the sampling depth \(z\), we use the Doppler shift \(f_d\), which is the difference between the transmitted and received frequencies. Equation 1 gives this relationship, where \(f_0\) is the central frequency of the probe and \(c\) is the speed of sound in tissue (Uppal & Mogra, 2010):

\[ f_d = \frac{2 f_0 v \cos\theta}{c} \qquad (1) \]

Solving for the blood velocity gives Eq. 2:

\[ v = \frac{c\, f_d}{2 f_0 \cos\theta} \qquad (2) \]

The Doppler spectrogram plots blood velocity against time. In PW Doppler, the measurable velocities are bounded by the PRF \(f_{PRF}\), the rate at which pulses are emitted. When the Doppler signal is undersampled, aliasing appears in the spectrogram: different frequencies become indistinguishable, so velocities become ambiguous. To avoid aliasing, we need to remember the Nyquist rule: the Doppler shift must not exceed half the PRF, \(f_d \le f_{PRF}/2\). Substituting Eq. 1 gives Eq. 3:

\[ v_{max} = \frac{c\, f_{PRF}}{4 f_0 \cos\theta} \qquad (3) \]

where \(v_{max}\) is the maximum blood velocity that can be measured without aliasing. This creates a further difficult trade-off: a higher PRF gives better temporal resolution and a higher \(v_{max}\), but limits the sampling depth, because each echo must return from depth \(z\) before the next pulse is emitted, i.e., \(f_{PRF} \le c/(2z)\). The Doppler equation also shows that the beam-to-flow angle must not be 90°, since \(\cos 90^\circ = 0\) and no Doppler shift is then measured. For accurate measurements, the angle should usually be kept below 60°, as an incorrect beam-to-flow angle can lead to an overestimation of velocities (Park et al., 2012).
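The relationships in Eqs. 1 to 3, together with the depth limit on the PRF, can be checked numerically with a short sketch. It assumes a conventional soft-tissue speed of sound of 1540 m/s and angles given in degrees; the function names are illustrative and are not taken from the application described in this paper.

```python
import math

# Conventional average speed of sound in soft tissue (m/s); an assumption here.
C_TISSUE = 1540.0


def doppler_shift(v, theta_deg, f0, c=C_TISSUE):
    """Eq. 1: Doppler shift (Hz) for blood speed v (m/s) at beam-to-flow angle theta."""
    return 2.0 * f0 * v * math.cos(math.radians(theta_deg)) / c


def velocity_from_shift(fd, theta_deg, f0, c=C_TISSUE):
    """Eq. 2: blood speed (m/s) recovered from a measured Doppler shift fd (Hz)."""
    cos_t = math.cos(math.radians(theta_deg))
    if abs(cos_t) < 1e-9:
        # At 90 degrees cos(theta) = 0, so Eq. 2 is undefined.
        raise ValueError("beam-to-flow angle of 90 degrees yields no Doppler shift")
    return c * fd / (2.0 * f0 * cos_t)


def nyquist_vmax(f_prf, theta_deg, f0, c=C_TISSUE):
    """Eq. 3: largest speed measurable without aliasing (requires fd <= f_prf / 2)."""
    return c * f_prf / (4.0 * f0 * math.cos(math.radians(theta_deg)))


def max_prf_for_depth(z, c=C_TISSUE):
    """Highest PRF (Hz) for sampling depth z (m): the echo travels 2z before the next pulse."""
    return c / (2.0 * z)


def displayed_shift(fd, f_prf):
    """Shift shown on the spectrogram: values outside [-f_prf/2, f_prf/2) wrap around (aliasing)."""
    return (fd + f_prf / 2.0) % f_prf - f_prf / 2.0
```

For instance, with a hypothetical 3 MHz probe, `nyquist_vmax(4000, 0, 3e6)` gives about 0.51 m/s, and `max_prf_for_depth(0.1)` shows that a 10 cm sampling depth limits the PRF to 7.7 kHz, which illustrates the PRF and depth trade-off. `displayed_shift` shows aliasing directly: a 3 kHz shift sampled at a 4 kHz PRF is displayed as -1 kHz, on the wrong side of the baseline.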

To perform Doppler ultrasound correctly, ultrasound practitioners need to be trained to understand these relationships and their effects. Apart from practice on real patients, a common method for ultrasound training is the use of simulators such as ScanTrainer (Intelligent Ultrasound Simulation, 2022) or SonoTrainer (Maul et al., 2004). These machines use 3D scans of real patients covering a variety of cases, allowing trainees to learn spectral Doppler in obstetrics, including of the umbilical cord, with high reproducibility for anomaly cases. They are equipped with a probe containing a 3D sensor that reports the position and rotation of the transducer, which the system uses to convert the 3D scan into slice sections (Maul et al., 2004). However, such systems have several limitations, such as the cumbersome addition of new data, an expensive setup, the technical knowledge required to operate the simulator, and the need for a separate training room. Yeo and Romero (2020) explored a simpler educational tool consisting of a pad with an attached probe that can be moved in three degrees of freedom. The probe could only be rotated, not moved to a specific position, and the ultrasound environment was constrained to a 2D screen, which limits how effectively the context of the ultrasound environment is conveyed. Khoshniat et al. (2004) demonstrated a simulation tool that helps sonographers improve their hand–eye coordination and their understanding of the effects of Doppler parameters. They used a motion-tracking device to mimic an ultrasound probe and incorporated sonographer-controlled parameters, such as sampling depth and beam-to-flow angle, whose effects were shown on a screen.
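The step in which a tracked probe pose is turned into a slice of a stored 3D scan can be illustrated with a minimal sketch. It assumes the scan is a NumPy voxel array and that the probe pose has already been converted into a slice origin and two orthogonal in-plane unit vectors in voxel coordinates. It uses nearest-neighbour sampling for brevity and is not the implementation used by ScanTrainer or SonoTrainer; `extract_slice` is a hypothetical name.

```python
import numpy as np


def extract_slice(volume, origin, u_axis, v_axis, shape, step=1.0):
    """Nearest-neighbour resampling of a planar slice from a 3D scan.

    volume : 3D array indexed as volume[i, j, k]
    origin : voxel coordinates (i, j, k) of the slice's first pixel
    u_axis : unit vector (voxel space) along the slice rows
    v_axis : unit vector (voxel space) along the slice columns
    shape  : (rows, cols) of the output image
    step   : distance in voxels between neighbouring output pixels
    """
    rows, cols = shape
    u = np.asarray(u_axis, dtype=float)
    v = np.asarray(v_axis, dtype=float)
    # Position of every output pixel in voxel coordinates, shape (rows, cols, 3).
    pts = (np.asarray(origin, dtype=float)
           + np.arange(rows)[:, None, None] * step * u
           + np.arange(cols)[None, :, None] * step * v)
    idx = np.rint(pts).astype(int)
    limits = np.array(volume.shape)
    inside = np.all((idx >= 0) & (idx < limits), axis=-1)
    # Clip so indexing is always valid; pixels outside the scan are masked to 0 below.
    idx = np.clip(idx, 0, limits - 1)
    values = volume[idx[..., 0], idx[..., 1], idx[..., 2]]
    return np.where(inside, values, 0)
```

A slice through an axis-aligned plane simply reproduces one layer of the volume, while oblique axes produce the tilted sections a trainee sees when rotating the probe. A production simulator would more likely use trilinear interpolation (for example `scipy.ndimage.map_coordinates`) to avoid blocky images at oblique angles.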

Several authors have specifically used immersive environments to propose ultrasound training systems. One of the first examples is that of Palmer et al. (2015), who synchronized data from a GE Vivid E9 ultrasound machine with a 3D model seen through a mobile AR screen. The authors used visual markers to track the ultrasound probe and the patient's body, and rendered a 3D heart or fetal model onto the patient. However, the system offered no control over the parameters that need to be set during the procedure and provided users with a limited field of view. Another example is by Mahmood et al. (2018), who used Microsoft HoloLens 1 together with a Vimedix TEE/TTE simulator. The setup included a medical mannequin and a computer running the Vimedix software, and the application allowed multiple users to see the same scene. The authors reported that initializing the synchronization software was time-consuming, and the implementation still required an ultrasound machine and mannequins. These previous works confirm the need for an MR application for ultrasound training. Drawing on the limitations of current studies, an optimal application should require no real-time patient data or ultrasound machines and should be implemented in an immersive environment that can simulate the real environment and objects of an ultrasound setup with minimal external needs. These requirements are explored further through the design science process explained in the next section.

3 Research method

To answer the aforementioned research question, we followed the design science research process outlined by Vaishnavi and Kuechler (2004) which consists of five consecutive steps to conduct design research: awareness, suggestion, development, evaluation, and conclusion, as shown in Fig. 2. In this article, we primarily focused on the first three steps of the process.

The choice of the design science research process was motivated by the empirical nature of the work and the goal of conducting artifact-based research. As Fahd et al. (2021) pointed out, design science research allows researchers to concentrate on creating IT-based artifacts as the main outcome, which aligns with the central aim of this research, as opposed to methodologies like design-based research (DBR), which primarily contributes theories of educational technologies. Compared to DBR, design research accommodates more innovation in the research process while developing artifacts tailored to address particular problems. Additionally, design research provides a more rigorous framework, as depicted in Fig. 2, which has been widely adopted in MR research. Adikari et al. (2009) highlight the synergies between design science research and User-Centered Design (UCD), another design framework. Design science research incorporates the concepts defined in UCD while still grounding the method in scientific literature and theories, thus aiding the creation of more academically validated artifacts. While UCD focuses more on involving users in the design process, design science research also includes users at appropriate stages, as shown in Fig. 2.

It is important to understand that design science research (DSR), DBR, and UCD are not mutually exclusive, and several authors have combined these methods in their research (e.g., Nguyen et al., 2015; Sabbioni et al., 2022). We align our research method with DSR since it offers a more rigorous and academically grounded approach to research involving the design of novel systems. Moreover, incorporating knowledge from the literature alongside user input enables us to overcome some disadvantages of UCD, such as costs, time, intra- and inter-group communication, and, most importantly, the scalability of the designed system (Abras et al., 2004). Drawing on the literature lets us incorporate results and suggestions from an extensive body of work by researchers from diverse demographics, rather than restricting the knowledge to the current geographical and research context.

Hence, the motivation of the current research stems from a combination of user-based and literature-based problems, which led us to choose design science research as the most appropriate approach. Design research artifacts can be broadly classified as constructs (vocabulary and symbols), models (abstractions and representations), methods (algorithms and practices), and instantiations (implemented and prototype systems) (Hevner et al., 2004). In the present research, we aim to contribute an instantiation as the primary artifact and methods as a complementary contribution. To adhere to the design research process, we adopted the design theory of Gregor and Jones (2007), who emphasize the importance of instantiations in bridging the gap between engineering, design, and knowledge creation.

The design science research process of Peffers et al. (2007) emphasizes the use of a rigorous scientific process, such as that of Vaishnavi and Kuechler (2004). Furthermore, the guidelines from Hevner and Chatterjee (2010) were adopted to fine-tune the method, allowing us to produce design as an artifact. Design science research has been adopted in several health and medical applications where digital technologies can be leveraged. For example, Beinke et al. (2019) used the design science research method to apply blockchain technology to electronic health records, and Gregório et al. (2021) used design science research to develop pharmacy e-health services. Furthermore, several authors have highlighted the significance of the design science research process in health research, such as Hevner and Wickramasinghe (2018), who believe that DSR can be used for “design innovations with a goal of improving the effectiveness and efficiencies of healthcare products, services, and delivery” (p. 3). Hence, the use of this methodology to create the intended application is justified. The research falls under the umbrella of using digital technologies to improve the effectiveness and efficiency of ultrasound training as the key service, with ultrasound practitioners as the primary beneficiaries and potential benefits extending to educators.

Each phase of the design research drew on several sources of knowledge and produced specific outcomes, as shown in Fig. 2. The knowledge sources are broadly categorized as literature, input from users (both trainees and trainers), and interpretations by the authors. In the awareness phase, we collected relevant literature to shape our motivation and identified the research gap: the lack of applications that leverage MR to train ultrasound practitioners in monitoring blood flow in the umbilical cord, a case of neglect and application spotting, as mentioned in Section 1. The literature findings were complemented by input from trainers involved in a graduate training programme called “Doppler ultrasound in obstetrics” (NTNU, n.d.) and from experts in an ultrasound training research project (NTNU, 2014). This shaped our research question, which was followed by a literature review to define the key concepts for the intended application; these concepts were validated by the aforementioned trainers and researchers. The synthesized concepts were then formulated as the system requirements in Section 4. These requirements were matched by leveraging the capabilities of MR, 3D printing, and interaction design. The design decisions in this phase were influenced by methods used in the existing literature and by the authors' interpretation. The application was designed and developed as a proof of concept, which was then evaluated using mixed methods, with both trainers and trainees involved in the feedback sessions. The research concludes with a set of results and suggestions for future work and iterations, synthesized by the authors. The requirements extracted in the suggestion phase are reported in the next section.

4 Requirement extraction

To extract requirements for the intended application, the ultrasound training literature was reviewed using the snowballing technique. As a starting point, we used the literature produced in the project (NTNU, 2014), to which several authors and ultrasound practitioners have contributed a body of work on ultrasound training systems. These articles were studied to gather a set of general requirements for the system. As mentioned in Section 1, ultrasound training requires an environment with high-tech equipment, which can be a challenge in certain situations (Bhide et al., 2021). This includes ultrasound machines such as the Voluson Expert 22 (https://www.gehealthcare.com/products/ultrasound/voluson) or EPIQ Elite (https://www.philips.no/healthcare/solutions/ultrasound/obstetrics-and-gynecology-ultrasound), which are equipped with ultrasound probes and a display screen for the spectrograms and other values. Training with this equipment also requires volunteer patients on whom it can be used. An appropriate training environment should be equipped with all these resources, along with an instructor who can help trainees understand the procedures and provide feedback to develop the necessary skills (Bhide et al., 2021). Hence, the first requirement (R1) is that the application should mimic the equipment and environment specifically needed to perform PW Doppler ultrasound. Since the ultrasound probe is an essential part of the system, the system needs to detect it and track it continuously. The system also needs to detect the simulated abdomen of the patient, which provides tactile feedback and an anchor point to trainees as in real ultrasound procedures (Harris-Love et al., 2016). Hence, the system should be able to detect the external objects present in the ultrasound training environment, which optimizes the extent-of-world-knowledge dimension of the MR illusion described in Section 2.1 (Bozgeyikli & Bozgeyikli, 2021). This is the second requirement (R2).

With PW Doppler ultrasound, several constructs need to be taken into account, as described in Section 2.3. First, the system needs to calculate the beam-to-flow angle from the position and orientation of the ultrasound probe with respect to the umbilical cord (Szabo, 2014). This is the third requirement (R3). Along with the beam-to-flow angle, PRF and sampling depth also affect the measurement of blood flow in the umbilical cord. To generate a clinically useful Doppler spectrogram, all these parameters need to be adjusted to match the blood velocity present in the artery. Simulating the Doppler spectrogram from these variables is the fourth requirement (R4) (Szabo, 2014).
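The geometry behind R3 can be sketched as follows, assuming the probe tip, the beam direction, and a centerline of the umbilical cord (ordered in the direction of flow) are all available as 3D vectors in one common frame, for example world coordinates after image tracking. The helper names, the central-difference tangent, and the 60° default (the guideline from Section 2.3) are illustrative assumptions rather than the application's actual code.

```python
import math


def _unit(vec):
    """Normalise a 3D vector; zero-length vectors have no direction."""
    n = math.sqrt(sum(c * c for c in vec))
    if n == 0.0:
        raise ValueError("direction vector must be non-zero")
    return [c / n for c in vec]


def beam_to_flow_angle(beam_dir, flow_dir):
    """Angle in degrees (0 to 180) between the beam direction and the flow direction."""
    b, f = _unit(beam_dir), _unit(flow_dir)
    cos_t = sum(bi * fi for bi, fi in zip(b, f))
    cos_t = max(-1.0, min(1.0, cos_t))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(cos_t))


def flow_direction(centerline, i):
    """Approximate flow direction at centerline point i by a central difference.

    Assumes the centerline points are ordered in the direction of blood flow.
    """
    prev_pt = centerline[max(i - 1, 0)]
    next_pt = centerline[min(i + 1, len(centerline) - 1)]
    return [n - p for n, p in zip(next_pt, prev_pt)]


def sample_volume_position(probe_tip, beam_dir, depth):
    """Point on the beam at the given sampling depth from the probe tip."""
    b = _unit(beam_dir)
    return [t + depth * bi for t, bi in zip(probe_tip, b)]


def angle_is_usable(theta_deg, limit_deg=60.0):
    """True if the insonation angle, ignoring flow sense, is within the limit."""
    return min(theta_deg, 180.0 - theta_deg) <= limit_deg
```

The sampling depth chosen by the user fixes which point along the beam is sampled, and the flow direction at the nearest centerline point then gives the angle that enters Eq. 2. Keeping the full 0° to 180° range preserves whether flow is toward or away from the probe, which decides on which side of the baseline the simulated spectrogram is drawn.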

All the components of the system need to be integrated into an optimized interface through which the user can interact with them and understand the different training modules. Since the MR device is merely input/output hardware, much of the application is delivered through the user interface. User interaction in 3D space can help users develop associations between the activity and the space (Wigdor & Wixon, 2011). Thus, an optimized user interface is the fifth requirement (R5). All the extracted requirements are reported in Table 1.

5 Requirement matching and design

Figure 3 shows the overall design of the application. The MR application was developed in the Unity 3D engine, which simplifies the visualization of 3D models and supports scripting in C#. The Mixed Reality Toolkit (MRTK) (Microsoft, 2022) was used to configure the application for HoloLens and to access ready-to-use UI and interaction modules from Microsoft. The Vuforia Engine Library (https://library.vuforia.com/) was used to integrate image recognition into the application. The defined requirements were then matched by leveraging the capabilities of Microsoft HoloLens and 3D printing. The following subsections outline the corresponding technical development.

5.1 R1 Ultrasound environment

To imitate an ultrasound procedure, 3D printing technology was used to create an abdomen and an ultrasound probe, as shown in Fig. 4(a). Using the abdomen model, ultrasound training can be done without a volunteer patient, and unlike a purely virtual model, the printed model still gives users tactile feedback. The printed probe replaces a real ultrasound probe attached to an ultrasound machine; together with the visual capabilities of MR, it allows the ultrasound machine to be imitated in the system. Further, a 3D model of a fetus (https://www.turbosquid.com/3d-models/3d-obj-pregnant-woman-fetus-uterus/699304) was rendered inside the abdomen to provide a visual aid to users, as shown in Fig. 4(b). The model was simplified to 10,000 polygons in Blender to improve the frame rate of the application. Further visual aids were added, such as a red textured gap that signals to the user which part of the umbilical cord is interactable. Furthermore, introductory tutorials were developed as part of the application to help users understand how it works and to guide them through the ultrasound process, compensating for the absence of a physical instructor in the training activity.

5.2 R2 Object detection and tracking

The physical objects added to the system had to be tracked by the HoloLens to connect the physical and digital spaces. The 3D-printed objects were tracked using the image tracking functionality of the Vuforia library, which provides a set of standard images (Vuforia, n.d.) that can be used as targets for the HoloLens to recognize, as shown in Fig. 5. The Drone image was used for the abdomen and the Astronaut image for the probe. The HoloLens uses a range of sensors to capture and track the targets accurately, including 4 visible light cameras, 2 infrared (IR) cameras, a 1-MP Time-of-Flight depth sensor, an inertial measurement unit (IMU) with an accelerometer, a gyroscope and a magnetometer, and an RGB camera (8-MP stills, 1080p30 video). The target images were uploaded to the Vuforia web interface to create a tracking database, which was then added to Unity to define what the cameras should look for. As a result, the application recognizes the images when they are brought into the field of view of the HoloLens.

The targets were used to place digital objects, such as the 3D fetus model, and to synchronize other visual elements as described in Sect. 5.5. When the user and headset move within the space, the 3D models remain stationary, so the user can look at a particular 3D model from different points of view. If the image targets are moved out of the field of view of the headset, the image tracking loses its anchor point and relies on predictions to place the objects, with reduced stability. Because the 3D-printed abdomen is kept stationary during training, image tracking is sufficient for it even when its image target is no longer in the field of view. The probe, on the other hand, requires more precise movement tracking to calculate the angle between the ultrasound waves and the blood flow, as described in the next section.

5.3 R3 Beam-to-flow angle

To calculate the beam-to-flow angle using the 3D-printed probe, we needed accurate, real-time rotational tracking of the probe. For this purpose, sensor fusion between the image tracking and a gyroscope was implemented. An Arduino Nano 33 BLE, equipped with a 9-axis LSM9DS1 inertial measurement unit (IMU) chip containing an accelerometer, gyroscope and magnetometer, was used to improve rotational tracking. The Arduino was attached to the probe, and the open-source LSM9DS1 library by Verbeek (2020) was used to program the intended functionality. First, the gyroscope was calibrated following the instructions provided with the LSM9DS1 library; this involved determining the amount of rotation measured around each axis by the sensor on the probe. Then, the values were converted from the IMU coordinate system to the Unity coordinate system, as shown in Table 2. The converted angular rates were multiplied by the time since the last reading, converted to a quaternion, and multiplied with the previous rotation. This loop runs 119 times per second on the Arduino, matching the output data rate of the sensor.
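The integration step described above can be sketched as follows. This is a minimal Python illustration, not the authors' Arduino code. It assumes gyroscope readings in degrees per second, an axis-angle (rotation-vector) conversion to a quaternion, and right-multiplication of the previous rotation by the increment (that is, increments applied in the sensor's own frame). The names `AXIS_MAP`, `imu_to_unity` and `integrate_gyro` are hypothetical, and `AXIS_MAP` is an identity placeholder standing in for the actual mapping in Table 2.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)
Vec3 = Tuple[float, float, float]

# Hypothetical placeholder: (source axis index, sign) for each Unity axis.
# Replace with the IMU-to-Unity mapping given in Table 2.
AXIS_MAP = ((0, 1.0), (1, 1.0), (2, 1.0))

SAMPLE_RATE_HZ = 119.0  # output data rate of the gyroscope, as stated in the text


def quat_multiply(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def quat_from_rotation_vector(rx: float, ry: float, rz: float) -> Quat:
    """Unit quaternion for a rotation of |r| radians about the axis r / |r|."""
    angle = math.sqrt(rx * rx + ry * ry + rz * rz)
    if angle < 1e-12:
        return (1.0, 0.0, 0.0, 0.0)  # no measurable rotation
    s = math.sin(angle / 2.0) / angle
    return (math.cos(angle / 2.0), rx * s, ry * s, rz * s)


def imu_to_unity(rate: Vec3) -> Vec3:
    """Reorder and re-sign the IMU axes according to AXIS_MAP."""
    return tuple(sign * rate[idx] for idx, sign in AXIS_MAP)


def integrate_gyro(prev: Quat, rate_dps: Vec3, dt: float) -> Quat:
    """Advance the orientation by one gyroscope sample taken dt seconds after the last."""
    ux, uy, uz = imu_to_unity(rate_dps)
    # Angular rate (deg/s) times elapsed time (s) gives the rotation in degrees.
    delta = quat_from_rotation_vector(
        math.radians(ux * dt), math.radians(uy * dt), math.radians(uz * dt)
    )
    q = quat_multiply(prev, delta)
    # Re-normalize so rounding errors do not accumulate over many samples.
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)


if __name__ == "__main__":
    # One second of a constant 90 deg/s rotation about the third axis.
    q: Quat = (1.0, 0.0, 0.0, 0.0)
    for _ in range(int(SAMPLE_RATE_HZ)):
        q = integrate_gyro(q, (0.0, 0.0, 90.0), 1.0 / SAMPLE_RATE_HZ)
    print(q)  # approximately (0.7071, 0.0, 0.0, 0.7071): a 90-degree turn
```

Passing the measured time since the last reading as `dt`, rather than a fixed 1/119 s, keeps the estimate correct if a sample is delayed.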

To achieve more stable rotation, we used sensor fusion based on the tracking state reported by the Vuforia library. If the image is tracked, meaning that the image target is within the field of view of the HoloLens, the rotation is interpolated between the rotation given by the image (weighted 85%) and the rotation from the gyroscope (weighted 15%). If the state is extended tracking, meaning that the image target is out of view, only the gyroscope rotation is used. The open-source project BleWinrtDll (https://github.com/adabru/BleWinrtDll) and Bluetooth Low Energy (BLE) were used to establish communication between the HoloLens and the Arduino-equipped probe. Three sets of information were transferred through this channel: the rotation from the Arduino to the HoloLens, the previous rotation from the HoloLens to the Arduino, and a Boolean flag indicating whether the previous rotation has been changed. To make the probe wireless and easier to use, a rechargeable Li-Poly battery was soldered to the Arduino board, as shown in Fig. 6(a) and (b). To calculate the beam-to-flow angle between the probe and the model of the umbilical cord, we defined a static direction of the blood flow. Then, using built-in Unity functions, we calculated the angle between this static direction and the virtual ray emitted from the ultrasound probe, as shown in Fig. 6(c).
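The fusion rule and the angle computation described above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' Unity code: the interpolation uses spherical linear interpolation (slerp), the tracking states are hypothetical strings standing in for Vuforia's tracking status values, any state other than "TRACKED" falls back to the gyroscope, and `BEAM_LOCAL` and `FLOW_DIRECTION` are placeholder vectors. The angle is folded into 0–90 degrees so that a beam aligned with or against the flow both give 0 degrees, which matches how the angle is described for the interface.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)
Vec3 = Tuple[float, float, float]

IMAGE_WEIGHT = 0.85                      # share of the image rotation while tracked
BEAM_LOCAL: Vec3 = (0.0, -1.0, 0.0)      # hypothetical beam axis in probe coordinates
FLOW_DIRECTION: Vec3 = (1.0, 0.0, 0.0)   # hypothetical static blood-flow direction


def quat_multiply(a: Quat, b: Quat) -> Quat:
    """Hamilton product a * b."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Spherical linear interpolation from q0 (t = 0) to q1 (t = 1)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # q and -q are the same rotation; take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly identical: normalized linear interpolation is stable
        q = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        w0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        w1 = math.sin(t * theta) / math.sin(theta)
        q = tuple(w0 * a + w1 * b for a, b in zip(q0, q1))
    n = math.sqrt(sum(c * c for c in q))
    return tuple(c / n for c in q)


def fuse_rotation(image_rot: Quat, gyro_rot: Quat, state: str) -> Quat:
    """Blend image and gyroscope rotations depending on the tracking state."""
    if state == "TRACKED":
        # t = 0.15 keeps 85 % of the image rotation and takes 15 % from the gyroscope.
        return slerp(image_rot, gyro_rot, 1.0 - IMAGE_WEIGHT)
    return gyro_rot  # e.g. extended tracking: target out of view, use the gyroscope


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q (q * v * q^-1)."""
    conj = (q[0], -q[1], -q[2], -q[3])
    r = quat_multiply(quat_multiply(q, (0.0, *v)), conj)
    return (r[1], r[2], r[3])


def beam_to_flow_angle(probe_rot: Quat, flow: Vec3 = FLOW_DIRECTION) -> float:
    """Folded angle in degrees (0-90) between the probe's beam and the flow direction."""
    beam = rotate(probe_rot, BEAM_LOCAL)
    dot = sum(a * b for a, b in zip(beam, flow))
    norms = math.sqrt(sum(a * a for a in beam)) * math.sqrt(sum(b * b for b in flow))
    cos_angle = max(-1.0, min(1.0, dot / norms))
    angle = math.degrees(math.acos(cos_angle))  # 0..180, like Unity's Vector3.Angle
    return min(angle, 180.0 - angle)            # "with" and "against" the flow both give 0


if __name__ == "__main__":
    half = math.radians(45.0) / 2.0
    probe = (math.cos(half), 0.0, 0.0, math.sin(half))  # probe tilted 45 degrees
    fused = fuse_rotation(probe, probe, "TRACKED")
    print(round(beam_to_flow_angle(fused), 6))
```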

5.4 R4 Doppler simulation

The beam-to-flow angle, along with the specified blood velocity, PRF and sampling depth, was used to simulate a Doppler spectrogram. We used the DopplerSim applet code created by Hammer (2014), a desktop application that simulates a Doppler signal controlled by four variables (PRF, sampling depth, beam-to-flow angle and blood velocity). To make the program compatible with the Unity engine and HoloLens, the code was rewritten in C# and optimized for Unity. Additionally, X and Y axes were added to the output spectrogram to make the blood velocity easier to read from the graph, as shown in Fig. 7, where the Y-axis represents blood velocity and the X-axis represents time.
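To illustrate how the four variables interact, the textbook pulsed-wave relations are sketched below in Python. This is not the DopplerSim implementation: it assumes a speed of sound of 1540 m/s (the conventional soft-tissue value) and a hypothetical transmit frequency that the article does not specify, and it ignores spectral broadening and noise. The Doppler shift is f_d = 2 f0 v cos θ / c; the displayed signal aliases when |f_d| exceeds PRF/2 (the Nyquist limit); and the PRF cannot exceed c / (2d) for sampling depth d, because each echo must return before the next pulse is sent.

```python
import math

SPEED_OF_SOUND = 1540.0  # m/s, conventional value for soft tissue
TRANSMIT_FREQ = 3.0e6    # Hz, hypothetical; not specified in the article


def doppler_shift_hz(velocity: float, angle_deg: float, f0: float = TRANSMIT_FREQ) -> float:
    """Doppler shift for blood moving at `velocity` m/s at the given beam-to-flow angle."""
    return 2.0 * f0 * velocity * math.cos(math.radians(angle_deg)) / SPEED_OF_SOUND


def max_prf_hz(depth_m: float) -> float:
    """Highest PRF at which an echo from depth_m returns before the next pulse."""
    return SPEED_OF_SOUND / (2.0 * depth_m)


def is_aliased(shift_hz: float, prf_hz: float) -> bool:
    """True when the shift exceeds the Nyquist limit PRF / 2."""
    return abs(shift_hz) > prf_hz / 2.0


def displayed_shift_hz(shift_hz: float, prf_hz: float) -> float:
    """Shift as it appears after sampling at the PRF, wrapped into [-PRF/2, PRF/2)."""
    return (shift_hz + prf_hz / 2.0) % prf_hz - prf_hz / 2.0


def max_unaliased_velocity(prf_hz: float, angle_deg: float, f0: float = TRANSMIT_FREQ) -> float:
    """Largest velocity (m/s) measurable without aliasing at this PRF and angle."""
    return prf_hz * SPEED_OF_SOUND / (4.0 * f0 * math.cos(math.radians(angle_deg)))


if __name__ == "__main__":
    fd = doppler_shift_hz(0.5, 0.0)  # 0.5 m/s flow, beam aligned with the vessel
    print(round(fd, 1), is_aliased(fd, 3000.0), round(displayed_shift_hz(fd, 3000.0), 1))
    print(round(max_prf_hz(0.10)))    # upper PRF bound at 10 cm sampling depth
```

Under these relations, a deeper sampling depth lowers the highest usable PRF and therefore the highest velocity that can be shown without wrap-around, while a larger beam-to-flow angle shrinks the measured shift.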

5.5 R5 User interface

The implemented application runs on the MR headset HoloLens 2. The interface uses the basic color scheme, size references, and fonts from MRTK. The hand and gesture recognition features of HoloLens facilitate interaction between the user and the application. The hand gestures used in the application are Touch, Air Tap, Drag, and Hand Menu, as explained by Microsoft (2021).

When started, the application greets the user with text on a 3D window in front of the user. Users can use the Touch, Drag or Air Tap gestures to interact with the buttons and the window in the interface. To guide users through the experience, we divided the flow of the application into smaller steps; users move to the next or previous step by using the Touch gesture on the Next and Previous buttons, respectively, as shown in Fig. 8. During the tutorial, we used animated visual hints as recommended by White et al. (2007). The application starts with a welcome screen and an option to start a tutorial that teaches the gesture inputs and the functionalities of the application. The user can either go through the gesture tutorial or press the Skip button on the first screen to skip it and go directly to the tracking window. The tutorial is designed for users who are not experienced with Microsoft HoloLens.

Once the user finishes or skips the tutorial, the application assists the user in tracking the image targets mentioned in Section 5.2. When a specific image is successfully tracked, a green checkmark appears on the image and a sound effect plays. Once the image targets are recognized, the application checks the BLE connectivity mentioned in Section 5.3. If there is a problem with the connectivity, the user has the option to troubleshoot the issue and set up BLE. When tracking and BLE are set up, the application displays an interface where the user uses the Drag gesture to set the values of PRF and sampling depth on sliders. The corresponding Doppler spectrogram is displayed in the user's field of view. With the Hand Menu gesture, a reset button appears on the user's palm that resets the tracking of the image target. This also resets the menus to their original positions in case the user has moved them out of view. The users can Pin or Unpin the 3D window, which makes the window follow the user or remain static in the environment, respectively.
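The setup sequence described above can be summarized as a small state machine. The Python sketch below is a hypothetical illustration of that flow, not the structure of the authors' Unity scripts; the step names and the flags `skip_tutorial`, `targets_tracked` and `ble_connected` are invented for this example, and the tutorial's individual screens are collapsed into a single step.

```python
from enum import Enum, auto


class Step(Enum):
    WELCOME = auto()
    TUTORIAL = auto()
    IMAGE_TRACKING = auto()
    BLE_CHECK = auto()
    BLE_SETUP = auto()
    DOPPLER = auto()


def next_step(step: Step, *, skip_tutorial: bool = False,
              targets_tracked: bool = False, ble_connected: bool = False) -> Step:
    """Return the step that follows `step` given the current conditions."""
    if step is Step.WELCOME:
        return Step.IMAGE_TRACKING if skip_tutorial else Step.TUTORIAL
    if step is Step.TUTORIAL:
        return Step.IMAGE_TRACKING
    if step is Step.IMAGE_TRACKING:
        # Stay here until every image target has been recognized.
        return Step.BLE_CHECK if targets_tracked else Step.IMAGE_TRACKING
    if step in (Step.BLE_CHECK, Step.BLE_SETUP):
        # A failed connectivity check routes the user to troubleshooting.
        return Step.DOPPLER if ble_connected else Step.BLE_SETUP
    return step  # DOPPLER: PRF and depth sliders plus the live spectrogram


if __name__ == "__main__":
    step = Step.WELCOME
    step = next_step(step, skip_tutorial=True)    # IMAGE_TRACKING
    step = next_step(step, targets_tracked=True)  # BLE_CHECK
    step = next_step(step, ble_connected=False)   # BLE_SETUP
    step = next_step(step, ble_connected=True)    # DOPPLER
    print(step)
```

The Hand Menu reset is available at any step, so it is better modeled as a global action than as a transition in this sequence.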

In Fig. 8, the flow of the interface is depicted. The user learns the functionalities in steps 2–5, sets up the application in steps 6 and 7, uses the application to understand the concepts of Doppler ultrasound in step 8, and can test their skills by guessing the blood velocity in step 9. Several visual cues were added to help the user understand the correct way of measuring blood flow. The user was given cues about the beam-to-flow angle, which ranges from 0 to 90 degrees: it is 90 degrees when the beam is perpendicular to the blood flow and 0 degrees when it is directly aligned with or against the blood flow. The ultrasound waves are visualized as a colored ray coming out of the probe. The ray changes color depending on the angle, and its texture moves when the ray intersects the correct part of the umbilical cord. If the angle is greater than 30 degrees and the ray intersects the correct part of the umbilical cord, the ray turns yellow and its texture moves. If the angle is less than 30 degrees, the ray turns green to indicate the preferable angle, as shown in Fig. 9(a). Furthermore, to make it easier for the user to see when the spectrogram is updating, a blue line moves from left to right together with the updates, as shown in Fig. 9(b). A demo of the application can be seen in the video provided as supplementary material.
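The ray cue reduces to a small decision rule. The sketch below is a hypothetical Python rendering of that rule: the article does not state the ray's color when it misses the target region (shown here as "default"), whether the green cue also requires an intersection (assumed here), or how an angle of exactly 30 degrees is treated (assumed to be yellow).

```python
from dataclasses import dataclass

PREFERRED_MAX_ANGLE_DEG = 30.0  # below this the angle is shown as preferable


@dataclass(frozen=True)
class RayCue:
    color: str
    texture_moving: bool


def ray_cue(angle_deg: float, hits_target: bool) -> RayCue:
    """Visual cue for the ray given the folded beam-to-flow angle (0-90 degrees)."""
    if not hits_target:
        return RayCue(color="default", texture_moving=False)
    if angle_deg < PREFERRED_MAX_ANGLE_DEG:
        return RayCue(color="green", texture_moving=True)
    return RayCue(color="yellow", texture_moving=True)


if __name__ == "__main__":
    for angle, hit in [(45.0, True), (20.0, True), (20.0, False)]:
        print(angle, hit, ray_cue(angle, hit))
```

A small angle is preferred because the cos θ term in the Doppler equation makes velocity estimates increasingly sensitive to errors in the assumed angle as the angle grows.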

In summary, each requirement is met by leveraging the attributes of the technologies used, as summarized in Table 3.

The overall architecture of the system is depicted in Figs. 10 and 11. Figure 10 shows the hardware architecture and the communication between the hardware systems, i.e., the HoloLens 2 and the Arduino attached to the ultrasound probe. As shown in the figure, the MS HoloLens 2 and the Arduino are the only hardware used in the system apart from the 3D-printed abdomen and probe. The vertical blocks represent the period during which a particular component of the HoloLens 2 or the Arduino is involved. The communication between these components is described in Sects. 5.1–5.5. Figure 11 represents the application (software) architecture and the communication between the various components developed during software development. As shown in the figure, the system is built as a “Unity Scene” using Unity, with C# as the programming language. The scripts are clustered in the figure according to the functionality they provide, and the communication between the components is shown with arrows. At the core of the system, data flows to and from the central “prefabs”. The content and further explanation of the scripts can be accessed through the public repository mentioned in Appendix A1. The application can be reproduced with the mentioned hardware by deploying the repository project from a Windows computer.

6 Experimental methodology

A study was conducted to evaluate the usability of the application. Turner et al. (2006, p. 3084) suggested that "five users are able to detect approximately 80% of the problems in an interface, given that the probability a user would encounter a problem is about 31%". Here, 11 users, more than twice that number, were recruited through convenience sampling, with the researchers attempting to follow the persona created for the application. Six (55%) of the participants were aged 26–35, three (27%) were aged 18–25, and the other two were aged 36–50. Seven (64%) participants were male and four (36%) were female. In terms of the highest level of education received, seven (64%) participants had a master’s degree, three (27%) had a bachelor’s degree, and one (9%) had a high school education. Six (55%) participants worked in fields related to information technology, and two (18%) worked in medicine. Six (55%) participants had previously tried Microsoft HoloLens, and two (18%) had previously performed a Doppler ultrasound.
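The quoted figure follows the cumulative problem-discovery model behind this rule of thumb, in which the expected share of problems found by n users is 1 − (1 − p)^n, where p is the probability that a single user encounters a given problem. The short Python check below (the function name is ours) shows that five users give about 84% at p = 0.31, consistent with "approximately 80%", and that 11 participants would be expected to uncover about 98% of the problems under the same simplifying assumption that p is equal for all problems.

```python
def share_of_problems_found(n_users: int, p: float = 0.31) -> float:
    """Expected fraction of usability problems found by n_users (cumulative binomial model)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    return 1.0 - (1.0 - p) ** n_users


if __name__ == "__main__":
    for n in (5, 11):
        print(n, round(share_of_problems_found(n), 3))
```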

The participants were welcomed and given a five-minute introduction to, and training on, the application. They then used the application for 15 min to review it, as shown in Fig. 12. The task scenario is described in Appendix A2. Although the task instructions were designed to provide context and explanations to the participants, the users were not assessed on achieving a specific goal. Venkatesh et al. (2017, p. 97) have pointed out the significance of test scenarios that are not tied to a specific task and argued that such scenarios allow users to utilize the technology to gather information “that is independent of a current need within a specific category.” Such a scenario therefore allows us to gather user feedback on aspects that could be overlooked when the focus is on achieving the pragmatic goal of the application. Following this, the participants completed a quantitative questionnaire with 27 items, expressing their agreement with each statement on a 7-point Likert scale, as reported in the supplementary file. The scale was developed using the SPINE questionnaire, which assesses a system's control, navigation, manipulation, selection, input modalities, and output modalities (Ravagnolo et al., 2019). After the quantitative questionnaire, participants were asked to comment on the educational potential of the application in a semi-structured interview based on the central theme: “How do you see the potential of this application in ultrasound training?” The aim was to collect feedback about limitations and improvements in the application. The study lasted approximately 50 min per participant, including 20–25 min of exposure to the immersive technology.

7 Results

The scores collected through the SPINE questionnaire were analyzed in Python to obtain the median score and standard deviation for each usability group, as shown in Fig. 13. The blue line in the plots represents the median score for each usability group. As can be seen in the figure, the median score was 5 for all usability groups. The circles in the figure represent the median opinion of the two midwives who tested the application; these are highlighted because the midwives belong to the intended target group of the application.
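A per-group summary of this kind can be computed with Python's standard library, as sketched below. The article does not state how item responses were aggregated into groups or whether the sample or population standard deviation was used; this sketch pools all responses within a group and uses the sample standard deviation. The group names follow the SPINE groups listed in Sect. 6, and the example scores are made-up values for illustration only, not data from the study.

```python
import statistics
from typing import Dict, List, Tuple


def summarize(scores_by_group: Dict[str, List[int]]) -> Dict[str, Tuple[float, float]]:
    """Return (median, sample standard deviation) of 7-point Likert scores per group."""
    summary = {}
    for group, scores in scores_by_group.items():
        if any(not 1 <= s <= 7 for s in scores):
            raise ValueError(f"{group}: scores must be on the 1-7 scale")
        summary[group] = (statistics.median(scores), statistics.stdev(scores))
    return summary


if __name__ == "__main__":
    # Made-up example responses, NOT the study data.
    example = {
        "control": [5, 6, 4, 5, 5],
        "navigation": [4, 5, 6, 5, 7],
    }
    for group, (median, sd) in summarize(example).items():
        print(f"{group}: median={median}, sd={sd:.2f}")
```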

Furthermore, the users' qualitative comments were analyzed to highlight their overall opinions. The comments were categorized according to the requirements used in the research, and the feedback from the participants is summarized in Table 4.

8 Discussion

In the present research article, the design science research methodology was used to design an MR application for Microsoft HoloLens. The research thus adds to the evidence supporting the use of design science research methods for health science applications. The application aims to help ultrasound practitioners train to perform PW Doppler ultrasound and measure the blood velocity in the umbilical cord. The results in Sect. 7 show a positive opinion of users towards the application (median score = 5 on the 7-point scale). The participants' comments further suggest positive acceptance of the system: users indicated high acceptance of the mixed reality illusion for the intended application, along with a positive impact of the implemented visual and training cues.

The current research proposes the application as, to our knowledge, the first working proof of concept of an MR ultrasound training application, which ultrasound practitioners could use to train themselves in performing Doppler ultrasound on the umbilical cord. Our application demonstrates how 3D-printed objects can be used in this field to bridge the physical and augmented worlds. Furthermore, the application showcases examples of explicit inputs (visual sliders in the user interface) and passive inputs (the position and rotation of the 3D-printed probe). Such a training application could have an impact in various professional and social settings; for instance, it could be beneficial in low-income countries with limited access to the resources required for proper training environments (NTNU, 2014). The simulated Doppler spectrogram offers a faster training method than systems that rely on real data integration, which could provide a more efficient training environment. In comparison to ScanTrainer, our solution is lightweight and potentially easier to adapt and expand since it does not rely on real data. By using HoloLens 2, this application eliminates the need to consider the placement of monitors and cameras, improving on the method discussed by Palmer et al. (2015). Compared to Yeo and Romero's (2020) solution, our application allows users more freedom to move the ultrasound probe and does not utilize any AR technology. Expert feedback suggests that displaying a 3D model of a fetus could help trainees gain a better volumetric understanding of the internal structure, as also noted by Mahmood et al. (2018). Mahmood et al. (2018) used an older version of the HoloLens headset with a mannequin. Although their calibration method enables the application to be used with various mannequins, our constant image tracking of 3D-printed objects may lead to quicker onboarding.

8.1 Limitations, improvements and future work

The user tests in the current study primarily aimed to gain an initial understanding of user attitudes towards an MR ultrasound training application; given the small number of participants, no statistical conclusions were drawn. The sample size and the experimental method are therefore the primary limitations of the current study. We encourage future researchers to conduct larger studies to examine the relationships between the different groups of the SPINE questionnaire. In addition, several aspects of the application can be improved.

- One major feature that needs improvement, as pointed out by the expert users, is the stability of ultrasound probe tracking. The instability occurs when the HoloLens loses track of the image target during movement. One suggestion was to allow users to freeze the position of the ultrasound probe for more precise control over its rotation. While the additional gyroscope sensor allows for more precise user control, it is affected by the jitter of the magnetic sensor, which could affect the orientation of the ultrasound probe.

- Future iterations of the application can incorporate additional spatial interactions and inputs for variables currently not included in the system, such as spatial depth. Since the application was designed with scalability and adaptability in mind, the proof of concept can be further developed for broader ultrasound applications and other medical training systems. For example, a similar application could be developed for monitoring the hearts and brains of premature newborns, as ultrasound can be helpful in these cases where special care is required (Vik et al., 2020).

- Obstetric ultrasound is a highly complex procedure that is not limited to measuring blood velocity but also includes functionalities such as fetal imaging. Since the application currently focuses on limited training in this area, suggestions were made to scale it into a holistic ultrasound training application that is not limited to measuring blood flow.

- The Doppler ultrasound procedure for monitoring blood flow can be extended to include crucial steps such as locating the umbilical artery and identifying a suitable measurement location. For this, it would be useful to have B-mode image data in addition to Doppler ultrasound; B-mode imaging also helps in monitoring cardiac activity and the overall health of the fetus (Bhide et al., 2021).

- In the current application, the direction of the blood flow (towards vs. away from the probe) cannot be determined, although it is a crucial part of antenatal care (Bhide et al., 2021). Future iterations can extend the interface to include cues about the direction of the blood flow.

- The application shows the Doppler spectrum in a simplified manner, as shown in Fig. 7. This should be improved, and the Doppler spectrum should be presented more realistically to increase the transferability of the knowledge gained through training on the MR system.

- To improve the pedagogical value of the system, it can be connected to a database of healthy and diseased Doppler spectra that trainees can visualize. Moreover, the application should be integrated with traditional courses so that trainees gain an enhanced understanding of the anatomy through visualization before performing acquisitions on a pregnant woman.

- Finally, the validity of the current system should be tested on other immersive hardware systems, such as mobile-based augmented reality, VR, or hybrid systems like Meta Quest Pro and Magic Leap, to validate the scalability of the system.

8.2 Research question, lessons learned and implications

The research question mentioned in Section 1 can be answered in the form of certain implications and learnings from the design process as well as the feedback. We argue that MR technology could be used in an effective manner for ultrasound training in the form of a training application that leverages the capabilities of the MR device, 3D printing, gesture inputs, image recognition and sensor fusion technologies. The application can be optimized by using several interaction and visualization methods such as rendering of the fetus, training and visual cues and appropriate ways to test the knowledge. Hence, the following learnings can be extracted from the research for the application domain:

- MR visualization could help trainees better understand the anatomy of a subject and the procedure of measuring blood flow in the umbilical cord by simulating the ultrasound environment using digital objects in a pseudo-immersive environment.

- 3D-printed objects could give trainees tactile feedback from ultrasound components such as the probe, as well as from the patient anatomy. Pushing against the 3D-printed abdomen can substitute for a simulator with force feedback. Combined with digital objects in an XR environment, this could create an effective training environment.

- Interactions between the system and the trainees in a digital environment play an important role; hence, gesture-based inputs along with image recognition and tracking can be useful to optimize the training environment. A digital tutorial and visual cues can be especially useful for giving trainees more concrete feedback during the ultrasound procedure.

- A Doppler spectrogram simulated in a virtual environment can look realistic enough to help trainees understand the influence of the most critical parameters on the resulting measurement. Hence, digital training environments could be a valid method of medical ultrasound training.

- Education and training with MR devices could offer an interesting approach to reducing the risks and resource demands of traditional training methods.

In terms of research methodology,

- This research illustrates design science as a possible approach to developing applications for education and training with novel technologies such as MR.

- The user tests with open tasks and feedback from trainers and trainees were useful in these first stages of implementation. A higher number of participants would be needed to perform a statistical analysis of the feedback.

- The research demonstrates how innovation and theory can be combined to conduct research on novel applications. The paper shows an early example of how MR can be studied using feedback, literature reviews and user testing.

For educators, several routes can be taken with the system.

- Before integrating a mixed reality ultrasound training system into a curriculum, educators could conduct a pilot study to assess its effectiveness and identify any areas of improvement.

- It is important to gather feedback from students and instructors who use the mixed reality ultrasound training system. This feedback can be used to improve the system and ensure it meets the students’ and instructors' needs.

- Educators should consider the learning objectives they want to achieve through the mixed reality ultrasound training system and ensure that the system aligns with these objectives. This will help ensure that the training is effective and relevant.

- Compare with traditional training: It is important to compare the effectiveness of mixed reality ultrasound training with traditional training methods to determine whether it is a suitable replacement for, or supplement to, traditional training.

- Collaborate with industry partners: Educators could collaborate with industry partners who develop mixed reality ultrasound training systems to ensure that the training system is up to date and incorporates the latest technology and research.

- Monitor the technology's adoption: Educators should monitor the adoption of the mixed reality ultrasound training system to ensure it is being used effectively by students and instructors. This can help identify barriers to adoption and ensure that the system is used to its full potential.

With the current research, we aimed to contribute primarily to the domains of health science education and human–computer interaction. The research is intended to be useful for developers, engineers, and managers creating training systems, and it considers health care professionals who perform ultrasound procedures as the primary users of the application.

Data availability

The data collected from the subjective study can be made available upon request to the corresponding authors. The code used for the application is available in the following git repositories:

- Repository for the main Unity Scene and Arduino connection (HoloLens): https://github.com/marianylund/mr-pw-doppler-training

- Repository for the Arduino connection (Arduino): https://github.com/marianylund/arduinoGyroBLE

References

Abras, C., Maloney-Krichmar, D., & Preece, J. (2004). User-centered design. In W. Bainbridge (Ed.), Encyclopedia of Human-Computer Interaction (Vol. 37, No. 4, pp. 445–456). Sage Publications.

Adelsberger, H. H., Collis, B., & Pawlowski, J. M. (2013). Handbook on Information Technologies for Education and Training. Springer Science & Business Media.

Adikari, S., McDonald, C., & Campbell, J. (2009). Little design up-front: A design science approach to integrating usability into agile requirements engineering. In Human-Computer Interaction. New Trends: 13th International Conference, HCI International 2009, San Diego, CA, USA, July 19–24, 2009, Proceedings, Part I (pp. 549–558). Springer Berlin Heidelberg.

Alfirevic, Z., Stampalija, T., & Dowswell, T. (2017). Fetal and umbilical Doppler ultrasound in high‐risk pregnancies. Cochrane Database of Systematic Reviews, 6. https://doi.org/10.1002/14651858.CD007529.pub4

Barteit, S., Lanfermann, L., Bärnighausen, T., Neuhann, F., Beiersmann, C., et al. (2021). Augmented, mixed, and virtual reality-based head-mounted devices for medical education: Systematic review. JMIR Serious Games, 9(3), e29080.

Beinke, J. H., Fitte, C., & Teuteberg, F. (2019). Towards a Stakeholder-Oriented Blockchain-Based Architecture for Electronic Health Records: Design Science Research Study. Journal of Medical Internet Research, 21(10), e13585. https://doi.org/10.2196/13585

Bhide, A., Acharya, G., Baschat, A., Bilardo, C. M., Brezinka, C., Cafici, D., Ebbing, C., Hernandez-Andrade, E., Kalache, K., Kingdom, J., Kiserud, T., Kumar, S., Lee, W., Lees, C., Leung, K. Y., Malinger, G., Mari, G., Prefumo, F., Sepulveda, W., & Trudinger, B. (2021). ISUOG Practice Guidelines (updated): Use of Doppler velocimetry in obstetrics. Ultrasound in Obstetrics & Gynecology: The Official Journal of the International Society of Ultrasound in Obstetrics and Gynecology, 58(2), 331–339. https://doi.org/10.1002/uog.23698

Billinghurst, M., Kato, H., & Poupyrev, I. (2008). Tangible augmented reality. ACM SIGGRAPH Asia, 7(2), 1–10. https://doi.org/10.1145/1508044.1508051

Bozgeyikli, E., & Bozgeyikli, L. L. (2021). Evaluating Object Manipulation Interaction Techniques in Mixed Reality: Tangible User Interfaces and Gesture. 2021 IEEE Virtual Reality and 3D User Interfaces (VR), 778–787. https://doi.org/10.1109/VR50410.2021.00105

Brichant, J. F., & Bonhomme, V. (2014). Preeclampsia: An update. Acta Anaesthesiologica Belgica, 65(4), 137–149.

Cosmi, E., Grisan, E., Fanos, V., Rizzo, G., Sivanandam, S., & Visentin, S. (2017). Growth Abnormalities of Fetuses and Infants. BioMed Research International, 2017, 3191308. https://doi.org/10.1155/2017/3191308

Dreier, J. M. (2012). User-centered design in rural South Africa: How well does current best practice apply for this setting? [Master’s Thesis]. Institutt for datateknikk og informasjonsvitenskap.

Dwivedi, M. K. (2018). Colour Doppler Sonography in Obstetrics. In R. K. Diwakar (Ed.), Basics of Abdominal, Gynaecological, Obstetrics and Small Parts Ultrasound (pp. 121–126). Springer Singapore. https://doi.org/10.1007/978-981-10-4873-9_5

Fahd, K., Miah, S. J., Ahmed, K., Venkatraman, S., & Miao, Y. (2021). Integrating design science research and design based research frameworks for developing education support systems. Education and Information Technologies, 26, 4027–4048.

Flavián, C., Ibáñez-Sánchez, S., & Orús, C. (2019). The impact of virtual, augmented and mixed reality technologies on the customer experience. Journal of Business Research, 100, 547–560. https://doi.org/10.1016/j.jbusres.2018.10.050

Freina, L., & Ott, M. (2015). A literature review on immersive virtual reality in education: State of the art and perspectives. eLearning & Software for Education, (1). Retrieved November 10, 2022 from https://www.itd.cnr.it/download/eLSE%202015%20Freina%20Ott%20Paper.pdf

Furness, T. A. (1986). The Super Cockpit and its Human Factors Challenges. Proceedings of the Human Factors Society Annual Meeting, 30(1), 48–52. https://doi.org/10.1177/154193128603000112

Gill, R. W. (1985). Measurement of blood flow by ultrasound: Accuracy and sources of error. Ultrasound in Medicine & Biology, 11(4), 625–641. https://doi.org/10.1016/0301-5629(85)90035-3

Gregor, S., & Jones, D. (2007). The anatomy of a design theory. Journal of the Association of Information Systems, 8(5), 313–335. https://openresearch-repository.anu.edu.au/handle/1885/32762

Gregório, J., Reis, L., Peyroteo, M., Maia, M., Mira da Silva, M., & Lapão, L. V. (2021). The role of Design Science Research Methodology in developing pharmacy eHealth services. Research in Social & Administrative Pharmacy: RSAP, 17(12), 2089–2096. https://doi.org/10.1016/j.sapharm.2021.05.016

Haleem, A., Javaid, M., Qadri, M. A., & Suman, R. (2022). Understanding the role of digital technologies in education: A review. Sustainable Operations and Computers, 3, 275–285. https://doi.org/10.1016/j.susoc.2022.05.004

Hammer, M. (2014). DopplerSim. XRayPhysics - Interactive Radiology Physics. Retrieved November 10, 2022 from http://xrayphysics.com/doppler.html

Harris-Love, M. O., Ismail, C., Monfaredi, R., Hernandez, H. J., Pennington, D., Woletz, P., McIntosh, V., Adams, B., & Blackman, M. R. (2016). Interrater reliability of quantitative ultrasound using force feedback among examiners with varied levels of experience. PeerJ, 4, e2146. https://doi.org/10.7717/peerj.2146

Hevner, A. R., & Wickramasinghe, N. (2018). Design Science Research Opportunities in Health Care. In N. Wickramasinghe & J. L. Schaffer (Eds.), Theories to Inform Superior Health Informatics Research and Practice (pp. 3–18). Springer International Publishing. https://doi.org/10.1007/978-3-319-72287-0_1

Hevner, A. R., March, S. T., Park, J., & Ram, S. (2004). Design Science in Information Systems Research. MIS Quarterly, 28(1), 75–105. https://doi.org/10.2307/25148625

Hevner, A., & Chatterjee, S. (2010). Design Science Research in Information Systems. In A. Hevner & S. Chatterjee (Eds.), Design Research in Information Systems: Theory and Practice (pp. 9–22). Springer US. https://doi.org/10.1007/978-1-4419-5653-8_2

Intelligent Ultrasound Simulation. (2022). ScanTrainer. Retrieved November 10, 2022 from https://www.intelligentultrasound.com/scantrainer/

Khoshniat, M., Thorne, M. L., Poepping, T. L., Holdsworth, D. W., & Steinman, D. A. (2004). Real-time virtual Doppler ultrasound. In Medical Imaging 2004: Ultrasonic Imaging and Signal Processing (Vol. 5373, pp. 373–384). SPIE. https://doi.org/10.1117/12.535490

Kim, S., Fleisher, B., & Sun, J. Y. (2017). The Long-term Health Effects of Fetal Malnutrition: Evidence from the 1959–1961 China Great Leap Forward Famine. Health Economics, 26(10), 1264–1277. https://doi.org/10.1002/hec.3397

Li, L., Yu, F., Shi, D., Shi, J., Tian, Z., Yang, J., Wang, X., & Jiang, Q. (2017). Application of virtual reality technology in clinical medicine. American Journal of Translational Research, 9(9), 3867–3880.

Mahmood, F., Mahmood, E., Dorfman, R. G., Mitchell, J., Mahmood, F.-U., Jones, S. B., & Matyal, R. (2018). Augmented reality and ultrasound education: Initial experience. Journal of Cardiothoracic and Vascular Anesthesia, 32(3), 1363–1367.

Maul, H., Scharf, A., Baier, P., Wüstemann, M., Günter, H., Gebauer, G., & Sohn, C. (2004). Ultrasound simulators: Experience with the SonoTrainer and comparative review of other training systems. Ultrasound in Obstetrics and Gynecology: The Official Journal of the International Society of Ultrasound in Obstetrics and Gynecology, 24(5), 581–585.

Maulik, D., Mundy, D., Heitmann, E., & Maulik, D. (2010). Evidence-based approach to umbilical artery Doppler fetal surveillance in high-risk pregnancies: An update. Clinical Obstetrics and Gynecology, 53(4), 869–878.

Microsoft. (2021). Getting around HoloLens 2. Retrieved November 15, 2022, from https://learn.microsoft.com/en-us/hololens/hololens2-basic-usage

Microsoft. (2022). What is Mixed Reality Toolkit 2? Retrieved November 15, 2022, from https://learn.microsoft.com/en-us/windows/mixed-reality/mrtk-unity/mrtk2/?view=mrtkunity-2022-05

Milgram, P., & Kishino, F. (1994). A taxonomy of mixed reality visual displays. IEICE Transactions on Information and Systems, 77(12), 1321–1329.

Milman, N. B. (2018). Defining and Conceptualizing Mixed Reality, Augmented Reality, and Virtual Reality. Distance Learning, 15(2), 55–58.

Moro, C., Phelps, C., Redmond, P., & Stromberga, Z. (2021). HoloLens and mobile augmented reality in medical and health science education: A randomised controlled trial. British Journal of Educational Technology, 52(2), 680–694. https://doi.org/10.1111/bjet.13049

Nguyen, L., Haddad, P., Mogimi, H., Coleman, K., Redley, B., Botti, M., & Wickramasinghe, N. (2015). Developing an information system for nursing in acute care contexts. Presented at the Pacific Asia Conference on Information Systems, PACIS 2015 – Proceedings.

Nguyen, T., Plishker, W., Matisoff, A., Sharma, K., & Shekhar, R. (2022). HoloUS: Augmented reality visualization of live ultrasound images using HoloLens for ultrasound-guided procedures. International Journal of Computer Assisted Radiology and Surgery, 17(2), 385–391. https://doi.org/10.1007/s11548-021-02526-7

NTNU. (2014). UMOJA - Ultrasound for Midwives in rural areas. Retrieved June 26, 2023, from https://forskningsprosjekter.ihelse.net/prosjekt/46060912

NTNU. (n.d.). Doppler ultrasound in obstetrics. Retrieved June 26, 2023, from https://www.ntnu.no/videre/gen/-/courses/nv22808

Palmer, C. L., Haugen, B. O., Tegnander, E., Eik-Nes, S. H., Torp, H., & Kiss, G. (2015). Mobile 3D augmented-reality system for ultrasound applications. IEEE International Ultrasonics Symposium (IUS), 2015, 1–4. https://doi.org/10.1109/ULTSYM.2015.0488

Park, M. Y., Jung, S. E., Byun, J. Y., Kim, J. H., & Joo, G. E. (2012). Effect of beam-flow angle on velocity measurements in modern Doppler ultrasound systems. American Journal of Roentgenology, 198(5), 1139–1143.

Park, S., Bokijonov, S., & Choi, Y. (2021). Review of Microsoft HoloLens Applications over the Past Five Years. Applied Sciences, 11(16), Article 16. https://doi.org/10.3390/app11167259

Peffers, K., Tuunanen, T., Rothenberger, M. A., & Chatterjee, S. (2007). A Design Science Research Methodology for Information Systems Research. Journal of Management Information Systems, 24(3), 45–77. https://doi.org/10.2753/MIS0742-1222240302

Pensieri, C., & Pennacchini, M. (2014). Overview: Virtual Reality in Medicine. Journal For Virtual Worlds Research, 7(1), Article 1. https://doi.org/10.4101/jvwr.v7i1.6364

Psotka, J. (1995). Immersive training systems: Virtual reality and education and training. Instructional Science, 23(5), 405–431. https://doi.org/10.1007/BF00896880

Punie, Y., Zinnbauer, D., & Cabrera, M. (2006). A review of the impact of ICT on learning. European Commission, Brussels, 6(5), 635–650.

Ravagnolo, L., Helin, K., Musso, I., Sapone, R., Vizzi, C., Wild, F., Vovk, A., Limbu, B. H., Ransley, M., Smith, C. H., et al. (2019). Enhancing Crew Training for Exploration Missions: The WEKIT experience. In Proceedings of the International Astronautical Congress (Vol. 2019). International Astronautical Federation, Paris. https://iafastro.directory/iac/paper/id/50193/abstract-pdf/IAC-19,B3,5,5,x50193.brief.pdf?2019-10-17.04:25:09

Roy, Q., Zakaria, C., Perrault, S., Nancel, M., Kim, W., Misra, A., & Cockburn, A. (2019). A Comparative Study of Pointing Techniques for Eyewear Using a Simulated Pedestrian Environment. In Human-Computer Interaction – INTERACT 2019: 17th IFIP TC 13 International Conference, Paphos, Cyprus, September 2–6, 2019, Proceedings, Part III 17 (pp. 625–646). Springer International Publishing. https://doi.org/10.1007/978-3-030-29387-1_36

Sabbioni, G., Zhong, V. J., Jäger, J., & Schmiedel, T. (2022). May I Show You the Route? Developing a Service Robot Application in a Library Using Design Science Research. In Human Interaction, Emerging Technologies and Future Systems V: Proceedings of the 5th International Virtual Conference on Human Interaction and Emerging Technologies, IHIET 2021, August 27–29, 2021 and the 6th IHIET: Future Systems (IHIET-FS 2021), October 28–30, 2021, France (pp. 306–313). Springer International Publishing. https://doi.org/10.1007/978-3-030-85540-6_39

Sandberg, J., & Alvesson, M. (2011). Ways of constructing research questions: Gap-spotting or problematization? Organization, 18(1), 23–44. https://doi.org/10.1177/1350508410372151

Skarbez, R., Smith, M., & Whitton, M. C. (2021). Revisiting Milgram and Kishino’s Reality-Virtuality Continuum. Frontiers in Virtual Reality, 2, 27. https://doi.org/10.3389/frvir.2021.647997

Stoylen. (2020). Basic ultrasound, echocardiography and Doppler ultrasound. Retrieved November 10, 2022, from https://folk.ntnu.no/stoylen/old_strainrate/Basic_Doppler_ultrasound

Szabo, T. L. (2014). Chapter 11—Doppler Modes. In T. L. Szabo (Ed.), Diagnostic Ultrasound Imaging: Inside Out (Second Edition) (pp. 431–500). Academic Press. https://doi.org/10.1016/B978-0-12-396487-8.00011-2

Turner, C. W., Lewis, J. R., & Nielsen, J. (2006). Determining usability test sample size. International Encyclopedia of Ergonomics and Human Factors, 3(2), 3084–3088.

Uppal, T., & Mogra, R. (2010). RBC motion and the basis of ultrasound Doppler instrumentation. Australasian Journal of Ultrasound in Medicine, 13(1), 32–34. https://doi.org/10.1002/j.2205-0140.2010.tb00216.x

Vaishnavi, V., & Kuechler, W. (2004). Design research in information systems. Retrieved November 15, 2022. https://www.desrist.org/design-research-in-information-systems/

Varsou, O. (2019). The Use of Ultrasound in Educational Settings: What Should We Consider When Implementing this Technique for Visualisation of Anatomical Structures? In P. M. Rea (Ed.), Biomedical Visualisation: Volume 3 (pp. 1–11). Springer International Publishing. https://doi.org/10.1007/978-3-030-19385-0_1

Venkatesh, V., Aloysius, J. A., Hoehle, H., & Burton, S. (2017). Design and Evaluation of Auto-ID Enabled Shopping Assistance Artifacts in Customers' Mobile Phones: Two Retail Store Laboratory Experiments. MIS Quarterly, 41(1), 83–113. https://doi.org/10.25300/MISQ/2017/41.1.05

Verbeek, F. (2020). LSM9DS1 library. GitHub. Retrieved November 15, 2022, from https://github.com/FemmeVerbeek/Arduino_LSM9DS1

Vik, S. D., Torp, H., Follestad, T., Støen, R., & Nyrnes, S. A. (2020). NeoDoppler: New ultrasound technology for continous cerebral circulation monitoring in neonates. Pediatric Research, 87(1), 95–103. https://doi.org/10.1038/s41390-019-0535-0

Vuforia. (n.d.). Vuforia Image Targets. Retrieved November 10, 2022, from https://library.vuforia.com/objects/image-targets#using-image-targets

Wharrad, H., Windle, R., & Taylor, M. (2021). Chapter Three—Designing digital education and training for health. In S. Th. Konstantinidis, P. D. Bamidis, & N. Zary (Eds.), Digital Innovations in Healthcare Education and Training (pp. 31–45). Academic Press. https://doi.org/10.1016/B978-0-12-813144-2.00003-9

White, S., Lister, L., & Feiner, S. (2007). Visual hints for tangible gestures in augmented reality. In 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality (pp. 47–50). IEEE. https://doi.org/10.1109/ISMAR.2007.4538824

Wigdor, D., & Wixon, D. (2011). The Spatial NUI. In Brave NUI world: Designing natural user interfaces for touch and gesture (pp. 33–36). Elsevier.

Yeo, L., & Romero, R. (2020). Optical ultrasound simulation-based training in obstetric sonography. The Journal of Maternal-Fetal & Neonatal Medicine, 35(13), 2469–2484. https://doi.org/10.1080/14767058.2020.1786519

Zyda, M. (2005). From visual simulation to virtual reality to games. Computer, 38(9), 25–32. https://doi.org/10.1109/MC.2005.297

Funding

Open access funding provided by NTNU Norwegian University of Science and Technology (incl St. Olavs Hospital - Trondheim University Hospital)

Author information


Contributions

All authors contributed to the research and manuscript.


Ethics declarations

Ethics approval and consent

The collected data were anonymized, and consent was obtained from the participants for their data to be used in the study. The participant shown in the photo, who is a co-author, consented to its use.

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A

1.1 Installation guide

1.1.1 Requirements

| # | Requirement | Useful links |
|---|---|---|
| Hardware | | |
| 1 | Microsoft HoloLens 2 | |
| 2 | Windows PC (configured to run Unity 2022.3.20f1) | https://docs.unity3d.com/2022.2/Documentation/Manual/system-requirements.html |
| 3 | Arduino Nano 33 BLE | |
| 3D-printed objects | | |
| 4 | 3D-printed abdomen | https://drive.google.com/file/d/1siNU1OD-TJ072RrOURVKzLjGZiQxsHZp/view?usp=sharing; https://drive.google.com/file/d/14isLPeJwrGkoPpuO-XqE2mOIHo02mHwR/view?usp=sharing; https://drive.google.com/file/d/1dVvMpS-W6HDhdj21Cz6A_7PacVm1tn_H/view?usp=sharing; https://drive.google.com/file/d/1bGBLgVrluVfChKY0cuCPQihA374LE7GK/view?usp=sharing |
| 5 | 3D-printed probe | https://github.com/marianylund/mr-pw-doppler-training/tree/main/Assets/Mesh |
| Software | | |
| 6 | Unity 2022.3.20f1 | https://docs.unity3d.com/2022.2/Documentation/Manual/UnityManual.html |
| 7 | Mixed Reality Toolkit (MRTK) | |
| 8 | Vuforia Engine library | |
| 9 | Visual Studio (2019 or later) | |
| 10 | Visual Studio Code + PlatformIO | |

1.1.2 Git repositories

The following repositories contain the project, including README files, C# scripts, and the steps to implement them.

1. Repository for the main Unity scene and the Arduino connection (HoloLens side): https://github.com/marianylund/mr-pw-doppler-training
2. Repository for the Arduino connection (Arduino side): https://github.com/marianylund/arduinoGyroBLE

1.1.3 Setup

Once the required hardware, 3D-printed objects, and software have been acquired, the system can be reproduced using the repositories listed above. Once the Unity scene has been deployed to the HoloLens and the Arduino code has been uploaded to the Arduino, the system should work right away. The repositories can be cloned and modified to meet future system requirements.

1.2 Training and user guide

1.2.1 Task scenario

You are interested in exploring how to measure blood velocity in an umbilical cord using Doppler ultrasound and how four parameters affect the measurement: the beam-to-flow angle, the pulse repetition frequency (PRF), the blood velocity, and the sampling depth.
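The four parameters in this scenario are linked by the standard pulsed-wave Doppler relations. The Doppler shift is f_d = 2 f0 v cos θ / c; it is measured without aliasing only while |f_d| < PRF/2; and the PRF cannot exceed c/(2d) for a sampling depth d, because each echo must return before the next pulse is transmitted. The Python sketch below evaluates these relations under stated assumptions: a speed of sound of 1540 m/s, an illustrative transmit frequency f0, and direction vectors for the probe ray and the vessel axis. All function names are hypothetical and are not taken from the application's source code.

```python
import math

# Speed of sound in soft tissue that scanners conventionally assume (m/s).
C_TISSUE = 1540.0


def beam_to_flow_angle(beam_dir, flow_dir):
    """Angle in degrees between the ultrasound beam and the vessel axis.

    Both arguments are 3D direction vectors (x, y, z) and need not be unit
    length. In an MR setup they could come from the tracked probe pose and
    the modelled umbilical cord (hypothetical inputs).
    """
    dot = sum(b * f for b, f in zip(beam_dir, flow_dir))
    norm_b = math.sqrt(sum(b * b for b in beam_dir))
    norm_f = math.sqrt(sum(f * f for f in flow_dir))
    # Clamp to [-1, 1] so rounding error cannot make acos fail.
    cos_theta = max(-1.0, min(1.0, dot / (norm_b * norm_f)))
    return math.degrees(math.acos(cos_theta))


def doppler_shift(velocity, f0, theta_deg, c=C_TISSUE):
    """Doppler shift in Hz: f_d = 2 * f0 * v * cos(theta) / c."""
    return 2.0 * f0 * velocity * math.cos(math.radians(theta_deg)) / c


def max_prf(depth, c=C_TISSUE):
    """Upper bound on the PRF (Hz) for a sampling depth in metres.

    The echo from `depth` (round trip 2 * depth) must arrive before the next
    pulse is transmitted; processing overhead is ignored.
    """
    return c / (2.0 * depth)


def nyquist_velocity(prf, f0, theta_deg, c=C_TISSUE):
    """Largest speed (m/s) whose Doppler shift stays below PRF / 2 (no aliasing)."""
    cos_theta = abs(math.cos(math.radians(theta_deg)))
    if cos_theta < 1e-12:
        # At 90 degrees there is no Doppler shift, so nothing can alias.
        return math.inf
    return prf * c / (4.0 * f0 * cos_theta)


if __name__ == "__main__":
    # Illustrative values only: 3 MHz transmit frequency, 10 cm sampling depth.
    f0 = 3e6
    prf = max_prf(0.10)
    for theta in (0, 30, 60, 90):
        print(f"theta={theta:>2} deg  "
              f"shift at 0.5 m/s={doppler_shift(0.5, f0, theta):7.1f} Hz  "
              f"alias-free up to {nyquist_velocity(prf, f0, theta):.2f} m/s")
```

With these assumed values the PRF is capped at about 7.7 kHz, which limits the alias-free velocity to roughly 0.99 m/s when the beam is parallel to the flow. Larger beam-to-flow angles raise that limit but shrink the measured shift, and at 90° the shift vanishes entirely.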

A: On-boarding

You are at a university and want to explore the MR application. In front of you are 3D-printed models of an ultrasound probe and an abdomen with images attached to them. You want to start the application and check whether you can see a visualisation of a fetus inside the abdomen and a ray coming out of the probe.

1. Open the HoloLens menu and select the HoloUmoja application.
2. Notice the "Welcome" menu explaining the situation.
3. Point the HoloLens camera towards the images until the 3D objects are visible.

B: Spectrograms

Now that you can see the fetus, you notice the umbilical cord. You want to measure the blood velocity using the ray coming out of the probe and view the resulting spectrograms.

1. Point the ray coming out of the probe towards the orange part of the umbilical cord.
2. Turn towards the "Ultrasound" menu and observe that the black square now shows a spectrogram.
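The spectrogram shown in this step can be illustrated with a small sketch. In pulsed-wave Doppler, each spectrogram column is commonly the magnitude spectrum of the slow-time signal (one complex IQ sample per transmitted pulse, so sampled at the PRF) from the sample volume over a short window. The plain-Python sketch below assumes a single scatterer moving at constant speed and uses a direct DFT for readability. It illustrates the general technique only and is not the application's simulation code; all function names are hypothetical. It also shows why the PRF matters: a shift outside ±PRF/2 wraps to the other side of the spectrum.

```python
import cmath
import math


def synthetic_iq(f_doppler, prf, n_pulses):
    """Complex (IQ) slow-time samples, one per pulse, from a single scatterer
    moving at constant speed: a pure tone at the Doppler frequency."""
    return [cmath.exp(2j * math.pi * f_doppler * n / prf) for n in range(n_pulses)]


def spectrogram(iq, window_len, hop):
    """Short-time DFT magnitudes of the slow-time signal.

    Returns one row per window; bin i of a row corresponds to the frequency
    (i - window_len // 2) * PRF / window_len, i.e. from -PRF/2 upwards.
    """
    # Hann window to reduce leakage between neighbouring frequency bins.
    win = [0.5 - 0.5 * math.cos(2 * math.pi * i / window_len) for i in range(window_len)]
    rows = []
    for start in range(0, len(iq) - window_len + 1, hop):
        seg = [iq[start + i] * win[i] for i in range(window_len)]
        row = []
        for i in range(window_len):
            k = i - window_len // 2  # signed bin index
            acc = sum(seg[n] * cmath.exp(-2j * math.pi * k * n / window_len)
                      for n in range(window_len))
            row.append(abs(acc))
        rows.append(row)
    return rows


def peak_frequency(row, prf):
    """Frequency (Hz) of the strongest bin in one spectrogram row."""
    n = len(row)
    i = max(range(n), key=lambda j: row[j])
    return (i - n // 2) * prf / n


def velocity_from_shift(f_doppler, f0, theta_deg, c=1540.0):
    """Invert the Doppler equation: v = f_d * c / (2 * f0 * cos(theta))."""
    return f_doppler * c / (2.0 * f0 * math.cos(math.radians(theta_deg)))


if __name__ == "__main__":
    prf = 4000.0
    rows = spectrogram(synthetic_iq(1000.0, prf, 256), window_len=64, hop=32)
    fd = peak_frequency(rows[0], prf)
    print(fd, velocity_from_shift(fd, 3e6, 0.0))
    # A shift above PRF / 2 wraps around: 2500 Hz appears at -1500 Hz.
    print(peak_frequency(spectrogram(synthetic_iq(2500.0, prf, 64), 64, 64)[0], prf))
```

Real spectrograms are broadened because the red blood cells in the sample volume move at a range of speeds, and a production implementation would use an FFT rather than the O(N²) direct DFT used here.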

C: Angle 90