Abstract

Distance learning has been adopted as an alternative to face-to-face teaching. Many governments worldwide implemented it on a large scale in response to the spread of the COVID-19 pandemic and the resulting lockdowns and social distancing measures. In emergency situations, distance learning is referred to as Emergency Remote Teaching (ERT). This sudden shift and the rapidly growing demand for distance learning have accentuated many challenges. These include technology adoption, student commitment, parent involvement, the extra burden on teachers, changes in organizational methodology, and the need for governments to develop new guidelines and regulations to assess, manage, and control the outcomes of distance learning. The objective of this paper is to analyze the alternatives of distance learning and discuss how these alternatives reflect on student academic performance and retention in distance learning education. We first examine how different stakeholders make use of distance learning to achieve the learning objectives. Then, we evaluate various alternatives and criteria that influence distance learning, study the correlation between them, and extract the best alternatives. The model we propose is a multi-criteria decision-making model that assigns weighted scores to alternatives; the best-scored alternative is then passed through a recommendation model. Finally, our system proposes customized recommendations to students and teachers, with the aim of enhancing student academic performance. We believe that this study will serve the education system and provide valuable insights into the use of distance learning and its effectiveness.

1 Introduction

The COVID-19 pandemic deeply and negatively affected the education sector. Governments were forced to close educational institutions and implement social distancing. In response, educational institutions introduced an alternative learning approach, namely distance learning. Previously, distance learning had not been widely adopted or implemented, particularly in primary and secondary institutions, due to institutional concerns about outcomes delivery and the quality of education for this category of learners. Consequently, when the pandemic broke out, these institutions faced significant challenges in adapting to distance learning, communicating with students, and providing them with the support they needed to achieve their educational and learning objectives. The sudden shift from the traditional learning model to distance learning alone resulted in several challenges, such as difficulties in adopting e-learning technologies and platforms and varying degrees of readiness to shift to fully distance learning.

The integration of Information and Communications Technologies (ICTs) in distance learning is crucial in supporting faster and more flexible course delivery. The widespread adoption of online education led to significant interventions in the education sector that have also highlighted room for improvement in distance learning programs for the long-term sustainability of the education sector (Stepanyan et al., 2013). Although students’ exposure to e-learning and distance learning platforms has increased, it is important to reassess the situation and review the technological practices involved in distance learning (Hunter & Carr, 2004). Additionally, it is important to measure the quality of teaching and the performance of the student while considering the many alternatives and criteria that could characterize the learning experience (Zare et al., 2016). Many studies have examined facets of distance learning such as distance learning methods, architectural models of learning systems, and context to assess and analyze the effectiveness of education deliverables (Lalitha & Sreeja, 2020; Zorrilla et al., 2010; Shih et al., 2003).

During the COVID-19 pandemic, several countries, including the United Arab Emirates, implemented distance learning to prevent the coronavirus from spreading. Distance learning is a type of educational learning process that allows the instructor and students to be in different physical locations (Moore et al., 2011). It can replace the conventional learning process in unexpected situations such as pandemics and wars. It involves three types of online interaction within the education environment: learner-to-learner, learner-to-instructor, and learner-to-course-content. Hence, distance learning is an intervention in which students and teachers can create real classroom sessions using a virtual environment (Abuhassna & Yahaya, 2018).

Distance education has transformed the educational process in unprecedented ways. Students, employers, and society are all adopting a more favorable attitude toward distance education, as students pursuing it receive a similar level of recognition, respect, and career opportunities as those enrolled in traditional courses. From increasing flexibility to introducing new learning techniques, distance learning appears to be as diverse in time and location as it is in concept. By choosing the time, location, and course content, students can make their own decisions about their education. Moreover, distance learning can reach students where they are and connect educators and learners in innovative ways. Distance learning programs allow students who do not have access to educational facilities, whether because of their rural location or because they have work commitments, to learn and progress in the environment that is most conducive to their success. Furthermore, distance learning reduces the cost of education, especially for learners, thanks to the scalable nature of digital learning.

In the context of the current study, alternatives are a set of distance learning features that are to be evaluated, and criteria are the influencing factors needed to determine the best alternatives. The objective of this study is to analyze distance learning alternatives and discuss how these alternatives and the related criteria influence students’ academic performance and retention in distance learning education. We developed a multicriteria decision-making (MCDM) model to optimize learning objectives and outcomes. We then developed a recommendation model to enhance and optimize learning strategies through the adoption of customized recommendations for all stakeholders involved in the learning process. To test our model, data were collected using surveys and then processed and further analyzed using data mining techniques to develop a better understanding of the alternatives.

Nevertheless, distance learning is not without drawbacks. The challenges of implementing distance learning are substantial in terms of infrastructure distribution, Internet access, and learning environments. Several studies (Willis, 1994; Shih et al., 2003) have tackled the inherent challenges of distance learning and its application strategies.

Distance learning was introduced in the eighteenth century and has gradually grown and evolved from correspondence distance learning, in which learning was delivered by mail. In the last two decades of the twentieth century, distance learning became computer-based, and it flourished when it was adopted in universities as a learning mechanism and platform. For example, virtual universities established in the first decade of the twenty-first century led to the wider adoption of distance learning platforms. IT developments have led to corresponding developments in distance education, and the characteristics of distance learning have changed with technological developments and trends (Bozkurt, 2019).

E-learning is defined as “the wide set of applications and processes which use available electronic media and tools to deliver vocational education and training” (Alqahtani & Rajkhan, 2020). Appropriate e-learning technology choices help institutions avoid technological issues and barriers (Zaied, 2012). Learning styles, learning resources, learning activities, courses, and even learning pathways can all be included in e-learning recommender systems (Zhang et al., 2021).

According to Alqahtani and Rajkhan (2020), e-education, distance learning, and online learning are different e-learning methodologies. E-learning can be conducted using an electronic device, and it helps individuals and large groups to learn about a particular phenomenon over the Internet (Chitra & Raj, 2018). Synchronous learning involves real-time interaction between student and instructor in distance learning. Asynchronous learning involves no real-time interaction; students can learn at their own pace. Finally, blended learning is a combination of traditional learning and online learning.

Online learning integrates various technologies such as the Web, email, audio and video conferences, and computer networks to deliver online education. Online learning allows the learners to learn at their own pace and in a comfortable environment. In the twentieth century, the Open University in the United Kingdom introduced partially and fully online courses.

Distance learning, e-learning, and online learning rely heavily on underlying technologies and platforms. These technologies include the Internet, intranet, extranet, audio and video conference platforms, information and collaboration technologies, and digital collaboration platforms. It is also critical to have the technological infrastructure and computation capacity to support distance education (Dawson & British Computer Society, 2006).

2 Literature review

This section describes the learning strategies and methodologies, and the distance learning models implemented in other studies.

2.1 MCDM and recommendation systems

To explain the context of this study, we introduce e-learning and summarize and discuss the main concepts involved in decision-making models, including MCDM, and recommender systems.

2.1.1 MCDM

MCDM is a decision-making technique based on several available alternatives, or a theory that explains the decision-making process while considering several criteria. In other words, MCDM is a decision-making theory that weighs a small number of alternatives against a variety of criteria (Mardani et al., 2015). Multicriteria analysis, or making decisions based on several, often contradicting, criteria, is referred to as a decision-making system (Hwang & Yoon, 1981). Thus, MCDM models are useful for assessing alternatives and deciding on the best alternatives according to the selected criteria. MCDM integrates computational and mathematical methods to support decision makers’ subjective assessment of performance criteria. MCDM employs operations-research models that are based on a list of predetermined criteria, available alternatives, and variables that a decision maker can examine during the decision-making process, and decision makers must simply evaluate and rank the current choices (Triantaphyllou, 2000). Researchers have identified several characteristics and processes involved in MCDM modeling. The main aspects of the MCDM process are the criteria set, preference structure, alternative set, and performance values. A hierarchical analysis must be performed to acquire the criteria set and preference structure; this has been applied in our study. Decision makers can use this hierarchy to determine the relative relevance of the criteria and then evaluate alternatives against each (Zaied, 2012).

Although MCDM models are applied in numerous situations and industries, the method has several flaws, some of which have been addressed in this study. Jassbi et al. (2014) noted that the primary flaw in the original MCDM model is that the type of data used pertains to previous decisions made about the available options. This can have a significant impact on the current decision-making process because alternatives with a “bad” history will influence the current decision and vice versa. In our model, we rely on experts to validate the model’s criteria and attributes, and decisions are based on the analysis of data collected from students and teachers, in addition to issue reports received from the UAEU IT technical center. Additionally, Pourjavad and Shirouyehzad (2011) pointed out that, when applied to the same problem, different MCDM methodologies can produce different solutions. A decision maker seeks the closest answer to the ideal, weighing alternatives against all stated criteria. Therefore, in critical situations, decision makers employ several MCDM approaches, or an aggregation of approaches, such as the rank average method, to solve this problem (Pourjavad & Shirouyehzad, 2011). Finding and selecting an effective MCDM method is not an easy undertaking, and much consideration must be given to the method selection (Mulliner et al., 2015). In our model, we aggregated the results of three MCDM approaches to gain the advantages of the weighted sum, the weighted product, and the AHP.

Over the last three decades, several methods have been developed for solving MCDM problems over a finite set of options, involving pairwise comparisons of the alternatives and criteria (Alonso & Lamata, 2006). However, in MCDM, there are usually conflicts between criteria. Criteria can have a significant impact on the output of the assessment system, particularly evaluation and selection, in any MCDM problem. Exploring novel and reasonable ways to weight choice variables or qualities is one of the most important functions in MCDM modeling. Two MCDM techniques, the weighted sum method (WSM) and the weighted product method (WPM), have been combined to create the weighted normalized decision matrix (Yazdani et al., 2016).

2.1.2 Recommendation systems

In MCDM, recommendation has been recognized as a way to guide members of a community in locating information or objects that are most likely to be of interest or relevance to their requirements. In systems in which recommendations are based on the opinions of others, adding parameters that can affect the users’ opinions may lead to more accurate suggestions. Hence, the additional information offered by multicriteria ratings could improve the quality of suggestions by allowing the more complex preferences of each user to be represented (Adomavicius et al., 2011). Developing recommender systems to help people select courses, resources, and learning materials in e-learning is essential (Zhang et al., 2021). A recommender system can be defined as a system that produces individual recommendations as an output, or as a system that assists users in locating desired items by providing recommendations based on the content of recommended items or on similar users’ ratings of those items (Marlinda et al., 2017).

According to Marlinda et al. (2017) and Zhang et al. (2021), recommender systems are usually divided into the following categories:

- Content-based recommendation (content-based filtering): the user is recommended products that are comparable to those that the user has previously favored

- Collaborative suggestions (collaborative filtering): the user is offered items based on other users’ preferences and interests

- Hybrid approaches (hybrid collaboration): a combination of collaborative and content-based methods

2.2 Learning strategies and methods

Many researchers anticipated the increase in the scope of distance learning before it expanded. Analyzing academic performance in distance learning involves, on one hand, a micro-level examination of the instructional strategies used or demographic profiles of successful learners in these environments and, on the other hand, a macro-level analysis of national or global data (Martin & Oyarzun, 2017). Questionnaires and follow-up interviews offer a cost-effective way to investigate academic performance (Neroni et al., 2019; Utomo et al., 2020). In massive open online courses (MOOCs), global standards and factors such as the course quality and delivery method and the perceptions of online learning of students, faculty members, and administrators need to be considered (Martin & Oyarzun, 2017). Griffiths (2016) investigated the readiness of both faculty and students for online teaching by implementing a distance learning course that followed the education committee guidelines. Some authors emphasized that the adoption of distance learning during the COVID-19 pandemic was hampered by reluctance (Ali, 2020; Burgstahler et al., 2004; Niemann, 2017; Roberts & Crittenden, 2009).

Faculty members’ ability to adapt to online learning is a crucial aspect of changing from traditional to distance learning and online platforms. Facilitators need to be able to adapt to the change in the teaching methodology (König et al., 2020). Faculty members face the challenge of maintaining the same level of flow when delivering knowledge in online classes. To further analyze the issues students and faculty members face, Al Lily et al. (2020) developed a model to examine pandemic-related stress, anxiety, depression, and family issues. Educational institutions deal with many students and tend to adopt distance learning programs only if the infrastructure and software are viable (Erol & Danyal, 2020). During the COVID-19 pandemic, educational institutions began to invest heavily in training their workforce to deal with many students learning online (Fatonia et al., 2020). Nevertheless, some students still felt that they were exposed to a relatively new educational setup with little or no guidance on how to learn as they did in the traditional environment (Awan et al., 2020).

Several researchers (Griffiths, 2016; Ko et al., 2022; Lalitha & Sreeja, 2020; Shih et al., 2003; Utomo et al., 2020) have identified the methodologies and learning strategies adopted to conduct distance learning as asynchronous or synchronous learning. In the virtual synchronous learning method, an instructor is available in real time for assistance, whereas the asynchronous learning method consists of recorded or deferred lessons. Several authors (Lalitha & Sreeja, 2020; Zorrilla et al., 2010) also discussed a blended or hybrid learning strategy in which a combination of both synchronous and asynchronous methods is adopted. Furthermore, Lalitha and Sreeja (2020) described a self-learning strategy, in which learners adapt to the self-learning method, and Shih et al. (2003) proposed an open distance learning method, in which learners learn in a self-paced environment.

Distance learning methods can be based on global, economic, or technological criteria (Roberts & Crittenden, 2009; Traxler, 2018). Furthermore, qualitative, quantitative, portfolio-based, formative, and summative assessments are used to evaluate academic performance in distance learning (Deniz & Ersan, 2002; Shih et al., 2003; Traxler, 2018). Data collected from surveys are also used in this context (Lalitha & Sreeja, 2020; Martin & Oyarzun, 2017; Neroni et al., 2019; Utomo et al., 2020). Recommendations and predictions are derived from patterns found in assessment data (Lalitha & Sreeja, 2020).

2.3 Classification and comparison of existing work

Learning comprises a learner’s ability to understand content, the learner’s thinking processes and reasoning capacity, and how the learner implements the knowledge and competencies acquired, factors that are reflected in the learner’s personality (Lalitha & Sreeja, 2020). The contextual criteria that influence learning are the student’s personality traits and demographic characteristics, session patterns, success rate, time spent on study, delays, content viewed, attempts made on tests, assignments, and quizzes, and the difficulty of the questions (Zorrilla et al., 2010). Assessments used to measure learner performance include quantitative and qualitative assessments, curriculum and portfolio-based assessments, and outcomes- or performance-based assessments (Deniz & Ersan, 2002).

Social, cognitive, metacognitive, resource management, and teaching strategies are important in formulating learning strategies. The management of time and effort, and biological, psychological, demographic, and cognitive factors affect academic performance (Martin & Oyarzun, 2017; Neroni et al., 2019). Thus, the conventional practices related to scalability proved inadequate for survival when faced with the extra pressure of the pandemic environment. It has been extremely hard to scale the scope of operations demanded by conditions during the pandemic because of cost and Internet availability constraints (Adnan & Anwar, 2020).

In several studies, learners’ profiles and demographic characteristics were examined to evaluate their academic performance (Deniz & Ersan, 2002; Erol & Danyal, 2020; Khanna & Basak, 2014; Shih et al., 2003; Traxler, 2018; Zorrilla et al., 2010). Similarly, academic performance was evaluated using learner characteristics (Deniz & Ersan, 2002; Martin & Oyarzun, 2017; Utomo et al., 2020). Contextual criteria such as success rate, engagement of the student, learning patterns, course features, and institutional support were also studied (Deniz & Ersan, 2002; Zorrilla et al., 2010), and cognitive and constructive factors such as student self-introspection, awareness, thinking and reasoning skills, and social intelligence were also examined (Lalitha & Sreeja, 2020; Neroni et al., 2019; Traxler, 2018).

Factors such as time spent, effort invested, financial position, and policies were found to influence the outcomes of distance learning (Griffiths, 2016; Khanna & Basak, 2014; Martin & Oyarzun, 2017; Neroni et al., 2019; Traxler, 2018). Moreover, technical difficulties such as Internet access, speed, and cost, and workload were found to affect the students’ academic performance and the outcomes of distance learning (Adnan & Anwar, 2020; Dhawan, 2020; Erol & Danyal, 2020; Griffiths, 2016; Martin & Oyarzun, 2017; Traxler, 2018; Utomo et al., 2020). Political, financial, and other global aspects also directly affect distance learning outcomes (Erol & Danyal, 2020; Martin & Oyarzun, 2017; Traxler, 2018). Finally, it was found that instructors need to be highly competitive to accept changes in curriculum technologies (Dhawan, 2020; König et al., 2020; al Lily et al., 2020; Shahzad et al., 2021).

From our analysis of the studies listed in Table 1, we identified the following limitations:

- Lack of statistical evidence and analysis used for the evaluation of distance learning, including the evaluation of:
  - Stakeholder factors in distance learning
  - Demographic and contextual criteria

- Technological implementations were not thoroughly assessed
  - Lack of in-depth evaluation of technological implications

- Relationships between alternatives were not considered
  - Covariance between distance learning alternatives
  - Hidden patterns and most important alternatives affecting distance learning

- Fundamental problems and solutions of distance learning are broadly classified
  - Scalability of distance learning
  - Technology adoption

3 MCDM Framework for efficient distance learning strategies

This section explains in detail the research methodology and the formal modeling of our MCDM framework. The methodology explains the type of research conducted and how the data were collected, cleaned, normalized, analyzed, and evaluated. Moreover, we discuss the methods adopted and the rationale for choosing these methods.

In this study, MCDM was used to evaluate alternatives according to a variety of criteria to extract the best alternative available to improve the performance of students undertaking distance learning. The set of factors that influence the distance learning system are called criteria, and the features that correlate with these criteria are called alternatives. Therefore, the alternatives were evaluated according to the criteria selected. The correlation between the two was defined using a decision matrix. The research procedure and the topics described in this paper involve the creation of a survey that was based on the requirements, alternatives, and criteria of distance learning. The following sections detail the MCDM model, matrix creation, and processing of the results. Furthermore, we explain the process of ranking alternatives and selecting the best alternatives. The results were used as an input for the recommendation model, which was intended to enhance the performance of students undertaking distance learning.

We describe hereafter our multicriteria decision-making model including the adopted methods of the WSM, WPM, AHP, and the proposed recommendation model.

3.1 Multi-criteria decision-making model description

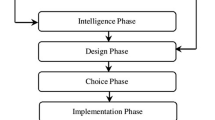

The MCDM model is discussed and implemented in this research study. In various application domains, scientists and engineers employ MCDM methods to solve problems involving multiple variables and uncertain conditions (Farkaš & Hrastov, 2021). The method is based on comparing several alternatives to estimate their importance and then ranking them depending on the weight of each evaluation criterion. The model compares each alternative according to existing criteria, and the best alternative is ranked, as illustrated in Fig. 1.

Our proposed model uses research data from two sources: (a) surveys completed by students and instructors and (b) data captured by the incident reporting system of United Arab Emirates University (UAEU). The collected data were analyzed and then evaluated for the study. While collecting the data, the demographic, contextual, technological, economic, cognitive, and constructive information of students and instructors was considered. The data were then preprocessed and passed to the MCDM model for calculations. During preprocessing, the data were converted into a numerical format in the form of weights. This is required, as MCDM deals only with quantitative data. After the survey was validated, survey questions were considered relevant and within the scope of the proposed research. The final set of data was collected from students and instructors and then used in the MCDM process, as depicted in Fig. 2.

The first step in MCDM is data normalization, a process in which all data are made consistent and redundancy is removed. Normalization ensures that all data look and read the same way across all records, which is crucial in statistical calculations. The next phase of the MCDM process is converting the normalized matrix into a weighted normalized decision matrix.

The weighted matrix is used to calculate the ranks according to each of the MCDM methods. In this study, the two matrices (student and instructor) were combined into a single matrix by averaging the values of each cell for each criterion-alternative pair. The combined data are used to calculate the weighted sum, weighted product, and AHP. The derived weighted coefficients determine the rank of each alternative. The best five alternatives are compiled using WSM, WPM, and AHP. The results are then compared based on the highest-ranking scores to find the best alternatives. These alternatives are used in the recommendation model, which gives recommendations based on the best alternative for the learning environment.
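The exact rule used to compare the three result lists is not spelled out at this point. As one possible illustration, the sketch below applies the rank average method mentioned in Section 2.1.1: each alternative's rank under every method is averaged, and a lower average rank is better. The scores, the alternative names (`A1` to `A4`), and the function names are hypothetical, and this is not necessarily the comparison performed in the study.

```python
def ranks(scores):
    """Map each alternative to its rank under one method (1 = highest score)."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    return {name: position for position, name in enumerate(ordered, start=1)}


def rank_average(method_scores):
    """Average each alternative's rank across all methods (lower is better)."""
    per_method = [ranks(scores) for scores in method_scores.values()]
    names = per_method[0].keys()
    return {
        name: sum(method_ranks[name] for method_ranks in per_method) / len(per_method)
        for name in names
    }


# Made-up scores for illustration only; they are not results from the study.
method_scores = {
    "WSM": {"A1": 0.30, "A2": 0.25, "A3": 0.20, "A4": 0.15},
    "WPM": {"A1": 0.28, "A2": 0.18, "A3": 0.26, "A4": 0.12},
    "AHP": {"A1": 0.35, "A2": 0.22, "A3": 0.21, "A4": 0.09},
}

average_ranks = rank_average(method_scores)
best_first = sorted(average_ranks, key=average_ranks.get)
print(best_first)
```

Ties are not treated specially in this sketch; alternatives with equal scores keep their input order.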

3.1.1 Alternative and criteria creation

To create a trustworthy model, it is important to select alternatives and criteria that express the underlying problems. Feature engineering is the process of transforming raw data into alternatives that better represent the problem to create a predictive model (Rawat & Khemchandani, 2017). Feature engineering relies on two main processes: feature extraction and feature selection. Feature extraction is the process of identifying key data elements that represent and highlight important areas. In feature extraction, all alternatives are extracted from the data set. Some of these alternatives may be irrelevant. In feature selection, all the alternatives that were extracted from the given data set are analyzed and then the important and relevant alternatives are identified. The selection of weak alternatives may negatively affect the MCDM model.

We mapped the keywords from the survey questions and the technical incident reports to a set of criteria and alternatives based on domain expert knowledge (Rawat & Khemchandani, 2017). Criteria are the set of factors that may affect the quality of learning, including the level of education, number of courses, study hours, and device availability. The alternatives are the aspects of the education system that are affected by the criteria, such as the learning environment, motivation to learn, and satisfaction level. Table 2 lists the criteria and the alternatives that were extracted from the incident data and the data collected from surveys. Criteria such as Internet and Wi-Fi connectivity, occurrences of technical issues, and technical support can affect student participation and opportunities to demonstrate learning, which are considered alternatives.

3.1.2 Matrix creation and normalization

Once the criteria and alternatives were extracted and identified, as elaborated in Section 3.1.1, we could construct a decision matrix. A decision matrix is useful when there is more than one option to decide among and there are several criteria to consider for making a good, reliable, and final decision.

To create a decision matrix, we followed several steps described by Xu et al. (2001). The first step was to identify the criteria and alternatives, and this had already been completed in the feature extraction phase. Then, we built the matrix using alternatives as columns and criteria as rows. The criteria and alternatives used to construct the decision matrix were extracted from the student and instructor surveys and reported incidents. The matrix was kept uniform and consistent throughout the study. To measure the direction of the relationship between two variables, here the criterion-alternative pair at each row and column of the matrix, we used the covariance method. Covariance measures the relationship between the movements of two variables and indicates how they vary together (Wu et al., 2012). The covariance of two variables is calculated using the following formula: \(\mathrm{COV}_{x,y} = \frac{\sum_{i=1}^{N} \left(x_{i} - \overline{x}\right)\left(y_{i} - \overline{y}\right)}{N-1}\), where \(\overline{x}\) and \(\overline{y}\) are the sample means of the two variables and \(N\) is the number of observations.
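The covariance formula can be checked on a small example. The following is a minimal sketch in plain Python; the function name and the two sample lists (`study_hours`, `satisfaction`) are hypothetical and are not taken from the survey data.

```python
def sample_covariance(x, y):
    """Sample covariance of two equally long sequences (divides by N - 1)."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)
    if n < 2:
        raise ValueError("at least two observations are required")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of products of deviations from the means, divided by N - 1.
    return sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / (n - 1)


# Hypothetical responses: one criterion and one alternative, five respondents.
study_hours = [1, 2, 3, 4, 5]
satisfaction = [2, 4, 5, 4, 5]

cov = sample_covariance(study_hours, satisfaction)
print(cov)
```

A positive value indicates that the two variables tend to move in the same direction, and a negative value that they move in opposite directions.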

This led to the creation of two matrices: the student covariance matrix and the instructor covariance matrix. We combined the two matrices into a single matrix by calculating the average of both.
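Combining the two matrices amounts to an element-wise average. A minimal sketch, assuming both matrices share the same criteria (rows) and alternatives (columns); the values and names below are illustrative only.

```python
def average_matrices(first, second):
    """Element-wise average of two matrices with identical shapes."""
    same_rows = len(first) == len(second)
    if not same_rows or any(len(a) != len(b) for a, b in zip(first, second)):
        raise ValueError("matrices must have the same shape")
    return [
        [(a + b) / 2 for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(first, second)
    ]


# Hypothetical covariance matrices: rows are criteria, columns are alternatives.
student_matrix = [[1.0, 0.5], [0.25, 2.0]]
instructor_matrix = [[3.0, 1.5], [0.75, 1.0]]

combined = average_matrices(student_matrix, instructor_matrix)
print(combined)
```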

The combined matrix was then normalized. Normalization is important because it rescales the values of a numerical data set to a common scale in the range [0, 1] without distorting the relative differences between values (Bronston, 1976). To normalize the matrix, we divided each cell value in the matrix by the total of its row.
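The row-wise normalization described above can be sketched as follows. The sketch assumes non-negative cell values, which is what keeps the result inside [0, 1]; the function name and the sample matrix are hypothetical.

```python
def normalize_rows(matrix):
    """Divide every cell by the total of its row so that each row sums to 1."""
    normalized = []
    for row in matrix:
        total = sum(row)
        if total == 0:
            raise ValueError("row total is zero; the row cannot be normalized")
        normalized.append([value / total for value in row])
    return normalized


# Hypothetical combined matrix: rows are criteria, columns are alternatives.
combined = [[2.0, 1.0, 1.0], [0.5, 1.5, 2.0]]

normalized = normalize_rows(combined)
print(normalized)
```

If a row contained negative covariances, the divided values could fall outside [0, 1], so such rows would need separate handling that is not covered here.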

We used a 5-point Likert scale (1–5) to rank alternatives. The Likert scale was used to measure the acceptance and validity of the criteria against the alternatives (Joshi et al., 2015). After a rank was assigned to each alternative, we calculated the weight of each alternative by dividing its rank score by the sum of all rank scores. The weights were used in the WSM and WPM.
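The weight calculation can be illustrated with a short sketch: each Likert rank score is divided by the sum of all rank scores, so the weights add up to 1. The alternative names and scores are made up for illustration.

```python
def weights_from_ranks(rank_scores):
    """Turn Likert rank scores into weights that sum to 1."""
    total = sum(rank_scores.values())
    if total <= 0:
        raise ValueError("the sum of rank scores must be positive")
    return {name: score / total for name, score in rank_scores.items()}


# Hypothetical Likert ranks (1 to 5) for three alternatives.
likert_ranks = {"A1": 5, "A2": 3, "A3": 2}

weights = weights_from_ranks(likert_ranks)
print(weights)
```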

The next subsections describe the remaining steps in the MCDM process.

3.1.3 Decision making algorithms

All criteria in an MCDM model can be classified into two categories: criteria that are high in value have high rankings, and criteria that are low in value have low rankings (Miljković et al., 2017). In the following subsections, we detail the MCDM methods used: the WSM, WPM, and AHP. The best alternatives resulting from all three methods were carried forward to the recommendation phase.

WSM

The WSM is used in many fields such as robotics and data processing. It is one of the MCDM methods used to determine the best alternative based on multiple criteria. Below is the weighted sum formula used in this study:

\({\mathrm{A}}_{j}^{wsm}=\sum_{i=1}^{n}{w}_{i}{a}_{ij}, j=1,\dots ,m\)

where \({w}_{i}\) is the weight, \({a}_{ij}\) is the value of the alternative at row \(i\) and column \(j\) in the matrix, \(n\) is the number of criteria, and \(m\) is the number of alternatives. The values in the matrix are normalized (Budiharjo & Muhammad, 2017). The normalized values are dimensionless and range between 0 and 1. The total of the weighted sum based on the criteria is calculated, and the scores are ranked to select the best alternative. In this study, we ranked the alternatives and then added all the ranks to calculate the sum of ranks. After that, each rank was divided by the sum of ranks to produce the weight for each alternative. Finally, the sum of all values for each alternative was reported as the WSM result, which was used to identify the best alternatives.
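A minimal sketch of weighted sum scoring is given below, assuming a matrix with criteria as rows and alternatives as columns and one weight per row, as in the symbol definitions above. The matrix values, weights, and function name are illustrative assumptions, not data from the study.

```python
def weighted_sum_scores(matrix, weights):
    """Score of alternative j = sum over criteria i of weights[i] * matrix[i][j]."""
    n_criteria = len(matrix)
    n_alternatives = len(matrix[0])
    return [
        sum(weights[i] * matrix[i][j] for i in range(n_criteria))
        for j in range(n_alternatives)
    ]


# Hypothetical normalized decision matrix: 2 criteria (rows) x 3 alternatives (columns).
matrix = [
    [0.2, 0.5, 0.3],
    [0.6, 0.1, 0.3],
]
weights = [0.7, 0.3]

scores = weighted_sum_scores(matrix, weights)
best = max(range(len(scores)), key=scores.__getitem__)  # index of the top alternative
```

The alternative with the largest score is taken as the best one under this method.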

WPM

The weighted product is an MCDM method. To calculate the weighted product, the ranks and weights of the alternatives were considered as discussed for the WSM. However, this method multiplies all derived values of an alternative, each raised to the power of its weight, to score the alternatives (Budiharjo & Muhammad, 2017). To calculate the score, the following formula is used:

\({\mathrm{A}}_{j}^{wpm}=\prod_{i=1}^{n}{a}_{ij}^{{w}_{i}}, j=1,\dots ,m\)

where \({\mathrm{A}}_{j}^{wpm}\) is the weighted product score value, \({a}_{ij}^{{w}_{i}}\) is the alternative value for the \({i}^{th}\) row and \({j}^{th}\) column raised to the power of the weight of row \(i\) (criterion), \(n\) is the number of criteria, and \(m\) is the number of alternatives.
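The weighted product score can be sketched in the same way, replacing the sum with a product and the multiplication with exponentiation. The matrix and weights below are invented for illustration, and the function name is an assumption.

```python
import math


def weighted_product_scores(matrix, weights):
    """Score of alternative j = product over criteria i of matrix[i][j] ** weights[i]."""
    n_criteria = len(matrix)
    n_alternatives = len(matrix[0])
    return [
        math.prod(matrix[i][j] ** weights[i] for i in range(n_criteria))
        for j in range(n_alternatives)
    ]


# Hypothetical normalized matrix: 2 criteria (rows) x 2 alternatives (columns).
matrix = [
    [0.25, 0.64],
    [0.16, 0.04],
]
weights = [0.5, 0.5]

scores = weighted_product_scores(matrix, weights)
best = max(range(len(scores)), key=scores.__getitem__)
```

Because the scores are products, a single very small value for one criterion pulls the whole score of an alternative down more strongly than it would in the weighted sum.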

AHP

AHP is one of the most widely used decision-making methodologies. Many researchers adopt the AHP because of its appealing mathematical qualities, and it is applied in a variety of sectors around the world, including business, government, industry, education, and health. AHP is made up of strategies that are effective at ranking significant management challenges (Forman & Gass, 2001). Moreover, this method allows for consistency in judgment, where inconsistent opinions or judgments are checked and reduced. The strategy focuses on prioritizing selection criteria and discriminating between the more and less important criteria and alternatives.

The process begins with the creation of a hierarchy in which objectives are highlighted and alternatives are specified. Then a square matrix is created for comparisons, where alternatives are compared with one another and the optimal alternative (solution) is selected based on weighting coefficients. Numerical values must be assigned to the elements of the matrix so that they can be compared and produce the outcome, which is the ranking of alternatives. The square matrix of comparisons, also known as the relative importance matrix, is generated by comparing the components in pairs, one against the other, and is denoted by \({A}_{1}={\left({a}_{1,ij}\right)}_{m\times m}\).

The relative importance of alternative \(i\) to alternative \(j\) is represented by the element \({a}_{1,ij}\). Note that the transposed element is defined as the reciprocal: \({a}_{1,ji}=\frac{1}{{a}_{1,ij}}, i,j=1,\dots ,m.\)

The decision-makers (students and instructors) derive a preference weight vector \(W=\left({w}_{1},{w}_{2},\dots ,{w}_{m}\right)\), where \({w}_{i}\) denotes the absolute preference value of the \({i}^{th}\) alternative. Therefore, \({a}_{1,ij}=\frac{{w}_{i}}{{w}_{j}}\), which leads to \({a}_{1,ii}=1\), for all \(i=1,\dots ,m.\) The next step is to normalize the pairwise comparison matrix \({A}_{1}\). The normalized pairwise comparison matrix is denoted by \({A}_{2}\), with elements defined as \({a}_{2,ij}=\frac{{a}_{1,ij}}{\sum_{i=1}^{m}{a}_{1,ij}}, i,j=1,\dots ,m\). This normalization is called the distributive mode of AHP. Hence, the criteria weights vector is given by \(CW=c({cw}_{1},\dots ,{cw}_{m})\) where \({cw}_{i}=\frac{1}{m}\sum_{j=1}^{m}{a}_{2,ij}\), \(i=1,\dots ,m.\)
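The column normalization and row averaging described above can be sketched as follows. The 3 × 3 comparison matrix is a hypothetical, perfectly consistent example built from the preference vector (4, 2, 1); it is not the matrix used in the study, and the function name is an assumption.

```python
def ahp_weights(a1):
    """Distributive-mode AHP weights: normalize each column, then average each row."""
    m = len(a1)
    col_sums = [sum(a1[i][j] for i in range(m)) for j in range(m)]
    a2 = [[a1[i][j] / col_sums[j] for j in range(m)] for i in range(m)]
    return [sum(row) / m for row in a2]


# Hypothetical reciprocal comparison matrix with a1[i][j] = w_i / w_j for w = (4, 2, 1).
a1 = [
    [1, 2, 4],
    [1 / 2, 1, 2],
    [1 / 4, 1 / 2, 1],
]
cw = ahp_weights(a1)
```

For a perfectly consistent matrix such as this one, the resulting weights are simply the preference vector rescaled to sum to 1.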

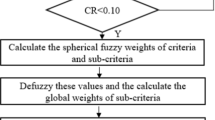

Now, for the consistency analysis, a new matrix \({A}_{3}\) is created, defined as \({A}_{3}={A}_{1}\times diag\left({cw}_{1},\dots ,{cw}_{m}\right)={\left({a}_{3,ij}\right)}_{m\times m}\) where \({a}_{3,ij}={a}_{1,ij}\times {cw}_{j}\). The weighted sum value is \(WS=c\left({ws}_{1},\dots ,{ws}_{m}\right)\), where \({ws}_{i}=\sum_{j=1}^{m}{a}_{3,ij}\). The ratio of consistency \({R}_{i}=\frac{{ws}_{i}}{{cw}_{i}}, i=1,\dots ,m\) is used to calculate the consistency index \(CI= \frac{{\lambda }_{max}-m}{m-1}\) where \({\lambda }_{max}=\frac{1}{m}\sum_{i=1}^{m}{R}_{i}.\) Finally, the consistency ratio is defined as \(CR=\frac{CI}{RI}\), where \(RI\) denotes the Random Index, the average consistency index of randomly generated comparison matrices of the same size. If the \(CR\) is less than 10%, the matrix is said to be consistent, and consequently the AHP is used by ranking the criteria weights to find the most preferred alternative.
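The consistency analysis can be sketched as a short function that follows the definitions of \(WS\), \({R}_{i}\), \({\lambda }_{max}\), \(CI\), and \(CR\) given above. The example matrix and weights are hypothetical and perfectly consistent, and the Random Index value of 0.58 for a 3 × 3 matrix is the commonly tabulated value, stated here as an assumption.

```python
def consistency_ratio(a1, cw, ri):
    """Return (lambda_max, CI, CR) for comparison matrix a1 and weights cw."""
    m = len(a1)
    # ws_i is the row sum of A3, where a3[i][j] = a1[i][j] * cw[j].
    ws = [sum(a1[i][j] * cw[j] for j in range(m)) for i in range(m)]
    ratios = [ws[i] / cw[i] for i in range(m)]
    lambda_max = sum(ratios) / m
    ci = (lambda_max - m) / (m - 1)
    return lambda_max, ci, ci / ri


# Hypothetical consistent matrix and its weights (preference vector 4 : 2 : 1).
a1 = [
    [1, 2, 4],
    [1 / 2, 1, 2],
    [1 / 4, 1 / 2, 1],
]
cw = [4 / 7, 2 / 7, 1 / 7]

lambda_max, ci, cr = consistency_ratio(a1, cw, ri=0.58)  # ri: assumed value for m = 3
is_consistent = cr < 0.10
```

In the consistent case \({\lambda }_{max}\) equals \(m\), so both \(CI\) and \(CR\) are zero; judgments that contradict one another push \({\lambda }_{max}\) above \(m\) and increase the ratio.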

Accordingly, a random value is awarded to compare two alternatives based on the relationship between the two. These rankings are between 1 and 5 both inclusive. A strong relationship is denoted by 5 and a weak relationship is denoted by 1.

3.2 Recommendation model

The recommendation model is specifically based upon the ranking system. With the help of mathematical analysis, the link between alternatives and criteria has been created effectively. The use of mathematical evaluation and methodologies in data analytics is essential as it allows the user to determine the relationships among variables (Kumar & Chong, 2018). The data collected involved survey and incident data, which are amalgamated in an effective manner to perform the analysis (Ko et al., 2022).

The MCDM model was used to extract the key alternatives that are essential to the core practices in the recommendation model. The best alternatives serve as an input for the recommendation model we built. The recommendation model analyzes the parameters to identify whether an aspect or area of distance learning is favorable for a particular stakeholder and provides the appropriate recommendation accordingly. In other words, the overall objective is to construct the basis of a learning strategy or recommendations for the concerned stakeholder to excel and overcome the challenges in distance learning (Ameri, 2013). The recommendation model is thus intended to improve the likelihood that students adapt to the new distance learning environment. The recommendation model involves the following core elements.

- Decision-making criteria methods
- Ranking system
- Best alternatives
- Recommendations

The decision-making criteria method derives the best alternatives using the ranking system. The ranking system helps to identify the most viable outcome and gives recommendations accordingly.

We collected and ranked the best alternatives obtained from the WSM, WPM, and AHP methods. We assigned a rank of 5 to the highest-scoring alternative and 1 to the lowest-scoring alternative.

We created a unified table containing all the best alternatives. We then calculated the total ranking score of each of the best alternatives by adding their scores from the WSM, WPM, and AHP methods. The table lists the best-scored alternatives in the following order: teaching environment, opportunity to demonstrate learning, participation, motivation to learn, professional development training, satisfaction level, ability to understand curriculum, and effectiveness of distance learning. Table 3 below shows the order of the best alternatives and the number of recommendations proposed for each alternative.
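The aggregation of ranks across the three methods can be sketched as a simple tally. The per-method ranks below are hypothetical placeholders chosen for illustration; they are not the values reported in the tables of this study.

```python
def total_rank_scores(method_ranks):
    """Add up the rank each alternative received from every method, highest total first."""
    totals = {}
    for ranks in method_ranks.values():
        for alternative, rank in ranks.items():
            totals[alternative] = totals.get(alternative, 0) + rank
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical ranks (5 = best) for the top alternatives of each method.
method_ranks = {
    "WSM": {"teaching environment": 5, "satisfaction level": 4,
            "opportunity to demonstrate learning": 3},
    "WPM": {"participation": 5, "motivation to learn": 4,
            "teaching environment": 3},
    "AHP": {"professional development training": 5,
            "opportunity to demonstrate learning": 4,
            "teaching environment": 3},
}
ordered = total_rank_scores(method_ranks)
```

An alternative that appears among the best of several methods accumulates a higher total than one that is ranked first by a single method only.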

An interactive recommendation system was created to suggest several actions to users through the adoption of a Web portal. Two web pages have been created (an instructor webpage and a student webpage). Each webpage contains a set of questions that should be answered by the user within a predefined set of ranges. After the user answers the form questions, the interactive recommendation system generates recommendations accordingly.

To analyze the data collected from users through the surveys we have created a static recommendation model. This model is used to suggest recommendations for each user based on their survey-populated data. The static recommendation model takes as input the user ID used in the survey data, and the model will retrieve all answers for that specific user. Recommendations will be given accordingly based on the data score of the user. An example is shown in Figs. 3 and 4.
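The lookup performed by the static recommendation model can be sketched as follows. Everything in this example is an assumption made for illustration: the user ID, the scores, the advice texts, and the threshold of 3 are invented, and the actual rules of the system are not reproduced here.

```python
# Hypothetical survey answers keyed by user ID (Likert scores, 1 to 5).
SURVEY_ANSWERS = {
    "S001": {"teaching environment": 2, "motivation to learn": 4, "participation": 1},
}

# Placeholder advice texts, invented for illustration only.
ADVICE = {
    "teaching environment": "Arrange a quieter study space for online classes.",
    "motivation to learn": "Set weekly learning goals with your instructor.",
    "participation": "Join at least one discussion activity per lecture.",
}


def recommend(user_id, threshold=3):
    """Return advice for every alternative the user scored below the threshold."""
    answers = SURVEY_ANSWERS[user_id]  # raises KeyError for an unknown user ID
    return [ADVICE[alt] for alt, score in answers.items() if score < threshold]


recommendations = recommend("S001")
```

The interactive variant would differ only in where the answers come from: a web form instead of stored survey records.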

4 Experiments

In this section, we cover the experimentation and evaluation phase, including survey creation and evaluation, data collection, and analysis. After the surveys were validated, they were distributed to instructors and students, and the data were collected. Moreover, additional data were collected from the help desk and service center through the UAEU IT technical center reporting panel. The collected data were evaluated, cleaned, and analyzed. We examined the data collected and extracted the main keywords and their frequency of occurrence. Then, we created groups that represent the main keywords; for example, “Blackboard” and “BB” were assigned to the same group, as they represent the same topic. Thereafter, data from surveys and incidents reported at UAEU were examined to extract relevant criteria and corresponding alternatives. Incidents are reported through the UAEU IT technical support and service portal by students and instructors when they face any challenges during online teaching. The data set contains approximately 800 data records, from which 27 keywords were filtered. Figure 5 provides a visual representation of incidents in percentages. “Lockdown Browser” made up 40% of the total incident count, “Blackboard” 27%, “courses” 21%, “technical issues” 4%, “recordings” 3%, “textbooks” and “applications” 2% each, and “mail” 1%.
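The keyword grouping and frequency count can be sketched as follows. Only the pairing of “Blackboard” with “BB” comes from the text; the alias table, the incident texts, and the function names are hypothetical.

```python
import re
from collections import Counter

# Hypothetical alias table: each group lists the keywords that map to it.
ALIASES = {
    "Blackboard": ["blackboard", "bb"],
    "Lockdown Browser": ["lockdown browser"],
}


def group_incidents(incidents):
    """Count incidents per keyword group, matching whole words case-insensitively."""
    counts = Counter()
    for text in incidents:
        for group, aliases in ALIASES.items():
            if any(re.search(rf"\b{re.escape(a)}\b", text, re.IGNORECASE)
                   for a in aliases):
                counts[group] += 1
    return counts


def percentages(counts):
    """Share of each group in the total count, rounded to whole percent."""
    total = sum(counts.values())
    return {group: round(100 * n / total) for group, n in counts.items()}


# Invented incident descriptions for illustration.
incidents = [
    "BB login fails",
    "Cannot open Blackboard course",
    "Blackboard grade centre error",
    "Lockdown Browser crashes",
]
counts = group_incidents(incidents)
shares = percentages(counts)
```

Matching on word boundaries avoids counting a short alias such as "bb" when it merely occurs inside a longer word.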

After completing the survey evaluation, the finalized questionnaires were distributed. The survey was created using Google Forms and made available to students and faculty members for one month, from mid-February to mid-March 2021. The study population included 327 students and 76 faculty members. The collected data consisted of text and numbers and were cleaned and prepared for analysis.

4.1 Survey creation

Because of the prevalence of distance learning, it is critical to examine the effectiveness of both distance learning and the learning process. Therefore, we created a questionnaire that we distributed to students and instructors. We considered the educational levels of both instructor and student participants. Thus, in the student survey, we inquired about the current level of study the student is pursuing, while for instructors, we asked what levels they teach. The instructors have also completed professional development and training courses on teaching methods and practices that allow students to participate in lifelong learning; therefore, these areas were covered in our questionnaire. Likewise, because instructors have addressed the effectiveness and the technical and educational difficulties of online learning, these areas were also covered in our survey.

Shih et al. included in their questionnaire the areas of user’s motivation to engage in distance learning, the support provided by the institution for any challenges faced through distance learning, and the suitability of the courses for students. In our survey, we included questions about courses that are more specific; for example, we asked about the number of courses students take per semester and the number of hours they spend attending online lectures. We also covered group discussions and peer support in our survey (Shih et al., 2003).

4.1.1 Student survey

The student survey consisted of the following questions.

1. What is your name? (optional)
2. How old are you?
3. What is your gender?
4. What educational level have you achieved?
5. How many distance learning classes do you take per semester?
6. How many hours a day do you attend classes via distance learning?
7. How satisfied are you with the distance learning?
8. How motivated are you to pursue distance learning?
9. How effective has the distance learning been for your education?
10. Is your home environment quiet and peaceful enough for distance learning?
11. How eager are you to participate in remote learning classes?
12. Can you understand the lessons taught, and do they cover the curriculum?
13. Are you getting feedback from your instructor?
14. Did the stress caused by the pandemic affect your academic performance?
15. Do you have a proper device for attending distance learning classes?
16. Is your Internet fast and stable?
17. Did you miss any exams or classes because of technical issues?
18. How often do you face a technical issue?
19. Has your institution provided support for any technical issues you have faced?
20. What devices are you using to attend your distance learning classes?
21. Do you struggle with any software or tool provided by your instructor for distance learning?
22. Are enough devices available within your household for each family member to use at the same time?

A data sample of 26 records from the student survey was collected for the analysis. The Likert scale from 1 to 5 was used for the numerical questions.

4.1.2 Instructor survey

We collected data from seven instructors with the same Likert scale used for the student survey, using the following questions:

- What is your name? (optional)
- What is your gender?
- Which level do you teach?
- How often do students have a one-on-one discussion with you?
- How helpful were your peers (colleagues) in supporting you through distance learning?
- How stressful was distance learning for you during this pandemic?
- Are you enjoying teaching students remotely?
- How satisfied are you with the distance learning?
- Is your home environment quiet and peaceful enough for distance learning?
- Are you receiving any professional development or other training to help you with distance learning?
- Are students gaining as much knowledge through distance learning as they did in traditional learning?
- Did your workload increase during distance learning?
- How do you evaluate the level of interaction of students in the lecture?
- Do you give students opportunities to demonstrate their learning?
- How many classes do you teach through distance learning?
- Is the worry about the pandemic affecting your teaching skills?
- Do you have a proper device to conduct distance learning classes?
- Is your Internet fast and stable?
- How often do you face technical issues?
- Has your institution provided support for any of the technical issues you have faced?
- Do you struggle with any software or tool you use to conduct your distance learning classes?
- Have you missed any classes because of technical issues?
- What devices are you using to present your distance learning classes?

4.2 Survey evaluation

The evaluation is a key factor in the analysis phase, as it is used to determine the relevance of each survey question to the proposed topic. To develop an MCDM model, we needed to gather data from different sources; therefore, we constructed two surveys, an instructor survey and a student survey, and collected incident data from UAEU. Before distributing the surveys to the study population, we evaluated the questionnaires using a 10% sample of the population to collect sample data and determine the validity and reliability of the surveys (Schwarz, 2007). To evaluate the internal consistency between the questionnaires’ items, we used Cronbach’s alpha. Moreover, the correlations between the survey questions were examined to assess the strength of the relationships.

The survey was evaluated and reviewed by experts, a process known as expert-based evaluation. During the evaluation, the following aspects were checked: the effectiveness, efficiency, interactivity, and satisfaction of the survey; language; the structure of the questions; and relevance. Two instructors gave feedback on the instructor and student surveys, and two students gave feedback on the student survey (Ikart, 2019). The feedback covered how the questions were asked (word choice), and some feedback was related to the answer options. The survey contained only answer options and no open-ended questions. The feedback was considered, and both surveys were updated to incorporate the constructive feedback. Cronbach’s alpha was calculated for both surveys and yielded 98% and 93% for students and instructors, respectively (Hof, 2012).
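Cronbach’s alpha can be computed from the item variances and the variance of the respondents’ total scores. The sketch below uses the standard formula with sample variances; the responses are invented and do not come from the surveys of this study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """responses: one list of k item scores per respondent."""
    k = len(responses[0])
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(r) for r in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical Likert answers: 4 respondents x 3 questions.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
]
alpha = cronbach_alpha(responses)
```

Values close to 1 indicate that the items vary together across respondents, which is the sense in which the questionnaire is internally consistent.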

4.3 Data analysis

In the following subsections, we detail the results of data analysis using the MCDM methods of the WSM, WPM, and AHP.

4.3.1 WSM

The results of the WSM appear in Table 4.

From Table 4, we can deduce that the highest-scoring alternatives are teaching environment, satisfaction level, opportunity to demonstrate learning, motivation to learn, and professional development training.

4.3.2 WPM

The WPM formula was applied to the normalized weighted matrix. The output of the weighted product total value is depicted in Table 5.

From Table 5, we can conclude that the highest scores were for the following alternatives, in order: participation, motivation to learn, teaching environment, ability to understand the curriculum, and effectiveness of distance learning.

4.3.3 AHP

Using the AHP methodology on the data collected from the surveys, we calculated the A1 matrix, which is shown in Table 6, to create a new column in the decision matrix with values as given in Table 7. To assign weights for each alternative in the A1 matrix, we involved domain knowledge experts such as educators and distance learning specialists. The experts were selected carefully to make sure that the collected inputs are relevant and consistent. A couple of iterations with the experts were conducted to resolve some discrepancies, and consensus on the results was reached.

The sum of these values was calculated using the following formula:

From the A4 matrix, we get \({\lambda }_{max}\) = 12.49054 and the consistency index \(CI\) = \(\frac{12.49054-11}{11-1}\) = 0.149054.

Next, the consistency ratio was calculated based on Table 8, in which the upper row is the number of alternatives, and the lower row is the corresponding index of consistency for random judgments. The random index for our model is 1.51 because we have 11 alternatives (Saaty, 1987).

Hence, since CR = \(\frac{0.149054}{1.51}\) = 0.098711 < 0.1, we can conclude that the pairwise comparison matrix is consistent, and the rankings obtained from the AHP are valid.
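The reported consistency figures can be checked with a few lines of arithmetic. The sketch below only re-evaluates the formulas with the values stated in this section (\({\lambda }_{max}\) = 12.49054, 11 alternatives, and a random index of 1.51); the variable names are assumptions.

```python
lambda_max = 12.49054  # reported principal eigenvalue estimate
m = 11                 # number of alternatives
ri = 1.51              # random index for m = 11, as stated in the text

ci = (lambda_max - m) / (m - 1)  # consistency index
cr = ci / ri                     # consistency ratio
is_consistent = cr < 0.10        # 10% threshold
```

The ratio lies just under the 10% threshold, so the conclusion is sensitive to small changes in the pairwise judgments.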

4.3.4 Analysis of results

To understand the best outcomes of the results, we need to further analyze the best alternatives from each method. We ranked the highest score as 5 and the lowest score as 1. The best alternatives and ranking, respectively, from the highest to the lowest, are depicted in Table 4 for the WSM, Table 5 for the WPM, and Table 9 for the AHP. To determine the overall rating value for each of the best alternatives, we added the values to determine the best alternatives and their overall value from the three methods combined as shown in Fig. 6.

As shown in the table, the highest ranked alternative is teaching environment, followed by opportunity to demonstrate learning, participation, motivation to learn, professional development training, satisfaction level, ability to understand the curriculum, and effectiveness of distance learning, in descending order.

4.3.5 Discussion

In this section, we provide a general discussion and detailed analysis of the results obtained from the experiments.

WSM is considered one of the most widely used approaches in MCDM. The calculation of weighted sum values reveals the most desired and best-ranked alternatives for distance learning. By adopting this methodology, we scaled down a wide set of alternatives into a selected set of ranked alternatives. In our study, the WSM results ranked the teaching environment as the most important alternative for distance learning. When the environment in which learning and teaching take place is quiet and peaceful, students and instructors can perform well in distance learning. The next best alternative is the satisfaction level of the learner or educator. The more satisfied the learner is, the better the performance and output will be. Next in the rank list is the opportunity to demonstrate learning, which is a key point in any educational system, as every learner needs to be provided with an opportunity to express or demonstrate the knowledge they have acquired. Motivation to learn and professional development are also ranked among the best alternatives by the WSM methodology.

Another widely used methodology in MCDM is the weighted product method. This method identified participation in learning, a key component of distance learning, as the top-ranked alternative. Learners tend to drift away from their studies in distance education, which lowers their performance level. The WPM also ranked motivation and environment as key alternatives for distance learning in this research. Therefore, learning motivation and teaching environment are considered among the best alternatives for distance education. The ability to understand the curriculum is also an important aspect selected and ranked by the WPM. The effectiveness of the learning method is also ranked among the best alternatives.

The analytic hierarchy process is used to make decisions in complicated situations where many variables or criteria are examined for prioritizing and selecting the best alternatives. The AHP methodology in our study shows that professional development is the most important alternative in the distance learning education system. Educators must constantly update their technology and pedagogical knowledge to stay current with the global education system. This can be achieved by regularly attending professional development courses. Opportunity to demonstrate learning and teaching environment are also key alternatives identified by the AHP. This result conforms with the WSM ranking results. Participation is also ranked among the best alternatives for distance education in this study. The ability to understand the curriculum is also important, as it allows the learner to understand the lessons of the course and participate accordingly.

We combined the results acquired by all three methodologies, WSM, WPM, and AHP, to build a list of the best alternatives for distance learning. Overall, the teaching and learning environment remained the top alternative. According to this research, an engaged learning environment has been demonstrated to boost students' attention and focus, promote meaningful learning experiences, support greater levels of student achievement, and push students to practice higher-level critical thinking abilities. The opportunity to demonstrate learning ranked second. Students can exhibit their knowledge by participating in discussions and lessons, and by giving lectures or presentations. Motivation to learn is also one of the top-ranked alternatives. Motivation is a state of mind that prompts and sustains students to be active and engaged during their studies. Professional development remained a top-ranked alternative, where educators are encouraged to demonstrate good performance and up-to-date knowledge to deliver high-quality education through distance learning. Participation during learning, which is one of the top alternatives, allows students to express their knowledge and understanding of the lessons. Other top-ranked alternatives identified overall by this research include the ability to understand the curriculum, satisfaction level in the learning process, and the effectiveness of distance learning.

5 Conclusion

The COVID-19 pandemic disrupted the normal course of life of people all around the world, including their education. In this paper, we study the proficiency of distance learning through the adoption of a multi-criteria decision-making model. The model analyzes distance learning-related data collected from the UAEU incident reporting system and through surveys. The study seeks to present a distance learning evaluation approach that includes a comprehensive list of criteria and alternatives related to e-learning. The collected data used in the MCDM model construction underwent preprocessing and normalization to derive an optimized data model leading to a set of recommendations. The outcomes of the MCDM model were fed into a recommendation model to improve distance learning as perceived by all the stakeholders. A thorough literature review was performed to fully understand the MCDM model as well as the different methodologies used to implement it. Our research model uses three well-known methodologies, the WSM, WPM, and AHP, for selecting and ranking alternatives, and eventually deriving the best alternatives to improve distance learning. The best alternatives serve as an input to a recommendation model, which consists of two main approaches: (a) a static recommendation model, which provides recommendations based on the existing data collected via surveys, and (b) an interactive recommendation model, which provides recommendations through an interactive web interface.

The MCDM model was used to reveal the best alternatives based on a set of criteria, which can be used to enhance the comfort of using distance learning for various stakeholders. The MCDM model in this study indicates that the best alternatives are teaching environment, opportunity to demonstrate learning, participation, motivation to learn, professional development training, satisfaction level, ability to understand the curriculum, and effectiveness of distance learning.

This research complements the multi-criteria decision-making model with a recommendation system for distance learning. It could serve as a roadmap or a guideline for relevant future research. The application of machine learning associated with MCDM can be studied as future work for better analytics and predictions of distance learning efficiency. Additionally, different MCDM methodologies, such as TOPSIS and VIKOR, can be examined. Integrating MCDM methodologies directly into institutional systems can also be studied as future work. This paper evaluates the distance learning alternatives and discusses how recommendations can help students and instructors develop better learning outcomes by adopting the proposed recommendations.

Data availability

The survey data collected from the student and teacher questionnaires are available; however, the data collected from the University helpdesk on reported technical issues cannot be published, as approval is required to publish these data.

References

Abuhassna, H., & Yahaya, N. (2018). Students’ utilization of distance learning through an interventional online module based on Moore transactional distance theory. Eurasia Journal of Mathematics, Science and Technology Education, 14(7), 3043–3052.

Adnan, M., & Anwar, K. (2020). Online learning amid the COVID-19 pandemic: Students' perspectives. Online Submission, 2(1), 45–51.

Adomavicius, G., Manouselis, N., & Kwon, Y. (2011). Multi-criteria recommender systems. In Recommender Systems Handbook. Springer US.

al Lily, A. E., Ismail, A. F., Abunasser, F. M., & Alqahtani, R. H. A. (2020). Distance Education as a response to pandemics: coronavirus and arab culture. Technology in Society, 63, 101317.

Ali, W. (2020). Online and remote learning in higher education institutes: A necessity in light of COVID-19 pandemic. Higher Education Studies, 10(3), 16.

Alqahtani, A. Y., & Rajkhan, A. A. (2020). E-learning critical success factors during the Covid-19 pandemic: a comprehensive analysis of e-learning managerial perspectives. Education Sciences, 10(9), 1–16.

Alonso, J. A., & Lamata, M. T. (2006). Consistency in the analytic hierarchy process: a new approach. International journal of uncertainty, fuzziness and knowledge-based systems, 14(04), 445–459.

Ameri, A. (2013). Application of the analytic hierarchy process (AHP) for prioritize of concrete pavement. Global Journal of human social science interdisciplinary, 13(3), 19–28.

Awan, M., Bhaumik, A., Hassan, S., & Haq, I. U. (2020). Covid-19 pandemic, outbreak educational sector and students online learning in Saudi Arabia. Journal of Entrepreneurship Education, 23(3), 1–14. https://www.researchgate.net/publication/341714040_Covid-19_pandemic_outbreak_educational_sector_and_students_online_learning_in_Saudi_Arabia. Accessed 18 Jan 2023.

Bozkurt, A. (2019). From distance education to open and distance learning: A holistic evaluation of history, definitions, and theories. In Handbook of Research on Learning in the Age of Transhumanism, pp. 252–273.

Bronston, W. G. (1976). Concepts and theory of normalization. The Mentally Retarded Child and His Family, ed. Richard Koch and James Dobson, 490–516. https://mn.gov/mnddc/parallels2/pdf/70s/74/74-CTN-WGB.pdf. Accessed 18 Jan 2023.

Budiharjo, A. P. W., & Muhammad, A. (2017). Comparison of weighted sum model and multi attribute decision making weighted product methods in selecting the best elementary school in indonesia. International Journal of Software Engineering and Its Applications, 11(4), 69–90.

Burgstahler, S., Corrigan, B., & McCarter, J. (2004). Making distance learning courses accessible to students and instructors with disabilities: A case study. Internet and Higher Education, 7(3), 233–246.

Chitra, A. P., & Raj, M. A. (2018). Recent trend of teaching methods in education. Organised by Sri Sai Bharath College of Education Dindigul-624710. India Journal of Applied and Advanced Research, 2018(3), 11–13. https://www.phoenixpub.org/journals/index.php/jaar.

Dawson, R., & British Computer Society. (2006). Learning and teaching issues in software quality. British Computer Society.

Deniz, D. Z., & Ersan, I. (2002). An academic decision support system based on academic performance evaluation for student and program assessment. International Journal of Engineering Education, 18(2), 236–244.

Dhawan, S. (2020). Online learning: A panacea in the time of COVID-19 crisis. Journal of Educational Technology Systems, 49(1), 5–22.

Erol, K., & Danyal, T. (2020). Analysis of distance education activities conducted during COVID-19 pandemic. Educational Research and Reviews, 15(9), 536–543.

Farkaš, B., & Hrastov, A. (2021). Multi-criteria analysis for the selection of the optimal mining design solution: A case study on quarry “Tambura.” Energies, 14(11).

Fatonia, N. A., Nurkhayatic, E., Nurdiawatid, E., Fidziahe, G. P., Adhag, S., Irawanh, A. P., ... & Azizik, E. (2020). University students online learning system during Covid-19 pandemic: Advantages, constraints and solutions. Systematic reviews in pharmacy, 11(7), 570–576.

Forman, E. H., & Gass, S. I. (2001). The analytic hierarchy process—an exposition. Operations Research, 49(4), 469–486.

Griffiths, B. (2016). A faculty’s approach to distance learning standardization. Teaching and Learning in Nursing, 11(4), 157–162.

Hof, M. (2012). Questionnaire Evaluation with Factor Analysis and Cronbach’s Alpha An.

Hunter, M., & Carr, P. (2004). Technology in distance education. Journal of Global Information Management, 10, 50–54.

Hwang, C.-L., & Yoon, K. (1981). Methods for multiple attribute decision making. In Multiple Attribute Decision Making. Lecture Notes in Economics and Mathematical Systems, vol 186. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-48318-9_3

Ikart, E. M. (2019). Survey questionnaire survey pretesting method: An evaluation of survey questionnaire via expert reviews technique. Asian Journal of Social Science Studies, 4(2), 1.

Jassbi, J. J., Ribeiro, R. A., & Varela, L. R. (2014). Dynamic MCDM with future knowledge for supplier selection. Journal of Decision Systems, 23(3), 232–248.

Joshi, A., Kale, S., Chandel, S., & Pal, D. (2015). Likert scale: Explored and explained. British Journal of Applied Science & Technology, 7(4), 396–403.

Khanna, P., & Basak, P. C. (2014). An integrated information management system based DSS for problem solving and decision making in open & distance learning institutions of India. Decision Science Letters, 3(2), 209–224.

Ko, H., Lee, S., Park, Y., & Choi, A. (2022). A survey of recommendation systems: Recommendation models, techniques, and application fields. Electronics (Switzerland), 11(1), 141.

König, J., Jäger-Biela, D. J., & Glutsch, N. (2020). Adapting to online teaching during COVID-19 school closure: Teacher education and teacher competence effects among early career teachers in Germany. European Journal of Teacher Education, 43(4), 608–622.

Kumar, S., & Chong, I. (2018). Correlation analysis to identify the effective data in machine learning: Prediction of depressive disorder and emotion states. International Journal of Environmental Research and Public Health, 15(12), 2907.

Lalitha, T. B., & Sreeja, P. S. (2020). Personalised self-directed learning recommendation system. Procedia Computer Science, 171, 583–592. https://doi.org/10.1016/j.procs.2020.04.063

Mardani, A., et al. (2015). Multiple criteria decision-making techniques and their applications–a review of the literature from 2000 to 2014. Economic Research-Ekonomska Istraživanja, 28(1), 516–571.

Marlinda, L., Baidawi, T., & Durachman, Y. (2017, August). A multi-study program recommender system using ELECTRE multicriteria method. In 2017 5th International Conference on Cyber and IT Service Management (CITSM) (pp. 1–5). IEEE.

Martin, F., & Oyarzun, B. (2017). Distance learning. Foundations of Learning and Instructional Design Technology, Edtech Books. https://www.researchgate.net/publication/335798695_Distance_Learning. Accessed 18 Jan 2023.

Miljković, B., Žižović, M. R., Petojević, A., & Damljanović, N. (2017). New weighted sum model. Filomat, 31(10), 2991–2998.

Mishra, L., Gupta, T., & Shree, A. (2020). Online teaching-learning in higher education during lockdown period of COVID-19 pandemic. International Journal of Educational Research Open, 1, 100012.

Moore, J. L., Dickson-Deane, C., & Galyen, K. (2011). E-learning, online learning, and distance learning environments: Are they the same? Internet and Higher Education, 14(2), 129–135.

Mulliner, E., Malys, N., & Maliene, V. (2015). Comparative analysis of MCDM methods for the assessment of sustainable housing affordability. Omega, 59, 146.

Zaied, A. N. (2012). Multi-criteria evaluation approach for e-learning technologies selection criteria using AHP. International Journal on E-Learning, 11(4), 465–485. https://www.researchgate.net/publication/255567082_Multi-Criteria_Evaluation_Approach_for_e-Learning_Technologies_Selection_Criteria_Using_AHP. Accessed 18 Jan 2023.

Neroni, J., et al. (2019). Learning strategies and academic performance in distance education. Learning and Individual Differences, 73, 1–7.

Niemann, R. (2017). A Scalable Distance Learning Support Framework for South Africa: Applying the Interaction Equivalency Theorem. International Journal of Economics & Management, 11. https://www.researchgate.net/publication/317222729. Accessed 18 Jan 2023.

Pourjavad, E., & Shirouyehzad, H. (2011). A MCDM approach for prioritizing production lines: a case study. International Journal of Business and Management, 6(10), 221–229. https://doi.org/10.5539/ijbm.v6n10p221

Rawat, T., & Khemchandani, V. (2017). Feature engineering (FE) tools and techniques for better classification performance. International Journal of Innovations in Engineering and Technology, 8(2), 169–179. https://doi.org/10.21172/ijiet.82.024

Roberts, J., & Crittenden, L. (2009). Accessible distance education 101. Research in Higher Education Journal, 4, 1.

Saaty, R. W. (1987). The analytic hierarchy process—what it is and how it is used. Mathematical Modelling, 9(3–5), 161–176.

Schwarz, N. (2007). Evaluating surveys and questionnaires. In Critical thinking in psychology (pp. 54–74). https://doi.org/10.1017/CBO9780511804632.005

Shahzad, A., et al. (2021). Effects of COVID-19 in e-learning on higher education institution students: The group comparison between male and female. Quality and Quantity, 55(3), 805–826.

Shih, T. K., et al. (2003). A survey of distance education challenges and technologies. International Journal of Distance Education Technologies (IJDET), 1(1), 1–20.

Stepanyan, K., Littlejohn, A., & Margaryan, A. (2013). Sustainable e-learning: Toward a coherent body of knowledge. Journal of Educational Technology & Society, 16(2), 91–102.

Traxler, J. (2018). Distance learning—predictions and possibilities. Education Sciences, 8(1), 35. http://www.mdpi.com/2227-7102/8/1/35.

Triantaphyllou, E. (2000). Multi-criteria decision making methods. In Multi-criteria decision making methods: A comparative study (pp. 5–21). Springer.

Utomo, M. N., Yasir, M. S., & Saddhono, K. (2020). Tools and strategy for distance learning to respond COVID-19 pandemic in Indonesia. Ingenierie Des Systemes D’information, 25(3), 383–390.

Willis, B. D. (1994). Distance education: Strategies and tools. Educational Technology.

Wu, W. B., & Xiao, H. (2012). Covariance matrix estimation in time series. In Handbook of Statistics (Vol. 30, pp. 187–209). Elsevier.

Xu, L., Yang, J.-B., & Manchester School of Management. (2001). Introduction to multi-criteria decision making and the evidential reasoning approach. Manchester School of Management.

Yazdani, M., Zolfani, S. H., & Zavadskas, E. K. (2016). New integration of MCDM methods and QFD in the selection of green suppliers. Journal of Business Economics and Management, 17(6), 1097–1113.

Zare, M., et al. (2016). Multi-criteria decision making approach in e-learning: A systematic review and classification. Applied Soft Computing, 45, 108–128.

Zhang, Q., Lu, J., & Zhang, G. (2021). Recommender systems in E-learning. Journal of Smart Environments and Green Computing, 1(2), 76–89. https://doi.org/10.20517/jsegc.2020.06

Zorrilla, M., García, D., & Álvarez, E. (2010). A decision support system to improve e-learning environments. In Proceedings of the 2010 EDBT/ICDT Workshops (pp. 1–8).

Acknowledgements

We thank all the students and faculty members who responded to the survey and provided feedback. We also thank the UAEU IT technical support team for providing incident data. Finally, we thank the domain knowledge experts for their input in assessing the alternatives and criteria and for their overall feedback on this study.

Ethics declarations

Conflict of interests

Not Applicable.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Alshamsi, A.M., El-Kassabi, H., Serhani, M.A. et al. A multi-criteria decision-making (MCDM) approach for data-driven distance learning recommendations. Educ Inf Technol 28, 10421–10458 (2023). https://doi.org/10.1007/s10639-023-11589-9

DOI: https://doi.org/10.1007/s10639-023-11589-9