Abstract

Background

Prolonged cold ischemic time (CIT) and increased donor age are well-known factors negatively influencing outcomes after liver transplantation (LT).

Aims

The aim of this study was to evaluate whether the magnitude of their negative effects is related to recipient model for end-stage liver disease (MELD) score.

Methods

This retrospective study was based on a cohort of 1402 LTs, divided into those performed in low-MELD (<10), moderate-MELD (10–20), and high-MELD (>20) recipients.

Results

While neither donor age (p = 0.775) nor CIT (p = 0.561) was a significant risk factor for worse 5-year graft survival in low-MELD recipients, both were found to have independent effects (p = 0.003 and p = 0.012, respectively) in moderate-MELD recipients, and only CIT (p = 0.004) had such an effect in high-MELD recipients. However, in moderate-MELD recipients, increased donor age acted as a trigger for the negative effect of CIT, which was limited to grafts recovered from donors aged ≥46 years (p = 0.019). Notably, utilization of grafts from donors aged ≥46 years with CIT ≥9 h in moderate-MELD recipients (p = 0.003), and of grafts with CIT ≥9 h irrespective of donor age in high-MELD recipients (p = 0.031), was associated with particularly compromised outcomes.

Conclusions

In conclusion, the negative effects of prolonged CIT seem to be limited to patients with moderate MELD receiving organs procured from older donors and to high-MELD recipients, irrespective of donor age. Varying effects of donor age and CIT according to recipient MELD score should be considered during the allocation process in order to avoid high-risk matches.



Introduction

Liver transplantation provides the only chance for long-term survival in patients with chronic end-stage liver disease or acute liver failure and additionally represents the optimal treatment modality for selected patients with malignancies [1]. Due to the wide variety of indications for the procedure and the widespread distribution of liver diseases, the number of potential liver transplantation candidates is steadily growing [2]. Since this growing demand for liver transplants is severely limited by the scarcity of available organs, various efforts are being undertaken to widen the donor pool [3, 4]. These efforts comprise strategies such as utilization of partial grafts procured from living donors, splitting a deceased donor graft for two recipients, and, most importantly, transplantation of grafts recovered from extended-criteria donors [1, 3, 5–7].

Although a uniform, worldwide-accepted definition of an extended-criteria deceased liver donor does not yet exist, a number of donor factors are associated with the results of liver transplantation [8]. In order to quantify overall graft quality, Feng et al. [9] developed the concept of a donor risk index (DRI) based on age, race, cause of death, donation after cardiac death, type of graft, height, duration of cold ischemia, and type of sharing. Following a slight modification, the prognostic significance of the DRI was validated by Braat et al. [10] using Eurotransplant data. Some factors not included in either the original DRI or its subsequent Eurotransplant modification have also been reported to influence the outcome of transplantation, such as graft steatosis, donor length of hospital stay prior to procurement, and donor body mass index, whereas other factors, such as donor hypernatremia, are no longer considered relevant [11–14]. Most importantly, donor age and duration of cold ischemia are essential in the assessment of graft quality [15, 16]. Although utilization of extended-criteria grafts largely widens the donor pool, it may worsen overall liver transplantation results, particularly when grafts combine more than one negative factor [11].

Notably, recipient characteristics are at least as important as donor factors when predicting the results of liver transplantation. Significant associations between posttransplant outcomes and variables such as the model for end-stage liver disease (MELD) score, etiology of liver disease, recipient age, United Network for Organ Sharing (UNOS) status, and a history of previous liver transplantation have been reported [17, 18]. Moreover, owing to its strong ability to predict pretransplant mortality [19], the MELD score is used to allocate organs according to a “sickest first” policy. Since MELD-based allocation does not incorporate donor characteristics, much attention has been paid to the development of an optimal transplant risk score that would be useful in donor–recipient matching.

The complexity of the proposed transplant risk indices ranges from the simple and easily applicable D-MELD score, which is based on the recipient’s MELD score and the donor’s age, through the balance of risk (BAR) score, based on four recipient and two donor variables, to the most complex survival outcomes following liver transplantation (SOFT) score, which utilizes a total of 18 donor and recipient parameters [20–22]. In addition, the use of other risk scores for donor–recipient matching in order to avoid potentially futile transplantations has also been reported [23, 24]. Importantly, the majority of these proposals are based on the assumption that the magnitude of the negative effects of risk factors associated with graft quality is independent of the severity of the recipient’s status. Accordingly, the primary purpose of the present study was to evaluate whether the impact of two major determinants of graft quality, namely donor age and cold ischemia, on long-term outcomes after deceased donor liver transplantation depends upon the recipient’s MELD score.

Methods

This retrospective study was based on data from 1402 liver transplantations performed between January 2000 and June 2014 in the Department of General, Transplant, and Liver Surgery at the Medical University of Warsaw, Poland. The three main variables of interest were recipient laboratory MELD score (without any exception points), duration of cold ischemia, and donor age. Graft survival at 5 years, defined as the time interval between liver transplantation and retransplantation or death, irrespective of the cause, was set as the primary outcome measure. Observations were censored at the date of last available follow-up or at 5 years. Details of the operative techniques and immunosuppression protocol have been described elsewhere [25]. The study protocol was reviewed by the appropriate ethics committee.
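The primary endpoint combines two failure types (retransplantation or death) with a 5-year administrative horizon. A minimal Python sketch of how each transplant could be reduced to a (time, event) pair is shown below; the `GraftRecord` structure, its field names, and the day-to-year conversion are assumptions made for illustration, not the authors' data model.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Tuple

HORIZON_YEARS = 5.0      # primary outcome: graft survival at 5 years
DAYS_PER_YEAR = 365.25   # assumption: days converted to years with the mean Julian year


@dataclass
class GraftRecord:
    """Hypothetical per-transplant record; field names are illustrative only."""
    transplant_date: date
    last_follow_up: date
    failure_date: Optional[date] = None  # first of retransplantation or death, if any


def graft_survival_endpoint(rec: GraftRecord) -> Tuple[float, int]:
    """Return (time in years, event flag), censored at the 5-year horizon."""
    end = rec.failure_date if rec.failure_date is not None else rec.last_follow_up
    years = (end - rec.transplant_date).days / DAYS_PER_YEAR
    event = 1 if rec.failure_date is not None else 0
    if years > HORIZON_YEARS:
        # Failures or follow-up beyond 5 years are censored at exactly 5 years.
        return HORIZON_YEARS, 0
    return years, event
```

Under this definition, a graft lost 7 years after transplantation contributes (5.0, 0), i.e., it counts as surviving the full 5-year window.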

First, recipient MELD score, duration of cold ischemia, and donor age were evaluated as risk factors for worse 5-year outcomes in the entire study group. Second, the latter two variables were evaluated as risk factors for inferior outcomes in separate subgroups of patients with low (<10), moderate (10–20), and high (>20) MELD scores. Where an independent significant impact on outcomes was found in a particular MELD-derived subgroup, we searched for optimal cutoffs dividing donor age and duration of cold ischemia into low- and high-risk values in that subgroup. Inclusion of variables in multivariable models was based on clinical significance rather than on the results of univariable analyses.
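Assigning recipients to the three MELD strata is a simple threshold rule. The sketch below assumes that scores of exactly 10 and 20 belong to the moderate group, as the range 10–20 implies; the handling of non-integer scores is not specified in the source, and the function names are hypothetical.

```python
from collections import Counter
from typing import Iterable


def meld_group(meld: float) -> str:
    """Map a laboratory MELD score to the study subgroups: <10, 10-20, >20."""
    if meld < 10:
        return "low"
    if meld <= 20:  # assumption: both 10 and 20 belong to the moderate group
        return "moderate"
    return "high"


def group_sizes(scores: Iterable[float]) -> Counter:
    """Count recipients in each MELD subgroup."""
    return Counter(meld_group(s) for s in scores)
```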

Quantitative and qualitative data are presented as medians with interquartile ranges and as numbers with percentages, respectively. The chi-square test and the Kruskal–Wallis test were used to compare categorical and continuous variables, respectively, between subgroups. The Kaplan–Meier estimator was used to calculate survival rates, and the reverse Kaplan–Meier method was applied to estimate the median follow-up period. The log-rank test was used to compare survival curves. Multivariable Cox proportional hazards models were used to evaluate the association between factors of interest and the primary outcome measure. Receiver operating characteristic (ROC) curves were constructed to find the optimal cutoffs for continuous variables in the prediction of retransplantation or death. Hazard ratios (HRs) and areas under the ROC curves (AUROCs) are presented with 95 % confidence intervals (95 % CIs). All p values are two-sided, and the level of significance was set at 0.05. STATISTICA version 10 (StatSoft Inc., Tulsa, USA) software was used for all statistical analyses.
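The analyses themselves were run in STATISTICA. To show what the Kaplan–Meier and reverse Kaplan–Meier calculations do, here is a minimal pure-Python sketch (not the STATISTICA implementation) operating on (time, event) pairs such as those defined by the primary outcome measure. Reversing the event indicator treats censoring as the "event", so the median of that curve estimates the median follow-up.

```python
from typing import List, Optional, Sequence, Tuple

Curve = List[Tuple[float, float]]


def kaplan_meier(times: Sequence[float], events: Sequence[int]) -> Curve:
    """Product-limit estimator: (t, S(t)) at each distinct time with >= 1 event."""
    pairs = sorted(zip(times, events))
    n_at_risk = len(pairs)
    surv = 1.0
    curve: Curve = []
    i = 0
    while i < len(pairs):
        t = pairs[i][0]
        deaths = removed = 0
        # Pool all subjects sharing time t; censored subjects still count as at risk at t.
        while i < len(pairs) and pairs[i][0] == t:
            deaths += pairs[i][1]
            removed += 1
            i += 1
        if deaths:
            surv *= 1.0 - deaths / n_at_risk
            curve.append((t, surv))
        n_at_risk -= removed
    return curve


def survival_at(curve: Curve, t: float) -> float:
    """Step-function lookup of S(t), e.g. S(5) for 5-year graft survival."""
    s = 1.0
    for time, value in curve:
        if time > t:
            break
        s = value
    return s


def median_time(curve: Curve) -> Optional[float]:
    """Smallest time at which S(t) drops to 0.5 or below (None if it never does)."""
    for time, value in curve:
        if value <= 0.5:
            return time
    return None


def reverse_km_median_follow_up(times: Sequence[float], events: Sequence[int]) -> Optional[float]:
    """Reverse Kaplan-Meier: swap event and censoring indicators, then take the median."""
    return median_time(kaplan_meier(times, [1 - e for e in events]))
```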

Results

The baseline characteristics of 1402 liver transplantations included in the study are presented in Table 1. The median follow-up period was 4.3 years. A total of 335 liver transplant recipients underwent retransplantation or died over the 5-year posttransplant period, with an overall graft survival rate of 86.8 % at 90 days, 82.5 % at 1 year, 75.6 % at 3 years, and 71.1 % at 5 years.

In the entire study group, both duration of cold ischemia (p < 0.001) and donor age (p = 0.003) were significant risk factors for worse 5-year graft survival in univariable analyses (Table 2). Among the remaining factors, significant effects were observed for a history of previous transplantation (p < 0.001) and MELD score (p < 0.001). Multivariable analyses confirmed the independent impact of duration of cold ischemia (p < 0.001), donor age (p = 0.017), and MELD score (p < 0.001). Moreover, an independent significant effect of a history of prior liver transplantation was found (p = 0.002), while the influence of hepatitis C virus (HCV) infection approached significance (p = 0.053).

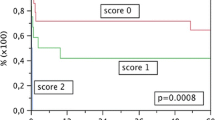

Graft survival at 5 years in patients with MELD scores of <10, 10–20, and >20 was 80.7, 73.6, and 62.4 %, respectively (p < 0.001, Fig. 1). Although significant differences in patient demographics, etiology of liver disease, and cumulative number of previous transplantations performed in the department were observed, donor age and duration of cold ischemia were similar among the three groups of recipients (Table 3). According to the results of multivariable analyses, neither duration of cold ischemia (p = 0.561) nor donor age (p = 0.775) had a significant impact on graft survival in patients with a low MELD score (Table 4). In contrast, both donor age (p = 0.003) and duration of cold ischemia (p = 0.012) were independent risk factors for worse graft outcomes in patients with a moderate MELD score. In the subgroup of high-MELD recipients, duration of cold ischemia (p = 0.004), but not donor age (p = 0.305), was an independent risk factor for worse graft survival. Moreover, the hazards associated with prolonged cold ischemia were higher in high-MELD recipients than in moderate-MELD recipients.

In moderate-MELD recipients, AUROC for prediction of inferior graft survival based on donor age was 0.574 (95 % CI 0.520–0.628), with an optimal cutoff of 46 years (Fig. 2a). In order to evaluate whether the negative effects of cold ischemia were similar in transplantations of grafts recovered from younger and older donors, two additional multivariable analyses were performed. Following adjustment for the effects of potential confounders comprising recipient age, hepatitis C virus infection status, and a history of previous transplantation, duration of cold ischemia was found to be independently associated with 5-year graft survival after transplantations from donors aged 46 years or older (HR 1.17; 95 % CI 1.03–1.34 per 1-h increase; p = 0.019), but not after transplantations from younger donors (HR 1.11; 95 % CI 0.96–1.27 per 1-h increase; p = 0.151). Consequently, the optimal cutoff for duration of cold ischemia in moderate-MELD recipients of grafts from donors aged 46 years or older was 9 h (AUROC 0.581; 95 % CI 0.500–0.661; Fig. 2b). The graft survival rate at 5 years was 80.2 % for recipients of grafts from donors younger than 46 years irrespective of the duration of cold ischemia, 75.1 % for recipients of grafts from donors aged 46 years or older and duration of cold ischemia under 9 h, and 54.5 % for recipients of grafts from donors aged 46 years or older and duration of cold ischemia of 9 h or more (overall p = 0.003; Fig. 3). Although the difference between the two former subgroups was not significant (p = 0.531), the differences between the latter subgroup and either of the former subgroups were highly significant (p < 0.001 and p = 0.008, respectively).
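The optimal cutoffs (46 years, 9 h) came from ROC analyses, but the source does not state which optimality criterion was used. The sketch below assumes the Youden index (sensitivity + specificity - 1), treats failure within 5 years as a binary outcome (which ignores censoring), and computes the AUROC through its Mann–Whitney interpretation. The last helper rescales the per-hour Cox hazard ratio, assuming the log-linear effect implied by entering cold ischemia as a continuous covariate. All function names are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def auroc(scores: Sequence[float], outcomes: Sequence[int]) -> float:
    """AUROC as the Mann-Whitney probability that a failure outranks a non-failure."""
    pos = [s for s, y in zip(scores, outcomes) if y == 1]
    neg = [s for s, y in zip(scores, outcomes) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a concordant pair
    return wins / (len(pos) * len(neg))


def youden_cutoff(scores: Sequence[float], outcomes: Sequence[int]) -> Tuple[Optional[float], float]:
    """Cutoff c maximizing sensitivity + specificity - 1, with score >= c classed as high risk."""
    n_pos = sum(outcomes)
    n_neg = len(outcomes) - n_pos
    best_c: Optional[float] = None
    best_j = -1.0
    for c in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, outcomes) if s >= c and y == 1)
        tn = sum(1 for s, y in zip(scores, outcomes) if s < c and y == 0)
        j = tp / n_pos + tn / n_neg - 1.0
        if j > best_j:  # strict comparison keeps the lowest cutoff among ties
            best_c, best_j = c, j
    return best_c, best_j


def hr_for_increment(hr_per_unit: float, units: float) -> float:
    """Rescale a per-unit Cox hazard ratio (e.g. per 1 h of cold ischemia) to a larger step."""
    return hr_per_unit ** units
```

For example, with the reported HR of 1.17 per hour (95 % CI 1.03–1.34), a 3-h longer cold ischemia corresponds to an HR of about 1.60, and the confidence limits rescale the same way (about 1.09–2.41).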

Fig. 2 Receiver operating characteristic curves for prediction of 5-year graft survival based on (a) donor age in patients with a moderate model for end-stage liver disease (MELD) score and (b) duration of cold ischemia in patients with a moderate MELD score receiving grafts from donors aged ≥46 years. Areas under the curves are presented with 95 % confidence intervals (95 % CI)

Fig. 3 Comparison of 5-year graft survival between recipients of grafts from donors aged less than 46 years irrespective of the duration of cold ischemia (solid line), recipients of grafts from donors aged 46 years or more with a cold ischemic time under 9 h (dashed line), and recipients of grafts from donors aged 46 years or more with a cold ischemic time of 9 h or more (dotted line) in patients with a moderate model for end-stage liver disease (MELD) score. Numbers of patients at risk are presented at the bottom

In recipients with high MELD scores, prediction of worse 5-year graft survival based on duration of cold ischemia yielded an AUROC of 0.629 (95 % CI 0.556–0.702), with an optimal cutoff of 8.8 h (Fig. 4). For simplicity, the cutoffs for duration of cold ischemia in moderate- and high-MELD recipients were merged into a single value of 9 h. Accordingly, graft survival at 5 years was 71.4 and 57.4 % in high-MELD recipients of grafts with a cold ischemic time of <9 h and ≥9 h, respectively (p = 0.031; Fig. 5).
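Two-group p values such as the 0.031 above come from the log-rank test. The following plain-Python sketch illustrates the standard two-sample test (chi-square with 1 degree of freedom); it is not the software used in the study, the function name is hypothetical, and it rescans all subjects at every event time for clarity rather than speed.

```python
import math
from typing import Sequence, Tuple


def logrank_two_groups(times: Sequence[float], events: Sequence[int],
                       groups: Sequence[int]) -> Tuple[float, float]:
    """Two-sample log-rank test (groups coded 0/1); returns (chi-square, p) with 1 df."""
    data = list(zip(times, events, groups))
    event_times = sorted({t for t, e, _ in data if e == 1})
    o_minus_e = 0.0  # observed minus expected failures in group 1
    var = 0.0        # hypergeometric variance summed over event times
    for t in event_times:
        n = sum(1 for tt, _, _ in data if tt >= t)
        n1 = sum(1 for tt, _, g in data if tt >= t and g == 1)
        d = sum(1 for tt, e, _ in data if tt == t and e == 1)
        d1 = sum(1 for tt, e, g in data if tt == t and e == 1 and g == 1)
        o_minus_e += d1 - d * n1 / n
        if n > 1:
            var += d * (n1 / n) * (1 - n1 / n) * (n - d) / (n - 1)
    if var == 0:
        raise ValueError("no informative event times; test is undefined")
    chi2 = o_minus_e ** 2 / var
    # Upper tail of chi-square(1): P(Z^2 > chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p
```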

Finally, 5-year graft survival was similar between low-MELD patients receiving grafts from donors aged 46 years or older with a cold ischemic time of 9 h or more (81.2 %) and the remaining low-MELD recipients (82.5 %; p = 0.496; Fig. 6a). Likewise, moderate-MELD recipients of grafts recovered from donors younger than 46 years with a cold ischemic time over 9 h had a 5-year graft survival rate (74.9 %) comparable to that of those receiving grafts with a cold ischemic time of <9 h (81.8 %; p = 0.169; Fig. 6b).

Fig. 6 Comparison of 5-year graft survival (a) between patients with a low model for end-stage liver disease (MELD) score receiving grafts from donors aged 46 years or more with a cold ischemic time of 9 h or more (dashed line) and the remaining low-MELD recipients (solid line), and (b) between moderate-MELD recipients of grafts recovered from donors younger than 46 years with a cold ischemic time over 9 h (dashed line) and those receiving grafts with a cold ischemic time under 9 h (solid line). Numbers of patients at risk are presented at the bottom

Discussion

The results of the present study indicate that the negative effects of increased donor age and prolonged cold ischemia depend largely upon the severity of the recipient’s status, as reflected by the MELD score. Accordingly, a step-by-step strategy of donor–recipient matching seems to be a reasonable alternative to the calculation of a single risk score, which cannot account for such complex associations between variables. Given the widespread utilization of organs recovered from extended-criteria donors [3, 26, 27], incorporating at least the major variables defining overall graft quality into the currently used MELD-based “sickest first” allocation policy has strong potential to improve the general results of liver transplant programs by avoiding the highest-risk matches.

One of the crucial issues in defining a proposal for an allocation strategy is the choice of recipient and donor factors to be taken into consideration. As the current policy of evaluating urgency status is based on the recipient’s MELD score, which is a strong predictor of both pretransplant and posttransplant negative outcomes [17, 19], considering it in the allocation process seems natural. Consistent with previously published reports, the MELD score was a major determinant of long-term graft survival in the present study. However, the choice of donor variables is much more controversial. Although both donor age and duration of cold ischemia have repeatedly been reported to have a profound impact on the risk of graft failure and posttransplant mortality, the list of significant donor risk factors contains many other variables [8–12]. Among these, the most relevant include the percentage of graft steatosis and donation after cardiac death [23, 28, 29]. Given that the study group included no transplantations from donors after cardiac death and no split liver transplantations, the results of the present study are necessarily limited to transplantations of full-sized grafts recovered from brain-dead donors. Including the percentage of graft steatosis in the allocation strategy would be impractical, as this information is not available during the initial step of the process and requires graft biopsies, particularly in doubtful cases. Therefore, donor age and duration of cold ischemia were selected, the former being available immediately and the latter being largely predictable. Importantly, both factors have frequently been applied for this purpose and are included in various models, such as the BAR, SOFT, DRI, and Eurotransplant-DRI, among other risk scores [9, 10, 20–22].
Based on the differences in the effects of donor age and cold ischemia on long-term posttransplant outcomes in patients with low, moderate, and high MELD scores, and using the established cutoffs, we propose the following allocation strategy: grafts with an anticipated cold ischemic time of less than 9 h are allocated based only on recipient MELD score, whereas those with a longer anticipated cold ischemic time are allocated to patients with a MELD score of up to 20 if the donor is younger than 46 years, or to patients with a MELD score <10 if the donor is 46 years or older (Fig. 7).
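The proposed rule can be expressed as a screening step followed by ordinary MELD-based ranking. In the sketch below, the cutoffs are the single-center values reported here (which the authors suggest adjusting locally), while `is_acceptable_match`, `rank_candidates`, and the waiting-list format are hypothetical; tie-breaking and all other allocation criteria are deliberately left out.

```python
from typing import List, Tuple

# Cutoffs reported for this single-center cohort; centers may prefer their own values.
CIT_CUTOFF_H = 9.0
DONOR_AGE_CUTOFF_Y = 46


def is_acceptable_match(recipient_meld: float, donor_age_years: float,
                        anticipated_cit_h: float) -> bool:
    """Screen out the high-risk donor-recipient combinations identified in the study."""
    if anticipated_cit_h < CIT_CUTOFF_H:
        return True                  # short cold ischemia: any MELD stratum
    if donor_age_years < DONOR_AGE_CUTOFF_Y:
        return recipient_meld <= 20  # long cold ischemia, younger donor: MELD up to 20
    return recipient_meld < 10       # long cold ischemia, older donor: low MELD only


def rank_candidates(waiting_list: List[Tuple[str, float]], donor_age_years: float,
                    anticipated_cit_h: float) -> List[str]:
    """Hypothetical 'sickest first' ranking restricted to acceptable matches."""
    eligible = [(pid, meld) for pid, meld in waiting_list
                if is_acceptable_match(meld, donor_age_years, anticipated_cit_h)]
    eligible.sort(key=lambda item: item[1], reverse=True)
    return [pid for pid, _ in eligible]
```

For a waiting list of `[("A", 25), ("B", 15), ("C", 8), ("D", 19)]`, a graft from a 60-year-old donor with 10 h of anticipated cold ischemia would be offered only to C, whereas the same graft with 6 h would follow plain MELD order.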

Although multivariable models revealed an independent association between donor age and long-term outcomes, subsequent analyses suggested that donor age acts mainly as an adjunct to the negative effects of prolonged cold ischemia. More specifically, increased donor age triggered the negative impact of prolonged cold ischemia only in moderate-MELD recipients. Furthermore, in this subgroup, no significant impact of increased donor age or cold ischemia was observed after exclusion of the high-risk grafts recovered from older donors with prolonged cold ischemic time. Therefore, the results of the present study are, paradoxically, in line with those reporting safe utilization of grafts recovered from older donors [30], supporting their use in high- and low-MELD patients, as well as in moderate-MELD recipients if the duration of cold ischemia remains within a safe limit. Moreover, increased susceptibility to ischemic injury has previously been reported for grafts recovered from older donors [31]; based on the results of the present study, however, the clinical relevance of this phenomenon seems to be limited to patients with a moderate MELD score. In high-MELD patients, who are at the highest risk of negative outcomes, the negative effects of prolonged cold ischemia were independent of donor age. Therefore, combining the increased risk associated with a high recipient MELD score with that associated with even mild ischemic injury of the graft should ideally be avoided, as this combination was associated with an approximately 15 percentage point reduction in 5-year graft survival (71.4 vs 57.4 %).

The proposed strategy for allocation of grafts recovered from donors after brain death is based on identifying high-risk donor–recipient matches, and thus its implementation might lead to a general improvement in the results of liver transplant programs. A second, equally important finding was that utilization of moderate- and high-risk grafts in moderate- and low-MELD recipients, respectively, was not associated with inferior outcomes in these patients. Nevertheless, the shape of the ROC curves and the similarity between the established cutoffs and the median values suggest that the definitions of prolonged cold ischemia and increased donor age should ideally not be uniform, but rather selected by individual centers or programs. Moreover, although donor age is readily available at the initial step of allocation, it may not always be possible to predict the duration of cold ischemia with sufficient precision. Therefore, the current simple proposal for donor–recipient matching in liver transplantation should not be considered a strict rule, but rather a scheme to be adjusted for the regional availability of donors and their characteristics, as well as for the situation on the waiting list.

Given the major impact of prolonged cold ischemia and the approximate nature of the established cutoff, extensive efforts should be made to minimize the magnitude of graft ischemic injury. According to the results of several recent studies, this may be achieved with novel preservation techniques, such as hypothermic, subnormothermic, or normothermic continuous perfusion of the graft [32–36]. Although these techniques vary widely and their use has been reported mostly in the setting of donation after cardiac death, future developments in graft preservation might contribute to a general improvement in outcomes.

One of the most important potential limitations of the proposed allocation strategy is the availability of adequate grafts for high-MELD recipients, the subgroup at the highest risk of pretransplant mortality [19]. However, defining prolonged cold ischemia by its median value might overcome this limitation, as 50 % of available grafts would then be eligible for these recipients. Considering that a further approximately 25 % of grafts (assuming two independent median splits) would additionally be available to moderate-MELD recipients, only the 25 % of grafts at highest risk would need to be allocated specifically to patients with a MELD score <10. Notably, patients with hepatocellular cancer, an indication for approximately 10–30 % of liver transplantations currently performed [37, 38], make up a major proportion of low-MELD recipients. Utilization of grafts recovered from older donors with prolonged cold ischemia in cancer patients, as well as in other patients in whom liver failure is not the main indication for the procedure (e.g., those with recurrent bleeding from esophageal varices or selected patients with primary sclerosing cholangitis), may therefore facilitate wider use of the proposed scheme of donor–recipient matching. On the other hand, a recent study by Nagai et al. points toward an increased risk of posttransplant cancer recurrence associated with worse graft quality, particularly in the setting of more severe ischemia–reperfusion injury [39]. However, no significant effects of transplantation of high-risk grafts in low-MELD recipients were observed in the present study, with a 5-year graft survival rate exceeding 80 %, despite the fact that nearly a quarter of these patients had hepatocellular cancer. Moreover, an analysis of nearly 30,000 liver transplants by Salgia et al. [40] revealed a similar impact of donor factors on posttransplant outcomes in patients with and without hepatocellular cancer. In addition, the proposed strategy would partly counterbalance the current unjustifiably favorable priority of cancer patients on waiting lists.
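The 50/25/25 % split follows from two median cutoffs if cold ischemic time and donor age are independent, as the argument assumes. The small sketch below makes that arithmetic explicit; the function name and tier labels are illustrative only, and other cutoff quantiles can be passed in place of the medians.

```python
from typing import Dict


def allocation_shares(p_short_cit: float = 0.5, p_young_donor: float = 0.5) -> Dict[str, float]:
    """Mutually exclusive graft shares per recipient tier, assuming CIT and donor age are independent."""
    return {
        "all MELD tiers": p_short_cit,                               # CIT below its cutoff
        "MELD <= 20 only": (1 - p_short_cit) * p_young_donor,        # long CIT, younger donor
        "MELD < 10 only": (1 - p_short_cit) * (1 - p_young_donor),   # long CIT, older donor
    }
```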

Nevertheless, limiting the pool of potential donors for high-MELD recipients may substantially increase waiting-list mortality in this population. In the present study, less than a quarter of patients underwent transplantation with a high MELD score; therefore, the ratio of the number of grafts available for these patients under the proposed scheme to the number of high-MELD recipients was approximately two. However, this ratio would fall below one if the proportion of high-MELD patients were over 50 %. Therefore, selection of optimal grafts for high-MELD recipients based on the proposed strategy without increasing the risk of waiting-list mortality might not be possible in centers with a high proportion of high-MELD recipients.
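The waiting-list argument above is a ratio of two shares: the fraction of grafts open to high-MELD recipients divided by the fraction of recipients with a high MELD score. A one-function sketch, with a hypothetical name and default values taken from the text (50 % of grafts under median-based cutoffs, about a quarter of recipients):

```python
def high_meld_supply_ratio(share_grafts_open_to_high: float = 0.5,
                           share_recipients_high: float = 0.25) -> float:
    """Grafts eligible for high-MELD recipients per high-MELD recipient (ratio of shares)."""
    if not 0 < share_recipients_high <= 1:
        raise ValueError("share_recipients_high must be in (0, 1]")
    return share_grafts_open_to_high / share_recipients_high
```

With the defaults the ratio is 2.0; once high-MELD patients exceed half of all recipients, it drops below 1.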

Notably, the results of the present study are partly in line with those of Bonney et al. [41], who observed negative effects of using high-DRI grafts in intermediate-MELD recipients. In high-MELD recipients, the effects of high-DRI grafts were also present, though to a lesser extent. These previous findings are probably related to the greater impact of increased donor age in intermediate-MELD recipients, as the DRI is largely dependent upon this factor. In contrast, based on survival-benefit analyses, Schaubel et al. [42] observed that transplantation of high-DRI grafts was associated with an increased risk of mortality in recipients with the lowest MELD scores compared with remaining on the waiting list. However, despite a lack of statistical significance, absolute HRs were also increased for transplantation of medium- and low-DRI grafts in this subgroup, suggesting that this observation only reflected the increased risk of early mortality associated with the operative procedure.

Several limitations of the present study should be acknowledged. First, it is subject to the disadvantages of its retrospective, single-center design. Second, and most importantly, the proposed strategy is based on data from a single center, is not easily transferable to other centers, and is presented as a general alternative to existing allocation strategies rather than a strict rule. Third, the results do not apply to the allocation of split grafts or grafts recovered from donors after cardiac death, although one may expect the negative effects of the two studied donor variables to be even more pronounced in these types of transplantation.

In conclusion, the effects of prolonged cold ischemia and donor age vary in patients according to the severity of liver dysfunction. The combination of these variables with recipient MELD score in a step-by-step process of organ allocation has the strong potential to improve the results of liver transplant programs. Moreover, it seems to be a reasonable alternative to the use of complex risk scores or indices in the process of donor–recipient matching, as the latter lack the ability to adapt to more complex associations between variables.

References

Dutkowski P, Linecker M, DeOliveira ML, Müllhaupt B, Clavien PA. Challenges to liver transplantation and strategies to improve outcomes. Gastroenterology. 2015;148:307–323.

Kim WR, Lake JR, Smith JM, et al. OPTN/SRTR 2013 annual data report: liver. Am J Transplant. 2015;15:1–28.

Johnson RJ, Bradbury LL, Martin K, Neuberger J, UK Transplant Registry. Organ donation and transplantation in the UK-the last decade: a report from the UK national transplant registry. Transplantation. 2014;97:S1–S27.

Klein AS, Messersmith EE, Ratner LE, Kochik R, Baliga PK, Ojo AO. Organ donation and utilization in the United States, 1999–2008. Am J Transplant. 2010;10:973–986.

Chu KK, Chan SC, Sharr WW, Chok KS, Dai WC, Lo CM. Low-volume deceased donor liver transplantation alongside a strong living donor liver transplantation service. World J Surg. 2014;38:1522–1528.

Wan P, Li Q, Zhang J, Xia Q. Right lobe split liver transplantation versus whole liver transplantation in adult recipients: a systematic review and meta-analysis. Liver Transpl. 2015. doi:10.1002/lt.24135.

Müllhaupt B, Dimitroulis D, Gerlach JT, Clavien PA. Hot topics in liver transplantation: organ allocation-extended criteria donor–living donor liver transplantation. J Hepatol. 2008;48:S58–S67.

Silberhumer GR, Rahmel A, Karam V, et al. The difficulty in defining extended donor criteria for liver grafts: the Eurotransplant experience. Transpl Int. 2013;26:990–998.

Feng S, Goodrich NP, Bragg-Gresham JL, et al. Characteristics associated with liver graft failure: the concept of a donor risk index. Am J Transplant. 2006;6:783–790.

Braat AE, Blok JJ, Putter H, et al. The Eurotransplant donor risk index in liver transplantation: ET-DRI. Am J Transplant. 2012;12:2789–2796.

Cameron AM, Ghobrial RM, Yersiz H, et al. Optimal utilization of donor grafts with extended criteria: a single-center experience in over 1000 liver transplants. Ann Surg. 2006;243:748–753.

Bloom MB, Raza S, Bhakta A, et al. Impact of deceased organ donor demographics and critical care end points on liver transplantation and graft survival rates. J Am Coll Surg. 2015;220:38–47.

Subramanian V, Seetharam AB, Vachharajani N, et al. Donor graft steatosis influences immunity to hepatitis C virus and allograft outcome after liver transplantation. Transplantation. 2011;92:1259–1268.

Mangus RS, Fridell JA, Vianna RM, et al. Severe hypernatremia in deceased liver donors does not impact early transplant outcome. Transplantation. 2010;90:438–443.

Stahl JE, Kreke JE, Malek FA, Schaefer AJ, Vacanti J. Consequences of cold-ischemia time on primary nonfunction and patient and graft survival in liver transplantation: a meta-analysis. PLoS One. 2008;3:e2468.

Cassuto JR, Patel SA, Tsoulfas G, Orloff MS, Abt PL. The cumulative effects of cold ischemic time and older donor age on liver graft survival. J Surg Res. 2008;148:38–44.

Agopian VG, Petrowsky H, Kaldas FM, et al. The evolution of liver transplantation during 3 decades: analysis of 5347 consecutive liver transplants at a single center. Ann Surg. 2013;258:409–421.

Burroughs AK, Sabin CA, Rolles K, et al. 3- and 12-month mortality after first liver transplant in adults in Europe: predictive models for outcome. Lancet. 2006;367:225–232.

Kim WR, Biggins SW, Kremers WK, et al. Hyponatremia and mortality among patients on the liver-transplant waiting list. N Engl J Med. 2008;359:1018–1026.

Halldorson JB, Bakthavatsalam R, Fix O, Reyes JD, Perkins JD. D-MELD, a simple predictor of post liver transplant mortality for optimization of donor/recipient matching. Am J Transplant. 2009;9:318–326.

Dutkowski P, Oberkofler CE, Slankamenac K, et al. Are there better guidelines for allocation in liver transplantation? A novel score targeting justice and utility in the model for end-stage liver disease era. Ann Surg. 2011;254:745–753.

Rana A, Hardy MA, Halazun KJ, et al. Survival outcomes following liver transplantation (SOFT) score: a novel method to predict patient survival following liver transplantation. Am J Transplant. 2008;8:2537–2546.

Angelico M, Nardi A, Romagnoli R, et al. A Bayesian methodology to improve prediction of early graft loss after liver transplantation derived from the liver match study. Dig Liver Dis. 2014;46:340–347.

Stey AM, Doucette J, Florman S, Emre S. Donor and recipient factors predicting time to graft failure following orthotopic liver transplantation: a transplant risk index. Transplant Proc. 2013;45:2077–2082.

Krawczyk M, Grąt M, Barski K, et al. 1000 liver transplantations at the Department of General, Transplant and Liver Surgery, Medical University of Warsaw—analysis of indications and results. Pol Przegl Chir. 2012;84:304–312.

Marti J, Fuster J, Navasa M, et al. Effects of graft quality on non-urgent liver retransplantation survival: Should we avoid high-risk donors? World J Surg. 2012;36:2914–2922.

Routh D, Sharma S, Naidu CS, Rao PP, Sharma AK, Ranjan P. Comparison of outcomes in ideal donor and extended criteria donor in deceased donor liver transplant: a prospective study. Int J Surg. 2014;12:774–777.

Callaghan CJ, Charman SC, Muiesan P, et al. Outcomes of transplantation of livers from donation after circulatory death donors in the UK: a cohort study. BMJ Open. 2013;3:e003287.

de Graaf EL, Kench J, Dilworth P, et al. Grade of deceased donor liver macrovesicular steatosis impacts graft and recipient outcomes more than the Donor Risk Index. J Gastroenterol Hepatol. 2012;27:540–546.

Chapman WC, Vachharajani N, Collins KM, et al. Donor age-based analysis of liver transplantation outcomes: short- and long-term outcomes are similar regardless of donor age. J Am Coll Surg. 2015. doi:10.1016/j.jamcollsurg.2015.01.061.

Tekin K, Imber CJ, Atli M, et al. A simple scoring system to evaluate the effects of cold ischemia on marginal liver donors. Transplantation. 2004;77:411–416.

Schlegel A, Dutkowski P. Role of hypothermic machine perfusion in liver transplantation. Transpl Int. 2015;28:677–689.

Oniscu GC, Randle LV, Muiesan P, et al. In situ normothermic regional perfusion for controlled donation after circulatory death–the United Kingdom experience. Am J Transplant. 2014;14:2846–2854.

Guarrera JV, Henry SD, Samstein B, et al. Hypothermic machine preservation facilitates successful transplantation of “orphan” extended criteria donor livers. Am J Transplant. 2015;15:161–169.

Fontes P, Lopez R, van der Plaats A, et al. Liver preservation with machine perfusion and a newly developed cell-free oxygen carrier solution under subnormothermic conditions. Am J Transplant. 2015;15:381–394.

Bruinsma BG, Yeh H, Ozer S, et al. Subnormothermic machine perfusion for ex vivo preservation and recovery of the human liver for transplantation. Am J Transplant. 2014;14:1400–1409.

Adam R, Delvart V, Karam V, et al. Compared efficacy of preservation solutions in liver transplantation: a long-term graft outcome study from the European Liver Transplant Registry. Am J Transplant. 2015;15:395–406.

Wong RJ, Chou C, Bonham CA, Concepcion W, Esquivel CO, Ahmed A. Improved survival outcomes in patients with nonalcoholic steatohepatitis and alcoholic liver disease following liver transplantation: an analysis of 2002–2012 United Network for Organ Sharing data. Clin Transplant. 2014;28:713–721.

Nagai S, Yoshida A, Facciuto M, et al. Ischemia time impacts recurrence of hepatocellular carcinoma after liver transplantation. Hepatology. 2015;61:895–904.

Salgia RJ, Goodrich NP, Marrero JA, Volk ML. Donor factors similarly impact survival outcome after liver transplantation in hepatocellular carcinoma and non-hepatocellular carcinoma patients. Dig Dis Sci. 2014;59:214–219.

Bonney GK, Aldersley MA, Asthana S, et al. Donor risk index and MELD interactions in predicting long-term graft survival: a single-centre experience. Transplantation. 2009;87:1858–1863.

Schaubel DE, Sima CS, Goodrich NP, Feng S, Merion RM. The survival benefit of deceased donor liver transplantation as a function of candidate disease severity and donor quality. Am J Transplant. 2008;8:419–425.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. For this type of study, formal consent is not required.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution-NonCommercial 4.0 International License (http://creativecommons.org/licenses/by-nc/4.0/), which permits any noncommercial use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Grąt, M., Wronka, K.M., Patkowski, W. et al. Effects of Donor Age and Cold Ischemia on Liver Transplantation Outcomes According to the Severity of Recipient Status. Dig Dis Sci 61, 626–635 (2016). https://doi.org/10.1007/s10620-015-3910-7

DOI: https://doi.org/10.1007/s10620-015-3910-7