Abstract

Time Series Classification (TSC) is essential in fields like medicine, environmental science, and finance, enabling tasks such as disease diagnosis, anomaly detection, and stock price analysis. While machine learning models like Recurrent Neural Networks and InceptionTime are successful in numerous applications, they can face scalability issues due to computational requirements. Recently, ROCKET has emerged as an efficient alternative, achieving state-of-the-art performance and simplifying training by utilizing a large number of randomly generated features from the time series data. However, many of these features are redundant or non-informative, increasing computational load and compromising generalization. Here we introduce Sequential Feature Detachment (SFD) to identify and prune non-essential features in ROCKET-based models, such as ROCKET, MiniRocket, and MultiRocket. SFD estimates feature importance using model coefficients and can handle large feature sets without complex hyperparameter tuning. Testing on the UCR archive shows that SFD can produce models with better test accuracy using only 10% of the original features. We named these pruned models Detach-ROCKET. We also present an end-to-end procedure for determining an optimal balance between the number of features and model accuracy. On the largest binary UCR dataset, Detach-ROCKET improves test accuracy by 0.6% while reducing features by 98.9%. By enabling a significant reduction in model size without sacrificing accuracy, our methodology improves computational efficiency and contributes to model interpretability. We believe that Detach-ROCKET will be a valuable tool for researchers and practitioners working with time series data, who can find a user-friendly implementation of the model at https://github.com/gon-uri/detach_rocket.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Time series classification (TSC) refers to the task of assigning a specific label or category to a given sequence of data points that are ordered in time. Its significance extends across various domains, including both foundational research and practical real-world applications, such as heart disease diagnosis (Ebrahimi et al. 2020), speech recognition (Hinton et al. 2012), environmental monitoring (Sarp and Ozcelik 2017; Davranche et al. 2010) and industrial quality control (Mehdiyev et al. 2017; Chadha et al. 2020). With the ever-increasing availability of sensor data and computing devices, the demand for accurate and efficient time series classification algorithms has grown exponentially. This need becomes particularly critical in the domains of healthcare and wearable devices, where the ability to robustly classify time series data in real time carries immense practical implications.

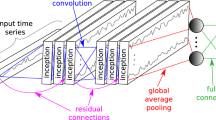

Machine learning (ML) models have proven to be a highly effective solution to TSC problems in a wide range of applications. Models such as Recurrent Neural Networks (RNNs), HIVE-COTE, and InceptionTime have demonstrated remarkable performance in this domain (Yong et al. 2019; Fawaz et al. 2020; Vaswani et al. 2017). However, the large number of learnable parameters and the complexity of the training procedure in such models can affect their scalability and efficiency, especially in the case of large numbers of instances or longer time series. For example, HIVE-COTE has a high training time complexity that scales as \(O\left( n^2 \cdot l^4\right)\), where n is the number of training samples and l is the length of the time series (Fawaz et al. 2020). InceptionTime, on the other hand, is faster to train, but the stochastic nature of the process leads to high variability in the results, making it necessary to use an ensemble of networks for prediction (Fawaz et al. 2020).

To address these challenges, alternative models such as ROCKET, MiniRocket, and MultiRocket were introduced (Dempster et al. 2020, 2021; Tan et al. 2022). They achieve state-of-the-art performance by training a shallow classifier on a large set of precomputed features generated by random convolutional kernels. Despite the significant reduction in learnable parameters, which greatly improves their training scalability, a large number of convolutions are still required to compute the features for inference. Considering the arbitrary nature of the kernels, we hypothesize that a significant fraction of the generated features are either redundant due to correlations or lack relevance for the classification task at hand. These superfluous features may not only add unnecessary computational load, but may also induce overfitting and compromise model generalization.

In this study, we present a novel methodology to sequentially select and prune the features generated by random convolutional kernel transformations in TSC, which we call Sequential Feature Detachment (SFD). Leveraging the linear nature of the classifier, our method uses model coefficients to estimate feature importance at each step. Unlike prior feature selection algorithms such as Thresholded Least-Squares (STLSQ) and Stepwise Sparse Regressor (SSR) (Brunton et al. 2016; Boninsegna et al. 2018), which were developed for sparse regression in the context of system identification, SFD is designed to efficiently handle tasks with large feature sets and eliminates the need for sensitive hyperparameter selection. Throughout this work, we will use the prefix ‘Detach’ to refer to SFD applied to any given base model (e.g., Detach-ROCKET).

To evaluate our methodology, we conducted experiments using the ROCKET model on the UCR archive, a standard benchmark for TSC. For the datasets studied, we show that models can be pruned to retain as little as \(5\%\) of the original number of features while maintaining performance equal to or better than the full initial model. In particular, when retaining \(10\%\) of the features, Detach-ROCKET shows on average a relative accuracy gain of \(0.2\%\) compared to the full model. We also tested SFD in other variants of ROCKET, namely MiniRocket and MultiRocket, where it showed similar pruning performance. In a direct comparison with alternative pruning strategies for the ROCKET model, specifically S-ROCKET and POCKET, Detach-ROCKET achieved the same level of accuracy while utilizing only \(10\%\) of the full model features, compared to an average of \(35.10\%\) and \(38.93\%\) for the other methods on the same datasets.

Additionally, we introduce an end-to-end procedure for finding the optimal model size when pruning a ROCKET model. This optimal configuration balances the trade-off between the number of pruned features and the accuracy of the pruned model on a validation set. Evaluation of the procedure on the largest binary UCR dataset reveals that it can reduce model size by \(98.9\%\), while delivering an average increase in test accuracy of \(0.6\%\) and attenuating overfitting in the training set from \(99.8\%\) to \(96.5\%\).

The rest of this paper is structured as follows. In Sect. 2, we review ROCKET models and previous work on feature selection. In Sect. 3, we introduce SFD and the end-to-end Detach-ROCKET model. In Sect. 4, we present our experimental results on the UCR dataset. In Sect. 5, we show a comparison of Detach-Rocket with alternative pruning strategies. In Sect. 6, we compute the complexity of our algorithm. Finally, Sect. 7 presents the discussion and Sect. 8 the conclusions.

2 Background

2.1 Time series classification

Reflecting the dominant trend in machine learning, the majority of state-of-the-art solutions for TSC are characterized by models containing a large number of parameters (Fawaz et al. 2019; Bagnall et al. 2017; Ruiz et al. 2021; Middlehurst et al. 2023). These include neural network architectures tailored for processing sequential data, such as RNNs, one-dimensional convolutions, and transformers (Yong et al. 2019; Uribarri and Mindlin 2022; Fawaz et al. 2020; Vaswani et al. 2017). A particularly successful example is InceptionTime, a deep convolutional neural network inspired by Inception models for images (Fawaz et al. 2020). Another category with an extensive number of parameters comprises ensemble methods, which usually combine several different types of classifiers into a large meta-ensemble. flat-COTE, TS-CHIEF, HIVE-COTE 1.0, and HIVE-COTE 2.0 are prominent examples of this approach (Shifaz et al. 2020; Bagnall et al. 2015; Lines et al. 2018; Middlehurst et al. 2021).

Despite their high performance, large models are not without limitations, which become critical in sparse data scenarios or on systems constrained by computational resources. Their large dataset requirements, scalability challenges, and deployment complexity underscore the need for alternative approaches. Among the available alternatives, random convolution kernel models offer compelling performance.

2.2 Random convolutional kernel transformation (ROCKET)

ROCKET is a computationally efficient model designed for time series classification (Dempster et al. 2020). It employs a large set of K non-trainable random convolutional kernels to process the input time series \(\textbf{s}_i\) and generate a collection of feature maps \(\{\mathbf {f_{ik}}\}\) by applying the following convolution operation:

The set of K randomly generated kernels are characterized by their coefficient values \(\{\mathbf {R_k}\}\), bias values \(\{b_k\}\), and their associated convolution properties, such as dilation and padding. Then, from each feature map \(\textbf{f}_{ik}\), the maximum value (MAX) and the proportion of positive values (PPV) are extracted, yielding a total of \(F=2K\) final attributes:

Here \(\textbf{x}_i\) refers to the feature set associated to the the input time series \(\textbf{s}_i\). After this transformation stage, which requires no training, a ridge linear classifier is fitted to the training dataset. In the binary case, the target labels \(y_i\) are converted to \(\{-1,1\}\) and the following optimization problem is solved:

where N is the size of the training dataset, and \(\theta _k\) are the coefficients of the regression to be found. For smaller to medium-sized datasets, solving the ridge regression using matrix factorization techniques is usually the best option (Rifkin 2007; Dempster et al. 2020), while for larger datasets, an iterative method like stochastic gradient descent provides fast training with a fixed memory cost (Dempster et al. 2020).

The performance of ROCKET is comparable to other leading larger models, but it requires significantly less computation (Dempster et al. 2020; Ruiz et al. 2021). Since ROCKET’s convolutional phase consists of non-learnable kernels, only the linear classifier needs to be trained. As a result, the training process is substantially simpler and faster, allowing ROCKET to handle larger datasets that were previously inaccessible to more complex models (Dempster et al. 2020). However, this efficiency may come at a cost. It is reasonable to expect that when using fixed (non-learnable) basis functions, the error in the worst-case scenario may be considerably larger compared to the use of adaptable (learnable) basis functions, as seen in neural networks. As shown in Barron (1993), in a quite general setting, the lower bound for the integrated squared error of a linear combination of n non-learnable basis functions scales as \(\propto (1/n)^{(2/d)}\), where d is the dimensionality of the space, which in our case is the length of the time series. Therefore, a large number of basis functions, or kernels in the context of ROCKET, is required for effective class discrimination.

Building on the foundation of ROCKET, numerous variants have emerged (Dempster et al. 2021; Tan et al. 2022; Schlegel et al. 2022; Wang et al. 2023; Dempster et al. 2023), of which two are noteworthy: MiniRocket and MultiRocket. MiniRocket elaborates on the approach by employing a dictionary of fixed diverse kernels instead of randomly generating them, providing similar accuracy through a more efficient process. MultiRocket, on the other hand, advances the MiniRocket design by including the first order difference of the time series as part of the input and integrating a wider array of pooling operators for each feature map. This increases the expressiveness of the extracted features and improves model performance at the cost of greater computational load.

All variants of ROCKET, regardless of their differences, share the same core strategy. First, the time series is subjected to a non-learnable transformation stage that produces a massive amount of features. Then, a regularized linear classifier is trained within this high-dimensional representation space. While effective, this strategy is not optimal. In particular, due to the arbitrary generation process, numerous features may be irrelevant to the classification task. Furthermore, even among the relevant features, many could potentially be highly correlated due to their sheer number. This excess of redundant and non-informative features can not only create unnecessary computational overhead, but also increase the potential for overfitting.

2.3 Previous work

In a previous study (Salehinejad et al. 2022), the authors investigated kernel selection and pruning for TSC with random convolutional kernels. They propose a population-based evolutionary optimization algorithm to find the optimal combination of kernels to keep in a ROCKET classifier, which they called S-ROCKET. By training a new ridge classifier on these selected features, they were able to achieve similar performance to the original full models while pruning \(60\%\) of the kernels.

In contrast to the aforementioned work, which uses a model-agnostic approach to prune the less relevant features, our methodology exploits the simplicity and shallowness of the linear classifier employed in ROCKET models. The problem of feature selection within linear models has been extensively studied in the field of statistical learning, providing a broad body of knowledge to draw upon. Particularly, when the number of features suprasses the number of data samples, this problem is referred to as sparse regression or compressive sensing (Hastie et al. 2009; James et al. 2013; Tibshirani 1996; Donoho 2006; Candès et al. 2006). The main challenge in our particular context lies in developing a methodology that can effectively handle the substantial number of features present in our models, with 20,000 in the case of the regular ROCKET model and up to 80,000 in MultiRocket.

A standard solution to address feature selection in a linear model is to apply a lasso (L1) regularization to the linear classifier (James et al. 2013; Tibshirani 1996). Then, by changing the regularization parameter, one could detect and discard the coefficients which are the ones first approaching zero as the regularization parameter increases. However, there is no closed-form expression for the solution of a lasso regression, and the available numerical algorithms for solving it are computationally expensive, making this option challenging for large datasets such as those produced by the ROCKET models. Nevertheless, in a recent preprint study, the authors explore the use of a particular variant of lasso regression, group elastic net, for feature selection in ROCKET models (Chen et al. 2023). They call their algorithm POCKET and show that it produces similar results to S-ROCKET.

An alternative strategy to Lasso regularization involves utilizing a backward-stepwise selection technique, which systematically removes less relevant features from the classification problem (Hastie et al. 2009). Recent studies in system identification have showcased the effectiveness of two specific variations of this strategy, namely Sequential Thresholded Least-Squares (STLSQ) and Stepwise Sparse Regressor (SSR) (Brunton et al. 2016; Boninsegna et al. 2018). Both algorithms exhibit robust performance and usually outperform other alternatives such as lasso or forward-stepwise selection, especially in scenarios where datasets are large or noisy (Kaptanoglu et al. 2023; de Silva et al 2020; Kaptanoglu et al. 2021). However, they are suboptimal for ROCKET feature selection because they require either a sensitive and unintuitive choice of hyperparameters or very heavy computation.

In the STLSQ algorithm, the one used in the popular SINDy method for systems identification, features are sequentially eliminated at each step if their corresponding regression coefficient does not exceed a predetermined threshold value. The elimination process ends when all remaining coefficients exceed the threshold (Brunton et al. 2016). This stands in contrast to SSR, where the feature with the smallest coefficient is discarded at each step until a model with only one feature remains. Subsequently, STLSQ applies a cross-validation procedure to select the optimal step and estimate the most suitable model configuration (Boninsegna et al. 2018).

In the following section, we present our proposed feature selection algorithm called Sequential Feature Detachment (SFD), which is a novel variant of the backward-stepwise selection technique specifically designed to address the computational challenge of the large number of features present in the classification problem after a ROCKET transformation.

3 Methods

In this section, we provide a comprehensive description of the methods used in this paper. Section 3.1 introduces SFD, the proposed algorithm for performing feature selection in linear classifiers with a large number of features. Section 3.2 describes the end-to-end procedure for training a pruned ROCKET model, including the criteria for selecting the optimal model size.

3.1 Sequential feature detachment (SFD)

Let \(\varvec{ \mathbb {F} }\) denote the set of features produced by the ROCKET transformation, which has a cardinality \(F=2K=\# \varvec{\mathbb {F}}\). In the case of a standard ROCKET model, \(F=20{,}000\), while for MultiRocket \(F=80{,}000\). The dataset to be used must be normalized along these features. We define \(\varvec{\mathbb {S}} \subseteq \varvec{\mathbb {F}}\) as the set of active features, which is initialized to be equal to \(\varvec{\mathbb {F}}\) and updated at each step.

The feature selection procedure involves iterative detachment steps, where a fixed percentage of the current active features is removed from \(\varvec{\mathbb {S}}\) at each step. The proportion of removed features, denoted as p, is the only relevant hyperparameter in the process.

At each step t, a ridge classifier is trained on the set of active features at that step \(\varvec{\mathbb {S}}_{t}\). This is done by solving the following optimization problem:

The training yields a set of optimal coefficients, \({\hat{\theta \ }_t}^{\textrm{ridge}} = \{\hat{\theta }_k \}\), each of which is associated with one particular active feature. The features are subsequently ranked based on the absolute value of these coefficients, {\(|\hat{\theta }_k|\)}. These values are proportional to the influence of each feature on the classifier’s decision. The lowest \(100\cdot p\) percentage of ranked features are then discarded, while the remaining \(100\cdot (1-p)\) percentage is retained as the new set of active features \(\varvec{\mathbb {S}}_{t+1}\). The pseudocode for this process is presented in Algorithm 1. Note that during this detachment process, the regularization parameter \(\lambda\) of the ridge regressors is set to the value obtained for the initial full model using cross-validation.

The value of the parameter p controls the trade-off between the computational cost and precision in the procedure. A higher p value speeds up pruning, but increases the risk of eliminating an entire family of correlated features that encode similar but relevant information from the time series. Note that in the case of correlated features, the magnitude of their individual coefficients may be small because the weight of their contribution to the classifier is distributed among all of them. To maintain a conservative default value, we set \(p=0.05\), which corresponds to removing \(5\%\) of the features at each step. In scenarios where computational training time or procedure precision is a concern, the value can be adjusted.

The percentage of total features removed at step t is independent of the number of initial features F, and it is given by the expression \(1-(1-p)^{t}\). As the number t increases, the computational cost of each step decreases as the trained model becomes smaller. The total number of steps performed, M, determines the maximum proportion of pruned features explored during the selection process. The choice of M is not critical, as long as the number of removed features extends beyond the optimal. For \(p=0.05\), setting \(M=150\) will explore removals of up to \(99.95\%\) of the features, which should be sufficient for most applications.

This methodology can also be extended to the case of multi-class problems, where there are more than 2 possible classes for each instance. Given a classification problem with C number of classes, C ridge classifiers are trained simultaneously, and each feature k now has C associated coefficients, \(\hat{\theta }_{kc}\). To determine the relevance of each feature and rank them accordingly, we implement a max-pooling operation along the class dimension. That is, at each step t, the features are ranked based on the values {\(|{{\text {max}}}_{c}(\hat{\theta }_{kc})|\)} instead of {\(|\hat{\theta }_k|\)}, and the rest of the procedure remains the same as for the binary case.

3.2 End-to-end feature pruning optimization

To determine the optimal number of features to retain in a given dataset, we introduce an end-to-end procedure. The procedure starts by dividing the dataset into three subsets: a training set, a validation set, and a test set. A standard ROCKET model is then trained on the training set and the SFD process is applied (as described in Sect. 3.1). Evaluation of the model at each pruning step on the validation set yields an accuracy curve. To select the optimal fraction of pruned features on this curve, \(Q_c\), a straightforward optimization problem is solved:

In this equation, q represents the proportion of pruned features and \(\alpha (q)\) the accuracy of the pruned model on the validation set. The hyperparameter c serves as a weighting factor that determines the tradeoff between these two variables which we want to maximize. The choice of c depends on the specific requirements of the use-case, with smaller values favoring accuracy and larger values favoring size.

Once \(Q_c\) is determined, the selected features in the pruned ROCKET are used to retrain a ridge classifier on the combined training and validation sets. This results in the final Detach-ROCKET model, an optimal reduced model containing only a subset of the original kernels. A final evaluation of Detach-ROCKET is then performed on the test dataset.

4 Experiments

4.1 Datasets and model description

To test the SFD methodology, we used all 42 binary classification datasets available in the UCR Time Series Classification Archive (Dau et al. 2019), a widely recognized benchmark for time series classification (Bagnall et al. 2017; Middlehurst et al. 2023). These datasets cover a wide range of univariate time series, varying in size and characteristics. Our decision to focus exclusively on binary classification tasks was primarily driven by computational constraints and a desire to evaluate SFD in its simplest setting, without class mixing. It is important to note that, as described in Sect. 3.1, the SFD algorithm can also handle multiclass scenarios. In Sect. 5, we compare Detach-ROCKET to alternative methods in a benchmark that includes both binary and multiclass problems.

In the feature generation phase of our experiments, we employed the standard ROCKET algorithm with a kernel count of \(K=10000\), as recommended by its original developers. For the implementation we used the sktime library (Löning et al. 2019, 2022). This transformation resulted in each input time series being mapped to a 20,000-dimensional feature vector, representing the PPV and MAX values of the convolution with each kernel. Subsequently, a ridge classifier was trained on these generated features. For training, we respected the original training/test split for each dataset. The regularization parameter for this classifier was determined using Leave One Out cross-validation across 20 alpha values, uniformly distributed on a logarithmic scale between \(e^{-10}\) and \(e^{10}\). In the text, we refer to this classifier as the Full Model.

All experiments were performed with the default SFD parameters, comprising the proportion of removed features p set to 0.05 and number of iteration steps M set to 150.

4.2 SFD results

We evaluated the SFD process on the 42 binary datasets from the UCR archive. The feature detachment process was applied 25 times to each dataset, using a different starting set of random kernels for each run. To evaluate its performance, we compare the accuracy obtained on the test set by the pruned model with the accuracy obtained on the test set by the full ROCKET model.

4.2.1 Comparative performance of pruned models to full ROCKET

Performance comparison of pruned models using SFD relative to the full ROCKET model. The plot shows the number of pruned models with better, equal, and worse performance compared to the full model, plotted against the percentage of retained features. The solid lines represent the mean number over 25 iterations, while the shaded area indicates the standard deviation. The dashed vertical line marks \(10\%\) of retained features (Color figure online)

Figure 1 shows the number of pruned models with better, equal, and worse performance compared to the full model, plotted against the percentage of retained features. The mean across the 25 iterations is depicted by solid lines, with the shaded region denoting the standard deviation. At the beginning of the feature detachment process, all pruned models (across the 42 datasets) mirror the performance of the full ROCKET model. As the feature reduction progresses, there is an even division among the models that become better or worse compared to the full model. Once the percentage of retained features drops below \(40\%\), the pruned models that outperform the full model become more prevalent. Specifically, when feature retention is between \(35.85\%\) and \(5.10\%\), there are significantly more pruned models that outperform the full model than pruned models that perform worse than the full model, as confirmed by a Kolmogorov-Smirnov (KS) test with \(p<0.05\). Results of the KS test are presented in Fig. 5 of the Appendix section.

Conversely, after \(95\%\) feature reduction, there is a noticeable drop in the number of pruned models that outperform the full model. With less than \(1.73\%\) of features remaining, the underperforming models significantly outnumber the better-performing models (KS test with \(p<0.05\), see Fig. 5). This drop in performance is to be expected when only a few features remain, since many classification tasks will not be linearly separable in a highly reduced feature space.

It is noteworthy that many pruned models, despite extensive feature elimination, maintain the exact same accuracy as the original full ROCKET model. This is due to two primary reasons: First, the full ROCKET model achieves \(100\%\) test set accuracy in 5 of the 42 datasets, making it impossible for the pruned model to perform better. Second, certain datasets have a limited number of test set instances, resulting in insensitive accuracy metrics and thus identical results.

4.2.2 Quantification of accuracy change relative to feature retention

Percentage change in test set accuracy (relative to the full ROCKET model) of pruned models using SFD as a function of retained features. Results are shown for the 42 studied datasets, each averaged over 25 realizations (1050 realizations in total). The solid line represents the mean, the dashed line represents the median, and the shaded area represents the interquartile range (25th to 75th percentile). For comparison, the figure also includes two alternative pruning strategies: random selection (in blue) and inverse-SFD (in green) (Color figure online)

To quantify the percentage gained or lost by the pruned models compared to the full model, we computed the percentage change in accuracy on the test set as a function of the features retained. Figure 2 displays the results for the 42 datasets, with each represented as the mean over 25 realizations (1050 realizations in total). We provide statistics including the mean, median, and quartiles (25th and 75th percentiles) across all 42 datasets. The graph reveals that between a level of \(30\%\) and \(10\%\) of feature retention, the gains from the improved pruned models outweigh the losses from the degraded models. Since many of the pruned models match the performance of the full models, the median of the percentage change is zero for most of the pruning process. Given that the average accuracy of the full ROCKET models across all realizations is \(91\%\), many of the pruned models have limited potential for improvement. This limitation is reflected in the skewed distribution of percentage change and the modest percentage gains over the full model.

Another interesting aspect to evaluate is the relevance of the optimal selection of features to discard during the pruning process. For this purpose, we present two alternative pruning strategies: one that randomly removes \(5\%\) of the features at each stage (random selection) and another that removes the top \(5\%\) most informative features (inverse-SFD). The comparison of SFD with both alternatives is shown in Fig. 2. On average, SFD outperforms random selection, while inverse-SFD is clearly the worst of the three. This result shows that SFD captures a hierarchy of importance in the features, and further supports the hypothesis that only a subset of features produced by ROCKET are relevant to the classification task.

Some datasets maintain strong performance even when a considerable percentage of features are randomly removed, suggesting that some binary classification tasks in UCR are relatively straightforward and can be efficiently handled by ROCKET models with a smaller number of kernels.

4.3 Detach-ROCKET with 10% of retained features

Accuracy (Acc) comparison between the pruned models with \(10\%\) of retained features and the full ROCKET model, for both test and training sets. Each point depicts the average accuracy of one of the 42 datasets over 25 realizations. The color indicates the training size of the dataset, with the color range saturated at 800 instances for enhanced contrast (Color figure online)

When employing SFD to prune a ROCKET classifier, the optimal final percentage of features to retain will ultimately depend on the dataset and the intended use case. In the following section, we show the results of our proposed method to determine this optimal number of features using a validation set. This selection is governed by a hyperparameter that controls the tradeoff between accuracy and model size.

Considering the results obtained for the binary UCR datasets, when a validation split is not feasible, we recommend retaining \(10\%\) of features for a standard ROCKET with 10,000 kernels (20,000 features). This recommendation is based on the results presented in Fig. 1, where it is shown that the proportion of pruned models that are better than the full models peaks around \(10\%\).

Figure 3 shows the accuracy comparison between the \(10\%\) pruned model and the full ROCKET model, in both test and training sets. Each point represents the average accuracy of a data set over 25 realizations. The pruned models performed better for \(52\%\) of the datasets, equally well for \(17\%\), and worse for \(31\%\), with an average accuracy improvement of \(0.2\%\). Although this selection is suboptimal, it can be useful in scenarios where the number of training samples is too small to separate a representative validation set. Another notable aspect of the pruned models is their reduction in accuracy on the training set, indicating a reduction in overfitting compared to the full model. The detailed results for each of the datasets can be found in Table 3 in the Appendix section.

In addition, we tested the effectiveness of our prunning methodology on other relevant variants of random convolutional kernel models: MiniRocket and MultiRocket. The results comparing the accuracies of the full models and their corresponding pruned versions are presented in Table 3 of the Appendix section.

In the case of Detach-MiniRocket, the average relative change in accuracy when retaining 10% of the features was \(-0.4\%\). In contrast to the ROCKET case, there is a slight decrease in accuracy for the pruned models. This can be explained by the fact that MiniRocket starts with an initial set of features that is smaller (10,000) and less redundant, and after pruning 90% of them, we keep half the amount of features compared to ROCKET.

The default MultiRocket transformation produces a substantially larger initial feature pool than ROCKET (80,000). To compensate for this difference, we set the fixed percentage of retained features in our experiments to be 5%. The resulting average relative change in accuracy for Detach-MultiRocket was 0.4%, twice that of Detach-ROCKET. In contrast to the MiniRocket case, the larger set of features created with MultiRocket seems to benefit more from feature selection and pruning.

4.4 Detach-ROCKET end-to-end procedure

Selection of optimal number of features using Detach-ROCKET. The grey curve represents the validation set accuracy achieved by the ridge classifier as a function of the percentage of retained features. Optimal values corresponding to \(c=0.1\) and \(c=10\) are highlighted in violet and orange, respectively. Dashed lines indicate the level sets for the optimized objective functions (see Eq. 7), showing that the function value changes perpendicularly to these lines (Color figure online)

To conduct a comprehensive evaluation of the end-to-end procedure, we selected the largest dataset within the UCR binary set, FordB, ensuring an adequate number of samples in each of the training, validation, and test sets. The goal in FordB is to diagnose whether or not a particular symptom is present in an automotive subsystem by analyzing engine noise. As provided by UCR, the dataset comprises a training set with 3636 samples and a test set with 810 samples. For validation, we employed a random subsampling method to select \(33\%\) of the elements from the training set.

Figure 4 illustrates the feature count optimization for one of the pruned models, where we show the accuracy on the validation set as a function of the percentage of retained features and the selected optimal points for weighting factors \(c=0.1\) and \(c=10\).

To control for stochastic variability arising from training set subsampling and kernel generation, the experiment to evaluate the end-to-end procedure was conducted 25 times. Results are summarized in Table 1. For Detach-ROCKET with \(c=0.1\), all 25 pruned models consistently surpassed the accuracy of their corresponding full ROCKET models. The mean test set accuracy shows an improvement of \(0.68\%\), validated by a KS test with \(p=0.01\). For Detach-ROCKET models with \(c=1\) and \(c=10\), the average accuracy differed by \(0.41\%\) and \(-0.5\%\), respectively. In these latter scenarios, however, the difference relative to the full ROCKET model was not statistically significant (\(\text {KS test } p>0.05\)). In Table 1 we also present the performance of Small ROCKET models. These are regular ROCKET models with a number of features equal to the average number of features of the Detach-ROCKET models. These models perform significantly worse than the Detach-ROCKET models, highlighting the benefits of starting with a large model and reducing it with SFD, rather than directly training a small ROCKET model from scratch.

5 Comparison with alternative methodologies

We evaluated the performance of Detach-ROCKET against state-of-the-art pruning methods, specifically Small ROCKET or S-ROCKET (Salehinejad et al. 2022) and Pruned ROCKET or POCKET (Chen et al. 2023). To ensure a fair comparison, we utilized the first 30 datasets from the UCR catalog, consistent with the benchmarks performed in their respective works.

The results, formatted as an extension of those presented in the POCKET paper, are shown in Table 2. The pruning rate for Detach-ROCKET was set to 10%, as determined by the analysis in the previous section. Additionally, we included results for the end-to-end version of Detach ROCKET, where the pruning rate is set automatically.

In terms of accuracy, all methods demonstrate similar performance when considering deviations. However, they operate at different levels of retained features. On average, S-ROCKET retains 39% of the features. POCKET does not have an automated method for setting the retention rate and instead uses the values determined by S-ROCKET, with exceptions for the first and last records, resulting in an average retention rate of 35%. In contrast, our methodology maintains the same accuracy with a fixed pruning level of 10% and can be pushed even further to retain only 2% of the features in the end-to-end mode.

It is worth noting that the end-to-end methodology relies on having a representative validation set to select the optimal model size. This condition is not always met by UCR datasets, where some datasets have a small training size. Nevertheless, we have included these results to show that even under these suboptimal settings, Detach-ROCKET is able to prune up to \(98\%\) of the total features without significant loss of accuracy.

6 Complexity, training time and code availability

6.1 Complexity analysis

We introduced the Selective Feature Detachment as a novel methodology for feature selection. To understand the computational implications of employing this methodology, we need to break down the complexity of the associated algorithms into its subcomponents. Let the following variables be defined:

-

\(N\): Number of training instances

-

\(K\): Number of kernels

-

\(F\): Number of features

-

\(L\): Length of the time series

-

\(M\): Number of steps, which suggested default value is 150

According to the original article (Dempster et al. 2020), the ROCKET transformation stage, which is required as an initial step, has a complexity of:

In our implementation, which is the one provided in the scikit-learn library, the complexity of the ridge classifier is governed by the relationship between the number of features F and the number of training instances N (Pedregosa et al. 2011; Dempster et al. 2020; Dongarra et al. 2018). Specifically, the solving method alternates between eigen decomposition and singular value decomposition, with an effective complexity of:

The overall complexity of SFD is determined by the sum of the complexities over the M iteration steps. At each step t, the dominant computation is the training of the ridge classifier. Let \(F_t\) denote the number of features retained at step t, then the complexity of SFD can be expressed as:

Considering that \(F=F_{0}\) and \(F_{t+1} < F_t\), then F is the largest value in \(F_t\), and an upper bound for the complexity of the algorithm is given by:

6.2 Training time

In our end-to-end experiments presented in section 4.4, we evaluated the computational overhead introduced by the SFD pruning methodology. While the training time for the full ROCKET was 305 s on average, the inclusion of the subsequent SFD procedure added an average of 58 s. These times were derived from 25 independent runs on the selected dataset using the same hardware for all computations. This additional \(19\%\) of training time invested in feature selection is relatively small considering that SFD efficiently reduces model size by \(98.86\%\) while providing an average increase in test accuracy of \(0.6\%\).

6.3 Code availability

The code used in this study is publicly available in the following GitHub repository: https://github.com/gon-uri/detach_rocket. It includes functions for end-to-end training of Detach-ROCKET for time series classification, as well as a standalone function for Sequential Feature Detachment. The latter is not limited to time series and is applicable to other domains where data have a large number of features and feature selection is required, such as bioinformatics and finance (Ma and Huang 2008; Liang et al. 2015).

7 Discussion

7.1 Comparison with previous methodologies

Comparing SFD with a standard backward-stepwise selection like SSR reveals the efficiency and adaptability of SFD, especially when dealing with large feature sets. SSR takes a step-by-step approach, removing features one at a time. This requires training a ridge classifier for each feature, which leads to computational bottlenecks, especially when the number of features (f) is large. SFD, on the other hand, uses a more granular strategy. It prunes several features in each iteration, with the number adaptively determined based on the remaining number of features. Early in the pruning process, when many features are still available, SFD can aggressively remove more of them. As the pool shrinks, SFD becomes more conservative, ensuring that it does not inadvertently remove an entire set of correlated features, which could adversely affect model performance.

Another notable characteristic of SFD is its reduced sensitivity to hyperparameter choices; the default value for the proportion of removed features (p) suffices in most cases. This contrasts with STLSQ, where the feature coefficient threshold is critical for the pruning result, and its selection can be very challenging, especially for randomly generated features.

In a direct comparison with alternative pruning strategies for the ROCKET model, S-ROCKET and POCKET, Detach-ROCKET provides equivalent classification accuracy, but with a significantly larger reduction in the number of features. Furthermore, unlike previous methods, Detach-ROCKET provides a way to automatically control the relationship between model size and model accuracy by varying the value of an interpretable parameter.

7.2 Interpretability and theoretical considerations

Selecting a subset of features not only provides an advantage in terms of accuracy, inference time, and model size, but also provides an opportunity for model interpretability. By comparing the properties of the initial set of kernels with the properties of the set of kernels retained after pruning (those associated with the retained features), it is possible to learn characteristics of the time series that are relevant to classification. For example, the distribution of dilation values in the selected kernels carries information about which are the relevant time scales in the problem. Furthermore, it is possible to analyze in which part of the time series the selected kernels are predominantly activated, indicating whether there are certain time periods that are more relevant for class determination. The idea of using pruning as a way to improve interpretability is further explored in follow-up publications (Solana et al. 2024; Uribarri et al. 2023).

From a theoretical viewpoint, our results can be contextualized within broader machine learning paradigms, such as neural network pruning and the lottery ticket hypothesis (Frankle and Carbin 2018; Hoefler et al. 2021). The latter theory postulates the existence of a smaller, "winning" subnetwork within a larger neural network, analogous to our concept of "winning kernels". Our feature pruning methodology in Detach-ROCKET could be seen as an analog to finding these winning subnetworks, but in the realm of time series classification models. Furthermore, the remarkable robustness of SFD results let us conjecture that the winning kernels may incorporate useful inductive biases for the different classification tasks, as is the case for lottery ticket subnetworks initialized with random weights (Zhou et al. 2019) (Fig. 6 pag. 7), and also may contain the maximum amount of information about the time series generative process, as can be seen in compressed data representations (Marsili and Roudi 2022).

7.3 Limitations and future work

One limitation observed in our study is that the pruned model does not significantly outperform the full model in terms of accuracy. One of the reasons for this may be due to the already high performance of the full model on the standard UCR benchmark. Future investigations on more challenging datasets would be valuable to further evaluate the performance of the pruned models.

A salient aspect of random convolutional kernel models like ROCKET is their reliance on a simple linear classifier for training, which can limit the expressiveness of the model. This design choice is motivated by the need to avoid overfitting on the inherently large number of features, a number that is required due to the nonlearnable (random) nature of the kernels, as discussed in Sect. 2.2. Our approach effectively prunes this feature space, retaining only the most informative kernels for classification. This reduction in dimensionality opens the door for future research to investigate the utility of fitting more complex classifiers to the selected feature set, as the risk of overfitting would be mitigated by the reduced feature count.

8 Conclusion

In this study, we introduce Sequential Feature Detachment (SFD), a novel method for pruning random convolutional kernel-based time series classifiers such as ROCKET, MiniRocket, and MultiRocket. Our results show that Detach-ROCKET models, which are ROCKET models pruned using SFD, are able to improve the performance of ROCKET while significantly reducing the number of features.

Our methodology identifies the minimal set of discriminative kernels essential for high-accuracy classification, facilitating the construction of compact models that rival the performance of more complex counterparts. This shallow and light architecture not only enhances interpretability, but also offers computational advantages, particularly in resource-constrained environments, as it requires less memory and fewer convolutions for inference compared to traditional ROCKET models. Furthermore, the SFD procedure can be applied to select relevant features generated by other types of time series transformations, as we exemplify in our github repository with the features produced by the popular tsfresh Python package (Christ et al. 2018). These contributions advance the field’s ongoing efforts to develop efficient and interpretable machine learning models for time series classification.

References

Bagnall A, Lines J, Hills J, Bostrom A (2015) Time-series classification with cote: the collective of transformation-based ensembles. IEEE Trans Knowl Data Eng 27(9):2522–2535

Bagnall A, Lines J, Bostrom A, Large J, Keogh E (2017) The great time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data Min Knowl Disc 31:606–660

Barron AR (1993) Universal approximation bounds for superpositions of a sigmoidal function. IEEE Trans Inf Theory 39(3):930–945

Boninsegna L, Nüske F, Clementi C (2018) Sparse learning of stochastic dynamical equations. J Chem Phys 148(24):241723

Brunton SL, Proctor JL, Kutz JN (2016) Discovering governing equations from data by sparse identification of nonlinear dynamical systems. Proc Natl Acad Sci 113(15):3932–3937

Candès EJ et al (2006) Compressive sampling. In: Proceedings of the international congress of mathematicians, Madrid, Spain, vol 3, pp 1433–1452

Chadha GS, Panambilly A, Schwung A, Ding SX (2020) Bidirectional deep recurrent neural networks for process fault classification. ISA Trans 106:330–342

Chen S, Sun W, Huang L, Li X, Wang Q, John D (2023) P-rocket: Pruning random convolution kernels for time series classification. arXiv preprint arXiv:2309.08499

Christ M, Braun N, Neuffer J, Kempa-Liehr AW (2018) Time series feature extraction on basis of scalable hypothesis tests (tsfresh-a python package). Neurocomputing 307:72–77

Dau HA, Bagnall A, Kamgar K, Yeh C-CM, Zhu Y, Gharghabi S, Ratanamahatana CA, Keogh E (2019) The UCR time series archive. IEEE/CAA J Autom Sin 6(6):1293–1305

Davranche A, Lefebvre G, Poulin B (2010) Wetland monitoring using classification trees and spot-5 seasonal time series. Remote Sens Environ 114(3):552–562

de Silva BM, Champion K, Quade M, Loiseau J-C, Kutz J N, Brunton SL (2020) Pysindy: a python package for the sparse identification of nonlinear dynamics from data. arXiv preprint arXiv:2004.08424

Dempster A, Petitjean F, Webb GI (2020) Rocket: exceptionally fast and accurate time series classification using random convolutional kernels. Data Min Knowl Disc 34(5):1454–1495

Dempster A, Schmidt DF, Webb GI (2021) Minirocket: a very fast (almost) deterministic transform for time series classification. In: Proceedings of the 27th ACM SIGKDD conference on knowledge discovery and data mining, pp 248–257

Dempster A, Schmidt DF, Webb GI (2023) HYDRA: competing convolutional kernels for fast and accurate time series classification. Data Min Knowl Discov 37(5):1779–1805

Dongarra J, Gates M, Haidar A, Kurzak J, Luszczek P, Tomov S, Yamazaki I (2018) The singular value decomposition: anatomy of optimizing an algorithm for extreme scale. SIAM Rev 60(4):808–865

Donoho DL (2006) Compressed sensing. IEEE Trans Inf Theory 52(4):1289–1306

Ebrahimi Z, Loni M, Daneshtalab M, Gharehbaghi A (2020) A review on deep learning methods for ECG arrhythmia classification. Expert Syst Appl: X 7:100033

Fawaz HI, Forestier G, Weber J, Idoumghar L, Muller P-A (2019) Deep learning for time series classification: a review. Data Min Knowl Disc 33(4):917–963

Fawaz HI, Lucas B, Forestier G, Pelletier C, Schmidt DF, Weber J, Webb GI, Idoumghar L, Muller P-A, Petitjean F (2020) Inceptiontime: finding alexnet for time series classification. Data Min Knowl Disc 34(6):1936–1962

Frankle J, Carbin M (2018) The lottery ticket hypothesis: finding sparse, trainable neural networks. arXiv preprint. arXiv:1803.03635

Hastie T, Tibshirani R, Friedman JH, Friedman JH (2009) The elements of statistical learning: data mining, inference, and prediction, vol 2. Springer, Berlin

Hinton G, Deng L, Dong Yu, Dahl GE, Mohamed A, Jaitly N, Senior A, Vanhoucke V, Nguyen P, Sainath TN et al (2012) Deep neural networks for acoustic modeling in speech recognition: the shared views of four research groups. IEEE Signal Process Mag 29(6):82–97

Hoefler T, Alistarh D, Ben-Nun T, Dryden N, Peste A (2021) Sparsity in deep learning: Pruning and growth for efficient inference and training in neural networks. J Mach Learn Res 22(1):10882–11005

James G, Witten D, Hastie T, Tibshirani R et al (2013) An introduction to statistical learning, vol 112. Springer, Berlin

Kaptanoglu AA, de Silva BM, Fasel U, Kaheman K, Goldschmidt AJ, Callaham JL, Delahunt CB, Nicolaou ZG, Champion K, Loiseau J-C et al (2021) Pysindy: a comprehensive python package for robust sparse system identification. arXiv preprint arXiv:2111.08481

Kaptanoglu AA, Zhang L, Nicolaou ZG, Fasel U, Brunton SL(2023) Benchmarking sparse system identification with low-dimensional chaos. Nonlinear Dyn, pp 1–22

Liang D, Tsai C-F, Hsin-Ting W (2015) The effect of feature selection on financial distress prediction. Knowl Based Syst 73:289–297

Lines J, Taylor S, Bagnall A (2018) Time series classification with hive-cote: the hierarchical vote collective of transformation-based ensembles. ACM Trans Knowl Discov Data (TKDD) 12(5):1–35

Löning M, Bagnall A, Ganesh S, Kazakov V, Lines J, Király FJ (2019) SKTIME: a unified interface for machine learning with time series. arXiv preprint arXiv:1909.07872

Löning M, Király F, Bagnall T, Middlehurst M, Ganesh S, Oastler G, Lines J, Walter M, ViktorKaz, Mentel L, Chrisholder, Tsaprounis L, Kuhns RN, Parker M, Owoseni T, Rockenschaub P, Canbartl, Jesellier, Shell E, Gilbert C, Bulatova G, Lovkush, Schäfer P, Khrapov S, Buchhorn K, Take K, Subramanian S, Meyer SM, Rushbrooke A, Rice B (2022) sktime/sktime: v0.13.4, September

Ma S, Huang J (2008) Penalized feature selection and classification in bioinformatics. Brief Bioinform 9(5):392–403

Marsili M, Roudi Y (2022) Quantifying relevance in learning and inference. Phys Rep 963:1–43

Mehdiyev N, Lahann J, Emrich A, Enke D, Fettke P, Loos P (2017) Time series classification using deep learning for process planning: a case from the process industry. Procedia Comput Sci 114:242–249

Middlehurst M, Large J, Flynn M, Lines J, Bostrom A, Bagnall A (2021) Hive-cote 2.0: a new meta ensemble for time series classification. Mach Learn 110(11–12):3211–3243

Middlehurst M, Schäfer P, Bagnall A (2023) Bake off redux: a review and experimental evaluation of recent time series classification algorithms. arXiv preprint arXiv:2304.13029

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) SCIKIT-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Rifkin RM, Lippert RA (2007) Notes on regularized least squares. Technical report, MIT. https://dspace.mit.edu/handle/1721.1/37318

Ruiz AP, Flynn M, Large J, Middlehurst M, Bagnall A (2021) The great multivariate time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data Min Knowl Disc 35(2):401–449

Salehinejad H, Wang Y, Yu Y, Jin T, Valaee S (2022) S-rocket: selective random convolution kernels for time series classification. arXiv preprint arXiv:2203.03445

Sarp G, Ozcelik M (2017) Water body extraction and change detection using time series: a case study of lake Burdur, Turkey. J Taibah Univ Sci 11(3):381–391

Schlegel K, Neubert P, Protzel P (2022) HDC-Minirocket: explicit time encoding in time series classification with hyperdimensional computing. In: 2022 International Joint Conference on Neural Networks (IJCNN). IEEE, pp 1–8

Shifaz A, Pelletier C, Petitjean F, Webb GI (2020) TS-chief: a scalable and accurate forest algorithm for time series classification. Data Min Knowl Disc 34(3):742–775

Solana A, Fransén E, Uribarri G (2024) Classification of raw MEG/EEG data with detach-rocket ensemble: an improved rocket algorithm for multivariate time series analysis. arXiv preprint arXiv:2408.02760

Tan CW, Dempster A, Bergmeir C, Webb GI (2022) Multirocket: multiple pooling operators and transformations for fast and effective time series classification. Data Min Knowl Disc 36(5):1623–1646

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc Ser B Stat Methodol 58(1):267–288

Uribarri G, Mindlin GB (2022) Dynamical time series embeddings in recurrent neural networks. Chaos Solitons Fractals 154:111612

Uribarri G, von Huth SE, Waldthaler J, Svenningsson P, Fransén E (2023) Deep learning for time series classification of Parkinson’s disease eye tracking data. arXiv preprint arXiv:2311.16381

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez AN, Kaiser Ł, Polosukhin I (2017) Attention is all you need. In: Advances in Neural Information Processing Systems 30

Wang P, Jiang W, Wei Y, Li T (2023) CEEMD-multirocket: Integrating CEEMD with improved multirocket for time series classification. Electronics 12(5):1188

Yong Yu, Si X, Changhua H, Zhang J (2019) A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput 31(7):1235–1270

Zhou H, Lan J, Liu R, Yosinski J (2019) Deconstructing lottery tickets: zeros, signs, and the supermask. In: Advances in neural information processing systems, vol 32

Acknowledgements

This work was in part financially supported by Digital Futures. Authors would like to thank Yago J.S. Cagnacci for engaging in insightful discussions.

Funding

Open access funding provided by Royal Institute of Technology.

Author information

Authors and Affiliations

Contributions

Conceptualization: GU and EF; methodology and investigation: GU and FB; visualization and software: GU and FB; writing—original draft preparation: GU and FB; writing—review and editing: GU, FB, EF and AA; funding acquisition and supervision: EF and AA.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Responsible editor: Eyke Hüllermeier.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Kolmogorov–Smirnov test results

See Fig. 5.

Results of the two-sample Kolmogorov–Smirnov test as a function of the percentage of features retained. At each pruning step, for the 25 experimental realizations, we test whether the number of pruned models that are better than the full model differs from the number of pruned models that are worse than the full model (violet and orange curves in Fig. 1). When feature retention is between 35.85 and 5.10%, both distributions are significantly different because there are more better than worse models. When the feature retention is less than \(1.73\%\), the distributions differ because there are significantly more worse models

Appendix B: Results table

See Table 3.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Uribarri, G., Barone, F., Ansuini, A. et al. Detach-ROCKET: sequential feature selection for time series classification with random convolutional kernels. Data Min Knowl Disc (2024). https://doi.org/10.1007/s10618-024-01062-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10618-024-01062-7