Abstract

We show that it is possible to achieve the same accuracy, on average, as the most accurate existing interval methods for time series classification on a standard set of benchmark datasets using a single type of feature (quantiles), fixed intervals, and an ‘off the shelf’ classifier. This distillation of interval-based approaches represents a fast and accurate method for time series classification, achieving state-of-the-art accuracy on the expanded set of 142 datasets in the UCR archive with a total compute time (training and inference) of less than 15 min using a single CPU core.

1 Introduction

Interval methods represent a long-standing and prominent approach to time series classification. Most interval methods are strikingly similar, closely following a paradigm established by Rodríguez et al. (2000) and Geurts (2001), and involve computing various descriptive statistics and other miscellaneous features over multiple subseries of an input time series, and/or some transformation of an input time series (e.g., the first difference or discrete Fourier transform), and using those features to train a classifier, typically an ensemble of decision trees (e.g., Deng et al. 2013; Lines et al. 2018). This represents an appealingly simple approach to time series classification (see Middlehurst and Bagnall 2022; Henderson et al. 2023).

We observe that it is possible to achieve the same accuracy, on average, as the most accurate existing interval methods simply by sorting the values in each interval and using the sorted values as features or, in order to reduce the size of the feature space (and, accordingly, computational cost), to subsample these sorted values, i.e., to use the quantiles of the values in the intervals as features. We name this approach Quant.

Descriptive statistics (i.e., as used in existing interval methods) provide information in relation to the distribution of values in a given interval. The motivating idea behind Quant is to use the distribution of values (in the form of quantiles) directly as features, in conjunction with the location information provided implicitly through the use of intervals. In other words, Quant encodes a prior that class can be distinguished based on the distribution of values in different locations of a time series. Quantiles allow for representing the distribution of values in an interval in more or less detail (i.e., by computing more or fewer quantiles), and are simple and efficient to compute. We demonstrate empirically with results on the datasets in the UCR archive that quantiles provide an effective basis for time series classification.

The difference in mean accuracy and the pairwise win/draw/loss between Quant and several other prominent interval methods, namely, TSF (Deng et al. 2013), STSF (Cabello et al. 2020), rSTSF (Cabello et al. 2023), CIF (Middlehurst et al. 2020), and DrCIF (Middlehurst et al. 2021b), for a subset of 112 datasets from the UCR archive (for which published results are available for all methods), are shown in the Multiple Comparison Matrix (MCM) in Fig. 1 (see Ismail-Fawaz et al. 2023). Results for the other methods are taken from Middlehurst et al. (2024). As shown in Fig. 1, Quant achieves higher accuracy on more datasets, and higher mean accuracy, than existing interval methods. Total compute time for Quant is significantly less than that of even the fastest of these methods (see further below).

When using quantiles (or sorted values) as features, as we increase or decrease interval length, we move between two extremes: (a) a single interval where the quantiles (or sorted values) represent the distribution of the values over the whole time series (distributional information without location information); and (b) intervals of length one, together consisting of all of the values in the time series in their original order (location information without distributional information): see Fig. 2.

Quantiles represent a superset of many of the features used in existing interval methods (min, max, median, etc.). Using quantiles allows us to trivially increase or decrease the number of features, by increasing or decreasing the number of quantiles per interval which, in turn, allows us to balance accuracy and computational cost. We find that quantiles can be used with fixed (nonrandom) intervals, without any explicit interval or feature selection process, and with an ‘off the shelf’ classifier, in particular, extremely randomised trees (Geurts et al. 2006), following Cabello et al. (2023). The hyperparameters for Quant and their default values (including the configuration of the classifier) are set out in detail in Sect. 3.

The key advantages of distilling interval methods down to these essential components are simplicity and computational efficiency. Quant represents one of the fastest methods for time series classification. The cost of computing the quantiles, in particular, is very low. Median transform time over 142 datasets in the UCR archive is less than one second. Total compute time (training and inference) is under 15 min for the same 142 datasets using a single CPU core. This is approximately \(5 \times\) faster than the fastest existing interval method, rSTSF (Cabello et al. 2023), which is already one of the fastest methods for time series classification.

The rest of this paper is structured as follows. In Sect. 2, we discuss relevant related work. In Sect. 3, we set out the key aspects of the method. In Sect. 4, we present experimental results including a sensitivity analysis.

2 Background

2.1 Interval methods

A time series is a sequence of values ordered in time, \({X = \left( x_{0}, x_{1}, \ldots , x_{n - 1}\right) }\), where n is time series length. An interval is a contiguous subset of values, \(\left( x_{a}, \ldots , x_{b}\right)\), defined by start and end points a and b, where \({a \ge 0}\), \({b > a}\), and \({b \le n - 1}\). Given a set of time series, for a given interval defined by start and end points a and b, interval methods involve extracting features from that same interval for each time series in the set. Interval methods typically compute features from a large number of such intervals.

Methods closely resembling current state-of-the-art interval methods have been applied to the domain of time series classification at least since Rodríguez et al. (2000) and Geurts (2001). Most existing interval methods are strikingly similar, closely following the basic concept as set out in, e.g., Rodríguez and Alonso (2004), namely:

- for a set of intervals (subseries) taken from the input time series, and/or some transformation of the input time series such as the first difference or discrete Fourier transform;
- compute descriptive statistics (e.g., mean and variance) and other features for the values in each interval; and
- use the computed features to train a classifier, typically an ensemble of decision trees.
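
The three steps above can be sketched in a few lines of NumPy. This is an illustrative sketch rather than any specific published method: the function name `interval_features`, the choice of mean, standard deviation, and slope as the features, and the assumption that all series share the same length are ours. The resulting matrix would then be passed to a classifier such as an ensemble of decision trees.

```python
import numpy as np

def interval_features(X, intervals):
    """Compute mean, standard deviation, and least-squares slope per interval.

    X: array of shape (num_series, n).
    intervals: list of inclusive (a, b) pairs with 0 <= a < b <= n - 1.
    Returns an array of shape (num_series, 3 * len(intervals)).
    """
    X = np.asarray(X, dtype=float)
    features = []
    for a, b in intervals:
        segment = X[:, a : b + 1]               # the same interval for every series
        t = np.arange(segment.shape[1], dtype=float)
        t_centred = t - t.mean()
        mean = segment.mean(axis=1)
        std = segment.std(axis=1)
        # slope of the least-squares line fitted to (time index, value)
        slope = ((segment - mean[:, None]) * t_centred).sum(axis=1) / (t_centred ** 2).sum()
        features.extend([mean, std, slope])
    return np.stack(features, axis=1)
```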

Different interval methods are characterised by the set of transformations applied to the input time series, the characteristics of the intervals, the use of interval and/or feature selection, and the choice of classifier.

Many methods use one or more transformations of the input time series. RISE replaces the input time series with spectral, autocorrelation, and autoregressive representations (Lines et al. 2018; Flynn et al. 2019). More recently, using the original input time series in combination with the first difference and some form of frequency-domain representation (e.g., the discrete Fourier transform) has led to significant improvements in accuracy over earlier methods (Cabello et al. 2020, 2023; Middlehurst et al. 2021b).

Some methods use fixed (i.e., nonrandom) intervals, recursively splitting the input time series in half (e.g., Rodríguez and Alonso 2004), while others use random intervals, recursively splitting the input time series at random points, or sampling intervals with random length and position (e.g., Deng et al. 2013; Baydoğan et al. 2013; Cabello et al. 2023). Other methods use heuristic approaches (e.g., Cabello et al. 2020; Altay and Baydoğan 2021).

All or almost all proposed methods use a fixed set of summary statistics (e.g., mean and variance), often combined with other features such as slope (e.g., Deng et al. 2013; Middlehurst et al. 2020). Several methods employ some form of explicit feature and/or interval selection process (e.g., Cabello et al. 2020, 2023; Li et al. 2023).

Other variations to the basic concept of interval methods include forming ‘bag of words’ representations of the features extracted from intervals (e.g., Baydoğan et al. 2013; Baydoğan and Runger 2016), fitting Gaussian process models to intervals (Berns et al. 2021), and approaches incorporating clustering (Schmidt and Lohweg 2021).

Most methods use an ensemble of decision trees, including specialised decision trees for interval features such as ‘time series trees’ (e.g., Deng et al. 2013; Middlehurst et al. 2020, 2021b), or standard ensembles such as boosted decision trees (e.g., Rodríguez et al. 2001; Geurts 2001), random forests, or extremely randomised trees (e.g., Cabello et al. 2023). Some methods use other classifiers such as support vector machines (e.g., Rodríguez and Alonso 2005). In this context, it is worth noting that some earlier methods were proposed prior to the introduction of what are now considered canonical classifiers such as random forests or extremely randomised trees, and prior to or only shortly after the introduction of the UCR archive (Dau et al. 2019).

The two most accurate current interval methods on the datasets in the UCR archive are DrCIF (Middlehurst et al. 2021b) and rSTSF (Cabello et al. 2023). Both, in turn, build on TSF (Deng et al. 2013). TSF uses random intervals (intervals with random position and length), and computes the mean, variance, and slope of the values in each interval. TSF uses an ensemble of specialised decision trees (‘time series trees’) with a splitting criterion that combines entropy and a tie-breaking procedure, and trains each tree separately using a different set of random intervals (Deng et al. 2013). Bagnall et al. (2017) found that TSF was faster than, and at least as accurate as, other interval methods on the datasets in the UCR archive at the time.
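
Sampling intervals with random position and length, as TSF does for each tree, can be sketched as follows. The function name, the number of intervals, and the minimum length of 3 are hypothetical parameters chosen for illustration; TSF's own settings are not reproduced here.

```python
import numpy as np

def sample_random_intervals(n, num_intervals, min_length=3, rng=None):
    """Sample intervals with a random length and a random start position.

    Returns inclusive (a, b) pairs with 0 <= a < b <= n - 1 and
    b - a + 1 >= min_length. Assumes n >= min_length.
    """
    rng = np.random.default_rng(rng)
    intervals = []
    for _ in range(num_intervals):
        length = int(rng.integers(min_length, n + 1))   # length in [min_length, n]
        a = int(rng.integers(0, n - length + 1))        # start such that the interval fits
        intervals.append((a, a + length - 1))
    return intervals
```

In a TSF-style ensemble, each tree would receive its own call to this function (e.g., with a different seed), so that different trees see different intervals.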

DrCIF builds on CIF (Middlehurst et al. 2020), sampling random intervals (random position and length) from the input time series, first difference, and a periodogram, and computes features including the mean, standard deviation, slope, median, interquartile range, min, max, as well as the catch22 features (Lubba et al. 2019). DrCIF uses a version of ‘time series trees’ as per TSF, training each tree separately with a random set of intervals and a random subset of features. DrCIF is one of the four components of HIVE-COTE 2 (HC2), the most accurate method for time series classification on the datasets in the UCR archive (Middlehurst et al. 2021b).

rSTSF builds on STSF (Cabello et al. 2020). For each of the original time series, first difference, a periodogram, and an autoregressive representation (the coefficients of an autoregressive model), and for each of the mean, standard deviation, slope, min, max, median, interquartile range, and two additional features (the number of intersections with the mean and the number of values greater than the mean), rSTSF recursively splits the input at random points, selecting intervals using the Fisher score, performing a kind of interval or feature selection. Unlike DrCIF, rSTSF uses an ‘off the shelf’ classifier, namely, extremely randomised trees. While DrCIF and rSTSF produce similar accuracies on the datasets in the UCR archive, rSTSF is considerably faster (Middlehurst et al. 2023).
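
The Fisher score measures how well a single feature separates the classes. We assume a common form here, the ratio of between-class to within-class spread, \(F = \sum_{c} n_{c} (\mu_{c} - \mu)^{2} \, / \, \sum_{c} n_{c} \sigma_{c}^{2}\); the exact variant and how rSTSF applies it during its recursive splitting may differ. The function name is hypothetical.

```python
import numpy as np

def fisher_score(feature, y):
    """Between-class spread over within-class spread for one feature."""
    feature = np.asarray(feature, dtype=float)
    y = np.asarray(y)
    overall_mean = feature.mean()
    between, within = 0.0, 0.0
    for c in np.unique(y):
        values = feature[y == c]
        between += len(values) * (values.mean() - overall_mean) ** 2
        within += len(values) * values.var()
    if within == 0.0:
        # perfectly separated classes score infinitely high; a constant feature scores zero
        return np.inf if between > 0.0 else 0.0
    return between / within
```

Used for interval selection, one would compute the score of a given feature over each candidate interval and keep the intervals that score highest.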

Quant represents an attempt to condense these various approaches into their essential ingredients. In particular, as set out in Sect. 3, Quant uses fixed dyadic intervals, and uses quantiles in place of all other features, i.e., using the distribution of values in each interval directly as features. Quant uses multiple input representations, and uses an off-the-shelf classifier without any additional interval or feature selection process.

2.2 Other state-of-the-art methods

There is a great diversity of methods for time series classification, reflecting the diversity of features which can be relevant to the classification of time series data. Different methods for time series classification represent different approaches to making use of the temporal ordering of values and extracting relevant information from time series data.

In the recent ‘bake off redux’, Middlehurst et al. (2024) evaluate the most accurate current methods for time series classification over an expanded set of 142 datasets from the UCR archive. Middlehurst et al. (2024) determine that the most accurate methods from each of a diverse set of different approaches to time series classification are: Proximity Forest, FreshPRINCE, Quant, WEASEL-2, H-InceptionTime, RDST, MultiRocket+Hydra, and HC2.

Proximity Forest (PF) belongs to a category of methods which classify time series based on their similarity to a set of exemplar time series under different distance measures. In particular, PF comprises an ensemble of decision trees using distance measures as splitting criteria (Lucas et al. 2019). In its use of exemplars and distance measures, which do not explicitly capture the distribution of values, this represents a substantially different approach to classification as compared to interval methods.

FreshPRINCE combines features drawn from the TSFresh feature set, computed over the whole of the input time series, with a rotation forest classifier (Middlehurst and Bagnall 2022). The use of these features bears a broad similarity to the use of descriptive statistics in interval methods, characterising the distribution of values in the time series, but without the location information provided by the use of intervals.

WEASEL-2 is a dictionary method, i.e., a method that extracts and counts symbolic patterns in time series. It builds on WEASEL (Schäfer and Leser 2017), and uses dilated sliding windows and the Symbolic Fourier Approximation (SFA) with random parameters to extract patterns, in conjunction with a ridge regression classifier (Schäfer and Leser 2023). There is arguably a loose similarity between dictionary methods such as WEASEL-2 and interval methods, to the extent that dictionary methods classify time series based on the distribution of counts of recurring patterns, albeit for a heavily-transformed symbolic representation of the input time series.

InceptionTime is an ensemble of convolutional neural network models based on the Inception architecture (Ismail Fawaz et al. 2020). InceptionTime performs classification based on a transform of the input time series using multiple layers of convolutional kernels. InceptionTime uses global pooling and does not explicitly capture location information. H-InceptionTime is a variant of InceptionTime which combines learned and fixed convolutional kernels (Ismail-Fawaz et al. 2022).

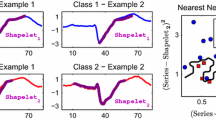

RDST is a shapelet method, computing features based on the distance between input time series and a set of discriminative subseries drawn from the training set; it uses randomly-selected shapelets with various dilations, and a ridge regression classifier (Guillaume et al. 2022). Shapelet methods classify time series based on the correspondence between class and the minimum Euclidean distance to each of a set of subseries, and do not explicitly consider the distribution of values in time series.

MultiRocket+Hydra combines features from both MultiRocket and Hydra. MultiRocket is an extension of Rocket and MiniRocket. Rocket transforms input time series using a large set of random convolutional kernels (random in terms of their length, weights, bias, dilation, and padding), and uses both PPV (‘proportion of positive values’) and max pooling (Dempster et al. 2020). MiniRocket uses a small, fixed set of convolutional kernels and PPV pooling, allowing for highly-optimised computation, and is significantly faster than Rocket (Dempster et al. 2021). MultiRocket combines the kernels from MiniRocket with an expanded set of pooling functions, and is close to the most accurate method for time series classification on the datasets in the UCR archive, while being only marginally slower than MiniRocket (Tan et al. 2022). Hydra combines aspects of both Rocket and dictionary methods, counting the occurrence of random patterns, represented by random convolutional kernels, in input time series (Dempster et al. 2023). Hydra is both faster and, with the exception of WEASEL-2, more accurate than other dictionary methods. All four methods employ a ridge regression classifier by default. With the exception of MultiRocket, which includes a pooling operator which takes into account location, the Rocket ‘family’ of methods use global pooling, and do not explicitly capture location information.
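
The two pooling operations used by Rocket, PPV and max, can be illustrated for a single kernel. Rocket draws the kernel weights, bias, dilation, and padding at random; this sketch instead takes weights, bias, and dilation as explicit arguments and uses no padding, so that the pooling can be seen in isolation. As is common in this literature, the "convolution" is implemented as a sliding dot product (cross-correlation). The function name is hypothetical.

```python
import numpy as np

def ppv_and_max(x, weights, bias, dilation=1):
    """Apply one dilated kernel to x (no padding) and pool the output with PPV and max."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(weights, dtype=float)
    span = (len(w) - 1) * dilation + 1
    outputs = np.array([
        np.dot(x[i : i + span : dilation], w) + bias
        for i in range(len(x) - span + 1)
    ])
    ppv = float(np.mean(outputs > 0))    # proportion of positive values
    return ppv, float(outputs.max())
```

Both pooled values summarise the whole convolution output, which is why these features carry no explicit location information.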

HC2 is an ensemble which makes use of the different types of features extracted by each of its constituent methods, namely, TDE (Middlehurst et al. 2021a), a dictionary method predating WEASEL-2, DrCIF, STC, a shapelet method predating RDST, and Arsenal, an ensemble of Rocket models (Middlehurst et al. 2021b). HC2 is the most accurate method for time series classification on the datasets in the UCR archive.

While there has been significant progress in terms of both accuracy and computational cost since Bagnall et al. (2017), there is still great variability in the computational efficiency of the most accurate methods, with total compute time on the expanded set of 142 datasets ranging from hours, for the faster methods, to several weeks (Middlehurst et al. 2024).

3 Method

Quant involves computing quantiles over a fixed set of intervals on the input time series (and three transformations of the input time series), and using the computed quantiles to train a classifier. Compared to both DrCIF and rSTSF, we use: (a) a single type of feature (quantiles); and (b) fixed, dyadic intervals. In contrast to rSTSF, we use no explicit interval or feature selection process (in this sense, feature selection is delegated entirely to the classifier) and, in contrast to DrCIF, we use a standard classifier. The simplicity of our approach allows for exceptional computational efficiency, and helps to clarify the factors which are material to classification accuracy.

The key characteristics of Quant are:

- the set of input representations;
- the set of intervals;
- the features (quantiles); and
- the classifier.

As set out in detail below, by default, Quant operates on the input time series as well as the first and second differences and a Fourier transform of the input time series. Quant uses a fixed set of dyadic intervals, with the specific properties as set out in detail in Sect. 3.2. Quant involves computing \(m \, / \, 4\) quantiles per interval (where m is interval length), and subtracting the interval mean from every second quantile. Quant uses the computed features to train an extremely randomised trees classifier, with 200 trees, and considers 10% of the total number of features at each split.

We emphasise that Quant is robust to different choices for most of these hyperparameters. That is, while the default value for each hyperparameter represents the optimal value per the sensitivity analysis in Sect. 4.2, using other values (e.g., for the number of intervals, or the number of quantiles per interval) has a relatively limited effect on overall accuracy. In other words, Quant will produce approximately the same results under a wide set of different hyperparameter configurations.

We implement Quant in Python, using the implementation of extremely randomised trees from scikit-learn (Pedregosa et al. 2011). Our code and results will be made available at https://github.com/angus924/quant.

3.1 Input representations

Using additional representations allows for capturing information not otherwise captured directly through the distribution of values in the original time series. Following Middlehurst et al. (2021b), we use the original time series, the first difference, \(X'=\left( x_{1}-x_{0}, x_{2}-x_{1},\ldots ,x_{n-1}-x_{n-2}\right)\), and the discrete Fourier transform, \(\mathcal {F}(X)\). We find that it is also beneficial to use the second difference, \(X''=\left( x'_{1}-x'_{0}, x'_{2}-x'_{1},\ldots ,x'_{n-2}-x'_{n-3}\right)\), although the improvement in accuracy is marginal: see Sect. 4.2.2. We also find that it is beneficial to smooth the first difference by applying a simple moving average, although again the effect is relatively small. We found no consistent improvement in accuracy from smoothing the other input representations. (The second difference is computed independently of the smoothed first difference.)
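
The four default representations can be sketched in NumPy as follows. Several details are assumptions of this sketch rather than statements from the method description: the moving-average window of 5, the use of "valid" convolution (which shortens the smoothed series), and the use of the magnitude of the real FFT as the Fourier representation. The function name is hypothetical, and series are assumed to have length of at least 3.

```python
import numpy as np

def input_representations(X, smoothing_window=5):
    """Original series, smoothed first difference, second difference, and DFT magnitude.

    X: array of shape (num_series, n), n >= 3.
    """
    X = np.asarray(X, dtype=float)
    first_diff = np.diff(X, n=1, axis=1)       # X', length n - 1
    second_diff = np.diff(X, n=2, axis=1)      # X'', length n - 2, from the unsmoothed X'
    window = max(1, min(smoothing_window, first_diff.shape[1]))
    kernel = np.ones(window) / window          # simple moving average
    smoothed_first_diff = np.apply_along_axis(
        lambda row: np.convolve(row, kernel, mode="valid"), 1, first_diff
    )
    fourier = np.abs(np.fft.rfft(X, axis=1))   # magnitude of the DFT, length n // 2 + 1
    return X, smoothed_first_diff, second_diff, fourier
```

Because each representation has a different length, intervals would be formed separately for each one.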

3.2 Intervals

As noted above, for a time series \({X = \left( x_{0}, x_{1}, \ldots , x_{n - 1}\right) }\), where n is time series length, an interval is a contiguous subset of values \(\left( x_{a}, \ldots , x_{b}\right)\), where \({a \ge 0, b > a, b \le n - 1}\). We define interval length as \(m = b - a + 1\), and the number of quantiles per interval as a fraction of interval length, e.g., \(m \, / \, 2\) corresponds to computing a number of quantiles equal to half the number of values in a given interval. For present purposes, we assume that all time series are univariate and of the same length. We leave the extension of the method to variable-length and multivariate time series to future work.

In contrast to Cabello et al. (2023), and Middlehurst et al. (2021b), we use fixed, dyadic intervals. Dyadic intervals arguably represent the simplest possible approach for forming systematic intervals, i.e., by recursively dividing the input in half. We define our set of intervals in terms of ‘depth’, d, such that we divide the input time series into \(\left( 2^{0},2^{1},\ldots ,2^{d-1}\right)\) intervals of length \(\left( n \, / \, 2^{0}, n \, / \, 2^{1},\ldots , n / 2^{d-1}\right)\), as shown in Fig. 3. For each depth greater than one we also add the same set of intervals shifted by half the interval length. In the context of the tradeoff between distribution information and location information discussed in Sect. 1, the inclusion of shifted intervals allows us to recover location information otherwise discarded by dyadic intervals, i.e., by capturing the distribution of the values in the ‘middle’ 50% of a region otherwise divided into ‘left’ and ‘right’ halves.
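
The dyadic intervals and their half-shifted copies can be generated as below. Where \(n\) is not divisible by \(2^{i}\), this sketch rounds interval boundaries down, which is an assumption; the function names are hypothetical. `default_depth` implements \(d=\text {min}(6, \lfloor \log _{2} n \rfloor + 1)\), using the fact that `n.bit_length()` equals \(\lfloor \log _{2} n \rfloor + 1\) for \(n \ge 1\).

```python
def default_depth(n):
    """d = min(6, floor(log2 n) + 1) for n >= 1."""
    return min(6, n.bit_length())

def dyadic_intervals(n, depth):
    """Dyadic intervals plus half-shifted copies, as inclusive (a, b) index pairs.

    At level i (0-based) the series is split into 2**i intervals of length
    about n / 2**i; for i >= 1 the same intervals shifted by half their
    length are added, keeping only the 2**i - 1 that fit inside the series.
    """
    intervals = []
    for level in range(depth):
        num = 2 ** level
        for j in range(num):
            intervals.append((j * n // num, (j + 1) * n // num - 1))
        if level > 0:
            for j in range(num - 1):
                start = (2 * j + 1) * n // (2 * num)
                end = (2 * j + 3) * n // (2 * num) - 1
                intervals.append((start, end))
    return intervals
```

For example, `dyadic_intervals(64, default_depth(64))` returns 120 intervals, including the shifted interval (8, 23), which spans the boundary between the first two quarters of the series.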

Accordingly, the total number of intervals is \(2^{d - 1} \times 4 - 2 - d\) for each input representation. By default, we use a depth of \(d=\text {min}(6, \lfloor \log _{2} n \rfloor + 1)\), meaning that there are 120 intervals per representation (for time series of length 32 or more), and the smallest intervals are of length \(\text {max}(1, n \, / \, 32)\).
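
The count follows from adding the dyadic intervals at each level \(i = 0, \ldots , d-1\) to the shifted intervals at each level \(i \ge 1\), where a level with \(2^{i}\) intervals admits \(2^{i} - 1\) shifted copies that lie within the series:

\[
\underbrace{\sum _{i=0}^{d-1} 2^{i}}_{\text {dyadic}} + \underbrace{\sum _{i=1}^{d-1} \left( 2^{i} - 1\right) }_{\text {shifted}} = \left( 2^{d} - 1\right) + \left( 2^{d} - 2 - (d - 1)\right) = 2^{d+1} - d - 2 = 2^{d - 1} \times 4 - 2 - d.
\]

For \(d = 6\), this gives \(2^{5} \times 4 - 2 - 6 = 120\).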

As noted above, we set the number of quantiles as a proportion of interval length. As a result, the total number of features is always proportional to time series length. For example, for a time series of length \(n = 64\), if we split the time series into four intervals of length \(m = n \, / \, 4 = 64 \, / \, 4 = 16\), and we compute \(m \, / \, 4 = 16 \, / \, 4 = 4\) quantiles per interval, we produce a total of 16 features: 4 quantiles per interval for each of 4 intervals equates to a total of 16 features. If, instead, we were to split the same series into 8 intervals of length \(m = n \, / \, 8 = 64 \, / \, 8 = 8\), we would compute \(m \, / \, 4 = 8 \, / \, 4 = 2\) quantiles per interval producing, again, a total of 16 features: 2 quantiles per interval for each of 8 intervals equates to a total of 16 features. Including the shifted intervals approximately doubles the total number of features.
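
The invariance in this example holds at every level of the dyadic decomposition. Ignoring rounding (i.e., assuming \(n \, / \, 2^{i}\) is a multiple of 4), level \(i\) contributes

\[
2^{i} \times \frac{n \, / \, 2^{i}}{4} = \frac{n}{4}
\]

features per representation, independent of \(i\). The shifted intervals at level \(i \ge 1\) number \(2^{i} - 1\), contributing \(\left( 2^{i} - 1\right) n \, / \, (4 \cdot 2^{i}) = n \, / \, 4 - n \, / \, (4 \cdot 2^{i})\) features, slightly fewer than \(n \, / \, 4\), which is why including them only approximately doubles the total.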

3.3 Features

The sorted values represent the empirical distribution of values in each interval. As noted above, using the sorted values as features allows us to capture the distribution of the values in each interval directly, and use that information as the basis for classification.

Quantiles, being a subsample of the sorted values, represent an approximation of the full set of values, that is, an approximation of the empirical distribution. Importantly, this approximation reduces the size of the feature space which, in turn, reduces computational complexity (in particular, in relation to classifier training).

As noted above, we define the number of quantiles per interval in proportion to interval length. We compute \(k = m \, / \, v\) quantiles per interval at the evenly-spaced probabilities \(\left( 0 / (k - 1), 1 / (k - 1), \ldots ,(k - 1) / (k - 1)\right)\), where m is interval length and, by default, \(v = 4\). (For intervals of length one, we simply use the given value. Where \(m \, / \, v \le 1\), we use the median.) So, for example, for an interval of length \(m = 16\), by default we take \(m \, / \, v = 16 / 4 = 4\) quantiles from this interval. Taking \(m \, / \, v\) quantiles is essentially equivalent to sorting the m values in the interval and keeping only every \(v^{\text {th}}\) value. (We note, however, that strictly speaking, it is not necessary to sort all of the values in an interval in order to compute the quantiles.) Taking m quantiles per interval (i.e., \(v = 1\)) is equivalent to using the sorted values directly (i.e., not subsampling).
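
A minimal sketch of the quantile computation for one interval follows. Rounding \(m \, / \, v\) down to an integer, and relying on NumPy's default linear interpolation between order statistics, are assumptions of this sketch; the function name is hypothetical.

```python
import numpy as np

def interval_quantiles(values, v=4):
    """k = m // v evenly spaced quantiles of one interval's values."""
    values = np.asarray(values, dtype=float)
    m = len(values)
    if m == 1:
        return values.copy()                      # length-one interval: use the value itself
    k = m // v
    if k <= 1:
        return np.array([np.median(values)])      # m / v <= 1: use the median
    probs = np.arange(k) / (k - 1)                # 0/(k-1), 1/(k-1), ..., (k-1)/(k-1)
    return np.quantile(values, probs)
```

With `v=1`, the probabilities \(j \, / \, (m - 1)\) fall exactly on the order statistics, so the result equals `np.sort(values)`, matching the statement that taking m quantiles is equivalent to using the sorted values.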

Broadly speaking, we find that accuracy increases as the number of quantiles per interval increases, although the actual differences in accuracy are small, and computing more quantiles per interval results in proportionally higher computational cost: see Sect. 4.2.1.

We find that it is beneficial to subtract the interval mean (that is, the mean of all of the values in the interval) from every second quantile: see Fig. 4. Subtracting the mean from every second quantile allows for capturing both the ‘raw’ distribution of values and the ‘shape’ of the distribution (i.e., divorced from the mean of the values in a given interval). (Note that we only subtract the mean where both the interval length and the number of quantiles per interval are greater than or equal to two.) Although there are multiple potential approaches to integrating this information into the features, we find that it is efficient and effective to simply subtract the interval mean from every second quantile. The advantage of this approach is that it has no effect on the total number of features. Note, however, that the effect of subtracting the mean from half of the quantiles versus not subtracting the mean is relatively small: see Sect. 4.2.3.

Fig. 4 An illustration of quantiles drawn from intervals of length \(n \, / \, 4\). We subtract the interval mean from every second quantile, such that we compute features representing both the distribution of the values in the interval and the distribution of the values in the interval relative to the mean.
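
Subtracting the interval mean from every second quantile can be sketched as follows. The text does not say which half of the quantiles is adjusted; this sketch assumes the second, fourth, and so on (zero-based indices 1, 3, ...). Rounding \(m \, / \, v\) down is also an assumption, and the function name is hypothetical.

```python
import numpy as np

def quantile_features(values, v=4):
    """Quantiles of one interval, with the interval mean subtracted from every second one."""
    values = np.asarray(values, dtype=float)
    m = len(values)
    k = max(1, m // v)
    # a single quantile is the median (for m == 1 this is just the value itself)
    probs = np.linspace(0.0, 1.0, k) if k > 1 else np.array([0.5])
    q = np.quantile(values, probs)
    if m >= 2 and k >= 2:
        q[1::2] -= values.mean()    # assumed: adjust quantiles at indices 1, 3, 5, ...
    return q
```

The number of features per interval is unchanged: half of the quantiles describe the raw distribution, and the other half describe its shape relative to the interval mean.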

3.4 Classifier

We use extremely randomised trees (Geurts et al. 2006), as per rSTSF (Cabello et al. 2023). The key distinctions from random forests are that extremely randomised trees do not use bagging, and that they draw a random split point for each candidate feature rather than searching for the best split point. (We demonstrate the differences in accuracy between using extremely randomised trees versus a random forest and a ridge regression classifier in Sect. 4.2.4.)
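
The randomised split selection at a single tree node can be sketched as below, following the description in Geurts et al. (2006): for each randomly chosen candidate feature, one threshold is drawn uniformly between that feature's minimum and maximum in the node, and the candidate with the lowest impurity wins. This is a conceptual sketch, not scikit-learn's implementation; using Gini impurity as the score and the function names are our assumptions.

```python
import numpy as np

def gini(y):
    """Gini impurity of a non-empty array of class labels."""
    _, counts = np.unique(y, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def extra_trees_split(X, y, num_candidates, rng=None):
    """Return (feature, threshold) for one node, or (None, None) if no valid split."""
    rng = np.random.default_rng(rng)
    best_feature, best_threshold, best_impurity = None, None, np.inf
    for f in rng.choice(X.shape[1], size=num_candidates, replace=False):
        lo, hi = X[:, f].min(), X[:, f].max()
        if lo == hi:
            continue                                   # constant feature: cannot split
        threshold = rng.uniform(lo, hi)                # one random cut point, no search
        left = X[:, f] < threshold
        if not left.any() or left.all():
            continue
        impurity = (left.sum() * gini(y[left]) + (~left).sum() * gini(y[~left])) / len(y)
        if impurity < best_impurity:
            best_feature, best_threshold, best_impurity = int(f), threshold, impurity
    return best_feature, best_threshold
```

Because no threshold search takes place, the cost per candidate feature is a single pass over the node's examples, which is part of why examining a larger number of candidate features per split remains affordable.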

Interval methods can potentially produce a large number of features, depending on the number of input representations, the number of intervals, and the number of features per interval. For extremely randomised trees, the typical ‘default’ number of candidate features per split is the square root of the total number of features (Geurts et al. 2006).

However, a large number of features in combination with a sublinear number of candidate features per split could potentially result in the classifier ‘running out’ of training examples before adequately exploring the feature space, especially in the context of smaller datasets. In other words, with a sublinear number of candidate features per split, as the size of the feature space grows, the probability of any given feature being considered decreases.
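
The effect can be made concrete by computing the fraction of all features examined at a single split under each rule. The conversion below, \(\max (1, \lfloor \sqrt{p} \rfloor )\) for the square-root rule and \(\max (1, \lfloor r p \rfloor )\) for a fraction \(r\), mirrors, as far as we know, how scikit-learn interprets its `max_features` parameter; the function name is hypothetical.

```python
import math

def fraction_considered(p, rule):
    """Fraction of all p features examined at one split, for 'sqrt' or a float fraction."""
    if rule == "sqrt":
        k = max(1, int(math.sqrt(p)))
    else:
        k = max(1, int(rule * p))    # e.g. rule = 0.1 for 10% of all features
    return k / p
```

With \(p = 10{,}000\) features, the square-root rule examines 1% of the features at each split, and with \(p = 1{,}000{,}000\) only 0.1%, whereas a rule of 0.1 examines 10% regardless of \(p\).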

To this end, we find that it is beneficial to increase the number of candidate features per split to a linear proportion of the total number of features, in particular, \(10\%\) of the total number of features. In effect, we delegate interval and feature selection entirely to the classifier. The results show that this approach is both effective and computationally efficient.
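
In scikit-learn, these defaults map onto `ExtraTreesClassifier` as sketched below. Only the number of trees (200) and the fraction of features per split (10%) come from the text; leaving every other parameter at scikit-learn's defaults, and the `n_jobs` and `random_state` settings, are assumptions of this sketch, and the factory function name is hypothetical.

```python
from sklearn.ensemble import ExtraTreesClassifier

def make_quant_classifier(random_state=None):
    """Extremely randomised trees configured with the defaults stated for Quant."""
    return ExtraTreesClassifier(
        n_estimators=200,          # 200 trees
        max_features=0.1,          # a float is read as a fraction: 10% of all features per split
        n_jobs=1,                  # a single CPU core
        random_state=random_state,
    )
```

The classifier would then be trained with `make_quant_classifier().fit(features_train, y_train)` on the quantile features. Note that `bootstrap` defaults to `False` for this estimator, consistent with extremely randomised trees not using bagging.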

3.5 Complexity

We treat the computational cost of sorting the values as an upper bound on the cost of computing the quantiles: \(O(n \log n)\), where n is time series length. Naively, computing the quantiles for all intervals requires sorting the values in each interval, for each input representation. However, as we use a fixed number of input representations, and a fixed number of intervals, we treat these as constant factors.

In principle, we could sort each input representation once, keeping track of the indices of the sorted values, and then form any interval by selecting the already-sorted values using their indices. In practice, even the naive approach incurs negligible overall computational cost. Median transform time over 142 datasets in the expanded UCR archive is less than one second. The majority of compute time is spent in training the classifier. In other words, any attempt at optimising total compute time should concentrate on reducing the size of the feature space, and/or improving the efficiency of classifier training. We leave further optimisation for future work.
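
The idea of sorting once and then reading off any interval's sorted values can be sketched as follows. The indices that sort the whole series list positions in ascending order of value; keeping only those positions that fall inside \(\left[ a, b\right]\) yields exactly the interval's values in sorted order. The function name is hypothetical.

```python
import numpy as np

def sorted_interval_values(x, a, b, order=None):
    """Sorted values of x[a..b] (inclusive), selected from a single global sort of x."""
    x = np.asarray(x)
    if order is None:
        order = np.argsort(x, kind="stable")    # computed once per series and reused
    in_interval = (order >= a) & (order <= b)   # masking preserves the sorted order
    return x[order[in_interval]]
```

Each interval then costs one linear pass over the series rather than a sort of the interval, a tradeoff that only pays off when there are many intervals per series; as noted above, the naive approach is already cheap in practice.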

Assuming approximately balanced trees, the complexity of training the classifier is \({O(p \cdot q \log q)}\), where p is the total number of features, and q is the number of training examples (see Louppe 2014). As we consider a linear proportion of the total number of features at each split, complexity is linear with p (which is, in turn, linear with n). The number of trees is not proportional to the number of training examples, or the number of features, so we treat this as a constant factor.

4 Experiments

We evaluate Quant on the datasets in the UCR archive, including 30 datasets recently incorporated into the archive, showing that Quant is at least as accurate, on average, as the most accurate existing interval methods, while being meaningfully faster. We also show the effect of key hyperparameter choices including the number of features, the set of input representations, the number of trees, and the number of candidate features per split. Unless otherwise stated, the results presented are for Quant using the default hyperparameters as set out in Sect. 3.

4.1 Comparison with state of the art

We compare Quant with the most accurate existing interval methods, and other state-of-the-art methods for time series classification. In order to allow for direct comparability with published results, we evaluate Quant on the same datasets and using exactly the same 30 predefined resamples as used in Middlehurst et al. (2024).

Results for the other methods included in this comparison are taken from Middlehurst et al. (2024). In an earlier version of this paper, we compared Quant to results from Middlehurst et al. (2023), a preprint version of the same paper. We have now updated this analysis to reflect the changes between the preprint and published versions of the paper. In particular: (a) four of the datasets have been modified (the results for the relevant methods have been updated accordingly); (b) the configuration of HC2 has changed, resulting in a significant (approximately \(2.5\times\)) improvement in overall compute time (as well as very marginal differences in accuracy); (c) H-InceptionTime has replaced InceptionTime as the most accurate deep learning method; and (d) Quant has replaced rSTSF as the most accurate interval method. We acknowledge that there are many potential sources of variability when training a given method, and there is therefore a certain margin of error when comparing against an existing reference set of results, as we do in comparing Quant to results from Middlehurst et al. (2024).

For the purposes of these experiments, we are presenting our own results for Quant. We note that, in the process of including Quant in their analysis, Middlehurst et al. (2024) have also independently produced results for Quant. Figure 17 (Appendix) shows our accuracy results versus the results for Quant presented in Middlehurst et al. (2024). The two sets of results are very similar, the small differences being attributable to how the random seed has been set for the classifier.

Figure 1 shows the Multiple Comparison Matrix (MCM) for Quant versus other prominent interval methods, namely TSF, STSF, rSTSF, CIF, and DrCIF, over a subset of 112 datasets from the UCR archive. For Quant versus each of these methods, the MCM gives the difference in mean accuracy, the pairwise win/draw/loss, and the p value for a Wilcoxon signed-rank test. In addition, Fig. 5 shows the pairwise accuracy of Quant versus the two most accurate existing interval methods, namely DrCIF (left) and rSTSF (right), on the same subset of 112 datasets. (Published results for TSF, STSF, CIF, and DrCIF are available only for these 112 datasets, and not for the additional datasets added to the archive per Middlehurst et al. (2024), so this analysis is limited to these 112 datasets.)

Quant is more accurate on average than existing interval methods, including DrCIF and rSTSF, although the actual differences in accuracy are small. Quant is more accurate than DrCIF on 65 datasets, and less accurate on 43. Similarly, Quant is more accurate than rSTSF on 65 datasets, and less accurate on 42. However, as the results for all three methods are highly correlated, and the differences in accuracy are mostly small, even small changes in accuracy could change the appearance of the results, in particular the ratio of wins to losses. We note that Middlehurst et al. (2024) also conclude that Quant is both the most accurate and the fastest interval method on these datasets.
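The pairwise statistics summarised in an MCM (win/draw/loss counts and a Wilcoxon signed-rank p value) can be sketched as follows. This is a minimal, standard-library illustration under our own assumptions: a draw means exactly equal accuracy, zero differences are discarded, and the p value uses the two-sided large-sample normal approximation with a tie correction. The MCM itself may use different settings (e.g., exact p values), the function names are hypothetical, and in practice `scipy.stats.wilcoxon` is the usual off-the-shelf choice.

```python
import math


def win_draw_loss(acc_a, acc_b, tol=0.0):
    """Count datasets where method A beats, ties with, or loses to method B.

    A draw is any dataset where the accuracies differ by at most `tol`.
    """
    wins = sum(a - b > tol for a, b in zip(acc_a, acc_b))
    losses = sum(b - a > tol for a, b in zip(acc_a, acc_b))
    draws = len(acc_a) - wins - losses
    return wins, draws, losses


def wilcoxon_signed_rank(acc_a, acc_b):
    """Two-sided Wilcoxon signed-rank p value via the normal approximation.

    Zero differences are dropped; tied absolute differences get their average rank.
    """
    diffs = [a - b for a, b in zip(acc_a, acc_b) if a != b]
    n = len(diffs)
    if n == 0:
        return 1.0  # no non-zero differences, so no evidence of a difference
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of 1-based ranks i+1 .. j+1
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
```

Given per-dataset accuracies for two methods listed in the same dataset order, `win_draw_loss` returns a `(wins, draws, losses)` tuple and `wilcoxon_signed_rank` returns a p value; with only a handful of datasets the normal approximation is rough, which is one reason exact tests are often preferred.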

As noted above, thirty additional datasets were added to the UCR archive per the recent ‘bake off redux’ (Middlehurst et al. 2024). Figure 6 shows the MCM for Quant versus current state-of-the-art methods, namely HC2, MultiRocket+Hydra, RDST, WEASEL-2, H-InceptionTime, rSTSF, FreshPRINCE, and PF (see Sect. 2), over 30 resamples of the expanded set of 142 datasets. Figure 7 shows the pairwise accuracy of Quant versus rSTSF (left) and HC2 (right) for all 142 datasets. (Results for rSTSF are taken from Middlehurst et al. (2023), except for the four updated datasets, for which we produced the results ourselves.)

Over these 142 datasets, Quant is reasonably similar to both WEASEL-2 and H-InceptionTime in terms of mean accuracy and win/draw/loss. However, Quant is clearly, if modestly, less accurate than the most accurate methods (RDST, MultiRocket+Hydra, and HC2). Quant is more accurate than rSTSF on 82 datasets, and less accurate on 55. In contrast, Quant is more accurate than HC2 on only 44 datasets, and less accurate on 94.

However, Quant is noticeably faster than any of these methods. Total compute time (training and inference) over all 142 datasets, averaged over 30 resamples, is less than 15 min using a single CPU core, compared to approximately 1 h 20 min for MultiRocket+Hydra, 1 h 35 min for rSTSF, almost 2 h for WEASEL-2, more than 4 h for RDST, more than one day for FreshPRINCE, several days for H-InceptionTime, and more than a week for HC2 and PF. (Timings for Quant are averages over 30 resamples, run on a cluster using Intel Xeon E5-2680 and Xeon Gold 6150 CPUs, restricted to a single CPU core per dataset per resample. Timings for rSTSF are taken from Middlehurst et al. (2023). Timings for other methods are taken from Middlehurst et al. (2024). We emphasise that, while the timings for other methods are also taken from experiments run on a cluster using a single CPU core per method per dataset per resample, these timings are not necessarily directly comparable, due to various residual hardware and software differences. There is an inevitable margin of error when comparing compute times on different hardware, and different runs are likely to produce at least slightly different results. We note that the total training time for Quant reported by Middlehurst et al. (2024) is actually shorter than our own recorded training time: 13 min 12 s vs 14 min 53 s.) Using 8 CPU cores, total compute time is reduced to 6 min.

Accordingly, while HC2 is, on average, more accurate than Quant on these datasets, that additional accuracy comes at significant additional computational expense: Quant is more than three orders of magnitude (more than \(1{,}000\times\)) faster than HC2 in terms of total compute time.
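For scale (our own arithmetic, taking Quant's total compute time as just under 15 min), a ratio of \(1{,}000\times\) corresponds to

\[
1{,}000 \times 15\ \text{min} = 15{,}000\ \text{min} = 250\ \text{h} \approx 10.4\ \text{days},
\]

so a speed-up of more than three orders of magnitude implies a total compute time for HC2 of roughly ten days or more, in line with the ‘more than a week’ reported above.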

Nevertheless, Quant is as accurate as, or more accurate than, HC2 on 48 of the 142 datasets. We note also that there are multiple datasets where, at least among the methods included in this comparison, interval methods produce the highest accuracy. Quant, for example, produces the highest accuracy of any method, including HC2, on the ‘FreezerRegularTrain’ dataset. We suggest that this dataset is representative of datasets where class can be distinguished by the distribution of values in a particular region of the time series (or transformations thereof), i.e., using the features extracted by Quant.

The training time for the classifier is proportional to the total number of features. Accordingly, we can improve overall compute time by reducing the number of intervals and the number of quantiles per interval. To this end, Fig. 18 (Appendix) shows the pairwise accuracy for a faster configuration of Quant (informally, QuantFAST), using approximately half the number of intervals (\(d = 5\)), and half the number of quantiles per interval (\(m \, / \, 8\)), versus rSTSF. Over 142 datasets, QuantFAST is more accurate than rSTSF on 70 datasets, and less accurate on 66. Total compute time for QuantFAST is approximately 7 min 40 s using a single CPU core. In other words, QuantFAST achieves almost the same accuracy, on average, as rSTSF, but is approximately \(10\times\) faster.
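The speed-up of QuantFAST follows directly from the reported timings (our arithmetic). Relative to rSTSF,

\[
\frac{1\ \text{h}\ 35\ \text{min}}{7\ \text{min}\ 40\ \text{s}} = \frac{5{,}700\ \text{s}}{460\ \text{s}} \approx 12.4,
\]

i.e., roughly an order of magnitude. Relative to the default configuration of Quant (just under 15 min, i.e., just under 900 s), QuantFAST roughly halves total compute time.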

4.2 Sensitivity analysis

We demonstrate the effect of key hyperparameters, namely:

- the number of features;
- the set of input representations (including smoothing);
- the inclusion of shifted intervals;
- subtracting the mean;
- the choice of classifier; and
- the number of trees and the number of features per split.

Following Herrmann et al. (2023), in an effort to avoid the peculiarities of the smallest datasets and the original training/test splits, we conduct the sensitivity analysis using stratified 5-fold cross-validation (such that, for each fold, \(80\%\) of the data is used for training, and \(20\%\) of the data is used for validation), using a random sample of 50 of the datasets from the subset of 112 datasets from the UCR archive used in, e.g., Middlehurst et al. (2021b). In particular, from the subset of 112 datasets, we randomly sample 50 of the 100 datasets where there are at least 100 training examples on an 80/20 split, and at least 5 examples of each class.
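The dataset selection and evaluation protocol described above can be sketched with the standard library alone. The function names, the seed handling, and our reading of ‘at least 100 training examples on an 80/20 split’ as applying to the smallest training portion across the five folds are all assumptions for illustration; in practice, scikit-learn's `StratifiedKFold` provides stratified splitting directly.

```python
import math
import random
from collections import Counter, defaultdict


def is_eligible(labels, n_folds=5, min_train=100, min_per_class=5):
    """Hypothetical filter: at least `min_train` training examples in every fold
    (an 80/20 split for 5 folds) and at least `min_per_class` examples per class."""
    n = len(labels)
    smallest_train = n - math.ceil(n / n_folds)  # the largest validation fold is held out
    return smallest_train >= min_train and min(Counter(labels).values()) >= min_per_class


def sample_datasets(labels_by_name, k=50, seed=0):
    """Randomly sample up to k eligible datasets (names sorted for reproducibility)."""
    eligible = sorted(name for name, labels in labels_by_name.items() if is_eligible(labels))
    return random.Random(seed).sample(eligible, min(k, len(eligible)))


def stratified_folds(labels, n_folds=5, seed=0):
    """Assign each example a fold index so that every fold has about the same class mix."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    fold_of = [0] * len(labels)
    offset = 0
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        for j, idx in enumerate(idxs):
            # Deal examples round-robin, continuing where the previous class stopped.
            fold_of[idx] = (offset + j) % n_folds
        offset += len(idxs)
    return fold_of


def split(fold_of, k):
    """Training (80%) and validation (20%) indices for fold k."""
    train = [i for i, f in enumerate(fold_of) if f != k]
    valid = [i for i, f in enumerate(fold_of) if f == k]
    return train, valid
```

Dealing each shuffled class round-robin across folds keeps fold sizes within one example of each other and keeps class proportions nearly equal in every fold, which is the property stratification is meant to provide.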

4.2.1 Number of features

Figure 8 shows mean accuracy (left), as well as total compute time (right), in terms of both: (a) the number of intervals, expressed in terms of depth, d; and (b) the number of quantiles per interval, expressed as a proportion of interval length, m.

Accuracy improves modestly as the number of quantiles per interval increases, although accuracy for \(m \, / \, 4\), \(m \, / \, 2\), and m quantiles per interval is very similar. The spread of accuracy values is very small. However, computing more quantiles per interval results in proportionally greater computational cost due to the expanded feature space: m quantiles per interval requires twice the total compute time of \(m \, / \, 2\) quantiles per interval.

It is apparent that, when computing a relatively small number of quantiles per interval, accuracy tends to increase as depth increases, up to a depth of approximately \(d=6\), and then decreases. The same effect is not evident for \(m \, / \, 4\) or more quantiles per interval. We believe that this relates to the balance between distributional information and location information in larger versus smaller intervals: see Sect. 1. The results suggest that, broadly speaking, larger intervals are more informative than smaller intervals. With fewer quantiles per interval, more of the information in larger intervals is discarded, and smaller intervals dominate, which leads to lower accuracy. (It may be possible to counteract this effect by sampling features from larger intervals with higher probability when training the classifier. We leave this for future work.) Configurations using more quantiles per interval appear to be relatively immune to this effect.

While increasing depth significantly increases the number of intervals, the corresponding computational cost is only linear in depth, as the total number of features computed at each depth is proportional to input length, rather than to the number of intervals: see Sect. 3.2.
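A minimal sketch of why the number of features grows only linearly with depth, under conventions we assume for illustration (Sect. 3.2 gives the actual definitions): level k splits a series of length n into \(2^k\) contiguous intervals; each level after the first adds shifted copies of all but the last interval, offset by about half an interval length; and an interval of length m contributes `1 + (m - 1) // 4` quantiles (about \(m \, / \, 4\)). The function names are hypothetical.

```python
def dyadic_intervals(n, depth, shifted=True):
    """(start, end) pairs for dyadic intervals over a series of length n (assumed scheme)."""
    intervals = []
    levels = min(depth, n.bit_length())  # 2**(levels - 1) <= n, so no interval is empty
    for k in range(levels):
        parts = 2 ** k
        bounds = [i * n // parts for i in range(parts + 1)]
        level = list(zip(bounds[:-1], bounds[1:]))
        intervals.extend(level)
        half = n // (2 * parts)  # shift of about half an interval length
        if shifted and parts > 1 and half > 0:
            # Shift every interval except the last, so shifted intervals stay inside the series.
            intervals.extend((start + half, end + half) for start, end in level[:-1])
    return intervals


def num_quantiles(length, div=4):
    """Number of quantiles for an interval of the given length (about length / div)."""
    return 1 + (length - 1) // div


def num_features(n, depth, div=4, shifted=True):
    """Total quantile features for one input representation of length n."""
    return sum(num_quantiles(end - start, div) for start, end in dyadic_intervals(n, depth, shifted))
```

Under these assumptions, for n = 512 the unshifted intervals contribute 128 features (n / 4) at every level up to depth 8, so the feature count, and with it the cost of training the classifier, rises by a roughly constant amount per level even though the number of intervals doubles.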

Figure 9 shows the pairwise accuracy for a depth of \(d=6\) with \(m \, / \, 4\) quantiles per interval (the default) versus two extremes in terms of the total number of features, namely, a depth of \(d=4\) with \(m \, / \, 16\) quantiles per interval (left), and a depth of \(d=8\) with m quantiles per interval (right). While a smaller number of features clearly results in lower accuracy on several datasets, the differences in accuracy compared to a larger number of features are relatively small.

Figure 19 (Appendix) shows compute time versus the number of quantiles per interval for a depth of \(d=6\). This emphasises the extent to which compute time is dominated by the time required to train the classifier which, in turn, is determined by the size of the feature space.

We note that the results presented here relate to the characteristics of the datasets used in these experiments. In particular, the lengths of most of the time series are relatively short: see Fig. 20 (Appendix). (However, the relationship between time series length and the accuracy of Quant, if any, appears to be weak.) In practice, it may be appropriate to adjust the parameters of the transform, e.g., depth, in order to suit the characteristics of a particular dataset.

4.2.2 Input representations and shifted intervals

Figure 10 shows mean accuracy (left), and total compute time (right), for different combinations of input representation. Figure 11 shows pairwise accuracy for the default combination of the input time series, X, first difference, \(X'\), second difference, \(X''\), and discrete Fourier transform, \(\mathcal {F}(X)\), versus:

- \(X,X',X''\) (left);
- \(X,X',\mathcal {F}(X)\) (centre); and
- \(X,X'',\mathcal {F}(X)\) (right).

There is at least some advantage to using each of the three additional representations. Adding the discrete Fourier transform corresponds to the largest improvements in accuracy on individual datasets (Fig. 11, left), while adding the second difference makes the least difference (Fig. 11, centre). (For all configurations, we maintain the same number of features per representation.)

Figure 21 (Appendix) shows the pairwise accuracy for smoothing versus not smoothing the first difference, via a simple moving average with a window length of 5. Smoothing the first difference clearly improves accuracy on several datasets. We found no consistent improvement in accuracy by smoothing any of the other representations.
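The four default input representations, including the smoothed first difference, can be sketched with NumPy as follows. The window length of 5 is from the text; using a centred window with edge padding (so that the smoothed first difference keeps length n - 1) and taking the magnitude of the real-valued DFT for \(\mathcal {F}(X)\) are our assumptions, and the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def moving_average(x, window=5):
    """Centred simple moving average along the last axis; edges are padded by
    repeating the end values so the output keeps the input's length."""
    x = np.asarray(x, dtype=float)
    left = window // 2
    pad_width = [(0, 0)] * (x.ndim - 1) + [(left, window - 1 - left)]
    padded = np.pad(x, pad_width, mode="edge")
    return sliding_window_view(padded, window, axis=-1).mean(axis=-1)


def representations(X):
    """Four input representations for a batch X of shape (n_examples, length)."""
    X = np.asarray(X, dtype=float)
    first_diff = moving_average(np.diff(X, n=1, axis=-1), window=5)  # smoothed X'
    second_diff = np.diff(X, n=2, axis=-1)  # X''
    spectrum = np.abs(np.fft.rfft(X, axis=-1))  # |F(X)|, DFT magnitude (assumed)
    return [X, first_diff, second_diff, spectrum]
```

Because the representations have different lengths (n, n - 1, n - 2, and \(\lfloor n/2 \rfloor + 1\) for the real DFT), intervals and quantiles would be computed separately for each one.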

Figure 22 (Appendix) shows the pairwise accuracy for including versus not including shifted intervals: see Sect. 3.2. Removing shifted intervals approximately halves the total number of features. We compare using shifted intervals (the default) against: (a) no shifted intervals, with all other parameters unchanged; (b) no shifted intervals and a depth of 10 (such that the total number of features is approximately the same as with shifted intervals); and (c) no shifted intervals and \(m \, / \, 2\) quantiles per interval (again, such that the total number of features is approximately the same as with shifted intervals). Figure 22 shows that including shifted intervals results in higher accuracy on a majority of datasets, and higher average accuracy, whether or not the total number of features is adjusted, although the differences are mostly very small. While excluding shifted intervals (without increasing depth or the number of quantiles per interval) reduces the total number of features, and therefore the training time for the classifier, the absolute difference in compute time is relatively small.
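Why options (b) and (c) roughly match the feature count of the default can be seen with a back-of-the-envelope count. Assume (for illustration only) a representation of length n, about \(m \, / \, v\) quantiles per interval of length m, unshifted intervals contributing about \(n \, / \, v\) features per depth level, and each level after the first contributing about as many again from its shifted intervals. Then, per input representation,

\[
F_{\text{shift}}(d, v) \approx (2d - 1)\,\frac{n}{v}, \qquad F_{\text{no shift}}(d, v) \approx d\,\frac{n}{v},
\]

so that, with the default \(d = 6\) and \(v = 4\),

\[
F_{\text{shift}}(6, 4) \approx \frac{11n}{4}, \quad F_{\text{no shift}}(6, 4) \approx \frac{6n}{4}, \quad F_{\text{no shift}}(10, 4) \approx \frac{10n}{4}, \quad F_{\text{no shift}}(6, 2) \approx \frac{12n}{4}.
\]

Dropping shifted intervals therefore keeps about \(6/11 \approx 0.55\) of the features, while options (b) and (c) restore roughly the default count. Each shifted level has one interval fewer than its unshifted counterpart, so \(F_{\text{shift}}\) slightly overstates the true count.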

4.2.3 Subtracting the mean

Figure 12 shows the pairwise accuracy for subtracting the mean from half of the quantiles (the default) versus not subtracting the mean from any quantiles (left), and subtracting the mean from all quantiles (right). Subtracting the mean from half of the quantiles results in higher accuracy than either not subtracting the mean, or subtracting the mean from all quantiles. There is no practical effect on compute time.
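A minimal sketch of the quantile features for a single interval, including the default treatment of the mean. We assume about \(m \, / \, 4\) quantiles for an interval of length m (computed here as `1 + (m - 1) // 4`, evenly spaced from the minimum to the maximum, with the median used when only one quantile is taken) and that ‘half of the quantiles’ means every second quantile; these choices and the function name are our assumptions, not a statement of the reference implementation. Subtracting the interval mean makes those features invariant to a constant offset of the interval, while the remaining quantiles keep the absolute level.

```python
import numpy as np


def interval_quantiles(values, div=4):
    """Quantile features for one interval, with the interval mean subtracted
    from every second quantile (assumed reading of 'half of the quantiles')."""
    values = np.asarray(values, dtype=float)
    k = 1 + (len(values) - 1) // div  # about len(values) / div quantiles
    if k == 1:
        return np.array([np.median(values)])
    q = np.quantile(values, np.linspace(0.0, 1.0, k))
    q[1::2] -= values.mean()  # mean-subtracted quantiles: invariant to level shifts
    return q
```

Because quantiles depend only on the sorted values, the features describe the distribution of values within an interval and ignore their order; location information comes from which interval the features are computed over.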

4.2.4 Classifier

Figure 23 (Appendix) shows the pairwise accuracy for extremely randomised trees (the default), versus a random forest (left), and a ridge regression classifier (right). (We use the same number of trees and features per split for the random forest as for extremely randomised trees.) Figure 23 shows that extremely randomised trees is more accurate on the majority of datasets compared to either a random forest or a ridge regression classifier, although for the random forest the differences in accuracy are mostly relatively small. However, the random forest is more than an order of magnitude slower to train than extremely randomised trees.
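The three classifiers compared here can be set up with scikit-learn (Pedregosa et al. 2011) roughly as follows. The tree ensembles share the number of trees and the fraction of candidate features per split, as in the text; every other setting is left at scikit-learn defaults, which may differ from the configuration used in the experiments, and the ridge pipeline (standardisation plus cross-validated regularisation strength) and the helper name are our assumptions.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.linear_model import RidgeClassifierCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def make_classifiers(n_estimators=200, max_features=0.1, seed=0):
    """Hypothetical set-up of the three classifiers (other settings are defaults)."""
    trees = dict(n_estimators=n_estimators, max_features=max_features, random_state=seed)
    return {
        "extra_trees": ExtraTreesClassifier(**trees),
        "random_forest": RandomForestClassifier(**trees),
        "ridge": make_pipeline(StandardScaler(), RidgeClassifierCV(alphas=np.logspace(-3, 3, 10))),
    }


if __name__ == "__main__":
    # Synthetic stand-in for a Quant feature matrix (illustration only).
    rng = np.random.default_rng(0)
    features = rng.normal(size=(100, 50))
    labels = (features[:, 0] + features[:, 1] > 0).astype(int)
    for name, clf in make_classifiers().items():
        clf.fit(features[:80], labels[:80])
        print(name, clf.score(features[80:], labels[80:]))
```

In scikit-learn, `ExtraTreesClassifier` draws split thresholds at random and, by default, trains each tree on the full training set, whereas `RandomForestClassifier` searches for the best threshold on bootstrap samples; that exhaustive threshold search is one plausible contributor to the slower training of the random forest.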

4.2.5 Number of trees

Figure 13 shows mean accuracy (left), and total compute time (right), versus the number of trees used in the classifier. Figure 14 shows the pairwise accuracy for 200 trees (the default) versus 50 trees (left), and 800 trees (right).

Unsurprisingly, accuracy tends to increase as the number of trees increases, with a proportional increase in computational expense. However, while there are small but clear differences in accuracy between 50 trees and 200 trees, the differences in accuracy for more than approximately 200 trees are minimal.

4.2.6 Number of features per split

Figure 15 shows mean accuracy (left), and total compute time (right), versus the number of candidate features per split as a proportion of the total number of features, p. Figure 16 shows the pairwise accuracy for \(0.1 \times p\) (the default) versus \(\sqrt{p}\) (left), and \(0.2 \times p\) candidate features per split (right). Note that \(\sqrt{p} > 0.01\times {p}\) for \(p < 10{,}000\).
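To put the note above in context, the sketch below converts each setting into a count of candidate features per split. It mirrors how scikit-learn documents its `max_features` parameter for tree ensembles (a float is a fraction of the total, `"sqrt"` is the square root, truncated to an integer with a minimum of one); the helper name and the example total of 5,000 features are hypothetical. Since \(\sqrt{p} \, / \, p = 1 / \sqrt{p}\), the square-root setting corresponds to only 1% to 2% of the features for p between 2,500 and 10,000, well below the linear settings (5% to 20%).

```python
import math


def candidates_per_split(p, max_features):
    """Candidate features per split for p total features (scikit-learn-style conversion)."""
    if max_features == "sqrt":
        return max(1, int(math.sqrt(p)))
    return max(1, int(max_features * p))


if __name__ == "__main__":
    p = 5000  # hypothetical total number of features
    for setting in ("sqrt", 0.01, 0.05, 0.1, 0.2):
        print(setting, candidates_per_split(p, setting))
```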

There is a clear advantage in terms of accuracy from increasing the number of candidate features per split to a linear proportion (\(\ge 0.05 \times p\)) of the total number of features, with a proportional increase in computational expense. However, the differences in accuracy between sampling \(5\%\), \(10\%\), or \(20\%\) of the features are minimal.

5 Conclusion

We demonstrate that a simplified interval method, Quant, using a single type of feature (quantiles), fixed intervals, and a standard classifier, without any separate interval or feature selection process, can achieve the same accuracy as the most accurate current interval methods. Compared to most current state-of-the-art methods for time series classification, many of which require considerable computational resources, Quant is simpler, and represents a significant improvement in terms of accuracy relative to computational cost. Middlehurst et al. (2024) state that ‘special mention must be given to Quant. It achieves high accuracy remarkably fast...’ (p 53), and that ‘...there is a case to be made for using Quant by default, at least for exploratory analysis, because it is so fast’ (p 56). In future work, we intend to explore the extension of the method to variable-length and multivariate time series, as well as further improvements to computational efficiency.

References

Altay T, Baydoğan MG (2021) A new feature-based time series classification method by using scale-space extrema. Eng Sci Technol Int J 24(6):1490–1497

Bagnall A, Lines J, Bostrom A et al (2017) The great time series classification bake off: A review and experimental evaluation of recent algorithmic advances. Data Min Knowl Disc 31(3):606–660

Baydoğan MG, Runger G (2016) Time series representation and similarity based on local autopatterns. Data Min Knowl Disc 30(2):476–509

Baydoğan MG, Runger G, Tuv E (2013) A bag-of-features framework to classify time series. IEEE Trans Pattern Anal Mach Intell 35(11):2796–2802

Berns F, Hüwel JD, Beecks C (2021) LOGIC: probabilistic machine learning for time series classification. In: 2021 IEEE international conference on data mining, pp 1000–1005

Cabello N, Naghizade E, Qi J, et al (2020) Fast and accurate time series classification through supervised interval search. In: 2020 IEEE international conference on data mining, pp 948–953

Cabello N, Naghizade E, Qi J, et al (2023) Fast, accurate and explainable time series classification through randomization. Data Min Knowl Discov

Dau HA, Bagnall A, Kamgar K et al (2019) The UCR time series archive. IEEE/CAA J Automatica Sin 6(6):1293–1305

Dempster A, Petitjean F, Webb GI (2020) Rocket: exceptionally fast and accurate time series classification using random convolutional kernels. Data Min Knowl Disc 34(5):1454–1495

Dempster A, Schmidt DF, Webb GI (2021) MiniRocket: a very fast (almost) deterministic transform for time series classification. In: Proceedings of the 27th ACM SIGKDD conference on knowledge discovery and data mining. ACM, New York, pp 248–257

Dempster A, Schmidt DF, Webb GI (2023) Hydra: competing convolutional kernels for fast and accurate time series classification. Data Min Knowl Discov

Deng H, Runger G, Tuv E et al (2013) A time series forest for classification and feature extraction. Inf Sci 239:142–153

Flynn M, Large J, Bagnall T (2019) The contract random interval spectral ensemble (c-RISE): the effect of contracting a classifier on accuracy. In: Pérez García H, Sánchez González L, Castejón Limas M et al (eds) Hybrid Artif Intell Syst. Springer, Cham, pp 381–392

Geurts P (2001) Pattern extraction for time series classification. In: De Raedt L, Siebes A (eds) Princip Data Min Knowl Discov. Springer, Berlin, pp 115–127

Geurts P, Ernst D, Wehenkel L (2006) Extremely randomized trees. Mach Learn 63(1):3–42

Guillaume A, Vrain C, Elloumi W (2022) Random dilated shapelet transform: a new approach for time series shapelets. In: El Yacoubi M, Granger E, Yuen PC et al (eds) Pattern Recognit Artif Intell. Springer, Cham, pp 653–664

Henderson T, Bryant AG, Fulcher BD (2023) Never a dull moment: Distributional properties as a baseline for time-series classification. In: International workshop on temporal analytics PAKDD

Herrmann M, Tan CW, Salehi M, et al (2023) Proximity forest 2.0: a new effective and scalable similarity-based classifier for time series. arXiv:2304.05800

Ismail-Fawaz A, Devanne M, Weber J, et al (2022) Deep learning for time series classification using new hand-crafted convolution filters. In: IEEE international conference on big data, pp 972–981

Ismail-Fawaz A, Dempster A, Tan CW, et al (2023) An approach to multiple comparison benchmark evaluations that is stable under manipulation of the comparate set. arXiv:2305.11921

Ismail Fawaz H, Lucas B, Forestier G et al (2020) InceptionTime: finding AlexNet for time series classification. Data Min Knowl Disc 34(6):1936–1962

Li G, Xu S, Wang S, et al (2023) Forest based on interval transformation (FIT): a time series classifier with adaptive features. Expert Syst Appl 213

Lines J, Taylor S, Bagnall A (2018) Time series classification with HIVE-COTE: the hierarchical vote collective of transformation-based ensembles. ACM Trans Knowl Discov Data 12(5):52:1–52:35

Louppe G (2014) Understanding random forests: from theory to practice. PhD thesis, University of Liège, arXiv:1407.7502

Lubba CH, Sethi SS, Knaute P et al (2019) catch22: CAnonical time-series characteristics. Data Min Knowl Disc 33(6):1821–1852

Lucas B, Shifaz A, Pelletier C et al (2019) Proximity forest: an effective and scalable distance-based classifier for time series. Data Min Knowl Disc 33(3):607–635

Middlehurst M, Bagnall A (2022) The FreshPRINCE: a simple transformation based pipeline time series classifier. In: El Yacoubi M, Granger E, Yuen PC et al (eds) Pattern Recognit Artif Intell. Springer, Cham, pp 150–161

Middlehurst M, Large J, Bagnall A (2020) The canonical interval forest (CIF) classifier for time series classification. In: IEEE international conference on big data, pp 188–195

Middlehurst M, Large J, Cawley G et al (2021a) The temporal dictionary ensemble (TDE) classifier for time series classification. In: Hutter F, Kersting K, Lijffijt J et al (eds) Machine learning and knowledge discovery in databases. Springer, Cham, pp 660–676

Middlehurst M, Large J, Flynn M et al (2021b) HIVE-COTE 2.0: a new meta ensemble for time series classification. Mach Learn 110:3211–3243

Middlehurst M, Schäfer P, Bagnall A (2023) Bake off redux: a review and experimental evaluation of recent time series classification algorithms. arXiv:2105.14876 (preprint)

Middlehurst M, Schäfer P, Bagnall A (2024) Bake off redux: a review and experimental evaluation of recent time series classification algorithms. Data Min Knowl Discov

Pedregosa F, Varoquaux G, Gramfort A et al (2011) Scikit-learn: machine learning in python. J Mach Learn Res 12:2825–2830

Rodríguez JJ, Alonso CJ (2004) Interval and dynamic time warping-based decision trees. In: Proceedings of the 2004 ACM symposium on applied computing. ACM, New York, pp 548–552

Rodríguez JJ, Alonso CJ (2005) Support vector machines of interval-based features for time series classification. In: Bramer M, Coenen F, Allen T (eds) Research and development in intelligent systems XXI. Springer, London, pp 244–257

Rodríguez JJ, Alonso CJ, Boström H (2000) Learning first order logic time series classifiers: Rules and boosting. In: Zighed DA, Komorowski J, Żytkow J (eds) Principles of data mining and knowledge discovery. Springer, Berlin, pp 299–308

Rodríguez JJ, Alonso CJ, Boström H (2001) Boosting interval based literals. Intell Data Anal 5(3):245–262

Schäfer P, Leser U (2017) Fast and accurate time series classification with WEASEL. In: Proceedings of the 2017 ACM on conference on information and knowledge management. ACM, New York, pp 637–646

Schäfer P, Leser U (2023) WEASEL 2.0: A random dilated dictionary transform for fast, accurate and memory constrained time series classification. arXiv:2301.10194

Schmidt M, Lohweg V (2021) Interval-based interpretable decision tree for time series classification. In: Schulte H, Hoffmann F, Mikut R (eds) Workshop on computational intelligence, pp 91–111

Tan CW, Dempster A, Bergmeir C et al (2022) MultiRocket: multiple pooling operators and transformations for fast and effective time series classification. Data Min Knowl Disc 36(5):1623–1646

Acknowledgements

This work was supported by the Australian Research Council under award DP210100072. The authors would like to thank Professor Eamonn Keogh and all the people who have contributed to the UCR time series classification archive.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions

Additional information

Responsible editor: Rita P. Ribeiro.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dempster, A., Schmidt, D.F. & Webb, G.I. quant: a minimalist interval method for time series classification. Data Min Knowl Disc 38, 2377–2402 (2024). https://doi.org/10.1007/s10618-024-01036-9

DOI: https://doi.org/10.1007/s10618-024-01036-9