Abstract

Large scale categorical datasets are ubiquitous in machine learning and the success of most deployed machine learning models rely on how effectively the features are engineered. For large-scale datasets, parametric methods are generally used, among which three strategies for feature engineering are quite common. The first strategy focuses on managing the breadth (or width) of a network, e.g., generalized linear models (aka. wide learning). The second strategy focuses on the depth of a network, e.g., Artificial Neural networks or ANN (aka. deep learning). The third strategy relies on factorizing the interaction terms, e.g., Factorization Machines (aka. factorized learning). Each of these strategies brings its own advantages and disadvantages. Recently, it has been shown that for categorical data, combination of various strategies leads to excellent results. For example, WD-Learning, xdeepFM, etc., leads to state-of-the-art results. Following the trend, in this work, we have proposed another learning framework—WBDF-Learning, based on the combination of wide, deep, factorization, and a newly introduced component named Broad Interaction network (BIN). BIN is in the form of a Bayesian network classifier whose structure is learned apriori, and parameters are learned by optimizing a joint objective function along with wide, deep and factorized parts. We denote the learning of BIN parameters as broad learning. Additionally, the parameters of BIN are constrained to be actual probabilities—therefore, it is extremely interpretable. Furthermore, one can sample or generate data from BIN, which can facilitate learning and provides a framework for knowledge-guided machine learning. We demonstrate that our proposed framework possesses the resilience to maintain excellent classification performance when confronted with biased datasets. We evaluate the efficacy of our framework in terms of classification performance on various benchmark large-scale categorical datasets and compare against state-of-the-art methods. It is shown that, WBDF framework (a) exhibits superior performance on classification tasks, (b) boasts outstanding interpretability and (c) demonstrates exceptional resilience and effectiveness in scenarios involving skewed distributions.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Feature engineering is the key to building better machine learning models in the era of big data (Lian et al. 2017; Nargesian et al. 2017). The ever-increasing volume of datasets in today’s world motivates the need for building models with low bias, such that higher-order interactions among features, if present—can be modeled correctly. Note, for larger quantities of data, the variance component of the error tends to be zero, and the bias component is the one that generally derives the error (Martınez et al. 2016). This is one of the reasons behind the success of parametric models such as deep Artificial Neural networks (\({\mathop {\texttt {ANN}^d}}\)) models (d characterizes the depth of the model), Higher-order Logistic Regression (\({\mathop {\texttt {LR}^n}}\)) models (n characterizes the order of the features considered in the model, e.g., \(n=1\) for linear, \(n=2\) for quadratic, etc.), Factorization Machine (\({\mathop {\texttt {FM}^m}}\)) models (where like n in higher-order logistic regression, m characterizes the order of the features considered in the model, e.g., \(m=1\) for linear, \(m=2\) for quadratic, etc.), and non-parametric models such as Random Forest (RF) (Breiman 2001), Gradient Boosting Decision Trees, (\({\mathop {\texttt {GBT}}}\)) etc. Lian et al. (2018). Generally, for large-scale datasets, parametric methods are preferred as non-parametric methods like \({\mathop {\texttt {GBT}}}\) require loading entire data into the memory. \({\mathop {\texttt {ANN}^d}}\) relies on the strategy of adding layers to build deep models, which gives them the capacity to engineer features. We refer to this form of learning as deep-learning Footnote 1. Generalized Linear Models (\({\mathop {\texttt {GLM}}}\)), represent another category of parametric models which have been proven to be effective for large datasets, though computationally inefficient (Lian et al. 2018). We refer to this form of learning as wide-learning, as the model complexity can be controlled through the width of the input layer. In general, capturing higher than quadratic-level interactions is a challenge for such models. \({\mathop {\texttt {FM}^m}}\) represents the latest category of parametric models, which relies on factorizing the interaction among the variables such that the obtained final non-linear model is linear in the input parameters (Rendle 2010). Factorization Machines and its variants have been the models of choice when learning from extremely large quantities of categorical datasets. We refer to this form of learning as Factorized-learning.

It has been shown that for large-scale categorical data, combination of various strategies leads to excellent results. E.g., WD-Learning is an end-to-end framework combining wide and deep learning (Heng-Tze et al. 2016). xdeepFM, the existing state-of-the-art (SOTA) model, is the combination of deep and factorization strategies (Lian et al. 2018). However, these frameworks do have some drawbacks:

-

Capturing higher-order interactions in the model can be difficult. E.g., in the case of WD-Learning, for moderate-size datasets, obtaining all possible cubic or higher-order features is next to impossible. Most state-of-the-art (SOTA) models rely on \({\mathop {\texttt {deep}}}\) component to engineer higher-order features.

-

Existing SOTA frameworks have limited interpretable capabilities. Though one can interpret the parameters of the wide part, since the parameters are free parameters (and not probabilities), interpretation can be difficult. Any form of interpretation in models such as xdeepFM is not possible.

-

The incorporation of human knowledge is quite limited. For example, one can make use of prior knowledge that some feature combinations never occur—leading to a form of feature selection, but this is limited to order-1 or order-2 features only in WD learning. Incorporation of such knowledge is not possible in xdeepFM.

-

Learning from biased datasets can be challenging for existing state-of-the-art (SOTA) frameworks. In fact, there is no mechanism in these frameworks that mitigate or eliminate the impact of bias present in the dataset.

How to obtain SOTA model with the capabilities of a) constructing higher-order explicit features, b) superior interpretability, and c) knowledge-guided learning for bias correctness and other related benefits has been the main motivation of this work.

How can one incorporate knowledge in machine learning models? Well, a simple solution is that of the Bayesian network. A Bayesian network is a directed acyclic graph, that depicts the dependence of features in a problem. The model is excellent for incorporating expert knowledge, which is generally done through the specification of the structure of a Bayesian network, as well as related probabilities. Bayesian networks are also super-interpretable, and we will discuss later that they can also formulate higher-order interactions, hence leading to a framework that can incorporate cubic or higher-order interactions easily. Moreover, since the Bayesian network can sample and generate synthetic data, knowledge-guided learning is achievable as one can repeatedly sample a desirable dataset (e.g., an un-biased dataset) during or prior to training.

So, are Bayesian networks a panacea? Well, the assumptions encoded by the structure of the Bayesian network can be incorrect, which can limit the efficacy of the model. A wide or deep model, on the other hand, has fewer assumptions than Bayesian networkFootnote 2. How can one get the benefits of both Bayesian networks as well as wide and deep learning? A simple strategy is that of the ensemble, where Bayesian networks, wide and deep constitute individual models. In fact, ensembling of disparate models has a long history in machine learning (Dong et al. 2020) and has shown to be quite effective. One drawback of ensembling is that the individual models are trained separately and their collaborations only occur when combining their predictions. Is there a way, we can integrate Bayesian networks with wide and deep models in a single model within an end-to-end learning framework (i.e., avoiding ensemble strategy)? In the following, we will show that we can do this by learning the parameters of Bayesian networks by optimizing a similar loss function that is typically optimized by wide and deep models. This will provide us a way to integrate Bayesian network with other parametric models including not only wide and deep, but also factorized models as well.

In this work, we denote a Bayesian network that is trained by optimizing conditional log-likelihood (a discriminative objective function) as Broad Interaction network (BIN). Of course, the parameters of BIN are constrained to be actual probabilities, and once they are learned, they allow the importance of high-order feature interactions to be observed clearly, which adds interpretable capabilities to the model ,Footnote 3. In this work, we have proposed a new framework that integrates BIN with wide and deep models. To the best of our knowledge, this is the first work that integrates Bayesian networks in an end-to-end fashion in a deep learning framework. The model is designed to be applied to extremely large categorical data. One property of categorical data is that they can be extremely sparse. For example, a city feature can be a list of all the cities in the world and can be either 1 or 0. It has been shown that on these sparse categorical datasets, factorized models, e.g., Factorization Machines can be quite effective, as they can leverage the present values to learn a weight for values that are not present in the data. Therefore, to better model categorical data, we have proposed to integrate BIN with not only wide and deep models, but also with factorized model resulting in our proposed framework wide, broad, deep and factorized learning (WBDF-Learning framework). The term broad denotes the BIN component of the framework and learning the parameters of BIN is denoted as broad learning.

About the term ‘Broad’ – The term broad learning has been introduced before in Chen and Liu (2017), where it is described as fast and accurate learning without a deep structure and is different to our work. On the other hand, terms Wide, Deep and Factorized learning are well-known terms in machine learning. To define broad-learning, let us formalize wide learning first. The term wide is used in machine learning research for models such as quadratic, cubic or higher-order linear or logistic regression. The term was first used in Heng-Tze et al. (2016), where authors proposed an ensemble of quadratic logistic regression and deep learning models. They characterized quadratic logistic regression as a wide model. We define wide learning as:

Definition 1

Wide Learning incorporates learning with all possible n-level interaction features in the data.

Based on the above definition, \({\mathop {\texttt {LR}^n}}\) is a form of wide learning. We define broad-learning as:

Definition 2

Broad Learning incorporates learning with a subset of all possible n-level interaction features, where the subset is either specified by an expert, obtained based on some pre-specified metric or learned from another data source.

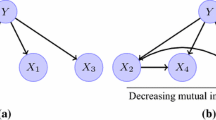

Based on the above definition, \({\mathop {\texttt {BN}^k}}\) is an example of broad learning. Another example of broad learning is higher-order feature selection based on measures such as mutual information (Zaidi et al. 2018), followed by a simple linear model.

On the inclusion of both ‘Wide’ and ‘Broad’ Components As we discussed earlier, Broad learning such as BIN is not a panacea, and subset of feature-interactions selected by the model can be incorrect (after all, we are using some metric to select a subset of interactions from all possible interactions). The main benefit of using Broad learning is because of its ability to scale to higher feature interactions. However, the inclusion of wide component can result in better results, of course at the expense of the computational cost of the size of the model. Typically, we can afford a smaller wide part and a much bigger broad part.

On the interpretability of prediction in WBDF Framework We will see that only the BIN component in our framework is interpretable, however, all the four components contribute to the prediction. So the interpretability that we get from BIN depends on how much the broad component is contributing to the actual prediction. We will show later in the experiments that the performance and contribution of the broad component is usually higher than the other components. Secondly, we will be using the attention layer that is built on top of the output of each component. This layer is also interpretable and one can easily determine the contribution of each of the components, and the interpretation analysis can be based on how relevant broad component is in the overall prediction. In summary, much of the interpretation capability of WBDF is due to its broad component, as well as attention mechanism. Attention can help in interpreting the extent of broad component in final prediction, whereas each of the parameter of BIN can be interpreted to determine the contribution of each feature. Of course, explaining model’s output in terms of all four components, i.e., wide, deep, factorized and broad is highly desirable, but is a challenging endeavour—one that is left as a future direction of this work.

On knowledge-guided learning of WBDF framework In the last few years, knowledge-guided machine learning has gained a lot of traction (Karpatne et al. 2022). The idea is to facilitate traditional learning by incorporating additional/auxiliary information (or knowledge) about the problem during the training stage. It has been shown that inclusion of such knowledge can improve traditional machine learning models. E.g., one strategy is to obtain a physical model of the process under study and obtain some additional data based on this physical model—the obtained data is used in conjunction with existing training data. The additional information that is present in this newly obtained data can improve classification but it can also correct for any bias that is present in the original data. E.g., a bank computing the credit score of its customer might be faced with data that consists of customers only from one city—however, as part of knowledge-guided machine learning, they can generate data for other cities which can mitigate some effects of bias. In WBDF framework, as we mentioned earlier, BIN has an advantage, that it can be trained prior with sufficient data, hence acting as the knowledge system. This is equivalent to structure learning of the Bayesian network, where a human in the loop can help specify or verify the structure of the network. Now, one can sample from this model, and the resulting data can be used in augmentation during the training of WBDF. In a nutsell, by leveraging the BIN’s sampling function capability, we can learn and train with some expert domain knowledge, generate additional data through sampling, expand the original (biased) dataset, and ultimately improve classification performance.

On the importance of Bayesian network structure No doubt, BIN is an important component of our proposed WBDF framework. What if the assumptions encoded by the Bayesian network in BIN are incorrect? Well, structure learning is a crucial aspect of BIN, and it is important that a correct (or a desirable) structure is specified. In this work, we have constrained ourselves to restricted Bayesian network structure learning, i.e., a structure is learned prior to learning the parameters of BIN component. Note, a restricted Bayesian network uses information such as mutual information or conditional mutual information to determine the structure, such as TAN (Friedman et al. 1997) or KDB (Sahami 1996), etc. This structure has shown to be extremely effective, leading to a scalable model which leads to state-of-the-art results on massive datasets (Martinez et al. 2015). How to incorporate structure learning with-in BIN is a challenging problem. There are several recent advances such as [16] and Deleu et al. (2022) in structure learning, that we believe can be integrated in BIN. However, this is left as a future work.

The contributions of this work are as follows:

-

1.

We propose an elegant framework that constitutes Wide, Broad, Deep and Factorized components, to obtain a low-bias model for large volumes of data. The integration is done through an interpretable Attention mechanism.

-

2.

By comparing on two standard CTR prediction datasets, 10 large categorical datasets and two synthetic datasets, we demonstrate that the WBDF-Learning framework has better performance than SOTA models such as FNN, CrossNet, xdeepFM, etc., as well as superior training time and a faster convergence profile. On standard CTR dataset Criteo, our method leads to state-of-the-art results based on the experimental settings in Zhu et al. (2021).

-

3.

We study WBDF framework under knowledge-guided learning scenario. Under this scenario, BIN is used to sample a new dataset to correct for some artificially introduced bias. We compare the performance of vanilla WBDF and WBDF with bias correction through data sampling capability of BIN.

-

4.

We evaluate the interpretability of our model and demonstrate its effectiveness by comparing it with the popular LIME model.

The rest of the paper is organized as follows. We will discuss related work in Sect. 2, and then present the proposed learning framework in Sect. 3. The experimental evaluation of our proposed framework is conducted in Sect. 4. We conclude in Sect. 5 with pointers to future works.

2 Related works

WD-Learning is the classical hybrid model, which achieves both memorization and generalization in one model by jointly training a wide linear model component and a deep neural network component (Cheng et al. 2016).

FNN model is a variant of Factorization Machines (FM) model, and can be considered as an FM-initialized ANN (Zhang et al. 2016). The idea is simple—an FM which is trained prior to training an ANN, is used as the representation of each categorical feature in the data. The structure of FNN allows the bottom layer to exploit spatially local correlation among the features. The supervised-learning embedding layer within the FNN using factorization machines reduces the dimension from sparse features to dense continuous features. After the embedding layer, multiple dense layers are built.

DeepCrossNet (DCN) (Wang et al. 2017) has a slightly different architecture compared to FNN. The CrossNet (cross-network) component in DeepCrossNet intends to explicitly generate higher-order feature by a cross operation, which is a special type of higher-order feature interaction by having the hidden layer output as the scalar product of the input layer. Repeated hidden layers can conduct the higher-order interactions according to existing ones and preserve the interactions from previous layers. Then the output of CrossNet is concatenated with a dense ANN to obtain the final prediction as DCN.

xdeepFM (Lian et al. 2018) is the recent SOTA model which achieves the most accurate performance. It has two levels of feature interaction namely, bit-wise and vector-wise. The bit-wise is the feature interaction that happens on the value of the feature directly, whereas, the vector-wise is the feature interaction that happens on the dimension of the feature matrix. The interacted object in vector-wise feature interaction is not the single value of the feature but the vector from the embedding matrix of the feature. The Compressed Interaction network (CIN) is the main component that can handle the vector-wise interaction. The xdeepFM combines ANN with an explicit vector-wise fashion compressed interaction network to learn the higher order features. It can be seen that xdeepFM is based on CrossNet but at a bit-wise level operation, and it applies the feature interaction at the vector-wise level.

3 WBDF learning

Our proposed WBDF framework is composed of wide, deep, factorized and broad components, followed by an Attention layer, resulting in an effective yet interpretable end-to-end system. An overview of the entire framework is given in Fig. 1. In the following subsections, let us discuss various components of the framework.

3.1 The Wide,Deep and Factorized components

The wide component of WBDF-Learning framework is simply a linear model with the form:

where \(\sigma _w\) denotes the Softmax for the Wide component. The parameter \({{\textbf {W}}}\) is the parameters of the wide network, and \(\textbf{c}\) is the bias term .Footnote 4 The input data \(\tilde{\textbf{x}}\) is the transformed features from the input features – \(\textbf{x}\). The cross-product features that are computed can be expressed as: \(\tilde{\textbf{x}} = \phi _{2}(\textbf{x}) = \prod _{i=1}^{p} \prod _{j=i}^{p} [x_{i} x_{j}]\), where \(\phi _{2}(.)\) represents a quadratic transformation of the input data. In general, the wide part can be as wide as possible, e.g., an order-n transformation can be written as:

As discussed in Sect. 1, going beyond \(n = 2\) is not trivial on even moderate-size datasets, as it significantly increases the time and space complexity. In our proposed formulation of WBDF-Learning, the usage of the wide component is limited to \(n = 2\), similar to other SOTA frameworks. Note that the feature transformation of Eq. 2 is preceded by discretization of numeric features, leading to all categorical features in the dataset. Therefore, \(x_i x_j \ldots x_k\) denotes the cross-product of n categorical features.

The Deep component of our WBDF-learning framework consists of a typical feed-forward deep neural network with the input embedding vector \(\hat{\textbf{x}}\). An embedding of each input feature is learned as part of the network, hence we define this transformation of input features as: \(\hat{\textbf{x}} = \Phi _{\text {Embedding-Layer}}(\textbf{x})\). In general, the Deep component can be as deep as possible, e.g., a depth-\(\textbf{d}\) network can be defined as:

Here \(\sigma _{d}\) denotes the activation function at layer d, and \({{\textbf {D}}}^{d}\) denotes the parameters to be learned at that layer. \(\textbf{h}^{d}\) denotes the output of the hidden layer d ,Footnote 5. Just like the wide component, the input data is first discretized leading to all categorical features in the data. Note that typical ANN can handle numeric features. However, for leveraging embedding layers in ANN and to be consistent with the wide component, deep component of our WBDF framework takes only categorical features.

A typical factorized model takes the following form:

Here, \(F^1\) and \(F^2\) denotes the linear and quadratic parameters of the model. However, our WBDF framework relies on the recent advancements in factorized learning, and utilize Compressed Interaction network (CIN) from Lian et al. (2018), which performs a layer-wise operation on the data to obtain feature maps:

Note, p denotes the number features, \(1 \le h \le H_k\) and \(H_k\) denotes the number of embedding feature vectors in k-th layer. Precisely, k-th layer here indicates the k-th hidden layer of the CIN and the \(k \in [1,m]\). In rest of the paper, we donate the m as the total layer size in CIN. The features maps are then pooled as: \(p_i^k = \sum _{j=1}^{D} \textbf{x}^{k}_{i,j}\) for \(i \in [1,H_k]\), where D is the dimension of embedding. A pooling vector of size \(H_k\) is generated as: \(\textbf{p} = [ p_1^k, \ldots p^k_{H_k}]\) for layer k, and for all layers as: \(\textbf{p}^{+} = [\textbf{p}^1, \ldots \textbf{p}^T]\). Here T denotes the depth of the network or the number of layers. We write the factorized component in WBDF as:

where \(\sigma _f\) denotes the Softmax, and \(F^o\) are its parameters.

3.2 The Broad component

We denote the broad component of our model as Broad Interaction network (BIN). It is based on a restricted Bayesian network and exploits the trick of Zaidi et al. (2017) by learning a separate set of parameters (\({{\textbf {B}}}\) in our framework) by optimizing a discriminative objective function, i.e., conditional log-likelihood (CLL). This discriminative training of parameters gives us the capability to use a Bayesian network alongside a Wide and Deep components in an end-to-end system. A Bayesian network is a directed acyclic graph. There are two component of a Bayesian network – structure and the parameters. The first stage of Bayesian network involves structure learning, which is followed by parameter learning. Structure learning incorporates learning the structure that is a directed edge is added among the features of the dataset. Structure learning problem comprises of adding, deleting and reversing edges such that some criteria score is optimized. Such approaches to structure learning are called un-restricted approaches. Restricted approaches on the other hand rely on simple heuristics such as mutual information or conditional mutual information to determine an optimal structure. In BIN, the structure is learned via an un-restricted structure learning. In a way – BIN has no control over the structure learning (the structure is given to BIN), it only plays the role of the second stage of Bayesian network learning – i.e., the parameter learning.

What does the parameters of Bayesian network look like? Well, let us make use of over-parameterization trick of Zaidi et al. (2017), and define our Bayesian network (broad) model as:

which is parameterized by two set of parameters: \(b_{.} \in \textbf{B}\) and \(\theta _{.} \in \Theta\). The subscript \(x_i \vert y, \Pi (\textbf{x})\) denotes the parameters correspond to following feature interaction: attribute i, class attribute with value y and set of attribute values returned by function \(\Pi ()\). Note, \(\Pi ()\) is a function that return the parents of each attribute based on a Bayesian network’s structure, and it can be seen that we have been able to learn a weight for various high-lever interactions in the data. Note, structure learning of Bayesian network is basically the specification of \(\Pi\)() function, where we specify the parents of each attribute. In structure learning, one can limit the maximum number of parents an attribute can take. This is specified by the parameter k that controls the complexity of the model.

Now that we have established the structure learning component of Bayesian network in Eq. 5, let us focus on the parameter learning in Eq. 5. There are two set of parameters—\(\Theta\) and \({{\textbf {B}}}\). The parameter \(\Theta\) constitutes of parameters which are learned by optimizing the log-likelihood (LL) of the data, and hence are equal to the actual empirical probabilities. The parameter \(\Theta\) can be called as the generative parameters. The parameter \({{\textbf {B}}}\) is optimized by optimizing the conditional log-likelihood (CLL), and often described as the discriminative parameters. The parameter \({{\textbf {B}}}\) is optimized as part of (discriminative) training of BIN in WBDF framework. Important to note that unlike \(\Theta\), which are set of probabilities – the parameter \(\textbf{B}\) is not constrained, and can take on any value. We can write conditional probabilities in succinct form as:

where \(\sigma _b\) denotes the Softmax. Again, \(\Theta\) in Eq. 6 is a generative parameter and is learned prior to training a BIN, whereas, \({{\textbf {B}}}\) is a discriminative parameter and is learned by optimizing the objective function of WBDF, i.e., learned alongside the parameters of wide, deep and factorized models.

It can be seen that Eq. 6 is over-parameterized. Rather than learning the parameter \(\Theta\) apriori, by optimizing the LL objective function, we can learn it by optimizing the CLL objective function instead. Note, the parameter \(\Theta\) are actual probabilities, and therefore, one will have to enforce the probability constraints during the optimization. The conditional probabilities in this case represent a slight variant of Eq. 6, and we define it as the output of our Broad Interaction network, which differentiates our formulation with that of Zaidi et al. (2017):

Now, \(\Theta\) in Eq. 7 is a discriminative parameter and is learned by optimizing the objective function of WBDF, i.e., learned alongside the parameters of wide, deep and factorized models.

One can use BIN in our framework by either optimizing Eqs. 6 or 7. The structure of BIN is illustrated in Fig. 2. Note, these two variants lead to similar results (in terms of the classification performance) as we show later in ablation studies, but optimizing Equation 7leads to slower training time as it maintains the probability constraints over its parameters. It, however, leads to lesser number of parameters in the model, with an easy interpretation of parameters to be optimized, i.e., \(\Theta\) – and hence is used as the default setting.

3.3 WBDF Training

WBDF learning framework offers an elegant combination of Wide, Broad, Deep and Factorized components, in which the parameters of each component are trained in an end-to-end fashion. Our joint optimization will update the weights of all four components simultaneously connected together via Attention layer, by back-propagating the error while optimizing a single objective function. The use of Attention in WBDF is motivated from its enormous success in NLP and related domains and serves two purposes:

-

Firstly, the Attention layer before the final output layer can provide the component-level interpretation. And, therefore, the importance of each component in WBDF can be easily determined.

-

Secondly, the Attention can work as the gate for controlling the influence of different components during the training. Therefore it automatically decides which component to trust more and hence wight more in order to optimize the objective function.

We define the Attention layer in WBDF framework as:

where \(\oplus\) denotes concatenation and h and \(h_0\) denotes the parameters of the Attention layer Based on Equation (8), the objective function of WBDF-Learning framework can be written as:

Here, \(\mathcal {L}\) denotes the objective function – and is typically the cross-entropy loss, which is optimized via standard gradient-descent based optimization algorithm such as adam solver. Note, for sake of completeness, we have included both \(\Theta\), and \({{\textbf {B}}}\) for broad component. As we discussed in Sect. 3.2, one can learn \(\Theta\) apriori, and can only learn \({{\textbf {B}}}\) (Eq. 6) during the optimization of Eq. 9. Other option is – one can ignore \({{\textbf {B}}}\), and learn \(\Theta\) only (Eq. 7) during the optimization of Eq. 9.

We present the pseudo-code of WBDF-Learning framework in Algorithm 1.

3.4 Knowledge-guided WBDF

Let us in this section discuss how WBDF can integrate knowledge-guided machine learning through its capacity to generate unbiased data. Note, knowledge-guided machine learning is a vast area with many applications, however, we have only considered the application of bias-correctness as a representative application in this work. As we mentioned earlier, deep, wide and factorized classification models do not possess the capacity to correct for biased data – they can only be trained using the given (biased) dataset. Therefore, with biased datasets, the training outcome of these models can be sub-optimal. The usual remediation involves resorting to other generative models for data augmentation outside the framework. Our broad component, however, presents a solution to generate data with-in the learning framework. This capability enables WBDF to correct for the bias in original data by either learning Bayesian network structure from another data source, or by involving an expert in the loop in structure learning.Footnote 6 Afterward, the WBDF can be employed to fit the generated data—i.e., it is trained on the mixture of the biased dataset along with the un-biased generated dataset. This is illustrated in Fig. 3—where we show the framework of the WBDF under knowledge-guided learning. Here, Original data signifies the comprehensive knowledge of the entire domain, whereas Biased data represents the given biased training data. If no Original data is present, another form of structure learning is through expert in the loop – where a human expert can help learn the structure, from which data can be sampled.

Unlike the proposed WBDF framework, when dealing with Biased data, the broad component is trained using the complete domain knowledge, hence obtaining a synthetic dataset—while wide, deep and factorized are trained with mixture of Generated data and Biased data. The framework does this by setting the is-learnable parameter for each component during the training process.

4 Experiments

In this section, we will empirically evaluate the efficacy of our proposed WBDF-Learning framework by comparing its performance against other related methods on standard datasets, as well as on synthetic datasets. In addition, we have constructed a contrived knowledge-guided scenario featuring biased distributions and measured the lift on accuracy afterwards.

In particular, we are interested in finding answers to the following research questions:

-

RQ1 How well does WBDF-Learning framework perform over different datasets when compared to its individual components?

-

RQ2 How does the proposed WBDF-Learning framework perform against other low-bias SOTA models which rely on effective feature engineering, such as WD, xdeepFM, etc. especially on CTR datasets.

-

RQ3 What is the effect of introducing the broad component? E.g., what interpretation capabilities does it bring?

-

RQ4 What is the effectiveness of WBDF in knowledge-guided machine learning scenario handling biased data?

Before embarking on the explanation of our results, let us start by explaining our experiments settings.

4.1 Experiment setup

We perform three different types of experiments. The first experiment tests the classification effect of our proposed WBDF model. For this we chose several datasets (details of these datasets are given in Table 1). We have used a total of 12 datasets in this work. Out of 12, there are 10 UCI datasets. There are two popular industry bench-marking datasets Criteo and Avazu mostly used for click-through-rate (CTR) prediction. Additionally, we have used 2 Synthetic datasets.

The second set of experiments study the interpretation of our proposed WBDF framework. We have made use of one dataset from Table 1— Adult—and compared the interpretation of WBDF model with that of state-of-the-art model LIME.

The third set of experiments study the knowledge-guided capability of our framework. For this, we have used four datasets from Table 1. Note, for each dataset, we adopted several techniques to construct a biased dataset.

4.1.1 On creation of synthetic datasets

The two synthetic datasets are variants of Pokerhand dataset. The motivation for synthesizing these two datasets is to create a dataset that requires a low-bias model. We will use the performance of these two datasets to determine how effective the model’s feature engineering capability is. Two synthesized datasets are based on standard Pokerhand by following below 4 steps:

-

1.

Version of the synthetic Pokerhand is specified.

-

2.

Rules of each class for Pokerhand are identified. E.g., Full house is not available for four-hand synthetic Pokerhand-4.

-

3.

The cards with respective hands are uniformly sampled.

-

4.

In each sampling round, the cards in hand are checked by the rule of each class from Pokerhand datasets. Once checked, they are stored on other cards.

4.1.2 Methods used in comparison

We compare with the following methods:

-

W High-order logistics regression (\({\mathop {\texttt {LR}^n}}\)) with \(n=2\).

-

B Discriminative k-dependence Bayesian classifier (\({\mathop {\texttt {BN}^k}}\)) with \(k=3\) (Zaidi et al. 2017).

-

D Deep Neural network (\({\mathop {\texttt {ANN}^d}}\)) with \(d=3\).

-

F Compressed Interaction network (CIN) (Lian et al. 2018) with a layer size of 3 (\(m = 3\)).

-

LR Typical logistics regression model (Jain et al. 1996)—a linear model, incldued only for bench-marking.

-

WD Typical Wide and Deep model (Heng-Tze et al. 2016) with quadratic Wide component and a Deep component with \(d = 3\).

-

xdeepFM One of SOTA methods for CTR prediction. Layer of size 3 is used with CIN.

-

FNN Factorization machine based artificial neural network (Zhang et al. 2016), which incorporates order-2 feature interactions.

-

DCN CrossNet model (Wang et al. 2017) with default size of cross-layer to be 3.

-

WBDF WBDF framework based on Algorithm 1. We use \(n=2\) for wide (wide component is equivalent to W), \(d=3\) for deep (deep component is equivalent to D) and \(m=3\) for factorized (factorized component is equivalent to F). The broad component k is set to 3 for all datasets (unless stated otherwise), note broad component is equivalent to B.

-

ONN (Zhang et al. 2021), FiBiNet (Huang et al. 2019) and IPNN (Qu et al. 2016): Three SOTA models that are specialized for CTR prediction problem on Criteo and Avazu.

In the experiments, we have tried to be systematic in terms of the comparison. E.g., we used \(m=2\) for FNN, as it is the default choice in almost all studies. For WBDF, we have used \(m=3\) as the default choice for factorized component. This is because, WBDF utilizes CIN from Lian et al. (2018) as the factorized component, where \(m=3\) is default option in most studies. It is important ot note that an ensemble model of the form: W+B+D+F is just an WBDF model with-out the attention mechanism. So it is not a competitor but just a variant of our proposed model. In our initial experiments, we have seen that attention improves the performance of W+B+D+F model, and hence we have used attention mechanism as the default setting in WBDF.

4.1.3 On relationship between wide and broad component’s size

It is important to note that, \(n=1\) and \(k=0\) encompasses linear interactions, and are equivalent models (for comparison). Whereas, \(n=2\) and \(k=1\) encompasses quadratic interactions and are equivalent, whereas \(n=3\) and \(k=2\) are equivalent as they encompass cubic interactions. In general, for a wide model with size n, the equivalent broad model will have size \(k = n - 1\).

4.1.4 On our evaluation strategy

We have used the standard settings (hyper-parameters) of each of the method which is used in the existing literature for comparison.

Our evaluation strategy is based on cross-validation, i.e., each method is tested on each dataset using 5 rounds of 2-fold cross validation, and averaged accuracy and AUC results are reported. For some datasets, we use \(10\%\) of the train data for validation (if needed by the method), resulting in a final train:validation:test (TVT) ratio of 4:1:5.

To compare results on standard CTR datasets, we follow the evaluation design strategy of Zhu et al. (2021), i.e., a train:validation:test (TVT) ratio of 8:1:1 is used for two CTR datasets.

4.2 WBDF vs. WD/W/B/D/F

Let us in this section compare the accuracy of WBDF with that of W, B, D and F models. The results are given in Table 2. We are interested in RQ1, i.e., determining if the joint learning framework leads to performance better than its constituent parts trained separately. We will also compare the performance of WBDF with WD-learning, and hence partly answer RQ2.

One can draw following conclusions from Table 2:

-

It is encouraging to see that WBDF outperforms all other compared models including its four constituent components, which demonstrates its capability as an effective model for large data quantities.

-

The difference in the performance of WBDF and with WD on Pokerhand and two synthetic datasets is massive – almost \(15\%\) improvement. This demonstrates the power of capturing higher-order interactions with Broad component. Note, Pokerhand is a dataset where each instance represents a poker hand, which has order-5 dependencies. Pokerhand-4 and Pokerhand-6 have order-4 and order-6 dependencies respectively.

It can be seen that WBDF is superior on all datasets, however, it is far more effective on multi-class datasets – there is a difference of over \(8\%\) in improvement of WBDF and the next best, i.e., WD model.

4.3 WBDF versus xdeepFM and other SOTA models

Let us compare the performance of WBDF with competing low-bias models such as FNN, DCN and xdeepFM models, to find an answer to RQ2. For the sake of completeness (and establishing a baseline), we have also presented the results with logistic regression model. Since each dataset is different, it will be interesting to see whether there is a single model that can outperform others on all datasets. We present the accuracy results in Table 3, from which it can be seen that WBDF-learning framework outperform all other baseline models on 10 out of 12 datasets. The only two losses are on Higgs and Localization to xdeepFM model. Considering that our WBDF wins the most over SOTA models, we find these result very encouraging. Overall, there is a \(3\%\) difference in the performance of WBDF over DCN and FNN and a difference of \(10\%\) over LR. It can be seen that WBDF is far more effective on multi-class datasets with a performance improvement of \(0.37\%\) over xdeepFM. Given the scale of these datasets, again, such an improvement is significant. On the two CTR datasets Criteo and Avazu , the WBDF outperforms other models. We will discuss the performance difference in terms of AUC later in the section. These experimental findings dictate that WBDF has superior feature engineering resulting in a low-bias model compared to SOTA models.

4.3.1 Convergence analysis

The comparison of the convergence of training-loss of WBDF and xdeepFM models on 12 datasets is shown in Fig. 4. We claim that a better convergence profile is one that asymptote to a better point in the optimization space, and also converges faster. Note, in previous section, we already have established that WBDF has a better performance in terms of accuracy comparing to xdeepFM. According to the convergence plots in Fig. 4, it can be seen that WBDF converges not only much faster but asymptote to a lower point on most of the datasets. These results are extremely encouraging as they demonstrate that WBDF not only has a better performance in terms of accuracy but also in terms of convergence. Furthermore, we compare the training time between WBDF and the xdeepFM in Fig. 5, where it can be seen that WBDF’s better convergence leads to faster training time as compared to xdeepFM. Please note that the training time of WBDF includes the structure learning of the Bayesian network. It is important to note that given the (massive) size of datasets, a small improvement in model’s accuracy can result in significant business value. Also, the main benefit of WBDF as compared to xdeepFM is due to its interpretation and knowledge-guided nature. The fact, that it has a similar or better performance both in terms of accuracy and training time than xdeepFM and other state-of-the-art models is an added advantage.

4.4 AUC comparison on Criteo and Avazu

Let us use the evaluation strategy of Zhu et al. (2021) and compare the performance of WBDF with SOTA methods that are specialized for CTR prediction datasets. We report the AUC results in Table 4. We use the notation of (*), (**) and (***) to denote the current best, second-best and third-best methods respectively, according to study in Zhu et al. (2021).Footnote 7 It can be seen that WBDF leads to better than current-best performance on Criteo dataset, whereas, it leads to second-best performance on Avazu. Given the specialized nature of competing baseline methods, we find these results extremely encouraging, which demonstrates that WBDF has the potential to be an effective model. In the following, we will discuss the interpretability of WBDF, which is a feature missing from all the competing baseline methods.

4.5 Interpretation

In this section, we will answer RQ3 by demonstrating how the Broad component of WBDF opens the room for a better interpretation and a step towards an explainable model in a deep learning framework. We conjecture that having this component also opens the door for adding in auxiliary information (learned either from other sources or through human experts) in a deep learning model.

4.5.1 Interpretation from BIN

As the learned parameters (\(\Theta\)) of BIN are actually conditional probabilities of the form \(\textrm{P}(x_i \vert y, \Pi (x_i))\), they are super interpretable, during and after the training. E.g., during training, one can interpret the importance of features for determining the value of class or predicting class. In this work, we used the following measure of feature-set importance:

The conditional probabilities \(\textrm{P}(y \vert x_i,\Pi (x_i))\) can be obtained by conducting the inferencing on the broad component BIN (Chavira and Darwiche 2007). Now, higher the score – \(\mathcal {I}_{x_i, \Pi (x_i)}\), higher the contribution of that feature-interaction towards the prediction of class value. Note, we assume conditional independence (among features) when doing this analysis. In practice, this is not true, however, many algorithms assume such independence, when determining the importance of a feature (or feature-interaction) for class prediction (Kraskov et al. 2004). We claim that one can do all the interpretation during the training of WBDF. On the contrary, popular state-of-the-art models such as LIME works by doing an explanatory analysis once the model is trained.

Interpretability of a model is generally observed by identifying features or feature combinations that play a role in determining the output of the model. In order to demonstrate the interpretation capabilities of WBDF, we randomly selected two instances from Adult dataset and listed feature interactions ranked by importance based on the probabilites from BIN in Table 5Footnote 8. The reason for selecting Adult dataset is that features are easily interpretable. Most of the other datasets in our analysis lacks meaningful features. The top 3 high-order features with higher conditional probability score are marked as bold, for which BIN has given a higher score for predicting class income \(>50\)K. Next, we tested the same two instances via LIME to explain the feature contribution. It can be seen from Fig. 6 that the top 3 high-order features with higher contribution via LIME are the same as those in Table 5 for both instances. It is encouraging to see that BIN can offer the same interpretability capability that LIME (and its variants) offers only after the training. The similar trend was observed for other datasets—we have only included two examples in this analysis, due to space constraints.

4.6 Knowledge-guided machine learning

To study knowledge-guided machine learning capability of WBDF – we employ four datasets (Adult, Sussy, Higgs and KDD99). Our strategy in this experiment is to first train WBDF with biased dataset. We are interested in comparing this model’s performance with following model:

-

We utilize the Broad component of WBDF to generate synthetic data. The structure of the Broad component in this model has to be either a) specified by some expert that can provide an un-biased perspective through structure learning, or b) structure can be learned on some related un-biased data.

-

The un-biased dataset generated this way is combined with existing biased datasets, and WBDF model is trained. We call this model the knowledge-guided WBDF model.

We aim to measure the boost in the performance of knowledge-guided WBDF variant with that of standard WBDF model by computing the lift in the accuracy.Footnote 9

The important question is how to produce a biased dataset? Because, none of the datasets we have used in this work has an associated expert who can provide the true structure, and also none of the datasets have a related un-biased version available. To address these issues, we used two different strategies:

-

The original dataset is firstly trained (where input features are represented in one-hot encoding format) with tree-based ensemble model such as Random Forest. Then the feature importance is obtained from the trained Random Forest. The most important feature-values in the dataset towards to the classification performance are identified. By removing a percentage of instances containing the most important feature-values – a biased dataset is obtained.

-

The second method for acquiring the biased dataset is introduced by leveraging the Shapley Additive explanations (SHAP) values (Lundberg and Lee 2017). The SHAP values can identify influential feature-values according to the cooperative gaming theory. Specifically, they can quantify the contribution of each feature towards the prediction outcome for a specific instance, considering both its own value and its interactions with other features. Therefore, it provides a more nuanced understanding of the contribution of the feature-value in the original dataset in complex models. By leveraging SHAP values, the biased dataset can be obtained by removing the instances which contain these important feature-values.

In the knowledge-guided learning experiment, the correspondingly used dataset is divided into training and testing subsets with \(80\%\) and \(20\%\) ratio. The Broad component, i.e., the structure and parameter learning are trained from the original \(80\%\) of the data—denoted as Original Data. The broad component is later used to generate samples which are equal in size to Original Data—denoted as Generated Data. Additionally, the training set is used to obtain the biased dataset—denoted as Biased Data. The testing set is used as the hold-out for evaluation purpose in this experiment.

The Generated data is blended with the biased data to fit a WBDF model for knowledge-guided machine learning—denoted as knowledge-guided WBDF. Tables 6 and 7 shows the comparative results of the knowledge-guided WBDF. It can be seen that the knowledge-guided WBDF outperforms vanilla WBDF that is trained on biased data. Overall, it can be seen that knowledge-guided WBDF can obtain an average of over \(10\%\) lift in the accuracy. This is very encouraging as it demonstrates that WBDF can not only compete with the state-of-the-art models such as xdeepFM in terms of classification performance, but it can also provide a solution for classifying with biased dataset through knowledge-guided machine learning. What also stands out in the Tables 6 and 7 is the excellent results on Generated data—i.e., WBDF trained on data generated from Broad component.

4.7 Ablation studies

4.7.1 Controlled feature engineering and k

In this section, we compare the performance of WBDF with varying level of feature interactions. We make use of 3 synthetic Pokerhand datasets, such that it has 4, 5 and 6 level interactions. The results are shown on left-hand-side in Fig. 7. We also plot the performance of xdeepFM for comparison. It can be seen that WBDF has far more superior feature engineering capability resulting in much better performance on these synthetic datasets.

We also study the role of parameter k in our WBDF model on one of the synthetic data (Pokerhand-6). The results are shown in right-hand-side of Fig. 7. As expected, the higher the value of k, the better the results are. For sake of completeness, again, we have plotted xdeepFM performance on this dataset. It can be seen that WBDF with \(k=1\) and \(k=2\) has inferior performance than xdeepFM. However, \(k=2\) leads to superior performance—highlighting the importance of BIN in WBDF framework.

4.7.2 On BIN’s Form

The output of BIN in WBDF is based on Eq. 7, where it learns parameters \(\Theta\) directly. One can be interested in the output of the form of Eq. 6, i.e., \(\sigma _b ({{\textbf {B}}}^T \log \Theta )\). Note, in this case, we aim to pre-learn the parameter \(\Theta\) (optimizing log-likelihood), and learn \({{\textbf {B}}}\) as part of WBDF training. We call this version WBDF\(*\). We conducted the ablation study on these two forms of BIN. It can be seen from Fig. 8 that both forms of BIN lead to similar results, though WBDF\(*\) is much faster in training as compared to WBDF. This is expected, as WBDF\(*\) does not have to fulfil the probability constraints during the discriminative training process. The downside of this speed is the lack of interpretation in WBDF* model.

4.7.3 On the role of Attention

In this section, we extracted the attention score from WBDF during training on Adult dataset. As we discussed earlier, one useful trait of attention is that it leads to excellent interpretability, as it explains which component is contributing more towards the final prediction. As the attention score is highly instance-specific, we have used different portions of the data to extract an average attention score (denoted as 30%data,50%data,70%data,100%data). Figure 9 shows the extracted attention score where the darker color represents a higher score. The x-axis represents the component-wise score for each of the two classes. E.g, W_1 represent the attention score of wide component for first class, and W_2 represents the attention score of wide component for the second class. We present results during two stages of the training, that is: \(\texttt {epoch}=50,\texttt {epoch}=200\)). It can be seen that the attention scores are consistent during the two training stages. Whereas, components B_2 and D_2 are the one with highest weights, and hence played a dominant role in classification. One can also see that deep component needs a lot more data (larger number of epochs) to gain confidence and play a role in deciding the class label, as it is more dominant at 200 epochs instead of 50. However, the broad component remains consistent from the start of training till finishing (attention scores do not change from 50 to 200 epochs). It is worth noting that class 2 has higher weights than class 1 (i.e., W_2 > W_1, B_2 > B_1, D_2 > D_1 and F_2 > F_1) in Fig. 9—this is because on adult dataset, the class distribution is skewed in favour of class 2. Nonetheless, the focus should be on the relative weights of each component, and it can be seen that broad component plays the biggest role in making a prediction.

5 Conclusion

In this paper, we have presented a model for addressing the growing need of building a low-bias model for extremely large quantities of data, that is also interpretable. We have shown that our proposed WBDF model based on an end-to-end learning of wide, deep, factorized learning, and a newly formulated broad interaction network, can lead to (a) better classification performance than SOTA models (hence with superior feature engineering capability); (b) faster training time, better convergence profile; (c) offers interpretability as good as SOTA frameworks, especially during the training time; (d) capability to do knowledge-guided machine learning, as demonstrated by the scenario of bias-correctness. With ever-increasing scale of today’s datasets, we believe that WBDF offers an excellent learning framework. In future, we plan to study alternative models of broad-learning, including unrestricted Bayesian networks, as well as incorporating capability to handle numeric features.

Code

The code for experiments conducted in this paper is available at: https://anonymous.4open.science/r/wbdlearning-464D/.

Notes

Various layers of \({\mathop {\texttt {ANN}}}\) (e.g., dense, convolution, recurrence) serves as feature engineering modules for modeling higher-order feature interactions and hence leading to a low-bias model.

A wide model has all possible order-n terms, and a deep model given sufficient depth (d) can be a universal approximater.

Note, in BIN one can interpret the model parameters during the training as well. This is in contrast to \({\mathop {\texttt {LIME}}}\) (Garreau and Luxburg 2020)—another popular interpretation method widely used in industry, which offers only the post-training explanation.

Note, in the following discussion, we will subsume parameter \(\textbf{c}\) in \({{\textbf {W}}}\) for sake of simplicity.

Note, generally deep models have several other layers such as batch-normalization dropout etc., and we have only shown dense layers here.

Note, in the experiments, we will use an ingenious strategy based on feature importance from tree-based models, to generate bias data, as for most of datasets used in our work, we do not have the expert available or presence of the un-biased version of the datasets.

Note, we have used (Zhu et al. 2021) as it is a reliable benchmark study. We have not used the leader board of https://paperswithcode.com/sota/click-through-rate-prediction-on-criteo, as we found that different papers have used different versions of data as well as evaluation strategy.

Note. the instance 1 selected is married, female, USA, civilian, \(40-55\), professor, high-doctorate, and the instance 2 selected is not-married, male, USA, civilian, \(0-25\), Repair, Some-college.

The lift of the accuracy is the improvement in percentage between knowledge-guided WBDF and standard vanilla WBDF.

References

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Chavira M, Darwiche A (2007) Compiling Bayesian networks using variable elimination. In: IJCAI, vol 2443

Chen CP, Liu Z (2017) Broad learning system: an effective and efficient incremental learning system without the need for deep architecture. IEEE Trans Neural Netw Learn Syst 29:10–24

Cheng H-T et al (2016) Wide & deep learning for recommender systems. arXiv https://doi.org/10.48550/ARXIV.1606.07792

Deleu T, Góis A, Emezue C, Rankawat M, Lacoste-Julien S, Bauer S, Bengio Y (2022) Bayesian structure learning with generative flow networks

Dong X, Yu Z, Cao W et al (2020) A survey on ensemble learning. Comput Sci Front. https://doi.org/10.1007/s11704-019-8208-z

Friedman N, Geiger D, Goldszmidt M (1997) Bayesian network classifiers. Mach Learn 29(2):131–163

Garreau D, Luxburg U (2020) Explaining the explainer: A first theoretical analysis of lime. In: International Conference on Artificial Intelligence and Statistics, pp. 1287–1296. PMLR

Heng-Tze C, Koc L, Harmsen J, Shaked T, Chandra T (2016) Wide and deep learning for recommender systems. arXiv:1606.07792

Huang T, Zhang Z, Zhang J (2019) Fibinet: combining feature importance and bilinear feature interaction for click-through rate prediction. In: Proceedings of the 13th ACM conference on recommender systems, pp 169–177

Jain AK, Mao J, Mohiuddin KM (1996) Artificial neural networks: a tutorial. Computer 29:31–44

Karpatne A, Kannan R, Kumar V (2022) Knowledge guided machine learning: accelerating discovery using scientific knowledge and data. CRC Press, Boca Raton

Kraskov A, Stögbauer H, Grassberger P (2004) Estimating mutual information. Phys Rev E 69:066138

Lian J, Zhang F, Xie X, Sun G (2017) Restaurant survival analysis with heterogeneous information. In: The World Wide Web Conference

Lian J, Zhou X, Zhang F, Chen Z, Xie X, Sun G (2018) xdeepfm: combining explicit and implicit feature interactions for recommender systems. In: Proceedings of the 24th ACM SIGKDD

Lundberg SM, Lee S-I (2017) A unified approach to interpreting model predictions. Adv Neural Inf Process Syst 30

Martinez A, Chen S, Webb GI, Zaidi NA (2015) Scalable learning of Bayesian network classifiers. J. Mach. Learn. Res. 17:1–35

Martınez AM, Webb GI, Chen S, Zaidi NA (2016) Scalable learning of Bayesian network classifiers. J Mach Learn Res 17:1–35

Nargesian F, Samulowitz H, Khurana U, Khalil EB, Turaga DS (2017) Learning feature engineering for classification. In: Ijcai, pp 2529–2535

Ng I, Zhu S, Fang Z, Li H, Chen Z, Wang J (2022) Masked gradient-based causal structure learning, pp 424–432. https://doi.org/10.1137/1.9781611977172.48

Qu Y, Cai H, Ren K, Zhang W, Yu Y, Wen Y, Wang J (2016) Product-based neural networks for user response prediction. In: 2016 IEEE 16th international conference on data mining (ICDM). IEEE, pp 1149–1154

Rendle S (2010) Factorization machines. In: ICDM, pp 995–1000

Sahami M (1996) Learning limited dependence Bayesian classifiers. In: Proceedings of the second international conference on KD and DM

Wang R, Fu B, Fu G, Wang M (2017) Deep & cross network for ad click predictions. In: Proceedings of the ADKDD’17, pp 1–7

Zaidi NA, Petitjean F, Webb GI (2018) Efficient and effective accelerated hierarchical higher-order logistic regression for large data quantities. In: SIAM international conference on data mining

Zaidi NA, Webb GI, Carman MJ, Petitjean F, Cerquides J (2017) Efficient parameter learning of Bayesian network classifiers. Mach Learn 106:1289–1329

Zhang W, Du T, Wang J (2016) Deep learning over multi-field categorical data: a case study on user response prediction. CoRR arXiv:1601.02376

Zhang Q, Li J, Jia Q, Wang C, Zhu J, Wang Z, He X (2021) UNBERT: User-news matching BERT for news recommendation. In: IJCAI, pp 3356–3362

Zhu J, Liu J, Yang S, Zhang Q, He X (2021) Open benchmarking for click-through rate prediction. In: Proceedings of the 30th ACM international conference on information & knowledge management, pp 2759–2769

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

Authors do not have any funding information to report. Authors do have any actual, perceived or potential Conflict of interest (financial or non-financial) to disclose as well.

Additional information

Responsible editor: Rita P. Ribeiro.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, Y., Zaidi, N., Zhou, J. et al. Effective interpretable learning for large-scale categorical data. Data Min Knowl Disc 38, 2223–2251 (2024). https://doi.org/10.1007/s10618-024-01030-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10618-024-01030-1