Abstract

In recent years, we have witnessed the proliferation of knowledge graphs (KG) in various domains, aiming to support applications like question answering, recommendations, etc. A frequent task when integrating knowledge from different KGs is to find which subgraphs refer to the same real-world entity, a task largely known as the Entity Alignment. Recently, embedding methods have been used for entity alignment tasks, that learn a vector-space representation of entities which preserves their similarity in the original KGs. A wide variety of supervised, unsupervised, and semi-supervised methods have been proposed that exploit both factual (attribute based) and structural information (relation based) of entities in the KGs. Still, a quantitative assessment of their strengths and weaknesses in real-world KGs according to different performance metrics and KG characteristics is missing from the literature. In this work, we conduct the first meta-level analysis of popular embedding methods for entity alignment, based on a statistically sound methodology. Our analysis reveals statistically significant correlations of different embedding methods with various meta-features extracted by KGs and rank them in a statistically significant way according to their effectiveness across all real-world KGs of our testbed. Finally, we study interesting trade-offs in terms of methods’ effectiveness and efficiency.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In recent years, we have witnessed the proliferation of knowledge graphs (KGs) in various domains, aiming to support applications like entity search (Dong et al. 2014), question answering (Ahmetaj et al. 2021), and recommendations (Tarus et al. 2018). Typically, KGs store machine-readable descriptions of real-world entities (e.g., people, movies, books) that capture both relational and factual information. In this work, we refer to an entity description as an identifiable set of property-value pairs that abstracts several data formats, such as relational, RDF, or property graphs.

As different KGs may independently describe the same real-world entity, a crucial task when integrating knowledge from several KGs is to align their entity descriptions. Entity alignment (EA), also known as entity resolution (Christophides et al. 2015, 2021), aims to identify pairs of descriptions from different KGs that refer to the same real-world entity, which we call matching pairs or simply, matches.

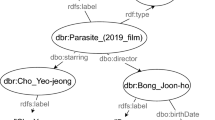

Figure 1 shows an example of two KGs, each containing four entity descriptions, represented as nodes, with their properties, represented as edges. The entities described in those KGs can be aligned. For instance, node \(v_1\) in \(KG_1\) is a description of the director Stanley Kubrick, providing his name and birth year as attributes, and the facts that he directed and wrote the movie The Shining, as well as that he also directed an entity represented by \(v_3\) as relations. Node \(v_1\) should be aligned with node \(v_5\) in \(KG_2\), even if the “name” attribute is now called “label”, and its value, “S. Kubrick”, is slightly different than the name value, “Stanley Kubrick”, used in \(v_1\). The birth year attribute is also missing in \(v_5\), compared to \(v_1\), and its only relation to \(v_6\) is “directed”, missing the relation “wrote” that was also provided in \(KG_1\) between \(v_1\) and \(v_2\). Similarly, the other entity alignments in the example of Fig. 1 should be \(v_2\) with \(v_6\) (describing the movie “The Shining”), \(v_3\) with \(v_7\) (describing the movie “Barry Lyndon”), and \(v_4\) with \(v_8\) (describing the actor Philip Stone).

One way to implement entity alignment as a machine learning (ML) task, is to learn a vector-space representation of symbolic KGs, known as embeddings. Numerical representations (embeddings) of KGs are preferred over symbolic ones in various ML tasks (link/node/subgraph prediction, matching, etc.), as they potentially mitigate the symbolic, linguistic and schematic heterogeneity of independently created KGs and thus aim to simplify knowledge reasoning. The idea is to embed the nodes (entities) and edges (relations or attributes) of a KG into a low-dimensional vector space. Particularly, we would like similar entities in the original KG to be close to each other in the embedding space and dissimilar entities to be far from each other. In this respect, both positive (i.e., actual KG edges) and negative (i.e., synthetic edges, non-existing in the actual KG) samples of the KGs are used. This way, by measuring their embeddings distance in the vector space, we can decide whether two entities are matching or not. Any available ground truth regarding the alignment of entities, called seed alignment, can be used for training and/or evaluating an embedding method.

Learning low-dimensional representations of KGs in a way such that the semantic relatedness of entities is captured by the geometrical structures of an embedding space is a challenging task that gave birth to numerous methods. Embedding-based entity alignment methods essentially exploit the relational (i.e., entity structural neighborhood) and the factual part (i.e., entity names/identities, attributes that represent literals) of descriptions. We refer to the former as relation-based methods, e.g., MTransE (Chen et al. 2017), MTransE+RotatE (Sun et al. 2020), RDGCN (Wu et al. 2019), RREA (Mao et al. 2020b), and to the latter as attribute-based methods, e.g., MultiKE (Zhang et al. 2019), AttrE (Trisedya et al. 2019), KDCoE (Chen et al. 2018), BERT_INT (Tang et al. 2020). Although this research direction is rapidly growing, there are still several open questions regarding the underlying assumptions of methods, as well as, the efficiency and effectiveness of entity alignment in realistic settings. In particular, in this work we address the following missing insights from the literature:

-

Q1.

Characteristics of methods. What are the critical factors that affect the effectiveness of relation-based (e.g., negative sampling, range of neighborhood) and attribute-based methods (e.g., usage of literals) and how sensitive are the methods to hyperparameters tuning?

-

Q2.

Families of methods. What is the improvement in the effectiveness of embedding-based entity alignment methods if we consider not only the structural relations of entities, but also their attribute values?

-

Q3.

Effectiveness vs Efficiency Tradeoff. Is the runtime overhead of each method worth paying, with respect to the achieved effectiveness?

-

Q4.

Characteristics of datasets. To which characteristics of the datasets (e.g., sparsity, number of entity pairs in seed alignment, heterogeneity in terms of literals, predicate names and entity names) are supervised, semi-supervised and unsupervised methods sensitive?

Although several recent works (Zeng et al. 2021; Choudhary et al. 2021; Wang et al. 2017; Sun et al. 2020; Zhang et al. 2020; Zhao et al. 2022; Jiang et al. 2021; Wang et al. 2021; Leone et al. 2022; Zhang et al. 2022; Chaurasiya et al. 2022) survey embedding-based entity alignment methods, only few of them (Sun et al. 2020; Zhang et al. 2020; Zhao et al. 2022; Leone et al. 2022; Zhang et al. 2022; Chaurasiya et al. 2022) conduct an experimental evaluation to obtain useful insights. The conclusions drawn from Zhang et al. (2020) are limited, as it leaves out some representative methods in embedding-based alignment such as MTransE (no negative sampling), KDCoE (semi-supervised exploiting long textual descriptionsFootnote 1), RREA (semi-supervised exploiting structural information), AttrE (unsupervised), BERT_INT (supervised exploiting both structural and factual information), while neither RREA nor BERT_INT were part of OpenEA (Sun et al. 2020), as both were published later. In addition, Leone et al. (2022) does not include MTransE, MTransE+RotatE, AttrE, KDCoE, while RREA is not included in Leone et al. (2022), Zhang et al. (2022), Chaurasiya et al. (2022) either. Moreover, benchmarking efforts such as Zhang et al. (2020), OpenEA (Sun et al. 2020), EAE (Zhao et al. 2022) and Leone et al. (2022), Zhang et al. (2022), Chaurasiya et al. (2022), do not shed light on questions Q1, Q2 and Q3, addressed in our work. Furthermore, only a subset of the dataset characteristics we study in our work, such as the density of the KGs, and the similarity of entity names, have been considered by previous works to answer question Q4.

To compare the effectiveness and efficiency of the methods in realistic settings, we have extended the testbed of datasets with pairs of KGs usually considered in related empirical studies. Specifically, OpenEA, EAE and Leone et al. (2022), Zhang et al. (2022), Chaurasiya et al. (2022) employ only datasets with a low number of entities featuring descriptions and literal values. In our testbed, we have included five additional datasets whose unique characteristics allow us to draw new insights regarding the evaluated EA methods, which were not previously reported in Sun et al. (2020), Zhang et al. (2020), Zhao et al. (2022), Leone et al. (2022), Zhang et al. (2022), Chaurasiya et al. (2022). More precisely, supervised methods like RDGCN exploiting KG relations, are outperformed by unsupervised (AttrE) and semi-supervised (KDCoE) methods that exploit the similarity of literals in datasets of decreasing density, but with rich factual information (i.e., attributes).

Rather than simply reporting the raw experimental results, we conduct a meta-level analysis, aiming to find statistically significant correlations between the methods and the dataset characteristics (meta-features). Furthermore, we consider the non-parametric Friedman test (Demsar 2006) and the post-hoc Nemenyi test (Nemenyi 1963), in order to perform a pairwise comparison of the methods and rank them based on their effectiveness across all datasets of our testbed. Finally, we are interested in the methods’ training time curve and potential effectiveness vs efficiency trade-offs instead of simply reporting overall runtimes (as in OpenEA and EAE).

In a nutshell, the contributions of this work are the following:

-

In Sect. 2, we present a qualitative comparison of state-of-the-art embedding-based entity alignment methods that span from supervised, i.e., MTransE (Chen et al. 2017), MTransE+RotatE (Sun et al. 2020), MultiKE (Zhang et al. 2019), RDGCN (Wu et al. 2019), RREA(basic) (Mao et al. 2020b), BERT_INT (Tang et al. 2020), to unsupervised, i.e., AttrE (Trisedya et al. 2019), and semi-supervised, i.e., KDCoE (Chen et al. 2018), RREA(semi) (Mao et al. 2020b), paradigms. They are representative methods of different embedding families covering both relation- and attribute-based, but also considering one-hop and multi-hop neighborhoods in KGs, as well as different negative sampling strategies.

-

In Sect. 3, we describe our framework for a fair empirical comparison of the different methods. We detail the extended testbed of datasets that exhibit diverse characteristics (w.r.t. KG density, entity naming, textual descriptions, etc.) usually encountered in reality along with the corresponding pre-processing pipelines. We additionally introduce the evaluation protocol and metrics capturing different aspects of the methods’ effectiveness.

-

In Sect. 4, we report and analyze the results of a series of experiments, including a comparison to a state-of-the-art non-embedding-based method, PARIS (Suchanek et al. 2011), conducted to answer the four open questions introduced previously, using a reliable, statistically sound methodology. First, we discover a statistical significant ranking of the methods according to their effectiveness across all real-world KGs of our testbed. Then, we study interesting trade-offs in terms of their effectiveness and efficiency. Last but not least, we extract statistically significant correlations between the methods’ performance with various characteristics of our datasets (i.e., meta features).

Finally, the main conclusions drown from our experiments, as well as the plans for future work, are discussed in Sect. 5.

2 Entity alignment with KG embeddings

In this section, we first formally define the entity alignment problem on Knowledge Graphs (KGs), along with some related constraints. Then, we provide a qualitative comparison of entity alignment methods based on KG embedding.

2.1 The entity alignment problem

Following the typical notation used in the literature (Zhang et al. 2019; Wang et al. 2020), we assume that entities (with the corresponding entity names),Footnote 2 are described in KGs by a collection of edges \(\left<h,r,t\right>\), whose head h is always an entity, and tail t may be either another entity, in which case we call this edge a relation edge and r a relation, or a literal (e.g., number, date, string), in which case we call this edge an attribute edge and r an attribute with its corresponding attribute name\(^{2}\). We represent a knowledge graph as \(KG = (E, R, A, L, X, Y)\), where E is a set of entities, R is a set of relations, A is a set of attributes, L is a set of literals, \(X \subseteq (E \times R \times E)\) and \(Y \subseteq (E \times A \times L)\) are the sets of relation and attribute edges of the KG, respectively. Given a source \(KG_1 = (E_1, R_1, A_1, L_1, X_1, Y_1)\) and a target \(KG_2\) = \((E_2, R_2, A_2\), \(L_2, X_2, Y_2)\), the task of entity alignment is to find pairs of matching entities \(M = \{(e_i,e_j)\in E_1 \times E_2 \mid e_i \equiv e_j \}\), where “\(\equiv\)” denotes the equivalence relationship (Zhang et al. 2019; Wang et al. 2020). A subset \(\delta \subseteq M\) of the matching pairs may be used as a seed alignment for training. For instance, in Fig. 1, the entities of the two KGs are \(E_1 = \{v_1, v_2, v_3, v_4\}\) and \(E_2 = \{v_5, v_6, v_7, v_8\}\). The relations are \(R_1 = \{cast, directed, wrote\}\) and \(R_2 = \{directed, actedIn\}\), while the attributes are \(A_1 = \{name, birth\)-\(year, title\}\), \(A_2=\{label\}\) and the literals are \(L_1 = \{``Stanley Kubrick'', ``1928'', ``The Shining''\}\). \(L_2 = \{``S. Kubrick'', ``Barry Lyndon'', ``P. Stone''\}\). The relation edges are \(X_1 = \{(v1,directed,v2), (v2,directed,v1), (v1,directed,v3), (v2, directed, v4)\}\), \(X_2\) = \(\{(v5,directed,v6)\), (v8, actedIn, v6), \((v8,actedIn,v7)\}\), while the attribute edges are \(Y_1\) = \(\{(v1,name, ``Stanley Kubrick'')\), (v1, birth-\(year,``1928'')\), \((v2,title,``The Shining'')\}\) and \(Y_2\) = \(\{(v5,label, ``S. Kubrick'')\), \((v8,label,``P. Stone'')\), \((v7,label,``Barry Lyndon'')\}\). The task of entity alignment is to find the matches (denoted by dashed edges in Fig. 1) M = \(\{(v1,v5), (v2, v6), (v3, v7), (v4, v8)\}\).

In practice, all the evaluated entity alignment methods rely on a number of assumptions/constraints, as listed below:

-

Every entity is assumed to be the head of at least one relation edge (so we do not consider entities that are not part of a connected component of the KG):

\(\forall e \in E, \exists r \in R, t \in E: (e, r, t) \in X.\)

-

1-to-1 constraint: Every entity in \(E_1\) should be matched to exactly one entity in \(E_2\): \(\forall e_i \in E_1\; \left( \exists e_j \in E_2: (e_i, e_j) \in M\right) \wedge \left( \not \exists e_j' \in E_2: (e_i, e_j') \in M\right)\) and vice versa \(\forall e_j \in E_2\; \left( \exists e_i \in E_1: (e_i, e_j) \in M\right) \wedge \left( \not \exists e_i' \in E_1: (e_i', e_j) \in M\right) .\) This also implies that \(\mid M \mid = \mid E_1 \mid = \mid E_2 \mid\).

2.2 Knowledge graph embeddings for entity alignment

KG embedding methods aim to learn a low-dimensional vector-space representation of symbolic KGs, known as embeddings. The idea is to embed the nodes (entities) and edges (relations or attributes) of a KG in an embedding space in a way that preserves their similarity in the original KG. Embedding methods have been proven to be effective in many machine learning tasks, such as node classification (Kipf and Welling 2017) that aims to assign entity types to KG nodes, or link prediction (Bordes et al. 2013; Sun et al. 2019) that aims to find missing relations between entities in a single KG. Lately, several embedding-based methods have been also proposed for entity alignment, exploiting either relation edges (relation-based methods), such as MTransE (Chen et al. 2017), MTransE + RotatE (Sun et al. 2020), RDGCN (Wu et al. 2019), RREA(basic) (Mao et al. 2020b), RREA(semi) (Mao et al. 2020b) or attribute edges (attribute-based methods), such as MultiKE (Zhang et al. 2019), AttrE (Trisedya et al. 2019), KDCoE (Zhang et al. 2017), and BERT_INT (Tang et al. 2020).

Figure 2 depicts the building blocks of embedding-based entity alignment methods: (i) The embedding module \(S_K\) that encodes the entities of each KG in an embedding space (L1 for \(KG_1\) and L2 for \(KG_2\)) according to the relational (i.e., entity structural neighborhood) and/or the factual part (i.e., entity names/identities, literals/text) of descriptions. (ii) The alignment module \(S_A\) that aligns the produced entity embeddings using the seed alignment (supervised) or attribute-values similarity (unsupervised), or both (semi-supervised). It produces a common embedding space for the entities of two KGs, in order to generate the alignment result according to a distance metric (e.g., Euclidean), using three different techniques known as sharing, swapping and mapping. Sharing and Swapping, update directly the entity embeddings produced by the embedding module according to the available similarity evidence of entities, while Mapping essentially learns a linear transformation between the two embedding spaces of aligned KGs. In the rest of this section, we will detail popular methods that implement those modules.

2.2.1 Embedding module

KG embedding methods proposed for the task of link prediction are used to implement the embedding module of entity alignment methods. There are several families of KG embedding methods for link prediction have been proposed in the literature, e.g., Yang et al. (2015), Trouillon et al. (2016); Nickel and Kiela (2017). In this paper, we are interested in contrasting translational methods such as TransE (Bordes et al. 2013) and RotatE (Sun et al. 2019), with Graph Neural Networks such as Graph Convolutional Networks (GCNs) (Kipf and Welling 2017) and Graph Attention Networks (GATs) (Velickovic et al. 2018).

2.2.1.1 Translational methods Translational methods use distance-based scoring functions in order to optimize a margin-based loss function and learn the embeddings of entities in a KG. A distance-based scoring function is a function that measures the plausibility of a relation edge \(\left<h,r,t\right>\) i.e., it measures the distance of the embedding of the head to the embedding of the tail entities, given the embedding of the relation. A margin-based loss function is a function that these methods aim to minimize, in order to minimize the distance of entity embeddings by a certain margin, computed by a distance-based scoring function. The key difference among all those translational methods is based on the degree that they are able to capture more complex graph structures such as cycles, by adopting the appropriate operator in the scoring function.

TransE (Bordes et al. 2013) is one of the most widely used translational KG embedding methods. In this method, both entities and relations are represented in the same vector space. The relation r is equivalent to the translation of vectors from head entity h to the tail entity t. If \(\left<h,r,t\right> \in X\), then the embedding \({{\textbf {t}}}\) of t should be close to the embedding \({{\textbf {h}}}\) of h, plus the vector \({{\textbf {r}}}\) of r , i.e., \({{\textbf {h}}}+{{\textbf {r}}}\approx {{\textbf {t}}}\). Formally, TransE minimizes the margin-based loss function:

where \(f\left( {\textbf{h}},{\textbf{r}},{\textbf{t}}\right) =\; \mid {\textbf{h}}+{\textbf{r}}-{\textbf{t}} \mid\) is the scoring function, X is the set of positive relation edges (relation edges that exists in the KG), \(X'\) is the set of negative relation edges (relation edges that do not exist in the KG), and \(\gamma\) is the margin hyperparameter. Each negative edge \(x' \in X'\) is created by replacing the head or the tail of a positive edge in X with a random entity, ensuring that \(x' \notin X\).

RotatE (Sun et al. 2019) is a translation-based embedding model that, unlike TransE, infers various relation patterns, such as symmetries. Specifically, RotatE maps the entities and relations to the complex vector space and defines each relation as a rotation from the head entity to the tail entity (Fig. 3). Given a relation edge \(\left<h,r,t\right>\), we expect that \({{\textbf {t}}} = {{\textbf {h}}} \circ {{\textbf {r}}}\), where \(\circ\) denotes the Hadamard (Million 2007) (element-wise) product.

This model aims to minimize the margin-based loss function

by maximizing the scores of positive relation edges and minimizing the scores of negative relation edges, where \(d_r\left( \left<h,r,t\right>\right) =\; \mid {\textbf{h}}\circ {\textbf{r}}-{\textbf{t}} \mid\) is a scoring function, \(\gamma\) is a fixed margin, \(\sigma\) is the sigmoid function, and \((h'_i,r,t'_i)\) is the i-th negative edge. RotatE, like TransE, creates the negative relation edges by replacing the head and the tail of positive relation edges randomly.

2.2.1.2 Graph Neural Network Methods Translational methods cannot deal with various complex graph structures. For example, TransE (Bordes et al. 2013) cannot deal with triangular structures like the one in Fig. 4, because it requires the three equations \(v_1+r_a \approx v_2\), \(v_2+r_a \approx v_3\) and \(v_1+r_a \approx v_3\) to hold at the same time. This is impossible, because for satisfying the former two equations we would have \(v_1+2 r_a \approx v_3\) which is contradictory to the equation \(v_1+r_a \approx v_3\).

In order to cope with that, Graph Neural Network (GNN) methods have been proposed. GNNs learn entity embeddings, by recursively aggregating the representations of neighboring nodes. They essentially rely on message passing, according to which, each graph node recursively receives and aggregates features (node representations) from its neighbors in order to represent the local graph structure.

There is a range of GNN variants, that implement different aggregation strategies. In this section, we focus on standard graph convolutional networks (GCNs) (Kipf and Welling 2017) and graph attention networks (GATs) (Velickovic et al. 2018), since they are the core of both RDGCN (Wu et al. 2019) and RREA (Mao et al. 2020b); two of the proposed methods that we evaluate in this study and describe in Sect. 2.3.

Triangular structure (Wu et al. 2019)

GCN (Kipf and Welling 2017) takes as input the randomly initialized entity embeddings of the KG, which is treated as an undirected graph. Then, it learns a set of layer-specific weights, known as filters or kernels, that are multiplied with the input embeddings. In essence, it acts as a sliding window across the KG that learns entity features while preserving useful structural information from the neighborhoods. GCN uses the following function

to aggregate the entity embeddings of l layers, where \(\sigma\) is an activation function, \(N_{i}\) is the set of the one-hop neighbors of the central entity i (including itself by adding a self-loop), \(c_{ij}\) is a normalization constant that defines isotropic average computation (each neighbor contributes equally to update the embedding of the central entity), \(W^{(l)}\) is a trainable layer-specific weighted matrix for feature transformation and \(H^{(l)}\) are the entity embeddings for layer l. More precisely, in order to learn the final embedding of a central entity, GCN sums its embedding with the neighbors embeddings.

GAT (Velickovic et al. 2018) expands the aggregation function of GCN, by an attention mechanism that assigns different weights to each neighbor of a central entity. GAT uses the following aggregation function

that aggregates the entity embeddings of l layers, where \(\sigma\) is an activation function, \(N_{i}\) is the set of the one-hop neighbors of the central entity i, \(H^{(l)}\) are the entity embeddings for layer l, \(z^{(l)}_{i}\) is a transformation operation and \(\alpha _{i j}^{(l)}\) is the normalized coefficient score. Particularly, \(z^{(l)}_{i}\) and \(\alpha _{i j}^{(l)}\) are calculated as:

and

where

\({\mathbf{\vec{a}}}^{{(l)^{T} }}\) is a learnable weight vector, LeakyReLU a variant of the activation function ReLU (Parisi et al. 2022) and \(\mid \mid\) is the concatenation operation.

2.2.2 Alignment module

For the alignment module \(S_A\), there exist three techniques: sharing, swapping and mapping (Sun et al. 2020). We describe them below, while we extensively compare them in Sect. 2.5.2.

2.2.2.1 Sharing Sharing aims to iteratively update the already produced entity embeddings, in order to minimize the embedding distance of each entity e and its aligned entity \(e'\) from the seed alignment \(\delta\).

In Fig. 5, we demonstrate the entity embeddings of \(KG_1\) and \(KG_2\) in L1 and L2 embedding spaces, respectively, while we also show the updates of the embeddings of the entities of seed alignment, in order to minimize their embedding distance. For simplification, we use a part of seed alignment, thus only the blue entities and the orange entities are considered as aligned. Therefore, by this technique, assuming the spatial similarity of aligned entities in two different KGs, we aim to adjust the axis of the two embedding spaces, so that entity vectors of the same entity in two KGs to overlap. It is worth mentioning that we started from two KGs encoded in two different embedding spaces (embeddings from the embedding module) and we ended up with two KGs encoded in an unified embedding space.

2.2.2.2 Swapping Swapping is a variation of sharing that produces extra positive edges, preserving the same objective as sharing. For instance, given two aligned entity pairs \((h,h') \in \delta (KG_1,KG_2)\) and \((t,t') \in \delta (KG_1,KG_2)\) and a relation edge (h, r, t) of \(KG_1\), swapping produces two new positive edges \((h^{\prime },r,t)\) and \((h,r,t^{\prime })\) and feeds them in KG embedding models (embedding module) as positive relation edges, in order to increase the training data, benefiting the quality of the embeddings as we describe in Sects.2.5.1.2 and 2.5.6. Swapping does not introduce a new loss function.

2.2.2.3 Mapping Mapping aims to learn a matrix M as a linear transformation on entity vectors from \(L_i\) to \(L_j\), in order to minimize the embedding distance of each linearly transformed entity e and its aligned entity \(e'\) from the seed alignment \(\delta\):

In Fig. 6, we demonstrate the entity embeddings of \(KG_1\) and \(KG_2\) in L1 and L2 embedding spaces respectively, while we also show the process in which we learn the matrix \(M_{i,j}\) that linearly transforms entities from L1 to L2. During this process, the linearly transformed entities of \(KG_1\) should be close to their aligned entity of \(KG_2\) according to the seed alignment. For simplification, we use a part of the seed alignment, thus only the blue entities and the orange entities are considered as aligned. Mapping, in contrary to sharing and swapping, aims to learn the mappings between the two embedding spaces (deducing the linear transformation from \(L_1\) to \(L_2\)), without assuming the similarity of spatial emergence. More precisely, it does not force the entity vectors of aligned entities to overlap, instead, it treats the learned mappings as topological transformations (one-to-one correspondence) from \(L_1\) to \(L_2\), preserving the two KGs encoded in two different embedding spaces.

2.3 Knowledge graph embeddings using relations

In this section, we discuss relation-based KG embedding methods, all of which are supervised. These methods use only the structural information (relation edges) for learning the entity embeddings.

MTransE (Chen et al. 2017) is a translation-based model for multilingual KG embeddings, but it is also applicable to general-purpose KGs, capturing their structure. The objective is to minimize the loss function

where \(S_K\) is the loss function of the embedding module, \(S_A\) is the loss function of the alignment module, and \(\alpha\) is a factor that weights \(S_K\) and \(S_A\). As the loss function \(S_K\) of the embedding module, MTransE utilizes a simplified version of TransE (Eq. 1), in which no negative relation edges are considered, while as the loss function \(S_A\) of the alignment module, it uses mapping (Eq. 9).

MTransE+RotatE (Sun et al. 2020) is a variation of MTransE that uses RotatE (Eq. 2) as \(S_K\) in Eq. 10, and sharing (Eq. 8) as \(S_A\), instead of TransE and mapping, respectively.

RDGCN (Wu et al. 2019) leverages GCNs (described in 2.2.1.2) to incorporate structural information in the entity embeddings. Particularly, given \(KG_1\) and \(KG_2\), RDGCN constructs a primal (entity) graph \(G^e\) by merging \(KG_1\) and \(KG_2\), and its dual (relation) graph \(G^r\), by creating a node in \(G^r\) for every relation type of \(G^e\), and connecting two nodes in \(G^r\) if the corresponding relations in \(G^e\) share the same head or tail entities.

Then, it uses a graph attention mechanism (a dual attention layer that assigns different importance to each neighbor’s contribution) to make interactions between \(G^e\) and \(G^r\), in order the resulting entity representations in \(G^e\) to capture the relation information, and then, to be fed to a GCN, capturing the structure of the neighborhood (Eq. 3). The resulting entity embeddings are refined using the mapping alignment technique (Sect. 2.2.2.3). The loss function that RDGCN aims to minimize is

where \((e_i, e_j)\) are entity pairs from the seed alignment \(\delta\), \((e_i', e_j')\) are negative samples generated by replacing \(e_i\) or \(e_j\) with a random entity, and d is the embedding distance function used in mapping (Sect. 2.2.2.3).

RREA (Mao et al. 2020b) integrates GCNs and GATs (described in Sect. 2.2.1) with a Relational Reflection Transformation, in order to obtain relation-specific embeddings for KG entities. This transformation utilizes a matrix that, in contrary to standard GCN and GAT, is constrained to be orthogonal, in order to reflect entity embeddings across different relational hyperplanes. The orthogonal property of the aforementioned matrix keeps the norms and the relative distances of entities in the relational space unchanged.

More precisely, RREA stacks multiple GNN layers, in order to capture and aggregate multi-hop neighborhood information for each entity embedding. The output embedding of entity \(e_i\) from the l-th layer is obtained as follows:

where ReLU (Parisi et al. 2022) is an activation function, \(N^{e}_{e_i}\) are the neighboring entities of \(e_i\), \(R_{ij}\) denotes the relations between \(e_i\) and \(e_j\), \(M_{r_{k}}\) the relational reflection matrix of \(r_k\), and \(\alpha _{i j k}^{l}\) is a weight coefficient of \(M_{r_{k}}\) (similar to GAT). The final entity embedding comes from the concatenation of the embeddings of each layer. In addition, in order to include relational information around entities, RREA concatenates the summation of the relation embeddings with entity embeddings to get dual-aspect embeddings. The resulting entity embeddings are refined using the sharing alignment technique (Sect. 2.2.2.1). The loss function that RREA aims to minimize is the following:

where \(e'_i\) and \(e'_j\) represent the negative pair of \(e_i\) and \(e_j\), generated using truncated uniform negative sampling (Sun et al. 2018; Zhu et al. 2019; Cao et al. 2019) and dist is the embedding distance function used in sharing.

The methodology described above refers to the basic version of RREA, RREA(basic). RREA also comes with a semi-supervised version, RREA(semi), that proposes possibly aligned entity pairs in different iterations, in order to enrich the training set. According to Mao et al. (2020a), the entity pair \((e_i,e_j)\) is proposed as aligned, if \(e_i\) and \(e_j\) are mutually nearest aligned.

2.4 Knowledge graph embeddings using attributes

In this section, we focus on attribute-based KG embedding methods. These methods utilize not only the structural information of the KGs (relation edges) to learn the entity embeddings, but also the attribute values (literals). In addition, in many methods the attribute embeddings help to enrich the seed alignment or even in refining the entity embeddings. We categorize the attribute-based methods depending on the usage of seed alignment as supervised, semi-supervised and unsupervised.

2.4.1 Supervised

MultiKE (Zhang et al. 2019) first constructs the embeddings of each literal l

where \(LP(o_n)\) is the pre-trained word embedding of word \(o_n\), \(encode(\cdot )\) is the encoder that does the compression of the embeddings, and [; ] is the concatenation operation. If \(o_n\) is an out-of-vocabulary word (i.e., there is no pre-trained embedding for this word), then MultiKE builds it by using pre-trained character embeddings. Then, it learns entity embeddings by exploring three different views: the name view \(\Theta ^{(1)}\), the relation view \(\Theta ^{(2)}\) and the attribute view \(\Theta ^{(3)}\).

Given an entity e, its name view (\(\Theta ^{(1)}\)) is defined as

where \(name(\cdot )\) is the name of the entity.

For the relation view \(\Theta ^{(2)}\), it adopts TransE to learn the entity embeddings of the two KGs, minimizing the following loss function

where \(X^+ = X_1 \cup X_2\) are the relation edges that exist in the two KGs, \(X^-\) are relation edges that do not exist in the two KGs (negative relation edges, created as in TransE), \(f_{\textrm{rel}}\) is the scoring function of TransE (Eq. 1), and \(\zeta _{(h, r, t)} \in \{-1,1\}\) denotes whether (h, r, t) is a positive or a negative edge.

For the attribute view \(\Theta ^{(3)}\), again it uses TransE to learn the embeddings exploiting the attributes and their values, aiming to minimize the loss function

where \(Y^+ = Y_1 \cup Y_2\) are the attribute edges of the two KGs, \(f_{\text{ attr }}\left( {\textbf{h}}^{(3)}, {\textbf{a}}, {\textbf{l}}\right) = - \mid {\textbf{h}}^{(3)} - \textbf{CNN}(\langle {\textbf{a}} ; {\textbf{l}}\rangle ) \mid\), which is using a Convolution Neural Network (CNN) representation of an attribute a and its literal value l, as follows:

where [; ] denotes the concatenation operation, \({\textbf{a}}\) the embedding of an attribute a, \({\textbf{l}}\) the embedding of a literal l, \(\Omega\) the kernel of CNN, \(\sigma\) the activation function, and \({\textbf{W}}\) a trainable weighted matrix.

For refining the entity embeddings of the relation and the attribute views, MultiKE minimizes the following two loss functions, respectively:

and

where \((h, {\hat{h}})\) and \((t, {\hat{t}})\) are entity pairs in the seed alignment, \({\mathcal {X}}'\) and \({\mathcal {X}}''\) are the relation edges whose head and tail entities are in seed alignment, respectively, and \({\mathcal {Y}}'\) are the attribute edges whose head entities are in seed alignment.

For the entity alignment (alignment module), MultiKE produces a set of aligned relations \(S_{rel}\) and a set of aligned attributes \(S_{attr}\) that are used to minimize the following loss function (cross-KG relation inference):

where \({\mathcal {X}}'''\) are the relation edges whose relations are in \(S_{rel}\) and

is the similarity measure that is used to align or not two relations, based on their name similarity (from literal embeddings) and their semantic similarity (from relation embeddings).

Finally, MultiKE jointly learns the final entity embeddings from the different views in a unified embedding space, by minimizing the loss function

where \({\tilde{\textbf{H}}}=\bigcup _{i=1}^{D} {\textbf{H}}^{(i)}\), \({\textbf{H}}\) is the view-specific entity embedding, and D is the number of views. For entity alignment, it uses swapping (Sect. 2.2.2.2).

BERT_INT (Tang et al. 2020) utilizes a well-known language model, BERT (Devlin et al. 2019), in order to embed entities based on their factual information (e.g., descriptions, names) and an interaction model, in order to compute their interactions, instead of aggregating neighbors, which in many cases causes noisy matches.

More precisely, for each entity e, it applies a pre-trained basic BERT unit that accepts the factual information as input, aiming to minimize the following loss function

to fine-tune BERT. Here, D is the seed alignment, \(e^{\prime +}\) is the correctly aligned entity known from seed alignment, \(e^{\prime -}\) is a randomly selected negative entity from the other KG - truncated uniform negative sampling (Sun et al. 2018), m the margin and g is the l1 distance, used for measuring the similarity between the embeddings C(e) and \(C(e^{\prime })\).

Regarding the interaction model, it is divided into the name/description view, the neighbor-views and the attribute-view interactions. Firstly, as name/description interaction, it leverages the embeddings generated by BERT, calculating their cosine similarity. Then, neighbor-view interaction compares names/descriptions of each neighbor pair (considering also their neighboring relations and multi-hop neighbors), producing a similarity matrix. The similarity matrix is then processed by a dual aggregation function to extract the similarity vectors, i.e., the entity embeddings. Afterwards, rather than learning embeddings of entities by aggregating their attributes, it compares each attribute pair, learning similarly to neighbor-view the attribute similarity vectors. Finally, a unified dual aggregation function is applied to extract the features from the neighbor-view and attribute-view interactions and generate the final entity embeddings.

2.4.2 Semi-supervised

KDCoE (Chen et al. 2018) leverages a weakly aligned KG for semi-supervised entity alignment using long (typically from a couple of sentences) textual descriptions of entities. It co-trains iteratively two embedding models, one on the structure of the KG (KGEM) and another on the textual descriptions of the entities (DEM), respectively, given a small seed alignment. During each iteration, each embedding model proposes a new set of aligned entity pairs alternately, in order to enrich the seed alignment. The process runs until one of the models has no entity pair to propose.

KGEM is practically the same as MTransE (Eq. 10), using the TransE (Eq. 1) embedding module (including negative samples) and mapping (Eq. 9). At the end of this model, if the embedding distance between an entity e and its closest (based on the distance function of mapping) entity \({\hat{e}}'\) is lower than a threshold, then the pair \((e,{\hat{e}}^{\prime })\) is proposed as aligned. DEM utilizes an encoder to process textual description sequences of vectors \(d_e\), that are produced by pre-trained word embeddings, and learn the description embeddings. The learning objective of DEM is to maximize the log likelihood of an entity e and its counterpart \(e'\) in terms of description embeddings, by minimizing the following loss function:

where \(\delta\) is the seed alignment and

where \(d_e\) and \(d_{e'}\) are the embeddings of the textual descriptions of the two aligned entities e and \(e'\), unrelated entities \(e_k\) are chosen randomly from a uniform distribution U, and \(\mid B_{d} \mid\) is the batched sampling size. Intuitively, the encoder aims to maximize the dot product of descriptions of aligned entities and decrease the dot product of descriptions of unrelated entities. At the end of this model, if the embedding distance between \(d_e\) and its closest entity \(d_{e'}\) is lower than a threshold (different than the one used for KGEM), then the pair \((e, e')\) is proposed as aligned.

For generating entity embeddings from textual descriptions, KDCoE utilizes a self-attention Gated Recurrent Unit (GRU), in order to preserve the sequence of words in a textual description and remove information irrelevant for the prediction, while sharing information across the different descriptions. For more details on this, we refer the reader to Chen et al. (2018).

2.4.3 Unsupervised

AttrE (Trisedya et al. 2019) is an unsupervised method that leverages both structural embeddings and attribute character embeddings for entity alignment. Instead of relying on a seed alignment to refine the structural embeddings, it uses the factual information to minimize the embedding distance between entities that have similar attribute character embeddings.

As shown in Fig. 7, AttrE consists of four modules: the schema alignment module (preprocessing step), the structure embedding module \(J_{S E}\), the attribute character embedding module \(J_{C E}\), and the alignment module \(J_{SIM}\)

The predicate alignment module merges the two KGs and renames predicates with a similar predicate name from \(KG_1\) and \(KG_2\), with a unified naming schema. For example, lgd:hasCountry and dbp:country (with lgd and dbp being the prefixes of the Linked Geo Data and DBpedia namespaces, respectively) are converted to :country. To find the predicates with similar names, it computes the Levenshtein distance of the postfixes of the predicates’ URIs. If this score is greater than a predefined threshold, then the two predicates are similar.

AttrE adopts TransE (Eq. 1) to learn the structure embeddings by minimizing the \(J_{SE}\) loss function, with \(\alpha =\frac{{\text {count}}(r)}{{\mid }X{\mid }}\), where count(r) is the number of occurrences of relation r, and \({\mid }X{\mid }\) is the total number of relation edges in the merged KG.

To learn the attribute character embedding, AttrE minimizes the following objective function:

where

and Y is the set of positive attribute edges, \(Y'\) is the set of negative attribute edges (generated by replacing the head entity with a random entity), w weights relation edges with aligned predicates, and \(f_{a}(l)=\sum _{n=1}^{N}\left( \frac{\sum _{i=1}^{t} \sum _{j=i}^{n} {\textbf{c}}_{{\textbf{j}}}}{t-i-1}\right)\) is an n-gram based compositional function that encodes literals l of attributes a in the embedding space. At the end of this process, entities that have similar attribute embeddings should also have similar entity embeddings. In the example of Fig. 7, lgd:5147 has a similar embedding with :Germany, since their attributes label:Germany have similar embeddings.

Having learned the structure embeddings and the attribute embeddings, AttrE combines them to produce the final entity embeddings, by minimizing the loss function

where \(\mathbf {e_{se}}\) the structure embedding of e, and \(\mathbf {e_{ce}}\) is the attribute character embedding of e. For example, the embedding of the entities lgd:5147 and :Germany will be updated in order their structured and attribute embeddings to be close. However, the entities lgd:2401 and dbp:Kromsdorf have tail entities with similar embeddings. At the end, the entities lgd:2401 and dbp:Kromsdorf will end up having similar embeddings too. For generating the alignment results, AttrE reports two entities as aligned, if the cosine similarity of their entity embeddings is greater than a pre-defined threshold.

2.5 Qualitative comparison of embedding methods

In this section, we describe the general assumptions of the evaluated methods, while we also compare the embedding-based entity alignment methods from different perspectives. For this purpose, we summarize in Tables 1 and 2 the basic characteristics and techniques of the methods, with respect to eight main categories: embedding module, literal size, alignment module, learning, schema alignment, embedding initialization, and negative sampling on relations and attributes, as described next.

2.5.1 Embedding module

The type of information that each method exploits in order to learn the embeddings reveals one of the most important assumptions that differentiates the methods. All embedding-based entity alignment methods utilize the relational structure of entities (relations), assuming that structural similarity is the key to entity alignment. However, there are methods that also exploit the literal values of the attributes, as well as the entity and attribute names.

Among the evaluated methods, MultiKE, RDGCN and BERT_INT are the only methods that use entity names in order to learn the final entity embeddings. Thus, for these methods, we expect an improved performance on KGs where there is some homogeneity in the naming of the entities.

2.5.1.1 Entity names

Some embedding-based entity alignment methods learn the entity embeddings by encoding the entity names in the embedding space, so as entities with similar names to have similar embeddings. These methods work under the assumption that the same real-world entities have to follow the same or semantically similar naming. However, in many real-world KGs, this assumption is not holding as entity names are replaced with source-specific ids. For this reason, several EA methods either avoid to utilise the entity names for the entity embeddings, either use them jointly with the structural or factual information of KGs.

2.5.1.2 Relations

The structural information of the KGs constitutes the main type of information that all embedding-based entity alignment methods use in order to learn the entity embeddings. Entity embedding methods exploit the structural information either in one hop or in multiple hops. The former use the local structural information of the entities, ignoring the impact of more distant neighbors. For these methods, the more relations we have per entity, the better results we expect to get, because entities have the opportunity to minimize their embedding distance with multiple similar entities (neighbors), exploiting multiple features to learn the final embeddings (more information for learning). In addition, the more relation edges we have, the more negative samples we have per entity, and as a result, entities move away from dissimilar entities in the embedding space. The latter (multi-hop methods) use the subgraph structure, exploiting a larger amount of relations between entities. This way, they focus on the extended (multi-hop) neighborhood and aggregate the embeddings of the multi-hop neighbors in order to learn their own.

Among the methods evaluated in this study, all of them use the relations to learn the entity embeddings, with most methods focusing on the one-hop neighborhood, except RDGCN, RREA(basic), RREA(semi), and BERT_INT, which follow a multi-hop approach. This way, RDGCN and both versions of RREA increase the information that an entity can exploit and the final entity embeddings are more expressive. This benefit comes at the risk of incorporating more noisy information into the embeddings, due to considering some distant neighbors, irrelevant or less important for the entity alignment task. Thus, BERT_INT, rather than aggregating the information of multi-hop neighbors, compares entity pairs from multi-hop neighbors, called interactions, based on factual information (e.g., textual descriptions, names).

2.5.1.3 Attribute names

Some embedding-based entity alignment methods use the attribute names for learning the entity embeddings. Usually, attribute names are exploited by the methods in order to enhance the similarity measure of entities and not as main source of information for learning the embeddings. That is because it requires KGs to have similar naming schemes, which is not usual in real-world KGs.

MultiKE is the only method that utilizes this type of information, requiring a homogeneous naming in terms of attributes. Thus, we expect this feature to perform better in datasets originating from the same or similar data sources (e.g., Wikipedia-based, or synthetic KGs).

2.5.1.4 Literal values and literal size

Many embedding-based entity alignment methods use literal values as auxiliary information to learn the entity embeddings. Specifically, they learn the embeddings of the literal values and they use those embeddings for learning the entity embeddings, or for enriching the seed alignment, or even for alignment. In most methods, all literal values are used, but in some methods, only the literal values of the attributes with name “description” are used. In addition, there are two methods for learning the literal-value embeddings: character-based and word-based. The first one uses the characters of the literal to learn the final literal embedding, while in the second one, the literal is tokenized (split into words) and the final embedding is resulting by employing pre-trained word embeddings.

The character-based methods usually exploit a small part of the literals (e.g., the first few characters) and they are mostly used for short literals, such as dates. In addition, there are many methods to learn the character-based embeddings: the n-gram-based that sums the n-gram combinations of the literals and the method that computes the average of the pre-trained character embeddings. The word-based methods typically exploit more information from the literal value (e.g., the first few words) and for this reason, they are mostly used for longer literals, such as names and descriptions. However, the word-based methods assume the existence of the word embeddings in the pre-trained set for all the words that appear in the literal values, which is very frequently not the case, resulting in out-of-vocabulary errors. For this reason, there are also hybrid methods, that use the word-based method first, but if a word does not exist in the pre-trained set (vocabulary), they use one of the aforementioned character-based method, in order to learn the specific word’s embedding. Regarding the literal size, it is a very important hyperparameter that defines the size of the substring of the literal which will be used, including or excluding important words or characters for the final literal embeddings.

Among the evaluated methods, KDCoE, AttrE, MultiKE and BERT_INT are the ones that use literal values for learning embeddings. KDCoE exploits the first 4 words of textual descriptions and pre-trained word embeddings, in order to learn the description embeddings and measure the entities similarity based on these embeddings. The objective is to enrich the training set (Sect. 3.4) with extra aligned entity pairs based on this similarity. Thus, if the similarity of an entity pair (based on description embeddings) is above a pre-defined threshold (95%), the entities are proposed as aligned, enriching the training set with an extra aligned entity pair that was not included in the training set before. AttrE, in contrary, exploits the first 10 characters of the literal values and follows an n-gram based method to learn the embeddings, for a combination of n values ranging from 1 to 10. The literal embeddings are used in the alignment module in order entities of the KGs with similar literal embeddings to be close in the embedding space. Specifically, AttrE uses literal embeddings for the alignment, since, as an unsupervised method, it does not use the seed alignment during training process. Furthermore, MultiKE uses the first 5 words and pre-trained word embeddings for learning the literal embeddings. In case a word is not contained in the available pre-trained word embeddings, it averages the character embeddings of this word, i.e., it follows the hybrid approach described above. MultiKE uses the literal embeddings in many ways, as described in Sect. 2.4.1, e.g., for name view, attribute view and schema alignment. BERT_INT utilizes long textual descriptions (limiting the words to 128), names, or literals for both BERT unit and interaction model, as described in Sect. 2.4.1.

All these methods require homogeneity in terms of literal values, which is not usual in real-world KGs. Finally, the character-based method that MultiKE uses as alternative to word-based methods suffers in the literals that contain the same characters in different order. For example, the number “5013” and the number “1350” will have the same embeddings. AttrE does not have this issue, since it is following an n-gram based method for character embeddings. BERT_INT, leveraging a variety of factual information and giving priorities depending on their availability and quality, deals with heterogeneity issues (e.g., it prioritizes descriptions over entity names, since descriptions contain richer information).

2.5.2 Alignment module

The alignment module aims to produce a common embedding space for the entities of the two KGs, using three typical techniques described in Sect. 2.2.2. MTransE+RotatE, AttrE, both versions of RREA, and BERT_INT utilize the sharing technique to calibrate the axis of the embedding spaces of the two KGs, in order the aligned entities to overlap. Consequently, we expect methods that rely on this technique to work well on KGs with dense and similar neighborhoods. This setting helps them to achieve the desired overlapping and preserve the initial structure of the KGs in the embedding space. On the other hand, MTransE, KDCoE and RDGCN, that rely on the mapping technique, learn a transformation matrix that aligns entities in two separate embedding spaces. In contrary to sharing, this technique does not assume the spatial similarity of the KGs. Finally, methods such as MultiKE, that unify multiple embedding spaces (multi-view) and consume more relation edges for training, rely on the swapping alignment technique.

2.5.3 Learning

We divide the evaluated methods into supervised, that require the seed alignment for the alignment module, semi-supervised, that use the seed for the alignment process, but also try to enrich it, and unsupervised, that do not use the seed alignment for the alignment process. All methods need seed alignment, either for training (alignment module) and testing (supervised and semi-supervised) or exclusively for testing (unsupervised). Supervised methods (MTransE, MTransE+RotatE, RDGCN, MultiKE RREA(basic) and BERT_INT) assume the existence of seed alignment, which in many cases is hard to find (since it includes the matches of all the entities of the two KGs), even in widely used Wikipedia-based KGs, hindering the EA task especially when the KGs scale up in terms of content and density.

In contrary to the aforementioned category, semi-supervised methods (e.g., KDCoE and RREA(semi)) work sufficiently well when a small percentage of aligned entities is available, which is more realistic in real-world KGs. However, these methods usually require auxiliary information to support the alignment task that involves the enrichment of the seed alignment. For example, KDCoE does this by assuming the existence of multiple, homogeneous textual descriptions. On the other hand, RREA(semi) does not use auxiliary information; it utilizes specific rules (e.g., the two entities have to be mutually nearest aligned, in order be proposed as aligned).

Finally, unsupervised methods carry out a more difficult task, since they use no seed alignment for the alignment process. Particularly, the lack of seed alignment makes AttrE leverage the literals similarity for alignment purposes, assuming their homogeneity. It is worth noting that unsupervised methods, in contrary to supervised and semi-supervised, do not assume that every entity of \(KG_1\) should be matched to exactly one entity of \(KG_2\).

2.5.4 Schema alignment

We refer to schema alignment as the process of finding similar relations and/or attributes. The similarity of the relations or the attributes can be measured by two different ways: measuring their name similarity (AttrE) or measuring both their name similarity and their structural (semantic) similarity (MultiKE) in the embedding space. All these methods can benefit if the initial KGs follow similar naming schemes, making the process of finding similar relations and attributes easier.

AttrE and MultiKE are two methods that perform schema alignment. MultiKE captures the schema similarity not only in terms of relation or attribute name similarity, but also exploits the semantic similarity of relations and attributes. In the case that we have KGs with heterogeneous naming at the schema level, MultiKE would have better performance than AttrE, that only exploits the name similarity for the schema alignment.

2.5.5 Embedding initialization

For initializing the entity embeddings, there are two practices: the random one, that initializes them by picking random numbers from a normal distribution, and the one that initializes them with the entity name embeddings. The latter has better results (Kocmi and Bojar 2017), because embeddings contain some information for the entities, but it works under the assumption that matching entities have similar entity names, which is not holding in many cases. RDGCN is the only method that initializes the embeddings with entity name embeddings.

2.5.6 Negative sampling

Negative sampling (Kamigaito and Hayashi 2022) is the process of generating n (a hyperparameter) negative examples of edges that do not exist in the KG, by replacing either the head or the tail entities of each positive edge with another random entity - uniform negative sampling (Bordes et al. 2013) - or with a highly similar neighbor - truncated negative sampling (Sun et al. 2018; Zhu et al. 2019; Cao et al. 2019). Truncated negative sampling, in contrary to uniform negative sampling, ensures difficult negative samples that contribute more on learning process than the easy ones. For example, if we sample a negative triple (Titanic, capitalOf, Greece) from (Athens, capitalOf, Greece), using uniform sampling, we can find that Athens and Titanic have very low similarity and the generated negative sample contributes little in the learning process. If, instead, we generate the negative triple (Thessaloniki, capitalOf, Greece) from (Athens, capitalOf, Greece), using truncated sampling, this negative triple would contribute more in the learning process, since Thessaloniki (the second biggest city in Greece) and Athens, are two very similar entities.

In general, negative sampling is widely used in KG embedding models on relations, as well as on attributes, in order to maximize the embedding distance of dissimilar entities. The more negative examples we have per positive example, the bigger the distance among dissimilar entities. In addition, an entity that participates in a high number of positive examples (high average relations per entity), will also participate in an increased number of negative examples. For instance, if an entity appears in the head or the tail of x relation edges, then the number of the negative examples for this entity can be as high as \(x*n\), where n is the corresponding hyperparameter.

All the evaluated methods use negative sampling, with the exception of MTransE. The higher the ratio of negative per positive triples, the better the performance of the methods, since the embedding distance of dissimilar entities increases. Although requiring 10 or even 125 times bigger KGs (for 10 or 125 negative samples per positive, respectively) improves the performance, this comes at the cost of increased training time and less scalable methods. MultiKE further enriches the KGs by using the swapping alignment technique that generates additional positive examples, as described in Sect. 2.2.2.2. This technique not only increases the available information for training, but it also increases the negative examples for the entities indirectly, as entities will appear in more relation edges.

2.5.7 Neural network architectures

All evaluated methods are implemented on top of a neural network architecture with multiple hidden layers, each consisting of d neurons, where d are the dimensions of the embeddings (dim). More precisely, relation-based methods utilize either shallow neural networks (MTransE, MTransE+RotatE), or Graph Neural Network (RDGCN, RREA(basic), RREA(semi)) for learning the embeddings, while attribute-based methods use shallow neural networks for encoding relations (AttrE) or Graph Neural Networks for encoding literals (MultiKE) and textual descriptions (KDCoE) or Radial Basis Function kernel (Xiong et al. 2017) for the interactions (BERT_INT).

Regarding relation-based methods, MTransE and MTransE+RotatE, utilize one layer for embedding entities and one layer for embedding entity relations. As shown in Table 7, the dimensions of both embeddings are the same (100). On the other hand, RDGCN consists of four Graph Attention Networks (GATs) (Velickovic et al. 2018), two primal layers and two dual layers. The final embeddings are output by two stacked GCN layers (Kipf and Welling 2017). Both versions of RREA utilize two stacked GATs (Relational Reflection Aggregate Layer). It is worth mentioning that both RDGCN and both versions of RREA use variations of graph attention mechanisms, not standard GATs.

Attribute-based methods, as shown in Table 2, use two standard layers (for entity and relation embeddings) and some extra layers to encode additional information of entity descriptions. For example, KDCoE utilizes two layers for embedding entities and their relations and two GRU layers for encoding their textual descriptions. It is worth mentioning that while all other examined methods learn the structure and attribute embeddings jointly, KDCoE is the only method that trains two different models alternatively (co-training). AttrE consists of five layers: one layer for embedding entities of relation edges, one layer for embedding entities of attribute edges, one layer for relations, one layer for attributes, and one layer for character embeddings. All of these layers contain the same number of neurons (100). MultiKE utilizes two layers for the word embeddings, three layers for entity, relation and attribute embeddings, one convolution layer for encoding literals, and one layer that combines them. Finally, it uses two additional convolution layers for the cross-KG entity inference based on relations and attributes. BERT_INT utilizes four Radial Basis Function kernels (one layer each one) for the interaction model and three Multilayer Perceptron for obtaining the entity embeddings.

It is worth mentioning that the simpler the architecture, the more scalable the method is (e.g., as shown in Table 14, the translation-based method MTransE is more scalable than the GNN-based method RDGCN).

3 Experimental setting

In this section, we give an overview and statistics about the datasets, we describe the evaluation protocol and the metrics that we used. We also provide implementation details about this study. The source code of this work is publicly available.Footnote 3

3.1 Datasets

For the experimental evaluation we utilized nine datasets, each consisting of a pair of KGs to be aligned, four from OpenEA (Sun et al. 2020) and five datasets from Efthymiou et al. (2015), Obraczka et al. (2021) in order to enrich the available data characteristics and ensure a trustworthy meta-level analysis.

For the datasets from OpenEA, three well-known KGs were used as sources: DBpedia (version 2016-10) (Lehmann et al. 2015), Wikidata (version 20160801) (Vrandecic and Krötzsch 2014) and YAGO3 (Rebele et al. 2016). The seed alignment is constructed by the owl:sameAs links, which were provided with those KGs. In addition, it is hard for embedding-based approaches to run on full KGs due to the large candidate space, hence the authors of OpenEA sampled real-world KGs, by their iterative degree-based sampling algorithm described in Sect. 3.4. The resulting KGs have a size of 15K entities each, constituting the datasets D_W_15K_V1 and D_Y_15K_V1. In order to examine the behaviour of the methods with respect to the density of the KGs, the authors of OpenEA generated an additional dense version for each dataset (D_W_15K_V2 and D_Y_15K_V2). For generating the dense version, they deleted entities (nodes) with low (\(\le 5\)) node degrees and they performed again their iterative degree-based sampling algorithm, described in Sect. 3.4. The above mentioned datasets are described as follows:

-

D_W_15K_V1 and D_W_15K_V2 are the sparse and the dense datasets, respectively, that were constructed from DBpedia and Wikidata KGs, describing actors, musicians, writers, films, songs, cities, football players and football teams. We chose them in order to examine the influence of KG density variations in the relation- and attribute-based methods. In addition, these datasets have both low entity name similarity and a low number of entities that have long textual descriptions, undermining the methods that use them. In these datasets, relation and attribute names have been replaced with special ids, which influences in a negative way methods that perform schema alignment using predicate names.

-

D_Y_15K_V1 and D_Y_15K_V2 are the sparse and the dense datasets, respectively, that were constructed from DBpedia and YAGO3. They contain the same entity types with D_W_15K but unlike D_W_15K, they have both high number of entities that have long textual descriptions and high entity name similarity.

Five additional datasets, from Efthymiou et al. (2015), Obraczka et al. (2021), were employed, that originate from the following eight KGs: the BTC2012 version of DBpedia,Footnote 4 BBCmusic (originating from KasabiFootnote 5), IMDb,Footnote 6 TVDB,Footnote 7 TMDb,Footnote 8 and two restaurant KGs.Footnote 9 None of these datasets originally cover the assumptions of embedding-based entity alignment methods, thus we pre-process them, as described in Sect. 3.4. The five extra datasets, built from those KGs, are the following:

-

BBC-DB (Efthymiou et al. 2015) is a sparse dataset constructed by BBCmusic and the BTC2012 version of DBpedia. It contains various entity types such as musicians, their birth places and bands, while also it has the highest number of average attributes per entity and the highest number of entities that have textual descriptions among other datasets. In addition, this dataset is better suited for the methods that perform schema alignment, since these KGs follow similar predicate naming conventions.

-

imdb-tmdb, imdb-tvdb, and tmdb-tvdb (Obraczka et al. 2021) were constructed from IMDb, TMDb, and TVDB that contain descriptions about people, movies, tv series, episodes and companies. Those datasets have a low number of entities, which makes them interesting for examining the performance of supervised vs unsupervised methods with a small training dataset. In addition, they are much sparser than the previous datasets, with low average attributes per entity, while they exhibit high homogeneity in terms of literals and predicate names. tmdb-tvdb features higher homogeneity in terms of literals and lower similarity of long-text descriptions, compared to imdb-tvdb and tmdb-tvdb.

-

Restaurants\(^{9}\) contains descriptions of real restaurants and their addresses from two different KGs. It is the smallest dataset in terms of number of entities, relation types and attribute types, but its main challenge is that it does not cover the assumptions of all the embedding-based entity alignment methods. Specifically, not all entities of KG1 are matched with all the entities KG2 and vice versa. We chose this dataset to examine whether the evaluated methods can be generalized beyond datasets that satisfy the 1-to-1 assumption.

3.2 Statistics and meta-features

In order to quantify useful information about the knowledge graphs, we calculated some basic dataset statistics regrading the type and the number of relations and attributes that are presented in Table 3 and some additional dataset statistics that are shown in Table 4, categorized in the three following categories: seed alignment size, density and heterogeneity that were used for constructing the meta-features of Table 5.

Seed alignment size refers to the number of entity pairs in the seed alignment. Density consists of the average relations per entity and the average attributes per entity, which indicate the density of the knowledge graphs on structural and attribute level, respectively. In addition, it consists of the proportion of sole and hyper relation types of the two knowledge graphs, where a sole relation type is a relation type that does not co-occur with another relation type in any entity pair (Zhang et al. 2017), otherwise, a relation is called a hyper relation (Zhang et al. 2017). Finally, in order to measure the heterogeneity of the two knowledge graphs, we calculate the textual description similarity (Descr_Sim) in the embedding space, of entities that are included in the test and validation sets, the number of entities of the two KGs that have textual descriptions (#Ents_Descr), the similarity of entity names (Ent_Name_Sim), the similarity of literals (Lit_Sim) and the similarity of predicate names (Pred_Name_Sim). Specifically, for Descr_Sim, Ent_Name_Sim and Lit_Sim, we report the average of MAX similarities of each description, entity name and literal, respectively. As for the Pred_Name_Sim, we measure the name similarity of relation and attribute types (predicates) using Levenshtein Distance, as suggested in AttrE (Trisedya et al. 2019). Specifically, we report the average of MAX similarities of each predicate (relation or attribute).

The meta-features of Table 5 were constructed by applying the aggregation functions of Table 6 on the statistics of Table 4, in order to construct features for each individual dataset instead of each individual KG of the dataset and use them in Sect. 4.3. More precisely, for Avg_Rels_per_Entity, Avg_Attrs_per_Entity, Sole_Rels, Hyper_Rels and #Ents_Descr (based on KDCoE that proposes possibly aligned entities of the entities of the first KG) were used aggregation functions, while #Entity_Pairs, Descr_Sim, Ents_Name_Sim, Lit_Sim and Pred_Name_Sim were used as meta-features without the application of any aggregation function.

3.3 Evaluation protocol and metrics

In this section, we describe the evaluation protocol that embedding-based entity alignment methods use, the metrics used for their evaluation, while we also compare those metrics.

3.3.1 Evaluation protocol

EA methods have been traditionally evaluated using classification-based metrics (e.g., precision and recall), comparing the matches proposed by those methods to the correct matches of a given ground truth (Leone et al. 2022). On the contrary, KG embedding-based EA methods, adopted rank-based evaluation metrics (see Sect. 3.3.2), possibly influenced by the recent literature on the embedding-based methods for link prediction (Bordes et al. 2013; Sun et al. 2019). In order to compare embedding-based EA methods on an equal basis and re-use the available open-source code as much as possible, we adopt the latter evaluation metrics and acknowledge that the criticism of Leone et al. (2022) is valid.

During the evaluation, the embedding-based EA methods use two embedding matrices, one for each KG, with the entity embeddings in their rows, and the test setFootnote 10 of the seed alignment (Fig. 8). The alignment methods calculate the similarity of every entity of the first matrix with every entity of the second matrix, in the embedding space using similarity measures such as Euclidean distance, cosine similarity, etc. The result of this process is a similarity list, that we sort in descending order and aim to find the index (ranking) of the aligned entity in the similarity list.

In the example of Fig. 8, \(e_1'\) is the aligned entity of entity \(e_1\), known as true entity, according to seed alignment, and it is in the first position in the similarity list. In the last similarity list, there is a tie in the scores of the two candidate entities \(e_3'\) and \(e_2'\), that is decisive for some evaluation metrics. According to Berrendorf et al. (2020), there are different behaviors for this phenomenon that are categorized as optimistic, pessimistic, non-deterministic and realistic. In optimistic behavior, it is assumed that the true entity is ranked first among other entities with the same score, in pessimistic, it is assumed that it is ranked last, and realistic is the mean of optimistic and pessimistic. In our case, all of the evaluated methods are non-deterministic, which means that handling ties depends on the inner working of the sorting algorithm that the methods use to sort each similarity list.

In the context of ensuring a reliable and unbiased experimental process, we conducted the experiments with 5-fold cross-validation (Sun et al. 2020), where each fold is split into train, validation and test\(^{10}\). Finally, the performance of each method, per dataset, for a specific metric is calculated by the average scores that the method achieves in the 5 folds for this metric.

3.3.2 Evaluation metrics

For the evaluation of the alignment methods, we use the following metrics, which depend on the individual ranks \({\mathcal {I}}\) generated by each method, as described in the previous section.

Hits@k describes the fraction of hits (true entities) that appear in the first k ranks of the sorted similarity lists:

where \(Hits@k \in [0, 1]\). In the experimental results, we report Hits@k values as percentages (i.e., \(Hits@k \cdot 100\%\)). Specifically, this metric measures the accuracy of the methods with adjustable error rate. For instance, Hits@10 allows a small error rate in contrary to Hits@1 that does not allow any errors. The weakness of this metric is that it considers only the first k position of the similarity list, and as a result, all the other positions have no effect to the final score. An advantage of this metric is its easy interpretation. In the example of Fig. 8, \(Hits@1 = 1/3 = 0.33\), since among the three similarity lists (\(\mid {\mathcal {I}} \mid = 3\)), only in one similarity list, a true entity pair is observed in the first rank.

Mean Rank (MR) computes the average ranks of the true entity pairs:

where \(MR \in [1, \mid E \mid ]\) (the lower the better). An advantage of MR is its sensitivity to any model performance changes, since it reflects the average performance and not only the fist k ranks. However, it is also sensitive to outliers, because a very high or very low rank of a true entity pair affects the mean of the ranks. Finally, MR is an easily interpretable metric, but we should keep in mind the size of the candidate entities, because MR = 10 is better for 1,000,000 entities than for 20 entities. In the example of Fig. 8, assuming that in the third similarity list the true pair is in the second rank, \(MR = (1 + 3 + 2) / 3 = 2\).

Mean Reciprocal Rank (MRR) is the inverse of harmonic mean rank:

where \(MRR \in (0,1]\). This metric is affected more by the top-ranked values rather than the bottom ones. For instance, the change is larger when moving from 1 to 2 compared to moving from 10 to 1,000. Thus, MRR is less sensitive to outliers. In the example of Fig. 8, assuming that in the third similarity list the true pair is in the second rank, \(MRR = (1/1 + 1/3 + 1/2)/3 = 0.61\).

3.4 Pre-processing pipelines

The embedding-based entity alignment methods are working under some strict assumptions that make them inapplicable to the five new datasets. However, for a reliable experimental evaluation of the methods under the same conditions and data characteristics, we pre-process those datasets in order to conform to the assumptions described in Sect. 2.

1-to-1 and structural-information assumptions Firstly, we processed the seed alignment, in order to satisfy the 1-to-1 mapping assumption, as follows. If there are two entity pairs \((e_i,e_j)\) and \((e_i,e_j')\) in the seed alignment, we need to keep one of them. In the dilemma of choosing which entity pair to remove, we examined two factors. The first factor is to keep the entity pair in which the true entity, \(e_j\) or \(e_j'\) in this example, is involved in at least one relation edge (one of the other assumptions of these methods). The second factor is to keep the entity pairs in which the true entity has the highest node degree (number of incoming and outgoing edges), in the effort to reduce as little as possible the initial KGs. In addition, we processed the initial KGs, by removing relation edges \(\left( h,r,t\right)\), whose head (h) or tail (t) entities did not appear in the seed alignment. Then, we repeated the same process for the attribute edges, removing the edges whose head entity did not appear in the seed alignment, and also removing the attribute edges whose head entity was not involved in at least one relation edge.

Pre-aligned predicates assumption In addition to these assumptions, AttrE requires predicate alignment, as described in Sect. 2.4 and merging the two KGs into one KG. The predicate alignment step is performed by manual inspection of the data, as suggested by the authors of AttrE.Footnote 11 For example, given the attributes :name and :hasName, the second one is replaced by :name.

Sampling. For sampling real-world KGs, the authors of OpenEA proposed an iterative degree-based sampling (IDS) algorithm which simultaneously removes entities of the two KGs using seed alignment. The objective of this algorithm is to generate a dataset of a specific size from real-world KGs, such that the difference of the entity degree distribution, between the original KG and the sampled KG, does not exceed a certain value. In order to determine which entities to remove without greatly affecting the entity degree distribution, they delete entities with low PageRank scores, while assessing the divergence of the two degree distributions of the KGs using the Jensen-Shannon (JS) (Lin 1991) measure. The algorithm stops when the size of the datasets is the desired one (15K) and the divergence is \(\le 5\%\).

3.5 Implementation details