Abstract

The accuracy and flexibility of Deep Convolutional Neural Networks (DCNNs) have been highly validated over the past years. However, their intrinsic opaqueness is still affecting their reliability and limiting their application in critical production systems, where the black-box behavior is difficult to be accepted. This work proposes EBAnO, an innovative explanation framework able to analyze the decision-making process of DCNNs in image classification by providing prediction-local and class-based model-wise explanations through the unsupervised mining of knowledge contained in multiple convolutional layers. EBAnO provides detailed visual and numerical explanations thanks to two specific indexes that measure the features’ influence and their influence precision in the decision-making process. The framework has been experimentally evaluated, both quantitatively and qualitatively, by (i) analyzing its explanations with four state-of-the-art DCNN architectures, (ii) comparing its results with three state-of-the-art explanation strategies and (iii) assessing its effectiveness and easiness of understanding through human judgment, by means of an online survey. EBAnO has been released as open-source code and it is freely available online.


1 Introduction

Modern decision-making processes have been highly improved with the advent of Artificial Intelligence’s deep learning models (e.g., deep neural networks). Natural image understanding is one of the fields that benefited the most from these research efforts, with the introduction of even more accurate and complex Deep Convolutional Neural Networks (DCNN) (Simonyan and Zisserman 2015; Szegedy et al. 2016, 2017), establishing new standards in many machine-learning tasks. However, the decision-making process of DCNN models is still far from being understood by its users since it is a black-box, as widely highlighted by several researchers (Guidotti et al. 2018; Lepri et al. 2017; Ribeiro et al. 2016). Moreover, it is commonly known that deep black-box models require a huge amount of data to be trained, usually generated and evaluated by people, increasing the risk of inheriting various forms of human prejudice and bias (Lepri et al. 2017; Bolukbasi et al. 2016; Ribeiro et al. 2016). The critical social impacts that deep AI models are causing on our modern society stimulate the advancements in the eXplainable Artificial Intelligence (XAI) field of research (Confalonieri et al. 2021). Many researchers are devoting efforts to explainable and interpretable approaches, from the classification of structured and unstructured data (Datta et al. 2016; Ribeiro et al. 2016; Lundberg and Lee 2017), to recommendation systems (Lin et al. 2020), and knowledge discovery (Yeo et al. 2020).

In the literature of explanation frameworks, applicable to the image processing domain, both model-agnostic and domain-specific approaches are available. Model-agnostic solutions (Ribeiro et al. 2016; Lundberg and Lee 2017) are general-purpose and sometimes they propose visual and numerical explanations. However, their generality often limits the quality and the reliability of the provided explanations, especially when complex neural networks are used since they are not able to mine the knowledge contained in the model under analysis. Domain-specific solutions (Selvaraju et al. 2019; Petsiuk et al. 2018; Simonyan et al. 2014), on the other hand, typically exploit shallow model information (i.e., few network layers) and often provide only prediction-local visual explanations. Furthermore, very few works validate their solutions considering humans’ feedback (Ribeiro et al. 2016) to assess the quality and the ease of understanding of the provided explanations. Nevertheless, explanation frameworks are built for humans, so their validation is crucial.

To bridge such gaps, we propose EBAnO (Explaining BlAck-box mOdels), an innovative explanation framework able to analyze the decision-making process of convolutional models providing prediction-local and class-based model-wise explanations through unsupervised mining of the inner knowledge contained in multiple layers of the DCNN. EBAnO provides both visual and numerical explanations, enabling both expert and non-expert users to better understand the reasons behind the predictions by projecting them on the input image. The main contributions of this work can be summarized as follows.

- The design of a novel explanation process exploiting the inner knowledge of multiple convolutional layers simultaneously.
- The introduction of a new index (nPIRP) to efficiently quantify both the influence and the precision of the input features w.r.t. a given prediction.
- The unsupervised extraction of interpretable features easily understandable by humans and projected on the input image.
- The computation of informative class-based model-wise explanations.
- Qualitative human validation of the effectiveness and easiness of understanding of EBAnO’s explanations through an online survey.
- Quantitative validation of the proposed approach, producing almost 10,000 explanations for four state-of-the-art DCNNs on 250 input images.
- Qualitative, quantitative, and human-based comparison of EBAnO with state-of-the-art explanation tools, i.e., LIME (Ribeiro et al. 2016), Grad-CAM (Selvaraju et al. 2019), and Shapley values (Štrumbelj and Kononenko 2014).

EBAnO’s open-source code repository, an interactive library of explanations produced by EBAnO, and the online survey proposed to the users are available online (Footnote 1).

The rest of the paper is organized as follows. Section 2 presents the XAI state-of-the-art. Sections 3, 4, 5, 6, and 7 describe the prediction-local explanation process, while Sect. 8 describes the model-global explanation process implemented in EBAnO. Then, Sect. 10 reports the experiments, the qualitative and quantitative comparisons, and the human validation results. Section 11 discusses the current limitations of the framework. Finally, Sect. 12 provides conclusions and proposes future works.

2 Related work

More and more decisions, often critical and socially impacting, are taken by complex, intrinsically black-box, machine-learning models (Lepri et al. 2017). Thus, new explainable and interpretable approaches are starting to spread, highlighting the importance of the eXplainable Artificial Intelligence (XAI) in several domains. Many effective solutions have already been proposed, especially to explain in the domain of structured data (Proença and van Leeuwen 2020; Yeo et al. 2020), and recommendation systems (Díez et al. 2020; Zheng et al. 2019; Lonjarret et al. 2020).

This work focuses on the explanation of visual information processing performed by Deep Convolutional Neural Networks (DCNNs), a family of neural networks widely spread in computer vision (Simonyan and Zisserman 2015; Szegedy et al. 2016, 2017). In this section, we discuss the state-of-the-art explainability techniques, with a specific focus on those suitable for DCNN and computer vision.

2.1 Model-agnostic approaches

Some of the most promising efforts in the XAI field have already been collected in Guidotti et al. (2018); Adadi and Berrada (2018) where the authors try to explore and analyze all the possible requirements that an explanation process should be able to fulfill.

Several techniques exploited by data scientists when dealing with black-box models are model-agnostic explainability approaches, like LIME (Ribeiro et al. 2016) and SHAP (Lundberg and Lee 2017). In particular, LIME (Ribeiro et al. 2016) produces a prediction-local explanation of any predictive model, and it applies to both structured and unstructured data (e.g., image, text). To compute the local explanation, LIME performs a local approximation of the prediction, training a simpler and interpretable local model around small variations of the input data. SHAP (Lundberg and Lee 2017) proposes a unified approach to interpret local predictions produced by any machine learning model. SHAP is based on the idea of collaborative contribution coming from game theory, measured by exploiting the concept of Shapley values (Shapley 1953). Thus, in the prediction process, the model outcomes are considered as a collaborative contribution of the elements that compose the input data, and the local explanation is given by the measure of the contribution of each feature in the prediction task. A further contribution in model-agnostic prediction-local explanations for structured data (i.e., tabular data) is proposed in Rajapaksha et al. (2020). It exploits an association rule mining approach to extract not only the rules that support the current prediction but also the ones that contradict it, together with the associations that the model would require to change its outcome. However, while association rule approaches are suitable for structured problems, they have limited applicability to the explanation of machine learning tasks on unstructured data (e.g., image classification). Moreover, Kliegr et al. (2021) show, from a psychological perspective, how interpretable machine learning models, and in particular logical rules, can be affected by cognitive biases. Consequently, rule-based explanations could also be affected by them.

Model agnostic techniques are really powerful and simple to use in many domains, but often they provide very approximate explanations, limiting their reliability in critical contexts. They are not able to analyze the prediction process taking advantage of the information contained in the model under analysis and to give specific outcomes taking into account the domain of interest. For these reasons, EBAnO leverages domain knowledge (i.e., DCNN for image classification) to produce more effective and reliable explanations.

2.2 Domain-specific approaches

Image understanding requires more domain-specific approaches, enabling the production of even more accurate and reliable explanations. To study the behavior of a DCNN during the prediction process taking advantage of the knowledge contained in the model itself, two types of approaches are the most common: (i) studying the model inner behavior, layer by layer, visualizing their output and trying to infer the details of the process that brought the model to a specific decision or (ii) exploiting the information produced by the model during the prediction phase to understand which are the portions of the input that mostly affect the decision process.

Several interesting approaches based on a graphical analysis of the network’s neurons, inspecting the architecture of different convolutional layers through visualization techniques, have been proposed and summarized in Seifert et al. (2017). This family of techniques is undoubtedly effective when dealing with small architectures, but it becomes nearly inapplicable as the complexity of the network grows. Even more important, the analysis of the prediction process through these graphical techniques is strictly oriented to technical and domain expert users, limiting its applicability in many areas of interest.

On the contrary, many approaches proposed in literature aim to understand which portions of the input mostly affect the decision process (Binder et al. 2016; Simonyan et al. 2014; Fong and Vedaldi 2017; Zhang et al. 2018; Petsiuk et al. 2018; Selvaraju et al. 2019; Sundararajan et al. 2017; Bach et al. 2015; Smilkov et al. 2017; Shrikumar et al. 2017). Simonyan et al. (2014) explore strategies to produce (i) prediction-local explanations exploiting the inner information of the model, visualizing the portions of the input mostly characterizing the prediction through saliency maps, and (ii) class-local explanations exploiting the CNN model under analysis to generate class-related images that maximize the probability of the class-of-interest. The authors in Binder et al. (2016) propose an extension of LRP (Bach et al. 2015), a technique that decomposes the prediction of a DNN into feature relevance scores; the extension handles the non-linearity of local normalization layers in convolutional neural networks by exploiting Deep Taylor Decomposition (Montavon et al. 2015). SPRAY (Lapuschkin et al. 2019), instead, exploits spectral clustering on LRP (Bach et al. 2015) explanations to globally explain models over large-scale datasets, identifying typical and atypical patterns in the heatmaps. Zhang et al. (2018) study how to modify traditional CNNs to make them self-explainable, leveraging the idea that each convolutional layer should be activated only by a certain object part belonging to a specific category and highlighting the object parts with feature maps. Fong and Vedaldi (2017) propose a paradigm that learns the minimally salient part of an image, finding the smallest perturbation mask that brings down the classification score. RISE (Petsiuk et al. 2018) analyses the effect of perturbing randomized input samples to produce prediction-local explanations in a general fashion, without taking into account the internals of the model under analysis, and measuring the effects that the deletion and insertion of input pixels have on the outcomes of the prediction process.

Many approaches available in literature exploit the concept of input perturbation to analyze the model reactions, like Alvarez-Melis and Jaakkola (2017); Ventura et al. (2018); Lundberg and Lee (2017); Ribeiro et al. (2016); Selvaraju et al. (2019); Fong and Vedaldi (2017). This idea, however, requires that the input features to be perturbed contain meaningful information for the model; otherwise, the perturbation will not be able to highlight the importance of the perturbed portion of the image in the prediction process. Unlike most of the perturbation-based approaches, which create and evaluate a very large number of small perturbations, EBAnO implements its own feature extraction process that extracts more effective features directly from the latent information hidden in the layers of the model. Therefore, EBAnO overcomes one of the major limitations of most of the perturbation-based techniques, i.e., the quality of their explanations depends on the number of perturbations tested, which makes them inefficient. Indeed, EBAnO perturbs the right portions of the input directly as a result of the unsupervised analysis of the input layers.

In contrast, other approaches compute feature importance by back-propagating the predictions through each layer of the network down to the input pixels (Selvaraju et al. 2019; Sundararajan et al. 2017; Bach et al. 2015; Smilkov et al. 2017; Shrikumar et al. 2017; Kapishnikov et al. 2019). For instance, Grad-CAM (Selvaraju et al. 2019) proposes a gradient-based saliency approach to produce prediction-local explanations. It is based on the study of the gradient output of the last convolutional layer in a DCNN, generalizing the approach proposed in Zhou et al. (2016): it produces a saliency map that highlights the specific regions of the input that mostly characterize the prediction. Grad-CAM (Selvaraju et al. 2019) has been tested in a wide range of use cases, showing its generality, and it has been human-validated to assess the clarity of the produced explanations. However, this family of explanations, despite being more efficient in terms of complexity and runtime than perturbation-based methods, is often affected by noisy gradients (e.g., the importance value assigned to neighboring individual pixels is affected by high-frequency variations) or by issues with some layers that are frequent in CNNs, such as max pooling (Ancona et al. 2019).

Unlike most of the techniques discussed above, defined as feature-based explanations, concept-based explanations attempt to provide explanations in the form of high-level human-readable concepts (Ghorbani et al. 2019; Yeh et al. 2020; Kim et al. 2018). TCAV (Kim et al. 2018) is a perturbation-based global explanation method that generates explanations by measuring the importance of human-defined concepts. Specifically, it extracts the concept activation vector (CAV) by training a binary linear classifier using some positive and negative examples of the concept and extracting its weights. Then, it computes the directional derivatives of the model’s predictions with respect to the concept activation vector to quantify the per-concept feature importance. One weakness of the approach is that the choice of examples is subjective and strictly affects the explanations produced (and could be influenced by human biases). ACE (Ghorbani et al. 2019) is a similar approach that, instead of the human choice of concept samples, relies on unsupervised clustering analysis of segments at different resolutions extracted from the set of images, exploiting an ImageNet-trained CNN. However, it requires a further black-box model that might not reflect the features learned by the model to be explained, especially in domain-specific tasks (i.e., with images very different from ImageNet). ConceptSHAP (Yeh et al. 2020) adapts Shapley values (Shapley 1953) to assign importance to each concept, and defines a completeness score to measure how sufficient the concepts are in explaining the model. However, being only global techniques, these methodologies are not able to explain in an effective and simple way the specific reasons behind single predictions, but only to globally explain the model.

Finally, other emerging approaches attempt to produce expressive and verbal explanations. For instance, Rabold et al. (2020) extract relational information from the inner layers of a DCNN to build an expressive global explanation by combining concept analysis and inductive logic programming, supporting the idea that explanations that look directly into the inner latent space of the model to extract semantic concepts (features) are more reliable.

In conclusion, EBAnO is a domain-specific and perturbation-based methodology to locally and globally explain deep convolutional neural networks for image classification. It exploits the hidden internal knowledge of the model by mining the embedding representation (i.e., Hypercolumns) to produce more faithful, reliable and human-readable explanations without relying on or training any additional classifier. Moreover, it is able to visualize and measure the impact of both positively and negatively influential features, exploiting two quantitative indices to measure the influence and the precision. It is a big step forward from a preliminary idea described in Ventura et al. (2018), and partially applied, as a completely different solution, in the Natural Language Processing context (Ventura et al. 2022).

EBAnO deeply reshapes the authors’ previous work by (i) combining the concepts of influence relation and influence precision (Footnote 2), (ii) extending the applicability of the approach to a new domain and considering a larger variety of DCNNs, (iii) defining a new strategy to compute the most informative explanation, (iv) revisiting the production of the visual explanations, (v) introducing class-based model-global explanations, (vi) providing a deep qualitative, quantitative and human-subjective comparison with the state-of-the-art approaches, (vii) human-validating the produced prediction-local explanations, and (viii) experimentally evaluating the influence metrics on a larger multi-class problem (e.g., up to 1000 classes).

Despite the number of works that are exploring explainability in the context of image understanding, further improvements are needed to fill some gaps. EBAnO improves the state-of-the-art by introducing a new unsupervised model-aware strategy that is able to (i) extract the information contained in multiple convolutional layers, exploiting the Hypercolumns (Hariharan et al. 2015) representation, (ii) identify relevant and interpretable input features by studying the contribution of each of them through an iterative perturbation process, (iii) quantify the positive or negative combination of influence relation and influence relation precision for each interpretable feature, (iv) produce both visual and numerical explanations, and (v) provide both detailed prediction-local and class-based model-global explanations. Although some of EBAnO’s features are present in other techniques, it encapsulates them in a single framework to provide human-readable, reliable and effective explanations, suitable for both technical expert and non-expert users.

3 Explanation process overview

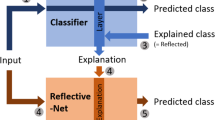

EBAnO provides a detailed prediction-local explanation of a black-box outcome, given an input image and a DCNN predictive model. The prediction-local explanation aims to explain the reasons for the specific predicted class label of the black-box model given a single instance of an input image, and its main steps are shown in Fig. 1.

Firstly, an image is given as input to the black-box DCNN model, producing the original predicted label and probabilities. Then, the hypercolumns of the input image are extracted from the black-box model and are processed, through an unsupervised analysis, to obtain a set of interpretable features. The interpretable features are composed of semantically related groups of pixels (with similar inner representation extracted from the hypercolumns) representing human-understandable concepts that similarly influenced the original labels predicted by the model (as detailed in Sect. 4). Then, a new version of the input image is produced for each extracted feature by introducing some noise over their pixels (one new image for each feature). The perturbation step is required to understand the importance of each interpretable feature with respect to the prediction probabilities of the original class labels by the DCNN model (details in Sect. 5.1). To this aim, the DCNN model is presented with the perturbed images, and the different classification probabilities are analyzed. Comparing the probabilities before and after the perturbation, we can meet one of the following three cases.

1. Suppose the probability of belonging to a class label decreases. In that case, the noise over pixels of the interpretable feature (that caused an absence of the associated concept) is the main responsible for this decrease. Therefore, the feature was positively impacting (or influential) for the prediction of the original class label for the DCNN model.
2. If, instead, the probability remains the same, then the feature was neutral in the prediction of the class label.
3. Finally, if the probability increases, then the feature was negatively impacting the prediction of the original class label.
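The three cases above can be illustrated with a minimal Python sketch. It only compares a class probability before and after perturbing one feature; it is not the nPIR or nPIRP computation, which is defined later in the paper. The function name `influence_case` and the tolerance `tol` (used to decide when two probabilities count as "the same") are assumptions introduced here for illustration.

```python
def influence_case(p_original: float, p_perturbed: float, tol: float = 1e-3) -> str:
    """Classify one interpretable feature by the effect of perturbing it.

    p_original:  class probability on the unperturbed image.
    p_perturbed: class probability after adding noise over the feature.
    tol:         hypothetical tolerance below which a change is treated as none.
    """
    delta = p_perturbed - p_original
    if delta < -tol:
        # Probability dropped once the feature was hidden: it supported the class.
        return "positive"
    if delta > tol:
        # Probability rose once the feature was hidden: it worked against the class.
        return "negative"
    # No meaningful change: the feature was neutral for this class.
    return "neutral"


# Made-up probabilities, purely for illustration.
for before, after in [(0.42, 0.10), (0.42, 0.42), (0.28, 0.55)]:
    print(before, after, influence_case(before, after))
```

A strict equality check would almost never fire on floating-point probabilities, which is why the sketch uses a small tolerance for the neutral case.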

The amplitude and precision of the influence of each feature are measured with two quantitative indices ranging from -1 to +1, namely nPIR and nPIRP (as discussed in Sects. 5.2 and 5.3). Finally, EBAnO produces the local explanation, which consists of a numerical explanation (with the numerical indices for all the extracted interpretable features) and a visual explanation that shows the interpretable features over the original pixels of the image, with the corresponding influence highlighted by a color heatmap (as detailed in Sect. 7).

Explaining the classifier’s prediction can be useful for understanding the reasons behind a possibly wrong prediction and for checking whether the model focused on the correct portion of the image, even when the predicted class label is correct. Table 1 shows an example of a wrong prediction by a pre-trained VGG16 model given the input image in Fig. 2. The black-box model predicted Bottlecap as the most probable class label, followed by Pizza, with probabilities 0.42 and 0.28, respectively, even though the ground-truth label was Pizza. Still, the black-box nature of the model hides the reasons. In this case, an end-user can be interested in understanding the features that influenced the model’s predictions for both class labels. We will use this as a running example to explain the whole methodology in the following sections.

In Sect. 4, we provide a detailed discussion on how EBAnO is able to extract the interpretable features from the unsupervised analysis of the hypercolumns. In Sect. 5, we discuss the measurement of the feature importance through the process of perturbation and, in particular, we formally define the two quantitative indices that measure the influence and precision. In Sect. 6, we show how EBAnO automatically discovers the best possible explanation. Finally, in Sect. 7, we discuss how the final local explanation is produced.

4 Interpretable feature extraction

The objective of EBAnO is to identify the input pixels that mostly contribute to the DCNN prediction. However, the single-pixel contribution does not provide interpretable results and is computationally demanding. Thus, we identify the sets of correlated pixels mostly influencing the outcome of the black-box model, yielding more understandable results, since it is simpler for a human to evaluate macro portions of an image than single pixels. Indeed, the higher the level of the features (i.e., pixels belonging to full objects), the more interpretable they are for humans. Moreover, the higher the fidelity of the explanation with respect to what the model has effectively learned, the higher the reliability of the explanation itself. The proposed feature extraction strategy aims to identify meaningful and interpretable portions of the input image by exploiting the unsupervised clustering analysis of hypercolumns (Hariharan et al. 2015), which are a vectorial representation of the model across all its inner levels. The segments extracted from the input image with this strategy are called interpretable features.

Existing state-of-the-art techniques are not suitable to obtain interpretable features for our purposes. On the one hand, deep models for image segmentation (Minaee et al. 2020) are able to extract full segments of pixels from input images corresponding to entire concepts (i.e., people, animals, background, etc.) that, in theory, can be used as interpretable features for EBAnO. However, even if these high-level segments are straightforward for humans to understand, it cannot be assumed that the whole segment, rather than only a part of it, drove the class label predicted by the deep learning model, which increases the risk of losing fidelity. For example, if a DCNN model predicts the Dog class label by focusing on the dog’s eyes, a segmentation model that extracts all the dog’s pixels would not capture this aspect. Moreover, another limitation of segmentation models for our purposes is that a further model would be required to perform the segmentation, with a fixed number of classes depending on the classification task. Consequently, the explanation process would not be portable. For example, a model that extracts segments in images for self-driving cars cannot be used to extract interpretable features for the explanation of a deep model used in the medical field, and vice versa.

On the other hand, other existing techniques like adversarial attacks (Akhtar and Mian 2018) are able to create new perturbed variations of the input image that are not perceptible to the human eyes but that cause a wrong prediction of the model. These perturbations are composed of adversarial pixels that, added to the input image, create a new perturbed variation that looks identical to the human eyes but that will be misclassified by the model. However, these techniques can be used to explain some global weaknesses of the model but not to locally explain a single prediction for a given input image (for how the pixels are changed). Furthermore, the pixels changed by this technique are not easily interpretable by humans and, therefore, are not suitable for a good explanation.

For these reasons, EBAnO implements its own interpretable feature extraction strategy. In this way, it is able to exploit, in an unsupervised way, the inner knowledge hidden in the learned weights of the model in order to extract correlated portions of the image that influenced the final output of the model similarly. Moreover, these features represent macro-concepts that are easily interpretable by humans and reflect what the model has effectively learned. Finally, the feature extraction strategy of EBAnO does not require training any additional model, since it relies directly on the model to be explained.

4.1 Hypercolumns extraction

Hypercolumns have been defined by Hariharan et al. (2015) as a vectorial representation of every input pixel. The main idea is that, if bias or knowledge have been learned by the black-box model, they can be extracted by mining the latent information in the form of hypercolumns, thanks to their ability to collect the outputs related to a specific location across all the layers of the DCNN. The first layers of the DCNN are able to generalize over the shape of objects, identifying corners and edges, whereas the final layers are more sensitive to the semantic meaning of an image (Bengio et al. 2013; Mahendran and Vedaldi 2016; Hariharan et al. 2015). The hypercolumns of a specific input can be extracted by feeding the target image into the black-box model: each convolutional layer of the network outputs a tensor that is the result of the application of the weights learned by the model. These tensors contain all the latent information learned by the model during the training phase. In the case of a very deep network, using all the convolutional layers to extract the hypercolumns can produce a very deep tensor, which is difficult to manage. However, EBAnO focuses on the most characterizing information of the model, which is usually included in the deepest layers of the network, i.e., the deeper the layer, the more specialized it is, and the information that can be extracted from it is very task-specific. For this reason, the number of layers that should be considered is usually much lower than the total number of available layers in the network. The number of layers exploited to extract hypercolumns is a parameter that remains empirically configured, being related to the target network’s architecture.

Figure 3 shows an example of hypercolumns extraction from a DCNN given an input image. In this example, the outputs of the last 5 convolutional layers of the DCNN model are extracted. Then, an upscaling step based on bilinear interpolation brings each output back to the original size of the input image. After the upscale, the hypercolumn representation of the input image is a tensor with the same width and height as the original image and a number of channels equal to the sum of the channels in the extracted layers. Notice that every pixel is represented by a vector of the same dimension. In this example, after the upscaling, the final representation is a tensor of shape (224, 224, 2560), and therefore, each pixel is represented by a vector of dimension 2560.
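The upscale-and-concatenate step can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not EBAnO's implementation: the helper names `bilinear_resize` and `hypercolumns`, the align-corners sampling convention, and the toy layer shapes (two 28x28 and three 14x14 maps with 8 channels each, filled with random values in place of real activations) are all hypothetical.

```python
import numpy as np


def bilinear_resize(fmap: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Resize an (h, w, c) feature map to (out_h, out_w, c) by bilinear interpolation."""
    h, w, _ = fmap.shape
    # Sampling positions in source coordinates (corners map onto corners).
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    # Fractional offsets, shaped so they broadcast over rows, columns and channels.
    wy = (ys - y0)[:, None, None]
    wx = (xs - x0)[None, :, None]
    top = fmap[y0][:, x0] * (1 - wx) + fmap[y0][:, x1] * wx
    bottom = fmap[y1][:, x0] * (1 - wx) + fmap[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def hypercolumns(feature_maps, out_h: int, out_w: int) -> np.ndarray:
    """Upscale every layer output to the image size and stack them along channels."""
    upscaled = [bilinear_resize(f, out_h, out_w) for f in feature_maps]
    return np.concatenate(upscaled, axis=-1)


# Toy stand-ins for the outputs of five convolutional layers.
rng = np.random.default_rng(0)
layer_outputs = [rng.random((28, 28, 8)) for _ in range(2)]
layer_outputs += [rng.random((14, 14, 8)) for _ in range(3)]

hc = hypercolumns(layer_outputs, 224, 224)
print(hc.shape)  # (224, 224, 40): one 40-dimensional vector per pixel
```

With five layers of 512 channels each, the same concatenation would give the 2560 channels mentioned above; the toy example uses 8 channels per layer only to keep memory use small.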

4.2 Feature extraction

Through an unsupervised clustering analysis of hypercolumns, EBAnO can identify correlated beams of vectors and subsequently detect the input areas to which they correspond. EBAnO projects the grouped beams of hypercolumns on the input image by labeling each pixel with its cluster. A peculiar feature of EBAnO is that the clustering of the hypercolumns is not led by the pixels’ locality in the image but only by the weights learned by the DCNN model, hence driving the explanation with the inner information of the model itself. Therefore, differently from other algorithms, the image segmentation strategy implemented in EBAnO does not consider input colors or pixel positions, but it is strictly related to what the model has learned: the segments highlighted with this strategy reflect the inner knowledge of the model. Thus we expect to provide more relevant local explanations. This is because pixels with similar representation possibly represent similar and related semantic aspects that probably affected the original prediction of the model in a similar way. Consequently, the features are obtained by performing a clustering analysis in a high-dimensional embedding space defined by the hypercolumns, where each pixel is represented with a high-dimensional dense embedding vector.

To this aim, EBAnO exploits the Faiss (Johnson et al. 2021)Footnote 3 implementation of K-Means (Lloyd 1982); Faiss is an efficient library for similarity search and clustering of dense vectors that also supports GPUs. It was chosen because, in our experiments, it proved to be the best in terms of effectiveness and efficiency in extracting relevant features. An experimental evaluation of different clustering algorithms’ performance in the feature extraction process of EBAnO is provided in “Appendix B”. The K-Means algorithm requires the specification of the number of clusters k, which determines the number of resulting image portions (i.e., our interpretable features) extracted from the input image. However, it is impossible to know the best number of features to extract from the input in advance. For this reason, EBAnO iteratively produces the explanations for different possible divisions (i.e., different values of k) and chooses the best one. Nevertheless, the process is the same for each value of k. Thus, we first discuss the process of perturbation and influence measurement of each feature in Sect. 5. Then, in Sect. 6, we show how the best explanation is selected among the different values of k evaluated.
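As a rough illustration of how clustering the hypercolumns yields a per-pixel feature map, the sketch below uses a plain NumPy version of Lloyd's K-Means in place of Faiss. The helper names (`kmeans_labels`, `feature_map`), the farthest-point initialization, and the toy data are all assumptions made for this example.

```python
import numpy as np

def kmeans_labels(vectors, k, n_iter=20, seed=0):
    """Plain Lloyd's K-Means; returns one cluster label per input vector."""
    rng = np.random.default_rng(seed)
    # Farthest-point initialization (a choice made for this sketch only).
    centroids = [vectors[rng.integers(len(vectors))]]
    for _ in range(k - 1):
        d = np.min([((vectors - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(vectors[d.argmax()])
    centroids = np.array(centroids, dtype=float)
    for _ in range(n_iter):
        # Squared Euclidean distance of every vector to every centroid.
        dists = ((vectors[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = vectors[labels == j]
            if len(members) > 0:  # keep the old centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return labels

def feature_map(hypercolumns, k):
    """Label each pixel with the cluster of its hypercolumn; pixel position is never used."""
    h, w, c = hypercolumns.shape
    return kmeans_labels(hypercolumns.reshape(h * w, c), k).reshape(h, w)

# Toy hypercolumns: the left and right halves of the image have distinct embeddings.
rng = np.random.default_rng(1)
toy = rng.normal(0.0, 0.1, size=(8, 8, 4))
toy[:, 4:, :] += 10.0
segments = feature_map(toy, k=2)
print(segments)
```

Note that the pixels are flattened before clustering, so two pixels end up in the same interpretable feature only because their embedding vectors are similar, not because they are neighbors.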

Figure 4 shows an example of interpretable features extracted from the running example introduced in Fig. 2 with a number of clusters \(k=3\). The features extracted are driven by the inner knowledge hidden in the model (hypercolumns) and reflect what the model has effectively learned, but also are easy to interpret and understand by humans.

5 Measuring the features’ influence

After extracting the interpretable features, a perturbation phase is required to measure the importance and the impact that each feature had in the originally predicted output of the model, given the input image. Firstly, we explain the perturbation process implemented in EBAnO in Sect. 5.1. Then, we formally define the two quantitative indices, introduced in EBAnO, to measure the influence (nPIR) and the precision (nPIRP) in Sects. 5.2 and 5.3.

5.1 Perturbation

Ideally, each interpretable feature could represent a relevant concept of the input image. Our challenge is to identify the most relevant portions of the input for both the model and the user. The perturbation of the model’s input is a well-known state-of-the-art technique (Alvarez-Melis and Jaakkola 2017; Ventura et al. 2018; Lundberg and Lee 2017; Ribeiro et al. 2016) to study the impact of input data on the prediction outcome. Our intuition is to drive the perturbation on the specific pixels of the interpretable features and measure the prediction difference on those concepts.

EBAnO implements an iterative perturbation process based on Gaussian blur.Footnote 4 Specifically, for a given feature, the relative pixels are occluded with a sequence of extended box filters, which approximates a Gaussian kernel (Gwosdek et al. 2012). It requires the Gaussian radius parameter specification that we empirically set to 10. A new perturbed image is produced for each blurred interpretable feature, and we expect the model to miss the recognition of the corresponding concept; three results are possible: (i) no change in prediction (the concepts represented by the feature were not relevant for the predicted class); (ii) stronger prediction (the probability of belonging to the predicted class increases after the perturbation, hence, removing the feature, the predicted class is better modeled); (iii) weaker prediction (the probability of belonging to the predicted class decreases after the perturbation, hence the feature was important to model the class).
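A feature-restricted blur can be sketched as follows. This is a simplified stand-in, not the routine used by EBAnO: it applies ordinary (not extended) box filters a few times, the number of passes is an assumption, and `radius` here is the half-width of the box window, which need not correspond exactly to the Gaussian radius parameter mentioned above.

```python
import numpy as np

def box_blur(image, radius):
    """Mean filter with a (2*radius+1)-wide window along both image axes (edge padding)."""
    out = image.astype(float)
    size = 2 * radius + 1
    for axis in (0, 1):
        pad = [(0, 0)] * out.ndim
        pad[axis] = (radius, radius)
        padded = np.pad(out, pad, mode="edge")
        # Sliding-window sums computed from cumulative sums.
        csum = np.cumsum(padded, axis=axis)
        zero = np.zeros_like(np.take(csum, [0], axis=axis))
        csum = np.concatenate([zero, csum], axis=axis)
        n = out.shape[axis]
        upper = np.take(csum, np.arange(size, size + n), axis=axis)
        lower = np.take(csum, np.arange(0, n), axis=axis)
        out = (upper - lower) / size
    return out

def perturb_feature(image, mask, radius=10, passes=3):
    """Blur only the pixels selected by the boolean mask; all others stay untouched."""
    blurred = image.astype(float)
    for _ in range(passes):  # repeated box blurs approach a Gaussian blur
        blurred = box_blur(blurred, radius)
    result = image.astype(float).copy()
    result[mask] = blurred[mask]
    return result

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))          # random stand-in for an input image
mask = np.zeros((32, 32), dtype=bool)
mask[:16, :16] = True                    # pixels of one hypothetical feature
perturbed = perturb_feature(image, mask, radius=3)
```

One perturbed image is produced per interpretable feature, and each is then fed to the model to read the new class probabilities.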

Even if several possible perturbation solutions exist, EBAnO exploits Gaussian blur because it is less likely to create artifacts that would cheat the model. Figure 5 shows an example of a perturbed image, created for one of the features extracted from Fig. 4 with different perturbation techniques. The perturbation introduced by the Gaussian blur (Fig. 5a) is less invasive than setting the pixel values to 0 (black occlusion in Fig. 5b), to 255 (white occlusion in Fig. 5c), or to the mean value over the entire image (mean occlusion in Fig. 5d).

An experimental evaluation of the Gaussian blur radius parameter’s impact is provided in “Appendix A”.

Fig. 5 Examples of perturbed images with different occlusion techniques. Gaussian blur (a) is the one adopted by EBAnO, and it blurs the feature pixels with a sequence of extended box filters, which approximates a Gaussian kernel. Other possible occlusion techniques consist of replacing the feature pixels with black (b), white (c), or the mean value of pixels (d).

5.2 Normalized perturbation influence relation—nPIR

Once the perturbation has been applied to each extracted feature, we want to measure the impact that the pixel occlusion has caused. Specifically, the first aspects that we want to measure are the sign and the amplitude of the impact, together with the relative influence of an interpretable feature on the prediction of the class label. We capture them with the normalized Perturbation Influence Relation (nPIR) index.

Formally, let us consider a black-box model able to distinguish between a set of classes \(c \in C\) and let \(ci \in C\) be the class-of-interest for which an explanation has to be computed. Given an input image I, EBAnO extracts the set of interpretable features \(f \in F\) and then for each f it performs the perturbation. Let us consider \(p_{o,ci}\) as the probability of the original input image I (the unperturbed image) to be labeled with the class-of-interest ci by the model, and \(p_{f,ci}\) as the probability of the same image to be labeled with the same class-of-interest ci when the feature f is perturbed. If \(p_{f,ci}\) is lower than \(p_{o,ci}\), then f contains a positively influential concept for the model, and vice versa. To measure this effect, we exploit the nPIR index, which includes different components, in particular (i) the amplitude of the impact and (ii) its relative influence on the perturbation process.

The amplitude of the perturbation influence, \(\varDelta I\), for feature f can be measured by: \(\varDelta I_f=p_{o,ci}-p_{f,ci}\). It ranges from \(-1\) to 1 since the domain for probability values falls in [0, 1]. If \(\varDelta I_f>0\), the feature f has a positive influence on ci, since its perturbation causes a decrease of the probability to belong to ci, and vice versa.

The relative influence of the perturbation as a simple ratio between the probabilities was proposed in Ventura et al. (2018). However, it is asymmetric, as \(\frac{p_{o,ci}}{p_{f,ci}}\) ranges from 0 to 1 in case of negative influence, but from 1 to \(\infty \) in the other case, leading to hard comparisons between positive and negative effects. We instead introduce the Symmetric Relative Influence index to harmonize the measurement of each feature f relative influence, regardless of its positiveness or negativeness: \(SRI_f=\frac{p_{o,ci}}{p_{f,ci}}+\frac{p_{f,ci}}{p_{o,ci}}\).
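A small numerical check makes the asymmetry of the simple ratio, and the symmetry of SRI, concrete. The function names and the two probability pairs below are illustrative choices, not values from the experiments.

```python
def delta_influence(p_o, p_f):
    """Amplitude of the influence: original probability minus perturbed probability."""
    return p_o - p_f

def relative_ratio(p_o, p_f):
    """Simple ratio between the two probabilities (asymmetric)."""
    return p_o / p_f

def symmetric_relative_influence(p_o, p_f):
    """SRI: sum of the ratio and its reciprocal, identical when the two roles are swapped."""
    return p_o / p_f + p_f / p_o

# Same amplitude of change, opposite direction (made-up probabilities).
for p_o, p_f in [(0.8, 0.2), (0.2, 0.8)]:
    print(
        delta_influence(p_o, p_f),
        relative_ratio(p_o, p_f),
        symmetric_relative_influence(p_o, p_f),
    )
```

The ratio differs widely between the two cases (about 4 versus 0.25), whereas SRI takes the same value in both, leaving the sign to be carried by \(\varDelta I_f\) alone.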

By combining the previously described contributions, the Perturbation Influence Relation can be defined as:

\[ {PIR}_f = \varDelta I_f \times SRI_f = p_{f,ci} \times \beta - p_{o,ci} \times \alpha , \qquad \alpha = 1 - \frac{p_{o,ci}}{p_{f,ci}}, \quad \beta = 1 - \frac{p_{f,ci}}{p_{o,ci}} \tag{1} \]

The coefficient \(\alpha \) represents the contribution of the original input w.r.t. the perturbed one and, similarly, \(\beta \) represents the contribution of the perturbation of feature f w.r.t. the original input. The PIR, which ranges in the \((-\infty ,+\infty )\) interval, is finally normalized in the \([-1;1]\) range exploiting the common Softsign function, leading to the definition of the normalized Perturbation Influence Relation (\({nPIR}_f\)):

\[ {nPIR}_f(ci) = softsign({PIR}_f) \tag{2} \]

Where:

\[ softsign(x) = \frac{x}{1 + |x|} \tag{3} \]

Combining the previous equations, the formal definition of nPIR for a given class of interest ci is provided in Eq. 4, which avoids the Eq. 1 problem of being undefined for \(p_{f} = 0\) and \(p_{o} = 0\).

The normalized Perturbation Influence Relation captures both the amplitude and the relative impact. Experimental results, as reported in Sect. 10.8, show that human-appreciated features with a strong positive influence are characterized by nPIR greater than 0.75, whereas the negative-influence threshold is around -0.2.
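The following sketch shows one way to compute an nPIR-like score from the two probabilities. It rests on two assumptions that are not confirmed by the text available here: the amplitude and the symmetric relative influence are combined as a product, and probabilities equal to 0 are clipped to a small `eps` so that the ratios stay defined (the paper handles this case through its Eq. 4).

```python
def softsign(x):
    """Softsign squashes any real number into the open interval (-1, 1)."""
    return x / (1.0 + abs(x))

def npir(p_o, p_f, eps=1e-12):
    """nPIR sketch: softsign of (amplitude x symmetric relative influence)."""
    # Assumption: clip zero probabilities so that both ratios remain defined.
    p_o = max(p_o, eps)
    p_f = max(p_f, eps)
    delta = p_o - p_f                # amplitude of the influence
    sri = p_o / p_f + p_f / p_o      # symmetric relative influence
    return softsign(delta * sri)

print(npir(0.9, 0.1))  # probability drops after the perturbation: positive influence
print(npir(0.5, 0.5))  # probability unchanged: neutral
print(npir(0.1, 0.9))  # probability rises after the perturbation: negative influence
```

Under these assumptions the score is antisymmetric: swapping the original and perturbed probabilities flips its sign without changing its magnitude.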

5.3 Normalized Perturbation Influence Relation Precision–nPIRP

The second aspect that we want to measure is if an interpretable feature influenced the prediction of only one or several class labels. The wider the range of classes impacted by an interpretable feature, the less that feature can be considered focused on the class of interest: the model has not learned the concept/pattern associated with that feature as precisely relevant only to the class of interest. This behavior can bring to light possibly misleading knowledge, such as training bias or bad network design.

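To this aim, we introduce the normalized Perturbation Influence Relation Precision (nPIRP) index to evaluate the precision of the absolute impact of f over ci (component \(\xi _{ci}\)) w.r.t. the sum of the positive impacts over classes \(C \setminus ci\) (component \(\xi _{C \setminus ci}\)), which are defined as:

\[ \xi _{ci} = p_{o,ci} \times |{nPIR}_f(ci)| \tag{5} \]

\[ \xi _{C \setminus ci} = \sum _{c \in C \setminus ci} p_{o,c} \times \max (0, {nPIR}_f(c)) \tag{6} \]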

The two measurements of influence are weighted by the probability of the original image to belong to each class so that the influences of the most probable classes are taken into greater consideration w.r.t. the influences obtained on less probable outcomes.
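The two components can be sketched as below, reading "impact" as the nPIR of the feature on each class and weighting it by the original class probability. This reading, the function name `precision_components`, and the toy numbers are assumptions; the step that combines the two components into the final index is not shown.

```python
def precision_components(p_orig, npir_by_class, ci):
    """Return (xi_ci, xi_others) for one feature.

    p_orig        : dict class -> probability on the unperturbed image
    npir_by_class : dict class -> nPIR of the feature for that class
    ci            : the class-of-interest
    """
    # Absolute impact on the class-of-interest, weighted by its original probability.
    xi_ci = p_orig[ci] * abs(npir_by_class[ci])
    # Sum of the positive impacts on every other class, each weighted the same way.
    xi_others = sum(
        p_orig[c] * max(0.0, npir_by_class[c])
        for c in p_orig
        if c != ci
    )
    return xi_ci, xi_others

# Made-up values for three generic classes.
p_orig = {"a": 0.5, "b": 0.3, "c": 0.2}
npir_by_class = {"a": -0.4, "b": 0.5, "c": -0.9}
xi_ci, xi_others = precision_components(p_orig, npir_by_class, ci="a")
print(xi_ci, xi_others)
```

In this toy case, class "c" contributes nothing to the second component because its influence is negative, and only positive impacts on the other classes are accumulated.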

Then, the Perturbation Influence Relation Precision of a feature f is defined as:

By following similar reasoning to that of nPIR, we can normalize Eq. 7 exploiting the softsign function to obtain the Normalized Perturbation Influence Relation Precision index:

\[ {nPIRP}_f(ci) = softsign({PIRP}_f) \tag{8} \]

The nPIRP is computed for each feature and ranges in \([-1;1]\). When f is very precise in describing ci, the nPIRP has values close to 1. When f impacts the other classes \(C \setminus ci\) more than the class of interest ci, the index value is close to \(-1\). When nPIRP is close to 0, f impacts the class-of-interest and the other classes similarly.

6 Most informative local explanation

As introduced in Sect. 4, EBAnO implements an iterative process where different k divisions are analyzed and evaluated to find the best cluster partitioning. We define the local explanation produced by the best k partitioning as the most informative local explanation.

Figure 6 shows in detail all the steps performed by EBAnO to find the most informative local explanation. Firstly, as discussed in Sect. 4.1, given an input image, the hypercolumns are extracted from the DCNN model. Then, the unsupervised analysis of the hypercolumns is performed to extract a set of interpretable features with the K-Means algorithm. However, it is impossible to know the best number of features to extract from the input in advance. For this reason, EBAnO produces the explanations for different possible divisions in the range \(K = [2, k_{max}]\), where \(k_{max}\) is a user-defined parameter of the local explanation. An important trade-off is to set \(k_{max}\) so that the number of extracted features f is small enough to be manually inspected by end-users but large enough to avoid missing details and diversity. More precisely, for each value of \(k \in K\), EBAnO extracts \(f = k\) interpretable features by clustering the hypercolumns extracted before. Then, for each feature, it performs the perturbation (as discussed in Sect. 5.1) and extracts from the model the new probabilities (of each new perturbed image). Finally, it produces, for each feature of each \(k \in K\), the influence and the precision indices, respectively nPIR and nPIRP (as discussed in Sects. 5.2 and 5.3). Even if the end-user can query all possible k divisions of the images and their relative explanations, EBAnO is able to automatically suggest the most informative local explanation (i.e., the best k division among all possible k analyzed). The most informative local explanation is defined as the one maximizing the contrast of the perturbation-influence-relation values (nPIR index defined in Sect. 5.2) among all its features.

Formally, for each \(k \in [2, k_{max}]\), an explanation \(e_k\) is provided, composed of a set of interpretable features \(F_k\) (with k features). Then, for each feature \(f \in F_k\), the corresponding influence and precision indices (nPIR and nPIRP) are computed. Finally, each explanation \(e_k\) produced by each k partitioning is assigned a score by analyzing the different influence (nPIR) scores obtained by its features, as follows:

\[ K_{score}(e_k) = \max _{f \in F_k}({nPIR}_f) - \min _{f \in F_k}({nPIR}_f) \tag{9} \]

In other words, the score assigned to each possible k division is equal to the difference between the nPIR of its most influential feature and that of its least influential feature. Once the score has been computed for each possible k, let E be the set of all \(e_k\) explanations produced with the different k values evaluated. The most informative explanation \({\hat{e}}\) is the one maximizing the \(K_{score}\), as follows:

\[ {\hat{e}} = \mathop {\mathrm {arg\,max}}_{e_k \in E} K_{score}(e_k) \tag{10} \]

The most informative explanation \({\hat{e}}\) is the one proposed to the end-user as the best explanation (even if it is possible to query all the other k divisions produced). The score function proposed in EBAnO, defined by Eqs. 9 and 10, could be changed by the final user according to specific needs.
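The selection rule described in words above (score each k by the gap between its most and least influential feature, then keep the k with the largest score) can be sketched as follows. The function names and the nPIR values are made up for illustration.

```python
def k_score(npir_values):
    """Contrast of one explanation: highest nPIR minus lowest nPIR among its features."""
    return max(npir_values) - min(npir_values)

def most_informative(explanations):
    """Return the k whose explanation has the largest contrast.

    explanations: dict mapping k -> list of nPIR values, one per feature.
    On ties, the first k encountered in the dict is returned.
    """
    return max(explanations, key=lambda k: k_score(explanations[k]))

# Made-up nPIR values for three candidate partitionings.
explanations = {
    2: [0.10, 0.30],
    3: [0.90, -0.50, 0.00],
    4: [0.20, 0.20, 0.20, 0.20],
}
best_k = most_informative(explanations)
print(best_k, k_score(explanations[best_k]))
```

Because the score function is isolated in `k_score`, swapping in a different criterion, as the text allows, only requires replacing that one function.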

7 Local explanation

Comparing the probabilities before and after each perturbation, EBAnO is able to study the influence of each interpretable feature on a specific predicted class, producing a local explanation with a numerical contribution and a visual part. We firstly discuss the numerical and visual parts of the local explanation produced by EBAnO (Sects. 7.1 and 7.2). Then, we discuss in detail the local explanations produced for the running example (Sect. 7.3).

7.1 Numerical explanation

As discussed in Sect. 5, we introduce two indices (nPIR and nPIRP), allowing the user to objectively inspect the details of the prediction process. Differently from other works (Lundberg and Lee 2017; Ribeiro et al. 2016), our indices (i) efficiently measure the influence relation that exists between the input feature and the model outcomes in terms of neutral, positive or negative impact, and (ii) they also consider the influence precision of the features for the class-of-interest in a multi-class problem. Precise features are very focused, affecting only a specific predicted class. Low-precision features, instead, can affect many classes at the same time.

We recall that the influence sign and amplitude of a feature over the class-of-interest, quantified by the nPIR index, can be considered:

- positive if the predicted probability decreases after the perturbation, meaning that the feature was positively relevant for the model;
- neutral if the predicted probability remains the same after the perturbation, meaning that the feature was irrelevant for the model;
- negative if the predicted probability increases, meaning that the feature was negatively relevant for the model.

Also, the distribution of the probabilities for the other classes can change according to the perturbed feature. The precision of influence of a feature, quantified by the nPIRP index, can be considered:

- precise if the class-of-interest is the only one affected by the perturbation process of the feature;
- not precise if the perturbation of the feature equally affects the class-of-interest and at least one other class;
- negatively precise if the perturbation of the feature affects any of the other classes more than the class-of-interest.

The two indices computed for each feature over a class of interest compose the numerical explanation part of the local explanation.

7.2 Visual explanation

Along with the detailed quantitative explanation, our explanation framework provides an easy-to-understand prediction-local visual explanation, where each interpretable feature is colored with a red-green gradient according to the value of nPIR. The more intense a green area is, the more positively influential the corresponding feature is for the class-of-interest. On the contrary, the more intense a red area is, the more negatively the feature impacts the class-of-interest. White areas, which appear almost transparent, instead show input portions that have a neutral impact on the prediction process (i.e., the model is completely independent of the presence of these features). Differently from other works (Selvaraju et al. 2019; Simonyan et al. 2014; Fong and Vedaldi 2017; Zhang et al. 2018; Petsiuk et al. 2018), the proposed visualization is not based on saliency maps. A saliency map is a simple and clear visualization strategy that smoothly shows the relevance of contiguous areas of pixels. However, it does not allow differentiating the influence of multiple input areas at the same time. Instead, EBAnO can highlight the impacts of several input regions simultaneously, with their positive and negative contributions, including more information in a single visual representation (as shown in the example in Sect. 7.3).
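A possible mapping from nPIR to an overlay color is sketched below. The exact colormap used by EBAnO is not specified in this section, so pure green and pure red with an opacity proportional to |nPIR| are assumptions, as is the function name `npir_to_rgba`.

```python
def npir_to_rgba(npir):
    """Map an nPIR value in [-1, 1] to an (R, G, B, A) overlay color."""
    npir = max(-1.0, min(1.0, npir))      # guard against out-of-range input
    alpha = int(round(255 * abs(npir)))   # neutral features become transparent
    if npir >= 0:
        return (0, 255, 0, alpha)         # green: positive influence
    return (255, 0, 0, alpha)             # red: negative influence

# One color per feature of a hypothetical explanation.
for value in (0.95, 0.4, 0.0, -0.6):
    print(value, npir_to_rgba(value))
```

Painting every pixel of a feature with the color of that feature gives a single image in which positive, neutral, and negative regions are visible at the same time.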

7.3 Example of local explanation

Fig. 7 Example of local explanations for two classes of interest and the image shown in Fig. 2. Each explanation is organized with the visual explanation (left), the map of features (center), and the quantitative explanation (right). (a) is the explanation for the Bottlecap class label, (b) for the Pizza class label

As discussed before, for the input image of the running example in Fig. 2, a pre-trained VGG16 DCNN model produces the output probabilities shown in Table 1. The model predicts the wrong Bottlecap class as the most probable, followed by Pizza, with probabilities of 0.42 and 0.28, respectively (even if the ground-truth label was Pizza). In this case, an end-user who wants to analyze the reasons for the wrong behavior of the DCNN model in predicting the class label of the input image can inspect the local explanation produced by EBAnO. For instance, in this case, it can be useful to produce the local explanation for both the Bottlecap and Pizza classes of interest (as shown in Fig. 7). For both explanations, the features map (center), the visual explanation (left), and the numerical explanation (right) are provided.

For the explanation of the Bottlecap class label (the most probable class predicted by the model), shown in Fig. 7a, EBAnO finds the division with 5 interpretable features as the most informative local explanation. From this local explanation, it emerges that the parts of the image most responsible for the wrong prediction of the model are the pixels corresponding to the pizza borders (feature 4), with an nPIR (influence) index close to 1 and a positive nPIRP (precision). Moreover, the features corresponding to the table’s pixels positively impacted the prediction of the Bottlecap class label, even if with a lower amplitude. Specifically, the table’s pixels are divided into two interpretable features based on the amplitude of their influence on the prediction. Therefore, the corresponding pixels are colored in light green in the visual explanation (with higher intensity on the pixels of feature 3 because it obtained a higher influence score). Finally, the feature composed of the upper borders of the pizza (feature 1) has a very small positive impact on the prediction, obtaining an nPIR score close to 0.1.

Instead, for the explanation of the Pizza class label, EBAnO finds the partitioning with 3 interpretable features as the most informative local explanation (Fig. 7b). The interpretable feature composed of the pizza topping’s pixels highly positively impacted the prediction of the Pizza class label. Indeed, the corresponding pixels are highlighted in dark green in the visual explanation, and the influence score (nPIR) in the numerical explanation is close to 1. Moreover, the second feature, composed of the pizza crust’s pixels, positively impacted the prediction (even if less than the first one). Therefore, it is highlighted in light green with a corresponding influence index close to 0.5. However, this feature is not precise because the perturbation of its pixels impacted not only the Pizza label but also other labels, obtaining a negative precision index (nPIRP). Finally, the third interpretable feature shows that the pixels of the table negatively impacted the original prediction of Pizza; it is highlighted in light red with an influence index close to -0.5.

The conclusion that an end-user can draw, thanks to the detailed explanations provided by EBAnO, is that the model’s prediction is unreliable because it mostly looks at the context (table’s pixels) to predict the class label. Moreover, the pizza borders are captured with uncertainty by the model, which assigns their pattern to different labels (Bottlecap and Pizza).

8 Class-based model explanation

A model-global explanation is usually exploited to study the influence of a specific concept on the whole prediction set provided by the model to detect possible bias, for instance. In data domains like tabular data or textual data, the explanation process takes advantage of well-defined features, i.e., columns and word tokens, that are simple to aggregate in model-global explanations. Works like Lundberg and Lee (2017); Ribeiro et al. (2016) study the behavior of the model aggregating the explanations produced for singular predictions by feature meaning.

In the case of image inputs, instead, the DCNN model processes each pixel. As previously discussed, single-pixel explanations are useless for humans. Hence, EBAnO groups prediction-local explanations according to interpretable features. Analyzed together, nPIR and nPIRP describe the influence, in terms of both the contribution and the precision, of each interpretable feature of an input image on the prediction process. This information enables EBAnO to identify behavioral patterns of the model w.r.t. the prediction of each class.

The model-wise challenge is to aggregate the interpretable features belonging to different images by their semantic meaning without using another supervised model. To this aim, EBAnO provides an unsupervised class-based model explanation by aggregating the prediction-local features according to their class-of-interest. Then, each class-of-interest is described by all the features extracted during the local-explanation process, exploiting their nPIR and nPIRP values. The features are projected on the \({nPIR}\times {nPIRP}\) space, and studying their distribution allows the user to inspect the class-wise behavior of the model during the decision-making process.

Figure 8 shows an example of a class-based model explanation for the class-of-interest Pizza computed for a VGG16 model, aggregating three local explanations of three different input images. The figure shows the interpretable features distributed in the \({nPIR}\times {nPIRP}\) space and their KDE (Kernel Density Estimation) distributions on the nPIR and nPIRP axes. The plot groups the features in the four quadrants. For instance, features being both positively influential and precise for the class-of-interest are in the quadrant with \({nPIR}\ge 0\) and \({nPIRP}\ge 0\). On the top and right axes, the KDE distributions of the features w.r.t. nPIR and nPIRP are reported.
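The quadrant grouping can be sketched as a simple count over the (nPIR, nPIRP) pairs collected from several local explanations of the same class-of-interest. The quadrant labels and the sample points below are illustrative, and the KDE curves of the figure are not reproduced.

```python
from collections import Counter

def quadrant(npir, npirp):
    """Name the quadrant of the nPIR x nPIRP plane in which a feature falls."""
    influence = "positive" if npir >= 0 else "negative"
    precision = "precise" if npirp >= 0 else "imprecise"
    return f"{influence}/{precision}"

def quadrant_counts(features):
    """Count features per quadrant; features is an iterable of (nPIR, nPIRP) pairs."""
    return Counter(quadrant(n, p) for n, p in features)

# Made-up features aggregated from several local explanations of one class.
features = [(0.9, 0.8), (0.5, -0.3), (-0.4, -0.6), (0.0, 0.0)]
counts = quadrant_counts(features)
print(counts)
```

A class whose features concentrate in the positive and precise quadrant or near the origin behaves as hoped, while many features in the other quadrants point to concepts worth inspecting manually.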

The optimal distribution of features for a model is when all the features that are representative for the class-of-interest are positioned on the top-right corner with \({nPIR}= 1, {nPIRP}= 1\), and all the other features are close to the center with \({nPIR}= 0, {nPIRP}= 0\) so that the contextual features are not influencing the decision-making process. The presence of features spread around the plot means that the model can be considered uncertain about their role in the prediction process. The plot easily enables human experts to quickly drive their evaluation towards specific features for a semantic assessment of the model behavior.

9 EBAnO-Batch

In some specific scenarios, the time required to produce an explanation could be a bottleneck. Specifically, the execution time could become an issue when multiple explanations of several images are needed. An example of this situation is the global explanation, where multiple local explanations for different images predicted with the same class-of-interest should be produced. An iterative approach that produces the explanation of single image instances, one at a time, is not efficient. For this reason, we also propose a batch version of the framework called EBAnO-Batch.

EBAnO-Batch takes as input a set of images and classes of interest (instead of a single image and a class of interest) and outputs the local explanations for the entire set, saving them persistently on disk. In EBAnO-Batch several steps of the pipeline are vectorized and optimized for an entire set of images to speed up the computation, increasing the overall efficiency of the methodology. Specifically, the hypercolumns of the entire batch are extracted in one forward pass of the DCNN, the probabilities predicted after the perturbation of each interpretable feature of all images are produced in batch, and the dimensionality reduction with the PCA is optimized for the entire batch.
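The benefit of producing the perturbed-image probabilities in batch can be illustrated with a generic helper that stacks images and calls the model once per batch instead of once per image. This is not the EBAnO-Batch code: `predict_in_batches` and `fake_predict` are hypothetical names, and the latter is only a stand-in for a real model's batched prediction function.

```python
import numpy as np

def predict_in_batches(predict_fn, images, batch_size=32):
    """Run predict_fn on stacked batches of images and concatenate the outputs."""
    outputs = []
    for start in range(0, len(images), batch_size):
        batch = np.stack(images[start:start + batch_size])  # (b, h, w, c)
        outputs.append(predict_fn(batch))
    return np.concatenate(outputs, axis=0)

calls = []

def fake_predict(batch):
    """Stand-in model: records the batch size and returns one number per image."""
    calls.append(len(batch))
    return batch.reshape(len(batch), -1).mean(axis=1, keepdims=True)

# Ten dummy perturbed images, processed four at a time.
images = [np.full((4, 4, 3), float(i)) for i in range(10)]
scores = predict_in_batches(fake_predict, images, batch_size=4)
print(scores.shape, calls)
```

The ten images are handled with three model calls rather than ten, which is the kind of saving that grows with the number of features and images to explain.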

Exploiting EBAnO-Batch, we reduced on average by a factor of 10 the time required to produce an explanation (by testing several batch sizes in [8, 16, 32, 64, 128] and several models).

10 Experimental evaluation

This section is structured as follows. Section 10.1 describes the experimental settings. Sections 10.2 and 10.3 present the prediction-local and the class-based global explanations, respectively. Sections 10.4 and 10.5 discuss the performance of the nPIR index in identifying the contribution of each feature in the prediction process, first across all the tested images and models, and then compared to the state-of-the-art Shapley values, respectively. Then, Sects. 10.6 and 10.7 qualitatively and quantitatively compare EBAnO with two state-of-the-art explanation frameworks. Section 10.8 reports the human-validation process carried out to assess the interpretability of EBAnO’s explanations. Finally, Sect. 10.9 performs a brief execution time comparison.

10.1 Experimental settings

To show the effectiveness and the reliability of the framework, EBAnO has been tested on 4 different pre-trained DCNN models available in the Keras deep learning library (Chollet et al. 2015)Footnote 5: (M1) VGG16 (Simonyan and Zisserman 2015), (M2) VGG19 (Simonyan and Zisserman 2015), (M3) InceptionV3 (Szegedy et al. 2016), and (M4) InceptionResNetV2 (Szegedy et al. 2017). All the models are pre-trained on the well-known ImageNet (Russakovsky et al. 2015) dataset with 1000 classes. EBAnO has been applied to produce prediction-local explanations for the 4 different models using 250 input images, belonging to 54 different classes. The top-10 predicted classes of each image have been analyzed, for a total of 10,000 prediction-local explanations. The input images have been taken from different datasets (Coco (Lin et al. 2014), ImageNet (Russakovsky et al. 2015), Caltech (Li Fei-Fei et al. 2004), and web scraping).

The number of convolutional layers analyzed for each model has been experimentally set as follows. Models M1 and M2 are relatively small DCNNs and the last 5 and 8 convolutional layers, respectively, have been considered. Instead, models M3 and M4 have a more complex structure and the last 34 and 24 convolutional layers have been included in the analysis, respectively. We found these settings to be a fair trade-off between feature interpretability and affordable execution complexity.

The number of extracted features has been set to range between 2 and 10. The upper limit prevents the extraction of features that are too small, with poor semantic meaning and low relevance for a human user. Thus, each explanation will be described by at most 10 features, among which the most informative explanation (Sect. 6) is automatically proposed to the user, with the others available for further manual insights.

10.2 Prediction-local explanations

In this section we discuss in detail the insights provided by the local explanations of EBAnO. We exploit models M1 and M4 for the discussion of the experimental results since they are representative architectures of the two remaining models as well. However, in “Appendix C”, a further selection of prediction-local explanations is discussed in detail, on the results of models M2 and M3. Moreover, in “Appendix D”, some other examples of local explanations for correctly classified images are reported. Finally, a larger number of prediction-local explanations of all four models is publicly available through an interactive web-based tool.Footnote 6

Figure 9 shows an example input image (named I1) showing a mouse over a tiled surface.

The predictions of M1 and M4 are shown in Tables 2 and 3, respectively. By applying EBAnO to such predictions we aim to unwrap the black-box models M1 and M4, providing detailed explanations to answer the following questions:

- Q1. “Why is Fig. 9 representing a Toilet seat for model M1?”
- Q2. “Why is Fig. 9 not a Mouse for model M1?”
- Q3. “Why is Fig. 9 a Mouse for model M4?”

Answering Q1. Model M1 (VGG16) fails to predict the correct class for input I1, providing the label Toilet seat with the highest probability, and the correct class Mouse follows with lower probability. Figure 10a shows the explanation provided by EBAnO for model M1 and the class of interest Toilet seat. It identifies the most informative explanation to consist of 9 features (Fig. 10a center). Figure 10a-left shows the visual explanation and Fig. 10a-right shows the numerical explanation.

We recall that the visual explanation highlights in green the interpretable features that positively influence the class of interest, and in red those that negatively influence it. We notice that the decision to assign the class Toilet seat is mainly due to the presence of the horizontal lines of the background tiles. Hence, the prediction Toilet seat was made because of contextual information and not because of the subject itself. The numerical explanation, whose bar chart reports the values of nPIR (influence) and nPIRP (precision) for each feature, confirms that feature 6, corresponding to the lines of the tiles, is the most positively influential. However, its nPIRP value is close to 0, meaning that it is not precise at all: it is a contextual feature that similarly influences many other classes.

Answering Q2. Figure 10b shows the explanation produced by EBAnO for model M1 and for the class of interest Mouse, with 4 interpretable features. From the visual explanation, we notice that the Mouse is correctly associated with feature 1. However, (i) feature 4 strongly penalizes the Mouse prediction (very negative nPIR), and (ii) the nPIRP of feature 1 is negative, as highlighted by the numerical explanation in Fig. 10b-right. The case of positive nPIR and negative nPIRP (feature 1) describes the behavior of the DCNN model: even if feature 1 positively influences the (correct) class, other classes are more influenced by this feature than the one under analysis. We can blame feature 4 (portions of the background tiles), with its negative nPIR and negative nPIRP, for the incorrect prediction. The decision process was mainly affected by the context of the subject: the model correctly distinguishes among the different sections of the input, but it weighs the context more than the subject. Furthermore, the influence of different features on more than one class suggests that the training set may contain some bias for this subject in the given context (e.g., toilet objects often represented with background tiles).

Answering Q3. Model M4 (InceptionResNetV2) correctly classifies the image as a Mouse. The EBAnO explanation is reported in Fig. 10c with 5 features. Feature 1, the mouse body, is the only feature with high influence and precision, whereas all other features are neutral. Differently from model M1, M4 is well focused on the subject, ignoring the context around it. Its predictions can be considered generally reliable and it can be trusted with higher confidence than M1: it is not by chance that the prediction is correct.

EBAnO local explanations. The input image is shown in Fig. 9. Visual explanation (left), features (center), numerical explanation (right)

10.3 Class-based explanations

Figure 11 shows the results of a class-based model explanation computed on 50 images classified as Dalmatian by models M1 and M4 (further considerations about models M2 and M3 are given in “Appendix E”). While the features of M1 are scattered across the whole \({nPIR}\times {nPIRP}\) area (Fig. 11a), M4 presents tidier patterns (Fig. 11b). Recalling the optimal distribution of features introduced in Sect. 8, the class-based explanation of M1 shows significant uncertainty in the prediction process of the class Dalmatian. Instead, M4 features are concentrated mostly in \(0 \le {nPIR}\le 1\) and \({nPIRP}\approx 1\). In detail, we notice that M4 contextual features are in general in the \(0 \le {nPIRP}\le 1\), \({nPIR}\approx 0\) area. Such an explanation describes a much more reliable prediction process of M4 for the class of interest Dalmatian, with the model assigning a much clearer role to each feature. This result is also consistent with state-of-the-art knowledge: M4 (i.e., InceptionResNetV2) is known in the literature as a much more accurate and reliable model than M1 (i.e., VGG16).

We finally note that EBAnO highlights the model uncertainty in a totally unsupervised approach: it does not know the ground truth labels, and often they are not available. Hence, the class-based model explanations are not only widely applicable, but they also empower the end-user to better choose the model to trust based on easily readable and visual information. Moreover, exploiting EBAnO-Batch, they are produced more efficiently and quickly, explaining entire batches of images at a time.

10.4 Feature-relevance index assessment

To provide a broad analysis of the behavior of the proposed nPIR index for the different DCNN models across all the 250 images of the experimental set, the distributions of the nPIR minimum and maximum values have been computed and reported in Fig. 12. Such values refer to the most informative explanations computed by EBAnO when the class of interest is equal to the top-1 prediction. If the difference between the minimum and the maximum nPIR distributions is large, then we can support the wide applicability of the proposed approach, beyond the limited number of examples reported in the experimental results due to space constraints.

The top influential features, with maximum nPIR, are mostly included in the [0.8, 1.0] bin. Only models M3 and M4 have some features of the top influential ones falling in the [0.0, 0.2] bin. However, the value of maximum nPIR never goes below 0 for any model, hence EBAnO is always able to identify at least one positively influential feature.

The features with minimum nPIR are predominantly located in the \([-0.2, 0.2]\) range, meaning that most of the least influential features range from slightly negative to almost neutral for the prediction process. Minimum values higher than 0.2 are very rare, confirming the large distance between the minimum and the maximum nPIR values, which drives the right choice of the most informative explanation by EBAnO.
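The per-image minimum and maximum analysis described above can be sketched as follows. This is only an illustrative sketch, not the EBAnO implementation: the function names, the input format (one list of nPIR scores per image), the assumption that nPIR lies in \([-1, 1]\), and the bin width of 0.2 (chosen to match the bins quoted in the text) are all assumptions.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

# Assumed binning: 10 equal-width bins over [-1, 1]. Edges are rounded so that
# floating-point drift does not shift values across bin boundaries.
EDGES: List[float] = [round(-1.0 + 0.2 * i, 10) for i in range(11)]


def bin_index(value: float) -> int:
    """Index of the bin [EDGES[i], EDGES[i+1]) containing value (last bin is closed)."""
    idx = bisect_right(EDGES, value) - 1
    return min(max(idx, 0), len(EDGES) - 2)


def min_max_histograms(
    npir_per_image: Dict[str, List[float]]
) -> Tuple[List[int], List[int]]:
    """Histogram the minimum and the maximum nPIR of each image's explanation."""
    min_hist = [0] * (len(EDGES) - 1)
    max_hist = [0] * (len(EDGES) - 1)
    for scores in npir_per_image.values():
        min_hist[bin_index(min(scores))] += 1
        max_hist[bin_index(max(scores))] += 1
    return min_hist, max_hist


# Hypothetical scores for three images (not taken from the paper).
toy_scores = {
    "img_a": [0.92, 0.10, -0.05],
    "img_b": [0.15, 0.01],
    "img_c": [0.85, -0.45, 0.30],
}
min_hist, max_hist = min_max_histograms(toy_scores)
```

A wide gap between the occupied bins of `max_hist` and those of `min_hist` corresponds to the large minimum-to-maximum distance discussed in the text.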

10.5 Feature-relevance index comparison

In the state of the art (see Sect. 2), Shapley values (Štrumbelj and Kononenko 2014; Lundberg and Lee 2017) are widely used to explain the relevance of each feature in the decision-making process. Computing Shapley values requires evaluating the effect of all the possible coalitions of features, i.e., all the possible combinations of portions (features) of the input image, which makes them computationally expensive.
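To make the cost of this enumeration concrete, the following is a minimal sketch of the exact Shapley computation for a small set of features. It is not the procedure used in the experiments: the `value` function is a hypothetical stand-in for "the model's output for the class of interest when only the features in the coalition are kept", and the toy numbers are invented for illustration.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet, List


def shapley_values(
    players: List[str], value: Callable[[FrozenSet[str]], float]
) -> Dict[str, float]:
    """Exact Shapley values by enumerating every coalition of the other players."""
    n = len(players)
    phi: Dict[str, float] = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Marginal contribution of p when it joins coalition s.
                total += weight * (value(s | {p}) - value(s))
        phi[p] = total
    return phi


def toy_value(coalition: FrozenSet[str]) -> float:
    """Hypothetical class score when only the features in `coalition` are visible."""
    score = 0.0
    if "f1" in coalition:
        score += 0.6
    if "f2" in coalition:
        score += 0.1
    if "f1" in coalition and "f3" in coalition:
        score += 0.2  # f3 only helps together with f1
    return score


phi = shapley_values(["f1", "f2", "f3"], toy_value)
```

Each of the \(n\) features requires evaluating all \(2^{n-1}\) coalitions of the remaining features, so the number of model evaluations grows exponentially with the number of features.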

Figure 13 shows the comparison between nPIR and Shapley values for the input image in Fig. 9. We analyze (a) model M1 for the class Toilet seat, (b) model M1 for the class Mouse, and (c) model M4 for the class Mouse. In Fig. 13a, Shapley values have an almost flat trend, with only feature 1 showing a slightly negative value. In contrast, nPIR greatly amplifies the different contributions of each feature: it better highlights the negative impact of feature 1 and shows more clearly the positive impacts of features 5 and 6.

In Fig. 13b, nPIR is more effective than Shapley values for the explanation task. Feature 1 is identified by both indices as positively influential, but nPIR marks it more prominently. Feature 4 is negatively influential, as correctly highlighted by nPIR, whereas Shapley values miss this contribution, being slightly positive.

In Fig. 13c, both indicators show the same behavior, with a positive contribution of feature 1 and a neutral contribution of all the other features.

To sum up, the proposed nPIR index is more computationally efficient and better emphasizes the contribution of the different input features, performing at least as well as the state-of-the-art Shapley values in all the experiments performed.

10.6 Local-explanation qualitative comparison

In this section, the local explanations of EBAnO are qualitatively compared with those provided by state-of-the-art techniques: LIME (Ribeiro et al. 2016) and Grad-CAM (Selvaraju et al. 2019), as representative of perturbation-based and gradient-based explainability families, respectively. Discussions on model M1 (i.e. VGG16) explanations are reported in this section, and further results on model M4 are reported in “Appendix F”.

EBAnO local explanations. The input image is shown in Fig. 14. Visual explanation (left), features (center), numerical explanation (right)

Figure 14 shows the input image I2, taken from Ribeiro et al. (2016) as a comparison example. M1 predicts Acoustic Guitar with a probability of 0.22 (see Table 4). The visual explanation of LIME is reported in Fig. 15a, and the Grad-CAM explanation in Fig. 15b. LIME highlights in green the areas that are important for the class of interest, and in red those negatively impacting the prediction. Instead, Grad-CAM uses warm colors (e.g., red) for the most important areas and cold colors (e.g., blue) for the least important portions.

The explanation provided by LIME is quite confusing, due to the presence of many small green portions that are difficult to interpret or to associate with a concept in the image. We notice that LIME performs the segmentation of the image without exploiting the knowledge contained in the network.

Grad-CAM is more precise in identifying the area of interest around the neck of the guitar, ignoring the background areas that were identified by LIME as important. However, Grad-CAM loses the information about the portions of the input that are negatively impacting the prediction. We notice that Grad-CAM extracts the information provided by the last convolutional layer of the network.

In general, neither of the two state-of-the-art methods provides a human-readable numerical explanation of the prediction. In contrast, the explanations provided by EBAnO (Fig. 15c) (i) accurately identify concept-wise portions of the input that are responsible for the model’s outcome (feature map in Fig. 15c-center), (ii) highlight both the positively influential portions and the negatively influential ones for each class of interest (visual explanation in Fig. 15c-left), and (iii) quantify not only the influence but also the precision of each image portion with numerical explanations (Fig. 15c-right).

Regarding the example under analysis, EBAnO identifies the image portions corresponding to the guitar as very influential, with high nPIR values and positive nPIRP values, while the face of the dog is correctly identified as negatively impacting the class Acoustic Guitar.

For completeness, EBAnO’s explanations for the class Golden Retriever are provided in Fig. 15d, showing that the guitar now has a very negative impact and the dog face is identified as playing the main role in the decision-making process.

10.7 Local-explanation quantitative comparison

In this section, we quantitatively evaluate and compare the explanations produced by EBAnO, LIME (Ribeiro et al. 2016), and Grad-CAM (Selvaraju et al. 2019) using two quality measures: Pointing Game and Pixels Flipping (Samek and Müller 2019). For these evaluation tasks, we created a new dataset consisting of 150 randomly selected images from the validation set of ImageNet (Russakovsky et al. 2015), with their ground truth labels and bounding boxes (Footnote 7).

To evaluate EBAnO, we selected the most positively influential feature for the predicted label (i.e., the one with maximum nPIR). For LIME, since its features have smaller dimensions, to make a fair comparison we selected the combination of the top-n positive features, for different \(n \in \{5, 10, 15, 20, 25, 50, 75, 100\}\), as the most important feature. For instance, for LIME-10 the most important feature is the combination of the top-10 features. Finally, Grad-CAM assigns an importance score (only positive) to each pixel based on the values of the activation map. Thus, we selected the pixels in the top percentiles of activation values as the most important feature. We experimented with several percentiles of the non-zero activation values, \(top_{perc} \in \{5, 10, 25, 50, 75\}\). For instance, the feature for GradCAM-5 is computed, for each image, by taking \(perc_{95}\) as the 95th percentile of the non-zero activation values and selecting the pixels with activation \(\ge perc_{95}\) (i.e., the top 5% of activation values). For each model and task, we report and discuss in the paper the features with sizes similar to those selected by EBAnO. Full results for all combinations are reported in “Appendix G”.
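The two selection rules described above for LIME-n and GradCAM-\(top_{perc}\) can be sketched as follows. This is a hedged illustration that does not call the LIME or Grad-CAM libraries: the function names, the plain nested-list representation of the activation map and of the segment map, and the linear-interpolation percentile are assumptions, and the paper's exact percentile convention may differ.

```python
from typing import Dict, List


def percentile(values: List[float], q: float) -> float:
    """q-th percentile (0-100) with linear interpolation between closest ranks."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lower = int(pos)  # floor, since pos >= 0
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (pos - lower)


def gradcam_top_mask(activation: List[List[float]], top_perc: float) -> List[List[bool]]:
    """Keep pixels whose activation is >= the (100 - top_perc)-th percentile
    of the non-zero activation values (e.g., top_perc=5 uses perc_95)."""
    non_zero = [v for row in activation for v in row if v != 0.0]
    threshold = percentile(non_zero, 100.0 - top_perc)
    return [[v != 0.0 and v >= threshold for v in row] for row in activation]


def lime_top_n_mask(
    segments: List[List[int]], weights: Dict[int, float], n: int
) -> List[List[bool]]:
    """Union of the n segments with the largest positive weights."""
    positive = sorted(
        (seg for seg, w in weights.items() if w > 0),
        key=lambda seg: weights[seg],
        reverse=True,
    )
    selected = set(positive[:n])
    return [[seg in selected for seg in row] for row in segments]


# Hypothetical 3x3 inputs (not taken from the paper).
toy_activation = [[0.0, 0.1, 0.2], [0.0, 0.9, 0.3], [0.0, 0.4, 0.5]]
toy_segments = [[0, 0, 1], [2, 2, 1], [2, 3, 3]]
toy_weights = {0: 0.5, 1: -0.2, 2: 0.3, 3: 0.1}

gradcam_feature = gradcam_top_mask(toy_activation, top_perc=50)
lime_feature = lime_top_n_mask(toy_segments, toy_weights, n=2)
```

Both functions return a boolean mask of the same shape as the image, so the resulting "most important feature" can be compared directly with the single region selected for EBAnO.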

10.7.1 Pointing game