Abstract

This work considers the general task of estimating the sum of a bounded function over the edges of a graph, given neighborhood query access and where access to the entire network is prohibitively expensive. To estimate this sum, prior work proposes Markov chain Monte Carlo (MCMC) methods that use random walks started at some seed vertex and whose equilibrium distribution is the uniform distribution over all edges, eliminating the need to iterate over all edges. Unfortunately, these existing estimators are not scalable to massive real-world graphs. In this paper, we introduce Ripple, an MCMC-based estimator that achieves unprecedented scalability by stratifying the Markov chain state space into ordered strata with a new technique that we denote sequential stratified regenerations. We show that the Ripple estimator is consistent, highly parallelizable, and scales well. We empirically evaluate our method by applying Ripple to the task of estimating connected, induced subgraph counts given some input graph. Therein, we demonstrate that Ripple is accurate and can estimate counts of up to 12-node subgraphs, which is a task at a scale that has been considered unreachable, not only by prior MCMC-based methods but also by other sampling approaches. For instance, in this target application, we present results in which the Markov chain state space is as large as \(10^{43}\), for which Ripple computes estimates in less than 4 h, on average.

Notes

The spectral gap is defined as \(\delta = 1-\max \{|\lambda _2|, |\lambda _{|\mathcal{V}|}|\}\), where \(\lambda _i\) denotes the \(i\)-th eigenvalue of the transition probability matrix of \(\varvec{\varPhi}\).

References

Aldous D, Fill JA (2002) Reversible Markov chains and random walks on graphs. Unfinished monograph, recompiled 2014. Available at http://www.stat.berkeley.edu/~aldous/RWG/book.html

Athreya KB, Ney P (1978) A new approach to the limit theory of recurrent Markov chains. Trans Am Math Soc 245:493–501

Avrachenkov K, Ribeiro B, Sreedharan JK (2016) Inference in OSNs via lightweight partial crawls. In: ACM SIGMETRICS, pp 165–177

Avrachenkov K, Borkar VS, Kadavankandy A, Sreedharan JK (2018) Revisiting random walk based sampling in networks: evasion of burn-in period and frequent regenerations. Comput Soc Netw 5(1):1–19

Bhuiyan MA, Rahman M, Rahman M, Al Hasan M (2012) Guise: uniform sampling of graphlets for large graph analysis. In: IEEE 12th international conference on data mining. IEEE, pp 91–100

Bremaud P (2001) Markov chains: Gibbs Fields, Monte Carlo simulation, and queues. Texts in applied mathematics. Springer, New York

Bressan M, Chierichetti F, Kumar R, Leucci S, Panconesi A (2018) Motif counting beyond five nodes. ACM TKDD 12(4):1–25. https://doi.org/10.1145/3186586

Bressan M, Leucci S, Panconesi A (2019) Motivo: fast motif counting via succinct color coding and adaptive sampling. In: Proc VLDB Endow

Chen X, Lui JC (2018) Mining graphlet counts in online social networks. ACM TKDD 12(4):1–38

Cooper C, Radzik T, Siantos Y (2016) Fast low-cost estimation of network properties using random walks. Internet Math 12:221–238. https://doi.org/10.1080/15427951.2016.1164100

Diaconis P, Stroock DW (1991) Geometric bounds for eigenvalues of Markov chains. Ann Appl Probab 1:36–61

Geman S, Geman D (1984) Stochastic relaxation, Gibbs distributions, and the Bayesian restoration of images. IEEE PAMI 6(6):721–741

Geyer CJ (1992) Practical Markov chain Monte Carlo. Stat Sci 7:473–483

Glynn PW, Rhee Ch (2014) Exact estimation for Markov chain equilibrium expectations. J Appl Probab 51(A):377–389

Han G, Sethu H (2016) Waddling random walk: fast and accurate mining of motif statistics in large graphs. In: ICDM. IEEE, pp 181–190

Hastings WK (1970) Monte Carlo sampling methods using Markov chains and their applications

Hopcroft J, Tarjan R (1973) Algorithm 447: efficient algorithms for graph manipulation. Commun ACM 16(6):372–378

Iyer AP, Liu Z, Jin X, Venkataraman S, Braverman V, Stoica I (2018) ASAP: fast, approximate graph pattern mining at scale. In: OSDI, pp 745–761

Jacob PE, O’Leary J, Atchadé YF (2020) Unbiased Markov chain Monte Carlo methods with couplings. JRSS Ser B 82(3):543–600

Jain S, Seshadhri C (2017) A fast and provable method for estimating clique counts using Turán’s theorem. In: WWW ’17, pp 441–449

Jain S, Seshadhri C (2020) Provably and efficiently approximating near-cliques using the Turán shadow: PEANUTS. In: WWW, pp 1966–1976

Kashtan N, Itzkovitz S, Milo R, Alon U (2004) Efficient sampling algorithm for estimating subgraph concentrations and detecting network motifs. Bioinformatics 20(11):1746–1758

Leskovec J, Krevl A (2014) SNAP datasets: Stanford large network dataset collection. http://snap.stanford.edu/data

Liu G, Wong L (2008) Effective pruning techniques for mining quasi-cliques. In: ECML-PKDD

Massoulié L, Le Merrer E, Kermarrec AM, Ganesh A (2006) Peer counting and sampling in overlay networks: random walk methods. In: PODC

Matsuno R, Gionis A (2020) Improved mixing time for k-subgraph sampling. In: Proceedings of the 2020 SIAM international conference on data mining. SIAM, pp 568–576

Mykland P, Tierney L, Yu B (1995) Regeneration in Markov Chain samplers. J Am Stat Assoc 90(429):233–241

Neal RM (2001) Annealed importance sampling. Stat Comput 11(2):125–139

Neiswanger W, Wang C, Xing EP (2014) Asymptotically exact, embarrassingly parallel MCMC. UAI

Nummelin E (1978) A splitting technique for Harris recurrent Markov Chains. Mag Probab Theory Relat Areas 43(4):309–318

Pinar A, Seshadhri C, Vishal V (2017) Escape: efficiently counting all 5-vertex subgraphs. In: WWW, pp 1431–1440

Propp JG, Wilson DB (1996) Exact sampling with coupled Markov chains and applications to statistical mechanics. Random Struct Algor 9(1-2):223–252

Ribeiro B, Towsley D (2012) On the estimation accuracy of degree distributions from graph sampling. In: CDC

Ribeiro P, Paredes P, Silva ME, Aparicio D, Silva F (2019) A survey on subgraph counting: concepts, algorithms and applications to network motifs and graphlets. arXiv:1910.13011

Robert C, Casella G (2013) Monte Carlo statistical methods. Springer, Berlin

Rosenthal JS (1995) Minorization conditions and convergence rates for Markov Chain Monte Carlo. J Am Stat Assoc

Savarese P, Kakodkar M, Ribeiro B (2018) From Monte Carlo to Las Vegas: Improving restricted Boltzmann machine training. In: AAAI

Sinclair A (1992) Improved bounds for mixing rates of Markov Chains and multicommodity flow. Comb Probab Comput 1(4)

Teixeira CH, Cotta L, Ribeiro B, Meira W (2018) Graph pattern mining and learning through user-defined relations. In: ICDM. IEEE, pp 1266–1271

Teixeira CHC, Kakodkar M, Dias V, Meira Jr W, Ribeiro B (2021) Sequential stratified regeneration: MCMC for large state spaces with an application to subgraph count estimation. arXiv:2012.03879

Vitter JS (1985) Random sampling with a reservoir. ACM Trans Math Software (TOMS) 11(1):37–57

Wang P, Lui JCS, Ribeiro B, Towsley D, Zhao J, Guan X (2014) Efficiently estimating motif statistics of large networks. ACM TKDD 9(2):1–7

Wang P, Zhao J, Zhang X, Li Z, Cheng J, Lui JCS, Towsley D, Tao J, Guan X (2017) MOSS-5: a fast method of approximating counts of 5-node graphlets in large graphs. IEEE Trans Knowl Data Eng 30(1):73–86

Wernicke S (2006) Efficient detection of network motifs. IEEE/ACM Trans Comput Biol Bioinf 3(4):347–359

Wilkinson DJ (2006) Parallel Bayesian computation. Statist Textbooks Monogr 184:477

Yang C, Lyu M, Li Y, Zhao Q, Xu Y (2018) SSRW: a scalable algorithm for estimating graphlet statistics based on random walk. In: International conference on database systems for advanced applications. Springer, pp 272–288

Acknowledgements

This work was funded in part by Brazilian agencies CNPq, CAPES and FAPEMIG, by projects Atmosphere, INCTCyber, MASWeb, and CIIA-Saude, and by the National Science Foundation (NSF) awards CAREER IIS-1943364 and CCF-1918483. Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the sponsors.

Additional information

Responsible editor: Annalisa Appice, Sergio Escalera, Jose A. Gamez, Heike Trautman.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Notation

The most important notations from the paper are summarized in Table 4.

Proofs for Section 2

1.1 MCMC Estimates

Given a graph \(\mathcal{G}\), when \(|\mathcal{E}|\) is unknown, the MCMC estimate of \(\nicefrac {\mu (\mathcal{E})}{|\mathcal{E}|}\) is given by:

Proposition 5

(MCMC Estimate (Geyer 1992; Geman and Geman 1984; Hastings 1970)) When \(\mathcal{G}\) from Definition 1 is connected, the random walk \(\varvec{\varPhi}\) is reversible and positive recurrent with stationary distribution \(\pi _{\varvec{\varPhi}}(u) = \nicefrac {\mathbf{d}(u)}{2|\mathcal{E}|}\). Then, the MCMC estimate \( {\hat{\mu }}_{0}\left( (X_i)_{i=1}^{t}\right) = \frac{1}{t-1}\sum _{i=1}^{t-1} f(X_i,X_{i+1})\,, \) computed using an arbitrarily started sample path \((X_i)_{i=1}^{t}\) from \(\varvec{\varPhi}\), is an asymptotically unbiased estimate of \(\nicefrac {\mu (\mathcal{E})}{|\mathcal{E}|}\). When \(\mathcal{G}\) is also non-bipartite, i.e., \(\varvec{\varPhi}\) is aperiodic, and \(t\) is large, \({\hat{\mu }}_{0}\) converges to \(\nicefrac {\mu (\mathcal{E})}{|\mathcal{E}|}\) with bias bounded as \( \Big |{\mathbb {E}}[{\hat{\mu }}_{0}\left( (X_i)_{i=1}^{t}\right) ] - \nicefrac {\mu (\mathcal{E})}{|\mathcal{E}|}\Big | \le B \, \frac{C}{t \, \delta (\varvec{\varPhi})} \,, \) where \(\delta (\varvec{\varPhi})\) is the spectral gap of \(\varvec{\varPhi}\), \(C\triangleq \sqrt{\frac{1-\pi _{\varvec{\varPhi}}(X_1)}{\pi _{\varvec{\varPhi}}(X_1)}}\), and \(B\) is an upper bound on \(f\), i.e., \(f\left( \cdot \right) \le B\).

Proof

(Asymptotic unbiasedness) Because \(\mathcal{G}\) is undirected, finite and connected, \(\varvec{\varPhi}\) is a finite state space, irreducible, time-homogeneous Markov chain and is therefore positive recurrent (Bremaud 2001, 3-Thm.3.3). Reversibility and the stationary distribution follow from the detailed balance test (Bremaud 2001, 2-Cor.6.1): \( \pi _{\varvec{\varPhi}}(u) \, p_{\varvec{\varPhi}}(u,v) = \pi _{\varvec{\varPhi}}(v) \, p_{\varvec{\varPhi}}(v,u) = \frac{\mathbf{1}{\left\{ (u,v)\in \mathcal{E}\right\} }}{2|\mathcal{E}|}\,. \) Because \(f\) is bounded, the ergodic theorem (Bremaud 2001, 3-Cor.4.1) applies and yields \( \lim _{t\rightarrow \infty } \frac{1}{t-1}\sum _{i=1}^{t-1} f(X_i,X_{i+1}) = \sum _{(u,v) \in \mathcal{V}\times \mathcal{V}}\pi _{\varvec{\varPhi}}(u) \, p_{\varvec{\varPhi}}(u,v) f(u,v) = \frac{\mu (\mathcal{E})}{|\mathcal{E}|}\,. \) \(\square \)
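The detailed balance identity and the ergodic limit used in this proof can be checked exactly on a small example. The sketch below is illustrative only: the toy graph `EDGES`, the symmetric function `f`, and all helper names are assumptions introduced here and do not appear in the paper.

```python
from fractions import Fraction

# Hypothetical toy graph: undirected, connected and non-bipartite.
EDGES = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (2, 4)]


def adjacency(edges):
    """Build neighbor lists for an undirected simple graph."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    return adj


def transition(adj, u, v):
    """Simple random walk: p(u, v) = 1 / d(u) if (u, v) is an edge, else 0."""
    return Fraction(1, len(adj[u])) if v in adj[u] else Fraction(0)


def detailed_balance_holds(adj, pi, num_edges):
    """Check pi(u) p(u,v) = pi(v) p(v,u) = 1{(u,v) in E} / (2|E|) for all pairs."""
    for u in adj:
        for v in adj:
            flow = pi[u] * transition(adj, u, v)
            expected = Fraction(1, 2 * num_edges) if v in adj[u] else Fraction(0)
            if flow != pi[v] * transition(adj, v, u) or flow != expected:
                return False
    return True


def f(u, v):
    """An assumed bounded, symmetric edge function."""
    return u + v


adj = adjacency(EDGES)
# Stationary distribution pi(u) = d(u) / (2|E|).
pi = {u: Fraction(len(nbrs), 2 * len(EDGES)) for u, nbrs in adj.items()}
# Right-hand side of the ergodic theorem: sum over ordered pairs (u, v).
limit = sum(pi[u] * transition(adj, u, v) * f(u, v) for u in adj for v in adj[u])
# mu(E) sums f once per undirected edge.
mu = sum(f(u, v) for u, v in EDGES)
```

On this toy graph `limit` and `Fraction(mu, len(EDGES))` coincide, which is the identity the proof relies on. Exact rational arithmetic is used so that the comparison does not depend on floating-point tolerances.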

Proof

(Bias) Let the i-step transition probability of \({\varvec{\varPhi }}{}{^{}}\) be given by \(p^{i}_{{\varvec{\varPhi }}{}{^{}}}(u,v)\). The bias at the i-th step is given by

where \(f\left( \cdot \right) \le B\), and the final inequality is due to Jensen’s inequality. From (Diaconis and Stroock 1991, Prop-3), \( \textsc {bias}_{i} \le B \sqrt{\frac{1-\pi _{\varvec{\varPhi}}(X_1)}{\pi _{\varvec{\varPhi}}(X_1)}} \beta _{*}^{i} \,,\) where \(\beta _{*} = 1-\delta (\varvec{\varPhi})\) is the second largest eigenvalue modulus (SLEM) of \(\varvec{\varPhi}\). By Jensen’s inequality and by summing the geometric progression, \( \Big |{\mathbb {E}}[{\hat{\mu }}_{0}\left( (X_i)_{i=1}^{t}\right) ] - \frac{\mu (\mathcal{E})}{|\mathcal{E}|}\Big | \le \frac{1}{t-1} \sum _{i=1}^{t-1} \textsc {bias}_i \le \frac{B}{t-1} \sqrt{\frac{1-\pi _{\varvec{\varPhi}}(X_1)}{\pi _{\varvec{\varPhi}}(X_1)}} \frac{1-\beta _{*}^{t}}{1-\beta _{*}} \,.\)

For sufficiently large \(t\), \(\beta _{*}^{t} \approx 0\) and \(t-1 \approx t\); substituting \(1-\beta _{*} = \delta (\varvec{\varPhi})\) then completes the proof. \(\square \)

Lemma 2

(Avrachenkov et al. 2016) Let \(\varvec{\varPhi}\) be a finite state space, irreducible, time-homogeneous Markov chain, and let \(\xi \) denote the return time of an RWT started from some \(x_{0}\in \mathcal{S}\) as defined in Definition 2. If \(\varvec{\varPhi}\) is reversible, then \( {\mathbb {E}}\left[ \xi ^{2} \right] \le \frac{3}{\pi _{\varvec{\varPhi}}(x_{0})^{2}\delta (\varvec{\varPhi})} \,,\) where \(\pi _{\varvec{\varPhi}}(x_{0})\) is the stationary probability of \(x_{0}\), and \(\delta (\varvec{\varPhi})\) is the spectral gap of \(\varvec{\varPhi}\). When \(\varvec{\varPhi}\) is not reversible, the second moment of return times is given by Eq. (11).

Proof

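Using (Aldous and Fill 2002, Eq 2.21), we have \( {\mathbb {E}}\left[ \xi ^{2} \right] = \frac{1 + 2\,{\mathbb {E}}_{\pi _{\varvec{\varPhi}}}(T_{x_{0}})}{\pi _{\varvec{\varPhi}}(x_{0})} \,, \)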

where \({\mathbb {E}}_{\pi _{{\varvec{\varPhi }}{}{^{}}}}(T_{x_{0}})\) is the expected hitting time of \(x_{0}\) from the steady state. Combining (Aldous and Fill 2002, Lemma 2.11 & Eq 3.41) and accounting for continuization yields \( {\mathbb {E}}_{\pi _{{\varvec{\varPhi }}{}{^{}}}}(T_{x_{0}}) \le \frac{1}{\pi _{{\varvec{\varPhi }}{}{^{}}}(x_{0})\delta ({\varvec{\varPhi }}{}{^{}})} \,\) and therefore, \( {\mathbb {E}}\left[ \xi ^{2} \right] \le \frac{1+\frac{2}{\pi _{{\varvec{\varPhi }}{}{^{}}}(x_{0})\delta ({\varvec{\varPhi }}{}{^{}})}}{\pi _{{\varvec{\varPhi }}{}{^{}}}(x_{0})} < \frac{3}{\pi _{{\varvec{\varPhi }}{}{^{}}}(x_{0})^{2}\delta ({\varvec{\varPhi }}{}{^{}})} \) because \(\pi _{{\varvec{\varPhi }}{}{^{}}}(x_{0})\) and \(\delta ({\varvec{\varPhi }}{}{^{}})\) lie in the interval (0, 1). \(\square \)
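As an illustrative check that is not part of the original proof, consider the simple random walk on the complete graph \(K_4\), an assumed toy example. Here \(\pi (x_{0}) = \nicefrac {1}{4}\), and the transition matrix has eigenvalues \(1\) and \(-\nicefrac {1}{3}\), so \(\delta = \nicefrac {2}{3}\). After its first step, the walk returns to \(x_{0}\) with probability \(\nicefrac {1}{3}\) at every subsequent step, so \(\xi = 1 + G\), where \(G\) is geometric with success probability \(\nicefrac {1}{3}\). Hence \({\mathbb {E}}[G] = 3\), \({\mathbb {E}}[G^{2}] = 15\), and \({\mathbb {E}}[\xi ^{2}] = 1 + 2\cdot 3 + 15 = 22\). The expected hitting time of \(x_{0}\) from the steady state is \({\mathbb {E}}_{\pi }(T_{x_{0}}) = \frac{3}{4}\cdot 3 = \frac{9}{4} \le \frac{1}{\pi (x_{0})\delta } = 6\), and the identity of Aldous and Fill, \({\mathbb {E}}[\xi ^{2}] = \nicefrac {(1 + 2\,{\mathbb {E}}_{\pi }(T_{x_{0}}))}{\pi (x_{0})}\), gives \(\left( 1 + \frac{9}{2}\right) \cdot 4 = 22\), in agreement with the direct computation. The bound of Lemma 2 evaluates to \(\frac{3}{(\nicefrac {1}{16})(\nicefrac {2}{3})} = 72 \ge 22\), so it holds with room to spare in this small case.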

Proposition 6

Given a positive recurrent Markov chain \(\varvec{\varPhi}\) over state space \(\mathcal{S}\) and a set \({\mathcal {T}}\) of \(m\) RWTs with an arbitrary ordering, where \(\mathbf{X}^{(i)}\) is the \(i\)-th RWT in \({\mathcal {T}}\), the tours \(\mathbf{X}^{(i)}\) and their lengths \(|\mathbf{X}^{(i)}|\) are i.i.d. with \({\mathbb {E}}[|\mathbf{X}^{(i)}|] < \infty \), and when the tours are stitched together as defined next, the resulting sample path is governed by \(\varvec{\varPhi}\). For \(t\ge 1\), define \(\varPhi _{t} = X^{(N_{t})}_{t - R_{N_{t}}}\), where \(R_i = \sum _{i'=1}^{i-1} |\mathbf{X}^{(i')}|\) when \(i>1\), \(R_{1} = 0\), and \(N_t = \max \{i :R_i < t\}\).

Proof

\(R_i\) is a sequence of stopping times. Therefore, the strong Markov property (Bremaud 2001, 2-Thm.7.1) states that sample paths before and after \(R_i\) are independent and are governed by \(\varvec{\varPhi}\). Because \(\varvec{\varPhi}\) is positive recurrent and \(x_{0}\) is visited infinitely often, the regenerative cycle theorem (Bremaud 2001, 2-Thm.7.4) states that these trajectories are identically distributed and are equivalent to the tours \({\mathcal {T}}\) sampled according to Definition 2. Finally, \({\mathbb {E}}[|\mathbf{X}^{(i)}|] < \infty \) due to positive recurrence. \(\square \)
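The stitching construction of Proposition 6 amounts to indexing into a concatenation of tours. The following minimal sketch makes the roles of \(R_i\) and \(N_t\) concrete. It assumes each tour is stored as the list \((X_1, \ldots , X_{\xi })\) that starts at \(x_0\) and omits the closing return to \(x_0\); the function name `stitch` and the example tours are hypothetical.

```python
from itertools import accumulate


def stitch(tours, t):
    """Return Phi_t (t is 1-indexed) from the tours stitched in their given order."""
    lengths = [len(x) for x in tours]
    if not 1 <= t <= sum(lengths):
        raise IndexError("t is outside the stitched sample path")
    # R[i - 1] stores R_i, the total length of tours 1..i-1, with R_1 = 0.
    R = [0] + list(accumulate(lengths))[:-1]
    # N_t = max{i : R_i < t} is the index of the tour that contains step t.
    n_t = max(i for i, r in enumerate(R, start=1) if r < t)
    # Position t - R_{N_t} inside tour N_t, converted to 0-based indexing.
    return tours[n_t - 1][t - R[n_t - 1] - 1]


# Three hypothetical tours from x0 = 0.
tours = [[0, 1], [0, 2, 1], [0, 1]]
path = [stitch(tours, t) for t in range(1, 8)]
# path == [0, 1, 0, 2, 1, 0, 1]
```

The stitched path is simply the concatenation of the tours, which is why regeneration at \(x_0\) lets independently sampled tours stand in for one long sample path.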

1.2 Proof of Lemma 1

Proof

(Unbiasedness and Consistency) Because \({\mathcal {G}}{}{^{}}\) is connected, \({\varvec{\varPhi }}{}{^{}}\) is positive recurrent with steady state \(\pi _{{\varvec{\varPhi }}{}{^{}}}(u) \propto \mathbf{d}{}{^{}}(u)\) due to Proposition 5. Consider the reward process \(F^{(i)} = \sum _{j=1}^{|\mathbf{X}^{(i)}|} f(X_j^{(i)},X_{j+1}^{(i)})\), \(i\ge 1\). From Proposition 6, \(F^{(i)}\) and \(|\mathbf{X}^{(i)}|\) are i.i.d. sequences with finite first moments, because \(F^{(i)} \le B |\mathbf{X}^{(i)}|\). Let \(N_t\) and \(R_i\) be as defined in Proposition 6.

Therefore, from the renewal reward theorem (Bremaud 2001, 3-Thm.4.2), we have

where the final equality holds because \(\lim _{t\rightarrow \infty }\frac{R_{N_{t}}}{t} = 1-\lim _{t\rightarrow \infty }\frac{t-R_{N_{t}}}{t}\), and \(\lim _{t\rightarrow \infty }\frac{t-R_{N_{t}}}{t} = 0\) because \(|\mathbf{X}^{\left( \cdot \right) }|<\infty \) w.p. 1, which holds since \(\varvec{\varPhi}\) is positive recurrent.

From Proposition 6 and the definition of \(F^{(i)}\), \(\sum _{i=1}^{N_t} F^{(i)} = \sum _{j=1}^{R_{N_{t}}} f(\varPhi _j,\varPhi _{j+1})\), and because f and \(\pi _{{\varvec{\varPhi }}{}{^{}}}\) are bounded, we have from the ergodic theorem (Bremaud 2001, 3-Cor.4.1),

From Kac’s formula (Aldous and Fill 2002, Cor.2.24), \(\nicefrac {1}{{\mathbb {E}}[|\mathbf{X}^{(i)}|]} = {\pi _{{\varvec{\varPhi }}{}{^{}}}(x_{0})} = \frac{\mathbf{d}{}{^{}}(x_{0})}{2|{\mathcal {E}}{}{^{}}|}\), and

\({\hat{\mu }}_{*}({\mathcal {T}}; f, {\mathcal {G}}{}{^{}})\) is unbiased by linearity of expectations on the summation over \({\mathcal {T}}\), and consistency is a consequence of Kolmogorov’s SLLN (Bremaud 2001, 1-Thm.8.3).

\(\square \)
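The unbiasedness argument can be illustrated by simulation. The sketch below assumes that the RWT Estimate takes the form \(\frac{\mathbf{d}(x_{0})}{2m}\sum _{\mathbf{X}\in {\mathcal {T}}}\sum _{j} f(X_j, X_{j+1})\), which matches the expression \(\frac{\mathbf{d}(x_{0})}{2}F(\mathbf{X})\) that appears in Lemma 4. The toy graph, the symmetric edge function, the seed, and all function names are assumptions made for illustration.

```python
import random

# Hypothetical toy graph: undirected, connected and non-bipartite.
EDGES = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (2, 4)]


def adjacency(edges):
    """Build neighbor lists for an undirected simple graph."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    return adj


def sample_tour(adj, x0, rng):
    """Walk from x0 until the first return; returns the closed path (x0, ..., x0)."""
    path = [x0]
    while True:
        path.append(rng.choice(adj[path[-1]]))
        if path[-1] == x0:
            return path


def rwt_estimate(adj, x0, f, m, rng):
    """Average the per-tour reward over m tours and rescale by d(x0) / 2."""
    total = 0.0
    for _ in range(m):
        tour = sample_tour(adj, x0, rng)
        total += sum(f(u, v) for u, v in zip(tour, tour[1:]))
    return len(adj[x0]) / (2 * m) * total


adj = adjacency(EDGES)
rng = random.Random(0)  # fixed seed so that the run is reproducible
# f(u, v) = u + v gives mu(E) = 24 on this graph; f = 1 gives mu(E) = |E| = 6.
weighted = rwt_estimate(adj, 2, lambda u, v: u + v, 20000, rng)
edge_count = rwt_estimate(adj, 2, lambda u, v: 1, 20000, rng)
```

With this many tours both estimates should land close to their targets of 24 and 6. Because the tours are independent, the loop in `rwt_estimate` could be split across workers without changing the estimator.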

Proof

(Running Time) From Kac’s formula (Aldous and Fill 2002, Cor.2.24), \({\mathbb {E}}[|\mathbf{X}^{(i)}|] = \frac{2|\mathcal{E}|}{\mathbf{d}(x_{0})}\). From Proposition 6, tours can be sampled independently and thus in parallel. All cores will sample an equal number of tours in expectation, yielding the running time bound. \(\square \)
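Kac’s formula can be verified exactly on a small graph by solving the hitting-time equations \(h(u) = 1 + \sum _{v \ne x_{0}} p(u,v)\,h(v)\) and taking one extra step out of \(x_{0}\). The sketch below does this in exact rational arithmetic; the toy graph and all function names are assumptions introduced for illustration.

```python
from fractions import Fraction

# Hypothetical toy graph: undirected, connected and non-bipartite.
EDGES = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (2, 4)]


def adjacency(edges):
    """Build neighbor lists for an undirected simple graph."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    return adj


def expected_return_time(adj, x0):
    """Exact expected tour length E[xi] of the simple random walk started at x0."""
    others = [u for u in adj if u != x0]
    idx = {u: i for i, u in enumerate(others)}
    n = len(others)
    # Augmented system (I - Q) h = 1, where Q is the walk restricted to S \ {x0}.
    A = [[Fraction(0)] * (n + 1) for _ in range(n)]
    for u in others:
        i = idx[u]
        A[i][i] += 1
        A[i][n] = Fraction(1)
        for v in adj[u]:
            if v != x0:
                A[i][idx[v]] -= Fraction(1, len(adj[u]))
    # Gauss-Jordan elimination; I - Q is invertible when the graph is connected.
    for c in range(n):
        pivot_row = next(r for r in range(c, n) if A[r][c] != 0)
        A[c], A[pivot_row] = A[pivot_row], A[c]
        pivot = A[c][c]
        A[c] = [a / pivot for a in A[c]]
        for r in range(n):
            if r != c and A[r][c] != 0:
                factor = A[r][c]
                A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    hitting = {u: A[idx[u]][n] for u in others}
    # One step out of x0, then the hitting time of x0 from the neighbor reached.
    return 1 + sum(Fraction(1, len(adj[x0])) * hitting[v] for v in adj[x0])


adj = adjacency(EDGES)
return_times = {x0: expected_return_time(adj, x0) for x0 in adj}
```

On this graph every entry of `return_times` equals \(\nicefrac {2|\mathcal{E}|}{\mathbf{d}(x_{0})}\); for example, the degree-4 vertex 2 has expected tour length 3. This also shows why high-degree start vertices give shorter tours.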

Proof

(Variance) Because \(f\left( \cdot \right) <B\), and tours are i.i.d., the variance is

From Lemma 2 and Kac’s formula (Aldous and Fill 2002, Cor.2.24), \({\text {Var}}\left( |\mathbf{X}| \right) \) is given by

\(\square \)

Proofs for Section 3

Assumption 2

For each \({\mathcal {G}}{}{^{}}_{r}\), \(1<r\le R\) from Definition 5, assume \(\mathbf{d}{}{^{}}(\zeta _{r})\) is known and that \(p_{{\varvec{\varPhi }}{}{^{}}_{r}}(\zeta _{r}, \cdot )\) can be sampled from.

Proposition 7

(RWTs in \({\varvec{\varPhi }}{}{^{}}_{r}\)) Under Assumption 2, given access only to the original chain \({\varvec{\varPhi }}{}{^{}}\) and stratifying function \(\rho \), let \({\varvec{\varPhi }}{}{^{}}_{r}\) be the random walk in the graph stratum \({\mathcal {G}}{}{^{}}_{r}\) from Definition 5. To sample an RWT \((X_{i})_{i=1}^{\xi }\) over \({\varvec{\varPhi }}{}{^{}}_{r}\) from the supernode \(\zeta _{r}\), we set \(X_{1} = \zeta _{r}\), sample \(X_2 \sim p_{{\varvec{\varPhi }}{}{^{}}_{r}}(\zeta _{r},\cdot )\), and then, until \(\rho (X_{\xi +1}) < r\), we sample

Proof

The proof is a direct consequence of Definitions 5 and 1. \(\square \)

Proposition 8

(Perfectly Stratified Estimate) Under Assumption 2, given the EPS (Definition 6) stratum \({\mathcal {G}}{}{^{}}_{r}\) (Definition 5), bounded \(f :{\mathcal {E}}{}{^{}}\rightarrow {\mathbb {R}}\) and a set of m RWTs \({\mathcal {T}}_{r}\) over \({\varvec{\varPhi }}{}{^{}}_{r}\) from \(\zeta _r\) from Proposition 7, the per stratum estimate is given by

where \(X_j\) is the jth state visited in the RWT \(\mathbf{X}\in {\mathcal {T}}_{r}\). For all \(r>1\), \({\hat{\mu }}({\mathcal {T}}_{r}; f, {\mathcal {G}}{}{^{}}_{r})\) is an unbiased and consistent estimator of \(\mu ({\mathcal {J}}{}{^{}}_{r}) = \sum _{(u,v)\in {\mathcal {J}}{}{^{}}_{r}} f(u,v)\), where \({\mathcal {J}}{}{^{}}_{r}\) is the r-th edge stratum defined in Definition 4.

Proof

Define \(f' :{\mathcal {E}}{}{^{}}_{r} \rightarrow {\mathbb {R}}\) as \(f'(u,v) \triangleq \mathbf{1}{\left\{ u,v \ne \zeta _{r}\right\} } f(u,v)\). By Definition 2, in each RWT \(\mathbf{X}\in {\mathcal {T}}_{r}\), \(f'(X_1,X_2) = f'(X_{|\mathbf{X}|},X_{|\mathbf{X}|+1}) = 0\), and therefore, \({\hat{\mu }}({\mathcal {T}}_{r}; f, {\mathcal {G}}{}{^{}}_{r}) = {\hat{\mu }}_{*}({\mathcal {T}}; f', {\mathcal {G}}{}{^{}}_{r})\), where \({\hat{\mu }}_{*}\) is the RWT Estimate from Lemma 1. As \({\mathcal {G}}{}{^{}}_{r}\) is connected, \( {\mathbb {E}}\left[ {\hat{\mu }}({\mathcal {T}}_{r}; f, {\mathcal {G}}{}{^{}}_{r}) \right] = {\mathbb {E}}\left[ {\hat{\mu }}_{*}({\mathcal {T}}; f', {\mathcal {G}}{}{^{}}_{r}) \right] = \sum _{(u,v) \in {\mathcal {E}}{}{^{}}_{r}} f'(u,v) = \sum _{(u,v) \in {\mathcal {J}}{}{^{}}_{r}} f(u,v) \,, \) where the final equality holds because \({\mathcal {E}}{}{^{}}_{r}\) is the union of \({\mathcal {J}}{}{^{}}_{r}\) and edges incident on the supernode. Consistency is also due to Lemma 1. \(\square \)

1.1 Proof of Proposition 1

Proof

Proposition 1 (a) is necessary because, when it does not hold, there exists a component in which the minimum value of \(\rho \) is \(\ddot{r}>0\), so that in \(\mathcal{G}_{\ddot{r}}\) (Definition 5) the supernode \(\zeta _{\ddot{r}}\) is disconnected from all vertices. If Proposition 1 (b) is violated, a vertex \(\ddot{u}\) exists that is disconnected in \(\mathcal{G}_{\rho (\ddot{u})}\), and if Proposition 1 (c) is violated, the supernode is disconnected. Finally, it is easily seen that these conditions are sufficient to guarantee that each stratum is connected and that the stratification is an EPS. \(\square \)

1.2 Proof of Theorem 1

We begin by defining the multi-set containing the end points of edges between vertex strata.

Definition 12

Given \({\mathcal {G}}{}{^{}}\) stratified into R strata, \(\forall 1 \le q < t \le R\) define border multi-sets as \( {\mathcal {B}}_{q,t} \triangleq \{v \, \forall (u,v) \in {\mathcal {E}}{}{^{}}:u \in {\mathcal {I}}{}{^{}}_{q} {{\,\mathrm{and}\,}}v \in {\mathcal {I}}{}{^{}}_{t}\} \,. \) The degree of the supernode in \({\mathcal {G}}{}{^{}}_{r}\) (Definition 5) is then given by \(\mathbf{d}{}{^{}}(\zeta _{r}) = \sum _{q=1}^{r-1}|{\mathcal {B}}_{q,r}|\), and transitions out of \(\zeta _{r}\) can be sampled by sampling \(q \in \{1,\ldots ,r-1\}\) w.p. \(\propto |{\mathcal {B}}_{q,r}|\) and then by uniformly sampling from \({\mathcal {B}}_{q,r}\).
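Definition 12 can be read as a two-stage sampler: choose the source stratum \(q\) with probability proportional to \(|{\mathcal {B}}_{q,r}|\), then choose uniformly within \({\mathcal {B}}_{q,r}\). The sketch below assumes that the whole graph and the stratification function are available in memory, which the paper’s setting does not require; the toy graph, the map `RHO`, and all function names are hypothetical.

```python
import random
from collections import defaultdict

# Hypothetical toy graph and stratification rho: vertex -> stratum index.
EDGES = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (2, 4)]
RHO = {0: 1, 1: 1, 2: 2, 3: 3, 4: 3}


def border_multisets(edges, rho):
    """B[(q, t)] lists, with multiplicity, the stratum-t endpoints of edges between strata q < t."""
    B = defaultdict(list)
    for u, v in edges:
        if rho[u] > rho[v]:
            u, v = v, u  # orient the edge from the lower to the higher stratum
        if rho[u] < rho[v]:
            B[(rho[u], rho[v])].append(v)
    return dict(B)


def supernode_degree(B, r):
    """d(zeta_r) is the sum over q < r of |B_{q,r}|."""
    return sum(len(B.get((q, r), [])) for q in range(1, r))


def sample_supernode_transition(B, r, rng):
    """Pick q with probability proportional to |B_{q,r}|, then a uniform element of B_{q,r}.

    Assumes d(zeta_r) > 0, i.e., at least one border multiset into stratum r is non-empty.
    """
    qs = [q for q in range(1, r) if B.get((q, r))]
    q = rng.choices(qs, weights=[len(B[(q, r)]) for q in qs])[0]
    return rng.choice(B[(q, r)])


B = border_multisets(EDGES, RHO)
rng = random.Random(1)
next_state = sample_supernode_transition(B, 3, rng)
```

The two-stage draw is equivalent to a uniform draw from the union of the multisets \({\mathcal {B}}_{q,r}\), \(q<r\). The staged form is convenient when only the sizes \(|{\mathcal {B}}_{q,r}|\) and samples from each multiset are available.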

Proposition 9

Given the setting in Definitions 8 and 9, for all \(1 \le r < t \le R\),

i.e., each tour in \({\mathcal {T}}^{\dagger }_{r}\) is perfectly sampled from \({\varvec{\varPhi }}{}{^{}}_{r}\).

Proof

(By Strong Induction) The base case for \(r=1\) holds by the base case in Definition 8. Now assume that Proposition 9 holds for all strata up to and including \(r-1\). Because of the inductive claim and by Definition 12, \(\lim _{|{\mathcal {T}}^{\dagger }_{2}| \rightarrow \infty } \ldots \lim _{|{\mathcal {T}}^{\dagger }_{r-1}| \rightarrow \infty } \widehat{\mathbf{d}}(\zeta _{r}) = \sum _{q=1}^{r-1} {\widehat{\beta }}_{q,r} \overset{a.s}{=}\sum _{q=1}^{r-1} |{\mathcal {B}}_{q,r}| = \mathbf{d}(\zeta _{r}) \,, \) and similarly, \(\lim _{|{\mathcal {T}}^{\dagger }_{2}| \rightarrow \infty } \ldots \lim _{|{\mathcal {T}}^{\dagger }_{r-1}| \rightarrow \infty } {\widehat{p}}_{\varvec{\varPhi}_{r}}(\zeta _{r},\cdot ) \equiv p_{\varvec{\varPhi}_{r}}(\zeta _{r},\cdot ) \) because the inductive claim makes the procedure of sampling transitions out of \(\zeta _{r}\) in Definition 8 equivalent to Definition 12. Equation (15) holds because transition probabilities at all states other than \(\zeta _{r}\) are equivalent in \(\varvec{\varPhi}_{r}\) and \(\widehat{\varvec{\varPhi}}_{r}\) according to Definition 7. Now recall that \( {\widehat{\beta }}_{r,t} = \frac{\widehat{\mathbf{d}}(\zeta _{r})}{|{\mathcal {T}}^{\dagger }_{r}|} \sum _{\mathbf{X}\in {\mathcal {T}}^{\dagger }_{r}}\sum _{j=2}^{|\mathbf{X}|} \mathbf{1}{\left\{ \rho (X_{j}) = t\right\} } \,. \) Because \(\widehat{\mathbf{d}}(\zeta _{r}) = \mathbf{d}(\zeta _{r})\) and the tours are sampled perfectly, \(\lim _{|{\mathcal {T}}^{\dagger }_{2}| \rightarrow \infty } \ldots \lim _{|{\mathcal {T}}^{\dagger }_{r-1}| \rightarrow \infty } {\widehat{\beta }}_{r,t} = {\hat{\mu }}_{*}\left( {\mathcal {T}}^{\dagger }_{r}; f' \right) \,, \) where \(f'(u,v) = \mathbf{1}{\left\{ \rho (v) = t\right\} }\) and \({\hat{\mu }}_{*}\) is from Lemma 1, from which we also use the consistency guarantee to show that under an EPS, Eq. (13) holds as \( \lim _{|{\mathcal {T}}^{\dagger }_{2}| \rightarrow \infty } \ldots \lim _{|{\mathcal {T}}^{\dagger }_{r}| \rightarrow \infty } {\widehat{\beta }}_{r,t} \overset{a.s}{=}\sum _{(u,v) \in \mathcal{E}_{r}} f'(u,v) = |{\mathcal {B}}_{r,t}|\,. \) Because of Proposition 6, concatenating tours \(\mathbf{X}\in {\mathcal {T}}^{\dagger }_{q}\) yields a sample path from \(\varvec{\varPhi}_{r}\), and these samples are distributed according to \(\pi _{\varvec{\varPhi}_{r}}\) as \(|{\mathcal {T}}^{\dagger }_{r'}| \rightarrow \infty \), \(r'\le r\). Therefore, \( \lim _{|{\mathcal {T}}^{\dagger }_{2}| \rightarrow \infty } \ldots \lim _{|{\mathcal {T}}^{\dagger }_{r}| \rightarrow \infty } \uplus _{\mathbf{X}\in {\mathcal {T}}^{\dagger }_{q}}\uplus _{j=2}^{|\mathbf{X}|} \left\{ X_{j} :\rho (X_{j}) = t \right\} \sim \pi '_{\varvec{\varPhi}_{r}} \,, \) where \(\pi '_{\varvec{\varPhi}_{r}}(u) \propto \mathbf{1}{\left\{ \rho (u) = t\right\} } \mathbf{d}_{\mathcal{G}_{r}}(u)\), which is equivalent to \(\textsc {unif}({\mathcal {B}}_{r,t})\) by Definitions 5 and 12, thus proving Eq. (14). \(\square \)

Proof

(Main Theorem) Combining Propositions 9 and 8 proves Theorem 1. \(\square \)

1.3 Proof of Theorem 2

Definition 13

(\(L^{2}\) distance between \({\widehat{\pi }}\) and \(\pi \) (Aldous and Fill 2002)) The \(L^{2}\) distance between a discrete probability distribution \({\widehat{\pi }}\) and a reference distribution \(\pi \) with sample space \(\varOmega \) is given by \(\Vert {\widehat{\pi }} - \pi \Vert _2 = \sum _{i \in \varOmega } \frac{({\widehat{\pi }}(i) - \pi (i))^{2}}{\pi (i)}\).

Definition 14

(Distorted chain) Given a Markov chain \({\varvec{\varPhi }}{}{^{}}\) over finite state space \({\mathcal {S}}{}{^{}}\) and an arbitrary \(x_{0}\in {\mathcal {S}}{}{^{}}\), let \(\widehat{\varvec{\varPhi }}{}{^{}}\) be the distorted chain such that \(\forall \,u\ne x_{0}\), \(p_{\widehat{\varvec{\varPhi }}{}{^{}}}(u,\cdot ) = p_{{\varvec{\varPhi }}{}{^{}}}(u,\cdot )\), and \(p_{\widehat{\varvec{\varPhi }}{}{^{}}}(x_{0},\cdot )\) is an arbitrary distribution with support \({{\,\mathrm{supp}\,}}(p_{\widehat{\varvec{\varPhi }}{}{^{}}}(x_{0},\cdot ))\subseteq {{\,\mathrm{supp}\,}}(p_{{\varvec{\varPhi }}{}{^{}}}(x_{0},\cdot ))\). The distortion is given by \(\Vert p_{\widehat{\varvec{\varPhi }}{}{^{}}}(x_{0},\cdot ) - p_{{\varvec{\varPhi }}{}{^{}}}(x_{0},\cdot )\Vert \) as defined in Definition 13.

Lemma 3

Given a finite state, positive recurrent Markov chain \({\varvec{\varPhi }}{}{^{}}\) over state space \({\mathcal {S}}{}{^{}}\), let \(\widehat{\varvec{\varPhi }}{}{^{}}\) be the chain distorted at some \(x_{0}\in {\mathcal {S}}{}{^{}}\) from Definition 14.

Let \( {\mathcal {X}}= \Bigg \{(X_{1}, \ldots , X_{\xi }) :X_{1} = x_{0}\,,\, \xi = \min \{t>0 :X_{t+1} = x_{0}\} \,,\, p_{\varvec{\varPhi}}(X_{1}, \ldots , X_{\xi })>0\Bigg \} \) denote the set of all possible RWTs of arbitrary length that begin and end at \(x_{0}\) from Definition 2. Given a tour \(\mathbf{Y}\in {\mathcal {X}}\) sampled from \(\varvec{\varPhi}\) and a bounded function \(F:{\mathcal {X}}\rightarrow {\mathbb {R}}\),

where \({\mathbb {E}}_{{\varvec{\varPhi }}{}{^{}}}\) and \({\mathbb {E}}_{\widehat{\varvec{\varPhi }}{}{^{}}}\) are expectations under the distribution of tours sampled from \({\varvec{\varPhi }}{}{^{}}\) and \(\widehat{\varvec{\varPhi }}{}{^{}}\).

Proof

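All tours in \({\mathcal {X}}\) are of finite length because of the positive recurrence of \(\varvec{\varPhi}\). The ratio of the probability of sampling the tour \(\mathbf{Y}= (Y_1, \ldots , Y_{\xi '})\), with \(Y_{1} = Y_{\xi '+1} = x_{0}\), from the chain \(\widehat{\varvec{\varPhi}}\) to that from \(\varvec{\varPhi}\) is given by \( \frac{p_{\widehat{\varvec{\varPhi}}}(\mathbf{Y})}{p_{\varvec{\varPhi}}(\mathbf{Y})} = \frac{p_{\widehat{\varvec{\varPhi}}}(x_{0}, Y_{2}) \prod _{j=2}^{\xi '} p_{\widehat{\varvec{\varPhi}}}(Y_{j}, Y_{j+1})}{p_{\varvec{\varPhi}}(x_{0}, Y_{2}) \prod _{j=2}^{\xi '} p_{\varvec{\varPhi}}(Y_{j}, Y_{j+1})} = \frac{p_{\widehat{\varvec{\varPhi}}}(x_{0}, Y_{2})}{p_{\varvec{\varPhi}}(x_{0}, Y_{2})} \,, \)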

because \(p_{\varvec{\varPhi}}(Y_{j}, \cdot ) = p_{\widehat{\varvec{\varPhi}}}(Y_{j}, \cdot )\) for all \(1<j\le \xi '\), as \(Y_j \ne x_{0}\) by the definitions of \({\mathcal {X}}\) and \(\widehat{\varvec{\varPhi}}\). Because \({{\,\mathrm{supp}\,}}(p_{\widehat{\varvec{\varPhi}}}(x_{0},\cdot )) \subseteq {{\,\mathrm{supp}\,}}(p_{\varvec{\varPhi}}(x_{0},\cdot ))\), \({{\,\mathrm{supp}\,}}(p_{\widehat{\varvec{\varPhi}}}(\mathbf{Y})) \subseteq {{\,\mathrm{supp}\,}}(p_{\varvec{\varPhi}}(\mathbf{Y}))\). The lemma statement therefore follows directly from the definition of importance sampling (Robert and Casella 2013, Def 3.9) with the importance weights derived in Eq. (17).

\(\square \)
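The key step in this proof is that the likelihood ratio of a whole tour collapses to the ratio of its first transition, because the two chains differ only at \(x_{0}\). The sketch below checks this exactly for every tour of bounded length on a toy chain. The graph, the distorted row `p_hat[X0]`, the length cap, and all function names are assumptions introduced for illustration.

```python
from fractions import Fraction

# Hypothetical toy graph and regeneration state.
EDGES = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (2, 4)]
X0 = 2


def random_walk_kernel(edges):
    """Transition kernel p[u][v] of the simple random walk."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, []).append(v)
        adj.setdefault(v, []).append(u)
    return {u: {v: Fraction(1, len(nbrs)) for v in nbrs} for u, nbrs in adj.items()}


def enumerate_tours(p, x0, max_steps):
    """Yield every tour from x0 with at most max_steps transitions, as a closed path."""
    stack = [[x0]]
    while stack:
        path = stack.pop()
        for v in p[path[-1]]:
            if v == x0:
                yield path + [x0]
            elif len(path) < max_steps:
                stack.append(path + [v])


def path_probability(path, kernel):
    """Product of one-step transition probabilities along the closed path."""
    prob = Fraction(1)
    for u, v in zip(path, path[1:]):
        prob *= kernel[u].get(v, Fraction(0))
    return prob


def first_step_weight(path, p, p_hat):
    """Importance weight p_hat(x0, Y_2) / p(x0, Y_2)."""
    x0, y2 = path[0], path[1]
    return p_hat[x0].get(y2, Fraction(0)) / p[x0][y2]


p = random_walk_kernel(EDGES)
# Distorted chain: identical to p except for the row of X0, whose support is a subset.
p_hat = dict(p)
p_hat[X0] = {0: Fraction(1, 2), 1: Fraction(1, 4), 3: Fraction(1, 4)}

tours = list(enumerate_tours(p, X0, max_steps=6))
ratios_agree = all(
    path_probability(y, p_hat) == first_step_weight(y, p, p_hat) * path_probability(y, p)
    for y in tours
)
```

Tours whose first step goes to vertex 4 receive weight zero here, which reflects the support condition in Definition 14. The cap on tour length only bounds the enumeration; the identity itself holds tour by tour.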

Lemma 4

Given a simple random walk \(\varvec{\varPhi}\) on the connected non-bipartite graph \(\mathcal{G}\) from Definition 1, let \(\widehat{\varvec{\varPhi}}\) be the chain distorted at some \(x_{0}\in \mathcal{S}\) from Definition 14 with distortion \(\nu \). Let \(\lambda = \nicefrac {\widehat{\mathbf{d}}(x_{0})}{\mathbf{d}(x_{0})}\). Let \(f:\mathcal{E}\rightarrow {\mathbb {R}}\) be bounded by \(B\), and let \(F(\mathbf{X}) = \sum _{j=1}^{|\mathbf{X}|}f(X_{j}, X_{j+1})\), where \(\mathbf{X}\) is an RWT as defined in “Appendix B.2”. The bias of an RWT Estimate (Eq. 2) computed using tours sampled over \(\widehat{\varvec{\varPhi}}\) and using \(\widehat{\mathbf{d}}(x_{0})\) as the degree satisfies \( \textsc {bias}= \left| {\mathbb {E}}_{\widehat{\varvec{\varPhi}}}\left[ \frac{\widehat{\mathbf{d}}(x_{0})}{2}F(\mathbf{X})\right] - \mu (\mathcal{E})\right| \le \left( \lambda \nu + |1-\lambda | \right) \frac{\sqrt{3} B|\mathcal{E}|}{\sqrt{\delta }}\,, \) where \(\delta \) is the spectral gap of \(\varvec{\varPhi}\).

Proof

Subtracting the two, squaring both sides and using the Cauchy–Schwarz inequality decomposes the squared bias into

where the expectation is under \({\varvec{\varPhi }}{}{^{}}\). Using definitions from the theorem statement,

Because \(F(\mathbf{X}) \le B\xi \), where \(\xi \) is the tour length, Lemma 2 shows that

and combining both biases completes the proof for \(\textsc {bias}\). \(\square \)

Proof

(Main Theorem) Note that by linearity of expectations

where \(\mathbf{X}\) is an RWT on \(\widehat{\varvec{\varPhi }}{}{^{}}_r\) that depends on \({\mathcal {T}}^{\dagger }_{2:r-1}\) and \(f'(u,v) \triangleq \mathbf{1}{\left\{ u,v \ne \zeta _{r}\right\} } f(u,v)\). Applying Lemma 4 completes the proof because \(\widehat{\varvec{\varPhi }}{}{^{}}_{r}\) is a distorted chain by Definition 14. \(\square \)

Proofs for Section 4

1.1 Proof of Proposition 2

Proof

From Wang et al. (2014, Thm-3.1), we know that each disconnected component of G leads to a disconnected component in \(\mathcal{G}^{k-1}\), and if \(\mathcal{I}_{1}\) contains a subgraph in each connected component, Proposition 1 (a) is satisfied. We now prove that \(\forall \, s\in \mathcal{V}^{k-1}\), if \(\rho (s) = r > 1\), \(\exists \, s' \in \mathbf{N}(s) :\rho (s')<r\), which simultaneously satisfies Proposition 1 (b) and Proposition 1 (c).

W.l.o.g. let the vertex with the smallest distance from the seed vertices be denoted by \({\hat{u}} = {{\,\mathrm{argmin}\,}}_{u \in V(s)} \textsc {dist}(u)\). When \(\textsc {dist}({\hat{u}}) > 0\), there exists \(v \in \mathbf{N}_{G}({\hat{u}})\) such that \(\textsc {dist}(v) < \textsc {dist}({\hat{u}})\) by the definition of \(\textsc {dist}\). More concretely, \(v\) would be the penultimate vertex in the shortest path from the seed vertices to \({\hat{u}}\). Let \(v' \ne {\hat{u}}\) be a nonarticulating vertex of \(s\), which is possible because any connected graph has at least 2 nonarticulating vertices. Let \(s_1 = G\left( V(s)\backslash \{v'\} \cup \{v\}\right) \in \mathcal{V}^{k-1}\). Now, \(\rho (s_1) < \rho (s)\) because \(v'\) has been replaced with a vertex at necessarily a smaller distance and because the indicator in the definition of \(\rho \) will always be 0 in this case. Moreover, \(s\) and \(s_1\) differ in exactly one vertex, and hence an edge exists between the two.

When \(\textsc {dist}({\hat{u}}) = 0\), there exists \(v \in \mathbf{N}{}{^{}}_{G}({\hat{u}})\) such that \(\textsc {dist}(v)=0\). There exists a nonarticulating \(v' \in V(s)\backslash V^{*}\) because otherwise \(V^{*}\) would have been disconnected. Observing that \(\textsc {dist}(v') + \mathbf{1}{\left\{ v' \in V({\mathcal {I}}{}{^{}}_{1}) \backslash V^{*}\right\} } >0\) completes the proof of ergodicity. \(\square \)

D.2 Proof of Proposition 3

Proof

(Sampling Probability) Consider Lines 3 to 5. The probability of sampling the pair (u, v) from \( V(s) \times \mathbf{N}{}{^{}}_{G}\left( V(s)\right) \) is given by

where \(\textsc {bias}\) is defined in Line 6 and corrected for in Line 7. After the rejection, therefore, \((u,v) \sim \textsc {unif}( V(s) \times \mathbf{N}{}{^{}}_{G}\left( V(s)\right) )\).

Line 9 constitutes importance sampling with unit weight for pairs (u, v) for which removing u from and adding v to V(s) produces a \((k-1)\)-CIS, and zero weight otherwise. In Line 9, because removing a nonarticulating vertex and adding another vertex to s cannot lead to a disconnected subgraph, we can avoid a DFS when \(u \notin {\mathcal {A}}_{s}\). This completes the proof. \(\square \)
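The shortcut in Line 9 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the adjacency-dictionary representation `adj`, and the precomputed set `articulation` are assumptions made for illustration. The sketch also makes two assumptions explicit that the text leaves implicit: a proposed v that already lies in V(s) is rejected, and the shortcut additionally checks that v is adjacent to a remaining vertex other than u.

```python
from collections import deque


def is_connected(vertices, adj):
    """BFS connectivity check of the subgraph induced by `vertices`."""
    vertices = set(vertices)
    if not vertices:
        return False
    start = next(iter(vertices))
    seen = {start}
    queue = deque([start])
    while queue:
        x = queue.popleft()
        for y in adj[x]:
            if y in vertices and y not in seen:
                seen.add(y)
                queue.append(y)
    return len(seen) == len(vertices)


def swap_is_connected(s_vertices, u, v, adj, articulation):
    """Return True if replacing u by v in s yields a connected induced subgraph.

    `articulation` is the (hypothetical, precomputed) set of articulation
    points of s. When u is not an articulation point, the remaining vertices
    are already connected, so no traversal is needed.
    """
    s_vertices = set(s_vertices)
    if v in s_vertices:
        # Assumption: v must come from outside s to keep the vertex count.
        return False
    remaining = s_vertices - {u}
    if u not in articulation:
        # Shortcut: only check that v attaches to the connected remainder.
        return any(w in remaining for w in adj[v])
    # u is an articulation point: fall back to a full traversal.
    return is_connected(remaining | {v}, adj)
```

On the path graph 0-1-2-3 with s = {0, 1, 2}, only vertex 1 is an articulation point, so swapping out 0 or 2 takes the shortcut branch, whereas swapping out 1 triggers the BFS.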

Proof

(Time Complexity) Assuming access to a precomputed vector of degrees, the part up to Line 1 is \({{\,\mathrm{O}\,}}((k-1)^{2})\). In each proposal, Lines 3 and 4 are \({{\,\mathrm{O}\,}}(k-1)\), and Line 5 is \({{\,\mathrm{O}\,}}(\varDelta _s)\). Line 6 is \({{\,\mathrm{O}\,}}(k-1)\), and the expected complexity of Line 9 is \({{\,\mathrm{O}\,}}((k-1)^{2} \, \nicefrac {|{\mathcal {A}}_{s}|}{k-1})\) because, in expectation, only \(\nicefrac {|{\mathcal {A}}_{s}|}{k-1}\) graph traversals are required per proposal. The acceptance probability is \(\ge \nicefrac {1}{k-1}\) in Line 7 and \(\ge \frac{k-1-|{\mathcal {A}}_{s}|}{k-1}\) in Line 9. The expected number of proposals is therefore \(\le \frac{(k-1)^{2}}{k-1-|{\mathcal {A}}_{s}|}\). As such, the expected time complexity is \({{\,\mathrm{O}\,}}((k-1)^2 (1 +\frac{\varDelta _s + (k-1)|{\mathcal {A}}_{s}|}{k-1-|{\mathcal {A}}_{s}|} ))\). \(\square \)
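As a sketch of where the bound on the number of proposals comes from, write \(p_7\) and \(p_9\) for the acceptance probabilities at Lines 7 and 9 (these two symbols are introduced here for illustration only). Assuming a proposal is kept only if it passes both steps and that proposals are independent, the number of proposals is geometric, which gives:

```latex
\mathbb{E}[\#\text{proposals}]
  \;\le\; \frac{1}{p_7 \, p_9}
  \;\le\; (k-1) \cdot \frac{k-1}{k-1-|\mathcal{A}_s|}
  \;=\; \frac{(k-1)^2}{k-1-|\mathcal{A}_s|}.
% Example: k-1 = 4 and |A_s| = 2 give at most 16/2 = 8 proposals in expectation.
```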

Additional implementation details

E.1 Parallel sampling with a reservoir matrix

Given a reasonably large \({ \textsc {m}} \) and the number of strata R, we initialize an upper triangular matrix of empty reservoirs \([\widehat{\mathbf{U}}_{r,t}]_{2\le r < t \le R}\) and a matrix of atomic counters \([{\hat{{ \textsc {m}}}}_{r,t}]_{2\le r < t\le R}\) initialized to 0. While the tours in stratum r are sampled in parallel, whenever a tour enters the t-th stratum, \({\hat{{ \textsc {m}}}}_{r,t}\) is incremented and, with probability \(\min (1, \nicefrac {{ \textsc {m}}}{{\hat{{ \textsc {m}}}}_{r,t}})\), the state is inserted into a random position in the reservoir \(\widehat{\mathbf{U}}_{r,t}\); otherwise, it is rejected. The only contention between threads in this scheme occurs at the atomic counter and in the rare case where two threads choose the same location to overwrite, in which case ties are broken based on the value of the atomic counter at the insertion time, guaranteeing thread safety. The space complexity of a reservoir matrix is therefore \(O(R^2 { \textsc {m}})\).

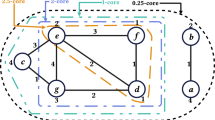

A toy example of this matrix is presented in Fig. 4, where \(R=5\), and the RWTs are being sampled on the graph stratum \({\mathcal {G}}{}{^{}}_{2}\). Whenever (non-gray) states in \({\mathcal {I}}{}{^{}}_{3:5}\) are visited, they are inserted into the corresponding reservoirs; \(\widehat{\mathbf{U}}_{2,5}\) is depicted in detail.

Fig. 4 Parallel RWTs and reservoirs: (a) The set of m RWTs sampled on \({\mathcal {G}}{}{^{}}_{2}\) in parallel, where the supernode \(\zeta _{2}\) is colored black. The gray, blue, red and green colors represent states in strata 2–5, respectively. (b) The upper triangular reservoir matrix in which the cell in the r-th row and t-th column contains samples from \(\widehat{\mathbf{U}}_{r,t}\)
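The update rule for a single reservoir cell can be sketched in Python as follows. This is a single-threaded illustration under stated assumptions, not the parallel implementation described above: the atomic counter is a plain integer, no tie-breaking is modeled, and the names `Reservoir`, `offer`, and `make_reservoir_matrix` are hypothetical. While the reservoir is not yet full, the sketch appends rather than choosing a random position.

```python
import random


class Reservoir:
    """One cell of the reservoir matrix, holding at most `capacity` states."""

    def __init__(self, capacity, rng):
        self.capacity = capacity  # plays the role of the reservoir size
        self.count = 0            # plays the role of the atomic counter
        self.items = []
        self.rng = rng

    def offer(self, state):
        """Count the state and keep it with probability min(1, capacity/count)."""
        self.count += 1
        if len(self.items) < self.capacity:
            # The acceptance probability is 1 while the reservoir is filling.
            self.items.append(state)
            return True
        if self.rng.random() < self.capacity / self.count:
            # Overwrite a uniformly chosen position.
            self.items[self.rng.randrange(self.capacity)] = state
            return True
        return False


def make_reservoir_matrix(num_strata, capacity, seed=0):
    """Create one reservoir per pair (r, t) with 2 <= r < t <= num_strata."""
    rng = random.Random(seed)
    return {
        (r, t): Reservoir(capacity, rng)
        for r in range(2, num_strata + 1)
        for t in range(r + 1, num_strata + 1)
    }
```

For instance, `make_reservoir_matrix(5, 10)` creates the six cells of the toy example with R = 5, and calling `offer` on the cell `(2, 5)` corresponds to a tour on the second stratum entering the fifth.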

E.2 PSRW neighborhood

The neighborhood of a \(k\)-CIS s in \({\mathcal {G}}{}{^{k}}\) is determined by the set of all vertex pairs \(u,v \in V\) such that replacing u with v in s yields a \(k\)-CIS. Formally,

where \(\mathbf{N}{}{^{}}_{G}(V(s)) = \cup _{x \in V(s)}\mathbf{N}{}{^{}}_{G}(x)\) is the union of the neighborhoods of the vertices in s. The size of the neighborhood is then \({{\,\mathrm{O}\,}}(k\,|\mathbf{N}{}{^{}}_{G}(V(s))|)\in {{\,\mathrm{O}\,}}(k^2\varDelta _{G})\) because \(|\mathbf{N}{}{^{}}_{G}(V(s))| \in {{\,\mathrm{O}\,}}(k \varDelta _{G})\), where \(\varDelta _{G}\) is the maximum degree in G. Each potential neighbor further requires a connectivity check in the form of a BFS or DFS, which takes \({{\,\mathrm{O}\,}}(k^{2})\) time on a k-vertex subgraph. The naive neighborhood sampling algorithm therefore requires \({{\,\mathrm{O}\,}}(k^{4} \varDelta _{G})\) time.
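The naive enumeration described above can be sketched in Python as follows. This is an illustration under assumptions: the graph is an adjacency dictionary `adj`, the function names are hypothetical, and candidate vertices v are restricted to those outside s so that every result keeps k vertices.

```python
from collections import deque


def is_connected(vertices, adj):
    """BFS connectivity check of the subgraph induced by `vertices`."""
    vertices = set(vertices)
    if not vertices:
        return False
    start = next(iter(vertices))
    seen = {start}
    queue = deque([start])
    while queue:
        x = queue.popleft()
        for y in adj[x]:
            if y in vertices and y not in seen:
                seen.add(y)
                queue.append(y)
    return len(seen) == len(vertices)


def cis_neighbors(s_vertices, adj):
    """Enumerate all CISs obtained from s by replacing one vertex u with v."""
    s = frozenset(s_vertices)
    # Candidate replacements: neighbors of any vertex of s, outside s.
    candidates = set().union(*(adj[x] for x in s)) - s
    neighbors = set()
    for u in s:                   # k choices for the removed vertex
        remaining = s - {u}
        for v in candidates:      # O(k * max degree) choices for the new vertex
            swapped = remaining | {v}
            if is_connected(swapped, adj):  # one traversal per candidate pair
                neighbors.add(frozenset(swapped))
    return neighbors
```

On the path graph 0-1-2-3-4 with s = {1, 2, 3}, the four candidate pairs that remove an endpoint or the middle vertex are each checked, and only {0, 1, 2} and {2, 3, 4} survive the connectivity check.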

E.2.1 Articulation points

Apart from the rejection sampling algorithm from Algorithm 1, we use articulation points to efficiently compute the subgraph bias \(\gamma \) from Eq. (9). Specifically, given the \((k-1)\)-CIS s, \(\gamma (s) = \left( {\begin{array}{c}\kappa -|{\mathcal {A}}_{s}|\\ 2\end{array}}\right) \), where \({\mathcal {A}}_{s}\) is the set of articulation points of s. This draws directly from Wang et al. (2014, Sec-3.3) and the definition of articulation points. Hopcroft and Tarjan (1973) showed that for any simple graph s the set of articulation points can be computed in \(O(|V(s)| + |E(s)|)\) time.
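A minimal Python sketch of the linear-time computation is given below, using the standard DFS low-link technique. The function name and the adjacency-dictionary input are assumptions for illustration; the recursion is acceptable here only because the subgraphs of interest are small.

```python
def articulation_points(vertices, adj):
    """Articulation points of the simple subgraph induced by `vertices`.

    Uses one DFS with discovery times and low-link values, so the running
    time is linear in the number of vertices and edges of the subgraph.
    """
    vertices = set(vertices)
    disc = {}       # discovery time of each visited vertex
    low = {}        # earliest discovery time reachable from the DFS subtree
    points = set()
    timer = 0

    def dfs(x, parent):
        nonlocal timer
        disc[x] = low[x] = timer
        timer += 1
        children = 0
        for y in adj[x]:
            if y not in vertices or y == parent:
                continue  # stay inside the subgraph; skip the tree edge back
            if y in disc:
                low[x] = min(low[x], disc[y])  # back edge
            else:
                children += 1
                dfs(y, x)
                low[x] = min(low[x], low[y])
                # A non-root x separates y's subtree if it cannot reach above x.
                if parent is not None and low[y] >= disc[x]:
                    points.add(x)
        # The DFS root is an articulation point iff it has several DFS children.
        if parent is None and children > 1:
            points.add(x)

    for root in vertices:
        if root not in disc:
            dfs(root, None)
    return points
```

For the path 0-1-2, the only articulation point is vertex 1; a triangle has none.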

E.3 Proof of Proposition 4

Proposition 10

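(Extended Version of Proposition 4) We assume a constant number of tours m in each stratum and ignore graph loading. The Ripple estimator of \(k\)-CIS counts described in Algorithm 2 has space complexity in

\({\widehat{{{\,\mathrm{O}\,}}}}(k^3 + |{\mathcal {H}}|) \equiv {{\,\mathrm{O}\,}}(k^3 D_{G}^2 \, { \textsc {m}} + |{\mathcal {H}}|),\)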

where \({\widehat{{{\,\mathrm{O}\,}}}}\) ignores all factors other than k and \(|{\mathcal {H}}|\), \({ \textsc {m}} \) is the size of the reservoir from Sect. 4.3, \(D_{G}\) is the diameter of G, and \(|{\mathcal {H}}|\) is the number of patterns of interest.

The total number of random walk steps is given by \({{\,\mathrm{O}\,}}(k^3 m D_{G} \varDelta _{G} C_{\textsc {rej}})\), where \(C_{\textsc {rej}}\) is the expected number of rejections in Line 21 of Algorithm 2 and \(\varDelta _{G}\) is the largest degree in G. The total time complexity is \({\widehat{{{\,\mathrm{O}\,}}}}(k^7 + |{\mathcal {H}}|)\).

Remark 2

In practice, we adapt the proposals in Algorithm 1 to minimize \(C_{\textsc {rej}}\) using heuristics over the values of \(\textsc {dist}\left( \cdot \right) \) from Proposition 2.

Lemma 5

Given a graph stratum \({\mathcal {G}}{}{^{}}_{r}\) from Definition 5, for some \(r>1\), define \(\alpha _r = \nicefrac {|\{u \in {\mathcal {I}}{}{^{}}_{r} :\mathbf{N}{}{^{}}(u) \cap {\mathcal {I}}{}{^{}}_{1:r-1} \ne \emptyset \}|}{|{\mathcal {I}}{}{^{}}_{r}|}\) as the fraction of vertices in the r-th vertex stratum that share an edge with a previous stratum. The return time \(\xi _r\) of the chain \({\varvec{\varPhi }}{}{^{}}_{r}\) to the supernode \(\zeta _r \in {\mathcal {V}}{}{^{}}_{r}\) follows \({\mathbb {E}}_{{\varvec{\varPhi }}{}{^{}}_{r}}[\xi _r] \le \frac{2 {\bar{\mathbf{d}}}{}{^{}}_{r}}{\alpha _{r}}\), where \({\bar{\mathbf{d}}}{}{^{}}_{r}\) is the average degree in \({\mathcal {G}}{}{^{}}\) of all vertices in \({\mathcal {I}}{}{^{}}_{r}\).

Proof

Because \(\alpha _r |{\mathcal {I}}{}{^{}}_{r}|\) vertices have at least one edge incident on \(\zeta _r\), \(\mathbf{d}{}{^{}}_{{\mathcal {G}}{}{^{}}_{r}}(\zeta _r) \ge \alpha _r |{\mathcal {I}}{}{^{}}_{r}|\). From Definition 5, because all edges not incident on \({\mathcal {I}}{}{^{}}_{r}\) are removed from \({\mathcal {G}}{}{^{}}_{r}\), \({{\,\mathrm{Vol}\,}}({\mathcal {G}}{}{^{}}_{r}) \le 2 \sum _{u \in {\mathcal {I}}{}{^{}}_{r}} \mathbf{d}{}{^{}}_{{\mathcal {G}}{}{^{}}}(u)\). Therefore, from Lemma 1,

\(\square \)
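The chain of inequalities behind the bound in Lemma 5 can be written out as a sketch. It assumes that Lemma 1 (not restated in this appendix) is the standard return-time identity for a random walk on an undirected graph, namely that the expected return time to a vertex equals the graph volume divided by the degree of that vertex:

```latex
\mathbb{E}_{\Phi_r}[\xi_r]
  \;=\; \frac{\mathrm{Vol}(\mathcal{G}_r)}{\mathbf{d}_{\mathcal{G}_r}(\zeta_r)}
  \;\le\; \frac{2 \sum_{u \in \mathcal{I}_r} \mathbf{d}_{\mathcal{G}}(u)}{\alpha_r \, |\mathcal{I}_r|}
  \;=\; \frac{2 \, \bar{\mathbf{d}}_r}{\alpha_r},
\qquad \text{where } \bar{\mathbf{d}}_r = \frac{1}{|\mathcal{I}_r|} \sum_{u \in \mathcal{I}_r} \mathbf{d}_{\mathcal{G}}(u).
```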

Proposition 11

The Ergodicity-Preserving Stratification from Proposition 2 is such that \(\alpha _{r}=1\) for all \(r>1\) as defined in Lemma 5, and consequently, the diameter of each graph stratum is \(\le 4\). The total number of strata \(R \in {{\,\mathrm{O}\,}}(k\,D_{G})\), where \(D_{G}\) is the diameter of G.

Proof

We show in “Appendix D.1” that for each vertex \(s\in {\mathcal {V}}{}{^{k-1}}\), if \(\rho (s) = r > 1\), there exists \(s' \in \mathbf{N}{}{^{}}(s)\) such that \(\rho (s')<r\). This implies that \(\alpha _{r} = 1\). In \({\mathcal {G}}{}{^{}}_{r}\), therefore, all vertices in \({\mathcal {I}}{}{^{}}_{r}\) are at unit distance from \(\zeta _{r}\), and vertices in \(\mathbf{N}{}{^{}}({\mathcal {I}}{}{^{}}_{r}) \backslash {\mathcal {I}}{}{^{}}_{r}\) are at a distance of 2 from \(\zeta _{r}\). Because no other vertices are present in \({\mathcal {G}}{}{^{}}_{r}\), this completes the proof of the first part. Trivially, \(R \le (k-1) \cdot \max _{u\in V} \textsc {dist}(u) \in {{\,\mathrm{O}\,}}(k \cdot D_{G})\). \(\square \)
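The distances that enter the bound on R can be obtained with a single multi-source BFS. The Python sketch below illustrates this under assumptions: `adj` is an adjacency dictionary, `seeds` is the list of seed vertices, both function names are hypothetical, and every vertex is assumed reachable from some seed. It computes only \(\textsc {dist}\) and the upper bound \((k-1) \cdot \max _{u} \textsc {dist}(u)\), not the stratum index \(\rho \) itself.

```python
from collections import deque


def seed_distances(adj, seeds):
    """Multi-source BFS: distance of every reachable vertex to its nearest seed."""
    dist = {s: 0 for s in seeds}
    queue = deque(dist)  # start from all seeds at once
    while queue:
        x = queue.popleft()
        for y in adj[x]:
            if y not in dist:
                dist[y] = dist[x] + 1
                queue.append(y)
    return dist


def strata_upper_bound(dist, k):
    """Upper bound (k - 1) * max dist on the number of strata."""
    return (k - 1) * max(dist.values())
```

On a path with five vertices and the single seed 0, the largest distance is 4, so with k = 3 the bound evaluates to 8; adding vertex 4 as a second seed halves the largest distance and hence the bound.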

Proof

(Memory Complexity) From Algorithm 2, we compute a single count estimate per stratum and maintain reservoirs and inter-partition edge count estimates for each \(2\le q<t \le R\). Because a reservoir \(\widehat{\mathbf{U}}_{q,t}\) needs \({{\,\mathrm{O}\,}}(k { \textsc {m}})\) space (“Appendix E.1”), the total memory requirement is \({{\,\mathrm{O}\,}}( R^{2} \, k { \textsc {m}})\), where R is the number of strata. Plugging in \(R \in {{\,\mathrm{O}\,}}(k D_{G})\) from Proposition 11, and because storing the output \({\hat{\mu }}\) requires \({{\,\mathrm{O}\,}}(|{\mathcal {H}}|)\) memory, the proof is completed. \(\square \)

Proof

(Time Complexity) The stratification requires a single BFS \(\in {{\,\mathrm{O}\,}}(|V|+|E|)\) from Sect. 4.2. In Line 3, the estimation phase starts by iterating over the entire higher-order neighborhood of each subgraph in \({\mathcal {I}}{}{^{}}_1\). Based on “Appendix E.2.1”, Line 5 is in \({{\,\mathrm{O}\,}}(k^2)\). Because the size of the higher-order neighborhood of each subgraph is \({{\,\mathrm{O}\,}}(k^2 \varDelta _{G})\) from “Appendix E.2”, the initial estimation phase will require \({{\,\mathrm{O}\,}}(|{\mathcal {I}}{}{^{}}_1| \, k^4 \varDelta _{G})\) time.

In all other strata \(r=2, \ldots , R\), we assume that m tours are sampled in Line 8. Starting each tour (Lines 9 to 11) requires \({{\,\mathrm{O}\,}}(R)\) time, leading to a total time of \({{\,\mathrm{O}\,}}(m\,R^2) \in {{\,\mathrm{O}\,}}(m k^2 D_{G}^2)\) because \(R \in {{\,\mathrm{O}\,}}(k D_{G})\) from Proposition 11. The total time for these ancillary procedures is \({{\,\mathrm{O}\,}}(m k^2 D_{G}^2 + |{\mathcal {I}}{}{^{}}_1| \, k^4 \varDelta _{G})\).

Therefore, the time complexity of bookkeeping and setup is \({{\,\mathrm{O}\,}}(m k^2 D_{G}^2 + |{\mathcal {I}}{}{^{}}_1| \, k^4 \varDelta _{G} + |V| + |E|) \in {\widehat{{{\,\mathrm{O}\,}}}}(k^4)\). The time complexity at each random walk step is \({{\,\mathrm{O}\,}}((k-1)^{2}\varDelta _{G} + (k-1)^4)\in {\widehat{{{\,\mathrm{O}\,}}}}(k^4)\) from “Appendix D.2” and “Appendix E.2.1”. We assume that the expected number of rejections in Line 21 is given by \(C_{\textsc {rej}}\). The total number of random walk steps is given by \({{\,\mathrm{O}\,}}(R\,m\,C_{\textsc {rej}})\) times the expected tour length. By Lemma 5 and Proposition 11, the expected tour length is \({{\,\mathrm{O}\,}}(\varDelta _{{\mathcal {G}}{}{^{k-1}}})\equiv {{\,\mathrm{O}\,}}(k^2 \varDelta _{G})\). Therefore, the total number of random walk steps is \({{\,\mathrm{O}\,}}(k^3 m D_{G} \varDelta _{G} C_{\textsc {rej}})\).

Printing the output \({\hat{\mu }}\) takes \({{\,\mathrm{O}\,}}(|{\mathcal {H}}|)\) time. We assume that updating \({\hat{\mu }}\) takes amortized constant time when a hashmap is used to store the elements of the vector. Because each update prescribed by Eq. (9) increments a single key in this hashmap, the proof is completed. \(\square \)
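As a sketch of how the pieces of this proof combine, the following substitution multiplies the number of random walk steps by the per-step cost and adds the setup and output costs. Treating m, \(D_{G}\), \(\varDelta _{G}\), and \(C_{\textsc {rej}}\) as the factors that \({\widehat{{{\,\mathrm{O}\,}}}}\) ignores is the reading assumed here:

```latex
\underbrace{O(R \, m \, C_{\mathrm{rej}})}_{\text{tours and rejections}}
\cdot \underbrace{O(k^2 \varDelta_G)}_{\text{expected tour length}}
  \;\subseteq\; O(k^3 \, m \, D_G \, \varDelta_G \, C_{\mathrm{rej}})
  \quad \text{using } R \in O(k D_G),
\\[4pt]
\underbrace{\widehat{O}(k^3)}_{\text{steps}}
\cdot \underbrace{\widehat{O}(k^4)}_{\text{cost per step}}
+ \underbrace{\widehat{O}(k^4)}_{\text{setup}}
+ \underbrace{O(|\mathcal{H}|)}_{\text{output}}
  \;=\; \widehat{O}(k^7 + |\mathcal{H}|).
```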

Cite this article

Teixeira, C.H.C., Kakodkar, M., Dias, V. et al. Sequential stratified regeneration: MCMC for large state spaces with an application to subgraph count estimation. Data Min Knowl Disc 36, 414–447 (2022). https://doi.org/10.1007/s10618-021-00802-3

DOI: https://doi.org/10.1007/s10618-021-00802-3