Abstract

Statistically sound pattern discovery harnesses the rigour of statistical hypothesis testing to overcome many of the issues that have hampered standard data mining approaches to pattern discovery. Most importantly, application of appropriate statistical tests allows precise control over the risk of false discoveries—patterns that are found in the sample data but do not hold in the wider population from which the sample was drawn. Statistical tests can also be applied to filter out patterns that are unlikely to be useful, removing uninformative variations of the key patterns in the data. This tutorial introduces the key statistical and data mining theory and techniques that underpin this fast developing field. We concentrate on two general classes of patterns: dependency rules that express statistical dependencies between condition and consequent parts and dependency sets that express mutual dependence between set elements. We clarify alternative interpretations of statistical dependence and introduce appropriate tests for evaluating statistical significance of patterns in different situations. We also introduce special techniques for controlling the likelihood of spurious discoveries when multitudes of patterns are evaluated. The paper is aimed at a wide variety of audiences. It provides the necessary statistical background and summary of the state-of-the-art for any data mining researcher or practitioner wishing to enter or understand statistically sound pattern discovery research or practice. It can serve as a general introduction to the field of statistically sound pattern discovery for any reader with a general background in data sciences.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Pattern discovery is a core technique of data mining that aims at finding all patterns of a specific type that satisfy certain constraints in the data (Agrawal et al. 1993; Cooley et al. 1997; Rigoutsos and Floratos 1998; Kim et al. 2010). Common pattern types include frequent or correlated sets of variables, association and correlation rules, frequent subgraphs, subsequencies, and temporal patterns. Traditional pattern discovery has emphasized efficient search algorithms and computationally well-behaving constraints and pattern types, like frequent pattern mining (Aggarwal and Han 2014), and less attention has been paid to the statistical validity of patterns. This has also restricted the use of pattern discovery in many applied fields, like bioinformatics, where one would like to find certain types of patterns without risking costly false or suboptimal discoveries. As a result, there has emerged a new trend towards statistically sound pattern discovery with strong emphasis on statistical validity. In statistically sound pattern discovery, the first priority is to find genuine patterns that are likely to reflect properties of the underlying population and hold also in future data. Often the pattern types are also different, because they have been dictated by the needs of application fields rather than computational properties.

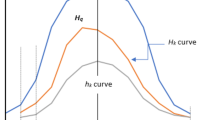

The problem of statistically sound pattern discovery is illustrated in Fig. 1. Usually, the analyst has a sample of data drawn from some population of interest. This sample is typically only a very small proportion of the total population of interest and may contain noise. The pattern discovery tool is applied to this sample, finding some set of patterns. It is unrealistic to expect this set of discovered patterns to directly match the ideal patterns that would be found by direct analysis of the real population rather than a sample thereof. Indeed, it is clear that in at least some cases, the application of naive techniques results in the majority of patterns found being only spurious artifacts. An extreme example of this problem arises with the popular minimum support and minimum confidence technique (Agrawal et al. 1993) when applied to the well-known Covtype benchmark dataset from the UCI repository (Lichman 2013). The minimum support and minimum confidence technique seeks to find the frequent positive dependencies in data using thresholds for minimum frequency (‘support’) and precision (‘confidence’). For the Covtype dataset, the top 197,183,686 rules found by minimum support and minimum confidence are in fact negative dependencies (Webb 2006). This gives rise to the suggestion that the oft cited problem of pattern discovery finding unmanageably large numbers of patterns is largely due to standard techniques returning results that are dominated by spurious patterns (Webb 2011).

There is a rapidly growing body of pattern discovery techniques being developed in the data science community that utilize statistics to control the risk of such spurious discoveries. This tutorial paper grew out of tutorials presented at ECML PKDD 2013 (Hämäläinen and Webb 2013) and KDD-14 (Hämäläinen and Webb 2014). It introduces the relevant statistical theory and key techniques for statistically sound pattern discovery. We concentrate on pattern types that express statistical dependencies between categorical attributes, such as dependency rules (dependencies between condition and consequent parts) and dependency sets (mutual dependencies between set elements). The same techniques of testing statistical significance of dependence also apply to situations where one would like to test dependencies in other types of patterns, like dependencies between subgraphs and classes or between frequent episodes.

To keep the scope manageable, we do not describe actual search algorithms but merely the statistical techniques that are employed during the search. We aim at a generic presentation that is not bound to any specific search method, pattern type or school of statistics. Instead, we try to clarify alternative interpretations of statistical dependence and the underlying assumptions on the origins of data, because they often lead to different statistical methods and also different patterns to be selected. We describe the preconditions, limitations, strengths, and shortcomings of different approaches to help the reader to select a suitable method for the problem at hand. However, we do not make any absolute recommendations, as there is no one correct way to test statistical significance or reliability of patterns. Rather, the appropriate choice is always problem-dependent.

The paper is aimed at a wide variety of audiences. The main goal is to offer a general introduction to the field of statistically sound pattern discovery for any reader with a general background in data sciences. Knowledge on the main principles, important concerns, and alternative techniques is especially useful for practical data miners (how to improve the quality or test the reliability of discovered patterns) and algorithm designers (how to target the search into the most reliable patterns). Another goal is to introduce possibilities of pattern discovery to researchers in other fields, like bioscientists, for whom statistical significance of findings is the main concern and who would like to find new useful information from large data masses. As a prerequisite, we assume knowledge of the basic concepts of probability theory. The paper provides the necessary statistical background and summary of the state of the art, but a knowledgeable reader may well skip preliminaries (Sects. 2.2–2.3) and the overview of multiple hypothesis testing (Sect. 6.1).

In the paper we have tried to use terminology that is consistent with statistics for two reasons. First, knowing statistical terms makes it easier to consult external sources, like textbooks in statistics, for further knowledge. Second, common terminology should make the paper more readable to wider audience, like reseachers from applied science who would like to extend their repertoire of statistical analysis with pattern discovery techniques. To achieve this goal, we have avoided some special terms originated in pattern discovery that have another meaning in statistics or may become easily confused in this context (see Appendix).

The rest of the paper is organized as follows. In Sect. 2 we give definitions of various types of statistical dependence and introduce the main principles and approaches of statistical significance testing. In Sect. 3 we investigate how statistical significance of dependency rules is evaluated under different assumptions. Especially, we contrast two alternative interpretations of dependency rules that are called variable-based and value-based interpretations and introduce appropriate tests for different situations. In Sect. 4 we discuss how to evaluate statistical significance of the improvement of one rule over another one. In Sect. 5 we survey the key techniques that have been developed for finding different types of statistically significant dependency sets. In Sect. 6 we discuss the problem of multiple hypothesis testing. We describe the main principles and popular correction methods and then introduce some special techniques for increasing power in the pattern discovery context. Finally, in Sect. 7 we summarize the main points and present conclusions.

2 Preliminaries

In this paper, we consider patterns that express statistical dependence. Dependence is usually defined in the negative, as absence of independence. Therefore, we begin by defining different types of statistical independence that are needed in defining dependence patterns and their relationships, like improvement of one pattern over another one. After that, we give an overview of the main principles and approaches of statistical significance testing. These approaches are applicable to virtually any pattern type, but we focus on how they are used in independence testing. In the subsequent sections, we will describe in detail how to evaluate statistical significance of dependency patterns or their improvement under different sets of assumptions.

2.1 Notations

The mathematical notations used in this paper are given in Table 1. We note that the sample space spun by variables \(A_1,\ldots ,A_k\) is \({\mathcal {S}}= Dom (A_1) \times \ldots \times Dom (A_k)\). When \(A_i\)s are binary variables \({\mathcal {S}}=\{0,1\}^k\). Sample points \({\mathbf {r}}\in {\mathcal {S}}\) correspond to atomic events and all other events can be presented as their disjunctions. Often these can be presented in a reduced form, for example, event \((A_1{=}1,A_2{=}1)\vee (A_1{=}1,A_2{=}0)\) reduces to \((A_1{=}1)\). In this paper, we focus on events that can be presented as conjunctions \(\mathbf {X\,{=}\,x}\), where \({\mathbf {X}}\,{\subseteq }\, \{A_1,\ldots ,A_k\}\). When it is clear from the context, we notate elements of \({\mathbf {X}}\), \(|{\mathbf {X}}|=m\), by \(A_1,\ldots ,A_m\) instead of more complicated \(A_{i_1},\ldots ,A_{i_m}\), \(\{i_1,\ldots ,i_m\}\subseteq \{1,\ldots ,k\}\). We also note that data set \({\mathcal {D}}\) is defined as a vector of data points so that duplicate rows (i.e., rows \({\mathbf {r}}_i\), \({\mathbf {r}}_j\) where \({\mathbf {r}}_i={\mathbf {r}}_j\) but \(i\ne j\)) can be distinguished by their index numbers.

2.2 Statistical dependence

The notion of statistical dependence is equivocal and even the simplest case, dependence between two events, is subject to alternative interpretations. Interpretations of statistical dependence between more than two events or variables are even more various. In the following, we introduce the main types of statistical independence that are needed for defining dependency patterns and evaluating their statistical significance and mutual relationships.

2.2.1 Dependence between two events

Definitions of statistical dependence are usually based on the classical notion of statistical independence between two events. We begin from a simple case where the events are variable-value combinations, \(A\,{=}\,a\) and \(B\,{=}\,b\).

Definition 1

(Statistical independence between two events) Let \(A\,{=}\,a\) and \(B\,{=}\,b\) be two events, \(P(A\,{=}\,a)\) and \(P(B\,{=}\,b)\) their marginal probabilities, and \(P(A\,{=}\,a,B\,{=}\,b)\) their joint probability. Events \((A\,{=}\,a)\) and \((B\,{=}\,b)\) are statistically independent, if

Statistical dependence is seldom defined formally, but in practice, there are two approaches. If dependence is considered as a Boolean property, then any departure from complete independence (Eq. 1) is defined as dependence. Another approach, prevalent in statistical data analysis, is to consider dependence as a continuous property ranging from complete independence to complete dependence. Complete dependence itself is an ambiguous term, but usually it refers to equivalence of events: \(P(A\,{=}\,a,B\,{=}\,b)=P(A\,{=}\,a)=P(B\,{=}\,b)\) (perfect positive dependence) or mutual exclusion of events: \(P(A\,{=}\,a,B{\ne }b)=P(A\,{=}\,a)=P(B{\ne }b)\) (perfect negative dependence).

The strength of dependence between two events can be evaluated with several alternative measures. In pattern discovery, two of the most popular measures are leverage and lift.

Leverage is equivalent to Yule’s \(\delta \) (Yule 1912), Piatetsky-Shapiro’s unnamed measure (Piatetsky-Shapiro 1991), and Meo’s ‘dependence value’ (Meo 2000). It measures the absolute deviation of the joint probability from its expectation under independence:

We note that this is the same as covariance between binary variables A and B.

Lift has also been called ‘interest’ (Brin et al. 1997), ‘dependence’ (Wu et al. 2004), and ‘degree of independence’ (Yao and Zhong 1999). It measures the ratio of the joint probability and its expectation under independence:

For perfectly independent events, leverage is \(\delta =0\) and lift is \(\gamma =1\), for positive dependencies \(\delta >0\) and \(\gamma >1\), and for negative dependencies, \(\delta <0\) and \(\gamma <1\).

If the real probabilities of events were known, the strength of dependence could be determined accurately. However, in practice, the probabilities are estimated from the data. The most common method is to approximate the real probabilities with relative frequencies (maximum likelihood estimates) but other estimation methods are also possible. The accuracy of these estimates depends on how representative and error-free the data is. The size of the data affects also precision, because continuous probabilities are approximated with discrete frequencies. Therefore, it is quite possible that two independent events express some degree of dependence in the data (i.e., \({\hat{P}}(A\,{=}\,a,B\,{=}\,b)\ne {\hat{P}}(A\,{=}\,a){\hat{P}}(B\,{=}\,b)\), where \({\hat{P}}\) is the estimated probability, even if \(P(A\,{=}\,a,B\,{=}\,b)=P(A\,{=}\,a)P(B\,{=}\,b)\) in the population). In the worst case, two events always co-occur in the data, indicating maximal dependence, even if they are actually independent. To some extent the probability of such false discoveries can be controlled by statistical significance testing, which is discussed in Sect. 2.3. In the other extreme, two dependent events may appear independent in the data (i.e., \({\hat{P}}(A\,{=}\,a,B\,{=}\,b)={\hat{P}}(A\,{=}\,a){\hat{P}}(B\,{=}\,b)\)). However, this is not possible if the actual dependence is sufficiently strong (i.e., \(P(A\,{=}\,a,B\,{=}\,b)=P(A\,{=}\,a)\) or \(P(A\,{=}\,a,B\,{=}\,b)=P(B\,{=}\,b)\)), assuming that the data is error-free. Such missed discoveries are harder to detect, but to some extent the problem can be alleviated by using powerful methods in significance testing (Sect. 2.3).

2.2.2 Dependence between two variables

For each variable, we can define several events which describe its values. If the variable is categorical, it is natural to consider each variable-value combination as a possible event. Then, the independence between two categorical variables can be defined as follows:

Definition 2

(Statistical independence between two variables) Let A and B be two categorical variables, whose domains are \( Dom (A)\) and \( Dom (B)\). A and B are statistically independent, if for all \(a \in Dom(A)\) and \(b\in Dom(B)\)\(P(A\,{=}\,a,B\,{=}\,b)=P(A\,{=}\,a)P(B\,{=}\,b)\).

Once again, dependence can be defined either as a Boolean property (lack of independence) or a continuous property. However, there is no standard way to measure the strength of dependence between variables. In practice, the measure is selected according to data and modelling purposes. Two commonly used measures are the \(\chi ^2\)-measure

and mutual information

If the variables are binary, the notions of independence between variables and the corresponding events coincide. Now independence between any of the four value combinations AB, \(A\lnot B\),\(\lnot AB\), \(\lnot A\lnot B\) means independence between variables A and B and vice versa. In addition, the absolute value of leverage is the same for all value combinations and can be used to measure the strength of dependence between binary variables. This is shown in the corresponding contingency table (Fig. 2). Unfortunately, this handy property does not hold for multivalued variables. Figure 3 shows an example contingency table for two three-valued variables where some value combinations are independent and others dependent.

2.2.3 Dependence between many events or variables

The notion of statistical independence can be generalized to three or more events or variables in several ways. The most common types of independence are mutual independence, bipartition independence, and conditional independence (see e.g., Agresti 2002, p. 318). In the following, we give general definitions for these three types of independence.

In statistics and probability theory, mutual independence of a set of events is classically defined as follows (see e.g., Feller 1968, p. 128):

Definition 3

(Mutual independence) Let \({\mathbf {X}}=\{A_1,\ldots ,A_m\}\) be a set of variables, whose domains are \( Dom (A_i)\), \(i=1,\ldots ,m\). Let \(a_i\in Dom (A_i)\) notate a value of \(A_i\). A set of events \((A_1\,{=}\,a_1,\ldots ,A_m\,{=}\,a_m)\) is called mutually independent if for all \(\{i_1,\ldots ,i_{m'}\}\subseteq \{1,\ldots ,m\}\) holds

If variables \(A_i{\in }{\mathbf {X}}\) are binary, the conjunction of true-valued variables \((A_1{=}1,\ldots ,\)\(A_m{=}1)\) can be expressed as \(A_1,\ldots ,A_m\) and the condition for mutual independence reduces to \(\forall {\mathbf {Y}}\,{\subseteq }\, {\mathbf {X}}\)\(P({\mathbf {Y}})=\prod _{A_i\in {\mathbf {Y}}}P(A_i)\). An equivalent condition is to require that for all truth value combinations \((a_1,\ldots ,a_m)\in \{0,1\}^m\) holds \(P(A_1{=}a_1,\ldots ,A_m{=}a_m)=\prod _{i=1}^m P(A_i{=}a_i)\) (Feller 1968, p. 128). We note that in data mining this property has sometimes been called independence of binary variables and independence of events has referred to a weaker condition (e.g., Silverstein et al. 1998)

The difference is that in the latter it is not required that all \({\mathbf {Y}}\,{\subsetneq }\,{\mathbf {X}}\) should express independence. Both definitions have been used as a starting point to define interesting set-formed dependency patterns (e.g., Webb 2010; Silverstein et al. 1998). In this paper we will call this type of patterns dependency sets (Sect. 5).

In addition to mutual independence, a set of events or variables can express independence between different partitions of the set. The only difference to the basic definition of statistical independence is that now single events or variables have been replaced by sets of events or variables. In this paper we call this type of independence bipartition independence.

Definition 4

(Bipartition independence) Let \({\mathbf {X}}\) be a set of variables. For any partition \({\mathbf {X}}={\mathbf {Y}}\cup {\mathbf {Z}}\), where \({\mathbf {Y}}\cap {\mathbf {Z}}=\emptyset \), possible value combinations are notated by \({\mathbf {y}}\in Dom ({\mathbf {Y}})\) and \({\mathbf {z}}\in Dom ({\mathbf {Z}})\).

-

(i)

Event \(\mathbf {Y\,{=}\,y}\) is independent of event \(\mathbf {Z\,{=}\,z}\), if \(P(\mathbf {Y\,{=}\,y},\mathbf {Z\,{=}\,z})=P(\mathbf {Y\,{=}\,y})P(\mathbf {Z\,{=}\,z})\).

-

(ii)

Set of variables \({\mathbf {Y}}\) is independent of \({\mathbf {Z}}\), if \(P(\mathbf {Y\,{=}\,y},\mathbf {Z\,{=}\,z})=P(\mathbf {Y\,{=}\,y})P(\mathbf {Z\,{=}\,z})\) for all \({\mathbf {y}}\in Dom ({\mathbf {Y}})\) and \({\mathbf {z}}\in Dom ({\mathbf {Z}})\).

Now one can derive a large number of different dependence patterns from a single set \({\mathbf {X}}\) or event \(\mathbf {X\,{=}\,x}\). There are \(2^{m-1}-1\) ways to partition set \({\mathbf {X}}\), \(|{\mathbf {X}}|=m\), into two subsets \({\mathbf {Y}}\) and \({\mathbf {Z}}={\mathbf {X}}{\setminus } {\mathbf {Y}}\) (\(|{\mathbf {Y}}|=1,\ldots ,\lceil \frac{m-1}{2}\rceil \)). In data mining, patterns expressing bipartition dependence between sets of events are often expressed as dependency rules\(\mathbf {Y\,{=}\,y}\rightarrow \mathbf {Z\,{=}\,z}\). Because both the rule antecedent and consequent are binary conditions, the rule can be interpreted as dependence between two new binary (indicator) variables \(I_\mathbf {Y\,{=}\,y}\) and \(I_\mathbf {Z\,{=}\,z}\) (\(I_\mathbf {Y\,{=}\,y}\,{=}\,1\) if \(\mathbf {Y\,{=}\,y}\) and \(I_\mathbf {Y\,{=}\,y}\,{=}\,0\) otherwise). In statistical terms, this is the same as collapsing a multidimensional contingency table into a simple \(2\times 2\) table. In addition to statistical dependence, dependency rules are often required to fulfil other criteria like sufficient frequency, strength of dependency or statistical significance. Corresponding patterns between sets of variables are less often studied, because the search is computationally much more demanding. In addition, collapsed contingency tables can reveal interesting and statistically significant dependencies between composed events, when no significant dependencies could be found between variables.

The third main type of independence is conditional independence between events or variables:

Definition 5

(Conditional independence) Let \({\mathbf {X}}\) be a set of variables. For any partition \({\mathbf {X}}={\mathbf {Y}}\cup {\mathbf {Z}}\cup {\mathbf {Q}}\), where \({\mathbf {Y}}\cap {\mathbf {Z}}=\emptyset \), \({\mathbf {Y}}\cap {\mathbf {Q}}=\emptyset \), \({\mathbf {Z}}\cap {\mathbf {Q}}=\emptyset \), possible value combinations are notated by \({\mathbf {y}}\in Dom ({\mathbf {Y}})\), \({\mathbf {z}}\in Dom ({\mathbf {Z}})\), and \({\mathbf {q}}\in Dom ({\mathbf {Q}})\).

-

(i)

Events \(\mathbf {Y\,{=}\,y}\) and \(\mathbf {Z\,{=}\,z}\) are conditionally independent given \(\mathbf {Q\,{=}\,q}\), if

\(P(\mathbf {Y\,{=}\,y},\mathbf {Z\,{=}\,z}\mid \mathbf {Q\,{=}\,q})=P(\mathbf {Y\,{=}\,y}\mid \mathbf {Q\,{=}\,q})P(\mathbf {Z\,{=}\,z}\mid \mathbf {Q\,{=}\,q})\).

-

(ii)

Sets of variables \({\mathbf {Y}}\) and \({\mathbf {Z}}\) are conditionally independent given \({\mathbf {Q}}\), if

\(P(\mathbf {Y\,{=}\,y},\mathbf {Z\,{=}\,z}\mid \mathbf {Q\,{=}\,q})=P(\mathbf {Y\,{=}\,y}\mid \mathbf {Q\,{=}\,q})P(\mathbf {Z\,{=}\,z}\mid \mathbf {Q\,{=}\,q})\) for all

\({\mathbf {y}}\in Dom ({\mathbf {Y}})\), \({\mathbf {z}}\in Dom ({\mathbf {Z}})\), and \({\mathbf {q}}\in Dom ({\mathbf {Q}})\).

Conditional independence can be defined also for more than two sets of events or variables, given a third one. For example, in set \(\{A,B,C,D\}\) we can find four conditional independencies given D: \(A \perp BC\), \(B\perp AC\), \(C\perp AB\), and \(A\perp B \perp C\). However, these types of independence are seldom needed in practice. In pattern discovery notions of conditional independence and dependence between events are used for inspecting improvement of a dependency rule \({\mathbf {Y}}{\mathbf {Q}}\rightarrow C\) over its generalization \({\mathbf {Y}}\rightarrow C\) (Sect. 4). In machine learning conditional independence between variables or sets of variables is an important property for constructing full probability models, like Bayesian networks or log-linear models.

2.3 Statistical significance testing

Often when searching dependency rules and sets the aim is to find dependencies that hold in the population from which the sample is drawn (cf. Fig. 1). Statistical significance tests are the tools that have been created to control the risk that such inferences drawn from sample data do not hold in the population. This section introduces the key concepts that underlie significance testing and gives an overview of the main approaches that can be applied in testing dependency rules and sets. The same principles can be applied in testing other types of patterns, but a reader would be well advised to consult a statistician with regard to which tests to apply and how to apply them.

The main idea of statistical significance testing is to estimate the probability that the observed discovery would have occurred by chance. If the probability is very small, we can assume that the discovery is genuine. Otherwise, it is considered spurious and discarded. The probability can be estimated either analytically or empirically. The analytical approach is used in the traditional significance testing, while randomization tests estimate the probability empirically. Traditional significance testing can be further divided into two main classes: the frequentist and Bayesian approaches. These main approaches to statistical significance testing are shown in Fig. 4.

2.3.1 Frequentist approach

The frequentist approach of significance testing is the most commonly used and best studied (see e.g. Freedman et al. 2007, Ch. 26, or Lindgren 1993, Ch. 10.1). The approach is actually divided into two opposing schools, Fisherian and Neyman–Pearsonian, but most textbooks present a kind of synthesis (see e.g., Hubbard and Bayarri 2003). The main idea is to estimate the probability of the observed or a more extreme phenomenon O under some null hypothesis, \(H_0\). In general, the null hypothesis is a statement on the value of some statistic or statistics S in the population. For example, when the objective is to test the significance of dependency rule \({\mathbf {X}}\rightarrow A\), the null hypothesis \(H_0\) is the independence assumption: \(N_{XA}=nP({\mathbf {X}})P(A)\), where \(N_{XA}\) is a random variable for the absolute frequency of \({\mathbf {X}}A\). (Equivalently, \(H_0\) could be \({\varDelta }=0\) or \({\varGamma }=1\), where \({\varDelta }\) and \({\varGamma }\) are random variables for the leverage and lift.) In independence testing the null hypothesis is usually an equivalence statement, \(S\,{=}\,s_0\) (nondirectional hypothesis), but in other contexts it can also be of the form \(S\le s_0\) or \(S\ge s_0\) (directional hypothesis). Often, one also defines an explicit alternative hypothesis, \(H_A\), which can be either directional or nondirectional. For example, in pattern discovery dependency rules \({\mathbf {X}}\rightarrow A\) are assumed to express positive dependence, and therefore it is natural to form a directional hypothesis \(H_A\): \(N_{XA}>nP({\mathbf {X}})P(A)\) (or \({\varDelta }>0\) or \({\varGamma }>1\)).

When the null hypothesis has been defined, one should select a test statistic \( T \) (possibly S itself) and define its distribution (null distribution) under \(H_0\). The p-value is defined from this distribution as the probability of the observed or a more extreme \( T \)-value, \(P( T \ge t \mid H_0)\), \(P( T \le t \mid H_0)\), or \(P( T \le - t \text { or } T \ge t \mid H_0)\) (Fig. 5). In the case of independence testing, possible test statistics are, for example, leverage, lift, and the \(\chi ^2\)-measure (Eq. 4). The distribution under independence is defined according to the selected sampling model, which we will introduce in Sect. 3. The probability of observing positive dependence whose strength is at least \(\delta ({\mathbf {X}},A)\) is \(P_{{\mathcal {M}}}({\varDelta }\ge \delta ({\mathbf {X}},A)\mid H_0)\), where \(P_{{\mathcal {M}}}\) is the complementary cumulative distribution function for the assumed sampling model \({\mathcal {M}}\).

Up to this point, all frequentist approaches are more or less in agreement. The differences appear only when the p-values are interpreted. In the classical (Neyman–Pearsonian) hypothesis testing, the p-value is compared to some predefined threshold \(\alpha \). If \(p\le \alpha \), the null hypothesis is rejected and the discovery is called significant at level \(\alpha \). Parameter \(\alpha \) (also known as the test size) defines the probability of committing a type I error, i.e., accepting a spurious pattern (and rejecting a correct null hypothesis). Another parameter, \(\beta \), is used to define the probability of committing a type II error, i.e., rejecting a genuine pattern as non-significant (and keeping a false null hypothesis). The complement \(1-\beta \) defines the power of the test, i.e., the probability that a genuine pattern passes the test. Ideally, one would like to minimize the test size and maximize its power. Unfortunately, this is not possible, because \(\beta \) increases when \(\alpha \) decreases and vice versa. As a solution it has been recommended (e.g., Lehmann and Romano 2005, p. 57) to select appropriate \(\alpha \) and then to check that the power is acceptable given the sample size. However, the power analysis can be difficult and all too often it is skipped altogether.

The most controversial problem in hypothesis testing is how to select an appropriate significance level. A convention is to use always the same standard levels, like \(\alpha =0.05\) or \(\alpha =0.01\). However, these values are quite arbitrary and widely criticized (see e.g., Lehmann and Romano 2005, p. 57; Lecoutre et al. 2001; Johnson 1999). Especially in large data sets, the p-values tend to be very small and hypotheses get too easily rejected with conventional thresholds. A simple alternative is to report only p-values, as advocated by Fisher and also many recent statisticians (e.g., Lehmann and Romano 2005, pp. 63-65; Hubbard and Bayarri 2003). Sometimes, this is called ‘significance testing’ in distinction from ‘hypothesis testing’ (with fixed \(\alpha \)s), but the terms are not used systematically. Reporting only p-values may often be sufficient, but there are still situations where one should make concrete decisions and a binary judgement is needed.

Deciding threshold \(\alpha \) is even harder in data mining where numerous patterns are tested. For example, if we use threshold \(\alpha =0.05\), then there is up to 5% chance that a spurious pattern passes the significance test. If we test 10 000 spurious patterns, we can expect up to 500 of them to pass the test erroneously. This so called multiple testing problem is inherent in knowledge discovery, where one often performs an exhaustive search over all possible patterns. We will return to this problem in Sect. 6.

2.3.2 Bayesian approach

Bayesian approaches are becoming increasingly popular in both statistics and data mining (see e.g., Corani et al. 2016). However, to date there has been little uptake of them in statistically sound pattern discovery. We include here a brief summary of the Bayesian approach for completeness and in the hope that it will stimulate further investigation of this promising approach.

The idea of Bayesian significance testing (see e.g., Lee 2012, Ch. 4; Albert 1997; Jamil et al. 2017) is quite similar to the frequentist approach, but now we assign some prior probabilities \(P(H_0)\) and \(P(H_A)\) to the null hypothesis \(H_0\) and the alternative research hypothesis \(H_A\). Next, the conditional probabilities, \(P(O{\mid }H_0)\) and \(P(O{\mid }H_A)\), of the observed or a more extreme phenomenon O under \(H_0\) and \(H_A\) are estimated from the data. Finally, the probabilities of both hypotheses are updated by the Bayes’ rule and the acceptance or rejection of \(H_0\) is decided by comparing posterior probabilities \(P(H_0{\mid }O)\) and \(P(H_A{\mid }O)\). The resulting conditional probabilities \(P(H_0{\mid }O)\) are asymptotically similar (under some assumptions even identical) to the traditional p-values, but Bayesian testing is sensitive to the selected prior probabilities (Agresti and Min 2005). One attractive feature of the Bayesian approach is that it allows to quantify the evidence for and against the null hypothesis. However, the procedure tends to be more complicated than the frequentist one; specifying prior distributions may require a plethora of parameters and the posterior probabilities cannot always be evaluated analytically (Agresti and Hitchcock 2005; Jamil et al. 2017).

2.3.3 Randomization testing

Randomization testing (see e.g., Edgington 1995) offers a relatively assumption-free approach for testing statistical dependencies. Unlike traditional significance testing, there is no need to assume that the data would be a random sample from the population or to define what type of distribution the test statistic has under the null hypothesis. Instead, the significance is estimated empirically, by generating random data sets under the null hypothesis and checking how often the observed or a more extreme phenomenon occurs in them.

When independence between A and B is tested, the null hypothesis is exchangeability of the A-values on rows when B-values are kept fixed, or vice versa. This is the same as stating that all permutations of A-values in the data are equally likely. A similar null hypothesis can be formed for mutual independence in a set of variables. If only a single dependency set \({\mathbf {X}}\) is tested, it is enough to generate random data sets \({\mathcal {D}}_1,\ldots ,{\mathcal {D}}_b\) by permuting values of each \(A_i\), \(A_i\in {\mathbf {X}}\). Usually, it is required that all marginal probabilities \(P(A_i)\) remain the same as in the original data, but there may be additional constraints, defined by the permutation scheme. Test statistic \( T \) that evaluates goodness of the pattern is calculated in each random data set. For simplicity, we assume that the test statistic \( T \) is increasing by goodness (a higher value indicates a better pattern). If the original data set produced \( T \)-value \( t _0\) and b random data sets produced \( T \)-values \( t _1,\ldots , t _b\), the empirical p-value of the observed pattern is

If the data set is relatively small and \({\mathbf {X}}\) is simple, it is possible to enumerate all possible permutations where the marginal probabilities hold. This leads to an exact permutation test, which gives an exact p-value. On the other hand, if the data set is large and/or \({\mathbf {X}}\) is more complex, all possibilities cannot be checked, and the empirical p-value is less accurate. In this case, the test is called a random permutation test or an approximate permutation test. There are also some special cases, like testing a single dependency rule, where it is possible to express the permutation test in a closed form that is easy to evaluate exactly (see Fisher’s exact test in Sect. 3.2).

An advantage of randomization testing is that the test statistic can have any kind of distribution, which is especially handy when the statistic is new or poorly known. With randomization one can test also such null hypotheses for which no closed form test exists. Randomization tests are technically valid even if the data are not a random sample because strictly speaking the population to which the null hypotheses relate is the set of all permutations of the sample defined by the permutation scheme. However, the results can be generalized to the reference population only to the extent of how representative the sample was for that population (Legendre and Legendre 1998, p. 24). One critical problem with randomization testing is that it is not always clear how the data should be permuted, and different permutation schemes can produce quite different results in their assessment of patterns (see e.g., Hanhijärvi 2011). The number of random permutations plays also an important role in testing. The more random permutations are performed, the more accurate the empirical p-values are, but in practice, extensive permuting can be too time consuming. Computational costs restrict also the use of randomization testing in search algorithms especially in large data sets.

The idea of randomization tests can be extended for estimating the overall significance of all mining results or even for tackling the multiple testing problem. For example, one may test the significance of the number of all frequent sets (given a minimum frequency threshold) or the number of all sufficiently strong pair-wise correlations (given a minimum correlation threshold) using randomization tests (Gionis et al. 2007). In this case, it is necessary to generate complete data sets randomly for testing. The difficulty is to decide what properties of the original data set should be maintained. One common solution in pattern mining is to keep both the column margins (\( fr (A_i)\)s) and the row margins (numbers of 1s on each row) fixed and generate new data sets by swap randomization (Cobb and Chen 2003). A prerequisite for this method is that the attributes are semantically similar (e.g. occurrence or absence of species) and it is sensible to swap their values. In addition, there are some pathological cases, where no or only a few permutations exist with the given row and column margins, resulting in a large p-value, even if the original data set contains a significant pattern (Gionis et al. 2007).

3 Statistical significance of dependency rules

Dependency rules are a famous pattern type that expresses bipartition dependence between the rule antecedent and the consequent. In this section, we discuss how statistical significance of dependency rules is evaluated under different assumptions. Especially, we contrast two alternative interpretations of dependency rules that are called variable-based and value-based interpretations and introduce appropriate tests in different sampling models.

3.1 Dependency rules

Dependency rules are maybe the simplest type of statistical dependency patterns. As a result, it has been possible to develop efficient exhaustive search algorithms. With these, dependency rules can reveal arbitrarily complex bipartite dependencies from categorical or discretized numerical data without any additional assumptions. This makes dependency rule analysis an attractive starting point for any data mining task. In medical science, for example, an important task is to search for statistical dependencies between gene alleles, environmental factors, and diseases. We recall that statistical dependencies are not necessarily causal relationships, but still they can help to form causal hypotheses and reveal which factors predispose or prevent diseases (see e.g., Jin et al. 2012; Li et al. 2016). Interesting dependencies do not necessarily have to be strong or frequent, but instead, they should be statistically valid, i.e., genuine dependencies that are likely to hold also in future data. In addition, it is often required that the patterns should not contain any superfluous variables which would only obscure the real dependencies. Based on these considerations, we will first give a general definition of dependency rules and then discuss important aspects of genuine dependencies.

Definition 6

(Dependency rule) Let \({\mathbf {R}}\) be a set of categorical variables, \({\mathbf {X}}{\subseteq } {\mathbf {R}}\), and \({\mathbf {Y}}{\subseteq } {\mathbf {R}}{\setminus } {\mathbf {X}}\). Let us denote value vectors of \({\mathbf {X}}\) and \({\mathbf {Y}}\) by \({\mathbf {x}}\in Dom ({\mathbf {X}})\) and \({\mathbf {y}}\in Dom ({\mathbf {Y}})\). Rule \(\mathbf {X\,{=}\,x}\rightarrow \mathbf {Y\,{=}\,y}\) is a dependency rule, if \(P(\mathbf {X\,{=}\,x},\mathbf {Y\,{=}\,y})\ne P(\mathbf {X\,{=}\,x})P(\mathbf {Y\,{=}\,y})\).

The dependency is (i) positive, if \(P(\mathbf {X\,{=}\,x},\mathbf {Y\,{=}\,y})> P(\mathbf {X\,{=}\,x})P(\mathbf {Y\,{=}\,y})\), and (ii) negative, if \(P(\mathbf {X\,{=}\,x},\mathbf {Y\,{=}\,y})< P(\mathbf {X\,{=}\,x})P(\mathbf {Y\,{=}\,y})\). Otherwise, the rule expresses independence.

It is important to recognize that while the convention is to specify the antecedent and consequent and use a directed arrow to distinguish them, statistical dependence is a symmetric relation and strictly speaking the direction is arbitrary. Often, the rule is expressed with the antecedent and consequent selected so that the precision (‘confidence’) of the rule (\(\phi (\mathbf {X\,{=}\,x}\rightarrow \mathbf {Y\,{=}\,y})=P(\mathbf {Y\,{=}\,y}\mid \mathbf {X\,{=}\,x})\) or \(\phi (\mathbf {Y\,{=}\,y}\rightarrow \mathbf {X\,{=}\,x})=P(\mathbf {X\,{=}\,x}\mid \mathbf {Y\,{=}\,y})\)) is maximal. An exception is supervised descriptive rule discovery (including class association rules (Li et al. 2001), subgroup discovery (Herrera et al. 2011), emerging pattern mining (Dong and Li 1999) and contrast set mining (Bay and Pazzani 2001)), where the consequent is fixed (Novak et al. 2009).

For simplicity, we will concentrate on a common special case of dependency rules where 1) all variables are binary, 2) the consequent \(\mathbf {Y{=}y}\) consists of a single variable-value combination, \(A{=}i\), \(i\in \{0,1\}\), and 3) the antecedent \(\mathbf {X{=}x}\) is a conjunction of true-valued attributes, i.e., \(\mathbf {X\,{=}\,x}\equiv (A_1{=}1,\ldots ,A_l{=}1)\), where \({\mathbf {X}}=\{A_1,\ldots ,A_l\}\). With these restrictions the resulting rules can be expressed in a simpler form \({\mathbf {X}}\rightarrow A\,{=}\,i\), where \(i\in \{0,1\}\), or \({\mathbf {X}}\rightarrow A\) and \({\mathbf {X}}\rightarrow \lnot A\). Allowing negated consequents means that it is sufficient to represent only positive dependencies (a positive dependency between \({\mathbf {X}}\) and \(\lnot A\) is the same as a negative dependency between \({\mathbf {X}}\) and A). We note that this restriction is purely representational and the following theory is easily extended to general dependency rules as well. Furthermore, we recall that this simpler form of rules can still represent all dependency rules after suitable data transformations (i.e., creating new binary variables for all values of the original variables).

Finally, we note that dependency rules deviate from traditional association rules (Agrawal et al. 1993) in their requirement of statistical dependence. Traditional association rules do not necessarily express any statistical dependence but relations between frequently occurring attribute sets. However, there has been research on association rules where the requirement of minimum frequency (‘minimum support’) has been replaced by requirements of statistical dependence (see e.g., Webb and Zhang 2005; Webb 2008, 2007; Hämäläinen 2012, 2010b; Li 2006; Morishita and Sese 2000; Nijssen and Kok 2006; Nijssen et al. 2009). For clarity, we will use here the term ‘dependency rule’ for all rule type patterns expressing statistical dependencies, even if they had been called association rules, classification rules, or other similar patterns in the original publications.

Statistical dependence is a necessary requirement of a dependency rule, but in addition, it is frequently useful to impose further constraints like that of statistical significance and absence of superfluous variables. The following example illustrates some of these properties of dependency rules.

Example 1

Let us consider an imaginary database consisting of 1000 patients (50% female, 50% male), 30% of them with heart disease. The database contains information on patients and their life style like smoking status, drinking coffee, having stress, going for sports, and using natural products. Table 2 lists some candidate dependency rules related to heart disease together with their frequency, precision, leverage, and lift.

The first two rules are examples of simple positive and negative dependencies (predisposing and protecting factors for heart disease). Rules 3 and 4 are included as examples of so called independence rules that express statistical independence between the antecedent and consequent. Normally, such rules would be pruned out by dependency rule mining algorithms.

Rule 5 is an example of a spurious rule, which is statistically insignificant and likely due to chance. The database contains only one person who uses pine bark extract regularly and who does not have heart disease. Note that the lift is still quite large, the maximal possible for that consequent. Rule 6 is also statistically insignificant, but for a different reason. The rule is very common, but the difference in the prevalence of heart disease among female and male patients is so small (148 vs. 152) that it can be explained by chance.

Rule 7 demonstrates non-monotonicity of statistical dependence. The combination of stress and female gender correlates positively with heart disease, even though stress alone was independent of heart disease and the female gender was negatively correlated with it.

The last four rules illustrate the problem of superfluous variables. In rule 8, neither of the condition attributes is superfluous, because the dependency is stronger and more significant than simpler dependencies involving only stress or only smoking. However, rules 9–11 demonstrate three types of superfluous rules where extra factors (i) have no effect on the dependency, (ii) weaken it, or (iii) apparently improve it but not significantly. Rule 9 is superfluous, because coffee has no effect on the dependency between smoking and heart disease (coffee consumption and heart disease are conditionally independent given smoking). Rule 10 is superfluous, because going for sports weakens the dependency between smoking and heart disease. This kind of modifying effect might be interesting in some contexts, if it were statistically significant. However, dependency rule mining algorithms do not usually perform such analysis. Rule 11 is the most difficult to judge, because the dependence is itself significant and the rule has larger precision and lift than either of simpler dependencies involving only the female gender or only sports. However, the improvement with respect to rule 2 is so small (\(\phi =0.808\) vs. \(\phi =0.800\)) that it is likely due to chance.

In the previous example we did not state which measure should be preferred for measuring the strength of dependence or how the statistical significance should be evaluated. The reason is that the selection of these measures as well as evaluation of statistical significance and superfluousness depend on the interpretation of dependency rules. In principle there are two alternative interpretations for rule \({\mathbf {X}}\rightarrow A\,{=}\,i\), \(i\in \{0,1\}\): either it can represent a dependency between events \({\mathbf {X}}\) (or \(I_{\mathbf {X}}{=}1\)) and \(A\,{=}\,i\) or between variables \(I_{\mathbf {X}}\) and A, where \(I_{\mathbf {X}}\) is an indicator variable for event \({\mathbf {X}}\). These two interpretations have sometimes been called value-based and variable-based semantics (Blanchard et al. 2005) of the rule. Unfortunately, researchers have often forgotten to mention explicitly which interpretation they follow. This has caused much confusion and, in the worst case, led to missed or inappropriate discoveries. The following example demonstrates how variable- and value-based interpretations can lead to different results.

Example 2

Let us consider a database of 100 apples describing their colour (green or red), size (big or small), and taste (sweet or bitter). Let us notate A=sweet, \(\lnot A\)=bitter (not sweet), \({\mathbf {Y}}\)={red}, \(\lnot {\mathbf {Y}}{=}\{green\}\) (not red), \({\mathbf {X}}{=}\{red,big\}\) and \(\lnot {\mathbf {Y}}{=}\lnot \{red,big\}\) (i.e., green or small).

We would like to find strong dependencies related to either variable ‘taste’ (variable-based interpretation) or value ‘sweet’ (value-based interpretation). Figure 6 represents two such rules: \({\mathbf {Y}}\rightarrow A\) (red\(\rightarrow \)sweet) and \({\mathbf {X}}\rightarrow A\) (red and big\(\rightarrow \)sweet).

The first rule expresses a strong dependency between binary variables \(I_{{\mathbf {Y}}}\) and A (i.e., colour and taste) with \(P(A|{\mathbf {Y}})=0.92\), \(P(\lnot A|\lnot {\mathbf {Y}})=1.0\), \(\delta ({\mathbf {Y}},A)=0.22\), and \(\gamma ({\mathbf {Y}},A)=1.67\). So, with this rule we can divide the apples into two baskets according to colour. The first basket contains 60 red apples, 55 of which are sweet, and the second basket contains 40 green apples, which are all bitter. This is quite a good rule if the goal is to classify well both sweet apples (for eating) and bitter apples (for juice and cider).

The second rule expresses a strong dependency between the value combination \({\mathbf {X}}\) (red and big) and value \(A\,{=}\,1\) (sweet) with \(P(A|{\mathbf {X}})=1.0\), \(P(\lnot A|\lnot {\mathbf {X}})=0.75\), \(\delta ({\mathbf {X}},A)=0.18\), \(\gamma ({\mathbf {X}},A)=1.82\). This rule produces a basket of 40 big, red apples, all of them sweet, and another basket of 60 green or small apples, 45 of them bitter. This is an excellent rule if we would like to predict sweetness better (e.g., get a basket of sweet apples for our guests) without caring how well bitterness is predicted.

So, the choice between variable-based and value-based interpretation results in a preference for a different rule. Either one can be desirable for different modelling purposes. This decision affects also which goodness measure should be used. Leverage suits the variable-based interpretation, because its absolute value is the same for all truth value combinations (\({\mathbf {X}}A\), \({\mathbf {X}}\lnot A\), \(\lnot {\mathbf {X}}A\), \(\lnot {\mathbf {X}}\lnot A\)), but it may miss interesting dependencies related to particular values. Lift, on the other hand, suits the value-based interpretation, because it favours rules where the given values are strongly dependent. However, it is not a reliable measure alone, because it ranks well also coincidental ‘noise rules’ (e.g., apple maggot\(\rightarrow \)bitter). Therefore, it has to be accompanied with statistical significance tests.

In general, the variable-based interpretation tends to produce more reliable patterns, in the sense that the discovered dependencies hold well in future data (see e.g., Hämäläinen 2010a, Ch.5). However, there are applications where the value-based interpretation may better identify interesting dependency rules. One example could be analysis of predisposing factors (like gene alleles) for a serious disease. Some factors \({\mathbf {X}}\) may be rare, but still their occurrence could strongly predict the onset of some disease D. Medical scientists would certainly want to find such dependencies \({\mathbf {X}}\rightarrow D\), even if the overall dependency between variables \(I_{{\mathbf {X}}}\) and D would be weak or insignificant.

In the following sections, we will examine how statistical significance is tested in the variable-based and value-based interpretations.

3.2 Sampling models for the variable-based interpretation

In the variable-based interpretation, the significance of dependency rule \({\mathbf {X}}\rightarrow A\) is determined by classical independence tests. The task is to estimate the probability of the observed or a more ‘extreme’ contingency table, assuming that variables \(I_{\mathbf {X}}\) and A were actually independent. There is no consensus how the extremeness relation should be defined, but intuitively, contingency table \(\tau _i\) is more extreme than table \(\tau _j\), if the dependence between \({\mathbf {X}}\) and A is stronger in \(\tau _i\) than in \(\tau _j\). So, any measure for the strength of dependence between variables can be used as a discrepancy measure, to order contingency tables. The simplest such measure is leverage, but also odds ratio

is commonly used. We note that odds ratio is not defined when \(N_{X\lnot A}N_{\lnot X A}=0\) and some special policy is needed for these cases. In the following, we will notate the relation “table \(\tau _i\) is equally or more extreme to table \(\tau _j\)” by \(\tau _i \succeq \tau _j\).

The probability of each contingency table \(\tau _i\) depends on the assumed statistical model \({\mathcal {M}}\). Model \({\mathcal {M}}\) defines the space of all possible contingency tables \({\mathcal {T}}_{\mathcal {M}}\) (under the model assumptions) and the probability \(P(\tau _i\mid {\mathcal {M}})\) of each table \(\tau _i\in {\mathcal {T_M}}\). Because the task is to test independence, the assumed model should satisfy the independence assumption \(P({\mathbf {X}}A)=P({\mathbf {X}})P(A)\) in some form. For the probabilities \(P(\tau _i\mid {\mathcal {M}})\) holds

Now the probability of the observed contingency table \(\tau _ o \) or any \(\tau _i\), \(\tau _i\succeq \tau _ o \), is the desired p-value

Classically, statistical models for independence testing have been divided into three main categories (sampling schemes) (Barnard 1947; Pearson 1947), which we call multinomial, double binomial, and hypergeometric models. In the statistics literature (e.g. Barnard 1947; Upton 1982), the corresponding sampling schemes are called double dichotomy, 2\(\times \)2 comparative trial, and 2\(\times \)2 independence trial.

In the following we describe the three models using the classical urn metaphor. However, because there are two binary variables of interest, \(I_{\mathbf {X}}\) and A, we cannot use the basic urn model with white and black balls. Instead, we will use an apple basket model, with red and green, sweet and bitter apples, like in Example 2.

3.2.1 Multinomial model

In the multinomial model, it is assumed that the real probabilities of sweet red apples, bitter red apples, sweet green apples, and bitter green apples are defined by parameters \(p_{X A}\), \(p_{X\lnot A}\), \(p_{\lnot X A}\), and \(p_{\lnot X \lnot A}\). The probability of red apples is \(p_X\) and of green apples \(1-p_X\). Similarly, the probability of sweet apples is \(p_A\) and of bitter apples \(1-p_A\). According to the independence assumption, \(p_{X A}=p_X p_A\), \(p_{X\lnot A}=p_X(1-p_A)\), \(p_{\lnot X A}=(1-p_X)p_A\), and \(p_{\lnot X\lnot A}=(1-p_X)(1-p_A)\). A sample of n apples is taken randomly from an infinite basket (or from a finite basket with replacement). Now the probability of obtaining \(N_{X A}\) sweet red apples, \(N_{X\lnot A}\) bitter red apples, \(N_{\lnot X A}\) sweet green apples, and \(N_{\lnot X\lnot A}\) bitter green apples is defined by multinomial probability

Since data size n is given, the contingency tables can be defined by triplets \(\langle N_{X A},\)\(N_{X\lnot A},N_{\lnot X A}\rangle \) or, equivalently, triplets \(\langle N_X, N_A, N_{X A}\rangle \). Therefore, the space of all possible contingency tables is

For estimating the p-value with Eq. (10), we should still solve two problems. First, the parameters \(p_X\) and \(p_A\) are unknown. The most common solution is to estimate them by the observed relative frequencies (maximum likelihood estimates). Second, we should decide when a contingency table \(\tau _i\) is equally or more extreme than the observed contingency table \(\tau _ o \). For this purpose, we have to select the discrepancy measure, which evaluates the overall dependence in a contingency table, when only the data size n is fixed. Examples of such measures are leverage and the odds ratio.

In practice, the multinomial test is seldom used, but the multinomial model is an important theoretical model, from which other models can be derived as special cases.

3.2.2 Double binomial model

In the double binomial model, it is assumed that we have two infinite baskets, one for red and one for green apples. Let us call these the red and the green basket. In the red basket the probability of sweet apples is \(p_{A|X}\) and of bitter apples \(1-p_{A|X}\), and in the green basket the probabilities are \(p_{A|\lnot X}\) and \(1-p_{A|\lnot X}\). According to the independence assumption, the probability of sweet apples is the same in both baskets: \(p_A=p_{A|X}=p_{A|\lnot X}\). A sample of \( fr ({\mathbf {X}})\) apples is taken randomly from the red basket and another random sample of \( fr (\lnot {\mathbf {X}})\) apples is taken from the green basket. The probability of obtaining \(N_{X A}\) sweet apples among the selected \( fr ({\mathbf {X}})\) red apples is defined by the binomial probability

Similarly, the probability of obtaining \(N_{\lnot X A}\) sweet apples among the selected green apples is

Because the two samples are independent from each other, the probability of obtaining \(N_{X A}\) sweet apples from \( fr ({\mathbf {X}})\) red apples and \(N_{\lnot X A}\) sweet apples from \( fr (\lnot {\mathbf {X}})\) green apples is the product of the two binomials

where \(N_A=N_{X A}+N_{\lnot X A}\) is the total number of the obtained sweet apples. (Here \( fr (\lnot {\mathbf {X}})\) was dropped from the condition, because n is given.) We note that the double binomial probability is not exchangeable with respect to the roles of \({\mathbf {X}}\) and A, i.e., generally \(P(N_{X A},N_{\lnot X A}\mid n, fr ({\mathbf {X}}),p_A)\ne P(N_{X A},N_{X\lnot A}\mid n, fr (A),p_X).\) In practice, this means that the probability of obtaining \( fr ({\mathbf {X}}A)\) sweet red apples, \( fr ({\mathbf {X}}\lnot A)\) bitter red apples, \( fr (\lnot {\mathbf {X}}A)\) sweet green apples, and \( fr (\lnot {\mathbf {X}}\lnot A)\) bitter green apples is (nearly always) different in the model of the red and green baskets from the model of the sweet and bitter baskets.

Since \( fr ({\mathbf {X}})\) and \( fr (\lnot {\mathbf {X}})\) are given, each contingency table is defined as a pair \(\langle N_{X A},N_{\lnot X A}\rangle \) or, equivalently, \(\langle N_{A},N_{X A}\rangle \). The space of all possible contingency tables is

We note that \(N_A\) is not fixed, and therefore \(N_A\) is generally not equal to the observed \( fr (A)\).

For estimating the significance with Eq. (10), we should estimate the unknown parameter \(p_A\) and select a discrepancy measure, like leverage or odds ratio. Then the exact p-value is obtained by summing over all possible values of \(N_{XA}\) and \(N_{\lnot XA}\) where the dependence is sufficiently strong. However, often this is considered impractical and the p-value is approximated with asymptotic tests, which are discussed later.

3.2.3 Hypergeometric model

In the hypergeometric model, there is no sampling from an infinite basket. Instead, we can assume that we are given a finite basket of n apples, containing exactly \( fr ({\mathbf {X}})\) red apples and \( fr (\lnot {\mathbf {X}})\) green apples. We test all n apples and find that \( fr ({\mathbf {X}}A)\) of red apples and \( fr (\lnot {\mathbf {X}}A)\) of green apples are sweet. The question is how probable is our basket, or the set of all at least equally extreme baskets, among all possible apple baskets with \( fr ({\mathbf {X}})\) red apples, \( fr (\lnot {\mathbf {X}})\) green apples, \( fr (A)\) sweet apples, and \( fr (\lnot A)\) bitter apples.

Now the baskets correspond to contingency tables. The number of all possible baskets with the fixed totals \( fr ({\mathbf {X}})\), \( fr (\lnot {\mathbf {X}})\), \( fr (A)\), and \( fr (\lnot A)\) is

(We recall that customarily \({m \atopwithdelims ()l }=0\), when \(l>m\).) Assuming that all baskets with these fixed totals are equally likely, the probability of a basket with \(N_{X A}\) sweet red apples is

Because all totals are fixed, the extremeness relation is also easy to define. Positive dependence is stronger than observed, when \(N_{X A}> fr ({\mathbf {X}}A)\). For the p-value it is enough to sum the probabilities of baskets containing at least \( fr ({\mathbf {X}}A)\) sweet red apples. The resulting p-value is

where \(J_1=\min \{ fr ({\mathbf {X}}\lnot A), fr (\lnot {\mathbf {X}}A)\}\). (Instead of \(J_1\) we could give an upper range \( fr (A)\), because the zero terms disappear.) This p-value is known as Fisher’s p, because it is used in Fisher’s exact test, an exact permutation test. We give it a special symbol \(p_F\), because it will be used later. For negative dependence between red and sweet apples (or positive dependence between green and sweet apples) the p-value is

where \(J_2=\min \{ fr ({\mathbf {X}}A), fr (\lnot {\mathbf {X}}\lnot A)\}\).

3.2.4 Asymptotic measures

We have seen that the p-values in the multinomial and double binomial models are quite difficult to calculate. However, the p-value can often be approximated easily using asymptotic measures. With certain assumptions, the resulting p-values converge to the correct p-values, when the data size n (or \( fr ({\mathbf {X}})\) and \( fr (\lnot {\mathbf {X}})\)) tend to infinity. In the following, we introduce two commonly used asymptotic measures for independence testing: the \(\chi ^2\)-measure and mutual information. In statistics, the latter corresponds to the log likelihood ratio (Neyman and Pearson 1928).

The main idea of asymptotic tests is that instead of estimating the probability of the contingency table as such, we calculate some better behaving test statistic \( T \). If \( T \) gets value \( t \), we estimate the probability of \(P( T \ge t )\) (assuming that large \( T \)-values indicate a strong dependency).

In the case of the \(\chi ^2\)-test, the test statistic is the \(\chi ^2\)-measure. Now the variables are binary and Eq. (4) reduces into a simpler form:

So, in principle, each term measures how much the observed frequency \( fr (I_{\mathbf {X}}{=}i,A{=}j)\) deviates from its expectation \(nP(I_{\mathbf {X}}{=}i)P(A{=}j)\) under the independence assumption. If the data size n is sufficiently large and none of the expected frequencies is too small, the \(\chi ^2\)-measure follows approximately the \(\chi ^2\)-distribution with one degree of freedom. As a classical rule of thumb (Fisher 1925), the \(\chi ^2\)-measure can be used only, if all expected frequencies \(nP(I_{\mathbf {X}}{=}i)P(A{=}j)\), \(i, j\in \{0,1\}\), are at least 5. However, the approximations can still be poor in some situations, when the underlying binomial distributions are skewed, e.g., if P(A) is near 0 or 1, or if \( fr ({\mathbf {X}})\) and \( fr (\lnot {\mathbf {X}})\) are far from each other (Yates 1984; Agresti 1992). According to Carriere (2001), this is quite typical for data in medical science.

One reason for the inaccuracy of the \(\chi ^2\)-measure is that the original binomial distributions are discrete while the \(\chi ^2\)-distribution is continuous. A common solution is to make a continuity correction and subtract 0.5 from the expected frequency \(nP({\mathbf {X}})P(A)\). According to Yates (1984) the resulting continuity corrected \(\chi ^2\)-measure can give a good approximation to Fisher’s \(p_F\), if the underlying hypergeometric distribution is not markedly skewed. However, according to Haber (1980) the resulting \(\chi ^2\)-value can underestimate the significance, while the uncorrected \(\chi ^2\)-value overestimates it.

Mutual information is another popular asymptotic measure, which has been used to test independence. For binary variables Eq. (5) becomes

Mutual information is actually an information theoretic measure, but in statistics \(2n\cdot \textit{MI}\) is known as log likelihood ratio or the G-test of independence. It follows asymptotically the \(\chi ^2\)-distribution (Wilks 1935) and often it gives similar results to the \(\chi ^2\)-measure (Vilalta and Oblinger 2000). However, sometimes the two tests can give totally different results (Agresti 1992).

3.2.5 Selecting the right model

Selecting the right sampling model and defining the extremeness relation is a controversial problem, which statisticians have argued for the last century (see e.g., Yates 1984; Agresti 1992; Lehmann 1993; Upton 1982; Howard 1998). Therefore, we cannot give any definite recommendations which model to select but each situation should be judged in its own context.

The main decision is whether the analysis should be done conditionally or unconditionally and which variables N, \(N_X\), or \(N_A\) should be considered fixed. In the multinomial model all variables except \(N{=}n\) are randomized. However, if the model is conditioned with \(N_X= fr ({\mathbf {X}})\), it leads to the double binomial model. If the double binomial model is conditioned with \(N_A= fr (A)\), it leads to the hypergeometric model. For completeness, we could also consider the Poisson model where all variables, including N, are unfixed Poisson variables. If the Poisson model is conditioned with the given data size, \(N{=}n\), it leads to the multinomial model (Lehmann and Romano 2005, ch. 4.6–4.7).

In principle, the sampling scheme should be decided before the data is gathered. However, in pattern discovery the data may not be sampled according to a particular scheme. In this situation the main choices are to perform an unconditional analysis where none of the margins are considered fixed or a conditional analysis where all margins are considered fixed. The main argument of the unconditional approach is that the results are better generalizable outside the data set, if some variables are kept unfixed. However, both multinomial and double binomial models are computationally demanding, and in practice the corresponding asymptotic tests have been used instead. The opponents have argued that the unconditional approach is also conditional on the data, since the unknown parameters (\(p_X\) and/or \(p_A\)) are anyway estimated from the observed counts (\( fr ({\mathbf {X}})\) and/or \( fr (A)\)). Therefore, Fisher and his followers have suggested that we should always assume both \(N_X\) and \(N_A\) fixed and use Fisher’s exact test or—when it is heavy to compute—a suitable asymptotic test.

In pattern discovery the most popular choices for evaluating dependency rules and other similar bipartition dependence patterns in the variable-based interpretation have been Fisher’s exact test (e.g., Hämäläinen 2012; Terada et al. 2013b, 2015; Llinares López et al. 2015; Jabbar et al. 2016) and the \(\chi ^2\)-test (e.g., Morishita and Sese 2000; Morishita and Nakaya 2000; Nijssen and Kok 2006; Hämäläinen 2011; Jin et al. 2012; Terada et al. 2015). Both of these tests have also been used for evaluating significance of improvement (see Sect. 4). According to our cross-validation experiments (Hämäläinen 2012), the \(\chi ^2\)-measure can be quite unreliable, in the sense that the discovered dependency rules may not hold in the test data at all or their lift and leverage values differ significantly between the training and test sets. The problem is alleviated to some extent when the continuity correction is used, but still the errors can be considerable. On the contrary, Fisher’s p has turned out to be a very robust and reliable measure in the dependency rule search and we recommend it as a first choice whenever applicable. There is also an accurate approximation of Fisher’s p when faster evaluation is needed (Hämäläinen 2016). Mutual information is also a good alternative and it often produces the same rules as \(p_F\).

3.3 Sampling models for the value-based interpretation

In the value-based interpretation the idea is that we would like to find events \({\mathbf {X}}A\) or \({\mathbf {X}}\lnot A\), which express a strong positive dependency, even if the dependency between variables \(I_{\mathbf {X}}\) and A were relatively weak. In this case the strength of the dependency is usually measured by lift, because leverage has the same absolute value for all events \({\mathbf {X}}A\), \({\mathbf {X}}\lnot A\), \(\lnot {\mathbf {X}}A\), \(\lnot {\mathbf {X}}\lnot A\). However, lift alone is not a reliable measure, because it obtains its maximum value also when \( fr ({\mathbf {X}}A\,{=}\,i)= fr ({\mathbf {X}})= fr (A\,{=}\,i)=1\) (\(i\in \{0,1\}\))—i.e., when the rule occurs on just one row (Hahsler et al. 2006). Such a rule is quite likely due to chance and hardly interesting (see Example 1). Therefore, we should evaluate the probability of observing such a large lift value, if \({\mathbf {X}}\) and A were actually independent (independence testing, \(H_0\): \({\varGamma }=1\) (Benjamini and Leshno 2005)) or, alternatively, that the lift is at most some threshold \(\gamma _0>1\) (\(H_0\): \({\varGamma }\le \gamma _0\) (Lallich et al. 2007)).

The p-value is defined like in the variable-based testing by Eq. (10). The only difference is how to define the extremeness relation \(\tau _i \succeq \tau _j\). A necessary condition for the extremeness of table \(\tau _i\) over \(\tau _j\) is that in \(\tau _i\) the lift is larger than in \(\tau _j\). However, since the lift is largest, when \(N_X\) and/or \(N_A\) are smallest (and \(N_{X A}=N_X\) or \(N_{X A}=N_A\)), it is sensible to require that also \(N_{X A}\) is larger in \(\tau _i\) than in \(\tau _j\). If both \(N_X\) and \(N_A\) are fixed, then the lift is larger than observed if and only if the leverage is larger than observed, and it is enough to consider tables where \(N_{X A}\ge fr ({\mathbf {X}}A)\). However, if either \(N_X\), \(N_A\), or both are unfixed, then we should always check the lift \({\varGamma }=\frac{nN_{X A}}{N_X N_A}\) and compare it to the observed lift \(\gamma ({\mathbf {X}},A)\).

In the following, we will describe different approaches for evaluating statistical significance of dependency rules in the value-based interpretation. The approaches fall into two categories depending on whether the dependence is tested only in the part of data where the rule antecedent holds or in the whole data. We will call these main strategies partial and complete evaluation of significance according to corresponding measures that are called partial and complete evaluators (Vilalta and Oblinger 2000). We introduce three approaches: partial evaluation with a single binomial test, complete evaluation under the classical sampling models, and complete evaluation with a single binomial test. Finally, we discuss the problem of selecting the right model.

3.3.1 Partial evaluation with a single binomial test

In the previous research on association rules, some authors (Dehaspe and Toivonen 2001; Lallich et al. 2007, 2005; Bruzzese and Davino 2003; Megiddo and Srikant 1998) have speculated how to test the null hypothesis \({\varGamma }=1\). For some reason, it has often been taken for granted that one should perform partial evaluation and evaluate significance of rule \({\mathbf {X}}\rightarrow A\)in the part of the data where\({\mathbf {X}}\)is true. As a solution, it has been suggested to use only a single binomial from the double binomial model. This is equivalent to assuming two infinite baskets of apples, the red and green one, but taking only a sample of \( fr ({\mathbf {X}})\) apples from the red basket and trying to decide whether there is a dependency between the red colour and sweetness. It is assumed that \(N_{X A}\sim Bin( fr ({\mathbf {X}}),p_A)\) and the unknown parameter \(p_A\) is estimated from the data, as usual. For positive dependence the p-value is defined as (Dehaspe and Toivonen 2001)

and for negative dependence as (Dehaspe and Toivonen 2001)

We see that \(N_X= fr ({\mathbf {X}})\) is the only variable that has to be fixed—even N can be unfixed. We note that since \(N_A\) is unfixed, i goes from \( fr ({\mathbf {X}}A)\) to \( fr ({\mathbf {X}})\) (and not to \(\min \{ fr ({\mathbf {X}}), fr (A)\}\)) in the case of positive dependence (Lallich et al. 2005). The idea is that when \(N_{X A}\ge fr ({\mathbf {X}}A)\), then \(\frac{N_{X A}}{ fr ({\mathbf {X}})}\ge P(A|{\mathbf {X}})\), and since \(p_A=P(A)\) was fixed, then also \({\varGamma }\ge \gamma ({\mathbf {X}},A)\). Similarly, in the negative case \({\varGamma }\le \gamma ({\mathbf {X}},A)\). So, the test checks correctly all cases where the lift is at least as large (or as small) as observed.

Since the cumulative binomial probability is quite difficult to calculate, it is common to estimate it asymptotically by the z-score. The z-score measures how many standard deviations the observed frequency deviates from its expectation. In the case of positive dependence, the binomial variable \(N_{X A}\) has expected value \({\hat{\mu }}= fr ({\mathbf {X}})P(A)\) and standard deviation \({\hat{\sigma }}=\sqrt{ fr ({\mathbf {X}})P(A)P(\lnot A)}\). The corresponding z-score is (Lallich et al. 2005; Bruzzese and Davino 2003)

If \( fr ({\mathbf {X}})\) is sufficiently large and P(A) is not too near to 1 or 0, the z-score follows the standard normal distribution. However, when the expected frequency \( fr ({\mathbf {X}})P(A)\) is low (as a rule of thumb \(<5\)), the binomial distribution is positively skewed. This means that the z-score overestimates the significance.

It is also possible to construct a partial evaluator from the mutual information (Eq. 16) by ignoring terms related to \(\lnot {\mathbf {X}}\). The result is known as J-measure (Smyth and Goodman 1992):

However, it is an open problem how the corresponding p-value could be evaluated and whether the J-measure could be used for estimating statistical significance.

The problem of all partial evaluators is that two rules with different antecedents \({\mathbf {X}}\) are not comparable. So, all rules (with different \({\mathbf {X}}\)) are thought to be from different populations and are tested in different parts of the data. We also note that the single binomial probability (like the double binomial probability) is not an exchangeable measure in the sense that generally \(p({\mathbf {X}}\rightarrow A)\ne p(A\rightarrow {\mathbf {X}})\). The same holds for the corresponding z-score and J-measure. This can be counter-intuitive when the task is to search for statistical dependencies, and these measures should be used with care. In addition, with this binomial model the significance of the positive dependence between \({\mathbf {X}}\) and A is generally not the same as the significance of the negative dependence between \({\mathbf {X}}\) and \(\lnot A\). With the corresponding z-score the significance values are related, and

where \( z_{pos} \) denotes the z-score of positive dependence and \( z_{neg} \) the z-score of negative dependence. With the J-measure the significance of positive dependence between \({\mathbf {X}}\) and A and the significance of negative dependence between \({\mathbf {X}}\) and \(\lnot A\) are equal.

3.3.2 Complete evaluation under the classical sampling models

Let us now analyze the value-based significance of dependency rules using the classical statistical models. For simplicity we consider only positive dependence. We assume that the extremeness relation is defined by lift \({\varGamma }\) and frequency \(N_{X A}\), i.e., a contingency table is more extreme than the observed contingency table, if it has \({\varGamma }\ge \gamma ({\mathbf {X}},A)\) and \(N_{X A}\ge fr ({\mathbf {X}}A)\).

In the multinomial model only the data size \(N=n\) is fixed. Each contingency table, described by triplet \(\langle N_X, N_A, N_{X A}\rangle \), has probability \(P(N_{X A},N_{X}-N_{X A},N_A-N_{X A},n-N_X-N_A+N_{X A}\mid n,p_X,p_A)\), defined by Eq. (11). The p-value is obtained when we sum over all possible triplets where \({\varGamma }\ge \gamma ({\mathbf {X}},A)\):

where \(Q_1=\frac{nN_{X A}}{\gamma ({\mathbf {X}},A) N_X}\). (We note that the terms are zero, if \(N_X<N_{X A}\).)

In the double binomial model \(N_X= fr ({\mathbf {X}})\) is also fixed. Each contingency table, described by pair \(\langle N_A,N_{X A}\rangle \), has probability \(P(N_{X A},N_A-N_{X A}\mid n, fr ({\mathbf {X}}),p_A)\) by Eq. (12). Now we should sum over all possible pairs, where \({\varGamma }\ge \gamma ({\mathbf {X}},A)\):

where \(Q_2=\frac{nN_{X A}}{\gamma ({\mathbf {X}},A) fr ({\mathbf {X}})}\).

In the hypergeometric model also \(N_A= fr (A)\) is fixed. As noted before, the extremeness relation is now the same as in the variable-based case and the p-value is defined by Eq. (13). This is an important observation, because it means that Fisher’s exact test tests significance also in the value-based interpretation. The same is not true for the first two models, where rule \({\mathbf {X}}\rightarrow A\) can get a different p-value in variable-based and value-based interpretations.

3.3.3 Complete evaluation with a single binomial test

When \(N_X\) and/or \(N_A\) are unfixed the p-values are quite heavy to compute. Therefore, we will now introduce a simple binomial model (suggested by Hämäläinen (2010b) and as model 2 by Lallich et al. (2005)), where it is enough to sum over just one variable. The binomial probability can be further estimated by an equivalent z-score or the z-score can be used as an asymptotic test measure as such. Contrary to the previously described binomial test, this test performs a complete evaluation in the whole data set, which means that the p-values of different rules are comparable.

Let us suppose that we have an infinite basket of apples where the probability of red and sweet apples is \(p_{XA}\). According to the independence assumption \(p_{XA}=p_Xp_A\). A sample of n apples is taken randomly from the basket. The probability of obtaining \(N_{XA}\) sweet red apples among all n apples is defined by binomial probability

Since \(N_{X A}\) is the only variable which occurs in the probability, the extremeness relation is defined simply by \(\tau _i \succeq \tau _ o \Leftrightarrow N_{X A}\ge fr ({\mathbf {X}}A)\). When the unknown parameters \(p_X\) and \(p_A\) are estimated from the data, the p-value of rule \({\mathbf {X}}\rightarrow A\) becomes

Since \(N_{X A}\) is a binomial variable with expected value \({\hat{\mu }}=nP({\mathbf {X}})P(A)\) and standard deviation \({\hat{\sigma }}=\sqrt{nP({\mathbf {X}})P(A)(1-P({\mathbf {X}})P(A))}\), the corresponding z-score is

Because the discrete binomial distribution is approximated by the continuous normal distribution, the continuity correction can be useful, like with the \(\chi ^2\)-measure.

We note that this binomial probability and the corresponding z-score are exchangeable, which is intuitively a desired property. However, the statistical significance of positive dependence between \({\mathbf {X}}\) and A is generally not the same as the significance of negative dependence between \({\mathbf {X}}\) and \(\lnot A\). For example, the z-score for negative (or, equally, positive) dependence between \({\mathbf {X}}\) and \(\lnot A\) is

3.3.4 Selecting the right model