Abstract

As driving environments become more non-linear and complex, developing and optimizing the related applications is becoming more crucial and remains an open challenge for researchers and automotive companies alike. Model predictive control (MPC) is a well-known classical control strategy that solves an optimization problem online, but it is computationally expensive and resource-consuming. Recently, machine learning has become an effective alternative to classical control systems. This paper presents a deep neural network (DNN)-based control strategy for automated steering deployed on an FPGA. The DNN model was designed and trained based on the behavior of a traditional MPC controller, and its performance is evaluated against that of the designed MPC, which has already proved its merit in the automated driving task. A new automatic intellectual property (IP) generator based on the Xilinx System Generator (XSG) has been developed, not only to perform the deployment but also to optimize it. Performance was evaluated based on the ability of the controllers to drive the lateral deviation and yaw angle of the vehicle as close as possible to zero. The DNN model was implemented on the FPGA using two different data types, fixed-point and floating-point, in order to evaluate efficiency in terms of performance and resource consumption. The results show that the suggested DNN model provided satisfactory performance and successfully imitated the behavior of the traditional MPC with a very small root mean square error (RMSE = 0.011228 rad). Additionally, the results show that the deployment using fixed-point data greatly reduced resource consumption compared to the floating-point data type while maintaining satisfactory performance and meeting the safety conditions.

1 Introduction

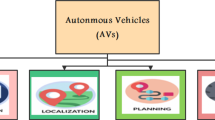

The rapid increase in the number of cars on the roads has increased the risks associated with safety and traffic congestion. Recently, autonomous vehicles have been considered as a potential solution to these problems. Autonomous vehicles can achieve more robust operation by providing more efficient driving systems and automated control [1]. The core functions of the autonomous vehicle can be divided into three main categories: perception, planning, and control (see Fig. 1). Environment perception provides the vehicle with the required information about the surrounding driving environment, including the vehicle’s location, the drivable areas, the velocity, etc. Different sensors can be used for the perception task, such as ultrasonic sensors, cameras, LiDARs (Light Detection And Ranging), or a combination of these (sensor fusion) to decrease the uncertainty of the data [2]. Based on the collected data, the planning module evaluates the possible scenarios and determines the control actions required to drive the vehicle efficiently to the desired location. In the control function, the commands are sent to the actuators to put the control strategy into action [3]. Model predictive control (MPC) is one of the most commonly used control strategies due to its ability to solve an online optimization problem and handle soft and hard constraints. However, for complex and highly non-linear systems, implementing MPC is a great challenge. It is often infeasible due to its high computational demands, especially on resource-limited embedded platforms [4]. Consequently, the competition in this field has concentrated on developing control strategies that minimize the computational load and the execution time.
The strong performance of machine learning methods across a variety of applications has drawn attention to their use in automotive driving systems. The promising results of deep neural networks in environment perception and motion planning sparked interest in developing deep neural network-based control strategies [5, 6]. The deep neural network is considered a self-optimized method due to its ability to optimize its behavior based on the provided information, which makes DNNs suitable for complex dynamic systems [7].

Additionally, deep neural networks offer other benefits in terms of reducing the execution time and the computational load, which makes implementations on resource-limited hardware (HW) more efficient [8].

The main contribution of this work is a novel method for implementing deep neural networks on FPGAs, the “IP Generator Tool”, where an MPC-based DNN model for an automated driving system is the application case used to demonstrate the robustness of the new tool. Some papers propose implementations of model predictive controllers on FPGAs using different implementation methods such as high-level synthesis [9], the Xilinx System Generator [10] or HDL (Hardware Description Language) [11]. In this paper, the suggested solution is to develop a deep neural network model based on the behavior of the traditional MPC controller so that the DNN model can replace the MPC controller in highly complex driving environments. Additionally, a new automatic IP generator tool is developed to optimize the deployment of the DNN on the FPGA, meaning that a low-end FPGA can be used. The paper is structured as follows: The second section provides background and discusses the state of the art concerning the most commonly used control strategies and deployment methods on different embedded platforms. The third section presents the design process of the vehicle model and the traditional MPC controller. Section four presents the design of the DNN model. The procedure for auto-generating the DNN IP and the implementation process of the entire solution for the DNN on a hardware accelerator are presented in section five. Results and discussion are found in section six, while the conclusions and the future direction of this research are presented in the final section.

2 Background

Different control strategies can be used to perform the automated steering task, such as classical feedback control (for example, the PID controller), dynamic control and model-based control. In this context, model predictive control is one of the most commonly used control strategies for the steering task. It is known for its efficiency, which stems from its ability to solve an online optimization problem while handling multiple inputs and outputs and taking into consideration the interactions between these variables. The prediction strategy of the MPC controller is performed over the prediction horizon, which represents the next P time steps that the controller looks ahead into the future [12]. The MPC controller simulates several future scenarios, and the optimizer chooses the best scenario based on the cost function, which represents the error between the reference target and the predicted outputs. The optimal scenario corresponds to the minimum of the cost function. Figure 2 shows the main structure of the MPC controller. The MPC controller is computationally expensive since it solves the online optimization problem at every time step, which requires high computing capabilities and large memory. The computational load and the high resource consumption make the implementation of MPC on resource-limited platforms a big challenge [13]. Despite the increasing popularity of Python, MATLAB is still a powerful environment that users in various fields rely on for a huge range of applications. A MATLAB toolbox was released in September 2020 for the creation of DNN IPs for FPGAs [14], but this tool forces the developer to use a high-end FPGA, even for small DNN structures. Some works propose different methods or tools to implement DNNs on FPGAs [15,16,17,18,19,20,21]. As mentioned earlier, besides developing the DNN model, one of the motivations behind this work is to propose an alternative tool to implement deep neural networks on low-end FPGAs. The work includes creating the back-end of an automated tool to generate deep neural network Intellectual Properties (IPs) for further FPGA implementation.
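
The receding-horizon idea described above can be illustrated with a minimal sketch. It assumes a hypothetical scalar model `x[k+1] = a*x[k] + b*u[k]`, a zero reference, and an exhaustive search over a small set of candidate moves standing in for the optimizer; a practical MPC solves a constrained optimization problem instead, and none of the names or values below come from the paper.

```python
import itertools
import numpy as np


def predict(x0, controls, a=1.0, b=0.1):
    """Roll the hypothetical scalar model x[k+1] = a*x[k] + b*u[k] forward."""
    x = x0
    trajectory = []
    for u in controls:
        x = a * x + b * u
        trajectory.append(x)
    return np.array(trajectory)


def cost(trajectory, reference=0.0):
    """Sum of squared errors between predicted outputs and the reference."""
    return float(np.sum((trajectory - reference) ** 2))


def mpc_step(x0, candidates, horizon_p=5, horizon_m=2):
    """Return the first move of the cheapest scenario and its cost.

    horizon_m free moves are enumerated; the last one is held constant
    for the remainder of the prediction horizon horizon_p.
    """
    best_u, best_cost = None, float("inf")
    for moves in itertools.product(candidates, repeat=horizon_m):
        controls = list(moves) + [moves[-1]] * (horizon_p - horizon_m)
        scenario_cost = cost(predict(x0, controls))
        if scenario_cost < best_cost:
            best_u, best_cost = moves[0], scenario_cost
    return best_u, best_cost
```

Only the first move of the best scenario is applied; at the next time step the search is repeated from the newly measured state, which is why the computational load recurs at every sample.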

3 Design of the MPC controller

The MPC drives the vehicle to the target point along the desired trajectory by controlling the lateral deviation d and the relative yaw angle \(\theta \) of the vehicle. Keeping these variables at zero, or as close as possible to zero, is the online optimization problem that the MPC controller must handle in real time. Since MPC is a model-based controller, the design process has two main steps: first designing the plant model (the vehicle), and then designing the MPC controller. The design process includes tuning the parameters of the controller and formulating the operating conditions imposed by the system in the form of soft and hard constraints.

3.1 The vehicle model

The dynamic model is represented by Eqs. 1, 2 and 3. Figure 3 shows the global position of the vehicle, where \(v_x\), \(v_y\) are the longitudinal and the lateral velocities respectively, d is the lateral deviation, m is the total mass of the vehicle, \(l_r\) is the distance between the rear tire and the center of gravity, \(l_f\) is the distance between the front tire and the center of gravity, \(l_z\) is the yaw moment of inertia, \(c_f\), \(c_r\) are the cornering stiffnesses of the front and rear tires respectively, \(\delta \) is the steering angle, \(\theta \) is the yaw angle, \(\rho \) is the curvature, and \(\omega \) is the yaw rate. The lateral and yaw motions are determined by the fundamental laws of motion, meaning that they are determined by the forces applied to the front and rear tires.
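
Since Eqs. 1 to 3 are not reproduced in this text, the following is only a sketch of a commonly used linear lateral (bicycle) model written with the symbols defined above. It should not be read as the paper's exact equations: the factor 2 on the cornering stiffnesses assumes per-tire values with two tires per axle, `I_z` stands for the yaw moment of inertia, the error dynamics use a small-angle approximation, and all parameter values are hypothetical.

```python
import numpy as np

# Hypothetical parameter values for illustration only (not taken from the paper).
PARAMS = {
    "m": 1575.0,     # total mass [kg]
    "I_z": 2875.0,   # yaw moment of inertia [kg m^2]
    "l_f": 1.2,      # front tire to center of gravity [m]
    "l_r": 1.6,      # rear tire to center of gravity [m]
    "c_f": 19000.0,  # front cornering stiffness per tire [N/rad]
    "c_r": 33000.0,  # rear cornering stiffness per tire [N/rad]
    "v_x": 15.0,     # longitudinal velocity [m/s]
}


def lateral_dynamics(state, delta, rho, p=PARAMS):
    """Time derivative of [v_y, omega, d, theta] for steering delta and curvature rho."""
    v_y, omega, d, theta = state
    m, I_z, l_f, l_r = p["m"], p["I_z"], p["l_f"], p["l_r"]
    c_f, c_r, v_x = p["c_f"], p["c_r"], p["v_x"]

    # Lateral force balance (tire forces linear in the slip angles).
    dv_y = (-(2 * c_f + 2 * c_r) / (m * v_x) * v_y
            - (v_x + (2 * c_f * l_f - 2 * c_r * l_r) / (m * v_x)) * omega
            + 2 * c_f / m * delta)
    # Yaw moment balance about the center of gravity.
    domega = (-(2 * c_f * l_f - 2 * c_r * l_r) / (I_z * v_x) * v_y
              - (2 * c_f * l_f ** 2 + 2 * c_r * l_r ** 2) / (I_z * v_x) * omega
              + 2 * c_f * l_f / I_z * delta)
    # Lateral deviation and relative yaw angle with respect to the path.
    dd = v_y + v_x * theta
    dtheta = omega - v_x * rho
    return np.array([dv_y, domega, dd, dtheta])


def euler_step(state, delta, rho, dt=0.1, p=PARAMS):
    """Advance the state by one explicit Euler step of length dt."""
    return np.asarray(state, dtype=float) + dt * lateral_dynamics(state, delta, rho, p)
```

In this form the product \(v_x \rho\) enters only the yaw-angle equation, which matches its role as a measured disturbance in the controller design.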

3.2 The MPC model

The first step in designing the MPC model is to determine the input–output signals of the vehicle model and the second step is to set the parameters and determine the constraints. The manipulated variable (steering angle \(\delta \)) and the disturbance (\(v_x\) \(\rho \)) are defined as inputs, while lateral velocity \(v_y\), lateral deviation d, yaw angle \(\theta \), and yaw rate \(\omega \) are defined as outputs. The design parameters of the MPC controller were tuned during the design process and several standard recommendations were taken into consideration to determine their values. The sample time (\(T_s\)) determines the rate at which the MPC controller executes the control algorithm. If \(T_s\) is too long, the controller will not be able to respond to the disturbance in time. On the other hand, if \(T_s\) is too small, the controller will respond faster, but the computational load will increase. The prediction horizon (P) is chosen so that it covers the dynamic changes of the environment. The recommendation is to choose P to be 20 to 30 samples. Taking into consideration that the first two control actions have the highest impact on the response behaviour, a large control horizon (M) increases the computational load, while a small M increases the stability. The parameters of the MPC model are set as follows: the sample time \(T_s = 0.1\) s, the prediction horizon \(P = 2\) s, and the control horizon \(M = 2\) s. The constraints are set as follows: the steering angle is in the range [\(-\)1.04, 1.04] rad and the yaw angle rate is in the range [\(-\)0.26, 0.26] rad. The parameters were tuned during the design process until satisfactory behavior was obtained. The overall design of the MPC and plant model is shown in Fig. 4.
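
As a small worked check of these settings, the sketch below converts the prediction horizon from seconds to samples and applies the steering-angle bound as a saturation. The function names are hypothetical; only the numeric values (\(T_s = 0.1\) s, \(P = 2\) s, and the ±1.04 rad limit) are taken from the text.

```python
SAMPLE_TIME_S = 0.1        # T_s from the text
PREDICTION_HORIZON_S = 2.0  # P from the text, expressed in seconds
STEERING_LIMIT_RAD = 1.04   # steering-angle bound from the text


def horizon_in_samples(horizon_s, sample_time_s=SAMPLE_TIME_S):
    """Number of controller steps covered by a horizon given in seconds."""
    return int(round(horizon_s / sample_time_s))


def clamp_steering(delta, limit=STEERING_LIMIT_RAD):
    """Saturate a steering command to the range [-limit, limit]."""
    return max(-limit, min(limit, delta))


# A 2 s horizon at 0.1 s per sample corresponds to 20 samples,
# the lower end of the recommended 20 to 30 samples.
prediction_steps = horizon_in_samples(PREDICTION_HORIZON_S)
```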

4 Design of the DNN model

The model architecture, the data preparation, the training, the validation and the testing processes were carried out taking into consideration the nature of the task that the controller is dedicated to. The architecture of the suggested model consists of an input layer with six inputs (observations), namely the yaw angle \(\theta \), the lateral deviation d, the lateral velocity \(v_y\), the yaw angle rate \(\omega \), the disturbance \(\rho \hspace{1pt} v_x\), and the previous control action \(\delta ^*\); an output layer (regression layer) with one output (the steering angle); and fully connected hidden layers. The output layer uses the mean-squared error as its loss function (Fig. 5). After designing the MPC controller, its efficiency in solving the defined optimization problem (driving the vehicle to the desired trajectory) is verified before it is used to generate the data set that trains the DNN model.
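
The structure described above (six inputs, fully connected hidden layers, one regression output with a mean-squared-error loss) can be sketched as a plain NumPy forward pass. The number and width of the hidden layers and the ReLU activation are assumptions made for illustration, as the text does not state them, and the weights here are random rather than trained.

```python
import numpy as np


def init_network(layer_sizes, seed=0):
    """Create (weights, biases) pairs for consecutive fully connected layers."""
    rng = np.random.default_rng(seed)
    return [
        (rng.standard_normal((n_in, n_out)) * 0.1, np.zeros(n_out))
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]


def forward(params, x):
    """Map a batch of observations to steering commands.

    Hidden layers use ReLU (an assumption); the output layer is linear,
    as is usual for regression.
    """
    h = np.asarray(x, dtype=float)
    for weights, biases in params[:-1]:
        h = np.maximum(h @ weights + biases, 0.0)
    weights, biases = params[-1]
    return h @ weights + biases


def mse_loss(predicted, target):
    """Mean-squared error between predicted and reference steering angles."""
    return float(np.mean((np.asarray(predicted) - np.asarray(target)) ** 2))


# Hypothetical layout: 6 inputs, two hidden layers of 16 neurons, 1 output.
network = init_network([6, 16, 16, 1])
# Observation order: theta, d, v_y, omega, rho*v_x, previous delta.
steering = forward(network, np.zeros((4, 6)))
```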

4.1 Data preparation and training process

The data set is generated by running the MPC controller against a large number of scenarios that cover as many of the possible environment states as practical, and recording the resulting control actions. The generated data set has size (120,000 \(\times \) 6) and double data type, where 6 refers to the number of state-space variables. The data set is divided into three sets: a training set used to train the model, a validation set used to validate the model during training, and a testing set used to test the model after training. After designing the deep neural network and defining the training options, the training process is performed using the training and validation data sets. The training stops after the final iteration. Training required 9680 iterations: 40 epochs with 242 iterations each (40 \(\times \) 242 = 9680). Validation was performed every 50 iterations. The validation loss (root mean square error, RMSE) was almost the same for each mini-batch (RMSE = 0.010799), which indicates that the trained DNN does not overfit. The trained neural network was then tested using the testing data set. The performance of the DNN model is evaluated against that of the MPC controller by calculating the RMSE between the outputs of the two controllers. The RMSE obtained at the end of the testing process was 0.011228 rad, which is very small compared to the range of the steering angle, [\(-\)1.04, 1.04] rad. This small value indicates that the DNN model successfully imitated the behavior of the MPC controller. The training options used to train the DNN model are presented in Table 1.
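
The arithmetic in this paragraph can be checked with a short sketch. The `rmse` helper is a generic definition rather than the authors' code; the numeric inputs (40 epochs, 242 iterations per epoch, RMSE = 0.011228 rad, steering range ±1.04 rad) are the values reported above.

```python
import numpy as np


def rmse(predicted, reference):
    """Root mean square error between two equally long sequences."""
    predicted = np.asarray(predicted, dtype=float)
    reference = np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean((predicted - reference) ** 2)))


EPOCHS = 40
ITERATIONS_PER_EPOCH = 242
total_iterations = EPOCHS * ITERATIONS_PER_EPOCH  # 9680, as reported

TEST_RMSE_RAD = 0.011228
STEERING_RANGE_RAD = 1.04 - (-1.04)  # full width of the steering range, 2.08 rad

# Test error as a fraction of the full steering range (about 0.54 %).
relative_error = TEST_RMSE_RAD / STEERING_RANGE_RAD
```

Expressed this way, the reported test error is roughly half a percent of the available steering range.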

5 Auto generation of DNN IP procedures

Custom IP generation is a tricky but crucial step for FPGA implementations. Various tools are available to designers for this step, but all seem to be time-consuming, especially for applications that consume large computational resources. Deep neural networks are becoming widely used in all fields; hence, their deployment must be simplified for developers, engineers, and scientists [22]. However, DNNs are time- and resource-consuming if they are implemented on sequential computational systems such as \(\mu P\)/\(\mu C\) (microprocessors/microcontrollers) or Digital Signal Processors (DSPs) [23]. That is why DNNs are more likely to be implemented on parallel computing systems such as FPGAs and graphical processing units (GPUs). The most economical solution for these applications is to adopt a dedicated application-specific integrated circuit (ASIC), provided that it is mass-produced. GPUs are known for their ability to execute several parallel processes, which makes them well suited to image processing [24]. Since neural networks can be computed in parallel, GPUs can be dedicated to such applications [9, 25,26,27]. However, they are known to be power-consuming, which makes them inadequate for embedded applications. Re-configurable computing is an efficient alternative for parallel processing, where all the computations can be executed at the same time. The most common re-configurable technologies are FPGAs [10, 28]; in addition, field-programmable analog arrays (FPAAs) have recently become a hot research topic [26, 27]. However, because of their lack of hardware resources, the use of FPAAs is limited to scientific research applications [29]. Many studies have shown that FPGA-based neural network implementations provide much better results in terms of power consumption and timing performance [10, 22, 30]. However, the lack of FPGA hardware resources restricts their use to limited DNN sizes.
In this context, the presented tool is developed to implement deep neural networks on low-end FPGAs, where the user is given the option to optimize the model (parameters, data type, etc.) in a way that balances the available resources against the desired performance. The FPGA targeted in this work is the Xilinx Kintex-7 KC705, which is known for its limited hardware resources. Nevertheless, the new tool is able to deploy the generated IP on larger FPGAs/SoCs. The tool is based on the Xilinx System Generator (XSG), where blocks are automatically invoked, parameterized, and linked from a script. The procedure for auto-generating the neural network’s IP has two main steps, as shown in Fig. 6.

First, the user is asked to define the parameters of the DNN (the structure, the data type and the activation function) and the targeted computational hardware, and the values of the weights and biases are imported from the pre-trained DNN. Then comes the step of setting the input/output interfacing mode, for which four options are available: UART, AXI, constrained parallel, and no interface. If the UART interfacing mode is selected, two additional pre-designed IPs are invoked that are responsible for receiving and transmitting UART data from/to the DNN IP. In this mode, the user is asked to specify the ports to be used for Tx and Rx. If the AXI mode is selected, the DNN IP can communicate with the processing system (PS) or the soft microprocessor core. If the UART or AXI mode is set, additional IPs are invoked (UART_Tx IP, UART_Rx IP, AXI-interface IP, and Processor System Reset IP); hence, some additional hardware resources and power are required. The other disadvantage of the AXI interfacing mode is its power consumption, since the PS consumes 1.53 W. In this paper, only the AXI interfacing mode is utilized (the UART mode will be treated in future work). The third interfacing mode is the constrained parallel I/O, where the user is asked to specify the ports to be used. This mode allows parallel communication from/to the DNN, so there is no latency caused by data transmission and reception; however, it consumes a lot of I/O resources and is therefore not practical for the majority of DNN applications. If no interface mode is selected, the connection is unconstrained, which permits IP-to-IP interconnection.
Table 2 summarizes the interfacing possibilities of the presented tool, where the AXI interface remains the appropriate one for the application studied in this paper. After setting up the DNN-IP preferences, the XSG automation part begins, which consists of invoking the elementary computational components needed for each neuron, linking the components and the neurons, setting the weights and biases accordingly, implementing the I/O, and then generating the IP. Figure 7 shows the auto-generated DNN circuit in the Xilinx System Generator to be implemented on a low-end FPGA.
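
To make the user-facing choices concrete, the sketch below models them as a small validated configuration object. It is purely illustrative and is not the tool's actual interface: the class, field names, and allowed strings are hypothetical, and the split of the additional IPs between the UART and AXI modes is an assumption inferred from the IP names listed in the text.

```python
from dataclasses import dataclass

# Additional IPs invoked per interfacing mode (assignment to modes is assumed).
INTERFACE_IPS = {
    "uart": ("UART_Tx IP", "UART_Rx IP"),
    "axi": ("AXI-interface IP", "Processor System Reset IP"),
    "parallel": (),  # constrained parallel I/O: user-specified ports only
    "none": (),      # unconstrained, for IP-to-IP interconnection
}


@dataclass(frozen=True)
class DnnIpConfig:
    """Hypothetical container for the preferences collected in the first step."""
    layer_sizes: tuple  # neurons per layer, inputs first, outputs last
    data_type: str      # "fixed" or "float"
    activation: str     # activation function name
    interface: str      # one of the keys of INTERFACE_IPS

    def __post_init__(self):
        if len(self.layer_sizes) < 2:
            raise ValueError("need at least an input and an output layer")
        if self.data_type not in ("fixed", "float"):
            raise ValueError(f"unknown data type: {self.data_type}")
        if self.interface not in INTERFACE_IPS:
            raise ValueError(f"unknown interface mode: {self.interface}")

    def extra_ips(self):
        """IPs that would be invoked in addition to the DNN IP itself."""
        return INTERFACE_IPS[self.interface]


config = DnnIpConfig(layer_sizes=(6, 16, 16, 1), data_type="fixed",
                     activation="relu", interface="axi")
```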

The implementation of an FPGA design is not a straightforward process, due to the lack of a direct connection between the algorithm design and the hardware, in addition to the deviations that can be caused by the difference between the fixed-point and floating-point implementations of the algorithm’s specification. Also, hand-written code is error-prone and can be hard to debug. In order to address these problems and provide an integrated workflow with a unified environment for algorithm design, simulation, validation, and implementation, the suggested solution was built using MATLAB, Simulink, and the Xilinx System Generator-based tool “Automatic DNN IP Generator”. Figure 8 shows the detailed steps of the implementation.
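
The deviation between fixed-point and floating-point implementations mentioned above can be illustrated by rounding values to a signed fixed-point grid and measuring the resulting error. The word length and fraction length used here (16 and 12 bits) are assumptions chosen for illustration; the text does not state the format used in the deployment.

```python
import numpy as np


def to_fixed_point(values, word_bits=16, frac_bits=12):
    """Round to a signed fixed-point grid and saturate at the representable range.

    The result is returned as floats so it can be compared with the originals.
    """
    scale = 2 ** frac_bits
    lowest = -(2 ** (word_bits - 1))
    highest = 2 ** (word_bits - 1) - 1
    quantized = np.clip(np.round(np.asarray(values, dtype=float) * scale),
                        lowest, highest)
    return quantized / scale


# Quantization error for values well inside the representable range.
weights = np.linspace(-1.0, 1.0, 1001)
max_error = float(np.max(np.abs(to_fixed_point(weights) - weights)))
```

For in-range values the rounding error is bounded by half of the least significant bit, here \(2^{-13}\); values outside the range saturate instead, which is the kind of effect that has to be checked when weights and activations are moved to fixed-point.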

6 Results and discussion

The implementations of the traditional MPC, the DNN model, and the deployment of the DNN on the FPGA are discussed in terms of performance, taking the response of the traditional MPC as the reference behavior. In addition to performance, the deployments using floating-point and fixed-point data types are discussed in terms of resource consumption. The performance of the controllers was evaluated based on the settling time \(T_s\), the overshoot \(M_p\), and the final (steady-state) value of the yaw angle and the lateral deviation of the vehicle. The overshoot is the amount by which the lateral deviation or yaw angle exceeds its target (final) value, while the settling time is the time required to settle and reach the final value within a certain percentage. The settling time of each performance indicator was taken as the time at which the signal comes within 5% (a commonly used band) of its final value. Figure 9 shows that the DNN model and the traditional MPC have a very similar response, meaning that the DNN model successfully imitated the behavior of the traditional MPC, while the DNN on the FPGA has a slightly different response. In order to evaluate these behaviors, Fig. 10, Fig. 11 and Table 3 present the performance indicators, which show that the traditional MPC, the DNN model, and the DNN on the FPGA all successfully drive the lateral deviation and yaw angle to zero, or very close to zero, as the desired steady state. The detailed results show that the settling time for both indicators is almost the same for the traditional MPC and the DNN model, while the DNN on the FPGA showed approximately 0.342 s for the lateral deviation and 0.3013 s for the yaw angle.
On the other hand, the behavior was very similar with regard to overshoot, where only 0.0492 m for the lateral deviation and 0.0339 rad for the yaw angle were observed in the response of the DNN on the FPGA compared to the response of the traditional MPC. These results demonstrate that the trained DNN model provided satisfactory performance and that the vehicle was driven smoothly to the desired destination. Despite the slightly higher overshoot and settling time observed in the behaviour of the DNN after being deployed on hardware (FPGA) compared to the simulation, the DNN on the FPGA provided satisfactory performance and met the safety requirements defined in the design process. In addition to performance, in order to evaluate the efficiency of deploying the DNN on the FPGA in terms of resource consumption, the main estimated resource utilization of the deployments using fixed-point and floating-point data types is compared in Table 4. The results show that the DNN on the FPGA using fixed-point data consumes fewer resources than with floating-point data, where \(86.29 \% \) of the LUTs and \(51.54 \%\) of the DSPs were saved from the overall resource availability of the FPGA board.
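
The two performance indicators can be computed from a sampled response as in the sketch below. It is not the authors' evaluation code: because the target value is zero, the 5% band is taken here relative to the initial offset from the final value, which is an assumption, and overshoot is measured as the largest excursion past the final value on the side opposite to the starting point.

```python
import numpy as np


def settling_time(t, y, final_value=0.0, band=0.05):
    """First time after which y stays within the band around final_value.

    Returns None if the signal is still outside the band at the last sample.
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    tolerance = band * abs(y[0] - final_value)
    outside = np.nonzero(np.abs(y - final_value) > tolerance)[0]
    if outside.size == 0:
        return float(t[0])
    if outside[-1] == len(y) - 1:
        return None
    return float(t[outside[-1] + 1])


def overshoot(y, final_value=0.0):
    """Largest excursion beyond final_value, away from the starting side."""
    y = np.asarray(y, dtype=float)
    direction = np.sign(final_value - y[0])
    excursion = direction * (y - final_value)
    return float(max(excursion.max(), 0.0))


# Example: a first-order decay from 1 to 0 sampled every 0.01 s.
time = np.linspace(0.0, 10.0, 1001)
response = np.exp(-time)
ts = settling_time(time, response)
mp = overshoot(response)
```

For this monotonic example the response never crosses zero, so the overshoot is zero and the settling time is the first sample at which the signal has decayed below 5% of its initial value.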

7 Conclusions

In this paper, a deep neural network was designed and trained based on the behavior of a traditional MPC controller. A new tool based on the Xilinx System Generator was developed to perform and optimize the deployment of the DNN model on FPGAs. Results showed that the trained model successfully imitated the behavior of the MPC controller with a very small root mean square error (\(RMSE = 0.011228\) rad). The trained DNN model was efficiently deployed on a low-end FPGA, the Xilinx Kintex-7 KC705, using the fixed-point data type, achieving satisfactory performance and meeting the design constraints.

References

Yurtsever E, Lambert J, Carballo A, Takeda K (2020) A survey of autonomous driving: common practices and emerging technologies. IEEE Access 8:58443–58469. https://doi.org/10.1109/ACCESS.2020.2983149

Best A, Narang S, Barber D, Manocha D (2017) AutonoVi: autonomous vehicle planning with dynamic maneuvers and traffic constraints. In: 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp 2629–2636. https://doi.org/10.1109/IROS.2017.8206087

Pendleton SD et al (2017) Perception planning control and coordination for autonomous vehicles. Machines 5(1):6

Swief A, El-Zawawi A, El-Habrouk M, Eldin AN (2020) Approximate neural network model for adaptive model predictive control. In: 6th international computer engineering conference (ICENCO), pp 135–140. https://doi.org/10.1109/ICENCO49778.2020.9357373

Masmoudi M, Ghazzai H, Frikha M, Massoud Y (2019) Object detection learning techniques for autonomous vehicle applications. In: 2019 IEEE international conference on vehicular electronics and safety (ICVES), pp 1–5. https://doi.org/10.1109/ICVES.2019.8906437

Lamouik I, Yahyaouy A, Sabri MA (2018) Deep neural network dynamic traffic routing system for vehicles. In: 2018 international conference on intelligent systems and computer vision (ISCV), pp 1–4. https://doi.org/10.1109/ISACV.2018.8354012

Rausch V, Hansen A, Solowjow E, Liu C, Kreuzer E, Hedrick JK (2017) Learning a deep neural net policy for end-to-end control of autonomous vehicles. In: 2017 American control conference (ACC), pp 4914–4919. https://doi.org/10.23919/ACC.2017.7963716

Li Q, Xiao Q, Liang Y (2017) Enabling high performance deep learning networks on embedded systems. In: IECON 2017—43rd annual conference of the IEEE industrial electronics society, pp 8405–8410. https://doi.org/10.1109/IECON.2017.8217476

Bin Khalid SA, Liegmann E, Karamanakos P, Kennel R (2020) High-level synthesis of a long horizon model predictive control algorithm for an FPGA. In: PCIM Eur. digit days 2020, Int. Exhib. Conf. Power Electronics, Intell. Motion, Renewable Energy and Energy Manage, pp 1–8

Singh VK, Tripathi RN, Hanamoto T (2021) Implementation strategy for resource optimization of FPGA-based adaptive finite control set-MPC using XSG for a VSI system. IEEE J Emerg Sel Top Power Electron 9(2):2066–2078

Ingole D, Holaza J, Takács B, Kvasnica M (2015) FPGA-based explicit model predictive control for closed-loop control of intravenous anesthesia. In: 20th international conference on process control (PC), pp 42–47. https://doi.org/10.1109/PC.2015.7169936

Kumar K, Davim JP (2019) Brief review of mathematical optimization. International Society for Technology in Education (ISTE)

Ghaffari A, Savaria Y (2020) CNN2Gate: an implementation of convolutional neural networks inference on FPGAS with automated design space exploration. Electronics 9(12):2200. https://doi.org/10.3390/electronics9122200

Deep Learning HDL Toolbox. MathWorks [Online]. https://www.mathworks.com/help/pdf_doc/deep-learning-hdl/dlhdl_ug.pdf

Moolchandani D, Kumar A, Sarangi SR (2021) Accelerating CNN inference on ASICs: a survey. J Syst Architect. https://doi.org/10.1016/j.sysarc.2020.101887

Moreno DG, Garcia AAD, Botella G, Hasler J (2021) A cluster of FPAAs to recognize images using neural networks. IEEE Trans Circuits Syst Exp Briefs. https://doi.org/10.36227/techrxiv.14060954.v1

Shah S, Hasler J (2018) SOC FPAA hardware implementation of a VMM+WTA embedded learning classifier. IEEE J Emerg Sel Top Circuits Syst 8(1):28–37

Wu R, Guo X, Du J, Li J (2021) Accelerating neural network inference on FPGA-based platforms—a survey. Electronics. https://doi.org/10.3390/electronics10091025

Diab MS, Mahmoud SA (2020) Survey on field programmable analog array architectures eliminating routing network. IEEE Access. https://doi.org/10.1109/ACCESS.2020.3043292

Lee J, He J, Wang K (2021) FPGA-based neural network accelerators for millimeter-wave radio-over-fiber systems. Opt Express 28(9):13384–13400

Hernández WC, Pelcat M, Berry F (2021) Why is FPGA-GPU heterogeneity the best option for embedded deep neural networks. In: Workshop system-level design methods for deep learning on heterogeneous architectures (SLOHA)

Shi S, Wang Q, Xu P, Chu X (2016) Benchmarking state-of-the-art deep learning software tools. In: International conference on cloud computing and big data (CCBD), pp 99–104. https://doi.org/10.1109/CCBD.2016.029

Que Z, Zhu Y, Fan H, Meng J, Niu X, Luk W (2020) Mapping large LSTMs to FPGAs with weight reuse. J. Signal Process. Syst. 92(9):965–979

Colbert I, Daly J, Kreutz-Delgado K, Das S (2021) A competitive edge: can FPGAs beat GPUs at DCNN inference acceleration in resource-limited edge computing applications?. Preprint arXiv:2102.00294

Shahshahani M, Sabri M, Khabbazan B, Bhatia D (2021) An automated tool for implementing deep neural networks on FPGA. In: 34th International conference on VLSI design and 2021 20th international conference on embedded systems (VLSID), pp 322–327. https://doi.org/10.1109/VLSID51830.2021.00060

Shawahna A, Sait SM, El-Maleh A (2019) FPGA-based accelerators of deep learning networks for learning and classification: a review. IEEE Access 7:7823–7859. https://doi.org/10.1109/ACCESS.2018.2890150

Ma Y, Suda N, Cao Y, Vrudhula S, Seo JS (2018) ALAMO: FPGA acceleration of deep learning algorithms with a modularized RTL compiler. Integration 62:14–23

Zhang X et al (2018) DNNBuilder: an automated tool for building high-performance DNN hardware accelerators for FPGAs. In: 2018 IEEE/international conference on computer-aided design (ICCAD), pp 1–8. https://doi.org/10.1145/3240765.3240801

Guan Y et al (2017) FP-DNN: an automated framework for mapping deep neural networks onto FPGAs with RTL-HLS hybrid templates. In: 2017 IEEE 25th Annu. Int. Sympo. Field-Programmable Custom Comput. Machines (FCCM), pp 152–159. https://doi.org/10.1109/FCCM.2017.25

Riazati M, Daneshtalab M, Sjödin M, Lisper B (2020) DeepHLS: a complete toolchain for automatic synthesis of deep neural networks to FPGA. In: 2020 27th IEEE international conference on electronics, circuits and systems (ICECS), pp 1–4. https://doi.org/10.1109/ICECS49266.2020.9294881

Acknowledgements

The authors are thankful for the continuous support of AMD under the Heterogeneous Accelerated Compute Clusters (HACC) program (formerly known as the XACC program, the Xilinx Adaptive Compute Cluster program) and are also grateful to Xilinx for the KRIA board.

Funding

Open access funding provided by University of Miskolc. The research was not funded by any organisation or any other person.

Ethics declarations

Conflict of interest

The authors declare that they have no potential conflict of interest (financial or non-financial).

Ethical approval

To ensure objectivity and transparency in research and to ensure that accepted principles of ethical and professional conduct have been followed, authors declare.

Human participants and animals

The research did not involve any human participants or animals.

Informed consent

No informed consent was required.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Reda, A., Bouzid, A.A., Zghaibe, A. et al. Model predictive-based DNN control model for automated steering deployed on FPGA using an automatic IP generator tool. Des Autom Embed Syst (2024). https://doi.org/10.1007/s10617-024-09287-x

DOI: https://doi.org/10.1007/s10617-024-09287-x