Abstract

Calibrating multidimensional economic models has proven difficult, as there is a high risk of model misidentification. In this paper we propose a multi-stage calibration method to estimate the six parameters of a commodity market price model that includes storage. We assume that commodity prices are derived from the optimal commodity storage time when the demand process follows a mean-reverting log-Ornstein–Uhlenbeck process. Using two alternative value functions, we first propose a two-stage method to maximize the likelihood functions obtained by the Milstein method. Then, by considering a regularized likelihood function, we propose a multi-stage method to calibrate the parameters of our problem. After verifying that our method performs well on simulated data, we apply it to actual data and calibrate the parameters. We observe that our multi-stage calibration method is robust and that the storage model outperforms the non-storage model.


1 Introduction

Storage models for commodity prices have been developed over the past decades and have proven very useful in economic analysis. However, the literature has not yet put these models to use in quantitative risk management. For that purpose, a robust calibration of the model is a major requirement, and it is the main goal of this paper. The calibration of the storage models in the extant literature, which we review shortly, is a challenging task, mainly due to the nonlinear structure of the demand function and the high number of parameters. This warrants a novel method that combines statistical and machine learning methods in a multi-stage process.

We base our discussion on the storage model in the economics literature, which dates back to Gustafson (1958) and was further developed by incorporating rational expectations in Muth (1961) and Samuelson (1997). Deaton and Laroque (1992, 1995, 1996) developed a partial equilibrium structural model of commodity price determination and applied numerical methods to test the model and estimate its parameters. More recently, many authors have refined the storage model to better capture the statistical characteristics of prices; see, for instance, Chambers and Bailey (2001), Ng and Ruge-Murcia (2000), Cafiero et al. (2011), Miao and Funke (2011), Arseneau and Leduc (2012) and Assa (2015). Many articles in the literature address the calibration of their models to market data (see Deaton and Laroque 1992, 1995, 1996). The difficulty of calibrating storage models comes from the fact that the demand function is usually nonlinear and intractable. Most commodity prices display mean-reverting behavior, which is documented in several papers; see, e.g., Barlow (2002), Bessembinder et al. (1995), Casassus and Collin-Dufresne (2005), Casassus et al. (2018), Pindyck (2001), Routledge et al. (2000), Gibson and Schwartz (1990a) and Schwartz (1997), among others. A simple model that captures mean reversion is the Ornstein–Uhlenbeck (OU) process (Chaiyapo and Phewchean 2017; Vega 2018). Gibson and Schwartz (1990b) proposed using an OU process to model commodity prices when storage is at play; see also Lautier (2005) and Schwartz (1997). Storage models are also closely related to optimal stopping problems, and many articles have used this approach and applied various methods to solve the associated optimal stopping problem.
For instance, Peskir and Shiryaev (2006) study the general theory of optimal stopping and treat a range of optimal stopping problems arising in mathematical statistics, mathematical finance and financial engineering. The optimal stopping problem with deterministic drift is considered in several cases in Shiryaev et al. (2008). For the estimation of the parameters of optimal stopping problems and their applications in finance, see Liptser and Shiryaev (2001), Shiryaev (2008), Khanh (2012, 2014) and Van Khanh (2015). However, unlike most of the papers mentioned above, we need an infinite rather than a finite horizon.

Following the literature, we choose a mean-reverting model. However, since we use a more fundamental equilibrium argument based on the demand process and the demand function, we first use a mean-reverting process to describe demand and then use this process to derive market prices. This yields a so-called stationary rational expectations equilibrium demand function, which we later call the value function (see Notes), whose six parameters are the main objects to be estimated. As there is no closed-form solution for the value function, we consider two alternatives, namely the power and the exponential families, to estimate the parameters. Then, observing the poor performance of the popular Euler-Maruyama method (see Kloeden and Pearson 1977), we adopt the higher-order Milstein method (see Mil’shtein 1974) to construct an efficient likelihood function. Next, a simple change of variables, which introduces a Jacobian term, allows us to estimate the parameters from the observed prices rather than from the latent demand process, and yields a log-likelihood function with two parts. This suggests a two-stage estimator, which performs very well on simulated data. We then combine this method with a regularization rule that estimates the parameters of the simulated data more accurately, and we validate it using cross-validation. With this additional step we obtain a multi-stage (more precisely, three-stage) calibration method. Finally, we apply our multi-stage method to monthly data on actual commodity prices. We observe that our estimates are robust; for instance, they do not change significantly when we change the alternative value function or the domain of the hyper-parameter.

The paper is organized as follows. Sect. 2 introduces the storage model and the alternative models. Section 3 introduces a two-stage estimation method and applies it to calibrate the demand function and demand process parameters for the two proposed alternative value functions, on both simulated and actual data. Section 4 introduces a multi-stage calibration method by adding a regularization term to the likelihood function and uses cross-validation to validate the calibration. Section 5 concludes.

2 Model

Let us consider a filtered probability space \(\left( \Omega ,{\mathbb {P}},{\mathcal {F}},\left\{ {\mathcal {F}}_{t}\right\} _{0\le t\le T}\right) \), where \(\Omega \) is the set of states of the world, \({\mathbb {P}}\) is the probability measure, \({\mathcal {F}}\) is the \(\sigma \)-algebra of measurable sets, \(\left\{ {\mathcal {F}}_{t}\right\} _{0\le t\le T}\) is a filtration satisfying the usual conditions, and \(T\in (0,\infty ]\) is the time horizon. We also consider a Brownian motion \(B_{t}\) adapted to \(\left\{ {\mathcal {F}}_{t}\right\} _{0\le t\le T}\).

We consider two kinds of agents in the market: consumers and risk-neutral producers. Section 2.1 is devoted to consumer behavior, modeling the demand quantity with a mean-reverting process, and Sect. 2.2 elaborates on the risk-neutral producers and storage.

2.1 Demand Quantity

Suppose that the demand quantity at time t has an exponential form \(x_{t}=\exp \left( y_{t}\right) \) where \(y_{t}\) satisfies the Ornstein–Uhlenbeck (OU) process

where \(\mu \) is the long-term mean, \(\sigma \) is the volatility, and \(\theta \) is the speed of mean reversion, which characterizes how quickly trajectories regroup around \(\mu \) over time. By Itô’s lemma we then have

with \(x_{0}=x>0\). As discussed in the Introduction, this model belongs to a general class of mean-reverting processes that are used for prices (see Chaiyapo and Phewchean 2017; Vega 2018). However, since we use a more fundamental economic argument to include the impact of storage on prices, we first use the log-OU process to model demand and then apply our economic argument to derive prices.
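For reference, the OU dynamics and the log-OU dynamics obtained from Itô's lemma can be written in standard notation as follows; this rendering is ours and is chosen to agree with the drift and diffusion coefficients used in Sect. 3.1. Since \(x=e^{y}\) has first and second derivatives equal to \(e^{y}\), Itô's lemma adds the correction \(\tfrac{1}{2}\sigma^{2}x_t\,dt\):

\[
\begin{aligned}
dy_t &= \theta\left(\mu - y_t\right)dt + \sigma\, dB_t,\\
dx_t &= e^{y_t}\,dy_t + \tfrac{1}{2}e^{y_t}\sigma^{2}\,dt
      = x_t\left[\theta\left(\mu - \ln x_t\right) + \tfrac{\sigma^{2}}{2}\right]dt + \sigma x_t\, dB_t .
\end{aligned}
\]

In particular, \(y_t\) has the stationary distribution \(\mathrm{N}\left(\mu,\sigma^{2}/(2\theta)\right)\), so the stationary demand \(x_t\) is log-normal.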

2.2 Storage Model

Consider an inverse demand P as follows

where a and b are positive parameters representing the demand elasticity and other economic fundamentals. When storage is not possible, producers sell their products directly to consumers at spot prices. As we are considering a linear inverse demand form (2.3), this essentially means that the price process is also log-OU. However, our aim is to study the market when storage is available. In this case, if prices are high enough, the producer sells the good at the market price; otherwise, the producer stores the commodity in anticipation of better future prices. Our model is a continuous-time model, but to explain the producer's decision process we first state it in discrete time. Hence assume that \(t=0,1,2,\ldots \) denote the times at which producers can trade. The producer's decision is then based on the spot price of the commodity as follows:

where \(p_{t}\) is the spot price, \(x_{t}\) is the demand and \(F_{t}\) is the price of the futures contract on the commodity. Here we use the fundamental economic argument of Deaton and Laroque (1992, 1995, 1996). Indeed, \(F_{t}=e^{-r}E^{{\mathbb {Q}}}\left( p_{t+1}\right) \) under the risk-neutral measure \({\mathbb {Q}}\), where r denotes the interest rate. Note that since all producers behave in the same manner, \(p_{t}\) turns out to be the spot price of the commodity.

If we denote the discounted price process by \({\tilde{p}}_{t}=e^{-rt}p_{t}\) then we simply have

in which \(\tilde{F_{t}}=E^{{\mathbb {Q}}}\left( {\tilde{p}}_{t+1}\right) \). Since prices are formed from expectations under \({\mathbb {Q}}\), we can assume \({\mathbb {P}}={\mathbb {Q}}\).
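Written as formulas, and assuming, as in Deaton and Laroque, that the producer sells whenever the spot value of the good is at least the discounted expected future price and stores otherwise, the discrete-time rule above can be sketched as

\[
p_t = \max\left\{P(x_t),\, F_t\right\},\qquad F_t = e^{-r}E^{\mathbb Q}\left(p_{t+1}\right),
\]

and, multiplying by \(e^{-rt}\) and using \(e^{-rt}F_t = E^{\mathbb Q}\left(e^{-r(t+1)}p_{t+1}\right)\),

\[
\tilde p_t = \max\left\{e^{-rt}P(x_t),\, \tilde F_t\right\},\qquad \tilde F_t = E^{\mathbb Q}\left(\tilde p_{t+1}\right).
\]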

We now seek a solution to this equation. In general there is no closed-form solution for these price dynamics; however, under some simplifications and additional assumptions one can obtain a fair approximation of the solution.

First, suppose that the economy has a finite horizon \(T<\infty \). Then the commodity producer must sell everything at the market price at time T, so that \({\tilde{p}}_{T}=e^{-rT}P(x_{T})\). From the construction of \({\tilde{p}}_{t}\), one can see that it is nothing but the Snell envelope of the discounted payoff process \(e^{-rt}P(x_{t})\), with terminal value \({\tilde{p}}_{T}=e^{-rT}P(x_{T})\). Since our demand process is in continuous time, we need a continuous-time version of the Snell envelope:

where the supremum runs over all stopping times with respect to the filtration \({\mathcal {F}}_{t}\).

To solve for the price process we need one more assumption about our economy: prices are in a steady state, which is equivalent to assuming \(T=\infty \). As is well known in the classical theory of optimal stopping, in this case the solution is a function of the starting point of the state process (here, the demand process). Hence \({\tilde{p}}_{t}={\tilde{P}}(x_{t})\), and we can rewrite (2.6) as follows:

where the superscript x indicates that the process starts from \(x_{0}=x\). In the literature this is called the stationary rational expectations equilibrium demand function (see Deaton and Laroque 1992, 1995, 1996), but we call it the value function in the sequel.
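In standard form, the continuous-time Snell envelope described above and its stationary counterpart can be sketched as follows, with the usual convention \(e^{-r\tau}P(x_\tau):=0\) on \(\{\tau=\infty\}\) in the infinite-horizon case:

\[
\tilde p_t = \operatorname*{ess\,sup}_{\tau\in[t,T]} E^{\mathbb Q}\left[e^{-r\tau}P(x_\tau)\,\middle|\,\mathcal F_t\right],
\qquad
V(x) = \sup_{\tau\ge 0} E\left[e^{-r\tau}P\left(x^{x}_\tau\right)\right].
\]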

Remark 1

It is important to distinguish between the inverse demand functions P and V. The former specifies the relationship between demand quantity and prices when there is no storage in the market, and the latter specifies the same relationship when there is storage. Since we assume our goods are storable, our aim is to specify V through the calibration introduced in the next sections.

Remark 2

The relationship between demand quantity and prices is given by the demand function. From an economic perspective, one may ask whether the former influences the latter or vice versa. This is a valid economic question; however, this paper focuses on the technical aspect of the problem, namely calibration. What matters to us is that V gives an inverse relation between prices and demand quantity, so that if either is known the other can be determined. In this paper we model the demand process \(x_{t}\) and, through our calibration, specify the parameters of both the demand process and the inverse demand function.

2.3 Value Function and Alternatives

The problem in (2.7) is well studied and can be solved in the optimal stopping framework (see Peskir and Shiryaev 2006 for more details). Our problem is closely linked to the pricing of perpetual American options. It is well known (see Peskir and Shiryaev 2006) that the solution of an American option problem satisfies a free-boundary partial differential equation whose boundary may move over time (see Carr and Itkin 2021). In our case, however, since the horizon is infinite and the coefficients of the model are time-independent, the boundary is constant, and we denote it by \(x^{*}\). Hence V satisfies the following free-boundary PDE,

where L is the infinitesimal generator of \(x_{t}\) and is given by

Moreover, by the principle of smooth fit (see Peskir and Shiryaev 2006, Section 8, p. 144), V must be continuously differentiable on its domain; in particular, at the critical value \(x^{*}\) we have \(V^{\prime }(x^{*})=P^{\prime }(x^{*})\). Furthermore, V is necessarily a decreasing function that converges to zero as \(x\rightarrow \infty \).
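A sketch of this free-boundary problem in standard form, assuming (as in Figure 1) that selling is optimal on \((0,x^{*}]\) and storing on \((x^{*},\infty)\), with the generator written out from the log-OU dynamics of \(x_t\) (drift \(x[\theta(\mu-\ln x)+\sigma^{2}/2]\), diffusion \(\sigma x\)):

\[
\begin{aligned}
\mathcal L V(x) - rV(x) &= 0, && x > x^{*},\\
V(x) &= P(x), && 0 < x \le x^{*},\\
V'(x^{*}) &= P'(x^{*}), && \lim_{x\to\infty} V(x) = 0,\\
\mathcal L &= x\left[\theta(\mu-\ln x)+\tfrac{\sigma^{2}}{2}\right]\frac{d}{dx} + \tfrac{1}{2}\sigma^{2}x^{2}\frac{d^{2}}{dx^{2}}. &&
\end{aligned}
\]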

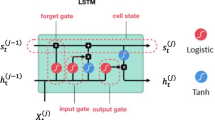

Figure 1 shows the typical shape of the value function V alongside P. As the figure shows, \(x^{*}\) is a critical value for the demand process: when demand is below \(x^{*}\), \(V(x)=P(x)\), and when demand is above \(x^{*}\), \(V(x)>P(x)\). The function V can be interpreted as the demand function once storage demand is included.
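Although V has no closed form, the qualitative shape in Figure 1 can be reproduced numerically. The Python sketch below is only an illustration under stated assumptions, not the calibration method of this paper: it places \(y=\ln x\) on a grid, uses the exact Gaussian OU transition over a small step dt (renormalized on the grid), and iterates \(V\leftarrow\max\{P,\,e^{-r\,dt}QV\}\) until convergence; \(x^{*}\) is read off as the largest grid point where \(V=P\). The isoelastic inverse demand \(P(x)=x^{-1}\), the grid settings and the function names are hypothetical choices; \(\mu,\theta,\sigma,r\) are the values used for the simulations in Sect. 3.2.

```python
import numpy as np


def ou_transition_matrix(y_grid, mu, theta, sigma, dt):
    """Row-stochastic matrix: exact Gaussian OU transition of y over dt, renormalized on the grid."""
    mean = mu + (y_grid - mu) * np.exp(-theta * dt)
    var = sigma**2 * (1.0 - np.exp(-2.0 * theta * dt)) / (2.0 * theta)
    diff = y_grid[None, :] - mean[:, None]  # row i: start at y_i, column j: land at y_j
    kernel = np.exp(-0.5 * diff**2 / var)
    return kernel / kernel.sum(axis=1, keepdims=True)


def stationary_value_function(P, mu, theta, sigma, r, dt=0.05, n=401, width=6.0,
                              tol=1e-10, max_iter=100_000):
    """Value iteration for V(x) = sup_tau E[exp(-r tau) P(x_tau)] with x = exp(y)."""
    sd = sigma / np.sqrt(2.0 * theta)  # stationary standard deviation of y
    y = np.linspace(mu - width * sd, mu + width * sd, n)
    x = np.exp(y)
    Q = ou_transition_matrix(y, mu, theta, sigma, dt)
    payoff = P(x)
    disc = np.exp(-r * dt)
    V = payoff.copy()
    for _ in range(max_iter):
        V_new = np.maximum(payoff, disc * (Q @ V))  # sell now vs. store for one more step
        converged = np.max(np.abs(V_new - V)) < tol
        V = V_new
        if converged:
            break
    sell = np.isclose(V, payoff, rtol=0.0, atol=1e-9)  # grid points where selling is optimal
    x_star = x[sell].max() if sell.any() else np.nan
    return x, V, x_star


# Hypothetical isoelastic inverse demand P(x) = 1/x with the simulation values of Sect. 3.2.
x, V, x_star = stationary_value_function(lambda z: z ** -1.0, mu=0.5, theta=1.0, sigma=0.1, r=0.15)
print(f"estimated x* = {x_star:.4f}")
```

Because the Gaussian kernel's mean increases with y, each iteration preserves monotonicity, so the computed V is non-increasing in x, coincides with P below the estimated \(x^{*}\) and exceeds it at high demand, as in the figure.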

Many authors have tried to solve the American option problem for the Ornstein–Uhlenbeck model, but none has found a closed-form solution (see Boyarchenko 2007; Carr and Itkin 2021). Instead, they have resorted to semi-closed-form or numerical solutions.

Inspired by the properties of V described above, we define two parametric families, the power family:

and the exponential family:

The power family is a natural choice, since the solution of the free-boundary problem (2.8) for geometric Brownian motion has the power form (2.10); see Peskir and Shiryaev (2006) and Oksendal (2003). All functions in both families are \(C^{1}\) (i.e., differentiable with a continuous derivative), greater than or equal to P(x), non-increasing, tend to zero at infinity, and coincide with P(x) on the interval \((0,x^{*}]\).
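As an illustration of how a candidate family can be made to satisfy the listed properties (not necessarily the parametrization used in (2.10) and (2.11)), the sketch below pastes a power or an exponential tail onto a given P at \(x^{*}\) and fixes the single tail parameter by smooth fit, \(V'(x^{*})=P'(x^{*})\). The helper names, this particular construction and the linear test function \(P(x)=2-x\) with \(x^{*}=1\) are hypothetical.

```python
import numpy as np


def power_family(P, dP, x_star):
    """Illustrative C^1 candidate: V = P on (0, x*], power tail p0 * (x/x*)^(-beta) beyond x*."""
    p0, d0 = P(x_star), dP(x_star)
    beta = -x_star * d0 / p0  # smooth fit V'(x*) = P'(x*); needs P(x*) > 0 > P'(x*)

    def V(x):
        x = np.asarray(x, dtype=float)
        return np.where(x <= x_star, P(x), p0 * (x / x_star) ** (-beta))

    return V


def exponential_family(P, dP, x_star):
    """Illustrative C^1 candidate: V = P on (0, x*], tail p0 * exp(-kappa (x - x*)) beyond x*."""
    p0, d0 = P(x_star), dP(x_star)
    kappa = -d0 / p0  # smooth fit V'(x*) = P'(x*)

    def V(x):
        x = np.asarray(x, dtype=float)
        return np.where(x <= x_star, P(x), p0 * np.exp(-kappa * (x - x_star)))

    return V


# Hypothetical linear inverse demand P(x) = 2 - x with x* = 1 (illustration only).
P = lambda x: 2.0 - np.asarray(x, dtype=float)
dP = lambda x: -1.0
V_pow, V_exp = power_family(P, dP, 1.0), exponential_family(P, dP, 1.0)
```

For a linear P both tails lie above P, because a convex function lies above its tangent line at \(x^{*}\); for other choices of P the inequality \(V\ge P\) has to be checked separately.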

3 Statistical Estimation of Parameters

Before applying our methods to actual data, we validate them on simulated data. Our simulated data consist of M time series, which are simulations of \(y_{t}\) obtained with the Euler-Maruyama method for (2.1). We then apply the exponential function to \(y_{t}\) to obtain simulations of \(x_{t}\), and apply the value function V with pre-specified parameters to obtain \(p_{t}\). This time series is then fed into our estimation method, described below. The parameters we wish to estimate are the first group \((\mu ,\theta ,\sigma )\), which governs the demand process, and the second group \((a,b,x^{*})\), which governs the value function, while the value of r is known. Any method for calibrating this model must deal with a six-dimensional optimization problem, which makes the problem challenging because of the risk of model misidentification. We therefore proceed in steps: first we introduce a statistical two-stage estimation method, and then, in Section 4, we add a regularization rule to obtain a multi-stage method.
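A minimal sketch of this simulation pipeline in Python, assuming a generic vectorized callable V: the OU process for y is discretized with Euler-Maruyama, exponentiated to obtain x, and mapped through V to obtain prices. The function name, the path length n_steps and the stand-in \(V(x)=1/x\) are hypothetical; \(\mu,\theta,\sigma,\Delta\) and M are the values reported in Sect. 3.2.

```python
import numpy as np


def simulate_prices(V, mu, theta, sigma, dt, n_steps, y0, rng):
    """Euler-Maruyama path of the OU process y, then demand x = exp(y) and prices p = V(x)."""
    y = np.empty(n_steps + 1)
    y[0] = y0
    eps = rng.normal(0.0, np.sqrt(dt), size=n_steps)  # Brownian increments, N(0, dt)
    for k in range(n_steps):
        y[k + 1] = y[k] + theta * (mu - y[k]) * dt + sigma * eps[k]
    x = np.exp(y)
    return y, x, V(x)


rng = np.random.default_rng(seed=0)
V_placeholder = lambda x: 1.0 / x  # hypothetical stand-in for the value function V
paths = [simulate_prices(V_placeholder, mu=0.5, theta=1.0, sigma=0.1, dt=0.01,
                         n_steps=300, y0=0.5, rng=rng) for _ in range(200)]  # M = 200 series
```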

3.1 A Two-Stage Estimation Method

To estimate the parameters, we use the discrete maximum likelihood approach, which is commonly used to estimate the parameters of SDEs. In this approach we discretize the time range as \(0=t_{0}<t_{1}<t_{2}<\cdots <t_{n}=T\) and compute the likelihood of a single step, i.e. the pdf of \(x_{t_{k+1}}\) given \(x_{t_{k}}\). By the Markov property, the total likelihood is then the product of the single-step likelihoods. We first explain the discrete maximum likelihood method in its simplest form. Assume we have observed samples of the following SDE and want to find the parameter \(\Theta \) (which may consist of several parameters):

Consider the Euler-Maruyama algorithm (see Kloeden and Pearson 1977) with time step \(\Delta =t_{k+1}-t_{k}\). For simplicity, denote \(x_{t_{k}}\) by \(x_{k}\). We then have the approximation

where \(\varepsilon _{k}\sim \mathrm {N}(0,\Delta )\) are i.i.d. Hence the conditional distribution of \(x_{k+1}\) given \(x_{k}\) is normal with mean \(x_{k}+m\left( x_{k};\,\Theta \right) \Delta \) and variance \(g^{2}\left( x_{k};\,\Theta \right) \Delta \). Therefore, the likelihood of a single step is
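The single-step Gaussian likelihood above can be summed in log form over an observed path. A minimal sketch, assuming the drift m and diffusion g are passed as vectorized callables (the function name is ours):

```python
import numpy as np


def euler_loglik(x, dt, m, g):
    """Sum of Gaussian one-step log-densities N(x_{k+1}; x_k + m(x_k) dt, g(x_k)^2 dt)."""
    x = np.asarray(x, dtype=float)
    x_k, x_next = x[:-1], x[1:]
    mean = x_k + m(x_k) * dt
    var = g(x_k) ** 2 * dt
    return float(np.sum(-0.5 * np.log(2.0 * np.pi * var) - (x_next - mean) ** 2 / (2.0 * var)))


# Drift and diffusion of the log-OU demand process, as given in Sect. 3.1.
mu, theta, sigma = 0.5, 1.0, 0.1
m = lambda x: x * (theta * (mu - np.log(x)) + 0.5 * sigma**2)
g = lambda x: sigma * x
```

Maximizing euler_loglik over \((\mu,\theta,\sigma)\) with these m and g gives the Euler-Maruyama estimator for the demand process.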

We tried the above method for estimating the parameters of our model, but the results were not satisfactory. Hence we use the more advanced method of Joseph (2005), which is based on the Milstein algorithm (see Mil’shtein 1974) instead of Euler-Maruyama, as described below.

The Milstein method is a higher-order method for simulating the solution of stochastic differential equations. Each step of the Milstein method for (3.1) is as follows,

where \(m(x,t)=\left[ x_{t}\theta (\mu -\ln (x_{t}))+\dfrac{\sigma ^{2}}{2}x_{t}\right] \) and \(g(x,t)=\sigma x_{t}\). This equation is now treated as a mapping that expresses \(x_{k+1}\) in terms of the random variable \(Y=\varepsilon _{k}\), from which the likelihood function is constructed. When expressed in terms of the constants \(A_{k},B_{k}\) and \(C_{k}\) defined by

the mapping embodied in the Milstein scheme (3.2) takes the simplified form

The probability density function (PDF) may then be constructed from the mapping (3.3) by first noting that each value of x arises from two values of Y, namely,

The usual change-of-variables formula for the PDF \(f_{x}\) of x in terms of the PDF \(f_{Y}\) of Y immediately gives

Substituting \(Y_{1}\) and \(Y_{2}\) from (3.4) into this relation yields

One can now multiply the values of \(f_{x}\) over the observed values of the time series \(x_{t}\) to obtain the total likelihood.
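The construction above can be sketched in Python for the log-OU demand process, where \(g(x)=\sigma x\) and hence \(g'(x)=\sigma\). This is our reading of the quadratic-mapping approach, with hypothetical function names and our own labeling of the coefficients, which may differ from (3.3): the Milstein step is written as \(x_{k+1}=A_kY^{2}+B_kY+C_k\) with \(A_k=\tfrac{1}{2}gg'\), \(B_k=g\) and \(C_k=x_k+m\Delta-A_k\Delta\), inverted at both roots, and the two normal densities are added and divided by \(|dx/dY|\), which equals the square root of the discriminant at both roots.

```python
import numpy as np


def milstein_density(x_next, x_k, dt, mu, theta, sigma):
    """One-step Milstein transition density of the log-OU demand process."""
    x_next = np.asarray(x_next, dtype=float)
    x_k = np.asarray(x_k, dtype=float)
    m = x_k * (theta * (mu - np.log(x_k)) + 0.5 * sigma**2)  # drift of x
    g = sigma * x_k                                          # diffusion of x
    A = 0.5 * g * sigma                                      # (1/2) g g', since g' = sigma
    B = g
    C = x_k + m * dt - A * dt
    D = B**2 + 4.0 * A * (x_next - C)                        # discriminant of A Y^2 + B Y + C - x = 0
    root = np.sqrt(np.maximum(D, 0.0))
    y1 = (-B + root) / (2.0 * A)
    y2 = (-B - root) / (2.0 * A)
    phi = lambda y: np.exp(-y**2 / (2.0 * dt)) / np.sqrt(2.0 * np.pi * dt)  # N(0, dt) density
    # |dx/dY| = sqrt(D) at both roots; when D <= 0, x has no preimage and the density is 0.
    return (phi(y1) + phi(y2)) / np.where(D > 0, root, np.inf)


def milstein_loglik(x, dt, mu, theta, sigma):
    """Log of the product of one-step Milstein densities along an observed path x."""
    dens = milstein_density(x[1:], x[:-1], dt, mu, theta, sigma)
    return float(np.sum(np.log(np.maximum(dens, 1e-300))))


# Example: one-step density from x_k = 1.5 with the simulation values of Sect. 3.2.
grid = np.linspace(1.0, 2.0, 400_001)
f = milstein_density(grid, 1.5, 0.01, mu=0.5, theta=1.0, sigma=0.1)
mass = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(grid))  # trapezoid rule, close to 1
```

A useful sanity check is that the density integrates to one and has mean \(x_k+m(x_k)\Delta\), since \(E[Y^{2}-\Delta]=0\).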

There is yet another difficulty in our model. The above method assumes that \(x_{t}\) is observed directly, but in our model we can only observe the price series \(p_{t}=V(x_{t})\), while \(x_{t}\) is latent. In effect, \(p_{t}\) is a change of variable of \(x_{t}\), which modifies the likelihood function by a Jacobian factor as follows:

where \(Jac=|V^{\prime }(x)|\).
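A minimal sketch of this change of variables, assuming V is strictly decreasing so that the latent path can be recovered as \(x=V^{-1}(p)\). For a likelihood built from transitions, each conditional density picks up the factor \(1/|V'(x_{k+1})|\). The callables V_inv, dV and loglik_x, and the linear \(V(x)=3-2x\) in the example, are hypothetical.

```python
import numpy as np


def price_loglik(p, V_inv, dV, loglik_x):
    """Log-likelihood of observed prices p_k = V(x_k) via change of variables.

    The log-likelihood of the latent path x = V^{-1}(p) is reduced by
    sum_k log|V'(x_{k+1})|, one Jacobian term per observed transition.
    """
    x = V_inv(np.asarray(p, dtype=float))  # recover the latent demand path
    jac = np.abs(np.broadcast_to(dV(x[1:]), x[1:].shape))
    return loglik_x(x) - float(np.sum(np.log(jac)))


# Hypothetical example: V(x) = 3 - 2x, latent log-likelihood set to 0 for illustration.
V_inv = lambda p: (3.0 - p) / 2.0
dV = lambda x: -2.0
ll = price_loglik([1.0, 0.8, 1.2, 0.9, 1.1], V_inv, dV, lambda x: 0.0)
```

With four transitions and \(|V'|=2\), the Jacobian contributes \(-4\ln 2\).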

Although in principle the above method should work, it turns out that the estimates of the first-group parameters \((\mu ,\theta ,\sigma )\) were poor. This may be because these parameters affect the likelihood in a more nonlinear manner than those of the second group \((a,b,x^{*})\). This difficulty can be overcome by noting that the Jacobian term is independent of the first-group parameters and can therefore be neglected when estimating them. Indeed, we observed that after eliminating the Jacobian term the estimation of the first-group parameters worked well. Hence the optimization part of our estimation algorithm consists of two stages: in the first stage we estimate the first-group parameters by minimizing the negative log of \(likelihood_{x}\), and in the second stage we estimate the second-group parameters by minimizing the negative log of \(likelihood_{p}\). Note that the second stage uses the parameters estimated in the first stage.
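Schematically, writing the likelihoods in negative log form and using our own notation, the two stages read:

\[
\begin{aligned}
(\hat\mu,\hat\theta,\hat\sigma) &= \arg\min_{\mu,\theta,\sigma}\; -\ln likelihood_{x}(\mu,\theta,\sigma),\\
(\hat a,\hat b,\hat x^{*}) &= \arg\min_{a,b,x^{*}}\; -\ln likelihood_{p}\left(a,b,x^{*};\,\hat\mu,\hat\theta,\hat\sigma\right),\\
\ln likelihood_{p} &= \ln likelihood_{x} - \sum_{k}\ln\left|V'(x_{k})\right| .
\end{aligned}
\]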

3.2 Results of the Two-stage Estimation for Simulated Data

We generate simulated data for the two alternative value functions with the parameter values \(a=1,b=1,r=0.15,\mu =0.5,\sigma =0.1,\theta =1\). The number of simulations is \(M=200\) and the time step is \(\Delta =0.01\). All computations were carried out in Python.

The log-likelihood values and parameter estimates for the model without storage and the two alternative models with storage are shown in Table 1.

As one can see, in terms of the likelihood, the power function outperforms the other two, while the exponential function outperforms the linear one (the non-storage model). On the actual data the two nonlinear value functions again outperform the linear one, which is a clear sign of the impact of storage on commodity prices, but in some cases the exponential function outperforms the power function. We use the likelihood ratio test to compare the models more formally.

So far, we have discussed estimating the parameters of two models: one that assumes storage and one that does not, which we call the with-storage and non-storage models from now on. A common tool for comparing two models is the likelihood ratio test (LRT). In the LRT framework, one of the models (usually the simpler one) is chosen as the null hypothesis (\(H_{0}\)) and the other as the alternative hypothesis (\(H_{1}\)). We take the non-storage model as \(H_{0}\) and the with-storage model as \(H_{1}\). Hence consider

The rejection criterion of the LRT is based on the ratio of the maximized likelihoods of the two models, the so-called likelihood ratio test statistic, given by

where \({{{\mathcal {L}}}}\) is the likelihood of each model. The quantity inside the brackets is called the likelihood ratio. The log-likelihood-ratio test statistic is the difference of the log-likelihoods, \(LLR=-2\left[ \ell _{non-storage}-\ell _{with-storage}\right] \), and has an asymptotic \(\chi ^{2}\)-distribution under the null hypothesis. Here \(\ell _{with-storage}\) and \(\ell _{non-storage}\) are the log-likelihoods of the with-storage and non-storage models, respectively, given by (3.5). Thus, the likelihood ratio is small (and LLR is large) when the alternative model fits significantly better than the null model at significance level \(\alpha \). The likelihood ratio test provides the following decision rule:

- If p-value > \(\alpha \), do not reject \({\displaystyle H_{0}}\);
- If p-value < \(\alpha \), reject \({\displaystyle H_{0}}\);

where \(\alpha \) is the significance level. Table 2 reports the LRT results and the p-values at the \(\alpha =0.01\) level for the simulated data.
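A minimal sketch of this decision rule in Python, using only the standard library. The \(\chi^{2}\) survival function is computed from its closed form for integer degrees of freedom. The degrees of freedom, taken here as the difference in the number of free parameters between the two models, and the log-likelihood values in the example are assumptions; scipy.stats.chi2.sf computes the same quantity if SciPy is available.

```python
import math


def chi2_sf(x, df):
    """Survival function P(X > x) of a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    h = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-h) * sum_{k < df/2} h^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= h / k
            total += term
        return math.exp(-h) * total
    # Odd df: erfc(sqrt(h)) + sqrt(2x/pi) exp(-h) * sum_k x^(k-1) / (1*3*...*(2k-1))
    total = math.erfc(math.sqrt(h))
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-h)
    for k in range(1, (df + 1) // 2):
        total += term
        term *= x / (2 * k + 1)
    return total


def likelihood_ratio_test(ll_null, ll_alt, df, alpha=0.01):
    """Return LLR = -2 (ll_null - ll_alt), its asymptotic p-value, and whether H0 is rejected."""
    llr = -2.0 * (ll_null - ll_alt)
    p_value = chi2_sf(llr, df)
    return llr, p_value, p_value < alpha


# Hypothetical log-likelihoods: non-storage (H0) vs. with-storage (H1), one extra parameter.
llr, p_value, reject = likelihood_ratio_test(ll_null=-100.0, ll_alt=-90.0, df=1)
```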

Given the p-values in Table 2, the proposed storage model is significant on the simulated data for both alternative value functions.

3.3 Results of Estimation on the Actual Data

In this study we use agricultural commodity data. The commodities are live cattle, sugar, cotton, wheat and soybeans. The prices are taken from the Commodity Research Bureau, from March 1983 to July 2008. The data are monthly, computed as averages of the daily data. This period is chosen to be consistent with the data studied in Assa (2015). In addition, we use the monthly rate \(r=0.004\), taken from the same reference.

From (2.3) and the distribution of the demand, we have to estimate \(\mu ,\theta ,\sigma ,a,b,x^{*}\) under both (2.10) and (2.11). To this end, we first use the initial values in Table 3 in the optimization.

We then obtain the estimated values of all parameters and compare them in Table 4 for the non-storage model and in Table 5 for (2.10) and (2.11); the likelihood values of all models are shown in Table 6. From Table 6 we can compare the performance of (2.10) and (2.11) on actual data. For instance, the likelihood values for wheat and soybeans show that (2.11) outperforms (2.10) for these commodities, whereas for the other commodities (2.10) outperforms (2.11). However, an interesting observation is that the likelihoods obtained with (2.11) and (2.10) do not differ significantly, which shows that the estimates are robust and that the impact of model misspecification is small.

To compare the with-storage and non-storage models formally, we also run the likelihood ratio test here. The results are reported in Table 7 for \(\alpha =0.01\). Given the p-values for the actual data, the proposed storage model is significant at the \(\alpha =0.01\) level.

4 Calibration with Regularization and Validation, a Multi-Stage Method

Regularization is a method to reduce over-fitting that is particularly helpful when the data set is small, since models fitted to small data sets are prone to over-fitting. In this section, we apply the key concept of regularization from machine learning to parameter estimation and validate our findings with the cross-validation error. The results show that this additional step improves the accuracy of the estimates on simulated data; it constitutes the last step of the multi-stage method proposed in this paper. More precisely, the method consists of the two-stage estimation method of Sect. 3 followed by one regularization stage, explained below.

4.1 Regularization and Cross Validation

For the penalty term we consider a multiple of \(\mu ^{2}+\sigma ^{2}+\theta ^{2}+a^{2}+b^{2}+x^{*2}\), and we define the final cost function as follows:

where \(\lambda \) is a hyper-parameter that balances the trade-off between the likelihood and regularization terms. A standard method for choosing the best values of hyper-parameters is cross-validation. A common practice is a 70/30 split of the data: \(70\%\) is used for training and \(30\%\) for validation, i.e. for tuning the hyper-parameters. The validation procedure uses the same cost function as the testing procedure. In our method we focus on a single split of the data, as discussed above, while noting that there is room for improvement with full cross-validation if training time is less of a concern. After splitting our data into training and validation sets in a \(70/30\%\) ratio, the optimal \(\lambda \) is chosen as the minimizer of the cross-validation error, which is the sum of the negative log-likelihoods of the Euler-Maruyama discretization over the observations:

Here \(m(x_{i})\) and \(g(x_{i})\) are the drift and volatility terms of (2.1), respectively, and N is the number of observed prices. For more details on cross-validation we refer the reader to Hastie et al. (2009).
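The hold-out selection of \(\lambda\) can be sketched as follows. To keep the example self-contained we swap in a simpler penalized problem: the fit is a ridge regression on the Euler form of the OU process for y, \(\Delta y_k = c_0 + c_1 y_k + \text{noise}\) with \(c_0=\theta\mu\Delta\) and \(c_1=-\theta\Delta\), so the penalty acts on the regression coefficients rather than on the six model parameters of the cost function above. As in the text, \(\lambda\) is chosen on a chronological 70/30 split with the Euler negative log-likelihood as validation error. The function names and the \(\lambda\) grid are hypothetical.

```python
import numpy as np


def fit_ridge_ou(y, dt, lam):
    """Stand-in penalized fit: ridge regression of dy on [1, y] (Euler form of the OU process)."""
    X = np.column_stack([np.ones(len(y) - 1), y[:-1]])
    dy = np.diff(y)
    c = np.linalg.solve(X.T @ X + lam * np.eye(2), X.T @ dy)  # c0 = theta*mu*dt, c1 = -theta*dt
    resid = dy - X @ c
    theta = -c[1] / dt
    mu = -c[0] / c[1] if c[1] != 0 else np.nan
    sigma = np.sqrt(np.mean(resid**2) / dt)
    return mu, theta, sigma


def euler_nll(y, dt, mu, theta, sigma):
    """Negative Euler-Maruyama log-likelihood of the OU path y (used as the validation error)."""
    mean = y[:-1] + theta * (mu - y[:-1]) * dt
    var = sigma**2 * dt
    return float(np.sum(0.5 * np.log(2.0 * np.pi * var) + (y[1:] - mean) ** 2 / (2.0 * var)))


def select_lambda(y, dt, lambdas, train_frac=0.7):
    """Pick the lambda whose training fit has the smallest validation error (split in time)."""
    n_train = int(train_frac * len(y))
    train, valid = y[:n_train], y[n_train - 1:]  # validation starts at the last training point
    scores = []
    for lam in lambdas:
        mu, theta, sigma = fit_ridge_ou(train, dt, lam)
        usable = np.isfinite(mu) and theta > 0 and sigma > 0
        scores.append(euler_nll(valid, dt, mu, theta, sigma) if usable else np.inf)
    return lambdas[int(np.argmin(scores))], scores


# Usage on a simulated OU path (mu = 0.5, theta = 1, sigma = 0.1, dt = 0.01).
rng = np.random.default_rng(1)
dt, n = 0.01, 20_000
eps = rng.standard_normal(n - 1)
y = np.empty(n)
y[0] = 0.5
for k in range(n - 1):
    y[k + 1] = y[k] + 1.0 * (0.5 - y[k]) * dt + 0.1 * np.sqrt(dt) * eps[k]
best_lam, scores = select_lambda(y, dt, [0.0, 0.01, 0.1, 1.0])
```

A chronological split keeps the validation segment strictly after the training segment, which respects the time ordering of the observations.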

4.2 Calibration with Simulated Data

First we assess our proposed method on simulated data. For the cross-validation we show the results for different numbers of simulated observations \(N_{sim}\), specifically \(N_{sim}=100\), \(N_{sim}=200\) and \(N_{sim}=300\). This matters because the actual data set also has about 300 observations (25 years of monthly data), which gives us confidence that the results on actual data in the next part are reliable. To make sure that increases in the size of the training set can also be consistently captured by the hyper-parameter, we also enlarge the interval for \(\lambda \) from [0, 1] to [0, 2]. This helps us assess the robustness of the model as the size of the training set increases. Table 8 reports the resulting value of \(\lambda \) together with the optimal values of the other parameters for the simulated data under (2.10). Compared with the true parameter values \(a=1,b=1,r=0.15,\mu =0.5,\sigma =0.1,\theta =1\), the estimates clearly improve as the amount of training data increases. We also need to compare these results with the estimates from the previous section; Table 9 reports the percentage errors of the estimates. As one can see, the regularized model with \(N_{sim}=200\) and \(N_{sim}=300\) performs similarly to or better than the two other cases. In particular, the unregularized model performs poorly in estimating \(\mu \), \(\theta \) and a, which account for half of the parameters (bold in Table 9). We also see that the estimates with \(N_{sim}=300\) are reliably good.

4.3 Calibration with Actual Data

In this section we assess our multi-stage calibration method on actual data for the commodities introduced in Section 3. As we have seen in the previous part, for a data set of size 300 we can rely on the calibration method we have developed. The results are reported in Table 10. The estimates are quite robust and do not change much when the hyper-parameter interval is changed or when moving from one model to the other. Compared with the estimates reported in Table 5, we see no significant changes except for the values of a and \(x^{*}\); the more realistic values of both parameters are smaller than those estimated before. See also Tables 11, 12, 13 and 14.

Even though interpreting the results is beyond the scope of this paper, we can make one interesting observation about the storability of the commodities. Take sugar, for example. According to our robust estimates, the value of the storage parameter \(x^{*}\) for sugar is almost 15% of the value of the economic parameter b. This shows how limited the scope for storage is; that is, the level above which market prices do not provoke storage is rather high. In other words, it is very likely that the commodity is partly stored in normal times.

We leave the interpretation of the other parameters to the reader.

5 Conclusion

In this paper, we introduced a two-stage method to estimate the parameters of the storage model in a continuous-time framework. We have shown that, under the storage model, the producers' decision problem leads to a free-boundary PDE. Since no closed-form solution of this PDE is available, we proposed two parametric families of alternative value functions. We then provided a two-stage estimation method based on the likelihood obtained by the Milstein method and applied it to both simulated and actual data. We used the likelihood ratio test (LRT) to compare the storage and non-storage models, and for all commodities we observed that the storage model outperforms the non-storage model. We then introduced a regularization rule by adding a penalty term to the likelihood function. This resulted in a multi-stage calibration method that outperformed the original two-stage method on simulated data. To validate our results we used cross-validation. Finally, we applied our calibration method to actual data and observed that the results are robust.

Data Availability

The data that support the findings of this study are available from Commodity Research Bureau but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are however available from the authors upon reasonable request and with permission of Commodity Research Bureau.

Notes

We use the term value function since this demand function is the value function of a stopping problem, as we will see. In addition, this avoids confusing it with the market demand function, which is denoted by P.

References

Arseneau, D., & Leduc, S. (2012). Commodity price movements in a general equilibrium model of storage. IMF Economic Review, 61(1), 199–224.

Assa, H. (2015). A financial engineering approach to pricing agricultural insurances. Agricultural Finance Review, 75(1), 63–76.

Barlow, M. (2002). A diffusion model for electricity prices. Mathematical Finance, 12(4), 287–298.

Bessembinder, H., Coughenour, J., Seguin, P., & Smoller, M. (1995). Mean reversion in equilibrium asset prices: Evidence from the futures term structure. The Journal of Finance, 50(1), 361–375.

Cafiero, C., Bobenrieth, E. S., Bobenrieth, J. R., & Wright, B. (2011). The empirical relevance of the competitive storage model. Journal of Econometrics, 162(1), 44–54.

Carr, P., & Itkin, A. (2021). Semi-analytical solutions for barrier and American options written on a time-dependent Ornstein–Uhlenbeck process. The Journal of Derivatives, 29, 9–26.

Casassus, J., & Collin-Dufresne, P. (2005). Stochastic convenience yield implied from commodity futures and interest rates. The Journal of Finance, 60(5), 2283–2331.

Casassus, J., Collin-Dufresne, P., & Routledge, B. R. (2018). Equilibrium commodity prices with irreversible investment and non-linear technologies. Journal of Banking and Finance, 95, 128–147.

Chaiyapo, N., & Phewchean, N. (2017). An application of Ornstein–Uhlenbeck process to commodity pricing in Thailand. Advances in Difference Equations, 2017(1), 179.

Chambers, M. J., & Bailey, R. E. (2001). A theory of commodity price fluctuations. Journal of Political Economy, 104(1), 924–957.

Deaton, A., & Laroque, G. (1992). On the behaviour of commodity prices. The Review of Economic Studies, 59(1), 1–23.

Deaton, A., & Laroque, G. (1995). Estimating a nonlinear rational expectations commodity price model with unobservable state variables. Journal of Applied Econometrics, 10(5), 9–40.

Deaton, A., & Laroque, G. (1996). Competitive storage and commodity price dynamics. Journal of Political Economy, 104(5), 896–923.

Gibson, R., & Schwartz, E. S. (1990). Stochastic convenience yield and the pricing of oil contingent claims. The Journal of Finance, 45(3), 959–976.

Gustafson, R. L. (1958). Implications of recent research on optimal storage rules. Journal of Farm Economics, 40(2), 290–300.

Hastie, T., Tibshirani, R., & Friedman, J. (2009). The elements of statistical learning: Data mining, inference, and prediction (2nd ed.). Springer Series in Statistics. New York: Springer.

Joseph, J. (2005). Estimation of the parameters of stochastic differential equations. PhD thesis, Queensland University of Technology.

Khanh, P. (2012). Optimal stopping time for holding an asset. American Journal of Operations Research, 2, 527–535.

Khanh, P. (2014). Optimal stopping time to buy an asset when growth rate is a two-state Markov chain. American Journal of Operations Research, 4, 132–141.

Kloeden, P. E., & Pearson, R. A. (1977). The numerical solution of stochastic differential equations. The Journal of the Australian Mathematical Society. Series B. Applied Mathematics, 20(1), 8–12.

Lautier, D. (2005). Term structure models of commodity prices: A review. Journal of Alternative Investments, 8(1), 42–64.

Liptser, R. S., & Shiryaev, A. N. (2001). Statistics of random processes I: General theory. Berlin, Heidelberg: Springer-Verlag.

Miao, Y., Wu, W., & Funke, N. (2011). Reviving the competitive storage model: A holistic approach to food commodity prices. IMF Working Paper.

Mil’shtein, G. N. (1974). Approximate integration of stochastic differential equations. Teor. Veroyatnost. i Primenen. (Theory of Probability and its Applications), 19(3), 583–588.

Muth, J. F. (1961). Rational expectations and the theory of price movements. Econometrica, 29(3), 315–335.

Ng, S., & Ruge-Murcia, F. J. (2000). Explaining the persistence of commodity prices. Computational Economics, 16, 149–171.

Oksendal, B. (2003). Stochastic differential equations: An introduction with applications. Springer Science and Business Media.

Peskir, G., & Shiryaev, A. N. (2006). Optimal stopping and free-boundary problems (Lectures in Mathematics ETH Zürich). Basel: Birkhäuser.

Pindyck, R. S. (2001). The dynamics of commodity spot and futures markets: A primer. The Energy Journal, 22(3), 1–29.

Routledge, B. R., Seppi, D. J., & Spatt, C. S. (2000). Equilibrium forward curves for commodities. The Journal of Finance, 55(3), 1297–1338.

Samuelson, P. A. (1997). Stochastic speculative price. Proceedings of the National Academy of Sciences of the United States of America, 68(2), 335–337.

Schwartz, E. S. (1997). The stochastic behavior of commodity prices: Implications for valuation and hedging. The Journal of Finance, 52(3), 923–973.

Shiryaev, A. (2008). Optimal stopping rules. Berlin, Heidelberg: Springer-Verlag.

Shiryaev, A., Xu, Z., & Zhou, X. (2008). Thou shalt buy and hold. Quantitative Finance, 8, 765–776.

Boyarchenko, S., & Levendorskiĭ, S. (2007). Perpetual American and real options under Ornstein–Uhlenbeck processes. In Irreversible decisions under uncertainty: Optimal stopping made easy.

Van Khanh, P. (2015). When to sell an asset where its drift drops from a high value to a smaller one. American Journal of Operations Research, 5, 514–525.

Vega, C. A. M. (2018). Calibration of the exponential Ornstein–Uhlenbeck process when spot prices are visible through the maximum log-likelihood method. Example with gold prices. Advances in Difference Equations, 2018, 1–14.

Funding

This work received no funding.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest or competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Karimi, N., Assa, H., Salavati, E. et al. Calibration of Storage Model by Multi-Stage Statistical and Machine Learning Methods. Comput Econ 62, 1437–1455 (2023). https://doi.org/10.1007/s10614-022-10304-z

DOI: https://doi.org/10.1007/s10614-022-10304-z