Abstract

A number of recent papers have proposed a time-varying-coefficient (TVC) procedure that, in theory, yields consistent parameter estimates in the presence of measurement errors, omitted variables, incorrect functional forms, and simultaneity. The key element of the procedure is the selection of a set of driver variables. With an ideal driver set the procedure is both consistent and efficient. However, in practice it is not possible to know if a perfect driver set exists. We construct a number of Monte Carlo experiments to examine the performance of the methodology under (i) clearly-defined conditions and (ii) a range of model misspecifications. We also propose a new Bayesian search technique for the set of driver variables underlying the TVC methodology. Experiments are performed to allow for incorrectly specified functional form, omitted variables, measurement errors, unknown nonlinearity and endogeneity. In all cases except the last, the technique works well in reasonably small samples.

1 Introduction

A series of papers has proposed the use of time-varying coefficient (TVC) models to uncover bias-free estimates of a set of model coefficients in the presence of omitted variables, measurement error and an unknown true functional form (Footnote 1). There have also been a reasonably large number of successful applications of the technique (Footnote 2). However, it is difficult to establish the usefulness of a technique strictly through applications, since we can never be certain of the accuracy of the results. This paper attempts to bridge the gap between the asymptotic results of the theoretical papers and the apparently good performance of the applied papers by constructing a set of Monte Carlo experiments to examine (1) how well the technique performs under clearly-defined conditions and (2) the limits on the technique’s ability to perform successfully under a broad range of model misspecifications.

The technique is motivated by an important theorem that was first proved by Swamy and Mehta (1975) and has recently been confirmed by Granger (2008), who quoted a proof that he attributed to Hal White. This theorem states that any nonlinear function may be exactly represented by a linear relationship with time-varying parameters. The importance of this theorem is that it allows us to capture an unknown true functional form in this framework. The parameters of this time-varying-coefficient model are, of course, not consistent estimates of the true functional form, since they will be contaminated by the usual biases due to omitted variables, measurement error and simultaneity. The technique being investigated here allows us, in principle, to decompose the TVCs into two components. We associate the first component with the true nonlinear structure, which we interpret as the derivative of the dependent variable with respect to each of the independent variables in the unknown, nonlinear, true function. We associate the second component with the biases emanating from misspecification, which we then remove from the TVC to obtain our consistent estimates. Potentially, this technique offers an interesting way forward in dealing with model misspecification. It has generally been applied in a time series setting, but it can equally well be interpreted as a cross section (Footnote 3) or panel estimation technique.

The remainder of this paper is structured as follows. Section 2 outlines the basic (TVC) theoretical framework. Section 3 discusses some computational issues associated with estimating the model. Section 4 reports on a series of Monte Carlo experiments. Section 5 concludes. An “Appendix” provides details on the computational methods used in the Monte Carlo simulations.

2 The Theoretical Framework

We follow Swamy et al. (2010) who set the groundwork for uncovering causal economic laws. We assume

where \( {\mathbf{x}}_{\text{t}}^{*},{\mathbf{e}}_{\text{t}}^{*} \) are the true determinants of \( {\text{y}}_{\text{t}} \). Alternatively we can represent this relationship by

We have the auxiliary equations:

Substituting (3) into (2) gives:

To deal with errors in variables we assume:

Substituting into (4), we obtain:

where \( \upbeta_{{0{\text{t}}}} = \upalpha_{{0{\text{t}}}} + {\boldsymbol{\upalpha}}^{\prime }_{{2{\text{t}}}} {\mathbf{v}}_{\text{t}}^{*} + {\text{v}}_{\text{t}} \), \( {\boldsymbol{\upbeta }}_{{{\text{xt}}}}^{\prime } = \left( {{\boldsymbol{\upalpha }}_{{1{\text{t}}}}^{\prime } + {\boldsymbol{\upalpha }}_{{2{\text{t}}}}^{\prime } {\boldsymbol{\Psi }}_{{\text{t}}} } \right)\left( {{\text{I}} - {\text{D}}_{{{\text{wt}}}} {\text{D}}_{{{\text{xt}}}}^{{ - 1}} } \right) \), \( {\mathbf{x}}_{\text{et}} = \left[ {1,{\mathbf{x}}^{\prime }_{\text{t}} } \right]^{\prime } \), \( {\boldsymbol{\upbeta }}_{\text{t}} = \left[ {\upbeta_{{0{\text{t}}}} ,{\boldsymbol{\upbeta }}^{\prime }_{\text{xt}} } \right]^{\prime } \) and \( {\text{D}}_{\text{wt}},{\text{D}}_{\text{xt}} \) are diagonal containing \( {\mathbf{w}}_{\text{t}} \) and \( {\mathbf{x}}_{\text{t}} \), respectively, along the diagonal. Finally, we assume there exists a vector \( {\mathbf{z}}_{\text{t}} \) of drivers such that

where \( {\mathbf{z}}_{\text{et}} = \left[ {1,{\mathbf{z}}^{\prime }_{\text{t}} } \right]^{\prime } \). Under the assumption:

It is straightforward to obtain the following

In matrix notation we have

where \( {\text{X}}_{\text{z}} = \left( {{\mathbf{z}}_{{{\text{e}}1}} \otimes {\mathbf{x}}_{{{\text{e}}1}} ,\ldots,{\mathbf{z}}_{\text{eT}} \otimes {\mathbf{x}}_{\text{eT}} } \right)^{\prime } \), \( \upsigma_{\text{a}}^{2} {\boldsymbol{\Omega}} = {\mathbf{D}}_{\text{x}} \left( {{\text{I}}_{\text{T}} \otimes \upsigma_{\text{a}}^{2} {\boldsymbol{\Sigma}}} \right){\mathbf{D}}^{\prime }_{\text{x}} \), and \( {\mathbf{D}}_{\text{x}} = {\text{diag}}\left[ {{\mathbf{x}}^{\prime }_{{{\text{e}}1}} ,\ldots,{\mathbf{x}}^{\prime }_{\text{eT}} } \right] \). A restrictive version of (7) is

where \( \beta_{0t} \) is redefined as \( {{\upalpha }}_{{0{\text{t}}}} + {\text{v}}_{{\text{t}}} \) and \( \beta_{t} \) is independent of \( {\boldsymbol{\upalpha^{\prime}}}_{{2{\text{t}}}} {\mathbf{v}}_{\text{t}}^{*} \) = \( u_{t} \) and (8) as

where the first row of \( {\Pi }_{r} \) post-multiplied by \( {\mathbf{z}}_{\text{et}} \) does not contain the mean of \( {\boldsymbol{\upalpha^{\prime}}}_{{ 2 {\text{t}}}} {\mathbf{v}}_{\text{t}}^{*} \), and the first element of \( {\boldsymbol{\upvarepsilon}}_{\text{rt}} \) is independent of \( {\boldsymbol{\upalpha^{\prime}}}_{{ 2 {\text{t}}}} {\mathbf{v}}_{\text{t}}^{*} \). The error vectors \( {\upvarepsilon }_{{\text{t}}} \) and \( {\boldsymbol{\upvarepsilon}}_{\text{rt}} \) are introduced in (8) and (13). Substituting we have

Defining \( {\text{z}}_{\text{et}} \otimes {\text{x}}_{\text{et}} = {\mathbf{X}}_{\text{t}} \), and \( {\boldsymbol{\uppi}}_{\text{r}} = {\text{vec}}\left( {{\boldsymbol{\Pi}}_{\text{r}} } \right) \), we have:

By assumption, \( {\text{E}}\left( {{\text{u}}_{\text{t}} + {\mathbf{x}}^{\prime }_{\text{et}} {\boldsymbol{\upvarepsilon}}_{\text{rt}} } \right) = 0 \) and \( {\text{var}}\left( {{\text{u}}_{\text{t}} + {\mathbf{x}}^{\prime }_{\text{et}} {\boldsymbol{\upvarepsilon}}_{\text{rt}} } \right) = \upsigma_{\text{u}}^{2} + {\mathbf{x}}^{\prime }_{\text{et}} \upsigma_{\text{r}}^{2} {\boldsymbol{\Sigma}}_{\text{r}} {\mathbf{x}}_{\text{et}} \).
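As a small numerical illustration of the stacking step, the sketch below checks that \( {\mathbf{x}}^{\prime }_{\text{et}} {\boldsymbol{\Pi}}_{\text{r}} {\mathbf{z}}_{\text{et}} = {\mathbf{X}}^{\prime }_{\text{t}} {\boldsymbol{\uppi}}_{\text{r}} \) when \( {\mathbf{X}}_{\text{t}} = {\mathbf{z}}_{\text{et}} \otimes {\mathbf{x}}_{\text{et}} \) and \( {\boldsymbol{\uppi}}_{\text{r}} \) stacks the columns of \( {\boldsymbol{\Pi}}_{\text{r}} \), and then evaluates the observation-specific error variance stated above. The dimensions, random values and variable names are hypothetical and chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: x_et has k elements, z_et has m elements (both include a constant).
k, m = 3, 2
x_et = np.concatenate(([1.0], rng.normal(size=k - 1)))   # [1, x_t']'
z_et = np.concatenate(([1.0], rng.normal(size=m - 1)))   # [1, z_t']'
Pi_r = rng.normal(size=(k, m))                           # maps drivers into coefficients

# Stacked regressor X_t = z_et (Kronecker) x_et and pi_r = vec(Pi_r) (column stacking).
X_t = np.kron(z_et, x_et)
pi_r = Pi_r.flatten(order="F")

lhs = x_et @ Pi_r @ z_et      # x_et' Pi_r z_et
rhs = X_t @ pi_r              # X_t' pi_r

# Variance of the composite error u_t + x_et' eps_rt for illustrative parameter values.
sigma_u, sigma_r = 0.5, 0.3
A = rng.normal(size=(k, k))
Sigma_r = A.T @ A             # an arbitrary positive semi-definite matrix
var_t = sigma_u**2 + sigma_r**2 * (x_et @ Sigma_r @ x_et)

print(lhs, rhs, var_t)
```

The variance differs across observations because it depends on \( {\mathbf{x}}_{\text{et}} \), which is why the estimation in Section 3 is weighted.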

3 Computational Aspects

Under a normality assumption in both \( {\text{u}}_{\text{t}} \) and \( {\boldsymbol{\upvarepsilon}}_{\text{rt}} \) the likelihood function is

where the parameter vector is \( {\uptheta } = \left[ {{\boldsymbol{\uppi}}^{\prime }_{\text{r}} ,\upsigma_{\text{r}} ,\upsigma_{\text{u}} ,{\text{vech}}({\boldsymbol{\Sigma}}_{\text{r}} )^{\prime } } \right]^{\prime } \). Coupled with a prior, \( {\text{p}}\left(\uptheta \right) \), by Bayes’ theorem we get the kernel posterior distribution:

We assume a standard non-informative prior:

where \( d \) is the dimensionality of \( \varSigma_{r} \). The prior of \( \pi \) will be detailed below.

Markov Chain Monte Carlo (MCMC) techniques can be used to obtain a sample \( \{ {\uptheta }^{{({\text{s}})}} ,{\text{s}} = 1,\ldots,{\text{S}}\} \) that converges in distribution to the posterior \( {\text{p}}({\uptheta }|{\text{Y}}) \). One efficient MCMC strategy is the following.

(i) Obtain \( {\boldsymbol{\uppi}}_{\text{r}} \) from its conditional distribution:

$$ {\boldsymbol{\uppi}}_{\text{r}} |\upsigma_{\text{r}} ,\upsigma_{\text{u}} ,{\boldsymbol{\Sigma}}_{\text{r}} ,{\mathbf{Y}} \sim {\text{N}}\left( {{\hat{\boldsymbol{\uppi }}}_{\text{r}} ,{\mathbf{V}}} \right) $$ (18)

where \( {\hat{\boldsymbol{\uppi }}}_{\text{r}} = \left( {{\mathbf{X}}^{\prime } {\boldsymbol{\Omega}}^{ - 1} {\mathbf{X}}} \right)^{ - 1} {\mathbf{X}}^{\prime } {\boldsymbol{\Omega}}^{ - 1} {\mathbf{y}},{\mathbf{V}} = \left( {{\mathbf{X}}^{\prime } {\boldsymbol{\Omega}}^{ - 1} {\mathbf{X}}} \right)^{ - 1} \), \( {\boldsymbol{\Omega}} = {\text{diag}}( \upsigma_{\text{u}}^{2} + {\mathbf{x}}^{\prime }_{\text{et}} {\boldsymbol{\Sigma}}_{\text{r}} {\mathbf{x}}_{\text{et}} ,{\text{t}} = 1,\ldots,{\text{T}}) \).

(ii) Reparametrize \( {\boldsymbol{\Sigma}}_{\text{r}} \) using \( {\mathbf{C}}_{\text{r}} \), where \( {\boldsymbol{\Sigma}}_{\text{r}} = {\mathbf{C^{\prime}}}_{\text{r}} {\mathbf{C}}_{\text{r}} \), \( \sigma_{\text{u}} \propto \exp \left({c_{0}} \right) \) and \( \sigma_{r} \propto \exp (c_{00}) \). Assuming that the distinct non-zero elements of \( {\mathbf{C}}_{\text{r}} \) are \( {\text{c}}_{1},\ldots,{\text{c}}_{\text{p}} \), the new parameter vector consists of \( {\boldsymbol{\uppi}}_{\text{r}} \) and \( {\mathbf{c}} = [{\text{c}}_{0} ,{\text{c}}_{{00}} ,{\text{c}}_{1} , \ldots ,{\text{c}}_{{\text{p}}} ]^{\prime } \in \mathbb{R}^{{{\text{p}} + 2}} \). Drawings from the conditional posterior distribution of \( {\mathbf{c}}|{\boldsymbol{\uppi}}_{\text{r}},{\mathbf{Y}} \) can be realized using the Girolami and Calderhead (2011) Metropolis Adjusted Langevin Diffusion method described in the “Appendix”.
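The conditional draw of \( {\boldsymbol{\uppi}}_{\text{r}} \) in (18) is a weighted least squares calculation followed by a normal draw. The following is a minimal sketch on simulated data, not the Fortran implementation used for the experiments. The function name `draw_pi_r`, the toy dimensions and all parameter values are hypothetical. The diagonal of \( {\boldsymbol{\Omega}} \) is scaled by \( \upsigma_{\text{r}}^{2} \), as in the variance expression at the end of Section 2; setting `sigma_r = 1` reproduces the expression for \( {\boldsymbol{\Omega}} \) as printed under (18).

```python
import numpy as np

def draw_pi_r(X, y, x_e, sigma_u, sigma_r, Sigma_r, rng):
    """Draw pi_r ~ N(pi_hat, V) given the variance parameters (illustrative only)."""
    # Diagonal of Omega: sigma_u^2 + sigma_r^2 * x_et' Sigma_r x_et for each t.
    omega = sigma_u**2 + sigma_r**2 * np.einsum("ti,ij,tj->t", x_e, Sigma_r, x_e)
    Xw = X / omega[:, None]                  # Omega^{-1} X
    V = np.linalg.inv(X.T @ Xw)              # (X' Omega^{-1} X)^{-1}
    V = 0.5 * (V + V.T)                      # remove numerical asymmetry
    pi_hat = V @ (Xw.T @ y)                  # GLS estimate
    return rng.multivariate_normal(pi_hat, V), pi_hat, V

# Toy data: one regressor and one driver, each augmented with a constant.
rng = np.random.default_rng(1)
T = 200
x_e = np.column_stack([np.ones(T), rng.normal(size=T)])
z_e = np.column_stack([np.ones(T), rng.normal(size=T)])
X = np.stack([np.kron(z_e[t], x_e[t]) for t in range(T)])   # rows are X_t'

pi_true = np.array([0.1, 0.1, 0.05, 0.2])
Sigma_r = np.eye(2)
sigma_u, sigma_r = 0.3, 0.2
eps = sigma_r * rng.normal(size=(T, 2))      # eps_rt with covariance sigma_r^2 * I
y = X @ pi_true + sigma_u * rng.normal(size=T) + np.sum(x_e * eps, axis=1)

draw, pi_hat, V = draw_pi_r(X, y, x_e, sigma_u, sigma_r, Sigma_r, rng)
print(pi_hat)
```

In a full sampler this draw would alternate with the update of \( {\mathbf{c}} \) in step (ii).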

If we define \(\widehat{{\boldsymbol{\upbeta }}}_{{\text{t}}} = \left( {{\mathbf{x}}_{{\text{t}}} {\mathbf{x}}^{\prime } _{{\text{t}}} + \upsigma ^{2} {\boldsymbol{\Sigma }}^{{ - 1}} } \right)^{{ - 1}} \left( {{\mathbf{x}}_{{\text{t}}} {\text{y}}_{{\text{t}}} + {\text{ }}\upsigma ^{2} {\boldsymbol{\Sigma }}^{{ - 1}} {\boldsymbol{\Pi} \mathbf{z}}_{{\text{t}}} } \right) \) and \( {\mathbf{V}}_{\upbeta {\text{t}}} = \left({{\mathbf{x}}_{\text{t}} {\mathbf{x^{\prime}}}_{\text{t}} + \upsigma^{2} {\boldsymbol{\Sigma}}^{- 1}} \right)^{- 1} \) we obtain:

In this form we can avoid a possibly inefficient Gibbs sampler which relies on drawing \( {\boldsymbol{\Pi}} \) and \( {\boldsymbol{\Sigma}} \) from (13), \( \{{\boldsymbol{\upbeta}}_{\text{t}},{\text{t}} = 1,\ldots,{\text{T}}\} \) from (19) and \( \upsigma \) from (12).

Selecting the drivers

Suppose we have (12) and instead of (13) we have

where \( {\mathbf{z}}_{\text{t}}^{{({\text{m}})}} \) is a potential set of drivers (subset) from a universe \( {\mathcal{Z}} = \left\{{{\mathbf{z}}_{{{\text{t}}1}},\ldots,{\mathbf{z}}_{{{\text{tG}}}}} \right\} \). Equations (12) and (20) define different models indexed by \( m \). As searching through all possible combinations of variables in \( {\mathcal{Z}} \) is infeasible, we follow the Stochastic Search Variable Selection (SSVS) approach of George et al. (2008) (Footnote 4). The SSVS involves a specific prior of the form:

where \( {\boldsymbol{\updelta}} \) is a vector of unknown parameters whose elements satisfy \( \updelta_{\text{j}} \in \{0,1\} \). Also \( {\mathbf{D}} = {\text{diag}}\left[ {{\text{d}}_{1}^{2} , \ldots ,{\text{d}}_{\text{G}}^{2} } \right] \):

The prior implies a mixture of two normals:

If \( \underline{\upkappa } _{{0{\text{j}}}} \) is “small” and \( \underline{\kappa } _{{1{\text{j}}}} \) is “large”, then, when \( \updelta_{\text{j}} = 0 \) chances are that variable j will be excluded from the model while if \( \updelta_{\text{j}} = 1 \) chances are that variable j will be included in the model. The prior for the indicator parameter \( {\boldsymbol{\updelta}} \) is:

and we set \( \underline{{\text{q}}} _{{\text{j}}} = \tfrac{1}{2} \). For \( \underline{\kappa } _{{0{\text{j}}}} \) and \( \underline{\kappa } _{{1{\text{j}}}} \), George et al. (2008) propose a semi-automatic procedure based on \( \underline{\upkappa } _{{0{\text{j}}}}^{2} = {\text{c}}_{0} \hat{\text{v}}\left( {\uppi _{\text{j}} } \right) \) and \( \underline{\upkappa } _{{1{\text{j}}}}^{2} = {\text{c}}_{1} \hat{\text{v}}\left( {\uppi _{\text{j}} } \right) \) with \( {\text{c}}_{0} = \tfrac{1}{{10}},\;{\text{c}}_{1} = 10 \), where \( {\hat{\text{v}}}\left( {{\uppi }_{{\text{j}}} } \right) \) is any preliminary estimate of the variance of \( {\uppi }_{{\text{j}}} \).
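To make the role of the two prior variances concrete, the sketch below computes the conditional probability that \( \updelta_{\text{j}} = 1 \) given a value of \( \uppi_{\text{j}} \). It assumes the two mixture components are zero-mean normals with variances \( \underline{\upkappa } _{{0{\text{j}}}}^{2} \) and \( \underline{\upkappa } _{{1{\text{j}}}}^{2} \), which is the usual SSVS setup but is an assumption here. The function name `inclusion_probability` is hypothetical.

```python
import math

def inclusion_probability(pi_j, v_hat, c0=0.1, c1=10.0, q=0.5):
    """P(delta_j = 1 | pi_j) under a two-component zero-mean normal mixture prior."""
    k0_sq = c0 * v_hat    # "small" prior variance (delta_j = 0)
    k1_sq = c1 * v_hat    # "large" prior variance (delta_j = 1)
    # Log posterior odds of delta_j = 1 versus delta_j = 0, computed in logs for stability.
    log_odds = (math.log(q / (1.0 - q))
                + 0.5 * math.log(k0_sq / k1_sq)
                - 0.5 * pi_j**2 * (1.0 / k1_sq - 1.0 / k0_sq))
    return 1.0 / (1.0 + math.exp(-log_odds))

# A coefficient near zero favours exclusion; one far from zero favours inclusion.
for value in (0.0, 0.5, 1.0, 3.0):
    print(value, round(inclusion_probability(value, v_hat=1.0), 4))
```

With \( \underline{{\text{q}}} _{{\text{j}}} = \tfrac{1}{2} \) the prior odds are even, so the probability is driven entirely by how large \( \uppi_{\text{j}} \) is relative to the preliminary variance estimate.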

For the elements of \( {\mathbf{c}} \) we follow a similar approach. If \( {\text{c}}_{{\text{j}}} \) corresponds to a diagonal element it is always included in the model. If not, we use a mixture-of-normals SSVS approach as above.

4 Monte Carlo Results

In all cases below \( \upgamma_{0} = \upgamma_{1} = \upgamma_{2} = .1 \). The number of Monte Carlo simulations is set to 10,000. All \( \upvarepsilon_{tj} \sim iidN(0,1) \). In case IV, we set \( \upsigma_{\upvarepsilon} = .3 \).

4.1 Model I: Incorrect Functional Form

The true model is \( {\text{y}}_{\text{t}} = \upgamma_{0} + \upgamma_{1} {\text{x}}_{\text{t}} + \tfrac{1}{2}\upgamma_{2} {\text{x}}_{\text{t}}^{2},\:{\text{t}} = 1, \ldots,{\text{T}} \) and we have omitted the nonlinear term. The driver is \( {{z}}_{{t}} = \alpha {{x}}_{{t}} + \upvarepsilon_{{t}} \). We have \( \upvarepsilon_{\text{t}} \sim {\text{iidN(0,1)}} \) and \( {\text{x}}_{{\text{t}}} \sim{\text{iidN(1,1)}} \). In this case the correlation between \( {\text{z}}_{\text{t}} \) and \( {\text{x}}_{{\text{t}}}^{2} \) is \( \uprho = \frac{3\alpha}{{\sqrt {3\left({3\alpha^{2} + 1} \right)}}} \).

If the correlation were equal to 1, then this would be a perfect driver as it exactly recreates the missing quadratic term. The estimation procedure would then be unbiased and efficient. If the correlation were zero, then \( {\text{z}}_{\text{t}} \) would contain no information about the missing nonlinearity. We are, therefore, interested in varying this correlation and seeing how low the correlation can fall before the estimator ceases to be useful.

In this case the true effect is \( \upgamma_{1} + \upgamma_{2} {\text{x}}_{\text{t}} \), that is, the derivative of \( y \) with respect to \( x \). There are no omitted variables or misspecifications other than the nonlinearity, so the set S2 is empty and the estimate of the derivative is given by \( \upgamma_{1} + \upgamma_{2} {\text{x}}_{\text{t}} = \beta_{1} - \varepsilon_{{t}} \).

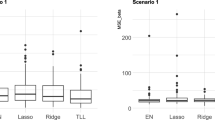

Table 1 gives the results of this set of Monte Carlo experiments for sample sizes of 50, 100, 200 and 1000. When the correlation between z and x is very high, then even for small samples the bias is very small and the standard deviation of the results is also small, at around 1%. As the sample size grows, both the bias and the standard deviation fall, and the estimator is clearly consistent and efficient. Looking across the table, as the correlation between the driver and the true x variable falls, the estimation procedure still does very well until the correlation falls to about .5; at that point the bias and the standard error begin to rise quite substantially. This pattern is even clearer with the very large sample size of T = 1000, where both the bias and standard error are very small until the correlation falls below .5.

4.2 Model II: Omitted Variables

The second model focuses on omitted variables. The true model is \( {\text{y}}_{\text{t}} = \upgamma_{0} + \upgamma_{1} {\text{x}}_{{{\text{t}}1}} + \upgamma_{2} {\text{x}}_{{{\text{t}}2}} \). The \( {\text{x}}_{{{\text{t}}1}},x_{{{\text{t}}2}} \) are correlated: \( {\text{x}}_{{{\text{t}}2}} = \upgamma {\text{x}}_{{{\text{t}}1}} + \upxi_{\text{t}},\upxi_{\text{t}},{\text{x}}_{{{\text{t}}1}} \sim {\text{iidN(0,1)}} \). The squared correlation between the two variables is \( \uprho_{12}^{2} = \frac{{\upgamma^{2}}}{{\upgamma^{2} + 1}} \). We set \( \upgamma = 2 \) so that this is .80.
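The stated squared correlation between the two regressors can be checked by simulation. The sketch below generates \( {\text{x}}_{{{\text{t}}1}} \) and \( {\text{x}}_{{{\text{t}}2}} \) as described and compares the sample value with \( \upgamma^{2} /(\upgamma^{2} + 1) \). The sample size and seed are arbitrary choices made for the check and are much larger than the sample sizes used in the experiments.

```python
import numpy as np

rng = np.random.default_rng(42)
T = 200_000                      # large sample, used only to verify the formula
gamma = 2.0

x1 = rng.normal(size=T)          # x_t1 ~ iid N(0, 1)
xi = rng.normal(size=T)          # xi_t ~ iid N(0, 1)
x2 = gamma * x1 + xi             # x_t2 = gamma * x_t1 + xi_t

rho_sq_sample = np.corrcoef(x1, x2)[0, 1] ** 2
rho_sq_theory = gamma**2 / (gamma**2 + 1.0)

print(rho_sq_sample, rho_sq_theory)
```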

We estimate the TVC model \( {\text{y}}_{\text{t}} = \upbeta_{{0{\text{t}}}} + \upbeta_{{1{\text{t}}}} {\text{x}}_{{{\text{t}}1}} \) and again use a driver \( {\text{z}}_{\text{t}} = \alpha {\text{x}}_{{{\text{t}}2}} + \upvarepsilon_{\text{t}} \) and we see how well the estimator performs as the correlation between \( {\text{z}}_{\text{t}} \) and \( {\text{x}}_{{{\text{t}}2}} \) falls. The correlation between \( {\text{z}}_{\text{t}} \) and \( {\text{x}}_{{{\text{t}}2}} \) is \( \uprho = \frac{\upalpha}{{\sqrt {\upalpha^{2} + 1}}} \).

In this case, the true effect is \( \upgamma_{1} \) and the bias free estimate is \( \upbeta_{1\text{t}} - \uppi_{1} {\text{z}}_{\text{t}} - {\text{e}}_{\text{t}} \).

The results of this experiment are given in Table 2. The results show a similar picture to Model I above. Both the bias and the standard deviation clearly decrease as the sample size increases. Even for the smallest sample size both the bias and the standard deviation are quite small while the correlation between the driver and the misspecification is above .5. Again, as the correlation falls below .5 the bias and standard deviation rise quite quickly.

4.3 Model III: Measurement Error

The third model deals with measurement error. We generate data from \( {\text{y}}_{\text{t}} = \upgamma_{0} + \upgamma_{1} {\text{x}}_{\text{t}} \) and then create \( {\text{y}}_{\text{t}}^{*} = {\text{y}}_{\text{t}} + \upvarepsilon_{t1} \) and \( {\text{x}}_{\text{t}}^{*} = {\text{x}}_{\text{t}} + \upvarepsilon_{\text{t2}} \). We estimate the TVC model \( {\text{y}}_{{\text{t}}}^{*} = \upbeta _{0{\text{t}}} + \upbeta _{{1{\text{t}}}} {\text{x}}_{{\text{t}}}^{*} \) using two drivers, \( {\text{z}}_{\text{t1}} = \upalpha_{1} \upvarepsilon_{\text{t1}} + \upvarepsilon_{\text{t3}} \) and \( {\text{z}}_{{{\text{t2}}}} = {\upalpha }_{2} {\upvarepsilon }_{{{\text{t2}}}} + {\upvarepsilon }_{{{\text{t4}}}} \), and again examine how the results change as \( \upalpha \) increases, that is, as the correlation between each driver and the corresponding measurement error rises.

The results of this experiment are reported in Table 3. They are entirely consistent with the results in the earlier two cases. The technique is clearly consistent: as the sample size rises, the bias falls considerably. Even for a small sample the bias is quite low when the correlation between the driver and the measurement error is .5 or above.

4.4 Detecting Irrelevant Drivers

Next, we examine whether the SSVS (Footnote 5) procedure, which we have not applied so far, can correctly identify the drivers \( {\text{z}}_{\text{t1}},{\text{z}}_{\text{t2}} \). To this end, we construct ten other drivers, say \( {\text{z}}_{\text{t3}},\ldots,{\text{z}}_{t,12} \), from a multivariate normal distribution with zero means and equal correlations of .70. In Table 4 we report the equivalent of Table 3 plus the proportion of cases, say \( \Pi^{*} \), in which SSVS has correctly excluded \( {\text{z}}_{\text{t3}},\ldots,{\text{z}}_{\text{t,12}} \) from the set of possible drivers (Footnote 6).

There is again a remarkable cut-off at the correlation level of .5. Above this level the true driver set is correctly identified in around 60% of cases even for small samples, and in over 90% of cases for the largest sample. Once the correlation falls below .5, however, the proportion of correct identifications falls dramatically. An obvious conclusion here is that when the drivers are effective enough to yield reasonably good parameter estimates, the SSVS algorithm is very effective at detecting them.

4.5 Model IV: A More Complex Nonlinearity

The true model is \( {\text{y}}_{\text{t}} ={\upgamma}_{ 0} +{\upgamma}_{ 1} {\text{x}}_{\text{t}} + {\text{ exp}}\left({- {\updelta}{\text{x}}_{\text{t}}^{2}} \right) +{\upvarepsilon}_{\text{t}} \), t = 1,…,T, and we have omitted the nonlinear term. The drivers form a Fourier basis \( \left\{ {\cos ({\text{jx}}_{\text{t}} ),\sin ({\text{jx}}_{\text{t}} ),{\text{j = 1,}} \ldots {\text{J}}} \right\} \) after transforming all series to lie in (− π, π). We have \( {\upvarepsilon}_{\text{t}} \sim {\text{iidN(0,1)}} \) and \( {\text{x}}_{\text{t}} \sim {\text{iidN(0,1)}} \), ordered from smallest to largest. The drivers, that is, powers of \( {\text{x}}_{\text{t}} \), are selected through the SSVS procedure. We set the maximum value of J to 10.

We again estimate the TVC model \( {\text{y}}_{\text{t}} = \upbeta_{0{\text{t}}} + \upbeta_{\text{1t}} x_{t1} \) and this time the derivative of y with respect to x is \( \upgamma_{1} - 2\delta {\text{x}}_{\text{t}} \exp (- \delta {\text{x}}_{\text{t}}^{2}) \). Our estimate of this is again given by \( \beta_{{1{t}}} - {\text{e}}_{\text{t}} \).
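The two ingredients of this experiment, the analytic derivative and the Fourier driver set, can be sketched as follows. The derivative is checked against a central finite difference of the noise-free part of the true model. The rescaling of \( {\text{x}}_{\text{t}} \) into (− π, π) is an assumption, since the exact transformation is not specified; the function names `true_effect` and `fourier_drivers` and the value of \( \updelta \) are hypothetical.

```python
import numpy as np

def true_effect(x, gamma1=0.1, delta=1.0):
    """Derivative of gamma0 + gamma1*x + exp(-delta*x^2) with respect to x."""
    return gamma1 - 2.0 * delta * x * np.exp(-delta * x**2)

def fourier_drivers(x, J=10):
    """Columns cos(j*s), sin(j*s), j = 1..J, with s a rescaling of x into (-pi, pi)."""
    u = (x - x.min()) / (x.max() - x.min())     # map to [0, 1]
    s = 0.999 * np.pi * (2.0 * u - 1.0)         # strictly inside (-pi, pi); assumed rescaling
    cols = [f(j * s) for j in range(1, J + 1) for f in (np.cos, np.sin)]
    return np.column_stack(cols)

rng = np.random.default_rng(3)
T = 100
x = np.sort(rng.normal(size=T))                 # x_t ~ iid N(0, 1), ordered

# Central finite difference of the noise-free true function as a check on the formula.
gamma0, gamma1, delta, h = 0.1, 0.1, 1.0, 1e-5
f = lambda v: gamma0 + gamma1 * v + np.exp(-delta * v**2)
numeric = (f(x + h) - f(x - h)) / (2.0 * h)

Z = fourier_drivers(x, J=10)
print(Z.shape, np.max(np.abs(numeric - true_effect(x, gamma1, delta))))
```

With J = 10 the candidate set has 20 columns, from which the SSVS step would select a subset.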

The results for this exercise are given in Table 5. In this case for δ in the range .1–5 the bias remains very small, as does the standard deviation. There is also a noticeable reduction in both bias and standard deviation as the sample size increases.

4.6 Model V: An Endogeneity Experiment

In this experiment we have: \( {\text{y}}_{\text{t}} = {\upgamma}_{1} + {\upgamma}_{2} {\text{x}}_{\text{t1}} + {\upgamma}_{3} {\text{x}}_{\text{t2}} + {\text{u}}_{\text{t}} \). The correlation between \( {\text{u}}_{\text{t}} \) and \( {\text{x}}_{\text{tj}} \) is .80 (j = 1, 2), so that endogeneity is quite strong in this model. Our drivers are four variables \( {\text{z}}_{{{\text{t}}5}}, \ldots,{\text{z}}_{{{\text{t}}8}} \) that are orthogonal to the error \( {\text{u}}_{\text{t}} \), and four variables \( {\text{z}}_{{{\text{t}}1}}, \ldots,{\text{z}}_{{{\text{t}}4}} \) that are correlated with the error \( {\text{u}}_{\text{t}} \) but orthogonal to each other and to the other four drivers. The degree of correlation between the drivers and \( {\text{u}}_{\text{t}} \) is \( {\uprho} \). We are interested in \( \Pi^{*} \), the proportion of cases where all the drivers \( {\text{z}}_{{{\text{t}}1}}, \ldots,{\text{z}}_{{{\text{t}}4}} \) are included in the model and the drivers \( {\text{z}}_{{{\text{t}}5}}, \ldots,{\text{z}}_{{{\text{t}}8}} \) are all excluded. Of course, we do not force the correct drivers into the final estimation.

The results for this experiment are given in Table 6. They differ markedly from those in the earlier tables. For sample sizes of 50 or 100 the bias remains quite high, even for quite high correlations. It is only for much larger sample sizes that the bias becomes negligible. For larger sample sizes the bias again remains small for correlations above .5, and the SSVS selection procedure works reasonably well.

5 Conclusions

This paper has investigated the performance of the TVC estimation procedure in a Monte Carlo setting. The key element of TVC estimation is the identification and selection of a set of driver variables. With an ideal driver set, it is straightforward to show that the procedure is both consistent and efficient. However, in practice it is not possible to know if we have a perfect driver set. Therefore, we need to know how the procedure performs when the driver set is less than perfect. In this paper, we dealt with this issue in a Monte Carlo setting.

We construct a number of Monte Carlo experiments to examine the performance of the methodology under (i) clearly-defined conditions and (ii) a range of model misspecifications. We also propose a new Bayesian search technique for the set of driver variables underlying the TVC methodology. Experiments are performed to allow for incorrectly specified functional form, omitted variables, measurement errors, unknown nonlinearity and endogeneity. Our broad conclusion is that, even for relatively small samples, the technique works well so long as the correlation between the driver set and the misspecification in the model is greater than about .5. Both the bias and the efficiency of the estimators also improve as the sample size grows, but again a correlation of over .5 seems to be required. The only caveat to this result concerns strong simultaneity bias; in that case the sample size needs to be quite large (over 500) before the technique works reasonably well. Finally, we find that the SSVS technique also seems to perform well in finding an appropriate driver set from a much larger set of possible drivers.

Notes

Footnote 3: Time-varying coefficients are meaningless in a cross section setting; in such a setting the coefficients vary across the individual units in the cross section. We simply re-interpret the t-subscripts as i-subscripts.

Footnote 4: See also Jochmann et al. (2010).

Footnote 5: An alternative to using the SSVS procedure would be the LASSO prior. The procedures are similar in terms of timing and purpose. There is some evidence that both perform well (Pavlou et al. 2016) and in a similar manner, but further work is needed in this area.

Footnote 6: There is an issue here as to whether we need to start from a superset of drivers which includes the true ones. Clearly, if we do this then this is an ideal situation and the Monte Carlo tells us how well the procedure performs. However, from a theoretical point of view what we need is that the superset includes variables that are highly correlated with the true drivers. In a data rich environment this would not be a strong restriction. Bai and Ng (2010) prove that common factors which drive all the variables in a system are valid instrumental variables. By the same reasoning, we could construct a set of factors from a large set of variables which would work well as drivers.

Footnote 7: This guarantees the existence of moments up to order four.

References

Bai, J., & Ng, S. (2010). Instrumental variable estimation in a data rich environment. Econometric Theory,26, 1577–1606.

George, E., Sun, D., & Ni, S. (2008). Bayesian stochastic search for VAR model restrictions. Journal of Econometrics,142, 553–580.

Girolami, M., & Calderhead, B. (2011). Riemann manifold Langevin and Hamiltonian Monte Carlo methods. Journal of the Royal Statistical Society: Series B (Statistical Methodology),73(2), 123–214.

Granger, C. W. J. (2008). Nonlinear models: Where do we go next—Time-varying parameter models? Studies in Nonlinear Dynamics and Econometrics,12(3), 1–9.

Hall, S. G., Hondroyiannis, G., Swamy, P. A. V. B., & Tavlas, G. S. (2009). Where has all the money gone? Wealth and the demand for money in South Africa. Journal of African Economies,18(1), 84–112.

Hall, S. G., Hondroyiannis, G., Swamy, P. A. V. B., & Tavlas, G. S. (2010). The Fisher effect puzzle: A case of non-linear relationship. Open Economies Review. https://doi.org/10.1007/s11079-009-9157-1.

Hall, S. G., Swamy, P. A. V. B., & Tavlas, G. S. (2017). Time-varying coefficient models: A proposal for selecting the coefficient driver sets. Macroeconomic Dynamics,21(5), 1158–1174.

Hondroyiannis, G., Kenjegaliev, A., Hall, S. G., Swamy, P. A. V. B., & Tavlas, G. S. (2013). Is the relationship between prices and exchange rates homogeneous? Journal of International Money and Finance,37, 411–436. https://doi.org/10.1016/j.jimonfin.2013.06.014.

Jochmann, M., Koop, G., & Strachan, R. W. (2010). Bayesian forecasting using stochastic search variable selection in a VAR subject to breaks. International Journal of Forecasting,26(2), 326–347.

Kenjegaliev, A., Hall, S. G., Tavlas, G. S., & Swamy, P. A. V. B. (2013). The forward rate premium puzzle: A case of misspecification? Studies in Nonlinear Dynamics and Econometrics,3, 265–280. https://doi.org/10.1515/snde-2013-0009.

Pavlou, M., Ambler, G., Seaman, S., De Iorio, M., & Omar, R. Z. (2016). Review and evaluation of penalised regression methods for risk prediction in low-dimensional data with few events. Statistics in Medicine,35(7), 1159–1177.

Swamy, P. A. V. B., Chang, I.-L., Mehta, J. S., & Tavlas, G. S. (2003). Correcting for omitted-variable and measurement-error bias in autoregressive model estimation with panel data. Computational Economics,22, 225–253.

Swamy, P. A. V. B., Hall, S. G., & Tavlas, G. S. (2012). Generalized cointegration: A new concept with an application to health expenditure and health outcomes. Empirical Economics,42, 603–618. https://doi.org/10.1007/s00181-011-0483-y.

Swamy, P. A. V. B., Hall, S. G., & Tavlas, G. S. (2015). A note on generalizing the concept of cointegration. Macroeconomic Dynamics,19(7), 1633–1646. https://doi.org/10.1017/S1365100513000928.

Swamy, P. A. V. B., Hall, S. G., Tavlas, G. S., & Hondroyiannis, G. (2010). Estimation of parameters in the presence of model misspecification and measurement error. Studies in Nonlinear Dynamics & Econometrics,14, 1–35.

Swamy, P. A. V. B., & Mehta, J. S. (1975). Bayesian and non-Bayesian analysis of switching regressions and a random coefficient regression model. Journal of the American Statistical Association,70, 593–602.

Tavlas, G. S., Swamy, P. A. V. B., Hall, S. G., & Kenjegaliev, A. (2013). Measuring currency pressures: The cases of the Japanese Yen, the Chinese Yuan, and the U.K. pound. Journal of the Japanese and International Economies,29, 1–20. https://doi.org/10.1016/j.jjie.2013.04.001.

Additional information

We are grateful to Fredj Jawadi, and two referees for helpful comments on an earlier draft.

Appendix

This Appendix provides details on the computational methods used in the Monte Carlo simulations. Following Girolami and Calderhead (2011) we utilize Metropolis-adjusted Langevin and Hamiltonian Monte Carlo sampling methods defined on the Riemann manifold, since we are sampling from target densities with high dimensions that exhibit strong degrees of correlation. Consider the Langevin diffusion:

where \( {\mathbf{B}} \) denotes the D-dimensional Brownian motion. The first-order Euler discretization provides the following candidate generation mechanism:

where \( {\mathbf{z}}\sim\mathcal{N}_{D} \left({{\mathbf{0}},{\mathbf{I}}} \right) \), and \( \varepsilon > 0 \) is the integration step size. Since the discretization induces an unavoidable error in approximation of the posterior, a Metropolis step is used, where the proposal density is

with acceptance probability \( a\left({{\boldsymbol{\uptheta}}^{o},{\boldsymbol{\uptheta}}^{*}} \right) = \hbox{min} \left\{{1,\frac{{p\left({{\boldsymbol{\uptheta}}^{*} |{\boldsymbol{\mathbb{Y}}}} \right)q\left({{\boldsymbol{\uptheta}}^{o} |{\boldsymbol{\uptheta}}^{*}} \right)}}{{p\left({{\boldsymbol{\uptheta}}^{o} |{\boldsymbol{\mathbb{Y}}}} \right)q\left({{\boldsymbol{\uptheta}}^{*} |{\boldsymbol{\uptheta}}^{o}} \right)}}} \right\} \). Here \( {\boldsymbol{\mathbb{Y}}} \) denotes the available data. The Brownian motion of the Riemann manifold is given by:

for \( i = 1,\ldots,D \).

The discrete form of the above stochastic differential equations is:

The proposal density is \( {\boldsymbol{\uptheta}}^{*} |{\boldsymbol{\uptheta}}^{o} \sim\mathcal{N}_{D} \left({{\boldsymbol{\upmu}}\left({{\boldsymbol{\uptheta}}^{o},\varepsilon} \right),\varepsilon^{2} {\mathbf{G}}^{- 1} \left({{\boldsymbol{\uptheta}}^{o}} \right)} \right) \) and the acceptance probability has the standard Metropolis form:

The gradient and the Hessian are computed using analytic derivatives. All computations are performed in Fortran 77 making extensive use of IMSL subroutines.

The Metropolis–Hastings procedure we use is a simple random walk whose candidate generating density is a multivariate Student-t distribution with 5 degrees of freedom (Footnote 7) and covariance equal to a scaled version of the covariance obtained from the Langevin Diffusion MCMC. The scale parameter is adjusted so that approximately 25% of the draws are accepted.
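As an illustration of the basic mechanism described in this Appendix, the sketch below implements a plain Metropolis-adjusted Langevin step with an identity metric: an Euler proposal with step size \( \varepsilon \), followed by the accept/reject step using the ratio of target and proposal densities. It is not the Riemann manifold variant and not the code used for the experiments. The bivariate normal target, the step size and the function name `mala` are hypothetical choices made only so that the example runs on its own.

```python
import numpy as np

def mala(log_p, grad_log_p, theta0, eps, n_draws, rng):
    """Plain MALA: proposal theta + (eps^2/2) * grad + eps * z, then Metropolis correction."""
    theta = np.asarray(theta0, dtype=float)
    draws = np.empty((n_draws, theta.size))
    accepted = 0

    def log_q(to, frm):
        # Log proposal density of `to` given `frm`, up to a constant that cancels.
        mean = frm + 0.5 * eps**2 * grad_log_p(frm)
        return -np.sum((to - mean) ** 2) / (2.0 * eps**2)

    for s in range(n_draws):
        mean = theta + 0.5 * eps**2 * grad_log_p(theta)
        prop = mean + eps * rng.standard_normal(theta.size)
        log_a = (log_p(prop) + log_q(theta, prop)
                 - log_p(theta) - log_q(prop, theta))
        if np.log(rng.uniform()) < log_a:       # accept with probability min(1, exp(log_a))
            theta = prop
            accepted += 1
        draws[s] = theta
    return draws, accepted / n_draws

# Toy target: zero-mean bivariate normal with correlation 0.9.
S = np.array([[1.0, 0.9], [0.9, 1.0]])
P = np.linalg.inv(S)
log_p = lambda th: -0.5 * th @ P @ th
grad_log_p = lambda th: -P @ th

rng = np.random.default_rng(7)
draws, acc_rate = mala(log_p, grad_log_p, np.zeros(2), eps=0.3, n_draws=30_000, rng=rng)
print(acc_rate, draws.mean(axis=0), np.corrcoef(draws.T)[0, 1])
```

The manifold version replaces the identity metric with a position-dependent matrix \( {\mathbf{G}}({\boldsymbol{\uptheta}}) \), which matters most when the target is strongly correlated, as in the toy example.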

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hall, S.G., Gibson, H.D., Tavlas, G.S. et al. A Monte Carlo Study of Time Varying Coefficient (TVC) Estimation. Comput Econ 56, 115–130 (2020). https://doi.org/10.1007/s10614-018-9878-6
