Abstract

In this paper, we analyze and compare the finite sample properties of alternative factor extraction procedures in the context of non-stationary Dynamic Factor Models (DFMs). On top of considering procedures already available in the literature, we extend the hybrid method based on the combination of principal components and Kalman filter and smoothing algorithms to non-stationary models. We show that if the idiosyncratic noises are stationary, procedures based on extracting the factors using the non-stationary original series work better than those based on differenced variables. We apply the methodology to the analysis of cross-border risk sharing by fitting non-stationary DFM to aggregate Gross Domestic Product and consumption of a set of 21 industrialized countries from the Organization for Economic Co-operation and Development (OECD). The goal is to check if international risk sharing is a short- or long-run issue.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Dynamic Factor Models (DFMs) were first introduced in economics by Geweke (1977) and Sargent and Sims (1977) with the aim of extracting the underlying common factors in a system of time series. In macroeconomics, these common factors are useful for building indicators and to predict key variables of the economy, among many other applications. Recently, econometricians have to deal with data sets consisting of hundreds of series, making the use of large-dimensional DFMs very attractive in practice; see Breitung and Eickmeier (2006), Bai and Ng (2008), Stock and Watson (2011), Breitung and Choi (2013) and Bai and Wang (2016) for reviews of the existing literature.

It is well known that macroeconomic time series are frequently non-stationary and cointegrated. The connection between cointegration and common factors is analyzed by Stock and Watson (1988), Johansen (1991), Vahid and Engle (1993), Escribano and Peña (1994), Gonzalo and Granger (1995), Bai (2004), Bai and Ng (2004), Moon and Perron (2004), Banerjee et al. (2014, 2017), Barigozzi et al. (2016, 2017) and Barigozzi and Luciani (2017), among others.

In the context of univariate time series, it is common to deal with non-stationarity by differencing. However, when dealing with multivariate systems, differencing should be considered with care; see Box and Tiao (1977). It is well known that, when differencing a cointegrated system, the long-run information, crucial to understand comovements between the variables, is lost; see Seong et al. (2013) who point out that differencing is an inefficient transformation to stationarity in the presence of cointegration. Canova (1998) qualifies the detrending issue as “delicate and controversial” and compares the properties of the cyclical components of a system of seven real macroeconomic series obtained using seven univariate and three multivariate techniques. He concludes that the properties of the extracted business cycles vary widely across detrending methods. Sims (2012) claims that “when cointegration may be present, simply getting rid of the non-stationarity by differencing individual series so that they are all stationary throws away vast amounts of information and may distort inference.”

As a consequence of this controversy, the number of works dealing with non-stationary and possibly cointegrated DFMs is increasing. In the context of non-stationary systems, Bai (2004) proposes factor extraction implementing principal components (PC) to data in levels and derives the rates of convergence and limiting distributions of the estimated common trends, loading weights and the common component when the idiosyncratic components are stationary; see Engel et al. (2015) for an application to exchange rates. However, Barigozzi et al. (2016, 2017) point out that stationarity of the idiosyncratic components would produce cointegration relations for the observed system that are not observed in the large sets of time series that are standard in the DFMs literature as, for example, those of Stock and Watson (2012) and Forni et al. (2009). One possible explanation could be that the idiosyncratic component in those datasets is likely to be non-stationary, and consequently, an estimation strategy robust to the assumption that some of the idiosyncratic components are non-stationary could be preferred.

Alternatively, PC can be implemented to first-differenced data with the estimated factors obtained either by integration of their estimated first differences as proposed by Bai and Ng (2004) or by projecting the original system onto the space spanned by the estimated loadings as proposed by Barigozzi et al. (2016).Footnote 1 Bai and Ng (2004) prove the consistency of PC factor estimates when they are obtained from first-differenced data using the “differencing and recumulating” method; see Greenway et al. (2018) who obtain recumulated factors in the context of exchange rates.

Finally, Bai and Ng (2004) carry out a Monte Carlo analysis to evaluate and compare the finite sample properties of implementing PC procedures to data in levels or to their first differences and show that the non-stationary common factors can be properly recovered by both approaches when the idiosyncratic components are stationary. However, when the idiosyncratic components are non-stationary, PC cannot be directly implemented to the original data and it is convenient to use the “differencing and recumulating” method.Footnote 2

PC-based approaches have a major limitation in that they are not exploiting in any way the dynamic nature of the factors, nor the serial and cross-sectional dependence. Consequently, they are not efficient. Instead of implementing PC procedures, factor extraction can be carried out using two-step Kalman Smoothing (2SKS) techniques based on combining PC factor extraction and a Kalman Smoother. The main advantage of the 2SKS comes from the flexibility of the Kalman filter to explicitly model the factor dynamics. In the stationary case, Doz et al. (2011, 2012) show that 2SKS outperforms PC in terms of the precision of the factor estimates and derive its asymptotic properties; see also Poncela and Ruiz (2016). 2SKS has been implemented to non-stationary systems by Seong et al. (2013) in a low-dimensional setting and in Quah and Sargent (1993) in a large but finite cross-sectional dimension case with orthogonal idiosyncratic components.

The contributions of this paper are twofold. First, we extend the analysis of Bai and Ng (2004, 2010) comparing the factors extracted using PC implemented to the original non-stationary system with those obtained by “differencing and recumulating.” We consider a wide range of structures of the idiosyncratic noises, including heteroscedasticity and temporal and/or cross-sectional dependences. We also consider systems with two factors with the factors being either both non-stationary or one stationary and another non-stationary. With respect to the idiosyncratic components, we consider cases in which all of them are either stationary or non-stationary and cases in which some of them are stationary and others are not. Finally, we compare PC and 2SKS factor extraction procedures.Footnote 3 We analyze the performance of the 2SKS procedure when extracting factors using the first-differenced data and estimating the original factors by recumulating. Furthermore, we propose a new 2SKS procedure which can be implemented to the original non-stationary system.Footnote 4

The second contribution of this paper is an empirical application in which we extract common factors from a non-stationary system of aggregate output and consumption variables of a set of 21 industrialized countries of the Organization for Economic Co-operation and Development (OECD). International risk sharing focuses on cross-border mechanisms to smooth consumption when a country is hit by an output shock. The goal is to check if Gross Domestic Product (GDP) fluctuations are directly passed to consumption or can be at least partially cross-border smoothed, allowing to check the resilience of domestic consumption when the national economies are hit by GDP shocks. The use of possible non-stationary DFMs allows to distinguish between long-run and short-run issues in consumption smoothing through international risk sharing. As it has been recognized since Lucas (1987), smoothing purely transitory fluctuations in consumption will result in very small welfare benefits. However, Artis and Hoffmann (2012) point out that if shocks are persistent, the benefits from better consumption insurance may be huge. Since this issue has been hardly addressed in the literature, it is still an open question which the degree of risk sharing at lower frequencies is. As far as we know, this is the first time that non-stationary DFMs are used in this context.Footnote 5 In fact, any method able to address this issue will be useful for policy making: not only the benefits from insuring permanent shocks are higher, but also the channels to do so are different; see, Artis and Hoffmann (2008).

The rest of this paper is structured as follows. Section 2 describes the DFM and the factor extraction procedures considered. Section 3 presents the results of Monte Carlo experiments. Section 4 contains the empirical application to measure risk sharing. Finally, Sect. 5 concludes.

2 Factor Extraction Algorithms

In this section, we introduce notation and describe the DFM considered in this paper. Furthermore, the PC and 2SKS factor extraction procedures are described both when implemented to original and first-differenced data.

2.1 Dynamic Factor Model

We consider the following static DFM where the unobserved common factors, \( F_{t}\), and the idiosyncratic noises, \(\varepsilon _{t}\), follow potentially non-stationary VAR(1) processes:

where \(Y_{t}=(y_{1t},\dots ,y_{Nt})^{\prime }\) and \(\varepsilon _{t}=(\varepsilon _{1t},\dots ,\varepsilon _{Nt})^{\prime }\) are \(N\times 1\) vectors of the variables observed at time t and idiosyncratic noises, respectively. The common factors, \(F_{t}=(F_{1t},\dots ,F_{rt})^{\prime }\), and the factor disturbances, \(\eta _{t}=(\eta _{1t},\dots ,\eta _{rt})^{\prime }\), are \(r\times 1\) vectors, with \(r\,(r<N)\) being the number of common factors which is assumed to be known. The \(N\times 1\) vector of idiosyncratic disturbances, \(a_{t}\), is distributed independently from the factor disturbances, \(\eta _{t}\), for all leads and lags. Furthermore, \(\eta _{t}\) and \(a_{t}\) are assumed to be Gaussian white noises with positive definite covariance matrices \(\Sigma _{\eta }=\text {diag}(\sigma _{\eta _{1}}^{2},\ldots ,\sigma _{\eta _{r}}^{2})\) and \(\Sigma _{a},\) respectively. \(P=(p_{1},\dots ,p_{N})^{\prime }\) is the \(N\times r\) matrix of factor loadings, where, \( p_{i}=(p_{i1},\dots ,p_{ir})^{\prime }\) is an \(r \times 1\) vector. Finally, \(\Phi =\text {diag}(\phi _{1},\dots ,\phi _{r})\) and \(\Gamma =\text {diag}(\gamma _{1},\ldots ,\gamma _{N})\) are \(r\times r\) and \(N\times N\) matrices containing the autoregressive parameters of the factors and idiosyncratic components, respectively, which can be equal to one; see, for example, Stock and Watson (1989) and Barigozzi and Luciani (2017) for static DFMs for non-stationary data.

Note that, according to economic theory, there is full agreement that some factors (related with, for example, technology shocks) may have permanent effects while others (such as monetary policy shocks) have only transitory ones. Furthermore, there are also arguments to assume non-stationary idiosyncratic components. Barigozzi et al. (2016, 2017) point out that stationarity of the idiosyncratic components would produce an amount of cointegration relations for the observed system that it is not consistent with that found in the systems that are standard in the DFMs literature as, for example, those of Stock and Watson (2002) and Forni et al. (2009). The idiosyncratic component in those datasets is likely to be non-stationary. The implausibility of a stationary idiosyncratic component is also confirmed empirically by Barigozzi et al. (2016) in a large macroeconomic system of quarterly series describing the US economy with about half of the estimated idiosyncratic components found to be non-stationary according to the test proposed by Bai and Ng (2004).

The DFM in Eqs. (1)–(3) is not identified. To solve the identification problem and uniquely define the factors, a normalization is necessary. In the context of PC factor extraction, it is common to impose the restriction \(P^{\prime }P/N=I_{r}\) and \(F^{\prime }F\) being diagonal, where \(F=(F_{1},\dots ,F_{T})\) is the \(r\times T\) matrix of common factors; see, for example, Bai and Wang (2014) and Barigozzi et al. (2016). Bai and Ng (2013) consider alternative identification restrictions in the context of PC factor extraction.

2.2 PC Factor Extraction

The most popular factor extraction procedures in large datasets are based on PC. The distinctive feature of PC is that it allows a consistent factor extraction in large datasets without assuming any particular error distribution and specifications of the factors and idiosyncratic noises further than the correlation of the latter being weak and the variability of the common factors being not too small.Footnote 6 Furthermore, PC is computationally simple which explains its wide implementation among practitioners when dealing with very large systems of economic variables.

PC factor extraction separates the common component, \(PF_{t}\), from the idiosyncratic component, \(\varepsilon _{t},\) through cross-sectional averages of \(Y_{t}\) in such a way that when N and T tend to infinity, the effect of the idiosyncratic component converges to zero remaining only the effects associated with the common factors. PC estimators of P and \( F_{t}\) are obtained as the solution to the following least squares problem

subject to the identification restrictions \(P^{\prime }P/N=I_{r}\) and \(F^{\prime }F\) being diagonal, where \( V_{r}(P,F)=\frac{1}{NT}\sum _{t=1}^{T}(Y_{t}-PF_{t})^{\prime }(Y_{t}-PF_{t}).\) The solution to (4) is obtained by setting \({\hat{P}}^{PCL}\) equal to \(\sqrt{N}\) times the eigenvectors corresponding to the r largest eigenvalues of \(YY^{^{\prime }}\) where \(Y=(Y_{1},\dots ,Y_{T})\) is an \( N\times T\) matrix of observations. The corresponding PC estimator of F using data in levels is given by

Alternatively, when the common factors are I(0), Bai and Ng (2002) consider the restriction \(FF^{\prime }/T=I_{r}\) with \(P^{\prime }P\) being diagonal, such that the estimator of the matrix of common factors, \({\hat{F}}^{PCL}\), is by rows \(\sqrt{T}\) times the eigenvectors corresponding to the r largest eigenvalues of the \(T\times T\) matrix \(Y^{\prime }Y\), with estimated factor loadings, \({\hat{P}}^{PCL}=Y{\hat{F}}^{PCL^{\prime }}/T\). When the common factors are I(1), Bai (2004) proposes to use the restriction \( FF^{\prime }/T^{2}=I_{r}\) with \(P^{\prime }P\) being diagonal. In this case, \( {\hat{F}}^{PCL}\) is by rows T times the eigenvectors corresponding to the r largest eigenvalues of the \(T\times T\) matrix \(Y^{\prime }Y\) and \({\hat{P}} ^{PCL}=Y{\hat{F}}^{PCL^{\prime }}/T^{2}\). These latter restrictions are less costly when \(N>T\), while the former are less costly when \(N<T\).

In the context of stationary systems, if the common factors are pervasive and the serial and cross-sectional correlations of the idiosyncratic components are weak, Bai (2003) proves the consistency of \({\hat{F}}^{PCL}\), \({\hat{P}}^{PCL}\) and the common component, deriving their asymptotic distributions when N and T tend simultaneously to infinity, allowing for heteroscedasticity in both the temporal and cross-sectional dimensions; see, also, Bai and Ng (2002) and Stock and Watson (2002). Bai (2004) extends these asymptotic results to PC factor extraction in the DFM in Eqs. (1)–(3) when \(F_{t}\) is I(1) and \( \varepsilon _{t}\) is I(0). When the idiosyncratic components are I(1), Bai and Ng (2008) show that PC factor extraction implemented to data in levels yields inconsistent estimates of the common factors.

Alternatively, instead of extracting the factors implementing PC to the original data, Bai and Ng (2004) propose differencing the data in a univariate fashion and extract the factors from the following differenced model

where \(f_t=\Delta F_t\), \(u_t=\Delta \eta _t\), \(e_t=\Delta \varepsilon _t\) and \(v_t=\Delta a_t\) with \(\Delta =(1-L)\) and L being the lag operator such that \( LY_{t}=Y_{t-1}\). The weights are estimated as \(\sqrt{N}\) times the first r normalized eigenvectors of the \(N\times N\) sample covariance matrix of \( \Delta Y_{t}\) and denoted by \({\hat{P}}^{PCD}\). The corresponding estimated factors are given by

Once the factors are extracted from the first-differenced variables, the estimated factors can be obtained either by integration of their estimated first differences as proposed by Bai and Ng (2004) or by projecting the original system onto the space spanned by the estimated loadings as proposed by Barigozzi et al. (2016). The “differencing and recumulating” estimated factor is given by

Note that assuming \(Y_{0}=0,\) the estimated differenced factor at time \(t = 1\) is given by \({\hat{f}}_{1}=N^{-1}{\hat{P}}^{PCD^{\prime }}Y_{1}\), and consequently, the estimated recumulated factor coincides with the projected factor which is given by

Bai and Ng (2004) and Barigozzi et al. (2016) show that \({\hat{F}}_{t}^{PCD}\) and \({\hat{F}}_{t}^{BLL}\), respectively, are consistent estimators for a rotation of \(F_{t}\) up to a level shift regardless of whether the idiosyncratic component, \(\varepsilon _{t},\) is I(0) or I(1). Note that the factor estimators proposed by Bai and Ng (2004) and Barigozzi et al. (2016) are asymptotically equivalent with some finite sample differences when there are deterministic trends in the DFMs. Note that the elements in \({\hat{f}}_t\) are orthogonal, but those in \({\hat{F}}_{t}^{PCD}\) are not. This fact makes difficult the interpretation of this latest estimator.

For the properties of the PC-extracted factor when implemented to the original non-stationary system, it is crucial knowing whether the idiosyncratic errors are stationary or not. Bai and Ng (2004) propose the PANIC procedure to determine the order of integration of both the common factors and idiosyncratic components. Its objective is to determine the number of non-stationary common factors, \(r_1\), and to test if the idiosyncratic noises are non-stationary. If there is only one factor, PANIC tests are carried out through simple unit root test. If there are multiple factors, Bai and Ng (2004) consider two tests to determine the number of independent stochastic trends underlying the r common factors. The first test filters the factors under the assumption that they have a finite VAR representation. The second corrects for serial correlation of arbitrary form. On the other hand, when testing the non-stationarity of the idiosyncratic noises, univariate unit root tests have lower power, and consequently, the following pooled test is proposed

where \(s_i\) is the p-value corresponding to the Dickey–Fuller test of the ith idiosyncratic residuals. Pooled tests could not be used in the original data because of strong cross-correlation due to the common factors, but they can be used in the specific components since this strong cross-correlation has been removed after extracting the common factors. Bai and Ng (2010) analyze the finite sample properties of the pooled test.

2.3 Two-Step Kalman Smoother

The 2SKS procedure was proposed by Doz et al. (2011) for stationary DFMs. Therefore, 2SKS can be implemented to \(\Delta Y_{t}\). The 2SKS factor extraction procedure is based on combining PC and Kalman Smoother techniques. First, the common factors and factor loadings are estimated using PC obtaining \({\hat{P}}^{PCD}\) and \({\hat{f}}_{t}\) and the corresponding idiosyncratic and factor residuals, \({\hat{e}}=\Delta Y-{\hat{P}}^{PCD}{\hat{f}} \) and \({\hat{u}}_{t}={\hat{f}}_{t}-{\hat{\Phi }}{\hat{f}}_{t-1}\) where \({\hat{\Phi }}\) is the ordinary least squares (OLS) estimator of the regression of \({\hat{f}}_{t}\) on \({\hat{f}}_{t-1}.\) These residuals are used to estimate the covariance matrices \({\hat{\Psi }}=\text {diag}\left( {\hat{\Sigma }}_{e}\right) \) where \({\hat{\Sigma }}_{e}={\hat{e}} {\hat{e}} ^{\prime }/(T-1)\) with \({\hat{e}}=({\hat{e}}_{2},\dots ,{\hat{e}}_{T})\) is an \(N\times (T-1)\) matrix and \({\hat{\Sigma }} _{\eta }={\hat{u}}{\hat{u}}^{\prime }/(T - 1)\) where \(u=({\hat{u}}_{2},\dots ,{\hat{u}}_{T})\) is an \(r\times (T-1)\) matrix. Assuming that \(f_{0}\sim N(0,\Sigma _{f})\), the unconditional covariance of the factors can be estimated as vec\(( {\hat{\Sigma }} _{f}) =( I_{r^{2}}-{\hat{\Phi }}\otimes {\hat{\Phi }}) ^{-1}\)vec\( ( {\hat{\Sigma }}_{\eta }) .\) After writing the DFM in Eqs. (6)–(8) in state-space form, with the system matrices substituted by \({\hat{P}}^{PCD}\), \({\hat{\Psi }}\), \({\hat{\Phi }}\), \({\hat{\Sigma }} _{\eta }\) and \({\hat{\Sigma }}_{f},\) the Kalman smoother is run to obtain an updated estimation of the factors denoted by \({\hat{f}}_{t}^{KS}\). Finally, estimates of the common factors, \({\hat{F}}_{t}^{KSD}\), are obtained by recumulating analogously to Eq. (10).

Doz et al. (2011) prove the consistency of \({\hat{f}}_{t}^{KS}\) when N and T are large considering assumptions slightly different to those in Bai and Ng (2002), Stock and Watson (2002) and Bai (2003) but with a similar role. The 2SKS works well in finite samples obtaining more accurate factor estimates of \(f_{t}=\Delta F_{t}\) even in the presence of correlation and heteroscedasticity in the idiosyncratic noises; see Doz et al. (2011).Footnote 7

Considering the possibility of non-stationary common factors, we propose to extend the 2SKS algorithm as followsFootnote 8

- 1.

Obtain PC estimates of P and \(F_{t}\) with data in levels given by expression (5). Compute the idiosyncratic residuals \({\hat{\varepsilon }}=Y-{\hat{P}}^{PCL}{\hat{F}}^{PCL}\) and the covariance matrix of the idiosyncratic residuals \({\hat{\Psi }} = \text {diag}\left( {\hat{\Sigma }}_ \varepsilon \right) \).

- 2.

For each estimated factor, \({\hat{F}}_{jt}^{PCL}\), \(j=1,\dots ,r\), carry out the Augmented–Dickey–Fuller (ADF) test.

- (a)

If the null hypothesis of a unit root is rejected, obtain the OLS estimate of the autoregressive coefficient, \({\hat{\phi }}_{j}\), the residuals \( {\hat{u}}_{jt}={\hat{F}}_{jt}^{PCL}-{\hat{\phi }}_i{\hat{F}}_{jt-1}^{PCL}\) and the sample variance of the factor disturbance, \({\hat{\sigma }}_{\eta _{j}}^{2}=\sum _{t=1}^{T}u_{jt}^{2}/T\). The initial state of the factor is assumed to have zero mean and variance estimated by \({\hat{\sigma }} _{F_{j}}^{2}={\hat{\sigma }}_{\eta _{j}}^{2}/(1-{\hat{\phi }}_{j}^{2})\).

- (b)

If the null hypothesis is not rejected, then \({\hat{\phi }}_{j}=1\) and the residuals are computed as \({\hat{u}}_{jt}=\Delta {\hat{F}}_{jt}^{PCL}\). Calculate the variance of the factor residuals, \({\hat{\sigma }}_{\eta j}^{2}=\sum _{t=2}^{T}\Delta {\hat{F}}_{jt}^{PCL^{2}}/(T-1)\). Assume a diffuse prior for the initial factor with mean zero and variance \({\hat{\sigma }} _{F_{j}}^{2}=\kappa \), where \(\kappa \) is a large constant that empirically performs well (for instance, \(\kappa =10^{7}\)); see Harvey and Phillips (1979), Burridge and Wallis (1985) and Harvey (1989).Footnote 9

- (a)

- 3.

Obtain \({\hat{\Phi }}=\text {diag}({\hat{\phi }}_{1},\dots ,{\hat{\phi }}_{r})\), \(\hat{ \Sigma }_{\eta }=\text {diag}({\hat{\sigma }}_{\eta _{1}}^{2},\dots ,{\hat{\sigma }}_{\eta _{r}}^{2})\), \({\hat{\Sigma }}_{F} = \text {diag}({\hat{\sigma }}_{F_1}^2, \dots , {\hat{\sigma }}_{F_r}^2)\) and use them together with \({\hat{P}}^{PCL}\) and \({\hat{\Psi }}\) in the KS to obtain the estimated common factors \({\hat{F}}^{KSL}\).

3 Finite Sample Performance

In this section, we carry out Monte Carlo experiments in order to study the performance of the factor extraction procedures described in the previous section.

The experiments are based on \(R=500\) replicas generated by the DFM in Eqs. (1)–(3) with sample sizes \( T=(100,500)\) and \(N=(12,50,200)\). The factor loadings are generated once as \( P\thicksim U\left[ 0,1\right] \), and the autoregressive matrix of the idiosyncratic components is diagonal, \(\Gamma =\gamma I,\) with \(\gamma =(-\,0.8,0,0.7,1)\).Footnote 10 We consider three specifications of dependence of the idiosyncratic noises: a) homoscedastic and cross-sectionally uncorrelated, with \(\Sigma _{a}=\sigma _{a}^{2}I\) where \(\sigma _{a}^{2}=(0.1,1,10);\) b) heteroscedastic and cross-sectionally uncorrelated with the variances generated by \(\sigma _{a_{i}}^{2}\thicksim U\left[ 0.05,0.15\right] ,\)\(\sigma _{a_{i}}^{2}\thicksim U\left[ 0.5,1.5\right] \) and \(\sigma _{a_{i}}^{2}\thicksim U\left[ 5,15\right] \); c) homoscedastic and cross-sectionally correlated with weak cross-correlation generated following Kapetanios (2010) as \(\Sigma ^{1/2}\varepsilon _{t}\) where \(\Sigma =[\sigma _{i,j}],\sigma _{i,j}=\sigma _{j,i}\sim U(-\,0.1,0.1)\) for \(|i-j|\le 5\) for \(i,j=1,\dots N\). Finally, with respect to the unobserved factors, we consider four different data generating processes (DGPs). The first DGP, denoted as model 1 (M1), has \(r=1\), \(\Phi =1\) and \(\sigma _{\eta }^{2}=1\) so that the factor is given by a random walk. The second and third models (M2 and M3) introduce a second random walk with \(r=2\) and \(\Phi =I\) while \(\Sigma _{\eta }=I\) (M2) and \(\Sigma _{\eta }=\text {diag}(1,5)\) (M3). Finally, the fourth model considered (M4) also has two factors, but one is stationary while the other is not. In particular, in model M4, \(\Sigma _{\eta }=I\) and \( \Phi =\text {diag}(1,0.5).\)

For each DGP considered, the common factors are estimated using the procedures described in Sect. 2 obtaining \({\hat{F}}_{t}^{PCD}\) and \({\hat{F}} _{t}^{KSD},\) based on “differencing and recumulating,” and \({\hat{F}}_{t}^{PCL}\), \({\hat{F}}_{t}^{GPCE}\) and \({\hat{F}}_{t}^{KSL}\), based on data in levels.Footnote 11 Following Bai (2004), the performance of the factor extraction procedures is evaluated by computing the sample correlation between the true factor, \(F_{t},\) and a rotation of the estimated factors, \( {\hat{\delta }}_{j}^{\prime }{\widehat{F}}_{t}^{(j)}\), estimated by the following regression

Figure 1 plots the Box-plots of the sample correlations between the true and rotated estimated factors obtained through the Monte Carlo replicates when the systems are generated by the M1 model with homoscedastic idiosyncratic errors with \(\sigma _{a}^{2}=10\) when the temporal and cross-sectional dimensions are \((N,T)=(12,50),(12,100),(50,100),(200,100)\) and (200, 500).

Box-plots of the sample correlations between \(\{{\hat{\delta }}_{j}^{\prime } {\hat{F}}_{t}^{PCD}\}\), \(\{{\hat{\delta }}_{j}^{\prime }{\hat{F}} _{t}^{KSD}\}\), \(\{{\hat{\delta }}_{j}^{\prime }{\hat{F}}_{t}^{PCL}\}\) and \(\{\hat{\delta } _{j}^{\prime }{\hat{F}}_{t}^{KSL}\}\) with \(\{F_{t}\}\). We consider the M1 model with homoscedasticity in idiosyncratic errors with \(\sigma _{a}^{2}=10\). First row indicates \(N=12\) and \(T=50\); second row \(N=12\) and \( T=100\); third row \(N=50\) and \(T=100\); fourth row \(N=200\) and \(T=100\) and fifth row \(N=200\) and \(T=500\). The first column plots \(\gamma =-\,0.8\), second column \(\gamma =0\), third column \(\gamma =0.7\) and fourth column \(\gamma =1\)

Box-plots of the sample correlations between \(\{\hat{\delta }_{j}^{\prime } {\hat{F}}_{t}^{PCD}\}\), \(\{\hat{\delta }_{j}^{\prime }{\hat{F}} _{t}^{KSD}\}\), \(\{\hat{\delta }_{j}^{\prime }{\hat{F}}_{t}^{PCL}\}\) and \(\{\hat{\delta } _{j}^{\prime }{\hat{F}}_{t}^{KSL}\}\) with \(\{F_{t}\}\). We consider the M1 model with homoscedasticity in idiosyncratic errors with \(\gamma =-\,0.8\). First row indicates \(N=12\) and \(T=50\); second row \(N=12\) and \(T=100\) ; third row \(N=50\) and \(T=100\); fourth row \(N=200\) and \(T=100\); and fifth row \(N=200\) and \(T=500\). The first column plots \(\sigma _{a}^{2}=0.1\), second column \(\sigma _{a}^{2}=1\) and third column \(\sigma _{a}^{2}=10\)

Box-plots of the sample correlations between \(\{\hat{\delta }_{j}^{\prime } {\hat{F}}_{t}^{PCD}\}\), \(\{\hat{\delta }_{j}^{\prime }{\hat{F}} _{t}^{KSD}\}\), \(\{\hat{\delta }_{j}^{\prime }{\hat{F}}_{t}^{PCL}\}\) and \(\{\hat{\delta } _{j}^{\prime }{\hat{F}}_{t}^{KSL}\}\) with \(\{F_{t}\}\). We consider the M1 model with homoscedasticity in idiosyncratic errors with \(\gamma =1\). First row indicates \(N=12\) and \(T=50\); second row \(N=12\) and \(T=100\); third row \(N=50\) and \(T=100\); fourth row \(N=200\) and \(T=100\); and fifth row \( N=200\) and \(T=500\). First column plots \(\sigma _{a}^{2}=0.1\), second column \(\sigma _{a}^{2}=1\) and third column \(\sigma _{a}^{2}=10\)

Several conclusions are obtained from Fig. 1. First, all procedures based on differencing and recumulating are similar among them. The same can be said about the procedures based on extracting factors directly from the data in levels. Second, regardless of N and T, the correlations of the “differencing and recumulating” PC procedure can be rather low when the temporal dependence of the idiosyncratic component is negative. Furthermore, using the “differencing and recumulating” estimator implemented with the 2SKS procedure, named KSP in the graph, generates even smaller correlations, mainly when \(\gamma =-\,0.8.\) Note that, when the serial dependence of the idiosyncratic components is such that \(\gamma <0.5\), the variance of the differenced idiosyncratic component, \(\sigma _{e}^{2},\) is larger than the corresponding variance of the original component, \(\sigma _{\varepsilon }^{2}\); see, for example, Corona et al. (2017). Consequently, the performance of the procedures using data in first differences deteriorates in this case. However, if \(\gamma \ge 0.5, \) then \(\sigma _{e}^{2}<\sigma _{\varepsilon }^{2}\) and, consequently, the procedures based on “differencing and recumulating” may have advantages. Third, if the idiosyncratic noises are white noise, the 2SKS procedures implemented to raw data generate correlations which are always close to 1. Note that the two-step procedure proposed in this paper does a remarkably good job. Only when the cross-sectional and temporal dimensions are very large, the procedures based on first differences estimate factors with correlations close to one. Fourth, if the dependence of the idiosyncratic noises is positive, differencing or extracting the factors using the original non-stationary system yields similar correlations. Only when N and T are relatively small, differencing performs worse. Finally, when the idiosyncratic errors are non-stationary, i.e., \(\gamma =1,\) extracting the factors using differenced or original data yields similar moderate correlations. Only when N is very large, we observe the result established by the asymptotic theory with the procedures based on “differencing and recumulating” having correlations close to one while the non-consistent procedures based on original non-stationary data having smaller correlations.

The Box-plots in Fig. 1 help to understand the role of the dynamic dependence of the idiosyncratic noises on the performance of the alternative factor extraction procedures considered. In order to evaluate the effect of the variance of the disturbance of the idiosyncratic noises, Fig. 2 shows the Box-plots of the correlations of the common factor estimates and the simulated ones for model M1 with \(\gamma =-\,0.8\) and the same dimensions considered above and \(\sigma _{a}^{2}=0.1,\) 1 and 10. Note that if \(\sigma _{a}^{2}\) is small, then all procedures have correlations close to 1 regardless of the cross-sectional and temporal dimensions and whether they are based on first differences or original data. The deterioration of the procedures based on “differencing and recumulating” is already observed for \(\sigma _{a}^{2}=1\) with the exception of very large N and T. Finally, in Fig. 3, we study the role of the variance of the idiosyncratic noises when \(\gamma =1\). In this case, it is clearly better to take first differences to the original series. The performance of the procedures based on extracting factors from the original data is only reasonable when \(\sigma _{a}^{2}=0.1.\)

To evaluate the precision of the factor estimates and summarizing the results, we carry out a response surface analysis by regressing the sample correlation averages on the cross-sectional and temporal dimensions, N and T, and the temporal dependence and variance of the idiosyncratic noises, \( \gamma \) and \(\sigma _{a}^{2},\) for model M1 with homoscedastic, heteroscedastic and cross-correlated idiosyncratic noises. In the case of heteroscedastic idiosyncratic errors, the value of \(\sigma _{a}^{2}\) considered as regressor is the expected value of the variances for each idiosyncratic noise. The regression parameter estimates together with the corresponding standard errors and adjusted \(R^{2}\) are reported in Table 1. First, we can observe that the average correlation of the procedures based on “differencing and recumulating” is clearly smaller than that of the procedures implemented to original data. As above, we also observe that the correlations are similar among methods based on first differences and among methods based on original systems. Second, it is also clear that the correlations between the true factors and the rotated estimates obtained using procedures based on differenced data increase with \(\gamma ,\) the temporal dependence of the idiosyncratic noise. This result could be expected given that, as explained above, when \(\gamma <0.5,\) the variance of the differenced idiosyncratic component, \(\sigma _{e}^{2},\) is larger than the corresponding original variance, \( \sigma _{\varepsilon }^{2},\), and consequently, the recovery of the common factors is less precise. Furthermore, note that the increase in the correlations between true and rotated extracted factors is larger for KSL than for the PCL procedure, as expected given the flexibility of the Kalman filter to explicitly model the idiosyncratic dynamics. However, the correlations decrease with \(\gamma \) when the factor extraction procedures are implemented to original data. Third, increasing \(\sigma _{a}^{2}\) negatively affects factor extraction for all procedures. However, for the same reasons explained above, the effect of \(\sigma _{a}^{2}\) is less important if the factors are extracted using original non-stationary observations than when they are extracted using first-differenced data. Finally, Table 1 shows that the results are almost the same regardless of the particular specifications of the idiosyncratic components. It is remarkable that, for the particular specifications of the heteroscedasticity considered in this paper, the correlations between the true and rotated estimated factors obtained when the PCL and GPCE procedures are implemented are very similar.

Box-plots of the sample correlations between \(\{\hat{\delta }_{j}^{\prime } {\hat{F}}_{t}^{PCD}\}\), \(\{\hat{\delta }_{j}^{\prime }{\hat{F}} _{t}^{KSD}\}\), \(\{\hat{\delta }_{j}^{\prime }{\hat{F}}_{t}^{PCL}\}\) and \(\{\hat{\delta } _{j}^{\prime }{\hat{F}}_{t}^{KSL}\}\) with \(\{F_{t}\}\). We consider the \(N=50\) and \(T=100\) with \(\sigma _{a}^{2}=10\) and \(\gamma =-\,0.8\). First row plots M2 model, second row M3 model and third row M4 model. First column indicates the homoscedasticity, second column heteroscedasticity and third column cross-sectionally correlated idiosyncratic errors

Finally, we consider the three models with two factors. Figure 4 plots the Box-plots of the correlations across the Monte Carlo experiments between the true and rotated estimated common factors through the Monte Carlo experiments for models M2, M3 and M4 (by rows) with \(\sigma _{a}^{2}=10 \) and \(\gamma =-\,0.8\). In each case, we consider homoscedastic, heteroscedastic and cross-correlated idiosyncratic errors (by columns). The cross-sectional and temporal dimensions are \(N=50\) and \(T=100\). First of all, as far as the two factors are non-stationary, models M2 and M3, we can observe the same patterns as those described for the case of one single factor. However, when one factor is a random walk and the second factor is stationary, model M4, none of the procedures estimate this factor adequately. The results are drastically deteriorated when extracting the stationary common factor.Footnote 12 Finally, Fig. 5 plots the Box-plots of the correlations across Monte Carlo replicates when the idiosyncratic noise is I(1) and \(\sigma _{a}^{2}=1\). As expected, we can observe that the common factors are better extracted when we use first-differenced data.

Box-plots of the sample correlations between \(\{\hat{\delta }_{j}^{\prime } {\hat{F}}_{t}^{PCD}\}\), \(\{\hat{\delta }_{j}^{\prime }{\hat{F}} _{t}^{KSD}\}\), \(\{\hat{\delta }_{j}^{\prime }{\hat{F}}_{t}^{PCL}\}\) and \(\{\hat{\delta } _{j}^{\prime }{\hat{F}}_{t}^{KSL}\}\) with \(\{F_{t}\}\). We consider the \(N=50\) and \(T=100\) with \(\sigma _{a}^{2}=1\) and \(\gamma =1\). First row plots M2 model, second row M3 model and third row M4 model. First column indicates the homoscedasticity, second column heteroscedasticity and third column cross-sectionally correlated idiosyncratic errors

In the context of determination of the number of factors, Corona et al. (2017) conclude that if \(\varepsilon _{t}\) is stationary, with autoregressive parameters smaller than 0.5 while \(F_{t}\) is non-stationary, then overdifferencing the idiosyncratic components may introduce distortions on the determination of the number of factors given that the relation between the variances of the common and idiosyncratic components is modified with the variances of \(\Delta F_{t}\) decreasing and the variances of \(e_{t}\) increasing in relation to the variance of \(F_{t}\) and \(\varepsilon _{t}\), respectively. Recall as well that some procedures do not yield consistent estimates when the idiosyncratic noises are I(1).

4 Empirical Analysis

International or cross-border risk sharing focuses on the smoothing of consumption when a country is hit by an output shock. In an ideal world of perfect risk sharing, consumption should be insured. However, in practice, risk sharing is far from being full or complete and a percentage of GDP shocks are passed into consumption and not smoothed.

In a time series context, risk sharing has been traditionally addressed in the literature as a short-run issue and, consequently, analyzed within the context of stationary models. Nevertheless, more recently, some authors question this view and bring in the long-run perspective to the problem, although the results are not conclusive. For instance, Becker and Hoffmann (2006) and Pierucci and Ventura (2010) analyze risk sharing within a cointegration context. Artis and Hoffmann (2008, 2012) argue that risk sharing has increased at lower frequencies and relate their results to the permanent income hypothesis. On the contrary, Leibrecht and Scharler (2008), using cointegration techniques and vector error correction models, found that while consumption risk sharing in the short run was around 30%, it only accounts for a 10% in the long run. Fuleky et al. (2015) deviate from the usual homogeneous economies assumption. They provide country-specific measures of risk sharing both in the short and the long run and conclude that countries performing poorly in the short run, relative to other countries, also tend to have poor performance over the long run. As regards factor models, Del Negro (2002) implement a stationary DFM to disentangle movements in US state output and consumption due to national, regional or state-specific factors. Very recently, for capital flows, Byrne and Fiess (2016) apply non-stationary factor models to analyze the common and idiosyncratic elements in emerging markets’ capital inflows.

The variables in risk sharing are usually computed in deviations from the aggregate (see, among many others, the seminal paper by Asdrubali et al. 1996) even in the non-stationary framework (see, for instance, Becker and Hoffmann 2006; Artis and Hoffmann 2008, 2012, or Pierucci and Ventura 2010). The aim is to create the series of pure idiosyncratic or domestic shocks. Other than that, a common factor would pick up that consumption is generally 2/3 or more of GDP. These variables are usually known in the literature of risk sharing as idiosyncratic GDP and consumption, or GDP and consumption shocks. In order to avoid confusions with the idiosyncratic errors in the DFM literature, we will use the terms GDP and consumption shocks, respectively.

The economic interpretation of the common factor analysis in our model should be as follows. If there is full risk sharing, consumption and output shocks cannot share a common factor since these two variables should be orthogonal in an ideal case of complete risk sharing where, under certain assumptions, domestic consumption should be a constant fraction of the aggregate world output. Hence, lack of complete full risk sharing should be detected through commonalities between output and consumption shocks. If we can find non-stationary common factors among them, we could conclude that there is no risk sharing in the long run.

Our sample covers the following 21 industrialized OECD countries: Australia (AUS), Austria (AUT), Belgium (BEL), Canada (CAN), Denmark (DEN), Finland (FIN), France (FRA), Germany (DEU), Greece (GRC), Ireland (IRL), Italy (ITA), Japan (JPN), Netherlands (NLD), New Zealand (NZL), Norway (NOR), Portugal (PRT), Spain (ESP), Sweden (SWE), Switzerland (CHE), United Kingdom (GBR) and United States (USA). The data are annual observations of GDP and Consumption (C) from National Accounts and cover the time span 1960–2014 with \(N =42\) and \(T =55\). The main source of data is AMECO, the annual macroeconomic database of the European Commission’s Directorate General for Economic and Financial Affairs (DG ECFIN), which provides harmonized statistics on all of the variables required to perform the analysis. Real GDP and consumption corrected from power purchasing parity are converted into per capita variables. We build aggregate GDP and C for the set of countries included in the analysis following the weighting procedure described in Beyer et al. (2001), where the aggregation is performed directly on growth rates (first difference of logs) but using time-varying weights of countries that are given by their relative share in real GDP, in levels. The aggregate GDP and consumption growth rates are integrated to get the log of the aggregate variables. To define the idiosyncratic variables or gaps in log levels, we subtract the log of the aggregate from the log level of a specific country. The resulting gap could be interpret as the log of the percentage of a particular country GDP (consumption) over the aggregate variable (see Giannone and Reichlin 2006, for the same interpretation).

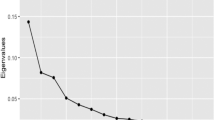

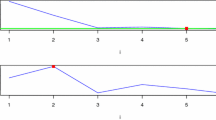

Unit root tests are performed for the GDP and consumption gaps for all countries and, overall, we can consider that the series are I(1). In order to determine the number of common factors, we implement the procedure proposed by Onatski (2010) and choose \(r =5\) regardless whether it is implemented to data in levels or first differences; see Corona et al. (2017) for a comparison on alternative procedures to determine the number of common factors in non-stationary DFMs.

Since we do not know if the idiosyncratic errors are stationary or not, we differentiate the data and extract 5 common factors using PCD. These 5 common factors explain 60\(\%\) of the total variability in the model with data in first differences. Then, we recumulate the extracted common factors and the specific components. We use PANIC to check if the idiosyncratic errors are non-stationary. We performed individual tests for each idiosyncratic error and the pooled test proposed by Bai and Ng (2004) where the pooled statistic of the log of the p-values (\(s_{i}\)) of the individual tests follows a standard normal distribution.

Both the individual tests over the idiosyncratic components as well as the pooled test (the S statistic was 0.19) indicate the idiosyncratic components are non-stationary. In this case, we have to choose any of the methods to extract the common factors that work with the data in first differences, since if the errors are non-stationary, the procedures that work with the data in levels do not yield to consistent estimates. This was reflected in our simulations by the low correlations between the generated common factors and the estimated ones.

The rationale for finding that the idiosyncratic errors are non-stationary should be as follows. A large part of the commonality has been removed when generating the data as the variables that enter into the model are already in deviations from the aggregate. This aggregate might proxy world comovements. Nevertheless, there are still strong correlations in the data that we remove through the common factors. If what it is left is non-stationary, as it might seem the case, it means that there are persistent movements that are generated internally and not shared among countries or due to interactions with third countries, as it might happen with the USA and Mexico. Another way of looking at this result is as follows: if after removing \(r_{1}\) non-stationary common factors, what is left is stationary, it means that we should find \(2N -r_{1}\) cointegrating relations among the data. This is not the case, and therefore, we conclude that in our model after removing r common factors (\(r_{1}\) being non-stationary), what is left is non-stationary as well.

We proceed using PCD to recover the common factors and the factor loadings. As mentioned before, we applied the “differencing and recumulating” method suggested by Bai and Ng (2004), although any method that works with the data in first differences could be used as well. We test how many of the common factors are non-stationary. The extracted sample factors in first differences are orthogonal as this condition is imposed for identification purposes; however, the recumulated common factors do not need to be orthogonal. Therefore, we test how many of the common factors are non-stationary using the variant of the test for common trends of Stock and Watson (1988) proposed by Bai and Ng (2004). Basically, the test consists of deciding how many of the eigenvalues of the first-order autoregressive matrix after correcting for serial correlation in the residuals are close enough to 1. The estimated eigenvalues are 0.66, 0.83, 0.90, 0.91 and 1.02. We cannot reject the null hypothesis of 5 common trends, even though the fifth eigenvalue is only 0.66. Since T is not so large, we can conclude that there are 5 common factors in the data and, at least, 4 of them are non-stationary factors.

The next step is to decide if the factor loadings are different from zero and if we find loadings different from zero associated with GDP and consumption for the same country. Since the factor loading matrix is the same for the model in first differences than for the model in levels, and in the model in levels the idiosyncratic errors are I(1), we perform inference about the factor loadings using the factor model in first differences (the asymptotic distribution of the loadings is given in Bai 2003).

We analyze the factor loadings of the first common factor (see Fig. 6) related to GDP. The factor loadings could be considered different from zero for all countries but Australia, Canada, Denmark, UK and Switzerland. It gives positive weights to the Anglo-Saxon countries (USA, CAN, GBR, NZL and AUS) although it can be only considered different from zero for USA and New Zealand while the weights have the opposite sign for the rest of European countries (other than the UK) and Japan. Within the last set, the highest, in absolute value, are given to Greece, Portugal and Spain followed by Japan. We can interpret it as a wealth factor.

Curious enough, Greece, Portugal and Spain (jointly with Ireland and Italy that also have significant factor loadings of the same sign) constitute the so called PIIGS group, peripheral European countries where risk sharing has collapsed during the last recession and subsequent sovereign debt crisis. Kalemki-Ozcan et al. (2014) point out that the governments of these countries did not save during the expansionary phases of the business cycle and were not able to borrow on the international markets during the crisis due to the high levels of outstanding public debt. Ireland is also included in this set although its case is slightly different, with government deficits related to banking failures; see Kalemki-Ozcan et al. (2014). This might be the reason why Ireland is included in this group instead of within the Anglo-Saxon countries. Japan has experienced a long-lasting recession and sluggish output growth since the early 1990s.

The factor loadings associated with consumption shocks seem to follow very closely those of output shocks, indicating lack of risk sharing. This interpretation should be in accordance with Becker and Hoffmann (2006) and Pierucci and Ventura (2010).

The second common factor gives the highest positive weight to New Zealand. On the negative side appears Japan. The next 2 common factors are devoted to separate Greece from other countries. Basically, the third common factor separates Greece from Portugal and the fourth one to separates Greece from Ireland and Norway. The fifth common factor loads on several countries and has a difficult interpretation.

There are \(21 \times 5=105\) loadings associated with each country for GDP and the same quantity associated with consumption. We find that when a loading is significant for GDP for one country, it is usually significant and of the same sign for consumption for the same country, indicating lack of risk sharing. Only in 27 out of the 105 possible cases, factor loadings were significant for one of the variables (GDP or consumption) and not for the other (which could be an indication of risk sharing). The fact that most of the common factors are non-stationary indicates that it is harder to find risk sharing in the long run than in the short run.

5 Conclusions

In this paper, we contribute to the literature on non-stationary DFMs in two different directions. First, we examine the finite sample performance of alternative factor extraction procedures when estimating non-stationary common factors in the context of large DFMs. We consider the case where the common factors are cointegrated with the observed series (and, therefore, the idiosyncratic errors are stationary) and the case where they are not (and, then, the idiosyncratic errors are non-stationary). Second, we fit a non-stationary DFM to analyze the existence of risk sharing among OECD industrialized economies.

With respect to the finite sample performance of factor extraction procedures, we first extend the results in Bai (2004), Bai and Ng (2010) on the behavior of PC when implemented to original non-stationary observations or to their fist differences by considering a larger range of situations about the properties of the underlying factors and/or about the idiosyncratic noises. As expected, we show that, when the idiosyncratic errors are non-stationary, PC based on estimating the common factors using non-stationary time series in levels do not perform well and that the procedures based on first differences should be used; see Bai and Ng (2008).

Furthermore, we extend the hybrid method by Doz et al. (2011) based on combining PC and Kalman smoothing, applying the technique to original non-stationary observations. We show that the finite sample properties of the hybrid method are very similar to those of the corresponding PC procedure both when they are applied to non-stationary levels and to their first differences. When the idiosyncratic errors are stationary, the methods based on levels have a clear advantage while if the errors are non-stationary, the approaches based on estimating the common factors using the levels do not perform well and the procedures based on first differences should be used.

Finally, the empirical application shows that for a non-stationary system of 21 OECD industrialized economies, at least four common factors are non-stationary, such that consumption and GDP share common trends. Furthermore, we apply PANIC to the estimated idiosyncratic errors, concluding that this component is non-stationary. Hence, these facts suggest the lack of full risk sharing in the short but specially in the long run.

From a policy point of view, there is a need of measures to increase the resilience of the different economies to output shocks. Of course, these measures can be different depending on whether we focus on long-run or short-run consumption smoothing.

The nature of shocks is usually linked in most economic theories with different types of shocks: permanent shocks are usually related to the supply side while transitory or business cycle shocks are usually linked to the demand side. Policy measures, if automatic stabilizers do not work out, should be of different nature: structural in case of permanent shocks and discretionary in the case of transitory shocks. If, however, we should rely on automatic stabilizers, those should also work through different channels depending on the nature of shocks. Becker and Hoffmann (2006) and Artis and Hoffmann (2008) point out that insurance against permanent shocks requires ex-ante diversification which is generally only possible through state-contingent assets such as equities, whereas transitory variation in income can also be smoothed ex-post through borrowing and lending, for instance, through loans.

Notes

In this paper, we focus on DFMs without deterministic trends. In this case, both approaches are equivalent.

Other authors dealing with non-stationary DFMs are Eickmeier (2009), who analyze the comovements and heterogeneity in the euro area by fitting a non-stationary DFM similar to Bai and Ng (2004), augmented with a structural factor setup from Forni and Reichlin (1998). Also, Bai and Ng (2004) extend the results of Bai and Ng (2004), and Forni et al. (2014) who evaluate the role of news shocks in generating the business cycle. Finally, Choi (2017) extends the Generalized PC estimator (GPCE) to the case of unit roots in the common factors, deriving the asymptotic distribution of the common factors and factor loadings. He shows that the GPCE is more efficient than the traditional PC estimator.

In this paper, we focus on non-stationary DFMs based on time domain. For non-stationary DFMs based on frequency domain, see Eichler et al. (2011).

In independent work, Barigozzi and Luciani (2017) also propose a generalization of Doz et al. (2011, 2012) to the non-stationary case. They show empirically that the 2SKS extraction is more efficient than integrating the PC estimator of the first differences of the factors. However, they do not consider the comparison with recumulating the 2SKS estimates.

From the point of view of financial flows, Byrne and Fiess (2016) use PANIC for capital inflows in emerging markets. These inflows are part of the capital markets for risk sharing according to the traditional channel decomposition of Asdrubali et al. (1996). However, they do not check the effects on consumption smoothing.

Onatski (2012) considers a DFM in which the explanatory power of the factors does not strongly dominate the explanatory power of the idiosyncratic components.

Doz et al. (2012) propose iterating the 2SKS procedure until convergence is achieved in terms of two consecutive log-likelihood values.

Barigozzi and Luciani (2017) propose an alternative extension in which, in order to isolate common trends and stationary factors, they use a nonparametric approach which identifies the common trends as those linear combinations of the factors obtained by the leading eigenvectors of a transformation of the long-run covariance matrix as proposed by Peña and Poncela (2006), Pan and Yao (2008), Lam et al. (2011) and Zhang et al. (2018).

Koopman (1997) gives an exact solution for the initialization of the Kalman filter and smoothing for state- space models with diffuse initial conditions.

Alternatively, we generate artificial systems by model M1 where the temporal dependence of the idiosyncratic errors is \(\Gamma =\text {diag} (-\,0.8I_{N/2},1I_{N/2})\) and \(\Gamma =\text {diag}(0I_{N/2},0.7I_{N/2})\). The results are very similar to those when all idiosyncratic errors have the same dependence with \(\gamma =-\,0.8\) and \(\gamma =0,\) respectively. It seems that the results are driven by the smallest temporal dependence among the idiosyncratic noises. These results are available upon request.

Note that, in the context of the DFM considered in this paper, the Monte Carlo results for the procedure proposed by Barigozzi et al. (2016) (BLL) are almost identical to those obtained by the procedure proposed by Bai and Ng (2004). Results from the GPCE and BLL procedures are available from the authors upon request.

The results are similar even if the cross-sectional and temporal dimensions are increased.

References

Artis, M. J., & Hoffmann, M. (2008). Financial globalization, international business cycles and consumption risk sharing. Scandinavian Journal of Economics, 110(3), 447–471.

Artis, M. J., & Hoffmann, M. (2012). The home bias, capital income flows and improved long-term consumption risk sharing between industrialized countries. International Finance, 14(3), 481–505.

Asdrubali, P., Sorensen, B., & Yosha, O. (1996). Channels of interstate risk sharing: United States 1963–1990. The Quarterly Journal of Economics, 111(4), 1081–1110.

Bai, J. (2003). Inferential theory for factor models of large dimensions. Econometrica, 71(1), 135–171.

Bai, J. (2004). Estimating cross-section common stochastic trends in nonstationary panel data. Journal of Econometrics, 122(1), 137–183.

Bai, J., & Ng, S. (2002). Determining the number of factors in approximate factor models. Econometrica, 70(1), 191–221.

Bai, J., & Ng, S. (2004). A PANIC attack on unit roots and cointegration. Econometrica, 72(4), 1127–1177.

Bai, J., & Ng, S. (2008). Large dimensional factor analysis. Foundations and Trends in Econometrics, 3(2), 89–163.

Bai, J., & Ng, S. (2010). Panel unit root tests with cross-section dependence: A further investigation. Econometric Theory, 26, 1088–1114.

Bai, J., & Ng, S. (2013). Principal components estimation and identification of static factors. Journal of Econometrics, 176, 18–29.

Bai, J., & Wang, P. (2014). Identification theory for high dimensional static and dynamic factor models. Journal of Econometrics, 178(2), 794–804.

Bai, J., & Wang, P. (2016). Econometric analysis of large factor models. Annual Review of Economics, 8, 53–80.

Banerjee, A., Marcellino, M., & Masten, I. (2014). Forecasting with factor-augmented error correction models. International Journal of Forecasting, 30(3), 589–612.

Banerjee, A., Marcellino, M., & Masten, I. (2017). Structural factor error correction models: Cointegration in large-scale structural FAVAR models. Journal of Applied Econometrics, 32(6), 1069–1086.

Barigozzi, M., Lippi, M., & Luciani, M. (2016). Non-stationary dynamic factor models for large datasets. Finance and Economics Discussion Series Divisions of Research & Statistics and Monetary Affairs Federal Reserved Board, 024, Washington, DC.

Barigozzi, M., Lippi, M., & Luciani, M. (2017). Dynamic factor models, cointegration, and error correction mechanisms. Working paper. arXiv:1510.02399v3.

Barigozzi, M., & Luciani, M. (2017). Common factors, trends, and cycles in large datasets. Manuscript.

Becker, S., & Hoffmann, M. (2006). Intra- and international risk-sharing in the short run and the long run. European Economic Review, 50(6), 777–806.

Beyer, A., Doornik, J., & Hendry, D. (2001). Constructing historical Euro-zone data. The Economic Journal, 111(469), 102–121.

Box, G. E. P., & Tiao, G. C. (1977). A canonical analysis of multiple time series. Biometrika, 64, 355–365.

Breitung, J., & Choi, I. (2013). Factor models. In N. Hashimzade & M. A. Thorton (Eds.), Handbook of research methods and applications in empirical macroeconomics. Cheltenham: Edward Elgar.

Breitung, J., & Eickmeier, S. (2006). Dynamic factor models. In O. Hubler & J. Frohn (Eds.), Modern econometric analysis. Berlin: Springer.

Burridge, P., & Wallis, K. F. (1985). Calculating the variance of seasonally adjusted series. Journal of the American Statistical Association, 80, 541–552.

Byrne, J., & Fiess, N. (2016). International capital flows to emerging markets: National and global determinants. Journal of International Money and Finance, 61, 82–100.

Canova, F. (1998). Detrending and business cycle facts. Journal of Monetary Economics, 41, 475–512.

Choi, I. (2017). Efficient estimation of nonstationary factor models. Journal of Statistical Planning and Inference, 183, 18–43.

Corona, F., Poncela, P., & Ruiz, E. (2017). Determining the number of factors after stationary univariate transformations. Empirical Economics, 53, 351–372.

Del Negro, M. (2002). Asymmetric shocks among U.S. states. Journal of International Economics, 56, 273–297.

Doz, C., Giannone, D., & Reichlin, L. (2011). A two step estimator for large approximate dynamic factor models. Journal of Econometrics, 164(1), 188–205.

Doz, C., Giannone, D., & Reichlin, L. (2012). A quasi maximum likelihood approach for large, approximate dynamic factor models. The Review of Economics and Statistics, 94(4), 1014–1024.

Eichler, M., Motta, G., & von Sachs, R. (2011). Fitting dynamic factor models to non-stationary time series. Journal of Econometrics, 163(1), 51–70.

Eickmeier, S. (2009). Comovements and heterogeneity in the Euro area in a non-stationary dynamic factor model. Journal of Applied Econometrics, 24, 933–959.

Engel, C., Mark, N., & West, K. (2015). Factor model forecasts of exchange rates. Econometric Reviews, 34(1–2), 32–55.

Escribano, A., & Peña, D. (1994). Cointegration and common factors. Journal of Time Series Analysis, 15(6), 577–586.

Forni, M., Gambetti, L., & Sala, L. (2014). No news in business cycles. Economic Journal, 124, 1168–1191.

Forni, M., Giannone, D., Lippi, M., & Reichlin, L. (2009). Opening the black box: Structural factor models versus structural VARs. Econometric Theory, 25, 1319–1347.

Forni, M., & Reichlin, L. (1998). Let’s get real: A factor analytical approach to disaggregated business cycle dynamics. Review of Economic Studies, 65(3), 453–473.

Fuleky, P., Ventura, L., & Zhao, Q. (2015). International risk sharing in the short and in the long run under country heterogeneity. International Journal of Finance and Economics, 20, 374–384.

Geweke, J. (1977). The dynamic factor analysis of economic time series. In D. J. Aigner & A. S. Goldberger (Eds.), Latent variables in socio-economic models. Amsterdam: North-Holland.

Giannone, D., & Reichlin, L. (2006). Does information help recovering structural shocks from past observations? Journal of the European Economic Association, 4(2–3), 455–465.

Gonzalo, J., & Granger, C. W. J. (1995). Estimation of common long-memory components in cointegrated systems. Journal of Business & Economic Statistics, 13(1), 27–35.

Greenway-McGrevy, R., Mark, N., Sul, D., & Wu, J.-L. (2018). Identifying exchange rate common factors. International Economic Review, 59, 2193–2218.

Harvey, A. C. (1989). Forecasting structural time series models and the Kalman filter. Cambridge: Cambridge University Press.

Harvey, A. C., & Phillips, G. (1979). Maximum likelihood estimation of regression models with autoregressive-moving average disturbances. Biometrika, 152, 49–58.

Johansen, S. (1991). Estimation and hypothesis testing of cointegration vectors in Gaussian vector autoregressive models. Econometrica, 59(6), 1551–1580.

Kalemki-Ozcan, S., Luttini, E., & Sensen, B. (2014). Debt crises and risk-sharing: The role of markets versus sovereigns. Scandinavian Journal of Economics, 116(1), 253–276.

Kapetanios, G. (2010). A testing procedure for determining the number of factors in approximate factor models with large datasets. Journal of Business & Economic Statistics, 28(3), 397–409.

Koopman, S. (1997). Exact initial Kalman filtering and smoothing for nonstationary time series models. Journal of the American Statistical Association, 92(440), 1630–1638.

Lam, C., Yao, Q., & Bathia, N. (2011). Estimation of latent factors using high-dimensional time series. Biometrika, 98(4), 901–918.

Leibrecht, M., & Scharler, J. (2008). Reconsidering consumption risk sharing among OECD countries: Some evidence based on panel cointegration. Open Economies Review, 19(4), 493–505.

Lucas, R. J. (1987). Models of business cycles. Oxford: Basil Blackwell.

Moon, H. R., & Perron, B. (2004). Testing for a unit root in panels with dynamic factors. Journal of Econometrics, 122(1), 81–126.

Onatski, A. (2010). Determining the number of factors from empirical distribution of eigenvalues. The Review of Economics and Statistics, 92(4), 1004–1016.

Onatski, A. (2012). Asymptotics of the principal components estimator of large factor models with weakly influential factors. Journal of Econometrics, 168, 244–258.

Pan, J., & Yao, Q. (2008). Modelling multiple time series via common factors. Biometrika, 95(1), 365–379.

Peña, D., & Poncela, P. (2006). Non-stationary dynamic factor analysis. Journal of Statistical Planning and Inference, 136(1), 237–257.

Pierucci, E., & Ventura, L. (2010). Risk sharing: A long run issue? Open Economies Review, 21(5), 705–730.

Poncela, P., & Ruiz, E. (2016). Small versus big data factor extraction in dynamic factor models: An empirical assessment. In E. Hillebrand & S. J. Koopman (Eds.), Advances in econometrics (Vol. 35, pp. 401–434). Emerald: Bingley.

Quah, D., & Sargent, T. J. (1993). A dynamic index model for large cross-sections. In J. H. Stock & M. W. Watson (Eds.), Business cycles, indicators and forecasting. Chicago: University of Chicago Press.

Sargent, T. J., & Sims, C. A. (1977). Business cycle modeling without pretending to have too much a priori economic theory. In C. A. Sims (Ed.), New methods in business cycle research. Minneapolis: Federal Reserve Bank of Minneapolis.

Seong, B., Ahn, A. K., & Zadrozny, P. A. (2013). Estimation of vector error correction models with mixed-frequency data. Journal of Time Series Analysis, 34, 194–205.

Sims, C. A. (2012). Comments and discussion: Disentangling the channels of the 2007–2009 recession. Brooking Papers on Economic Activity, Spring 2012, 141–148.

Stock, J. H., & Watson, M. W. (1988). Testing for common trends. Journal of the American Statistical Association, 83(1), 1097–1107.

Stock, J. H., & Watson, M. W. (1989). New indexes of coincident and leading economic indicators. In O. J. Blanchard & S. Fischer (Eds.), NBER macroeconomics Annual 1989. Cambridge: MIT Press.

Stock, J. H., & Watson, M. W. (2002). Forecasting using principal components from a large number of predictors. Journal of the American Statistical Association, 97(460), 1167–1179.

Stock, J. H., & Watson, M. W. (2011). Dynamic factor models. In M. P. Clements & D. F. Hendry (Eds.), Oxford handbook of economic forecasting. Oxford: Oxford University Press.

Stock, J. H., & Watson, M. W. (2012). Disentangling the channels of the 2007–2009 recession. Brooking Papers on Economic Activity, Spring 2012, 81–130.

Vahid, F., & Engle, R. F. (1993). Common trends and common cycles. Journal of Applied Econometrics, 81(1), 341–360.

Zhang, R., Robinson, P., & Yao, Q. (2018) Identifying cointegration by eigenanalysis. Journal of the American Statistical Association. https://doi.org/10.1080/01621459.2018.1458620.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Financial support from the Spanish Government Projects ECO2015-70331-C2-1-R and ECO2015-70331-C2-2-R (MINECO/FEDER) is gratefully acknowledged. This paper was started while Pilar Poncela was still at Universidad Autónoma de Madrid. We are very grateful for the detailed comments of an anonymous referee which have been very useful to improve the presentation of this paper. The views expressed in this paper are those of the authors and should not be attributed neither to the European Commission nor to INEGI.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Corona, F., Poncela, P. & Ruiz, E. Estimating Non-stationary Common Factors: Implications for Risk Sharing. Comput Econ 55, 37–60 (2020). https://doi.org/10.1007/s10614-018-9875-9

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10614-018-9875-9