Abstract

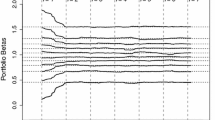

The Substantial-Gain–Loss-Ratio (SGLR) was developed to overcome some drawbacks of the Gain–Loss-Ratio (GLR) as proposed by Bernardo and Ledoit (J Polit Econ 108(1):144–172, 2000). This is achieved by slightly changing the condition for a good deal, namely on the most extreme but at the same time very small part of the state space. As an empirical performance measure, the SGLR can naturally handle outliers and is not easily manipulated. Additionally, the robustness of performance is illuminated via so-called \(\beta \)-diagrams. In the present paper we propose an algorithm for the computation of the SGLR in empirical applications and discuss its potential use for theoretical models as well. Finally, we present two exemplary applications of an SGLR analysis to historical returns.

Notes

For a detailed discussion see Voelzke (2015).

Index data for DAX and S&P500 were provided by Thomson Reuters.

For the model parameters \(R_{f},E(R^{\textit{DAX}})\) and \(Var(R^{\textit{DAX}})\) we use empirical values, based on data starting in 1972. E.g. the risk-free rate is implied by the average normalized monthly returns of the 3-month T-bill for the last 43 years.

Cf. Cochrane (2001, p. 139).

A risk aversion parameter of 50 should not be considered realistic, but such a value is necessary to match stylized asset pricing facts in that model. Cf. Cochrane (2001, p. 24).

Consumption data are taken from Statistisches Bundesamt (2015, p. 8).

Here this is mainly driven by the extreme SDF values in some years, which are due to the unrealistic choice of \(\gamma \).

To be precise, one has to assure that \(\tilde{M}'\) is a measurable function of \(\tilde{M}, \tilde{X}\) and U. This can be shown by considering simple functions (step functions) which approximate \(\tilde{M}'\).

References

Bernardo, A., & Ledoit, O. (2000). Gain, loss, and asset pricing. Journal of Political Economy, 108(1), 144–172.

Biagini, S., & Pinar, M. (2013). The best gain–loss ratio is a poor performance measure. SIAM Journal on Financial Mathematics, 4, 228–242.

Cherny, A., & Madan, D. (2008). New measures for performance evaluation. The Review of Financial Studies, 22(7), 2571–2606.

Cochrane, J. (2001). Asset pricing. Princeton: Princeton University Press.

Statistisches Bundesamt (2015). Volkswirtschaftliche Gesamtrechnung - Private Konsumausgaben und verfügbares Einkommen. Beiheft zur Fachserie, 18.

Voelzke, J. (2015). Weakening the gain–loss-ratio measure to make it stronger. Finance Research Letters, 12, 58–66.

Acknowledgements

We thank Sascha Rüffer for his comprehensive editing of the manuscript. Furthermore, we thank Fabian Gößling for many helpful comments and discussions.

Appendix

Proof of Lemma 1

There is a sequence \((M_n)_{n \in \mathbb {N}} \subset \mathrm {SDF}_\beta (M)\) such that \(\lim _{n \rightarrow \infty } \mathbb {E}(M_nX)^+ / \mathbb {E}(M_nX)^- = \mathrm {SGLR}_\beta ^M(X)\). Since \(\mathrm {Var}(M_n) \le \mathrm {Var}(M)+\beta \), the sequence is bounded in \(L^2\). Thus, the sequence \(\mu _n:=P((M,M_n,X) \in \cdot )\) is tight. Hence, it is weakly compact, i.e. there is a subsequence \((M,M_{k_n}, X)_{n \in \mathbb {N}}\) which converges in law to a triple of random variables \((\tilde{M}, M^*, \tilde{X})\) which satisfies that \((\tilde{M}, \tilde{X})\) has the same law as (M, X) and

By the Portmanteau theorem,

Upon possibly extending the underlying probability space, a copy of \(M^*\) can be realized on the same probability space as M, hence becoming an element of \(\mathrm {SDF}_\beta (M)\).

Now assume (A1). For any \(M' \in \mathrm {SDF}_\beta ^+(M)\), using that \(P(X=x_j)=1/T\) for each j,

This shows that the Gain–Loss-Ratio depends on \(M'\) only through the values \(\mathbb {E}[M' |X=x_j]\), \(j=1, \ldots , T\). This can be further separated into (assuming that the conditions have nonzero probability)

In order to optimize \(M'\) in the sense of a minimal Gain–Loss-Ratio, one has to decrease its value at large positive \(x_j\) and / or increase its value at large negative \(x_j\), with the constraints on expectation and variance. It follows from the formula of total variance that the variance of \(M'\) is minimal if it holds \(M'=\mathbb {E}[M' | X=x_j, M' \ne M]\) on the sets \(\{X=x_j, M'\ne M\}\), compared to the case where \(M'\) is not constant on these sets. By the above considerations, the Gain–Loss-Ratio remains the same irrespective of whether \(M'\) is constant or not. Hence, the optimal \(M'\) is constant on such sets.
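The two observations above (the Gain–Loss-Ratio is unchanged by averaging, while the variance can only decrease) can be checked numerically. The following is a minimal sketch in Python, not the authors' implementation. It assumes a toy sample with three equally likely payoffs, each split into two equally likely sub-states, and, as a simplification, it averages over the whole cell \(\{X=x_j\}\) rather than over \(\{X=x_j, M' \ne M\}\). The function names `gain_loss_ratio` and `cell_average` and all numbers are hypothetical.

```python
import numpy as np

def gain_loss_ratio(m, x):
    """Empirical E[(mX)^+] / E[(mX)^-] with equally weighted states."""
    mx = np.asarray(m, dtype=float) * np.asarray(x, dtype=float)
    return np.maximum(mx, 0.0).mean() / np.maximum(-mx, 0.0).mean()

def cell_average(m, x):
    """Replace m by its average on each cell {X = x_j} (illustrative simplification)."""
    m = np.asarray(m, dtype=float)
    x = np.asarray(x, dtype=float)
    out = np.empty_like(m)
    for value in np.unique(x):
        mask = x == value
        out[mask] = m[mask].mean()
    return out

# Toy data (assumed): each payoff value occupies two equally likely sub-states.
x = np.array([-0.02, -0.02, 0.01, 0.01, 0.03, 0.03])
# A nonnegative candidate SDF with mean one that is not constant on the cells.
m_raw = np.array([1.4, 1.0, 1.1, 0.9, 0.5, 1.1])
m_avg = cell_average(m_raw, x)

glr_raw = gain_loss_ratio(m_raw, x)
glr_avg = gain_loss_ratio(m_avg, x)
var_raw = m_raw.var()
var_avg = m_avg.var()

print(f"GLR:      raw={glr_raw:.4f}  averaged={glr_avg:.4f}")
print(f"Variance: raw={var_raw:.4f}  averaged={var_avg:.4f}")
```

In this toy case both versions give the same ratio, while the cell-averaged version has the smaller variance, which is the slack that the formula of total variance makes available.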

Observe furthermore that the value in (2) depends only on the probabilities of the sets \(\{X=x_j, M' \ne M\}\), not on their explicit realisation as subsets of \(\varOmega \). Thus, the following defines an (M, X, U)-measurable random variable which is an element of \(\mathrm {SDF}_\beta ^+(M)\) and has the same Gain–Loss-Ratio as \(M^*\). Let \(p_j:=P(X=x_j, M^* \ne M)\), \(m_j^*:= \mathbb {E}[M^* \, | \, X=x_j, M^* \ne M]\) and define

Turning to the last assertion, we may as before choose a sequence \(\big ((m_i^n)_{i=1}^{Tk}\big )_n\) such that the associated Gain–Loss-Ratios converge to the minimal one. Since each \(m_i^n \ge 0\) and \(\frac{1}{Tk}\sum _{i=1}^{Tk} m_i^n =1\), the numbers \(m_i^n\) are uniformly bounded (by Tk), and hence there is a convergent subsequence, the limit of which we denote by \((m_i^*)_{i=1}^{Tk}\). It is then readily checked that \((m_i^*) \in d\mathrm {SDF}_\beta ^+(M)\), and that

\(\square \)
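To make the constraints used in this proof concrete, the following Python sketch checks whether a discrete candidate is admissible and compares Gain–Loss-Ratios. It is an illustration under stated assumptions, not the paper's algorithm: the admissibility conditions are reconstructed only from the constraints named in the proofs (nonnegativity, mean one, changed mass at most \(\beta \), variance at most \(\mathrm {Var}(M)+\beta \)), and the helper names `is_admissible` and `gain_loss_ratio` as well as the data are hypothetical.

```python
import numpy as np

def gain_loss_ratio(m, x):
    """Empirical E[(mX)^+] / E[(mX)^-] with equally weighted states."""
    mx = np.asarray(m, dtype=float) * np.asarray(x, dtype=float)
    return np.maximum(mx, 0.0).mean() / np.maximum(-mx, 0.0).mean()

def is_admissible(m_new, m, beta, tol=1e-9):
    """Check the constraints named in the proofs (an assumed reconstruction)."""
    m_new = np.asarray(m_new, dtype=float)
    m = np.asarray(m, dtype=float)
    nonnegative = bool(np.all(m_new >= 0.0))
    mean_one = abs(m_new.mean() - 1.0) <= tol
    # Fraction of equally likely states on which the candidate differs from m.
    changed_mass = np.mean(~np.isclose(m_new, m))
    mass_ok = changed_mass <= beta + tol
    variance_ok = m_new.var() <= m.var() + beta + tol
    return nonnegative and mean_one and mass_ok and variance_ok

# Toy data (assumed): ten equally likely payoffs and a constant reference SDF.
x = np.array([-0.05, -0.02, -0.01, 0.00, 0.01, 0.01, 0.02, 0.02, 0.03, 0.06])
m = np.ones_like(x)
beta = 0.2

# Candidate: lower the SDF at the largest gain, raise it at the largest loss.
m_new = m.copy()
m_new[np.argmax(x)] = 0.5
m_new[np.argmin(x)] = 1.5

# Counterexample: three changed states exceed the allowed mass of 0.2.
m_bad = m.copy()
m_bad[[0, 8, 9]] = [1.5, 0.75, 0.75]

glr_old = gain_loss_ratio(m, x)
glr_new = gain_loss_ratio(m_new, x)
print(is_admissible(m_new, m, beta), is_admissible(m_bad, m, beta))
print(f"GLR before: {glr_old:.4f}, after: {glr_new:.4f}")
```

The admissible candidate changes only two of ten states yet lowers the ratio noticeably, which mirrors the idea that the optimization acts on a small, extreme part of the state space.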

Now we are in a position to prove Proposition 1.

Proof of Proposition 1

Observe that \(d\mathrm {SDF}_{\beta ,k}^+(M) \subset \mathrm {SDF}_\beta ^+(M)\) (with the obvious identifications), hence it suffices to show that

where \(M^*\) is given by Lemma 1. Since (A1) holds, \(M^*\) is constant a.s. on each of the sets \(\{M^* \ne M, X=x_i\}\), \(i=1, \ldots , T\), i.e. there are \(m_i^*\), \(i=1, \ldots , T\), such that

Now the right hand side can easily be approximated by a sequence in \(d\mathrm {SDF}_{\beta ,k}\) of increasing fineness k. \(\square \)
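The approximation step can be illustrated with a short Python sketch. It is a hedged illustration rather than the construction used in the paper: it assumes that fineness k means splitting each of the T observations into k sub-states of mass \(1/(Tk)\), and that a set of probability \(p_j\) inside \(\{X=x_j\}\) is approximated from below by whole sub-states. The helper `approximate_mass` and the numbers are hypothetical.

```python
import math
from fractions import Fraction

def approximate_mass(p, T, k):
    """Largest multiple of 1/(T*k) not exceeding p (whole sub-states only)."""
    substates = math.floor(p * T * k)
    return Fraction(substates, T * k)

T = 4                      # number of observations (assumed)
p = Fraction(7, 100)       # target mass p_j, must not exceed 1/T

errors = {}
for k in (1, 10, 100, 1000):
    approx = approximate_mass(p, T, k)
    errors[k] = p - approx
    print(f"k={k:5d}  approx={float(approx):.5f}  error={float(errors[k]):.5f}")
```

Under these assumptions the error is never larger than \(1/(Tk)\), so it vanishes as k grows.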

Proof of Lemma 2

Assume the converse, i.e.,

Thus there is \(i \in I\) and \(j \in \{1, \ldots , Tk\} \setminus I\) such that j dominates i. Then define for \(1\le r \le Tk\)

Hence \(m^{new}\in {\textit{dSDF}}_{\beta ,k}(m)\) and a case-by-case consideration shows that

which contradicts the optimality of \(m^*\). \(\square \)

Proof of Lemma 3

In order to minimize the Gain–Loss-Ratio, we have to decrease M on the set \(\{X>0\}\) and increase M on the set \(\{X<0\}\). The total mass of the set where we change M is restricted to \(\beta \), hence we cannot do better than changing M on both a subset \(A^+\) of \(\{X>0\}\) and a subset \(A^-\) of \(\{X <0\}\), each of which has mass \(\beta \). Obviously, changing M has the most effect on the subsets as described above. Since M has to remain nonnegative, the optimal choice on \(A^+\) is \(M'=0\). To justify the choice on \(A^-\), we take into account the restriction on the variance, \(\mathrm {Var}(M') {\mathop {\le }\limits ^{!}} \mathrm {Var}(M) + \beta \). Increasing the value of M “away” from its expectation by 1 on a set of mass \(\beta \) increases the variance by \(\beta \). But one has to take into account that at the same time, on a different set, the value of M is brought closer to its expectation. This might reduce the variance by at most \((m_0-1)^{1/2} \beta \). This difference can be “invested” on \(A^+\), too. Finally, since we increase uniformly on the set \(A^-\), we have to bound X by \(-x_0\). \(\square \)

Proof of Lemma 4

This follows from similar considerations as in the proof of Lemma 3. \(\square \)

Proof of Lemma 5

Choose a sequence \((M'_{T_n})_{n \in \mathbb {N}}\), such that \(M'_{T_n}\) is an element of \(\mathrm {SDF}_\beta ^+(M_{T_n})\) for each n and

This can be done by a diagonal argument: for each fixed T there is a sequence in \(\mathrm {SDF}_\beta ^+(M_T)\) such that the associated Gain–Loss-Ratio converges to \(\mathrm {SGLR}_\beta ^{M_T}(X_T)\) and there is a subsequence of \(\big (\mathrm {SGLR}_\beta ^{M_T}(X_T)\big )_T\) that converges to \(\underline{C}\).

The sequence \((M'_{T_n}, M_{T_n}, X_{T_n})_{n \in \mathbb {N}}\) is tight: \((M_{T_n}, X_{T_n})\) converges in \(L^2\) and \(\mathrm {Var}(M'_{T_n}) \le \mathrm {Var}(M_{T_n})+\beta \), hence the sequence \((M'_{T_n})_{n \in \mathbb {N}}\) is bounded in \(L^2\) as well. Thus, it is weakly compact, i.e. there are random variables \((\tilde{M}', \tilde{M}, \tilde{X})\) and a subsequence \((t_n)_{n \in \mathbb {N}}\) of \((T_n)_{n \in \mathbb {N}}\), such that

Since \((M_T, X_T)\) converges in law to (M, X), it follows that \((\tilde{M}, \tilde{X})\) has the same law as (M, X). Moreover, the Portmanteau theorem for weak convergence (applied to the closed set \(\{0\}\) and the open set \((-\infty ,0)\), resp.) yields that

Moreover, the \(L^2\) convergence implies

Thus, \(\tilde{M}' \in \mathrm {SDF}_\beta ^+(\tilde{M})\) (see the last entry under Notes for the measurability requirement). Consequently, since \((\tilde{M}, \tilde{X})\) has the same law as (M, X),

\(\square \)

Cite this article

Voelzke, J., Mentemeier, S. Computing the Substantial-Gain–Loss-Ratio. Comput Econ 54, 613–624 (2019). https://doi.org/10.1007/s10614-018-9845-2

DOI: https://doi.org/10.1007/s10614-018-9845-2