Abstract

The potential impacts of sea level rise (SLR) due to climate change have been widely studied in the literature. However, the uncertainty and robustness of these estimates has seldom been explored. Here we assess the model input uncertainty regarding the wide effects of SLR on marine navigation from a global economic perspective. We systematically assess the robustness of computable general equilibrium (CGE) estimates to model’s inputs uncertainty. Monte Carlo (MC) and Gaussian quadrature (GQ) methods are used for conducting a Systematic sensitivity analysis (SSA). This design allows to both explore the sensitivity of the CGE model and to compare the MC and GQ methods. Results show that, regardless whether triangular or piecewise linear Probability distributions are used, the welfare losses are higher in the MC SSA than in the original deterministic simulation. This indicates that the CGE economic literature has potentially underestimated the total economic effects of SLR, thus stressing the necessity of SSA when simulating the general equilibrium effects of SLR. The uncertainty decomposition shows that land losses have a smaller effect compared to capital and seaport productivity losses. Capital losses seem to affect the developed regions GDP more than the productivity losses do. Moreover, we show the uncertainty decomposition of the MC results and discuss the convergence of the MC results for a decomposed version of the CGE model. This paper aims to provide standardised guidelines for stochastic simulation in the context of CGE modelling that could be useful for researchers in similar settings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Sea-level rise (SLR) is one of the most studied impacts of climate change within the environmental economics literature. Researchers have used, among other methods, computable general equilibrium (CGE) models to assess the wider economic implications of SLR for a number of different climate change scenarios (Bosello et al. 2007, 2012a, b; Darwin and Tol 2001; Deke et al. 2001). An extension to the aforementioned models includes the assessment of the broader economic impacts of SLR-induced coastal land and capital losses and their effect on sea transportation networks (Chatzivasileiadis et al. 2016).

Climate change-induced transportation disruptions, through productivity losses in sea transport, can have a substantial effect on the global economy. Productivity losses affect the transportation costs which in turn may increase the market prices of transport–intensive products. Based on the IPCC RCP8.5 scenario, Chatzivasileiadis et al. (2016) show that climate change-induced transportation disruptions including coastal land and capital losses, could causes global welfare losses of circa USD 61 billion in 2050.

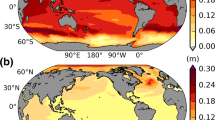

Most of the preceding studies have used CGE models to look at the economic implications of SLR using input data from a variety of sources to estimate the exogenous shock to the economy caused by SLR. Land loss is the most used link between SLR and changes in economic activity. In models such as the dynamic interactive vulnerability assessment (DIVA) model (Hinkel 2005; Hinkel et al. 2013, 2014; Vafeidis et al. 2008), land loss is estimated as a function of SLR. Based on the IPCC RCP8.5 scenario, the expected mean SLR in cm by the year 2100 is 74 cm with a range of [52, 98] relative to the mean over 1986–2005 (IPCC 2013). This range of almost half a meter reflects the level of uncertainty regarding the estimates of SLR. Consequently, land loss estimates and economic assessments connected to those SLR values will suffer from a much higher level of uncertainty that gets compounded in the different modelling stages.

A well-documented approach to address input uncertainty within a CGE model consists in performing a systematic sensitivity analysis (SSA). In order to perform a SSA we make a set of explicit assumptions on the probability distribution of the exogenous inputs for which uncertainty is high (Arndt 1996; Arndt and Pearson 1996; DeVuyst and Preckel 1997; Horridge et al. 2011). The end goal of this process is to estimate the statistical moments of the model outputs that are driven by the underlying uncertainties of the exogenous model inputs, such as stochastic shocks or parameters (Villoria and Preckel 2017).

This paper is the first to address the model input uncertainty regarding the wide effects of SLR on marine navigation from a global economic perspective. The analysis is based on a model that assesses the macroeconomic implications, both direct and indirect (general equilibrium), of climate change induced transportation disruptions by taking into account the direct loss of land and capital (Chatzivasileiadis et al. 2016). Our goal is to systematically assess the robustness of the CGE model results under the existing uncertainty of the model’s inputs by means of a Monte Carlo (MC) and a Gaussian Quadrature (GQ) SSA design. We look at the differences in results produced by distinct choices of probabilistic distributions for the MC analysis and we also include an uncertainty decomposition of the results.

Section 2 reviews the literature on CGE assessments of the SLR wide economic impacts, and the different methods of SSA applied within CGE context. Section 3 discusses the methods and data we used for our assessment. Section 4 presents the results of the SSA and Sect. 5 concludes.

2 Literature Review

2.1 Loss of Productive Resources

The literature simulating the effects of SLR on the economy using a GCE model is rather limited. Starting from Darwin and Tol (2001), SLR is linked to the economy-wide effects through a decrease in the endowment of land and capital in the economy. Based on the FUND model the authors estimate the exogenous shocks for land and capital based on the Global Vulnerability Assessment by Hoozemans et al. (1993) and other sources. The authors conclude that the uncertainty surrounding land and capital endowments threatened by sea level rise is substantial. Bosello et al. (2007) estimate the costs of 0.25 m of global SLR based on a CGE model where SLR is also modelled as a reduction of the endowment land available. Their data sources are the same as in Darwin and Tol (2001) but the analysis is based on the GTAP model. Similarly, Bosello et al. (2012a) based on input data from the DIVA model estimate the wide-economic effects of SLR focusing on land losses for Europe only using the same link between SLR and economy-wide effects as all the articles above. The aforementioned literature has focused on the full economic effects of SLR through land loss and coastal protection, ignoring changes to the transportation sector.

Chatzivasileiadis et al. (2016) look at the impact of SLR on transport infrastructure based on the GTAP model using the DIVA output as input. SLR is linked to economy-wide effects as a reduction of the available regional endowments of land and capital. SLR is then linked to regional productivity changes in the water transport sector based on the amount of land lost and on the flood costs each region will face in 2050.

To our knowledge, none of the existing papers has extended the analysis of the full economic effects of SLR to reflect the combined effects of input uncertainty in a systematic way. The novelty of this paper is that it addresses the model input uncertainty through a systematic sensitivity analysis in the link between SLR and economy wide effects by extending the analysis of Chatzivasileiadis et al. (2016).

2.2 Applications of SSA in the CGE Literature

The results of CGE models depend heavily on the calibration of the model and the exogenous shocks applied to the system. Harrison and Vinod (1992) stress the need for SSA that can capture, to some extent, the uncertainties surrounding CGE models. Different methods of stochastic modelling have been explored to address the underlining uncertainties of CGE models, namely the GQ approach and MC methods. Both methodologies have addressed the sensitivity of CGE models with respect to parameters (endogenous variables) and model inputs (exogenous shocks).

Stochastic modelling is computationally intensive, thus the literature has focused mostly on the GQ approach for SSA which requires only a few data points to approximate the central moments of stochastic variables. Practical examples of this method can be found in Arndt (1996), Arndt and Pearson (1996), Preckel et al. (2011) and Artavia et al. (2015).

In general, the literature has mostly avoided the use of MC methods for sensitivity analysis in CGE models. Looking back, we can find (Harrison and Vinod 1992) that focus on two elasticities for 15,000 separate solutions to derive the central moments of the results. The same methodology was applied by Harrison et al. (1997) based on 1000 realisations of the model elasticities in order to obtain the central moments of the outputs. Much later, Villoria and Preckel (2017) directly compare the GQ approach to MC for systematic sensitivity analysis within a CGE model. The authors conclude that the use of MC methods for implementing stochastic simulations has some advantages over GQ due to the advances in software and hardware available and the flexibility of the MC methods. Elliott et al. (2012) conduct a sensitivity analysis on the base year calibration data and elasticity of substitution parameters by using a MC experiment. The authors use a bootstrap resampling methodology to identify the possibility of reducing the number of simulations required by providing a good statistical approximation of the full set with a 95% confidence level.

We contribute to the existing literature by applying a Monte Carlo SSA on a pre-existing static CGE model (Chatzivasileiadis et al. 2016), where the input uncertainty regarding the SLR, as derived from the DIVA model, is substantial. Our purpose is threefold: to explore the sensitivity of the CGE model; to compare the MC and the GQ SSA methods; and more importantly, to set foundations for more standardised analysis for the future research by discussing the appropriate distributions that could be used in similar settings. We additionally discuss, for the first time, the uncertainty decomposition of the MC results and discuss the effects of decomposing the MC analysis into parts based on the shocks used in the CGE model. Last but not least, we developed a Stata program code that can be used to conduct the analysis discussed below using the DIVA dataset as input.

3 Methods and Data

3.1 The Base CGE Model

Following Chatzivasileiadis et al. (2016), we explore the input sensitivity of the latest version of the GTAP multi-sector/multi-country model that assesses the impact of sea level rise on transport infrastructure. The authors link SLR to economy-wide effects through a reduction of the available land and capital endowments, using the benchmark equilibrium dataset of GTAP 8. The effect of SLR on water transportation infrastructure is modelled as a reduction of the sea-port productivity relative to land and capital loss in two separate scenarios named DIVA_L and DIVA_C. Both scenarios include a combination of the same exogenous shocks for land and capital losses and their differentiation is in the source of the productivity shocks. In DIVA_L productivity reduction is dependent on land losses and in DIVA_C on sea-flood damage costs.

Data on the input variables described above are derived from the dynamic interactive vulnerability assessment model (DIVA). The DIVA model is an integrated impact-adaptation model of coastal systems that analyses the biophysical and socio-economic effects of SLR and socio-economic development on a regional and global scale. The DIVA model incorporates coastal erosion, coastal flooding, wetland changes and salinity intrusion. Additionally, adaptation to SLR is taken into account in terms of raising dikes and nourishing shores and beaches (Hinkel 2005; Hinkel et al. 2013, 2014; Vafeidis et al. 2008).

The outputs of DIVA for the year 2050 used in this study were constructed using the RCP 8.5 (J14) radiative forcing and the SSP2 socio-economic scenarios. For the analyses below , we use the two outputs of the DIVA model described above: land losses due to submergence and, expected sea-flood damage costs due to SLR. The projected global mean SLR for 2050 and the uncertainty distribution (5th, 17th, 50th, 83th, 95th and 99th percentiles) are derived from Jevrejeva et al. (2014). Those values are then used in the DIVA model to estimate the land losses due to submergence and expected sea-flood damage costs due to SLR for each percentile separately (e.g Spencer et al. 2016).

3.2 Systematic Sensitivity Analysis Design

Similar to Arndt (1996), we define the general form of a computable general equilibrium model as:

where x represents a vector of results or endogenous variables (such as prices, welfare etc.) and \(\beta \) a vector of exogenous variables. The solutions of the system of Eq. 1 can be defined as \( x^{\star }(\beta ) \). Then, given the non-zero probability density function (pdf) p over a multiple dimension domain \(\Omega \) for the exogenous random variables part of \(\beta \) we can define \( x^{\star }(\beta ) \equiv K(\beta )\) as a vector of results for each given parameter \(\beta \). The calculation of the mean in the univariate case takes the form of:

and the calculation of the variance can be done by:

In order to evaluate Eqs. 2 and 3, two distinct approaches have been used, methods based on quadrature formulas (i.e. GQ) and MC sampling. Each approach has its advantages and limitations.Footnote 1 These methods are commonly used due to the difficulty or impossibility of analytically evaluating Eqs. 2 and 3.

Going back to Eq. 2, based on a Riemann sum we can approximate the integrand by:

and in the multivariate version the central \(k\mathrm{th}\) moment is approximated up to order d by:

where \( \sum _{j=1}^{J}r_{j} \le d \) and N is the number or realizations and \(w_{n}\) is the weight associated with each realization.

Up to this point both SSA methods (GQ and MC) follow the same methodology. The differentiation comes in the number or realizations required and the way \(w_{n}\) is defined. In the MC sampling method, we generate N pseudo-random numbers based on the \(p(\beta )\) distribution and evaluate Eqs. (2) or (5), N times, where \(w_{n}=\left( \frac{1}{N}\right) \) for each realization n. If N is sufficiently large then \(\bar{x}\) as defined by Eqs. 4 and 5 is an unbiased estimator of \(K(\beta )\).

The idea behind the GQ is to keep the number of the integrand evaluations N small by choosing the most appropriate points within the interval [a, b] and associated weights \(w_{n}\) [see Eq. (5)]. The choice of points and probabilities is done in such a way that the crude moments of the approximating distribution equals the moments of the true distribution from zero to some specified order (Villoria and Preckel 2017). Once points and probabilities have been chosen, the moments can be calculated through Eqs. (4) and (5) as above.

The GEMPACK software (Harrison et al. 2014) provides an easy implementation of SSA based on GQ.Footnote 2 The SSA is constrained for up to degree 3 quadratures for symmetric distributions. The equally weighted points estimated by the GEMPACK software are between 2S and 4S,Footnote 3 based on the Struod and Liu quadrature respectively (Stroud 1960; Liu 1997). The build-in version of the SSA in the GEMPACK allows for use of the Uniform and Triangular distributions.

As mentioned in Sect. 2.2, the CGE literature has focused mostly on the GQ approach for SSA. An important issue with the GQ proposed by Struod and Liu is that they restrict the variation around the mean of each random variable to no more than \(\sqrt{2}\sigma \) in Struod quadrature and \(\sigma \) in Liu quadrature. Villoria and Preckel (2017) mention on the matter that:

[...] by taking the GQ approach, considerable information has been lost regarding the shape of the distribution, its higher order moments, and its range. This may be especially important in instances where the shocks can be expected to have asymmetric impacts. These applications likely include productivity shocks associated with climate change[...]

Preckel et al. (2011) attempt to solve this issue by proposing a change in the sampling method of Struod and Liu by implementing a broader sample technique that samples beyond the \(\sqrt{2}\sigma \) in Struod quadrature and \(\sigma \) in Liu quadrature. Similarly, Artavia et al. (2015), propose the use of MC methods to tackle this problem of low accuracy where the shocks’ distribution is constrained by the Struod and Liu requirements.

Given the aforementioned information, the advantages of the MC method used in SSA for the model developed by Chatzivasileiadis et al. (2016) become clear. On the one hand, the biggest advantage of GQ which is its ability to economise the SSA to only a few model runs does not necessarily hold in practice. In our case, the required number of simulations is 4096, based on the parameters used in the SSA within the GEMPACK software. On the other hand, the analysis of Chatzivasileiadis et al. (2016) is based on sea-port productivity shocks associated with climate change and thus the problem of asymmetric impacts within the GQ method, as described above, becomes prominent. Another advantage of the MC method lies on the estimation of the error. In the MC method, the error is estimated from the generated data, whereas in the QG more global measures of error estimation are required such as the Chebyshev’s inequality for the confidence bounds. The Chebyshev’s inequality, will produce confidence bounds that are extremely conservative compared to the Central Limit Theorem which provides narrower confidence intervals if the available number of data points is sufficiently large (Fishman 1996).

3.3 Monte Carlo Sampling Method

Before performing SSA with the MC method, we need to select the input variables of interest and make assumptions about the distributions they follow.

In the original model of Chatzivasileiadis et al. (2016), the exogenous parameters that are shocked to simulate the impacts caused by SLR to the global economy and in particular to the water-transportation sector (see Sect. 3), are: (1) Land loss due to submergence, (2) Sea-flood damage costs and (3) Sea-port productivity losses. It is important to note that if data for a goodness of fit test is not available, the selection of a probability distribution is rather subjective and arbitrary. However, the selection ideally has to reflect our perception regarding the characteristics of the process we are trying to represent (and its uncertainty). One important point to take into account can be how fast the tail of the distribution that is proposed decreases [e.g., a uniform distribution may considerably overestimate the probability of very large land losses; a triangular distribution may still be over estimating the probability of large losses but less than the uniform; a normal distribution has faster decaying tails, but it would be inadequate because it is symmetric and it supports the interval \((-\,\infty ,\infty )\)]. The selection of the distribution should also reflect that the values the process can take may be limited by some physical constraints. For example, a probability distribution for land loss may be limited beyond a certain value from which it is impossible to have larger losses (right tail truncation). For sea-flood damage costs, apart from the problem of how fast the tails should decrease, the selected distribution should reflect that this variable cannot take negative values (left tail truncation). In this case, the exponential or the Gamma distribution could be appropriate. For sea-port productivity losses a triangular distribution could be used, although a distribution with faster decaying tails could be more desirable, such as the Beta distribution.

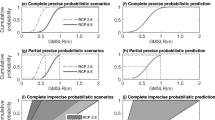

Figure 1 presents for MC and GQ percentiles and maximum values of percentage changes in sea port productivity for the DIVA_C and DIVA_L scenarios. As indicated in Sect. 3, only the 5th, 17th, 50th, 83th, 95th and 99th percentiles of the DIVA data are available. Given the limited information about the DIVA data distribution, a triangular distribution (td) was chosen to represent all parameters. This is a common choice to represent parameters and variables when little information about their distribution is available.Footnote 4 Above that, this selection was made to preserve comparability with the GQ method, as implemented in the GEMPACK software. Otherwise, the parameters used in the two methods would probably not have similar mean and variance. This is due to the fact that the Struod and Liu quadrature was developed for symmetric distributions, whereas asymmetries are present in our data (see Fig. 1). An additional set of analyses is conducted using the piecewise linear probability distribution (lpd) (Kaczynski et al. 2012). The lpd is a non-parametric probability distribution created using a piecewise linear representation of the cumulative distribution function.Footnote 5 Based on the DIVA data we have six points of the cumulative density function (cdf), thus six different slopes are used to generate the lpd data. This method does not impose a distribution shape as the td does and follows the data more closely. An advantage of the lpd over the td is that it uses all available information provided by the DIVA data and not just three points.

For the triangular distribution we assume that the 50th percentile for each region is the most plausible value and we restrict the distribution to the interval [99th, 5th]. This ensures the values for capital to be positive. We then generate 10,000 realizations for each of the three variables of interest, for every region in the model. This process was repeated twice once for the DIVA_C and once for the DIVA_L scenario. For the pld, we generate 15,000 realizations for each of the three shocks of interest, for every region in the model as above. A higher number of realizations is used in the lpd to avoid convergence problems that could be created by this sampling method. Table 1 shows the first two moments of the 10,000 td and 15,000 lpd realizations for each of the three variables, for every region, in combination with Figs. 1 and 2 that show the productivity shock distributions for every region/scenario.

Additional attention is required in the estimation of the sea-port productivity shocks for the minimum and the maximum of the triangular distribution. Following Chatzivasileiadis et al. (2016), we get the land and capital shocks separately from the data for the 5th and the 99th percentile from the DIVA model. Then we estimate the ratio between each limitFootnote 6 and the 50th percentile. So, we generate the minimum and the maximum for the sea-port productivity shocks by multiplying each ratio to the Chatzivasileiadis et al. (2016) sea-port productivity shocks.Footnote 7 In cases where the new productivity shocks for the 99th percentile are higher than \(50\%\) we cut the value to 50%.Footnote 8

In the generation process of the pseudo-random realizations for both MC SSA we made a set of additional assumptions regarding the distribution of the input parameters. Taking a closer look at Fig. 1, we see that there are differences between the GQ and the MC maximum values. For the DIVA_C the difference is only in North East Europe, whereas in the DIVA_L the differences are apparent in all regions. Africa is a special case in the DIVA_L scenario, for the upper limit used in the GQ, where the upper limit is negative. The assumption of Chatzivasileiadis et al. (2016) is that the productivity changes due to SLR are proportional to the land loss or the sea-flood costs each region faces by 2050. Based on the DIVA model, and considering the left tail of the distribution (5th percentile), North East Europe sees gains in land due to negative SLR. That would mean, according to Chatzivasileiadis et al. (2016), that the sea-port productivity would increase due to the lower water levels. Jonkeren et al. (2007) show that, for inland water transport, lower water levels increase the freight prices as a result of lower productivity. Moreover, looking at the GQ upper limit in Fig. 1, for the DIVA_L model, we see that due to the fact that the Struod and Liu quadratures where developed for symmetrical distributions, given the distance between the 50th and the 99th percentile the maximum is consistently positive, thus assuming productivity gains from sea level changes. For those reasons, we have decided to restrict the distribution in the MC SSA to zero on the one side. This way, we can include cases where no changes to sea-port productivity have occurred even though there are land and capital losses.

3.4 Supplementary Code

This paper is coupled with a code for Stata that, based on the DIVA data for our 13 regions, recreates the analysis discussed above. Starting from the initial dataset (downloaded through the code automatically), the user can choose the type of SSA to be conducted. The idea is based on the fact that the GEMPACK software, usually used for simulations based on the GTAP model, does not have a built-in function for conducting MC SSA yet. Due to the nature of the data, to this point, the code allows for sampling based on the td and the pld. When the number of simulations (chosen by the user) is completed, the program collects the data (inputs and outputs) into a csv file that is then fed to Stata for further analysis. A first version of the code can be found on GitHub.Footnote 9

4 Results

For simplicity, we focus on just two results of the CGE model, namely the Hicksian equivalent variation (HEV) and the percentage change of regional GDP (qGDP, see Appendix 6.1). The HEV can be thought of as the dollar amount that the consumer would be indifferent about accepting in lieu of the shock caused by, in our case SLR; it is negative if the consumer would be worse off after the shock due to SLR (Mas-Colell et al. 1995). The other variable discussed, qGDP is the percentage change of regional GDP between the two equilibria i.e pre and post SLR. Tables 2, 3 and 4 show the results for both the DIVA_C and DIVA_L scenarios using both MC and GQ SSA, respectively. The tables also include the first two central moments for HEV and qGDP. Additionally, the upper and lower 95% confidence interval for each region have been included.Footnote 10 In order to make the comparison easier we have calculated the ratio of the MC means to the GQ means for each region (Table 5). To better explain the differences between DIVA_C and DIVA_L produced by the MC and QG method we also present the histograms of productivity shocks by region in Figs. 2 and 3.

We start the analysis of the results from the DIVA_C scenario where the inputs for all three SSA methods are the same (see Fig. 1) with the exception for North-East Europe. Even though the three points of the cdf used in the sampling process of the shocks are the same for each region the distribution of shocks used by the MC-td and the QG SSA methods seem to be different (see Fig. 3). As indicated by Villoria and Preckel (2017), a significant amount of information is ignored by the GQ method regarding the shape of the distribution, its higher order moments, and its range. This information is very important in our case given that we are interested in productivity shocks associated with SLR, which potentially can have asymmetric impacts. The ratio of the two means presented in Table 5 shows that the two methods produce different results for all regions, even though they have similar input. The ratio of the mean is higher than 1.0Footnote 11 indicating that the MC-td gives consistently higher estimates for both HEV and qGDP. China is the exception, where HEV results are identical. This underestimation of the QG could be due to poorly chosen discrete shock values produced by Liu Quadrature for variables that the model results are sensitive to. In North-East Europe with just a slight difference in the upper bound of the input distribution (0% productivity reduction in MC compared to 2.49% increase in GQ), we see that the HEV and qGDP results of the MC-td method is 2.4 and 2.7 times higher respectively (in absolute terms) compared to the GQ estimates.

Tables 2, 3 and 4 show that the results of the MC–lpd are similar to the ones produced by the GQ method with the exception of the three European regions where the MC–lpd results are higher. Additionally, there seems to be a significant difference between the two MC methods. This result is not surprising since the distribution chosen in the sampling process affects the final results significantly as discussed above (see Sect. 3.3§2). The Box-Plot in Fig. 4 can give a clearer representation of the output distributions for each region under both MC methods.Footnote 12

The SSA methods show that the DIVA_C results produced by Chatzivasileiadis et al. (2016) are robust to the variations of SLR. The SSA mean results are similar to the ones reported by Villoria and Preckel (2017), but not identical (see columns SIM and Mean in Tables 2, 3 and 4), except North-East Europe. It seems that the asymmetric td used in MC method for North-East Europe generates significantly larger means for HEV and qGDP (in absolute terms) compared to the GQ method. The sign and magnitude of the effects are robust to the variations of SLR based on the 95% confidence intervals.Footnote 13 indicating that there is no evidence of input sensitivity present. Taking a closer look at Tables 2 and 3, we see that the global HEV losses are consistently higher in all SSA methods indicating possibly that the existent SLR CGE literature has underestimated the effects SLR on the global economy.

4.1 Convergence Speed and Decomposition

Figures 5 and 6 show the convergence speed of the mean and confidence intervals as the number of simulations increases in the MC-td SSA for HEV and qGDP respectively. These plots illustrate the sample size required by the MC process based on the speed of convergence of the results. Even though there are regional differences, it seems that 4000 simulations are sufficient for the mean and confidence intervals (CI) to settle down. The theoretical point of convergence as shown on the graphs as reference lines is based on the Raftery and Lewis’ diagnosticFootnote 14 for Markov Chain MC (Raftery and Lewis 1992).

Knowing the point of convergence for each output we expand our analysis by decomposing the CGE simulation to its components i.e.; (1) Land loss due to submergence, (2) Sea-flood damage costs and (3) Sea-port productivity losses. This is possible because CGEs are locally linear, i.e., the effect of a joint shock is the sum of the effects of the single shocks. This implies that we can run three MCs, one for each exogenous variable, with K runs rather than one MC with 10,000 runs. We have used \(K=4000\) based on the Raftery and Lewis’ diagnostic. The purpose of this experiment is to identify potential differences between the total and the decomposed CGEs results within the MC SSA. As above, we sample values for each region/exogenous variable based on the triangular distribution using the same seed for each region in the sampling process as before. After all simulations for each component are done, the separate HEVs and qGDPs are summed to produce the total effect of the three exogenous variables. Since the GTAP model is not linear this type of decomposition possibly creates an error as illustrated by the difference between the total and the decomposed CGE results. In the MC analysis though, this error is expected to be averaged out as the number of simulation increases.

Looking at Figs. 5 and 6, the results of the total and decomposed CGEs for the MC-td of the DIVA_C scenario are identical. Exception is JaKoSingFootnote 15 where the decomposed CGE produces slightly higher results. Interestingly, the upper and lower bound in North West and South Europe for qGDP, only, seem to be wider in the decomposed CGEs. This shows that the interaction component of the CGE model actually has an effect. Additionally the lower bound of the Raftery and Lewis’s diagnostic is slightly lower in decomposed CGE experiment. This difference though is not large enough to indicate that the decomposed MCs require a smaller number of runs in total (here \(3*K\)) than the initial MC.

In the interest of reducing the computation time, apart from the decomposition analysis discussed above, we implement the methodology proposed by Elliott et al. (2012). We have used a bootstrap resampling method, on our results for each region separately, in order to identify a sub-sample size, from our original 10,000 simulations, that provides a good statistical approximation of the full set with a 95% confidence level. We have limited the bootstrap resamples to 1000, in order to reduce the computation time. Starting from sample sizes between 5 and 9999 observations, we do a bootstrap resampling for each sample size. Based on the results of the bootstrap resampling, we conduct a series of tests in order to evaluate how closely each sub-sample size can approximate the full data set. As in Elliott et al. (2012), we present the bootstrap mean with the 95% confidence interval and the standard deviation, for each sample size. Next we conduct: (i) a t test based on the Welch’s approximation in order to asses the equality of the sub-sample mean with that of the full sample; (ii) an F-test or equality of variance test between the sub-sample and the full sample; (ii) and last a Kolmogorov–Smirnov test of the equality of distributions.

Here we present the analysis of West-Asia’s HEV, on Fig. 7. The results show that the mean and standard error converges after 8000 simulations. Based on the Welch’s approximation t test for unequal variances, a total of 1164 simulations is required in order for the bootstrap mean and the total sample mean to be equal. Nevertheless this finding is not conclusive since the p values of the test are not stable. Next, based on the F-test, the variances seam equal after 8870 simulations. Last, only after 9500 simulations the bootstrap samples come from the same distribution using the Kolmogorov–Smirnov test. Thus, these results are inconclusive and this is probably due to a combination of factors. First, the number of resamples (here 1000) might be a problem but increasing that number will increase the computation time significantly. Second, the complexity of our shocks and their underline uncertainty might be another reason for the very high number of simulations required based on the bootstrap resampling methodology.

4.2 Uncertainty Decomposition

The limited up-to-date literature on MC SSA of CGE models does not pay attention to assessing the impact of parameter uncertainty on the uncertainty surrounding the MC CGE results. Following Anthoff and Tol (2013), we run a regression of the MC inputs on the CGE outputs and compute the standardized regression coefficients. The standardized regression coefficient shows how many standard deviations the dependent variable will change for a one standard deviation change of a given independent variable, ceteis paribus (Landis 2014). Essentially, the regression will indicate the impact of a model parameter on the results after removing the impact of all other parameters. We use this method to identify the relative importance of each exogenous variable by linearising the model in the regression and thus capturing local sensitivities only. For simplicity we present the results of the uncertainty decomposition for the DIVA_C MC–lpd HEV and qGDP results only.

Figure 8 shows the four regional parameters that have the largest effect (in absolute terms) on HEV and qGDP by region. Tables 11 and 12 in the Appendix include all 39 coefficients of the standardized regression for each region/parameter. As expected in most regions the regional productivity losses has the highest effect on HEV and qGDP. Additionally, land seems to have little to no effect on the final results since the coefficients are not significant for most regions. These result are not surprising and somewhat expected, given the magnitude of the shocks (see Table 1) and the economic significance of capital and productivity in comparison to agricultural land. Interestingly, the developed regions’ qGDP is affected more by the capital changes rather than the productivity losses in comparison to HEV. Figure 8 also shows the way regions are connected. For example the HEV and qGDP in Africa are not only affected by capital and productivity losses in Africa, but also by changes in Central Asia and North West Europe. In order to understand these connections better we show the two heat-map tablesFootnote 16 (Tables 6, 7, 8, 9). It is clear that the HEV is generally more affected by the productivity changes rather than the capital changes. JaKoSing and the three European regions, seem to have a negative response (on both HEV and qGDP) to seaport productivity changes from almost all other regions.

5 Discussion and Conclusion

We estimate the input sensitivity in the simulation of the economy wide effects of productivity losses in the marine transportation sector due to sea level rise using the GTAP CGE model with 13 regions. Based on Chatzivasileiadis et al. (2016), we analyse two different scenarios for productivity changes. By comparing the MC SSA with the Liu GQ it becomes clear that small differences in the parameters of even the same distribution (here td) can cause significant changes in the outputs of the SSA. We show that in the case of productivity changes due to SLR—where shocks have asymmetric impacts—the MC method is the best choice for conducting SSA due to the potential information loss in the shocks generated by the GQ method. Based on the results of this paper, there is no evidence of input sensitivity in the DIVA_C scenario. Comparing the welfare losses of the two scenarios between Chatzivasileiadis et al. (2016) and the SSA methods for the year 2050, we see that in the DIVA_C scenario the welfare losses are higher in both MC SSA’s based on the triangular and piecewise linear probability distributions. This indicates that the CGE economic literature has potentially underestimated the total economic effects of SLR, thus stressing the necessity of SSA when simulating the general equilibrium effects of SLR.

We show that since CGEs are locally linear, a decomposition of the MC is possible based on the CGE parameters. Our results indicate that the decomposed MC converges approximately at the same speed as the total MC and the two methods produce identical results with the exception of the confidence bounds for qGDP in Europe. An important addition to the MC SSA of CGE literature is the uncertainty decomposition analysis. We show that land losses have a smaller effect compared to capital and seaport productivity losses. Additionally, capital losses seem to affect the developed regions GDP more than the seaport productivity losses.

This paper does not include any policy implications and the reason it twofold. First, this is a methodological paper on the different methods of sensitivity analysis in CGE models and the use of different distributions. As such, no policy implications are derived from this analysis but only methodological suggestions for analysts and researchers; that SSA and the choices made by them on the method (i.e GQ or Monte Carlo) or the distributions used (i.e., lpd or td etc.) can affect the results significantly. Second, the initial model used in our analysis of Chatzivasileiadis et al. (2016), does not include adaptation in the model. Therefore, the only policy implication is that climate data have a certain level of uncertainty that should be dealt with the proper sensitivity analysis.

There are issues that call for further research. First and foremost, both SSA methods we applied assume that the shocks vary independently across regions and sectors. Based on the DIVA model, land loss due to submergence and sea-flood damage costs are a function of the level of SLR each region faces which indicates a certain level of correlation between them. Although, the assumption of independence can be sensible due to the fact that land loss due to submergence and sea-flood damage costs are also dependent on the level of protection to SLR each region has which we assumed to be uncorrelated among regions. Furthermore, we compare the MC SSA method with the relatively restrictive Liu QG of the GEMPACK software. Future analysis is needed on the use of; broader sampling methods in the GQ as in Preckel et al. (2011); orthogonal polynomials in the GQ as in Hermeling et al. (2013) and the use of the MC filtering approach as in Mary et al. (2013).

Notes

For more information see Fishman (1996).

Different extensions of the QA SSA method have been developed, such as: Hermeling et al. (2013) based on on the theory of orthogonal polynomials; and Preckel et al. (2011) based on expansions of the sampling in the Stroud and Liu Gaussian Quadratures. Both methods increases the approximation quality compared to the Gaussian Quadrature proposed by Struod and Liu, as implemented in the GEMPACK software, but still suffer from the same problems mentioned in this paper.

Where S is the number of individual shocks included in the simulation.

Based on the MathWorks Matlab 2016b.

The 5th and the 99th percentiles.

The Chatzivasileiadis et al. (2016) sea-port productivity shocks where calculated using the 50th percentile form the same dataset.

We assume that \(50\%\) of sea-port productivity losses is the maximum level of extreme losses ports can endure before urgent protection measures, in all regions, take place.

In the GQ method the 95% Chebyshev’s bound has been calculated based on: \(\left( { mean} - \sqrt{20}\cdot {{ SD}}, { mean}+\sqrt{20}\cdot {{ SD}}\right) \).

Ratios are rounded at one decimal point.

The Box-Plot for for GQ was not produced since the method generates approximations of the mean and standard deviation from numerical integration, but not distributions.

Or the Lower and Upper Chebyshev’s bound for 2 SDs.

See regional aggregation in Appendix Table 10.

References

Anthoff, D., & Tol, R. S. J. (2013). The uncertainty about the social cost of carbon: A decomposition analysis using fund. Climatic Change, 117(3), 515–530. https://doi.org/10.1007/s10584-013-0706-7.

Arndt, C. (1996). An introduction to systematic sensitivity analysis via Gaussian quadrature. GTAP technical papers (2):2. http://docs.lib.purdue.edu/cgi/viewcontent.cgi?article=1002&context=gtaptp.

Arndt, C., & Pearson, K. R. (1996). How to carry out systematic sensitivity analysis via Gaussian quadrature and gempack. GTAP technical paper (3). https://www.jgea.org/resources/download/1209.pdf.

Artavia, M., Grethe, H., & Zimmermann, G. (2015). Stochastic market modeling with Gaussian quadratures: Do rotations of Stroud’s octahedron matter? Economic Modelling, 45(C), 155–168. https://doi.org/10.1016/j.econmod.2014.10. https://ideas.repec.org/a/eee/ecmode/v45y2015icp155-168.html.

Bosello, F., Eboli, F., & Pierfederici, R. (2012a). Assessing the economic impacts of climate change. FEEM (Fondazione Eni Enrico Mattei), Review of Environment, Energy and Economics (Re3).

Bosello, F., Nicholls, R. J., Richards, J., Roson, R., & Tol, R. S. (2012b). Economic impacts of climate change in Europe: Sea-level rise. Climatic Change, 112(1), 63–81.

Bosello, F., Roson, R., & Tol, R. S. (2007). Economy-wide estimates of the implications of climate change: Sea level rise. Environmental and Resource Economics, 37(3), 549–571.

Caralis, G., Diakoulaki, D., Yang, P., Gao, Z., Zervos, A., & Rados, K. (2014). Profitability of wind energy investments in China using a Monte Carlo approach for the treatment of uncertainties. Renewable and Sustainable Energy Reviews, 40, 224–236. https://doi.org/10.1016/j.rser.2014.07.189. http://www.sciencedirect.com/science/article/pii/S1364032114006418.

Chatzivasileiadis, T., Hofkes, M., Kuik, O., & Tol, R. (2016). Full economic impacts of sea level rise: Loss of productive resources and transport disruptions. Working paper series, Department of Economics, University of Sussex. http://EconPapers.repec.org/RePEc:sus:susewp:09916.

Darwin, R. F., & Tol, R. S. (2001). Estimates of the economic effects of sea level rise. Environmental and Resource Economics, 19(2), 113–129.

Deke, O., Hooss, K. G., Kasten, C., Klepper, G., & Springer, K. (2001). Economic impact of climate change: Simulations with a regionalized climate-economy model. Report, Kiel Working Papers.

DeVuyst, E. A., & Preckel, P. V. (1997). Sensitivity analysis revisited: A quadrature-based approach. Journal of Policy Modeling, 19(2), 175–185.

Elliott, J., Franklin, M., Foster, I., Munson, T., & Loudermilk, M. (2012). Propagation of data error and parametric sensitivity in computable general equilibrium models. Computational Economics, 39(3), 219–241.

Fishman, G. (1996). Monte Carlo: Concepts, algorithms, and applications. Berlin: Springer Science and Business Media.

Harrison, G. W., Rutherford, T. F., & Tarr, D. G. (1997). Economic implications for turkey of a customs union with the European union. European Economic Review, 41(3), 861–870.

Harrison, G. W., & Vinod, H. D. (1992). The sensitivity analysis of applied general equilibrium models: Completely randomized factorial sampling designs. The Review of Economics and Statistics, 74(2), 357–362. http://www.jstor.org/stable/2109672.

Harrison, J., Horridge, J., Jerie, M., & Pearson, K. (2014). Gempack manual. GEMPACK Software.

Hermeling, C., Löschel, A., & Mennel, T. (2013). A new robustness analysis for climate policy evaluations: A CGE application for the EU 2020 targets. Energy Policy, 55, 27–35.

Hertel, T. W. (1997). Global trade analysis: Modeling and applications. Cambridge: Cambridge University Press.

Hinkel, J. (2005). Diva: An iterative method for building modular integrated models. Advances in Geosciences, 4, 45–50.

Hinkel, J., Lincke, D., Vafeidis, A. T., Perrette, M., Nicholls, R. J., Tol, R. S., et al. (2014). Coastal flood damage and adaptation costs under 21st century sea-level rise. Proceedings of the National Academy of Sciences, 111(9), 3292–3297.

Hinkel, J., Nicholls, R. J., Tol, R. S., Wang, Z. B., Hamilton, J. M., Boot, G., et al. (2013). A global analysis of erosion of sandy beaches and sea-level rise: An application of diva. Global and Planetary Change, 111, 150–158.

Hoffman, F. O., & Hammonds, J. S. (1994). Propagation of uncertainty in risk assessments: The need to distinguish between uncertainty due to lack of knowledge and uncertainty due to variability. Risk Analysis, 14(5), 707–712. https://doi.org/10.1111/j.1539-6924.1994.tb00281.x.

Hoozemans, F. M., Stive, M. J., & Bijlsma, L. (1993). Global vulnerability assessment: vulnerability of coastal areas to sea-level rise. In Coastal zone’93. Vol. 1; proceedings of the eighth Symposium on Coastal and Ocean Management, July 19-23, 1993, New Orleans, Louisiana 1, 390–404. (1993). American Shore and Beach Preservation Association; ASCE; Coastal Zone Foundation; Guenoc Winery; Louisiana Department of Natural Resources; et al.

Horridge, J. M., Pearson, K., et al. (2011). Systematic sensitivity analysis with respect to correlated variations in parameters and shocks. GTAP technical paper (30).

IPCC (2013). Summary for policymakers. In T. F. Stocker, D. Qin, G. K. Plattner, M. Tignor, S. K. Allen, J. Boschung, A. Nauels, Y. Xia, V. Bex, P. M. Midgley (Eds.), Climate change 2013: the physical science basis. Contribution of working Group I to the fifth assessment report of the intergovernmental panel on climate change. Cambridge: Cambridge University Press.

Jevrejeva, S., Grinsted, A., & Moore, J. C. (2014). Upper limit for sea level projections by 2100. Environmental Research Letters, 9(10):104008. http://stacks.iop.org/1748-9326/9/i=10/a=104008.

Johnson, D. (1997). The triangular distribution as a proxy for the beta distribution in risk analysis. Journal of the Royal Statistical Society: Series D (The Statistician), 46(3), 387–398. https://doi.org/10.1111/1467-9884.00091.

Jonkeren, O., Rietveld, P., & van Ommeren, J. (2007). Climate change and inland waterway transport: Welfare effects of low water levels on the river rhine. Journal of Transport Economics and Policy (JTEP), 41(3), 387–411.

Kaczynski, W., Leemis, L., Loehr, N., & McQueston, J. (2012). Nonparametric random variate generation using a piecewise-linear cumulative distribution function. Communications in Statistics—Simulation and Computation, 41(4), 449–468. https://doi.org/10.1080/03610918.2011.606947.

Korteling, B., Dessai, S., & Kapelan, Z. (2013). Using information-gap decision theory for water resources planning under severe uncertainty. Water Resources Management, 27(4), 1149–1172. https://doi.org/10.1007/s11269-012-0164-4.

Landis, R. S. (2014). Standardized regression coefficients. London: Wiley. https://doi.org/10.1002/9781118445112.stat06588.

Leckie, G., Charlton, C., et al. (2013). Runmlwin—A program to Run the MLwiN multilevel modelling software from within stata. Journal of Statistical Software, 52(11), 1–40.

Liu, S. (1997). Gaussian quadrature and its applications. PhD dissertation, Department of Agricultural Economics, Purdue University.

Mary, S., Phimister, E., Roberts, D., & Santini, F. (2013). Testing the sensitivity of CGE models: A Monte Carlo filtering approach to rural development policies in Aberdeenshire. JRC Scientific and Policy Reports European Commission.

Mas-Colell, A., Whinston, M. D., & Green, J. R. (1995). Microeconomic theory (Vol. 1). New York: Oxford University Press.

Preckel, P. V., Verma, M., Hertel, T. W., & Martin, W. (2011). Implications of broader Gaussian quadrature sampling strategy in the contest of the special safeguard mechanism. In: Paper presented at the 14th annual conference on global economic analysis. https://www.researchgate.net/profile/Will_Martin3/publication/267231833_Implications_of_Broader_Gaussian_Quadrature_Sampling_Strategy_in_the_Contest_of_the_Special_Safeguard_Mechanism_(Preliminary_Draft)/links/54d0e6220cf20323c21a098f.pdf.

Raftery, A. E., & Lewis, S. M. (1992). Practical Markov Chain Monte Carlo: Comment: One long run with diagnostics: Implementation strategies for Markov Chain Monte Carlo. Statistical Science, 7(4), 493–497.

Rasbash, J., Charlton, C., Jones, K., & Pillinger, R. (2012). Manual supplement for MLwiN version 2.26. Bristol: Centre for Multilevel Modelling, University of Bristol.

Spencer, T., Schuerch, M., Nicholls, R. J., Hinkel, J., Lincke, D., Vafeidis, A., Reef, R., McFadden, L., & Brown, S. (2016) Global coastal wetland change under sea-level rise and related stresses: The DIVA wetland change model. Global and Planetary Change, 139, 15–30. https://doi.org/10.1016/j.gloplacha.2015.12.018. https://www.sciencedirect.com/science/article/pii/S0921818115301879.

Stroud, A. H. (1960). Quadrature methods for functions of more than one variable. Annals of the New York Academy of Sciences, 86(3), 776–791.

Vafeidis, A. T., Nicholls, R. J., McFadden, L., Tol, R. S., Hinkel, J., Spencer, T., et al. (2008). A new global coastal database for impact and vulnerability analysis to sea-level rise. Journal of Coastal Research, 24, 917–924.

Villoria, N. B., Preckel, P. V., et al. (2017). Gaussian quadratures vs. Monte Carlo experiments for systematic sensitivity analysis of computable general equilibrium model results. Economics Bulletin, 37(1), 480–487.

Acknowledgements

Funding was provided by Fourth Framework Programme (Grant No. 603396).

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

1.1 qGDP

As in Chatzivasileiadis et al. (2016), approaching GDP from the expenditure side, GDP can be expressed by the following accounting identity:

where C denotes final consumption of goods and services (both private and government), I is (gross) investment, E is exports of goods and services, and M is imports of goods and services. In GTAP, exports E is divided into exports of goods and services to all other countries (E) and exports of transportation services to the “global” transportation sector (T). The “global” transportation sector as designed in the GTAP model purchases transport services from all regions and in return it supplies transport services to every region. The accounting identity then becomes:

Further analysis on the topic can be found in Hertel (1997).

Total differentiation of this identity shows how relative changes in GDP can be decomposed into relative changes in its factors, where percentage changes are denoted by q: qGDP \(=\) (dGDP/GDP):

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Chatzivasileiadis, T., Estrada, F., Hofkes, M.W. et al. Systematic Sensitivity Analysis of the Full Economic Impacts of Sea Level Rise. Comput Econ 53, 1183–1217 (2019). https://doi.org/10.1007/s10614-017-9789-y

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10614-017-9789-y