Abstract

Public and private organizations regularly run awareness campaigns to combat financial fraud. However, there is little empirical evidence as to whether such campaigns work. This paper considers a campaign by a systemically important Danish bank, targeting clients over 40 years of age with a mass message. We use the campaign as a quasi-experiment and consider a multitude of linear probability models, employing difference-in-differences and regression discontinuity designs. None of our models, even when controlling for age, sex, relationship status, financial funds, urban residence, and education, finds any evidence that the campaign had a significant effect. The results indicate that awareness campaigns relying on mass messaging, such as the one considered in our paper, have little effect in terms of reducing financial fraud.


Introduction

To combat financial fraud, public and private organizations regularly run awareness campaigns (see, e.g., campaigns by the Swedish Bankers’ Association (2023), U.S. Immigration and Customs Enforcement (2023), and UK Finance (2022)). Despite good intentions, there is little empirical evidence as to whether such campaigns work (Gotelaere & Paoli, 2022; Button & Cross, 2017; Cross & Kelly, 2016; Prenzler, 2020). Indeed, there are several reasons to suspect that they do not. For one thing, the behavior targeted by campaigns may be strongly influenced by situational and emotional factors, including relationship status and mental health problems (Poppleton et al., 2021). Furthermore, criminals may impersonate bank or police officers (Choi et al., 2017) and, as one anti-fraud expert from the bank considered in our paper puts it, “(...) most awareness campaigns contain a message à la: don’t trust people that call you and say they are from the police or a bank... But there is something weird about the police or a bank promoting that message.” In other words, people exposed to an awareness campaign launched by a public authority or bank might disregard the campaign’s message when a fraudster claims to be from a public authority or bank. This may especially be the case for people who are susceptible to fraud.

In this paper, we consider an awareness campaign by Spar Nord, a systemically important Danish bank. In September 2020, the bank sent out a warning message through its online banking portal, targeting clients over 40 years of age. We consider this message a concrete example of an awareness campaign and use it as a quasi-experiment. To this end, we employ choice-based data collection: we collect data on all of the bank’s known fraud victims and a sample of “baseline” clients (i.e., clients assumed not to be fraud victims). This strategy is motivated by the rarity of fraud cases and the fact that some of our features are expensive to collect. To account for the undersampling of baseline clients, we weight observations in inverse proportion to the probability that they are in our data. For analysis, we use linear probability models with difference-in-differences and regression discontinuity designs. Our features include information about age, sex, relationship status, financial funds, and residence. For a subset of observations, we also have access to, and employ, educational information. None of our models finds that the message had a significant effect, indicating that awareness campaigns relying on mass messaging have little effect on financial fraud.

The rest of our paper is organized as follows. The “Related Literature on Financial Fraud” section reviews the related literature. The “Institutional Setting” section presents the institutional setting of our study. The “Data” section presents our data. The “Methodology” section presents our methodology, including our difference-in-differences and regression discontinuity models. The “Results” section presents our results. Finally, the “Discussion and Conclusion” section concludes the paper.

Related Literature on Financial Fraud

Despite generally declining crime rates, financial fraud remains a major concern across the globe (Prenzler, 2020; Button & Cross, 2017). To address the issue, some scholars argue for a “public health approach,” recommending, among other things, public campaigns targeting high-volume types of fraud (Levi et al., 2023). Button and Cross (2017), in particular, advocate campaigns targeted at those most likely to be victimized by fraudsters. In this connection, several studies have identified key risk factors, including (age-related) decline in cognitive ability (Gamble et al., 2014; Han et al., 2016; James et al., 2014), emotional responses (Kircanski et al., 2018), overconfidence about financial knowledge (Gamble et al., 2014; Engels et al., 2020), and depression and a lack of social needs fulfillment (Lichtenberg et al., 2013, 2016). A different approach, explored by Wang et al. (2020), targets criminals with warnings about the consequences of their actions; see Prenzler (2020) for a comprehensive review. In general, however, there is little empirical evidence as to whether awareness campaigns actually work, i.e., help reduce financial fraud (Gotelaere & Paoli, 2022; Button & Cross, 2017; Cross & Kelly, 2016; Prenzler, 2020).

A study by the AARP Foundation (2003) found that direct peer counseling may help reduce telemarketing fraud. The study considered people deemed to be “at-risk” as they appeared on fraudster call sheets seized by the U.S. Federal Bureau of Investigation. Trained volunteers (i.e., peer counselors) called and reached 119 subjects, randomly delivering a control message (asking about TV shows) or a message warning about telemarketing fraud. Within five days, subjects were then recalled and given a fraudulent sales pitch. Results showed that the warning message significantly reduced fraud susceptibility.

Scheibe et al. (2014) showed that warning past victims may reduce future fraud susceptibility. The study considered 895 people identified as fraud victims by the U.S. Postal Inspection Service. Volunteers called the subjects and delivered either (i) a specific warning (about a type of fraud emulated later), (ii) a warning about a different type of fraud, or (iii) a control (asking people about TV preferences). Two or four weeks later, the subjects were called again and exposed to a mock fraud. Both types of warning messages reduced fraud susceptibility, though outright non-cooperation (instead of mere skepticism) was more prevalent among participants who had received the specific warning message. The specific warning, however, appeared to lose effectiveness over time, unlike the warning about a different type of fraud.

Burke et al. (2022) showed that brief online educational interventions with video or text may reduce fraud susceptibility. The study invited 2600 panelists (selected from a representative U.S. internet panel) to randomly receive either (i) a short video warning about investment fraud, (ii) a short text warning about investment fraud, or (iii) no educational information. Subsequently, participants were presented with three investment opportunities, two of which exhibited signs of fraud, and asked to consider them. Results showed that intervention recipients were significantly less likely to express interest in the fraudulent investment schemes. Furthermore, a follow-up experiment showed that while the effect decayed over time, it persisted for at least 6 months after the initial intervention if a reminder was provided after 3 months.

Smith and Akman (2008) examined a comprehensive New Zealand and Australian campaign to raise awareness about consumer fraud. The month-long campaign involved radio and TV appearances and the dissemination of 2600 printed posters and 282,000 flyers. The study concluded that the campaign was effective in raising public awareness about fraud and in increasing reporting. However, its evaluation of effects on preventing and reducing fraud was limited.

Looking at the literature, there are several reasons why we might suspect fraud awareness campaigns to have a limited effect. Firstly, if the behavior targeted by fraud awareness campaigns, by its very nature, is strongly influenced by situational and emotional factors, it might be unreasonable to expect that potential victims remember campaigns when they are contacted by a fraudster. Secondly, as argued by Cross and Kelly (2016), awareness campaigns may overwhelm clients with details. Thirdly, there is no guarantee that clients will even notice an awareness campaign. In this sense, awareness campaigns are more “passive” than direct educational interventions as considered, for example, by Burke et al. (2022). Finally, we note that banks may have other reasons, besides combating financial fraud, to run awareness campaigns. Campaigns may, for instance, serve as an opportunity to strengthen client relationships (Hoffmann & Birnbrich, 2012).

Institutional Setting

Spar Nord (henceforth “the bank”) is a systemically important Danish bank providing both retail and wholesale services. The bank is headquartered in Northern Jutland and has approximately 60 branches across Denmark. It estimates its own market share at about 5% of the Danish market (Spar Nord, 2023), a market characterized by a high degree of digitization (Danmarks Nationalbank, 2022). In particular, Statistics Denmark (2023) estimates that 94% of Danes used mobile or online banking in 2022.

In late September 2020, the bank sent out a warning message through its online banking portal. An English translation of the message is given in Fig. 1 (the original message, in Danish, is given in Appendix A). The message warned clients to safeguard their NemID, a Danish government and online banking log-on solution (see Medaglia et al. (2017) and the Danish Agency for Digital Government (2023) for more information). The message also warned about fraud in a broad sense, asking clients not to share their personal information over the phone. We note that the message focused on criminals contacting clients. In this regard, some of the fraud types considered in our paper may differ from the rest, motivating robustness checks (see the “Data” section and Appendix G). We also note that the message was short and, in line with suggestions by Cross and Kelly (2016), did not overwhelm clients with details. At the same time, however, the message did not warn about particular types of fraud, a strategy that may be more effective, as shown by Scheibe et al. (2014). The bank targeted the message to clients over 40 years of age. However, we observe some treatment non-compliance, i.e., some clients under 40 received it while some over 40 did not (see Fig. 3 and the “Explorative Analysis” section). We are not aware of other warning messages being sent out to the bank’s clients during the data collection period.

Following Gotelaere and Paoli (2022), we define financial fraud as “intentionally deceiving someone in order to gain an unfair or illegal (financial) advantage.” The definition excludes robberies (where threats or physical violence are used). We do, however, allow credential theft (e.g., stealing and misusing a password) to be encompassed by the definition, although victims may not be deceived in a direct sense.

Data

Our study employs choice-based data collection (i.e., endogenous stratification); we collect data on all known fraud victims at the bank and a sample of “baseline” clients (i.e., clients assumed not to have been defrauded). The strategy is motivated by two factors: (i) fraud victims are rare and (ii) some of our features are encoded by hand and thus expensive to collect. We only consider private individuals (i.e., not firms or businesses) over 18 years of age. In total, we have 1447 observations, including:

1. 247 fraud victims, and
2. 1200 baseline clients.

As fraud victims, we consider clients with a case registered in the database of the bank’s fraud department from 6 months before up to 6 months after the warning message was sent (denoted as our data collection period). If a client was subject to multiple fraud cases in the data collection period, we only consider the case that was registered first, yielding a one-to-one relationship between fraud cases and (unique) victims. This choice is motivated by the observation that it can be difficult to accurately determine whether the fraud in a case is new or a continuation of previous fraud. Notably, the choice affects fewer than five clients in our data. However, it means that our study does not account for revictimization. We record each case (along with victim features) as of the date on which the first fraudulent transaction in the case occurred, denoted as our time of (feature) recording.Footnote 1 This may be a few days before a case is opened.Footnote 2 We also restrict ourselves to cases where the first fraudulent transaction lies in the data collection period.Footnote 3 Motivated by a desire to discard petty fraud, we only consider cases involving more than 1000 Danish Kroner (approximately €135 or $150). In some cases, the bank successfully recovers funds (immediately) after a fraud is discovered; by our definition, however, fraud has already taken place. Table 1 lists the different types of fraud in our data. Cases of non-delivery fraud may differ from the rest, as these are often client-initiated (imagine, e.g., a client who finds a “too-good-to-be-true” offer online). This motivates a robustness check (see the “Results” section and Appendix G).
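The selection rules above can be sketched as a filter over case records. This is a minimal illustration, not the bank's pipeline: the `FraudCase` record, its field names, and the window dates are hypothetical, and we assume that the window and amount filters are applied before each client's first registered case is picked (the text does not state the order).

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FraudCase:
    """Hypothetical record layout for a case in the fraud department's database."""
    client_id: str
    registered: date         # date the case was registered
    first_transaction: date  # date of the first fraudulent transaction
    amount_dkk: float        # amount involved in the case


def select_victims(cases, start, end, min_amount_dkk=1000.0):
    """Return one case per client: the first registered case passing all filters."""
    eligible = [
        c for c in cases
        if start <= c.registered <= end          # case registered in the window
        and start <= c.first_transaction <= end  # first transaction in the window
        and c.amount_dkk > min_amount_dkk        # discard petty fraud
    ]
    first_case = {}
    for case in sorted(eligible, key=lambda c: c.registered):
        first_case.setdefault(case.client_id, case)  # keep only the earliest case
    # Features would then be recorded as of case.first_transaction.
    return list(first_case.values())


cases = [
    FraudCase("a", date(2020, 10, 5), date(2020, 10, 3), 5000.0),
    FraudCase("a", date(2020, 11, 1), date(2020, 10, 30), 8000.0),
    FraudCase("b", date(2020, 10, 1), date(2020, 9, 28), 500.0),
    FraudCase("c", date(2020, 3, 31), date(2020, 3, 20), 4000.0),
]
victims = select_victims(cases, start=date(2020, 3, 25), end=date(2021, 3, 25))
```

In this toy input, client "a" keeps only its earlier case, client "b" falls below the 1000 DKK threshold, and client "c" mirrors the situation in Footnote 3: the case is registered inside the window, but its first transaction is not.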

Our baseline clients are sampled as follows. We consider all clients with an active account at any point in time from 6 months before up to 6 months after the warning message was sent.Footnote 4 For each client, we sample a random date in the data collection period (used for feature recording) on which the client had an active account. The procedure is motivated by a desire to mimic how our fraud victims are collected over a time period. We then remove any client with a fraud case and draw a random sample of 1200 clients. Any of these could, in principle, be a fraud victim who failed to report or notice a fraud; consider, for example, an embarrassed victim of romance fraud. We do, however, argue that victims have strong incentives to report frauds, hoping to recover funds. Furthermore, fraud is relatively rare (although the probability that at least one sampled client is mislabeled naturally increases with the size of our sample).
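A sketch of the baseline sampling, assuming for simplicity that each client has a single contiguous period of account activity (the text defines activity via transactions; see Footnote 4). The function and argument names are hypothetical. Drawing the client sample before the recording dates, as done here, gives the same distribution as the order described in the text, since each client's date draw is independent of which clients are selected.

```python
import random
from datetime import date, timedelta


def sample_baseline(active_periods, fraud_clients, start, end, n_baseline, seed=0):
    """Draw baseline clients and a random recording date for each.

    active_periods: hypothetical mapping client_id -> (first_active, last_active).
    """
    rng = random.Random(seed)
    candidates = []
    for client_id, (first_active, last_active) in sorted(active_periods.items()):
        lo, hi = max(first_active, start), min(last_active, end)
        if client_id in fraud_clients or lo > hi:
            continue  # drop fraud victims and clients inactive during the window
        candidates.append((client_id, lo, hi))
    sample = []
    for client_id, lo, hi in rng.sample(candidates, n_baseline):
        offset = rng.randint(0, (hi - lo).days)  # uniform day within the active overlap
        sample.append((client_id, lo + timedelta(days=offset)))
    return sample


periods = {
    "a": (date(2019, 1, 1), date(2022, 1, 1)),
    "b": (date(2020, 6, 1), date(2020, 6, 30)),
    "c": (date(2015, 1, 1), date(2016, 1, 1)),
    "d": (date(2020, 1, 1), date(2021, 1, 1)),
}
baseline = sample_baseline(periods, fraud_clients={"a"}, start=date(2020, 3, 25),
                           end=date(2021, 3, 25), n_baseline=2, seed=1)
```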

Features

For any bank client i (including both fraud victims and baseline clients), we record five main features:

- \(\textit{Age}_{i}\): client i’s age in years (i.e., the number of days lived divided by 365.25),
- \(\textit{Female}_{i}\): equal to 1 if client i is female (otherwise 0),
- \(\textit{Partner}_{i}\): equal to 1 if client i (i) is married, (ii) is in a registered civil partnership, or (iii) shares a bank account with a life partner (otherwise 0),
- \(\textit{Funds}_{i}\): the summed investment and pension funds held by client i at the bank (before a potential fraud case), measured in millions of Danish Kroner, and
- \(\textit{Urban}_{i}\): equal to 1 if client i resides in one of the major Danish municipalities Copenhagen, Aarhus, Odense, or Aalborg (otherwise 0).

Our \(\textit{Partner}\) feature is encoded by hand, using manual look-ups in multiple banking systems. A client failing all three listed criteria is assumed to be single. This includes people in relationships unknown to the bank (e.g., people married abroad).
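The five main features can be sketched as a small encoding function. The argument names are hypothetical stand-ins for information that, in the paper, comes from banking systems and manual look-ups; the Partner rule follows the three criteria above, with a client failing all of them treated as single.

```python
from datetime import date

URBAN_MUNICIPALITIES = {"Copenhagen", "Aarhus", "Odense", "Aalborg"}


def encode_features(birth_date, recording_date, female, married, civil_partnership,
                    shares_account_with_partner, investment_dkk, pension_dkk,
                    municipality):
    """Encode the five main features for one client (argument names are hypothetical)."""
    return {
        "Age": (recording_date - birth_date).days / 365.25,  # days lived / 365.25
        "Female": int(female),
        # Any one criterion suffices; otherwise the client is treated as single.
        "Partner": int(married or civil_partnership or shares_account_with_partner),
        "Funds": (investment_dkk + pension_dkk) / 1_000_000,  # millions of DKK
        "Urban": int(municipality in URBAN_MUNICIPALITIES),
    }


features = encode_features(date(1980, 1, 1), date(2020, 10, 1), female=True,
                           married=False, civil_partnership=False,
                           shares_account_with_partner=True,
                           investment_dkk=250_000, pension_dkk=500_000,
                           municipality="Aarhus")
```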

For 669 observations, we also have educational information. Whenever we use the information in a model, we drop observations where it is missing. The information is encoded in a feature:

- \(\textit{Educ}_{i}\): client i’s educational level on an ordinal scale \(\{0,1,2\}\).

The feature is encoded by hand, using free-text descriptions of occupational and educational histories, primarily written by bank advisors. Encoding is done using the Qualifications Framework of the Danish Ministry of Higher Education and Science (2021), which places educations on ordinal levels 1–8 (see Table 2). We apply a grouping such that levels 1–3 (anything less than a high school degree) yield \(\textit{Educ}=0\), levels 4–5 (including a high school degree) yield \(\textit{Educ}=1\), and levels 6–8 (including a university degree) yield \(\textit{Educ}=2\). A detailed description of the encoding process is provided in Appendix B. Recognizing that it might not be meaningful to run a regression directly on (or take the mean of) an ordinal feature, we one-hot encode \(\textit{Educ}\) in all our regression models. Using standard indicator functions, we let

$$\begin{aligned} \textit{Educ0}_{i}=\mathbbm {1}_{\{\textit{Educ}_{i}=0\}},\quad \textit{Educ1}_{i}=\mathbbm {1}_{\{\textit{Educ}_{i}=1\}},\quad \textit{Educ2}_{i}=\mathbbm {1}_{\{\textit{Educ}_{i}=2\}}, \end{aligned}$$(1)

and drop the first of these (i.e., \(\textit{Educ0}\)) in all models to avoid multicollinearity.
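The grouping and one-hot encoding can be sketched as follows (function names are hypothetical). Because the three indicators always sum to one, including all of them alongside an intercept would make the design matrix perfectly collinear; dropping the indicator for level 0 makes it the reference category.

```python
def educ_level(qf_level):
    """Group a Qualifications Framework level (1-8) into Educ in {0, 1, 2}."""
    if not 1 <= qf_level <= 8:
        raise ValueError(f"expected a level between 1 and 8, got {qf_level}")
    if qf_level <= 3:
        return 0  # less than a high school degree
    if qf_level <= 5:
        return 1  # including a high school degree
    return 2      # including a university degree


def one_hot_educ(educ):
    """Return (Educ1, Educ2); Educ0 is the dropped reference category."""
    return int(educ == 1), int(educ == 2)


encoded = [one_hot_educ(educ_level(level)) for level in range(1, 9)]
```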

We provide statistics on our full data set in Tables 3 and 4. Statistics on observations recorded after the warning message was sent (relevant to our regression discontinuity setup) can be found in Appendix C.

Explorative Analysis

Figure 2 illustrates the fraction of fraud victims recorded in our data set per month relative to when the warning message was sent. Prior to the message, clients below and above 40 years of age followed roughly similar trends. Thus, we believe the figure supports the use of a difference-in-differences setup. After the warning message, we see a disproportionately large jump in the proportion of fraud victims over 40, suggesting that the warning message did not reduce fraud susceptibility.Footnote 5

Figure 3 illustrates the relationship between client age and whether a client received the warning message. Non-compliant clients amount to approximately 8.9% of all observations. If we only consider clients recorded after the message was sent (relevant to our regression discontinuity setup; see Fig. 6), non-compliant clients constitute approximately 9.0%. We believe that the observed non-compliance is due to several factors: (i) wrong database entries, (ii) clients not being registered in the bank’s messaging system, and (iii) the fact that some clients may only have opened a bank account after the message was sent. Figure 4 further illustrates the relationship between client age and observation type at the time of feature recording.
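A non-compliance share of this kind can be computed by comparing message receipt with the age rule. This sketch assumes that clients aged exactly 40 count as targeted (the text says “over 40”) and that the share is an unweighted fraction of observations; the function name is hypothetical.

```python
def noncompliance_share(chron, received):
    """Share of observations whose message receipt disagrees with the age-40 rule.

    chron: age when the message was sent minus 40; received: 1 if the message was received.
    """
    if len(chron) != len(received):
        raise ValueError("inputs must have equal length")
    mismatches = sum(int(r) != int(c >= 0) for c, r in zip(chron, received))
    return mismatches / len(chron)


share = noncompliance_share([-5, -1, 0, 3, 10], [0, 1, 1, 0, 1])
```

Here two of five clients are non-compliant: one under 40 who received the message and one over 40 who did not.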

In preparation for our regression discontinuity setup, we follow recommendations by Imbens and Lemieux (2008) and provide a number of statistics and plots in Appendices C and D, only considering observations recorded after the warning message was sent. Based on these, we have no concerns about discontinuities at the treatment threshold (i.e., at 40 years of age).Footnote 6

In agreement with the data-providing bank, we keep the distribution of losses associated with fraud cases confidential. However, we note that neither a standard Student’s nor a Welch’s t-test indicates a difference in the average loss associated with fraud cases recorded before and after the warning message was sent (regardless of whether one considers (i) all fraud cases, (ii) cases associated with clients over 40 years of age when the warning message was sent, or (iii) cases associated with clients that actually received/would receive the warning message).Footnote 7
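The two tests differ only in how they treat the group variances: Student’s test pools them (assuming equal variances), while Welch’s test does not and uses Welch–Satterthwaite degrees of freedom. The sketch below computes both statistics on made-up numbers, since the actual losses are confidential; in practice, `scipy.stats.ttest_ind` with `equal_var=True` or `equal_var=False` returns the corresponding statistics and p-values.

```python
import math
from statistics import mean, variance


def student_t(a, b):
    """Two-sample t statistic with pooled variance (assumes equal variances)."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


before, after = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]  # illustrative losses only
t_student, df_student = student_t(before, after)
t_welch, df_welch = welch_t(before, after)
```

With equal group sizes, as here, the two t statistics coincide and only the degrees of freedom (6 versus about 4.41) differ; with unequal group sizes and variances, the statistics differ as well.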

Methodology

We employ two types of linear probability models, relying on (i) difference-in-differences and (ii) regression discontinuity designs. All models are implemented in Python with the statsmodels module (Seabold & Perktold, 2010), use weighting as described in the “Weighting” section, and employ robust standard errors.Footnote 8

Difference-in-differences

A difference-in-differences design compares the outcomes of two groups over time; one group receiving a treatment and one group not receiving it. Assuming that outcomes in the two groups, absent any treatment, would have followed parallel trends, any difference over time between the relative group differences can be ascribed to the treatment. The approach has been heavily shaped by works within labor economics (see, e.g., works by Card and Krueger (1994); Ashenfelter (1978); Ashenfelter and Card (1985)). For a general introduction, we refer to Angrist and Krueger (1999).

Let \(y_{i}\in \{0,1\}\) denote whether client i is a fraud victim (\(y_{i}=1\)) or a baseline client (\(y_{i}=0\)). Our basic difference-in-differences model is given by

$$\begin{aligned} y_{i}=\beta _{0}+\beta _{1}\textit{Time}_{i}+\beta _{2}\textit{Warn}_{i}+\beta _{3}(\textit{Warn}_{i}\times \textit{Time}_{i})+\varepsilon _{i}, \end{aligned}$$(2)

where \(\beta _{0}\in \mathbb {R}\) is an intercept, \(\beta _{1},\beta _{2},\beta _{3}\in \mathbb {R}\) are regression coefficients, \(\varepsilon _{i}\) is an error term, and

- \(\textit{Time}_{i}\in \{0,1\}\) denotes whether client i is observed before (\(\textit{Time}_{i}=0\)) or after (\(\textit{Time}_{i}=1\)) the warning message was sent, and
- \(\textit{Warn}_{i}\in \{0,1\}\) denotes whether client i is in the group that received the warning message (\({\textit{Warn}_{i}=1}\)) or not (\({\textit{Warn}_{i}=0}\)). Notably, we record \(\textit{Warn}\) without regard for the time of feature recording (see the “Data” section).
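Without control variables, a model regressing the fraud indicator on Time, Warn, and their interaction is saturated in the four (Warn, Time) cells, so its weighted least squares estimate of the Warn × Time coefficient equals a difference in differences of weighted cell means. The sketch below illustrates this identity; the function name and the (y, warn, time, weight) tuple layout are hypothetical. Once controls are added, the identity no longer holds, and a full weighted regression (e.g., statsmodels’ `WLS` fitted with a heteroskedasticity-robust `cov_type`) is needed.

```python
from collections import defaultdict


def did_estimate(rows):
    """rows: iterable of (y, warn, time, weight) tuples.

    Returns (m11 - m10) - (m01 - m00), where m_wt is the weighted mean of y
    in the cell with warn=w and time=t.
    """
    weighted_y = defaultdict(float)
    total_w = defaultdict(float)
    for y, warn, time, weight in rows:
        weighted_y[(warn, time)] += weight * y
        total_w[(warn, time)] += weight
    m = {cell: weighted_y[cell] / total_w[cell] for cell in total_w}
    return (m[(1, 1)] - m[(1, 0)]) - (m[(0, 1)] - m[(0, 0)])


did_rows = [
    (0, 0, 0, 1.0), (1, 0, 0, 1.0),  # warn=0, time=0: weighted mean 0.5
    (1, 0, 1, 2.0), (0, 0, 1, 2.0),  # warn=0, time=1: weighted mean 0.5
    (0, 1, 0, 2.0), (1, 1, 0, 2.0),  # warn=1, time=0: weighted mean 0.5
    (1, 1, 1, 3.0), (0, 1, 1, 1.0),  # warn=1, time=1: weighted mean 0.75
]
beta3 = did_estimate(did_rows)
```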

We progressively add control variables (i.e., features from the “Features” section) to the model in Eq. 2. In this connection, a correlation of 0.66 between \(Age_{i}\) and \(Warn_{i}\) might raise concerns about multicollinearity. To address these concerns, we report variance inflation factors in Appendix E (see James et al. (2021) for an introduction). Disregarding interaction terms (and features directly involved in interaction terms), our variance inflation factors indicate that multicollinearity is not a cause of concern for any of our models. We note that models employing our \(\textit{Educ}\) feature (with the one-hot encoding given in the “Features” section) use fewer observations than our other models (as we drop observations with missing data). Our primary interest is always the treatment effect on the treated group, i.e., the coefficient associated with \(\textit{Warn}\times \textit{Time}\).
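To see why a correlation of 0.66 need not be alarming on its own, recall that the variance inflation factor of a regressor is 1/(1 − R²), where R² comes from regressing that regressor on the other regressors. In a hypothetical model containing only Age and Warn, R² is simply their squared correlation, giving a VIF of about 1.77, well below commonly cited thresholds such as 5 or 10. This is an illustration only; the VIFs in Appendix E come from the full models, for which statsmodels provides `statsmodels.stats.outliers_influence.variance_inflation_factor`.

```python
def vif_from_r2(r_squared):
    """Variance inflation factor 1 / (1 - R^2) from an auxiliary regression's R^2."""
    return 1.0 / (1.0 - r_squared)


# With only Age and Warn as regressors, the auxiliary R^2 is their squared correlation.
vif_age_warn = vif_from_r2(0.66 ** 2)
```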

Regression Discontinuity

A regression discontinuity design employs the idea that observations within some bandwidth of a threshold are similar; except that those above it receive a treatment while those below do not. The approach has been heavily shaped by works on education (see, e.g., Thistlethwaite and Campbell (1960); Angrist and Krueger (1991); Angrist and Lavy (1999)). For a general introduction, we refer to Imbens and Lemieux (2008).

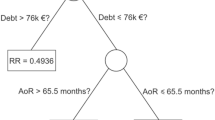

Our regression discontinuity design is heavily inspired by Imbens and Lemieux (2008) and employs local linear estimation without a kernel (but uses weighting to account for undersampling). We discard all observations recorded before the warning message was sent. Furthermore, we only consider clients within a bandwidth \(h^{*}\) of being 40 years old (see the “Bandwidth Selection” section). Due to non-compliance (see Fig. 6), we employ a fuzzy design (Roberts & Whited, 2013). Let \(\textit{Chron}_{i}\) denote client i’s age when the warning message was sent minus the message threshold (i.e., 40).Footnote 9 Recall that \(\textit{Warn}_{i}\in \{0,1\}\) denotes whether client i received the warning message. First, we fit

$$\begin{aligned} \textit{Warn}_{i}=\mu _{0}+\mu _{1}\textit{Chron}_{i}+\mu _{2}\mathbbm {1}_{\{\textit{Chron}_{i}\ge 0\}}+\mu _{3}\textit{Chron}_{i}\mathbbm {1}_{\{\textit{Chron}_{i}\ge 0\}}+\nu _{i}, \end{aligned}$$(3)

where \(\mu _{0}\in \mathbb {R}\) is an intercept, \(\mu _{1},\mu _{2},\mu _{3}\in \mathbb {R}\) are regression coefficients, \(\nu _{i}\) is an error term, and \(\mathbbm {1}_{\{\textit{Chron}_{i}\ge 0\}}\) is a standard indicator function. Recall, furthermore, that \(y_{i}\in \{0,1\}\) denotes whether client i is a fraud victim. Second, we fit

$$\begin{aligned} y_{i}=\rho _{0}+\rho _{1}\textit{Chron}_{i}+\rho _{2}\textit{WarnPred}_{i}+\rho _{3}\textit{Chron}_{i}\mathbbm {1}_{\{\textit{Chron}_{i}\ge 0\}}+\varepsilon _{i}, \end{aligned}$$(4)

where \(\rho _{0}\in \mathbb {R}\) is an intercept, \(\rho _{1},\rho _{2},\rho _{3}\in \mathbb {R}\) are regression coefficients, \(\varepsilon _{i}\) is an error term, and \(\textit{WarnPred}_{i}\) is a prediction calculated from the fitted model in Eq. 3.

As in our difference-in-differences setup, we progressively add control variables to both Eqs. 3 and 4. This includes our \(\textit{Educ}\) feature, though it means using fewer observations (as we drop observations with missing data). In all specifications, our primary interest is the local average treatment effect (at 40 years of age), i.e., the coefficient associated with \(\textit{WarnPred}\).
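A minimal sketch of the two-step fuzzy design follows. It assumes a common local linear specification in the spirit of Imbens and Lemieux (2008): the first stage regresses Warn on an intercept, Chron, the indicator 1{Chron ≥ 0}, and Chron × 1{Chron ≥ 0}, and the second stage replaces the indicator with WarnPred. The helper names are hypothetical, and a plain normal-equations solver stands in for statsmodels. The point estimates match two-stage least squares, but standard errors from a naively fitted second stage are not valid 2SLS standard errors, so inference requires an instrumental-variables routine.

```python
def wls(X, y, w):
    """Weighted least squares: solve (X'WX) b = X'Wy by Gaussian elimination."""
    k = len(X[0])
    A = [[sum(wi * row[r] * row[c] for row, wi in zip(X, w)) for c in range(k)]
         for r in range(k)]
    b = [sum(wi * row[r] * yi for row, yi, wi in zip(X, y, w)) for r in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))  # partial pivoting
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, k):
            factor = A[r][col] / A[col][col]
            for c in range(col, k):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    coef = [0.0] * k
    for r in reversed(range(k)):
        coef[r] = (b[r] - sum(A[r][c] * coef[c] for c in range(r + 1, k))) / A[r][r]
    return coef


def fuzzy_rd(chron, warn, y, weights, h):
    """Two-step fuzzy RD within bandwidth h; returns the second-stage coefficients."""
    keep = [i for i, c in enumerate(chron) if -h <= c <= h]

    def design_row(c, middle):
        above = 1.0 if c >= 0 else 0.0
        return [1.0, c, middle, c * above]  # intercept, slope, middle term, slope change

    w = [weights[i] for i in keep]
    X1 = [design_row(chron[i], 1.0 if chron[i] >= 0 else 0.0) for i in keep]
    mu = wls(X1, [warn[i] for i in keep], w)  # first stage
    warn_pred = [sum(m * x for m, x in zip(mu, row)) for row in X1]
    X2 = [design_row(chron[i], wp) for i, wp in zip(keep, warn_pred)]
    return wls(X2, [y[i] for i in keep], w)  # second stage; index 2 is WarnPred


chron = [-3.0, -2.0, -1.0, -0.5, 0.5, 1.0, 2.0, 3.0, 10.0]
warn = [1.0 if c >= 0 else 0.0 for c in chron]
y = [0.1 + 0.02 * c + 0.3 * wv + 0.01 * c * wv for c, wv in zip(chron, warn)]
rho = fuzzy_rd(chron, warn, y, weights=[1.0, 2.0] * 4 + [1.0], h=5)
```

The toy data form a sharp design (Warn equals the indicator) with noiseless outcomes, so the second stage recovers the coefficient 0.3 on WarnPred exactly; the observation at Chron = 10 lies outside the bandwidth and is ignored.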

Bandwidth Selection

To select a bandwidth for our regression discontinuity models, we employ a slightly modified version of the cross-validation procedure by Imbens and Lemieux (2008). Let \(\textit{WarnPred}_{i}\) and \(\hat{y}_{i}\) denote predictions from Eqs. 3 and 4, respectively. Our procedure considers symmetric bandwidths \(h=11,\dots ,20\),Footnote 10 uses \(K=10\)-fold cross-validation, and goes as follows:

1. Consider clients with \(-h\le \textit{Chron}_{i}\le h\) (i.e., within h years of being 40 years old).
2. Construct K folds of the data.
3. For each fold \(k=1,\dots ,K\):
   1. estimate Eqs. 3 and 4 using all observations except those in fold k;
   2. using the observations \(i=1,\dots ,n_{k}\) in fold k, calculate the mean squared errors

      $$\begin{aligned} CV_{W}(h,k)=\frac{1}{n_{k}}\sum _{i=1}^{n_{k}}(\textit{Warn}_{i}-{\textit{WarnPred}_{i}})^{2} \text { and } CV_{Y}(h,k)=\frac{1}{n_{k}}\sum _{i=1}^{n_{k}}(y_{i}-\hat{y}_{i})^{2}. \end{aligned}$$(5)

4. Finally, calculate the cross-validated mean squared errors associated with h,

   $$\begin{aligned} CV_{W}(h)=\frac{1}{K}\sum _{k=1}^{K}CV_{W}(h,k) \text { and } CV_{Y}(h)=\frac{1}{K}\sum _{k=1}^{K}CV_{Y}(h,k). \end{aligned}$$(6)

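We select the smallest bandwidth \(h^{*}\) implied by the cross-validation procedure, i.e., the smaller of the two bandwidths that minimize the criteria in Eq. 6:

$$\begin{aligned} h^{*}=\min \left\{ \mathop {\mathrm {arg\,min}}\limits _{h}CV_{W}(h),\ \mathop {\mathrm {arg\,min}}\limits _{h}CV_{Y}(h)\right\} . \end{aligned}$$(7)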

Results from our procedure yield an optimal bandwidth \( h^{*}=17\). For simplicity, we do not change the bandwidth as we add control variables. In all tables with model results, we report the number of clients used to run our analysis (i.e., the number of clients falling within the bandwidth).
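The cross-validation procedure can be sketched as below. This is an illustration under assumptions: `fit_predict` is a hypothetical callback that, in the paper’s setting, would fit Eqs. 3 and 4 (with weights) on the training folds and predict on the held-out fold; the demo plugs in a deliberately simple stand-in model (side-specific means) so that the block runs on its own. Folds are formed by a seeded shuffle, a detail the text does not specify.

```python
import random
from statistics import mean


def cv_bandwidth(data, fit_predict, bandwidths=range(11, 21), k_folds=10, seed=0):
    """K-fold cross-validation over symmetric bandwidths.

    data: list of (chron, warn, y) tuples.
    fit_predict(train, test) -> (warn_preds, y_preds) for the test observations.
    Returns h* = min(argmin CV_W, argmin CV_Y) and the two CV curves.
    """
    cv_w, cv_y = {}, {}
    for h in bandwidths:
        sub = [d for d in data if -h <= d[0] <= h]         # step 1: restrict to bandwidth
        idx = list(range(len(sub)))
        random.Random(seed).shuffle(idx)
        folds = [idx[k::k_folds] for k in range(k_folds)]  # step 2: K folds
        errs_w, errs_y = [], []
        for fold in folds:                                 # step 3: leave one fold out
            held_out = set(fold)
            train = [sub[i] for i in idx if i not in held_out]
            test = [sub[i] for i in fold]
            warn_pred, y_pred = fit_predict(train, test)
            errs_w.append(mean((t[1] - p) ** 2 for t, p in zip(test, warn_pred)))
            errs_y.append(mean((t[2] - p) ** 2 for t, p in zip(test, y_pred)))
        cv_w[h], cv_y[h] = mean(errs_w), mean(errs_y)      # step 4: average over folds
    h_w = min(cv_w, key=cv_w.get)  # ties resolve to the smaller bandwidth
    h_y = min(cv_y, key=cv_y.get)
    return min(h_w, h_y), cv_w, cv_y


def side_means(train, test):
    """Stand-in model: predict the training mean on each side of the threshold."""
    def side_mean(j, above):
        return mean(t[j] for t in train if (t[0] >= 0) == above)
    return ([side_mean(1, t[0] >= 0) for t in test],
            [side_mean(2, t[0] >= 0) for t in test])


data = [(c / 2, int(c >= 0), float(c % 7 == 0)) for c in range(-50, 51)]
h_star, cv_w, cv_y = cv_bandwidth(data, side_means)
```

In the toy data, Warn equals the threshold indicator, so the Warn criterion is zero for every bandwidth and the tie resolves to the smallest candidate, h = 11.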

Weighting

We use weighting to account for the fact that our baseline clients are undersampled. The approach is inspired by a maximum-likelihood interpretation of linear regression: we weight each observation in inverse proportion to the probability that it is included in our sample. Specifically, we weight each baseline observation by

$$\begin{aligned} w_{0}=\frac{N-f}{n-f}, \end{aligned}$$(8)

where n denotes the total number of observations in a sample, f denotes the number of fraud cases in said sample, and N denotes our population size (i.e., the total number of clients at the bank).Footnote 11 All fraud observations are simply weighted by \(w_{1}=1\), as all known fraud victims are included in our data.

We use the weighting scheme in all our regression models, i.e., in Eqs. 2, 3, and 4 (including when we calculate our regression discontinuity bandwidth \(h^{*}\), as per the “Bandwidth Selection” section). Notably, both n and f change depending on the model specification we consider (i.e., how many observations are available).
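Assuming that the baseline weight is the inverse of the sampling rate of non-victims, \(w_{0}=(N-f)/(n-f)\), with fraud observations weighted by 1, the weights can be computed and sanity-checked as below. Because the bank’s client count N is confidential, the value used here is a made-up placeholder, and the function name is hypothetical. A useful property of this form is that the weighted share of fraud victims in the sample equals f/N, the population share, which is the purpose of reweighting a choice-based sample.

```python
def baseline_weight(n, f, population):
    """Weight for a baseline observation: inverse of the non-victim sampling rate.

    n: observations in the sample, f: fraud cases in the sample,
    population: number of clients at the bank (N). Fraud cases get weight 1.
    """
    return (population - f) / (n - f)


N_PLACEHOLDER = 100_000  # hypothetical; the real N is confidential
w0 = baseline_weight(n=1447, f=247, population=N_PLACEHOLDER)
weighted_fraud_share = 247 * 1.0 / (247 * 1.0 + (1447 - 247) * w0)
```

With these numbers, each of the 1200 baseline clients stands in for about 83 non-victims, and the weighted fraud share equals 247/100,000.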

Results

Results from our difference-in-differences setup are given in Table 5. As a robustness check, we also run all models on observations recorded only 3 months before and 3 months after the warning message was sent. As one might expect (see the “Related Literature on Financial Fraud” section), age generally appears to increase fraud susceptibility. Having a partner, on the other hand, generally appears to decrease fraud susceptibility. The latter result holds for all our difference-in-differences models except Model 14, which incorporates all features and only considers data recorded 3 months before and after the warning message was sent (with a corresponding reduction in the number of observations). The result might indicate that people with a partner discuss suspicious contacts with their partner, which may help them avoid falling victim to fraud (though more research is needed to support this). In relation to our treatment of interest, i.e., the bank’s warning message, all models display insignificant treatment effects on the treated group (i.e., insignificant \(\textit{Warn}\times \textit{Time}\) coefficients). Disregarding interaction terms (and features directly involved in interaction terms), our variance inflation factors indicate that multicollinearity is not a cause of concern for any of our models (see Appendix E). Results using unweighted models (all showing insignificant treatment effects) are included in Appendix F.

Table 6 displays results from our regression discontinuity setup. In all models, the local average treatment effect associated with the warning message (i.e., the coefficient associated with \(\textit{WarnPred}\)) is insignificant. Disregarding interaction terms (and features directly involved in interaction terms), our variance inflation factors indicate that multicollinearity is not a cause of concern for any of our models (see Appendix E). Results using unweighted models (all showing insignificant treatment effects) are included in Appendix F.

As argued in the “Data” section, non-delivery and romance fraud may differ from the other types of fraud in our data. As a robustness check, we run our models on data where (i) all victims of non-delivery fraud and (ii) all victims of non-delivery and romance fraud are dropped (see Appendix G). All models still show insignificant treatment effects.

Discussion and Conclusion

We consider a fraud awareness campaign by a systemically important Danish bank, targeting clients over 40 years of age with a mass warning message. To evaluate the campaign’s effect, we use two different quasi-experimental designs. Our difference-in-differences design compares clients before and after the warning message was sent (assuming parallel trends over time). Our regression discontinuity design compares clients within a bandwidth of being 40 years old (assuming that these are comparable). Our study employs data on all known fraud victims at the bank and a sample of “baseline” clients (i.e., clients assumed not to have been defrauded). We consider a multitude of models, controlling for age, sex, relationship status, financial funds, residence, and (for a subset of our data) education. No model finds any evidence that the message had a significant effect. Robustness checks, excluding non-delivery and romance fraud (as these might differ from other types of fraud), also fail to find any significant effect.

Our results indicate that the considered campaign, relying on mass messaging, had little effect in terms of reducing financial fraud. This may be due to the particular message or to how it was delivered. The message was, however, in line with recommendations from Cross and Kelly (2016), who argue that warnings should not be too specific or overwhelm clients with details. At the same time, the message did not warn about particular types of fraud, a strategy that may be more effective, as shown by Scheibe et al. (2014). Our results might prompt one to question whether mass messaging campaigns are worthwhile endeavors for banks and public authorities. Moreover, skepticism about other types of awareness campaigns (e.g., TV spots) might be warranted. Limitations of our study include a lack of information on whether clients opened and read the warning message. This, however, illustrates a fundamental challenge of fraud awareness campaigns (as opposed to direct interventions): it is impossible to guarantee that people will pay attention to, or even notice, them. It might also explain why our results contrast with those showing that direct counseling or educational interventions can reduce fraud susceptibility, such as AARP Foundation (2003), Scheibe et al. (2014), and Burke et al. (2022). We stress that our study does not take revictimization into account. Furthermore, the warning message in our study was sent in late September 2020, so our data collection period spans March 2020 to March 2021, during the COVID-19 pandemic. This might influence our results, though Denmark saw varying degrees of restrictions both before and after the warning message was sent.

Data Availability

Data is not available due to confidentiality.

Notes

This way, we avoid a potential time-problem in our analysis; it could be that the warning message prompted some victims to report frauds that happened before the warning message was sent.

Most fraud cases are opened soon after the first fraudulent transaction. In our data, the median time between when a case is opened and the first transaction equals approximately 1 day, the mean 5 days, and the standard deviation 14 days.

This means that we drop two fraud cases, opened at the start of our data collection period, both involving transactions that occurred (just) more than 6 months before the warning message was sent.
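
The timing rule in these notes can be sketched as follows, under three assumptions that the notes do not spell out: (i) a case is dated by its first fraudulent transaction rather than by when it was reported, (ii) the window extends six calendar months on each side of the message, and (iii) a transaction on the message day counts as post-message. The function names are hypothetical, and `MESSAGE_DATE` is a placeholder, since the text only states that the message was sent in late September 2020.

```python
import calendar
from datetime import date
from typing import Optional


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the last day of the month."""
    idx = d.month - 1 + months
    year, month = d.year + idx // 12, idx % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def assign_period(first_transaction: date, message_date: date,
                  window_months: int = 6) -> Optional[str]:
    """Label a fraud case 'pre' or 'post' by its first fraudulent transaction.

    Returns None when the transaction falls outside the window (the case is dropped).
    """
    start = add_months(message_date, -window_months)
    end = add_months(message_date, window_months)
    if not start <= first_transaction <= end:
        return None
    # Assumption: a transaction on the message day counts as post-message.
    return "post" if first_transaction >= message_date else "pre"


# Hypothetical placeholder; the text only says "late September 2020".
MESSAGE_DATE = date(2020, 9, 28)
```

Dating cases by transaction rather than by report is what guards against the reporting effect described in the first of these notes.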

By an active account, we mean that at least one transaction must have been made to or from the account in the data collection period.

In fact, the jump right after the warning message might indicate the opposite. We are aware of anecdotes from fraud experts describing how criminals actively use warning messages in their scams, referring to them when trying to convince victims to provide personal information.

A plot pertaining to our Funds feature is omitted; the data is kept confidential in agreement with the data-providing bank.

For larger cases involving more than simple online banking (e.g., multiple misused credit cards), our data on fraud losses is associated with a high degree of uncertainty.

Note the subtle but principal difference between a client’s age (i) when the warning message was sent and (ii) at the time of our feature recording (see the “Data” section). We use the notation Chron (short for chronological) to stress the difference.
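
The distinction matters for the regression discontinuity design, because a client can be 39 when the message is sent but 40 when features are recorded. A minimal sketch of computing age in completed years at a given date follows; `age_on` is a hypothetical helper, and both dates in the example are placeholders (the text gives only “late September 2020” for the message).

```python
from datetime import date


def age_on(birth_date: date, on: date) -> int:
    """Age in completed years on the date `on`."""
    # Subtract one year if the birthday has not yet occurred in `on`'s year.
    had_birthday = (on.month, on.day) >= (birth_date.month, birth_date.day)
    return on.year - birth_date.year - (0 if had_birthday else 1)


# Hypothetical placeholder dates; the text only says "late September 2020".
MESSAGE_DATE = date(2020, 9, 28)
RECORDING_DATE = date(2021, 3, 1)

birth = date(1980, 10, 15)
chron = age_on(birth, MESSAGE_DATE)       # 39: age when the message was sent
recorded = age_on(birth, RECORDING_DATE)  # 40: age at a later feature recording
```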

We do not consider values \(h<11\) to ensure a sufficient number of observations.
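
The bandwidth \(h\) restricts the regression discontinuity sample to clients whose age lies within \(h\) years of the cutoff. As an illustration only, the sketch below computes one common local linear estimate: a separate line on each side of the cutoff with a uniform kernel, unweighted and without the controls used in the paper. The defaults `cutoff=40` and `h=17` mirror the age cutoff and the optimal bandwidth \(h^{*}=17\) reported in the appendices; treating “over 40” as strictly greater than 40, and the function names, are assumptions.

```python
from typing import List, Sequence, Tuple


def _fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("need at least two distinct ages on each side of the cutoff")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def rd_jump(ages: Sequence[float], outcomes: Sequence[float],
            cutoff: float = 40, h: float = 17) -> float:
    """Local linear RD estimate of the jump in P(fraud) at the age cutoff.

    Only clients with |age - cutoff| <= h are kept (uniform kernel), and a
    separate line is fitted on each side of the cutoff.
    """
    left_x, left_y, right_x, right_y = [], [], [], []
    for age, y in zip(ages, outcomes):
        x = age - cutoff  # centered running variable
        if abs(x) > h:
            continue  # outside the bandwidth
        if age > cutoff:  # assumption: "over 40" means strictly above the cutoff
            right_x.append(x)
            right_y.append(y)
        else:
            left_x.append(x)
            left_y.append(y)
    if not left_x or not right_x:
        raise ValueError("no observations on one side of the cutoff")
    a_left, _ = _fit_line(left_x, left_y)
    a_right, _ = _fit_line(right_x, right_y)
    # Difference of the two fitted values at the cutoff (x = 0).
    return a_right - a_left
```

Fitting each side separately is equivalent to a pooled regression with a treatment dummy, the centered running variable, and their interaction.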

We measure N by considering all clients over 18 years of age with an active account at any point in the data collection period and an open account when the warning message was sent. The exact number is kept confidential in agreement with the data-providing bank.

References

AARP Foundation. (2003). Off the hook: Reducing participation in telemarketing fraud. Retrieved November 8, 2023, from https://assets.aarp.org/rgcenter/consume/d17812_fraud.pdf.

Angrist, J. D., & Krueger, A. B. (1991). Does compulsory school attendance affect schooling and earnings? The Quarterly Journal of Economics, 106(4), 979–1014.

Angrist, J. D., & Krueger, A. B. (1999). Chapter 23 - Empirical strategies in labor economics. In O. C. Ashenfelter & D. Card (Eds.), Handbook of labor economics (Vol. 3, pp. 1277–1366). https://doi.org/10.1016/S1573-4463(99)03004-7

Angrist, J. D., & Lavy, V. (1999). Using Maimonides’ rule to estimate the effect of class size on scholastic achievement. The Quarterly Journal of Economics, 114(2), 533–575. Retrieved May 4, 2023, from http://www.jstor.org/stable/2587016.

Ashenfelter, O. (1978). Estimating the effect of training programs on earnings. The Review of Economics and Statistics, 60(1), 47–57. Retrieved May 4, 2023, from http://www.jstor.org/stable/1924332.

Ashenfelter, O., & Card, D. (1985). Using the longitudinal structure of earnings to estimate the effect of training programs. The Review of Economics and Statistics, 67(4), 648–660. Retrieved November 8, 2023, from http://www.jstor.org/stable/1924810.

Bennett, D. A. (2001). How can I deal with missing data in my study? Australian and New Zealand Journal of Public Health, 25(5), 464–469.

Burke, J., Kieffer, C., Mottola, G., & Perez-Arce, F. (2022). Can educational interventions reduce susceptibility to financial fraud? Journal of Economic Behavior & Organization, 198, 250–266. https://doi.org/10.1016/j.jebo.2022.03.028

Button, M., & Cross, C. (2017). Cyber frauds, scams and their victims. Taylor & Francis.

Card, D., & Krueger, A. B. (1994). Minimum wages and employment: A case study of the fast-food industry in New Jersey and Pennsylvania. The American Economic Review, 84(4), 772–793. Retrieved April 25, 2023, from http://www.jstor.org/stable/2118030.

Choi, K., Lee, J.-L., & Chun, Y.-T. (2017). Voice phishing fraud and its modus operandi. Security Journal, 30, 454–466. https://doi.org/10.1057/sj.2014.49

Cross, C., & Kelly, M. (2016). The problem of “white noise”: Examining current prevention approaches to online fraud. Journal of Financial Crime, 23(4), 806–818. https://doi.org/10.1108/JFC-12-2015-0069

Danish Agency for Digital Government. (2023). Three generations of eID in Denmark. Retrieved November 8, 2023, from https://en.digst.dk/systems/mitid/three-generations-of-eid-in-denmark.

Danish Ministry of Higher Education and Science. (2021). Qualifications framework for lifelong learning. Retrieved September 2, 2022, from https://ufm.dk/en/education/recognition-and-transparency/transparency-tools/qualifications-frameworks.

Danmarks Nationalbank. (2022). Denmark is among the most digitalised countries when it comes to payments. Retrieved February 22, 2022, from https://www.nationalbanken.dk/media/mujcrjnf/analysis-nr-2-denmark-is-among-the-most-digitalised-countries-when-it-comes-to-payments.pdf.

Engels, C., Kumar, K., & Philip, D. (2020). Financial literacy and fraud detection. The European Journal of Finance, 26(4–5), 420–442. https://doi.org/10.1080/1351847X.2019.1646666

Gamble, K. J., Boyle, P., Yu, L., & Bennett, D. (2014). The causes and consequences of financial fraud among older Americans. Boston College Center for Retirement Research WP, 13. https://doi.org/10.2139/ssrn.2523428

Gotelaere, S., & Paoli, L. (2022). Prevention and control of financial fraud: A scoping review. European Journal on Criminal Policy and Research. https://doi.org/10.1007/s10610-022-09532-8

Han, D., Boyle, P., James, B., Yu, L., & Bennett, D. (2016). Mild cognitive impairment and susceptibility to scams in old age. Journal of Alzheimer’s Disease, 49(3), 845–851. https://doi.org/10.3233/JAD-150442

Hoffmann, A. O. I., & Birnbrich, C. (2012). The impact of fraud prevention on bank-customer relationships: An empirical investigation in retail banking. International Journal of Bank Marketing, 30, 390–407. https://doi.org/10.1108/02652321211247435

Imbens, G. W., & Lemieux, T. (2008). Regression discontinuity designs: A guide to practice. Journal of Econometrics, 142(2), 615–635. https://doi.org/10.1016/j.jeconom.2007.05.001

James, B., Boyle, P., & Bennett, D. (2014). Correlates of susceptibility to scams in older adults without dementia. Journal of Elder Abuse & Neglect, 26, 107–122. https://doi.org/10.1080/08946566.2013.821809

James, G., Witten, D., Hastie, T., & Tibshirani, R. (2021). An introduction to statistical learning: With applications in R (2nd ed.). New York, NY: Springer.

Kircanski, K., Notthoff, N., DeLiema, M., Samanez-Larkin, G., Shadel, D., Mottola, G., & Gotlib, I. (2018). Emotional arousal may increase susceptibility to fraud in older and younger adults. Psychology and Aging, 33, 325–337. https://doi.org/10.1037/pag0000228

Levi, M., Doig, A., Luker, J., Williams, M., & Shepherd, J. (2023). Towards a public health approach to frauds. Retrieved November 8, 2023, from https://www.westmidlands-pcc.gov.uk/wp-content/uploads/2023/04/Fraud-report-Vol-2-PCC-final.pdf?x57454.

Lichtenberg, P. A., Stickney, L., & Paulson, D. (2013). Is psychological vulnerability related to the experience of fraud in older adults? Clinical Gerontologist, 36(2), 132–146. https://doi.org/10.1080/07317115.2012.749323

Lichtenberg, P. A., Sugarman, M. A., Paulson, D., Ficker, L. J., & Rahman-Filipiak, A. (2016). Psychological and functional vulnerability predicts fraud cases in older adults: Results of a longitudinal study. Clinical Gerontologist, 39(1), 48–63. https://doi.org/10.1080/07317115.2015.1101632

MacKinnon, J. G., & White, H. (1985). Some heteroskedasticity-consistent covariance matrix estimators with improved finite sample properties. Journal of Econometrics, 29(3), 305–325. https://doi.org/10.1016/0304-4076(85)90158-7

Medaglia, R., Hedman, J., & Eaton, B. (2017). Public-private collaboration in the emergence of a national electronic identification policy: The case of NemID in Denmark. In T. Bui & R. Sprague (Eds.), Proceedings of the 50th Hawaii International Conference on System Sciences, HICSS 2017 (pp. 2782–2791). https://doi.org/10.24251/HICSS.2017.336

Poppleton, S., Lymperopoulou, K., & Molina, J. (2021). Who suffers fraud? Understanding the fraud victim landscape. The Victims’ Commissioner. Retrieved November 8, 2023, from https://cloud-platform-e218f50a4812967ba1215eaecede923f.s3.amazonaws.com/uploads/sites/6/2021/12/VC-Who-Suffers-Fraud-Report-1.pdf.

Prenzler, T. (2020). What works in fraud prevention: A review of real-world intervention projects. Journal of Criminological Research, Policy and Practice, 6(1), 83–96. https://doi.org/10.1108/JCRPP-04-2019-0026

Roberts, M. R., & Whited, T. M. (2013). Chapter 7 - Endogeneity in empirical corporate finance. In G. M. Constantinides, M. Harris, & R. M. Stulz (Eds.), Handbook of the economics of finance (Vol. 2, pp. 493–572). https://doi.org/10.1016/B978-0-44-453594-8.00007-0.

Scheibe, S., Notthoff, N., Menkin, J., Ross, L., Shadel, D., Deevy, M., & Carstensen, L. L. (2014). Forewarning reduces fraud susceptibility in vulnerable consumers. Basic and Applied Social Psychology, 36(3), 272–279. https://doi.org/10.1080/01973533.2014.903844

Seabold, S., & Perktold, J. (2010). Statsmodels: Econometric and statistical modeling with Python. In S. van der Walt & J. Millman (Eds.), Proceedings of the 9th Python in Science Conference (pp. 92–96). Version 0.13.5. https://doi.org/10.25080/Majora-92bf1922-011

Smith, R. G., & Akman, T. (2008). Raising public awareness of consumer fraud in Australia. Trends and issues in crime and criminal justice, (349). Retrieved November 8, 2023, from https://www.aic.gov.au/publications/tandi/tandi349.

Spar Nord. (2023). Spar Nord annual report 2022. Retrieved February 9, 2023, from https://media.sparnord.dk/com/investor/financial_communication/reports/2022/annual-report-2022.pdf.

Statistics Denmark. (2023). It-anvendelse i befolkningen 2022 [IT use in the population 2022]. Only available in Danish. Retrieved November 8, 2023, from https://www.dst.dk/Site/Dst/Udgivelser/GetPubFile.aspx?id=44692&sid=itbef2022.

Swedish Bankers’ Association. (2023). Svårlurad. Retrieved November 8, 2023, from https://svarlurad.se/en/.

Thistlethwaite, D. L., & Campbell, D. T. (1960). Regression-discontinuity analysis: An alternative to the ex post facto experiment. Journal of Educational Psychology, 51(6), 309–317.

UK Finance. (2023). Take five. Retrieved April 11, 2023, from https://www.takefive-stopfraud.org.uk/.

U.S. Immigration and Customs Enforcement. (2023). HSI launches new awareness campaign for digital romance scams on Valentine’s Day. Retrieved February 15, 2023, from https://www.ice.gov/news/releases/hsi-launches-new-awareness-campaign-digital-romance-scams-valentines-day.

Wang, F., Howell, C. J., Maimon, D., & Jacques, S. (2020). The restrictive deterrent effect of warning messages sent to active romance fraudsters: An experimental approach. CrimRxiv. https://doi.org/10.21428/cb6ab371.c6eae022.

Funding

Open access funding provided by Aarhus Universitet.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A Original Version of Warning Message

The original warning message sent by Spar Nord, in Danish, can be found in Fig. 5.

B Detailed Description of Educational Encoding

Our \(\textit{Educ}\) feature is encoded by hand, using free-text descriptions of occupational and educational histories, primarily written by bank advisors. Encoding is done using the Qualifications Framework of the Danish Ministry of Higher Education and Science (2021), which places educations on ordinal levels 1–8 (see Table 2). We group these levels such that levels 1–3 (anything less than a high school degree) yield \(\textit{Educ}=0\), levels 4–5 (including a high school degree) yield \(\textit{Educ}=1\), and levels 6–8 (including a university degree) yield \(\textit{Educ}=2\). If a client has multiple educations, we record the highest level. For many clients, encoding is straightforward. For instance, a professional degree in nursing equals level 6, yielding \(\textit{Educ}=2\). For some clients, however, we only have adequate occupational (but not educational) histories. In such cases, we try to infer a client’s education from their occupational history (acknowledging that this is an imprecise approach). For example, a client who has worked as a licensed plumber for 25 years almost certainly has vocational training in plumbing (level 4, yielding \(\textit{Educ}=1\)). In cases of doubt (or completely missing information), we encode \(\textit{Educ}\) as missing. In total, this occupational inference is used for over half of all observations where \(\textit{Educ}\) is assigned a value (i.e., where the feature is not missing). We observe that educational and occupational histories tend to be lacking for clients with little bank involvement (e.g., no loans or investments). Furthermore, we believe that our educational and occupational histories are of lower quality for clients with less education. Thus, \(\textit{Educ}\) is not missing completely at random (see Bennett (2001) for an introduction to different types of missing data). The degree to which it is missing at random, versus missing not at random, depends on its relationship to our other features. While \(\textit{Educ}\) does correlate with our other features (see Table 4), we do not investigate this further, stressing that \(\textit{Educ}\) is only used as a control variable.
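
The grouping rule above can be written as a small function. This is a sketch under the assumption that each education in a client’s history has already been assigned a framework level; the hand-coding step itself, including the occupational inference, is not automated here. The name `encode_educ` is hypothetical.

```python
from typing import Iterable, Optional


def encode_educ(levels: Iterable[int]) -> Optional[int]:
    """Map Qualifications Framework levels (1-8) to the three-group Educ feature.

    Returns None (missing) when no level could be assigned.
    """
    levels = list(levels)
    if not levels:
        return None  # doubtful or completely missing information
    for lvl in levels:
        if not 1 <= lvl <= 8:
            raise ValueError(f"level {lvl} is outside the framework's range 1-8")
    highest = max(levels)  # multiple educations: keep the highest level
    if highest <= 3:
        return 0  # less than a high school degree
    if highest <= 5:
        return 1  # e.g., high school degree or vocational training
    return 2  # e.g., professional or university degree
```

With this mapping, the nursing example (level 6) gives 2 and the plumber example (level 4) gives 1.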

C Regression Discontinuity Statistics

In preparation for our regression discontinuity setup, we provide a number of statistics in Tables 7 and 8, considering observations recorded after the warning message was sent.

D Regression Discontinuity Plots

In preparation for our regression discontinuity setup, we provide a number of plots (Figs. 6, 7, 8, 9, 10, and 11), considering observations recorded after the warning message was sent.

E Variance Inflation Factors

To address concerns about multicollinearity, we include variance inflation factors associated with each feature in our primary (weighted) models (Tables 9 and 10).
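
For readers who want to reproduce this kind of diagnostic, a minimal pure-Python sketch follows. It relies on the standard identity that, for a model with an intercept, the variance inflation factor of regressor \(j\) equals the \(j\)-th diagonal element of the inverse of the regressors’ correlation matrix, i.e. \(1/(1-R_j^2)\), where \(R_j^2\) comes from regressing regressor \(j\) on the others. The sketch is unweighted, so it would not reproduce the weighted figures in Tables 9 and 10, and the function `vifs` is hypothetical. Statsmodels, which the paper cites, also provides `variance_inflation_factor` in `statsmodels.stats.outliers_influence`; the text does not state whether it was used.

```python
import math
from typing import Dict, List, Sequence


def _corr(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equally long sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    if saa == 0 or sbb == 0:
        raise ValueError("a regressor has zero variance")
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return sab / math.sqrt(saa * sbb)


def _invert(m: List[List[float]]) -> List[List[float]]:
    """Invert a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(m)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular (perfect multicollinearity)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(columns: Dict[str, Sequence[float]]) -> Dict[str, float]:
    """Variance inflation factor of each regressor (intercept assumed in the model)."""
    names = list(columns)
    cols = [columns[name] for name in names]
    corr = [[1.0 if i == j else _corr(a, b) for j, b in enumerate(cols)]
            for i, a in enumerate(cols)]
    inv = _invert(corr)
    # VIF_j = [R^{-1}]_{jj} = 1 / (1 - R_j^2)
    return {name: inv[i][i] for i, name in enumerate(names)}
```

Values close to 1 indicate that a regressor is nearly uncorrelated with the others; common rules of thumb flag values above 5 or 10.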

F Unweighted Models

As a robustness check, we include unweighted model estimates in Tables 11 and 12. Note that we rerun our bandwidth selection procedure (see the “Bandwidth Selection” section); the optimal bandwidth remains \(h^{*}=17\).

G Excluding Non-delivery and Romance Fraud

As a robustness check, we run all (weighted) models on data where (i) all victims of non-delivery fraud are dropped (see Tables 13 and 14) and (ii) all victims of non-delivery and romance fraud are dropped (see Tables 15 and 16). Note that, for both (i) and (ii), we rerun our bandwidth selection procedure (see the “Bandwidth Selection” section); the optimal bandwidth remains \(h^{*}=17\) in both cases.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jensen, R.I.T., Gerlings, J. & Ferwerda, J. Do Awareness Campaigns Reduce Financial Fraud? Eur J Crim Policy Res (2024). https://doi.org/10.1007/s10610-024-09573-1

DOI: https://doi.org/10.1007/s10610-024-09573-1