Abstract

Presently, most business-to-consumer interaction uses consumer profiling to elaborate and deliver personalized products and services. It has been observed that these practices can be welfare-enhancing if properly regulated. At the same time, the risks related to their abuse are present and significant, and it is no surprise that in recent times, personalization has found itself at the centre of the scholarly and regulatory debate. Within existing and forthcoming regulations, a common perspective can be found: given the capacity of microtargeting to potentially undermine consumers’ autonomy, the success of the regulatory intervention depends primarily on people being aware of the personality dimension being targeted. Yet, existing disclosures are based on an individualized format, focusing solely on the relationship between the professional operator and its counterparty; this approach stands in contrast to sociological studies that consider interaction and observation of peers to be essential components of decision making. A consideration of this “relational dimension” of decision making is missing both in consumer protection and in the debate on personalization. This article argues that consumers’ awareness and understanding of personalization and its consequences could be improved significantly if information were offered in a relational format; accordingly, it reports the results of a study conducted in the streaming service market, showing that when information is presented in a relational format, people’s knowledge and awareness about profiling and microtargeting are significantly increased. The article further argues for the potential of relational disclosure as a general paradigm for advancing consumer protection.


Preliminary Considerations

It is widely acknowledged that the majority of business-to-consumer (B2C) interaction is based on consumer profiling and that individuals’ data are increasingly used as a tool to elaborate and deliver personalized products and services (Helberger, 2016).

With the development of automated analysis strategies and AI-based techniques, firms are able to personalize different aspects of commercial interaction, ranging from the modes of the offer—e.g., via behavioural advertising and microtargeting (Boerman et al., 2017)—to the prices (Ezrachi & Stucke, 2016; Wallheimer, 2018) and even the specific features of products (Domurath, 2019).

In general terms, it has been observed (Article 29 Data Protection Working Party, 2017) that these practices are—or at least can be—welfare-enhancing if they are properly regulated. From the perspective of the firm, the personalization of B2C interaction by means of profiling can help manage the costs for production, simplify the identification of the target market, and support the development of effective commercial strategies and advertising. As far as consumers are concerned, profiling reduces information search costs, helps identify desired products, and—if certain conditions are met—enhances consumers’ access to goods and services by allowing for price discrimination (Zuiderveen Borgesius & Poort, 2017).

At the same time, risks related to unregulated abuse of personalized commercial practices are present and significant: using personalizing technologies to match individual users to target audiences and even to create predictive profiles might result inter alia in violation of users’ data protection and privacy, unjust discrimination based on the analysis of protected factors, and manipulation of consumers’ decision making to the detriment of competitors (Wachter, 2020).

Also—from a macro perspective—the growing relevance of data as a functional element being incorporated into personalized commercial interactions has been identified as a major power shift asset for Big Tech and platforms able to leverage economies of scale related to data processing and therefore to acquire overwhelming market power (Graef, 2015; Petit & Teece, 2021).

These risks operate at the crossroads of different interests and rights related to individuals and to the market as a whole; it is no surprise, therefore, that in recent times, profiling and microtargeting have found themselves at the centre of the scholarly and regulatory debate across the USA and Europe (Busch, 2019).

In the European framework, as far as existing bodies of law are concerned, the capability of the General Data Protection Regulation [Footnote 1] (GDPR) to provide effective regulation of the data management and processing methods implemented in profiling algorithms has been thoroughly inspected (Dobber et al., 2019; Eskens, 2016; Malgieri, 2021; Wachter, 2018); also, competition law was identified as a potential tool to tackle the structural distortions caused by personalized practices occurring at market level (Ezrachi & Stucke, 2019; Mundt, 2020; Picht & Tazio Loderer, 2019).

Personalized practices are also given major consideration in regulatory projects currently under development. The manipulative capacity of AI is identified as a pivotal risk in the Proposal for a Regulation on Artificial Intelligence (AI Act or AIA) [Footnote 2]; the AIA expressly forbids the use of AI techniques that deploy subliminal methods to materially distort a person’s behaviour or that exploit the vulnerabilities of a specific group of persons to cause them physical or psychological harm (Art. 5(a) and (b)). In light of recent debates, it is possible that even a ban of these technologies might be approved in the near future, especially when they are used in extremely delicate areas related to the preservation of the democratic process and fundamental rights, as in the case of political microtargeting (Eskens et al., 2017).

Yet, given the currently intense use of profiling in the commercial context, and the potential of tailored market practices to limit the autonomy of consumers in choosing and identifying products of their interest or, in general, in their transactional decision making, a substantive body of literature exists that is focused on the role that consumer and private law can play in empowering individuals against these phenomena.

As far as private law is concerned, the impact of tailored interactions has been scrutinized under the lens of rules on defective consent (Davola, 2021); on the other hand, when examining the role that consumer law can play in tackling the risks connected to unregulated personalization of commercial interactions, the framework laid down by the Unfair Commercial Practices Directive (UCPD) [Footnote 3] and by the Directive on Consumer Rights [Footnote 4] (especially in light of the innovations proposed in the New Deal for Consumers [Footnote 5] and the amendments subsequently introduced by the Modernization Directive [Footnote 6]) has been of particular interest (Galli, 2021; Hacker, 2021; Laux et al., 2021; Sartor et al., 2021).

Within such a heterogeneous framework, a common perspective across the different regulations seems nevertheless identifiable: given the capacity of microtargeting to potentially undermine consumers’ autonomy, the success of the regulatory intervention depends primarily on people being aware of the personality dimension being targeted. Enabling consumers to understand what platforms do with their data and what users’ choices imply and to then translate this knowledge into measurable behaviours (e.g., by prompting people to adjust their privacy settings) is identified as an essential step towards regaining autonomy and promoting genuine self-determination (Lorenz-Spreen et al., 2021).

As a consequence—and consistent with the traditional role that information is acknowledged to have in consumer law—disclosure rules arose as a cornerstone in regulating microtargeting; various bodies of law mandate that consumers are provided with information regarding the personalized nature of what they observe on the market, and this approach is likely to be followed in forthcoming regulations as well.

At the same time, it should be underlined that information-based strategies are not free from concern, and doubts have been cast over their functioning. This is mostly because consumers experience a significant number of vulnerabilities and biases when assessing the relevance and quality of information: individuals systematically suffer from cognitive biases, experience information overload, and are overall less efficient in understanding information than the rationalist economic analysis of decision making postulates (Jacoby, 1984; Kahneman, 2011; Malhotra, 1982; Thaler, 1985). In order to overcome these shortcomings, scholars have argued that information should be limited to a minimum, functional amount (Ayres & Schwartz, 2014; Marotta-Wurgler, 2012; Sunstein, 2020), that biases should be taken into account when designing disclosure in order to unconsciously stimulate pro-social behaviours (Alemanno & Sibony, 2015; Hacker, 2016; Thaler & Sunstein, 2008), or that a more prominent role should be given to market supervision over consumers’ empowerment (Larsson, 2018). Yet, even if there is wide consensus regarding the shortcomings of information obligations, they still constitute a primary recommendation for legislative actions, with the regulation of personalized practices being no exception (BEUC, 2021).

Alongside, recent contributions have also focused on the format of disclosures: Legal design studies aim at providing effective and empowering information by re-designing their structure and simplifying them by using tools related to visualization and graphics (Corrales Compagnucci et al., 2021; Hagan, 2016; Rossi et al., 2019).

Nonetheless, it is worth observing that all attempts to rethink disclosure across regulations are still based on the individualized format of the model, focusing solely on the relationship between the professional operator and its counterparty that characterizes existing rules. For example, when a disclosure duty regarding profiling is present, a company must inform the consumer about which of her data it is using, that she is being profiled, and that the price she receives is indeed personalized.

This approach operates in contrast with sociological studies that consider interaction and observation of the surrounding environment to be essential components of decision making: growing evidence shows that people do not learn from their own personal experience only, but also from watching what their peers do and what happens to them when they do it. In particular, social learning theory (SLT) relies on the idea that people learn from their interactions with others in social contexts and that observing third parties’ experiences is a determinant to becoming aware of phenomena (Bandura, 1977). Interestingly—if the conditions allow for peer observation—this effect also takes place in online environments (Hill et al., 2009). Over the years, the SLT framework has been employed to investigate—among other issues—the consumption dynamics for different sets of goods and services such as digital content, energy, and traditional physical products (respectively Kent & Rechavi, 2020; Moretti, 2011; Wilhite, 2014), as well as for the development of policy strategies related to governance models, crime prevention measures, and public expenditure (Castaneda & Guerrero, 2019; Nicholson & Higgins, 2017; Hall, 1993).

A consideration of what we might call the “relational dimension” of decision making is, however, largely absent from the current framework for consumer protection, and it is missing from the debate on regulating personalization as well. This aspect is particularly problematic when we consider that the creation of micro-segmented markets, in which consumers are not able to observe their peers and compare respective choices, is a structural element in personalized practices. Consumers exposed to a personalized offer for a product only see a minor (individually created) subset within the whole assortment of products of the same kind that are present on the market; no information is provided about what is happening to other people, what they are seeing, and at which conditions and prices. Hence, consumers are deprived of a general understanding of the state of the market and the behaviour of their peers, which might be significant for them to develop purchase preferences consciously and autonomously.

Building on SLT, we argue that the introduction of a relational dimension in consumers’ decision making regarding personalized practices (i.e., the creation of a condition in which consumers are able to compare their own personalized offers to what is being offered to individuals with similar or different characteristics) can significantly improve consumers’ awareness. For such a situation to occur, information should be offered in a relational format, that is, a form allowing for comparison and peer observation.

In our view, such an amendment would promote effective transparency in terms of consumers’ understanding of personalization and its consequences, enabling them to better fathom what platforms do with their data, how profiling algorithms process them, and what their choices imply. Accordingly, we envisioned a system of contextualized disclosure and tested it in the provision of personalized services. This article reports the results of a study conducted in the streaming service market, in which we show that when information is presented in a relational format, people’s knowledge and awareness about profiling and microtargeting are significantly increased; consumers understand with better clarity what these techniques are and how they function, and are consequently more open to evaluating whether they want their information to be acquired and, eventually, to reconsidering the transactions that require them to provide data. Overall, and considering the need for further research in different settings, the study provides a first set of evidence suggesting that relational disclosure could arise as a regulatory paradigm able to advance consumer protection in the digital environment.

The “Disclosure as a Regulatory Tool in Consumer Law” section begins by describing the conceptual background behind the research hypothesis, briefly reflecting on the role of disclosure as a regulatory strategy in consumer law and in addressing personalization. The “The Absence of a “Relational” Concept in the EU Disclosure Framework and Its Relevance in Regulating Personalized Interactions” section then highlights the individualized nature of disclosure rules in existing and in-development EU laws that are relevant to the topic of personalization; it then introduces—drawing from SLT—the concept of “relational disclosure” and highlights its relevance in the context of personalized interactions. The “Testing Relational Disclosure on Consumers: a Study on Personalized Services” section provides the article’s core contribution by reporting the design, methods, and results of our online experiment: We show that employing a system of relational disclosure in the field of personalized services has a major effect on consumers’ subjective understanding of these techniques and their willingness to disclose (WTD) their data. The “Discussion” section looks at the implications of our findings for consumer law, in order to argue that the introduction of a “relational” approach that improves people’s competence to detect and understand personalization should be part of ongoing policy developments aimed at increasing platforms’ transparency and users’ autonomy. Lastly, the “Towards a General Paradigm of Relational Disclosure? Limitation of the Study, Perspective for Further Research, and Concluding Remarks” section illustrates the current limitation of the study, laying the ground for further research to be conducted in the field of relational disclosure, and explores the possibility of extending our findings in order to establish a general paradigm of relational disclosure.

Disclosure as a Regulatory Tool in Consumer Law

Disclosure duties are a major cornerstone in consumer protection. European directives contemplate long lists of standardized information that companies must provide to consumers before, throughout, and after the provision of goods and services (Busch, 2016). Mandatory information pertains to the main characteristics of marketed products and services, to the obligations of the provider, and to the rights to which her counterparty is entitled; information must be given regarding a product’s expected functioning, its modes of use, and its conformity with the contract (Carvalho, 2019; Wilhelmsson & Twigg-Flesner, 2006). More generally, consumer law requires that a consumer be given all the information deemed relevant for her transactional decision making (see, e.g., Art. 5 UCPD). Such information must be provided in a clear, correct, and comprehensible way; therefore, information that is incomplete, misleading, factually incorrect, or likely to cause errors on the side of the consumer can trigger a set of remedies in her favour and, eventually, actions by consumer organizations and administrative authorities (Whittaker, 2008). Besides public sanctions, remedies against misinformation are heterogeneous, ranging inter alia from the general voidability of the contract to the non-application of a specific clause, and from establishing a right of withdrawal and compensation in favour of the consumer to shifting the burden of proof and liability regime to the producer’s side (Micklitz, 2013; Wilhelmsson, 2003).

The reasons behind consumer law’s heavy reliance on disclosure duties as a means of protection have been thoroughly examined by legal scholars. First, duties to inform are a “cheap” regulatory tool: once they are created, extending their scope or content does not usually require significant expenditure or economic resources (Seizov et al., 2019). In addition, disclosure rules are perceived as less invasive than interventions that directly constrain behaviours, such as command-and-control policies; therefore, they only occasionally face strong opposition from industry and usually enjoy bipartisan political support. Furthermore, and in accordance with the neoclassical theory of decision making and with the conception of consumers as homines oeconomici, information is expected to reduce search costs and to enable market actors to better identify the products and services they are interested in. From a market perspective, increased availability of information is also supposed to lead to better pricing and, at the same time, to foster competitiveness on the market, as it reduces the information asymmetry that professionals enjoy over consumers (Beales et al., 1981; Fung et al., 2007; Marotta-Wurgler, 2012; Schwartz & Wilde, 1979).

Even if, due to these expectations, disclosure duties emerged as a founding feature of consumer law, in recent years, the abovementioned rationale for their adoption has been strongly disputed: many behavioural and experimental scholars argue that disclosure is largely overrated as a regulatory and empowerment tool, since consumers systematically experience cognitive biases and information overload, and, more generally, display bounded rationality when processing information for decision-making purposes (Bar-Gill, 2008; Gigerenzer & Selten, 2001; Simon, 1982). Hence, it has been questioned whether the quantity of information provided to a consumer and her awareness thereof should be deemed to be strictly related or whether, on the contrary, after reaching a certain threshold, providing additional information should be considered relatively insignificant or even detrimental to decision making (Issacharoff, 2011).

Yet, and even if EU institutions acknowledged that behavioural studies could provide insightful evidence regarding the modes and effectiveness of disclosure obligations—and have critically re-evaluated their utility (see Baggio et al., 2021)—information duties are still dominant in consumer law.

This can be observed also when regulation specifically devoted to profiling and personalized commercial practices is considered; as we will observe in short, EU regulation heavily employs disclosure (alongside reporting to public authorities and supervision) as a regulatory tool to empower consumers when dealing with microtargeting, tailored advertisement, and, more generally, when they are subject to processes based on automated decision making including profiling.

It goes beyond the scope of this article to discuss whether disclosure as a whole constitutes a viable and effective strategy for consumer protection, or whether it should rather be reconsidered at its roots. It is undeniable, though, on the basis of the abovementioned studies, that disclosure can be framed more effectively and that choosing among different framings is likely to have a substantial impact on the level of consumer protection.

The Absence of a “Relational” Concept in the EU Disclosure Framework and Its Relevance in Regulating Personalized Interactions

Whereas it is now commonly understood that many consumer behaviours do not arise from purely rational or analytical considerations, consumer law rarely takes into account the fact that choices are also the result of insights coming from relations and observation. Theories related to the sociology of consumption acknowledge that interaction with the environment constitutes an essential aspect of any learning process and that the observation of peers is a major force in experience development (Bandura, 1986; Hoffman, 1994).

In particular, SLT holds that, besides personal, lived experience, individuals learn primarily from analysing what their peers are doing and from comparing their choices to what they would do in analogous or similar situations. In this sense, conduct should not be considered exclusively as an individual action but rather as a social act that shapes the behaviour and the experiences of the surrounding community (Crane, 2008).

Accordingly, awareness would operate by means of a three-way learning relationship, in which personal factors and cognitive competencies are intertwined with the analysis of the regulatory environment (comprising social norms) and with the observation of the behaviour of other people (McGregor, 2009). Eventually, social learning theorists argue that oftentimes people can learn through observation alone, without a need for first-hand experience. As a consequence, consumers should not be conceived as atomistic, independent, decision-making units but rather as being shaped in their choices by interaction with the social environment (Chen et al., 2017; McGregor, 2006).

Marketing studies support this narrative too. With consumers often regarding shopping as a social experience (Huang & Benyoucef, 2013), scholars have been long aware of the social dimension of consumer decision making and its potential: research on social commerce and information management shows that access to social knowledge and experience (as in the case of online reviews) allows for the clear identification of purchase interest, as well as informed and accurate consumption decisions (Lorenzo et al., 2012).

As far as legal studies are concerned, such a discourse is not unknown to the sociology and philosophy of law (Ambrosino, 2014). With specific regard to the debates that have occurred in the field of consumer law, some scholars have put forth similar ideas in the discourse on libertarian paternalism and nudging (Thaler & Sunstein, 2003), especially where peer observation has been seen as a resource to promote socially desirable behaviours. Also, Helberger et al. (2021) recently identified relationality as a structural characteristic of consumers’ digital vulnerability.

If, as these studies maintain, informed decision making requires the diffusion of information related to peers and their experiences to allow consumers to examine and (eventually) revise their beliefs and preferences, the risks arising from personalized practices emerge with clarity.

When stringent profiling techniques—and the subsequent tailoring of product and services offered—are employed, consumers are exposed to individually crafted advertising based on their preferences and interests. Everyone sees a (different, individually created) subset within the whole assortment of products of the same kind that are present on the market and is not able to observe what is shown to her peers. This is likely to profoundly affect general understanding regarding the state of the market and consumers’ conscious decision making overall. Furthermore, this effect weakens the ability of consumers to recognize and understand the way profiling algorithms can craft what is offered to them, as they are deprived of a yardstick. Also, even if a website allows for the use of comparing tools within their systems—such as the “product related to this item” function employed by Amazon—this option is always functional to the platform’s market strategy (e.g., promoting sponsored products) and no information is provided to the consumer regarding why those specific elements are shown for comparison.

If the learning process encompasses the observation of similar and different group entities and individuals as a structural means to infer information that can guide decision making, then the pervasive market segmentation determined by personalization operates as an exogenous effect that potentially undermines consumers’ consciousness and awareness.

Indirect support for this consideration stems from studies examining the appropriateness of profiling and microtargeting for political messages: scholars inspecting the effects and risks of political filter bubbles underline that uncontrolled profiling might enhance polarization and undermine critical thinking (Balkin, 2018; Crain & Nadler, 2019; Micklitz et al., 2021; Zuiderveen Borgesius et al., 2018) and, as the events surrounding Cambridge Analytica made clear, many voices have asserted that confrontation with other views is needed for rationalization and informed decision making (Rhum, 2021).

Against this background, though, disclosure rules pertaining to profiling and microtargeting generally disregard the social dimension of decision making. The abovementioned Modernization Directive amends Directive 2011/83/EU by clarifying that consumers must be informed if the price of products they are offered is personalized on the basis of automated decision making (Art. 4(4)(ii)(ea)). According to EU institutions, the fact that consumers are clearly informed that the price is personalized is in fact sufficient “to take into account the potential risks in their purchasing decision” (see Recital 45 of the Modernization Directive). If and when personal data are processed, the GDPR requires users’ consent to subject them to a decision based solely on automated processing, including profiling (Art. 22) and, in order to ensure this consent is well informed, the data subject needs to be informed of the existence of such techniques, the logic involved, their significance, and the envisaged consequences of such processing (Art. 13). Once again, this model of disclosure is deemed sufficient to enable the informed decision of the consumer (acting in her capacity as data subject), as well as her ability to enforce the rights awarded by the Regulation.

The situation does not change when looking at more recent regulations, where it is possible to distinguish between proposals for platform governance and interventions specifically aimed at regulating artificial intelligence. As for the first group, the recently approved Digital Services Act [Footnote 7] indirectly regulates certain aspects of profiling when such a technique is used to craft personalized advertisements; under the DSA (Art. 24(c)), online platforms must provide their recipients meaningful information regarding the main parameters used to determine to whom a specific advertisement is displayed. In addition (Art. 30(2)(d)), very large online platforms must create publicly available repositories including information on whether the advertisements they show were intended to be displayed specifically to one or more particular groups of recipients of the service and, if so, to illustrate the main parameters used for that purpose. On the other hand, the Digital Markets Act (DMA) introduces disclosure obligations related to microtargeting and profiling only towards the EU institutions. According to the DMA (Art. 13), gatekeepers shall, in fact, submit to the Commission independently audited descriptions of any technique for profiling of consumers that they apply to or across their core platform services. No information obligation, therefore, is established in direct favour of consumers.

With regard to proposals regulating artificial intelligence, profiling and microtargeting are taken into account in the AI Act as indirect outcomes of AI-based practices: according to the AIA, when a high-risk AI (defined in accordance with Art. 6 of the Act) is employed, users must be informed about the characteristics, capabilities, and limitations of performance of such a system, including inter alia information about its intended purpose, level of accuracy, and performance as regards the persons or groups of persons on which the system is intended to be used (Art. 13(3)). It should be observed that even if this obligation is referred to deployers of AI systems developed by third parties and therefore does not mandate information to be directly provided to consumers, this approach still heavily relies on information as the main tool for empowerment regarding personalization.

As can be observed, both existing and in-development bodies of law, while generally acknowledging that consumers must be advised about profiling and personalization, conceive the provision of information as a fundamentally individualized interaction; all disclosures pertain either to neutral aspects concerning the technology (how the AI or the algorithm is designed, what are the purposes of personalization, the fact that profiling and personalization techniques are implemented in the first place, etc.) or the characteristics and data of the user that are employed through the processing. This assumption is maintained even in those proposals arguing in favour of more personalized disclosures (Ben-Shahar & Porat, 2021; Porat & Strahilevitz, 2021). No “relational” information—useful for allowing the consumer to put the personalized outcome in context—must be provided.

In contrast with this approach, we argue that SLT can operate as a credible theoretical framework for advancing consumers’ awareness of profiling and personalization. In particular, we argue that an integrated perspective informed by SLT can assist in designing disclosures that are effective for consumers’ empowerment and that a relationally attentive disclosure can empower individuals regarding the features and functioning of personalization.

Testing Relational Disclosure on Consumers: A Study on Personalized Services

In order to understand how effective a system of relational disclosure would be in raising consumers’ empowerment regarding the characteristics and functioning of personalized interactions, our intention was to replicate an interaction that consumers have with a service based on profiling. Hence, we recruited participants on Prolific, and simulated their involvement in the beta testing of a new streaming media service (“Stream Now,” hereinafter SN).

In the first phase of the study, the participants were informed that they were involved in the development of SN, a media service offering subscription-based videos on demand from a library of movies. Participants were also informed that SN provides personalized movie recommendations based on users’ characteristics and preferences. Hence, we asked participants to identify the three movie genres they like the most (in no particular order) and the three genres they like the least (in no particular order). Study participants also answered various demographic questions including age, gender, and education. At the end of this phase, participants were informed that they could be contacted in the forthcoming weeks in order to participate in the subsequent phases of the SN beta test. After this phase, participants were compensated via Prolific.

In the second phase of the study, participants were exposed to traditionally intended individual (i.e., recommendations for movies based on the consumer’s preferences only) vs. “relational” (i.e., recommendations for movies based on the consumer’s preferences, but also for movies suggested to randomly selected users with different preferences and tastes) types of disclosure (TOD). This was done to observe how consumers would react to different TODs and how a relational TOD would impact consumers’ interaction with AI-based products, both in terms of subjective understanding of how personalization operates and willingness to provide information (willingness to disclose, WTD) for the sake of subsequent profiling by the platform.

Across the experiment, we tested the following hypotheses:

- H1: Presenting relational TOD increases consumers’ WTD more than providing them with individual, traditional, TOD.

- H2: A condition of increased WTD, in response to the presentation of relational TOD, is mediated by consumers’ subjective understanding of how AI-based personalization operates.

Overview of the Study

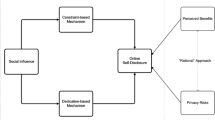

We conducted an online experimental study using Prolific, an online platform that enables large-scale data collection by connecting researchers to respondents who participate in the studies in exchange for money (Palan & Schitter, 2018; Paolacci et al., 2010). We followed Sauter et al.’s (2020) protocol for ensuring high-quality responses on Prolific (e.g., questionnaire length). The study tests H1 and H2 (see Fig. 1) and aims at demonstrating that TOD affects WTD. Specifically, when TOD is provided in a relational format, the WTD increases compared to when TOD is presented in an individual format. In addition, the study seeks to demonstrate that this effect occurs through the mediation of participants’ subjective understanding of how AI-based personalization operates.

The study identifies AI-enabled TV-streaming services as its empirical context; such a venue is particularly appropriate to test consumers’ interaction with personalized recommendations since these services are commonly used by consumers (Baine, 2021), who are therefore already familiar with their characteristics and with the fact that these platforms collect and aggregate extensive personal data to generate personalized recommendations (Gomez-Uribe & Hunt, 2015). Furthermore, we include two control variables in the study to address their potential influence on WTD: ownership of a subscription to an AI-enabled TV-streaming service and data collection concerns. To avoid any confounding effects of brand familiarity or brand awareness, the study uses a fictitious brand by the name of Stream Now (SN).

Method

Participants and Study Design

We recruited 246 US respondents via Prolific and asked them to participate in a 10-min survey in exchange for money. Each participant received £1.10 once the survey was completed. Five of the respondents were excluded from the sample because their answers were incomplete, leaving 241 respondents (see Table 1 for more details about sociodemographic information). Respondents were selected on the basis of a preliminary survey run on Prolific. The preliminary survey lasted 3 min, and each participant received £0.30. In the preliminary survey, 783 respondents were asked to engage in an imaginative task about a possible subscription to SN and to simulate the subscription for the 1-month free trial, providing their preferences regarding movie genres. These preferences were used to categorize respondents. Respondents reporting similar preferences were then invited to take part in the study and were randomly assigned to one of the two TOD (individual vs. relational) conditions. Figure 2 illustrates the process through which participants were selected.

Procedure

In the preliminary survey, all respondents read a passage introducing SN, a newly released AI-enabled TV-streaming service offering subscription-based video on demand from a library of movies; they were also informed that SN is equipped with an AI algorithm that would leverage users’ personal data to provide personalized movie recommendations.

Then, respondents were prompted to engage in an imaginative task, hypothesizing that they were considering subscribing to SN and that they decided to subscribe to SN for a one-month free trial. They were then told that they had to provide information about their movie preferences to SN to start using the service. Therefore, participants were asked to select three movie genres that interested them most and three movie genres they liked least, choosing from a list of nine movie genres (i.e., comedy, action, crime, drama, fantasy, horror, romance, thriller, and sci-fi).

The aim of this survey was twofold. First, it was important to involve participants in a credible experience of subscribing and receiving a personalized recommendation. Second, this survey permitted the identification and selection of participants with similar tastes in order to simulate the functioning of a profiling system that delivers its recommendation using a relational TOD.

The preliminary survey showed that the most-liked movie genre among participants was comedy and the least liked was horror; hence, participants who reported comedy among their three most-liked genres and horror among their three least liked were selected and invited to participate in the main study. The information arising from the preliminary survey and that retrieved from the analysis of the functioning of actual AI-powered streaming service companies’ websites—e.g., Netflix, NowTV (Johnson, 2017)—was also used to craft the realistic stimuli for the 10-min study. In the 10-min study, participants were exposed to the stimuli reflecting one of the two TOD conditions (Fig. 3); then, they provided information regarding their subjective understanding (3-item, 7-point Likert scale, e.g., “My understanding of how Stream Now’s recommendation algorithm elaborates users’ data is complete,” 1 = “strongly disagree,” 7 = “strongly agree”) and WTD (5-item, 7-point Likert scale, e.g., “I am willing to continue to provide my personal information to Stream Now,” 1 = “strongly disagree,” 7 = “strongly agree”). For more details about the operationalization and measurement of constructs, see Table 2.
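The selection rule and the random assignment described above can be illustrated with a minimal sketch. The respondent records, the identifiers, and the helper names `is_eligible` and `assign_conditions` are hypothetical, and the alternating allocation after shuffling is an assumption made here to keep the two groups balanced; the article only states that assignment was random.

```python
import random


def is_eligible(liked, disliked):
    """Selection rule from the text: comedy among the three most-liked
    genres and horror among the three least-liked genres."""
    return "comedy" in liked and "horror" in disliked


def assign_conditions(participant_ids, seed=0):
    """Randomly assign participants to the two TOD conditions.

    Assumption: participants are shuffled and then allocated alternately,
    which keeps group sizes within one of each other.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {
        pid: ("individual" if i % 2 == 0 else "relational")
        for i, pid in enumerate(shuffled)
    }


# Hypothetical preliminary-survey answers (illustrative only).
responses = {
    "p1": {"liked": {"comedy", "action", "drama"},
           "disliked": {"horror", "romance", "sci-fi"}},
    "p2": {"liked": {"horror", "thriller", "crime"},
           "disliked": {"comedy", "romance", "fantasy"}},
    "p3": {"liked": {"drama", "comedy", "fantasy"},
           "disliked": {"thriller", "horror", "crime"}},
    "p4": {"liked": {"comedy", "romance", "sci-fi"},
           "disliked": {"action", "crime", "drama"}},
}

eligible = sorted(
    pid for pid, r in responses.items()
    if is_eligible(r["liked"], r["disliked"])
)
assignment = assign_conditions(eligible, seed=42)
print(eligible)
print(assignment)
```

In this toy data set only respondents who satisfy both conditions are retained; a respondent who likes comedy but does not list horror among the least-liked genres is left out.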

The ownership of a subscription to an AI-powered TV-streaming service was assessed via a multiple-choice question asking participants to declare whether they already owned a subscription to a video-on-demand service.

Participants also provided insights about their data collection concerns with reference to companies’ requests for and collection of personal information (4-item, 7-point Likert scale, e.g., “It usually bothers me when companies ask me for personal information,” 1 = “strongly disagree,” 7 = “strongly agree”).

Lastly, to assess the correct functioning of the manipulation of TOD, i.e., the independent variable, we conducted a manipulation check. In other words, we assessed whether the manipulation of TOD represented the precise concept we had in mind (i.e., the concept of individual vs. relational TOD), and therefore whether the manipulation is related to a direct measure of the variable we wanted to alter (Cook et al., 1979; Festinger, 1953; Perdue & Summers, 1986). In our case, TOD has been manipulated by representing the outcome of the recommendation produced by SN’s algorithm in two different ways: individual vs. relational TOD. Specifically, as Fig. 3 shows, we created two images representing the users’ homepages for SN, and each image represented one experimental condition. In the image about the individual TOD condition, we reported the movies that the SN’s algorithm recommends based on the user’s preferences, while in the image about the relational TOD condition, we reported the movies that the SN’s algorithm recommends based on the user’s preferences and on the preferences of users who are different from her in terms of movie tastes. Through our manipulation check, we wanted to determine whether participants in the individual TOD condition rated their level of agreement (on a scale from 1 = “strongly disagree” to 7 = “strongly agree”) lower than those in the relational TOD condition when asked whether their SN homepage showed both movies that matched their preferences and movies that did not match their preferences. Finally, all respondents provided demographic information.

Results

Manipulation Check

The manipulation of TOD was successful: respondents in the relational condition perceived the TOD as composed of movie suggestions based on both their own preferences and those of users different from them to a greater extent (MrelationalTOD = 5.96, SD = 0.99) than respondents in the individual condition (MindividualTOD = 4.02, SD = 1.05; t(239) = −14.84, p < 0.001).
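As a rough plausibility check, the reported statistic can be approximated from the summary values alone. The sketch below computes a pooled-variance independent-samples t statistic; the group sizes (121 and 120) are an assumption, since only the overall degrees of freedom (239) are reported, and the function name `pooled_t_from_summary` is hypothetical.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's t for two independent groups, computed from summary
    statistics with a pooled variance estimate. Returns (t, df)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Means and SDs are taken from the text. The split of the 241 retained
# respondents into 121 (individual) and 120 (relational) is ASSUMED.
t, df = pooled_t_from_summary(4.02, 1.05, 121, 5.96, 0.99, 120)
print(f"t({df}) = {t:.2f}")
```

Under these assumptions the result lands within a few tenths of the reported value; the remaining gap is to be expected, given that the published means and standard deviations are rounded and the actual group sizes may differ.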

Mediation Analysis

A one-way ANCOVA of the effect of TOD (0 = individual; 1 = relational) on WTD revealed a significant effect (Table 3).

The mean WTD level reported by respondents exposed to the relational TOD condition (MrelationalTOD = 4.98, SD = 1.20) is significantly higher than the mean WTD level reported by those exposed to the individual TOD condition (MindividualTOD = 4.62, SD = 1.26; F(1, 237) = 4.93, p = 0.027, η² = 0.02). These results support H1.
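The reported effect size can be related to the F statistic through the standard identity for partial eta squared. The sketch below assumes that the reported η² is the partial version (the text does not say so explicitly) and that the 237 error degrees of freedom reflect 241 respondents minus two group parameters and two covariates; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic:
    eta_p^2 = (F * df_effect) / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Values from the text: F(1, 237) = 4.93.
eta_p2 = partial_eta_squared(4.93, 1, 237)
print(round(eta_p2, 2))  # 0.02
```

By common rules of thumb an effect of this size is small, which is worth keeping in mind when reading the result as support for H1.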

Then, to assess the mechanism that might explain the relationship between TOD and WTD, we estimated a simple mediation model (Hayes, 2018, PROCESS model 4) with 5,000 bootstrap iterations and bootstrap confidence intervals (CI), in which subjective understanding of how AI-based personalization functions serves as the mediator. The results shown in Fig. 4 and Table 4 indicate a significant indirect effect of TOD on WTD through subjective understanding (bindirect = 0.18, 95% CI: 0.07 to 0.31).

Results of the study: mediation via subjective understanding. Notes: Mediation analysis with 5,000 bootstrap samples (model 4 in PROCESS; Hayes, 2018). Coefficients significantly different from zero are indicated by asterisks (*p < .05; **p < .01; ***p < .001). Non-significant coefficients are indicated by dashed arrows

After accounting for this indirect effect, the direct effect of TOD on WTD is no longer significant (b1 = 0.15, 95% CI: −0.14 to 0.43). That is, relational TOD increases participants’ subjective understanding of how AI-based personalization operates (b2 = 0.73, p < 0.001), which, in turn, increases WTD (b3 = 0.24, p < 0.001).

These results support H2 and clarify how increased WTD results from relational TOD, namely, through a mediation effect by which greater subjective understanding of how AI-based personalization functions increases WTD.
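The logic of this mediation test can be sketched in a few lines. The code below is not the PROCESS macro and does not use the study data: it is a simplified illustration on simulated data, without the two control variables, in which the indirect effect is the product of the path from TOD to understanding and the path from understanding to WTD, with a percentile bootstrap interval. All function names and the simulation parameters are assumptions chosen to echo the reported coefficients (0.73 × 0.24 ≈ 0.18).

```python
import random


def ols_slope(x, y):
    """Slope of y regressed on x (with intercept)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def ols_two_predictors(x, m, y):
    """Coefficients (b_x, b_m) of y regressed on x and m (with intercept),
    obtained by solving the 2x2 normal equations on centred data."""
    n = len(y)
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    det = sxx * smm - sxm ** 2
    return (smm * sxy - sxm * smy) / det, (sxx * smy - sxm * sxy) / det


def indirect_effect(x, m, y):
    """Indirect effect a*b: a is the X -> M path, b the M -> Y path
    controlling for X."""
    a = ols_slope(x, m)
    _, b = ols_two_predictors(x, m, y)
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, seed=0):
    """95% percentile bootstrap interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    while len(estimates) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        xs = [x[i] for i in idx]
        if len(set(xs)) < 2:  # skip degenerate resamples with one condition
            continue
        estimates.append(
            indirect_effect(xs, [m[i] for i in idx], [y[i] for i in idx])
        )
    estimates.sort()
    return estimates[int(0.025 * n_boot)], estimates[int(0.975 * n_boot) - 1]


def simulate(n, a, direct, b, seed=0):
    """Simulated data (NOT the study data): binary condition x,
    mediator m, outcome y, with unit-variance Gaussian noise."""
    rng = random.Random(seed)
    x = [i % 2 for i in range(n)]
    m = [a * xi + rng.gauss(0, 1) for xi in x]
    y = [direct * xi + b * mi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
    return x, m, y
```

A call such as `bootstrap_ci(*simulate(241, 0.73, 0.15, 0.24))` returns the lower and upper bounds of the interval; mediation is supported when the interval excludes zero, which is the criterion applied to the reported interval of 0.07 to 0.31.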

Discussion

The experiment demonstrated that prompting people to reflect on the targeted dimension for personalized services by introducing relational disclosures—instead of the current individual format—boosts their subjective understanding regarding the functioning of personalization algorithms as well as their willingness to adhere to processes based on profiling, e.g., by providing further data to the service platform.

In particular, it was observed that exposing users to relational TODs results in an enhanced understanding regarding the modes and functioning of personalization techniques, as well as in an increased willingness to interact with profiling algorithms.

Both of these aspects are significant for striking a balance between the need to achieve a high level of consumer protection and the interest in not curbing innovations which, while still presenting risks to be addressed, are potentially welfare-enhancing for users and the market as a whole. Thus, as was observed with regard to AI systems more generally (High-Level Expert Group, 2019), the creation of a clear and effective regulatory framework must go hand in hand with interventions aimed at establishing a system of “trustworthy AI.”

In addition, these findings provide further relevant insights from (at least) a twofold perspective. First, and consistent with information design studies (Corrales Compagnucci et al., 2021; Hagan, 2016), they build on the idea that the framing of the information provided is pivotal in promoting consumers’ awareness when making choices regarding personalization techniques; second, this consideration has, of course, a potentially broader relevance for the goal of reaching transparency in online commerce. Boosting individuals’ ability to perceive microtargeting, to understand its meaning, and to consciously decide whether they want to be profiled (and to provide their data for that purpose) is essential in moving from nominal to technical transparency, consistent with the idea that providing information is useful only as long as users are able to engage with such content and understand what it means for them.

Lastly, the study offers valuable insights in terms of policy making, and possible proposals for currently in-development regulations addressing AI and personalization: if the introduction of a “relational” approach can improve people’s competence to detect and understand personalization, and therefore could operate as an amendment to the traditional way of providing disclosures, then encompassing this approach into regulatory interventions could play a key role in increasing platforms’ transparency and users’ autonomy, advancing consumers’ protection in the online environment.

Towards a General Paradigm of Relational Disclosure? Limitations of the Study, Perspectives for Further Research, and Concluding Remarks

The analysis demonstrates that the employment of a system of relational disclosure can enhance users’ understanding of the modes and functioning of personalization features in the case of streaming services.

At the same time, the study is not exempt from limitations that leave a margin for further promising research directions. First, we showed that relational TOD increases consumers’ subjective understanding, which increases WTD. These effects arise along with a significant effect of the control variables (ownership of a subscription and data collection concerns), confirming that consumers’ WTD is in part conditioned by the two variables (Aiello et al., 2020; Cloarec et al., 2022; Martin & Murphy, 2017). However, we did not assess the role of these variables in influencing the effect of TOD on WTD, as this aim is beyond the scope of this work. Future research should investigate whether variables related to consumers’ personality traits or habits, such as the ownership of a subscription to the service, familiarity with the service, or data collection concerns, may moderate the relationship between TOD and WTD.

Second, our study shows that increasing consumers’ intentions to disclose their personal data is possible using relational disclosure. Previous psychological theories have established that the intention to perform a behaviour is a predictor of the actual behaviour (Ajzen, 1991). However, other theories have shown that this is not always true: people’s actual behaviour is not always consistent with the declared intention (Sheeran & Webb, 2016), especially in sharing personal information in AI-powered contexts (Sun et al., 2020). For this reason, in several sectors (e.g., health care and food), scholars prefer to directly measure the actual behaviour rather than the respective intention (de Bekker-Grob et al., 2020; Lizin et al., 2022). Future research should therefore extend the external validity of our study by testing whether consumers’ WTD in this context translates into actual disclosure behaviour, relying on both online scenarios and field experiments.

Third, the experimental approach helps establish causality and high levels of internal validity; we used a fictitious brand to avoid the potentially confounding influence of brand attitudes. Still, in a realistic environment, brand attitudes might affect consumers’ WTD (Daems et al., 2019). Brand-related factors then might moderate the effect of TOD on WTD. Additional research should therefore explore these influences.

Lastly, our experimental approach establishes some degree of generalizability. Our results can be extended to the sector of personalized recommendations in online streaming services, as we conducted the experiment in this context. For further external validity, additional studies might test our hypotheses using other empirical contexts, platforms, or types of data. This aspect is, furthermore, particularly relevant to evaluating whether relational disclosure should be analysed as a general strategy to inform consumer protection in digital markets or, rather, as a sector-specific tool. Despite the achieved results being significant per se, in order to hypothesize the application of relational disclosure as a more comprehensive paradigm in digital interactions, it is in fact necessary to investigate its deployment and functioning in other services and in relation to heterogeneous contexts in which personalization occurs.

Therefore, another aspect worth further investigation concerns the validity of the findings of the study beyond its material scope, that is, evaluating whether relational disclosure can emerge as a general paradigm suitable for incorporation within the traditional understanding of decision making.

While further research will be conducted in the future to empirically assess the effectiveness of relational disclosure in relation to different services, it is nevertheless already possible to hypothesize how disclosures might work in other fields in which personalization is utilized.

An interesting alternative setting—which has already been mentioned in this work—is the case of personalized pricing; as previously clarified, the Modernization Directive currently requires consumers to be informed if the price of products they are offered is personalized on the basis of automated decision making, without providing details regarding the modes of such disclosure. This provision was mostly interpreted as requiring a piece of generic information regarding the personalized nature of the offered price (Loos, 2020), while some scholars recently argued—also by leveraging the rights enshrined in the GDPR—in favour of the existence of a “right to impersonal prices” for consumers (Esposito, 2022).

In our view, in the case of personalized prices, a relational disclosure system could operate, for example, by requiring the business operator to show users, besides the price that is offered to them for the product or service of interest, a randomized sampling of the prices that have been offered to other consumers for that same good within a pre-defined time frame (e.g., a set of ten other prices at which the product was sold in that same year). This way, the consumer would be able to locate herself within the spectrum of offers that have been made based on personalization and to be aware of the functioning of the system. As an alternative setting, it is also conceivable that consumers should be provided with information regarding the most (and the least) frequently offered prices.
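To make the proposal concrete, the following minimal sketch shows one way such a disclosure could be assembled. The function `relational_price_disclosure`, its output fields, and the example prices are all hypothetical; the added "share of other offers below the user's price" is one possible way, assumed here, of helping the consumer locate herself within the spectrum of offers.

```python
import random
from collections import Counter


def relational_price_disclosure(own_price, other_prices, k=10, seed=None):
    """Build a relational price disclosure for one consumer.

    own_price    -- the personalized price offered to this consumer
    other_prices -- prices offered to other consumers for the same good
                    within the chosen time frame
    k            -- number of randomly sampled comparison prices to show
    """
    if len(other_prices) < k:
        raise ValueError("not enough comparison prices for the sample size")
    rng = random.Random(seed)
    counts = Counter(other_prices)
    return {
        "own_price": own_price,
        # Random sample without replacement of other consumers' prices.
        "sampled_prices": rng.sample(list(other_prices), k),
        # Most and least frequently offered prices (ties broken by
        # taking the lower price, an arbitrary but deterministic choice).
        "most_frequent": min(counts, key=lambda p: (-counts[p], p)),
        "least_frequent": min(counts, key=lambda p: (counts[p], p)),
        # Share of other offers strictly below the consumer's own price.
        "share_lower": sum(p < own_price for p in other_prices)
        / len(other_prices),
    }


# Hypothetical offers made to other consumers for the same product.
others = [10.0] * 5 + [11.0] * 4 + [12.0] * 3 + [15.0]
disclosure = relational_price_disclosure(12.0, others, k=10, seed=7)
print(disclosure)
```

The sample size, the time frame, and the choice between a random sample and frequency information are design parameters that would need to be fixed by the regulator or tested empirically.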

As far as the case of disclosures regarding personalized product offers is concerned, a similar system might be employed as well: instead of merely informing the consumer that a product is being shown as the result of profiling, she might be shown the targeted advertisement combined either with random sorting of other search results responding to the same query, or with results sorted based on the search history of other individuals showing different preferences. An exploratory study on online market segmentation through personalized pricing and offers in the EU applying a similar methodology—yet without specifically using relational disclosure—was carried out by the European Commission in 2018 in the car rental market (European Commission, 2018), and it would be possible to capitalize on that first experience to conduct further research and accordingly identify the best format for relational disclosure.

Considering these aspects, it is certainly conceivable for relational disclosure to operate beyond the isolated problem of personalization in the streaming services market and to emerge as a comprehensive paradigm to advance consumer protection, similarly to how, in the current framework, each disclosure (while being intended as a general mode of regulation) is tailored differently on the basis of the category of products and services considered (and subsequently standardized within sets of homogeneous ones). Relational disclosure could then operate in different scenarios depending on the specific application of the personalization and the relevant technology.

Notes

Regulation (EU) 2016/679 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (2016) OJ L 119.

Proposal for a Regulation of the European Parliament and of the Council laying down harmonized rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain Union Legislative Acts Com/2021/206 final.

Directive 2005/29/EC of the European Parliament and of the Council of 11 May 2005 concerning unfair business-to-consumer commercial practices in the internal market and amending Council Directive 84/450/EEC, Directives 97/7/EC, 98/27/EC and 2002/65/EC of the European Parliament and of the Council and Regulation (EC) No 2006/2004 of the European Parliament and of the Council [2005] OJ L 149/22 (UCPD).

Directive 2011/83/EU of the European Parliament and of the Council of 25 October 2011 on consumer rights, amending Council Directive 93/13/EEC and Directive 1999/44/EC of the European Parliament and of the Council and repealing Council Directive 85/577/EEC and Directive 97/7/EC of the European Parliament and of the Council [2011] OJ L 304, 64–88 (hereafter CRD).

Communication from the Commission to the European Parliament, the Council and the European Economic and Social Committee. A new deal for consumers Brussels [2018] COM 183 final.

Directive (EU) 2019/2161 of the European Parliament and of the Council of 27 November 2019 amending Council Directive 93/13/EEC and Directives 98/6/EC, 2005/29/EC and 2011/83/EU of the European Parliament and of the Council as regards the better enforcement and modernisation of Union consumer protection rules [2019] OJ L 328/7 (Modernisation Directive).

Proposal for a Regulation of the European Parliament and of the Council on a Single Market for Digital Services (Digital Services Act) and amending Directive 2000/31/EC (2020)—hereafter, DSA.

References

Aiello, G., Donvito, R., Acuti, D., Grazzini, L., Mazzoli, V., Vannucci, V., & Viglia, G. (2020). Customers’ willingness to disclose personal information throughout the customer purchase journey in retailing: The role of perceived warmth. Journal of Retailing, 96(4), 490–506.

Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50(2), 179–211.

Alemanno, A., & Sibony, A. L. (2015). Nudge and the law: A European perspective. Hart Publishing.

Ambrosino, A. (2014). A cognitive approach to law and economics: Hayek’s legacy. Journal of Economic Issues, 48(1), 19–48.

Article 29 Data Protection Working Party (2017). Guidelines on automated individual decision-making and profiling for the purposes of Regulation 2016/679, 17/EN WP 251. https://ec.europa.eu/newsroom/article29/items/612053 (accessed 8 September 2022).

Ayres, I. A., & Schwartz, A. (2014). The no-reading problem in consumer contract law. Stanford Law Review, 66(3), 545–609.

Baggio, M., Ciriolo, E., Marandola, G., & van Bavel, R. (2021). The evolution of behaviourally informed policy-making in the EU. Journal of European Public Policy, 28(5), 658–676.

Baine, D. (2021). How many streaming services can people consume? OTT services & vMVPDs continue to soar. https://www.forbes.com/sites/derekbaine/2021/12/22/how-many-streaming-services-can-people-consume-ott-services--vmvpds-continue-to-soar/?sh=7157712031bb (accessed 21 October 2022).

Balkin, J. (2018). Free speech in the algorithmic society: Big data, private governance, and new school speech regulation. University of California, Davis Law Review, 51, 1151–1194.

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Prentice Hall.

Bandura, A. (1977). Social Learning Theory. Prentice Hall.

Bar-Gill, O. (2008). The behavioural economics of consumer contracts. Minnesota Law Review, 92(3), 749–802.

Beales, H., Craswell, R., & Salop, S. C. (1981). Information remedies for consumer protection. The American Economic Review, 71(2), 410–413.

Ben-Shahar, O., & Porat, A. (2021). Personalized Law: Different rules for different people. Oxford University Press.

BEUC (2021). EU consumer protection 2.0. Structural asymmetries in digital consumer markets. A joint report from research conducted under the EUCP2.0 project. https://www.beuc.eu/publications/beuc-x-2021-018_eu_consumer_protection.0_0.pdf (accessed 1 April 2022).

Boerman, S. C., Kruikemeier, S., & Zuiderveen Borgesius, F. J. (2017). Online behavioural advertising: A literature review and research agenda. Journal of Advertising, 46(3), 363–376.

Busch, C. (2019). Implementing personalized law: Personalized disclosures in consumer law and data privacy law. The University of Chicago Law Review, 80(2), 309–332.

Busch, C. (2016). The future of pre-contractual information duties: From behavioural insights to big data. In C. Twigg-Flesner (Ed.), Research Handbook on EU Consumer and Contract Law (pp. 221–240). Elgar Publishing.

Carvalho, J. (2019). Sale of goods and supply of digital content and digital services – Overview of Directives 2019/770 and 2019/771. Journal of European Consumer and Market Law, 8(5), 194–201.

Castaneda, G., & Guerrero, O. A. (2019). The importance of social and government learning in ex ante policy evaluation. Journal of Policy Modeling, 41(2), 273–293.

Chen, A., Lu, Y., & Wang, B. (2017). Customers’ purchase decision-making process in social commerce: A social learning perspective. International Journal of Information Management, 37(6), 627–638.

Cloarec, J., Meyer-Waarden, L., & Munzel, A. (2022). The personalization–privacy paradox at the nexus of social exchange and construal level theories. Psychology & Marketing, 39(3), 647–661.

Cook, T. D., Campbell, D. T., & Day, A. (1979). Quasi-experimentation: Design & analysis issues for field settings. Houghton Mifflin.

Corrales Compagnucci, M., Haapio, H., Hagan, M., & Doherty, M. (2021). Legal design: Integrating business, design and legal thinking with technology. Edward Elgar Publishing.

Crain, M., & Nadler, A. (2019). Political manipulation and internet advertising infrastructure. Journal of Information Policy, 9, 370–410.

Crane, J. P. (2008). Social learning theory. http://www.cranepsych.com/Psych/Social_learning_Theory.pdf (accessed 21 January 2022).

Daems, K., De Pelsmacker, P., & Moons, I. (2019). The effect of ad integration and interactivity on young teenagers’ memory, brand attitude and personal data sharing. Computers in Human Behaviour, 99, 245–259.

Davola, A. (2021). Fostering consumer protection in the granular market: The role of rules on consent, misrepresentation and fraud in regulating personalized practices. Technology & Regulation, 2021, 76–86. https://doi.org/10.26116/techreg.2021.007

De Bekker-Grob, E. W., Donkers, B., Bliemer, M. C., Veldwijk, J., & Swait, J. D. (2020). Can healthcare choice be predicted using stated preference data? Social Science & Medicine, 246, 112736.

Dobber, T., Fathaigh, R. O., & Zuiderveen Borgesius, F. (2019). The regulation of online political micro-targeting in Europe. Internet Policy Review, 8(4), 1–20.

Domurath, I. (2019). Technological totalitarianism: Data, consumer profiling, and the law. In L. de Almeida, M. C. Gamito, M. Durovic, & K. P. Purnhagen (Eds.), The transformation of economic law: Essays in honour of Hans-W. Micklitz (pp. 65–90). Hart Publishing.

Eskens, S. (2016). Profiling the European citizen in the Internet of Things: How will the General Data Protection Regulation apply to this form of personal data processing, and how should it? https://doi.org/10.2139/ssrn.2752010 (accessed 20 January 2022).

Eskens, S., Helberger, N., & Moeller, J. (2017). Challenged by news personalisation: Five perspectives on the right to receive information. Journal of Media Law, 9, 259–284.

Esposito, F. (2022). The GDPR enshrines the right to the impersonal price. Computer Law & Security Review, 45, 1–14.

European Commission. (2018). Consumer market study on online market segmentation through personalised pricing/offers in the European Union. https://ec.europa.eu/info/files/final-report-4_en (accessed 2 August 2022).

Ezrachi, A., & Stucke, M. E. (2019). Virtual competition. Harvard University Press.

Ezrachi, A., & Stucke, M. E. (2016). The rise of behavioural discrimination. European Competition Law Review, 37(12), 485–492.

Festinger, L. (1953). Laboratory experiments. In L. Festinger & D. Katz (Eds.), Research methods in the behavioral sciences (pp. 136–172). Holt, Rinehart and Winston.

Fung, A., Graham, M., & Weil, D. (2007). Full disclosure: The perils and promise of transparency. Cambridge University Press.

Galli, F. (2021). Online behavioural advertising and unfair manipulation between the GDPR and the UCPD. In M. Ebers & M. Cantero Gamito (Eds.), Algorithmic governance and governance of algorithms. Data science, machine intelligence, and law (pp. 109–135). Springer.

Gigerenzer, G., & Selten, R. (2001). Bounded rationality: The adaptive toolbox. MIT Press.

Gomez-Uribe, C. A., & Hunt, N. (2015). The Netflix recommender system: Algorithms, business value, and innovation. ACM Transactions on Management Information Systems, 6(4), 1–19.

Graef, I. (2015). Market definition and market power in data: The case of online platforms. World Competition: Law and Economics Review, 38(4), 473–505.

Hacker, P. (2021). Manipulation by algorithms. Exploring the triangle of unfair commercial practice, data protection, and privacy law. European Law Journal, 1–34.

Hacker, P. (2016). Nudge 2.0: The future of behavioural analysis of law in Europe and beyond. European Review of Private Law, 24, 297–322.

Hagan, M. (2016). 6 core principles for good legal design. https://medium.com/legal-design-and-innovation/6-core-principles-for-good-legal-design-1cde6aba866 (accessed 21 October 2022).

Hall, P. (1993). Policy paradigms, social learning, and the State: The case of economic policy making in Britain. Comparative Politics, 25(3), 275–296.

Hayes, A. F. (2018). Introduction to mediation, moderation, and conditional process analysis (2nd ed.). Guilford Press.

Helberger, N., Sax, M., Strycharz, J., & Micklitz, H.-W. (2021). Choice architectures in the digital economy: Towards a new understanding of digital vulnerability. Journal of Consumer Policy, 45(2), 175–200.

Helberger, N. (2016). Profiling and targeting consumers in the Internet of Things – A new challenge for consumer law. In R. Schulze & D. Staudenmayer (Eds.), Digital revolution: Challenges for contract law in practice (pp. 135–164). Hart Publishing.

High-Level Expert Group on Artificial Intelligence set up by the European Commission. (2019). Ethics guidelines for trustworthy AI. https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai (accessed 7 February 2022).

Hill, J. R., Song, L., & West, R. E. (2009). Social learning theory and web-based learning environments: A review of research and discussion of implications. American Journal of Distance Education, 23(2), 88–103.

Hoffman, L. W. (1994). Developmental psychology today. McGraw-Hill.

Huang, Z., & Benyoucef, M. (2013). From e-commerce to social commerce: A close look at design features. Electronic Commerce Research and Applications, 12(4), 246–259.

Issacharoff, S. (2011). Disclosure, agents, and consumer protection. Journal of Institutional and Theoretical Economics, 167(1), 56–71.

Jacoby, J. (1984). Perspectives on information overload. Journal of Consumer Research, 10(4), 432–435.

Johnson, C. (2017). Goodbye stars, hello thumbs. https://about.netflix.com/en/news/goodbye-stars-hello-thumbs (accessed 14 October 2022).

Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus and Giroux.

Kent, C., & Rechavi, A. (2020). Deconstructing online social learning: Network analysis of the creation, consumption and organization types of interactions. International Journal of Research & Method in Education, 43(1), 16–37.

Larsson, S. (2018). Algorithmic governance and the need for consumer empowerment in data-driven markets. Internet Policy Review, 7(2), 1–13.

Laux, J., Wachter, S., & Mittelstadt, B. (2021). Neutralizing online behavioural advertising: Algorithmic targeting with market power as an unfair commercial practice. Common Market Law Review, 58(3), 719–750.

Lizin, S., Rousseau, S., Kessels, R., Meulders, M., Pepermans, G., Speelman, S., & Verbeke, W. (2022). The state of the art of discrete choice experiments in food research. Food Quality and Preference, 102, 104678.

Loos, M. (2020). The modernization of European consumer law (continued): More meat on the bone after all. European Review of Private Law, 2, 407–424.

Lorenz-Spreen, P., Geers, M., Pachur, T., Hertwig, R., Lewandowsky, S., & Herzog, S. M. (2021). Boosting people’s ability to detect microtargeted advertising. Scientific Reports, 11(1), 1–9.

Lorenzo, O., Kawalek, P., & Ramdani, B. (2012). Enterprise applications diffusion within organizations: A social learning perspective. Information & Management, 49(1), 47–57.

Malhotra, N. K. (1982). Information load and consumer decision making. Journal of Consumer Research, 8(4), 419–430.

Malgieri, G. (2021). In/acceptable marketing and consumers' privacy expectations: Four tests from EU data protection law. Journal of Consumer Marketing.

Marotta-Wurgler, F. (2012). Does contract disclosure matter? Journal of Institutional and Theoretical Economics, 168(1), 94–119.

Martin, K. D., & Murphy, P. E. (2017). The role of data privacy in marketing. Journal of the Academy of Marketing Science, 45(2), 135–155.

McGregor, S. L. T. (2009). Reorienting consumer education using social learning theory: Sustainable development via an authentic consumer pedagogy. International Journal of Consumer Studies, 33(2), 258–266.

McGregor, S. L. T. (2006). Understanding consumer moral consciousness. International Journal of Consumer Studies, 30(2), 164–178.

Micklitz, H., Pollicino, O., Reichman, A., Simoncini, A., Sartor, G., & De Gregorio, G. (2021). Constitutional challenges in the algorithmic society. Cambridge University Press.

Micklitz, H.-W. (2013). Do consumers and businesses need a new architecture of consumer law? A thought-provoking impulse. Yearbook of European Law, 32(1), 266–367.

Moretti, E. (2011). Social learning and peer effects in consumption: Evidence from movie sales. The Review of Economic Studies, 78(1), 356–393.

Mundt, A. (2020). Algorithms and competition in a digitalized world. In D. S. Evans, A. Fels, & C. Tucker (Eds.), The evolution of antitrust in the digital era: Essays on competition policy (Vol. 1). CPI Publishing.

Nicholson, J., & Higgins, G. E. (2017). Social structure social learning theory: Preventing crime and violence. In B. Teasdale & M. Bradley (Eds.), Preventing crime and violence. Advances in prevention science (pp. 11–20). Springer.

Palan, S., & Schitter, C. (2018). Prolific.ac—A subject pool for online experiments. Journal of Behavioural and Experimental Finance, 17, 22–27.

Paolacci, G., Chandler, J., & Ipeirotis, P. G. (2010). Running experiments on Amazon Mechanical Turk. Judgment and Decision Making, 5(5), 411–419.

Perdue, B. C., & Summers, J. O. (1986). Checking the success of manipulations in marketing experiments. Journal of Marketing Research, 23(4), 317–326.

Petit, N., & Teece, D. J. (2021). Innovating big tech firms and competition policy: Favoring dynamic over static competition. Industrial and Corporate Change, 30(5), 1168–1198.

Picht, P. G., & Tazio Loderer, G. (2019). Framing algorithms–competition law and (other) regulatory tools. World Competition, 42(3), 391–417.

Porat, A., & Strahilevitz, L. (2021). Personalizing default rules and disclosure with big data. In C. Busch & A. De Franceschi (Eds.), Algorithmic regulation and personalized law: A handbook (pp. 5–51). Hart Publishing.

Rhum, K. (2021). Information fiduciaries and political microtargeting: A legal framework for regulating political advertising on digital platforms. Northwestern University Law Review, 115(6), 1831–1872.

Rossi, A., Ducato, R., Haapio, H., & Passera, S. (2019). When design met law: Design patterns for information transparency. Droit De La Consommation, 122–123, 79.

Sartor, G., Lagioia, F., & Galli, F. (2021). Regulating targeted and behavioural advertising in digital services. How to ensure users’ informed consent. Study commissioned by the European Parliament’s committee on legal affairs. http://www.europarl.europa.eu/RegData/etudes/STUD/2021/694680/IPOL_STU(2021)694680_EN.pdf (accessed 20 January 2022).

Sauter, M., Draschkow, D., & Mack, W. (2020). Building, hosting and recruiting: A brief introduction to running behavioural experiments online. Brain Sciences, 10(4), 251.

Schwartz, A., & Wilde, L. (1979). Intervening in markets on the basis of imperfect information: A legal and economic analysis. University of Pennsylvania Law Review, 127(3), 630–682.

Seizov, O., Wulf, A., & Luzak, J. (2019). The transparent trap. Analyzing transparency in information obligations from a multidisciplinary empirical perspective. Journal of Consumer Policy, 42(1), 149–173.

Sheeran, P., & Webb, T. L. (2016). The intention–behaviour gap. Social and Personality Psychology Compass, 10(9), 503–518.

Simon, H. (1982). Models of bounded rationality. MIT Press.

Sun, Q., Willemsen, M. C., & Knijnenburg, B. P. (2020). Unpacking the intention-behaviour gap in privacy decision making for the internet of things (IoT) using aspect listing. Computers & Security, 97, 101924.

Sunstein, C. R. (2020). Too much information. MIT Press.

Thaler, R. (1985). Mental accounting and consumer choice. Marketing Science, 4, 199–214.

Thaler, R., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. Penguin Books.

Thaler, R., & Sunstein, C. R. (2003). Libertarian paternalism. The American Economic Review, 93(2), 175–179.

Wachter, S. (2020). Affinity profiling and discrimination by association in online behavioural advertising. Berkeley Technology Law Journal, 35(2), 369–430.

Wachter, S. (2018). Normative challenges of identification in the Internet of Things: Privacy, profiling, discrimination, and the GDPR. Computer Law & Security Review, 34(3), 436–449.

Wallheimer, B. (2018). Are you ready for personalized pricing? https://review.chicagobooth.edu/marketing/2018/article/are-you-ready-personalized-pricing (accessed 14 October 2022).

Wilhelmsson, T. (2003). Private law remedies against the breach of information requirements of EC law. In Informationspflichten und Vertragsschluss im Acquis communautaire (pp. 245–265). Mohr Siebeck.

Wilhelmsson, T., & Twigg-Flesner, C. (2006). Pre-contractual information duties in the acquis communautaire. European Review of Contract Law, 2(4), 441–470.

Wilhite, H. (2014). Insights from social practice and social learning theory for sustainable energy consumption. Dans Flux, 96(2), 24–30.

Whittaker, S. (2008). Standard contract terms and information rules. In H. Collins (Ed.), Standard contract terms in Europe: A basis for and a challenge to European contract law (pp. 163–175). Wolters Kluwer.

Zuiderveen Borgesius, F., Möller, J., Kruikemeier, S., Fathaigh, R. Ó., Irion, K., Dobber, T., Bodo, B., & de Vreese, C. (2018). Online political microtargeting: Promises and threats for democracy. Utrecht Law Review, 14(1), 82–96.

Zuiderveen Borgesius, F., & Poort, J. (2017). Online price discrimination and EU data privacy law. Journal of Consumer Policy, 40, 347–366.

Legislation

European Union

Directive 2005/29/EC of the European Parliament and of the Council of 11 May 2005 concerning unfair business-to-consumer commercial practices in the internal market and amending Council Directive 84/450/EEC, Directives 97/7/EC, 98/27/EC and 2002/65/EC of the European Parliament and of the Council and Regulation (EC) No 2006/2004 of the European Parliament and of the Council [2005] OJ L 149/22.

Directive 2011/83/EU of the European Parliament and of the Council of 25 October 2011 on consumer rights, amending Council Directive 93/13/EEC and Directive 1999/44/EC of the European Parliament and of the Council and repealing Council Directive 85/577/EEC and Directive 97/7/EC of the European Parliament and of the Council (2011) OJ L 304, 64–88.

Directive (EU) 2019/2161 of the European Parliament and of the Council of 27 November 2019 amending Council Directive 93/13/EEC and Directives 98/6/EC, 2005/29/EC and 2011/83/EU of the European Parliament and of the Council as regards the better enforcement and modernisation of Union consumer protection rules (2019) OJ L 328/7.

Proposal for a Regulation of the European Parliament and of the Council on a single market for digital services (Digital Services Act) and amending Directive 2000/31/EC (2020).

Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on Artificial Intelligence (Artificial Intelligence Act) and amending certain Union Legislative Acts COM/2021/206 final.

Regulation (EU) 2016/679 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (2016) OJ L 119.

Acknowledgements

The authors wish to thank Joasia Luzak, Chantal Mak, and the members of the European University Institute Private Law Working Group for their comments on the first versions of this work.

Funding

The article has been developed as part of the Marie Skłodowska-Curie Project “FairPersonalization” (MSCA-IF-2019–897310) with the support of the European Commission.

Author information

Contributions

The present paper is the joint effort of all the authors, who contributed equally to the paper. In particular, Antonio Davola contributed mostly to Sects. 1, 2, 3, and 5. Ilaria Querci and Simona Romani contributed mostly to Sects. 4, 4.1, 4.2, and 4.3. All the mistakes rest with the authors.

Ethics declarations

Conflict of Interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Davola, A., Querci, I. & Romani, S. No Consumer Is an Island—Relational Disclosure as a Regulatory Strategy to Advance Consumer Protection Against Microtargeting. J Consum Policy 46, 1–25 (2023). https://doi.org/10.1007/s10603-022-09530-7

DOI: https://doi.org/10.1007/s10603-022-09530-7