Abstract

Subsurface datasets are commonly big data, i.e., they meet big data criteria such as large data volume, significant feature variety, high sampling velocity, and limited data veracity. Data volume is further increased by the large number of features required to impose physical, engineering, and geological inputs and constraints, which may invoke the curse of dimensionality. Existing dimensionality reduction (DR) methods are either linear or nonlinear; for subsurface datasets, nonlinear dimensionality reduction (NDR) methods are the most applicable because of data complexity. Metric multidimensional scaling (MDS) is a suitable NDR method that retains the data's intrinsic structure and can help quantify the uncertainty space. However, like other NDR methods, MDS cannot produce a stable, unique solution in the low-dimensional space (LDS) that is invariant to Euclidean transformations, and it has no extension for including out-of-sample points (OOSP). To support subsurface inferential workflows, these datasets must be transformed into meaningful, stable representations of reduced dimensionality that admit OOSP without recalculating the model.
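
The non-uniqueness described above can be illustrated with a short sketch. It is an illustration under assumptions, not the authors' implementation: it swaps in classical (Torgerson) MDS, which is deterministic, for the stress-minimizing metric MDS used in the paper, and the dataset and helper names (`dissimilarity_matrix`, `classical_mds`) are hypothetical. Both the Euclidean and Manhattan dissimilarities used later in the workflow are shown. Any rotation plus translation of the resulting LDS leaves every pairwise distance unchanged, so a distance-based MDS loss cannot prefer one orientation over another.

```python
import numpy as np

def dissimilarity_matrix(X, metric="euclidean"):
    """Pairwise dissimilarities between the rows of X (N samples x M features)."""
    diff = X[:, None, :] - X[None, :, :]
    if metric == "euclidean":
        return np.sqrt((diff ** 2).sum(axis=-1))
    if metric == "manhattan":
        return np.abs(diff).sum(axis=-1)
    raise ValueError(f"unsupported metric: {metric}")

def classical_mds(D, n_components=2):
    """Classical (Torgerson) MDS: LDS coordinates from a dissimilarity matrix D."""
    n = D.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n                 # centering matrix
    B = -0.5 * J @ (D ** 2) @ J                         # double-centered Gram matrix
    eigvals, eigvecs = np.linalg.eigh(B)                # eigenvalues in ascending order
    idx = np.argsort(eigvals)[::-1][:n_components]      # keep the largest ones
    scale = np.sqrt(np.clip(eigvals[idx], 0.0, None))   # drop negative (non-Euclidean) parts
    return eigvecs[:, idx] * scale

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 5))                            # hypothetical 5-feature dataset
for metric in ("euclidean", "manhattan"):
    D = dissimilarity_matrix(X, metric)
    Z = classical_mds(D)                                # one N x 2 LDS solution

    # Rotate and translate the LDS by an arbitrary rigid motion
    theta = np.deg2rad(40.0)
    R = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta),  np.cos(theta)]])
    Z_moved = Z @ R.T + np.array([3.0, -1.5])

    # LDS distances are unchanged, so a distance-based loss cannot
    # distinguish Z from Z_moved: the LDS solution is not unique.
    same = np.allclose(dissimilarity_matrix(Z), dissimilarity_matrix(Z_moved))
    print(metric, same)
```

With stochastic metric MDS the same ambiguity shows up as realizations that differ from run to run by rotations, translations, and possibly reflections.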

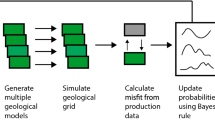

We propose using rigid transformations to obtain a unique, stabilized, Euclidean-invariant representation of the LDS. First, a dissimilarity matrix is computed with a chosen distance metric and used as the MDS input to obtain the LDS for \(N\) samples; this is repeated for multiple realizations. Then, a base case is selected, and a rigid transformation is performed on each further realization to obtain rotation and translation matrices that enforce invariance to Euclidean transformations under the ensemble expectation. Anchor positions on the expected stabilized solution are then identified with a convex hull algorithm and compared with the \(N+1\) case using the prior matrices, giving a stabilized representation that includes the OOSP. Next, the loss function and normalized stress are computed from the distances between samples in the high-dimensional space and in the LDS to quantify and visualize distortion in a 2-D registration problem. To test the proposed workflow, an experiment with varying sample sizes is conducted on a synthetic dataset, using Euclidean and Manhattan distance metrics to build the MDS dissimilarity matrices.
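
The base-case alignment and ensemble expectation steps can be sketched as follows. This is a minimal sketch under assumptions: the realizations are simulated as one hypothetical 2-D layout viewed in random rotated and translated frames with small perturbations (standing in for repeated MDS runs), and the helper names `rigid_align` and `stabilize` are hypothetical. The rotation and translation use the least-squares SVD solution of Arun et al. and Sorkine-Hornung and Rabinovich, restricted to proper rotations.

```python
import numpy as np

def rigid_align(source, target):
    """Least-squares rotation R and translation t with target ~ source @ R.T + t.

    SVD solution; the determinant correction keeps R a proper rotation,
    so reflections are not allowed.
    """
    mu_s, mu_t = source.mean(axis=0), target.mean(axis=0)
    H = (source - mu_s).T @ (target - mu_t)             # d x d cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    S = np.diag([1.0] * (H.shape[0] - 1) + [d])
    R = Vt.T @ S @ U.T
    t = mu_t - R @ mu_s
    return R, t

def stabilize(realizations, base_index=0):
    """Rigidly align each LDS realization to a base case, then average them."""
    base = realizations[base_index]
    aligned = []
    for Z in realizations:
        R, t = rigid_align(Z, base)
        aligned.append(Z @ R.T + t)
    return np.mean(aligned, axis=0), aligned

# Hypothetical realizations: one layout in random rotated/translated frames
# with small run-to-run perturbations.
rng = np.random.default_rng(1)
layout = rng.normal(size=(25, 2))
realizations = []
for _ in range(10):
    theta = rng.uniform(0.0, 2.0 * np.pi)
    R = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta),  np.cos(theta)]])
    Z = (layout + 0.01 * rng.normal(size=layout.shape)) @ R.T + rng.normal(size=2)
    realizations.append(Z)

S_E, aligned = stabilize(realizations)                  # stabilized (expected) solution
print(max(np.abs(A - S_E).max() for A in aligned))      # small residual spread
```

If real MDS realizations can also be mirrored, a proper rotation cannot undo the reflection; an orthogonal Procrustes fit that allows \(\det(\mathbf{R})=-1\) would be needed in that case.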

The workflow is also demonstrated on wells from the Duvernay Formation and on OOSP with petrophysical properties typical of unconventional reservoirs, to track and understand their behavior in the LDS. The results show that our method enables NDR methods to obtain unique, repeatable, and stable LDS representations that are invariant to Euclidean transformations. In addition, we propose a distortion-based metric, the stress ratio (SR), that quantifies and visualizes the uncertainty space for samples in subsurface datasets and is helpful for model updating and inferential analysis of OOSP. Therefore, we recommend integrating the workflow as an invariant transformation mitigation unit in the LDS to obtain unique solutions, ensuring repeatability and rational comparison in NDR methods for subsurface energy resource engineering big data inferential workflows, e.g., analog data selection and sensitivity analysis.
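
The distortion underlying these metrics can be sketched from the raw stress (loss) and a normalized stress. This sketch makes assumptions. It uses a Kruskal stress-1 style normalization by the high-dimensional dissimilarities, which may differ from the paper's exact definition. It does not reproduce the proposed stress ratio (SR). The per-sample breakdown, the data, and the helper names are hypothetical and only show how distortion could be attributed to individual samples for visualization.

```python
import numpy as np

def pairwise_distances(X):
    """Euclidean distance matrix between the rows of X."""
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def stress_metrics(D_high, Z):
    """Raw stress, normalized stress, and per-sample stress for an LDS Z."""
    residual = D_high - pairwise_distances(Z)           # distortion for every pair
    iu = np.triu_indices_from(D_high, k=1)              # count each pair once
    raw = np.sum(residual[iu] ** 2)                     # raw stress (loss function)
    normalized = np.sqrt(raw / np.sum(D_high[iu] ** 2))
    per_sample = 0.5 * np.sum(residual ** 2, axis=1)    # sums to raw over all samples
    return raw, normalized, per_sample

rng = np.random.default_rng(2)
X = rng.normal(size=(40, 3)) * np.array([3.0, 2.0, 0.3])   # hypothetical data, weak 3rd axis
D = pairwise_distances(X)
raw, normalized, per_sample = stress_metrics(D, X[:, :2])   # LDS: drop the 3rd feature
print(round(normalized, 4), per_sample.argmax())            # most distorted sample
```

A normalized stress of zero means all pairwise distances are preserved exactly; larger values indicate more distortion introduced by the reduction.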

Data availability

The data and the well-documented workflow used are publicly available in the corresponding author's GitHub repository upon publication: https://github.com/Mide478/LowerDimensionalSpace-Stabilization-RT-UQI. The GitHub repository contains no proprietary data, and the data used for the synthetic case study are publicly available in the GeoDataSets repository: https://github.com/GeostatsGuy/GeoDataSets/blob/master/unconv_MV_v4.csv.

References

Eldawy, A., Mokbel, M.F.: The era of Big Spatial Data. In: Proceedings of the VLDB Endowment. pp. 1992–1995 (2017)

Zhu, L., Yu, F.R., Wang, Y., Ning, B., Tang, T.: Big data analytics in intelligent transportation systems: A Survey. IEEE Trans. Intell. Transp. Syst. 20, 383 (2019). https://doi.org/10.1109/TITS.2018.2815678

Mohammadpoor, M., Torabi, F.: Big Data analytics in oil and gas industry: An emerging trend (2020)

Mabadeje, A., Salazar, J., Garland, L., Ochoa, J., Pyrcz, M.: A machine learning workflow to support the identification of subsurface resource analogs. Energy Explor. Exploit. 1, 23 (2023). https://doi.org/10.1177/01445987231210966

Sarma, P., Durlofsky, L.J., Aziz, K., Chen, W.H.: Efficient real-time reservoir management using adjoint-based optimal control and model updating. Comput. Geosci. 10, 3–36 (2006). https://doi.org/10.1007/s10596-005-9009-z

He, J., Sarma, P., Durlofsky, L.J.: Reduced-order flow modeling and geological parameterization for ensemble-based data assimilation. Comput. Geosci. 55, 54–69 (2013). https://doi.org/10.1016/J.CAGEO.2012.03.027

Jiang, S., Durlofsky, L.J.: Treatment of model error in subsurface flow history matching using a data-space method. J. Hydrol. 603, 127063 (2021). https://doi.org/10.1016/J.JHYDROL.2021.127063

Shirangi, M.G., Durlofsky, L.J.: Closed-loop field development under uncertainty by use of optimization with sample validation. SPE J. 20, 908–922 (2015). https://doi.org/10.2118/173219-PA

Bellman, R.: A Markovian Decision Process. Indiana Univ. Math. J. 6, 679–684 (1957). https://doi.org/10.1512/IUMJ.1957.6.56038

Aggarwal, C.C., Hinneburg, A., Keim, D.A.: On the surprising behavior of distance metrics in high dimensional space. In: Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). pp. 420–434. Springer Verlag (2001)

Giannella, C.R.: Instability results for Euclidean distance, nearest neighbor search on high dimensional Gaussian data. Inf. Process. Lett. 169, 106115 (2021). https://doi.org/10.1016/j.ipl.2021.106115

Kabán, A.: Non-parametric detection of meaningless distances in high dimensional data. Stat. Comput. 22, 375–385 (2012). https://doi.org/10.1007/s11222-011-9229-0

Tan, X., Tahmasebi, P., Caers, J.: Comparing training-image based algorithms using an analysis of distance. Math. Geosci. 46, 149–169 (2014). https://doi.org/10.1007/s11004-013-9482-1

Josset, L., Ginsbourger, D., Lunati, I.: Functional error modeling for uncertainty quantification in hydrogeology. Water Resour. Res. 51, 1050–1068 (2015). https://doi.org/10.1002/2014WR016028

Josset, L., Demyanov, V., Elsheikh, A.H., Lunati, I.: Accelerating Monte Carlo Markov chains with proxy and error models. Comput. Geosci. 85, 38–48 (2015). https://doi.org/10.1016/J.CAGEO.2015.07.003

Pachet, F.: Hit song science. In: Music Data Mining. CRC Press (2012)

Turchetti, C., Falaschetti, L.: A manifold learning approach to dimensionality reduction for modeling data. Inf. Sci. (Ny) 491, 16–29 (2019). https://doi.org/10.1016/J.INS.2019.04.005

Pearson, K.: LIII. On lines and planes of closest fit to systems of points in space. Lond. Edinb. Dubl. Philos. Mag. J. Sci. 2, 559–572 (1901). https://doi.org/10.1080/14786440109462720

Jolliffe, I.: Principal Component Analysis. Encycl. Stat. Behav. Sci. (2005). https://doi.org/10.1002/0470013192.BSA501

Schmid, P.J.: Dynamic mode decomposition of numerical and experimental data. J. Fluid Mech. 656, 5–28 (2010). https://doi.org/10.1017/S0022112010001217

Rowley, C.W., Mezić, I., Bagheri, S., Schlatter, P., Henningson, D.S.: Spectral analysis of nonlinear flows. J. Fluid Mech. 641, 115–127 (2009). https://doi.org/10.1017/S0022112009992059

Kutz, J., Brunton, S., Brunton, B., Proctor, J.: Dynamic mode decomposition: data-driven modeling of complex systems. (2016)

Rao, C.R.: The utilization of multiple measurements in problems of biological classification. J. R. Stat. Soc. Ser. B 10, 159–193 (1948). https://doi.org/10.1111/j.2517-6161.1948.tb00008.x

Fisher, R.A.: The use of multiple measurements in taxonomic problems. Ann. Eugen. 7, 179–188 (1936). https://doi.org/10.1111/j.1469-1809.1936.tb02137.x

He, X., Niyogi, P.: Locality preserving projections. In: Advances in Neural Information Processing Systems (2004)

He, X., Cai, D., Yan, S., Zhang, H.J.: Neighborhood preserving embedding. Proc. IEEE Int. Conf. Comput. Vis. II, 1208–1213 (2005). https://doi.org/10.1109/ICCV.2005.167

Sarma, P., Durlofsky, L.J., Aziz, K.: Kernel principal component analysis for efficient, differentiable parameterization of multipoint geostatistics. Math. Geosci. 40, 3–32 (2008). https://doi.org/10.1007/S11004-007-9131-7

Schölkopf, B., Smola, A., Müller, K.R.: Kernel principal component analysis. In: Gerstner, W., Germond, A., Hasler, M., Nicoud, J.D. (eds) Artificial Neural Networks — ICANN'97. ICANN 1997. Lecture Notes in Computer Science, vol 1327. Springer, Berlin, Heidelberg (1997). https://doi.org/10.1007/BFb0020217

Kao, Y.H., Van Roy, B.: Learning a factor model via regularized PCA. Mach Learn. 91, 279–303 (2013). https://doi.org/10.1007/s10994-013-5345-8

Roweis, S.T., Saul, L.K.: Nonlinear dimensionality reduction by locally linear embedding. Science. 290, 2323–2326 (2000). https://doi.org/10.1126/science.290.5500.2323

Torgerson, W.S.: Multidimensional scaling: I. Theory and method. Psychometrika. 17, 401–419 (1952). https://doi.org/10.1007/BF02288916

Cox, T.F., Cox, M.A.A.: Multidimensional Scaling, 2nd edn. Chapman & Hall/CRC (2000). https://doi.org/10.1201/9780367801700

Borg, I., Groenen, P.: Modern multidimensional scaling: Theory and applications. (2005)

Tenenbaum, J.B., De Silva, V., Langford, J.C.: A global geometric framework for nonlinear dimensionality reduction. Science. 290, 2319–2323 (2000). https://doi.org/10.1126/science.290.5500.2319

Belkin, M., Niyogi, P.: Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 15, 1373–1396 (2003). https://doi.org/10.1162/089976603321780317

Van Der Maaten, L., Hinton, G.: Visualizing data using t-SNE. J. Mach. Learn. Res. 9, 2579–2625 (2008)

Coifman, R.R., Lafon, S.: Diffusion maps. Appl. Comput. Harmon. Anal. 21, 5–30 (2006). https://doi.org/10.1016/j.acha.2006.04.006

Cunningham, J.P., Ghahramani, Z.: Linear dimensionality reduction: Survey, Insights, and Generalizations. J. Mach. Learn. Res. 16, 2859–2900 (2015)

Young, G., Householder, A.S.: Discussion of a set of points in terms of their mutual distances. Psychometrika 3, 19–22 (1938). https://doi.org/10.1007/BF02287916

Xia, Y.: Correlation and association analyses in microbiome study integrating multiomics in health and disease. Prog. Mol. Biol. Transl. Sci. 171, 309–491 (2020). https://doi.org/10.1016/BS.PMBTS.2020.04.003

Trosset, M.W., Priebe, C.E., Park, Y., Miller, M.I.: Semisupervised learning from dissimilarity data. Comput. Stat. Data Anal. 52, 4643 (2008). https://doi.org/10.1016/J.CSDA.2008.02.030

Kouropteva, O., Okun, O., Pietikäinen, M.: Incremental locally linear embedding algorithm. In: Lecture Notes in Computer Science. pp. 521–530 (2005)

Law, M.H.C., Zhang, N., Jain, A.K.: Nonlinear manifold learning for data stream. In: SIAM Proceedings Series. pp. 33–44 (2004)

Bengio, Y., Paiement, J.-F., Vincent, P., Delalleau, O., Le Roux, N., Ouimet, M.: Out-of-sample extensions for LLE, Isomap, MDS, Eigenmaps, and Spectral Clustering. Adv. Neural Inf. Process. Syst. 16 (2003)

Villena-Martinez, V., Oprea, S., Saval-Calvo, M., Azorin-Lopez, J., Fuster-Guillo, A., Fisher, R.B.: When deep learning meets data alignment: A Review on Deep Registration Networks (DRNs). Appl. Sci. 10, 7524 (2020). https://doi.org/10.3390/APP10217524

Verleysen, M., Lee, J.A.: Nonlinear dimensionality reduction for visualization. In: Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). pp. 617–622 (2013)

Lee, J.A., Verleysen, M.: Quality assessment of dimensionality reduction: Rank-based criteria. Neurocomputing 72, 1431–1443 (2009). https://doi.org/10.1016/J.NEUCOM.2008.12.017

Dayawansa, W.P.: Recent advances in the stabilization problem for low dimensional systems. IFAC Proc. 25, 1–8 (1992). https://doi.org/10.1016/S1474-6670(17)52250-8

Buehrer, R.M., Wymeersch, H., Vaghefi, R.M.: Collaborative sensor network localization: algorithms and practical issues. Proc. IEEE 106, 1089–1114 (2018). https://doi.org/10.1109/JPROC.2018.2829439

Aicardi, I., Nex, F., Gerke, M., Lingua, A.M.: An image-based approach for the co-registration of multi-temporal UAV image datasets. Remote Sens. 8, 779 (2016). https://doi.org/10.3390/RS8090779

Saval-Calvo, M., Azorin-Lopez, J., Fuster-Guillo, A., Villena-Martinez, V., Fisher, R.B.: 3D non-rigid registration using color: Color coherent point drift. Comput. Vis. Image Underst. 169, 119–135 (2018). https://doi.org/10.1016/J.CVIU.2018.01.008

Eggert, D.W., Lorusso, A., Fisher, R.B.: Estimating 3-D rigid body transformations: a comparison of four major algorithms. Mach. Vis. Appl. 9, 272–290 (1997)

Miyakoshi, M.: Correcting whole-body motion capture data using rigid body transformation. Eur. J. Neurosci. 54, 7946–7958 (2021). https://doi.org/10.1111/EJN.15531

Rodrigues, M.A., Liu, Y.: On the representation of rigid body transformations for accurate registration of free-form shapes. Rob. Auton. Syst. 39, 37–52 (2002)

Yang, T., Liu, J., McMillan, L., Wang, W.: A fast approximation to multidimensional scaling. Proc. ECCV. 1–8 (2006)

Arun, K.S., Huang, T.S., Blostein, S.D.: Least-Squares Fitting of Two 3-D Point Sets. IEEE Trans. Pattern Anal. Mach. Intell. PAMI-9, 698–700 (1987). https://doi.org/10.1109/TPAMI.1987.4767965

Kruskal, J.B.: Multidimensional scaling by optimizing goodness of fit to a nonmetric hypothesis. Psychometrika 29, 1–27 (1964). https://doi.org/10.1007/BF02289565

Barber, C.B., Dobkin, D.P., Huhdanpaa, H.: The quickhull algorithm for convex hulls. ACM Trans. Math. Softw. 22, 469–483 (1996). https://doi.org/10.1145/235815.235821

De Leeuw, J., Stoop, I.: Upper bounds for Kruskal’s stress. Psychometrika 49, 391–402 (1984). https://doi.org/10.1007/BF02306028

Pyrcz, M.: GeoDataSets: Synthetic Subsurface Data Repository (2021)

Pyrcz, M.J., Deutsch, C.V.: Geostatistical Reservoir Modeling, 2nd edn. Oxford University Press (2014)

Lal, A.K., Pati, S.: Linear Algebra through Matrices. (2018)

Sorkine-Hornung, O., Rabinovich, M.: Least-squares rigid motion using SVD. Technical notes, pp. 1–6 (2017)

Acknowledgements

The authors sincerely appreciate Equinor and the Digital Reservoir Characterization Technology (DIRECT) consortium's industry partners at the Hildebrand Department of Petroleum and Geosystems Engineering, University of Texas at Austin for financial support. We acknowledge Equinor for granting permission to use the Duvernay case study dataset presented here.

Ethics declarations

Competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A

Definition 5.8.5 in Lal and Pati [62] states that a map \(T: \mathbb{R}^{n}\to \mathbb{R}^{n}\) is a rigid motion if \(\Vert T(\mathbf{x}) - T(\mathbf{y}) \Vert = \Vert \mathbf{x}-\mathbf{y}\Vert\) for all \(\mathbf{x}, \mathbf{y}\in \mathbb{R}^{n}\). Here, \(T\) is the rigid transformation operator; applied to the point sets \(\mathbf{x}\) and \(\mathbf{y}\), each of dimension \(N\times 3\), it leaves their pairwise distance matrix invariant. Thus, if a rigid transformation is applied to an MDS realization that preserves pairwise dissimilarity in the lower-dimensional space, the same properties follow from the definition above, and an invariant dissimilarity matrix is obtained. A rigorous derivation of rigid transformations is given in Sorkine-Hornung and Rabinovich [63]. Hence, we propose Theorem A.1.
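
For reference, the closed-form least-squares rigid transformation derived in [63] (and in Arun et al.) can be summarized as follows. The notation is introduced here for illustration and may differ from the paper's: \(\mathbf{p}_i\) are points of a realization, \(\mathbf{q}_i\) the corresponding points of the base case, \(\bar{\mathbf{p}}, \bar{\mathbf{q}}\) their centroids, and \(d\) the LDS dimension. The last line shows why the result satisfies the rigid motion property of Definition 5.8.5.

```latex
\begin{aligned}
(\mathbf{R}^{*}, \mathbf{t}^{*}) &= \operatorname*{arg\,min}_{\mathbf{R}\in SO(d),\ \mathbf{t}\in\mathbb{R}^{d}} \sum_{i=1}^{N} \left\Vert \mathbf{R}\mathbf{p}_i + \mathbf{t} - \mathbf{q}_i \right\Vert^{2}, \\
\mathbf{H} &= \sum_{i=1}^{N} (\mathbf{p}_i - \bar{\mathbf{p}})(\mathbf{q}_i - \bar{\mathbf{q}})^{\top} = \mathbf{U}\boldsymbol{\Sigma}\mathbf{V}^{\top}, \\
\mathbf{R}^{*} &= \mathbf{V}\,\operatorname{diag}\bigl(1,\dots,1,\det(\mathbf{V}\mathbf{U}^{\top})\bigr)\,\mathbf{U}^{\top}, \\
\mathbf{t}^{*} &= \bar{\mathbf{q}} - \mathbf{R}^{*}\bar{\mathbf{p}}, \\
\Vert T(\mathbf{x}) - T(\mathbf{y}) \Vert &= \Vert \mathbf{R}^{*}(\mathbf{x} - \mathbf{y}) \Vert = \Vert \mathbf{x} - \mathbf{y} \Vert
\quad \text{for } T(\mathbf{x}) = \mathbf{R}^{*}\mathbf{x} + \mathbf{t}^{*}.
\end{aligned}
```

The determinant term keeps \(\mathbf{R}^{*}\) a proper rotation; because \(\mathbf{R}^{*}\) is orthogonal, the translation cancels in differences and lengths are preserved.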

Theorem A.1: Let \(\mathbf{S}_{E}\) be the ensemble expectation of the rigidly transformed solutions of multiple realizations \(\mathbf{Z}_{k}\) in a lower-dimensional space, referred to in the workflow as the stabilized solution, i.e., \(\mathbf{S}_{E}=\mathbb{E}\left[T(\mathbf{Z}_{k})\right]\). Suppose an out-of-sample point (OOSP) is added that falls within the 95% confidence interval of each predictor feature of interest, \(P_{m}\), \(\forall m=1,\dots,M\), where \(M\in\mathbb{N}\). Then the stabilized solution \(\mathbf{S}_{OOSP}\), obtained by applying a rigid transformation to the low-dimensional representation \(\mathbf{Z}_{OOSP}\), approximately equals the ensemble expectation of the stabilized solution for the \(N\)-sample case, which is invariant to Euclidean transformations, i.e., \(\mathbf{S}_{E}\approx \mathbf{S}_{OOSP}\).

Proof: Suppose that adding an OOSP within the 95% confidence interval causes the stabilized solution obtained by rigid transformation of \(\mathbf{Z}_{OOSP}\) to differ from \(\mathbf{S}_{E}\). Let \(T: \mathbb{R}^{n}\to \mathbb{R}^{n}\) be the rigid transformation. This implies that no applicable rigid transformation ensures \(\Vert T(\mathbf{x}) - T(\mathbf{y}) \Vert = \Vert \mathbf{x}-\mathbf{y}\Vert, \forall \mathbf{x}, \mathbf{y}\in \mathbb{R}^{n}\), when \(\dim\{\mathbf{x}\}\ne \dim\{\mathbf{y}\}\). This contradicts the definition of a rigid transformation as stated in Lal and Pati [62] and Sorkine-Hornung and Rabinovich [63].

Therefore, because the OOSP lies within the 95% confidence interval of each predictor feature of interest, assume that the sets of anchor points from the \(N\)-sample and \((N+1)\)-sample cases have equal shape and size, i.e., \(\dim\{\mathbf{A}_{n}\}=\dim\{\mathbf{A}_{OOSP}\}=n\). Then a map \(T: \mathbb{R}^{n}\to \mathbb{R}^{n}\) that enforces rigid motion in the low-dimensional space is possible if \(\Vert T(\mathbf{A}_{n}) - T(\mathbf{A}_{OOSP}) \Vert = \Vert \mathbf{A}_{n}-\mathbf{A}_{OOSP}\Vert, \forall \mathbf{A}_{n}, \mathbf{A}_{OOSP}\in \mathbb{R}^{n}\), provided that the OOSP does not lie in the tail of the distribution of any \(P_{m}\). This constraint prevents the dissimilarity matrix \(\mathbf{D}\) from changing significantly. Therefore, Theorem A.1 holds.
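
The anchor-based inclusion of an OOSP can be illustrated numerically with a minimal sketch under stated assumptions. The stabilized solution \(\mathbf{S}_{E}\) and the \((N+1)\)-sample run are simulated rather than produced by MDS. The convex hull is computed with Andrew's monotone chain instead of the quickhull algorithm of Barber et al. All helper names are hypothetical. The rigid transform is estimated from the anchor rows only and then applied to all \(N+1\) points, so the OOSP is expressed in the stabilized frame. The original \(N\) points should then stay close to \(\mathbf{S}_{E}\), in line with \(\mathbf{S}_{E}\approx \mathbf{S}_{OOSP}\).

```python
import numpy as np

def convex_hull_indices(P):
    """Indices of 2-D convex hull vertices (Andrew's monotone chain), CCW order."""
    order = sorted(range(len(P)), key=lambda i: (P[i, 0], P[i, 1]))
    def cross(o, a, b):
        return ((P[a, 0] - P[o, 0]) * (P[b, 1] - P[o, 1])
                - (P[a, 1] - P[o, 1]) * (P[b, 0] - P[o, 0]))
    lower, upper = [], []
    for i in order:                                      # build the lower hull
        while len(lower) >= 2 and cross(lower[-2], lower[-1], i) <= 0:
            lower.pop()
        lower.append(i)
    for i in reversed(order):                            # build the upper hull
        while len(upper) >= 2 and cross(upper[-2], upper[-1], i) <= 0:
            upper.pop()
        upper.append(i)
    return lower[:-1] + upper[:-1]

def rigid_align(source, target):
    """Least-squares proper rotation R and translation t: target ~ source @ R.T + t."""
    mu_s, mu_t = source.mean(axis=0), target.mean(axis=0)
    U, _, Vt = np.linalg.svd((source - mu_s).T @ (target - mu_t))
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0] * (U.shape[0] - 1) + [d]) @ U.T
    return R, mu_t - R @ mu_s

rng = np.random.default_rng(3)
S_E = rng.normal(size=(50, 2))                           # stand-in stabilized N-sample solution
anchors = convex_hull_indices(S_E)                       # anchor set A_n

# Hypothetical (N+1)-sample run: same layout in a rotated/translated frame,
# small perturbations, and an OOSP inside the data cloud appended last.
theta = 1.1
R_run = np.array([[np.cos(theta), -np.sin(theta)],
                  [np.sin(theta),  np.cos(theta)]])
oosp = np.array([[0.2, -0.1]])
Z_plus = np.vstack([S_E + 0.005 * rng.normal(size=S_E.shape), oosp]) @ R_run.T + np.array([4.0, 2.0])

# Estimate the transform from the anchors only, then apply it to all N+1 points
R, t = rigid_align(Z_plus[anchors], S_E[anchors])
S_OOSP = Z_plus @ R.T + t
print(len(anchors), np.abs(S_OOSP[:-1] - S_E).max())     # original samples stay near S_E
print(S_OOSP[-1])                                        # OOSP in the stabilized frame
```

If the OOSP fell in the tail of a feature distribution, the perturbation of the \((N+1)\)-sample run would no longer be small, and the anchor misfit would grow accordingly.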

Credit author statement

Ademide O. Mabadeje: Data curation, Conceptualization, Methodology, Software, Validation, Visualization, Formal analysis, Writing – Original draft.

Michael Pyrcz: Data curation, Conceptualization, Supervision, Funding acquisition, Writing – Reviewing and Editing.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Mabadeje, A.O., Pyrcz, M.J. Rigid transformations for stabilized lower dimensional space to support subsurface uncertainty quantification and interpretation. Comput Geosci (2024). https://doi.org/10.1007/s10596-024-10278-x

DOI: https://doi.org/10.1007/s10596-024-10278-x