Abstract

In this contribution, we present a full overview of the continuous stochastic gradient (CSG) method, including convergence results, step size rules and algorithmic insights. We consider optimization problems in which the objective function requires some form of integration, e.g., expected values. Since approximating the integration by a fixed quadrature rule can introduce artificial local solutions into the problem while simultaneously raising the computational effort, stochastic optimization schemes have become increasingly popular in such contexts. However, known stochastic gradient type methods are typically limited to expected risk functions and inherently require many iterations. The latter is particularly problematic, if the evaluation of the cost function involves solving multiple state equations, given, e.g., in form of partial differential equations. To overcome these drawbacks, a recent article introduced the CSG method, which reuses old gradient sample information via the calculation of design dependent integration weights to obtain a better approximation to the full gradient. While in the original CSG paper convergence of a subsequence was established for a diminishing step size, here, we provide a complete convergence analysis of CSG for constant step sizes and an Armijo-type line search. Moreover, new methods to obtain the integration weights are presented, extending the application range of CSG to problems involving higher dimensional integrals and distributed data.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this contribution, we present a full overview of the continuous stochastic gradient (CSG) method, including convergence results, step size rules, algorithmic insights and applications from topology optimization. The prototype idea for CSG was first proposed in [1]. Therein, it was shown that for expected-valued objective functions, CSG with diminishing step sizes and exact integration weights (see Sect. 3) almost surely produces a subsequence converging to a stationary point [1, Theorem 20].

This work generalizes this result, providing proofs for almost sure convergence of the full sequence of iterates in the case of constant step sizes (Theorems 5.1 and 5.2) and backtracking line search techniques (Theorem 6.1). Additionally, the convergence results hold in a less restrictive setting and for generalized approaches to the integration weight calculation (see Sect. 3). Before going into details, we want to explain the reason for introducing what seems like yet another first-order stochastic optimization scheme.

1.1 Motivation

Within PDE-constrained optimization, settings with expected-valued objective functions arise in numerous applications, ranging from purely stochastic settings, like machine learning or noisy simulations [2, 3], to fully deterministic problems, in which one is interested in a design that is optimal for an infinite set of outer parameters, e.g. [4,5,6,7]. In this work, we assume the stochasticity to appear solely in the objective function. For problems in which the underlying state equation itself includes uncertainties, different techniques have been proposed in the past [8,9,10,11]. Moreover, we restrict our convergence analysis to the finite-dimensional case, and leave a generalization to infinite-dimensional spaces, see, e.g., [12, 13], for future work. Especially in large scale settings, one usually does not consider deterministic approaches (see, e.g., [14, 15]) for the solution of such problems, as they are generally too computationally expensive or even intractable. Instead, one uses stochastic optimization schemes, like the Stochastic Gradient (SG) [16] or Stochastic Average Gradient (SAG) method [17]. A large number of schemes have been derived from these and thoroughly analyzed, including sequential quadratic programming for stochastic optimization [18, 19], quasi-Newton stochastic gradient schemes [20,21,22,23] and the well known adaptive gradient schemes Adam & AdaGrad [24, 25], to name some prominent examples.

Problematically, such methods rely on a heavily restrictive setting, in which the objective function value of a design u is simply given as the expected value of some quantity j, i.e., \(J(u) = \mathbb {E}_x[j(u,x)]\). Even the basic setting of nesting two expectation values, i.e., \(J(u) = \mathbb {E}_y[j_2(y,\mathbb {E}_x[(j_1(u,x)])]\), is beyond the scope of the mentioned schemes and requires special techniques, e.g. the Stochastic Composition Gradient Descent (SCGD) method [26], which itself is again only applicable in this specific setting.

Problems with such complex structures arise in several applications, see, e.g., [27], where we investigate an application from the field of optimal nanoparticle design. Therein, our main interest lies in the optical properties of nanoparticles. Specifically, the color of particulate products, which has been of great interest for many fields of research [28,29,30,31,32,33], is what we are trying to optimize for. This and similar applications serve only as motivation in this paper. However, in the numerical analysis of CSG [27], it is demonstrated that CSG indeed represents an efficient approach to such problems. While a detailed introduction to this setting is given in [27], we want to briefly summarize the problems arising in this application.

To obtain the color of a particulate product, we need to calculate the important optical properties of the nanoparticles in the product. For each particle design, this requires solving the time-harmonic Maxwell’s equations, which, depending of the setting, is numerically expensive. Furthermore, the color of the whole product is not determined by the optical properties of a single particle. Instead, we need to average these properties over, e.g., the particle design distribution and their orientation in the particulate product. Afterwards, the averaged values are used to calculate intermediate results for the special setting. These results then need to be integrated over the range of wavelengths, which are visible to the human eye, to obtain the color of the particulate product. Finally, the objective function uses the resulting color in a nonlinear fashion, before yielding the actual objective function value. In general, the objective function has the form

and can easily involve even more convoluted terms in more advanced settings.

On the one hand, the computational cost of deterministic approaches to such problems range from tremendous to infeasible, since \(j_1\) is typically not easy to evaluate. On the other hand, standard schemes from stochastic optimization, like the ones mentioned above, simply cannot solve the full problem. Thus, we are in the need for a general method to tackle optimization problems, which are given by arbitrary concatenations of nonlinear functions and expectation values.

1.2 Properties of CSG

As mentioned in the previous section, the CSG method aims to offer a new approach to optimization problems that involve integration of properties, which are expensive to evaluate. In each iteration, CSG draws a very small number (typically 1) of random samples, as is the case for SG. However, instead of discarding the information collected through these evaluations after the iteration, these results are stored. In later iterations, all of the information collected along the way is used to build an approximation to the full objective function and its gradient, by a special linear combination. The weights appearing in this linear combination, which we call integration weights, can be calculated in several different fashions, which are detailed in Sect. 3.

This approach of approximating an unknown function by old sample information is similar to surrogate methods from Bayesian optimization [34,35,36], but differs from these techniques in an important aspect: While surrogate models require a rather low combined dimension of design and integration, the performance of CSG is only impacted by the dimension of integration. This is demonstrated in [27], where a problem with high dimensional design is optimized subject to a rather low dimensional integration.

As a key result for CSG, we are able to show that the approximation error in both the gradient and the objective function approximation vanishes during the optimization process (Lemma 4.6). Thus, in the course of the iterations, CSG more and more behaves like an exact full gradient algorithm and is able to solve optimization problems far beyond the scope of standard SG-type methods. Furthermore, we show that this special behaviour results in CSG having convergence properties similar to full gradient descent methods, while keeping advantages of stochastic optimization approaches, i.e., each step is computationally cheap. To be precise, our main results consist of proving convergence to a stationary point for constant step sizes (Theorem 5.1) and an Armijo-type line search (Theorem 6.1), which is based on the gradient and objective function approximations of CSG.

1.3 Limitations of the method

While CSG combines advantages of deterministic and stochastic optimization schemes, the hybrid approach also yields drawbacks, which we try to address throughout this contribution. As mentioned earlier, the intended application for CSG lies in expected-valued optimization problems, in which solving the state problem is computationally expensive. In many other settings that heavily rely on stochastic optimization methods, e.g., neural networks, the situation is different. Here, we can efficiently obtain a stochastic (sub-)gradient, meaning that we are better off simply performing millions of SG iterations, than a few thousand CSG steps. In these situations, the inherent additional computational effort that lies within the calculation of the CSG integration weights (see Sect. 3) is no longer negligible and usually can not be compensated by the improved gradient approximation.

Furthermore, while the design dimension has almost no impact on the performance, the convergence rate of CSG worsens as the dimension of integration increases. While this can be avoided, if the objective function imposes additional structure, it remains a drawback in general. A detailed analysis of this issue can be found in [27].

We emphasize that CSG and SG-type methods share many similarities. However, their intended applications are complementary to each other, with SG preferring objective functions of simple structure and computationally cheap sampling, while CSG prefers the opposite.

1.4 Structure of the paper

Section 2 states the general framework of this contribution as well as the basic assumptions we impose throughout the paper. Furthermore, the general CSG method is presented.

The integration weights, which play an important role in the CSG scheme, are detailed in Sect. 3. Therein, we introduce four different methods to obtain weights which satisfy the necessary assumptions made in Sect. 2 and analyze some of their properties. The section also describes techniques to implement mini-batches in the CSG method.

Auxiliary results concerning CSG are given in Sect. 4. This includes the important gradient approximation property of CSG (Lemma 4.6) and details on how to generalize CSG to even broader settings (Remark 4.1). The first main result, i.e., convergence for constant steps (Theorem 5.1 and Theorem 5.2), is presented in Sect. 5.

Afterwards, in Sect. 6, we incorporate an Armijo-type line search in the CSG method and provide a convergence analysis for the associated optimization scheme (Theorem 6.1). Furthermore, we introduce heuristics to efficiently approximate some hyperparameters. The resulting SCIBL-CSG method (Algorithm 4) achieves identical convergence results, but does not require any step sizes tuning.

The key features of CSG are numerically demonstrated for academic examples in Sect. 7. A detailed numerical analysis of CSG, concerning the performance for non-academic examples and convergence rates, is not part of this contribution, as this can be found in [27].

2 Setting and assumptions

In this section, we introduce the general setting as well as formulate and motivate the assumptions made throughout this contribution. Additionally, the basic CSG algorithm is presented.

2.1 Setting

As mentioned above, the convergence analysis of the CSG method is carried out in a simplified setting, where the objective function is given by a single expected value. For the general case, see Remark 4.1.

Since our convergence analysis will heavily rely on the similarities between CSG and a full gradient scheme, we adapt the standard deterministic setting of an L-smooth objective function (Remark 2.3). Moreover, as the CSG method approximates gradients by a nearest neighbor model, we require all appearing functions to be Lipschitz continuous with respect to all arguments (Assumption 2.3).

Definition 2.1

(Objective Function) For \({d_{\text {o}}},{d_{\text {r}}}\in \mathbb {N}\), we introduce the set of admissible optimization variables \(\mathcal {U}\subseteq \mathbb {R}^{d_{\text {o}}}\) and the parameter set \(\mathcal {X}\subseteq \mathbb {R}^{d_{\text {r}}}\). The objective function \(J:\mathcal {U}\rightarrow \mathbb {R}\) is defined as

where we assume \(j\in C^1(\mathcal {U}\times \mathcal {X};\,\mathbb {R})\) to be measurable and \(X\sim \mu \). The (simplified) optimization problem is then given by

Since \(\mathcal {U}\) and \(\mathcal {X}\) are finite-dimensional, we do not have to consider specific norms on these spaces due to equivalence of norms and can instead choose them problem specific. In the following, we will denote them simply by \(\Vert \cdot \Vert _{_\mathcal {U}}\) and \(\Vert \cdot \Vert _{_\mathcal {X}}\).

During the optimization, we need to draw independent random samples of the random variable X, as stated in the following assumption.

Assumption 2.1

(Sample Sequence) The sequence of samples \((x_n)_{n\in \mathbb {N}}\) is a sequence of independent identically distributed realizations of the random variable \(X\sim \mu \).

Remark 2.1

(Almost Sure Density) We define the support of the measure \(\mu \) as the (closed) subset

It is important to note, that a sample sequence satisfying Assumption 2.1 is dense in \({{\,\textrm{supp}\,}}(\mu )\) with probability 1. This can be seen as follows:

Let \(x\in {{\,\textrm{supp}\,}}(\mu )\) and \(\varepsilon >0\). Then, given an independent identically distributed sequence \(x_1,x_2,\ldots \sim \mu \), we have

Hence, by the Borel–Cantelli Lemma [37, Theorem 2.7],

Thus, the sequence \((x_n)_{n\in \mathbb {N}}\) is dense in \({{\,\textrm{supp}\,}}(\mu )\) with probability 1.

Remark 2.2

(Almost Sure Convergence Results) The (almost sure) density of the sample sequence plays a crucial role in the upcoming proofs. Hence, the convergence results presented in this contribution all hold in the almost sure sense. However, to improve the readability, we refrain from always mentioning this explicitly.

Moreover, the sets \(\mathcal {U}\) and \(\mathcal {X}\) need to satisfy additional regularity conditions. Since CSG handles constraints by projecting steps onto the set of admissible designs \(\mathcal {U}\), we need to ensure this operation is well-defined. Furthermore, the approximation property (Lemma 4.6) of the nearest neighbor model in CSG is fundamentally based on the density of sample points \((x_n)_{n\in \mathbb {N}}\), which is why we require \({{\,\textrm{supp}\,}}(\mu )\) to be bounded.

Assumption 2.2

(Regularity of \(\mathcal {U}\), \(\mathcal {X}\) and \(\mu \)) The set \(\mathcal {U}\subseteq \mathbb {R}^{d_{\text {o}}}\) is compact and convex. The set \(\mathcal {X}\subseteq \mathbb {R}^{d_{\text {r}}}\) is bounded with \({{\,\textrm{supp}\,}}(\mu )\subseteq \mathcal {X}\).

Notice that the second part of Assumption 2.2 can always be achieved, as long as \({{\,\textrm{supp}\,}}(\mu )\subseteq \mathbb {R}^{d_{\text {r}}}\) is bounded.

Finally, as in the deterministic case, we assume the gradient of the objective function to be Lipschitz continuous, in order to obtain convergence for constant step sizes.

Assumption 2.3

(Regularity of j) The function \(\nabla _1 j: \mathcal {U}\times \mathcal {X}\rightarrow \mathbb {R}^{d_{\text {o}}}\) is bounded and Lipschitz continuous, i.e., there exists constants \(C,L_j\in \mathbb {R}_{>0}\) such that

for all \(u,u_1,u_2\in \mathcal {U}\) and \(x,x_1,x_2\in \mathcal {X}\).

Remark 2.3

(Regularity of \(\nabla J\)) By Assumptions 2.1 to 2.3, \(J:\mathcal {U}\rightarrow \mathbb {R}\) is L-smooth, i.e., there exists \(L>0\) such that

2.2 The CSG method

Given a starting point \(u_1\in \mathcal {U}\) and a random parameter sequence \(x_1,x_2,\ldots \) according to Assumption 2.1, the basic CSG method is stated in Algorithm 1. In each iteration, the inner objective function j and gradient \(\nabla _1 j\) are evaluated only at the current design-parameter-pair \((u_n,x_n)\). Afterwards, the integrals appearing in J and \(\nabla J\) are approximated by a linear combination, consisting of all samples accumulated in previous iterations.

The coefficients \((\alpha _k)_{k=1,\ldots ,n}\) appearing in Step 4 of Algorithm 1, which we call integration weights, are what differentiates CSG from other stochastic optimization schemes. In [1], where the main idea of the CSG method was proposed for the first time, a special choice of how to calculate these weights was already presented. A recap of this procedure as well as several new methods to obtain the integration weights is given in detail in Sect. 3.

Furthermore, \(\mathcal {P}_\mathcal {U}\) appearing in Step 8 of Algorithm 1 denotes the orthogonal projection (in the sense of \(\Vert \cdot \Vert _\mathcal {U})\), i.e.,

The final general assumption we will use throughout this contribution is related to the integration weights mentioned above.

Assumption 2.4

(Integration Weights) Denote the sequence of designs and random parameters generated by Algorithm 1 until iteration \(n\in \mathbb {N}\) by \((u_k)_{k=1,\ldots ,n}\) and \((x_k)_{k=1,\ldots ,n}\), respectively. Define \(k^n:\mathcal {X}\rightarrow \{1,\ldots ,n\}\) as

We assume the integration weights appearing in Algorithm 1 satisfy the following:

For all \(n\in \mathbb {N}\), there exists a probability measure \(\mu _n\) on \(\mathcal {X}\) such that

-

(a)

The integration weights \(\big (\alpha _k^{(n)}\big )_{k=1,\ldots ,n}\) in iteration \(n\in \mathbb {N}\) are given by

$$\begin{aligned} \alpha _k^{(n)} = \int _\mathcal {X}\delta _{k^n(x)}(k)\mu _n(\textrm{d}x)\quad \text {for all }k=1,\ldots ,n, \end{aligned}$$(3)where \(\delta _{k^n(x)}(k)\) corresponds to the Dirac measure of \(k^n(x)\).

-

(b)

The measures \((\mu _n)_{n\in \mathbb {N}}\) converge weakly to \(\mu \), i.e., \(\mu _n\Rightarrow \mu \) for \({n\rightarrow \infty }\), where

$$\begin{aligned} \mu _n\Rightarrow \mu \quad \text {iff} \quad \int _\mathcal {X}f(x)\mu _n(\textrm{d}x) \rightarrow \int _\mathcal {X}f(x)\mu (\textrm{d}x)\quad \text {for all } f\in \mathcal {C}^{0,1}(\mathcal {X},\mathbb {R}). \end{aligned}$$

There is a very simple idea hidden behind the technicalities of Assumption 2.4. Condition (3) states that the integration weights should be somehow based on a nearest neighbor approximation

while the condition \(\mu _n\Rightarrow \mu \) ensures that the weight of a sample is reasonably chosen, i.e.,

Remark 2.4

(Choice of Neighbor) For some \(x\in \mathcal {X}\), there mighty not exist a unique nearest neighboring sample to \((u_n,x)\). As a result, \(k^n(x)\), as defined in (2), may consist of more than one index, resulting in x to be counted towards multiple integration weights in (3). Thus, if \(k^n(x)\) is not unique, we have to pick one of the nearest neighbors and neglect the other possible choices. However, it is not important how we make that choice, so we could, for example, replace (2) by

or choose an index at random in these special instances. Thus, in the remainder of this work, we refrain from imposing a specific choice of neighbors and use the notationally reduced formulation (2), essentially assuming that the set of \(x\in \mathcal {X}\) with more than one nearest neighbor to \((u_n,x)\) is of \(\mu _n\)-measure zero.

Due to the finite-dimensional setting, all convergence results proven in this contribution hold independent of the chosen norm on \(\mathcal {U}\times \mathcal {X}\) implied by (2). However, the specific choice may strongly influence the behavior (see, Sect. 3.5) and performance of CSG. Further insight on the integration weights and multiple methods to obtain weights satisfying Assumption 2.4 are given in the following Sect. 3.

3 Integration weights

In this section, we present four methods on how to obtain integration weights satisfying Assumption 2.4 in practice. The methods differ greatly in their associated computational cost and accuracy, allowing us to make an appropriate choice in different settings. Moreover, two of the proposed techniques require no knowledge about the underlying measure \(\mu \), allowing us to use them in purely data-driven applications.

We start with a brief motivation: Suppose that we are currently in the 9th iteration of the CSG algorithm. So far, we have sampled \(\nabla _1 j(u,x)\) at nine points \((u_i,x_i)_{i=1,\ldots ,9}\) and the full gradient at the current design is given by

In Fig. 1, the situation is shown for the case \({d_{\text {r}}}= {d_{\text {o}}}= 1\). How can we use the samples \(\big (\nabla _1 j(u_i,x_i)\big )_{i=1,\ldots ,9}\) in an optimal fashion, to build an approximation to \(\nabla J(u_9)\)?

3.1 Exact weights

To approximate the value of the integral along the bold line, we may use a nearest neighbor approximation. The underlying idea is visualized in Fig. 2. Thus, we define the sets

By construction, \(M_k\) contains all points \(x\in \mathcal {X}\), such that \((u_n,x)\) is closer to \((u_k,x_k)\) than to any other previous point we evaluated \(\nabla _1 j\) at. The full gradient is then approximated in a piecewise constant fashion by

Therefore, we call \(\alpha _k^{\text {ex}} = \mu (M_k)\) exact integration weights, since they are based on an exact nearest neighbor approximation. These weights were first introduced in [1] and offer the best approximation to the full gradient. However, the calculation of the exact integration weights requires full knowledge of the measure \(\mu \) and is based on a Voronoi tessellation [38], which is computationally expensive for high dimensions of \(\mathcal {U}\times \mathcal {X}\).

Note that, in the special case \(\dim (\mathcal {X})=1\), the calculation of the tessellation can be circumvented, regardless of \(\dim (\mathcal {U})\). Instead, the intersection points of the line \(\{u_n\}\times \mathcal {X}\) and all faces of active Voronoi cells can be obtained directly by solving the equations appearing in the definition of \(M_k\). This, however, still requires us to solve \(\mathcal {O}(n^2)\) quadratic equations per CSG iteration.

3.2 Exact hybrid weights

In some settings, the dimension of \(\mathcal {X}\) can be very small compared to the dimension of \(\mathcal {U}\). Hence, we might avoid computing a Voronoi tessellation in \(\mathcal {U}\times \mathcal {X}\) by treating these spaces separately. For this, we introduce the sets

Denoting by \(1_{M_k}\) the indicator function of \(M_k\), we define the exact hybrid weights as

Notice that the sets \(M_k\) still appear in the definition of the exact hybrid weights, but do not need to be calculated explicitly. Instead, we only have to find the nearest sample point to \((u_n,x_i)_{i=1,\ldots ,n}\), which can be done efficiently even in large dimensions. We do, however, still require knowledge of \(\mu \). The idea of the exact hybrid weights is captured in Fig. 3.

The red stars on the bold line represent the points \((u_n,x_i)\). For each cell, we determine the number of these points inside the cell and combine their corresponding measures \(\mu \big ({\widetilde{M}}_i\big )\), indicated by the colored line segments. Since \(\dim (\mathcal {X}) = 1\) in the shown example, the sets \({\widetilde{M}}_i\) are simply intervals, where the endpoints are given by the midpoints of two neighboring \(x_i\)

3.3 Inexact hybrid weights

To calculate the integration weights in the case that the measure \(\mu \) is unknown, we may approximate \(\mu \big ({\widetilde{M}}_i\big )\) empirically. All that is required for this approach is additional samples of the random variable X. To be precise, we draw enough samples such that in iteration n, we have in total f(n) samples of X, where \(f:\mathbb {N}\rightarrow \mathbb {N}\) is a function which is strictly increasing and satisfies \(f(n)\ge n\) for all \(n\in \mathbb {N}\). It is important to note, that we still evaluate \(\nabla _1 j(u_n,\cdot )\) at only one of these points, which we denote by \(x_{j_n}\). Thus, exchanging \(\mu \big ({\widetilde{M}}_i\big )\) by its empirical approximation yields the inexact hybrid weights

How fast f grows determines not only the quality of the approximation, but also the computational complexity of the weight calculation. Based on the choice of f, the inexact hybrid weights interpolate between the exact hybrid weights and the empirical weights, which will be introduced below. In Fig. 5, this behavior is shown for functions of the form \(f(n) = \lfloor n^\beta \rfloor \) with \(\beta \ge 1\). Figure 4 illustrates the general concept of the inexact hybrid weights.

The red stars on the bold line represent the points \((u_n,x_i)_{i=1,\ldots ,f(n)}\). Similar to the exact hybrid weights, for each cell of the Voronoin diagramm, we count the points \((u_n,x_{j_i})_{i=1,\ldots ,n}\) inside the cell (marked by dashed lines). Instead of assigning them the weights \(\mu \big ({\widetilde{M}}_{j_i}\big )\), we weight them by an empirical approximation to this quantity, i.e., by the percentage of random samples \((x_i)_{i=1,\ldots ,f(n)}\) that lie in \({\widetilde{M}}_{j_i}\)

Median of the results for problem (20), initialized with 1000 random starting points, for empirical weights, exact hybrid weights and several inexact hybrid weights. The function f appearing in the inexact hybrid weights was chosen as \(f(n)=\lfloor n^\beta \rfloor \)

3.4 Empirical weights

The empirical weights offer a computationally cheap alternative to the methods mentioned above. Their calculation does not require any knowledge of the measure \(\mu \). For the empirical weights, the quantity \(\mu (M_k)\) is directly approximated by its empirical counterpart, i.e.,

The corresponding picture is shown in Fig. 6. By iteratively storing the distances \(\Vert x_i-x_j\Vert \), \(i,j<n\), the empirical weights can be calculated very efficiently.

3.5 Metric on \(\mathcal {U}\times \mathcal {X}\)

As mentioned after Assumption 2.4, the convergence results proven in this contribution are independent of the specific inner norms on \(\mathcal {U}\) and \(\mathcal {X}\), denoted by \(\Vert \cdot \Vert _{_\mathcal {U}}\) and \(\Vert \cdot \Vert _{_\mathcal {X}}\). Furthermore, they also hold if we substitute the outer norm on \(\mathcal {U}\times \mathcal {X}\), appearing in (2), e.g., by a generalized \(L^1\)-outer norm, i.e.,

with \(c_1,c_2>0\). While this does not seem particularly helpful at first glance, it in fact allows to drastically change the practical performance of CSG. By altering the ratio \(\xi =\tfrac{c_2}{c_1}\), the CSG gradient approximation tends to consider fewer (\(\xi <1\)) or more (\(\xi >1\)) old samples in the linear combination. The effect such a choice can have in practice is visible in the numerical analysis of CSG in [27].

To be precise, choosing \(\xi \ll 1\) results in the nearest neighbor being predominantly determined by the distance in design. In the extreme case, this means that CSG initially behaves more like a traditional SG algorithm. Analogously, \(\xi \gg 1\) will initially yield a gradient approximation, in which all old samples are used, even if they differ greatly in design (for discrete measure \(\mu \), this corresponds to SAG). The corresponding Voronoi diagrams are shown in Fig. 7.

For the sake of consistency, all numerical examples will use the Euclidean norm \(\Vert \cdot \Vert _{_2}\) as inner norms and we fix \(\xi =1\).

3.6 Properties of the integration weights

In this section, we will show that each of the previously presented methods of obtaining integration weights satisfies Assumption 2.4. For this, we first have to identify the corresponding measures \(\mu _n\) and then need to argue why they converge weakly to the true measure \(\mu \).

Starting with the empirical weights \(\alpha ^\text {e}\), we see that, in the sense of Assumption 2.4, they correspond to the empirical measure (see, e.g., [39])

since

It was shown in [40, Theorem 3], that \(\mu _n^{\text {e}}\Rightarrow \mu \) for \(n\rightarrow \infty \), where \(\Rightarrow \) denotes the weak convergence of measures (in our case, the weak-* convergence in dual space theory, see, e.g., [41, Section 4.3]):

Likewise, the measures associated with the other integration weights are given by

Now, \(\mu _n^\text {ex}\Rightarrow \mu \) is clear. For \(\mu _n^\text {eh}\), given any \(f\in \mathcal {C}^{0,1}(\mathcal {X},\mathbb {R})\), we have

Here, we used the almost sure density of \((x_n)_{n\in \mathbb {N}}\), see Remark 2.1, together with the definition of \({\widetilde{M}}_i\), to conclude

Lastly, \(\mu _n^\text {ih}\Rightarrow \mu \) follows from combining \(\mu _n^\text {eh}\Rightarrow \mu \) and \(\mu _n^{e}\Rightarrow \mu \) with the triangle inequality.

3.7 Batches, patches and parallelization

All methods for obtaining the integration weights presented above heavily relied on evaluating the gradient \(\nabla _1 j\) only at a single sample point \((u_n,x_n)\) per iteration. In other words, Algorithm 1 has a natural batch size of 1. While we certainly do not want to increase this number too much, stochastic mini-batch algorithms outperform classic SG in some instances, especially if the evaluation of the gradient samples \(\nabla _1 j\big (u_n,x_n^{(i)}\big )_{i=1,\ldots ,N}\) can be done in parallel.

Increasing the batch size N in CSG leaves the question of how the integration weights should be obtained. We could, of course, simply collect all evaluation points and calculate the weights as usual. This, however, may significantly increase the computational cost, effectively scaling it by N. In many instances, there is a much more elegant solution to this problem, which lets us include mini-batches without any additional weight calculation cost.

Assume, for simplicity, that \(\mathcal {X}\) is an open interval and that \(\mu \) corresponds to a uniform distribution, i.e., there exist \(a<b\in \mathbb {R}\) such that

Given \(N\in \mathbb {N}\), we obtain

Thus, we can include mini-batches of size \(N\in \mathbb {N}\) into CSG by performing the following steps in each iteration:

-

1.)

Draw random sample \(x_n\in \left( a,a+\tfrac{b-a}{N}\right) \).

-

2.)

Evaluate \(\nabla _1 j(u_n,\cdot )\) at each

$$\begin{aligned} x_n^{(i)} = x_n + (i-1)\tfrac{b-a}{N},\quad i=1,\ldots ,N. \end{aligned}$$ -

3.)

Compute

$$\begin{aligned} \nabla _1 {\tilde{j}}(u_n,x_n) = \sum _{i=1}^N \nabla _1 j\big (u_n,x_n^{(i)}\big ). \end{aligned}$$ -

4.)

Calculate integration weights \((\alpha _k)_{k=1,\ldots ,n}\) as usual for \((u_k,x_k)_{k=1,\ldots ,n}\) and set

$$\begin{aligned} \hat{G}_n = \sum _{k=1}^n \alpha _k \nabla _1 {\tilde{j}}(u_k,x_k). \end{aligned}$$

It is straightforward to apply this idea to higher dimensional rectangular cuboids. Furthermore, the process of subdividing \(\mathcal {X}\) into smaller patches and drawing the samples in only one of these regions \(\mathcal {X}_1\subseteq \mathcal {X}\) can be generalized to more complex settings as well. However, it is necessary that translating the sample \(x_n\) into the other patches preserves the underlying probability distribution \(X\sim \mu \), e.g., if \(\mu \) is induced by a Lipschitz continuous density function and the different patches are obtained by reflecting, translating, rotating and scaling \(\mathcal {X}_1\). A conceptual example is shown in Fig. 8.

The effect of introducing mini-batches into CSG is tested for the optimization problem

by dividing \(\mathcal {X}=\left[ -\tfrac{1}{2},\tfrac{1}{2}\right] ^2\) into small squares of sidelength \(\tfrac{1}{N}\), achieving a batch size of \(N^2\). The results of 500 optimization runs with random starting points are given in Fig. 9. Although increasing the batch size does, as expected, not influence the rate of convergence, it still improves the overall performance and should definitely be considered for complex optimization problems, especially if the gradient sampling can be performed in parallel.

Median distance of the CSG iterates to the optimal solution \(u^*= 0\in \mathbb {R}^2\) of (4) in each iteration. The batch size used is equal to \(N^2\), where different values of N are indicated by the different colors. For all runs, a constant step size of \(\tau = \tfrac{1}{2}\) was chosen (Color figure online)

4 Auxiliary results

From now on, unless explicitly stated otherwise, we will always assume Assumptions 2.1 to 2.4 to be satisfied. In this section, we collect some auxiliary results known from the convergence analysis of gradient descent schemes, like Lemma 4.1. Afterwards, we focus on more specific results concerning the density of the sample point sequence \((u_n,x_n)_{n\in \mathbb {N}}\), that are later used to prove the approximation property of CSG (Lemma 4.6). Said property represents the most distinguishing feature between CSG and other stochastic optimization methods and plays the most important role for our later convergence results in Sects. 5 and 6. Note that similar results were already obtained in [1]. Therein, however, the argumentation relied heavily on the usage of diminishing step sizes and exact integration weights. The results presented here hold in a more general setting and allow for the analysis of continuous stochastic optimization schemes beyond pure gradient methods in the future, see Remark 4.2.

Definition 4.1

(Stationary Points) Let \(J\in C^1(\mathcal {U})\) be given. We say \(u^*\in \mathcal {U}\) is a stationary point of J, if

Furthermore, we denote by

the set of all stationary points of J. Note that, under Assumption 2.2, (5) is equivalent to

Gradient descent methods for L-smooth objective functions have thoroughly been studied in the past (e.g. [42, 43]). The key ingredients for obtaining convergence results with constant step sizes are the descent lemma and the characteristic property of the projection operator, which we state in the following.

Lemma 4.1

(Descent Lemma) If \(J:\mathcal {U}\rightarrow \mathbb {R}\) is L-smooth, then it holds

Lemma 4.2

(Characteristic Property of Projection) For a projected step in direction \(\hat{G}_n\), i.e., \(u_{n+1}=\mathcal {P}_\mathcal {U}(u_n-\tau _n\hat{G}_n)\), the following holds:

Proof

Lemma 4.1 corresponds to [44, Lemma 5.7]. Lemma 4.2 is a direct consequence of [44, Theorem 6.41]. \(\square \)

Before we move on to results that are specific for the CSG method, we state a general convergence result, which will be helpful in the later proofs.

Lemma 4.3

(Finitely Many Accumulation Points) Let \((u_n)_{n\in \mathbb {N}}\subseteq \mathbb {R}^d\) be a bounded sequence. Suppose that \((u_n)_{n\in \mathbb {N}}\) has only finitely many accumulation points and it holds \(\Vert u_{n+1}-u_n\Vert \rightarrow 0\). Then \((u_n)_{n\in \mathbb {N}}\) is convergent.

Proof

Let \(\left\{ \bar{u}_1,\ldots ,\bar{u}_K\right\} \) be the accumulation points of \((u_n)_{n\in \mathbb {N}}\) and define

i.e, the minimal distance between two accumulation points of \((u_n)_{n\in \mathbb {N}}\). The accumulation point closest to \(u_n\) is defined as:

Next up, we show that there exists \(N\in \mathbb {N}\) such that for all \(n\ge N\) it holds \(\Vert u_n-\bar{u}(n)\Vert < \tfrac{\delta _0}{4}\). We prove this by contradiction.

Thus, we assume there exist infinitely many \(n\in \mathbb {N}\) such that \(\Vert u_n-\bar{u}(n)\Vert \ge \tfrac{\delta _0}{4}\). This subsequence is again bounded and therefore must have an accumulation point. By construction, this accumulation point is no accumulation point of \((u_n)_{n\in \mathbb {N}}\), which is a contradiction.

Now, let \(N_1\in \mathbb {N}\) be large enough such that \(\Vert u_n-\bar{u}(n)\Vert <\tfrac{\delta _0}{4}\) for all \(n\ge N_1\). By our assumptions, there also exists \(N_2\in \mathbb {N}\) with \(\Vert u_{n+1}-u_n\Vert <\tfrac{\delta _0}{4}\) for all \(n\ge N_2\). Define \(N:=\max \{N_1,N_2\}\).

Let \(n \ge N\) and assume for contradiction that \(\bar{u}(n) \ne \bar{u}(n+1)\). We obtain

where \({{\,\textrm{dist}\,}}(A,B):= \inf _{x\in A,y\in B}\Vert x-y\Vert \) for \(A,B\subseteq \mathbb {R}^d\). This is a contradiction to \(\Vert u_{n+1}-u_n\Vert <\tfrac{\delta _0}{4}\) for all \(n \ge N\).

We thus conclude that \(u_n\in \mathcal {B}_{\delta _0/4}\big (\bar{u}(n)\big )\) implies \(u_{n+1}\in \mathcal {B}_{\delta _0/4}\big (\bar{u}(n)\big )\) as well. Since \(\bar{u}(n)\) is the only accumulation point on \(\mathcal {B}_{\delta _0/4}\big (\bar{u}(n)\big )\), it follows that \(u_n\rightarrow \bar{u}(N)\).

\(\square \)

4.1 Results for CSG approximations

From now on, let \((u_n)_{n\in \mathbb {N}}\) denote the sequence of iterates generated by Algorithm 1. In this section, we want to show that the CSG approximations \(\hat{J}_n\) and \(\hat{G}_n\) in the course of iterations approach the values of \(J(u_n)\) and \(\nabla J(u_n)\), respectively. This is a key result for the convergence theorems stated in Sect. 5 and Sect. 6.

Lemma 4.4

(Density Result in \(\mathcal {X}\)) Let \((x_n)_{n\in \mathbb {N}}\) be the random sequence appearing in Algorithm 1. For all \({\varepsilon }>0\) there exists \(N\in \mathbb {N}\) such that

Proof

Utilizing the compactness of \({{\,\textrm{supp}\,}}(\mu )\subseteq \mathbb {R}^{d_{\text {r}}}\), there exists \(T\in \mathbb {N}\) such that \(\left( \mathcal {B}_{{\varepsilon }/2}(m_i)\right) _{i=1,\ldots ,T}\) is an open cover of \({{\,\textrm{supp}\,}}(\mu )\) consisting of balls with radius \(\frac{{\varepsilon }}{2}\) centered at points \(m_i\in {{\,\textrm{supp}\,}}(\mu )\). Thus, for each \(x\in {{\,\textrm{supp}\,}}(\mu )\) we can find \(i_x\in \{1,\ldots ,T\}\) with \(x\in \mathcal {B}_{{\varepsilon }/2}(m_{i_x})\). Hence, by Remark 2.1, for each \(i=1,\ldots ,T\), there exists \(n_i\in \mathbb {N}\) satisfying

Defining

for all \(x\in {{\,\textrm{supp}\,}}(\mu )\) we have

\(\square \)

Lemma 4.5

(Density Result in \(\mathcal {U}\times \mathcal {X}\)) Let \((u_n)_{n\in \mathbb {N}}\), \((x_n)_{n\in \mathbb {N}}\) be the sequences of optimization variables and sample sequence appearing in Algorithm 1. For all \({\varepsilon }>0\) there exists \(N\in \mathbb {N}\) such that

for all \(n>N\) and all \(x\in {{\,\textrm{supp}\,}}(\mu )\).

Proof

Since \(\mathcal {U}\) is compact, we can find a finite cover \(\left( \mathcal {B}_{{\varepsilon }/4}(m_i)\right) _{i=1,\ldots ,T}\) of \(\mathcal {U}\) consisting of \(T\in \mathbb {N}\) balls with radius \(\frac{{\varepsilon }}{4}\) centered at points \(m_i\in \mathcal {U}\). Define \(I\subseteq \{1,\ldots ,T\}\) as

By our definition of I, for each \(i\in \{1,\ldots ,T\}\setminus I\) there exists \({\tilde{N}}_i\in \mathbb {N}\) such that \({u_n\not \in \mathcal {B}_{{\varepsilon }/4}(m_i)}\) for all \(n>{\tilde{N}}_i\). Setting

it follows that

For \(i\in I\) let \(\big ( u_{n^{(i)}_t}\big )_{t\in \mathbb {N}}\) be the subsequence consisting of all elements of \((u_n)_{n\in \mathbb {N}}\) that lie in \(\mathcal {B}_{{\varepsilon }/4}(m_i)\). Observe that \(\big (x_{n_t^{(i)}}\big )_{t\in \mathbb {N}}\) is independent and identically distributed according to \(\mu \), since for all \(A\subseteq {{\,\textrm{supp}\,}}(\mu )\) and each \(i\in I\), it holds

Thus, by Remark 2.1, \(\big (x_{n_t^{(i)}}\big )_{t\in \mathbb {N}}\) is dense in \({{\,\textrm{supp}\,}}(\mu )\) with probability 1 for all \(i\in I\). By Lemma 4.4, we can find \(K_i\in \mathbb {N}\) such that

Define

as well as \(N:= \max (N_1,N_2)\). Notice that this definition of N implies for all \(i\in I\) and all \(n>N\)

By (6), for all \(n>N\) there exists \(i\in I\) such that

Now, given \(x\in {{\,\textrm{supp}\,}}(\mu )\) and \(n> N\), we choose \(j\in I\) such that \(u_n\in \mathcal {B}_{\varepsilon /4}(m_j)\). Thus, it holds

where we used (7) and \(u_n,u_{n^{(j)}_t}\in \mathcal {B}_{{\varepsilon }/4}(m_j)\) in the last line. \(\square \)

Lemma 4.6

(Approximation Results for J and \(\nabla J\)) The approximation errors for \(\nabla J\) and J vanish in the course of the iterations, i.e.,

Proof

Denote by \(\nu _n\) the measure corresponding to the integration weights according to (3). For \(x\in {{\,\textrm{supp}\,}}(\mu )\), we define the closest index in the current iteration \(k^n(x)\) as

i.e., we have

Now, it holds

where \(L_j\) is the Lipschitz constant of \(\nabla _1 j\) as defined in Assumption 2.3.

First, since \(Z_n\) is uniformly (in n) Lipschitz continuous, we obtain

Here, \(L_Z\) corresponds to the Lipschitz constant of \(Z_n\) and \(d_W\) denotes the Wasserstein distance of the measure \(\nu _n\) and \(\mu \). By Assumption 2.2, \(\mathcal {X}\) is bounded and by Assumption 2.4, we have \(\nu _n\Rightarrow \mu \). Thus, [45, Theorem 6] yields \(d_W(\nu _n,\mu )\rightarrow 0\). Additionally, since \(Z_n\) is bounded and converges pointwise to 0 (see Lemma 4.5), we use Lebesgue’s dominated convergence theorem and conclude

For the second part, let \(Q_n\) be an arbitrary coupling of \(\nu _n\) and \(\mu \), i.e., \(Q_n(\cdot \times \mathcal {X}) = \nu _n\) and \(Q_n(\mathcal {X}\times \cdot )=\mu \). Utilizing the Lipschitz continuity of \(\nabla _1 j\) (Assumption 2.3) once again, we obtain

Denote the set of all couplings of \(\nu _n\) and \(\mu \) by \(\textbf{Q}\). Since \(Q_n\) was arbitrary, it holds

finishing the proof of \(\left\| \hat{G}_n - \nabla J(u_n)\right\| \rightarrow 0\). The second part of the claim follows analogously.

\(\square \)

As a final remark before starting the convergence analysis, we want to give further details on the class of problems that can be solved by the CSG algorithm.

Remark 4.1

(Generalized Setting) Suppose that, in addition to \(\mathcal {U},\mathcal {X}\) and J as defined in the introduction, we are given a convex set \(\mathcal {V}\subseteq \mathbb {R}^{d_1}\) for some \(d_1\in \mathbb {N}\) and a continuously differentiable function \(F:\mathcal {V}\times \mathbb {R}\rightarrow \mathbb {R}\). Now, if we consider the optimization problem

the gradient of the objective function with respect to (u, v) is given by

It is a direct consequence of Lemma 4.6, that

Thus, we can use the CSG method to solve (8) and all our convergence results carry over to this setting, as long as the new objective function satisfies Assumption 2.3.

Furthermore, let \(\mathcal {Y}\subseteq \mathbb {R}^{d_2}\) for some \(d_2\in \mathbb {N}\). Assume that we are given a probability measure \(\nu \) such that the pair \((\mathcal {Y},\nu )\) satisfies the same assumptions we imposed on \((\mathcal {X},\mu )\) and consider the optimization problem

Again, the gradient of this objective function can be approximated by the CSG method, if \({\tilde{F}}:\mathcal {V}\times \mathbb {R}\times \mathcal {Y}\rightarrow \mathbb {R}\) is Lipschitz continuously differentiable.

It is clear that we can continue to wrap around functions or expectation values in these fashions indefinitely. Therefore, we see that the scope of the CSG method is far larger than problems like (1) and includes many settings, which stochastic gradient descent methods can not handle, like nested expected values, tracking of expected values and many more.

A numerical example for a composite objective function, where CSG is compared to a method from the literature, can be found in Sect. 7.2.

Remark 4.2

Carefully observing the proofs above, it can be seen that the approximation property (Lemma 4.6) almost surely holds for any sequence of designs, regardless of the method it is obtained by. Thus, the results presented in this section are a consequence of the underlying stochastic approximation scheme through our integration weights and do not depend on the outer gradient descent method, in which they are used. Therefore, for future work, we may even consider combining the approximations \(\hat{J}_n\) and \(\hat{G}_n\) with more advanced optimization schemes from literature.

5 Convergence results for constant step size

Our first result considers the special case in which the objective function J appearing in (1) has only finitely many stationary points on \(\mathcal {U}\). The proof of this result serves as a prototype for the later convergence results, as they share a common idea.

To illustrate the practical performance of CSG with constant step sizes, an academic example is presented in Sect. 7.1.

Theorem 5.1

(Convergence for Constant Steps) Assume that J has only finitely many stationary points on \(\mathcal {U}\).

Then CSG with a positive constant step size \(\tau _n=\tau <\tfrac{2}{L}\) converges to a stationary point of J with probability 1.

We want to sketch the proof of Theorem 5.1 before going into details. In the deterministic case, Lemma 4.1 and Lemma 4.2 are used to show that \({J(u_{n+1})\le J(u_n)}\) for all \(n\in \mathbb {N}\). It then follows from a telescopic sum argument that \(\Vert u_{n+1}-u_n\Vert \rightarrow 0\), i.e., every accumulation point of \((u_n)_{n\in \mathbb {N}}\) is stationary (compare [43, Theorem 5.1] or [44, Theorem 10.15]).

In the case of CSG, we can not guarantee monotonicity of the objective function values \((J(u_n))_{n\in \mathbb {N}}\). Instead, we split the sequence into two subsequences. On one of these subsequences, we can guarantee a decrease in function values, while for the other sequence we can not. However, we prove that the latter sequence can accumulate only at stationary points of J. The main idea is then that \((u_n)_{n\in \mathbb {N}}\) can have only one accumulation point, because “switching" between several points conflicts with the decrease in function values that must happen for steps in between.

Proof of Theorem 5.1

The following arguments are being made for a fixed sample sequence \((x_n)_{n\in \mathbb {N}}\) and the corresponding trajectory of designs \((u_n)_{n\in \mathbb {N}}\). Moreover, during the proof, we construct several subsequences of \((u_n)_{n\in \mathbb {N}}\). By construction and Assumption 2.1, the associated subsequences of \((x_n)_{n\in \mathbb {N}}\) are again independent identically distributed realizations of \(X\sim \mu \). Thus, each of these subsequences is dense in \({{\,\textrm{supp}\,}}(\mu )\) with probability 1. Since this property is required for the following arguments, we assume that \((x_n)_{n\in \mathbb {N}}\) satisfies this condition.

By Lemma 4.1 we have

Utilizing Lemma 4.2 and the Cauchy-Schwarz inequality, we now obtain

Since \(\frac{L}{2}-\frac{1}{\tau }<0\), our idea is the following:

Steps that satisfy

i.e., steps with small errors in the gradient approximation, will yield decreasing function values.

On the other hand, the remaining steps will satisfy \(\Vert u_{n+1}-u_n\Vert _{_\mathcal {U}}\rightarrow 0\), due to \(\Vert \nabla J(u_n)-\hat{G}_n\Vert \rightarrow 0\) (see Lemma 4.6). With this in mind, we distinguish three cases: In Case 1, (11) is satisfied for almost all steps, while in Case 2 it is satisfied for only finitely many steps. In the last case, there are infinitely many steps satisfying and infinitely many steps violating (11).

Case 1: There exists \(N\in \mathbb {N}\) such that

In this case, it follows from (10) that \(J(u_{n+1})\le J(u_n)\) for all \(n\ge N\). Therefore, the sequence \((J(u_n))_{n\in \mathbb {N}}\) is monotonically decreasing for almost every \(n\in \mathbb {N}\). Since J is continuous and \(\mathcal {U}\) is compact, J is bounded and we therefore have \({J(u_n)\rightarrow \bar{J}\in \mathbb {R}}\). Thus, it holds

Since \(\frac{1}{2}\left( \frac{L}{2}-\frac{1}{\tau }\right) <0\), we must have \(\Vert u_{n+1}-u_n\Vert _{_\mathcal {U}}\rightarrow 0\). Let \(\left( u_{n_k}\right) _{k\in \mathbb {N}}\) be a convergent subsequence with \(u_{n_k}\rightarrow {\overline{u}}\in \mathcal {U}\).

By Lemma 4.6, we have \(\hat{G}_{n_k}\rightarrow \nabla J({\overline{u}})\) and thus

i.e., every accumulation point of \((u_n)_{n\in \mathbb {N}}\) is stationary. Since J has only finitely many stationary points, Lemma 4.3 yields the convergence of \((u_n)_{n\in \mathbb {N}}\) to a stationary point of J.

Case 2: There exists \(N\in \mathbb {N}\) such that

By Lemma 4.6, we have \(\Vert \nabla J(u_n)-\hat{G}_n\Vert \rightarrow 0\). Since \(\frac{1}{2}\left( \frac{1}{\tau }-\frac{L}{2}\right) >0\), the above inequality directly implies \(\Vert u_{n+1}-u_n\Vert _{_\mathcal {U}}\rightarrow 0\). Analogously to Case 1, we conclude that \((u_n)_{n\in \mathbb {N}}\) converges to a stationary point of J.

Case 3: There are infinitely many \(n\in \mathbb {N}\) with

and infinitely many \(n\in \mathbb {N}\) with

In this case, we split \((u_n)_{n\in \mathbb {N}}\) disjointly in the two sequences \((u_{a(n)})_{n\in \mathbb {N}}\) and \((u_{b(n)})_{n\in \mathbb {N}}\), such that we have

and

We call \((u_{a(n)})_{n\in \mathbb {N}}\) the sequence of descent steps. For \((u_{b(n)})_{n\in \mathbb {N}}\), observe that, as in Case 2, we directly obtain

and every accumulation point of \((u_{b(n)})_{n\in \mathbb {N}}\) is stationary. Therefore, as in the proof of Lemma 4.3, for all \(\varepsilon >0\) there exists \(N\in \mathbb {N}\) such that

where \({\overline{u}}_1,\ldots ,{\overline{u}}_K\) denote the \(K\in \mathbb {N}\) accumulation points of \((u_{b(n)})_{n\in \mathbb {N}}\).

Now, we prove by contradiction that \(J({\overline{u}}_1)=J({\overline{u}}_2)=\ldots =J({\overline{u}}_K)\).

Suppose that this is not the case. Then we have at least \(M\ge 2\) function values of accumulation points and

for some \(f_1>f_2>\ldots >f_M\in \mathbb {R}\). Now, choose \(\varepsilon >0\) small enough, such that

where \(c_{_L}\) denotes the Lipschitz constant of J. By (12) and (13), there exists \(N\in \mathbb {N}\) such that for all \(n\ge N\) we have

Therefore, for \(n\ge N\) and \(i\in \{1,\ldots ,K\}\), we have

Especially, for all \(n\ge N\) and all \(i=1,\ldots , K\) it holds

It follows from (14) and (16) that for \(n\ge N+1\):

-

(A)

If \(u_{b(n)}\in \mathcal {B}_{\frac{\varepsilon }{4}}({\overline{u}}_i)\) and \(u_{b(n+1)}\in \mathcal {B}_{\frac{\varepsilon }{4}}({\overline{u}}_j)\) for some \(j\ne i\), then there must be at least one descent step between \(u_{b(n)}\) and \(u_{b(n+1)}\).

-

(B)

If \(u_{b(n)}\in \mathcal {B}_{\frac{\varepsilon }{4}}({\overline{u}}_i)\) and \(u_{b(n)-1}\not \in \mathcal {B}_{\frac{\varepsilon }{4}}({\overline{u}}_i)\), then \(u_{b(n)-1}\) must be a descent step.

Observe that (A) follows directly from (14) and (16), as moving from the vicinity of \({\overline{u}}_i\) to a neighborhood of \({\overline{u}}_j\) requires that there is an intermediate step \(u_n\) with \(\min _{i\in \{1,\ldots ,K\}}\Vert u_{b(n)}-{\overline{u}}_i\Vert _{_\mathcal {U}}\ge \tfrac{\varepsilon }{4}\). Similarly, (B) is just the second condition in (16) reformulated.

Note that, given \({\overline{u}}_j\), \({\overline{u}}_k\) with \(J({\overline{u}}_j) = f_1\) and \(J({\overline{u}}_i) \le f_2\), we have

Thus, starting at \(u\in \mathcal {B}_{\tfrac{{\varepsilon }}{4}}({\overline{u}}_i)\), we can not reach \(v\in \mathcal {B}_{\tfrac{{\varepsilon }}{4}}({\overline{u}}_j)\) by descent steps alone. Now, let \(i\in \{1,\ldots ,K\}\) be chosen such that \(J({\overline{u}}_i)\le f_2\) and let \(n_0\ge N\) be chosen such that \(u_{b(n_0)}\in \mathcal {B}_{\frac{\varepsilon }{4}}({\overline{u}}_i)\) and \(u_{b(n_0)+1}\not \in \mathcal {B}_{\frac{\varepsilon }{4}}({\overline{u}}_i)\). Using (15) and (17), we obtain

Therefore, descent steps can never reach \(\mathcal {B}_{\frac{\varepsilon }{4}}\left( J^{-1}(\{f_1\}\cap \{{\overline{u}}_1,\ldots ,{\overline{u}}_K\})\right) \) again! It follows from item (B), that \(u_n\not \in \mathcal {B}_{\frac{\varepsilon }{4}}\left( J^{-1}(\{f_1\})\cap \{{\overline{u}}_1,\ldots ,{\overline{u}}_K\}\right) \) for all \({n\ge b(n_0)}+1\), in contradiction to \(J^{-1}(\{f_1\})\cap \{{\overline{u}}_1,\ldots ,{\overline{u}}_K\}\) consisting of at least one accumulation point of \((u_n)_{n\in \mathbb {N}}\). Hence, we have

Next, we show that every accumulation point of \((u_{a(n)})_{n\in \mathbb {N}}\) is stationary. We prove this by contradiction.

Assume there exists a non-stationary accumulation point \({\overline{u}}\) of \((u_{a(n)})_{n\in \mathbb {N}}\). Observe that

Case 3.1: \(J({\overline{u}})<\bar{J}\).

Then, by the same arguments as above, there exists \(N\in \mathbb {N}\) and \({\varepsilon }>0\) s.t.

This is a contradiction to \({\overline{u}}_1,\ldots ,{\overline{u}}_K\) being accumulation points of \((u_n)_{n\in \mathbb {N}}\).

Case 3.2: \(J({\overline{u}})>\bar{J}\).

In this case, there exists \(N\in \mathbb {N}\) and \({\varepsilon }>0\) such that \(u_n\not \in \mathcal {B}_{\frac{\varepsilon }{4}}\left( {\overline{u}}\right) \) for all \(n\ge N\). This is a contradiction to \({\overline{u}}\) being an accumulation point of \((u_n)_{n\in \mathbb {N}}\).

Case 3.3: \(J({\overline{u}})=\bar{J}\).

Since \({\overline{u}}\) is an accumulation point of \((u_{a(n)})_{n\in \mathbb {N}}\), there exists a subsequence \((u_{a(n_k)})_{k\in \mathbb {N}}\) with \(u_{a(n_k)}\rightarrow {\overline{u}}\). The sequence \((u_{a(n_k)-1})_{k\in \mathbb {N}}\) is bounded and therefore has at least one accumulation point \({\overline{u}}_{-1}\) and a subsequence \((u_{a(n_{k_t})-1})_{t\in \mathbb {N}}\) with \(u_{a(n_{k_t})-1}\rightarrow {\overline{u}}_{-1}\). It follows that

As \({\overline{u}}\) is not stationary by our assumption, \({\overline{u}}_{-1}\ne {\overline{u}}\) and \({\overline{u}}_{-1}\) is no stationary point of J. Thus, Lemma 4.1 combined with Lemma 4.2 yields

Therefore, \({\overline{u}}_{-1}\) is an accumulation point of \((u_{a(n)})_{n\in \mathbb {N}}\), which satisfies \(J({\overline{u}}_{-1})>J({\overline{u}})=\bar{J}\). This, however, is impossible, as seen in Case 3.2.

In conclusion, in Case 3, all accumulation points of \((u_n)_{n\in \mathbb {N}}\) are stationary. Thus, on every convergent subsequence we have \(\Vert u_{n_k+1}-u_{n_k}\Vert _{_\mathcal {U}}\rightarrow 0\). Since \((u_n)_{n\in \mathbb {N}}\) is bounded, this already implies \(\Vert u_{n+1}-u_n\Vert _{_\mathcal {U}}\rightarrow 0\). Now, Lemma 4.3 yields the claimed convergence of \((u_n)_{n\in \mathbb {N}}\) to a stationary point of J.

\(\square \)

The idea of the proof above still applies in the case that J is constant on some parts of \(\mathcal {U}\), i.e., J can have infinitely many stationary points. We obtain the following convergence result:

Theorem 5.2

Let \(\mathcal {S}(J)\) be the set of stationary points of J on U as defined in Definition 4.1. Assume that the set

is of Lebesgue-measure zero. Then, with probability 1, every accumulation point of the sequence generated by CSG with constant step size \(\tau <\tfrac{2}{L}\) is stationary and we have convergence in function values.

Remark 5.1

Comparing Theorem 5.1 and Theorem 5.2, observe that under the weaker assumptions on the set of stationary points of J, we no longer obtain convergence for the whole sequence of iterates. To illustrate why that is the case, consider the function \(J:\mathbb {R}^{d_{\text {o}}}\rightarrow \mathbb {R}\) given by \(J(u)=\cos (\pi \Vert u\Vert _2^2)\) and \({\mathcal {U}=\{ u\in \mathbb {R}^{d_{\text {o}}}\,:\,\Vert u\Vert _2^2\le \frac{3}{2}\}}\). Then, \(\mathcal {S}(J)=\{0\}\cup \{u\in \mathcal {U}:\,\Vert u\Vert _2 =1\}\), i.e., every point on the unit sphere is stationary. Thus, we can not use Lemma 4.3 at the end of the proof to obtain convergence of \((u_n)_{n\in \mathbb {N}}\). Theoretically, it might happen that the iterates \((u_n)_{n\in \mathbb {N}}\) cycle around the unit sphere, producing infinitely many accumulation points, all of which have the same objective function value. This, however, did not occur when testing this example numerically.

Remark 5.2

While the assumption in Theorem 5.2 seems unhandy at first, there is actually a rich theory concerning such properties. For example, Sard’s Theorem [46] and generalizations [47] give that the assumption holds if \(J\in C^{d_{\text {o}}}\) and \(\mathcal {U}\) has smooth boundary. Even though it can be shown that there exist functions, which do not satisfy the assumption (e.g. [48, 49]), such counter-examples need to be precisely constructed and will most likely not appear in any application.

Proof of Theorem 5.2

As in the proof of Theorem 5.1, we consider a fixed sample sequence \((x_n)_{n\in \mathbb {N}}\) and the corresponding trajectory of designs \((u_n)_{n\in \mathbb {N}}\). Again, with probability 1, we can assume all relevant subsequences of \((x_n)_{n\in \mathbb {N}}\) to be dense in \({{\,\textrm{supp}\,}}(\mu )\). Now, proceeding analogously as in the proof of Theorem 5.1, we only have to adapt two intermediate results in Case 3:

-

(R1)

The objective function values of all accumulation points of \((u_{b(n)})_{n\in \mathbb {N}}\) are equal.

-

(R2)

Every accumulation point of \((u_{a(n)})_{n\in \mathbb {N}}\) is stationary.

Assume first, that (R1) does not hold. Then there exist two stationary points \({{\overline{u}}_1\ne {\overline{u}}_2}\) with \(J({\overline{u}}_1)< J({\overline{u}}_2)\). Now, (A) and (B) shown in the proof of Theorem 5.1 yield that there must exist an accumulation point \({\overline{u}}_3\) of \((u_{b(n)})_{n\in \mathbb {N}}\), i.e., a stationary point, with \(J({\overline{u}}_1)<J({\overline{u}}_3)<J({\overline{u}}_2)\). Iterating this procedure, we conclude that the set \(\mathcal {N}\cap \big [ J({\overline{u}}_1),J({\overline{u}}_2)\big ]\) is dense in \(\big [ J({\overline{u}}_1),J({\overline{u}}_2)\big ]\).

By continuity of \(u\mapsto \mathcal {P}_\mathcal {U}\big (u-\tau \nabla J(u)\big )-u\) and compactness of \(\mathcal {U}\), we see that

contradicting our assumption that \(\lambda (\mathcal {N})=0\).

For (R2), assume that \((u_{a(n)})_{n\in \mathbb {N}}\) has a non-stationary accumulation point \({\overline{u}}\). Since \(\mathcal {S}(J)\) is compact, it holds

Thus, by the same arguments as in Case 3.1, 3.2 and 3.3 within the proof of Theorem 5.1, we observe that such a point \({\overline{u}}\) can not exist. \(\square \)

6 Backtracking

Choosing an appropriate step size in practice is usually very challenging. While a deterministic full gradient method is typically carried out with a line search scheme, it is unclear how such techniques can be included in standard stochastic gradient methods. However, since we have \({\Vert \hat{G}_n-\nabla J(u_n)\Vert \rightarrow 0}\) and \({\Vert \hat{J}_n- J(u_n)\Vert \rightarrow 0}\) in CSG, we can use these approximations to refine the step length by a backtracking line search method, similar to the deterministic case.

The stabilizing effect of these augmentations can be seen in Sect. 7.3, where we compare CSG with backtracking (Algorithm 3) to AdaGrad.

Definition 6.1

For simplicity, we define

Furthermore, given n gradient samples \(\nabla _1 j(u_i,x_i)\) and n cost function samples \(j(u_i,x_i)\), by calculating the weights \(\alpha _i^{(n)}(u)\) w.r.t. a given point \(u\in \mathcal {U}\), we define

which are approximations to J(u) and \(\nabla J(u)\) respectively.

Based on the well known Armijo-Wolfe conditions from continuous optimization [50,51,52], we introduce the following step size conditions:

Definition 6.2

For \(0<c_1<c_2<1\), we call \(s_n(\tau _n)\) an Armijo step, if

Additionally, we Define the following Wolfe-type condition:

We try to obtain a step size that satisfies (SW1) and (SW2) by a bisection approach, as formulated in Algorithm 2. Since we can not guarantee to find a suitable step size, we perform only a fixed number \(T\in \mathbb {N}\) of backtracking steps. Notice that the curvature condition (SW2) only has an influence, if \(u_n-\tau _n\hat{G}_n\in \mathcal {U}\) (see line 6 in Algorithm 2). This way, we gain the advantages of a Wolfe line search while inside \(\mathcal {U}\), without ruling out stationary points at the boundary of \(\mathcal {U}\).

For our convergence analysis, we assume that in each iteration of CSG with line search, Algorithm 2 is initiated with the same \(\eta _n=\eta >0\). From a practical point of view, we might also consider a diminishing sequence \((\eta _n)_{n\in \mathbb {N}}\) of backtracking initializations (see Sect. 7.3). The CSG method with backtracking line search (bCSG) is given in Algorithm 3. Since all of the terms \({\tilde{J}}_n(s_n(\tau _n))\), \(\hat{J}_n\) and \(\hat{G}_n\) appearing in (SW1) contain some approximation error when compared to \(J(s_n(\tau _n))\), \(J(u_n)\) and \(\nabla J(u_n)\) respectively, especially the first iterations of Algorithm 3 might profit from a slightly weaker formulation of (SW1). Therefore, in practice, we will replace (SW1) by the non-monotone version

for some \(K\in \{0,\ldots ,n\}\).

6.1 Convergence results

For CSG with backtracking line search, we obtain the same convergence results as for constant step sizes:

Theorem 6.1

(Convergence for Backtracking Line Search) Let \(\mathcal {S}(J)\) be the set of stationary points of J on U as defined in Definition 4.1. Assume that

is of Lebesgue-measure zero and T in Algorithm 2 is chosen large enough, such that \(2^{-T}\eta <\frac{2}{L}\). Then, with probability 1, every accumulation point of the sequence \((u_n)_{n\in \mathbb {N}}\) generated by Algorithm 3 is stationary and we have convergence in function values.

If J satisfies the stronger assumption of having only finitely many stationary points, \((u_n)_{n\in \mathbb {N}}\) converges to a stationary point of J.

Proof

Using the same notation as in the proof of Theorem 5.1, we again assume the fixed sample sequence \((x_n)_{n\in \mathbb {N}}\) and all of its subsequences constructed in the following to be dense in \({{\,\textrm{supp}\,}}(\mu )\), which holds with probability 1, see Remark 2.1. Notice first, that there are only two possible outcomes of Algorithm 2: Either \(\tau _n\) satisfies (SW1), or \({\tau _n=2^{-T}\eta <\frac{2}{L}}\). Furthermore, as we have seen in the proof of Lemma 4.3, for all \(\varepsilon >0\) almost all \(u_n\) lie in \(\varepsilon \)-Balls around the accumulation points of \((u_n)_{n\in \mathbb {N}}\), since \((u_n)_{n\in \mathbb {N}}\) is bounded. Therefore, \({{\tilde{J}}_n(u_{n+1})- J(u_{n+1})\rightarrow 0}\) and \({{\tilde{G}}_n(u_{n+1})-\nabla J(u_{n+1})\rightarrow 0}\) (compare Lemma 4.6). Since we already know that the steps with constant step size \(\tau _n=2^{-T}\eta <\frac{2}{L}\) can be split in descent steps and steps which satisfy \({\Vert u_{n+1}-u_n\Vert \rightarrow 0}\), we now take a closer look at the Armijo-steps, i.e., steps with \({\tau _n\ne 2^{-T}\eta }\).

If \(\tau _n\ne 2^{-T}\eta \), by (SW1) and Lemma 4.2, it holds

Therefore, we either have

in which case it holds \(J(u_{n+1})\le J(u_n)\), or

in which case \({\tilde{J}}_n(u_{n+1})-J(u_{n+1})\rightarrow 0\) and \(\hat{J}_n- J(u_{n})\rightarrow 0\) yield \(\Vert u_{n+1}-u_n\Vert _{_\mathcal {U}}\rightarrow 0\).

Thus, regardless of whether or not \(\tau _n=2^{-T}\eta \), we can split \((u_n)_{n\in \mathbb {N}}\) in a subsequence of descent steps and a subsequence of steps with \(\Vert u_{n+1}-u_n\Vert _{_\mathcal {U}}\rightarrow 0\). The rest of the proof is now identical to the proof of Theorem 5.1 and Theorem 5.2. \(\square \)

6.2 Estimations for the lipschitz constant of \(\nabla J\)

We have already seen, that the Lipschitz constant L of \(\nabla J\) is closely connected with efficient bounds on the step sizes. However, in general, we can not expect to have any knowledge of L a priori. Thus, we are interested in an approximation of L, that can be calculated during the optimization process.

Investigating the proof of Lemma 4.1 in [44]

we observe that we do not need the true Lipschitz constant L of \(\nabla J\) for the second inequality. Instead, it is sufficient to choose any constant \(C=C(u_1,u_2)\) that satisfies

To motivate our approach, assume that J is twice continously differentiable. In this case, a possible approximation to the constant \(C_n\) in iteration n is \(\Vert \nabla ^2 J(u_n)\Vert \). Therefore, utilizing the previous gradient approximations, we obtain

Then, \(C_n^{-1}\) yields a good initial step size for our backtracking line search. To circumvent high oscillation of \(C_n\), which may arise from the approximation errors of the terms involved, we project \(C_n\) onto the interval \(\left[ C_{\text {min}},C_{\text {max}}\right] \subseteq \mathbb {R}\), where \(0<C_{\text {min}}<C_{\text {max}}<\tfrac{2^{T+1}}{L}\), i.e.,

If possible, \(C_{\text {min}}\) and \(C_{\text {max}}\) should be chosen according to information concerning L. However, tight bounds on these quantities are not needed, as long as T is chosen large enough. The resulting SCIBL-CSG (SCaling Independent Backtracking Line search) method is presented in Algorithm 4. Notice that SCIBL-CSG does not require any a priori choice of step sizes and yields the same convergence results as bCSG.

7 Examples to illustrate key aspects of CSG performance

So far, all results presented indicate promising features of CSG in theory, but did not provide any insight on the actual practical performance. While a detailed numerical analysis of CSG can be found in [27], we want to investigate isolated key features with the help of some academic examples.

To be precise, we start by comparing the performances of CSG and standard SG for constant step sizes (Sect. 7.1). Afterwards, the generalized setting, mentioned in Remark 4.1, is explored for a composite objective function (Sect. 7.2). Note that we consider only the case of two nested expected values, because for more complex settings, there is a lack of efficient stochastic optimization techniques in the literature. Lastly, the line search techniques presented in Sect. 6 are tested and compared to the adaptive step sizes chosen in AdaGrad ( Sect. 7.3).

7.1 Academic example for constant step size

Define \(\mathcal {U}=\left[ -\frac{1}{2},\frac{1}{2}\right] \), \(\mathcal {X}=\left[ -\frac{1}{2},\frac{1}{2}\right] \) and consider the problem

It is easy to see that (20) has a unique solution \(u^*= 0\). Furthermore, the objective function is L-smooth (with Lipschitz constant 1) and even strictly convex. Thus, by Theorem 5.1, the CSG method with a constant positive step size \(\tau < 2\) produces a sequence \((u_n)_{n\in \mathbb {N}}\) that satisfies \(u_n\rightarrow 0\).

However, even in this highly regular setting, the commonly used basic SG method does not guarantee convergence of the iterates for a constant step size.

To demonstrate this behavior of both CSG and SG, we draw 2000 random starting points \(u_0\in \mathcal {U}\) and compare the iterates produced by CSG and SG with five different constant step sizes (\(\tau \in \{0.01,0.1,1,1.9,1.99\}\)). The CSG integration weights were calculated using the empirical method, i.e., the computationally cheapest choice. The results are shown in Fig. 10.

As expected, the iterates produced by the SG method do not converge to the optimal solution, but instead remain in a neighborhood of \(u^*\). The radius of said neighborhood depends on the choice of \(\tau \) and decreases for smaller \(\tau \), see [53, Theorem 4.6].

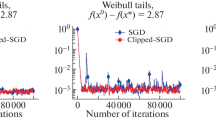

Comparison of the iterates produced by 500 steps of SG (red/first row) and CSG (green/second row) with 2000 random starting points \(u_0\in \mathcal {U}\). Both methods have been tested for the five different constant step sizes \(\tau \in \{0.01,0.1,1,1.9,1.99\}\) (first to fifth column). The shaded areas indicate the quantiles \(P_{0.1,0.9}\) (light) and \(P_{0.25,0.75}\) (dark), while the solid line represents the median of the 2000 runs (Color figure online)

7.2 Example for a composite objective function

To study the performance of CSG in the generalized setting, we consider an optimization problem in which the objective function is not of the type (1), but instead falls in the broader class of possible settings mentioned in Remark 4.1. Thus, we introduce the sets \(\mathcal {U}= [0,10]\), \(\mathcal {X}= [-1,1]\) and \(\mathcal {Y}=(-3,3)\) and define the optimization problem

The optimal solution \(u^*=\tfrac{\pi ^2}{2}\) to (21) can be found analytically. The nonlinear fashion in which the inner integral over \(\mathcal {X}\) enters the objective function prohibits us from using SG-type methods to solve (21). There is, however, the possibility to use the stochastic compositional gradient descent method (SCGD), which was proposed in [26] and is specifically designed for optimization problems of the form (21). Each SCGD iteration consists of two main steps: The inner integral is approximated by samples using iterative updating with a slow-diminishing step size. This approximation is then used to carry out a stochastic gradient descent step with a fast-diminishing step size.

For numerical comparison, we choose 1000 random starting points in \([\tfrac{11}{2},\tfrac{19}{2}]\), i.e., the right half of \(\mathcal {U}\). In our tests, the accelerated SCGD method (see [26]) performed better than basic SCGD, mainly since the objective function of (21) is strongly convex in a neighborhood of \(u^*\). Thus, we compare the results obtained by CSG to the aSCGD algorithm, for which we chose the optimal step sizes according to [26, Theorem 7]. For CSG, we chose a constant step size \(\tau = \tfrac{1}{30}\), which represents a rough approximation to the inverse of the Lipschitz constant L. The results are given in Fig. 11.

Furthermore, we are interested in the number of steps each method has to perform such that the distance to \(u^*\) lies (and stays) within a given tolerance. Thus, we also analyzed the number of steps after which the different methods obtain a result within a tolerance of \(10^{-1}\) in at least 90% of all runs. The results are shown in Fig. 12.

Absolute error \(\Vert u_n-u^*\Vert \) during the optimization process. From top to bottom: aSCGD (red), CSG with empirical weights (cyan), CSG with inexact hybrid weights with \(f(n) = \lfloor n^{1.5}\rfloor \) (green) and CSG with exact hybrid weights (blue). The shaded areas indicate the quantiles \(P_{0.1,0.9}\) (light) and \(P_{0.25,0.75}\) (dark) (Color figure online)

Minimum number of steps needed for the different algorithms such that at least 90% of the runs achieve an absolute error smaller than the given tolerance. the colors are chosen in the same fashion as in Fig. 11. The exact numbers for a tolerance of \(10^{-1}\) are 42 (exact hybrid), 76 (inexact hybrid), 440 (empirical) and 1382 (aSCGD)

7.3 Step size stability for bCSG

To analyze the proclaimed stability of bCSG with respect to the initially guessed step size \(\eta _n\), we set \(\mathcal {U}=[-10,10]^5\), \(\mathcal {X}=[-1,1]^5\) and consider the Problem

where

Problem (22) has the unique solution \(u^*=0\in \mathcal {U}\), which can be found analytically.

As a comparison to our method, we choose the AdaGrad [25] algorithm, as it is widely used for problems of type (1). Both AdaGrad and bCSG start each iteration with a presdescribed step size \(\eta _n>0\), based on which the calculation of the true step size \(\tau _n\) is performed (see Algorithm 2). We want to test the stability of both methods with respect to the initially chosen step length. For this purpose, we set \(\eta _n = \tfrac{\tau _0}{n^d}\), where \(\tau _0>0\), n is the iteration count and \(d\in [0,1]\) is fixed.

For each combination of \(\tau _0\) and d, we choose 1200 random starting points in \(\mathcal {U}\) and perform 500 optimization steps with both AdaGrad and backtracking CSG. Again, the integration weight calculation in bCSG was carried out using the empirical method, leading to a faster weight calculation while decreasing the overall progress per iteration performance. The median of the absolute error \(\Vert u_{500}-u^*\Vert \) after the optimization, depending on d and \(\tau _0\), is presented in Fig. 13.

While there are a few instances where AdaGrad yields a better result than backtracking CSG, we observe that the performance of AdaGrad changes rapidly, especially with respect to the parameter d. The backtracking CSG method on the other hand performs superior in most cases and is much less dependent on the choice of parameters.

8 Conclusion and outlook

In this contribution, we provided a detailed convergence analysis of the CSG method. The calculation of the integration weights was enhanced by several new approaches, which have been discussed and generalized for the possible implementation of mini-batches.

We provided a convergence proof for the CSG method when carried out with a small enough constant step size. Additionally, it was shown that CSG can be augmented by an Armijo-type backtracking line search, based on the gradient and objective function approximations generated by CSG in the course of the iterations. The resulting bCSG scheme was proven to converge under mild assumptions and was shown to yield stable results for a large spectrum of hyperparameters. Lastly, we combined a heuristic approach for approximating the Lipschitz constant of the gradient with bCSG to obtain a method that requires no a priori step size rule and almost no information about the optimization problem.

For all CSG variants, the stated convergence results are similar to convergence results for full gradient schemes, i.e., every accumulation point of the sequence of iterates is stationary and we have convergence in objective function values. Furthermore, as is the case for full gradient methods, if the optimization problem has only finitely many stationary points, the presented CSG variants produce a sequence which is guaranteed to converge to one of these stationary points.

However, none of the presented convergence results for CSG give any indication of the underlying rate of convergence. Furthermore, while the performance of all proposed CSG variants was tested on academic examples, it is important to analyze how they compare to algorithms from literature and commercial solvers, when used in real world applications.

Detailed numerical results concerning both of these aspects can be found in [27].

Data availibility

In this contribution, only simple academic examples are used to visualize the theoretical results. These can be reproduced based on the given algorithms. Nevertheless, the simulation datasets generated during the current study are available from the corresponding author on request.

References

Pflug, L., Bernhardt, N., Grieshammer, M., Stingl, M.: CSG: a new stochastic gradient method for the efficient solution of structural optimization problems with infinitely many states. Struct. Multidiscip. Optim. 61(6), 2595–2611 (2020). https://doi.org/10.1007/s00158-020-02571-x

Kim, C., Lee, J., Yoo, J.: Machine learning-combined topology optimization for functionary graded composite structure design. Comput. Methods Appl. Mech. Eng. 387, 114158–32 (2021). https://doi.org/10.1016/j.cma.2021.114158

Evstatiev, E.G., Finn, J.M., Shadwick, B.A., Hengartner, N.: Noise and error analysis and optimization in particle-based kinetic plasma simulations. J. Comput. Phys. 440, 110394–28 (2021). https://doi.org/10.1016/j.jcp.2021.110394

Wadbro, E., Berggren, M.: Topology optimization of an acoustic horn. Comput. Methods Appl. Mech. Eng. 196(1–3), 420–436 (2006). https://doi.org/10.1016/j.cma.2006.05.005

Hassan, E., Wadbro, E., Berggren, M.: Topology optimization of metallic antennas. IEEE Trans. Antennas Propag. 62(5), 2488–2500 (2014). https://doi.org/10.1109/TAP.2014.2309112

Semmler, J., Pflug, L., Stingl, M., Leugering, G.: Shape optimization in electromagnetic applications. In: New Trends in Shape Optimization. Internat. Ser. Numer. Math., vol. 166, pp. 251–269. Birkhäuser/Springer, Cham ( 2015). https://doi.org/10.1007/978-3-319-17563-8_11

Singh, S., Pflug, L., Mergheim, J., Stingl, M.: Robust design optimization for enhancing delamination resistance of composites. Internat. J. Numer. Methods Eng. 124(6), 1381–1404 (2023). https://doi.org/10.1002/nme.7168

Martin, M., Nobile, F.: Pde-constrained optimal control problems with uncertain parameters using saga. SIAM/ASA J. Uncertain. Quanti. 9(3), 979–1012 (2021). https://doi.org/10.1137/18M1224076

Borzì, A., von Winckel, G.: Multigrid methods and sparse-grid collocation techniques for parabolic optimal control problems with random coefficients. SIAM J. Sci. Comput. 31(3), 2172–2192 (2009). https://doi.org/10.1137/070711311

Babuška, I., Nobile, F., Tempone, R.: A stochastic collocation method for elliptic partial differential equations with random input data. SIAM Rev. 52(2), 317–355 (2010). https://doi.org/10.1137/100786356

Babuska, I., Tempone, R., Zouraris, G.E.: Galerkin finite element approximations of stochastic elliptic partial differential equations. SIAM J. Numer. Anal. 42(2), 800–825 (2004). https://doi.org/10.1137/S0036142902418680

Geiersbach, C., Pflug, G.C.: Projected stochastic gradients for convex constrained problems in Hilbert spaces. SIAM J. Optim. 29(3), 2079–2099 (2019). https://doi.org/10.1137/18M1200208