Abstract

We investigate optimal control problems with \(L^0\) constraints, which restrict the measure of the support of the controls. We prove necessary optimality conditions of Pontryagin maximum principle type. Here, a special control perturbation is used that respects the \(L^0\) constraint. First, the maximum principle is obtained in integral form, which is then turned into a pointwise form. In addition, an optimization algorithm of proximal gradient type is analyzed. Under some assumptions, the sequence of iterates contains strongly converging subsequences, whose limits are feasible and satisfy a subset of the necessary optimality conditions.


1 Introduction

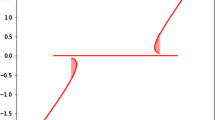

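We are interested in the following optimal control problem, written as an optimization problem:

$$\begin{aligned} \min _{u\in L^2(\Omega )} f(u) + \frac{\alpha }{2}\Vert u\Vert _{L^2(\Omega )}^2 \end{aligned}$$(1.1)

subject to

$$\begin{aligned} \Vert u\Vert _0 \le \tau . \end{aligned}$$(1.2)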

Here, \(\Omega \subseteq {\mathbb {R}}^d\) is an open set equipped with the Lebesgue measure, \(f:L^2(\Omega )\rightarrow {\mathbb {R}}\) abstracts the state equation and the smooth ingredients of the control problem, and \(\alpha \ge 0\) is a parameter. The constraint (1.2) uses the so-called \(L^0\) norm (which is, of course, not a norm), defined for measurable \(u:\Omega \rightarrow {\mathbb {R}}\) by

$$\begin{aligned} \Vert u\Vert _0 := {\text {meas}}(\{x\in \Omega :\ u(x)\ne 0\}). \end{aligned}$$

Only \(\tau \in (0, {\text {meas}}(\Omega ))\) gives a meaningful restriction: for \(\tau \ge {\text {meas}}(\Omega )\) the constraint is void, and \(\tau =0\) forces \(u=0\) almost everywhere.

The motivation to study such problems comes from sparse control: find a control with small support, in our case, with support of prescribed maximal size. The main challenge is the discontinuity and non-convexity of the \(\Vert \cdot \Vert _0\)-functional, so methods from differentiable or convex optimization are not applicable. In addition, due to the lack of weak lower semicontinuity, it is not possible to ensure existence of solutions in spaces of integrable functions. Nevertheless, we can study optimality conditions that need to be satisfied at a solution. To derive necessary optimality conditions, we employ the Pontryagin maximum principle, which is first obtained in integral form and then turned into a pointwise condition by means of optimality conditions for integral functionals.
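The lack of weak lower semicontinuity can already be seen on a simple oscillating sequence. The following Python sketch uses an example of our own choosing on \(\Omega =(0,1)\): \(u_n = 2\chi _{E_n}\) with \(E_n = \bigcup _{k=0}^{n-1} [k/n, (k+1/2)/n)\). Every \(u_n\) has \(\Vert u_n\Vert _0 = 1/2\), while \(u_n\) converges weakly to the constant function 1, whose support has measure 1. The function names are hypothetical, and the code only evaluates the pairing with the single test function \(\varphi (x)=x\) using exact integrals, so it illustrates weak convergence rather than proving it.

```python
def abs0(t: float) -> int:
    """Pointwise integrand |t|_0 of the L^0 'norm': 0 if t == 0, else 1."""
    return 0 if t == 0 else 1


def l0_norm_u_n(n: int) -> float:
    """||u_n||_0 = meas(E_n): n intervals of length 1/(2n) each."""
    return n * (0.5 / n)


def pairing_with_x(n: int) -> float:
    """Exact value of int_0^1 u_n(x) * x dx for u_n = 2 * chi_{E_n}.

    The k-th interval contributes 2 * int_{k/n}^{(k+1/2)/n} x dx = (k + 1/4) / n**2,
    so the sum equals 1/2 - 1/(4n).
    """
    return sum((k + 0.25) / n**2 for k in range(n))


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        # The pairing tends to int_0^1 1 * x dx = 1/2, the pairing of the weak limit u = 1,
        # while ||u_n||_0 stays at 1/2 < 1 = ||1||_0.
        print(n, l0_norm_u_n(n), pairing_with_x(n))
```

Hence \(\Vert 1\Vert _0 = 1 > 1/2 = \liminf _n \Vert u_n\Vert _0\), which is exactly the failure of weak lower semicontinuity mentioned above.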

Let us mention related works. Optimal control problems with the \(L^0\) norm of the control in the cost function were investigated in [10, 15]. An actuator design problem is studied in [11]: the controlled source term in the equation is \(\chi _\omega u\), where \(\chi _\omega \) is the characteristic function of \(\omega \), and both the subset \(\omega \) and the control u are optimization variables. An additional volume constraint is imposed on \(\omega \), which is equivalent to an \(L^0\) constraint on \(\chi _\omega u\). In that work, shape calculus and topological derivatives with respect to \(\omega \) are studied. Unfortunately, no optimality conditions involving these topological derivatives are given that could be compared to our results; such a comparison is left to future work. In the recent work [4], a shape optimization problem is turned into a problem with \(L^0\) constraints. There, the control problem is posed in \(W^{1,p}\), which poses different challenges than the setting considered here. That work will become relevant if one wants to study the regularization of (1.1)–(1.2) in \(W^{1,p}\) spaces, which would guarantee existence of solutions due to the compact embedding of \(W^{1,p}\) in \(L^p\).

In this article, we will prove optimality conditions of Pontryagin maximum principle type. Related works can be found, e.g., in [5, 6, 14]. Those results are not directly applicable in our situation, since they do not cover \(L^0\) constraints. We will use a modification of the control perturbations considered in [5, 6, 14] that is adapted to the \(L^0\) constraints. These will give the maximum principle in integral form, see Theorem 4.4. In order to turn it into pointwise conditions in Theorem 4.5, we study integral minimization problems in Sect. 3.

In Sect. 5, we investigate a proximal gradient type algorithm, which extends our earlier works [13, 15], where optimization problems with \(L^0\) and \(L^p\), \(p\in (0,1)\), functionals were considered. Due to the simple nature of its subproblems, this method is easy to implement. Other methods in finite-dimensional \(L^0\) constrained (or cardinality constrained) optimization include augmented Lagrangian methods [12] and DC-based reformulations [8]. We will prove some convergence results for the proximal gradient method. As it turns out, limit points of the iterates need not satisfy all necessary conditions of Theorem 5.2, but only a subset of them, see Theorem 5.7. We hope that this work initiates further research on algorithms with \(L^0\) constraints in an infinite-dimensional setting.

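Notation We will frequently use the following notation: For a measurable set A, we denote its characteristic function by \(\chi _A\). The integrand in the \(L^0\) norm is abbreviated by

$$\begin{aligned} |u|_0 := {\left\{ \begin{array}{ll} 0 &{} \text {if } u=0,\\ 1 &{} \text {if } u\ne 0. \end{array}\right. } \end{aligned}$$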

Then \(\Vert u\Vert _0 = \int _\Omega |u(x)|_0 \,\textrm{d}x\). Note that \(u\mapsto |u|_0\) is neither continuous nor convex, but it is lower semicontinuous. In addition, \(u\mapsto \Vert u\Vert _0\) as a mapping from \(L^p(\Omega )\) to \({\mathbb {R}}\) is lower semicontinuous but not weakly lower semicontinuous. Moreover, we denote the support of a measurable function u by

$$\begin{aligned} {\text {supp}}u := \{x\in \Omega :\ u(x)\ne 0\}, \end{aligned}$$

which is defined up to sets of measure zero.

2 Maximum principle for control of ordinary differential equations

Let us briefly and formally derive the maximum principle for an optimal control problem subject to ordinary differential equations with constraint \(\Vert u\Vert _0 \le \tau \), which serves as a benchmark for more general situations. For illustration, let us consider the following control problem in Mayer form: Minimize

subject to

and

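Here, \(T>0\) is fixed, and \(x:(0,T)\rightarrow {\mathbb {R}}^n\) and \(u:(0,T)\rightarrow {\mathbb {R}}\) are the state and the control. The functions \(f:{\mathbb {R}}\times {\mathbb {R}}^n \times {\mathbb {R}}\rightarrow {\mathbb {R}}^n\) and \(l:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) are assumed to be smooth for simplicity. Employing a standard procedure, the constraint \( \Vert u\Vert _0 \le \tau \) can be written equivalently as an additional end-point constraint on the artificial state \(x_{n+1}\) as follows:

$$\begin{aligned} x_{n+1}^{\prime }(t) = |u(t)|_0 \ \text {for a.a. } t\in (0,T), \quad x_{n+1}(0)=0, \quad x_{n+1}(T)\le \tau , \end{aligned}$$

since \(x_{n+1}(T) = \int _0^T |u(t)|_0\,\textrm{d}t = \Vert u\Vert _0\).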

Let us set \(\tilde{f}(t,x,u):=(f(t,x,u), |u|_0)\). Then the classical maximum principle for an optimal control \(\bar{u}\) with state \((\bar{x},\bar{x}_{n+1})\) and adjoint \((\bar{p},\bar{p}_{n+1}):(0,T)\rightarrow {\mathbb {R}}^{n+1}\) reads as follows: there are \((\lambda _0,\lambda _{n+1})\ne 0\), \(\lambda _0\ge 0\), such that the following conditions are satisfied:

where \(H(t,x,u,p):=p^Tf(t,x,u)\) is the Hamiltonian of the original problem, and \(\bar{p}\) solves the adjoint system

and

Hence, \(\bar{p}_{n+1}\) is constant, \(\bar{p}_{n+1}\le 0\), and \(-\bar{p}_{n+1}\) can be interpreted as Lagrange multiplier to the constraint \(\Vert u\Vert _0\le \tau \). For a precise formulation of the maximum principle, we refer to [9]. In order to obtain the system in qualified form, i.e., \(\lambda _0>0\), one needs additional conditions (constraint qualifications).
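The reformulation via the artificial state \(x_{n+1}\) can be made concrete with a minimal Python sketch. It assumes the standard construction \(x_{n+1}^{\prime } = |u|_0\), \(x_{n+1}(0)=0\), so that \(x_{n+1}(T) = \Vert u\Vert _0\). It also assumes, as our own discretization choice, that the control is piecewise constant on a uniform time grid, in which case the explicit Euler scheme integrates this equation exactly. The function names are hypothetical.

```python
from typing import List, Sequence


def artificial_state(u: Sequence[float], T: float) -> List[float]:
    """Values of x_{n+1} at t_j = j*T/N for x_{n+1}' = |u|_0, x_{n+1}(0) = 0.

    u holds the values of a piecewise-constant control on N equal subintervals of (0, T).
    """
    dt = T / len(u)
    traj = [0.0]
    for value in u:
        # |u(t)|_0 is constant on each subinterval, so one Euler step is exact.
        traj.append(traj[-1] + dt * (0 if value == 0 else 1))
    return traj


def satisfies_l0_constraint(u: Sequence[float], T: float, tau: float) -> bool:
    """End-point constraint x_{n+1}(T) <= tau, i.e. ||u||_0 <= tau."""
    return artificial_state(u, T)[-1] <= tau + 1e-12


if __name__ == "__main__":
    u = [0.0, 1.5, 0.0, -2.0]          # nonzero on two of four subintervals
    print(artificial_state(u, T=2.0))  # [0.0, 0.0, 0.5, 0.5, 1.0]
    print(satisfies_l0_constraint(u, T=2.0, tau=1.0))  # True
```

The end value 1.0 is exactly the measure of the set where the control is nonzero.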

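Summarizing the above considerations, the following two conditions will serve as necessary optimality conditions: \(\bar{u}(t)\) maximizes the penalized Hamiltonian, i.e.,

$$\begin{aligned} H(t,\bar{x}(t),\bar{u}(t),\bar{p}(t)) - \lambda |\bar{u}(t)|_0 = \max _{v\in {\mathbb {R}}} \big ( H(t,\bar{x}(t),v,\bar{p}(t)) - \lambda |v|_0\big ) \quad \text {for a.a. } t\in (0,T), \end{aligned}$$(2.1)

and \(\lambda \ge 0\) satisfies the complementarity condition

$$\begin{aligned} \lambda \big (\Vert \bar{u}\Vert _0 - \tau \big ) = 0. \end{aligned}$$(2.2)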

Let us now transfer these results to optimal control problems in which the control is no longer defined on a subset of the real line but on a set \(\Omega \subseteq {\mathbb {R}}^d\), \(d>1\). For illustration, let \(\Omega \) be a bounded domain. As above, we want to translate the control constraint \(\Vert u\Vert _0\le \tau \) into an auxiliary state constraint. In fact, if we define \(y_0\) as the weak solution in \(H^1(\Omega )\) of the auxiliary state equation

then it follows that \(\int _\Omega y_0\,\textrm{d}x= \int _\Omega |u|_0 \,\textrm{d}x\), and the control constraint \(\Vert u\Vert _0\le \tau \) is equivalent to the constraint \(\int _\Omega y_0\,\textrm{d}x\le \tau \) on the auxiliary state \(y_0\). In [6], the maximum principle for problems with elliptic partial differential equations was obtained. In order to get a system in qualified form (\(\lambda _0>0\)), strong stability is used there: the optimal value function has to be locally Lipschitz continuous with respect to the parameter \(\tau \). To the best of our knowledge, such a result is not available in the literature for the \(L^0\) constraints considered here.

Thus, we proceed differently. We do not formulate the integral control constraint as a state constraint. Rather, we modify the technique of [6] to consider only perturbations that satisfy the constraint. In this way, we obtain a maximum principle in integral form that is satisfied for all functions v with \(\Vert v\Vert _0\le \tau \). This integral maximum principle can then be translated into a pointwise one. As a result, we obtain the final system in qualified form while circumventing the strong stability requirement.

3 Optimality conditions for integral functionals

First, we are going to derive optimality conditions for integral functionals. This is later used to transform the maximum principle from integral to pointwise form. We will consider integral functionals generated by normal integrands. In this section, let \(\Omega \subseteq {\mathbb {R}}^d\) be a Lebesgue measurable set.

Definition 3.1

The function \(f:\Omega \times {\mathbb {R}}\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is called a normal integrand if there exist Carathéodory functions \((f_n)_{n\in {\mathbb {N}}}\) such that

$$\begin{aligned} f(x,u) = \sup _{n\in {\mathbb {N}}} f_n(x,u) \end{aligned}$$

for all u and almost all \(x\in \Omega \).

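This definition is taken from [2, Def. 1]; equivalent characterizations can be found in [2, Thm. 2]. We will now prove optimality conditions for the following problem: Minimize

$$\begin{aligned} \int _\Omega g(x,u(x))\,\textrm{d}x \end{aligned}$$(3.1)

subject to the constraint

$$\begin{aligned} u\in {\mathcal {U}}_{\tau } := \{u:\Omega \rightarrow {\mathbb {R}} \text { measurable}:\ \Vert u\Vert _0\le \tau \}. \end{aligned}$$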

Here, the minimization is over all measurable u such that \(g(\cdot ,u)\) is integrable. Clearly, if \(\bar{u}\) is a solution of (3.1), then \(g(x,\bar{u}(x)) = \inf _{v\in {\mathbb {R}}} g(x,v)\) for almost all x with \(\bar{u}(x)\ne 0\), since otherwise \(\bar{u}\) could be improved without enlarging its support. The function \(x\mapsto \inf _{v\in {\mathbb {R}}} g(x,v)\) will play an important role in the subsequent analysis. We work with the following assumption.

Assumption 1

1. \(g:\Omega \times {\mathbb {R}}\rightarrow {\mathbb {R}}\cup \{+\infty \}\) is a normal integrand,
2. \(g(\cdot ,0)\) is integrable,
3. \(\tau \in (0,{\text {meas}}(\Omega ))\).

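Let us define the function \(\tilde{v}:\Omega \rightarrow {\mathbb {R}}\cup \{-\infty \}\) by

$$\begin{aligned} \tilde{v}(x) := \inf _{v\in {\mathbb {R}}} g(x,v) - g(x,0). \end{aligned}$$(3.2)

It is non-positive, since \(v=0\) is admissible in the infimum.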

We start with a technical lemma that helps to prove integrability of \(\tilde{v}\) under suitable assumptions.

Lemma 3.2

Let \(g:\Omega \times {\mathbb {R}}\rightarrow {\mathbb {R}}\cup \{+\infty \}\) be a normal integrand. Then there are measurable functions \(v_n\) and \(u_n\) such that

for almost all \(x\in \Omega \). In addition, \(\tilde{v}\) is measurable.

Proof

Let \((g_n)\) be Carathéodory functions such that \(g(x,u)=\sup _n g_n(x,u)\) for all u and almost all \(x\in \Omega \). Let us define the set-valued mapping

$$\begin{aligned} E(x) := \{(v,r)\in {\mathbb {R}}^2:\ g(x,v)-g(x,0)\le r\}, \end{aligned}$$

so E(x) is the epigraph of \(v\mapsto g(x,v)-g(x,0)\). Then it holds

Each of the set-valued mappings in the intersection is measurable by [1, Thm. 8.2.9], so E is measurable by [1, Thm. 8.2.4]. Using [1, Thm. 8.2.11], we get the measurability of \(u_n\) and \(v_n\). Measurability of \(\tilde{v}\) is a consequence of \(\tilde{v}(x) = \inf _n v_n(x)\). \(\square \)

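Using the function \(\tilde{v}\) from (3.2), we define for \(s\in {\mathbb {R}}\) the sets

$$\begin{aligned} \Omega _{< s} := \{x\in \Omega :\ \tilde{v}(x) < s\}, \qquad \Omega _{\le s} := \{x\in \Omega :\ \tilde{v}(x) \le s\}. \end{aligned}$$(3.3)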

Lemma 3.3

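Let \(u\in {\mathcal {U}}_{\tau }\) be given such that \(g(\cdot ,u)\) is integrable. Let \(s\le 0\) and \(S\subseteq \Omega \) with \({\text {meas}}(S)=\tau \) be such that \(\tilde{v}\) (see (3.2)) is integrable on S and

$$\begin{aligned} \Omega _{<s}\subseteq S\subseteq \Omega _{\le s}. \end{aligned}$$

Then it holds

$$\begin{aligned} \int _\Omega g(x,u(x)) - g(x,0)\,\textrm{d}x \ge \int _S \tilde{v}(x)\,\textrm{d}x. \end{aligned}$$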

This inequality is satisfied with equality only if the following conditions are satisfied:

1. \(g(x,u(x)) - g(x,0) = \tilde{v}(x)\) for almost all \(x\in {\text {supp}}u\),
2. \(\Omega _{< s} \subseteq {\text {supp}}u\subseteq \Omega _{\le s}\),
3. \(s=0\) or \({\text {meas}}({\text {supp}}u)=\tau \).

Proof

Let \(A:={\text {supp}}u\). Then \({\text {meas}}(A) \le \tau = {\text {meas}}(S)\), and it follows \({\text {meas}}(A \setminus S) \le {\text {meas}}(S \setminus A)\). Using (3.3), we estimate

Equality in the above chain of inequalities is obtained only if (a) \(g(\cdot ,u) - g(\cdot ,0) = \tilde{v}\) on A, (b) \(\tilde{v} = s\) on \(A\setminus S\), hence \(A \subseteq \Omega _{\le s}\), (c) \(s ({\text {meas}}(A {\setminus } S) - {\text {meas}}(S {\setminus } A))=0\), and (d) \(s {\text {meas}}(S {\setminus } A) = \int _{S {\setminus } A} \tilde{v}\,\textrm{d}x\). Condition (d) implies \(\tilde{v} = s\) on \(S \setminus A\), hence \(\Omega _{< s} \subseteq A\). If \(s\ne 0\) then condition (c) implies \({\text {meas}}(A)=\tau \). \(\square \)
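The inequality of Lemma 3.3 can be sanity-checked by brute force on a discretization. The Python sketch below is our own illustration and relies on several assumptions: \(\Omega \) is replaced by N cells of equal measure h (so the problem becomes atomic and \(\tau \) is taken as a multiple of h), and the integrand is the hypothetical choice \(g(x,v)=(v-z(x))^2\), for which \(\tilde{v}=-z^2\). S consists of the \(\tau /h\) cells with the smallest \(\tilde{v}\), so that \(\Omega _{<s}\subseteq S\subseteq \Omega _{\le s}\) holds with s the largest value of \(\tilde{v}\) on S. The check enumerates all admissible supports with random values on them. All names are hypothetical.

```python
import itertools
import random
from typing import List, Sequence


def lower_bound(z: Sequence[float], h: float, k: int) -> float:
    """int_S v_tilde dx, with S the k cells of smallest v_tilde = -z**2."""
    v_tilde = sorted(-zi * zi for zi in z)
    return h * sum(v_tilde[:k])


def functional_gap(u: Sequence[float], z: Sequence[float], h: float) -> float:
    """int_Omega g(x, u(x)) - g(x, 0) dx for g(x, v) = (v - z(x))**2."""
    return h * sum((ui - zi) ** 2 - zi ** 2 for ui, zi in zip(u, z))


def check_lemma_3_3(z: Sequence[float], h: float, k: int,
                    trials: int = 20, seed: int = 0) -> bool:
    """Test the inequality for random u with ||u||_0 <= tau = k * h."""
    rng = random.Random(seed)
    bound = lower_bound(z, h, k)
    for size in range(k + 1):
        for supp in itertools.combinations(range(len(z)), size):
            for _ in range(trials):
                u: List[float] = [0.0] * len(z)
                for i in supp:
                    u[i] = rng.uniform(-5.0, 5.0)  # arbitrary values on the support
                if functional_gap(u, z, h) < bound - 1e-12:
                    return False
    return True


if __name__ == "__main__":
    z = [3.0, 0.0, -1.0, 0.5, 2.0]
    print(check_lemma_3_3(z, h=0.2, k=2))  # True
    # Equality case: u equals the pointwise minimizer z on S = {cells 0 and 4}.
    print(functional_gap([3.0, 0.0, 0.0, 0.0, 2.0], z, 0.2), lower_bound(z, 0.2, 2))
```

The last line shows that the bound is attained when conditions 1 and 2 of the lemma hold, in line with the equality characterization.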

With the help of these sets, we can fully characterize the solutions of (3.1).

Theorem 3.4

Let Assumption 1 be satisfied. Then \(\bar{u}\) is a solution of (3.1) if and only if there are \(s\le 0\) and \(A\subseteq \Omega \) with \({\text {meas}}(A)=\tau \) such that

\(\tilde{v}\) is integrable on A, and

where \(\tilde{v}\) is defined in (3.2).

Proof

Let \(\bar{u}\) be a solution of (3.1). Let \((v_n)\) and \((u_n)\) be given by Lemma 3.2. By construction, \((v_n(x))\) is monotonically decreasing and \(v_n(x)\rightarrow \tilde{v}(x)\) for almost all \(x\in \Omega \). Let \(B\subseteq \Omega \) with \({\text {meas}}(B)\le \tau \). We want to show that \(\chi _Bu_n\) is feasible for (3.1). Since \(\Vert \chi _Bu_n\Vert _0\le {\text {meas}}(B)\le \tau \), it remains to argue that \(g(\cdot ,\chi _Bu_n)\) is integrable. If the negative part of \(g(\cdot ,\chi _Bu_n)\) were not integrable, then problem (3.1) would be unsolvable, as we could find subsets \(B_k\subseteq B\) such that \(\int _\Omega g(x,\chi _{B_k} u_n)\,\textrm{d}x\rightarrow -\infty \) for \(k\rightarrow \infty \). So the negative part of \(g(\cdot ,\chi _Bu_n)\) is integrable, and the integrability of \(g(\cdot ,\chi _Bu_n)\) is a consequence of \(g(x,\chi _B u_n(x)) - g(x,0) \le 0\) for almost all x.

Then \(\chi _B u_n\) is feasible for (3.1), which implies

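By the monotone convergence theorem, it follows that \(\tilde{v}\) is integrable on B and

$$\begin{aligned} \int _B \tilde{v}(x)\,\textrm{d}x \ge \int _\Omega g(x,\bar{u}(x)) - g(x,0)\,\textrm{d}x. \end{aligned}$$(3.6)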

The increasing functions \(s\mapsto {\text {meas}}(\Omega _{<s})\) and \(s\mapsto {\text {meas}}(\Omega _{\le s})\) are continuous from the left and from the right, respectively. Hence, given \(\tau \), there is \(s\le 0\) such that \({\text {meas}}(\Omega _{<s}) \le \tau \le {\text {meas}}(\Omega _{\le s})\); this s need not be unique. Since the measure space is non-atomic, the celebrated Sierpiński theorem implies that there is \(S\subseteq \Omega \) such that \(\Omega _{< s} \subseteq S \subseteq \Omega _{\le s}\) and \({\text {meas}}(S)=\tau \).

By the first part of the proof, \(\tilde{v}\) is integrable on S. Then s and S satisfy the requirements of Lemma 3.3. Using Lemma 3.3 and (3.6), we get

Hence, the inequality of Lemma 3.3 is satisfied with equality, which implies \( \Omega _{< s} \subseteq {\text {supp}}\bar{u} \subseteq \Omega _{\le s}\). It remains to use that \(s=0\) or \({\text {meas}}({\text {supp}}\bar{u})=\tau \). If \({\text {meas}}({\text {supp}}\bar{u})=\tau \), then (3.4) and (3.5) are satisfied with \(A:={\text {supp}}\bar{u}\). If \({\text {meas}}({\text {supp}}\bar{u})<\tau \), then \(s=0\), and we can find a set A with \({\text {meas}}(A)=\tau \) and \({\text {supp}}\bar{u} \subseteq A \subseteq \Omega = \Omega _{\le 0}\), which is (3.4). Using Lemma 3.3 and \(\bar{u}(x)=0\) on \(A\setminus {\text {supp}}\bar{u}\), we see that (3.5) is satisfied.

Let now \(\bar{u},s,A\) satisfy (3.4) and (3.5) such that \(\tilde{v}\) is integrable on A. Let \(u\in {\mathcal {U}}_{\tau }\). Then by Lemma 3.3 with \(S:=A\) we find

and \(\bar{u}\) solves (3.1). \(\square \)
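Theorem 3.4 suggests a simple procedure for discretized problems: compute \(\tilde{v}\) cellwise, keep the pointwise minimizer on the cells where \(\tilde{v}\) is most negative, and set u to zero elsewhere. The Python sketch below implements this under our own assumptions. \(\Omega \) is split into N cells of equal measure h, and the caller supplies g as a function of the cell index and a cellwise minimizer `u_min`. Since the discrete problem is atomic, Sierpiński's exact-measure selection is replaced by choosing \(\lfloor \tau /h\rfloor \) cells. Ties in \(\tilde{v}\) reflect the possible non-uniqueness of solutions and of s. The name `solve_l0_integral` is hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def solve_l0_integral(
    g: Callable[[int, float], float],
    u_min: Sequence[float],
    h: float,
    tau: float,
) -> Tuple[List[float], float]:
    """Minimize sum_i h * g(i, u_i) subject to h * #{i : u_i != 0} <= tau.

    Returns a minimizer u_bar and a threshold s <= 0 with
    #{i : v_tilde_i < s} < k_max <= #{i : v_tilde_i <= s}, k_max = floor(tau / h),
    a discrete analogue of the characterization in Theorem 3.4.
    """
    n = len(u_min)
    k_max = math.floor(tau / h + 1e-9)          # number of cells allowed in the support
    if k_max < 1:
        raise ValueError("tau must be at least the cell measure h")
    v_tilde = [g(i, u_min[i]) - g(i, 0.0) for i in range(n)]   # <= 0 if u_min minimizes g
    order = sorted(range(n), key=lambda i: v_tilde[i])         # most negative first
    u_bar = [0.0] * n
    for i in order[:k_max]:
        if v_tilde[i] < 0:                      # switching a cell on must strictly pay off
            u_bar[i] = u_min[i]
    negatives = sum(1 for v in v_tilde if v < 0)
    # Inactive constraint gives s = 0 (cf. Corollary 3.7); otherwise s is the k_max-th smallest v_tilde.
    s = 0.0 if negatives < k_max else v_tilde[order[k_max - 1]]
    return u_bar, s
```

For example, with the hypothetical data \(z=(3,0,-1,0.5,2)\), \(h=0.2\) and \(g(i,v)=(v-z_i)^2\) (so `u_min = z`), the choice \(\tau =0.4\) keeps the two cells with \(z=3\) and \(z=2\) and returns \(s=-4\).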

Corollary 3.5

Let Assumption 1 be satisfied. Let \(\bar{u}\) be a solution of (3.1). Let \(s\le 0\) be given by Theorem 3.4. Then for almost all \(x\in \Omega \)

Proof

This follows from Theorem 3.4, (3.4): If \(\bar{u}(x)\ne 0\), then \(\tilde{v}(x) \le s\). \(\square \)

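Let us define the value function of (3.1) by

$$\begin{aligned} V(\tau ) := \inf _{u\in {\mathcal {U}}_{\tau }} \int _\Omega g(x,u(x))\,\textrm{d}x. \end{aligned}$$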

Using the above characterization of solutions, we have the following strong stability result.

Lemma 3.6

Let Assumption 1 be satisfied. Let \(\tau ,\tau ^{\prime } \in (0,{\text {meas}}(\Omega ))\) with \(\tau < \tau ^{\prime }\) be given. Then \(0\le V(\tau )-V(\tau ^{\prime }) \le |s| (\tau ^{\prime } - \tau )\), where s is associated to \(\tau \) by Theorem 3.4.

Proof

Let \(u_\tau , u_{\tau ^{\prime }}\) be solutions of (3.1) for \(\tau \) and \(\tau ^{\prime }\), respectively. Due to Theorem 3.4, there are \(s,s^{\prime }\) and \(A,A^{\prime }\) such that \({\text {meas}}(A) = \tau \), \({\text {meas}}(A^{\prime }) = \tau ^{\prime }\), and

If \(s<s^{\prime }\) then \(\Omega _{\le s} \subseteq \Omega _{< s^{\prime }}\) and \(A\subseteq A^{\prime }\), which implies

If \(s=s^{\prime }\) then

resulting in the same estimate. \(\square \)
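The Lipschitz estimate of Lemma 3.6 can be observed on the toy discretization used above, which is our own assumption: N cells of measure h and \(g(x,v)=(v-z(x))^2\), so that \(\tilde{v}=-z^2\) and the optimal support consists of the \(\lfloor \tau /h\rfloor \) cells with the largest \(z^2\). The Python sketch below computes \(V(\tau )\) together with a threshold s and checks \(0\le V(\tau )-V(\tau ^{\prime })\le |s|(\tau ^{\prime }-\tau )\) for all pairs \(\tau <\tau ^{\prime }\) from a given increasing list. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def value_and_multiplier(z: Sequence[float], h: float, tau: float) -> Tuple[float, float]:
    """V(tau) and a threshold s for g(x, v) = (v - z(x))**2 on N cells of measure h.

    Here v_tilde = -z**2, so the optimal support consists of the
    k = floor(tau / h) cells with the largest z**2.
    """
    k = math.floor(tau / h + 1e-9)
    if k < 1:
        raise ValueError("tau must be at least the cell measure h")
    gains = sorted((zi * zi for zi in z), reverse=True)   # -v_tilde, largest first
    value = h * (sum(gains) - sum(gains[:k]))             # cost of the cells switched off
    positive = sum(1 for gain in gains if gain > 0)
    s = 0.0 if positive < k else -gains[k - 1]
    return value, s


def check_lemma_3_6(z: Sequence[float], h: float, taus: Sequence[float]) -> bool:
    """Verify 0 <= V(tau) - V(tau') <= |s(tau)| * (tau' - tau) for all tau < tau' in taus."""
    for a, tau in enumerate(taus):
        v, s = value_and_multiplier(z, h, tau)
        for tau_prime in taus[a + 1:]:
            v_prime, _ = value_and_multiplier(z, h, tau_prime)
            if not (-1e-12 <= v - v_prime <= abs(s) * (tau_prime - tau) + 1e-12):
                return False
    return True


if __name__ == "__main__":
    z = [3.0, 0.0, -1.0, 0.5, 2.0]
    print(value_and_multiplier(z, 0.2, 0.4))          # approximately (0.25, -4.0)
    print(check_lemma_3_6(z, 0.2, [0.2, 0.4, 0.6, 0.8]))  # True
```

In this example, enlarging \(\tau \) from 0.4 to 0.6 lowers the value by 0.2, well below the bound \(4\cdot 0.2\).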

In addition, we obtain the following result, which says that \(-s\) can be interpreted as Lagrange multiplier to the constraint \(\Vert u\Vert _0\le \tau \).

Corollary 3.7

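Let Assumption 1 be satisfied. Let \(\bar{u}\) be a solution of (3.1). Let \(s\le 0\) be given by Theorem 3.4. Then we have the complementarity condition

$$\begin{aligned} s\,\big (\Vert \bar{u}\Vert _0-\tau \big ) = 0. \end{aligned}$$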

Proof

Suppose \(\Vert \bar{u}\Vert _0< \tau \). By Theorem 3.4 there is A with \({\text {meas}}(A)=\tau \) and \( {\text {supp}}\bar{u} \subseteq A\subseteq \Omega _{\le s}\). Due to (3.5), \(\tilde{v}=0\) on \(A\setminus {\text {supp}}\bar{u} \subseteq \Omega _{\le s}\), where \(A\setminus {\text {supp}}\bar{u}\) has positive measure. Hence, \(s=0\) follows by definition of \(\Omega _{\le s}\), see (3.3). \(\square \)

Furthermore, \(\bar{u}\) is a solution of unconstrained penalized problems, where \(-s\) plays the role of a penalization parameter.

Corollary 3.8

Let Assumption 1 be satisfied. Let \(\bar{u}\) be a solution of (3.1). Let \(\lambda :=-s\ge 0\), where s is given by Theorem 3.4. Then \(\bar{u}\) is a solution of

and a solution of

Proof

Let s and A be as in Theorem 3.4. Let u be measurable and set \(B:={\text {supp}}u\). As in the proof of Lemma 3.3, we get

and

which results in

We proceed with

where we used \(\Vert \bar{u}\Vert _0 \le {\text {meas}}(A)\), \(\Vert u\Vert _0={\text {meas}}(B)\), and \(s\le 0\). This proves the first claim. Using the result of Corollary 3.7 and \(s\le 0\), we get

which proves the second claim. \(\square \)
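The link between the constrained problem and penalized problems can be seen on the same toy data. The sketch below rests on our own assumptions: \(g(x,v)=(v-z(x))^2\) on N cells of measure h, and a penalized problem of the form \(\min \int _\Omega g(x,u)+\lambda |u|_0\,\textrm{d}x\). This problem decouples into one scalar problem per cell: either pay \(\lambda \) and take \(v=z(x)\), or take \(v=0\) and pay \(z(x)^2\). With \(\lambda =-s=4\) from the constrained example with \(\tau =0.4\), the cellwise rule reproduces the constrained solution. Cells with \(z(x)^2=\lambda \) are ties at which both choices are optimal, mirroring the freedom in \(\Omega _{<s}\subseteq {\text {supp}}\bar{u}\subseteq \Omega _{\le s}\). The function names are hypothetical.

```python
from typing import List, Sequence


def penalized_pointwise(z: Sequence[float], lam: float) -> List[float]:
    """Cellwise minimizer of g(x, v) + lam * |v|_0 with g(x, v) = (v - z(x))**2.

    Taking v = z(x) costs lam (if z(x) != 0), taking v = 0 costs z(x)**2.
    Ties z(x)**2 == lam are resolved in favour of v = z(x).
    """
    return [zi if zi != 0 and zi * zi >= lam else 0.0 for zi in z]


def penalized_objective(u: Sequence[float], z: Sequence[float], lam: float, h: float) -> float:
    """h * sum_i [(u_i - z_i)**2 + lam * |u_i|_0]."""
    return h * sum((ui - zi) ** 2 + (lam if ui != 0 else 0.0) for ui, zi in zip(u, z))


if __name__ == "__main__":
    z = [3.0, 0.0, -1.0, 0.5, 2.0]
    u_pen = penalized_pointwise(z, lam=4.0)
    print(u_pen)  # [3.0, 0.0, 0.0, 0.0, 2.0], the constrained solution for tau = 0.4
    # The tie at z = 2 (z**2 == lam): switching that cell off gives the same value.
    print(penalized_objective(u_pen, z, 4.0, 0.2),
          penalized_objective([3.0, 0.0, 0.0, 0.0, 0.0], z, 4.0, 0.2))
```

Both objective values equal 1.85 up to rounding, so the penalized problem has several minimizers here, only one of which has \(\Vert u\Vert _0=\tau \).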

Let us prove the following converse result.

Corollary 3.9

Let Assumption 1 be satisfied. Let \(\lambda ^{\prime }\ge 0\). Let \(\bar{u}\) with \(\Vert \bar{u}\Vert _0=\tau \) be a solution of

Then \(\bar{u}\) solves (3.1).

Proof

Let u be given with \(\Vert u\Vert _0\le \tau \). By optimality of \(\bar{u}\), we have

which implies the claim. \(\square \)

Let us close the section with the following observation: every minimizer of the integral functional \(\int _\Omega g(x,u(x))\,\textrm{d}x\) subject to the constraint \(u\in {\mathcal {U}}_{\tau }\cap L^p(\Omega )\) is a solution of (3.1).

Theorem 3.10

Let Assumption 1 be satisfied. Let \(p\in [1,\infty ]\). Let \(\bar{u}\in L^p(\Omega )\) be a solution of

Then \(\bar{u}\) solves (3.1).

Proof

Let \((u_n)\) and \((v_n)\) be given by Lemma 3.2, which implies \(u_n\in L^\infty (\Omega )\) for all n. Let \(B\subseteq \Omega \) with \({\text {meas}}(B)\le \tau \) be given, hence \(\chi _B u_n\in L^p(\Omega )\) for all n. Arguing as in the proof of Theorem 3.4, we get \(\int _B v_n \,\textrm{d}x\rightarrow \int _B \tilde{v} \,\textrm{d}x\ge \int _\Omega g(x,\bar{u}(x)) - g(x,0)\,\textrm{d}x\) by monotone convergence, see (3.6). Let now u be feasible for (3.1). Let \(B:={\text {supp}}u\). Then

hence \(\bar{u}\) solves (3.1) as well. \(\square \)

Remark 3.11

All the results of this section are valid in the more general situation of a non-atomic, complete, \(\sigma \)-finite measure space.

4 Optimal control of elliptic partial differential equation with \(L^0\) constraint

In this section, we consider the following optimal control problem: Minimize

subject to

where \(y_u\) is the weak solution of the equation

We impose the following assumption on the data of this problem:

Assumption 2

1. \(\Omega \) is an open and bounded domain in \({\mathbb {R}}^d\), \(d \in \{2,3\}\), with Lipschitz boundary \(\partial \Omega \). Let \(\tau \in (0,{\text {meas}}(\Omega ))\).

2. A denotes a second-order elliptic operator in \(\Omega \) of the type

$$\begin{aligned} Ay=-\sum _{i,j=1}^d\partial _{x_j}(a_{ij}(x)\partial _{x_i}y) \end{aligned}$$

with coefficients \(a_{ij} \in C(\bar{\Omega })\). In addition, there is \(\Lambda >0\) such that for almost all \(x\in \Omega \)

$$\begin{aligned} \sum _{i,j=1}^d a_{ij}(x)\xi _i\xi _j \ge \Lambda |\xi |^2 \quad \forall \xi \in {\mathbb {R}}^d. \end{aligned}$$

3. The functions \(f,L:\Omega \times {\mathbb {R}}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) are Carathéodory functions, i.e., \(x\mapsto f(x,y,u)\) and \(x\mapsto L(x,y,u)\) are measurable for all \(y,u\in {\mathbb {R}}\), and \((u,y) \mapsto f(x,y,u)\) and \((u,y) \mapsto L(x,y,u)\) are continuous for almost all \(x\in \Omega \). We assume that f and L are continuously differentiable with respect to y for almost all \(x\in \Omega \) and all \(u\in {\mathbb {R}}\), and that \(f_y(x,y,u)\le 0\). In addition, for all \(M>0\) there are non-negative \(a_M\in L^1(\Omega )\), \(b_M\in {\mathbb {R}}\), \(c_M\in L^2(\Omega )\) such that for almost all \(x\in \Omega \)

$$\begin{aligned} |L(x,y,u)| + |L_y(x,y,u)| \le a_M(x) + b_M |u|^2 \quad \forall |y|\le M \end{aligned}$$

and

$$\begin{aligned} |f(x,y,u)|+ |f_y(x,y,u)| \le c_M(x) + b_M | u| \quad \forall |y|\le M, \end{aligned}$$

where \(f_y,L_y\) denote the partial derivatives of f, L with respect to y.

Let us briefly comment on these assumptions. The conditions on the differential equation ensure \(W^{1,p}\) regularity of weak solutions y of (4.1c) for some \(p>d\), which guarantees \(y\in L^\infty (\Omega )\) by the Sobolev embedding. The conditions on L and f ensure that the Nemytskii operators induced by them are continuous (and differentiable with respect to y) from \(L^\infty (\Omega ) \times L^2(\Omega )\) to \(L^1(\Omega )\) and \(L^2(\Omega )\), respectively. We opted for this set of conditions in order to be able to use the results of [6] on regularity of solutions of partial differential equations. This allows us to focus on the \(L^0\) constraints. Of course, other settings are possible (e.g., control constraints, other types of boundary conditions, parabolic equations).

As a consequence of the assumptions, we have the following solvability and regularity result for (4.1c).

Theorem 4.1

Let Assumption 2 be satisfied. Let \(u\in L^2(\Omega )\) be given. Then there is a uniquely determined \(y_u\in W^{1,p}_0(\Omega )\) solving Eq. (4.1c), where \(p>d\).

Proof

This is a consequence of [6, Theorem 1]. \(\square \)

We define the Hamiltonian of the control problem (4.1) by

Note that the inequality constraint \(\Vert u\Vert _0\le \tau \) is not taken into account in the Hamiltonian, which differs from the approach in Sect. 2. In addition, we defined the Hamiltonian in the qualified sense, that is, without a “multiplier” \(\varphi _0\ge 0\) in front of the functional L (as in \(\varphi _0L\)).

We are going to prove the maximum principle in integrated form first. The main difference to other works, e.g., [6, 14], is the construction of perturbations that satisfy the constraint \(\Vert u\Vert _0\le \tau \). Here, we will adapt a result of [14] to generate these perturbations. It is based on Lyapunov’s theorem.

Lemma 4.2

Let \(\rho \in (0,1)\). Let \(g_1,\dots , g_m \in L^1(\Omega )\) be given. Then there is a sequence \((E_\rho ^n)\) of measurable subsets of \(\Omega \) such that

and

Proof

The proof is an adaptation of the proof of [14, Lemma 4.2]. It is included for the convenience of the reader.

Let \((\varphi _n)\) be a dense sequence in \(L^1(\Omega )\). For \(n\ge 0\), define \(f^n:\Omega \rightarrow {\mathbb {R}}^{m+n}\) by

$$\begin{aligned} f^n := (g_1,\dots ,g_m,\varphi _1,\dots ,\varphi _n). \end{aligned}$$

By the Lyapunov convexity theorem [7, Corollary IX.5], there is \(E_\rho ^n \subseteq \Omega \) such that \(\rho \int _\Omega f^n \,\textrm{d}x= \int _{E_\rho ^n} f^n \,\textrm{d}x\). By definition of \(f^n\), this implies \(\int _{E_\rho ^n} g_k \,\textrm{d}x= \rho \int _\Omega g_k\,\textrm{d}x\) for all k.

Let now \(\varphi \in L^1(\Omega )\) be given. Take \(\epsilon >0\). By density, there is N such that \(\Vert \varphi - \varphi _N\Vert _{L^1(\Omega )} <\epsilon \). Then for all \(n>N\), we get

which proves the claim. \(\square \)
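The second property of the sets \(E_\rho ^n\), namely that \(\frac{1}{\rho }\chi _{E_\rho ^n}\) converges weakly-* to 1, can be visualized with a family that is easy to write down. The Python sketch below uses periodic sets \(E_n=\bigcup _{k<n}[k/n,(k+\rho )/n)\) in \((0,1)\), which are our own choice. This is not the Lyapunov construction of Lemma 4.2 and does not satisfy the exact moment conditions \(\int _{E}g_k = \rho \int g_k\) in general. The test function is supplied through an antiderivative, so the integrals are exact up to rounding. The function names are hypothetical.

```python
from typing import Callable


def integral_over_periodic_set(n: int, rho: float, F: Callable[[float], float]) -> float:
    """int_{E_n} phi dx for E_n = union_{k<n} [k/n, (k + rho)/n) in (0, 1),
    where F is an antiderivative of phi (exact up to rounding)."""
    return sum(F((k + rho) / n) - F(k / n) for k in range(n))


def rescaled_pairing(n: int, rho: float, F: Callable[[float], float]) -> float:
    """int_0^1 (1/rho) chi_{E_n}(x) phi(x) dx; tends to int_0^1 phi dx as n grows."""
    return integral_over_periodic_set(n, rho, F) / rho


def cube_third(x: float) -> float:
    """Antiderivative of phi(x) = x**2."""
    return x ** 3 / 3


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(n, rescaled_pairing(n, 0.3, cube_third))  # approaches 1/3
```

For \(\varphi \equiv 1\), the integral over \(E_n\) equals \(\rho \) for every n, while for \(\varphi (x)=x^2\) the rescaled pairing approaches \(1/3\) at rate \(O(1/n)\).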

Corollary 4.3

Let \((E_\rho ^n)\) be a sequence of measurable subsets of \(\Omega \) such that

Let \(h \in L^2(\Omega )\) be given. Then \((1-\frac{1}{\rho }\chi _{E_\rho ^n})h \rightarrow 0\) in \(W^{-1,p}(\Omega )\) where \(p\in (1,+\infty )\) for \(d=2\) and \(p\in (1,6)\) for \(d=3\).

Proof

Due to the assumptions, we have \((1-\frac{1}{\rho }\chi _{E_\rho ^n})h \rightharpoonup 0\) in \(L^2(\Omega )\). Under the conditions on p, the embedding \(W^{1,p^{\prime }}_0(\Omega )\hookrightarrow L^2(\Omega )\) is compact, where \(p^{\prime }\) is given by \(\frac{1}{p}+\frac{1}{p^{\prime }}=1\). Hence, by duality, the embedding \(L^2(\Omega )\hookrightarrow W^{-1,p}(\Omega )\) is compact as well. Since compact operators map weakly convergent sequences to strongly convergent ones, the claim follows. \(\square \)

Now we have all tools available to prove the maximum principle. The proof is very similar to the proofs in [6]. Hence, we will be brief on arguments that are similar to those in [6]. We first prove the maximum principle in integrated form.

Theorem 4.4

Let \(\bar{u}\) be a local solution of (4.1a) and (4.1b) in the \(L^2(\Omega )\)-sense with associated state \(\bar{y}:= y_{\bar{u}} \in W^{1,p}_0(\Omega )\), where \(p>d\) is such that \(W^{1,p^{\prime }}_0(\Omega )\hookrightarrow L^2(\Omega )\), where \(p^{\prime }\) is given by \(\frac{1}{p}+\frac{1}{p^{\prime }}=1\). Then there is \(\bar{\varphi }\in W^{1,p^{\prime }}_0(\Omega )\) that solves the adjoint equation

In addition,

for all \(v\in L^2(\Omega )\) with \(\Vert v\Vert _0 \le \tau \).

Proof

Let \(v\in L^2(\Omega )\) with \(\Vert v\Vert _0 \le \tau \). Set \(h:=f(\cdot , \bar{y}, v) - f(\cdot , \bar{y}, \bar{u})\), \(m:=4\), and

Then by Lemma 4.2 and Corollary 4.3, for each \(\rho \in (0,1)\) there is a set \(E_\rho \) such that

and

Let us set

Then

and

Hence, \(J(\bar{u}) \le J(u_\rho )\) by local optimality of \(\bar{u}\) for \(\rho >0\) small enough. Arguing as in [6, Lemma 2], we find

where

and \(z\in W^{1,p}_0(\Omega )\) satisfies

In addition, there is \(\bar{\varphi }\in W^{1,p^{\prime }}_0(\Omega )\) [6, Theorem 2] that solves the adjoint equation

This implies

which is the claim. \(\square \)

Using Theorem 3.10 and the results of Sect. 3, we can turn the maximum principle from integrated to pointwise form.

Theorem 4.5

Let \(\bar{u}\) be a local solution of (4.1a)–(4.1b) in the \(L^2(\Omega )\)-sense with associated state \(\bar{y}:= y_{\bar{u}} \in W^{1,p}_0(\Omega )\), where \(p>d\) is such that \(W^{1,p^{\prime }}_0(\Omega )\hookrightarrow L^2(\Omega )\) where \(p^{\prime }\) is given by \(\frac{1}{p}+\frac{1}{p^{\prime }}=1\), and adjoint \(\bar{\varphi }\in W^{1,p^{\prime }}_0(\Omega )\) given by Theorem 4.4.

Then there is a number \(s\le 0\) such that

and for almost all \(x\in \Omega \)

In addition, we have the following properties for almost all \(x\in \Omega \):

Proof

Let us define g by

Then g is a normal integrand, and \(g(\cdot ,0)\) is integrable. Due to Theorem 4.4, \(\bar{u}\) solves

By Theorem 3.10, \(\bar{u}\) solves (3.1). Hence, the results of Sect. 3 are applicable. Let \(s\le 0\) be as in Theorem 3.4. Then the claim follows with Corollaries 3.5, 3.7 and 3.8. \(\square \)

This result shows that the conditions (2.1) and (2.2), which we derived for an ODE control problem, are satisfied in adapted form in the PDE control problem.

5 Proximal gradient algorithm

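In this section, we analyze a proximal gradient algorithm applied to a problem with \(L^0\) constraints. Here, we consider problems of the type

$$\begin{aligned} \min _{u\in L^2(\Omega )} f(u) + \frac{\alpha }{2}\Vert u\Vert _{L^2(\Omega )}^2 \quad \text {subject to} \quad \Vert u\Vert _0\le \tau . \end{aligned}$$(5.1)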

We are going to use the following set of assumptions.

Assumption 3

1. \(\Omega \subseteq {\mathbb {R}}^d\) is Lebesgue measurable with \({\text {meas}}(\Omega )\in (0,\infty )\), \(\tau \in (0,{\text {meas}}(\Omega ))\).

2. The function \(f:L^2(\Omega )\rightarrow {\mathbb {R}}\) is bounded from below and Fréchet differentiable. In addition, \(\nabla f:L^2(\Omega )\rightarrow L^2(\Omega )\) is Lipschitz continuous with constant \(L_f\), i.e.,

$$\begin{aligned} \Vert \nabla f(u_1)-\nabla f(u_2)\Vert _{L^2(\Omega )}\le L_f\Vert u_1-u_2\Vert _{L^2(\Omega )} \end{aligned}$$

holds for all \(u_1,u_2\in L^2(\Omega )\).

3. \(\alpha \ge 0\).

These requirements on f are well-established in the context of first-order optimization methods. The requirement of global Lipschitz continuity of \(\nabla f\) and knowledge of the Lipschitz modulus \(L_f\) can be overcome by a suitable backtracking method, see [15, Section 3.3], which can be used in our situation as well.

Remark 5.1

Under some restrictions, the problem of Sect. 4 satisfies these assumptions. Let us assume that L is of the form \(L(x,y,u)=L(x,y) + \frac{\alpha }{2} u^2\), and define the reduced functional \(f(u):= \int _\Omega L(x,y_u(x))\,\textrm{d}x\), where \(y_u\) is the solution of (4.1c). If the nonlinearity in the state equation is affine in u, e.g., \(f(x,y,u) = f(x,y)+u\) (here, with slight abuse of notation, f also denotes the nonlinearity in (4.1c)), then the reduced functional f satisfies Assumption 3. See also the discussion in [13, Section 2.2].

Let us first prove a necessary optimality condition for (5.1). The proof is similar to Theorem 4.4 above.

Theorem 5.2

Suppose f is a Fréchet differentiable mapping from \(L^1(\Omega )\) to \({\mathbb {R}}\). Let \(\bar{u}\) be a local solution of (5.1). Then it holds

for all \(v\in L^2(\Omega )\) with \(\Vert v\Vert _0 \le \tau \).

In addition, there is a number \(s\le 0\) such that

If \(\alpha >0\) then for almost all \(x\in \Omega \) the following conditions are fulfilled:

If \(\alpha =0\) then \(\nabla f(\bar{u})=0\).

Proof

Let us set \(F(u):=f(u) + \frac{\alpha }{2} \Vert u\Vert _{L^2(\Omega )}^2\), which is Fréchet differentiable on \(L^2(\Omega )\) with gradient \(\nabla F(u)=\nabla f(u)+\alpha u\). Let \(v\in L^2(\Omega )\) with \(\Vert v\Vert _0 \le \tau \). Set \(m:=5\), and

Then by Lemma 4.2, for each \(\rho \in (0,1)\) there is a set \(E_\rho \) such that \(\int _{E_\rho } g_j \,\textrm{d}x= \rho \int _\Omega g_j\,\textrm{d}x\) for all \(j=1,\dots ,m\). As in the proof of Theorem 4.4, the function \(u_\rho := \bar{u} + \chi _{E_\rho }(v-\bar{u})\) satisfies \( \Vert u_\rho \Vert _0 \le \tau \) and \( \Vert u_\rho -\bar{u}\Vert _{L^2(\Omega )}^2 = \rho \Vert v-\bar{u}\Vert _{L^2(\Omega )}^2\). Due to Fréchet differentiability and the construction of \(E_\rho \) and \(u_\rho \), we have

Dividing by \(\rho >0\) and passing to the limit \(\rho \searrow 0\) yields, by local optimality,

which proves the first claim. For \(\alpha >0\), the second claim follows from Theorem 3.4 and Corollaries 3.5 and 3.7 with \(\tilde{v} = -\frac{1}{2\alpha } |\nabla f(\bar{u})|^2\). \(\square \)
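For \(\alpha >0\), applying Lemma 3.3 and Corollaries 3.5 and 3.7 with \(\tilde{v}=-\frac{1}{2\alpha }|\nabla f(\bar{u})|^2\) yields conditions that can be tested on a discretized candidate. These are our reading of the results: \(\bar{u}=-\nabla f(\bar{u})/\alpha \) on \({\text {supp}}\bar{u}\); \(\Omega _{<s}\subseteq {\text {supp}}\bar{u}\subseteq \Omega _{\le s}\) for some \(s\le 0\); and \(s=0\) if the constraint is inactive. The Python sketch below checks them on N cells of measure h, using \(\lfloor \tau /h\rfloor \) admissible cells as the discrete form of the constraint. This is a diagnostic we add for illustration, not a restatement of the displayed conditions of the theorem. The test data use the hypothetical \(f(u)=\frac{1}{2}\Vert u-z\Vert ^2\), whose gradient is \(u-z\), and the function name is hypothetical as well.

```python
import math
from typing import Sequence


def satisfies_conditions(u: Sequence[float], grad: Sequence[float], alpha: float,
                         h: float, tau: float, tol: float = 1e-10) -> bool:
    """Check the pointwise conditions for alpha > 0 on N cells of measure h.

    u    : candidate values on the cells
    grad : values of grad f(u) on the cells
    """
    if alpha <= 0:
        raise ValueError("this check assumes alpha > 0")
    k_max = math.floor(tau / h + 1e-9)            # admissible number of nonzero cells
    v_tilde = [-(g * g) / (2 * alpha) for g in grad]
    supp = [i for i, ui in enumerate(u) if ui != 0]
    off = [i for i, ui in enumerate(u) if ui == 0]
    if len(supp) > k_max:                         # feasibility ||u||_0 <= tau
        return False
    if any(abs(u[i] + grad[i] / alpha) > tol for i in supp):
        return False                              # u = -grad f(u) / alpha on the support
    # Need some s <= 0 with v_tilde <= s on supp u and v_tilde >= s off supp u.
    s_low = max((v_tilde[i] for i in supp), default=-math.inf)
    s_high = min([v_tilde[i] for i in off] + [0.0])
    if len(supp) < k_max:
        s_low = 0.0                               # inactive constraint forces s = 0
    return s_low <= s_high + tol


if __name__ == "__main__":
    z = [3.0, 0.0, -1.0, 0.5, 2.0]
    good = [1.5, 0.0, 0.0, 0.0, 1.0]              # z / (1 + alpha) on the two largest |z|
    print(satisfies_conditions(good, [a - b for a, b in zip(good, z)], 1.0, 0.2, 0.4))
```

With \(\alpha =1\) and \(\tau =0.4\), the candidate that keeps \(z/(1+\alpha )\) on the two cells of largest \(|z|\) passes the check, whereas the same candidate fails for \(\tau =0.6\), where a third cell could still be switched on.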

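Let us briefly give the motivation of the proximal gradient algorithm. The well-known steepest descent method applied to the unconstrained differentiable problem \(\min _u f(u)\) amounts to the iteration

$$\begin{aligned} u_{k+1} := u_k - t_k \nabla f(u_k), \end{aligned}$$(5.5)

where \(t_k>0\) is a suitable step-size. It is immediate that \(u_{k+1}\) is a solution of the unconstrained problem

$$\begin{aligned} \min _{u\in L^2(\Omega )} f(u_k)+\nabla f(u_k) \cdot (u-u_k) + \frac{1}{2t_k}\Vert u-u_k\Vert ^2_{L^2(\Omega )}. \end{aligned}$$(5.6)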

While the constraint \(\Vert u\Vert _0\le \tau \) cannot be added directly to the explicit iteration (5.5), it can easily be imposed on the problem (5.6). The resulting proximal gradient (or forward-backward) algorithm reads as follows. Here, we replaced the parameter \(t_k\) by a fixed parameter L, which takes the place of \(\frac{1}{t_k}\).

Algorithm 1

(Proximal gradient algorithm) Choose \(L>0\) and \(u_0\in L^2(\Omega )\). Set \(k=0\).

1. Compute \(u_{k+1}\) as a solution of

$$\begin{aligned} \min _{u\in {\mathcal {U}}_{\tau }\cap L^2(\Omega )} f(u_k)+\nabla f(u_k) \cdot (u-u_k) + \frac{L}{2}\Vert u-u_k\Vert ^2_{L^2(\Omega )} + \frac{\alpha }{2} \Vert u\Vert _{L^2(\Omega )}^2 \end{aligned}\tag{5.7}$$

2. Set \(k:=k+1\) and go to step 1.
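A minimal sketch of Algorithm 1 on a hypothetical discretization: \(\Omega \) is replaced by n cells of equal measure h, functions by vectors of cell values, and \(\tau = m\cdot h\), so that \(\Vert u\Vert _0\le \tau \) means "at most m nonzero cells". Under these assumptions the discrete version of (5.7) is solved exactly by hard thresholding: compute \(\tilde{u}=(L u_k-\nabla f(u_k))/(L+\alpha )\) pointwise and keep the m cells with the largest decrease \(\frac{L+\alpha }{2}\tilde{u}(x)^2\). The names `prox_step`, `proximal_gradient` and the cut-off value `lam` (our discrete stand-in for \(\lambda _{k+1}\)) are illustrative, not taken from the paper.

```python
import numpy as np


def prox_step(u_k, grad, L, alpha, m):
    """Solve the discrete analogue of subproblem (5.7) exactly.

    Hypothetical setting: all cells have the same measure h and tau = m * h,
    so the L^0 constraint allows at most m nonzero cells (m >= 1).
    """
    u_tilde = (L * u_k - grad) / (L + alpha)   # pointwise minimizer of g(x, .)
    gain = 0.5 * (L + alpha) * u_tilde**2      # g(x, 0) - g(x, u_tilde(x)) >= 0
    keep = np.argsort(gain)[-m:]               # indices of the m largest gains
    u_next = np.zeros_like(u_k)
    u_next[keep] = u_tilde[keep]               # hard thresholding
    lam = gain[keep].min()                     # cut-off level, stand-in for lambda_{k+1}
    return u_next, lam


def proximal_gradient(grad_f, u0, L, alpha, m, iters=100):
    """Algorithm 1 with a fixed iteration count instead of a stopping test."""
    u, lams = np.array(u0, dtype=float), []
    for _ in range(iters):
        u, lam = prox_step(u, grad_f(u), L, alpha, m)
        lams.append(lam)
    return u, lams


# Usage with the separable test functional f(u) = 0.5 * ||u - y||^2 (L_f = 1).
rng = np.random.default_rng(0)
y = rng.standard_normal(20)
alpha, m = 0.5, 4
u, lams = proximal_gradient(lambda v: v - y, np.zeros(20), L=2.0, alpha=alpha, m=m)
```

For this separable test functional (\(\nabla f(u)=u-y\), \(L_f=1<L\)), the support settles on the m cells where \(|y|\) is largest and the iterates converge there to \(y/(1+\alpha )\), while vanishing elsewhere. Because the subproblem is separable apart from the support constraint, the exact solve reduces to a sort, which is why hard thresholding is used.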

The functional to be minimized in (5.7) can be written as an integral functional \(\int _\Omega g(x,u(x))\,\textrm{d}x\) with g defined by

The pointwise minimum of g is realized by the function \(\tilde{u}\in L^2(\Omega )\) defined by

Clearly, \(g(\cdot ,0)\) is integrable and Assumption 1 is satisfied, so the results of Sect. 3 are applicable. Hence, a solution of (5.7) can be computed as in Theorem 3.4. Here, \(L>0\) is important, since it ensures that integrability of \(g(\cdot ,u)\) implies \(u\in L^2(\Omega )\). It is easy to verify that

which corresponds to \(\tilde{v}\) in Theorem 3.4.
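For orientation, here is the elementary computation behind \(\tilde{u}\). It assumes (our reading, since the display defining g is not reproduced here) that, up to terms independent of \(u\), \(g(x,u)=\nabla f(u_k)(x)\,(u-u_k(x))+\frac{L}{2}(u-u_k(x))^2+\frac{\alpha }{2}u^2\), which is the pointwise form of the functional in (5.7):

```latex
\begin{aligned}
\partial _u g(x,u) &= \nabla f(u_k)(x) + L\,(u-u_k(x)) + \alpha u = 0
 \quad \Longleftrightarrow \quad u = \tilde{u}(x) := \frac{L\,u_k(x)-\nabla f(u_k)(x)}{L+\alpha },\\
g(x,u) &= g(x,\tilde{u}(x)) + \frac{L+\alpha }{2}\,\big (u-\tilde{u}(x)\big )^2,
 \qquad g(x,0)-g(x,\tilde{u}(x)) = \frac{L+\alpha }{2}\,\tilde{u}(x)^2 .
\end{aligned}
```

Choosing \(u(x)=\tilde{u}(x)\) instead of \(u(x)=0\) therefore decreases the integrand by \(\frac{L+\alpha }{2}\tilde{u}(x)^2\). A threshold \(\lambda \) on this decrease corresponds to \(|\tilde{u}(x)|\ge \sqrt{2\lambda /(L+\alpha )}\), and this is the bound that reappears in the proof of Lemma 5.5.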

Lemma 5.3

Let Assumption 3 be satisfied. Let \(L>0\) and \(u_k\in L^2(\Omega )\) be given. Then (5.7) is solvable. In addition, there is \(\lambda _{k+1}\ge 0\) such that for every solution \(u_{k+1}\) of (5.7) it holds

and \(u_{k+1}\) solves

Moreover, for almost all \(x\in \Omega \) we have

and

Proof

Existence of solutions follows from Theorem 3.4. The properties of \(\lambda _{k+1}:=-s\), where s is as in Theorem 3.4, are consequences of Corollary 3.7 and Corollary 3.8. The claim (5.8) is a consequence of Corollary 3.8 and [15, Corollary 3.9]. Finally, Corollary 3.5 implies (5.9). \(\square \)
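The solvability statement of Lemma 5.3 can be sanity-checked on a tiny hypothetical discretization: six cells of equal measure h, at most m = 2 nonzero cells, and random data. The sketch compares the hard-thresholding solution of the discrete analogue of (5.7) with an exhaustive search over all admissible supports. It also confirms that nonzero entries satisfy \(|u_{k+1}(x)|\ge \sqrt{2\lambda _{k+1}/(L+\alpha )}\), the bound used in the proof of Lemma 5.5. The helper names and the reading of `lam` as a discrete stand-in for \(\lambda _{k+1}\) are our assumptions.

```python
import itertools
import numpy as np


def model(u, u_k, grad, L, alpha, h):
    """Discretized objective of (5.7), dropping the constant f(u_k)."""
    d = u - u_k
    return h * np.sum(grad * d + 0.5 * L * d**2 + 0.5 * alpha * u**2)


def prox_step(u_k, grad, L, alpha, m):
    """Hard thresholding: keep the m cells with the largest pointwise gain."""
    u_tilde = (L * u_k - grad) / (L + alpha)
    gain = 0.5 * (L + alpha) * u_tilde**2
    keep = np.argsort(gain)[-m:]
    u_next = np.zeros_like(u_k)
    u_next[keep] = u_tilde[keep]
    return u_next, gain[keep].min(), gain


def brute_force(u_k, grad, L, alpha, h, m):
    """Minimum of the model over all supports with at most m cells."""
    u_tilde = (L * u_k - grad) / (L + alpha)   # optimal values on any fixed support
    best = np.inf
    for r in range(m + 1):
        for support in itertools.combinations(range(u_k.size), r):
            idx = np.array(support, dtype=int)
            u = np.zeros_like(u_k)
            u[idx] = u_tilde[idx]
            best = min(best, model(u, u_k, grad, L, alpha, h))
    return best


rng = np.random.default_rng(1)
n, m, L, alpha = 6, 2, 2.0, 0.1
h = 1.0 / n
u_k, grad = rng.standard_normal(n), rng.standard_normal(n)
u_next, lam, gain = prox_step(u_k, grad, L, alpha, m)
```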

The iterates of the algorithm satisfy the following properties.

Theorem 5.4

Let Assumption 3 be satisfied. Suppose \(L>L_f\). Let \((u_k)\) be a sequence of iterates generated by Algorithm 1. Then it holds that:

1. The sequences \((u_k)\) and \((\nabla f(u_k))\) are bounded in \(L^2(\Omega )\) if \(\alpha >0\).

2. The sequence \((f(u_k) + \frac{\alpha }{2}\Vert u_k\Vert _{L^2(\Omega )}^2)\) is monotonically decreasing and convergent.

3. \(\sum _{k=0}^\infty \Vert u_{k+1}-u_k\Vert _{L^2(\Omega )}^2 <\infty \).

Proof

These claims can be proven as in [15, Theorem 3.13]. \(\square \)
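The monotonicity in Theorem 5.4 rests on the descent inequality \(F(u_{k+1})\le F(u_k)-\frac{L-L_f}{2}\Vert u_{k+1}-u_k\Vert ^2_{L^2(\Omega )}\). It is valid when \(u_k\) satisfies the \(L^0\) constraint and \(L>L_f\). This is the standard argument for proximal gradient methods, and we assume it is the one behind [15, Theorem 3.13]. The sketch checks the inequality numerically for a hypothetical smooth part \(f(u)=\frac{1}{2}\Vert Ku-y\Vert ^2_{L^2(0,1)}\) with an exponential kernel K, discretized on a uniform grid, where \(L_f=\Vert K\Vert ^2\).

```python
import numpy as np


def prox_step(u_k, grad, L, alpha, m):
    """Hard-thresholding solution of the discrete analogue of (5.7)."""
    u_tilde = (L * u_k - grad) / (L + alpha)
    gain = 0.5 * (L + alpha) * u_tilde**2
    keep = np.argsort(gain)[-m:]
    u_next = np.zeros_like(u_k)
    u_next[keep] = u_tilde[keep]
    return u_next


def monotonicity_experiment(n=60, m=8, alpha=1e-2, iters=50, seed=0):
    h = 1.0 / n                                            # cell measure on (0, 1)
    x = (np.arange(n) + 0.5) * h                           # cell midpoints
    M = h * np.exp(-5.0 * np.abs(x[:, None] - x[None, :]))  # hypothetical integral operator K
    y = np.random.default_rng(seed).standard_normal(n)     # hypothetical data

    def sq_norm(v):                                        # ||v||_{L^2}^2 on the grid
        return h * np.sum(v**2)

    def F(u):                                              # f(u) + alpha/2 ||u||^2
        return 0.5 * sq_norm(M @ u - y) + 0.5 * alpha * sq_norm(u)

    def grad_f(u):                                         # L^2 gradient of f
        return M.T @ (M @ u - y)

    L_f = np.linalg.norm(M, 2) ** 2                        # Lipschitz constant of grad_f
    L = 1.5 * L_f                                          # L > L_f as in Theorem 5.4
    u = np.zeros(n)                                        # feasible starting point
    F_vals, steps = [F(u)], []
    for _ in range(iters):
        u_new = prox_step(u, grad_f(u), L, alpha, m)
        steps.append(sq_norm(u_new - u))                   # ||u_{k+1} - u_k||^2
        u = u_new
        F_vals.append(F(u))
    return np.array(F_vals), np.array(steps), L, L_f


F_vals, steps, L, L_f = monotonicity_experiment()
```

Summing the sufficient-decrease inequality over k bounds \(\sum _k\Vert u_{k+1}-u_k\Vert ^2_{L^2(\Omega )}\) by \(\frac{2}{L-L_f}\big (F(u_0)-\inf F\big )\). In this argument, item 3 follows from the same estimate that gives item 2.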

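Let us define the sequence \((\chi _k)\) of characteristic functions by

$$\begin{aligned} \chi _k(x) := |u_k(x)|_0, \quad x\in \Omega . \end{aligned}$$
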
Using Eq. (5.8), we have the following estimate of \((\chi _k)\), which is similar to [15, Lemma 3.12].

Lemma 5.5

Let \((u_k)\) be iterates of Algorithm 1. Then it holds

Proof

Let \(x\in \Omega \) be such that \(\chi _{k+1}(x)\ne \chi _k(x)\). Then \(|\chi _{k+1}(x)- \chi _k(x)|=1\), and exactly one of \(u_{k+1}(x)\) and \(u_k(x)\) is zero. If \(u_{k+1}(x)=0\) and \(u_k(x)\ne 0\), then (5.8) gives \(|u_{k+1}(x)-u_k(x)|=|u_k(x)|\ge \sqrt{ \frac{2 \lambda _k}{L+\alpha }}\). If \(u_{k+1}(x)\ne 0\) and \(u_k(x)=0\), then \(|u_{k+1}(x)-u_k(x)|=|u_{k+1}(x)|\ge \sqrt{ \frac{2 \lambda _{k+1}}{L+\alpha } }\). Integrating these pointwise estimates over the set where \(\chi _{k+1}\ne \chi _k\) proves the claim. \(\square \)
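The pointwise estimate in the proof of Lemma 5.5 is \(|u_{k+1}(x)-u_k(x)|^2\ge \frac{2}{L+\alpha }\min (\lambda _k,\lambda _{k+1})\) wherever \(\chi _{k+1}(x)\ne \chi _k(x)\). It can be observed on a hypothetical discretization: a uniform grid on (0,1), a quadratic f with an exponential kernel, and at most m nonzero cells. Here `lam` is our discrete stand-in for \(\lambda _k\), namely the smallest pointwise gain among the retained cells. This is an illustration of the argument, not the paper's construction of \(\lambda _k\).

```python
import numpy as np


def prox_step(u_k, grad, L, alpha, m):
    """Hard-thresholding solution of the discrete analogue of (5.7)."""
    u_tilde = (L * u_k - grad) / (L + alpha)
    gain = 0.5 * (L + alpha) * u_tilde**2
    keep = np.argsort(gain)[-m:]
    u_next = np.zeros_like(u_k)
    u_next[keep] = u_tilde[keep]
    return u_next, gain[keep].min()                        # iterate and cut-off level lam


def switching_ratios(n=60, m=8, alpha=1e-2, iters=30, seed=0):
    """For each k >= 1 with at least one switching cell, return
    min over {chi_{k+1} != chi_k} of |u_{k+1} - u_k|^2 / (2 min(lam_k, lam_{k+1}) / (L + alpha)).
    The pointwise argument of Lemma 5.5 predicts that every ratio is >= 1."""
    h = 1.0 / n
    x = (np.arange(n) + 0.5) * h
    M = h * np.exp(-5.0 * np.abs(x[:, None] - x[None, :]))  # hypothetical kernel operator
    y = np.random.default_rng(seed).standard_normal(n)     # hypothetical data
    L = 1.5 * np.linalg.norm(M, 2) ** 2                    # L > L_f

    def grad_f(u):
        return M.T @ (M @ u - y)

    u, lam = prox_step(np.zeros(n), grad_f(np.zeros(n)), L, alpha, m)  # u_1, lam_1
    ratios = []
    for _ in range(iters):
        u_new, lam_new = prox_step(u, grad_f(u), L, alpha, m)
        switched = (u_new != 0) != (u != 0)                # chi_{k+1}(x) != chi_k(x)
        bound = 2.0 * min(lam, lam_new) / (L + alpha)
        if switched.any() and bound > 0:
            ratios.append(np.min((u_new - u)[switched] ** 2) / bound)
        u, lam = u_new, lam_new
    return ratios


ratios = switching_ratios()
```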

Under the assumption that \((\lambda _k)\) is eventually bounded from below by a positive number, we can prove feasibility of weak limit points of the algorithm. In the general situation, it is not clear how to prove such a result, as the map \(u\mapsto \Vert u\Vert _0\) is not weakly sequentially lower semicontinuous from \(L^2(\Omega )\) to \({\mathbb {R}}\).

Theorem 5.6

Let Assumption 3 be satisfied. Suppose \(L>L_f\). Let \((u_k)\) be a sequence of iterates generated by Algorithm 1. Suppose

$$\begin{aligned} \liminf _{k\rightarrow \infty } \lambda _k>0, \end{aligned}\tag{5.11}$$

where \((\lambda _k)\) is as in Lemma 5.3.

Then there is a characteristic function \(\bar{\chi }\) such that \(\chi _k\rightarrow \bar{\chi }\) in \(L^1(\Omega )\), and every weak sequential limit point \(\bar{u}\) of \((u_k)\) in \(L^2(\Omega )\) is feasible for the \(L^0\) constraint, i.e., \(\Vert \bar{u}\Vert _0\le \tau \).

Proof

Let \(\lambda :=\liminf _{k\rightarrow \infty } \lambda _k>0\). Then for all k sufficiently large, we have

The summability of \(\Vert u_{k+1}-u_k\Vert _{L^2(\Omega )}^2\) (Theorem 5.4) implies that of \(\Vert \chi _{k+1}-\chi _k\Vert _{L^1(\Omega )}\). Hence \((\chi _k)\) is a Cauchy sequence in \(L^1(\Omega )\), so \(\chi _k\rightarrow \bar{\chi }\) in \(L^1(\Omega )\) for some \(\bar{\chi }\in L^1(\Omega )\). Since a subsequence converges pointwise almost everywhere, \(\bar{\chi }\) is a characteristic function. As \((\chi _k)\) is trivially bounded in \(L^\infty (\Omega )\), it follows that \(\chi _k\rightarrow \bar{\chi }\) in \(L^p(\Omega )\) for all \(p<\infty \).
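Both steps of this argument can be made explicit. The Cauchy property follows from the triangle inequality. Since \(|\chi _k-\bar{\chi }|\) takes only the values 0 and 1 almost everywhere, the \(L^p\) norms reduce to the \(L^1\) norm:

```latex
\begin{aligned}
\Vert \chi _l-\chi _k\Vert _{L^1(\Omega )} &\le \sum _{j=k}^{l-1}\Vert \chi _{j+1}-\chi _j\Vert _{L^1(\Omega )} \rightarrow 0 \quad (l>k\rightarrow \infty ),\\
\Vert \chi _k-\bar{\chi }\Vert _{L^p(\Omega )}^p &= \int _\Omega |\chi _k-\bar{\chi }|^p\,\textrm{d}x = \Vert \chi _k-\bar{\chi }\Vert _{L^1(\Omega )} \rightarrow 0 \quad (1\le p<\infty ).
\end{aligned}
```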

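Let now \((u_{k_n})\) be a subsequence with \(u_{k_n} \rightharpoonup \bar{u}\) in \(L^2(\Omega )\), and let \(\varphi \in L^2(\Omega )\cap L^\infty (\Omega )\). Since \(\chi _k = |u_k|_0\), we have \(\int _\Omega (1-\chi _{k_n})u_{k_n} \varphi \,\textrm{d}x=0\) for all n. As \((1-\chi _{k_n})\varphi \rightarrow (1-\bar{\chi })\varphi \) strongly in \(L^2(\Omega )\) and \(u_{k_n} \rightharpoonup \bar{u}\) weakly in \(L^2(\Omega )\), passing to the limit in this equation yields \(\int _\Omega (1-\bar{\chi }) \bar{u} \varphi \,\textrm{d}x=0\). Since \(\varphi \) was arbitrary, this implies \( (1-\bar{\chi }) \bar{u}=0\) almost everywhere. This in turn implies \(|\bar{u}|_0 \le \bar{\chi }\) almost everywhere, as both functions \(\bar{\chi }\) and \(|\bar{u}|_0\) only attain the values 0 and 1. Consequently,

$$\begin{aligned} \Vert \bar{u}\Vert _0 = \int _\Omega |\bar{u}|_0\,\textrm{d}x \le \int _\Omega \bar{\chi }\,\textrm{d}x = \lim _{k\rightarrow \infty } \int _\Omega \chi _k\,\textrm{d}x = \lim _{k\rightarrow \infty } \Vert u_k\Vert _0 \le \tau , \end{aligned}$$

and \(\bar{u}\) is feasible for the \(L^0\) constraint. \(\square \)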

Moreover, strong convergence can be proven under additional assumptions on \(\nabla f\); see also the related result [15, Theorem 3.18]. Here, we assume that \(\nabla f\) maps weakly converging sequences to strongly converging sequences.

Theorem 5.7

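Let Assumption 3 be satisfied. Suppose \(L>L_f\). Let us assume complete continuity of \(\nabla f\) from \(L^2(\Omega )\) to \(L^2(\Omega )\), i.e., for all sequences \((v_k)\) in \(L^2(\Omega )\) the implication

$$\begin{aligned} v_k \rightharpoonup v \text { in } L^2(\Omega ) \quad \Rightarrow \quad \nabla f(v_k)\rightarrow \nabla f(v) \text { in } L^2(\Omega ) \end{aligned}$$

holds. In addition, we require \(\alpha >0\).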

Let \((u_k)\) be a sequence of iterates generated by Algorithm 1. Suppose

$$\begin{aligned} \liminf _{k\rightarrow \infty } \lambda _k>0, \end{aligned}$$

where \((\lambda _k)\) is as in Lemma 5.3.

Then \(u_{k_n}\rightharpoonup \bar{u}\) in \(L^2(\Omega )\) implies \(u_{k_n}\rightarrow \bar{u}\) in \(L^2(\Omega )\). In addition, for almost all \(x\in \Omega \) the following condition is fulfilled

Proof

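If \(u_{k+1}(x)\ne 0\), then \(\alpha u_{k+1}(x) = - (\nabla f(u_k)(x) + L(u_{k+1}(x)-u_k(x)))\). This implies

$$\begin{aligned} \alpha \chi _{k+1} u_{k+1} = - \chi _{k+1}\big (\nabla f(u_k) + L(u_{k+1}-u_k)\big ). \end{aligned}$$

Adding the equation \(\alpha (1-\chi _{k+1}) u_{k+1} =0\) yields

$$\begin{aligned} \alpha u_{k+1} = - \chi _{k+1}\big (\nabla f(u_k) + L(u_{k+1}-u_k)\big ). \end{aligned}\tag{5.12}$$
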
Let now \(u_{k_n}\rightharpoonup \bar{u}\) in \(L^2(\Omega )\). By Theorem 5.4, \(u_{k+1} - u_k\rightarrow 0 \) in \(L^2(\Omega )\), so that also \(u_{k_n-1}\rightharpoonup \bar{u}\), and complete continuity of \(\nabla f\) gives \(\nabla f(u_{k_n-1})\rightarrow \nabla f(\bar{u})\) in \(L^2(\Omega )\). Moreover, \(\chi _k\rightarrow \bar{\chi }\) in \(L^1(\Omega )\) by Theorem 5.6. Hence the right-hand side of (5.12), taken with \(k+1=k_n\), converges strongly in \(L^2(\Omega )\) by Lemma 5.8 below. Since \(\alpha >0\), this implies the strong convergence \(u_{k_n}\rightarrow \bar{u}\) in \(L^2(\Omega )\). In addition, in the limit we obtain from (5.12) \(\alpha \bar{u} =- \bar{\chi } \nabla f(\bar{u})\). \(\square \)
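The pointwise relation at the start of this proof, combined with \(u_{k+1}=0\) off its support, gives \(\alpha u_{k+1}=-\chi _{k+1}\big (\nabla f(u_k)+L(u_{k+1}-u_k)\big )\) at every iteration, not only in the limit. The sketch checks this exactly on a hypothetical discretization: a uniform grid on (0,1), a quadratic f with an exponential kernel, and at most m nonzero cells. It records the largest pointwise residual per iteration. The problem data and helper names are our assumptions.

```python
import numpy as np


def prox_step(u_k, grad, L, alpha, m):
    """Hard-thresholding solution of the discrete analogue of (5.7)."""
    u_tilde = (L * u_k - grad) / (L + alpha)
    gain = 0.5 * (L + alpha) * u_tilde**2
    keep = np.argsort(gain)[-m:]
    u_next = np.zeros_like(u_k)
    u_next[keep] = u_tilde[keep]
    return u_next


def identity_residuals(n=40, m=6, alpha=0.1, iters=20, seed=3):
    """Max-norm residual of alpha*u_{k+1} + chi_{k+1} * (grad f(u_k) + L (u_{k+1} - u_k))."""
    h = 1.0 / n
    x = (np.arange(n) + 0.5) * h
    M = h * np.exp(-5.0 * np.abs(x[:, None] - x[None, :]))  # hypothetical kernel operator
    y = np.random.default_rng(seed).standard_normal(n)     # hypothetical data
    L = 1.5 * np.linalg.norm(M, 2) ** 2                    # L > L_f
    u = np.zeros(n)
    residuals = []
    for _ in range(iters):
        g = M.T @ (M @ u - y)                              # grad f(u_k)
        u_new = prox_step(u, g, L, alpha, m)
        chi = (u_new != 0).astype(float)                   # chi_{k+1}
        residuals.append(np.max(np.abs(alpha * u_new + chi * (g + L * (u_new - u)))))
        u = u_new
    return residuals


residuals = identity_residuals()
```

Because the identity holds exactly at each step, all that remains for the limit is to pass to the limit in the right-hand side, which is where complete continuity of \(\nabla f\) and Lemma 5.8 enter.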

Let us compare the properties of limit points \(\bar{u}\) with the necessary optimality conditions (5.2)–(5.4) of Theorem 5.2. The above result only proves the implication (5.3) for limit points \(\bar{u}\); it does not seem possible to prove the remaining two conditions (5.2) and (5.4). An obvious choice for s in those formulas would be any limit point of \((-\lambda _k)\). Under the assumption (5.11), we would get \(s<0\). However, it seems impossible to prove \(\Vert \bar{u}\Vert _0=\tau \): the mapping \(u\mapsto |u|_0\) is merely lower semicontinuous (and not continuous) at \(u=0\), so that we can only prove \(\Vert \bar{u}\Vert _0 \le \tau \). Moreover, it is not clear whether the complementarity condition (5.2) is satisfied in the limit. In order to prove (5.4), a natural idea would be to pass to the limit in condition (5.9). At best, we can expect to get

This differs from (5.4) because of the presence of the prox-parameter \(L>0\) in the inequality.

We close the section with the following auxiliary result, which was used in the proof of Theorem 5.7. Note that a direct application of Hölder's inequality only yields strong convergence in \(L^p(\Omega )\) for \(p<2\).

Lemma 5.8

Let \({\text {meas}}(\Omega )<\infty \). Let sequences \((\chi _k)\) and \((g_k)\) be given such that \(\Vert \chi _k\Vert _{L^\infty (\Omega )}\le 1\), \(\chi _k \rightarrow \bar{\chi }\) in \(L^1(\Omega )\), and \(g_k\rightarrow \bar{g}\) in \(L^2(\Omega )\). Then \(\chi _k g_k \rightarrow \bar{\chi } \bar{g}\) in \(L^2(\Omega )\).

Proof

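The sequences admit common subsequences \((g_{k_n})\) and \((\chi _{k_n})\) that converge pointwise almost everywhere, together with a dominating function \(a\in L^2(\Omega )\) with \(|g_{k_n}|\le a\) almost everywhere, see [3, Theorem 4.9]. Then also \(|\bar{g}|\le a\) and \(|\bar{\chi }|\le 1\) almost everywhere, so that \(|\chi _{k_n} g_{k_n} - \bar{\chi } \bar{g}|^2\le 4a^2\in L^1(\Omega )\), and \(\chi _{k_n} g_{k_n} \rightarrow \bar{\chi } \bar{g}\) in \(L^2(\Omega )\) by dominated convergence. Since the same reasoning applies to every subsequence of the original sequences, a subsequence-subsequence argument finishes the proof. \(\square \)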

Data availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Aubin, J.-P., Frankowska, H.: Set-Valued Analysis. Vol. 2. Systems & Control: Foundations & Applications. Birkhäuser Boston, Inc., Boston (1990). https://doi.org/10.1007/978-0-8176-4848-0

Berliocchi, H., Lasry, J.-M.: Intégrandes normales et mesures paramétrées en calcul des variations. Bulletin de la Société Mathématique de France 101, 129–184 (1973). https://doi.org/10.24033/bsmf.1755

Brezis, H.: Functional Analysis, Sobolev Spaces and Partial Differential Equations. Universitext. Springer, New York, pp. xiv+599 (2011)

Buttazzo, G., Maiale, F.P., Velichkov, B.: Shape optimization problems in control form. Atti Accad. Naz. Lincei Rend. Lincei Mat. Appl. 32(3), 413–435 (2021). https://doi.org/10.4171/rlm/942

Casas, E., Raymond, J.-P., Zidani, H.: Optimal control problem governed by semilinear elliptic equations with integral control constraints and pointwise state constraints. In: Control and estimation of distributed parameter systems (Vorau, 1996). Vol. 126. Internat. Ser. Numer. Math. Birkhäuser, Basel, pp. 89–102 (1998)

Casas, E.: Pontryagin’s principle for optimal control problems governed by semilinear elliptic equations. In: Control and Estimation of Distributed Parameter Systems: Nonlinear Phenomena (Vorau, 1993). Vol. 118. Internat. Ser. Numer. Math. Birkhäuser, Basel, pp. 97–114 (1994)

Diestel, J., Uhl Jr., J.J.: Vector measures. Mathematical Surveys, No. 15. With a foreword by B. J. Pettis. American Mathematical Society, Providence, pp. xiii+322 (1977)

Gotoh, J.-Y., Takeda, A., Tono, K.: DC formulations and algorithms for sparse optimization problems. Math. Program. Ser. B 169(1), 141–176 (2018). https://doi.org/10.1007/s10107-017-1181-0

Ioffe, A.D., Tihomirov, V.M.: Theorie der Extremalaufgaben. Translated from the Russian by Bernd Luderer. VEB Deutscher Verlag der Wissenschaften, Berlin, p. 399 (1979)

Ito, K., Kunisch, K.: Optimal control with \(L^{p} (\Omega )\), \(p \in [0, 1)\), control cost. SIAM J. Control Optim. 52(2), 1251–1275 (2014). https://doi.org/10.1137/120896529

Kalise, D., Kunisch, K., Sturm, K.: Optimal actuator design based on shape calculus. Math. Models Methods Appl. Sci. 28(13), 2667–2717 (2018). https://doi.org/10.1142/S0218202518500586

Kanzow, C., Raharja, A.B., Schwartz, A.: An augmented Lagrangian method for cardinality-constrained optimization problems. J. Optim. Theory Appl. 189(3), 793–813 (2021). https://doi.org/10.1007/s10957-021-01854-7

Natemeyer, C., Wachsmuth, D.: A proximal gradient method for control problems with non-smooth and non-convex control cost. Comput. Optim. Appl. 80(2), 639–677 (2021). https://doi.org/10.1007/s10589-021-00308-0

Raymond, J.P., Zidani, H.: Pontryagin’s principle for state-constrained control problems governed by parabolic equations with unbounded controls. SIAM J. Control Optim. 36(6), 1853–1879 (1998). https://doi.org/10.1137/S0363012996302470

Wachsmuth, D.: Iterative hard-thresholding applied to optimal control problems with \(L^{0} (\Omega )\) control cost. SIAM J. Control Optim. 57(2), 854–879 (2019). https://doi.org/10.1137/18M1194602

Acknowledgements

The author wants to thank an anonymous referee for pointing out a serious error in the submitted manuscript.

Funding

Open Access funding enabled and organized by Projekt DEAL.


Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information


This research was partially supported by the German Research Foundation DFG under project Grant Wa 3626/3-2.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wachsmuth, D. Optimal control problems with \(L^0(\Omega )\) constraints: maximum principle and proximal gradient method. Comput Optim Appl 87, 811–833 (2024). https://doi.org/10.1007/s10589-023-00456-5


DOI: https://doi.org/10.1007/s10589-023-00456-5