Abstract

We consider nonlinear model predictive control (MPC) with multiple competing cost functions. In each step of the scheme, a multiobjective optimal control problem with a nonlinear system and terminal conditions is solved. We propose an algorithm and give performance guarantees for the resulting MPC closed loop system. Thereby, we significantly simplify the assumptions made in the literature so far by assuming strict dissipativity and the existence of a compatible terminal cost for one of the competing objective functions only. We give conditions which ensure asymptotic stability of the closed loop and, what is more, obtain performance estimates for all cost criteria. Numerical simulations on various instances illustrate our findings. The proposed algorithm requires the selection of an efficient solution in each iteration; we therefore examine several selection rules and their impact on the results.

1 Introduction

Model predictive control (MPC) is a control method in which, at each sampling instant, an optimal control problem is solved in order to determine the control input for the next sampling interval. Besides important system theoretic properties such as stability and constraint satisfaction, the optimization-based nature of the method also allows one to derive performance estimates for the closed loop, measured in terms of the optimization objective used for computing the control. In this paper we consider general (often also called economic) MPC formulations, for which such performance estimates have been obtained in [2, 3, 4, 14, 15, 17] (see also Chapter 7 in [16] for a concise presentation). These references cover MPC schemes both with and without terminal constraints and costs. In both cases, strict dissipativity of the underlying optimal control problem is a crucial assumption.

In many practical applications, as in [19, 20, 25], it is desirable to consider not only a single but several cost criteria. For instance, in chemical process control, it may be desirable to stabilize a chemical process at a certain set point but at the same time approach the set point in such a way that the yield of the reaction is maximized (this is also the situation that we will illustrate in the numerical example in this paper). As these criteria might be conflicting, the resulting optimization problem is a multicriteria or multiobjective optimization problem [9]. In an MPC context, multiobjective optimization has been investigated, e.g., in [13, 18, 26,27,28] in different contexts. Particularly, [18, 26] present a multiobjective MPC algorithm for which some of the performance estimates known from classical MPC can be carried over, again using strict dissipativity as a main theoretical ingredient in the proofs. See also [5] for an application of this algorithm to a PDE-governed control problem.

Whenever multiple objective functions, i.e., cost criteria, are considered, one has to agree on an optimality notion used for such problems. A widely used concept is that of efficiency [9]: a feasible solution is called efficient if none of the objective functions can be improved by choosing another feasible solution without worsening the value of at least one of the remaining functions. The vector of the corresponding values of all the objective functions under consideration is called a nondominated point. These nondominated points form the nondominated set, which corresponds to the optimal value in the case of just one objective function. In general, as the objective functions are competing, there is no single efficient point which optimizes all objectives at the same time. Thus, the set of optimal solutions, called the efficient set, is in general not a singleton. In single-objective MPC, an optimal solution is typically chosen at each sampling instant to determine the control for the next step, and at least the optimal value of this optimal control problem is unique. In the case of several competing criteria, there typically exist infinitely many efficient solutions as potential choices, with non-unique values. Still, a certain efficient solution has to be chosen for the next step. This additional degree of freedom makes the extension of MPC to the multiobjective setting more challenging and requires new iterative approaches which guide this choice in order to guarantee convergence results and to allow for performance estimates. Within this work we reach this aim by introducing upper bounds on the possible choices, steered by the efficient solution selected in the first step. Note that we do not rely on scalarization approaches like a weighted sum of the objective functions as done in [23], as this would require prior knowledge, for instance about the preferred weights, which is in general not available. For a discussion of the advantages of direct methods compared to scalarization-based approaches we refer to [12].

The present paper builds on [18] and is also closely related to [28]. More precisely, we significantly simplify the assumptions made in [18], by requiring strict dissipativity and the existence of a terminal cost satisfying the usual conditions from the MPC literature only for one of the optimization objectives (by default, always for the first), rather than for all of them. Despite the relaxed conditions, we obtain essentially the same results as in [18] concerning the qualitative behavior and the averaged and non-averaged performance of the closed loop. For some of the results we can even simplify the proposed algorithm. Moreover, we develop conditions under which asymptotic stability of the closed loop (as opposed to mere convergence to a steady state) can be ensured. The close relation to [28] stems from the fact that, as in this reference, the first optimization objective plays a particular role and in particular determines the steady state to which the closed-loop solutions converge. However, the main difference of this paper to [28] is that we obtain performance estimates for all objectives.

As in [18], our theoretical performance estimates depend on the choice of the efficient solution in the first step of the MPC iteration, i.e., at initial time. This implies that these estimates do not consider the choices of the efficient solutions in the subsequent iterations. So far, the effects of these choices on the performance of the MPC closed-loop solution were not investigated. In this paper, in addition to the theoretical results, we use numerical examples to shed some light on these effects.

The paper is organized as follows: In Sect. 2 we introduce the problems we are considering along with basic definitions and properties from multiobjective optimization as well as stability results for the single-objective case. In Sect. 3 we introduce a new version of a multiobjective MPC algorithm for multiobjective optimal control problems with terminal constraints. In Sects. 4 and 5 we then show performance results for all cost criteria and a stability theorem. In Sect. 6 we illustrate our theoretical findings by numerical examples. Further, in Sect. 7 we investigate the influence of the choices made in the subsequent iterations of the algorithm by means of numerical examples. Sect. 8 concludes this paper.

2 Problem formulation and preliminaries

In the following we introduce the multiobjective optimal control problem we are considering. We give the basic definitions and properties from multiobjective optimization and we recall stability results for the single-objective case.

2.1 Multiobjective optimal control problems

We consider discrete time nonlinear systems of the form

$$\begin{aligned} x(k+1)=f(x(k),u(k)),\quad x(0)=x_0, \end{aligned}$$(2.1)

with \(f: \mathbb {R}^n\times \mathbb {R}^m\rightarrow \mathbb {R}^n\) continuous. We denote the solution of system (2.1) for a control sequence \({{\textbf {u}}}= (u(0),\dots , u(N-1))\in (\mathbb {R}^m)^{N}\) and initial value \(x_0\in \mathbb {R}^n\) by \(x_{{{\textbf {u}}}}(\cdot , x_0)\), or briefly by \(x(\cdot )\) if there is no ambiguity about the respective control sequence and the initial value.

We impose nonempty state and input constraint sets \(\mathbb {X}\subseteq \mathbb {R}^n\) and \(\mathbb {U}\subseteq \mathbb {R}^m\), respectively, with \(\mathbb {U}^{N}\) as the set of control sequences of length N, as well as a nonempty terminal constraint set \(\mathbb {X}_0\subseteq \mathbb {R}^n\), and define the set of admissible control sequences for \(x_0\in \mathbb {X}\) up to time \(N\in \mathbb {N}\) by \(\mathbb {U}^{N}(x_0):= \{ {{\textbf {u}}}\in \mathbb {U}^{N}\,|\, x_{{{\textbf {u}}}}(k, x_0)\in \mathbb {X}\; \forall \; k=1,\ldots ,N-1 \text { and}\; x_{{{\textbf {u}}}}(N, x_0)\in \mathbb {X}_0\}\). The terminal constraint \(x_{{{\textbf {u}}}}(N,x_0)\in \mathbb {X}_0\) can generally not be satisfied for all initial values \(x_0\in \mathbb {X}\). We therefore define the feasible set

$$\begin{aligned} \mathbb {X}_N:=\{x_0\in \mathbb {X}\,|\, \exists \, {{\textbf {u}}}\in \mathbb {U}^{N}:\; x_{{{\textbf {u}}}}(k, x_0)\in \mathbb {X}\; \forall \; k=1,\ldots ,N-1 \text { and}\; x_{{{\textbf {u}}}}(N, x_0)\in \mathbb {X}_0\}, \end{aligned}$$(2.2)

noting that \(\mathbb {U}^{N}(x_0)\ne \emptyset\) if and only if \(x_0\in \mathbb {X}_N\). Assumption 3.1 (iv), below, will guarantee that \(\mathbb {X}_N\ne \emptyset\) for all \(N\ge 1\). Further, a pair \((x^{e},u^{e})\in \mathbb {X}\times \mathbb {U}\) is called an equilibrium if \(x^{e}=f(x^{e},u^{e})\) holds.
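To make the sets \(\mathbb {U}^{N}(x_0)\) and \(\mathbb {X}_N\) concrete, the following minimal sketch enumerates them by brute force for a toy problem. The scalar dynamics \(x(k+1)=x(k)+u(k)\), the finite sets `U`, `X`, `X0` and all function names are assumptions made purely for illustration and are not taken from the paper.

```python
from itertools import product

U = (-1, 0, 1)        # hypothetical finite control set
X = range(-3, 4)      # hypothetical state constraint set {-3, ..., 3}
X0 = {0}              # hypothetical terminal constraint set


def f(x, u):
    """Toy dynamics x(k+1) = x(k) + u(k) (illustrative assumption)."""
    return x + u


def admissible_sequences(x0, N):
    """All u in U^N with x(k) in X for k = 1..N-1 and x(N) in X0."""
    result = []
    for u in product(U, repeat=N):
        x, ok = x0, True
        for k, uk in enumerate(u, start=1):
            x = f(x, uk)
            if (k < N and x not in X) or (k == N and x not in X0):
                ok = False
                break
        if ok:
            result.append(u)
    return result


def feasible_set(N):
    """Initial values in X that admit at least one admissible sequence."""
    return [x0 for x0 in X if admissible_sequences(x0, N)]
```

In this toy setting the feasible set grows with the horizon until it fills the whole state constraint set, which mirrors the observation that the terminal constraint cannot be met from every initial value for short horizons.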

For given continuous stage cost \(\ell _{1}:\mathbb {X}\times \mathbb {U}\rightarrow \mathbb {R}\) and continuous terminal cost \(F_{1}:\mathbb {X}_0\rightarrow \mathbb {R}_{\ge 0}\), we define the cost functional \(J_{1}^{N}:\mathbb {X}\times \mathbb {U}^{N}\rightarrow \mathbb {R}\) by

$$\begin{aligned} J_{1}^{N}(x_0,{{\textbf {u}}}):=\sum _{k=0}^{N-1}\ell _{1}(x_{{{\textbf {u}}}}(k,x_0),u(k))+F_{1}(x_{{{\textbf {u}}}}(N,x_0)) \end{aligned}$$

and for \(i\in \{2,\dots ,s\}, s\ge 2\) we define continuous stage costs \(\ell _{i}:\mathbb {X}\times \mathbb {U}\rightarrow \mathbb {R}\) and the corresponding cost functionals \(J_{i}^{N}:\mathbb {X}\times \mathbb {U}^{N}\rightarrow \mathbb {R}\) by

$$\begin{aligned} J_{i}^{N}(x_0,{{\textbf {u}}}):=\sum _{k=0}^{N-1}\ell _{i}(x_{{{\textbf {u}}}}(k,x_0),u(k)) \end{aligned}$$

for horizon \(N\in \mathbb {N}\) with \(N\ge 2\). Here, \(F_{1}\) is defined on the terminal constraint set \(\mathbb {X}_0\subseteq \mathbb {X}\) and we need to ensure that \(x_{{{\textbf {u}}}}(N,x_0)\in \mathbb {X}_0\), i.e., \(J^{N}_{1}(x_0,{{\textbf {u}}})\) is well defined for \(x_0\in \mathbb {X}_N\) and \({{\textbf {u}}}\in \mathbb {U}^{N}(x_0)\), and we will only use it for such arguments in the remainder of this paper.

We remark that we do not need any terminal costs for \(i\in \{2,\dots ,s\}\), which significantly simplifies the design compared to [18, 26]. We aim at minimizing all cost functionals \(J_{1}^{N},\dots , J_s^{N}\) at the same time for given \(x_0\) w.r.t. \({{\textbf {u}}}\) and along a solution of (2.1). Hence, we obtain a multiobjective optimal control problem with terminal constraints and costs

$$\begin{aligned} \min _{{{\textbf {u}}}\in \mathbb {U}^{N}(x_0)}\; J^{N}(x_0,{{\textbf {u}}})=\big (J_{1}^{N}(x_0,{{\textbf {u}}}),\dots ,J_{s}^{N}(x_0,{{\textbf {u}}})\big )\quad \text {s.t. }\; x(k+1)=f(x(k),u(k)),\; k=0,\dots ,N-1,\; x(0)=x_0. \end{aligned}$$(MO OCP)

Throughout this paper, we will only consider multiobjective optimal control problems with terminal constraints of the form (MO OCP) with \(s\ge 2\) and \(N\ge 2\).

2.2 Basics of multiobjective optimization

In the presence of multiple competing objectives we need an appropriate notion of optimality. In general, there will not be one optimal \({{\textbf {u}}}\) such that all cost functionals are minimized simultaneously. The formalization we will use in this paper is based on the componentwise ordering in the image space \(\mathbb {R}^s\) and is summarized in the next definition. For this definition and an introduction to multiobjective optimization we refer, for instance, to [9] or the recent survey [12].

Definition 2.1

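Let N be the horizon length. A sequence \({{\textbf {u}}}^\star \in \mathbb {U}^{N}(x_0)\) is called efficient for (MO OCP) with \(x_0\in \mathbb {X}\) if there is no \({{\textbf {u}}}\in \mathbb {U}^{N}(x_0)\) such that

$$\begin{aligned} J_{i}^{N}(x_0,{{\textbf {u}}})\le J_{i}^{N}(x_0,{{\textbf {u}}}^\star )\;\; \forall \, i\in \{1,\dots ,s\}\quad \text {and}\quad J_{j}^{N}(x_0,{{\textbf {u}}})< J_{j}^{N}(x_0,{{\textbf {u}}}^\star )\;\; \text {for at least one } j\in \{1,\dots ,s\}. \end{aligned}$$

The objective value \(J^{N}(x_0,{{\textbf {u}}}^\star )=(J_{1}^{N}(x_0,{{\textbf {u}}}^\star ),\dots ,J_s^{N}(x_0,{{\textbf {u}}}^\star ))\) is called nondominated.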
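For a finite list of candidate cost vectors, the componentwise notion of efficiency can be checked directly. The following sketch, with hypothetical helper names `dominates` and `nondominated`, filters the nondominated points from such a list; it only illustrates the definition and is not a method for solving (MO OCP).

```python
def dominates(a, b):
    """True if a is no worse than b in every component and strictly better in one."""
    return all(p <= q for p, q in zip(a, b)) and any(p < q for p, q in zip(a, b))


def nondominated(values):
    """Return the cost vectors in `values` that no other vector dominates."""
    return [v for v in values if not any(dominates(w, v) for w in values)]


# Five hypothetical cost vectors (J_1, J_2); the last two are dominated.
candidates = [(1, 3), (2, 2), (3, 1), (2, 3), (3, 3)]
front = nondominated(candidates)
```

Here `front` contains the three mutually incomparable vectors, which illustrates that several nondominated values typically coexist.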

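Usually, there is not a unique efficient solution of (MO OCP); rather, there exists a set of such solutions and of the corresponding nondominated values. The set of all efficient solutions of length N for initial value \(x_0\in \mathbb {X}_N\) will be denoted by \(\mathbb {U}_{\mathcal {P}}^{N}(x_0)\). We define the set of attainable values by

$$\begin{aligned} {\mathcal {J}}^{N}(x_0):=\{(J_{1}^{N}(x_0,{{\textbf {u}}}),\dots ,J_{s}^{N}(x_0,{{\textbf {u}}}))\,|\, {{\textbf {u}}}\in \mathbb {U}^{N}(x_0)\} \end{aligned}$$

and the nondominated set by

$$\begin{aligned} {\mathcal {J}}^{N}_{\mathcal {P}}(x_0):=\{(J_{1}^{N}(x_0,{{\textbf {u}}}),\dots ,J_{s}^{N}(x_0,{{\textbf {u}}}))\,|\, {{\textbf {u}}}\in \mathbb {U}_{\mathcal {P}}^{N}(x_0)\}. \end{aligned}$$

This set is often referred to as the Pareto front. In this paper, the \(\min\)-operator is defined as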

and, accordingly

We now provide basic definitions and results from the theory of multiobjective optimization, adapted from [9, 24] to our setting.

Definition 2.2

(External stability) The set \({\mathcal {J}}^{N}_{\mathcal {P}}(x_0)\) is called externally stable for \({\mathcal {J}}^{N}(x_0)\) if for all \(y\in {\mathcal {J}}^{N}(x_0)\) there is \(y_{\mathcal {P}}\in {\mathcal {J}}^{N}_{\mathcal {P}}(x_0)\) such that \(y\ge y_{\mathcal {P}}\) holds componentwise.
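External stability can be tested directly when both sets are finite. The sketch below uses hypothetical names and hand-picked data. For a finite nonempty set of attainable values the full nondominated set is always externally stable, so the second call deliberately passes an incomplete front to show what a violation looks like.

```python
def leq(a, b):
    """Componentwise a <= b."""
    return all(p <= q for p, q in zip(a, b))


def is_externally_stable(front, values):
    """Every attainable value y must satisfy y >= y_P for some y_P in `front`."""
    return all(any(leq(y_p, y) for y_p in front) for y in values)


values = [(1, 3), (2, 2), (3, 1), (2, 3), (3, 3)]   # hypothetical attainable values
full_front = [(1, 3), (2, 2), (3, 1)]                # their nondominated points
stable = is_externally_stable(full_front, values)
unstable = is_externally_stable([(1, 3)], values)    # (2, 2) has no point below it
```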

Definition 2.3

(Cone-Compactness) The set \({\mathcal {J}}^{N}(x_0)\) is called \(\mathbb {R}_{\ge 0}^s\)-compact if for all \(y\in {\mathcal {J}}^{N}(x_0)\) the set \((y-\mathbb {R}_{\ge 0}^s)\cap {\mathcal {J}}^{N}(x_0)\) is compact.

Here, we write \(z-\mathbb {R}_{\ge 0}^s\) for the difference of the sets \(\{z\}\) and \(\mathbb {R}_{\ge 0}^s:=\{y\in \mathbb {R}^s\mid y_{i}\ge 0\; \forall i=1,\dots ,s\}\) in the Minkowski sense. The next theorem states a condition for external stability of the set \({\mathcal {J}}^{N}_{\mathcal {P}}(x_0)\) and a proof can be found in [9, 24].

Theorem 2.4

Given a horizon \(N\in \mathbb {N}\) and an initial value \(x_0\in \mathbb {X}_N\). If \({\mathcal {J}}^{N}(x_0)\ne \emptyset\) and \({\mathcal {J}}^{N}(x_0)\) is \(\mathbb {R}_{\ge 0}^s\)-compact, then the set \({\mathcal {J}}^{N}_{\mathcal {P}}(x_0)\) is externally stable for \({\mathcal {J}}^{N}(x_0)\).

Since the assumptions of Theorem 2.4 are difficult to verify in practice, the next lemma provides easily checkable conditions for external stability, which we will need for feasibility below.

Lemma 2.5

Let \(\mathbb {U}\) be compact, \(\mathbb {X}\) and \(\mathbb {X}_0\) be closed and let \(x\in \mathbb {X}_N\) and \(N\in \mathbb {N}\). Then the set \({\mathcal {J}}^{N}_{\mathcal {P}}(x)\) is externally stable for \({\mathcal {J}}^{N}(x)\).

Proof

Analogous to the proof of Lemma 4.8 in [26] or Lemma 2.5 in [18]. \(\square\)

In the single-objective case an immediate consequence of the dynamic programming principle (DPP) is that tails of optimal control sequences are again optimal control sequences. The same result holds for efficient solutions and can be found in [26, Lemma 4.1].

Lemma 2.6

(Tails of efficient solutions are efficient solutions) Let \(K<N\). If \({{\textbf {u}}}^{\star ,N}\in \mathbb {U}_{\mathcal {P}}^{N}(x_0)\), then \({{\textbf {u}}}^{\star ,K}\in \mathbb {U}_{\mathcal {P}}^{N-K}(x_{{{\textbf {u}}}^{\star ,N}}(K,x_0))\) with \({{\textbf {u}}}^{\star ,K}:={{\textbf {u}}}^{\star ,N}(\cdot +K)\) for all \(K<N\), where \({{\textbf {u}}}^{\star ,N}(\cdot +K):=(u^{\star ,N}(K),u^{\star ,N}(K+1),\dots ,u^{\star ,N}(N-1))\).
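Lemma 2.6 can be checked by brute force on a small instance. The sketch below assumes a toy problem (dynamics \(x^+=x+u\), integer controls, terminal set \(\{0\}\), no terminal cost, stage costs \(\ell _1=x^2+u^2\) and \(\ell _2=(x-1)^2\)); none of these choices come from the paper. For every efficient sequence it verifies that each tail is efficient for the shortened horizon started at the corresponding state.

```python
from itertools import product

U = (-1, 0, 1)          # hypothetical finite control set
X_MIN, X_MAX = -3, 3    # hypothetical state constraints; terminal set is {0}


def f(x, u):
    return x + u


def costs(x0, u):
    """(J_1, J_2) for ell_1 = x^2 + u^2 and ell_2 = (x - 1)^2, no terminal cost."""
    j1 = j2 = 0
    x = x0
    for uk in u:
        j1 += x * x + uk * uk
        j2 += (x - 1) ** 2
        x = f(x, uk)
    return (j1, j2)


def admissible(x0, u):
    x = x0
    for k, uk in enumerate(u, start=1):
        x = f(x, uk)
        if k < len(u) and not X_MIN <= x <= X_MAX:
            return False
    return x == 0


def efficient(x0, N):
    cands = [u for u in product(U, repeat=N) if admissible(x0, u)]
    vals = {u: costs(x0, u) for u in cands}

    def dominated(u):
        return any(
            all(a <= b for a, b in zip(vals[v], vals[u])) and vals[v] != vals[u]
            for v in cands
        )

    return [u for u in cands if not dominated(u)]


def tails_are_efficient(x0, N):
    """Check the tail property for every efficient sequence and every 1 <= K < N."""
    for u in efficient(x0, N):
        x = x0
        for K in range(1, N):
            x = f(x, u[K - 1])
            if u[K:] not in efficient(x, N - K):
                return False
    return True
```

Such an enumeration is only viable for tiny finite problems, but it gives a quick sanity check of the statement.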

2.3 Auxiliary results on stability and dissipativity

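We will make use of comparison functions defined by

$$\begin{aligned} {\mathcal {K}}&:=\{\alpha :\mathbb {R}_{\ge 0}\rightarrow \mathbb {R}_{\ge 0}\,|\, \alpha \text { is continuous and strictly increasing with } \alpha (0)=0\},\\ {\mathcal {K}}_\infty&:=\{\alpha \in {\mathcal {K}}\,|\, \alpha \text { is unbounded}\}. \end{aligned}$$

Moreover, with \({\mathcal {B}}_\varepsilon (x_0)\subseteq \mathbb {R}^n\) we denote the open ball with radius \(\varepsilon >0\) around \(x_0\).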

The MPC Algorithms 1 and 2, below, generate a solution trajectory that is referred to as the MPC closed loop. For analyzing its stability, we recall the definition of a uniform time-varying Lyapunov function, which can be found in [16, Definition 2.21]. To this end, we consider a general time-varying discrete-time dynamical system given by

$$\begin{aligned} x(k+1)=g(k,x(k)) \end{aligned}$$(2.5)

with \(g:\mathbb {N}_0\times \mathbb {X}\rightarrow \mathbb {X}\), initial condition \(x(0)=x_0\), and state space \(\mathbb {X}\subseteq \mathbb {R}^n\).

Definition 2.7

(Uniform time-varying Lyapunov function) Consider system (2.5), an equilibrium \(x^{e}\in \mathbb {X}\), i.e., \(x^{e}=g(k,x^{e})\), \(k\in \mathbb {N}\), subsets of the state space \(S(k)\subseteq \mathbb {X}\), \(k\in \mathbb {N}_0\), and define \({\mathcal {S}}=\{(k,x)\mid k\in \mathbb {N}_0, x\in S(k)\}\). A function \(V:{\mathcal {S}}\rightarrow \mathbb {R}_{\ge 0}\) is called uniform time-varying Lyapunov function on \({\mathcal {S}}\) if the following conditions are satisfied:

(i) There exist functions \(\alpha _{1}, \alpha _2\in {\mathcal {K}}_\infty\) such that

$$\begin{aligned} \alpha _{1}(\left\Vert x-x^{e}\right\Vert )\le V(k,x)\le \alpha _2(\left\Vert x-x^{e}\right\Vert ) \end{aligned}$$

holds for all \((k,x)\in {\mathcal {S}}\).

(ii) There exists a function \(\alpha _V\in {\mathcal {K}}\) such that

$$\begin{aligned} V(k+1, g(k,x))\le V(k,x)-\alpha _V(\left\Vert x-x^{e}\right\Vert ) \end{aligned}$$

holds for all \(k\in \mathbb {N}_0\) and \(x\in S(k)\) with \(g(k,x)\in S(k+1)\).

The following theorem shows that the existence of such a Lyapunov function ensures asymptotic stability. For a proof we refer to [16, Theorem 2.22] and for the definition of the stability conditions used in its formulation to [16, Definitions 2.14 and 2.16].

Theorem 2.8

Let \(x^{e}\) be an equilibrium of system (2.5), i.e., \(x^{e}=g(k,x^{e})\), \(k\in \mathbb {N}\), and assume there exists a uniform time-varying Lyapunov function V on a set \({\mathcal {S}}\subset \mathbb {N}_0\times \mathbb {R}^n\) as defined in Definition 2.7. If each S(k) contains a ball \({\mathcal {B}}_\nu (x^{e})\) with radius \(\nu >0\) with \(g(k, x)\in S(k+1)\) for all \(x\in {\mathcal {B}}_\nu (x^{e})\), then \(x^{e}\) is locally asymptotically stable with \(\eta =\alpha _2^{-1}\circ \alpha _{1}(\nu )\). If the family of sets S(k) is forward invariant (i.e., if \(g(k,x)\in S(k+1)\) for all \((k,x)\in {\mathcal {S}}\)), then \(x^{e}\) is asymptotically stable on S(k). If \(S(k)=\mathbb {R}^n\) holds for all \(k\in \mathbb {N}_0\), then \(x^{e}\) is globally asymptotically stable.

Recent research has established close connections between strict dissipativity and both the stability and near-optimality of closed-loop solutions of model predictive control schemes. We will also use this notion here. To this end, we introduce strict dissipativity for a single-objective optimal control problem, meaning we consider only one cost functional, see, for instance, [16, Sect.8.2].

Definition 2.9

(Strict dissipativity) A single-objective optimal control problem with stage cost \(\ell _{i}\) is strictly dissipative at an equilibrium \((x^{e},u^{e})\) if there exists a storage function \(\lambda _{i}:\mathbb {X}\rightarrow \mathbb {R}\) bounded from below and satisfying \(\lambda _{i}(x^{e})=0\), and a function \(\rho \in {\mathcal {K}}_\infty\) such that for all \((x,u)\in \mathbb {X}\times \mathbb {U}\) the inequality

$$\begin{aligned} \ell _{i}(x,u)-\ell _{i}(x^{e},u^{e})+\lambda _{i}(x)-\lambda _{i}(f(x,u))\ge \rho (\left\Vert x-x^{e}\right\Vert ) \end{aligned}$$

holds.
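A dissipation inequality of this kind can be probed numerically on a grid before attempting a proof. The sketch below assumes a toy problem that is not from the paper: dynamics \(f(x,u)=2x+u\), stage cost \(\ell (x,u)=u^2\), equilibrium \((0,0)\), candidate storage function \(\lambda (x)=-x^2/2\) and \(\rho (r)=r^2/2\). For these choices the left-hand side minus \(\rho\) equals \((x+u)^2+u^2/2\), so the margin should be nonnegative. A grid check of this type is only a necessary test and proves nothing outside the sampled points.

```python
def f(x, u):
    return 2.0 * x + u            # toy dynamics (assumption)


def ell(x, u):
    return u * u                  # toy stage cost (assumption)


def storage(x):
    return -0.5 * x * x           # candidate storage function lambda (assumption)


def rho(r):
    return 0.5 * r * r            # candidate K_infinity bound (assumption)


def dissipation_margin(x, u, xe=0.0, ue=0.0):
    """Left-hand side of the dissipation inequality minus rho(|x - xe|)."""
    lhs = ell(x, u) - ell(xe, ue) + storage(x) - storage(f(x, u))
    return lhs - rho(abs(x - xe))


grid_x = [-2.0 + 0.1 * i for i in range(41)]   # samples of X = [-2, 2]
grid_u = [-3.0 + 0.1 * j for j in range(61)]   # samples of U = [-3, 3]
worst = min(dissipation_margin(x, u) for x in grid_x for u in grid_u)
```

A clearly negative value of `worst` would rule out the candidate pair of storage function and bound, whereas a nonnegative value merely fails to refute it.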

3 A new multiobjective MPC algorithm

In this section, we introduce a multiobjective model predictive control (MO MPC) scheme that relies on solving multiobjective optimal control problems of the type (MO OCP). Since in the multiobjective case there are several “optimal” (efficient) solutions, we have to adapt the “standard” MPC scheme, see, e.g., [16]. We build on the results in [18, 26], which we recall at the appropriate places for completeness. We introduce a simplified version of the algorithm presented in [18], in which we allow for more general problems of the type (MO OCP) than in [18], and we dispense with the restrictive assumption of the existence of stabilizing stage and terminal costs in all objective functions. Rather, we require strict dissipativity of the optimal control problem and the existence of a compatible terminal cost for only one stage cost. In particular, the existence of terminal costs that are jointly compatible with all the stage costs is no longer required. For two objectives, the resulting algorithm bears similarities with the one in [27], where one stabilizing and one economic objective were considered. In this sense, we merge the ideas presented in [18, 27], but at the same time we also extend them and, in addition to stability results, we also provide performance estimates for all cost criteria \(J_{i}^{N}\), \(i\in \{1,\dots ,s\}\), which are not present in [27]. Throughout, we adopt the convention that the optimal control problem is strictly dissipative for the first stage cost \(\ell _{1}\).

Assumption 3.1

We assume that

(i) there is an equilibrium \((x^{e},u^{e})\in \mathbb {X}\times \mathbb {U}\) such that \(f(x^{e},u^{e})=x^{e}\)

(ii) there is a storage function \(\lambda _{1}:\mathbb {X}\rightarrow \mathbb {R}\) bounded from below with \(\lambda _{1}(x^{e})=0\) and a function \(\alpha _{\ell ,1}\in {\mathcal {K}}_\infty\) such that

$$\begin{aligned}&\ell _{1}(x,u)-\ell _{1}(x^{e},u^{e})+\lambda _{1}(x)-\lambda _{1}(f(x,u))\ge \alpha _{\ell ,1}(\left\Vert x-x^{e}\right\Vert \nonumber \\&\quad + \left\Vert u-u^e\right\Vert )\quad \forall (x,u)\in \mathbb {X}\times \mathbb {U}\end{aligned}$$(3.1)

(iii) \(\ell _{i}\) is continuous for all \(i=1,\dots ,s\)

(iv) \(x^{e}\in \mathbb {X}_0\) and there exists a local feedback \(\kappa :\mathbb {X}_0\rightarrow \mathbb {U}\) satisfying

(a) \(f(x,\kappa (x))\in \mathbb {X}_0\) for all \(x\in \mathbb {X}_0\)

(b) \(\forall x\in \mathbb {X}_0\): \(F_{1}(f(x,\kappa (x)))+\ell _{1}(x,\kappa (x))\le F_{1}(x) +\ell _{1}(x^{e},u^{e})\)

(v) the sets \({\mathcal {J}}^{N}_{\mathcal {P}}(x)\) are externally stable for \({\mathcal {J}}^{N}(x)\) for each \(x\in \mathbb {X}_N\), with \(\mathbb {X}_N\) from (2.2).

We note that item (iv) of this assumption ensures \(\mathbb {X}_0\subseteq \mathbb {X}_N\) for all \(N\ge 1\) and thus \(\mathbb {X}_N\ne \emptyset\). Item (iv b) is usually referred to as compatibility of the terminal cost \(F_{1}\).
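The invariance and compatibility conditions in item (iv) can be checked on sample points for a candidate triple of terminal set, local feedback and terminal cost. The sketch below assumes a toy setting that is not from the paper: \(f(x,u)=x+u\), \(\ell _1(x,u)=x^2+u^2\), \(\mathbb {X}_0=[-1,1]\), \(\kappa (x)=-x/2\) and \(F_1(x)=cx^2\). For these choices the compatibility inequality reduces to \(c/4+5/4\le c\), i.e., \(c\ge 5/3\).

```python
def f(x, u):
    return x + u                       # toy dynamics (assumption)


def ell1(x, u):
    return x * x + u * u               # toy stage cost (assumption)


def kappa(x):
    return -0.5 * x                    # candidate local feedback (assumption)


def F1(x, c):
    return c * x * x                   # candidate terminal cost (assumption)


def compatible(c, grid, xe=0.0, ue=0.0, tol=1e-12):
    """Check invariance of X_0 = [-1, 1] and the terminal cost inequality on `grid`."""
    for x in grid:
        x_next = f(x, kappa(x))
        invariant = -1.0 <= x_next <= 1.0
        decrease = F1(x_next, c) + ell1(x, kappa(x)) <= F1(x, c) + ell1(xe, ue) + tol
        if not (invariant and decrease):
            return False
    return True


grid = [i / 100 for i in range(-100, 101)]   # samples of X_0 = [-1, 1]
ok_c2 = compatible(2.0, grid)                # c = 2 satisfies c >= 5/3
ok_c1 = compatible(1.0, grid)                # c = 1 violates the inequality
```

As with any sampled check, a positive result only supports the candidate and does not replace the analytic verification.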

Assumption 3.1 states that we only require strict dissipativity for the optimal control problem with the first stage cost, while for the remaining \(s-1\) stage costs we neither impose any conditions nor require the existence of terminal costs. We remark that stabilizing, i.e., positive definite stage costs are a special case of stage costs for which strict dissipativity holds with \(\lambda \equiv 0\). In the following, under Assumption 3.1, we provide performance estimates for all cost criteria \(J_{i}^{N}\) and show asymptotic stability of the closed loop.

Algorithm 1, below, gives a variant of Algorithm 2 in [18], in which the constraint (3.2) is only imposed for the first cost criterion \(J_{1}^{N}\), instead of for all cost criteria \(J_{i}^{N}\), \(i=1,\ldots ,s\), as in [18]. By using the local feedback \(\kappa\) as part of the comparison control sequence in Step (2), we enforce the trajectory to converge by means of the first objective function.

We have visualized the bound in (3.2) in Fig. 1, where the dashed line represents the bound resulting from \({{\textbf {u}}}_{x(k)}^{N}\) and the red line the set of nondominated points of (MO OCP) satisfying this bound. We would like to stress that we do not constrain the second objective (or, for that matter, any other objectives that may appear in the formulation). We remark that for the subsequent theoretical results it is not important which nondominated point on the red part of the nondominated set in Fig. 1 we choose in step (1), as we provide performance bounds which hold for any feasible choice of \({{\textbf {u}}}_{x(k)}^{\star }\) and only depend on the choice of \({{\textbf {u}}}_{x(0)}^{\star }\) in step (0). However, this does not mean that the choices for \(k\ge 1\) do not affect the MPC closed-loop solutions. In Sect. 7, we will thus investigate the development of the nondominated sets and the effect of different selection rules for the efficient point via numerical simulations. Before we do this, we show in the subsequent sections that this MO MPC algorithm has certain desirable properties: feasibility, convergence and performance results. We start with feasibility and convergence of the closed-loop trajectory and with performance results for the cost criterion \(J_{1}\). We note that the convergence of the closed loop and performance results were already shown in [18, 26] for the algorithm with stronger constraints.
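The algorithm listing itself is not reproduced in this text. The following is a minimal sketch of the iteration as it is described above: an efficient solution is selected in step (0); for \(k\ge 1\) only efficient solutions whose \(J_1^N\) value does not exceed that of a comparison sequence are eligible in step (1); and the comparison sequence is obtained in step (2) by shifting the previous solution and appending the local feedback \(\kappa\). The toy problem, the brute-force enumeration and all names (`mo_mpc`, `min_j2`, etc.) are assumptions for illustration and should not be read as the authors' implementation.

```python
from itertools import product

U = (-1, 0, 1)          # hypothetical finite control set
X_MIN, X_MAX = -3, 3    # hypothetical state constraints
N = 3                   # horizon; terminal set X_0 = {0}, terminal cost F_1 = 0


def f(x, u):
    return x + u


def kappa(x):
    return 0            # local feedback on X_0 = {0}


def trajectory(x0, u):
    xs = [x0]
    for uk in u:
        xs.append(f(xs[-1], uk))
    return xs


def cost(x0, u):
    """(J_1^N, J_2^N) with ell_1 = x^2 + u^2 and ell_2 = (x - 1)^2."""
    xs = trajectory(x0, u)
    j1 = sum(x * x + uk * uk for x, uk in zip(xs, u))
    j2 = sum((x - 1) ** 2 for x, _ in zip(xs, u))
    return (j1, j2)


def admissible(x0, u):
    xs = trajectory(x0, u)
    return all(X_MIN <= x <= X_MAX for x in xs[1:-1]) and xs[-1] == 0


def efficient(x0):
    cands = [u for u in product(U, repeat=N) if admissible(x0, u)]
    vals = {u: cost(x0, u) for u in cands}

    def dominated(u):
        return any(
            all(a <= b for a, b in zip(vals[v], vals[u])) and vals[v] != vals[u]
            for v in cands
        )

    return [u for u in cands if not dominated(u)]


def min_j2(cands, x):
    """One possible selection rule: smallest value of the second objective."""
    return min(cands, key=lambda u: cost(x, u)[1])


def mo_mpc(x0, steps, select):
    x, closed_loop = x0, []
    u_star = select(efficient(x), x)                       # step (0)
    for _ in range(steps):
        closed_loop.append((x, u_star[0]))                 # apply first control
        x_end = trajectory(x, u_star)[-1]
        u_cmp = u_star[1:] + (kappa(x_end),)               # step (2): comparison sequence
        x = f(x, u_star[0])
        bound = cost(x, u_cmp)[0]                          # bound on J_1 only
        allowed = [u for u in efficient(x) if cost(x, u)[0] <= bound]
        u_star = select(allowed, x)                        # step (1)
    return closed_loop, x


closed_loop, x_final = mo_mpc(2, 6, min_j2)
j1_closed = sum(x * x + u * u for x, u in closed_loop)
j1_initial = cost(2, min_j2(efficient(2), 2))[0]
```

In this toy run the accumulated closed-loop cost `j1_closed` does not exceed `j1_initial`, the \(J_1^N\) value of the solution chosen in step (0), even though the selection rule favours the second objective in every iteration.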

4 Performance results

Within this section we examine the properties of the closed loop trajectory which results from Algorithm 1. We obtain convergence for the trajectory as well as performance estimates for all cost criteria.

4.1 Performance estimate of \(J_{1}^{N}\)

In this subsection we state a performance theorem for the first cost criterion \(J_{1}^{N}\), which guarantees a bounded performance of the feedback \(\mu ^{N}\) defined in Algorithm 1. To this end, we first show a performance estimate for the so-called rotated cost function \({\widetilde{J}}_{1}^{N}\) and then conclude the trajectory convergence as well as the main performance theorem of this section.

First, we recall the classical definitions of rotated costs.

Definition 4.1

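For \(x\in \mathbb {X}\) and \(u\in \mathbb {U}\) we define the rotated stage cost

$$\begin{aligned} {\widetilde{\ell }}_{1}(x,u):=\ell _{1}(x,u)-\ell _{1}(x^{e},u^{e})+\lambda _{1}(x)-\lambda _{1}(f(x,u)) \end{aligned}$$

with equilibrium \((x^{e},u^{e})\) and storage function \(\lambda _{1}\) from Assumption 3.1, and, for \(x\in \mathbb {X}_0\), the rotated terminal cost

$$\begin{aligned} {\widetilde{F}}_{1}(x):=F_{1}(x)+\lambda _{1}(x). \end{aligned}$$

The corresponding cost functional is given by

$$\begin{aligned} {\widetilde{J}}_{1}^{N}(x_0,{{\textbf {u}}}):=\sum _{k=0}^{N-1}{\widetilde{\ell }}_{1}(x_{{{\textbf {u}}}}(k,x_0),u(k))+{\widetilde{F}}_{1}(x_{{{\textbf {u}}}}(N,x_0)). \end{aligned}$$

We remark that, under Assumption 3.1, for all \(x\in \mathbb {X}\), \(u\in \mathbb {U}\) the rotated stage cost satisfies \({\widetilde{\ell }}_{1}(x,u)\ge \alpha _{\ell , 1}(\left\Vert x-x^{e}\right\Vert +\left\Vert u-u^{e}\right\Vert )\) with \(\alpha _{\ell , 1}\) from Assumption 3.1 (ii) and, thus, \({\widetilde{\ell }}_{1}(x,u)\ge 0\). We emphasize that for implementing the MPC algorithms from this paper the rotated stage cost \({\widetilde{\ell }}_{1}\) and the storage function \(\lambda _{1}\) do not need to be known, as we always optimize the original stage cost \(\ell _{1}\), whereas \({\widetilde{\ell }}_{1}\) is only needed as an auxiliary cost for the subsequent analysis.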

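Next, we use the definitions above to derive some relations between rotated and classical cost. By telescoping the storage function terms, it is easy to check that the relation

$$\begin{aligned} {\widetilde{J}}_{1}^{N}(x,{{\textbf {u}}})=J_{1}^{N}(x,{{\textbf {u}}})+\lambda _{1}(x)-N\ell _{1}(x^{e},u^{e}) \end{aligned}$$(4.4)

and the equalities \({\widetilde{\ell }}_{1}(x^{e},u^{e})=0\) and \({\widetilde{F}}_{1}(x^{e})=0\) hold. Moreover, the inequality

$$\begin{aligned} {\widetilde{F}}_{1}(f(x,\kappa (x)))+{\widetilde{\ell }}_{1}(x,\kappa (x))\le {\widetilde{F}}_{1}(x) \end{aligned}$$

holds for all \(x\in \mathbb {X}_0\) and \(\kappa\) from Assumption 3.1 (iv).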
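The relation between rotated and original cost is a telescoping identity and can be confirmed with exact arithmetic. The sketch below assumes the standard rotated quantities, i.e., \({\widetilde{\ell }}_{1}(x,u)=\ell _{1}(x,u)-\ell _{1}(x^{e},u^{e})+\lambda _{1}(x)-\lambda _{1}(f(x,u))\) and \({\widetilde{F}}_{1}=F_{1}+\lambda _{1}\), and a toy problem (dynamics, costs and storage function are illustrative assumptions, not taken from the paper). It checks that the rotated functional equals the original one plus \(\lambda _1(x_0)\) minus \(N\ell _1(x^e,u^e)\).

```python
from fractions import Fraction

XE, UE = 0, 0                       # equilibrium of the toy system


def f(x, u):
    return 2 * x + u                # toy dynamics (assumption)


def ell1(x, u):
    return u * u + 1                # toy stage cost with ell1(XE, UE) = 1


def lam(x):
    return Fraction(-x * x, 2)      # toy storage function with lam(XE) = 0


def F1(x):
    return x * x                    # toy terminal cost


def ell1_rot(x, u):
    """Rotated stage cost (standard definition, assumed here)."""
    return ell1(x, u) - ell1(XE, UE) + lam(x) - lam(f(x, u))


def F1_rot(x):
    """Rotated terminal cost (standard definition, assumed here)."""
    return F1(x) + lam(x)


def J(x0, u, stage, terminal):
    """Sum of stage costs along the trajectory plus terminal cost."""
    total, x = 0, x0
    for uk in u:
        total += stage(x, uk)
        x = f(x, uk)
    return total + terminal(x)


x0, u = 1, (-3, -1, 2)
lhs = J(x0, u, ell1_rot, F1_rot)
rhs = J(x0, u, ell1, F1) + lam(x0) - len(u) * ell1(XE, UE)
```

Using `Fraction` avoids rounding, so the two sides can be compared for exact equality.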

Using the definitions and relations above we can state a performance estimate for the rotated stage cost \({\widetilde{\ell }}_{1}\). We will use this result to conclude the convergence of the closed-loop trajectory. We refer again to [26], especially to Section 5.1.2, where similar results and proofs are provided.

Lemma 4.2

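(Non-averaged rotated performance for \(\ell _{1}\)) Let Assumption 3.1 hold and \(x_0\in \mathbb {X}_N\). Then

$$\begin{aligned} \sum _{k=0}^{\infty }{\widetilde{\ell }}_{1}(x_\mu (k,x_0),\mu ^{N}(k,x_\mu (k,x_0)))\le {\widetilde{J}}_{1}^{N}(x_0,{{\textbf {u}}}_{x_0}^{\star }) \end{aligned}$$

holds, with \(\mu ^{N}\) the MPC feedback defined in Algorithm 1 and \({{\textbf {u}}}_{x_0}^{\star }\) the efficient solution chosen in step (0).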

Proof

The existence of the efficient solutions in step (0) and (1) is concluded from Assumption 3.1 (v) – the external stability of \({\mathcal {J}}^{N}_{\mathcal {P}}(x)\). Feasibility of \({{\textbf {u}}}_{x(k+1,x_0)}\) in (2) follows from Assumption 3.1 (iv). Recursive feasibility of \(\mathbb {X}\), see [16, Theorem 3.5] for a definition, is an immediate consequence.

Further, for each \(K\in \mathbb {N}\) it holds, with \({{\textbf {u}}}_{x(k)}^{\star }\) denoting the control from Algorithm 1,

in which the first equality follows from the definition of \({\widetilde{\ell }}_{1}\) and the second equality holds by means of the terminal condition. Note that we use the notation \({{\textbf {u}}}_{x(k)}^{\star }(\cdot +1):=(u^{\star }(1),u^{\star }(2),\dots ,u^{\star }(N-1))\). Further, because of step (1) in Algorithm 1 and dissipativity we can estimate

Finally, letting K tend to infinity and using that \({\widetilde{\ell }}_{1}(x,u) \ge 0\), for all \(x\in \mathbb {X}\) and \(u\in \mathbb {U}\) yields the statement. \(\square\)

Corollary 4.3

(Trajectory convergence) Consider (MO OCP). Let Assumption 3.1 hold. Then the closed-loop trajectory \(x(\cdot )=x_\mu (\cdot ,x_0)\) driven by the feedback \(\mu ^{N}\) from Algorithm 1 converges to the equilibrium \(x^{e}\) and \({\widetilde{\ell }}_{1}(x(k),\mu ^{N}(k,x(k)))\) converges to 0 as \(k\rightarrow \infty\).

Proof

We follow the proof of Corollary 4.9 in [26]:

From Lemma 4.2 it follows that the sum \(\sum _{k=0}^\infty {\widetilde{\ell }}_{1}(x(k),\mu ^{N}(k,x(k)))\) converges and, thus, the sequence satisfies \({\widetilde{\ell }}_{1}(x(k),\mu ^{N}(k,x(k)))\rightarrow 0\) as \(k\rightarrow \infty\). Hence, since the optimal control problem with stage cost \(\ell _{1}\) is strictly dissipative and \(\alpha _{\ell ,1}\in {\mathcal {K}}\), we get

which is equivalent to \(\lim _{k\rightarrow \infty }\left\Vert x(k)-x^{e}\right\Vert =0\). \(\square\)

We now carry over the estimates for \({\widetilde{J}}_{1}^\infty\) to \(J_{1}^\infty\). To this end and for the subsequent stability analysis in Sect. 5, we need an additional assumption.

Assumption 4.4

There exist \(\gamma _{F_{1}}\in {\mathcal {K}}_\infty\) and \(\gamma _{\lambda _{1}}\in {\mathcal {K}}_\infty\) such that the following holds.

(i) For all \(x\in \mathbb {X}_0\) it holds that

$$\begin{aligned} |F_{1}(x)-F_{1}(x^{e})|\le \gamma _{F_{1}}(\left\Vert x-x^{e}\right\Vert ) \end{aligned}$$

and that \(F_{1}(x^{e})=0\).

(ii) For all \(x\in \mathbb {X}\) it holds that

$$\begin{aligned} |\lambda _{1}(x)-\lambda _{1}(x^{e})|\le \gamma _{\lambda _{1}}(\left\Vert x-x^{e}\right\Vert ) \end{aligned}$$

with \(\lambda _{1}\) from Assumption 3.1.

Using Part (ii) of this assumption, we can show an infinite horizon performance result on \(J_{1}\) similar to the one in [18, 26].

Theorem 4.5

(Performance estimate for \(J_{1}\)) Consider the multiobjective optimal control problem with terminal conditions (MO OCP). Let Assumptions 3.1 and 4.4 (ii) hold and assume \(\ell _{1}(x^{e},u^{e})=0\). Then, the MPC feedback \(\mu ^{N}:\mathbb {N}_0\times \mathbb {X}\rightarrow \mathbb {U}\) defined in Algorithm 1 renders the set \(\mathbb {X}\) forward invariant and has the infinite-horizon closed-loop performance

$$\begin{aligned} \limsup _{K\rightarrow \infty }\sum _{k=0}^{K-1}\ell _{1}(x_\mu (k,x_0),\mu ^{N}(k,x_\mu (k,x_0)))\le J_{1}^{N}(x_0,{{\textbf {u}}}_{x_0}^{\star }) \end{aligned}$$

in which \({{\textbf {u}}}_{x_0}^{\star }\) denotes the efficient solution of step (0) in Algorithm 1.

Proof

As in the proof of Lemma 4.2 the existence of the efficient solutions in step (0) and (1) in Algorithm 1 is, again, concluded from the external stability of \({\mathcal {J}}^{N}_{\mathcal {P}}(x)\). Feasibility of \({{\textbf {u}}}_{x(k+1,x_0)}\) in (2) follows from Assumption 3.1 (iv). Forward invariance of \(\mathbb {X}\) is an immediate consequence.

Using the definition of \({\widetilde{\ell }}_{1}\), the estimate from the proof of Lemma 4.2, the relation (4.4), and \(\ell _{1}(x^e,u^e)=0\), it holds that

Now Assumption 4.4 (ii) together with the storage function \(\lambda _{1}\) from Definition 2.9 with \(\lambda _{1}(x^e)=0\) and the fact that by Corollary 4.3 we have \(x_\mu (K,x_0)\rightarrow x^e\) implies that \(\lambda _{1}(x_\mu (K,x_0))\rightarrow 0\) as \(K\rightarrow \infty\). This shows the assertion. \(\square\)

Remark 4.6

The proof of Theorem 4.5 also implies the averaged performance estimate

In case \(\ell _{1}(x^e,u^e) \ne 0\), this inequality holds for the shifted cost \({\hat{\ell }}_{1}(x,u) = \ell _{1}(x,u) - \ell _{1}(x^e,u^e)\). This implies

Thus, we obtain an averaged performance estimate also in the case \(\ell _{1}(x^e,u^e)\ne 0\).

In the proof above we argue that we get feasibility because of the external stability. We remark that Lemma 2.5 provides conditions such that external stability can be guaranteed.

4.2 Averaged performance estimates for \(J_{i}\)

Besides the performance of \(J_{1}\) we are also interested in performance estimates for \(J_{i}\), \(i\in \{2,\dots ,s\}\). We first consider the averaged performance of \(J_{i}\), \(i\in \{2,\dots ,s\}\), using the results of the previous section: the continuity of the stage costs \(\ell _{i}\), \(i\in \{2,\dots ,s\}\), and the trajectory convergence deliver the averaged performance estimate.

Lemma 4.7

For each \(i=2,\ldots ,s\) there is \(\omega _{i}\in {\mathcal {K}}_\infty\) such that \(|\ell _{i}(x,u)-\ell _{i}(x^e,u^e)| \le \omega _{i}({\widetilde{\ell }}_{1}(x,u))\).

Proof

Since \(\ell _{i}\) is continuous, there is \({{\tilde{\omega }}}_{i}\in {\mathcal {K}}_\infty\) such that \(|\ell _{i}(x,u)-\ell _{i}(x^e,u^e)| \le {{\tilde{\omega }}}_{i}(\left\Vert x-x^e\right\Vert + \left\Vert u-u^e\right\Vert )\). Since \({\widetilde{\ell }}_{1}(x,u) \ge \alpha _{\ell ,1}(\left\Vert x-x^e\right\Vert + \left\Vert u-u^e\right\Vert )\), the assertion follows with \(\omega _{i} = {{\tilde{\omega }}}_{i}\circ \alpha _{\ell ,1}^{-1}\). \(\square\)

Theorem 4.8

Consider the multiobjective optimal control problem with terminal conditions (MO OCP). Let Assumptions 3.1 and 4.4 hold.

Then, the MPC-feedback \(\mu ^{N}:\mathbb {N}_0\times \mathbb {X}\rightarrow \mathbb {U}\) defined in Algorithm 1 has the infinite-horizon averaged closed-loop performance

for all objectives \(i\in \{2,\dots ,s\}\).

Proof

The existence of the efficient solutions and feasibility are ensured by Lemma 4.2 and Theorem 4.5. Further, from Corollary 4.3 and Lemma 4.7 it follows that there exists \(M\in \mathbb {N}_0\) such that for all \(k\ge M\) the relation \(\ell _{i}(x_{{{\textbf {u}}}_{x(k)}^{\star }}(k,x_0),{{\textbf {u}}}_{x(k)}^{\star }(0))=\ell _{i}(x^{e},u^{e})+\varepsilon (k)\), \(i\in \{2,\dots ,s\}\), with \(\varepsilon (k)\rightarrow 0\) as \(k\rightarrow \infty\), holds. Thus, given an arbitrary \({{\tilde{\varepsilon }}}>0\), there exists \({\tilde{k}}\in \mathbb {N}_0\), \({\tilde{k}}\ge M\), such that for all \(k\ge {\tilde{k}}\) the error term satisfies \(\varepsilon (k)<{{\tilde{\varepsilon }}}\).

Then, for each fixed, but arbitrary \(K\in \mathbb {N}\) with \(K>{\tilde{k}}\)

where \(C := \sum _{k=0}^{\widetilde{k}-1}\ell _{i}(x_{{{\textbf {u}}}_{x(k)}^{\star }}(k,x_0),{{\textbf {u}}}_{x(k)}^{\star }(0))\) is independent of K and \({{\textbf {u}}}_{x(k)}^{\star }\) denotes the control from Algorithm 1. Letting \(K\rightarrow \infty\), this implies

and since \({{\tilde{\varepsilon }}}>0\) was arbitrary, this shows the assertion. \(\square\)
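The averaging argument in this proof can be checked numerically on a toy sequence. The following minimal sketch assumes a hypothetical stage-cost sequence of the form \(\ell _{i}(x^{e},u^{e})+\varepsilon (k)\) with a geometrically decaying error \(\varepsilon (k)\); the decay rate, the constants, and the function name `averaged_cost` are illustrative assumptions and not taken from the analysis above.

```python
def averaged_cost(stage_costs):
    """Return the running averages (1/K) * sum_{k<K} stage_costs[k] for K = 1..len."""
    averages, total = [], 0.0
    for K, cost in enumerate(stage_costs, start=1):
        total += cost
        averages.append(total / K)
    return averages


# Hypothetical data: equilibrium cost l_e and a decaying error eps(k) = 5 * 0.9**k.
l_e = -6.0
costs = [l_e + 5.0 * 0.9 ** k for k in range(2000)]
avg = averaged_cost(costs)

# The transient contributes a bounded sum, so its share in the average vanishes as K grows.
print(avg[9], avg[99], avg[1999])
```

The printed averages approach the equilibrium cost from above, mirroring the role of the constant C divided by K in the estimate.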

5 Stability

In this section, we show that the closed loop generated by Algorithm 1 is asymptotically stable. To this end, we adapt the classical stability result from the single-objective case, using the assumptions, results, and calculations from the previous sections.

Theorem 5.1

(Asymptotic Stability) Consider the multiobjective optimal control problem with terminal conditions (MO OCP), which we assume to be strictly dissipative for stage cost \(\ell _{1}\) at the equilibrium \((x^{e},u^{e})\). Let Assumption 3.1 (iv) and (v) and Assumption 4.4 (i) and (ii) be satisfied. Let \(\gamma _J\in {\mathcal {K}}_\infty\) and choose the efficient solutions \({{\textbf {u}}}_{x_0}^{\star }\) in step (0) of Algorithm 1 such that they satisfy the inequality

Then the (optimal) equilibrium \(x^{e}\) is asymptotically stable on \(\mathbb {X}_N\) for the MPC closed loop defined in Algorithm 1.

Proof

We follow the proof of the single-objective case and show that the modified cost functional \({\widetilde{J}}_{1}^{N}\) is a uniform time-varying Lyapunov function (see Definition 2.7) for the closed-loop system for \(x^{e}\). Then, we can conclude, using Theorem 2.8, that the equilibrium \(x^{e}\) is asymptotically stable. Without loss of generality we may assume \(\ell _{1}(x^{e},u^{e})=0\), because replacing \(\ell _{1}\) by \(\ell _{1}-\ell _{1}(x^{e},u^{e})\) does not change the closed-loop solutions and thus not the stability.

To this end we first show an auxiliary inequality.

In order to simplify the notation, we write x instead of \(x(k,x_0)\) and \(x^+\) instead of \(x(k+1,x_0)\) for the states on the MPC closed-loop solution. Then for the control sequences defined in Algorithm 1 it holds that

and

Moreover, we observe the relation

Using these identities and inequality (3.2) it thus follows that

In the last step, we used inequality (4.5) with \(x=x_{{{\textbf {u}}}^{\star }_{x^+}(\cdot +1)}(N-1,x^+)\) and \(\ell _{1}(x^{e},u^{e})=0\).

We will now check that \(V(k,x) := {\widetilde{J}}_{1}^{N}(x,{{\textbf {u}}}^{\star }_{x})\), with \({{\textbf {u}}}^{\star }_{x}\) denoting the control from the k-th step of the MPC iteration, is a uniform time-varying Lyapunov function according to Definition 2.7 for \(g(k,x) = f(x,\mu ^{N}(k,x))\). In order to do this, we show the existence of \(\alpha _{1},\alpha _2,\alpha _3\in {\mathcal {K}}_\infty\), such that the inequalities

-

(i)

\(\alpha _{1}(\left\Vert x_0-x^{e}\right\Vert )\le \ {\widetilde{J}}_{1}^{N}(x_0,{{\textbf {u}}}_{x_0}^{\star })\le \alpha _2(\left\Vert x_0-x^{e}\right\Vert )\)

-

(ii)

\({\widetilde{\ell }}_{1}(x,u)\ge \alpha _3(\left\Vert x-x^{e}\right\Vert )\)

hold for all \(x,x_0\in \mathbb {X}\). Condition (ii) is satisfied by our strict dissipativity assumption with \(\alpha _3 = \alpha _{\ell ,1}\). For the inequalities in condition (i) we first need to establish a lower bound for \({\widetilde{F}}_{1}\). We recall Assumption 3.1 (iv) with local feedback \(\kappa\) for each \(x\in \mathbb {X}_0\). Then, Assumption 3.1 and strict dissipativity imply

By induction along the closed-loop solution for the local feedback \(\kappa\) we then obtain

By Assumption 4.4 (i) and (ii) and Corollary 4.3 this implies \({\widetilde{F}}_{1}(x_\kappa (K,x))\rightarrow {\widetilde{F}}_{1}(x^{e})=0\) as \(K\rightarrow \infty\), from which we can conclude

From this, the definition of \({\widetilde{J}}_{1}^{N}\) immediately implies \({\widetilde{J}}_{1}^{N}(x_0,{{\textbf {u}}}_{x_0}^{\star })\ge {\widetilde{\ell }}_{1}(x_0,\mu ^{N})\ge \alpha _{\ell ,1}(\left\Vert x_0-x^{e}\right\Vert )\) and thus the inequality for \(\alpha _{1}\) with \(\alpha _{1}=\alpha _{\ell ,1}\).

Finally, since \({\widetilde{J}}_{1}^{N}(x^{e},u^{e})=0\) and due to Assumption 4.4 (ii), the (in)equalities (5.1), (4.4), and \(\ell _{1}(x^{e},u^{e})=0\), it follows that the upper bound in (i) holds with \(\alpha _2 = \gamma _{\lambda _{1}}+\gamma _{J_{1}^{N}}\). \(\square\)

Observe that in the case of stabilizing stage costs, we obtain \(\lambda _{1}\equiv 0\) and \(\ell _{1}(x^{e},u^{e})=0\), and thus \({\widetilde{J}}_{1}^{N} = J_{1}^{N}\). This implies that the objective function itself is a Lyapunov function.

Remark 5.2

-

(i)

It is not a priori clear that inequality (5.1) can be satisfied. In order to guarantee this, techniques similar to those used, e.g., in [16, Proposition 5.14] or [22, Propositions 2.15 or 2.16] for single-objective MPC could be used.

-

(ii)

If inequality (5.1) can be satisfied, then it will restrict the choice of the efficient point in step (0) of Algorithm 1. In particular, enforcing (5.1) will typically require to put more emphasis on the cost \(J_{1}^{N}\) at the expense that the performance of \(J_{i}^{N}\) for \(i\ge 2\) may deteriorate.

5.1 Non-averaged performance estimates on \(J_{i}\)

In this section we aim to show a non-averaged performance result on \(J_{i}\) for \(i=2,\dots ,s\). For this purpose we will use the results of the previous sections and combine them with the idea of the performance of single-objective economic MPC without terminal conditions, see [16]. To this end, we consider the trajectories x which are driven by the efficient solution \({{\textbf {u}}}_{x_0}^{\star }\). We denote these trajectories by \(x(\cdot )=x_{{{\textbf {u}}}_{x_0}^{\star }}(\cdot ,x_0)\) and call them efficient trajectories.

First, we show that the end points of the efficient trajectories are close to the equilibrium because of stability and strict dissipativity for the stage cost \(\ell _{1}\).

Lemma 5.3

Let Assumptions 3.1 and 4.4 hold and consider efficient trajectories \(x(j) = x_{{{\textbf {u}}}_{x_0}^{\star }}(j,x_0)\), \(j=0,\ldots ,N\), for which there is \(\gamma _J\in {\mathcal {K}}_\infty\) such that

Then there are \(\rho _{1},\rho _2\in {\mathcal {K}}_\infty\) such that for all \(N\in \mathbb {N}\) the final points on the trajectories satisfy

Proof

From (5.2) and (4.4) we obtain that

Using this inequality for \(j=0\) implies that there exists a time index \(j_0\in \{0,\ldots ,N\}\) such that \({\widetilde{\ell }}_{1}(x(j_0),{{\textbf {u}}}_{x_0}^{\star }(j_0)) \le \rho _2(\Vert x(j_0)-x^e\Vert )/N\) if \(j_0<N\) or \({{\widetilde{F}}}_{1}(x(N))\le \rho _2(\Vert x(j_0)-x^e\Vert )/N\). If \(j_0<N\), then using the lower bound from the dissipativity \(\alpha _{\ell ,1}\) on \({\widetilde{\ell }}_{1}\) and, again, the inequality above it follows that

Since, as shown in the proof of Theorem 5.1, \(\alpha _{\ell ,1}\) is also a lower bound on \({{\widetilde{F}}}_{1}\), we obtain

This implies the assertion with \(\rho _{1}(r)= \max \{\alpha _{\ell ,1}^{-1}(r),\alpha _{\ell ,1}^{-1}\circ \rho _2\circ \alpha _{\ell ,1}^{-1}(r)\}\). \(\square\)

Next, in order to establish a performance estimate on \(J_{i}\), \(i=2,\dots ,s\) we extend the constraint (3.2) to all \(i=1,\ldots ,s\). In this way we end up with an algorithm originally proposed in [18]. However, different from [18], for the subsequent results we still do not require additional properties of the stage cost \(\ell _{i}\) for \(i\ge 2\). We can avoid these conditions by exploiting that the feedback from Assumption 3.1(iv) steers a state x to the equilibrium \(x^{e}\).

Assumption 5.4

For each \(i=2,\ldots ,s\) there is \(\gamma _{i}\in {\mathcal {K}}_\infty\) such that

holds for all \(x\in \mathbb {X}_0\) and \(\kappa\) from Assumption 3.1(iv).

In Fig. 2 we have visualized the bounds of step (1) in Algorithm 2. The dashed lines represent the bounds (5.3) and the red line the set of nondominated points satisfying these inequalities. We remark that now all cost criteria \(J_{i}^{N}\) are bounded. For Algorithm 2 we can state the following performance result.

Theorem 5.5

(Performance Estimate for \(J_{i}\)) Let Assumptions 3.1, 4.4, and 5.4 hold and assume that the efficient solutions generated by Algorithm 2 satisfy the inequalities (5.2) for some \(\gamma _J\in {\mathcal {K}}_\infty\). Then for all \(i=2,\ldots , s\) and for any \(C>0\) there is a function \(\delta _{i}\in {\mathcal {L}}\) such that

for all \(N,K\in \mathbb {N}\) with \(K\ge N\) and all \(x_0\in \mathbb {X}_N\) with \(\Vert x_0-x^e\Vert \le C\).

Proof

Consider the controls \({{\textbf {u}}}_{x(k)}^{\star }\) and \({{\textbf {u}}}_{x(k+1)}\) from Algorithm 2, where x(k) denotes the closed loop solution generated by the algorithm. Then Lemma 5.3 and Assumption 5.4 imply

Here we used that the asymptotic stability property of the closed loop from Theorem 5.1 implies that whenever the initial value satisfies \(\Vert x_0-x^e\Vert \le C\), then there is \({{\widetilde{C}}}>0\) such that \(\Vert x(k)-x^e\Vert \le {{\widetilde{C}}}\) for all \(k\in \mathbb {N}\). This inequality together with inequality (5.3) for \(i=2,\dots ,s\) implies

Now again the asymptotic stability and the boundedness of \(\Vert x_0-x^e\Vert\) imply the existence of \(\chi \in {\mathcal {L}}\) such that \(\Vert x(k)-x^e\Vert \le \chi (k)\).

This implies \({{\widetilde{J}}}_{1}^{N}(x(k),{{\textbf {u}}}_{x(k)}^{\star })\le \gamma _J(\chi (k))\) and the individual (nonnegative) terms of this sum also satisfy \({\widetilde{\ell }}_{1}(x_{{{\textbf {u}}}_{x(k)}^{\star }}(j,x(k)), {{\textbf {u}}}_{x(k)}^{\star }(j)) \le \gamma _J(\chi (k))\) for all \(j=0,\ldots ,N-1\). By Lemma 4.7 this yields \(\ell _{i}(x_{{{\textbf {u}}}_{x(k)}^{\star }}(j,x(k)), {{\textbf {u}}}_{x(k)}^{\star }(j)) \ge \ell _{i}(x^e,u^e) - \omega _{i}(\gamma _J(\chi (k)))\) and we can conclude that

where we used \(K\ge N\) and the monotonicity of \(\chi \in {\mathcal {L}}\) in the last step. This yields the assertion with

\(\square\)

Remark 5.6

The fact that the error term \(K\delta (N)\) grows linearly with K might at first glance make the estimate appear useless. However, unless we are in the very special case that \(\ell _{i}(x^e,u^e)=0\), the term \(J_{i}^{N}(x_0,{{\textbf {u}}}_{x_0}^{\star }) + (K-N)\ell _{i}(x^e,u^e)\) also grows affinely with K. Hence, for all sufficiently large K the relative error is proportional to \(\delta (N)\) and thus decreases to 0 as N tends to infinity. In terms of the relative error, the estimate is therefore perfectly useful. We note that this estimate is structurally similar to estimates for the closed-loop performance of single-objective economic MPC without terminal conditions, see, e.g., [16, Theorem 8.39].
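The relative-error statement in this remark can be made explicit by a short calculation. As a sketch, assume \(\ell _{i}(x^e,u^e)\ne 0\) and abbreviate \(J_{i}^{N} := J_{i}^{N}(x_0,{{\textbf {u}}}_{x_0}^{\star })\) (this shorthand is ours). Dividing the error term by the absolute value of the affine reference term and cancelling K gives, for fixed N,

$$\begin{aligned} \frac{K\delta (N)}{\left| J_{i}^{N} + (K-N)\ell _{i}(x^e,u^e)\right| } = \frac{\delta (N)}{\left| \frac{1}{K}J_{i}^{N} + \left( 1-\frac{N}{K}\right) \ell _{i}(x^e,u^e)\right| } \;\longrightarrow \; \frac{\delta (N)}{\left| \ell _{i}(x^e,u^e)\right| } \quad \text {as } K\rightarrow \infty . \end{aligned}$$

The limit is a constant multiple of \(\delta (N)\), which tends to 0 as N tends to infinity.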

Remark 5.7

The inequalities in (5.2) restrict the efficient solutions, for which the performance statement in Theorem 5.5 holds. If \(\gamma _J\in {\mathcal {K}}_\infty\) can be computed or is known, the inequalities could be added as additional constraints in the optimization routine.

6 Numerical simulations

The aim of this section is to illustrate the theoretical results of the previous sections. In the following we distinguish between the efficient solutions chosen in the different steps of Algorithms 1 and 2. To this end, we introduce the following names for the efficient solutions in the algorithms:

-

we call the efficient solution \({{\textbf {u}}}_{x_0}^{\star }\) chosen in step (0), i.e., in the first iteration, the first efficient solution.

-

we call the efficient solutions \({{\textbf {u}}}_{x(k)}^{\star }\) chosen in step (1), i.e., from iteration step \(k=2\) onwards, the subsequent efficient solutions.

For verifying the theoretical results we use the example of a chemical reactor, see [8, 27].

Example 6.1

(Reactor Part 1) We consider a single first-order, irreversible chemical reaction in an isothermal continuous stirred-tank reactor (CSTR)

in which \(k_r = 1.2\) is the rate constant. The material balances and the system data are provided in [8] whereas the stage costs – a tracking type cost forcing the solutions to a desired equilibrium and an economic stage cost maximizing the yield (by minimizing the negative yield) of the reaction – are introduced in [27]. The overall bi-objective optimal control problem is given by

We consider the molar concentrations \(c_A(k)\ge 0\) and \(c_B(k)\ge 0\), \(k\in \mathbb {N}\), of A and B respectively, and \(0\le u(k)\le 20\)(L/min) is the flow through the reactor at time k. The feed concentrations of A and B are given by \(c_{A_f}=1\) mol/L, and \(c_{B_f}=0\) mol/L respectively. The volume of the reactor is given by \(V =10\) L. Further, we abbreviate the states by \(c = (c_A, c_B)\) and we consider two stage costs given by

where the second stage cost consists of the price of B and a separation cost. These second costs therefore represent the (negative) economic yield of the reaction. Further, we set the terminal cost to zero, i.e. \(F_{i}\equiv 0\) for \(i=1,2\). The equilibrium under consideration of the system in (6.1) is given by \((c_A^{e},c_B^{e},u^{e})=(c^{e},u^{e})=(\frac{1}{2}, \frac{1}{2}, 12)\), which we also set as the terminal constraint, i.e. \(\mathbb {X}_0=\{(c^{e},u^{e})\}\). This way, Assumption 3.1 is fulfilled since stabilizing stage costs always render the optimal control problem strictly dissipative and by setting \(\kappa = u^{e}\) there exists a local feedback with the desired properties. Hence, Assumption 4.4 is also satisfied. By imposing box constraints \(\mathbb {U}\) and \(\mathbb {X}\) we can conclude external stability of \({\mathcal {J}}^{N}_{\mathcal {P}}(c_0)\) and, thus, the trajectory convergence as well as the averaged and non-averaged performance of the first cost criterion \(J_{1}\) by Corollary 4.3 and Theorem 4.5.
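As a sanity check on the stated equilibrium, the sketch below evaluates a continuous-time material balance for a first-order reaction A → B in an isothermal CSTR. The balance form used here is the standard textbook one and is an assumption on our part, since the exact system (6.1) and its time discretization are taken from [8] and not restated in this example; the function name `cstr_rhs` is hypothetical. A steady state of the continuous-time balance remains a fixed point of the sampled system under a constant input.

```python
K_R = 1.2   # rate constant from the example
V = 10.0    # reactor volume in L
C_AF = 1.0  # feed concentration of A in mol/L
C_BF = 0.0  # feed concentration of B in mol/L


def cstr_rhs(c_a, c_b, u):
    """Assumed material balance: in/outflow at rate u/V plus first-order reaction A -> B."""
    dc_a = u / V * (C_AF - c_a) - K_R * c_a
    dc_b = u / V * (C_BF - c_b) + K_R * c_a
    return dc_a, dc_b


# Candidate equilibrium (c_A^e, c_B^e, u^e) = (1/2, 1/2, 12) from the example.
residual = cstr_rhs(0.5, 0.5, 12.0)
print(residual)
```

Under this assumed balance, both residuals vanish at the stated equilibrium, which is consistent with the choice \(\kappa = u^{e}\) for the local feedback.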

In this example we use

-

Algorithm 1 to substantiate our theoretical results with numerical simulations. Thus, we restrict only the first objective by the constraint in step (1) of the algorithm. The resulting bound on the nondominated set in the second iteration is visualized in Fig. 3;

-

the ASMO Solver [1], a solver for nonlinear multiobjective optimization, to generate an approximation of the nondominated set in the first iteration for choosing the first efficient solution. ASMO is an implementation of the algorithm presented in [10, 11] which combines the Pascoletti-Serafini scalarization with an adaptive parameter control to achieve an approximation of the nondominated set with well distributed approximation points;

-

as the first efficient solution the efficient solution with \(J^5(c_0,{{\textbf {u}}}_{c_0}^\star )=(54.034, 9.500)\) for \(N=5\), and with \(J^{15}(c_0,{{\textbf {u}}}_{c_0}^\star )=(408.459,-478.459)\) for \(N=15\);

-

the global criterion method, also known as compromise programming approach, see, for instance, [21], to find efficient solutions of the multiobjective optimization problems in the subsequent iterations as proposed in a multiobjective MPC context in [27, 28]. This means that the subsequent efficient solution \({{\textbf {u}}}_{x(k)}^{\star }\) is chosen in each iteration such that

$$\begin{aligned} \begin{array}{rcl} {{\textbf {u}}}_{x(k)}^{\star }\in {{\,\mathrm{arg\,min}\,}}\bigg \{\left( \sum \limits _{i=1}^{s}|J_{i}^{N}(x(k),{{\textbf {u}}})-z_{i}^\star |^2\right) ^{\frac{1}{2}}\,&{}\bigg |\,&{} {{\textbf {u}}}\in \mathbb {U}^{N}(x_0),\\ &{}&{} J_{1}^{N}(x(k),{{\textbf {u}}}_{x(k)}^{\star })\le J_{1}^{N}(x(k),{{\textbf {u}}}_{x(k)})\bigg \}, \end{array} \end{aligned} \tag{6.2}$$

where

$$\begin{aligned} z_{i}^\star =\min \left\{ \left. J_{i}^{N}(x(k),{{\textbf {u}}})\,\right| \ {{\textbf {u}}}\in \mathbb {U}^{N}(x_0),\ J_{1}^{N}(x(k),{{\textbf {u}}}_{x(k)}^{\star })\le J_{1}^{N}(x(k),{{\textbf {u}}}_{x(k)}) \right\} , \end{aligned}$$

for all \(i=1,\ldots ,s\), is set as the so called ideal point of the restricted problem, cf. [28]; i.e., \({{\textbf {u}}}_{x(k)}^{\star }\) is defined as pre-image of the nondominated point which has the smallest Euclidean distance to the ideal point. Whenever applying Algorithm 2 instead of Algorithm 1, then \(J_{1}^{N}(x(k),{{\textbf {u}}}_{x(k)}^{\star })\le J_{1}^{N}(x(k),{{\textbf {u}}}_{x(k)})\) is replaced by \(J_{i}^{N}(x(k),{{\textbf {u}}}_{x(k)}^{\star })\le J_{i}^{N}(x(k),{{\textbf {u}}}_{x(k)})\), \(i=1,\ldots ,s\) in the above optimization problems.
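On a finite approximation of the nondominated set, the global criterion rule reduces to a nearest-point search. The sketch below assumes that such an approximation is already available as a list of cost vectors, for instance from a multiobjective solver; the helper name `select_ideal`, the sample values, and the bound are purely illustrative and are not data from this example.

```python
import math


def select_ideal(points, bounds):
    """Pick the cost vector closest (Euclidean) to the ideal point of the restricted set.

    points: list of cost vectors (J_1, ..., J_s).
    bounds: upper bound per objective; None means the objective is unrestricted.
    """
    feasible = [p for p in points
                if all(b is None or v <= b for v, b in zip(p, bounds))]
    if not feasible:
        raise ValueError("no candidate satisfies the bounds")
    # Ideal point: componentwise minimum over the restricted set.
    ideal = tuple(min(p[i] for p in feasible) for i in range(len(bounds)))
    chosen = min(feasible, key=lambda p: math.dist(p, ideal))
    return chosen, ideal


# Illustrative candidates; only J_1 is bounded, as in Algorithm 1.
candidates = [(1.0, 9.0), (2.0, 5.0), (4.0, 3.0), (7.0, 2.5), (12.0, 2.0)]
chosen, ideal = select_ideal(candidates, bounds=[8.0, None])
print(chosen, ideal)
```

For the variant used with Algorithm 2, every entry of `bounds` would carry a finite value, so that all objectives are restricted before the ideal point is computed.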

We note that for this example the optimization problems contained as subproblems in these algorithms are non-convex, hence we have no theoretical guarantees that the numerical optimization reached a globally optimal solution. However, the numerical results strongly suggest that globally optimal solutions were found in all our numerical simulations.

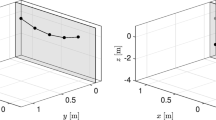

The behavior of the closed-loop trajectory is visualized in Fig. 4 for MPC-horizons \(N=5\) and \(N=15\). We observe that the trajectories converge to the equilibrium \(c^e\) independently of the choice of MPC-horizon and the initial value. However, the MPC-horizon N influences the convergence rate. On the left side, for \(N=5\), the \(c_B\)-trajectory converges faster to its equilibrium value \(c_B^{e} =\frac{1}{2}\) than for \(N=15\). We note that this is a typical behavior of MPC with equilibrium terminal constraints, see [16, Discussion after Ex. 7.23]. In addition, the components of the trajectory show different transient behavior.

In contrast, the bound of the averaged performance of \(J_{1}\) is independent of N, the initial value and the choice of the efficient solutions in each iteration. According to Remark 4.6 the bound is given by \(\ell _{1}(c^{e},u^{e})=0\). This bound and the averaged costs \({{\bar{J}}}_{1}^k\) in dependence of the iteration step k are visualized in Fig. 5 for MPC-horizons \(N=5\) and \(N=15\). Additionally, the averaged cost of the cost criterion \(J_2\) is visualized in Fig. 6 with bound \(\ell _2(c^{e},u^{e})=-6\) for MPC-horizon \(N=5\) and \(N=15\). For both cost criteria the averaged cost \({{\bar{J}}}_{i}^k\), \(i=1,2\), converges for \(k\rightarrow \infty\) whereas for \(N=5\) the convergence is significantly faster. Moreover, we remark that the second averaged cost \(\bar{J}_2^k\) requires considerably more iterations to converge.

Since the upper bound on \(J_{1}^\infty (c(0),\mu ^{N})\) from Theorem 4.5 depends on the first efficient solution \({{\textbf {u}}}_{c_0}^{\star }\) in Algorithm 1, we have visualized the performance result for different choices of this efficient solution. In Fig. 7 on the left side the first nondominated set \({\mathcal {J}}^{N}_{\mathcal {P}}(c_0)\) is shown with the different choices of the first efficient solution. The red point corresponds to the efficient solution such that \(J^5(c_0,{{\textbf {u}}}_{c_0}^\star )=(76.064,-13.435)\) and the blue point corresponds to \(J^5(c_0,{{\textbf {u}}}_{c_0}^\star )=(182.852,-26.267)\). Further, the performance of the first cost criterion \(J_{1}\) for \(N=5\) is visualized in dependence of the iteration step k and the choice of the first efficient solution (the red line corresponds to the red efficient solution and the blue line to the blue one). The dashed lines mark the upper bounds given by the values of the first objective function for the chosen first efficient solution, \(J_{1}^{N}(c(0),{{\textbf {u}}}_{c_0}^{\star })\). Hence, the choice of the first efficient solution has a strong impact on the upper bound and on the performance of \(J_{1}\).

By choosing the efficient solution \({{\textbf {u}}}_{1}^\star\) (red point), which has a relatively small value in the first cost functional, we get an upper bound of about 76. In contrast, the efficient solution \({{\textbf {u}}}_2^\star\) (blue point) with small value in the second cost delivers an upper bound of approximately 182. Moreover, we observe that for both efficient solutions the values of the cost functional \(J_{1}\) reach a small neighborhood of their stationary values 53 (red) and 86 (blue), respectively, after fewer than 10 iteration steps. Additionally, the theoretical upper bound, which depends on the choice of the first efficient solution and is visualized as a dashed line, is respected, as expected. Thus, we can confirm the dependence of the performance of \(J_{1}\) on the choice of the first efficient solution.

The last result shown for Algorithm 1 is the asymptotic stability property of the equilibrium \((c^{e},u^{e})\). In contrast to the convergence, the condition for stability depends on the initial value. For this reason, we have to ensure that inequality (5.1) is satisfied for the initial value \(c(0)=c_0=(c_A(0),c_B(0))\) and the corresponding first efficient solution \({{\textbf {u}}}_{c_0}^{\star }\). With a suitable choice of the first efficient solution we can ensure the existence of \(\gamma _J\in {\mathcal {K}}_\infty\) such that inequality (5.1) holds, since \(\ell _{1}(c,u)\) is a quadratic function and the system is exponentially controllable to \(c^e\).

In Fig. 8 the Euclidean norm \(\left\Vert c(k)-c^{e}\right\Vert _2\) of the closed-loop trajectory is visualized for fixed MPC-horizon \(N = 15\) in dependence of the iteration step k and for different initial values c(0). There, we observe that the closer the initial value is to the equilibrium the smaller is the peak of the norm of the trajectory. The numerical results indicate that the stability result from Theorem 5.1 holds for this example.

In the next example we continue with the reactor example, but now we consider Algorithm 2 and check the stronger assumptions that we will need to apply Theorem 5.5.

Example 6.2

(Reactor Part 2) We consider again the isothermal reactor described in Example 6.1 with the same constants and constraints. Now, we would like to illustrate the results on the performance of the second cost criterion \(J_2\). Therefore, we need to consider Algorithm 2 where inequality (5.3) holds for all cost criteria. Since we have imposed the special case of an endpoint constraint \(\mathbb {X}_0=\{(c^{e},u^{e})\}\) the endpoint is fixed by \(c(N) = (c_A(N),c_B(N)) = (c_A^{e},c_B^{e})\) and Assumption 5.4 is trivially satisfied for \(\kappa = u^{e}\). Thus, we can conclude the existence of \(\delta \in {\mathcal {L}}\) for which the performance estimate on \(J_2\) according to Theorem 5.5 holds.

For \(N=5\), numerical tests show that for \(\delta (5)=1/5\) the inequality

holds for \(k\ge 5\) large enough. The second cost \(J_2\) and the corresponding bound \({\mathcal {M}}({{\textbf {u}}}_{c_0}^{\star },5,k)\) are visualized in Fig. 9. For other MPC-horizons N and other choices of the first efficient solution it is not as easy to find appropriate values for the \({\mathcal {L}}\)-function \(\delta\). For this reason, we only visualize the bound \({\mathcal {M}}\) for this special case in Fig. 9.

In Fig. 10 the performance of the cost criterion \(J_2\) is visualized for MPC-horizon \(N= 5\) and for different choices of the first efficient solution \({{\textbf {u}}}_{c(0)}^{\star ,N}\). The first efficient solutions are chosen as in Example 6.1 in Fig. 7 on the left side. Note that the first nondominated set \({\mathcal {J}}^{N}_{\mathcal {P}}(c(0))\) is identical for both algorithms. Thus, the efficient solution (and the colors) are the same as in the previous simulations. Again, we remark that the choice of the first efficient solution has an impact on the performance of the second cost criterion \(J_2\).

After verifying the theoretical results from Sects. 4 and 5 by means of numerical simulations for the isothermal reactor with two cost functions, we would now like to illustrate that, as the theoretical results suggest, our approach also works for more than two cost criteria. To this end, we add another cost criterion to the multiobjective optimal control problem (6.1) and present the numerical results in the same manner as in Examples 6.1 and 6.2.

Example 6.3

(Reactor with three objectives) We consider the isothermal reactor from Example 6.1 and the corresponding multiobjective optimal control problem (6.1). In order to extend the example we add a third cost function given by

i.e., we now aim to minimize \(J^{N}(c_0,{{\textbf {u}}})=(J_{1}^{N}(c_0,{{\textbf {u}}}),J_2^{N}(c_0,{{\textbf {u}}}),J_3^{N}(c_0,{{\textbf {u}}}))\). Stage cost \(\ell _3\) is a continuous function and, thus, Assumption 3.1 is satisfied. Assumptions 4.4 and 5.4 can be shown exactly as in Examples 6.1 and 6.2. For our numerical simulations we consider the MPC-horizon \(N=15\). As in Example 6.2, we apply Algorithm 2 to illustrate the trajectory convergence, the averaged performance of all cost criteria \(J_{i}\), \(i=1,2,3\), and, especially, the non-averaged performance of \(J_{i}\) for all \(i\in \{1,2,3\}\). Further, we choose the first efficient solution such that \(J^{15}(c_0,{{\textbf {u}}}_{c_0}^\star )=(317.827,-380.092,1969.311)\).

In Fig. 11 we observe that the closed-loop trajectory behaves qualitatively as in Fig. 4 but quantitatively there are differences. In particular, the peak of the second component \(c_B\) is higher than in Example 6.1. Further, the averaged cost \({{\bar{J}}}_{1}\) has a smaller initial value and the amplitude of the second averaged cost \({{\bar{J}}}_2\) is larger than in the previous example. These phenomena are visualized in Fig. 12. In particular, on the right side in Fig. 12 we remark that the third averaged cost \({{\bar{J}}}_3\) also converges from below to the theoretical bound \(\ell _3(x^{e},u^{e})=144\) as stated in Theorem 4.8.

Further, in Fig. 13 the performances of \(J_{i}\), \(i=1,2,3\), are shown. Again, the first cost function \(J_{1}\) complies with the theoretical bound \(J_{1}^{N}(c_0,{{\textbf {u}}}^{\star }_{c_0})\). For the second and third cost criterion we can observe that the performance behaves as expected. The third cost \(J_3\) is strictly increasing since, near the equilibrium, each iteration adds approximately the stage cost \(\ell _3(x^{e},u^{e})=144\), i.e., the squared value of the equilibrium control \(u^{e}=12\).

In the examples in this section we have illustrated our theoretical results numerically, particularly regarding the impact of the choice of the first efficient solution \({{\textbf {u}}}_{x(0)}^{\star }\), chosen in step (0) of our algorithms. In the next section, we will analyse numerically whether the choice of the subsequent efficient solutions \({{\textbf {u}}}_{x(k)}^{\star }\) in step (1) of the algorithms, has an impact on the solution behavior and on the performance.

7 Selection rules for subsequent efficient solutions

In this section we will investigate numerically the impact of different selection rules for the subsequent efficient solutions, i.e., those in step (1) of the algorithms. We will do this for Algorithm 2, since we want to guarantee the theoretical performance estimates for all cost criteria. Closely related is the development of the nondominated sets during the iterations. In all simulations, we consider the same first efficient solution and, thus, determine the performance of the MO MPC algorithm only depending on the choice of the subsequent efficient solutions. In order to describe the expected effects, we first recall the resulting bounds on the nondominated set of Algorithm 2. For this reason, we visualized the nondominated set of the isothermal reactor with two objectives, see Example 6.2, in the second iteration in Fig. 14. The approximation of this nondominated set was calculated with ASMO [1]. We observe that the resulting nondominated set from which we choose the efficient solution is relatively small and excludes the extremal ends of the nondominated set.

Nevertheless, there is still a degree of freedom in choosing the subsequent efficient solution. Generally, an efficient solution with values at the top left of the considered nondominated set will cause the first cost criterion to become smaller, and vice versa. Particularly, in our setting, where the first cost is always the stabilizing one, putting a large emphasis on the first cost criterion forces the trajectory to converge faster, since this causes a lower cost. In order to check whether this effect can be seen in practice, we examine how different selection rules for the subsequent efficient solutions influence the solution and performance behavior. To this end, we introduce the following selection rules:

-

“ideal”: the efficient solutions are computed as in (6.2) as pre-image of nondominated points with minimal Euclidean distance to the ideal point \(z^\star\). This selection rule is illustrated in Fig. 15.

-

“min 1”: the efficient solutions are chosen such that \(J_{1}^{N}\) (with the additional bounds from (5.3)) is minimal.

-

“min 2”: the efficient solutions are chosen such that \(J_2^{N}\) (with the additional bounds from (5.3)) is minimal.

We note that the last two selection criteria only guarantee to find weakly efficient solutions of the underlying multiobjective optimization problem which are located at the extremal ends of the nondominated set. The set of weakly efficient solutions forms a superset of the set of efficient solutions and contains also those feasible solutions for which just no other feasible solution exists which strictly improves all objective functions at the same time. However, in our case the solutions are additionally constrained by the bounds (5.3) in Algorithm 2, which “cut off” these extremal points, cf. Fig. 14. Since according to our numerical experience this is the situation in all our numerical tests, we can rule out that our algorithm yields weakly efficient solutions which are not also efficient.
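The distinction between efficient and weakly efficient solutions can be made concrete on a finite set of cost vectors. The following sketch is illustrative only: the helper names and the sample points are assumptions, and it operates in the objective space, so it classifies cost vectors rather than control sequences.

```python
def dominates(p, q):
    """p dominates q: no worse in every objective and strictly better in at least one."""
    return all(a <= b for a, b in zip(p, q)) and any(a < b for a, b in zip(p, q))


def strictly_dominates(p, q):
    """p is strictly better than q in every objective."""
    return all(a < b for a, b in zip(p, q))


def nondominated(points):
    return [q for q in points if not any(dominates(p, q) for p in points)]


def weakly_nondominated(points):
    return [q for q in points if not any(strictly_dominates(p, q) for p in points)]


# (1, 6) and (4, 3) each tie with another point in one objective and lose in the other,
# so they are weakly nondominated but not nondominated.
pts = [(1.0, 5.0), (1.0, 6.0), (2.0, 3.0), (4.0, 3.0), (5.0, 1.0)]
print(nondominated(pts))
print(weakly_nondominated(pts))

# Minimizing J_1 alone cannot distinguish the two points with J_1 = 1.
min1_candidates = [p for p in pts if p[0] == min(q[0] for q in pts)]
print(min1_candidates)
```

In this toy set, a pure "min 1" rule could return the weakly nondominated point (1, 6); this is the situation that the text rules out for the reactor example on the basis of the numerical tests.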

Example 7.1

(Reactor Part 3) We consider again the isothermal reactor from Example 6.1 with the same constants and constraints. For the simulation we used Algorithm 2 with the different selection rules described above for choosing the subsequent efficient solution \({{\textbf {u}}}^{\star }_{c(k)}\). In all simulations we consider the MPC-horizon \(N= 5\) and use the same first efficient solution \({{\textbf {u}}}_{c(0)}^{\star }\) which is chosen as in Example 6.1. In Fig. 16 the first and the second nondominated set are shown.

The colored points are the efficient solutions chosen according to the respective selection rules. While in all simulations the same first efficient solution is chosen, we compare different selection rules for the subsequent efficient solutions, which are, however, fixed during the iterations. The different selection rules are visualized in Fig. 16 on the right-hand side. Further, in Fig. 17 we visualized the nondominated set and the corresponding chosen subsequent efficient solutions in iteration step \(k=6\).

We remark that the magnitudes of the cost functionals and, thus, the size and the location of the nondominated sets are significantly different for the three selection rules. While the nondominated set for “min 2” (right) has a range from 7 to 13 and from -58 to -52, the nondominated set for “min 1” (mid) is substantially smaller with a range from 0.5 to 0.8 and from -36 to -34.6. The nondominated set for “ideal” (left) lies between those for “min 1” and “min 2”. Hence, for each selection rule the subsequent efficient solutions are chosen not only according to different rules, but also from completely different nondominated sets. This indicates that the choice of the efficient solutions should have an impact on convergence rate and performance. Fig. 18 illustrates the resulting closed-loop trajectories.

On the left-hand side in Fig. 18 we observe that for the first component of the trajectory \(c_A\) all selection rules deliver a similar behavior. In contrast, on the right-hand side, the behavior of the second component \(c_B\) depends strongly on the selection rule. The rule “min 2” aims to maximize the economic yield. Therefore, the second component of the closed-loop trajectory takes large values and converges only slowly to the equilibrium. While the trajectory of “min 1” reaches a small neighborhood of the equilibrium within 15 iteration steps, “min 2” needs about 2000 iterations to get similarly close to the equilibrium. In Fig. 19, we visualize the cost criteria \(J_{1}^{N}\) and \(J_2^{N}\).

Here, we observe that “min 1” results in a significantly smaller \(J_{1}\) than the other strategies, while \(J_2\) is the largest, whereas “min 2” enforces exactly the opposite. In terms of the cost, the main feature of “ideal” becomes particularly clear. The selection rule “ideal” yields a compromise between both costs, which in this example turns out to be closer to “min 1” than to “min 2”.
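The three selection rules can be sketched on a finite sample of a nondominated set as follows. This is only an illustration under assumptions: the sample points are hypothetical, the function `select` is not part of Algorithm 2 or of any solver, and “ideal” is taken here to mean the point with the smallest Euclidean distance to the ideal point, i.e., the vector of componentwise minima.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # objective vector (J1, J2), both to be minimized


def select(front: List[Point], rule: str) -> Point:
    """Pick one point from a sampled nondominated set by a selection rule."""
    if rule == "min 1":
        return min(front, key=lambda p: p[0])
    if rule == "min 2":
        return min(front, key=lambda p: p[1])
    if rule == "ideal":
        # Ideal point: best attainable value of each objective separately.
        ideal = (min(p[0] for p in front), min(p[1] for p in front))
        return min(front, key=lambda p: math.dist(p, ideal))
    raise ValueError(f"unknown selection rule: {rule!r}")


# Hypothetical sample of a nondominated set (J1 increases as J2 decreases).
front: List[Point] = [(1.0, -3.0), (2.0, -5.0), (4.0, -6.0), (8.0, -6.5)]

choice = {rule: select(front, rule) for rule in ("min 1", "min 2", "ideal")}
for rule, point in choice.items():
    print(f"{rule:>6}: {point}")
```

On this sample, “min 1” and “min 2” pick the two ends of the set, while “ideal” picks an interior point. If the objectives differ strongly in magnitude, one would typically normalize them before measuring the distance to the ideal point; this sketch omits that step.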

While the results in Example 7.1 show precisely the behavior that one would expect from the different selection rules, a priori it was not clear that the quantitative differences are so pronounced. Indeed, as the following example shows, the effect of the different rules can also be almost negligible.

Example 7.2

We consider an economic growth model, introduced in [6]. The system dynamic is given by

and the stage cost by

with parameters \(A =5\) and \(\alpha =0.34\). We impose state and control constraints \(\mathbb {X}=[0,10]\) and \(\mathbb {U}=[0.1,5]\). As calculated in [7], the equilibrium for which the problem is strictly dissipative is given by \((x^{e},u^{e})=(x^{e},x^{e})\approx (2.23,2.23)\). We use the equilibrium to set the endpoint terminal constraint \(\mathbb {X}_0=\{x^{e}\}\). Next, we introduce the second stage cost

which additionally stabilizes the equilibrium. Thus, the multiobjective optimal control problem reads

Due to the strict dissipativity, the stage cost \(\ell _{1}\) fulfills the required Assumptions 3.1 and 4.4. Since we have introduced endpoint terminal constraints, Assumption 5.4 holds with the same argument as in Example 6.2. Hence, this example fits in our theoretical setting. Now we check whether the choice of the subsequent efficient solution in Algorithm 2 has an impact on the solution behavior. To this end, we consider the MPC-horizon \(N=10\) and the different selection rules as in Example 7.1. We chose the first efficient solution such that \(J^{10}(x_0,{{\textbf {u}}}_{x_0}^{\star })=(-15.085,7.892)\).
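As a plausibility check of the equilibrium value quoted in this example, the following sketch assumes the form in which this growth model commonly appears in the economic MPC literature, namely dynamics \(x^+ = u\) and stage cost \(\ell _1(x,u) = -\ln (Ax^{\alpha } - u)\). Both formulas are assumptions of this sketch. Under them, steady states satisfy \(x = u\), and minimizing \(-\ln (Ax^{\alpha } - x)\) over \(x\) gives the closed form \(x^{e} = (\alpha A)^{1/(1-\alpha )}\), which the code compares with a simple grid search over the control constraint set.

```python
import math

A = 5.0
ALPHA = 0.34


def steady_state_cost(x: float) -> float:
    """Assumed stage cost evaluated at a steady state (x, u) = (x, x)."""
    return -math.log(A * x**ALPHA - x)


# Closed-form minimizer from the first-order condition ALPHA * A * x**(ALPHA - 1) = 1.
x_e = (ALPHA * A) ** (1.0 / (1.0 - ALPHA))

# Independent check: brute-force search on a grid over U = [0.1, 5].
STEP = 0.001
grid = [0.1 + i * STEP for i in range(4901)]
x_grid = min(grid, key=steady_state_cost)

print(f"closed form: x_e = {x_e:.4f}")
print(f"grid search: x_e = {x_grid:.4f}")
```

Both values agree with the rounded equilibrium \(x^{e}\approx 2.23\) given above, which supports the assumed form of the model but does not replace the derivation in [7].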

In Fig. 20 we observe that in the second iteration only one single point is cut out of the nondominated set and, thus, there is no more degree of freedom in choosing the efficient solution. This suggests that the selection rules have no impact on the solution behavior. This is confirmed by Fig. 21, as there are no differences—except for numerical inaccuracies—in the trajectories resulting from the selection rules. The same phenomenon is reflected in the costs. Thus, we can conclude that for this example the choice of the subsequent efficient solutions has no influence on the behavior of the trajectory and the cost criteria.

In summary, we can say that the implementation of different selection rules for the subsequent efficient solutions can make a significant difference for the resulting closed loop trajectories and costs, as seen in Example 7.1. In contrast, Example 7.2 shows that this difference may also be negligible.

8 Conclusion and outlook

In this paper we have introduced a new multiobjective MPC algorithm for which we require strong assumptions, i.e., strict dissipativity and the existence of a compatible terminal cost, only for the first cost criterion. For this algorithm we have shown a performance estimate for the first cost function \(J_{1}^{N}\) as well as averaged performance estimates for all cost functions \(J_{i}^{N}\), \(i=1,\dots ,s\). Under suitable technical assumptions we have shown asymptotic stability of the closed-loop trajectory using time-varying Lyapunov functions. Further, for the algorithm introduced in [18] and using our assumptions, we have stated a performance theorem for the other cost functions \(J_{i}^{N}\), \(i=2,\dots ,s\), again without requiring strict dissipativity for these costs. In addition, we have numerically illustrated our theoretical results and, in the course of this, investigated how the selection rules for the choice of the subsequent efficient solutions influence the solution behavior. We have shown that, depending on the concrete example, this influence may be significant or negligible.

For future research it would be interesting to investigate theoretical performance estimates for different selection rules. In Sect. 7 we have observed that for some selection rules the upper bound of the performance of the cost function is sharp, while for other selection rules it is not. Thus, the question of whether we can refine our estimates by taking into account the selection rules from the second iteration on is still open. Another point is the efficiency of the resulting closed-loop trajectory on the infinite time horizon and the corresponding MPC feedback. The question of whether we can state an optimality result comparable to those for the standard MPC case, see for instance [16, 17], also remains open.

Data availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

ASMO - a solver for multiobjective optimization. https://github.com/GEichfelder/ASMO

Amrit, Rishi, Rawlings, James B., Angeli, David: Economic optimization using model predictive control with a terminal cost. Annu. Rev. Control. 35, 178–186 (2011)

Angeli, David, Amrit, Rishi, Rawlings, James B.: Receding horizon cost optimization for overly constrained nonlinear plants. In: Proceedings of the 48th IEEE Conference on Decision and Control – CDC 2009, pp. 7972–7977, Shanghai, China (2009)

Angeli, David, Amrit, Rishi, Rawlings, James B.: On average performance and stability of economic model predictive control. IEEE Trans. Autom. Control 57(7), 1615–1626 (2012)

Banholzer, S., Fabrini, G., Grüne, L., Volkwein, S.: Multiobjective model predictive control of a parabolic advection-diffusion-reaction equation. Mathematics 8(5), 777 (2020)

Brock, W.A., Mirman, L.J.: Optimal economic growth and uncertainty: The discounted case. J. Econ. Theory 4(3), 479–513 (1972)

Damm, Tobias, Grüne, Lars, Stieler, Marleen, Worthmann, Karl: An exponential turnpike theorem for dissipative discrete time optimal control problems. SIAM J. Control. Optim. 52(3), 1935–1957 (2014)

Diehl, M., Amrit, R., Rawlings, J.B.: A Lyapunov function for economic optimizing model predictive control. IEEE Trans. Autom. Control 56(3), 703–707 (2011)

Ehrgott, Matthias: Multicriteria Optimization. Springer-Verlag (2005)

Eichfelder, Gabriele: Adaptive scalarization methods in multiobjective optimization. Springer, Vector Optimization (2008)

Eichfelder, Gabriele: An adaptive scalarization method in multiobjective optimization. SIAM J. Optim. 19(4), 1694–1718 (2009)

Eichfelder, G.: Twenty years of continuous multiobjective optimization in the twenty-first century. EURO J. Comput. Optim. 9, 100014 (2021)

Flaßkamp, Kathrin, Ober-Blöbaum, Sina, Peitz, Sebastian: Symmetry in optimal control: A multiobjective model predictive control approach. In: Advances in Dynamics, Optimization and Computation, pp. 209–237. Springer International Publishing (2020)

Grüne, Lars: Economic receding horizon control without terminal constraints. Automatica 49(3), 725–734 (2013)

Grüne, Lars, Panin, Anastasia: On non-averaged performance of economic MPC with terminal conditions. In: Proceedings of the 54th IEEE Conference on Decision and Control – CDC 2015, pp. 4332–4337, Osaka, Japan (2015)

Grüne, Lars, Pannek, Jürgen: Nonlinear Model Predictive Control: Theory and Algorithms, 2nd edn. Communications and Control Engineering. Springer, Cham, Switzerland (2017)

Grüne, Lars, Stieler, Marleen: Asymptotic stability and transient optimality of economic MPC without terminal conditions. J. Process Control 24(8), 1187–1196 (2014)

Grüne, Lars, Stieler, Marleen: Multiobjective model predictive control for stabilizing cost criteria. Discrete & Continuous Dynamical Systems - B 24(8), 3905–3928 (2019)

Kajgaard, Mikkel Urban, Mogensen, Jesper, Wittendorff, Anders, Todor Veress, Attila, Biegel, Benjamin: Model predictive control of domestic heat pump. In: Proceedings of the 2013 American Control Conference, pp. 2013–2018. IEEE (2013)

Logist, Filip, Houska, Boris, Diehl, Moritz, Van Impe, Jan: Fast Pareto set generation for nonlinear optimal control problems with multiple objectives. Struct. Multidiscip. Optim. 42(4), 591–603 (2010)

Miettinen, Kaisa: Nonlinear Multiobjective Optimization. Springer, US (1998)

Rawlings, James B., Mayne, David Q., Diehl, Moritz M.: Model Predictive Control: Theory, Computation, and Design. Nob Hill Publishing, Madison, Wisconsin (2017)

Sauerteig, Philipp, Worthmann, Karl: Towards multiobjective optimization and control of smart grids. Optim. Control. Appl. Method 41, 128–145 (2019)

Sawaragi, Yoshikazu: Theory of multiobjective optimization. Academic Press, Orlando (1985)

Schmitt, Thomas, Rodemann, Tobias, Adamy, Jürgen: Multi-objective model predictive control for microgrids. at - Automatisierungstechnik 68(8), 687–702 (2020)

Stieler, M.: Performance Estimates for Scalar and Multiobjective Model Predictive Control Schemes. PhD thesis, Universität Bayreuth, Bayreuth (2018)

Zavala, Victor M.: A multiobjective optimization perspective on the stability of economic MPC. IFAC-Pap. Online 48(8), 974–980 (2015)

Zavala, V.M., Flores-Tlacuahuac, A.: Stability of multiobjective predictive control: A utopia-tracking approach. Automatica 48(10), 2627–2632 (2012)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

This work was supported by Grant Gr 1569/13-2 of the Deutsche Forschungsgemeinschaft (DFG).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Eichfelder, G., Grüne, L., Krügel, L. et al. Relaxed dissipativity assumptions and a simplified algorithm for multiobjective MPC. Comput Optim Appl 86, 1081–1116 (2023). https://doi.org/10.1007/s10589-022-00398-4

DOI: https://doi.org/10.1007/s10589-022-00398-4