Abstract

The paper deals with the numerical solution of the problem P to maximize a homogeneous polynomial over the unit simplex. We discuss the convergence properties of the so-called replicator dynamics for solving P. We further examine an ascent method, which also makes use of the replicator transformation. Numerical experiments with polynomials of different degrees illustrate the theoretical convergence results.

1 Introduction

The present paper studies two methods for computing local or global maximizers of homogeneous polynomials of degree d over the unit simplex in \({\mathbb{R}}^n\). The maximization problem is referred to as P (cf., (1)). We are especially interested in the so-called replicator iteration for solving P. The mathematical structure and theoretical properties of P have been investigated, e.g., in [5, 6] for \(d=2\) and, e.g., in [1] for \(d>2\). The problem has many applications, such as portfolio decisions [15], evolutionary biology (see [3, 21] for \(d=2\), and [20] for \(d>2\)), as well as the maximum clique problem in (hyper)graphs [9].

It is well-known that for \(d\ge 2\) the global maximization problem P is NP-hard (cf., [19]). Approximation methods for computing a global maximizer have been investigated in [2] for \(d=2\), and in [12, 13] for \(d > 2\). However, in the present article we are less interested in solving the global maximization problem than in an algorithm for computing local maximizers of P. For this purpose we focus our attention on the so-called replicator dynamics (cf., (20) below). The useful monotonicity properties of the replicator transformation are well-known (see [2, 14]). The convergence of the replicator iteration to a (unique) critical point of P has been proven in [16] for \(d=2\). Also for \(d=2\), results on the rate of convergence of the replicator dynamics have been presented in [17].

In the present paper, we are especially interested in the convergence properties of the replicator method for \(d > 2\). It appears that (as for \(d=2\)) a linear convergence to a critical point \(\overline{x}\) of P can only occur if a strict complementarity condition is satisfied at \(\overline{x}\). We therefore also examine an appropriate ascent method to increase the convergence speed. Another main aim of the paper is to provide a selection of numerical experiments with many example problems for objective functions of different degrees d.

The paper is organized as follows. Section 2 introduces the maximization problem P, presents properties of the homogeneous objective function f(x) in terms of the corresponding n-dimensional symmetric tensor A of order d, and summarizes useful optimality conditions for P.

In Sect. 3, we describe two important applications of our maximization problem. Section 4 contains the main results of the paper. We study the properties of the replicator method to find local maximizers of P. More precisely, in Sect. 4.1 we discuss the behavior of the replicator transformation y(x) (cf., (16)). It appears that the fixed points of this transformation y(x) coincide with the critical points of P. We summarize known convergence results of the replicator iteration for the case \(d=2\) and present new convergence results for \(d \ge 2\). Section 4.3 shows how the replicator transformation y(x) can be used to set up an ascent method, enjoying a faster convergence in comparison with the pure replicator dynamics. Section 5 presents many numerical experiments with the replicator iteration and the special ascent method for example objective functions f(x) of different degrees d. Finally, Sect. 6 is devoted to concluding remarks.

2 Preliminaries

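In the present paper, we consider the problem of computing local or global maximizers of an (in general nonconvex) homogeneous polynomial of degree d over the unit simplex in \({\mathbb{R}}^n\):

$$\begin{aligned} P: \quad \max \, f(x) \quad {\text{s.t.}} \quad x \in \Delta _n := \{ x \in {\mathbb{R}}^n \mid e^T x = 1, \, x \ge 0 \}, \end{aligned}$$(1)

where

$$\begin{aligned} f(x) := \sum _{i_1, \ldots , i_d = 1}^n a_{i_1, \ldots , i_d} \, x_{i_1} x_{i_2} \cdots x_{i_d} \end{aligned}$$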

is a homogeneous polynomial of degree d given by the \(d^{th}\) order n-dimensional tensor \(A=(a_{i_1, \ldots , i_d})_{1\le i_1, \ldots , i_d \le n}\). As usual, \(e \in {\mathbb{R}}^n\) is the vector with all components equal to 1. We define the index set \(N := \{1, \ldots , n\}\).

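In this paper, we always assume that the tensor A is symmetric, i.e.,

$$\begin{aligned} a_{i_1, \ldots , i_d} = a_{\sigma (i_1, \ldots , i_d)} \quad {\text{for all permutations }} \sigma {\text{ of }} (i_1, \ldots , i_d). \end{aligned}$$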

The space of all symmetric tensors of order d in n variables has dimension \(\binom{n+d-1}{d}\) and is denoted by \({\mathcal{S}}^{n,d}\). For \(A, B \in {\mathcal{S}}^{n,d}\) we define the inner product \(A \cdot B\) by

$$\begin{aligned} A \cdot B := \sum _{i_1, \ldots , i_d = 1}^n a_{i_1, \ldots , i_d} \, b_{i_1, \ldots , i_d}. \end{aligned}$$

For \(x \in {\mathbb{R}}^n\) we introduce the symmetric tensor \(x^d = (x_{i_1} x_{i_2} \cdots x_{i_d})_{1\le i_1, \ldots , i_d \le n} \in {\mathcal{S}}^{n,d}\). Then the polynomial f(x) of degree d can be written as

$$\begin{aligned} f(x) = A \cdot x^d. \end{aligned}$$

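If we make use of the fact that f(x) is induced by a symmetric d-linear form F, we write

$$\begin{aligned} f(x) = F(x, \ldots , x), \qquad F(x^1, \ldots , x^d) := \sum _{i_1, \ldots , i_d = 1}^n a_{i_1, \ldots , i_d} \, x^1_{i_1} \cdots x^d_{i_d}. \end{aligned}$$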

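We further introduce the tensors of order \(d-1\) (and, for the Hessian, of order \(d-2\)):

$$\begin{aligned} A^i := (a_{i, i_2, \ldots , i_d})_{1\le i_2, \ldots , i_d \le n} \in {\mathcal{S}}^{n,d-1}, \qquad A^{ij} := (a_{i, j, i_3, \ldots , i_d})_{1\le i_3, \ldots , i_d \le n} \in {\mathcal{S}}^{n,d-2}, \quad i, j \in N. \end{aligned}$$

With these abbreviations the following holds for the elements of the gradient \(\nabla f(x)\) and the Hessian \(\nabla ^2 f(x)\),

$$\begin{aligned} \frac{\partial }{\partial x_i} f(x) = d\, A^i \cdot x^{d-1}, \qquad \frac{\partial ^2}{\partial x_i \partial x_j} f(x) = d(d-1)\, A^{ij} \cdot x^{d-2}, \quad i, j \in N. \end{aligned}$$(3)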

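Since we are interested in (local) maximizers of P, we summarize some well-known facts needed below. For \(\overline{x}~\in ~\Delta _n\) the KKT condition is said to hold if with (unique) Lagrangian multipliers \(\lambda \in {\mathbb{R}}\), \(0 \le \mu \in {\mathbb{R}}^n\), we have

$$\begin{aligned} \nabla f(\overline{x}) = \lambda e - \mu , \qquad \overline{x}_i \mu _i = 0, \quad i \in N. \end{aligned}$$(5)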

We call such a point \(\overline{x}\) a KKT point of P. A feasible point \(\overline{x}\in \Delta _n\) satisfying (5) with \(\mu \in {\mathbb{R}}^n\) (without the non-negativity condition) is called a critical point of P. The KKT point \(\overline{x}\) (critical point \(\overline{x}\)) is said to satisfy strict complementarity (SC) if

$$\begin{aligned} \mu _i \ne 0 \quad {\text{for all }} i \in N {\text{ with }} \overline{x}_i = 0. \end{aligned}$$

For a KKT point \(\overline{x}\in \Delta _n\) with corresponding (unique) multiplier \(0 \le \mu \in {\mathbb{R}}^n\) the necessary second order (NSOC) and the sufficient second order conditions (SSOC) are given by

where \(C_{\overline{x}}\) is the cone of critical directions,

Here are the well-known optimality conditions for P (cf., e.g., [1, Lemma 3.3a,b, Remark 3.3]).

Lemma 1

(a) [Necessary conditions] Let \(\overline{x}\in \Delta _n\) be a local maximizer of P. Then the KKT condition holds at \(\overline{x}\) together with NSOC.

(b) [Sufficient conditions] Let \(\overline{x}\in \Delta _n\) be a KKT point of P satisfying SSOC. Then \(\overline{x}\) is a strict local maximizer of P satisfying the following second order growth condition: with some \(\varepsilon , c >0\) it holds

$$\begin{aligned} f(\overline{x}) \ge f(x) + c \Vert x - \overline{x}\Vert ^2 \, \quad \forall x \in \Delta _n, \, \Vert x - \overline{x}\Vert < \varepsilon . \end{aligned}$$(8)

Moreover, \(\overline{x}\) is an isolated KKT point.

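The KKT and critical point conditions can be written in terms of the tensor A. Componentwise, condition (5) yields, using (3),

$$\begin{aligned} d\, A^i \cdot \overline{x}^{d-1} = \lambda - \mu _i, \qquad \overline{x}_i \mu _i = 0, \quad i \in N. \end{aligned}$$(9)

Multiplying with \(\overline{x}_i\) and summing up leads to

$$\begin{aligned} d\, A \cdot \overline{x}^d = \sum _{i=1}^n \overline{x}_i \, d\, A^i \cdot \overline{x}^{d-1} = \lambda \sum _{i=1}^n \overline{x}_i - \sum _{i=1}^n \overline{x}_i \mu _i = \lambda . \end{aligned}$$

By substituting \(\lambda = d\, A\cdot \overline{x}^d\) into (9) we obtain

$$\begin{aligned} \mu _i = d \, \bigl ( A \cdot \overline{x}^d - A^i \cdot \overline{x}^{d-1} \bigr ), \quad i \in N. \end{aligned}$$

So, the critical point condition for \(\overline{x}\) is equivalent to

$$\begin{aligned} A^i \cdot \overline{x}^{d-1} = A \cdot \overline{x}^d \quad {\text{for all }} i \in N {\text{ with }} \overline{x}_i > 0, \end{aligned}$$(10)

and such a point \(\overline{x}\) is a KKT point of P if in addition we have

$$\begin{aligned} A^i \cdot \overline{x}^{d-1} \le A \cdot \overline{x}^d \quad {\text{for all }} i \in N {\text{ with }} \overline{x}_i = 0. \end{aligned}$$(11)

Moreover, a critical point \(\overline{x}\) satisfies the SC condition (\(\mu _i \ne 0 \, \forall i\) such that \(\overline{x}_i =0\)) if and only if

$$\begin{aligned} A^i \cdot \overline{x}^{d-1} - A \cdot \overline{x}^d \ne 0 \quad {\text{for all }} i \in N {\text{ with }} \overline{x}_i = 0. \end{aligned}$$(12)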

Remark 1

Note that for any critical point \(\overline{x}\in \Delta _n\) of P satisfying \(\overline{x}>0\) (\(\overline{x}\) in the relative interior of \(\Delta _n\)) by definition (\(\overline{x}_i \mu _i=0\) for all i) the multiplier \(\mu\) (cf., (5)) must be zero. So, any critical point \(\overline{x}> 0\) must be a KKT point.
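The tensor conditions (10) to (12) can be checked numerically at a given feasible point. The following Python sketch does this under stated assumptions: the tensor is stored as a dictionary from 0-based index tuples to coefficients, the critical point condition is taken as \(A^i \cdot x^{d-1} = A \cdot x^d\) for \(x_i > 0\), the KKT condition adds \(A^i \cdot x^{d-1} \le A \cdot x^d\) for \(x_i = 0\), and SC requires a nonzero difference for \(x_i = 0\). The names `contract` and `classify`, the tolerance `tol`, and the test matrix are illustrative choices, not taken from the paper.

```python
def contract(A, vectors):
    """Sum of a[i1,...,id] * v1[i1] * ... * vd[id] over the stored entries."""
    total = 0.0
    for idx, coeff in A.items():
        term = coeff
        for v, i in zip(vectors, idx):
            term *= v[i]
        total += term
    return total


def classify(A, x, d, tol=1e-10):
    """Return (is_critical, is_kkt, has_sc) for a point x of the unit simplex."""
    n = len(x)
    fx = contract(A, [x] * d)  # A . x^d

    def unit(i):
        return [1.0 if j == i else 0.0 for j in range(n)]

    # slack[i] = A^i . x^(d-1) - A . x^d
    slack = [contract(A, [unit(i)] + [x] * (d - 1)) - fx for i in range(n)]
    is_critical = all(abs(slack[i]) <= tol for i in range(n) if x[i] > tol)
    is_kkt = is_critical and all(slack[i] <= tol for i in range(n) if x[i] <= tol)
    has_sc = is_critical and all(abs(slack[i]) > tol for i in range(n) if x[i] <= tol)
    return is_critical, is_kkt, has_sc


# Illustrative data (n = 2, d = 2): f(x) = x1^2 + 4 x1 x2 + x2^2.
A = {(0, 0): 1.0, (0, 1): 2.0, (1, 0): 2.0, (1, 1): 1.0}
print(classify(A, [1.0, 0.0], 2))  # (True, False, True): critical vertex, not KKT
print(classify(A, [0.5, 0.5], 2))  # (True, True, True): interior critical point
```

For the interior point the KKT and SC tests are vacuous, in line with Remark 1.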

Remark 2

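The assumption that a homogeneous polynomial f(x) of degree d,

$$\begin{aligned} f(x) = \sum _{1 \le i_1 \le i_2 \le \ldots \le i_d \le n} c_{i_1, \ldots , i_d} \, x_{i_1} x_{i_2} \cdots x_{i_d} \end{aligned}$$(13)

(\(c_{i_1, \ldots , i_d} \in {\mathbb{R}}\) arbitrary), is given in the form

$$\begin{aligned} f(x) = \sum _{i_1, \ldots , i_d = 1}^n a_{i_1, \ldots , i_d} \, x_{i_1} x_{i_2} \cdots x_{i_d} = A \cdot x^d \end{aligned}$$

with symmetric coefficients \(a_{i_1, \ldots , i_d}\), i.e., \(A=\bigl (a_{i_1, \ldots , i_d} \bigr )_{ 1\le i_1, \ldots , i_d \le n} \in {\mathcal{S}}^{n,d}\), is no restriction. Indeed, let a polynomial be given in the form (13). Take any of the index collections \(\{j_1, \ldots , j_d\} \subset N\) such that \(j_1 \le j_2 \le \ldots \le j_d\). Let \({\mathcal {S}} = {\mathcal {S}}(j_1,\ldots , j_d)\) be the set of all distinct permutations \(\sigma = \sigma (j_1,\ldots , j_d)\) of the numbers in \(\{j_1,\ldots , j_d\}\) (i.e., without repetition). Then by

$$\begin{aligned} a_{\sigma (j_1, \ldots , j_d)} := \frac{c_{j_1, \ldots , j_d}}{|{\mathcal {S}}|} \quad {\text{for all }} \sigma \in {\mathcal {S}} \end{aligned}$$

we have defined a symmetric tensor A such that \(f(x) = A \cdot x^d\).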
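The construction in Remark 2 can be sketched in a few lines of Python. The sketch assumes that each coefficient \(c_{j_1, \ldots , j_d}\) is spread evenly over all distinct rearrangements of its index tuple; the dictionary representation, the function names `symmetrize` and `evaluate`, and the sample cubic are illustrative and not part of the paper.

```python
from itertools import permutations


def symmetrize(c):
    """Spread each coefficient c[j1 <= ... <= jd] evenly over all distinct
    rearrangements of its index tuple, giving a symmetric tensor as a dict."""
    A = {}
    for idx, coeff in c.items():
        orderings = set(permutations(idx))  # distinct permutations only
        for sigma in orderings:
            A[sigma] = coeff / len(orderings)
    return A


def evaluate(T, x):
    """Sum of T[i1,...,id] * x[i1] * ... * x[id] over the stored entries."""
    total = 0.0
    for idx, coeff in T.items():
        term = coeff
        for i in idx:
            term *= x[i]
        total += term
    return total


# Illustrative cubic (n = 2, d = 3): f(x) = x1^3 + 3 x1^2 x2 + 6 x1 x2^2.
c = {(0, 0, 0): 1.0, (0, 0, 1): 3.0, (0, 1, 1): 6.0}
A = symmetrize(c)
x = [0.25, 0.75]
print(A[(0, 1, 0)], A[(1, 0, 1)])       # 1.0 2.0
print(evaluate(c, x), evaluate(A, x))   # the two evaluations agree
```

Both representations define the same polynomial; only the symmetric one fits the tensor calculus used in the rest of the paper.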

Remark 3

In this paper, we study problem P for homogeneous polynomials f(x). This is not a real restriction. Indeed, suppose we are given a non-homogeneous polynomial f(x) of degree d. We can then transform f(x) into homogeneous form as follows. By multiplying each monomial \(\prod _{i=1}^n x_i^{\beta _i}\) with \(\sum _{i=1}^n \beta _i <d\) appearing in f by the factor \(\bigl (\sum _{i=1}^n x_i \bigr )^{d - \sum _{i=1}^n \beta _i}\), f becomes a homogeneous polynomial of degree d, and in view of \(\sum _{i=1}^n x_i=1\) this does not change the function values of f on \(\Delta _n\).
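As a small worked illustration of this homogenization (the polynomial is chosen here for illustration only), take \(n=2\), \(d=2\) and \(f(x) = x_1^2 + x_2\). Only the monomial \(x_2\) has degree below 2, so it is multiplied by \((x_1+x_2)^{1}\):

$$\begin{aligned} \tilde{f}(x) = x_1^2 + x_2 (x_1 + x_2) = x_1^2 + x_1 x_2 + x_2^2. \end{aligned}$$

On \(\Delta _2\), where \(x_1 + x_2 = 1\), the homogeneous polynomial \(\tilde{f}\) takes the same values as f.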

We finally give some notation used throughout the paper. As usual, \(e_i, \, i \in N\), denote the standard basis vectors in \({\mathbb{R}}^n\), \(\Vert x\Vert\) is the Euclidean norm of \(x \in {\mathbb{R}}^n\), and for a tensor \(A \in {\mathcal{S}}^{n,d}\) we define the (Frobenius) norm \(\Vert A\Vert = \sqrt{A \cdot A}\). This tensor norm satisfies \(|A \cdot B| \le \Vert A\Vert \Vert B\Vert\) and for \(x \in \Delta _n\) we find \(\Vert x^d \Vert \le 1\).

3 Applications

Polynomial optimization over the simplex has numerous applications. In this section we describe two of them. For both we present numerical experiments in Sect. 5.

3.1 Evolutionarily stable strategies

The first application depends on the close relation between the maximization problem P and a model in evolutionary biology. For \(d=2\) the model goes back to Maynard Smith and Price [22] and for \(d > 2\) we refer to [7, 20].

Let us consider a population of individuals which differ in n distinct features (also called strategies):

- For \(x = (x_1, \ldots , x_n) \in \Delta _n\), the component \(x_i\) gives the fraction of the population having feature i. So, x gives the strategy (state) of the whole population.

- We are given a symmetric fitness tensor \(A \in {\mathcal{S}}^{n,d}\). The value \(A \cdot x^d\) represents the (mean) fitness of a population with strategy x.

In evolutionary biology special strategies called evolutionarily stable strategies (ESS) play an important role.

Definition 1

[ESS in biology] A point \({x \in \Delta _n}\) is called an ESS for \(A \in {\mathcal{S}}^{n,d}\) if for all \(y \in \Delta _n\), \(y \ne x\), the following conditions hold for the d-linear form F with \(f(x)= A \cdot x^d \equiv F(x, \ldots , x)\):

1. \(F(y, x , \ldots ,x) \le F(x,x, \ldots ,x)\)

2. and if \(F(y, x , \ldots ,x) = F(x,x, \ldots ,x)\) then \(F(y, y,x , \ldots ,x) \le F(y,x, \ldots ,x)\)

\(\vdots\)

d. and if \(F(y, x , \ldots ,x) = F(y,y,x, \ldots ,x)= \ldots = F(y, \ldots ,y,x)\) then \(F(y , \ldots , y ,y) < F(y, \ldots ,y, x)\).

If only the first relation \(F(y, x , \ldots ,x) \le F(x,x, \ldots ,x)\) for all \(y \in \Delta _n\) holds, the strategy x is called a symmetric Nash equilibrium (see, e.g., [1, Remark 3.1]).

The following relation is well-known, see, e.g., [1, Lemma 3.1, Lemma 3.2].

Lemma 2

Let \(A \in {\mathcal{S}}^{n,d}\) and \(x \in \Delta _n\) be given. Then x is an ESS for A if and only if x is a strict local maximizer of P. Moreover, x is a symmetric Nash equilibrium if and only if x is a KKT point of P.

3.2 Cliques in a d-graph

The optimization problem P is also connected with the maximum clique problem on graphs (\(d=2\)) and on hypergraphs (\(d > 2\)).

Definition 2

Let \(G=(N,E)\) be a hypergraph with set \(N=\{1,2,\ldots ,n\}\) of vertices and set \(E \subset P(N) \setminus \{\emptyset \}\) of (hyper) edges \(e \in E\). Here, P(N) is the power set of N (set of all subsets of N). For \(d \ge 2\) let \(P_d(N) \subset P(N)\) denote the set of all subsets of N with exactly d elements. A hypergraph G is called a d-graph if all edges \(e \in E\) are in \(P_d(N)\). A 2-graph is also called a graph. A subset C of N is a clique in the d-graph G if \(P_d(C) \subset E\). A clique C is called maximal if it is not contained in any other clique, and it is said to be a maximum clique if C has maximum cardinality among all cliques. The complement \(\overline{G}\) of a d-graph G is defined as the d-graph \(\overline{G}=(N,\overline{E})\) where \(\overline{E}=P_d(N) \setminus E\).

As we will see now, there is a close relation between the maximal/maximum clique problem in a d-graph and special problems P. For \(d=2\) (graphs) we refer the reader to [4, 18] and for \(d>2\) (hypergraphs) to [9].

Let us introduce the so-called Lagrangian \(L_{\overline{G}}\) of the complement \(\overline{G}\) of a d-graph G,

and for \(\tau >0\) the homogeneous polynomial of degree d,

Then consider the optimization problem:

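Define for a nonempty subset \(S \subset N\) the characteristic vector \(x^S \in \Delta _n\) by

$$\begin{aligned} x^S_i := \left\{ \begin{array}{ll} \frac{1}{|S|} &{} {\text{if }}\, i \in S\\ 0 &{} {\text{otherwise}} \end{array} \right. , \quad i \in N. \end{aligned}$$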

Then the following holds (see [9, Theorem 5] for a proof).

Theorem 1

Let \(G=(N,E)\) be a d-graph and let \(0< \tau \le \frac{1}{d(d-1)}\) (\(\tau < \frac{1}{d(d-1)}\) for \(d=2\)). Then \(x\in \Delta _n\) is a strict local (global) maximizer of (15) if and only if \(x=x^C\) is the characteristic vector of a maximal (maximum) clique C of G.
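The combinatorial objects in Definition 2 and Theorem 1 are easy to test on small instances. The Python sketch below checks the clique and maximality conditions and builds a characteristic vector, assuming the usual uniform form (weight \(1/|S|\) on the vertices of S, zero elsewhere). The function names, the 0-based vertex labels and the sample 3-graph are illustrative assumptions, not data from the paper.

```python
from itertools import combinations


def is_clique(C, edges, d):
    """C is a clique of the d-graph if every d-element subset of C is an edge."""
    return all(frozenset(s) in edges for s in combinations(sorted(C), d))


def is_maximal_clique(C, edges, d, n):
    """A clique is maximal if no single further vertex can be added to it."""
    if not is_clique(C, edges, d):
        return False
    return not any(
        is_clique(set(C) | {v}, edges, d) for v in range(n) if v not in C
    )


def characteristic_vector(S, n):
    """Uniform weight 1/|S| on the vertices in S, zero elsewhere."""
    return [1.0 / len(S) if i in S else 0.0 for i in range(n)]


# Illustrative 3-graph on 5 vertices (0-based labels).
edges = {
    frozenset(e)
    for e in [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3), (2, 3, 4)]
}
print(is_clique({0, 1, 2, 3}, edges, 3))             # True
print(is_maximal_clique({0, 1, 2, 3}, edges, 3, 5))  # True
print(characteristic_vector({0, 1, 2, 3}, 5))        # [0.25, 0.25, 0.25, 0.25, 0.0]
```

Testing single-vertex extensions is enough for maximality because every subset of a clique is again a clique.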

4 Numerical methods based on the replicator transformation

In this section, we are going to discuss two methods for computing local maximizers of P which will be based on the following so-called replicator transformation:

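For given \(A\in {\mathcal{S}}^{n,d}\) and \(x \in \Delta _n\) define the transformation

$$\begin{aligned} y(x) = (y_i(x))_{i \in N}, \qquad y_i(x) := x_i \, \frac{A^i \cdot x^{d-1}}{A \cdot x^d}, \quad i \in N. \end{aligned}$$(16)

We emphasize that to ensure that the image y(x) remains in \(\Delta _n\) we have to make sure that the values \(A \cdot x^d\) and \(A^i \cdot x^{d-1}\) remain positive. To do so, throughout the paper we assume

$$\begin{aligned} A > 0, \quad {\text{i.e.,}} \quad a_{i_1, \ldots , i_d} > 0 \quad {\text{for all }} 1 \le i_1, \ldots , i_d \le n. \end{aligned}$$

In Sect. 4.4 later on, we will see that this assumption is not a real restriction.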
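To make the transformation concrete, here is a minimal Python sketch. It assumes that (16) has the standard replicator form \(y_i(x) = x_i \, (A^i \cdot x^{d-1}) / (A \cdot x^d)\) and that the tensor is stored as a dictionary from 0-based index tuples to coefficients; the helper names and the sample tensor are hypothetical.

```python
def contract(A, vectors):
    """Sum of a[i1,...,id] * v1[i1] * ... * vd[id] over the stored entries."""
    total = 0.0
    for idx, coeff in A.items():
        term = coeff
        for v, i in zip(vectors, idx):
            term *= v[i]
        total += term
    return total


def replicator_map(A, x, d):
    """One application of y_i(x) = x_i * (A^i . x^(d-1)) / (A . x^d)."""
    n = len(x)
    fx = contract(A, [x] * d)  # A . x^d, positive when A > 0
    y = []
    for i in range(n):
        e_i = [1.0 if j == i else 0.0 for j in range(n)]
        # Putting e_i into the first slot yields A^i . x^(d-1).
        y.append(x[i] * contract(A, [e_i] + [x] * (d - 1)) / fx)
    return y


# Illustrative positive symmetric tensor (n = 2, d = 3):
# f(x) = x1^3 + 1.5 x1^2 x2 + 1.5 x1 x2^2 + 2 x2^3.
A = {
    (0, 0, 0): 1.0,
    (0, 0, 1): 0.5, (0, 1, 0): 0.5, (1, 0, 0): 0.5,
    (0, 1, 1): 0.5, (1, 0, 1): 0.5, (1, 1, 0): 0.5,
    (1, 1, 1): 2.0,
}
x = [0.5, 0.5]
y = replicator_map(A, x, 3)
print(y, sum(y))                                    # y stays on the simplex
print(contract(A, [x] * 3), contract(A, [y] * 3))   # f does not decrease
```

Here \(y(x) = (5/12, 7/12)\) and the objective value rises from 0.75 to roughly 0.834, which matches the monotonicity property discussed in Sect. 4.1.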

4.1 Properties of the replicator transformation y(x)

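As usual, a point \(\overline{x}\in \Delta _n\) is called a fixed point of the transformation y in (16) if \(y(\overline{x}) = \overline{x}\) holds or equivalently if

$$\begin{aligned} \overline{x}_i \, \bigl ( A^i \cdot \overline{x}^{d-1} - A \cdot \overline{x}^d \bigr ) = 0 \quad {\text{for all }} i \in N. \end{aligned}$$

A comparison with (10) reveals the following key connection between the transformation y and the maximization problem P. For \(\overline{x}\in \Delta _n\) it holds,

$$\begin{aligned} \overline{x} {\text{ is a fixed point of }} y \quad \Longleftrightarrow \quad \overline{x} {\text{ is a critical point of P.}} \end{aligned}$$(19)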

It is well-known that the transformation y(x) enjoys a monotonicity property.

Lemma 3

(Monotonicity) For any \(x \in \Delta _n\) and y(x) given by (16) it holds for \(f(x)=A\cdot x^d\),

$$\begin{aligned} f(y(x)) \ge f(x), \end{aligned}$$

with equality if and only if \(y(x)=x\) (i.e., x is a fixed point of y).

Proof

For a proof we refer to [2, 14]. The proofs are valid for general (non-symmetric) tensors. \(\square\)

In [17] a stronger version of the monotonicity result has been proven for \(d=2\):

It is not difficult to show that this formula remains true for \(d>2\).

The monotonicity behavior of y(x) gives rise to the following replicator iteration for solving the maximization problem P: Start with some \(x^{(0)} \in \Delta _n\) and iterate (cf., (16))

$$\begin{aligned} x^{(k+1)} = y(x^{(k)}), \quad k = 0, 1, 2, \ldots \end{aligned}$$(20)

In algorithmic form this iteration leads to Method 1.
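A minimal Python sketch of such an iteration follows. It is not the paper's Method 1 verbatim: the stopping rule (largest componentwise change below `tol`), the iteration cap `max_iter`, the dictionary tensor format and the sample tensor are assumptions made for illustration, and the map is taken in the standard replicator form \(y_i(x) = x_i \, (A^i \cdot x^{d-1}) / (A \cdot x^d)\).

```python
def contract(A, vectors):
    """Sum of a[i1,...,id] * v1[i1] * ... * vd[id] over the stored entries."""
    total = 0.0
    for idx, coeff in A.items():
        term = coeff
        for v, i in zip(vectors, idx):
            term *= v[i]
        total += term
    return total


def replicator_map(A, x, d):
    """y_i(x) = x_i * (A^i . x^(d-1)) / (A . x^d)."""
    n = len(x)
    fx = contract(A, [x] * d)
    y = []
    for i in range(n):
        e_i = [1.0 if j == i else 0.0 for j in range(n)]
        y.append(x[i] * contract(A, [e_i] + [x] * (d - 1)) / fx)
    return y


def replicator_iteration(A, x0, d, tol=1e-12, max_iter=10000):
    """Iterate x <- y(x) until the largest componentwise change is <= tol."""
    x = list(x0)
    for k in range(1, max_iter + 1):
        y = replicator_map(A, x, d)
        step = max(abs(yi - xi) for yi, xi in zip(y, x))
        x = y
        if step <= tol:
            return x, k
    return x, max_iter


# Illustrative positive symmetric tensor (n = 2, d = 3):
# f(x) = x1^3 + 1.5 x1^2 x2 + 1.5 x1 x2^2 + 2 x2^3.
A = {
    (0, 0, 0): 1.0,
    (0, 0, 1): 0.5, (0, 1, 0): 0.5, (1, 0, 0): 0.5,
    (0, 1, 1): 0.5, (1, 0, 1): 0.5, (1, 1, 0): 0.5,
    (1, 1, 1): 2.0,
}
x_bar, iters = replicator_iteration(A, [0.5, 0.5], 3)
print(x_bar, iters)  # the iterates approach the vertex (0, 1)
```

A small step does not by itself certify a KKT point; the limit should still be checked against (11).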

By a simple compactness and continuity argument combined with Lemma 3, we obtain the following result (see [16, Proposition 1]).

Lemma 4

Any sequence (20) has at least one limit point, and each limit point \(\overline{x}\) of this iteration is a fixed point of y, i.e., a critical point of P (cf., (19)).

Unfortunately, limit points \(\overline{x}\) of (20) (i.e., critical points of P) need not satisfy the necessary KKT condition for a local maximizer of P (see Lemma 1(a)). If however the iterates \(x^{(k)}\) in (20) converge to a fixed point \(\hat{x}\) of y which is not a KKT point of P (cf., also Remark 1), then the following lemma could be used to jump away from \(\hat{x}\) to better points.

Lemma 5

Let \(\hat{x}\in \Delta _n\) be a fixed point of y but not a KKT point of P, so that by (11) there is some index \(j_0\) with \(\hat{x}_{j_0} =0\) and \(A^{j_0} \cdot \hat{x}^{d-1}> A \cdot \hat{x}^{d}\). Then for all \(t\ge 0\) the points \(\frac{\hat{x}+ t e_{j_0}}{1+t}\) are in \(\Delta _n\) and satisfy

$$\begin{aligned} f\Bigl ( \frac{\hat{x}+ t e_{j_0}}{1+t} \Bigr ) > f(\hat{x}) \quad {\text{for all sufficiently small }} t > 0. \end{aligned}$$

Proof

Since \(e^T \frac{\hat{x}+ t e_{j_0}}{1+t} =\frac{1}{1+t} ( e^T\hat{x}+ t) =1\) and \(\frac{\hat{x}+ t e_{j_0}}{1+t} \ge 0\) for all \(t \ge 0\), it follows \(\frac{\hat{x}+ t e_{j_0}}{1+t} \in \Delta _n\). To prove the second statement it suffices to show that the differentiable function \(g(t)= f\bigl ( \frac{\hat{x}+ t e_{j_0}}{1+t} \bigr )\) satisfies \(g'(0) > 0\). By our assumptions we actually obtain

$$\begin{aligned} g'(0) = \nabla f(\hat{x})^T (e_{j_0} - \hat{x}) = d \, \bigl ( A^{j_0} \cdot \hat{x}^{d-1} - A \cdot \hat{x}^d \bigr ) > 0. \end{aligned}$$

\(\square\)
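The escape step of Lemma 5 can be sketched as follows. The Python code searches for an index \(j_0\) with \(\hat{x}_{j_0} = 0\) and \(A^{j_0} \cdot \hat{x}^{d-1} > A \cdot \hat{x}^{d}\) and then halves the trial step t until the objective increases. The halving rule, the initial value `t = 0.1`, the tolerance and the sample matrix are illustrative assumptions and not part of the lemma.

```python
def contract(A, vectors):
    """Sum of a[i1,...,id] * v1[i1] * ... * vd[id] over the stored entries."""
    total = 0.0
    for idx, coeff in A.items():
        term = coeff
        for v, i in zip(vectors, idx):
            term *= v[i]
        total += term
    return total


def escape_fixed_point(A, x_hat, d, t=0.1, tol=1e-10):
    """Return a better simplex point if x_hat violates the KKT condition
    at some zero component, and None otherwise."""
    n = len(x_hat)
    f_hat = contract(A, [x_hat] * d)
    for j in range(n):
        if x_hat[j] > tol:
            continue
        e_j = [1.0 if i == j else 0.0 for i in range(n)]
        if contract(A, [e_j] + [x_hat] * (d - 1)) > f_hat + tol:
            # g'(0) > 0 guarantees an increase for small t; halve t until it shows.
            while t > 1e-16:
                x_new = [(x_hat[i] + t * e_j[i]) / (1.0 + t) for i in range(n)]
                if contract(A, [x_new] * d) > f_hat:
                    return x_new
                t *= 0.5
    return None


# Illustrative data (n = 2, d = 2): f(x) = x1^2 + 4 x1 x2 + x2^2.
A = {(0, 0): 1.0, (0, 1): 2.0, (1, 0): 2.0, (1, 1): 1.0}
x_new = escape_fixed_point(A, [1.0, 0.0], 2)
print(x_new, contract(A, [x_new] * 2))  # objective rises above f(1, 0) = 1
```

In this example the vertex (1, 0) is a fixed point of y with \(A^{2} \cdot \hat{x} = 2 > 1 = A \cdot \hat{x}^2\), so a single trial step already improves the objective.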

4.2 Convergence properties of the replicator iteration

Let A and thus \(f(x) = A \cdot x^d\) be given, and consider for some starting point \(x^{(0)}\) the replicator iterates \(x^{(k)}\) in (20). Let \(S=S(x^{(0)})\) denote the set of all limit points of the sequence \(x^{(k)}\). Recall that by Lemma 4 the set S is nonempty and is contained in the set of all fixed points of the mapping y (cf., (16)).

Lemma 6

The limit point set S is closed (compact), connected, and all points \(\overline{x}\in S\) have the same value \(f(\overline{x}) = v_S\).

Proof

A proof can be found, e.g., in [16, Proposition 1], where the case \(d=2\) is considered. The proof is also valid for the general case \(d \ge 2\). \(\square\)

The following two questions are more difficult to answer.

- Is the replicator iteration (20) convergent for each starting point \(x^{(0)} \in \Delta _n\), i.e., is \(S=S(x^{(0)}) = \{ \overline{x}\}\) a singleton?

- If \(x^{(k)}\) converges to (a unique) \(\overline{x}\), how fast is this convergence, i.e., how fast does \(\Vert x^{(k)} - \overline{x}\Vert\) go to zero?

For \(d=2\) the first question has been answered positively in [17, Theorem 3.2] and in [16, Convergence Theorem 2]. However, a generalisation of the proofs to the case \(d>2\) seems to be difficult. The arguments in these proofs essentially make use of the fact that (for \(d=2\)) the function f(x) is quadratic.

For \(d=2\) also the second question has been answered in [17] as follows. Recall that the convergence of \(x^{(k)} \rightarrow \overline{x}\) is said to be linear, if with some constants \(C > 0\) and q, \(0 \le q < 1\), we have

$$\begin{aligned} \Vert x^{(k)} - \overline{x}\Vert \le C \, q^k \qquad \forall k \in {\mathbb N}. \end{aligned}$$

The constant q is called the linear convergence factor.

Theorem 2

Let \(d=2\) and let the iterates \(x^{(k)}\) in (20) converge to \(\overline{x}\). (Recall that in this case \(\overline{x}\) is a fixed point of y and a critical point of P, see Lemma 4). Then:

(a) With some constant \(C_1 > 0\) it holds

$$\begin{aligned} \Vert x^{(k)} - \overline{x}\Vert \le C_1 \cdot \frac{1}{\sqrt{k}} \qquad \forall k \in {\mathbb N}. \end{aligned}$$

(b) The convergence is linear if and only if the critical point \(\overline{x}\) of P satisfies the SC condition

$$\begin{aligned} A^i \cdot \overline{x}^{d-1}- A\cdot \overline{x}^d \ne 0 \, \quad {\text{ for all }} i \in N {\text{ with }} \overline{x}_i = 0 {\text{ and }}x_i^{(0)} >0. \end{aligned}$$(21)

Proof

For (a) we refer to [17, Theorem 3.3] and for (b) to [17, Theorem 3.4]. \(\square\)

Again, we did not see a way to generalize the results of Theorem 2 to the case \(d>2\) (except for the only if direction in Theorem 2(b), cf., Theorem 4 below). The reason is that the proof of the preceding theorem is based on Lemmas 2.3 and 2.4 in [17], which again essentially depend on the fact that for \(d=2\) the function f(x) is a quadratic polynomial.

Notice that if the SC condition (21) is not fulfilled the convergence must be sublinear and according to Theorem 2(a) the convergence might be extremely slow.
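A small experiment illustrates the sublinear regime for \(d=2\). In the Python sketch below the matrix is chosen (as an illustrative assumption, not an example from the paper) such that \(f(x) = 1 - \frac{1}{2} x_2^2\) on \(\Delta _2\), so \(\overline{x}=(1,0)\) is the maximizer but \(A^2 \cdot \overline{x} = 1 = A \cdot \overline{x}^2\), i.e., (21) is violated. The replicator form \(y_i(x) = x_i (Ax)_i / (x^T A x)\) is assumed.

```python
def replicator_step(A, x):
    """Replicator map for d = 2: y_i = x_i * (A x)_i / (x^T A x)."""
    n = len(x)
    Ax = [sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
    fx = sum(x[i] * Ax[i] for i in range(n))
    return [x[i] * Ax[i] / fx for i in range(n)]


# f(x) = x1^2 + 2 x1 x2 + 0.5 x2^2 = 1 - 0.5 x2^2 on the simplex.
A = [[1.0, 1.0], [1.0, 0.5]]
x = [0.5, 0.5]
quotient = None
for k in range(1, 1001):
    x_next = replicator_step(A, x)
    # On the simplex ||x - (1, 0)|| = sqrt(2) * x2, so this is the error quotient.
    quotient = x_next[1] / x[1]
    x = x_next

print(quotient)   # close to 1: no linear convergence factor q < 1
print(k * x[1])   # roughly 2: the error decays only like 1/k
```

The error quotient approaches 1 and \(k \, x_2^{(k)}\) stays near 2, which is consistent with the bound in Theorem 2(a) and with the failure of linear convergence in Theorem 2(b).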

Recall that for \(d=2\) (cf., [17, Theorem 3.2]), any iteration (20) converges to a (unique) point \(\overline{x}\). Since we did not succeed in a generalization of this statement to \(d > 2\), we will at least give a partial convergence result for the general case \(d \ge 2\).

Theorem 3

For any \(d \ge 2\) the following holds.

(a) Let the iterates \(x^{(k)}\) given by (20) have a limit point \(\overline{x}\) such that \(\overline{x}\) is a KKT point with corresponding (unique) multiplier \(0 \le \mu \in {\mathbb{R}}^n\), satisfying the second order condition SSOC (cf., (7)). Then the sequence \(x^{(k)}\) converges to \(\overline{x}\), i.e., the point \(\overline{x}\) is the only limit point.

(b) Suppose \(\overline{x}\) is a KKT point of P satisfying the second order condition SSOC, as well as the SC condition (12). Then there is some \(\delta _1 >0\) such that the following is true: The point \(\overline{x}\) is the only fixed point \(\hat{x}\in \Delta _n\) of y(x) with \(\Vert \hat{x}- \overline{x}\Vert < \delta _1\), and for any starting point \(x^{(0)} \in \Delta _n\) with \(\Vert x^{(0)} - \overline{x}\Vert < \delta _1\) the replicator iterates (20) converge to \(\overline{x}\).

Proof

(a) By the assumptions the limit point \(\overline{x}\) (see Lemma 1(b)) satisfies the relation (8). So let \(\varepsilon>0, c > 0\) be given as in (8). The mapping y(x) is a continuous function of x (recall that by assumption we have \(A \cdot x^d \ge m >0, \, \forall x \in \Delta _n\)). So, in view of \(y(\overline{x}) - \overline{x}=0\), given \(\varepsilon\), we can choose some \(\delta _0 > 0\) such that

Clearly, we can choose \(\delta _0 < \frac{\varepsilon }{2}\). For any \(\delta\), \(0< \delta < \delta _0\), there is some \(\delta _1\) such that

Again take \(\delta _1 < \delta\). Since \(\overline{x}\) is a limit point of \(x^{(k)}\) we can find an iterate \(x^{(k_\delta )}\) satisfying \(\Vert ~x^{(k_\delta )}~-~\overline{x}~\Vert ~<~\delta _1\). By (22) we then have

In view of (8) and the monotonicity condition \(f(y(x^{(k_\delta )} )) \ge f(x^{(k_\delta )} )\) it follows

i.e., \(\Vert x^{(k_\delta +1) } - \overline{x}\Vert < \delta\). We can repeat the same arguments and obtain \(\Vert y(x^{(k_\delta +1)} )- \overline{x}\Vert < \varepsilon\), \(\Vert x^{(k_\delta + 2)} - \overline{x}\Vert ^2 \le \delta ^2\), and thus for all \(k \ge k_\delta\) the iterates \(x^{(k)}\) stay in the neighborhood \(\{x \in \Delta _n \mid \Vert x - \overline{x}\Vert < \delta \}\). Since \(\delta\) can be chosen arbitrarily small it follows that the sequence \(x^{(k)}\) must converge to \(\overline{x}\).

(b) By continuity, using SC, there exists \(\delta _2 >0\) such that (cf., (11), (12))

It now follows that if \(\hat{x}\in \Delta _n\), \(\Vert \hat{x}- \overline{x}\Vert < \delta _2\), is a fixed point of y, then it must be a KKT point of P. Indeed, by (23) we obtain \(\hat{x}_i =0 \Rightarrow \overline{x}_i =0\) and thus by the first relation of (23) we find

Consequently, in view of (11) \(\hat{x}\) is a KKT point. According to the last statement of Lemma 1(b), by making \(\delta _2\) smaller if necessary, \(\hat{x}\) must coincide with \(\overline{x}\), so that \(\overline{x}\) is the only fixed point in a \(\delta _2\)-neighborhood of \(\overline{x}\).

To prove the convergence statement recall the arguments in the proof of part (a) and choose \(\delta\) in (a) smaller than \(\delta _2\). Then if we take a starting point \(x^{(0)} \in \Delta _n\) satisfying \(\Vert x^{(0)} - \overline{x}\Vert < \delta _1\) (with \(\delta _1\) in (a)) by the arguments above, for all iterates \(x^{(k)}\) the relation

holds. To show that \(x^{(k)}\) converges to \(\overline{x}\), suppose to the contrary that the sequence \(x^{(k)}\) has a limit point \(\hat{x}, \, \hat{x}\ne \overline{x}\). By (24) we have \(\Vert \hat{x}- \overline{x}\Vert \le \delta < \delta _2\) in contradiction to the fact that \(\overline{x}\) is the only fixed point satisfying \(\Vert \hat{x}- \overline{x}\Vert < \delta _2\). \(\square\)

Fortunately, the proof of the only if part of the statement in Theorem 2(b) remains valid for \(d>2\).

Theorem 4

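For all \(d \ge 2\) the following holds. Let the sequence \(x^{(k)}\) given by (20) converge linearly to the fixed point \(\overline{x}\) of y. Then the following SC conditions must hold:

$$\begin{aligned} A^i \cdot \overline{x}^{d-1} - A\cdot \overline{x}^d < 0 \, \quad {\text{ for all }} i \in N {\text{ with }} \overline{x}_i = 0 {\text{ and }}x_i^{(0)} >0. \end{aligned}$$(25)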

Proof

For completeness we reproduce the proof from [17, Theorem 3.3]. Notice first that by definition (cf., (16)) the relation \(x^{(0)}_i=0\) implies \(x^{(k)}_i =0\) for all k and \(\overline{x}_i=0\). Moreover, by (20) it follows

and thus

By [11, Proposition 2.26] we have

Now let \(x^{(k)}\) converge linearly to \(\overline{x}\), i.e., with some \(C>0\), \(0< q<1\), it holds componentwise

So for any index i such that \(x^{(0)}_i >0\), \(\overline{x}_i=0\) (the nontrivial case), this implies

Consequently using (26) we obtain

\(\square\)

We emphasize that the SC condition (25) in Theorem 4 is slightly stronger than the SC condition (21) in Theorem 2(b). The former in particular implies that if we start with \(x^{(0)} > 0\) then linear convergence can only occur if \(x^{(k)}\) converges to a KKT point \(\overline{x}\). Recall (cf., Remark 1) that any fixed point \(\overline{x}\) of y satisfying \(\overline{x}>0\) must be a KKT point.

4.3 Speeding up the convergence by line maximization

Notice that by Theorem 4, linear convergence of the replicator iteration (20) to a fixed point \(\overline{x}\) of y can only happen if the SC condition (25) is met. Keep in mind that this SC condition trivially holds for a fixed point \(\overline{x}>0\) (i.e., \(\overline{x}\) is in the relative interior of \(\Delta _n\)). In other words, for a fixed point \(\overline{x}\) where (25) is not fulfilled, a convergence to this point must be sublinear. According to Theorem 2(a) the sublinear convergence might be extremely slow.

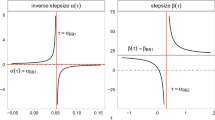

On the other hand, even when for larger d (say \(d>4\)) linear convergence occurs, the linear convergence factor \(q<1\) might be very close to 1 (see numerical experiments below).

So we may be interested in speeding up the convergence by an appropriate ascent method using (some sort of) line maximization. Here, too, the transformation y(x) can be used. Indeed, it turns out that the vector \(s= y(x)-x\) is a direction of growth for f.

Lemma 7

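For all \(x \in \Delta _n\) it holds for y(x) in (16),

$$\begin{aligned} \nabla f(x)^T (y(x) - x) \ge 0, \quad {\text{with equality if and only if }} y(x) = x. \end{aligned}$$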

Proof

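Using \(\sum _i x_i [ A^i \cdot x^{d-1}] = A \cdot x^d\), we find for \(x \in \Delta _n\),

$$\begin{aligned} \nabla f(x)^T (y(x)-x) = d \sum _{i=1}^n [A^i \cdot x^{d-1}] \Bigl ( x_i \frac{A^i \cdot x^{d-1}}{A \cdot x^d} - x_i \Bigr ) = \frac{d}{A \cdot x^d} \Bigl ( \sum _{i=1}^n x_i [A^i \cdot x^{d-1}]^2 - (A \cdot x^d)^2 \Bigr ). \end{aligned}$$

So \(\nabla f(x)^T (y(x)-x) \ge 0\) holds iff we have

$$\begin{aligned} \sum _{i=1}^n x_i [A^i \cdot x^{d-1}]^2 \ge \Bigl ( \sum _{i=1}^n x_i [A^i \cdot x^{d-1}] \Bigr )^2. \end{aligned}$$

The convexity of the real valued function \(h(t) = t^2\) yields the inequality

$$\begin{aligned} \sum _{i=1}^n x_i t_i^2 \ge \Bigl ( \sum _{i=1}^n x_i t_i \Bigr )^2 \quad {\text{for all }} x \in \Delta _n, \, t \in {\mathbb{R}}^n, \end{aligned}$$

with equality iff for all \(x_i > 0\) the values \(t_i = \lambda\) are the same. So taking \(t_i: = A^i \cdot x^{d-1}\) we have shown that \(\nabla f(x)^T (y(x)-x) \ge 0 \,\) holds, with equality iff for all \(x_i > 0\) the values \(A^i \cdot x^{d-1} = \lambda\) are the same. We finally obtain for \(\lambda\) a value

$$\begin{aligned} A \cdot x^d = \sum _{i=1}^n x_i [A^i \cdot x^{d-1}] = \lambda \sum _{i=1}^n x_i = \lambda , \end{aligned}$$

so that equality holds exactly if \(A^i \cdot x^{d-1} = A \cdot x^d\) for all \(x_i > 0\), i.e., if \(y(x) = x\). This proves the statement of the lemma. \(\square\)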

The preceding lemma suggests the following ascent method for solving P.

It can easily be seen that for the sequence \(x^{(k)}\) generated by Method 2 a result as in Lemma 4 remains true. Lemma 6 also remains valid for the set S of limit points of such a sequence \(x^{(k)}\), except for the connectedness of S. The reason is that the "pure" line maximization of Method 2 allows the iterates \(x^{(k)}\) to jump (infinitely many times) between different (unconnected) fixed points \(\overline{x}, \hat{x}\) of S. In our numerical experiments, however, we never observed such an event. Zigzagging between different fixed points could be prevented by an appropriate step-length control in the line maximization.

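Efficient implementation of the line maximization step (3): We add some remarks on an efficient implementation of the line maximization step (3) of Method 2. Recall that by Lemma 3 we have for \(x=x^{(k)}\) (since \(y(x^{(k)}) \ne x^{(k)}\))

$$\begin{aligned} f(y(x)) > f(x). \end{aligned}$$

To find the optimal step-size t we have to solve the line maximization problem (with \(x = x^{(k)}\))

$$\begin{aligned} \max _{t \ge 0} \, f\bigl ( x + t (y(x) - x) \bigr ) \quad {\text{s.t.}} \quad x + t (y(x)-x) \in \Delta _n. \end{aligned}$$(27)

It is easily seen that with \(f(x) \equiv F(x, \ldots , x)\) (a d-linear form) we have (\(y=y(x)\))

$$\begin{aligned} f\bigl ( x + t (y - x) \bigr ) = \sum _{i=0}^d \alpha _i t^i, \qquad \alpha _i := \binom{d}{i} F(\underbrace{y-x, \ldots , y-x}_{i}, \underbrace{x, \ldots , x}_{d-i}). \end{aligned}$$(28)

The condition \(x+t(y(x)-x) \in \Delta _n, \, t\ge 0\), leads to the restriction

$$\begin{aligned} 0 \le t \le t^* := \min \Bigl \{ \frac{x_i}{x_i - y_i(x)} \, \Big | \, i \in N, \, y_i(x) < x_i \Bigr \}. \end{aligned}$$

So we have to solve the line maximization problem

$$\begin{aligned} \max _{0 \le t \le t^*} \, g(t) := \sum _{i=0}^d \alpha _i t^i \end{aligned}$$

with a polynomial g(t) of degree d. Notice that in view of formula (28) for x close to a fixed point \(\overline{x}\) of y the coefficients \(\alpha _i, \, 1 \le i \le d\), tend to zero according to (recall \(y(\overline{x})-\overline{x}=0\))

$$\begin{aligned} \alpha _i = O\bigl ( \Vert y(x) - x \Vert ^i \bigr ), \quad i = 1, \ldots , d. \end{aligned}$$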

Consequently, for \(x \approx \overline{x}\) the coefficients \(\alpha _i\), \(i \ge 3\), will only play a minor role in the line maximization of g(t) and we propose the following efficient procedure.

Approximate line maximization step (\(3^{\prime }\)) in Method 2:

- Only compute the coefficients \(\alpha _1, \alpha _2\) (\(\alpha _1 >0\) by Lemma 7), and consider the quadratic approximation \(\tilde{g}(t)=\alpha _0 + \alpha _1t + \alpha _2 t^2\) of g(t).

- Compute the solution \(\overline{t} = \frac{- \alpha _1}{2 \alpha _2}\) of \(\tilde{g}'(t)=0\), and take as new iterate \(x^{(k+1)}\) on the line segment \(\bigl \{ x^{(k)} + t (y(x^{(k)}) - x^{(k)}) \mid t \in (0, t^*] \bigr \}\) the point

$$\begin{aligned} x^{(k+1)} = \left\{ \begin{array}{ll} x^{(k)} + \overline{t} (y(x^{(k)}) - x^{(k)}) &{} {\text{if }}\, 0 < \overline{t} \le t^*\\ \arg \max \bigl \{ f(y( x^{(k)})),\, f( x^{(k)} + {t^*} (y(x^{(k)}) - x^{(k)})) \bigr \}&{} {\text{otherwise}} \end{array} \right. . \end{aligned}$$

We emphasize that for solving this approximate line maximization problem we only have to compute the coefficients \(\alpha _1, \alpha _2\) of g(t). This can be done efficiently, requiring (roughly) the computation time of one function evaluation of f.
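The approximate step can be sketched in Python as follows. The sketch assumes the standard replicator form of y(x), computes \(\alpha _1 = d\, F(s, x, \ldots , x)\) and \(\alpha _2 = \binom{d}{2} F(s, s, x, \ldots , x)\) for \(s = y(x) - x\) by direct contraction, and takes \(t^*\) as the largest step that keeps the point in \(\Delta _n\). The dictionary tensor format, the function names, the clamping of tiny negative rounding errors and the test matrix are illustrative assumptions; the sketch requires \(d \ge 2\).

```python
def contract(A, vectors):
    """Sum of a[i1,...,id] * v1[i1] * ... * vd[id] over the stored entries."""
    total = 0.0
    for idx, coeff in A.items():
        term = coeff
        for v, i in zip(vectors, idx):
            term *= v[i]
        total += term
    return total


def approx_line_step(A, x, d):
    """One ascent step along s = y(x) - x using the quadratic model of g(t)."""
    n = len(x)

    def f(z):
        return contract(A, [z] * d)

    def unit(i):
        return [1.0 if j == i else 0.0 for j in range(n)]

    fx = f(x)
    y = [x[i] * contract(A, [unit(i)] + [x] * (d - 1)) / fx for i in range(n)]
    s = [y[i] - x[i] for i in range(n)]
    if all(si == 0.0 for si in s):
        return list(x)  # x is already a fixed point of y

    alpha1 = d * contract(A, [s] + [x] * (d - 1))
    alpha2 = d * (d - 1) / 2.0 * contract(A, [s, s] + [x] * (d - 2))
    # Largest feasible step: some component with s_i < 0 reaches zero.
    t_star = min(x[i] / -s[i] for i in range(n) if s[i] < 0.0)

    if alpha2 < 0.0:
        t_bar = -alpha1 / (2.0 * alpha2)
        if 0.0 < t_bar <= t_star:
            return [x[i] + t_bar * s[i] for i in range(n)]
    # Otherwise compare the replicator point with the boundary point.
    boundary = [max(0.0, x[i] + t_star * s[i]) for i in range(n)]
    return y if f(y) >= f(boundary) else boundary


# Illustrative data (n = 2, d = 2): f(x) = x1^2 + 4 x1 x2 + x2^2,
# maximized on the simplex at (0.5, 0.5).
A = {(0, 0): 1.0, (0, 1): 2.0, (1, 0): 2.0, (1, 1): 1.0}
x_new = approx_line_step(A, [0.9, 0.1], 2)
print(x_new)  # approximately [0.5, 0.5]
```

For \(d=2\) the quadratic model coincides with g(t), so in this example a single step reaches the maximizer; for \(d>2\) the step is only an approximation of the exact line maximization.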

Remark 4

The line maximization (27) is particularly easy for \(d=2\). Then (as a simple exercise) we can show: the optimal step-size \(t_x\) in (27) is given by

4.4 Dependence of the replicator iteration on a transformation \(A \rightarrow A + \alpha E\)

We have to recall that the property

$$\begin{aligned} y(x) \in \Delta _n \quad {\text{for all }} x \in \Delta _n \end{aligned}$$

and the monotonicity \(f(y(x)) \ge f(x)\) for \(x \in \Delta _n\) depend on the assumption \(f(x)=A \cdot x^d >0\) and \(\frac{\partial }{\partial x_i} f(x) = d\, A^i \cdot x^{d-1} \ge 0 \, \forall i\). We have ensured these conditions by assuming \(A > 0\), i.e., all elements of the tensor A are positive.

In case A has negative elements we can force positivity by applying a transformation \(A \rightarrow A+ \alpha E\), where \(E \in {\mathcal{S}}^{n,d}\) is the tensor with all elements equal to 1, and \(\alpha \in {\mathbb{R}}\) is chosen such that \(A+ \alpha E >0\) holds. So instead of \(f(x)= A \cdot x^d\) we consider the function \(f^\alpha (x):= (A+ \alpha E)\cdot x^d\). Using \(E\cdot x^d =1\) for \(x \in \Delta _n\), we obtain the identities

$$\begin{aligned} f^\alpha (x) = A \cdot x^d + \alpha , \qquad (A + \alpha E)^i \cdot x^{d-1} = A^i \cdot x^{d-1} + \alpha , \quad i \in N, \quad x \in \Delta _n. \end{aligned}$$

Fortunately under this transformation the fixed points of y(x) remain the same.

Lemma 8

Under the transformation \(f(x) \rightarrow f^\alpha (x)\), \(\alpha \in {\mathbb{R}}\), the fixed points of y and the KKT points of P, and thus the maximizers of P, remain unchanged.

Proof

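Consider the transformation \(f(x) \rightarrow f^\alpha (x)\) and the corresponding transformation \(y(x) \rightarrow y^\alpha (x)\) where \(y^\alpha (x)\) is the vector with components

$$\begin{aligned} y^\alpha _i(x) = x_i \, \frac{(A + \alpha E)^i \cdot x^{d-1}}{(A+ \alpha E) \cdot x^d} = x_i \, \frac{A^i \cdot x^{d-1} + \alpha }{A \cdot x^d + \alpha }, \quad i \in N. \end{aligned}$$

So the fixed point relation \(y^\alpha (x)=x\), \(x \in \Delta _n\), is equivalent to

$$\begin{aligned} x_i \, \bigl ( A^i \cdot x^{d-1} + \alpha \bigr ) = x_i \, \bigl ( A \cdot x^d + \alpha \bigr ), \quad {\text{i.e.,}} \quad x_i \, \bigl ( A^i \cdot x^{d-1} - A \cdot x^d \bigr ) = 0, \quad i \in N, \end{aligned}$$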

which are the fixed point relations for y(x). The same is true for the additional KKT conditions (cf., (11)). \(\square\)

Note that formally we have \(f = f^0\) and \(y=y^0\). Evidently, all results in the preceding subsections remain valid for any function \(f^\alpha , \, y^\alpha\), as long as \((A+ \alpha E) > 0\) is satisfied. However the iterates \(x^{(k+1)}= y^\alpha (x^{(k)})\) of the replicator dynamics (20) clearly depend on \(\alpha\). So starting at the same point \(x^{(0)}\) the limit points of these iterates and the speed of convergence may strongly depend on the choice of \(\alpha\). We give an illustrative example.

Example 1

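For \(n=d=2\) consider the function \(f^{\alpha }(x)= x_1^2 +x_2^2 + x_1 x_2 +\alpha\) and iteration \(x^{(k+1)}= y^\alpha (x^{(k)})\), where

$$\begin{aligned} y^\alpha (x) = \frac{1}{x_1^2 + x_2^2 + x_1 x_2 + \alpha } \begin{pmatrix} x_1 \bigl ( x_1 + \frac{1}{2} x_2 + \alpha \bigr ) \\ x_2 \bigl ( x_2 + \frac{1}{2} x_1 + \alpha \bigr ) \end{pmatrix}, \end{aligned}$$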

which has a (repelling) fixed point (minimizer) at (1/2, 1/2) and two maximizers at \(\overline{x}=(1,0)\) and \(\tilde{x} = (0,1)\).

Starting from \(x^{(0)} = (0.55,0.45)\) the iterates \(x^{(k)}\) converge to \(\overline{x}\) with convergence quotients \(q^{(k)}:= \frac{\Vert x^{(k)} -\overline{x}\Vert }{\Vert x^{(k-1)} - \overline{x}\Vert }\) given in the following table for different values of \(\alpha\).

It is not difficult to see that depending on the choice of \(\alpha\) the linear convergence factors \(q^{(k)}\) converge to:

showing how in this example the linear convergence factor increases with increasing \(\alpha\).

Notice that the SC condition (25) holds at \(\overline{x}\) (and \(\tilde{x}\)). For \(i =2\) with \(\overline{x}_2 =0\) we actually find \(A^2 \cdot \overline{x}^{d-1} = \frac{1}{2} < 1 = A \cdot \overline{x}^d\).
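The behavior described in Example 1 can be reproduced with a few lines of Python. The sketch below assumes that \(y^\alpha\) is the replicator map of \(A + \alpha E\) with \(A = \bigl(\begin{smallmatrix} 1 & 1/2 \\ 1/2 & 1 \end{smallmatrix}\bigr)\), the matrix of \(x_1^2 + x_2^2 + x_1 x_2\). The tolerance \(10^{-6}\), the tested values of \(\alpha\) and the function names are our choices. Since \(\Vert x - \overline{x}\Vert = \sqrt{2}\, x_2\) on the simplex, the quotient reduces to \(q^{(k)} = x_2^{(k)} / x_2^{(k-1)}\), which tends to \((\frac{1}{2} + \alpha )/(1+\alpha )\). This closed form is our own computation and is used only as a cross-check.

```python
import math

A = [[1.0, 0.5],
     [0.5, 1.0]]       # f(x) = x1^2 + x2^2 + x1*x2
X_BAR = (1.0, 0.0)     # maximizer approached from x0 = (0.55, 0.45)


def replicator_step(x, alpha):
    """One step x -> y^alpha(x) for the shifted matrix A + alpha*E (d = 2)."""
    # (A + alpha*E) x = A x + alpha because x1 + x2 = 1 on the simplex.
    g = [A[i][0] * x[0] + A[i][1] * x[1] + alpha for i in range(2)]
    value = x[0] * g[0] + x[1] * g[1]          # equals f(x) + alpha on the simplex
    return [x[i] * g[i] / value for i in range(2)]


def distance(x):
    return math.hypot(x[0] - X_BAR[0], x[1] - X_BAR[1])


def last_quotient(alpha, x0=(0.55, 0.45), tol=1e-6, max_iter=10_000):
    """Iterate until ||x - x_bar|| < tol and return the last quotient q^(k)."""
    x, q = list(x0), float("nan")
    for _ in range(max_iter):
        x_new = replicator_step(x, alpha)
        q = distance(x_new) / distance(x)
        x = x_new
        if distance(x) < tol:
            break
    return q


for alpha in (0.0, 1.0, 2.0):
    predicted = (0.5 + alpha) / (1.0 + alpha)
    print(f"alpha={alpha}: q = {last_quotient(alpha):.4f}, predicted limit = {predicted:.4f}")
```

Stopping at a moderate tolerance rather than after a fixed, large number of steps keeps the quotient free of cancellation errors in \(x_1 - 1\).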

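We can also give a general formula for the dependence of the transformation \(y^\alpha (x)\) on \(\alpha\), compared with \(y(x)=y^0(x)\):

\[ y_i^\alpha (x) = x_i \, \frac{A^i \cdot x^{d-1} + \alpha }{A \cdot x^d + \alpha } = \frac{y_i(x) + \frac{\alpha }{A \cdot x^d}\, x_i}{1 + \frac{\alpha }{A \cdot x^d}}, \quad i=1,\ldots ,n. \]

So for small \(\rho := \frac{\alpha }{A \cdot x^d }\), using \(\frac{1}{1+ \rho } = 1 - \rho + O(\rho ^2)\), we find

\[ y^\alpha (x) = y(x) - \rho \, \bigl(y(x) - x\bigr) + O(\rho ^2). \]

Rewriting this relation in the form

\[ y^\alpha (x) - x = (1-\rho )\, \bigl(y(x) - x\bigr) + O(\rho ^2), \]

it appears that, compared with \(\alpha =0\), for \(\alpha >0\) the new point \(y^\alpha (x)\) is closer to x (i.e., the step is shorter).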

This may be seen as an indication that the speed of convergence decreases with increasing \(\alpha\), as was shown explicitly in Example 1. All our numerical experiments suggest such a behavior. So, as a rule of thumb, we should choose \(\alpha\) only as large as is needed to ensure \((A+ \alpha E)> 0\).
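One simple way to follow this rule of thumb is to shift by the most negative entry of A plus a small safety margin. The helper below is a sketch under that assumption. The function name `smallest_shift` and the default margin \(\frac{1}{100}\) (borrowed from the choices of \(\alpha\) in Examples 7 and 8) are ours, and exact rational arithmetic is used only to keep the illustration free of rounding.

```python
from fractions import Fraction


def smallest_shift(entries, margin=Fraction(1, 100)):
    """Return alpha >= 0 such that every entry of A + alpha*E is positive.

    `entries` is any iterable over the entries of the tensor A.
    If A is already positive, no shift is needed and 0 is returned.
    """
    lowest = min(entries)
    if lowest > 0:
        return Fraction(0)
    return -Fraction(lowest) + margin


# Hypothetical entries, chosen only to illustrate the call.
entries = [Fraction(-1, 6), Fraction(0), Fraction(1, 3)]
alpha = smallest_shift(entries)
print(alpha, all(a + alpha > 0 for a in entries))
```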

5 Numerical experiments

In this section, we report on many examples which illustrate numerically the theoretical convergence results in Section 4.

In all examples, we indicate the numbers n, d, the function f(x) and the choices of \(\alpha\) to assure \(f^{\alpha }(x) > 0\) (cf., Subsection 4.4). We also give the starting point \(x^{(0)}\) and specify whether the replicator iteration (20) (Method 1) is applied or Method 2 is used. For the former we describe the convergence behavior of the sequence \(x^{(k)} \rightarrow \overline{x}\). If linear convergence occurs, information on the linear convergence factors \(q^{(k)} := \frac{\Vert x^{(k+1)} - \overline{x}\Vert }{\Vert x^{(k)} - \overline{x}\Vert }\) is also provided.
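The quotients \(q^{(k)}\) are the main diagnostic used in the examples below: under linear convergence they settle at a value below 1, whereas under sublinear convergence they creep up towards 1. The following Python sketch computes them from a list of errors \(\Vert x^{(k)} - \overline{x}\Vert\). The two error sequences are synthetic and serve only to illustrate the two regimes.

```python
def convergence_quotients(errors):
    """Return q^(k) = errors[k+1] / errors[k] for a sequence of positive errors."""
    return [nxt / cur for cur, nxt in zip(errors, errors[1:])]


# Synthetic error sequences (not data from the paper).
linear_errors = [0.5 ** k for k in range(1, 41)]        # error halves in every step
sublinear_errors = [1.0 / k for k in range(1, 201)]     # error decays like 1/k

q_lin = convergence_quotients(linear_errors)
q_sub = convergence_quotients(sublinear_errors)

print("linear:    last q =", q_lin[-1])     # stays at a constant factor below 1
print("sublinear: last q =", q_sub[-1])     # increases towards 1
```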

Example 2

We chose \(n=3\), \(d=2\) and

There is a unique (global) maximizer \(\overline{x}=(\frac{1}{3}, \frac{1}{3}, \frac{1}{3})\) which trivially satisfies the SC condition, cf., (12). Thus according to Theorem 2 the replicator iteration (20) should always show a linear convergence when starting with \(x^{(0)}>0\).

Replicator iteration:

- \(\alpha =1\), different starting points \(x^{(0)} > 0\) led to \(x^{(k)} \rightarrow \overline{x}\) and linear convergence factors \(q^{(k)}\) converging to \(0.66\ldots\). E.g., \(x^{(0)}= (0.4,0.5,0.1)\) and \(\Vert x^{(20)} - \overline{x}\Vert \approx 4 \cdot 10^{-4}\).

- \(\alpha =2\), different starting points \(x^{(0)} > 0\) led to \(x^{(k)} \rightarrow \overline{x}\) and linear convergence factors \(q^{(k)}\) converging to \(0.83\ldots\). E.g., \(x^{(0)}= (0.4,0.5,0.1)\) and \(\Vert x^{(20)} - \overline{x}\Vert \approx 2 \cdot 10^{-2}\).

Method 2:

- \(\alpha =1\), \(x^{(0)} = (0.2,0.3,0.5)\) led to \(x^{(k)} \rightarrow \overline{x}\) with \(\Vert x^{(10)} - \overline{x}\Vert \approx 3 \cdot 10^{-5}\).

- \(\alpha =2\), \(x^{(0)} = (0.2,0.3,0.5)\) led to \(x^{(k)} \rightarrow \overline{x}\) with \(\Vert x^{(10)} - \overline{x}\Vert \approx 4 \cdot 10^{-5}\).

Compared with the replicator iteration, Method 2 shows a considerable speeding up of the convergence.

Example 3

Take \(n=3\), \(d=2\) and

with unique maximizer \(\overline{x}= \frac{1}{2}(1,1,0)\), \(f(\overline{x})=0\). In view of \(\frac{1}{2} \frac{\partial }{\partial x_3} f(\overline{x}) =0 = f(\overline{x})\) the condition SC is not satisfied. Thus, according to Theorem 2, starting with some \(x^{(0)} >0\) the convergence of the replicator dynamics (20) must be sublinear.

Replicator iteration:

- \(\alpha =2\), \(x^{(0)} = (0.3,0.2,0.5)\) led to \(x^{(k)} \rightarrow \overline{x}\) and factors \(q^{(k)}\) which monotonically increase towards 1 with, e.g., \(q^{(100)}= 0.989\ldots\), \(\Vert x^{(100)} - \overline{x}\Vert \approx 3 \cdot 10^{-2}\).

Method 2:

- \(\alpha =2\), \(x^{(0)} = (0.3,0.2,0.5)\) generated \(x^{(k)} \rightarrow \overline{x}\) with \(\Vert x^{(20)} - \overline{x}\Vert \approx 3 \cdot 10^{-2}\).

Example 4

Consider for \(n=3\), \(d=3\) the function

with 3 (global) maximizers \(\overline{x}=(\frac{1}{3}, \frac{1}{3}, \frac{1}{3})\), \(\hat{x}=(1,0,0)\) and \(\tilde{x} = (0,1,0)\), where \(f(\overline{x})=f(\hat{x})= f(\tilde{x})=0\). At \(\overline{x}>0\) the SC condition is (trivially) satisfied but not at \(\hat{x}, \tilde{x}\).

Replicator iteration:

- \(\alpha =0.5\), \(x^{(0)} = (0.2,0.6,0.2)\) led to a linear convergence \(x^{(k)} \rightarrow \overline{x}\) with \(q^{(k)} \rightarrow 0.925\ldots\), and \(\Vert x^{(100)} - \overline{x}\Vert \approx 3 \cdot 10^{-4}\).

- \(\alpha =1\), \(x^{(0)} = (0.5,0.2,0.3)\) led to a linear convergence \(x^{(k)} \rightarrow \overline{x}\) with \(q^{(k)} \rightarrow 0.963\ldots\), and \(\Vert x^{(100)} - \overline{x}\Vert \approx 8 \cdot 10^{-2}\).

- \(\alpha =1\), \(x^{(0)} = (0.4,0.4,0.2)\) led to a linear convergence \(x^{(k)} \rightarrow \overline{x}\) with \(q^{(k)} \rightarrow 0.667\ldots\), and \(\Vert x^{(30)} - \overline{x}\Vert \approx 1 \cdot 10^{-6}\).

- \(\alpha =1\), \(x^{(0)} = (0.8,0.1,0.1)\) led to a sublinear convergence \(x^{(k)} \rightarrow \hat{x}\) with \(q^{(k)} \uparrow 1\), and \(q^{(200)} \approx 0.997\ldots\), \(\Vert x^{(200)} - \hat{x}\Vert \approx 9 \cdot 10^{-2}\).

Note that for the same value \(\alpha =1\) different starting points led to convergence to \(\overline{x}\) with different linear convergence factors, \(0.963\ldots\) and \(0.667\ldots\), respectively.

Method 2:

- \(\alpha =1\), \(x^{(0)} = (0.5,0.2,0.3)\) led to \(x^{(k)} \rightarrow \overline{x}\) with \(\Vert x^{(10)} - \overline{x}\Vert \approx 4 \cdot 10^{-3}\).

- \(\alpha =2\), \(x^{(0)} = (0.8,0.1,0.1)\) led to \(x^{(k)} \rightarrow \hat{x}\) with \(x^{(2)} \approx (0.91,0,0.09)\) lying on the subface \(x_2 = 0\) (i.e., \(x_2^{(2)} =0\)), and \(x^{(11)} = \hat{x}\) exactly.

Example 5

For \(n=3\), \(d=4\) the function

has the (global) maximizers \(\overline{x}=(\frac{1}{3}, \frac{1}{3}, \frac{1}{3})\), \(\hat{x}=(1,0,0)\) and \(\tilde{x} = (0,0,1)\), where at \(\overline{x}\) SC (trivially) holds but not at \(\hat{x}, \tilde{x}\).

Replicator iteration:

- \(\alpha =0.5\), \(x^{(0)} = (0.2,0.3,0.5)\) led to a linear convergence \(x^{(k)} \rightarrow \overline{x}\) with \(q^{(k)} \rightarrow 0.948\ldots\), and \(\Vert x^{(200)} - \overline{x}\Vert \approx 3 \cdot 10^{-5}\).

- \(\alpha =1\), \(x^{(0)} = (0.2,0.3,0.5)\) led to a linear convergence \(x^{(k)} \rightarrow \overline{x}\) with \(q^{(k)} \rightarrow 0.974\ldots\), and \(\Vert x^{(200)} - \overline{x}\Vert \approx 4 \cdot 10^{-3}\).

- \(\alpha =1\), \(x^{(0)} = (0.8,0.1,0.1)\) led to a sublinear convergence \(x^{(k)} \rightarrow \hat{x}\) with \(q^{(k)} \uparrow 1\), and \(q^{(200)} \approx 0.995\ldots\), \(\Vert x^{(200)} - \hat{x}\Vert \approx 1\cdot 10^{-2}\).

Method 2:

- \(\alpha =1\), \(x^{(0)} = (0.2,0.3,0.5)\) led to \(x^{(k)} \rightarrow \overline{x}\) with \(\Vert x^{(20)} - \overline{x}\Vert \approx 5 \cdot 10^{-4}\).

- \(\alpha =1\), \(x^{(0)} = (0.8,0.1,0.1)\) led to \(x^{(k)} \rightarrow \hat{x}\) with \(\Vert x^{(20)} - \hat{x} \Vert \approx 8 \cdot 10^{-3}\).

Example 6

Consider for \(n=3\), \(d=6\) the function

The (global) maximizers are \(\overline{x}=(\frac{1}{3}, \frac{1}{3}, \frac{1}{3})\), \(\hat{x}=(1,0,0)\) and \(\tilde{x} = (0,1,0)\), with \(f(\overline{x})=f(\hat{x})= f(\tilde{x})=0\). Again at \(\overline{x}\) the condition SC holds but not at \(\hat{x}, \tilde{x}\).

Replicator iteration:

- \(\alpha =0.5\), \(x^{(0)} = (0.2,0.5,0.3)\) led to a linear convergence \(x^{(k)} \rightarrow \overline{x}\) with \(q^{(k)} \rightarrow 0.994\ldots\), and \(\Vert x^{(500)} - \overline{x}\Vert \approx 3 \cdot 10^{-2}\).

- \(\alpha =1\), \(x^{(0)} = (0.2,0.5,0.3)\) led to a linear convergence \(x^{(k)} \rightarrow \overline{x}\) with \(q^{(k)} \rightarrow 0.997\ldots\), and \(\Vert x^{(500)} - \overline{x}\Vert \approx 1.2 \cdot 10^{-1}\).

- \(\alpha =1\), \(x^{(0)} = (0.8,0.1,0.1)\) led to a sublinear convergence \(x^{(k)} \rightarrow \hat{x}\) with \(q^{(k)} \uparrow 1\), and \(q^{(500)} \approx 0.999\ldots\), \(\Vert x^{(500)} - \hat{x}\Vert \approx 3 \cdot 10^{-1}\).

Method 2:

- \(\alpha =0.5\), \(x^{(0)} = (0.2,0.5,0.3)\) led to \(x^{(k)} \rightarrow \overline{x}\) with \(\Vert x^{(100)} - \overline{x}\Vert \approx 4 \cdot 10^{-6}\).

- \(\alpha =1\), \(x^{(0)} = (0.2,0.5,0.3)\) led to \(x^{(k)} \rightarrow \overline{x}\) with \(\Vert x^{(100)} - \overline{x}\Vert \approx 2 \cdot 10^{-3}\).

- \(\alpha =1\), \(x^{(0)} = (0.8,0.1,0.1)\) led to \(x^{(k)} \rightarrow \hat{x}\) with \(\Vert x^{(100)} - \hat{x}\Vert \approx 3 \cdot 10^{-1}\).

For this example problem we have added some more experiments with \(\alpha =1\). For both methods, 1000 random initial points \(x^{(0)}\) have been generated. The stopping criterion for convergence is \(\min \{\Vert x^{(k)} - {\bar{x}}\Vert , \Vert x^{(k)} - \hat{x}\Vert , \Vert x^{(k)} - \tilde{x}\Vert \} < 10^{-3}\) and we allowed a maximum number of 1000 iterations in each run.

For both methods, the iterates converge to \({{\bar{x}}}\) when \(x^{(0)}\) is near \({{\bar{x}}}\). With Method 1, only one of the 1000 initial points led to convergence to \({\hat{x}}\) within the 1000 iterations and none led to convergence to \({\tilde{x}}\) (cf., Figure 1, Table 1); the convergence to these points was too slow (sublinear). Method 2 performed better (see Figure 2 and Table 1): all maximizers \({\bar{x}} , \hat{x}, \tilde{x}\) have been detected.

Table 1 presents the results of the experiments for both methods. It contains the number of cases out of the 1000 runs where convergence occurred, as well as the corresponding maximum, minimum and mean numbers (with ± standard deviation) of iterations over the random initial points \(x^{(0)}\).
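An experiment of this kind can be organised as in the following Python sketch. It does not use the objective of Example 6: as a stand-in it takes the quadratic of Example 1 with \(\alpha =1\), it samples starting points uniformly from the simplex via normalised exponential variates (the text does not state how the random points were drawn), and it applies only the plain replicator iteration. All names, the seed and the stand-in problem are assumptions; the stopping rule and the iteration cap follow the description above.

```python
import math
import random
import statistics

A = [[1.0, 0.5], [0.5, 1.0]]                 # stand-in objective (Example 1)
ALPHA = 1.0
MAXIMIZERS = {"x_bar": (1.0, 0.0), "x_tilde": (0.0, 1.0)}


def replicator_step(x):
    # (A + ALPHA*E) x = A x + ALPHA on the simplex.
    g = [sum(A[i][j] * x[j] for j in range(len(x))) + ALPHA for i in range(len(x))]
    value = sum(xi * gi for xi, gi in zip(x, g))
    return [xi * gi / value for xi, gi in zip(x, g)]


def random_simplex_point(n, rng):
    """Uniform sample from the unit simplex (normalised exponential variates)."""
    e = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(e)
    return [v / total for v in e]


def run(x, tol=1e-3, max_iter=1000):
    """Return (label, iterations); label is 'NC' if the criterion is never met."""
    for k in range(max_iter + 1):
        for label, target in MAXIMIZERS.items():
            if math.dist(x, target) < tol:
                return label, k
        x = replicator_step(x)
    return "NC", max_iter


rng = random.Random(0)
results = [run(random_simplex_point(2, rng)) for _ in range(1000)]

for label in list(MAXIMIZERS) + ["NC"]:
    its = [k for lab, k in results if lab == label]
    if its:
        print(f"{label}: {len(its)} runs, max {max(its)}, min {min(its)}, "
              f"mean {statistics.mean(its):.1f} ± {statistics.pstdev(its):.1f}")
    else:
        print(f"{label}: 0 runs")
```

Replacing `replicator_step` and `MAXIMIZERS` by the map and the maximizers of another problem leaves the rest of the harness unchanged.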

Figure 1: Method 1 applied to Example 6 (NC = did not meet convergence criterion)

Figure 2: Method 2 applied to Example 6 (NC = did not meet convergence criterion)

Example 7

(cf. [8, Example 9.8]) In this example we consider the ESS problem with \(n=2, d=4\). The tensor \(A\in {\mathcal{S}}^{2,4}\) of the function \(f(x)= A \cdot x^d\) is given by:

The function f has two (global) maximizers \({{\bar{x}}}=(\frac{1}{4}, \frac{3}{4})\) and \({\hat{x}}=(\frac{3}{4}, \frac{1}{4})\). We choose \(\alpha = \frac{13}{96} + \frac{1}{100}\) and generate 1000 random instances for the initial vector \(x^{(0)}\). In this example for all initial vectors the iterations met the convergence criterion \(\min \{\Vert x^{(k)} - {\bar{x}}\Vert , \Vert x^{(k)} - \hat{x}\Vert \} < 10^{-3}\) and around 50% of the initial points converged to \({{\bar{x}}}\). The others converged to \({\hat{x}}\). The details of the experiment are presented in Table 2, where again for both methods the maximum, minimum and mean numbers (with ± standard deviation) of iterations are given.

Example 8

(cf. [10]) Consider the \(3\)-hypergraph with \(V=\{1,2,3,4,5\}\) and

So we have (see Section 3.2), \(n=5,d=3\)

The \(3\)-hypergraph has three maximum cliques, \(\{1,2,3,4\}, \{1,2,3,5\}, \{3,4,5\}\) with corresponding global maximizers \({\bar{x}}=(0,0,\frac{1}{3},\frac{1}{3},\frac{1}{3})\), \(\tilde{x}=(\frac{1}{4},\frac{1}{4},\frac{1}{4},\frac{1}{4},0)\), \(\hat{x}=(\frac{1}{4},\frac{1}{4},\frac{1}{4},0,\frac{1}{4})\) of \(h_{{{\bar{G}}}}^{\tau } (x)\). The corresponding tensor \(A \in {\mathcal{S}}^{5,3}\) is given by

We have chosen \(\tau = \frac{1}{12}\) (cf. Theorem 1) and have applied a transformation \(A \rightarrow A + \alpha E\) with \(\alpha = \frac{1}{6} + \frac{1}{100}\). The stopping criterion for convergence is \(\min \{\Vert x^{(k)} - {\bar{x}}\Vert , \Vert x^{(k)} - \hat{x}\Vert , \Vert x^{(k)} - \tilde{x}\Vert \} < 10^{-3}\) and we allowed a maximum number of 1000 iterations in each run. For all 1000 random starting vectors \(x^{(0)}\) the iterations converged to one of the maximizers. The details are to be found in Table 3.

Example 9

We apply Method 2 to detect maximal cliques for some of the graphs of the well-known Second DIMACS Implementation Challenge. Note that in this case we have \(d=2\) and the line maximization step is simplified (see Remark 4). For each graph we apply the method for 1000 initial points \(x^{(0)}\). The results are shown in Table 4. Columns 4 and 5 give the number n of nodes and the number |E| of edges. In the last column the notation \(c:\#\) means that a maximal clique of size c has been detected \(\#\) times out of the 1000 runs with stopping criterion \(\Vert x^{(k+1)}-x^{(k)}\Vert \le 10^{-6}\).
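For \(d=2\) the link between cliques and maximizers over the simplex can be illustrated on a toy graph. The Python sketch below uses the regularised Motzkin–Straus objective \(x^T (A_G + \frac{1}{2} I) x\) known from the literature on the maximum clique problem (cf. [4, 18]), which may differ from the formulation used in Section 3.2, and it runs the plain replicator iteration rather than Method 2. No shift \(\alpha\) is applied, since this matrix is nonnegative with a positive diagonal. The graph, the starting point and the support threshold are illustrative assumptions; only the stopping rule on successive iterates is taken from the text.

```python
import math

EDGES = [(0, 1), (0, 2), (1, 2), (2, 3)]     # toy graph: triangle {0,1,2} plus edge {2,3}
N = 4

# Regularised adjacency matrix A_G + (1/2) I.
A = [[0.5 if i == j else 0.0 for j in range(N)] for i in range(N)]
for i, j in EDGES:
    A[i][j] = A[j][i] = 1.0


def replicator_step(x):
    g = [sum(A[i][j] * x[j] for j in range(N)) for i in range(N)]
    value = sum(xi * gi for xi, gi in zip(x, g))
    return [xi * gi / value for xi, gi in zip(x, g)]


def find_clique(x, tol=1e-6, max_iter=10_000, support_tol=1e-4):
    """Iterate until successive iterates differ by at most tol; return support and point."""
    for _ in range(max_iter):
        x_new = replicator_step(x)
        done = math.dist(x_new, x) <= tol
        x = x_new
        if done:
            break
    return sorted(i for i, xi in enumerate(x) if xi > support_tol), x


clique, x = find_clique([0.3, 0.3, 0.3, 0.1])
is_clique = all((min(i, j), max(i, j)) in EDGES for i in clique for j in clique if i != j)
print("support:", clique, "is a clique:", is_clique)
```

The size of the detected clique is read off from the support of the limit point, whose nonzero components are (approximately) equal.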

6 Conclusion

In this paper we are interested in the problem of maximizing homogeneous polynomials of degree d over the simplex. Two methods for the computation of local (global) maximizers are investigated. Both are based on the replicator dynamics.

New convergence results for the replicator iteration are presented for the case \(d >2\). Linear convergence to a maximizer \({{\bar{x}}}\) can only occur if strict complementarity holds at \({{\bar{x}}}\). Many numerical experiments illustrate the theoretical convergence results.

References

1. Ahmed, F., Still, G.: Maximization of homogeneous polynomials over simplex and sphere: structure, stability, and generic behavior. J. Optim. Theory Appl. 181, 972–996 (2019)

2. Baum, L., Eagon, J.: An inequality with applications to statistical estimation for probabilistic functions of Markov processes and to a model for ecology. Bull. Am. Math. Soc. 73, 360–363 (1967)

3. Bomze, I.M.: Non-cooperative two-person games in biology: a classification. Int. J. Game Theory 15(1), 31–57 (1986)

4. Bomze, I.M.: Evolution towards the maximum clique. J. Glob. Optim. 10(2), 143–164 (1997)

5. Bomze, I.M.: On standard quadratic optimization problems. J. Glob. Optim. 13(4), 369–387 (1998)

6. Bomze, I.M., De Klerk, E.: Solving standard quadratic optimization problems via linear, semidefinite and copositive programming. J. Glob. Optim. 24(2), 163–185 (2002)

7. Broom, M., Cannings, C., Vickers, G.: Multi-player matrix games. Bull. Math. Biol. 59(5), 931–952 (1997)

8. Broom, M., Rychtár, J.: Game-Theoretical Models in Biology. CRC Press, Boca Raton (2013)

9. Bulò, S.R., Pelillo, M.: A continuous characterization of maximal cliques in k-uniform hypergraphs. In: International conference on learning and intelligent optimization, pp. 220–233. Springer (2007)

10. Bulò, S.R., Pelillo, M.: A generalization of the Motzkin–Straus theorem to hypergraphs. Optim. Lett. 3(2), 287–295 (2009)

11. Clark, P.: Sequences and series; a source book. http://www.math.uga.edu/~pete/3100supp.pdf

12. De Klerk, E., Laurent, M., Sun, Z., Vera, J.C.: On the convergence rate of grid search for polynomial optimization over the simplex. Optim. Lett. 11(3), 597–608 (2017)

13. Faybusovich, L.: Global optimization of homogeneous polynomials on the simplex and on the sphere. In: Frontiers in Global Optimization, pp. 109–121. Springer (2004)

14. Kingman, J.F.: On an inequality in partial averages. Q. J. Math. 12, 78–80 (1961)

15. Kleniati, P.-M., Parpas, P., Rustem, B.: Partitioning procedure for polynomial optimization. J. Glob. Optim. 48, 549–567 (2010)

16. Losert, V., Akin, E.: Dynamics of games and genes: discrete versus continuous time. J. Math. Biol. 17, 241–251 (1983)

17. Lyubich, Y., Maistrovskii, G., Ol’khovskii, Y.: Selection-induced convergence to equilibrium in a single-locus autosomal population. Probl. Peredachi Inf. 16(1), 93–104 (1980). (in Russian)

18. Motzkin, T., Straus, E.: Maxima for graphs and a new proof of a theorem of Turán. Can. J. Math. 17, 533–540 (1965)

19. Murty, K., Kabadi, S.: Some NP-complete problems in quadratic and nonlinear programming. Math. Program. 39(2), 117–129 (1987)

20. Palm, G.: Evolutionary stable strategies and game dynamics for \(n\)-person games. J. Math. Biol. 19(3), 329–334 (1984)

21. Smith, J.M.: The theory of games and the evolution of animal conflicts. J. Theor. Biol. 47(1), 209–221 (1974)

22. Smith, J.M., Price, G.R.: The logic of animal conflict. Nature 246(5427), 15–18 (1973)


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ahmed, F., Still, G. Two methods for the maximization of homogeneous polynomials over the simplex. Comput Optim Appl 80, 523–548 (2021). https://doi.org/10.1007/s10589-021-00307-1


Keywords

- Optimization over the simplex

- Homogeneous polynomials

- Symmetric tensors

- Numerical methods

- Replicator transformation

- Evolutionarily stable strategies

- Convergence properties