Abstract

The Remez penalty and smoothing algorithm (RPSALG) is a unified framework for penalty and smoothing methods for solving min-max convex semi-infinite programming problems, whose convergence was analyzed in a previous paper by three of the authors. In this paper we consider a partial implementation of RPSALG for solving ordinary convex semi-infinite programming problems. Each iteration of RPSALG involves two types of auxiliary optimization problems: the first consists of obtaining an approximate solution of some discretized convex problem, while the second requires solving a non-convex optimization problem that has the parametric constraints as objective function and the parameter as variable. We tackle the latter problem with a variant of the cutting angle method called ECAM, a global optimization procedure for solving Lipschitz programming problems. We implement different variants of RPSALG, which are compared with the only publicly available SIP solver, NSIPS, on a battery of test problems.

References

Auslender, A., Goberna, M.A., López, M.A.: Penalty and smoothing methods for convex semi-infinite programming. Math. Oper. Res. 34, 303–319 (2009)

Auslender, A., Teboulle, M.: Interior gradient and proximal methods for convex and conic optimization. SIAM J. Optim. 16, 697–725 (2006)

Bagirov, A.M., Rubinov, A.M.: Global minimization of increasing positively homogeneous functions over the unit simplex. Ann. Oper. Res. 98, 171–187 (2000)

Bagirov, A.M., Rubinov, A.M.: Modified versions of the cutting angle method. In: Hadjisavvas, N., Pardalos, P.M. (eds.) Advances in Convex Analysis and Global Optimization, pp. 245–268. Kluwer, Netherlands (2001)

Batten, L.M., Beliakov, G.: Fast algorithm for the cutting angle method of global optimization. J. Global Optim. 24, 149–161 (2002)

Beliakov, G.: Geometry and combinatorics of the cutting angle method. Optimization 52, 379–394 (2003)

Beliakov, G.: Cutting angle method. A tool for constrained global optimization. Optim. Methods Softw. 19, 137–151 (2004)

Beliakov, G.: A review of applications of the cutting angle method. In: Rubinov, A.M., Jeyakumar, V. (eds.) Continuous Optimization, pp. 209–248. Springer, New York (2005)

Beliakov, G.: Extended cutting angle method of global optimization. Pacific J. Optim. 4, 153–176 (2008)

Beliakov, G., Ferrer, A.: Bounded lower subdifferentiability optimization techniques: applications. J. Global Optim. 47, 211–231 (2010)

Bonnans, J.F., Shapiro, A.: Perturbation Analysis of Optimization Problems. Springer, New York (2000)

Cheney, E.W., Goldstein, A.A.: Newton method for convex programming and Tchebycheff approximation. Numer. Math. 1, 253–268 (1959)

Dinh, N., Goberna, M.A., López, M.A.: On the stability of the feasible set in optimization problems. SIAM J. Optim. 20, 2254–2280 (2010)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Fackrell, M.: A semi-infinite programming approach to identifying matrix–exponential distributions. Int. J. Syst. Sci. 43, 1623–1631 (2012)

Faybusovich, L., Mouktonglang, T., Tsuchiya, T.: Numerical experiments with universal barrier functions for cones of Chebyshev systems. Comput. Optim. Appl. 41, 205–223 (2008)

Ferrer, A., Miranda, E.: Random test examples with known minimum for convex semi-infinite programming problems. E-prints UPC, (2013) (http://hdl.handle.net/2117/19118)

Gill, P.E., Murray, W., Saunders, M.A., Wright, M.H.: User’s guide for NPSOL: A Fortran Package for Nonlinear Programming. Stanford University, Stanford (1986)

Gürtuna, F.: Duality of ellipsoidal approximations via semi-infinite programming. SIAM J. Optim. 20, 1421–1438 (2009)

Hiriart-Urruty, J.-B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms I. Fundamentals. Springer, Berlin (1993)

Ito, S., Liu, Y., Teo, K.L.: A dual parametrization method for convex semi-infinite programming. Ann. Oper. Res. 98, 189–213 (2000)

Joshi, S., Boyd, S.: Sensor selection via convex optimization. IEEE Trans. Signal Proces. 57, 451–462 (2009)

Karimi, A., Galdos, G.: Fixed-order \(H_{\infty }\) controller design for nonparametric models by convex optimization. Automatica 46, 1388–1394 (2010)

Katselis, D., Rojas, C., Welsh, J., Hjalmarsson, H.: Robust experiment design for system identification via semi-infinite programming techniques. In: Kinnaert, M. (ed.) Preprints of the 16th IFAC Symposium on System Identification, pp. 680–685. Brussels, Belgium (2012)

Kelley Jr, J.E.: The cutting-plane method for solving convex programs. J. Soc. Ind. Appl. Math. 8, 703–712 (1960)

Muller, J.M.: Elementary Functions: Algorithms and Implementation, 2nd edn. Birkhäuser, Boston (2006)

Nesterov, Y.: A method for solving the convex programming problem with convergence rate \(O(\frac{1}{k^{2}})\). Soviet Math. Dokl. 27, 372–376 (1983)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (1999)

Remez, E.: Sur la détermination des polynômes d’approximation de degré donné. Commun. Soc. Math. Kharkoff et Inst. Sci. Math. et Mecan 10, 41–63 (1934)

Rubinov, A.M.: Abstract Convexity and Global Optimization. Kluwer, Dordrecht/Boston (2000)

Tichatschke, R., Kaplan, A., Voetmann, T., Böhm, M.: Numerical treatment of an asset price model with non-stochastic uncertainty. TOP 10, 1–50 (2002)

Vaz, A., Fernandes, E., Gomes, M.: SIPAMPL: Semi-infinite programming with AMPL. ACM Trans. Math. Softw. 30, 47–61 (2004)

Acknowledgments

The authors wish to thank A. Vaz for his valuable help concerning the implementation of SIPAMPL.

Additional information

This research was partially supported by MINECO of Spain, Grants MTM2011-29064-C03-01/02.

Appendix: performance profiles

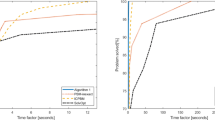

The benchmark results are generated by running the three solvers to be compared on the collection of problems gathered in the SIPAMPL database and recording the information of interest, in this case the number of function evaluations (as it is independent of the available hardware). In this paper we use the notion of performance profile due to Dolan and Moré [14] as a tool for comparing the performance of a set of solvers \( \mathcal {S}\) on a test set \(\mathcal {P}\). For each couple \(({ p}, { s})\in \mathcal {P\times S}\) we define

$$f_{p,s} := \text{number of function evaluations required to solve problem } p \text{ by solver } s.$$

Let \({ p}\in \mathcal {P}\) be a problem solvable by solver \( { s}\in \mathcal {S}\). We compare the performance on problem \( { p}\) of solver \({ s}\) with the best performance of any solver on the same problem by means of the performance ratio

$$r_{p,s} := \frac{f_{p,s}}{\min \left\{ f_{p,s'} : s'\in \mathcal {S}\right\} },$$

with \(r_{{ p},{ s}}=1\) if and only if \(s\) is a winner for \(p\) (i.e. it is at least as good at solving \(p\) as any other solver of \(\mathcal {S}\)). We also define \(r_{{ p},{ s}}=r_{M}\) when solver \({ s}\) does not solve problem \({ p},\) where \(r_{M}\) is some scalar greater than the maximum of the performance ratios \(r_{{ p},{ s}}\) of all couples \(( { p},{ s})\in \mathcal {P\times S}\) such that \( { p}\) is solved by solver \({ s}.\) The choice of \(r_{M}\) does not affect the performance evaluation.
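The performance ratio can be illustrated with a short Python sketch. It assumes the usual Dolan and Moré form of the ratio (the cost of solver \(s\) on \(p\) divided by the smallest cost of any solver on \(p\)). The solver names `A`, `B`, `C`, the evaluation counts, and the rule `r_M = 2 * max ratio` are hypothetical choices made only for illustration; they are not results from the paper.

```python
def performance_ratios(evals):
    """Compute performance ratios from function-evaluation counts.

    evals maps problem -> {solver: count, or None if the solver failed}.
    Returns (ratios, r_M), where failed runs receive the ratio r_M.
    """
    ratios = {}
    for problem, by_solver in evals.items():
        solved = [v for v in by_solver.values() if v is not None]
        best = min(solved) if solved else None
        ratios[problem] = {
            s: (v / best if v is not None else None)
            for s, v in by_solver.items()
        }
    finite = [r for row in ratios.values() for r in row.values() if r is not None]
    # Assumption: any scalar strictly above the largest finite ratio is admissible.
    r_M = 2.0 * max(finite) if finite else 2.0
    for row in ratios.values():
        for s, r in row.items():
            if r is None:
                row[s] = r_M  # convention for unsolved problems
    return ratios, r_M


# Hypothetical evaluation counts (None marks a failure).
evals = {
    "p1": {"A": 100, "B": 200, "C": None},
    "p2": {"A": 300, "B": 150, "C": 150},
    "p3": {"A": 50, "B": None, "C": 400},
}
ratios, r_M = performance_ratios(evals)
```

In this toy data set solver `A` wins on `p1` and `p3`, while `B` and `C` tie on `p2`, so both receive ratio 1 there.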

The performance of solver \({ s}\) on any given problem may be of interest, but we would like to obtain an overall assessment of the performance of the solver. To this aim, we associate with each \({ s}\in \mathcal {S}\) a function \(\rho _{{ s}}:\mathbb {R} _{+}\rightarrow [0,~1],\) called performance profile of \(s,\) defined as the ratio

$$\rho _{s}(t) := \frac{\left| \left\{ p\in \mathcal {P} : r_{p,s}\le t\right\} \right| }{\left| \mathcal {P}\right| }.$$

Obviously, \(\rho _{{ s}}\) is a non-decreasing step function such that \(\rho _{{ s}}\left( t\right) =0\) for all \(t\in [ 0,1[ \) and \(\rho _{{ s}}(1)\) is the relative frequency of wins of solver \(s\) over the rest of the solvers. If \(p\) is taken at random from \(\mathcal {P},\) then \(r_{{ p},{ s}}\) can be interpreted as a random variable and \(\rho _{{ s}}(1)\) as the probability that solver \(s\) wins over the rest of the solvers while, for \( t>1,\) \(\rho _{{ s}}(t)\) represents the probability for solver \( { s}\in \mathcal {S}\) that a performance ratio \(r_{{ p}, { s}}\) is within a factor \(t\in \mathbb {R}\) of the best possible ratio. So, in probabilistic terms, \(\rho _{{ s}}\) can be seen as a distribution function.
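As a minimal sketch of how such a profile can be evaluated, the following Python function returns the fraction of test problems whose performance ratio for a given solver does not exceed \(t\). The ratio table below is hypothetical (solver names `A`, `B`, `C`, with 16.0 playing the role of \(r_{M}\) for failed runs) and is not data from the paper.

```python
def performance_profile(ratios, solver, t):
    """Fraction of problems whose ratio for `solver` is at most t."""
    values = [row[solver] for row in ratios.values()]
    return sum(1 for r in values if r <= t) / len(values)


# Hypothetical performance ratios; 16.0 stands for r_M (problem not solved).
ratios = {
    "p1": {"A": 1.0, "B": 2.0, "C": 16.0},
    "p2": {"A": 2.0, "B": 1.0, "C": 1.0},
    "p3": {"A": 1.0, "B": 16.0, "C": 8.0},
}

# Sample the step function of each solver on a small grid of t values.
grid = [0.5, 1.0, 2.0, 8.0, 16.0]
profiles = {
    s: [performance_profile(ratios, s, t) for t in grid]
    for s in ("A", "B", "C")
}
```

Each list in `profiles` is non-decreasing, starts at 0 for \(t<1\), and reaches 1 at \(t=r_{M}\), which matches the distribution-function reading of \(\rho _{s}\).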

The definition of the performance profile for large values requires some care. We assume that \(r_{{ p},{ s}}\in [1,r_{M}] \) and that \(r_{{ p},{ s}}=r_{M}\) only when problem \({ p}\) is not solved by solver \({ s}\). As a result of this convention, \(\rho _{{ s}}(r_{M})=1\), and the number

$$\rho _{s}^{*} := \lim _{t\nearrow r_{M}}\rho _{s}(t)$$

is the probability that the solver \({ s}\in \mathcal {S}\) solves problems of \(\mathcal {P}\).

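Choosing a best solver for \(\mathcal {P}\) is a bicriteria decision problem, the objectives being the probability of winning and the probability of solving a problem, i.e.

$$\max \ \left( \rho _{s}(1),\ \lim _{t\nearrow r_{M}}\rho _{s}(t)\right) \quad \text {s.t. } s\in \mathcal {S}.$$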

Performance profiles are relatively insensitive to changes in results on a small number of problems. They are also largely unaffected by small changes in results over many problems.
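The bicriteria choice between solvers described in this appendix can be sketched in Python as follows. For each solver the code computes the pair (probability of winning, probability of solving) and keeps the solvers that are not dominated in both criteria. The ratio table, the solver names `A`, `B`, `C`, and the value `r_M = 16.0` are hypothetical, and the Pareto filter is one possible reading of the bicriteria problem rather than the authors' procedure.

```python
def solver_scores(ratios, r_M):
    """Return {solver: (win probability, solve probability)}."""
    solvers = list(next(iter(ratios.values())).keys())
    n = len(ratios)
    scores = {}
    for s in solvers:
        wins = sum(1 for row in ratios.values() if row[s] == 1.0)
        solved = sum(1 for row in ratios.values() if row[s] < r_M)
        scores[s] = (wins / n, solved / n)
    return scores


def nondominated(scores):
    """Solvers whose score pair is not dominated by another solver's pair."""
    def dominates(a, b):
        return a[0] >= b[0] and a[1] >= b[1] and a != b

    return sorted(
        s for s, v in scores.items()
        if not any(dominates(w, v) for w in scores.values())
    )


# Hypothetical performance ratios; r_M marks unsolved problems.
r_M = 16.0
ratios = {
    "p1": {"A": 1.0, "B": 2.0, "C": r_M},
    "p2": {"A": 2.0, "B": 1.0, "C": 1.0},
    "p3": {"A": 1.0, "B": r_M, "C": 8.0},
}
scores = solver_scores(ratios, r_M)
best = nondominated(scores)
```

When several solvers survive the filter, the two criteria conflict and the final choice depends on how the user weighs speed against robustness.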

Cite this article

Auslender, A., Ferrer, A., Goberna, M.A. et al. Comparative study of RPSALG algorithm for convex semi-infinite programming. Comput Optim Appl 60, 59–87 (2015). https://doi.org/10.1007/s10589-014-9667-7

DOI: https://doi.org/10.1007/s10589-014-9667-7