Abstract

When dealing with the numerical solution of stochastic optimal control problems, stochastic dynamic programming is the natural framework. In an attempt to overcome the so-called curse of dimensionality, the stochastic programming school promoted another approach based on scenario trees, which can be seen as the combination of Monte Carlo sampling ideas on the one hand, and of a heuristic technique to handle causality (or nonanticipativeness) constraints on the other hand.

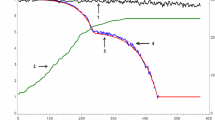

However, if one considers that the solution of a stochastic optimal control problem is a feedback law which relates control to state variables, the numerical resolution of the optimization problem over a scenario tree should be completed by a feedback synthesis stage: at each time step of the scenario tree, control values at nodes are plotted against the corresponding state values to provide a first discrete shape of this feedback law, from which a continuous function can finally be inferred. From this point of view, the scenario tree approach faces an important difficulty. At the first time stages (close to the tree root), there are few nodes (or Monte Carlo particles), and therefore a relatively scarce amount of information from which to guess a feedback law; but this information is generally of good quality (that is, viewed as a set of control value estimates for some particular state values, it has a small variance, because the future of those nodes is rich enough). Conversely, at the final time stages (near the tree leaves), the number of nodes increases but the variance gets large, because the future of each node gets poor (and sometimes even deterministic).
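To make this dilemma concrete, the short sketch below counts, for a uniform scenario tree, how many nodes are available at each stage for feedback synthesis and how many leaf scenarios describe the future of each node. The branching factor and horizon are hypothetical values chosen for illustration; real scenario trees are rarely uniform.

```python
def tree_profile(branching, horizon):
    """For a uniform scenario tree (root at stage 0, leaves at stage `horizon`),
    return (stage, nodes at that stage, leaf scenarios below each node)."""
    profile = []
    for t in range(horizon + 1):
        nodes = branching ** t               # points available for feedback synthesis
        future = branching ** (horizon - t)  # scenarios describing each node's future
        profile.append((t, nodes, future))
    return profile


if __name__ == "__main__":
    # hypothetical tree: 4 branches per node, 5 stages after the root
    for t, nodes, future in tree_profile(branching=4, horizon=5):
        print(f"stage {t}: {nodes:5d} nodes, {future:5d} scenarios per node")
```

With 4 branches and 5 stages, stage 0 has a single node sitting above 1024 scenarios, whereas stage 5 has 1024 nodes, each with a single (deterministic) future; the product of the two counts is the same at every stage.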

After this dilemma had been confirmed by numerical experiments, we tried to derive new variational approaches. First, two different formulations of the essential constraint of nonanticipativeness are considered: one is called algebraic and the other functional. Next, in both settings, we obtain optimality conditions for the corresponding optimal control problem. For the numerical resolution of those optimality conditions, an adaptive mesh discretization method is used in the state space in order to provide information for feedback synthesis. This mesh is naturally derived from a set of sample noise trajectories, which need not be put into the form of a tree prior to numerical resolution. An important consequence of this difference with the scenario tree approach is that the same number of nodes (or points) is available from the beginning to the end of the time horizon, and this is obtained without sacrificing the quality of the results (that is, the variance of the estimates). Results of experiments with a hydro-electric dam production management problem will be presented and will demonstrate the claimed improvements. A more realistic problem will also be presented in order to demonstrate the effectiveness of the method for high-dimensional problems.
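The following sketch illustrates the idea of a state-space mesh built from noise trajectories rather than from a tree. It is a minimal illustration under stated assumptions, not the authors' scheme: the scalar dam model, the gamma inflow law, the fixed release and the Voronoi-cell averaging are all hypothetical choices. Every time step carries the same N particles, and a conditional expectation given the state is approximated by averaging over the particles that fall into the same cell.

```python
import numpy as np


def simulate_particles(n_particles, horizon, x0=50.0, capacity=100.0,
                       release=5.0, seed=0):
    """Simulate N stock trajectories of a hypothetical scalar dam model:
    x_{t+1} = clip(x_t - release + w_t, 0, capacity), w_t i.i.d. gamma inflows.
    The trajectories are independent samples; no tree structure is imposed."""
    rng = np.random.default_rng(seed)
    inflows = rng.gamma(shape=2.0, scale=2.5, size=(n_particles, horizon))
    states = np.empty((n_particles, horizon + 1))
    states[:, 0] = x0
    for t in range(horizon):
        states[:, t + 1] = np.clip(states[:, t] - release + inflows[:, t],
                                   0.0, capacity)
    return states


def cell_conditional_mean(points, values, n_cells, seed=0):
    """Approximate E[values | point] by averaging within Voronoi cells whose
    centres are a random subset of the particles themselves."""
    rng = np.random.default_rng(seed)
    centres = points[rng.choice(len(points), size=n_cells, replace=False)]
    # index of the nearest centre for every particle (1-D state space)
    cell = np.argmin(np.abs(points[:, None] - centres[None, :]), axis=1)
    counts = np.bincount(cell, minlength=n_cells)
    sums = np.bincount(cell, weights=values, minlength=n_cells)
    means = sums / np.maximum(counts, 1)  # empty cells get 0 and are never used
    return means[cell]


if __name__ == "__main__":
    states = simulate_particles(n_particles=1000, horizon=12)
    print("points per time step:", [states[:, t].size for t in range(states.shape[1])])
    t = 6
    est = cell_conditional_mean(states[:, t], states[:, t + 1], n_cells=20)
    print("mean check:", est.mean(), states[:, t + 1].mean())
```

Because each cell average is weighted by the number of particles in the cell, the mean of the estimates equals the empirical mean of the conditioned quantity, which gives a cheap sanity check.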


Notes

SOWG, École Nationale des Ponts et Chaussées: Laetitia Andrieu, Kengy Barty, Pierre Carpentier, Jean-Philippe Chancelier, Guy Cohen, Anes Dallagi, Michel De Lara, Pierre Girardeau, Babakar Seck, Cyrille Strugarek.

A random variable is measurable with respect to another random variable if and only if the σ-field generated by the first is included in the one generated by the second. In this paper, we will not elaborate on these definitions. Instead, we refer the reader to [7] for further details on probability and measurability theory.
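On a finite sample space (ignoring null sets) this definition becomes very concrete: σ(X) ⊂ σ(Y) holds exactly when X is constant on every level set {Y = y}, that is, when X is a function of Y. The helper below, whose name is hypothetical, tests this condition for random variables given as lists of their values on the outcomes; probabilities play no role in it.

```python
def is_measurable_wrt(x_values, y_values):
    """Finite sample space: x_values[i] and y_values[i] are the values of X and Y
    on outcome omega_i. Return True iff sigma(X) is included in sigma(Y), i.e.
    X takes a single value on each level set {Y = y} (X is a function of Y)."""
    if len(x_values) != len(y_values):
        raise ValueError("X and Y must be defined on the same outcomes")
    value_on_level_set = {}
    for x, y in zip(x_values, y_values):
        # the first time level set {Y = y} is met, record the value of X there
        if value_on_level_set.setdefault(y, x) != x:
            return False  # X takes two different values on {Y = y}
    return True


if __name__ == "__main__":
    # two coin flips: outcomes HH, HT, TH, TT
    first = [1, 1, 0, 0]
    second = [1, 0, 1, 0]
    print(is_measurable_wrt([10 * v for v in first], first))  # True
    print(is_measurable_wrt(second, first))                   # False
```

A function of the first flip is measurable with respect to the first flip, while the second flip is not: knowing the first flip does not determine it.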

Note that the σ-fields are “fixed”, in the sense that they do not depend on the control variable.

Note that complete causal noise observation implies the perfect memory property, provided that the underlying sequence of σ-fields is a filtration.

In fact, \(\mu_{T-1} = K'^{\top}\). From Assumption 2, \(\mu_{T-1}\) is a continuous mapping.

In fact, a continuous one (from Assumption 2).

Note that we obtained as an intermediate result that .

All these methods can be embedded in a common framework: see [21] for further details.

We do not compare the particle method with SDDP-like methods because of the difficulty of efficiently implementing the latter on a nonlinear problem. Such a numerical comparison is beyond the scope of this paper.

According to [26], it seems that “the total number of scenarios needed to solve the true problem with a reasonable accuracy grows exponentially with increase of the number of stages T”.

This corresponds to a classical way to implement subgradient methods.

In this case, optimization and simulation processes are the same.

References

[1] Aubin, J.-P., Frankowska, H.: Set-Valued Analysis. Birkhäuser, Boston (1990)

[2] Barty, K.: Contributions à la discrétisation des contraintes de mesurabilité pour les problèmes d’optimisation stochastique. PhD dissertation, École Nationale des Ponts et Chaussées (2004)

[3] Barty, K., Carpentier, P., Chancelier, J.-P., Cohen, G., De Lara, M., Guilbaud, T.: Dual effect free stochastic controls. Ann. Oper. Res. 142, 41–62 (2006)

[4] Bellman, R.: Dynamic Programming. Princeton University Press, New Jersey (1957)

[5] Bertsekas, D.: Dynamic Programming and Stochastic Control. Academic Press, San Diego (1976)

[6] Bertsekas, D., Shreve, S.: Stochastic Optimal Control: The Discrete-Time Case. Athena Scientific, Belmont (1996)

[7] Breiman, L.: Probability. SIAM, Philadelphia (1992)

[8] Broadie, M., Glasserman, P.: A stochastic mesh method for pricing high dimensional American options. J. Comput. Finance 7 (2004)

[9] Chen, Z.L., Powell, W.B.: Convergent cutting plane and partial sampling algorithms for multistage stochastic linear programs with recourse. J. Optim. Theory Appl. 102, 497–524 (1999)

[10] Dallagi, A.: Méthodes particulaires en commande optimale stochastique. PhD dissertation, Université Paris I Panthéon-Sorbonne (2007)

[11] Donohue, C.J., Birge, J.R.: The abridged nested decomposition method for multistage stochastic linear programs with relatively complete recourse. Algorithmic Oper. Res. 1, 20–30 (2006)

[12] Dupačová, J., Gröwe-Kuska, N., Römisch, W.: Scenario reduction in stochastic programming: an approach using probability metrics. Math. Program. 95, 493–511 (2003)

[13] Ekeland, I., Temam, R.: Convex Analysis and Variational Problems. SIAM, Philadelphia (1999)

[14] Heitsch, H., Römisch, W.: Scenario reduction algorithms in stochastic programming. Comput. Optim. Appl. 187–206 (2003)

[15] Heitsch, H., Römisch, W.: Scenario tree modeling for multistage stochastic programs. Math. Program. 118, 371–406 (2009)

[16] Hiriart-Urruty, J.-B.: Extension of Lipschitz integrands and minimization of nonconvex integral functionals: Applications to the optimal recourse problem in discrete time. Probab. Math. Stat. 3, 19–36 (1982)

[17] Leese, S.: Multifunctions of Souslin type. Bull. Aust. Math. Soc. 11, 395–411 (1974)

[18] Outrata, J., Römisch, W.: On optimality conditions for some nonsmooth optimization problems over \(L^p\) spaces. J. Optim. Theory Appl. 126, 411–438 (2005)

[19] Pereira, M., Pinto, L.: Multi-stage stochastic optimization applied to energy planning. Math. Program. 52, 359–375 (1991)

[20] Pflug, G.: Scenario tree generation for multiperiod financial optimization by optimal discretization. Math. Program. 89, 251–271 (2001)

[21] Philpott, A.B., Guan, Z.: On the convergence of stochastic dual dynamic programming and related methods. Oper. Res. Lett. 36, 450–455 (2008)

[22] Powell, W.B.: Approximate Dynamic Programming: Solving the Curses of Dimensionality. Wiley Series in Probability and Statistics. Wiley-Interscience, New York (2007)

[23] Rao, M.: Measure Theory and Integration. Pure and Applied Mathematics Series. Marcel Dekker, New York (2004)

[24] Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer, Berlin (1998)

[25] Ruszczynski, A., Shapiro, A. (eds.): Handbooks in Operations Research and Management Science: Stochastic Programming. Elsevier, Amsterdam (2003)

[26] Shapiro, A.: On complexity of multistage stochastic programs. Oper. Res. Lett. 34, 1–8 (2006)

[27] Strugarek, C.: Approaches variationnelles et autres contributions en optimisation stochastique. PhD dissertation, École Nationale des Ponts et Chaussées (2006)

[28] Sutton, R.S., Barto, A.G.: Reinforcement Learning: An Introduction. MIT Press, Cambridge (1998)

[29] Thénié, J., Vial, J.-P.: Step decision rules for multistage stochastic programming: a heuristic approach. Automatica 44, 1569–1584 (2008)

[30] Turgeon, A.: Optimal operation of multireservoir power system with stochastic inflows. Water Resour. Res. 16, 275–283 (1980)

[31] Wagner, D.: Survey of measurable selection theorems. SIAM J. Control Optim. 15, 859–903 (1977)


Appendix: Optimization on a Hilbert space: a special case

Let \(\mathcal {H}\) be a Hilbert space, let \(\mathcal {H}^{\mathrm{fe}}\) be a closed convex subset of \(\mathcal {H}\) and let f be a real-valued function defined on \(\mathcal {H}\). We consider the following optimization problem:

\[\min_{x\in \mathcal {H}^{\mathrm{fe}}} f(x). \tag{33}\]

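In the following, \(\chi _{{}_{H}}\) will denote the indicator function of a subset \(H\subset \mathcal {H}\), namely

\[\chi _{{}_{H}}(x) = \begin{cases} 0 & \text{if } x\in H,\\ +\infty & \text{otherwise}. \end{cases}\]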

The optimization literature gives different expressions for the necessary optimality conditions of an optimization problem in a general Hilbert space (see e.g. [13]). For instance, if \(x^{\sharp}\in \mathcal {H}\) is a solution of (33), then the following statements are equivalent:

We now consider a specific structure for the feasible set \(\mathcal {H}^{\mathrm{fe}}\). More precisely, we assume that \(\mathcal {H}^{\mathrm{fe}}= \mathcal {H}^{\mathrm{cv}}\cap \mathcal {H}^{\mathrm{sp}}\), \(\mathcal {H}^{\mathrm{sp}}\) being a closed subspace of \(\mathcal {H}\) and \(\mathcal {H}^{\mathrm{cv}}\) being a closed convex subset of \(\mathcal {H}\). We moreover assume that the following property holds.

Assumption 5

The sets \(\mathcal {H}^{\mathrm{sp}}\) and \(\mathcal {H}^{\mathrm{cv}}\) are such that \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}\left ( \mathcal {H}^{\mathrm{sp}}\right )\subset \mathcal {H}^{\mathrm{sp}}\).

Then the projection operator on \(\mathcal {H}^{\mathrm{fe}}\) has the following property.

Lemma 2

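Under Assumption 5, the following relation holds true:

\[\mathrm {proj}_{ \mathcal {H}^{\mathrm{fe}}} = \mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}\circ \mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}.\]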

Proof

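Let \(x\in \mathcal {H}\) and \(y\in \mathcal {H}^{\mathrm{cv}}\cap \mathcal {H}^{\mathrm{sp}}\). Then

\[\begin{aligned} \big\langle x - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(\mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x)),\, y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(\mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x))\big\rangle &= \big\langle x - \mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x),\, y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(\mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x))\big\rangle \\ &\quad + \big\langle \mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x) - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(\mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x)),\, y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(\mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x))\big\rangle . \end{aligned}\]

From the characterization of the projection of \(z:= \mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (x\right )\) onto the convex subset \(\mathcal {H}^{\mathrm{cv}}\), the last inner product in the previous expression is nonpositive (because \(y\in \mathcal {H}^{\mathrm{cv}}\)); therefore,

\[\big\langle x - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(z),\, y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(z)\big\rangle \le \big\langle x - z,\, y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(z)\big\rangle .\]

From \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}\left ( \mathcal {H}^{\mathrm{sp}}\right )\subset \mathcal {H}^{\mathrm{sp}}\) and \(z\in \mathcal {H}^{\mathrm{sp}}\), we deduce that \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}\left (z\right )\in \mathcal {H}^{\mathrm{sp}}\), hence \(y- \mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}\left (z\right )\in \mathcal {H}^{\mathrm{sp}}\). Since \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\) is a self-adjoint operator, we have

\[\big\langle x - z,\, y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(z)\big\rangle = \big\langle x - z,\, \mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}\big(y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(z)\big)\big\rangle = \big\langle \mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x - z),\, y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}(z)\big\rangle = 0,\]

the last equality arising from \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (x-z\right ) = \mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (x\right )- \mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (z\right ) =0\) (since \(\mathcal {H}^{\mathrm{sp}}\) is a linear subspace, \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (\cdot\right )\) is a linear operator, and \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (z\right ) = z\) because \(z\in \mathcal {H}^{\mathrm{sp}}\)). We thus conclude that, for all \(y\in \mathcal {H}^{\mathrm{cv}}\cap \mathcal {H}^{\mathrm{sp}}\),

\[\big\langle x - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}\circ\mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x),\, y - \mathrm{proj}_{\mathcal{H}^{\mathrm{cv}}}\circ\mathrm{proj}_{\mathcal{H}^{\mathrm{sp}}}(x)\big\rangle \le 0,\]

a variational inequality which, together with \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}\circ \mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (x\right )\in \mathcal {H}^{\mathrm{cv}}\cap \mathcal {H}^{\mathrm{sp}}\) (established above), characterizes \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}\circ \mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (x\right )\) as the projection of x onto \(\mathcal {H}^{\mathrm{fe}}= \mathcal {H}^{\mathrm{cv}}\cap \mathcal {H}^{\mathrm{sp}}\). □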
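Lemma 2 can be checked numerically in \(\mathbb{R}^n\) with the Euclidean inner product. In the hypothetical setting below, \(\mathcal {H}^{\mathrm{sp}}\) is the subspace of vectors that are constant on given blocks of indices (a finite analogue of a measurability constraint) and \(\mathcal {H}^{\mathrm{cv}}\) is a box with scalar bounds; clipping a block-constant vector keeps it block-constant, so Assumption 5 holds. The sketch compares \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}\circ \mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\) with the reversed composition and tests the variational inequality against random feasible points, which illustrates the lemma but of course does not prove it.

```python
import numpy as np


def proj_sp(x, labels):
    """Orthogonal projection onto the subspace of vectors that are constant on
    each block {i : labels[i] == k}; labels must use every value 0..K-1."""
    sums = np.bincount(labels, weights=x)
    counts = np.bincount(labels)
    return (sums / counts)[labels]  # block averages copied back to the entries


def proj_cv(x, lo, hi):
    """Projection onto the box [lo, hi]^n with scalar bounds, so that clipping
    maps block-constant vectors to block-constant vectors (Assumption 5)."""
    return np.clip(x, lo, hi)


def proj_fe(x, labels, lo, hi):
    """Projection onto the intersection, computed as in Lemma 2."""
    return proj_cv(proj_sp(x, labels), lo, hi)


def vi_gap(x, labels, lo, hi, n_trials=2000, seed=0):
    """Max over random feasible y of <x - p, y - p>, with p = proj_fe(x);
    a value <= 0 is consistent with p being the projection of x."""
    rng = np.random.default_rng(seed)
    p = proj_fe(x, labels, lo, hi)
    n_blocks = labels.max() + 1
    # feasible points: one value in [lo, hi] per block, copied to its entries
    ys = rng.uniform(lo, hi, size=(n_trials, n_blocks))[:, labels]
    return float(np.max((ys - p) @ (x - p)))


if __name__ == "__main__":
    labels = np.array([0, 0, 1])
    x = np.array([-1.0, 3.0, 0.5])
    print(proj_fe(x, labels, 0.0, 1.0))           # Lemma 2 order
    print(proj_sp(proj_cv(x, 0.0, 1.0), labels))  # reversed order
    print(vi_gap(x, labels, 0.0, 1.0))
```

For x = (-1, 3, 0.5) with blocks {0, 1} and {2} and the box [0, 1], the order of Lemma 2 gives (1, 1, 0.5), which is the true projection, whereas clipping first and then averaging gives (0.5, 0.5, 0.5): the order of the composition matters.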

The following proposition gives necessary optimality conditions for Problem (33) when the feasible set \(\mathcal {H}^{\mathrm{fe}}\) has the specific structure \(\mathcal {H}^{\mathrm{cv}}\cap \mathcal {H}^{\mathrm{sp}}\).

Proposition 5

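We suppose that Assumption 5 is fulfilled and that f is differentiable. If \(x^{\sharp}\) is a solution of (33), then

\[\mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\left (f'(x^{\sharp})\right )\in -\partial \chi _{{}_{ \mathcal {H}^{\mathrm{cv}}}}(x^{\sharp}).\]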

Proof

Let \(x^{\sharp}\) be a solution of (33). Using Condition (34c) and Lemma 2, we obtain that

But \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\) is a linear operator and \(x^{\sharp}\in \mathcal {H}^{\mathrm{sp}}\), so that

From (34a)–(34c), the last relation is equivalent to \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}(f'(x^{\sharp}))\in -\partial \chi _{{}_{ \mathcal {H}^{\mathrm{cv}}}}(x^{\sharp})\). □
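Lemma 2 makes projections onto \(\mathcal {H}^{\mathrm{fe}}\) cheap, which suggests the projected-gradient iteration \(x \leftarrow \mathrm {proj}_{ \mathcal {H}^{\mathrm{cv}}}(x-\epsilon\, \mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}(f'(x)))\): for x already in \(\mathcal {H}^{\mathrm{sp}}\), this equals \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{fe}}}(x-\epsilon f'(x))\) by Lemma 2 and linearity of \(\mathrm {proj}_{ \mathcal {H}^{\mathrm{sp}}}\). The sketch below illustrates this idea in \(\mathbb{R}^n\); it is not an algorithm stated in the paper, and the weighted quadratic f, the blocks and the box are hypothetical. It then checks the conclusion of Proposition 5 componentwise, using the fact that \(-\partial\chi\) of a box is the negated normal cone.

```python
import numpy as np


def block_mean(v, labels):
    """proj_sp: replace each entry by the average of its block (labels 0..K-1)."""
    return (np.bincount(labels, weights=v) / np.bincount(labels))[labels]


def projected_gradient(w, c, labels, lo, hi, n_iter=500):
    """Minimize f(x) = 0.5 * sum_i w_i * (x_i - c_i)**2 over the block-constant
    vectors of the box [lo, hi]^n by iterating
    x <- proj_cv(x - eps * proj_sp(f'(x)))."""
    eps = 1.0 / w.max()                    # step 1/L, L = Lipschitz constant of f'
    x = np.clip(np.zeros_like(c), lo, hi)  # constant vector: feasible start
    for _ in range(n_iter):
        grad = w * (x - c)                 # f'(x)
        x = np.clip(x - eps * block_mean(grad, labels), lo, hi)
    return x


def satisfies_prop5(x, w, c, labels, lo, hi, tol=1e-8):
    """Check proj_sp(f'(x)) in -(normal cone of the box at x), componentwise:
    g >= 0 where x sits at lo, g <= 0 where x sits at hi, g = 0 elsewhere."""
    g = block_mean(w * (x - c), labels)
    at_lo, at_hi = np.isclose(x, lo), np.isclose(x, hi)
    inside = ~(at_lo | at_hi)
    return bool(np.all(g[at_lo] >= -tol) and np.all(g[at_hi] <= tol)
                and np.all(np.abs(g[inside]) <= tol))


if __name__ == "__main__":
    labels = np.array([0, 0, 1, 1, 1, 2])
    w = np.array([1.0, 2.0, 0.5, 1.5, 1.0, 2.0])
    c = np.array([-3.0, -1.0, 0.2, 0.4, 0.9, 4.0])
    x = projected_gradient(w, c, labels, lo=-1.0, hi=1.0)
    print(x, satisfies_prop5(x, w, c, labels, -1.0, 1.0))
```

In this example the first block ends at the lower bound, the last block at the upper bound and the middle block in the interior, so all three cases of the sign condition are exercised; for this separable f the result can also be compared with the closed form obtained by clipping the weighted block mean of c.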


About this article

Cite this article

Carpentier, P., Cohen, G. & Dallagi, A. Particle methods for stochastic optimal control problems. Comput Optim Appl 56, 635–674 (2013). https://doi.org/10.1007/s10589-013-9579-y

