Abstract

Malware remains a persistent threat to industrial operations, causing disruptions and financial losses. Traditional malware detection approaches struggle with the increasing complexity of false positives and negatives. Moreover, existing Intrusion Detection Systems (IDSs) often cannot assess the severity of detected malware, which is crucial for effective threat mitigation. This paper presents a novel model, MAlware DEtection and Severity Analysis for eNcrypted Traffic (MADESANT), designed to detect malware in encrypted traffic and analyze its severity. MADESANT combines Deep Learning (DL)-based intrusion detection with Machine Learning (ML)-based severity analysis, tailored to the specifics of IoT systems and assets. Notably, MADESANT introduces a cascading model that integrates a Cascading Forward Back Propagation Neural Network (CFBPNN) with the J48 tree to systematically assess risk factors in network traffic. Our evaluation on diverse encrypted datasets, including UNSW-NB15, IoT23, and X-IIoTID, demonstrates the efficacy of MADESANT. It achieves a 0% false positive rate in detecting binary attack instances, surpassing the benchmarks set by conventional models. Additionally, MADESANT accurately estimates malware severity, providing insight into the factors that contribute to the risk. To further validate its efficiency, we compared MADESANT against prevalent Neural Network models such as Feedforward and Recurrent Neural Networks, and MADESANT emerged as the superior choice. The experiments cover both the entire datasets and subsets generated through risk factor analysis. These results underscore MADESANT’s ability not only to identify malware but also to evaluate its potential impact, a significant step forward in industrial cybersecurity.



1 Introduction

Malware is a computer program designed to infiltrate, damage, or disable computer systems without users’ consent [1]. Such malware fetches commands from an attacker by communicating with a Command and Control (C&C) server.

Malwarebytes [2], one of the most popular cyber security products, identifies six threats that illustrate the most serious cybercrime tactics of 2024: passwords, malvertising, banking Trojans, Mac malware, potentially unwanted programs (PUPs), and Atomic Stealer (AMOS).

Malware detection using network traffic information has the potential to prevent, or at least reduce, malware propagation in the network. Deep packet inspection is one of the most common methods for detecting malware in network traffic. This technique aggregates packets with the same protocol type, port, and address; the aggregated packets are then reviewed to determine whether the source is malicious or benign [3]. As everyday objects become more digital, software applications and internet-connected devices increasingly use encryption to secure them. Encryption helps to protect privacy, secure communications, and maintain trust over the internet. As a result, the rise in encrypted network traffic has reshaped the cyber security landscape [5]. Malware can also leverage encryption to evade detection and hide malicious activities [4]. A Cisco report [5] states that although most malware does not use encryption, malicious network traffic that uses Hypertext Transfer Protocol Secure (HTTPS) encryption has been steadily increasing over the past few years, by 10–\(12\%\) [5].
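To make the aggregation step concrete, the sketch below groups packets by their (protocol, address, port) key, as described above, and summarizes each group. The `Packet` record and its fields are hypothetical simplifications; real deep packet inspection tools parse far richer headers and payloads before reviewing each aggregate.

```python
from collections import defaultdict
from typing import NamedTuple


class Packet(NamedTuple):
    # Hypothetical minimal packet record used only for illustration.
    protocol: str
    address: str
    port: int
    length: int


def aggregate(packets):
    """Group packets that share the same (protocol, address, port) key."""
    groups = defaultdict(list)
    for pkt in packets:
        groups[(pkt.protocol, pkt.address, pkt.port)].append(pkt)
    return dict(groups)


packets = [
    Packet("TCP", "10.0.0.5", 443, 1500),
    Packet("TCP", "10.0.0.5", 443, 60),
    Packet("UDP", "10.0.0.9", 53, 80),
]
for key, group in aggregate(packets).items():
    # Each aggregate would then be reviewed as one unit (e.g., against signatures).
    print(key, len(group), sum(p.length for p in group))
```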

IoT (Internet of Things) systems perform critical device operations on specific data. Intrusions in IoT environments can lead to significant disruptions, including device malfunctions, misuse of water pumping systems, blackouts in smart grids, and delays in manufacturing processes. Therefore, it is essential to develop an effective intrusion detection model for IoT systems that includes risk prediction [6]. However, the use of HTTPS and the operation of devices over encrypted traffic are a growing concern for intrusion detectors. All remote actions reach device configurations through HTTPS, which exposes potential vulnerability points while masking the data from inspection [4]. Thus, IoT requires an intrusion detection model that can classify maliciousness even in encrypted network traffic [6].

1.1 Challenges in intrusion detection

The attack surface and the number of attack vectors in the IoT paradigm grow daily as device connections increase. In addition, attackers encrypt traffic to bypass detection methodologies. Thus, once traffic is permitted to enter the IoT network, encrypted malware can perform destructive actions that cause system failures and halt production. The detection difficulty faced by present intrusion detection systems and the use of secure transport layer communication are the major aspects that create challenges in IoT security.

-

Detection difficulty: In general, encryption has a significant impact on the detection and analysis of network traffic because it hides all the payload data [5]. Finding suspicious patterns in encrypted traffic is much more difficult than detecting them in unencrypted traffic. In addition, data loss prevention and protection solutions cannot monitor or block the unauthorized flow of sensitive data, as there is no visible pattern to identify once the traffic is encrypted [5]. Thus, existing approaches are largely ineffective for encrypted traffic analysis.

-

Transport Layer Security (TLS) hindrance: TLS-encrypted internet traffic is becoming ubiquitous among website owners. Malware can disrupt service operations and specific activities. A 2016 malware study [6] reported that approximately \(10\%\) of malware employs TLS to hide network communications. Additionally, malware uses Internet Protocol (IP) Flux techniques (continually changing the IP addresses mapped to a domain name) to evade monitoring and IP blacklisting. Therefore, security monitoring devices need to detect malicious traffic hidden within TLS encryption [6].

1.2 Motivation and contribution

The rise of the Internet of Things (IoT) has revolutionized industries by connecting various devices to a network. However, this increased connectivity has also made it easier for malware to attack. While current solutions are good at detecting malware, there is a gap in analyzing the severity of these threats. This gap is especially critical when detecting malware in encrypted IoT traffic. Severity analysis involves assigning a score to identified threats, considering their potential impact on the IoT system. Pinpointing the specific features and risk factors in encrypted IoT traffic is essential for accurate analysis and for maximizing the effectiveness of malware detection.

Addressing this challenge, our proposed model, named MADESANT, aims to fill the existing gap. MADESANT tackles the issue by categorizing all network traffic according to the severity of the associated risk. This approach allows MADESANT to offer security against zero-day attacks, contributing significantly to the field. The key contributions of MADESANT include:

-

Auto Encoder: We choose an Auto Encoder (AE) for dimensionality reduction because it can recognize intricate, non-linear patterns while retaining the data distribution and semantic information, which is especially beneficial for complex datasets. Its unsupervised learning and feature extraction capabilities preserve crucial information while filtering out noise, making the AE well suited to compressing the bulk input values in our experiment and to enhancing the accuracy of the detection model.

-

Cascading model: The Cascading Forward Back Propagation Neural Network (CFBPNN) is an innovative neural network technique that combines the cascade-correlation algorithm with back-propagation. It sequentially adds multiple connected layers, capturing both nonlinear and linear relations between the input and output nodes. CFBPNN uses an incremental search strategy to identify normal and attack values in the input and hidden units, and its additional connections improve training speed and learning rate, distinguishing it from existing techniques.

-

Severity analysis: Our IIoT model incorporates severity analysis as a secondary defense layer that continuously monitors the network for subtle variations to identify potential attacks. Using the J48 decision-tree algorithm, chosen after rigorous comparison and ambiguity table analysis, it tracks variables and their values, enabling packets to be classified by risk level. This approach assigns a risk rating to each packet, contributing to a secure network environment.

-

Efficiency: We apply our detection model to encrypted IIoT datasets commonly used for industrial settings to identify unusual node behavior and to detect binary and multi-class attacks. We compare our model with traditional Feedforward and Recurrent neural network models. Our proposed cascading model, which combines elements of both, establishes a strong link between inputs and their impact on target values through pattern inter-connectivity. We also use machine learning for severity analysis to understand packet behavior. By focusing on the key risk-contributing variables, our approach improves malware detection by 15.5% compared with using all input values.

The primary objective of this study is to introduce a novel framework for intrusion detection systems tailored specifically to industrial environments, integrating three key components. Unlike current neural network models in industrial security, our framework incorporates Auto Encoder (AE)-based dimensionality reduction to adapt the model to the varying input variables commonly found in industrial structures. By normalizing input data, the framework starts from a balanced initial state, which is crucial for effective operation. The cascading structure of the Cascading Forward Back Propagation Neural Network (CFBPNN) enhances connectivity between the input and output layers, allowing multiple connected layers to be added sequentially to accurately classify binary or multi-class attack variants. Given the escalating threat of zero-day attacks, our framework identifies risk factors by monitoring network activity and detecting subtle variations, supported by machine learning algorithms such as J48 for packet classification based on risk levels. This approach offers practical applications in real-world industrial settings, providing robust protection against a broad spectrum of cyber threats while minimizing false positives and using network resources efficiently.

1.3 Organization

The paper is organized into several sections to provide a structured exploration of the proposed methodology. In Sect. 2, we delve into relevant works within the domain. Section 3 provides a concise overview of the system model, detailing its internal structure. The comprehensive technique is outlined in Sect. 4, encompassing dataset selection, normalization, feature reduction, intrusion detection, and risk analysis. In Sect. 5, we present the experimental observations of our model, elucidating the methodology’s performance. We evaluate the effectiveness of MADESANT against diverse datasets and compare it with existing models in Sect. 6. The performance analysis and comparisons with state-of-the-art models contribute to a thorough understanding of the proposed approach. Finally, Sect. 7 serves as the conclusion, summarizing the key findings and the insights drawn from the study.

2 Related work

The literature review delves into three key areas. Firstly, it evaluates the latest Recurrent Neural Network (RNN) models dedicated to detecting malware, providing insights into the advancements in this critical domain. Secondly, the exploration extends to articles emphasizing risk factor analysis, shedding light on the evolving understanding of potential threats in cybersecurity. Lastly, the review provides a concise overview of articles employing diverse Machine Learning (ML) based feature reduction techniques on Industrial Internet of Things (IIoT) datasets, showcasing the ongoing efforts to optimize data analysis in complex and interconnected industrial systems.

Common methods to prevent encrypted malware exploitation include signature-based detection and decrypting traffic content [6]. However, proxy devices that negotiate key exchanges for decryption may introduce confidentiality breaches. As noted by Dhirani [7], decrypting TLS in network security monitoring devices degrades network performance, highlighting the need for advanced detection methods beyond signature-based approaches. In neural network modeling, Recurrent Neural Networks (RNNs) play a crucial role in sequence modeling. Despite advancements in Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) designs, the simplicity of the basic RNN architecture is advantageous in scenarios that require less complex memory management. This simplicity allows faster inference, which is particularly crucial in real-time applications. Recent IDS models often integrate RNN approaches, underscoring their continued relevance in contemporary cybersecurity applications. Table 1 provides an overview of RNN models, highlighting the importance of LSTM in various applications.

Altunay et al. [8] achieve \(99.84\%\) and \(99.80\%\) detection accuracy with their hybrid CNN+LSTM for binary and multi-class attacks on X-IIoTID. Tharewal et al. [9] integrate reinforcement learning, GBM, and PPO2, reaching \(99\%\) accuracy. Yazdinejad et al. [10] propose an ensemble model combining an Auto-Encoder (AE) and LSTM, achieving \(99.7\%\) on real IIoT datasets. Firdous et al. [11] combine RNN and ensemble-based detection to reduce fog-cloud communication overhead by 90%. Sanju et al. [12] integrate RNNs with meta-heuristics, achieving a low false rate of 1.88% on the CICIDS2017 dataset. Basic RNNs are simpler and more computationally efficient than LSTM and GRU, making them a strategic choice for less critical applications with modest memory requirements. However, the choice among RNN, LSTM, and GRU depends on the problem, the dataset, and the desired complexity; each architecture contributes valuable tools to sequence modeling and neural network design. Beyond these established patterns, we discuss several innovative approaches to enhancing encrypted network security below.

-

Malicious Signature Creation: The work in [13] generates malicious signature patterns from packet metadata and integrates them into the Aho-Corasick pattern-matching algorithm for detection. Accelerated on hardware architectures with parallel processing, it achieves an average throughput of 85 Gbps.

-

Deep-Full-Range (DFR): The authors in [14] introduce DFR, which uses neural language modeling to transform domain-name strings into a low-dimensional representation. The model combines a Convolutional Neural Network (CNN), Long Short-Term Memory (LSTM), and a Stacked Auto-Encoder (SAE) to learn, monitor, categorize, and extract features from raw traffic across a spatio-temporal range.

-

Distributed Anomaly Detection: The work in [15] presents a model that identifies ambiguous probabilities through pre-processing, clustering, deep learning detection, and histogram matrix visualization. Tested on a real-time testbed, it achieves \(98\%\) accuracy in specific, realistic scenarios.

-

Reverse Engineering Model: The authors in [16] propose a reverse engineering model for in-depth analysis of malware, revealing inner workings, architecture, code segments, and design using ML techniques.
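Since the signature-based approach in [13] relies on Aho-Corasick multi-pattern matching, the sketch below illustrates that algorithm in plain Python: a trie of signatures, breadth-first failure links, and a single pass over the input that reports every match. It is a generic software illustration, not the hardware design of [13], and the byte signatures are hypothetical.

```python
from collections import deque


def build_automaton(patterns):
    """Build Aho-Corasick goto, failure and output tables."""
    goto, fail, out = [{}], [0], [set()]   # state 0 is the root
    for pat in patterns:
        state = 0
        for sym in pat:
            if sym not in goto[state]:
                goto.append({})
                fail.append(0)
                out.append(set())
                goto[state][sym] = len(goto) - 1
            state = goto[state][sym]
        out[state].add(pat)
    # Breadth-first pass: a node's failure link points to the longest proper
    # suffix of its path that is also a prefix of some pattern.
    queue = deque(goto[0].values())
    while queue:
        state = queue.popleft()
        for sym, nxt in goto[state].items():
            queue.append(nxt)
            f = fail[state]
            while f and sym not in goto[f]:
                f = fail[f]
            fail[nxt] = goto[f].get(sym, 0)
            out[nxt] |= out[fail[nxt]]   # inherit matches that end at this node
    return goto, fail, out


def search(text, automaton):
    """Return sorted (start_index, pattern) pairs for every match in text."""
    goto, fail, out = automaton
    state, hits = 0, []
    for i, sym in enumerate(text):
        while state and sym not in goto[state]:
            state = fail[state]
        state = goto[state].get(sym, 0)
        for pat in out[state]:
            hits.append((i - len(pat) + 1, pat))
    return sorted(hits)


signatures = [b"he", b"she", b"his", b"hers"]   # hypothetical byte signatures
print(search(b"ushers", build_automaton(signatures)))
```

The automaton is built once per signature set and then scans each payload in time linear in its length, which is what makes the algorithm attractive for line-rate inspection.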

Modern traffic analysis goes beyond traditional methods by integrating advanced ML and DL models. While traditional approaches fall short in identifying threats within encrypted TLS traffic, modern analysis leverages additional features for enhanced threat detection in complex network environments [17]. Predicting risk factors becomes simpler with a reduced set of input features. Recent articles focusing on optimized feature selection are summarized below.

-

A Deep Neural Network (DNN) classifier by [18] achieved \(17\%\) better results on compressed datasets for 5G real-time applications.

-

Improved Critical Feature Selection (ICFS) with Ensemble Learning (EL) by [19] for IDS in IoT-MQTT, attaining \(99\%\) accuracy, a \(7\%\) improvement from EL-based attack detection.

-

Intelligent Multi-Objective Particle Swarm Optimization (IMOPSO) by [20], integrated with MSVM, yielded low false rates.

-

Switch Port Anomaly-based IDS (SPA-IDS) by [21], using Variational Mode Decomposition, statistical iterative feature selection, and KNN, produced the lowest false rates on compressed datasets.

The above-mentioned studies aim to enhance communication efficiency while ensuring low latency, maximum security, and high detection ratios. However, the existing literature does not infer risk levels for encrypted traffic that is not classified as malicious. Our proposed solution, MADESANT, integrates detection, feature reduction, and risk prediction for encrypted data content.

3 System model

Let us assume that there is a continuous flow of data packets in a network, and denote the flow during any time interval from \(t-1\) to \(t\) by \(\{d_{1}, d_{2}, \ldots , d_{n}\}\). We define \(R_{d_{i}}\) as the risk factor associated with each data packet \(d_{i}\). We can express \(R_{d_{i}}\) as a function of several parameters that depend on the application domain and network conditions, as shown in Eq. (1).

In Eq. (1), each \(p_{i}\) is a unique and relevant parameter of the network. To classify the data packets, we use Eq. (2). MADESANT uses two thresholds on \(R_{d_{i}}\): a lower threshold \(LTh_{R_{d_{i}}}\) and an upper threshold \(UTh_{R_{d_{i}}}\). We derive these threshold values by applying efficient data mining algorithms to the network data packets. We use a double-layer analysis on the packets. Our first step is to detect malicious (m) data packets in the network using a binary method. The checking criteria for this layer are given in expression (2).
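A formalization consistent with the description of Eq. (1) and expression (2), assuming k network parameters and assuming that the binary layer flags a packet as malicious once its risk reaches the upper threshold, is:

\[
R_{d_{i}} = f\left(p_{1}, p_{2}, \ldots, p_{k}\right), \qquad d_{i} \in m \;\Longleftrightarrow\; R_{d_{i}} \ge UTh_{R_{d_{i}}}
\]

Under this reading, packets with \(R_{d_{i}} \le LTh_{R_{d_{i}}}\) are treated as benign, and only the remaining packets require further analysis.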

Even with such thresholds, the detection method alone cannot protect the network against zero-day attacks. To overcome this shortcoming, we apply a second layer of analysis, which classifies the set of data packets whose risk factor lies between the two thresholds, as shown in expression (3). This second method classifies all the data packets in this grey zone according to their risk factors. In this manner, we can identify the data packets that pose a higher risk in the network, which the network then treats as potentially malicious.
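The three-way decision described above can be sketched as follows. The function name and the threshold values 0.2 and 0.8 are hypothetical, and, as one reading of expressions (2) and (3), we assume that risks at or above the upper threshold are malicious and risks at or below the lower threshold are benign.

```python
def triage(risk, lower, upper):
    """Assign a packet to a zone from its risk factor and the two thresholds."""
    if lower >= upper:
        raise ValueError("lower threshold must be below the upper threshold")
    if risk >= upper:
        return "malicious"   # flagged by the first (binary) layer
    if risk <= lower:
        return "benign"
    return "grey"            # handed to the second-layer severity analysis


risks = {"d1": 0.05, "d2": 0.55, "d3": 0.92}   # hypothetical risk factors
print({d: triage(r, lower=0.2, upper=0.8) for d, r in risks.items()})
```

Only the "grey" packets reach the second layer, which keeps the more detailed severity analysis focused on the ambiguous part of the traffic.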

4 Proposed model: MADESANT

MADESANT stands as a multifaceted system that goes beyond mere intrusion detection, encompassing risk analysis and key feature extraction. Its efficacy extends to both encrypted and non-encrypted traffic, facilitated by a distinctive Cascading Forward Back Propagation Neural Network (CFBPNN). This neural network aids in the identification of malicious traffic and concurrently evaluates its associated risk level. To sustain continuous data categorization and analysis, MADESANT leverages the J48 tree, a machine-learning algorithm. Detailed explanations of the model overview, dataset particulars, normalization procedures, dimension reduction methods, the detection process, and severity calculation can be found in the subsequent subsections.

4.1 Model overview

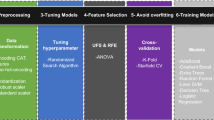

The proposed security model, MADESANT, detects malicious packets and also identifies potentially ambiguous ones in the network. The three key implementation phases, illustrated in Fig. 1, are preceded by an initial stage in which encrypted data from popular datasets is imported and normalized. This normalization process handles missing values and balances the dataset for further processing. Phase one uses Auto Encoders (AEs) to compress data values and extract features. The decoded content is then used as input to the cascading model. In phase two, a cascading network model classifies binary and multi-class attack variations. In the third phase, risk factors are assessed using severity calculations and various decision tree models. A multi-layered network with static hidden layers is employed, evaluated based on error factors, and retrained until optimal results are achieved.

4.2 Dataset

This paper uses the Aposemat IoT-23 dataset, generated at the Stratosphere Laboratory [25], the UNSW-NB15 dataset [26], and the X-IIoTID dataset [27]. The Aposemat IoT-23 dataset is particularly suitable for intrusion detection and prevention test scenarios, featuring IoT devices with 20 malware captures and 3 benign captures. Widely used because of its accessibility and stability, and containing real-world attack scenarios, IoT-23 stands out for assessing the latest intrusion detection methods. With 23 scenarios of IoT network traffic and 25 input features, IoT-23 includes both captures of IoT devices infected with malware samples and captures of benign traffic. Notably, we selected this dataset for its representation of encrypted data and its applicability to recent intrusion detection experiments, which distinguish it from other popular IoT datasets.

The UNSW-NB15 dataset [26] originates from raw network packets generated by the IXIA Perfect Storm tool in the Cyber Range Lab of the Australian Centre for Cyber Security (ACCS). It encompasses nine types of cyber-attacks, with records described by 45 labeled features, and contains a total of 2,540,044 records stored in four CSV files. For this experiment, we focus on a partition of the dataset comprising 175,341 training samples and 82,332 testing samples with multi-class attack variants. The training partition includes 56,000 normal records and 119,341 records across the nine attack categories. The generic attack type has the most instances, with 40,000 records, followed by exploits with 33,393 records. This selection aims to enhance model accuracy by incorporating a higher proportion of attack data.

The X-IIoTID dataset [27] is an open dataset built specifically for industrial IDS experiments, generated from commercial IDS logs and device resources to expose both safe and susceptible device and network environments. The dataset contains 51.3 percent normal and 48.65 percent multi-class attack cases. Beyond the binary classes (benign and malicious), it also provides eighteen labeled multi-class attack types and ten labeled cyber-attack categories, which we use in our experiments.

4.3 Data normalization

Real-time data acquisition often introduces mixed formats with noise and missing values, which degrade model performance. In this study, we apply key data preprocessing techniques to address these challenges. Missing values are handled using a fill-missing method that replaces them with standard or constant values. To suit deep learning models, categorical values are converted into numerical form through one-hot encoding, which simplifies prediction and enhances model accuracy. The resulting numerical identifiers representing the attack variants, together with their sample sizes for all three datasets, are detailed in Table 2.
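A minimal sketch of the two preprocessing steps described above, written in plain Python so that it does not depend on a particular data library. The column names (`proto`, `bytes`), the fill constant 0, and the label strings are hypothetical; the `label_ids` helper shows one possible way to assign numerical identifiers to attack variants, not necessarily the mapping used for Table 2.

```python
def fill_missing(rows, column, constant):
    """Replace missing (None) entries of `column` with a constant, in place."""
    for row in rows:
        if row.get(column) is None:
            row[column] = constant
    return rows


def one_hot(rows, column):
    """Replace a categorical column with one 0/1 indicator column per category."""
    categories = sorted({row[column] for row in rows})
    for row in rows:
        value = row.pop(column)
        for cat in categories:
            row[f"{column}={cat}"] = int(value == cat)
    return categories


def label_ids(labels):
    """Map each attack-variant label to a numerical identifier."""
    return {label: idx for idx, label in enumerate(sorted(set(labels)))}


rows = [
    {"proto": "tcp", "bytes": 120},
    {"proto": "udp", "bytes": None},   # missing value
    {"proto": "tcp", "bytes": 64},
]
fill_missing(rows, "bytes", 0)
one_hot(rows, "proto")
print(rows[1])   # {'bytes': 0, 'proto=tcp': 0, 'proto=udp': 1}
print(label_ids(["Normal", "DoS", "Exploits", "DoS"]))   # {'DoS': 0, 'Exploits': 1, 'Normal': 2}
```

Filling missing values before encoding matters here: a `None` category would otherwise become its own indicator column.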

4.4 Dimension reduction

We chose an Auto Encoder (AE) for dimensionality reduction because it is effective at recognizing complex patterns. Unlike simpler methods, an AE retains the main data characteristics and details while filtering out unnecessary noise. Its unsupervised learning and feature extraction capabilities ensure that it retains crucial information, making it the best choice for compressing the large number of input values in our experiment and improving the accuracy of the detection model.

The AE model used in this study is shown in Fig. 2; for the IoT23 dataset, it takes in 25 features and produces an output of the same length. The AE learns a simpler representation of the data, using an encoder to reduce the dimensions and a decoder to restore them. The reduced-length AE results are then used as input features for our detection model, which simplifies and enhances the training process. For dimension reduction on unlabeled training samples of the form \(\{x^{1}, x^{2},\ldots ,x^{n}\}\), where \(x^{i} \in \mathbb{R}^{n}\), we use the AE, a neural network-based unsupervised learning algorithm.

The AE compresses the input into a simpler form to create a new representation. It employs back-propagation to reproduce the input vector at the output layer, using a loss function \(L\left( x,{\widehat{x}}\right)\) to minimize the difference between the raw and reconstructed values. The AE, trained with 25 hidden layers using the scaled conjugate gradient approach, uses ReLU and sigmoid functions in the compression process. Mean Square Error (MSE) with L2 and sparsity regularization yields a minimal error rate of \(4.56\%\) in MADESANT. To prevent overfitting, two regularization terms (L2 weight regularization and sparsity regularization) are applied. Back-propagation is applied by setting the target values equal to the input values, i.e., \(y^{i} = x^{i}\).
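The regularized objective mentioned above (MSE with L2 and sparsity regularization) is commonly written as the reconstruction error plus \(\lambda\) times an L2 weight penalty plus \(\beta\) times a Kullback-Leibler sparsity penalty, similar in form to the sparse MSE loss used by MATLAB's `trainAutoencoder`. The sketch below computes that objective in plain Python; the default values of `lam`, `beta`, and `rho` are illustrative assumptions, not the settings used in MADESANT.

```python
import math


def kl_divergence(rho, rho_hat):
    """KL divergence between target sparsity rho and a mean activation rho_hat."""
    return (rho * math.log(rho / rho_hat)
            + (1 - rho) * math.log((1 - rho) / (1 - rho_hat)))


def sparse_ae_loss(x, x_hat, weights, hidden_acts, lam=0.001, beta=1.0, rho=0.05):
    """Reconstruction error + lam * L2 weight penalty + beta * sparsity penalty.

    x, x_hat    : lists of samples, each a list of floats
    weights     : flat list of all network weights
    hidden_acts : one list of hidden activations per sample, each strictly in (0, 1)
    """
    n = len(x)
    # Squared error summed over features, averaged over samples.
    mse = sum((a - b) ** 2 for xs, ys in zip(x, x_hat) for a, b in zip(xs, ys)) / n
    l2 = 0.5 * sum(w * w for w in weights)
    # Average activation of each hidden unit over the batch.
    mean_act = [sum(h[j] for h in hidden_acts) / n for j in range(len(hidden_acts[0]))]
    sparsity = sum(kl_divergence(rho, r) for r in mean_act)
    return mse + lam * l2 + beta * sparsity


print(sparse_ae_loss([[0.2, 0.4]], [[0.25, 0.35]], [0.1, -0.2], [[0.05, 0.3]]))
```

The sparsity term is zero only when every hidden unit's average activation equals `rho`, which is how the penalty keeps most neurons "inactive".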

We train the AE with \(10 \times 10\) hidden layers for 25 input values, i.e., 100 in total. Each hidden unit i computes a function of the input using weights W and biases B, as shown in Eq. (2).
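The symbols \(W_{ij}^{(1)}\) and \(a_{i}^{(2)}\) used for Eq. (2) follow the standard sparse-autoencoder notation. Under that notation, and assuming n inputs \(x_{j}\) and an activation function f, the computation of hidden unit i reads:

\[
a_{i}^{(2)} = f\left(\sum_{j=1}^{n} W_{ij}^{(1)} x_{j} + b_{i}^{(1)}\right)
\]

Each hidden unit therefore applies f to a weighted sum of the inputs plus its bias; the decoder follows the same pattern with a second set of weights \(W^{(2)}\) and biases \(b^{(2)}\) to produce \(\widehat{x}\).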

The output computed by hidden unit i depends on the parameters \(W_{ij}^{\left( 1\right) }\), and the activation function produces the non-linear activation \(a_{i}^{\left( 2\right) }\). Training, executed with scaled conjugate gradient, evaluates performance using MSE with L2 and sparsity regularization. The encoder maps the input to a lower-dimensional space, and the decoder reconstructs it. Well-trained weights and biases enable the AE to capture vital data features. After 100 epochs, the model’s MSE performance is \(6.52 \times 10^{11}\), with a gradient value of \(5.88 \times 10^{4}\). Algorithm 1 outlines the detailed AE model procedure.

In Algorithm 1, key notations include \({\widehat{x}}\) for the reconstructed AE output, x for the input, w for the weights, and b for the bias. The loss function measures the difference between the real and predicted values, with \(\lambda >0\) as the regularization parameter and \(\sigma\) representing the overall computed loss. Neurons are considered “inactive” when their output is near 0 and “active” when it is near 1. The sparsity parameter keeps each neuron’s average activation close to 0, which helps prevent overfitting. Some of the notations used in Algorithm 1 are given below:

-

X: Input data matrix of size (m, n).

-

E: Encoder function.

-

Y: Decoder function.

-

Z: Encoded latent space.

-

\({\widehat{x}}\): Reconstructed output.

We summarize the AE model of MADESANT in Algorithm 1.

The network’s training is separated into two segments: the encoder and the decoder. The AE function is called with the input parameters X, which represent the dataset’s input features. The encoder \(\phi\) transforms the original data X into a latent space F. Next, we initialize the hidden layer L, weight w, bias b, and the performance indicator MSE. The AE network is then built from the X input features and L hidden layers using the trainAutoencoder(X, L) function. The decoder \(\psi\) maps the latent space F back to the input space, so the AE output has the same dimensions as its input; with the decoded material, MADESANT reconstructs the original sample size. We then start the loop and repeat the process for 1000 epochs. The encoding network \(\phi\) is followed by an activation function \(\sigma\), where y is the latent dimension. The decoding network \(\psi\) works in a similar way, with different weights and biases. Finally, the model’s performance is assessed using the loss function and MSE. MSE and loss are computed for N rows \(N_i, N_{i+1},\ldots , N_L\) and M columns \(M_j, M_{j+1},\ldots , M_L\) with decoded values \(y, y'\). The AE output y is fed into the detection model as an input parameter.
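The encoder/decoder training loop described above can be illustrated with a small self-contained sketch. It assumes a single hidden layer, a sigmoid encoder \(\phi\), a linear decoder \(\psi\), and plain per-sample gradient descent on the squared reconstruction error; it deliberately swaps in this simpler optimizer for the scaled conjugate gradient method and omits the L2 and sparsity terms, so it shows the structure of the loop rather than MADESANT's exact training. The toy data and all names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_autoencoder(X, hidden, epochs=500, lr=0.1, seed=0):
    """Train a one-hidden-layer autoencoder with per-sample gradient descent.

    Encoder (phi): h = sigmoid(W1 x + b1)   -> latent code
    Decoder (psi): x_hat = W2 h + b2        -> reconstruction, same size as x
    Returns the encoder and the per-epoch mean squared reconstruction error.
    """
    rng = random.Random(seed)
    d = len(X[0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(d)]
    b2 = [0.0] * d

    def encode(x):
        return [sigmoid(sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(W1, b1)]

    history = []
    for _ in range(epochs):
        total = 0.0
        for x in X:
            h = encode(x)
            x_hat = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(W2, b2)]
            err = [xh - xv for xh, xv in zip(x_hat, x)]   # gradient of 0.5*sum(err**2) w.r.t. x_hat
            total += sum(e * e for e in err) / d
            # Hidden-layer gradients, computed before W2 is updated.
            dh = [sum(err[k] * W2[k][j] for k in range(d)) * h[j] * (1 - h[j])
                  for j in range(hidden)]
            for k in range(d):
                for j in range(hidden):
                    W2[k][j] -= lr * err[k] * h[j]
                b2[k] -= lr * err[k]
            for j in range(hidden):
                for i in range(d):
                    W1[j][i] -= lr * dh[j] * x[i]
                b1[j] -= lr * dh[j]
        history.append(total / len(X))
    return encode, history


X = [[0, 0, 1, 1], [1, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1]]   # toy 4-feature samples
encode, history = train_autoencoder(X, hidden=2)
print(round(history[0], 3), round(history[-1], 3))   # error in first vs. last epoch
print(encode(X[0]))                                   # 2-dimensional latent code
```

As in the pipeline described above, only `encode` would be kept after training, and its latent codes would be passed on as input features to the detection model.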

4.5 Detection process

Existing studies, such as [22], commonly use machine learning (ML) techniques to detect anomalies in encrypted traffic data. However, classic ML approaches have limitations such as pre-defined clusters and center-point initialization. The works in [23] and [9] address these issues using multi-layer perceptron techniques, but these may perform poorly with few parameters, making them insufficient for complex detection systems spanning diverse devices. To overcome this, we adopt a Cascading Forward Back Propagation Neural Network (CFBPNN) model for MADESANT, leveraging its ability to learn complex relationships between input and output vectors through multiple interconnected layers. To validate the effectiveness of the proposed CFBPNN model, we also trained traditional Feed Forward Neural Network (FFNN) and Recurrent Neural Network (RNN) architectures on the selected datasets. The network architectures of all three models, including CFBPNN, for the experiments on the UNSW-NB15 dataset are illustrated in Fig. 3 and described in the experimental setup (Sect. 5).

Our proposed ANN model can establish boundaries between clusters in a complex, high-dimensional space, which addresses issues encountered by previous approaches. The model, a variant of FFNN with recurrent connectivity, identifies normal and abnormal behavior through the formation of inherent clusters. During training, we use supervised back-propagation, training the FFNN with the Levenberg-Marquardt (LM) learning algorithm. The network consists of neurons in a multi-layer structure, with direct links shaped through an activation function in the hidden layer. We systematically add perceptron layers, starting small and gradually increasing their size and connections to improve speed and learning rate. Training concludes when the network’s performance reaches the desired accuracy. The mathematical expression for the proposed CFBPNN model is given in Eq. (3).

In Eq. (3), \(f\) represents the activation function, w represents the weights from the input to the output layer, i represents the iteration, and y denotes the output layer. We add the bias to the weights and sum with the previous value up to the kth layer. For N samples, we apply the activation function \(f\) with the weights and bias at each iteration i up to the \(n\)th value. We summarize the sequence of the CFBPNN model in Algorithm 2.
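For a network with n inputs and one hidden layer of k units, a cascade-forward network is commonly written so that the output layer receives the inputs directly as well as through the hidden layer. In our own notation, which may differ from the arrangement of terms in Eq. (3), with hidden activation \(f^{h}\), output activation \(f^{o}\), direct input-to-output weights \(w_{i}^{io}\), hidden weights \(w_{ji}^{h}\), hidden-to-output weights \(w_{j}^{o}\), and biases \(b\):

\[
y = f^{o}\left(b^{o} + \sum_{i=1}^{n} w_{i}^{io} x_{i} + \sum_{j=1}^{k} w_{j}^{o} f^{h}\left(b_{j}^{h} + \sum_{i=1}^{n} w_{ji}^{h} x_{i}\right)\right)
\]

The first sum is the direct cascade connection that a plain feedforward network lacks; dropping it recovers a standard single-hidden-layer FFNN.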

Back-propagation serves as the learning algorithm, updating weights and calculating errors to propagate information through the network layers. The non-linear transfer functions enable the network to learn both linear and non-linear relations between input and output vectors. The interconnected network components maintain an uninterrupted flow of information, as illustrated in Fig. 3c. MADESANT’s decisions are based on the final association of its input variants. We evaluate all three models for both binary and multi-class attack classification on the UNSW-NB15 and IoT23 datasets.
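The interconnections described above can be illustrated with a forward pass in which every layer receives the raw input together with the outputs of all earlier layers. The sketch below is a hypothetical minimal version: layer sizes, tanh hidden activations, a sigmoid output, and random initialization are assumptions, and training (Levenberg-Marquardt back-propagation in the text) is not shown.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_cascade(n_in, layer_sizes, seed=0):
    """Create (weights, biases) for each layer of a cascade-forward network.

    Layer l receives the raw input plus the outputs of every earlier layer,
    so its fan-in is n_in + sum(layer_sizes[:l]).
    """
    rng = random.Random(seed)
    layers, fan_in = [], n_in
    for size in layer_sizes:
        W = [[rng.uniform(-0.5, 0.5) for _ in range(fan_in)] for _ in range(size)]
        layers.append((W, [0.0] * size))
        fan_in += size
    return layers


def cascade_forward(x, layers):
    """Forward pass: tanh on hidden layers, sigmoid on the final (output) layer."""
    if not layers:
        raise ValueError("network needs at least one layer")
    features = list(x)   # grows as each layer's output is appended (cascade links)
    for idx, (W, b) in enumerate(layers):
        act = sigmoid if idx == len(layers) - 1 else math.tanh
        out = [act(sum(w * v for w, v in zip(row, features)) + bias)
               for row, bias in zip(W, b)]
        features.extend(out)
    return out


layers = init_cascade(n_in=4, layer_sizes=[3, 2, 1])
y = cascade_forward([0.1, 0.7, 0.3, 0.9], layers)
print(y)   # one attack score in (0, 1)
```

The growing `features` list is what distinguishes the cascade structure: the output neuron here sees 9 values (4 inputs, 3 first-layer outputs, 2 second-layer outputs), whereas a plain feedforward output layer would see only the 2 values of the layer directly below it.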

4.6 Severity calculation

In addition to binary attack detection in encrypted traffic (for the IoT23 dataset), MADESANT classifies each incoming packet based on its risk factor using a supervised machine learning algorithm, the Decision Tree (DT). The primary objective of this experiment is to build a predictive model of the target variable’s class using decision rules. The process begins by importing the dataset and creating variables D (Dataset) and T (Tree). To predict a class, the algorithm starts at the root of the tree and compares the value of the root attribute with the corresponding attribute of the record, iterating until it reaches the nth field or terminates. Each path in the tree, denoted as \(\left\{ T\right\}\), leads to a leaf representing an attack variant. By following the branch corresponding to the record’s value, the algorithm jumps to the next node and calculates the height. The steps for calculating the risk factor (\(Risk_{p_{i}}\)) are summarized in Algorithm 3.
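J48 is Weka’s implementation of the C4.5 decision-tree algorithm, which chooses the attribute tested at each node by its gain ratio (information gain divided by split information). The sketch below computes that criterion for categorical attributes in plain Python; the attribute names and labels are hypothetical, and C4.5’s handling of numeric thresholds, pruning, and missing values is omitted.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def gain_ratio(rows, labels, attr):
    """C4.5 gain ratio for splitting `rows` (dicts) on categorical attribute `attr`."""
    total = len(rows)
    partitions = {}
    for row, label in zip(rows, labels):
        partitions.setdefault(row[attr], []).append(label)
    weights = [len(p) / total for p in partitions.values()]
    remainder = sum(w * entropy(p) for w, p in zip(weights, partitions.values()))
    info_gain = entropy(labels) - remainder
    split_info = -sum(w * math.log2(w) for w in weights)
    return info_gain / split_info if split_info > 0 else 0.0


rows = [
    {"proto": "tcp", "service": "http"},
    {"proto": "tcp", "service": "dns"},
    {"proto": "udp", "service": "dns"},
    {"proto": "udp", "service": "http"},
]
labels = ["attack", "attack", "normal", "normal"]
# A C4.5-style learner would test the attribute with the highest gain ratio first.
best = max(["proto", "service"], key=lambda a: gain_ratio(rows, labels, a))
print(best)   # proto
```

Dividing by the split information penalizes attributes with many distinct values, which plain information gain would otherwise favor.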

Let \(X = \{x_{1}, x_{2}, \ldots , x_{k}\}\), where \(k \ge 1\), be the set of variables and \(AT = \{at_{1}, at_{2}, \ldots , at_{p} \}\), where \(p \ge 1\), the set of possible attacks in the network. P denotes the propositions along the paths to the leaf nodes. We aim to calculate the risk of each packet \(p_i\), denoted \(Risk_{p_{i}}\), as a function of these variables and attacks: \(Risk_{p_{i}}= f\{ X, AT\}\). The paths of the tree form the disjunction \((P_1 \wedge P_2 \wedge \ldots \wedge P_m) \vee (Q_1 \wedge Q_2 \wedge \ldots \wedge Q_k) \vee \ldots \vee (R_1 \wedge R_2 \wedge \ldots \wedge R_j)\). Here \(P_1, P_2, \ldots , P_m\) are the propositions along the first path, \(Q_1, Q_2, \ldots , Q_k\) are the propositions along the second path, and so on, with \(R_1, R_2, \ldots , R_j\) the propositions along the last path. Each conjunction maps a path from the root node to a leaf node representing an attack variant. The logical AND operator joins the propositions along each path, and the logical OR operator combines the paths. Let P denote the proposition that \(X_j\) is in the path of \(at_i\), and let Q denote the proposition that \(val(X_j)\) is not in \(list(at_i)\). The given pseudo-code expression can then be written as \(P \wedge Q\), where \(\wedge\) is the logical AND operator. It reads "\(X_j\) is in the path of \(at_i\) and \(val(X_j)\) is not in \(list(at_i)\)". The resulting table T is called the Ambiguity Table. Whenever a new packet \(p_{new}\) arrives in the network, MADESANT looks up the Ambiguity Table and checks whether the variables of \(p_{new}\) are ambiguous. We show an example of an ambiguity table in Sect. 5. We assign each ambiguous variable a weight of 1/k. For a packet with n ambiguous variables, the total then becomes, as shown in Eq. (4), \(Risk_{p_{i}} = \sum_{j=1}^{n} \frac{1}{k} = \frac{n}{k}\).
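The following Python sketch makes the disjunction-of-conjunctions view concrete. It stores each root-to-leaf path as a list of conditions and evaluates an attack's paths with AND inside each path and OR across paths. It also collects the variables that appear on those paths. The thresholds, the `Condition` format, and the helper names are hypothetical and only illustrate the logic. The feature names are borrowed from the UNSW-NB15 example in Sect. 5.

```python
from typing import Dict, List, Set, Tuple

# A condition on one variable: (feature, operator, threshold), operator "<=" or ">".
Condition = Tuple[str, str, float]
# A root-to-leaf path is a conjunction of conditions.
Path = List[Condition]


def holds(cond: Condition, packet: Dict[str, float]) -> bool:
    """Evaluate a single proposition such as sttl > 61 on a packet."""
    feature, op, threshold = cond
    value = packet[feature]
    return value <= threshold if op == "<=" else value > threshold


def matches_attack(paths: List[Path], packet: Dict[str, float]) -> bool:
    """(P1 AND ... AND Pm) OR (Q1 AND ... AND Qk) OR ... over all paths."""
    return any(all(holds(c, packet) for c in path) for path in paths)


def path_variables(paths: List[Path]) -> Set[str]:
    """Variables X_j that lie on at least one path to the attack leaf."""
    return {feature for path in paths for feature, _, _ in path}


# Hypothetical paths leading to an "exploit" leaf (thresholds are invented).
exploit_paths: List[Path] = [
    [("sttl", ">", 61.0), ("sbytes", "<=", 1000.0)],
    [("sttl", "<=", 61.0), ("dbytes", ">", 5000.0)],
]

print(matches_attack(exploit_paths, {"sttl": 62, "sbytes": 500, "dbytes": 0}))  # True
print(sorted(path_variables(exploit_paths)))  # ['dbytes', 'sbytes', 'sttl']
```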

If \(n = k\), i.e., all variables of the packet are ambiguous, then \(Risk_{p_{i}} = 1\). If \(n = 0\), i.e., no variable is ambiguous, then \(Risk_{p_{i}} = 0\). The score is therefore bounded as \(0 \le Risk_{p_{i}} \le 1\), where 1 denotes the highest level of severity.
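Eq. (4) reduces to counting ambiguous variables, so the score can be sketched in a few lines. As a simplification, the sketch assumes that the Ambiguity Table maps each variable to the set of values flagged for an attack. A packet variable counts as ambiguous when its value appears in that set. The table contents and the function name `risk_score` are hypothetical.

```python
from typing import Dict, Hashable, Set


def risk_score(packet: Dict[str, Hashable],
               ambiguity_table: Dict[str, Set[Hashable]],
               k: int) -> float:
    """Risk_{p_i} = n / k, where n counts the packet's ambiguous variables.

    k is the total number of variables, so each ambiguous variable adds 1/k.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    n = sum(1 for var, val in packet.items()
            if val in ambiguity_table.get(var, set()))
    if n > k:
        raise ValueError("more ambiguous variables than k")
    return n / k


# Hypothetical table: the eight exploit variables from Sect. 5, one flagged value each.
table = {name: {"flagged"} for name in
         ["sbytes", "service", "sttl", "ct-st-ltm",
          "ct-srv-src", "dbytes", "ct-src-ltm", "spkts"]}
packet = {name: "flagged" for name in table}
print(round(risk_score(packet, table, k=47), 5))  # 0.17021
```

With eight ambiguous variables out of k = 47, this reproduces the 8/47 ≈ 0.17021 worked example in Sect. 5. The bounds 0 ≤ Risk ≤ 1 follow because 0 ≤ n ≤ k.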

Beyond detection, MADESANT helps explain the potential impact of a detected threat. We also analyse the risk of each individual packet by calculating its risk score, as illustrated in Fig. 4. The risk scores assigned to packets serve as indicators of the severity of potential malware infections. For instance, packets with higher risk scores indicate a greater likelihood of malicious activity or a more significant impact on system integrity and operation. In addition, the ambiguity table (Table 4) identifies suspicious variables within incoming packets. These variables form the subset, generated by the severity analysis, that is used in the detection procedure. MADESANT considers factors such as the type of attack, the criticality of the affected systems or assets, and the potential consequences of successful exploitation. This improves its ability to assess the severity of detected malware.

5 Experimental setup

This section outlines the experimental process and statistical considerations to aid understanding of MADESANT. The experiments on all three neural network models are conducted on a system with an Intel Core i5 processor, 16 GB RAM, and 1 TB Intel Optane memory, running Windows 10 and MATLAB R2021a. The severity calculation is performed using WEKA. We detail the experimental methodology for the IoT23 dataset and the UNSW-NB15 encrypted traffic samples. We also describe the approach to multi-class classification used to determine severity.

5.1 CFBPNN for classification

The Autoencoder outputs serve as input to the CFBPNN detection model. The data are divided into training (70%), testing (15%), and validation (15%) sets. To test the model's ability to detect novel samples, the validation samples include attack classes that are not present in the training data. MADESANT is validated on its ability to identify these new samples, and the validation accuracy is used to guard against overfitting and underfitting. The model is evaluated with both binary and multi-class attack labels, and string values are converted to binary variables to ensure consistent ordering. CFBPNN is implemented for the IoT23 and UNSW-NB15 datasets. It is trained to classify and detect multi-class attacks and tested on both normal and abnormal classes. The procedures and network parameters for each dataset are discussed in the following paragraphs.
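A random 70/15/15 division like the one described can be sketched with NumPy as follows. MATLAB's `dividerand` is listed among the training parameters. The function name `divide_random` and the seed are hypothetical. The sketch shows only the index split and does not reproduce the step of holding unseen attack classes back for validation.

```python
import numpy as np


def divide_random(n_samples, train=0.70, val=0.15, seed=0):
    """Randomly split sample indices into training, validation and test sets.

    Whatever remains after the training and validation shares goes to testing.
    """
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = int(round(train * n_samples))
    n_val = int(round(val * n_samples))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train_idx, val_idx, test_idx = divide_random(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 700 150 150
```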

Implementation procedure on IoT23 and UNSW-NB15. For the IoT23 dataset, CFBPNN is configured with 24 input nodes, 4 hidden layers, and a 4-class output layer. For the UNSW-NB15 dataset, the model comprises 44 input nodes, 5 hidden layers, and 9 attack categories: fuzzers, analysis, backdoors, DoS, exploits, generic, reconnaissance, shellcode, and worms. Each layer receives a connection from the input layer, with weights and biases added from the previous node. The key parameters used to construct and train the CFBPNN networks are:

- Data division method: random (dividerand),
- Number of epochs: 1000,
- Transfer function: tansig,
- Training method: Levenberg-Marquardt (trainlm),
- Adaptation learning function: learngdm, and
- Performance indicator: Mean Squared Error (MSE).
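Two of the listed settings have compact definitions that may help readers outside MATLAB. The sketch below writes tansig in the form given in the MATLAB documentation, 2/(1 + e^(-2n)) - 1, which equals tanh(n). It then performs one Levenberg-Marquardt update, \(\Delta w = -(J^{T}J + \mu I)^{-1}J^{T}e\), where J is the Jacobian of the residual vector e with respect to the weights. This is an illustrative NumPy sketch, not trainlm itself. trainlm also adapts \(\mu\) between iterations, which is omitted here, and the linear least-squares example is hypothetical.

```python
import numpy as np


def tansig(n):
    """Hyperbolic tangent sigmoid: 2 / (1 + exp(-2n)) - 1, equal to tanh(n)."""
    return 2.0 / (1.0 + np.exp(-2.0 * n)) - 1.0


def lm_step(w, jacobian, residuals, mu):
    """One Levenberg-Marquardt update for residuals e(w) with Jacobian J = de/dw.

    delta = -(J^T J + mu * I)^(-1) J^T e; a small mu behaves like Gauss-Newton,
    a large mu like a short gradient-descent step.
    """
    jtj = jacobian.T @ jacobian
    grad = jacobian.T @ residuals
    delta = -np.linalg.solve(jtj + mu * np.eye(w.size), grad)
    return w + delta


# Hypothetical example: fit y = 2x + 1. Residuals e(w) = y - X @ w, so J = -X.
X = np.array([[0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 1.0]])
y = np.array([1.0, 3.0, 5.0, 7.0])
w0 = np.zeros(2)
w1 = lm_step(w0, -X, y - X @ w0, mu=1e-9)
print(np.round(w1, 6))  # [2. 1.]
```

Because the toy model is linear, a single step with a very small \(\mu\) lands on the least-squares solution. For a neural network, J must be recomputed after every step.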

The feedforward neural network (FFNN) learns from the initial inputs and their relationships, enabling it to infer unseen relationships in new data and to generalize its predictions. Correlating the input values through to the last layer is crucial to avoid missing data. The recurrent model establishes loop-based connectivity from the initial input to the last output layer, which improves its handling of sequential patterns. The cascade model integrates both feed-forward and recurrent methods. The selected samples are used to train and test all three models on both the IoT23 and UNSW-NB15 datasets: the Feed Forward Neural Network (FFNN), the Recurrent Neural Network (RNN), and the proposed Cascading Forward Back Propagation Neural Network (CFBPNN). The internal network structures differ only in the input and output layers, and all other properties are common to all the networks tested. An example of the network model for the UNSW-NB15 dataset is provided in Fig. 3.

Figure 3 displays the network models of FFNN (Fig. 3a), RNN (Fig. 3b), and CFBPNN (Fig. 3c). Each has 3 hidden layers of 5 neurons and a 9-class output layer.

5.2 Risk analysis using severity calculation

To simulate MADESANT, WEKA is used for risk factor analysis on the UNSW-NB15 dataset. Several decision tree models are applied to assess the risk factor: REPTree, Random Tree, Random Forest (RF), Decision Stump, and J48. The results in Table 3 show that J48 and RF achieve high accuracy, with the lowest error rate of 0.04%. REPTree has a similar Mean Absolute Error (MAE) and performance close to J48 on the other evaluation metrics. Overall, the training and testing results show that J48 has higher accuracy and lower error rates than RF and REPTree.

The difference in Correctly Classified Instances (CCI) between the decision tree and random forest methods is minimal (Table 2), but the two methods work differently. A Decision Tree (DT) splits the data into branches based on input features, whereas a Random Forest (RF) combines multiple DTs to reduce overfitting. Because the dataset is normalized and shows no overfitting issues, the J48 DT model is selected for risk analysis and prediction. The standalone DT outperforms an RF with only one tree, indicating that J48 is a favorable choice for the next step. The RF's 1% lower CCI suggests suboptimal performance, likely due to noise amplification. The relationship between the features and the target variable may not be complex enough to justify an RF for this dataset. Moreover, the performance of both DT and RF depends on their hyperparameters, and the RF may not be well tuned for this specific dataset.
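J48 is WEKA's implementation of the C4.5 algorithm, which chooses splits by gain ratio: information gain divided by split information. The following minimal Python sketch computes that criterion for a nominal attribute. It shows what the tree optimizes when it selects variables such as service. The helper names and the toy records are hypothetical. C4.5's numeric thresholds, pruning, and missing-value handling are not covered.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def entropy(labels: Sequence[Hashable]) -> float:
    """Shannon entropy (in bits) of a sequence of labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def gain_ratio(values: Sequence[Hashable], labels: Sequence[Hashable]) -> float:
    """C4.5-style gain ratio of splitting `labels` on the nominal attribute `values`."""
    total = len(labels)
    groups = {}
    for v, y in zip(values, labels):
        groups.setdefault(v, []).append(y)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    info_gain = entropy(labels) - remainder
    split_info = entropy(values)  # entropy of the partition itself
    return info_gain / split_info if split_info > 0 else 0.0


# Toy records: the attribute separates the classes perfectly.
service = ["dns", "dns", "http", "http"]
label = ["exploit", "exploit", "normal", "normal"]
print(gain_ratio(service, label))  # 1.0
```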

The classification accuracy indicators show that J48 is more accurate than RF and REPTree, and J48 also outperforms the other two algorithms on the Kappa statistic. In terms of error, the root mean square error of J48 is lower than that of REPTree and equal to that of RF. For mean absolute error, J48 performs better than Random Tree and Decision Stump and is on par with REPTree and RF. The decision trees generated by the three algorithms differ significantly. J48 generates 4 exploits from the input attributes and RF results in 6 exploits. REPTree uses 9 of the 47 input features as criteria to establish its branches (Table 4), so it differs most noticeably from the other two.

Figures 4a and 4b depict instances generated by the decision tree, illustrating the paths leading to an exploit attack. Using Algorithm 3, we obtain the Ambiguity Table for exploit attacks (Table 4). Eight variables, together with 17 distinguishing values, are identified as responsible for exploit attacks: sbytes, service, sttl, ct-st-ltm, ct-srv-src, dbytes, ct-src-ltm, and spkts. Assigning each of these eight variables a value of 1/47, we calculate the risk of a packet arriving in the network with these specific values as \(Risk_{p_i} = \sum_{j=1}^{8} \frac{1}{47} = \frac{8}{47} \approx 0.17021\). This method allows us to build an ambiguity table for every attack and to identify the risk associated with each incoming packet.

Of all the methods used to analyze the risk factor (Table 3), J48 and RF give the best results in predicting the instances. The J48 results are close to those of RF, with similar MAE and RMSE. J48 classifies instances correctly with \(84.02\%\) accuracy and has the lowest error rate among the models tested. Decision Stump has the lowest performance, with \(43.58\%\) accuracy and an \(88.00\%\) root relative squared error (RRSE).

6 Evaluation metrics

MADESANT’s performance is assessed using confusion matrices, accuracy, MSE, logarithmic loss, and the ratio of true positive to true negative. Using a confusion matrix, we examine the rate of false positives and false negatives.

Classification accuracy: MADESANT performs multi-class classification. We calculate the accuracy of the model as the ratio of correct predictions to the total number of input samples, as shown in Eq. (5). The CFBPNN of MADESANT achieves \(100\%\) accuracy for multi-class classification.

Logarithmic Loss (LL): We use this metric to calculate the loss for multi-class classification by penalizing false classifications. Minimizing the logarithmic loss yields a more accurate classifier. For N input samples and M classes, we compute the loss as shown in Eq. (6). In our study, the logarithmic loss of CFBPNN is 0.0313.

Here, \(y_{ij}\) indicates whether sample i belongs to class j, and \(p_{ij}\) is the probability that sample i belongs to class j.
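Assuming Eq. (6) is the standard multi-class logarithmic loss, \(LL = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{M} y_{ij}\log p_{ij}\), it can be computed as below. The clipping constant `eps` is an implementation assumption that keeps log(0) finite and is not taken from the paper.

```python
import numpy as np


def log_loss(y_onehot, probs, eps=1e-15):
    """Multi-class log loss: -(1/N) * sum_i sum_j y_ij * log(p_ij)."""
    y = np.asarray(y_onehot, dtype=float)
    p = np.clip(np.asarray(probs, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(np.sum(y * np.log(p), axis=1)))


y_true = [[1, 0, 0], [0, 1, 0]]
confident = [[0.9, 0.05, 0.05], [0.1, 0.8, 0.1]]
uniform = [[1 / 3] * 3, [1 / 3] * 3]
print(log_loss(y_true, confident) < log_loss(y_true, uniform))  # True
```

Confident, correct probabilities give a lower loss than uniform guessing, which scores log(M).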

Confusion matrix: We use a confusion matrix to evaluate the overall performance of the model. We use a four-class matrix that shows the correct and incorrect identifications for each attack class and for normal traffic. A confusion matrix for the binary class is shown in Fig. 5. We calculate the matrix accuracy from the diagonal values, as given in Eq. (7).
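A confusion matrix and the accuracy derived from its diagonal can be sketched as follows. We read Eq. (7) as the sum of the diagonal divided by the total count, which matches the correct-over-total definition of Eq. (5). The mean of the per-class diagonal rates (balanced accuracy) is shown alongside because the two can differ when classes are imbalanced. The function names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are true classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm


def accuracy(cm):
    """Correct predictions (the diagonal) over all predictions."""
    return np.trace(cm) / cm.sum()


def balanced_accuracy(cm):
    """Mean of the per-class diagonal rates (per-class recall)."""
    return float(np.mean(np.diag(cm) / cm.sum(axis=1)))


# Imbalanced toy example: four normal samples (class 0), two attacks (class 1).
cm = confusion_matrix([0, 0, 0, 0, 1, 1], [0, 0, 0, 0, 1, 0], n_classes=2)
print(cm.tolist())             # [[4, 0], [1, 1]]
print(round(accuracy(cm), 4))  # 0.8333
print(balanced_accuracy(cm))   # 0.75
```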

Mean Squared Error (MSE): We calculate MSE as the average of the squared differences between the predicted and actual values, so larger errors have a more pronounced effect than smaller ones. The MSE of CFBPNN is 0.273. The expression for MSE is shown in Eq. (8).
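Assuming Eq. (8) is the usual \(MSE = \frac{1}{N}\sum_{i=1}^{N}(y_i - \hat{y}_i)^2\), the sketch below also shows why larger errors dominate. Squaring makes a single error of 2 contribute four times as much as an error of 1. The function name is hypothetical.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean of the squared differences between actual and predicted values."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(diff ** 2))


print(mse([0, 0], [1, 1]))  # 1.0: two errors of 1
print(mse([0, 0], [0, 2]))  # 2.0: same total absolute error, one larger miss
```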

False Negative Rate (FNR): This measures missed attacks, i.e., the proportion of anomalies classified as regular network activity, and is calculated using Eq. (9). On average, our proposed model yields a zero false rate for attack identification in the binary class for all tested samples. For multi-class attacks, the cascading model shows an overall false rate of 0.22% on the tested samples. These values reflect how many anomalous situations are taken to be normal.

False Positive Rate (FPR): This is the proportion of normal records in the network traffic that are incorrectly flagged as attacks, traced using Eq. (10). It represents the percentage of results incorrectly classified as attacks. For the binary class, our model shows a \(0\%\) false positive rate, indicating that no incorrect interpretations occurred.
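The two rates are commonly defined from the binary confusion-matrix counts (TP, FN, FP, TN, with attacks as the positive class). They are FNR = FN/(FN + TP) and FPR = FP/(FP + TN), and we assume Eqs. (9) and (10) follow these definitions. The counts in the example are hypothetical.

```python
def false_negative_rate(tp: int, fn: int) -> float:
    """Share of actual attacks that were classified as normal traffic."""
    return fn / (fn + tp) if (fn + tp) else 0.0


def false_positive_rate(fp: int, tn: int) -> float:
    """Share of normal records that were flagged as attacks."""
    return fp / (fp + tn) if (fp + tn) else 0.0


# Hypothetical counts: 100 attacks and 100 normal records.
print(false_negative_rate(tp=90, fn=10))  # 0.1
print(false_positive_rate(fp=5, tn=95))   # 0.05
```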

Precision (P): Precision is the ratio of correctly predicted positive samples to all samples predicted as positive, given by P in Eq. (11). In other words, precision reflects how reliably the system's alarms correspond to real threats. It is especially important when the cost of false alarms is high. A high precision lowers the likelihood of false positives and indicates that the system reliably detects true threats.

Recall (R): Recall is the proportion of actual positive cases that the model correctly identifies, calculated using Eq. (12). R represents the model's ability to identify attacks of a specific class. According to the R-value, the CFBPNN model categorizes attacks with an accuracy of 0.99 and an error rate of 0.1%.

F-score: The F-score combines precision and recall as their harmonic mean, as shown in Eq. (13). This makes it informative for imbalanced datasets, where both false positives and false negatives matter.

Here, recall is the proportion of actual positive cases that are correctly identified (Eq. (12)). Precision is the proportion of predicted positive instances that are truly positive (Eq. (11)). The F-score ranges from 0 to 1, with 0 representing the worst performance and 1 representing perfect precision and recall. A high F-score therefore requires precision and recall to be balanced.
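Assuming Eqs. (11) to (13) are the standard definitions, P = TP/(TP + FP), R = TP/(TP + FN) and F = 2PR/(P + R), the three measures can be computed from the same counts. Returning 0.0 when a denominator is zero is a convention chosen for this sketch, and the counts are hypothetical.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Return (precision, recall, F1) for the positive (attack) class."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: every attack found, but 10 false alarms.
p, r, f = precision_recall_f1(tp=90, fp=10, fn=0)
print(p, r, round(f, 4))  # 0.9 1.0 0.9474
```

The example shows how the harmonic mean penalizes imbalance. Perfect recall with 0.9 precision yields an F-score below the arithmetic mean of 0.95.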

6.1 Results and comparative analysis

In this section, we evaluate the efficiency of MADESANT and compare results with traditional network models, focusing on false rate and accuracy as key factors for robustness assessment. Cascading models, like CFBPNN in MADESANT, capture temporal dynamics, enabling analysis of interconnections and relations between inputs. Traditional network models, while simpler and intuitive, may not fully capture the complexity of real-world systems, especially when system dynamics are crucial. This section explores CFBPNN in MADESANT compared to FFNN and RNN, tested on IoT23 and UNSW-NB15 datasets.

6.1.1 Comparison for IoT23 dataset

Feed-forward networks, initially designed for linear regression problems, handle non-linear representations through multi-layer perceptrons that extract relationships between variables. These networks pass a linear combination of the inputs through the layers, and activation functions modify how the inputs are used. Non-linear functions such as the logistic sigmoid capture complex relationships but can struggle with large datasets, leading to low accuracy. RNNs address this by randomizing weight initialization but introduce vanishing gradient issues. The related exploding gradient problem occurs when the derivatives multiplied through the layers (or time steps) are larger than one, so that the gradients grow exponentially. In the CFBPNN model, connections extend from the input layer to every successive layer and link directly to the output layer. CFBPNN therefore accommodates non-linear relationships between the input and target variables, addressing the limitations of traditional activation functions on large datasets.

In a comparative evaluation on the IoT23 dataset, FFNN, RNN, and our CFBPNN model were tested for suitability in MADESANT, and the results are depicted in Fig. 6. The confusion matrices for FFNN and RNN, shown in Fig. 6a and b respectively, were used for accuracy analysis with a multi-class representation. All three models were trained and tested on the same dataset with identical layer and neuron structures. FFNN achieved 96.4% accuracy, lower than RNN's 99.8%, and the RNN results were comparable to those of our CFBPNN. After repeated training and weight initialization, CFBPNN achieved 100% accuracy with a zero false rate, as shown in the confusion matrix in Fig. 6c.

We summarize the comparison of the three models (FFNN, RNN, and the proposed CFBPNN) in Table 5 for each attack category, using the numerical labels assigned in Table 2. The overall accuracy of FFNN, RNN, and CFBPNN is 96.0%, 99.8%, and 100%, respectively. FFNN yielded a comparatively high error rate of 3.42, and RNN a slightly lower error rate of 3.07. The proposed cascading model, CFBPNN, has the lowest error score of 0.27 and the highest accuracy, which suggests that it is effective in detecting anomalies in IoT structures.

The performance of all three network types is nearly identical, with slight differences. Each model recognized normal traffic flow with an average accuracy of 99.75%, while the cascade model achieved 99.99% accuracy in categorizing instances. FFNN showed underwhelming performance on complex intrusion detection tasks compared with the RNN and CFBPNN models. It achieved 96.18% accuracy for malicious attacks and 97.17% for C&C attacks. Even so, FFNN had a significant limitation in distinguishing normal network behavior from malicious activity, reflected in its higher false positive rate of 0.5%.

FFNN lacks sequential memory, which is essential for handling temporal dependencies in network data. This contrasts with the effective handling of sequences by RNN and the cascading architecture of CFBPNN. FFNN's reliance on static feature extraction may not suit the intricate and dynamic nature of intrusion detection scenarios. Although FFNN showed promising accuracy, its results were poorer than those of RNN and CFBPNN, highlighting the importance of more sophisticated architectures for effective intrusion detection. For malicious attacks and horizontal port attacks, RNN and CFBPNN produced comparable precision and recall. RNN reached a high accuracy of 99.98% for C&C attacks but a low precision of 0.18, meaning that most of its positive predictions for this class were false. It also had a weighted average of 0.28, a weakness the other two models did not show. In the subsequent investigation, adjusting the learning strategies produced unbiased results for CFBPNN, with a precision, recall, and F-score of 1.0, indicating a balanced categorization by the model.

6.1.2 Comparison for UNSW-NB15 dataset

We evaluate the performance of FFNN, RNN, and our CFBPNN on the UNSW-NB15 dataset to assess the models for encrypted traffic analysis. Using all input features, CFBPNN achieves an accuracy of 84.3%, while RNN and FFNN achieve 80.8% and 86.3%, respectively. We also evaluate the models with the risk factor analysis module of MADESANT, and the results are summarized in Table 6.

As illustrated in Fig. 4, we consider the feature path extracted from the J48 tree risk factor analysis. It comprises six input features (service, spkts, sbytes, dbytes, ct-srv-src, ct-src-ltm) and one target variable (attack-cat) representing nine types of multi-class attacks. Training and testing the proposed models on these variables for risk prediction leads to improved accuracy and a low false rate, as shown in Table 6. Confusion matrices for the selected features are shown in Fig. 7 for all three models.

In the comparative analysis, the RNN and CFBPNN models achieve the highest accuracies of 99.9% and 99.8%, a difference of only 0.1%. The traditional feed-forward method reaches only 89.4%, indicating that it is inadequate for detection on the UNSW-NB15 dataset. Accuracy is a crucial metric in intrusion detection studies, and Table 6 shows that the RNN and CFBPNN results are closely matched, whereas FFNN varies significantly. Purely sequential layer-to-layer processing appears unsuitable for encrypted traffic analysis. RNN and CFBPNN, with their recurrent behavior, instead reinforce the links between layers to detect abnormalities. In both studies, RNN and CFBPNN differ only modestly in performance. When evaluated on the entire dataset, CFBPNN shows a 1.54% loss and 4.3% greater accuracy than RNN.

When assessed on the risk factor variables, the results of RNN and CFBPNN align closely. Across the different risk measurement settings, performance remains normalized and balanced over the entire dataset regardless of the number of instances. This indicates that the proposed CFBPNN model is well suited to identifying attack variants in large encrypted datasets.

Figure 7 shows that all neural network models achieve high average results, above 95%, for intrusion detection on encrypted data, although performance varies by model and attack type. Table 7 presents the key metrics for the nine attack types on all three neural network models. FFNN achieves an overall accuracy of 89.4% but falls short in detecting backdoors, exploits, and worms. Its false rates reach 11% for backdoors and worms, while exploits yield a relatively good F1 score of 0.98. For shellcode attacks, RNN and CFBPNN reach a 0.07% false rate, even with 145 instances of worm-infected records. The unbalanced distribution of attack instances causes underfitting and low performance, but this effect is negligible for the CFBPNN and RNN models. All other attack types are detected with 100% accuracy and precision and recall values of 1.0.

6.1.3 Comparison for X-IIoTID dataset

The data are first processed with autoencoders, and the CFBPNN model is then used for multi-class attack classification on the latest industrial intrusion dataset, X-IIoTID. The outcomes are illustrated in Fig. 8. They suggest that our proposed model serves as a robust baseline for identifying DDoS and DoS attack variants. Across diverse datasets, the model consistently achieves 100% accuracy in recognizing denial-of-service attacks, reflecting its efficacy in this domain. However, on the provided test data the model shows an 8.36% false positive rate, particularly in detecting communication leaks within command and control attacks. AIDS models could offer a viable way to track such attacks and mitigate this limitation. Overall, MADESANT achieves an average accuracy of 99% across all attack categories, except for instances involving RDoS and tampering, underscoring its resilience and effectiveness on the test data.

6.1.4 Comparison of CFBPNN with other network models

CFBPNN outperforms FFNN and RNN, which makes it suitable for MADESANT. Compared with the state-of-the-art models in Table 8, CFBPNN stands out for its high accuracy, owing to the direct connection between the input and output layers. The Autoencoders (AEs) in our model further improve the results, since they are trained to extract the optimal features from the inputs. MADESANT addresses the non-recurrent property of FFNNs and the missing values in RNNs as part of its dual-purpose function. It calculates weights and biases and forwards the values to the next layer to fine-tune detection. Table 8 also shows that most models use feature selection techniques to choose the optimal feature set for detection accuracy. MADESANT, through severity calculation and risk-causing variables, achieves 100% accuracy in detecting multi-class attacks in the UNSW-NB15 dataset.

Papadogiannaki et al. [13] achieve 100% true negative results but have limited performance on real-time datasets and cannot identify known patterns. In contrast, MADESANT achieves 0% false rates across different attack classes. The DL-based integrated method of Zeng et al. [14] attains 99.85% accuracy, comparable to MADESANT, but cannot identify risk factors.

For clustering and classification, the anomaly-based detection models of Garcia et al. [15], Jing et al. [24], Kilincer et al. [21], and Sood et al. [18] are best suited to their specific use cases. MADESANT, on the other hand, demonstrates broader applicability through experiments on two popular datasets. Siddharthan et al. [19] create attack and normal samples using three Raspberry Pi devices. They test their model with popular machine learning techniques such as k-nearest neighbors, eXtreme Gradient Boosting, and random forest. MADESANT outperforms two common deep learning techniques, FFNN and RNN, in terms of accuracy.

Subramani et al. [20] use a distinctive feature selection approach based on vertical mode decomposition, tested on the Cloud Intrusion Detection Dataset (CIDD). It reaches 97.74% accuracy on vertically generated instances but struggles to accommodate changes in network patterns.

MADESANT demonstrates high recall rates and a lower false positive ratio compared to other models, minimizing the scope of error. The cascade model’s unique attribute of processing previous weight and bias values to subsequent hidden layers enhances detection rates and reduces errors, setting it apart from traditional neural network techniques. The experiments validate the model’s efficiency, achieving 100% accuracy with zero false rates for the IoT-23 dataset and 99.98% accuracy for the UNSW-NB15 dataset.

7 Conclusion

In conclusion, this study introduces MADESANT, a deep learning-based Intrusion Detection System designed to enhance detection in encrypted data environments. Using a cascade neural network model and a deep learning-based Autoencoder (AE) for feature extraction, MADESANT achieves accurate monitoring and robust detection of abnormal behavior. The model is evaluated on the IoT-23, UNSW-NB15, and X-IIoTID datasets. It reaches 100% accuracy on IoT-23, 99.8% on UNSW-NB15, and 99% on X-IIoTID. A notable innovation is MADESANT's risk factor analysis using a J48 decision tree for normal traffic, which contributes to the model's effectiveness.

MADESANT achieves 100% accuracy for the binary class. This enhances security by minimizing false positives and false negatives, and it enables prompt threat response, thereby improving operational resilience. Such precise detection helps mitigate potential damage and disruption to industrial operations and reinforces the overall security posture. In addition, the risk analysis supports targeted resource allocation, so that security efforts can be prioritized toward high-risk vulnerabilities.

In encrypted traffic scenarios, MADESANT excels in multi-class classification, achieving a 0% false rate for 4-class attacks and a 15.7% false rate for 9-class attacks. Overall, MADESANT outperforms existing models with a 20% improvement in accuracy and a 15% reduction in false rates. This versatility makes MADESANT well-suited for analyzing both encrypted and non-encrypted data traffic. Future work aims to extend experiments to other encrypted datasets, ensuring the generalization of results, and explore combinations of multiple neural network structures to further enhance accuracy.

Data availability

No datasets were generated or analysed during the current study.

References

Aycock, J.: Computer Viruses and Malware, Advances in Information Security. Springer, Berlin (2006)

State of Malware (2024). https://www.felipeprado1975.com/single-post/report-2024-state-of-malware-threatdown-malwarebytes. Accessed 6 Feb 2024

Sen, S., Spatscheck, O., Wang, D.: Accurate, scalable in-network identification of p2p traffic using application signatures. In: 13th International Conference on World Wide Web, WWW 2004. ACM, pp. 512–521 (2004)

Google: HTTPS encryption on the web. https://transparencyreport.google.com/https/. Accessed 10 Sep 2023

Anderson, B.: Hiding in plain sight: Malware’s use of TLS and encryption. Cisco Blogs. https://blogs.cisco.com/security/malwares-use-of-tls-and-encryption. Accessed 10 Sep 2023

Radware: Global application and network security report (2016–2017). https://www.radware.com/PleaseRegister.aspx?returnUrl=644245912 (2017). Accessed 10 Sep 2023

Dhirani, L.L., Armstrong, E., Newe, T.: Industrial IoT, cyber threats, and standards landscape: evaluation and roadmap. Sensors (Basel) 21(11), 1–30 (2021)

Altunay, H.C., Albayrak, Z.: A hybrid CNN+ LSTM based intrusion detection system for industrial IoT networks. Eng. Sci. Technol. 38, 1–13 (2023)

Tharewal, S., Mohammed, W.A., Sayyada, S.B., Perumal, U., Samar, M.H., Mohammad, S.: Intrusion detection system for industrial Internet of Things based on deep reinforcement learning. In: Wireless Communications and Mobile Computing, pp. 1–8 (2022)

Yazdinejad, A., Mostafa, K., Reza, M.P., Ali, D., Hadis, K.: An ensemble deep learning model for cyber threat hunting in industrial internet of things. Digit. Commun. Netw. 9(1), 101–110 (2023)

Syed, N.F., Mengmeng, G., Zubair, B.: Fog-cloud based intrusion detection system using recurrent neural networks and feature selection for IoT networks. Comput. Netw. 225, 1–14 (2023)

Sanju, P.: Enhancing intrusion detection in IoT systems: a hybrid metaheuristics-deep learning approach with ensemble of recurrent neural networks. J. Eng. Res. 11, 356–361 (2023)

Papadogiannaki, E., Tsirantonakis, G., Ioannidis, S.: Network intrusion detection in encrypted traffic. In: IEEE Conference on Dependable and Secure Computing (DSC), Edinburgh, pp. 1–8 (2022)

Zeng, Y., Gu, H., Wei, W., Guo, Y.: Deep-Full-Range: a deep learning based network encrypted traffic classification and intrusion detection framework. IEEE Access 7, 45182–45190 (2019)

Garcia, N., Alcaniz, T., González-Vidal, A., Bernabe, J.B., Rivera, D., Skarmeta, A.: Distributed real-time SlowDoS attacks detection over encrypted traffic using Artificial Intelligence. J. Netw. Comput. Appl. 173, 1–16 (2021)

Wadkar, M., Troia, F.D., Stamp, M.: Detecting malware evolution using support vector machines. Expert Syst. Appl. 143, 1–10 (2020)

Maddison, J.: Encrypted Traffic Reaches A New Threshold. Network Computing. https://www.networkcomputing.com/network-security/encrypted-trafficreachesnew-threshold. Accessed 20 Oct 2023

Sood, K., Nosouhi, M.R., Nguyen, D.D.N., Jiang, F., Chowdhury, M., Doss, R.: Intrusion detection scheme with dimensionality reduction in next generation networks. IEEE Trans. Inf. Forensics Secur. 18, 965–979 (2023)

Siddharthan, H., Thangavel, D.: A novel framework approach for intrusion detection based on improved critical feature selection in Internet of Things networks. Concurr. Comput. Pract. Exper. 35(1), 1–14 (2023)

Subramani, S., Selvi, M.: Multi-objective PSO based feature selection for intrusion detection in IoT-based wireless sensor networks. Optik 273, 1–15 (2023)

Kilincer, I.F., Tuncer, T., Ertam, F., Sengur, A.: SPA-IDS: an intelligent intrusion detection system based on vertical mode decomposition and iterative feature selection in computer networks. Microprocess. Microsyst. 96, 1–13 (2023)

Jorgensen, S., Holodnak, J., Dempsey, J., de Souza, K., Raghunath, A., Rivet, V., DeMoes, N., Alejos, A., Wollaber, A.: Extensible machine learning for encrypted network traffic application labeling via uncertainty quantification. IEEE Transactions on Artificial Intelligence (2023)

Ahmad, Z., Shahid Khan, A., Wai Shiang, C., Abdullah, J., Ahmad, F.: Network intrusion detection system: a systematic study of machine learning and deep learning approaches. Trans. Emerg. Telecommun. Technol. 32(1), 1–29 (2021)

Jing, X., Zhao, J., Yan, Z., Pedrycz, W., Li, X.: Granular classifier: building traffic granules for encrypted traffic classification based on granular computing. Digit. Commun. Netw. (2022) (in press)

Garcia, S., Parmisano, A., Erquiaga, M.J.: IoT-23: a labeled dataset with malicious and benign IoT network traffic. Technical report, Stratosphere Lab, Praha (2020)

Moustafa, N., Slay, J.: UNSW-NB15: a comprehensive data set for network intrusion detection systems (UNSW-NB15 network data set). In: Military Communications and Information Systems Conference (MilCIS), pp. 1–6. IEEE (2015)

Al-Hawawreh, M., Sitnikova, E., Aboutorab, N.: X-IIoTID: a connectivity-agnostic and device-agnostic intrusion data set for industrial Internet of Things. IEEE Internet Things J. 9(5), 3962–3977 (2021)

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement.

Author information

Contributions

The authors' specific roles are listed below; nevertheless, the work as a whole is the result of close coordination among all authors. PLS Jayalaxmi: Conceptualization, Methodology, Writing – original draft. Manali Chakraborty: Validation, Writing – review and editing. Rahul Saha: Investigation, Supervision, Writing – original draft. Gulshan Kumar: Data curation, Methodology, Formal analysis. Mauro Conti: Project administration, Writing – review and editing.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jayalaxmi, P.L.S., Chakraborty, M., Saha, R. et al. MADESANT: malware detection and severity analysis in industrial environments. Cluster Comput (2024). https://doi.org/10.1007/s10586-024-04527-y

DOI: https://doi.org/10.1007/s10586-024-04527-y