Abstract

Super-computing or HPC clusters are built to provide services to execute computationally complex applications. Generally, these HPC applications involve large scale IO (input/output) processing over the networked parallel file system disks. They are commonly developed on top of the C/C++ based MPI standard library. The MPI–IO performance of HPC clusters depends significantly on particular parameter value configurations, which are not generally considered when writing the algorithms or programs. This leads to poor IO and degrades overall program performance. The IO is mostly left to individual practitioners to be optimised at code level. This usually leads to unexpected consequences, because IO bandwidth degradation becomes inevitable as the file data scales in size to petabytes. To overcome the poor IO performance, this research paper presents an approach for auto-tuning of the configuration parameters by forecasting the MPI–IO bandwidth via artificial neural networks (ANNs), a machine learning (ML) technique. These parameters are related to the MPI–IO library and the Lustre (parallel) file system. In addition, we have identified a number of common configurations, out of numerous possibilities, that were selected in the auto-tuning process of READ/WRITE operations. These configurations yielded an overall READ bandwidth improvement of 65.7%, with almost 83% of test cases improved. The overall WRITE bandwidth improved by 83%, with almost 93% of test cases improved. This paper demonstrates that auto-tuning parameters via ANN predictions can significantly improve overall IO bandwidth performance.

1 Introduction

This research examines the use of artificial neural networks (ANNs) in optimisation of parallel high performance computing (HPC) input/output (IO). HPC or super-computing machines are normally clusters of many-core CPU nodes and storage disks. These are interconnected via fast LAN cables (e.g., InfiniBand, Intel OmniPath, etc.) as the medium of communication at hardware infrastructure level [1, 2]. Figure 1 shows a typical overview of an HPC cluster hardware structure. The diagram depicts multiple compute nodes connected with each other and with multiple storage devices over the fast network, which is the general structure of any HPC cluster. Parallelization has been highly effective in exploiting the power of such a cluster via the message passing interface (MPI) standard software library written on the C/C++ platform [3]. MPI is a distributed memory parallel programming framework that comes with a broad set of functionalities to utilize a cluster’s parallelism potential. When an application using MPI is built, the MPI–IO part within the program is often left out of consideration for optimization, which causes poor IO throughput and results in overall performance issues. Since the IO side needs to be manually tuned through different configurations for performance optimization, this creates an extra overhead for the user. It becomes even more challenging for the user to select particular settings without knowing the IO performance outcome. To overcome this challenge, in this research, we propose a technique to predict the IO bandwidth performance and auto-tune the related configuration parameters before an IO operation. This extra software layer between the user and the MPI–IO operation takes away the burden of manually tuning the configuration settings. Additionally, the ANN based ML prediction model supports the selection of new value settings that are expected to improve parallel IO performance.

Despite MPI being an efficient parallel computing framework, the parallel MPI–IO side of the HPC cluster suffers from application performance degradation. The first reason is the slow pace of advancement in IO storage hardware in contrast to computing hardware. The second reason is that, in HPC systems, parallel IO bandwidth depends on particular software-side parameters. These critical parameters are related to different components which support parallel IO on the clusters. Parallel IO and storage are normally managed at a low level by a specific parallel file system (PFS) in the clusters. In this research our PFS is the Lustre file system (LFS) [4]. The common factors which affect IO bandwidth performance are the number of MPI processes, the number of parallel disks, the file access patterns and other properties. These factors and IO optimizations are not generally taken into account by parallel application programmers. This results in poor IO and consequently overall application performance degradation. The parallel IO side is often neglected, and it has been left to researchers to devise manual tuning techniques for improving parallel IO at the software side, as has been done in the past [5, 6, 8]. These approaches suggest different strategies around data-alignment based configuration settings that improve IO but do not cover all the scenarios, as explained in [9]. In this research, we require an approach to optimize IO for the maximum possible number of scenarios within MPI–IO applications. To achieve this, we propose to auto-tune parameter settings from their current values, based on estimates of the maximum possible bandwidth, before any IO execution.

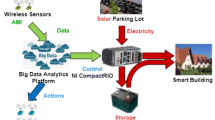

HPC and machine learning (ML) are crucial to areas such as Internet of Things (IoT) based smart city environments, which rely critically on efficient data IO and have shown recent advancement [10–12, 17, 18]. In this research, the ML technique is applied to HPC MPI–IO operations for bandwidth performance optimization. It is an extension of previous work that covered only the bandwidth prediction of MPI–IO operations over the related parameter settings [9]. In extending this previous research, our innovation lies in auto-tuning those parameters to optimized values on the basis of MPI–IO bandwidth prediction. Previous research shows significant benefits of ML based IO performance prediction and auto-tuning over different parameter settings in different experimental environments [19, 20, 21, 23]. This has provided the motivation to apply a similar approach to improve MPI–IO application bandwidth performance in this research scenario. Therefore, the prediction models are generated through the ML process and used in this research to support the auto-tuning approach. The work presented in [24, 25, 26, 28] explains the basic working of ANNs as an ML technique, their application to improving file access times in HPC, and the significant prediction accuracy of ANNs, especially when the models are constructed in the PyTorch framework. This provided us with further motivation to use an ANN based ML technique with the PyTorch API. As a result, the ANN models generated in this research have proven significantly beneficial for optimizing MPI–IO performance through auto-tuning parameter settings against the default configuration test cases.

Initially, we re-generated and re-executed the MPI–IO READ/WRITE benchmarks for bandwidth profiling over the related parameters, as done in [9]. This was to ensure that we replicated similar IO bandwidth patterns from varying the configuration settings. Then we re-generated separate ANN models for READ/WRITE operations, with prediction accuracy closely similar to the previous work. The further work presented in this research is innovative since we have designed our auto-tuning strategy based on those ANNs, which is the first key contribution of this research: an ANN ML based approach to select and auto-tune the parameter settings to obtain optimized bandwidth. The results have shown notable improvements in bandwidth through a detailed statistical analysis, which represents the second key contribution of this research. Finally, given the significant IO bandwidth performance outcomes, the most beneficial configurations have been noted and highlighted in detail as the third key contribution.

The results presented later in this paper demonstrate that the proposed auto-tuning strategy using ANN models contributes to a significant gain in IO performance. The remainder of this paper is structured as follows: Related Literature in Sect. 2, Research and Design Strategy in Sect. 3, Performance Evaluation in Sect. 4, Summary of the Work in Sect. 5 and Conclusion in Sect. 6.

2 Background and related research

We have explored earlier research studies that use ML based predictive IO modelling to address poor IO performance. This is primarily achieved by tuning related parameters on certain cluster environments. These studies motivated us to auto-tune our related parameters by predicting the maximum possible IO bandwidth performance for files striped across parallel networked Lustre disks in HPC clusters.

In [9], it was shown that the parameters relating to MPI, LFS and file properties can cause increases or decreases in IO bandwidth. Therefore, ANN models were created to predict IO bandwidth performance for the MPI–IO READ and WRITE operations scenarios. The models’ prediction accuracy values were 62.5% and 72.1% for READ and WRITE operations, respectively. The parallel HiPlots have also been used to visualize bandwidth changes with different parameters settings values [29].

In [19], the study demonstrates that parameters like the IO scheduler, number of IO threads and CPU frequency affect HPC–IO performance. The IO behaviour is predicted from these factors, treated as parameters, through extrapolation and interpolation techniques. This was completed by large-scale experiments using a data analytics framework. Their performance evaluations were conducted by prediction accuracy calculations with unseen testing system configurations. Afterwards, the system used a Bayesian Treed Gaussian process variability map with different regression techniques. This supported parameter selection through HPC variability management and insights from current statistical methods.

The method demonstrated in [20] allows for the adaptive scheduling of parallel IO requests of an application running within an HPC system. This was achieved by tuning the time window based parameters of the currently executing workload. This adaptive technique is implemented by the scheduler through reinforcement learning. It achieved 88% precision in parameter selection at runtime, after the system had observed and classified the access pattern using neural networks for a few minutes. Subsequently, the application's IO performance would be optimized by the system for its remaining lifetime, as declared in the study.

The work presented in [22] examines bandwidth predictive modelling for MPI WRITE collective operations via random forest regression. The prediction accuracy values are extremely high, ranging around 82–99% depending on the maximum depth setting. The data sets for training and testing were relatively small, which would require more examination. With greater variation in the data, the accuracy values could be significantly lower.

In [21], the IO performance optimization of parallel applications was proposed for HDF5 format files. It was tested on a range of varied HPC clusters employing LFS and the General Parallel File System (GPFS). The auto-tuning played a key role on the basis of predictive IO modelling. The predictive models were trained with Lustre IOR and other benchmark data using a nonlinear regression technique. The IO performance notably increased with the new parameter selection via auto-tuning. Comparatively, Reference [23] also demonstrated predictive IO modelling for LFS IOR benchmarks but using Gaussian process regression (GPR).

The work carried out in [26] predicts file access times on LFS storage disks. The file access times for a series of tests are recorded and used for developing prediction models. The evaluation shows that the generated ANNs yield 30% less error in average prediction as compared to linear predictive modelling. The distribution of file access times and its evaluation were conducted with regard to similar parameters used to access files. It was also discovered that the typical file access times usually differed by an order of magnitude, depending on the different IO paths.

Additionally, some other research studies in the area of MPI application optimization using ML prediction and auto-tuning parameters were inspected; however, the IO side was mostly ignored [30, 31].

3 Experimental design and implementation

This research has been conducted by applying a series of steps in sequence: (1) identification of the key parameters, (2) generation of benchmark data, (3) generation of two ANN based prediction models, one each for the READ and WRITE operations, (4) design and application of the auto-tuning strategy, (5) analysis of IO bandwidth improvement statistics and (6) identification of the common configuration settings selected by the auto-tuning system model. Figure 2 shows the main components that summarise the sequence of steps involved in our proposed approach with the experimental setup, further elaborated and explained in Listings 1 to 5.

3.1 Identification of key and tunable parameters

Initially, we must identify the key configuration parameters with fixed possible value settings. This is an essential step prior to the execution of READ/WRITE bytes of data from the file on disk in the series of benchmarks. Each benchmark execution should be completed in order to profile bandwidth, as an output against each READ/WRITE operation with a specific set of configuration value settings. This bandwidth profiling data is then split into separate training and testing sets, in an 80:20 ratio of the total benchmark results. This approach then allows us to train and validate our ANN models. It should be noted that configuration parameters are either tunable or non-tunable when considering them for auto-tuning later.

Table 1 contains the identified key tunable and non-tunable parameters against their corresponding value settings specified for benchmark execution. The total number of value settings available to benchmark is 30,720, which gives an indication of the scale of configurations for the ML process. However, the IO operation specified in this table is not strictly a configuration parameter. It only specifies that these configurations are executed for both READ and WRITE operations separately, and is therefore marked as “Not Applicable” from the tunability point of view. Arising from this, we have a total of 15,360 possible configurations (30,720/2) for each operation type (READ and WRITE). Thus, we have an intuitive process for the generation of datasets relating to both the READ and WRITE bandwidth patterns. These can then be modelled by two separate ANN models, one for each operation type.

To summarise, a single configuration setting row in a list of data set can be represented as follows:

where it runs for a READ and WRITE operation separately. Similarly, all configuration settings in that list are fetched and benchmarked iteratively and separately for both IO operations (READ/WRITE).

3.2 Generating MPI–IO benchmarks results data

In this section we describe the procedure for generating MPI–IO benchmarks in order to then use this as a training and testing set for our ANN models.

Listing 1 shows the method Generate_Benchmark_Results() used to generate IO bandwidth profiling data by means of benchmarking against each configuration setting. It takes Configuration_Settings as an argument, which holds the list of value settings for each parameter. The first step (Line 2) is to generate a complete list of all possible configuration settings to be benchmarked. Each row of MPI_IO_Configs_List has one set of configuration settings, as stated by the example mentioned in Sect. 3.1 previously.
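The enumeration step described above can be sketched as a Cartesian product over the per-parameter value lists. This is only an illustrative sketch: the parameter names and value lists below are hypothetical placeholders (the actual values are those of Table 1), and `generate_configs` is an assumed helper name, not the function from Listing 1.

```python
from itertools import product

# Hypothetical value lists for the seven configuration parameters.
# The real value settings are the ones listed in Table 1 of the paper.
configuration_settings = {
    "mpi_nodes": [1, 2, 4],
    "procs_per_node": [1, 2],
    "stripe_count": [1, 4],
    "stripe_size": [65536, 1048576],
    "file_size": [1 << 20],
    "chunk_size": [4096, 65536],
    "access_pattern": ["collective", "non-collective"],
}


def generate_configs(settings):
    """Return one dict per combination of parameter values."""
    names = list(settings)
    return [dict(zip(names, values)) for values in product(*settings.values())]


configs = generate_configs(configuration_settings)
print(len(configs))  # 3 * 2 * 2 * 2 * 1 * 2 * 2 = 96
print(configs[0])    # first row: the first value of every parameter
```

Each element of `configs` plays the role of one row of the configuration list, which would then be benchmarked once for READ and once for WRITE.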

During the execution of MPI–IO benchmarks, the MPI processes read/write the corresponding file chunks. In the case of READ operations, the file is generated prior to execution and striped over the given stripe count of LFS disks with the specified stripe size value. This is stated from Lines 11 to 16. For WRITE operations these Lustre settings are applied to an empty file beforehand.

When the file is written on disks it is distributed over the same stripe count of disks with the stripe size value. A single MPI read/write execution can be collective or non-collective. In a collective operation each process accesses the address space of other running parallel processes, therefore making a non-contiguous access. In a non-collective operation, by contrast, each MPI process has access to its own address space only and keeps the access contiguous.

Before every benchmark execution the pre-benchmark settings are applied with regard to LFS striping, as mentioned earlier, and the Darshan utility. Darshan is a tool for HPC–IO characterization that captures bandwidth during execution [32]; this setting is applied on Line 18.

At this point, we apply pre-benchmark settings as a means of measuring the bandwidth performance of a specific configuration with regard to specific file striping. Once the execution of a configuration set completes on Line 21, the IO bandwidth performance is captured by Darshan and parsed for retrieval on Line 24. The benchmarked bandwidth value for each IO execution, together with its configuration parameter value settings, is appended to the end of a file in YAML dictionary style format on Line 25.

Afterwards, the post-benchmark setting is applied to that benchmarked file by deleting it to free the cache, on the last Line 28. This completes a single benchmark iteration over a configuration set of parameter settings for each READ and WRITE operation.

Figure 3 shows the benchmark results which depict the bandwidth patterns for all configuration settings available. In general, the bandwidth values increase with respect to the increasing number of MPI nodes and processes per node.

3.3 Creation and development of ANN models

In this section, we present the training and development of ANN models that are used for bandwidth predictions. These ANNs have two hidden layers with 256 and 128 nodes respectively, as outlined in Table 2. In all models, the input layer has 7 nodes for the 7 input feature parameters from Table 1, and the output layer has 1 node for the single output feature, the predicted IO bandwidth value. Table 3 shows the mappings of the 7 input layer nodes to the 7 configuration parameters. The 1 output layer node maps to the IO bandwidth value. The IO operation parameter from Table 1 is excluded because the models are created separately for READ and WRITE operations. Therefore, we have two distinct ANN models, \(ANN_{READ}\) and \(ANN_{WRITE}\). Table 4 lists the values of the hyper-parameters used during ANN training to support the accuracy during validation.

The ANN models are developed and trained using PyTorch [27] through the pseudo-code ANN_Training() in Listing 2. The hyper-parameters values are applied according to Table 4. Prior to the ANNs training, the MPI–IO benchmarks results data is scaled using MaxAbsScaler [33]. Afterwards, data is shuffled and split between training and testing sets. For each ANN model 80% of benchmarks results are used for training purposes and 20% for testing.
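The pre-processing described above (max-abs scaling, then a shuffled 80:20 split) can be illustrated with a small standard-library sketch. It mirrors what `MaxAbsScaler` does conceptually, dividing each column by its largest absolute value, but it is not the scikit-learn implementation; the function names, the toy rows and the fixed seed are assumptions made only for this example.

```python
import random


def max_abs_scale(rows):
    """Divide every column by its largest absolute value (max-abs scaling)."""
    n_cols = len(rows[0])
    # A column of zeros keeps a scale of 1.0 to avoid division by zero.
    scales = [max(abs(r[j]) for r in rows) or 1.0 for j in range(n_cols)]
    scaled = [[r[j] / scales[j] for j in range(n_cols)] for r in rows]
    return scaled, scales


def shuffle_split(rows, train_fraction=0.8, seed=0):
    """Shuffle a copy of the rows and split it into training and testing sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * train_fraction)
    return rows[:cut], rows[cut:]


# Toy stand-in for benchmark rows (features plus bandwidth as the last column).
rows = [[float(i), float(10 * i), float(-2 * i)] for i in range(1, 11)]
scaled, scales = max_abs_scale(rows)
train, test = shuffle_split(scaled)

print(scales)                  # [10.0, 100.0, 20.0]
print(len(train), len(test))   # 8 2
```

Keeping `scales` around matters because predicted bandwidth values have to be multiplied back by the bandwidth column's scale before they can be compared with the profiled values.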

Initially, Lines 5 to 14 initialize the ANN model with random weights, where 0.05% dropout is applied on the hidden layers in the case of training \(ANN_{WRITE}\), and 0.00% for \(ANN_{READ}\). Additionally, the Rectified Linear Unit activation function [nn.ReLU()] is applied from layer to layer in order to get the expected decimal value at the output [34]. The loss function is defined as MSELoss() on Line 16 to compute the loss between actual and predicted values during training, which is the standard mean squared error, MSE [35]. The other hyper-parameters, a learning rate of 0.002 and a weight decay of \(1e{-5}\), are applied on Line 18, where Adam is used as our gradient descent optimizer. Then X and y are set as the input and output features from the training set on Lines 20 and 21.

Subsequently, Lines 23 to 34 control the main loop, which trains the ANN model until the specific number of iterations defined as MAX_Limit is reached. During each loop iteration, the bandwidth value is predicted on Line 25. Then, Line 27 computes the loss as the MSE value. The Adam optimizer zeros the gradients on Line 29 before the back propagation can occur. Once complete, the loss is propagated backward on Line 31 and the optimizer updates the weights, which are eventually updated by the model calling its train function on Line 34.

Once the model is trained it is saved to a “.pt” file on Line 37. It is then loaded back into memory at the time of prediction to support the auto-tuning process later.

The ANN models run on the test set, and the predicted values are re-scaled to their original values to compute accuracy with the profiled benchmark bandwidth values. The prediction accuracy denoted by P.A for both ANN models, is defined by the following equations:

where y and r are the actual and predicted bandwidth values, respectively, n is the total number of values, and i denotes the i-th row of X, y and r. Thus, \(y_{i}\) is the i-th actual bandwidth value and \(r_{i}\) is the i-th predicted bandwidth value, computed by running model() on \(X_{i}\), the i-th set of configuration parameter values.

The prediction accuracy of \(ANN_{READ}\) is \(\approx 63\)%, and that of \(ANN_{WRITE}\) is \(\approx 79\)%. Figure 4 shows the model predictions on 50 randomly selected configuration settings for each of the READ and WRITE operations. It is evident from these figures that both ANN models are well fitted for future predictions on unseen configuration data. Later on, these models play a vital role in auto-tuning the parameters required for overall bandwidth performance gain.

3.4 Complete auto-tuning design applied to test cases

In this section, we will outline the complete auto-tuning process and procedure to collect our statistical evaluation data relating to performance. The ANNs which we have previously described, can be used for auto-tuning configuration parameters.

It should be noted that a number of parameters are tunable with respect to the IO operation (READ or WRITE), and the remaining ones are non-tunable, as indicated in the third column of Table 1.

The tunable parameters are marked “Yes”, and non-tunable parameters are marked “No” with respect to the READ/WRITE operation. The common non-tunable parameters in both IO operations are Number of MPI nodes, MPI processes on each node and File size. The reason is that MPI nodes and processes cannot be altered once the application is initialized, and the file size cannot be changed because it is a user requirement. In the case of the READ operation the tunable parameters are Chunk size and File access pattern. The two additional non-tunable parameters are Lustre stripe count and Lustre stripe size. As the file to be READ will already be distributed over a specific number of Lustre disks, re-striping at runtime will not affect the file distribution pattern on disks. Re-writing the file with new striping so that it can subsequently be read is not optimal behaviour, as it adds unnecessary IO overhead. For WRITE operations, by contrast, the tunable parameters are Lustre stripe count, Lustre stripe size, Chunk size and File access pattern. All these configurations can affect WRITE bandwidth at runtime.

Considering the tunability of the configuration parameters, the summarized steps to complete the execution flow are: (1) retrieve the currently applied set of parameter settings, (2) predict the IO bandwidths for the current and all other possible settings of the tunable parameters specified in Listing 3, with the given non-tunable parameter settings, (3) compare all the predicted bandwidth values with each other, (4) select the settings with the maximum predicted IO bandwidth and (5) apply the selected settings to configure the tunable parameters. These steps are further elaborated in Listings 3 and 4.

3.4.1 Parameters selection

In this section, we present a procedure on how to select new parameters settings that can predict maximum bandwidth.

The function Select_Parameters() in Listing 3 returns the new tunable configuration settings. It takes as arguments the given current settings, the IO operation (READ or WRITE) and the file path to the saved ANN model (“*.pt”). Line 1 imports the torch package, which is used to load the saved and trained ANN model in PyTorch on Line 5. Lines 9 to 11 load the current settings into the variable X, set max_bandwidth to the value predicted by model() on the current settings X, and store the current settings in max_settings. Lines 14 to 17 represent the possible value settings for tunable parameters for both IO operations, also specified in Table 1.

The mechanism to select new configuration settings is to check every possible combination of tunable parameter values in nested loops on Lines 20 to 23. The stripe_counts and stripe_sizes are checked when the current IO operation is WRITE, from Lines 26 to 28. The bandwidth is predicted on Line 29, and comparing the new bandwidth value with the current max_bandwidth value yields the maximum possible bandwidth and its configuration settings in max_settings, as on Lines 30 to 32. If the current IO operation is READ then the last two nested loops for checking stripe_count and stripe_size values are not required. In that case, the break statements are applied on Lines 33 and 34, respectively, to terminate the loops for the READ operation.

Finally, the new configurations predicting the maximum possible IO bandwidth are returned on Line 35. These new settings are returned to the code shown in Listing 4. According to the steps in Listing 3, it is possible that parameters may not be tuned to new values. This happens when, after checking the other value settings in range, the existing configuration already predicts the maximum possible IO bandwidth.
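The selection logic described in this section amounts to an exhaustive search over the tunable parameters, keeping the candidate with the highest predicted bandwidth. The sketch below illustrates that idea under several assumptions: the candidate value lists are hypothetical, the trained PyTorch model is replaced by an arbitrary `predict` callable (here a toy function), and `itertools.product` is used in place of the nested loops and break statements of Listing 3.

```python
from itertools import product

# Hypothetical candidate values; the real ones come from Table 1.
TUNABLE_VALUES = {
    "chunk_size": [4096, 65536, 1048576],
    "access_pattern": [0, 1],
    "stripe_count": [1, 4, 16],
    "stripe_size": [65536, 1048576],
}

# Which parameters may be changed for each IO operation (Sect. 3.4).
TUNABLE_BY_OP = {
    "READ": ["chunk_size", "access_pattern"],
    "WRITE": ["chunk_size", "access_pattern", "stripe_count", "stripe_size"],
}


def select_parameters(current, io, predict):
    """Return the settings with the highest predicted bandwidth.

    Non-tunable parameters are copied unchanged from `current`; if no
    candidate beats the current settings, they are returned as-is.
    """
    names = TUNABLE_BY_OP[io]
    max_settings = dict(current)
    max_bandwidth = predict(current)
    for values in product(*(TUNABLE_VALUES[n] for n in names)):
        candidate = dict(current, **dict(zip(names, values)))
        bandwidth = predict(candidate)
        if bandwidth > max_bandwidth:
            max_bandwidth, max_settings = bandwidth, candidate
    return max_settings, max_bandwidth


def toy_predict(s):
    """Toy stand-in for the ANN: larger chunks and more stripes score higher."""
    return s["chunk_size"] / 1024 + 10 * s["stripe_count"]


current = {
    "mpi_nodes": 4, "procs_per_node": 8, "file_size": 1 << 30,
    "chunk_size": 4096, "access_pattern": 0,
    "stripe_count": 1, "stripe_size": 65536,
}
best_read, bw_read = select_parameters(current, "READ", toy_predict)
best_write, bw_write = select_parameters(current, "WRITE", toy_predict)
print(best_read["chunk_size"], best_read["stripe_count"])    # 1048576 1
print(best_write["chunk_size"], best_write["stripe_count"])  # 1048576 16
```

For READ the striping values never change because they are not in the search space, which has the same effect as the break statements described above.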

3.4.2 Auto-tuning IO operations

In this section we present the auto-tuning procedure on the MPI side, based on the new parameter values selection. After the selection of these new parameter value settings, they are used to tune the configurations before executing parallel IO.

Listing 4 gives an overview of how the auto-tuning task is carried out at MPI application level in the function Auto_tune_and_run_IO(). It takes the current settings, the intended IO operation, the target file path or name f_name, and the ANN model file path as arguments on Line 3. Line 5 initializes the MPI space and environment using MPI_Init(). Subsequently, Line 9 returns the current process ID or rank. The current settings, IO operation and model file path are passed on Line 13 to the method Select_Parameters(), defined in Listing 3. The Select_Parameters() function executes only if the current process ID or rank is 0, as checked on Line 12. It returns the new parameter values in max_settings, predicting the maximum possible bandwidth with the given non-tunable parameters.

Until max_settings is returned, all other processes wait on Line 16. This is enforced by the MPI_Barrier() method, which is explained in [36]. Once the new values are returned to the rank 0 process, it sends the max_settings object as a message to the other MPI processes or ranks. Those ranks receive the message in their respective local max_settings object. This is done on Line 17 using the MPI broadcasting method MPI_Bcast(), also explained in [36].

At this point, all processes will have received their max_settings. Therefore, each process updates its configurations with a new set of value settings (Lines 19 to 20). The access_pattern and chunk_size are required by all processes to read or write the file with same access pattern and number of bytes. While the stripe_count and stripe_size are updated for all processes, they are only required at the rank 0 process when IO operation is WRITE. This is essential, for avoiding a race condition on the same file when applying a new file striping order (Lines 22 to 24). In the case of the Rank 0 process, this removes the previous stripe settings by removing the existing empty file on Line 23 via Remove_Previous_File() method. Then the new stripe settings are applied on the file (Line 24) using the Apply_New_Lustre_Striping() method.
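The rank 0 re-striping step can be sketched as follows. The source does not show how Remove_Previous_File() and Apply_New_Lustre_Striping() are implemented, so this is an assumption: it builds the standard Lustre `lfs setstripe` command with its `-c` (stripe count) and `-S` (stripe size) options, and the function names, the example path and the `dry_run` flag are hypothetical. With `dry_run=True` (the default here) nothing is executed, so the sketch runs without a Lustre installation.

```python
import os
import subprocess


def lustre_stripe_command(path, stripe_count, stripe_size):
    """Build the `lfs setstripe` command for an (empty) target file."""
    return ["lfs", "setstripe", "-c", str(stripe_count), "-S", str(stripe_size), path]


def apply_new_lustre_striping(path, stripe_count, stripe_size, rank, dry_run=True):
    """Only rank 0 touches the file, so no two processes race on its layout."""
    if rank != 0:
        return None
    cmd = lustre_stripe_command(path, stripe_count, stripe_size)
    if not dry_run:
        if os.path.exists(path):
            os.remove(path)              # drop the file carrying the old striping
        subprocess.run(cmd, check=True)  # recreate it empty with the new layout
    return cmd


# Hypothetical path and values, shown in dry-run mode.
print(apply_new_lustre_striping("/lustre/scratch/out.dat", 16, 1048576, rank=0))
print(apply_new_lustre_striping("/lustre/scratch/out.dat", 16, 1048576, rank=3))  # None
```

In an MPI program every rank would call this function and then synchronise on a barrier, so that the WRITE starts only after rank 0 has recreated the file.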

It should be noted here that all processes except rank 0 wait again on Line 27 while the rank 0 process applies the new Lustre stripe settings to the file. This creates a new empty file on the same path with the new stripe settings. When the WRITE operation is executed afterwards by all processes, the file will be written with the new settings applied to it. On completion, the bandwidth is recorded using Darshan, as mentioned earlier. Listing 5 also shows the method defined in Listing 4 being used to auto-tune the default configuration test cases.

3.4.3 Statistical data collection upon auto-tuning test cases

In this section we present the experimental setup for auto-tuning the test cases and collecting the key statistical values for our system bandwidth performance. The working functionalities in Listings 3 and 4 perform auto-tuning on default configuration test cases, as mentioned in Table 5. These default test cases with parameters values settings make a total of 1458 configuration settings to be tested. The tunability of parameters is exactly the same as in Table 1.

The purpose of auto-tuning a number of default test cases is to analyze the improvement in IO bandwidth in terms of percentage, through the use of ANN models. The bandwidth values have been collected using the Darshan characterization tool, upon auto-tuning the test cases. Listing 5 presents the methodology to collect all this necessary statistical data for performance evaluation presented later in this paper.

It should be noted that while we trained the ANN models on smaller file size and chunk size values, in our evaluation approach, we used larger file and chunk size values in our test cases. These are shown in our default configuration test cases to auto-tune in Table 5.

Multiplying the numbers of value settings results in 1458 possible combinations of the default configuration settings in one complete list. The description of the steps to collect statistics on IO performance improvement starts with the function definition of Stats_Data_Collection(). This method takes three arguments: (1) Default_Configurations, the lists of all default parameter values, (2) io, the READ/WRITE operation and (3) model_path, the path of the ANN model (“.pt”) file to use. This is followed by generating a complete list of possible default configuration settings in X on Line 5, such that each element of X is a set of different parameters with values, as stated earlier by the example in Sect. 3.1. Then Line 6 stores the length of that list in n. On Line 7 the Repetitions count is set to 3, which controls the iterations of the nested loop on Line 12. This determines the repeated execution of the default configurations and the auto-tuned configurations, respectively.

Line 9 initiates the main loop to process default and auto-tuned configuration settings against each set of parameters in X. Lines 10 and 11 create the default_bandwidth and tuned_bandwidth lists. The purpose of these lists is to save the bandwidths of the default settings and of the auto-tuned settings after their execution. The bandwidth values of the default and auto-tuned configuration settings are saved in their respective lists three times from Lines 14 to 27. The three repetitions mitigate against individual variations across bandwidth values, whatever their cause, by providing an average. After this inner loop, both bandwidths are averaged and appended to the old_bandwidths and new_bandwidths lists, respectively, on Lines 29 and 30. The bandwidth improvement or dis-improvement count is also maintained in c_im and c_di on Lines 31 and 32, respectively.

Once the main loop is finished, all the default and auto-tuned bandwidths are averaged on Lines 34 and 35. The overall bandwidth improvement in percentages is calculated (Lines 37 to 38). Then all remaining statistics are calculated (Lines 40 to 51). These include percentages of improved and dis-improved test cases, maximum, minimum, median, standard deviation and variance in both default and auto-tuned bandwidth values for comparison. We provide a comprehensive study of performance in the next section using the data gathered in these steps.
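The collection procedure described above can be sketched in Python as follows. This is only an illustrative outline, not the authors' listing: the io and model_path arguments are replaced here by two hypothetical callables, run_default and run_tuned, which are assumed to execute one IO operation and return its bandwidth. Counting ties as dis-improvements and using population statistics are also assumptions.

```python
import itertools
import statistics


def summarise(bandwidths):
    """Descriptive statistics for one list of averaged bandwidths."""
    return {
        "mean": statistics.mean(bandwidths),
        "max": max(bandwidths),
        "min": min(bandwidths),
        "median": statistics.median(bandwidths),
        "std": statistics.pstdev(bandwidths),        # population form (assumption)
        "variance": statistics.pvariance(bandwidths),
    }


def stats_data_collection(default_configurations, run_default, run_tuned,
                          repetitions=3):
    """Compare default and auto-tuned bandwidths over every default configuration.

    run_default and run_tuned are hypothetical callables that take one
    configuration (a dict) and return a measured bandwidth.
    """
    # The Cartesian product of the per-parameter value lists gives the list X.
    names = list(default_configurations)
    X = [dict(zip(names, values))
         for values in itertools.product(*(default_configurations[n] for n in names))]
    n = len(X)

    old_bandwidths, new_bandwidths = [], []
    c_im = c_di = 0
    for config in X:
        # Repeat every measurement to smooth out run-to-run variation.
        default_bandwidth = [run_default(config) for _ in range(repetitions)]
        tuned_bandwidth = [run_tuned(config) for _ in range(repetitions)]
        old = statistics.mean(default_bandwidth)
        new = statistics.mean(tuned_bandwidth)
        old_bandwidths.append(old)
        new_bandwidths.append(new)
        if new > old:
            c_im += 1
        else:
            c_di += 1  # ties are counted as dis-improvements (assumption)

    mean_old = statistics.mean(old_bandwidths)
    mean_new = statistics.mean(new_bandwidths)
    return {
        "cases": n,
        "improved_pct": 100.0 * c_im / n,
        "disimproved_pct": 100.0 * c_di / n,
        "overall_improvement_pct": 100.0 * (mean_new - mean_old) / mean_old,
        "default": summarise(old_bandwidths),
        "tuned": summarise(new_bandwidths),
    }
```

Passing the measurement routines in as callables keeps the sketch independent of any particular MPI or PyTorch installation, so the bookkeeping can be checked with synthetic bandwidth values.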

4 Experimental results and evaluation

In this section, we outline the runtime complexity analysis of using ANNs predictions for parameter selection, the improvement results in READ/WRITE bandwidth, achieved by auto-tuning the default configuration test cases in Table 5, and the common configuration settings applied by the system model during the auto-tuning process.

The auto-tuning of the test cases was conducted on 16 compute nodes of KAY, a cluster of Intel Xeon Gold 6148 (Skylake) processors, using 16 of its LFS disks as IO object storage targets (OSTs) [37]. The ANN models were trained and tested using the tensor constructs of PyTorch on NVIDIA Tesla V100 GPU cards [27, 38].

4.1 Runtime complexity analysis of ANNs predictions

Since this procedure of parameter value selection uses a brute-force check of all combinations, its runtime cost should be analysed. Line 29 of Listing 3 runs the ANN feed forward propagation pass to predict the bandwidth value using model(X). In our case, the pass involves three steps: (1) input layer to Hidden Layer 1, (2) Hidden Layer 1 to Hidden Layer 2 and finally (3) Hidden Layer 2 to output layer. Table 7 presents all the steps involved, alongside the number of multiplications (Mul.) and additions (Add.) in each.

In the first step, there are roughly 3584 multiplications and additions. Similarly, in the second step there are approximately 65,536 computations. This is the most expensive phase of the feed forward propagation process in terms of both computation and memory consumption, because the hidden layers have the greatest number of nodes and the weight matrix between them has the largest memory allocation.

Finally, the third and last step performs 256 computations. Summing these gives 69,376 computations in total for a single feed forward propagation pass to predict one value. For a READ operation, the forward propagation pass runs 8 (\(4\times 2\)) times, giving a total of 555,008 computations to select the parameters that predict maximum bandwidth. For a WRITE operation, the forward propagation pass runs 160 (\(4\times 2\times 4\times 5\)) times, giving a total of 11,100,160 computations to select the parameters. This follows the code logic of Listing 3.
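The totals above can be reproduced with a few lines of Python. The layer widths used here (7, 256, 128 and 1) are an assumption inferred from the per-step counts quoted in the text, not values taken from the paper's tables, and each product is assumed to be paired with exactly one addition.

```python
# Layer widths are an assumption inferred from the counts quoted in the text.
LAYERS = [7, 256, 128, 1]


def step_costs(layers):
    """Multiplications plus additions for each layer-to-layer step."""
    costs = []
    for fan_in, fan_out in zip(layers, layers[1:]):
        multiplications = fan_in * fan_out
        # Assumption: one addition per product (accumulation plus bias).
        additions = fan_in * fan_out
        costs.append(multiplications + additions)
    return costs


per_step = step_costs(LAYERS)             # cost of steps 1, 2 and 3
per_pass = sum(per_step)                  # one feed forward pass
read_total = per_pass * (4 * 2)           # 8 passes for a READ selection
write_total = per_pass * (4 * 2 * 4 * 5)  # 160 passes for a WRITE selection

print(per_step, per_pass, read_total, write_total)
```

Under these assumptions the script prints the same step counts and totals as stated above, which suggests the quoted figures count one multiplication and one addition per weight.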

How fast these millions of computations can be processed depends on the memory resources used during the feed forward propagation. Table 6 shows the matrices and the memory space used during a forward propagation pass. These matrices are part of the ANN model, which is created with the default data type float32, a 32-bit (4-byte) floating point number. As can be seen, seven matrices are used in a feed forward propagation pass.

The total memory required by the ANN model is 140,320 bytes, or almost 137 KiB. If the model is created using a 64-bit (8-byte) double precision floating point number, this value doubles to almost 274 KiB. With either floating point data type, however, these matrices fit easily in the CPU cache memory. Therefore, running the millions of computations stated earlier takes a negligible execution time, on the order of \(10e^{-4}\) s, including the loading time of the required libraries. However, for a first time execution, the program loading can take around 30 s. Afterwards, it reduces to less than a second, as tested on one of KAY’s compute nodes [37].
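The memory figure can be checked in the same spirit. The sketch below assumes layer widths of 7, 256, 128 and 1 (inferred from the quoted totals, not stated in this section) and counts seven arrays: four activation vectors and three weight matrices. Leaving out bias vectors is likewise an assumption made so that the result matches the quoted total.

```python
# Layer widths are an assumption inferred from the totals quoted in the text.
LAYERS = [7, 256, 128, 1]


def forward_pass_bytes(layers, bytes_per_value=4):
    """Bytes held by the activation vectors and weight matrices of one pass."""
    activations = sum(layers)  # input, two hidden and output vectors
    weights = sum(a * b for a, b in zip(layers, layers[1:]))  # three matrices
    return (activations + weights) * bytes_per_value


float32_bytes = forward_pass_bytes(LAYERS, bytes_per_value=4)
float64_bytes = forward_pass_bytes(LAYERS, bytes_per_value=8)

print(float32_bytes, round(float32_bytes / 1024, 1))  # bytes and KiB for float32
print(float64_bytes, round(float64_bytes / 1024, 1))  # bytes and KiB for float64
```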

For simplicity, we coded the parameter selection logic as a separate Python script that uses the PyTorch module. This script interfaces with the calling MPI based C++ program, which applies the new configuration parameter settings and runs the READ or WRITE operation, as stated in Listing 4. Alternatively, the trained ANN model could be coded in the same MPI based program by using the C++ version of the PyTorch library. This is more complex than Python scripting, but it could be even faster because it avoids loading an extra program while the main program is already executing.
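The selection logic amounts to an exhaustive search over the candidate values of the tunable parameters, keeping the combination with the highest predicted bandwidth. The following is a minimal sketch of that idea rather than the script used in this work: the candidate values, the parameter names and the toy_predict function are all hypothetical, and in practice the predictor would wrap a forward pass of the trained ANN model.

```python
import itertools


def select_parameters(fixed, candidates, predict):
    """Return the candidate combination with the highest predicted bandwidth.

    fixed      -- non-tunable parameters of the IO operation (dict)
    candidates -- tunable parameter name -> list of values to try (dict)
    predict    -- callable mapping a full configuration to a bandwidth
    """
    names = list(candidates)
    best_config, best_bandwidth = None, float("-inf")
    for values in itertools.product(*(candidates[n] for n in names)):
        config = {**fixed, **dict(zip(names, values))}
        bandwidth = predict(config)
        if bandwidth > best_bandwidth:
            best_config, best_bandwidth = config, bandwidth
    return best_config, best_bandwidth


# Hypothetical READ candidates: 4 chunk sizes x 2 access patterns = 8 predictions.
READ_CANDIDATES = {
    "chunk_size_mib": [256, 512, 1024, 2048],
    "collective": [False, True],
}


def toy_predict(config):
    """Stand-in for the ANN: favours large chunks and non-collective access."""
    penalty = 0.5 if config["collective"] else 1.0
    return config["chunk_size_mib"] * penalty


best, predicted = select_parameters({"io": "READ"}, READ_CANDIDATES, toy_predict)
print(best, predicted)
```

Because the search space is small (8 combinations for READ and 160 for WRITE, as stated above), a plain loop is sufficient and no smarter search strategy is needed.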

4.2 READ auto-tuning and common configurations analysis

In this section, we provide a detailed discussion of the auto-tuning performance with respect to READ operations.

Table 8 shows the READ improvements after executing the procedure represented in Listing 5. The first observation is the number of test cases that improved during the testing process: 1206, or 82.7% of the total cases (1458). On further examination, we observe that the bandwidth values also show significant improvements. The mean bandwidth value of the tuned settings is 62,630.5 MiB/s, which is 1.65\(\times\) the mean bandwidth value of the default settings, 37,798.1 MiB/s. This is a clear indication of how significant our tuning approach can be with respect to the critical metric of bandwidth. The overall READ bandwidth improvement is therefore approximately 65.7%, a significant performance gain. The remaining statistics give the maximum, minimum, median and standard deviation of the bandwidth values for the default configurations against the tuned configurations.
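As a worked check, added here for illustration and using only the values quoted above, the overall improvement and the share of improved cases follow from the two means and the case counts:

\[ \frac{62{,}630.5 - 37{,}798.1}{37{,}798.1}\times 100 \approx 65.7\%, \qquad \frac{1206}{1458}\times 100 \approx 82.7\% \]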

Furthermore, Fig. 5 plots the default versus tuned bandwidths across all 1458 test configuration settings. It can be seen that the auto-tuned bandwidths are far higher than the default bandwidths in many cases.

The ANN predictions have driven the auto-tuning process and deliver this significant READ bandwidth optimization. It is also worth noting which auto-tuned parameter values were commonly selected throughout the test cases. Table 9 shows the performance of the three common tuned parameter settings versus the default configuration test cases. This performance is stated in terms of the relative improvements and dis-improvements. When examining the tuned configurations, we note that the first uses a chunk size of 1 GiB and a non-collective file access pattern.

This setting was selected in the majority of test cases, 1332 times. It resulted in 1156 occurrences of improvement and 176 occurrences of dis-improvement. Therefore, probabilistically, this configuration setting has an 86.8% chance of improving bandwidth and a 13.2% chance of dis-improving it.

The second frequently tuned setting involved a chunk size of 2 GiB with the non-collective file access pattern. This setting was selected 114 times in which it yielded 48 improvements and 66 dis-improvements. In this case, the model prediction resulted in a 42% chance of improvement and a 58% chance of dis-improvement.

The third and last setting selected for auto-tuning was a chunk size of 1 GiB with the collective file access pattern. This was selected the least number of times, showing 2 improvements and 10 dis-improvements. This indicates a 16.7% chance of bandwidth improvement. Therefore, it is the most unfavourable setting to apply in auto-tuning.

We note that the non-collective file access pattern was a common factor in the improvements recorded. The non-collective access setting was selected in most test cases and proved successful with two different chunk size values. Taken together, these selections yielded close to an 83% chance of improving bandwidth according to Table 9. Therefore, according to our findings, non-collective access is the most favourable pattern for READ operations with a view to maximising bandwidth.
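The percentages discussed for the three READ settings can be recomputed directly from the improvement and dis-improvement counts quoted above. The short script below is an illustrative check only; the dictionary layout and the function name are our own choices.

```python
# (chunk size, access pattern) -> (improved, dis-improved), as quoted in the text.
READ_SETTINGS = {
    ("1 GiB", "non-collective"): (1156, 176),
    ("2 GiB", "non-collective"): (48, 66),
    ("1 GiB", "collective"): (2, 10),
}


def improvement_chance(improved, disimproved):
    """Percentage of selections of a setting that improved bandwidth."""
    return 100.0 * improved / (improved + disimproved)


per_setting = {key: round(improvement_chance(*counts), 1)
               for key, counts in READ_SETTINGS.items()}

# Pool the two non-collective settings to get their combined chance.
nc_improved = sum(i for (_, pattern), (i, _) in READ_SETTINGS.items()
                  if pattern == "non-collective")
nc_total = sum(i + d for (_, pattern), (i, d) in READ_SETTINGS.items()
               if pattern == "non-collective")
non_collective_chance = round(100.0 * nc_improved / nc_total, 1)

total_cases = sum(i + d for i, d in READ_SETTINGS.values())
total_improved = sum(i for i, _ in READ_SETTINGS.values())

print(per_setting, non_collective_chance, total_improved, total_cases)
```

The three settings together account for all 1458 test cases and all 1206 improvements, so the per-setting counts are consistent with the overall READ result.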

4.3 WRITE auto-tuning and common configurations analysis

This section presents a series of results related to MPI WRITE operation optimization. It is evident from Table 10 that the majority of test cases improved when auto-tuning was applied. The number of overall improvements observed was 1353, which is 92.8% of the total number (1458) of default configuration test cases. The remaining data show the mean bandwidth values of the default and tuned configurations. The mean bandwidth of the tuned configuration cases is 9798.1 MiB/s, which is 1.83\(\times\) the mean bandwidth of the default configurations (5346.9 MiB/s). These figures indicate an overall bandwidth improvement of 83.2%. This shows that the WRITE bandwidth has been significantly optimized by our ANN prediction based auto-tuning strategy.

We note that the maximum tuned bandwidth is greater than the maximum default bandwidth. A significant increase can also be seen in the minimum and median bandwidth values from the default to the tuned settings. The minimum value increased by almost 49\(\times\) and the median value by 2.2\(\times\) due to auto-tuning. The standard deviations remain comparable between the default and auto-tuned results.

These observations are supported by the data in Fig. 6, which shows the default versus tuned bandwidths. The auto-tuned bandwidths are consistently higher than the default bandwidths in the majority of cases. Therefore, it is safe to conclude that auto-tuning based on the ANN predictions yields a significant gain in WRITE bandwidth.

The configuration settings most commonly selected by our auto-tuning process for WRITE operations are shown in Table 11. This table shows the five common settings applied during the auto-tuning of the default configuration test cases. For auto-tuning WRITE operations we have four tunable parameters: stripe count, stripe size, chunk size and file access pattern. The most common configuration setting, shown first in the table, was selected 864 times. It uses a stripe count of 16, a stripe size of 2048 MiB and the non-collective (contiguous) file access pattern. This setting resulted in 794 improvements and 70 dis-improvements, both of which are the largest counts among the settings. Probabilistically, the chance of improving bandwidth with this setting is 91.9%, with an 8.1% chance of dis-improvement.

The second, third and fifth configurations in Table 11 show 103, 145 and 97 improvements, respectively. Because the auto-tuning process selected these settings less frequently, they also show comparatively few dis-improvements: 5, 17 and 11. In terms of probability, however, the second configuration has a 95.4% chance of improving bandwidth and a 4.6% chance of dis-improving it, which is more promising than the first configuration. The third and fifth settings have a \(\approx\) 90% chance of improving bandwidth and a \(\approx\) 10% chance of dis-improving it. These are marginally less optimal than the first configuration.

The fourth configuration has 214 improvements and only 2 dis-improvements. Therefore, probabilistically, it has a 99% chance of improving bandwidth with only a 1% chance of dis-improving it. Interestingly, this configuration shares a feature with recently published results in this area. Its stripe size of 1 MiB was identified and suggested as a means of increasing the WRITE bandwidth from the parallel plots in [9].
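To compare the five WRITE settings by success rate rather than by how often they were selected, the counts quoted above can be ranked as follows. This is an illustrative calculation only; the settings are identified by their position in Table 11 because their full parameter values are not all given in the text.

```python
# Position in Table 11 -> (improved, dis-improved), as quoted in the text.
WRITE_SETTINGS = {
    "first": (794, 70),
    "second": (103, 5),
    "third": (145, 17),
    "fourth": (214, 2),
    "fifth": (97, 11),
}

# Success rate of each setting, in percent.
chances = {name: 100.0 * improved / (improved + disimproved)
           for name, (improved, disimproved) in WRITE_SETTINGS.items()}

# Highest success rate first.
ranking = sorted(chances, key=chances.get, reverse=True)

total_improved = sum(i for i, _ in WRITE_SETTINGS.values())
total_cases = sum(i + d for i, d in WRITE_SETTINGS.values())

for name in ranking:
    print(f"{name:>6}: {chances[name]:.1f}%")
print(total_improved, total_cases)
```

The most frequently selected setting ranks third by success rate, behind the fourth and second settings, and the five settings together account for all 1353 improvements in the 1458 test cases.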

Another factor to note is that the stripe count value of 16 and the non-collective file access pattern are common to all of these selected configurations. The stripe count of 16 was the maximum in the range specified for selection in Listing 3. This suggests keeping the stripe count at the maximum available value, since bandwidth is expected to increase as the stripe count increases. Since the file access pattern is non-collective in all of these settings, it appears to be the best choice for maximising overall bandwidth.

From studying these settings, we can apply them or similar ones to maximise bandwidth throughput in the event of the prediction model not being available for parameter selection.

5 Summary of the work

The results presented in this paper indicate strong performance gains arising from using ANN models to auto-tune HPC MPI–IO configurations. These improvements have been demonstrated with respect to bandwidth performance primarily.

The tuning process focuses on a number of key parameters that directly affect IO bandwidth. Since we are optimising at runtime, only tunable parameters can be modified, such as the chunk size per MPI process and the Lustre striping parameters. Therefore, we identified certain tunable parameters and settings for READ and WRITE operations separately.

The optimization of HPC IO using ML predictions has been a common approach in recent studies [19, 20, 21, 23, 26, 13, 14, 16]. The parameters can be modified or tuned before IO execution to improve IO bandwidth. We note that predictive IO modelling with multiple configurations is a primary requirement of the existing problem. The most convincing technique for predictive IO modelling is the ANN, owing to its high forecasting accuracy [24, 26, 28].

In previous work, the key parameters were identified and executed as benchmarks [9]. In this paper, that research has been extended to distinguish the tunable and non-tunable parameters. The previous benchmarks were re-executed on the generated list of all possible configurations, as mentioned in Table 1. This was done to regenerate the ANNs through an ML process, as mentioned in Table 2. This process was conducted with respect to their specific hyper-parameters in Table 4, for both READ and WRITE operations. The mappings of the input layer nodes to configuration parameters are also described in Table 3. The models predict bandwidth precisely and follow the pattern of the changing configurations. The benchmarks and prediction results are presented in Figs. 3 and 4.

Subsequently, the auto-tuning approach was applied on the default configuration test settings, as mentioned in Table 5, with respect to READ and WRITE operations.

We observed that auto-tuning the configuration parameters based on ANN predictions significantly improved IO bandwidth. The READ operation showed a 65.7% improvement in bandwidth, with 83% of the test cases improved. The WRITE operation showed an 83% improvement in overall IO bandwidth, with almost 93% of the test cases improved.

Subsequently, we discussed the common configuration settings that resulted from auto-tuning for both READ and WRITE operations. It was observed that the non-collective or contiguous file access pattern was selected in the majority of the READ test cases. Furthermore, the stripe count was set to the maximum available value of 16 in all the WRITE test cases, in order to obtain the maximum possible bandwidth.

In the event that an ANN model is not available, these configurations can be considered, with variations of other parameter values, for tuning MPI–IO operations to improve IO bandwidth.

6 Conclusion

In this paper, we analyzed MPI–IO bandwidth performance improvements by auto-tuning configuration parameters via ANNs predictions. The paper explains the requirement of an efficient and adaptable optimization technique and extends the work from [9]. This aligns with already established research which examines the challenges of HPC–IO performance through ML prediction [19, 20, 21, 23, 26, 13, 14, 16].

There are three main contributions outlined in this research paper. The first is the auto-tuning strategy based on ANN prediction of MPI–IO bandwidth for various configuration parameters. The second is the statistical analysis of the overall auto-tuned cases with respect to bandwidth performance. The third is the identification of the common tunable parameter settings selected and used by the ANN based system model.

From the results presented in this paper, we have shown an overall READ bandwidth improvement of 65.7%, with almost 83% of test cases improved. The overall WRITE bandwidth improvement reached 83%, with almost 93% of test cases improved.

Furthermore, the configurations identified in this research show clear benefits, firstly, of using the non-collective file access pattern in both READ and WRITE cases. Secondly, we note the benefits of using the maximum Lustre stripe count value in WRITE operations. Throughout our results, these observations were prominent in the most optimal settings.

In the event that an ML optimisation approach is not available, these configuration policies which we have identified could be easily applied to benefit the bandwidth utilisation challenges noted in previous research. Furthermore, the approach and results outlined in this paper show the considerable advantages to professionals in this area. We have demonstrated substantial improvements on HPC MPI–IO bandwidth performance, thereby enhancing the overall efficiency of large sized data processing task completion.

Data availability

The benchmarks data sets generated and used in this research are available on request.

Code availability

The program code is available on request.

Consent for publication

Authors give consent to publish this article upon acceptance.

Abbreviations

- HPC: High performance computing
- MPI: Message passing interface
- IO: Input/output
- PFS: Parallel file system
- LFS: Lustre file system
- OST: Object storage target
- GPFS: General parallel file system
- HDF: Hierarchical data format
- ML: Machine learning
- ANN: Artificial neural network
- MSE: Mean square error

References

Pfister, G.F.: An introduction to the InfiniBand architecture. In: High Performance Mass Storage and Parallel I/O, vol. 42, pp. 617–632. Wiley, Hoboken (2001)

Birrittella, M.S., Debbage, M., Huggahalli, R., Kunz, J., Lovett, T., Rimmer, T., Underwood, K.D., Zak, R.C.: Intel® omni-path architecture: enabling scalable, high performance fabrics. In: 2015 IEEE 23rd Annual Symposium on High-Performance Interconnects, 2015, pp. 1–9. IEEE (2015)

Gropp, W., Lusk, E., Doss, N., Skjellum, A.: A high-performance, portable implementation of the MPI message passing interface standard. Parallel Comput. 22(6), 789–828 (1996)

Koutoupis, P.: The Lustre distributed filesystem. Linux J. 2011(210), 3 (2011)

Li, Y., Li, H.: Optimization of parallel I/O for Cannon’s algorithm based on lustre. In: 2012 11th International Symposium on Distributed Computing and Applications to Business, Engineering and Science, 2012, pp. 31–35. IEEE (2012)

Liao, W.-K.: Design and evaluation of MPI file domain partitioning methods under extent-based file locking protocol. IEEE Trans. Parallel Distrib. Syst. 22(2), 260–272 (2010)

Dickens, P.M., Logan, J.: Y-lib: a user level library to increase the performance of MPI–IO in a lustre file system environment. In: Proceedings of the 18th ACM International Symposium on High Performance Distributed Computing, 2009, pp. 31–38. ACM (2009)

Yu, W., Vetter, J., Canon, R.S., Jiang, S.: Exploiting lustre file joining for effective collective IO. In: Seventh IEEE International Symposium on Cluster Computing and the Grid (CCGrid’07), 2007, pp. 267–274. IEEE (2007)

Tipu, A.J.S., Conbhuí, P.Ó., Howley, E.: Applying neural networks to predict HPC–I/O bandwidth over seismic data on lustre file system for ExSeisDat. Clust. Comput. 25, 1–22 (2021)

El Baz, D.: IoT and the need for high performance computing. In: 2014 International Conference on Identification, Information and Knowledge in the Internet of Things, 2014, pp. 1–6. IEEE (2014)

Shafiq, M., Tian, Z., Sun, Y., Du, X., Guizani, M.: Selection of effective machine learning algorithm and Bot-IoT attacks traffic identification for Internet of Things in smart city. Future Gener. Comput. Syst. 107, 433–442 (2020)

Qiu, J., Tian, Z., Du, C., Zuo, Q., Su, S., Fang, B.: A survey on access control in the age of Internet of Things. IEEE Internet Things J. 7(6), 4682–4696 (2020)

Betke, E., Kunkel, J.: Footprinting parallel I/O—machine learning to classify application’s I/O behavior. In: International Conference on High Performance Computing, 2019, pp. 214–226. Springer (2019)

Wyatt II, M.R., Herbein, S., Gamblin, T., Moody, A., Ahn, D.H., Taufer, M.: PRIONN: predicting runtime and IO using neural networks. In: Proceedings of the 47th International Conference on Parallel Processing, 2018, p. 46. ACM (2018)

Zhao, T., Hu, J.: Performance evaluation of parallel file system based on lustre and Grey theory. In: 2010 Ninth International Conference on Grid and Cloud Computing, 2010, pp. 118–123. IEEE (2010)

Zhao, T., March, V., Dong, S., See, S.: Evaluation of a performance model of lustre file system. In: 2010 Fifth Annual ChinaGrid Conference, 2010, pp. 191–196. IEEE (2010)

Shafiq, M., Tian, Z., Bashir, A.K., Du, X., Guizani, M.: CorrAUC: a malicious Bot-IoT traffic detection method in IoT network using machine-learning techniques. IEEE Internet Things J. 8(5), 3242–3254 (2021). https://doi.org/10.1109/JIOT.2020.3002255

Shafiq, M., Tian, Z., Bashir, A.K., Du, X., Guizani, M.: IoT malicious traffic identification using wrapper-based feature selection mechanisms. Comput. Secur. 94, 101863 (2020). https://doi.org/10.1016/j.cose.2020.101863

Xu, L., Lux, T., Chang, T., Li, B., Hong, Y., Watson, L., Butt, A., Yao, D., Cameron, K.: Prediction of high-performance computing input/output variability and its application to optimization for system configurations. Qual. Eng. 33(2), 318–334 (2021). https://doi.org/10.1080/08982112.2020.1866203

Bez, J.L., Boito, F.Z., Nou, R., Miranda, A., Cortes, T., Navaux, P.O.: Adaptive request scheduling for the I/O forwarding layer using reinforcement learning. Future Gener. Comput. Syst. 112, 1156–1169 (2020)

Behzad, B., Byna, S., Snir, M.: Optimizing i/o performance of HPC applications with autotuning. ACM Trans. Parallel Comput. 5(4), 1–27 (2019)

Bağbaba, A.: Improving collective I/O performance with machine learning supported auto-tuning. In: 2020 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), 2020, pp. 814–821. IEEE (2020)

Madireddy, S., Balaprakash, P., Carns, P., Latham, R., Ross, R., Snyder, S., Wild, S.M.: Machine learning based parallel I/O predictive modeling: a case study on lustre file systems. In: International Conference on High Performance Computing, 2018, pp. 184–204. Springer (2018)

Hopfield, J.J.: Artificial neural networks. IEEE Circuits Devices Mag. 4(5), 3–10 (1988). https://doi.org/10.1109/101.8118

Hagan, M.T., Demuth, H.B., Beale, M.: Neural Network Design. PWS Publishing Co., Boston (1997)

Schmidt, J.F., Kunkel, J.M.: Predicting I/O performance in HPC using artificial neural networks. Supercomput. Front. Innov. 3(3), 19–33 (2016)

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., et al.: PyTorch: an imperative style, high-performance deep learning library. In: Advances in Neural Information Processing Systems, 2019, pp. 8026–8037 (2019)

Elshawi, R., Wahab, A., Barnawi, A., Sakr, S.: DLBench: a comprehensive experimental evaluation of deep learning frameworks. Clust. Comput. 24, 1–22 (2021)

Haziza, D., Rapin, J.: GS: HiPlot-High Dimensional Interactive Plotting, Github (2020)

Zheng, W., Fang, J., Juan, C., Wu, F., Pan, X., Wang, H., Sun, X., Yuan, Y., Xie, M., Huang, C., Tang, T., Wang, Z.: Auto-tuning MPI collective operations on large-scale parallel systems. In: 2019 IEEE 21st International Conference on High Performance Computing and Communications; IEEE 17th International Conference on Smart City; IEEE 5th International Conference on Data Science and Systems (HPCC/SmartCity/DSS), 2019, pp. 670–677 (2019)

Hernández, Á.B., Perez, M.S., Gupta, S., Muntés-Mulero, V.: Using machine learning to optimize parallelism in big data applications. Future Gener. Comput. Syst. 86, 1076–1092 (2018)

Carns, P., Harms, K., Allcock, W., Bacon, C., Lang, S., Latham, R., Ross, R.: Understanding and improving computational science storage access through continuous characterization. ACM Trans. Storage 7(3), 1–26 (2011)

Pires, I.M., Hussain, F., Garcia, N.M., Lameski, P., Zdravevski, E.: Homogeneous data normalization and deep learning: a case study in human activity classification. Future Internet 12(11), 194 (2020)

Agarap, A.F.: Deep learning using rectified linear units (ReLu), arXiv (2018)

James, G., Witten, D., Hastie, T., Tibshirani, R.: An Introduction to Statistical Learning, vol. 112. Springer, New York (2013)

MPI: A Message-Passing Interface Standard Version 3.1 (2015). https://www.mpi-forum.org/docs/mpi-3.1/mpi31-report.pdf. Accessed 7 Nov 2019

Ketkar, N., Moolayil, J.: Introduction to PyTorch. In: Deep learning with python, 2021, pp. 27–91. Springer (2021)

Acknowledgements

This research has been conducted under the funding of ExSeisDat Project designed in partnership with Tullow Oil plc and DataDirect Networks (DDN). ExSeisDat and this research is supported, in part, by Science Foundation Ireland Grant 13/RC/2094 and co-funded under the European Regional Development Fund through the Southern and Eastern Regional Operational Programme to Lero—The Irish Software Research Centre (www.lero.ie).

Funding

This research has been mainly funded by Science Foundation Ireland and co-funded under the European Regional Development Fund through the Southern and Eastern Regional Operational Programme to Lero—The Irish Software Research Centre (www.lero.ie).

Author information

Contributions

AJS contributed in Conceptualization, Methodology, Software, Investigation, Statistical Analysis and Writing—Original Draft. PO did the Supervision and Benchmarks Development. EH Co-supervised the research and contributed in Writing—Review and Editing.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper. It is to further declare that there is no conflict of interest among the authors of this paper.

Ethical approval

Not applicable.

Informed consent

Authors give consent to participate in extending this research.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tipu, A.J.S., Conbhuí, P.Ó. & Howley, E. Artificial neural networks based predictions towards the auto-tuning and optimization of parallel IO bandwidth in HPC system. Cluster Comput 27, 71–90 (2024). https://doi.org/10.1007/s10586-022-03814-w

DOI: https://doi.org/10.1007/s10586-022-03814-w