Abstract

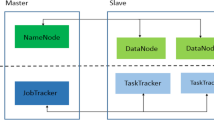

The evolution of parallel architectures points to dynamic environments where the number of available resources or configurations may vary during the execution of applications. This is readily observed in grids and clouds, but it can also be exploited in clusters and multiprocessor architectures. For more than two decades, several research initiatives have exploited this characteristic in parallel applications. This work has enabled the development of adaptive applications that can reconfigure the number of processes/threads and their allocation to processors to cope with varying workloads and with changes in the availability of resources in the system. Despite this long history of adaptability solutions for parallel architectures, no literature review has covered these efforts. In this context, the goal of this paper is to present the state of the art on adaptability from the resource and application perspectives over the last twenty years (2002–2022). The review ranges from shared memory architectures, clusters, and grids to virtualized resources in cloud and fog computing. A comprehensive analysis of the leading research initiatives in the field of adaptive parallel applications can give the reader an understanding of the essential concepts of development in this area.
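
The abstract describes adaptive applications that change their number of threads while they run. The Python sketch below illustrates that idea under simplifying assumptions, and it is not taken from any of the surveyed systems:

- Reconfiguration happens only at iteration boundaries.
- `available_workers` is a hypothetical callback standing in for a resource manager or monitor.
- `step` is a placeholder computation.
- `partition` and `run_malleable` are illustrative names.

At each boundary, the loop asks how many workers it may use, redistributes the data, and runs the next iteration on a pool of that size.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def partition(data: List[float], parts: int) -> List[List[float]]:
    """Split data into `parts` contiguous chunks whose sizes differ by at most one."""
    size, rem = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < rem else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def step(chunk: List[float]) -> List[float]:
    """Hypothetical placeholder computation applied to one chunk: x -> 0.5 * x + 1."""
    return [0.5 * x + 1.0 for x in chunk]


def run_malleable(data: List[float], iterations: int,
                  available_workers: Callable[[int], int]) -> Tuple[List[float], List[int]]:
    """Iterative loop whose thread count may change at every iteration boundary.

    `available_workers(it)` is a hypothetical callback standing in for a resource
    manager; it reports how many workers may be used in iteration `it`.
    Returns the final data and the number of workers used in each iteration.
    """
    history: List[int] = []
    for it in range(iterations):
        # Reconfiguration point: clamp the offer to [1, len(data)]
        # so that there is at most one worker per element.
        workers = max(1, min(available_workers(it), len(data)))
        history.append(workers)
        # Redistribute the data across the new number of workers.
        chunks = partition(data, workers)
        # Grow or shrink by creating a pool of exactly the requested size.
        with ThreadPoolExecutor(max_workers=workers) as pool:
            results = list(pool.map(step, chunks))  # map preserves chunk order
        data = [x for chunk in results for x in chunk]
    return data, history


if __name__ == "__main__":
    schedule = [2, 4, 3]  # e.g., resources granted, then partially reclaimed
    final, used = run_malleable([float(i) for i in range(10)], 3, lambda it: schedule[it])
    print(used)        # [2, 4, 3]
    print(final[:3])   # [1.75, 1.875, 2.0]
```

Because of Python's global interpreter lock, threads here only show the structure of a malleable application, not real CPU speedup. The systems the paper reviews apply the same pattern of resizing at safe points and redistributing data. They do so to MPI processes, OpenMP threads, or cloud virtual machines.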

Acknowledgements

The authors would like to thank the following Brazilian agencies: CNPq (process 305263/2021-8) and FAPERGS (process 21/2551-0000118-6).

Author information

Contributions

Both authors worked equally on the research, data synthesis and writing of the article.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix Summary of Adaptivity Solutions

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Galante, G., da Rosa Righi, R. Adaptive parallel applications: from shared memory architectures to fog computing (2002–2022). Cluster Comput 25, 4439–4461 (2022). https://doi.org/10.1007/s10586-022-03692-2

DOI: https://doi.org/10.1007/s10586-022-03692-2