Abstract

Convergence research is driven by specific and compelling problems and requires deep integration across disciplines. The potential of convergence research is widely recognized, but questions remain about how to design, facilitate, and assess such research. Here we analyze a seven-year, twelve-million-dollar convergence project on sustainable climate risk management to answer two questions. First, what is the impact of a project-level emphasis on the values that motivate and tie convergence research to the compelling problems? Second, how does participation in convergence projects shape the research of postdoctoral scholars who are still in the process of establishing themselves professionally? We use an interview-based approach to characterize what the project specifically enabled in each participant’s research. We find that (a) the project pushed participants’ research into better alignment with the motivating concept of convergence research and that this effect was stronger for postdoctoral scholars than for more senior faculty. (b) Postdocs’ self-assessed understanding of key project themes, however, appears unconnected to metrics of project participation, raising questions about training and integration. Regarding values, (c) the project enabled heightened attention to values in the research of a large minority of participants. (d) Participants strongly believe in the importance of explicitly reflecting on values that motivate and pervade scientific research, but they question their own understanding of how to put value-focused science into practice. This mismatch of perceived importance with poor understanding highlights an unmet need in the practice of convergence science.



1 Introduction

Many pressing societal problems—such as pandemics, antibiotic resistance, global climate change, and sustainable development—span established academic disciplines. For problems like these, improving understanding of underlying trade-offs and providing effective decision support requires integrating expertise and insights across disciplines and stakeholders (National Research Council 2014; Institute of Medicine 2005; National Academies of Sciences, Engineering, and Medicine 2019). A number of partly overlapping labels are used to describe such integrative research, including interdisciplinary, transdisciplinary, and convergence research (which we refer to collectively as ITC research) (Huutoniemi et al. 2010; Institute of Medicine 2005; National Academies of Sciences, Engineering, and Medicine 2019, 2021; National Research Council 2014; National Science Foundation, n.d.-a). In light of its standing among the “10 Big Ideas” of the US National Science Foundation (NSF) (National Science Foundation, n.d.-b), here we prioritize the concept of convergence research, characterized as research “driven by a specific and compelling problem” and involving “deep integration across disciplines” (National Science Foundation, n.d.-b). Nevertheless, we situate our study with respect to the broader umbrella of ITC research, given the overlap between concepts. A series of National Academies reports synthesizes current understanding and best practices for facilitating ITC research (National Research Council 2014; Institute of Medicine 2005; National Academies of Sciences, Engineering, and Medicine 2019). One theme in these reports is a continuing need for more meta-research or science of science (Fortunato et al. 2018; Ioannidis 2018) to better understand the social and intellectual processes conducive to ITC research (National Research Council 2014; Institute of Medicine 2005; National Academies of Sciences, Engineering, and Medicine 2019).

A growing literature is responding to this need, using a variety of methods including bibliometric analyses (Porter et al. 2006, 2010; Anzai et al. 2012; Abramo et al. 2018; Kodama et al. 2013), surveys of research participants (Tress et al. 2005; Cummings and Kiesler 2005; van Rijnsoever and Hessels 2011; Teirlinck and Spithoven 2015), interviews (Corley et al. 2006; Lundershausen 2018; Polk 2014; Siedlok et al. 2015; Tress et al. 2005; Wall et al. 2017), ethnographic observation (MacLeod and Nersessian 2014; Polk 2014; Siedlok et al. 2015), document analysis of proposals, meeting minutes, or other sources (Gaziulusoy et al. 2016; Corley et al. 2006; Cummings and Kiesler 2007; Siedlok et al. 2015), analysis of data from funding agencies (Bromham et al. 2016; Cummings and Kiesler 2007), and reflections on personal experience (Freeth and Caniglia 2020; Gaziulusoy et al. 2016; König et al. 2013; Lang et al. 2012; McLeish and Strang 2016; West et al. 2019).

Such studies pursue a variety of aims, including measuring collaboration and interdisciplinarity (Porter et al. 2006, 2007; Porter et al. 2008; Anzai et al. 2012; Abramo et al. 2012; Abramo et al. 2018; Sylvan Katz and Martin 1997), identifying barriers (Brister 2016; Gaziulusoy et al. 2016; Lang et al. 2012; MacLeod 2018), evaluating coordination mechanisms (Cummings and Kiesler 2005, 2007), measuring funding success (Bromham et al. 2016), linking outcomes to individual- or project-level characteristics (Polk 2014; Teirlinck and Spithoven 2015; Tress et al. 2005; van Rijnsoever and Hessels 2011), and developing frameworks for guiding ITC research (Corley et al. 2006; Freeth and Caniglia 2020; König et al. 2013; Lang et al. 2012; Siedlok and Hibbert 2014; West et al. 2019) or for studying and evaluating ITC research (Bark et al. 2016; Huutoniemi et al. 2010; Klein 2008; Kodama et al. 2013; MacLeod and Nagatsu 2018; McLeish and Strang 2016; Wall et al. 2017). A majority of such studies also leverage their findings to posit generalized insights and recommendations, targeting all levels of organization from participants and project leaders through institutions and funding agencies.

This growing body of research has provided important insights, but many questions remain. Our study addresses two open questions. The first concerns the importance of values in ITC research. Disciplines and institutions have their own cultures, defined in part by shared values (Institute of Medicine 2005; Laursen et al. 2021). These values include both ethical values regarding the societal importance of research (Bessette et al. 2017; Diekmann and Peterson 2013; Vezér et al. 2018) and epistemic values—also called knowledge values (Rhoten 2003)—regarding the scientific importance of research questions and the features that make a piece of research respectable and rigorous (Vezér et al. 2018; Mayer et al. 2017; MacLeod 2018). Non-academic partners and other stakeholders bring their own sets of values (O’Brien and Wolf 2010; Polk 2014; Tschakert et al. 2017). Openness to other perspectives and values may be a common characteristic of successful ITC researchers (Institute of Medicine 2005; National Academies of Sciences, Engineering, and Medicine 2019), and divergence in values (both ethical and epistemic) can be a powerful obstacle to ITC research (Brister 2016; MacLeod 2018; MacLeod and Nagatsu 2018).

While some ITC integration tools include a role for values (Robinson et al. 2016; Laursen et al. 2021), there is a lack of project-level studies investigating the effectiveness of efforts to improve ITC research through strategies that foreground values. Findings cited above suggest that teams of researchers who can individually practice openness to other perspectives and values (Institute of Medicine 2005; National Academies of Sciences, Engineering, and Medicine 2019), and who can collectively diagnose and manage divergence in values (Brister 2016; MacLeod and Nagatsu 2018; Mayer et al. 2017), may achieve greater understanding and integration both across disciplines and between researchers and stakeholders. Evaluation of project-level efforts to promote such value competencies may be of particular interest to principal investigators, funding agencies, and proposal review committees.

A second question concerns what junior scholars, who are still in the process of establishing themselves professionally, learn through participation in ITC projects. Barriers and best practices for ITC research vary by career stage (Institute of Medicine 2005; National Research Council 2014). Postdoctoral scholars in particular can play an important role in collaborations and connections within ITC projects (National Research Council 2014; Rhoten 2003), and postdoctoral experiences may provide “the best opportunity for researchers to train deeply in a new discipline” (Institute of Medicine 2005). Despite considerable attention to the training of postdoctoral scholars (henceforth postdocs) in science generally, the literature offers little targeted insight into what postdocs learn from participating in ITC projects or how these experiences shape their research. These are key questions for improving the capacity-building aspect of ITC research projects, given the special training opportunities within such projects and the importance of the postdoc career stage for broadening disciplinary knowledge.

To address these questions, we combine participant interviews (n = 29), bibliometric analysis (155 publications), and individual-level project data in a mixed-methods case study of a large convergence-science project, the Network for Sustainable Climate Risk Management (SCRiM). SCRiM was an $11.9-million research network funded from 2012 to 2019 through the NSF’s Sustainability Research Networks (SRN) program (National Science Foundation, n.d.-c), a precursor to the current Growing Convergence Research (National Science Foundation, n.d.-a) and Sustainable Regional Systems Research Networks (National Science Foundation, n.d.-d) programs. SCRiM spanned a number of disciplines, including Earth sciences, statistics, engineering, economics, decision- and risk-analysis, philosophy, and the social sciences. The project included twenty-four funded senior personnel and twenty postdocs across eight institutions as well as graduate and undergraduate students and administrative and computing support staff. SCRiM’s mission was to identify “sustainable, scientifically sound, technologically feasible, economically efficient, and ethically defensible climate risk management strategies” (“The Network for Sustainable Climate Risk Management (SCRiM)” n.d.). (We, the authors of this study, were ourselves part of SCRiM; our number includes the project’s principal investigator (PI) and several co-PIs from the project’s lead institution.)

A distinctive feature of SCRiM allows us to address our question concerning the role of values in convergence research. SCRiM included an element of heightened attention to the values shaping and embedded within research. Project leaders brought this focus on values into SCRiM through the concept of coupled ethical-epistemic analysis, which refers to a practice of deliberating over method choices and research design with explicit consideration for both ethical and epistemic values, including trade-offs among values (Tuana 2013; Tuana et al. 2012; Valles et al. 2019). For example, design choices that benefit real-world relevance and applicability of potential findings (ethical values) may sometimes trade off against the feasibility and trustworthiness of the required analysis (epistemic values), or against the prospect of fundamental scientific insights (another epistemic value). The practice of coupled ethical-epistemic analysis was discussed at SCRiM’s annual all-hands meetings, taught in the project’s annual summer school, and further developed and applied through a number of collaborative SCRiM co-supported publications (Bessette et al. 2017; Mayer et al. 2017; Vezér et al. 2018; Garner et al. 2016; Helgeson et al. 2021; Wong et al. 2017).

We examine the impact and reception of this focus on values in SCRiM by two routes. First, we ask SCRiM participants whether and how the project shaped their own research. Through this general, open-ended question, we survey all changes in the character or content of individuals’ research that participants themselves attribute to their participation in SCRiM. We identify common elements within participant responses (including, but not limited to, the values focus) and quantify their frequency across participants. Second, we ask a series of targeted questions about specific cross-cutting themes identified by the authors as representing SCRiM’s overall research agenda. One of these themes is coupled ethical-epistemic analysis. Using a quantitative rating scale, we ask participants to indicate how well they understand each of these themes and also how important they consider it for achieving the project’s research goals. This allows us to compare perceptions and attitudes across themes and participants.

Due to its size and duration, SCRiM provides a relatively large pool of postdoc participants whose research and training may have been shaped by their participation in a convergence research project. We investigate the participation of postdocs in convergence research by interviewing both faculty and postdoc participants in SCRiM and partitioning the results of our analyses to reveal contrasts between these two groups. We explore individual variation in quantitative responses through further steps of analysis examining potential explanatory variables drawn from individual-level project data (e.g., funding level) and from a bibliographic analysis of coauthorship patterns within SCRiM-supported publications.

Our two research questions (about values and about postdoc training) are distinct and could be addressed in separate studies. But here we use the same data and participants to address both questions, allowing the findings to inform one another and providing multiple complementary windows into a single large convergence project.

2 Participant interviews

We invited all SCRiM-funded faculty and postdocs for interviews. Twenty-nine took part (66% response rate), with roughly equal numbers of faculty (n = 15; 63% response) and postdocs (n = 14; 70% response). See Table S1 for a breakdown of participation by institution. (SCRiM also funded thirty-four graduate and undergraduate students, whom we omitted from the study for two reasons: to minimize risk of improper influence in the recruitment of institutionally and deferentially vulnerable research participants, and because students have typically done too little prior research to answer a key question regarding SCRiM’s influence on their work.)

All interviews were conducted by a single interviewer in February and March of 2018 via teleconference (Zoom). Interviews were recorded and professionally transcribed. The transcripts were reviewed and corrected by the interviewer with reference to the recordings. (Due to technical difficulties, one interview was conducted by phone and not recorded; in this case, the interviewer took notes by hand.) Table S2 displays the full list of interview questions and the subset on which we report here.

To prompt responses on how SCRiM shaped participants’ research, we asked participants to describe any aspects of their SCRiM-supported research that were “uniquely enabled” by the project, in contrast to what they might have done outside the project, but with the same level of funding (question 2, Table S2). While the question involves a hypothetical comparison, it is one that each individual is well-placed to make, since they are speaking only for themselves and their own research trajectory. By framing the question in this way, we focus on the “value added” of the project understood in causal terms.

We subsequently raised three cross-cutting themes identified in advance as characteristic of SCRiM’s overall research agenda. The first theme was multi-objective robust decision analysis, an approach to decision analysis that evaluates strategies based on their performance across a wide range of possibilities while eschewing premature assumptions about the relative importance of diverse objectives (Kasprzyk et al. 2013; Hadka et al. 2015). The second theme, identifying and characterizing deep uncertainties, refers to a commitment to questioning modeling assumptions and incorporating into analyses even those uncertainties that cannot be uncontroversially quantified with a single probability distribution (Kwakkel and Pruyt 2013; Lempert et al. 2006). The third theme was coupled ethical-epistemic analysis (see above). We asked participants to indicate how well they understood each theme and also how important they considered it for achieving the project’s research goals—in both cases using a rating scale from one to five (questions 3–5, Table S2).

3 Transcript analysis and results

Two coders (also called raters) analyzed participant responses to the “uniquely enabled” question by first developing and testing a set of concepts corresponding to common themes within participant responses, then coding the responses (flagging instances of the concepts as they occur in the transcripts) to enable quantification of those concepts’ frequency and distribution (Saldana 2015). One quarter of the transcripts were independently double-coded as a check on coder subjectivity. We coded transcripts using the brat rapid annotation tool (Stenetorp et al. 2012) followed by processing and plotting in R (R Core Team 2013). See SI for details on code development and testing, inter-rater reliability calculations using Cohen’s kappa statistic (Cohen 1960), and the final, two-stage codebook.
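To make the reliability check concrete, the sketch below computes Cohen’s kappa for two coders who each flag, per transcript, whether a given attribute is present. It is a minimal Python illustration of the statistic, not the R workflow used in the study, and it assumes one categorical label per item per coder; how the reported value was aggregated across attributes and annotations is described in the SI, not here. The 0/1 flags in the usage lines are hypothetical, not study data.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders labeling the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items on which the two coders match.
    p_obs = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected chance agreement from each coder's marginal label frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_exp = sum(freq_a[label] * freq_b[label] for label in freq_a) / n ** 2
    if p_exp == 1:
        # Both coders used one identical label everywhere; kappa is undefined,
        # and this sketch simply reports perfect agreement by assumption.
        return 1.0
    return (p_obs - p_exp) / (1 - p_exp)


# Hypothetical flags (1 = attribute present) for eight double-coded transcripts.
coder_1 = [1, 1, 0, 0, 1, 0, 1, 0]
coder_2 = [1, 1, 0, 0, 1, 0, 0, 0]
print(cohens_kappa(coder_1, coder_2))  # 0.75
```

Here the coders agree on 7 of 8 transcripts (0.875), chance agreement from their marginals is 0.5, and kappa is therefore (0.875 − 0.5)/(1 − 0.5) = 0.75, showing how kappa discounts raw agreement for agreement expected by chance.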

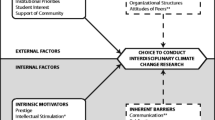

Despite variation in the topics and questions addressed by participants’ research, we identified four general attributes shared across participant responses to the “uniquely enabled” question. Bridging disciplines refers to the crossing of disciplinary boundaries in one’s research. Decision relevance refers to a greater focus on actionable insights or the relevance of research to real-world decisions. Treatment of uncertainties refers to expanded or improved treatment of uncertainties in methods and findings. Attention to values refers to greater attention to ethical values motivating the research and ethical assumptions embedded within the research. (Our narrow focus on ethical values in this coding exercise is due in large part to the ubiquity of attention to epistemic values in any discussion of scientific research.) Table 1 displays interview excerpts illustrating each attribute. Figure 1 shows their frequency across participants, including the breakdown by faculty versus postdocs.

Fig. 1 Four attributes of the changes to individuals’ research that resulted from participation in the convergence research project SCRiM: bridging disciplines, decision relevance, treatment of uncertainties, and attention to values. These attributes summarize ways that research done through the project diverged from what each participant considered their own business-as-usual trajectory in the absence of participating in SCRiM. Bars show the fraction of participants whose description of what SCRiM enabled in their own work includes the attribute (inter-rater reliability = 0.92). The top panel shows all participants in aggregate; the bottom panel separates faculty from postdocs.

Regarding the cross-cutting themes specified in advance, all three themes were judged highly important, with coupled ethical-epistemic analysis rated (narrowly) the highest (Fig. 2). Understanding varied more across themes, with coupled ethical-epistemic analysis notably lower than the other two (Fig. 2). The responses on coupled ethical-epistemic analysis thus stand out by combining the highest perceived importance with the lowest perceived understanding.

Fig. 2 Histograms showing the distribution of participant responses on perceived importance and self-assessed understanding of three cross-cutting themes representing the research agenda of the project SCRiM: a) multi-objective robust decision analysis, b) identifying and characterizing deep uncertainties, and c) coupled ethical-epistemic analysis. See the main text for explanations of the three themes. Points on the x-axis indicate means.

All participants answered the understanding questions, but one-third did not answer the importance questions as they felt unable to make the required judgments. Figure 2 includes all responses, with each histogram normalized to display response frequencies. An alternative approach would be to discard understanding responses from participants who did not also answer the importance questions; this shifts each understanding distribution slightly to the right (i.e., participants who felt unable to judge importance had relatively low understanding). Most participants supplied integer answers to these rating-scale questions, but some (about 20%) gave intervals such as “three to four.” In order to include all data in the histograms (Fig. 2), intervals were treated as a weighted combination of integers.
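The text states that interval answers were treated as a weighted combination of integers but does not give the weights. The sketch below assumes equal weights over the integers an interval spans (so “three to four” contributes half a response to 3 and half to 4), skips unanswered items, and normalizes so that each answering participant contributes one unit of weight. The function names and the tuple encoding of intervals are hypothetical choices for illustration, and the responses are made up.

```python
from collections import defaultdict


def response_weights(response):
    """Spread one rating over the integer scores it covers.

    An integer answer puts all of its weight on that score. An interval answer
    such as "three to four", encoded here as the tuple (3, 4), is ASSUMED to
    split its weight equally over the integers it spans.
    """
    if isinstance(response, int):
        return {response: 1.0}
    lo, hi = response
    scores = range(lo, hi + 1)
    return {s: 1.0 / len(scores) for s in scores}


def normalized_histogram(responses, scale=range(1, 6)):
    """Relative frequency of each score, skipping unanswered items (None)."""
    answered = [r for r in responses if r is not None]
    totals = defaultdict(float)
    for r in answered:
        for score, weight in response_weights(r).items():
            totals[score] += weight
    n = len(answered)
    return {s: (totals[s] / n if n else 0.0) for s in scale}


def mean_score(histogram):
    """Mean rating implied by a normalized histogram."""
    return sum(score * freq for score, freq in histogram.items())


# Hypothetical responses: one interval answer and one skipped question.
hist = normalized_histogram([3, 4, (3, 4), None, 5])
print(hist)              # {1: 0.0, 2: 0.0, 3: 0.375, 4: 0.375, 5: 0.25}
print(mean_score(hist))  # 3.875
```

Under equal weighting, the histogram mean coincides with the mean of participants’ answers when each interval is replaced by its midpoint; other weighting schemes would shift both slightly.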

4 Further analysis

To further contextualize and interpret results reported above, we bring to bear two additional sources of data: project management records and a database of SCRiM-supported research publications. The management records include individual-level details of project personnel, funding levels, and attendance at SCRiM’s annual all-hands meetings and summer schools.

4.1 Attention to values in uniquely enabled research

A large minority of participants (roughly 40%) described “uniquely enabled” departures from their previous research trajectory that we subsequently coded as “attention to values” (Table 1; Fig. 1). As a check on our coders’ interpretation of researcher statements, and to provide more detail on how this “attention to values” was expressed in research outputs, we examined the SCRiM-supported publications of this subset of participants. Among the publications coauthored by each participant, we identified those publications whose content best corresponded to the participant’s statements about “uniquely enabled” research that were subsequently coded as “attention to values.” Table 2 lists the publications identified through this process and summarizes aspects in which each exhibits attention to values. We emphasize that Table 2 is not an exhaustive accounting of values components across SCRiM-supported research, but rather a summary of those instances where at least one coauthor (among the researchers interviewed) volunteered the attention-to-values aspect of the research as something that was uniquely enabled by their participation in SCRiM.

Of the eleven interview participants (six faculty and five postdocs) whose “uniquely enabled” research descriptions were coded as “attention to values,” two (one faculty, one postdoc) had not yet published research corresponding to these research descriptions as of the cutoff date used in our publications analysis (see below). The remaining nine participants are each represented in Table 2.

4.2 Variation in participant understanding of project themes

Returning to participants’ rating-scale responses on SCRiM’s cross-cutting themes (Fig. 2), self-assessed understanding showed far more variation than the importance judgments. To investigate this variation, we use the mean of each participant’s three understanding scores in a further step of analysis. Since few participants entered the network with expertise in more than one theme, we use mean understanding across the three themes as an indicator of individual intellectual integration into the project. Drawing from the data sources noted above, we construct three potential explanatory variables for this understanding indicator: (a) months of project funding received by the participant, (b) number of project-wide events attended by the participant (annual summer schools and all-hands meetings; see SI for details), and (c) an index of interdisciplinary coauthorship within SCRiM publications.

To calculate the coauthorship index, we assign each SCRiM participant a primary departmental affiliation (Table S8) by reviewing participants’ institutional websites. For each publication, we then count the number of unique affiliations among SCRiM coauthors and subtract one. Summing across the publications coauthored by a participant yields that individual’s coauthorship index. In other words, for each publication the index counts the distinct departments, other than the participant’s home department, represented among its SCRiM coauthors, and it sums these counts across the participant’s publications. As a supplement to this department-based index, we repeat the same calculation using a purpose-built disciplinary classification (Table S9) that groups participants somewhat differently than departmental affiliations.
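The index definition above can be written as a short function. This is a plain-Python sketch of the stated rule, not the project’s pubStats package; the participant labels, departments, and publication lists are hypothetical, and authors missing from the affiliation mapping are assumed to be non-SCRiM coauthors and ignored. Passing a discipline mapping instead of a department mapping would give the supplementary variant.

```python
def coauthorship_index(participant, publications, affiliation):
    """Sum, over a participant's publications, of (unique SCRiM affiliations - 1).

    `publications` is a list of author lists; `affiliation` maps each SCRiM
    participant to one primary unit (department or discipline). Authors absent
    from `affiliation` are treated as non-SCRiM coauthors and ignored.
    """
    index = 0
    for authors in publications:
        if participant not in authors:
            continue
        units = {affiliation[a] for a in authors if a in affiliation}
        # The participant's own unit is always in `units`, so this adds the
        # number of *other* units represented on the publication.
        index += len(units) - 1
    return index


# Hypothetical participants and departments ("X" is a non-SCRiM coauthor).
dept = {"A": "Geosciences", "B": "Geosciences", "C": "Economics", "D": "Philosophy"}
pubs = [["A", "B", "C"], ["A", "D", "C", "X"], ["A", "B"], ["B", "C"]]
print({p: coauthorship_index(p, pubs, dept) for p in dept})
# {'A': 3, 'B': 2, 'C': 4, 'D': 2}
```

Note that two coauthors from the same outside department add only one to the count for that publication, and a paper written only within one department adds zero.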

For calculating the coauthorship indices (and also for the document analysis above), we consider all SCRiM-supported research publications published as of March 2019 (155 publications). We chose this cutoff, one year after the interviews were completed, so that research already underway at the time of the interviews could be included in the analysis. We used the Python package pubStats (developed for this project and available via a GitHub repository) to calculate coauthorship indices and other analytical outputs used qualitatively to guide the coauthorship analysis. (See SI for additional results concerning coauthorship across institutions.)

Figure 3 plots the understanding indicator against the potential explanatory variables, with faculty (top row) separated from postdocs (bottom row). These variables indeed appear relevant to faculty understanding (Fig. 3a–c): the 90% confidence intervals for the slopes of the linear regression lines exclude zero for the coauthorship index (both variants) and have lower bounds close to zero for both funding and attendance (see Fig. 3 caption for confidence intervals). In contrast, postdoc understanding shows no statistically significant linear relationship with the potential explanatory variables (Fig. 3d–f).
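The text does not state which software or formula produced the slope intervals. The sketch below assumes the textbook construction, ordinary least squares with a two-sided Student-t interval on n − 2 degrees of freedom, and uses only the Python standard library, obtaining the t quantile numerically rather than from a statistics package. A 90% interval that excludes zero corresponds to a slope significantly different from zero at the 10% level (two-sided). The data in the usage line are made up, not participant scores.

```python
import math


def t_quantile(p, df, steps=2000):
    """Upper quantile of Student's t (p > 0.5), via Simpson's rule and bisection."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(x):
        return c * (1 + x * x / df) ** (-(df + 1) / 2)

    def cdf(t):  # valid for t >= 0; `steps` must be even for Simpson's rule
        h = t / steps
        s = pdf(0.0) + pdf(t)
        for i in range(1, steps):
            s += (4 if i % 2 else 2) * pdf(i * h)
        return 0.5 + s * h / 3

    lo, hi = 0.0, 50.0
    for _ in range(60):  # bisection on the monotone CDF
        mid = (lo + hi) / 2
        if cdf(mid) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def slope_ci(x, y, level=0.90):
    """OLS slope and its two-sided t-based confidence interval."""
    n = len(x)
    if n < 3:
        raise ValueError("need at least three points")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(sse / (n - 2) / sxx)  # standard error of the slope
    t = t_quantile(1 - (1 - level) / 2, n - 2)
    return slope, (slope - t * se, slope + t * se)


# Made-up scores for five participants (not study data).
slope, (lo, hi) = slope_ci([1, 2, 3, 4, 5], [1, 3, 2, 5, 4])
print(round(slope, 3), round(lo, 3), round(hi, 3))  # 0.8 -0.015 1.615
```

In this toy case the fitted slope is positive, but the 90% interval narrowly includes zero, the same situation as the attendance variable in Fig. 3b. With the small samples involved here, the t quantile (about 2.35 for three degrees of freedom) is noticeably wider than the normal value of about 1.645.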

Fig. 3 Self-assessed understanding of SCRiM research themes plotted against potential explanatory variables. Each point is one participant. Mean understanding refers to the average of an individual’s three self-assessed understanding scores. a–c (top row) show faculty. d–f (bottom row) show postdocs. The project manager was removed from (a) and the lead PI was removed from (c) prior to calculating regression lines and confidence intervals; see supplemental information for discussion. Ninety percent confidence intervals for the slope of linear regression lines: a. (0.01, 0.36); b. (−0.02, 0.29); c. dept. (0.05, 0.19), disc. (0.04, 0.20); d. (−0.04, 0.04); e. (−0.25, 0.16); f. dept. (−0.09, 0.09), disc. (−0.06, −0.08)

5 Discussion

The motivation for our study is the great and pressing need—from both scientific and societal perspectives—for improving the practice of convergence research. Specifically, we have targeted two research gaps within existing literature on the design, conduct, and evaluation of projects falling under the somewhat broader umbrella of ITC research. These gaps concern the role of values and the training of postdocs within such projects. We begin with postdocs.

5.1 Training and participation of postdocs

We identified four general attributes within participants’ descriptions of what SCRiM “uniquely enabled” in their own research (Table 1; Fig. 1). Among these, the two that occurred most frequently align with the defining elements of convergence research. Convergence research (1) is driven by a specific and compelling problem and (2) involves deep integration across disciplines (National Science Foundation, n.d.-a). The most common attribute, bridging disciplines, echoes “deep integration across disciplines,” while the second most common, decision relevance, can be seen as an indicator of research “driven by a specific and compelling problem.” The frequencies of these two attributes were notably higher among postdocs than among faculty, each occurring in two thirds of postdoc participants’ research (Fig. 1).

In this way, SCRiM appears to have pushed the research of postdocs in a convergence-science direction more reliably than it did the research of faculty. One possible explanation is that faculty often have broader project portfolios than postdocs, and these portfolios may be, overall, less focused on convergence research. Another possibility is that faculty research agendas are more rigid and less easily influenced. Our study suggests that insofar as postdoc research agendas are more flexible, SCRiM made use of this flexibility to bend those agendas towards the concept of convergence research. These results deepen and reinforce prior findings that postdocs can play an important role in collaborations within ITC projects (National Research Council 2014; Rhoten 2003) and that postdoc experiences can provide a good opportunity for interdisciplinary training (Institute of Medicine 2005).

Our focus on what SCRiM enabled in participants’ research can be contrasted with other studies characterizing ITC project outcomes, which typically address process and collaboration (Freeth and Caniglia 2020; Gaziulusoy et al. 2016; Hessels et al. 2018; König et al. 2013; Polk 2014), researcher satisfaction (Katoh et al. 2018; Tress et al. 2005), or categories of outputs and outcomes (Corley et al. 2006; Cummings and Kiesler 2005, 2007; Steger et al. 2021; Teirlinck and Spithoven 2015; Tress et al. 2005). Some bibliometric analyses posit and measure indicators of interdisciplinarity in the publications of a project or researcher (Abramo et al. 2012; Abramo et al. 2018; Bark et al. 2016; Anzai et al. 2012; Porter et al. 2008), but these provide only a partial view through the imperfect lenses of author affiliations and journal classifications. Our study demonstrates a complementary approach to illuminating the content of research carried out within a project.

While participation in SCRiM clearly made postdocs’ research more interdisciplinary and more problem-driven, our analysis of individual variation in understanding of key project-specific themes leaves us with a puzzle about postdoctoral training. In contrast to faculty, we find no statistically significant relationships between postdocs’ understanding of key project themes and our potential explanatory variables (Fig. 3). Yet the amount of training that postdocs received through SCRiM is plausibly linked to those variables. So why does this training not improve perceived understanding of the project?

One possible explanation is that postdocs are typically advised to focus on specific and tractable research questions and that this narrower focus detracts from developing an understanding of the project as a whole. This hypothesis may be seen as an instance of the frequently observed misalignment of project goals and career goals in ITC research (e.g., Bark et al. 2016; Gaziulusoy et al. 2016; Tress et al. 2005). On this hypothesis, variation in project understanding would be explained by the variation in specific research questions addressed by each postdoc: postdocs developed an understanding of key project themes only where this was directly relevant to their specific research questions.

As for why faculty differ from postdocs, one possibility is that as co-PIs, site leads, and senior personnel in SCRiM, faculty felt a greater sense of ownership (Lang et al. 2012) for the project, and that only in the presence of this attitude does more participation and collaboration reliably promote understanding of a project’s high-level themes. A related possibility is that investment in the proposal-writing process was a common cause that helps explain both understanding and the Fig. 3 variables (and thus correlations between them). Prior collaboration with other project personnel (Cummings and Kiesler 2007) may play a similar role. Rigorously testing such hypotheses may require more longitudinal study designs.

Other possible explanations for the contrast between faculty and postdocs include potential differences in self-reporting behavior across those groups. For example, if understanding project themes is expected of faculty (but not postdocs), and if this expectation grows with funding and participation, then correlations could be explained in part by social-desirability bias in faculty responses. Or if postdocs are less confident in self-assessment and generally give more middling scores, this might obscure a trend in our relatively small sample of data. These hypotheses might be tested by supplementing self-assessment with external assessment of participant understanding.

5.2 Values in convergence research

SCRiM’s focus on values was motivated by two hypotheses: first, that explicit deliberation about ethical and epistemic values can support the design of research that is relevant to real-world problems, and second, that such deliberation can also support communication across academic cultures and between analysts and stakeholders. Our finding that SCRiM “uniquely enabled” decision relevance and the bridging of disciplines in the research of a majority of participants (Fig. 1) is consistent with these motivating hypotheses. Our study does not, however, specifically assess what contribution SCRiM’s focus on values made to those outcomes.

We consider the frequency of attention to values in participants’ research (about 40%; Fig. 1) an encouraging level of uptake for a concept rarely emphasized in scientific projects. Corresponding SCRiM-supported publications show a variety of substantive engagements with the values components of climate risk management (Table 2). Most of these papers fall into one of four (overlapping) categories: interview-based studies of the values of stakeholders and researchers (e.g., Bessette et al. 2017; Mayer et al. 2017); discussion and demonstration of how risk analysis can better facilitate ethical deliberation (e.g., Garner et al. 2016; Lempert et al. 2013; Simpson et al. 2016; Tuana 2013); values-inclusive policy analysis that illuminates trade-offs among contested values (e.g., Adler et al. 2017; Garner and Keller 2018; Garner et al. 2016; Quinn et al. 2018; Singh et al. 2015); and examination of the values motivating design choices in geoscience model building and hazard characterization (e.g., Bakker et al. 2017; Bakker et al. 2016; Quinn et al. 2018; Wong et al. 2017).

When asked directly about the theme of coupled ethical-epistemic analysis, participants rated its importance to achieving project research goals (see mission statement above) very highly in absolute terms and highest among the three cross-cutting themes (Fig. 2). We see this broad agreement on the importance of the theme as validation of SCRiM’s focus on values and encouragement to further pursue the potential for enabling convergence research through foregrounding values.

At the same time, participants’ self-assessed understanding of the coupled ethical-epistemic analysis theme was relatively low (Fig. 2). This was presumably due, at least in part, to the theme’s novelty for most participants. But participants’ comments suggest that novelty is not the only reason. One participant said of coupled ethical-epistemic analysis “I’m a lot more aware of it,” but “I’m not sure we’ve got a process down for, you know, incorporating it—it’s not, sort of, part of the crank-turning” [F3] (see participant-labeling scheme introduced in Table 1). Another said “I like to quantify things, and I thought it was just descriptive and trying to label things, but I never saw it as a means of doing quantitative analysis” [F4]. A third participant said “This was always something that was, to me, a bit of a catchphrase more than an actual application” [PD7]. These comments suggest that realizing the potential benefits of coupled ethical-epistemic analysis may require more codified approaches that better operationalize the practice, as well as improved integration into quantitative methods, tools, and workflows.

The values theme was led by participants from philosophy (cf. Nagatsu et al. 2020; Robinson et al. 2016; Tuana 2020), and the tensions in the quotes above regarding quantification and operational processes may derive in part from the disciplinary “distance” between humanists and scientists. As one participant noted: “the ethical-epistemic stuff is different—different sort of people—and so it’s not as well-oiled a machine as some of the other stuff” [F3]. Discussing challenges within SCRiM, another participant highlighted the “difficulty of incorporating all the worldviews, especially philosophy” [PD8]. Another noted that some SCRiM researchers “come from quite different disciplines, especially [philosopher], and so we never sort of worked, talking the same language that much” [F4]. These comments point to the high “transaction costs” (van Rijnsoever and Hessels 2011) and “interdisciplinary overhead” (Bark et al. 2016) involved in working across large disciplinary divides.

Efforts to bridge divides within SCRiM included the development of a form of conceptual model called a values-informed mental model (ViMM). Conceptual models are a promising tool for integrating knowledge and building shared understanding in project teams (Bark et al. 2016; Heemskerk et al. 2003). ViMMs expand on traditional conceptual modeling approaches to explicitly represent values as well as knowledge in support of coupled ethical-epistemic analysis. The ViMM concept was developed and applied during the course of SCRiM in work published towards the end of the project (Bessette et al. 2017; Mayer et al. 2017). This tool was not available at the start of the project and was not mainstreamed into SCRiM as a whole. Further development of ViMMs and their applications in convergence science is a topic of ongoing research.

Another factor that may have compounded barriers to broader understanding of coupled ethical-epistemic analysis was the small number of project participants focused primarily on the values theme. In terms of funding duration, humanists received just 4% of SCRiM’s total person-months of funding (compared with, e.g., 28% for meteorology/climatology, 13% for geosciences, and 10% for economics).

6 Conclusions

Here we apply our own backgrounds, perspectives, and experiences to interpret and translate the findings of this study into actionable ideas. The following recommendations are in no way comprehensive, nor do they exhaust what we have learned through SCRiM. The scope of these recommendations is limited to issues directly informed by this study. The scope is further restricted to ideas that are not already widely appreciated in published advice (see Sect. 1). Our goal is an incremental expansion of existing understanding of best practices for the design and facilitation of convergence research projects. Other take-aways from the study are possible; these are ours:

1. Follow the values. Scrutinize why the research matters, to whom, and in what ways. Does the research design serve these motivations well? Acknowledge potential tensions between real-world relevance, scientific feasibility, disciplinary norms, and professional rewards. Navigate these tensions transparently. Further work is needed on how best to structure and guide these activities and improve their interface with the workflows and quantitative mindset of STEM researchers.

2. Exploit new training opportunities. Large convergence projects offer new kinds of training opportunities. These include chances to expand one’s vision by appreciating the breadth of a project and how all of the pieces fit together. Otherwise successful postdoc training may not reliably advance this big-picture project understanding (and associated capacity to envision and lead convergence research). Where such capacity-building is a priority, new training mechanisms may be needed.

3. Support disciplinary islands. Participants whose disciplinary culture is far from a project’s “center of mass” can face greater integration challenges. To support the integration of concepts and methods from relatively isolated disciplines, consider developing dedicated coordinating mechanisms and ask whether allocated time and personnel adequately offset higher transaction costs.

4. Leverage participant expertise in assessment. Each project participant knows their own research far better than anyone else. This intimate knowledge can and should be exploited to better understand the outcomes of convergence funding within the research of project participants. “Bottom up” approaches that begin from project participants’ perspective and experience can supplement “top down” bibliographic approaches to quantifying interdisciplinarity and other aspects of research content.

Data availability

The data used in this study are supplied to the greatest extent consistent with protecting our study participants. See SI Sect. 8 and Figure S3 for details on the data provided and withheld. Supplied data are available via GitHub repository:

Code availability

All code used in this study is available via GitHub repositories:

References

Abramo G, D’Angelo CA, Di Costa F (2012) Identifying interdisciplinarity through the disciplinary classification of coauthors of scientific publications. J Am Soc Inform Sci Technol 63(11):2206–2222

Abramo G, D’Angelo CA, Zhang L (2018) A comparison of two approaches for measuring interdisciplinary research output: the disciplinary diversity of authors vs the disciplinary diversity of the reference list. J Informetr 12(4):1182–1193

Adler M, Anthoff D, Bosetti V, Garner G, Keller K, Treich N (2017) Priority for the worse-off and the social cost of carbon. Nat Clim Chang 7(6):443–449

Anzai T, Kusama R, Kodama H, Sengoku S (2012) Holistic observation and monitoring of the impact of interdisciplinary academic research projects: an empirical assessment in Japan. Technovation 32(6):345–357

Bakker AMR, Applegate PJ, Keller K (2016) A simple, physically motivated model of sea-level contributions from the Greenland ice sheet in response to temperature changes. Environ Model Softw 83:27–35

Bakker AMR, Wong TE, Ruckert KL, Keller K (2017) Sea-level projections representing the deeply uncertain contribution of the West Antarctic ice sheet. Sci Rep 7(1):3880

Bark RH, Kragt ME, Robson BJ (2016) Evaluating an interdisciplinary research project: lessons learned for organisations, researchers and funders. Int J Project Manag 34(8):1449–1459

Bessette DL, Mayer LA, Cwik B, Vezér M, Keller K, Lempert RJ, Tuana N (2017) Building a values-informed mental model for New Orleans climate risk management. Risk Anal 37(10):1993–2004

Brister E (2016) Disciplinary capture and epistemological obstacles to interdisciplinary research: lessons from central African conservation disputes. Stud Hist Philos Biol Biomed Sci 56:82–91

Bromham L, Dinnage R, Hua X (2016) Interdisciplinary research has consistently lower funding success. Nature 534(7609):684–687

Cohen J (1960) A coefficient of agreement for nominal scales. Educ Psychol Meas 20(1):37–46

Corley EA, Boardman PC, Bozeman B (2006) Design and the management of multi-institutional research collaborations: theoretical implications from two case studies. Res Policy 35(7):975–993

Cummings JN, Kiesler S (2005) Collaborative research across disciplinary and organizational boundaries. Soc Stud Sci 35(5):703–722

Cummings JN, Kiesler S (2007) Coordination costs and project outcomes in multi-university collaborations. Res Policy 36(10):1620–1634

Diekmann S, Peterson M (2013) The role of non-epistemic values in engineering models. Sci Eng Ethics 19(1):207–218

Fortunato S, Bergstrom CT, Börner K, Evans JA, Helbing D, Milojević S, Petersen AM et al (2018) Science of science. Science 359(6379):eaao0185

Freeth R, Caniglia G (2020) Learning to collaborate while collaborating: advancing interdisciplinary sustainability research. Sustain Sci 15(1):247–261

Garner G, Keller K (2018) Using direct policy search to identify robust strategies in adapting to uncertain sea-level rise and storm surge. Environ Model Softw

Garner G, Reed P, Keller K (2016) Climate risk management requires explicit representation of societal trade-offs. Clim Chang 134(4):713–723

Gaziulusoy AI, Ryan C, McGrail S, Chandler P, Twomey P (2016) Identifying and addressing challenges faced by transdisciplinary research teams in climate change research. J Clean Prod 123:55–64

Hadka D, Herman J, Reed P, Keller K (2015) An open source framework for many-objective robust decision making. Environ Model Softw 74:114–129

Heemskerk M, Wilson K, Pavao-Zuckerman M (2003) Conceptual models as tools for communication across disciplines. Conserv Ecol 7(3)

Helgeson C, Srikrishnan V, Keller K, Tuana N (2021) Why simpler computer simulation models can be epistemically better for informing decisions. Philos Sci 88(2)

Hessels LK, De Jong SPL, Brouwer S (2018) Collaboration between heterogeneous practitioners in sustainability research: a comparative analysis of three transdisciplinary programmes. Sustainability 10(12):4760

Huutoniemi K, Klein JT, Bruun H, Hukkinen J (2010) Analyzing interdisciplinarity: typology and indicators. Res Policy 39(1):79–88

Institute of Medicine (2005) Facilitating interdisciplinary research. National Academies Press, Washington DC

Ioannidis JPA (2018) Meta-research: why research on research matters. PLoS Biol 16(3):e2005468

Kasprzyk JR, Nataraj S, Reed PM, Lempert RJ (2013) Many objective robust decision making for complex environmental systems undergoing change. Environ Model Softw 42:55–71

Klein JT (2008) Evaluation of interdisciplinary and transdisciplinary research: a literature review. Am J Prev Med 35(2 Suppl):S116–S123

Kodama H, Watatani K, Sengoku S (2013) Competency-based assessment of academic interdisciplinary research and implication to university management. Res Eval 22(2):93–104

König B, Diehl K, Tscherning K, Helming K (2013) A framework for structuring interdisciplinary research management. Res Policy 42(1):261–272

Kwakkel JH, Pruyt E (2013) Exploratory modeling and analysis, an approach for model-based foresight under deep uncertainty. Technol Forecast Soc Chang 80(3):419–431

Lang DJ, Wiek A, Bergmann M, Stauffacher M, Martens P, Moll P, Swilling M, Thomas CJ (2012) Transdisciplinary research in sustainability science: practice, principles, and challenges. Sustain Sci 7(1):25–43

Laursen BK, Gonnerman C, Crowley SJ (2021) Improving philosophical dialogue interventions to better resolve problematic value pluralism in collaborative environmental science. Stud Hist Philos Sci 87:54–71

Lempert RJ, Groves DG, Popper SW, Bankes SC (2006) A general, analytic method for generating robust strategies and narrative scenarios. Manag Sci 52(4):514–528

Lempert RJ, Groves DG, Fischbach JR (2013) Is it ethical to use a single probability density function? RAND Corporation, Santa Monica. https://www.rand.org/content/dam/rand/pubs/working_papers/WR900/WR992/RAND_WR992.pdf

Lundershausen J (2018) The anthropocene working group and its (inter-)disciplinarity. Sustain Sci Pract Policy 14(1):31–45

MacLeod M (2018) What makes interdisciplinarity difficult? Some consequences of domain specificity in interdisciplinary practice. Synthese 195(2):697–720

MacLeod M, Nagatsu M (2018) What does interdisciplinarity look like in practice: mapping interdisciplinarity and its limits in the environmental sciences. Stud Hist Philos Sci 67:74–84

MacLeod M, Nersessian NJ (2014) Strategies for coordinating experimentation and modeling in integrative systems biology. J Exp Zool B Mol Dev Evol 322(4):230–239

Mayer LA, Loa K, Cwik B, Tuana N, Keller K, Gonnerman C, Parker AM, Lempert RJ (2017) Understanding scientists’ computational modeling decisions about climate risk management strategies using values-informed mental models. Glob Environ Chang 42:107–116

McLeish T, Strang V (2016) Evaluating interdisciplinary research: the elephant in the peer-reviewers’ room. Palgrave Commun 2(1):1

Nagatsu M, Davis T, DesRoches CT, Koskinen I, MacLeod M, Stojanovic M, Thorén H (2020) Philosophy of science for sustainability science. Sustain Sci 15(6):1807–1817

National Academies of Sciences, Engineering, and Medicine (2019) Fostering the culture of convergence in research: proceedings of a workshop. National Academies Press, Washington DC

National Academies of Sciences, Engineering, and Medicine (2021) Measuring convergence in science and engineering: proceedings of a workshop. National Academies Press, Washington DC

National Research Council (2014) Convergence: facilitating transdisciplinary integration of life sciences, physical sciences, engineering, and beyond. National Academies Press, Washington DC

National Science Foundation (n.d.-a) Growing convergence research program solicitation. Accessed May 15, 2020. https://www.nsf.gov/pubs/2019/nsf19551/nsf19551.htm

National Science Foundation (n.d.-b) NSF’s ten big ideas. Accessed July 6, 2020. https://www.nsf.gov/news/special_reports/big_ideas/index.jsp

National Science Foundation (n.d.-c) Sustainability research networks program solicitation. Accessed December 1, 2020. https://www.nsf.gov/pubs/2011/nsf11574/nsf11574.htm

National Science Foundation (n.d.-d) Sustainable regional systems research networks program solicitation. Accessed November 19, 2021. https://www.nsf.gov/pubs/2020/nsf20611/nsf20611.htm

O’Brien KL, Wolf J (2010) A values-based approach to vulnerability and adaptation to climate change. Wiley Interdiscip Rev Clim Chang 1(2):232–242

Polk M (2014) Achieving the promise of transdisciplinarity: a critical exploration of the relationship between transdisciplinary research and societal problem solving. Sustain Sci 9(4):439–451

Porter AL, Cohen AS, Roessner JD, Perreault M (2007) Measuring researcher interdisciplinarity. Scientometrics 72(1):117–147

Porter AL, Roessner JD, Heberger AE (2008) How interdisciplinary is a given body of research? Res Eval 17(4):273–282

Porter AL, Roessner JD, Cohen AS, Perreault M (2006) Interdisciplinary research: meaning, metrics and nurture. Res Eval 15(3):187–195

Porter AL, Schoeneck DJ, Roessner JD, Garner J (2010) Practical research proposal and publication profiling. Res Eval 19(1):29–44

Quinn JD, Reed PM, Giuliani M, Castelletti A, Oyler JW, Nicholas RE (2018) Exploring how changing monsoonal dynamics and human pressures challenge multireservoir management for flood protection, hydropower production, and agricultural water supply. Water Resour Res 54(7):4638–4662

R Core Team (2013) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna. https://www.R-project.org/

Rhoten D (2003) A multi-method analysis of the social and technical conditions for interdisciplinary collaboration. Final report, National Science Foundation BCS-0129573. http://ssrc-cdn1.s3.amazonaws.com/crmuploads/new_publication_3/a-multi-method-analysis-of-the-social-and-technical-conditions-for-interdisciplinary-collaboration.pdf

Robinson B, Vasko SE, Gonnerman C, Christen M, O’Rourke M, Steel D (2016) Human values and the value of humanities in interdisciplinary research. Cogent Arts Hum 3(1):1123080

Saldaña J (2015) The coding manual for qualitative researchers. SAGE

Siedlok F, Hibbert P (2014) The organization of interdisciplinary research: modes, drivers and barriers. Int J Manag Rev 16(2):194–210

Siedlok F, Hibbert P, Sillince J (2015) From practice to collaborative community in interdisciplinary research contexts. Res Policy 44(1):96–107

Simpson M, Wallgrün JO, Klippel A, Yan L, Garner G, Keller K, Oprean D, Bansal S (2016) Immersive analytics for multi-objective dynamic integrated climate-economy (DICE) models. In: Proceedings of the 2016 ACM companion on interactive surfaces and spaces (ISS ’16 companion). Association for Computing Machinery, New York, pp 99–105. https://doi.org/10.1145/3009939.3009955

Singh R, Reed PM, Keller K (2015) Many-objective robust decision making for managing an ecosystem with a deeply uncertain threshold response. Ecol Soc

Stenetorp P, Pyysalo S, Topić G, Ohta T, Ananiadou S, Tsujii J (2012) Brat: a web-based tool for NLP-assisted text annotation. In: Proceedings of the demonstrations at the 13th conference of the European chapter of the Association for Computational Linguistics. Association for Computational Linguistics, Avignon, pp 102–107

Sylvan Katz J, Martin BR (1997) What is research collaboration? Res Policy 26:1–18

Teirlinck P, Spithoven A (2015) How the nature of networks determines the outcome of publicly funded university research projects. Res Eval 24(2):158–170

The Network for Sustainable Climate Risk Management (SCRiM) (n.d.) Overview. Accessed December 1, 2021. https://www.scrim.psu.edu/research/overview/

Tress B, Tress G, Fry G (2005) Researchers’ experiences, positive and negative, in integrative landscape projects. Environ Manag 36(6):792–807

Tschakert P, Barnett J, Ellis N, Lawrence C, Tuana N, New M, Elrick-Barr C, Pandit R, Pannell D (2017) Climate change and loss, as if people mattered: values, places, and experiences. Wiley Interdiscip Rev Clim Chang 8(5):e476

Tuana N (2013) Embedding philosophers in the practices of science: bringing humanities to the sciences. Synthese 190(11):1955–1973

Tuana N (2020) Values-informed decision support: the place of philosophy. In Philosophy for the real world: an introduction to field philosophy with case studies and practical strategies, edited by Evelyn Brister And, 143–59. Taylor & Francis/Routledge

Tuana N, Sriver RL, Svoboda T, Olson R, Irvine PJ, Haqq-Misra J, Keller K (2012) Towards integrated ethical and scientific analysis of geoengineering: a research agenda. Ethics Policy Environ 15(2):136–157

Valles SA, Piso Z, O’Rourke M (2019) Coupled ethical-epistemic analysis as a tool for environmental science. Ethics Policy Environ 22(3):267–286

van Rijnsoever FJ, Hessels LK (2011) Factors associated with disciplinary and interdisciplinary research collaboration. Res Policy 40(3):463–472

Vezér M, Bakker A, Keller K, Tuana N (2018) Epistemic and ethical trade-offs in decision analytical modelling. Clim Chang 147(1):1–10

Wall TU, Meadow AM, Horangic A (2017) Developing evaluation indicators to improve the process of coproducing usable climate science. Weather Clim Soc 9(1):95–107

Ward VL, Singh R, Reed PM, Keller K (2015) Confronting tipping points: can multi-objective evolutionary algorithms discover pollution control tradeoffs given environmental thresholds? Environ Model Softw 73:27–43

West S, van Kerkhoff L, Wagenaar H (2019) Beyond ‘linking knowledge and action’: towards a practice-based approach to transdisciplinary sustainability interventions. Policy Stud 40(5):534–555

Wong TE, Bakker AMR, Ruckert K, Applegate P, Slangen ABA, Keller K (2017) BRICK v0.2, a simple, accessible, and transparent model framework for climate and regional sea-level projections. Geosci Model Dev 10(7):2741

Acknowledgements

We thank Randy Miller and Matthew Lisk for computing assistance, Vivek Srikrishnan for help refining the disciplinary classification of SCRiM personnel, Katerina Kostadinova for collating data on SCRiM personnel, and Courtney Cooper for comments on a draft of the manuscript. Special thanks go to all members of the SCRiM project and the interviewees. This work was supported by the National Science Foundation through the Network for Sustainable Climate Risk Management (SCRiM) under NSF cooperative agreement GEO-1240507 and by the Penn State Center for Climate Risk Management (CLIMA). All errors and opinions are those of the authors and do not reflect the views of the funding entities.

Funding

This work was supported by the National Science Foundation through the Network for Sustainable Climate Risk Management (SCRiM) under NSF cooperative agreement GEO-1240507, by the Penn State Center for Climate Risk Management (CLIMA), and by the Rock Ethics Institute.

Author information


Contributions

All authors jointly conceived the project and designed the interview protocol. CH performed the interviews. NT and CH developed the codebooks and coded the interview transcripts. RN and CH conceived the software package used in the publications analysis. CH performed remaining data analysis and data visualization with all authors providing critical feedback. All authors contributed to writing the manuscript.


Ethics declarations

Ethics approval

All research was conducted with approval from the Institutional Review Board of Pennsylvania State University (study ID: STUDY00008957).

Consent to participate

All participants provided informed consent.

Conflict of interest

The authors declare no competing interests.



Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Helgeson, C., Nicholas, R.E., Keller, K. et al. Attention to values helps shape convergence research. Climatic Change 170, 17 (2022). https://doi.org/10.1007/s10584-021-03274-y


DOI: https://doi.org/10.1007/s10584-021-03274-y