Abstract

The increasing likelihood of extreme weather events is the most noticeable and damaging manifestation of anthropogenic climate change. In the aftermath of an extreme event, policy makers are often called upon to make timely and sensitive decisions about rebuilding and managing present and future risks. Information regarding whether, where and how present-day and future risks are changing is needed to adequately inform these decisions. However, this information is often not available, and when it is, it is often not presented in a systematic way. Here, we demonstrate a seamless approach to the science of extreme event attribution and future risk assessment by using the same set of model ensembles to provide such information on past, present and future hazard risks in four case studies on different types of events. Given the current relevance, we focus on estimating the change in future hazard risk under 1.5 °C and 2 °C of global mean temperature rise. We find that this approach not only addresses important decision-making gaps, but also improves the robustness of future risk assessment and attribution statements alike.

1 Introduction

“What role did climate change play?” is frequently asked after an extreme weather event, especially when that event resulted in significant damage. While still a relatively young field, the science of extreme event attribution has developed tools and methods to rapidly, objectively and quantitatively assess the changing nature of extreme weather risk at the local level (e.g. National Academy of Science report, Otto et al. 2017, Philip et al. 2017). The high level of public interest and media coverage these attribution analyses receive demonstrates that extreme weather events with measurable impacts provide an important opportunity to raise awareness of how global warming is changing the risks of certain types of extreme weather and begin a conversation about how to best manage and adapt to these changing risks.

In contrast, the assessment of current and future risks of extreme weather events can get side-lined (van Aalst et al. 2017) with decision makers and governments focussing on present-day challenges. Consequently, civil society organisations and decision-makers alike are requesting information on the role of climate change in extreme weather events on a range of timescales (past, present and future) produced in a seamless way and relevant to the impacts experienced.

So far, a small number of studies have been undertaken to provide past, present and future information on changing risks of extreme events based on standard meteorologically defined indices of extremes (Fischer and Knutti 2015; Harrington et al. 2017; King et al. 2017). However, these studies have focussed on global scales only and are not associated with extreme events that have actually occurred and that would therefore resonate with regional decision-makers. If events can be both defined from meteorological data and related directly to the impacts that societies have experienced, this will allow a more direct link between currently relatively separate bases of climate information, ranging from disaster risk reduction to climate projections. The connection with the impact is important for the public, decision-makers and scientists alike in order to appreciate and relate to the index chosen to define the event and to enable future climate projections to be incorporated in local and regional planning.

Furthermore, the assessment of the return period of an event that has occurred, i.e. the rareness of the event, is crucial information for decision making. As such, it is important to define the event carefully with respect to the impacts, as both the spatial (Uhe et al. 2016) and temporal (Harrington 2017) extent of the event determine the return period, which, in turn, determines the change in likelihood of the event occurring (risk ratio or probability ratio). Depending on the nature of the distribution of extremes, which is often related to the type of event, the return period can be especially sensitive to the spatial and temporal definition of the event. While this challenge is applicable to event attribution throughout (e.g. Otto et al. 2016), it highlights again the importance of communicating the degree of sensitivity associated with a result, for instance, by providing the confidence interval (CI) around a best estimate, and by using multiple well-tested methods to arrive at a best estimate.

The main aim of this study is to respond to the request for information on extreme events on timescales spanning past, present and future, focussing on extreme events that have occurred in recent years. Our aim is not to attribute the events with the largest impacts or highest damages but to demonstrate, for different types of events with large impacts and for different regions and time intervals, how a seamless approach to attribution and future risk assessment can be applied across timescales.

We identify a set of four different types of extreme weather events from around the world defined by their impacts, choosing events that occurred in domains for which we have regional climate model simulations available and for which current climate models and datasets can be expected to perform well enough: a flood in Thailand, a drought in Somalia, a wet season in Colombia and a cold wave in Peru.

We use the same set of model ensembles to provide information on past, present and future hazard risks. Given the current relevance, we focus on estimating the change in future hazard risk under 1.5 °C and 2 °C of global mean temperature rise (subsequently respectively referred to as the 1.5 °C and 2.0 °C scenarios), rather than standard time slice experiments.

We furthermore aim to combine the best practice methodological approach from event attribution, the multi-method approach recommended by the National Academy of Science (see NAS report 2016) and frequently applied in attribution studies, with large ensembles of future simulations. The methods to choose from for future risk assessment, however, are fewer than for pure attribution studies (observations cannot be used). We compare and synthesise results on hazard risk for each event and time frame where possible.

In the remainder of this paper, after a brief methods section (“Methodology”), we focus on the analysis of the events (“The events”). For each event in turn, we provide a brief event description, the event definition and the observational analysis, followed by a model evaluation and analysis and, finally, a synthesis of the results. Next, we reflect on the benefits and limitations of our approach in “Discussion”. “Conclusions” highlights the conclusions that can be drawn from this analysis. A detailed description of the models that are in principle used to analyse the events is given in the SI.

2 Methodology

2.1 Event selection

As the aim of this paper is to demonstrate a seamless approach to estimating changing likelihoods of hazards across timescales for events that had large impacts, we chose different types of weather events in the developing world that fell within the historical period simulated in the HAPPI experiments (happimip.org, Mitchell et al. 2017), simulations developed specifically for the assessment of changing risks of extreme events under 1.5 °C and 2 °C, and for which a set of regional-scale ensembles is available from weather@home (see SI for more details). Arguably the most complete database of damages and losses incurred from extreme weather events is the Emergency Events Database (EM-DAT), a global database containing information on the occurrence and effects of thousands of disasters.

In order to make the most use of the simulations available, we focus on three regions: South Asia, South America and Africa north of 9°S. From EM-DAT we obtained a list of disasters in these regions with a death toll indicating high impact, resulting in a list of 56 events in South America and over 100 events in South Asia, and in the northern part of Africa. From these, we chose five events of different types with very high impacts that are relatively localised. One of these events is a heat wave in India, which has been attributed in a recent paper (van Oldenborgh et al. 2018) and is thus not included in the analysis.

The four events that are chosen from the list are described in “The events”.

2.2 Detection, attribution and projection of changing hazard risks

The first step in the analysis is trend detection in observational data: fitting the quantity of interest to a statistical model and determining whether a trend emerges beyond the range of deviations expected from natural variability. We use different distributions for the different events, as we have precipitation, drought and temperature events, which have different extreme value characteristics. The distributions used are a Gaussian, a generalised Pareto distribution (GPD) and a generalised extreme value distribution (GEV), all described by two or three parameters, including the location parameter μ, the scale parameter σ and, if appropriate, the shape parameter ξ. In this statistical approach, global warming is factored in by allowing the fit to the distribution to be a function of the (low-pass filtered) global mean surface temperature (GMST) (e.g. van Oldenborgh et al. 2015), where GMST is taken from the National Aeronautics and Space Administration (NASA) Goddard Institute for Space Studies (GISS) surface temperature analysis (GISTEMP, Hansen et al. 2010). In the case of precipitation (or drought), it is assumed that the scale parameter σ (the standard deviation of the statistical distribution) scales with the location parameter μ (the mean of the statistical distribution), and the PDF is scaled up or down with the (smoothed) GMST. In the case of temperature, it is assumed that the scale parameter σ is fixed, and the PDF is shifted up or down with the GMST. This results in a distribution that varies continuously with GMST. This distribution can be evaluated for a GMST in the past (e.g. 1950 or 1880) and for the current GMST. A 1000-member non-parametric bootstrap procedure is used to estimate confidence intervals for the fit. We can then assess the probability of occurrence of the observed event in the present climate, p1, and past climate, p0.
These probabilities are communicated as return periods of the event in the present and past: 1/p1 and 1/p0, respectively. The risk ratio is evaluated as the ratio of p1 to p0. If the 95% confidence interval for risk ratio does not encompass unity, we say that the risk ratio is significantly larger (or smaller) than one and there is a detectable positive (or negative) trend in the observational data. This approach has been used before, e.g. Philip et al. (2017) for drought, Schaller et al. (2014) and Van der Wiel et al. (2017) for heavy precipitation and Uhe et al. (2016) and van Oldenborgh et al. (2015) for temperature.
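As an illustration of the precipitation case, the following minimal sketch evaluates a GEV whose location and scale are scaled together with GMST and derives p1, p0, the return periods and the risk ratio. The exponential form of the scaling, the function names and all parameter values are assumptions made for this example (only the 13.1 mm/day event value is taken from the Thailand case); the text above states only that σ scales with μ.

```python
import math

def gev_exceedance_prob(x, mu, sigma, xi):
    """P(X > x) for a GEV with location mu, scale sigma and shape xi."""
    if abs(xi) < 1e-9:
        # Gumbel limit of the GEV
        return 1.0 - math.exp(-math.exp(-(x - mu) / sigma))
    t = 1.0 + xi * (x - mu) / sigma
    if t <= 0.0:
        # x lies outside the support: above the upper bound (xi < 0)
        # or below the lower bound (xi > 0)
        return 0.0 if xi < 0 else 1.0
    return 1.0 - math.exp(-t ** (-1.0 / xi))

def scaled_params(mu0, sigma0, alpha, gmst):
    # Assumed form: one common exponential factor, so sigma/mu stays constant
    factor = math.exp(alpha * gmst / mu0)
    return mu0 * factor, sigma0 * factor

def risk_ratio(x_event, mu0, sigma0, xi, alpha, gmst_now, gmst_past):
    mu_now, sigma_now = scaled_params(mu0, sigma0, alpha, gmst_now)
    mu_past, sigma_past = scaled_params(mu0, sigma0, alpha, gmst_past)
    p1 = gev_exceedance_prob(x_event, mu_now, sigma_now, xi)
    p0 = gev_exceedance_prob(x_event, mu_past, sigma_past, xi)
    return p1, p0, p1 / p0

def excludes_unity(ci_low, ci_high):
    """True if a confidence interval on the risk ratio does not contain 1."""
    return ci_low > 1.0 or ci_high < 1.0

# Hypothetical fit parameters, chosen only for illustration
p1, p0, rr = risk_ratio(x_event=13.1, mu0=11.0, sigma0=1.5, xi=-0.1,
                        alpha=0.8, gmst_now=0.9, gmst_past=0.0)
return_period_now, return_period_past = 1.0 / p1, 1.0 / p0
```

With a positive trend parameter, the event becomes more likely in the warmer climate, so the return period shortens and the risk ratio exceeds one. The helper `excludes_unity` mirrors the significance criterion stated above.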

In our attribution analysis, the second step is the attribution of the observed trend to global warming, natural variability or other factors, such as El Niño-Southern Oscillation (ENSO). For this study, we provide an estimation of the influence of ENSO (results of ENSO analysis in SI) using observations only, with the knowledge that there are physical mechanisms behind well-known observed teleconnections. The attribution to global warming (or other factors) requires model simulations run with and without anthropogenic forcings (or other factors).

In this study, we include a third step—an assessment of how hazard risk will change in the future. Model simulations are obviously also required in order to assess changes in hazard risk beyond the time period for which we have observations. The models used in this study (if they pass a basic evaluation) are EC-Earth, weather@home and the CMIP5 ensemble (see SI for a detailed model description).

For model simulations using transient forcing, we calculate the risk ratio much in the same way as for observations, here taking the model GMST in the year 1880 to represent preindustrial conditions, and a future year to represent a future 1.5 °C or 2.0 °C scenario. For model simulations with ‘time-slice’ or stationary forcing for selected climate scenarios, the risk ratio is calculated directly between ensembles of two different climate scenarios, such as an ‘all forcings’ (including anthropogenic forcing) and a ‘natural’ scenario (excluding anthropogenic forcing), or in the case of our analysis of the future changing hazard risk, between a current climate ‘all forcings’ scenario and a future 1.5 °C or 2.0 °C scenario (see SI 1.1 and 1.3 for further details).
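For the time-slice case, the direct calculation between two ensembles can be sketched as follows. This is a simplified illustration that counts threshold exceedances empirically and resamples ensemble members with a non-parametric bootstrap; the function names and the synthetic Gaussian ensembles are assumptions for this example and do not represent the actual model output or the exact procedure described in the SI.

```python
import random

def exceedance_prob(sample, threshold):
    """Fraction of ensemble members at or above the event threshold."""
    return sum(1 for v in sample if v >= threshold) / len(sample)

def risk_ratio_with_ci(factual, counterfactual, threshold, n_boot=1000, seed=0):
    """Risk ratio between two ensembles with a 95% bootstrap interval."""
    rng = random.Random(seed)
    rr = (exceedance_prob(factual, threshold)
          / exceedance_prob(counterfactual, threshold))
    boot = []
    for _ in range(n_boot):
        # Resample members with replacement from each ensemble separately
        f = rng.choices(factual, k=len(factual))
        c = rng.choices(counterfactual, k=len(counterfactual))
        p_c = exceedance_prob(c, threshold)
        if p_c > 0.0:
            boot.append(exceedance_prob(f, threshold) / p_c)
    boot.sort()
    ci_low = boot[int(0.025 * len(boot))]
    ci_high = boot[int(0.975 * len(boot)) - 1]
    return rr, ci_low, ci_high

# Synthetic stand-ins for a 'natural' and an 'all forcings' ensemble
data_rng = random.Random(42)
natural = [data_rng.gauss(10.0, 2.0) for _ in range(1000)]
all_forcings = [data_rng.gauss(11.0, 2.0) for _ in range(1000)]
rr, ci_low, ci_high = risk_ratio_with_ci(all_forcings, natural, threshold=13.0)
```

The same function could be applied to a current-climate ensemble and a 1.5 °C or 2.0 °C ensemble to obtain the future risk ratios.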

For comparison with model analyses, we extrapolate the risk ratio from observations back in time to obtain a risk ratio comparing the risk in the year of the event and preindustrial times, represented by the year 1880.

For model evaluation we follow the same methods used in e.g. Philip et al. (2017).

2.3 Synthesising of results

For observations, risk ratios (RR) are given for the year of the event with respect to 1880. For the EC-Earth model, a similar method is used, whereas for the weather@home model and the CMIP5 model ensemble, we evaluate RR by construction between the ‘current period’ (the block of years 2006–2015) and preindustrial conditions (see SI for details). We synthesise the results by taking a simple average (as in Uhe et al. 2018) of observational and model results.

For the future scenarios, we show risk ratios for the 1.5 °C and 2.0 °C scenarios: the change in probability between the 1.5 °C scenario and either the year of the event or the ‘current period’ (RR1.5) and the change in probability between the 2.0 °C scenario and either the year of the event or the ‘current period’ (RR2.0). We compare these values visually, but do not attempt to average them, for two reasons. First, it is not possible to make a fair comparison of RR1.5 or RR2.0 with RR, and the number of data sets available for the future analysis is more limited. Second, and crucially, the method for evaluating risk ratios using a single transient model (EC-Earth) is different from that using multi-model ensemble time-slice experiments as in CMIP5 or the weather@home ensemble that is explicitly designed to simulate +1.5 °C and +2.0 °C worlds. Instead, we comment on how the risk ratio per model changes between the 1.5 °C and 2.0 °C scenarios, and whether this is consistent between the models.

3 The events

As described above, four events were chosen based on three criteria: the impact they had; whether we can assume that, with the methodologies available, there will be climate models that are able to simulate the statistics of the event; and the geographical location. We focussed on events in regions covered by the regional models available.

3.1 Thailand floods in 2010

Starting in October 2010, large parts of Thailand were submerged by flooding following extreme rainfall in the season. A series of flash floods, initially triggered by late monsoon rainfall, led to flooding in October and November. On top of that, in November, a tropical storm hit the south of Thailand, causing greater problems and many more deaths because the affected areas were already coping with the flooding. The impact of the extreme rainfall was increased further because the month of August 2010 was very wet, drainage was inadequate and the timing of the event was unusual (in October the main rainy season is usually coming to an end). The first event that started the flooding lasted around 10 days. Analysing the observational record, we indeed see a series of precipitation anomalies, including the high 10-day rainfall between the 11th and 20th of October. We select this extreme event as the trigger for the first floods leading to the months of flooding over Thailand.

3.1.1 Event definition

The distribution of rainfall in Thailand is bimodal and the influence on flooding is different in different seasons, which makes it scientifically impossible to analyse a 10-day rainfall in the entire year. We focus on the season with the highest rainfall, between July and October (JASO), in which the flooding occurred in 2010. The event definition is set to be the maximum 10-day rainfall over the season JASO, averaged over Thailand. However, we note that such an extreme 10-day event will only have a large impact if other factors contribute as well, such as higher than average precipitation amounts preceding this event and inadequate drainage.
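The event definition above can be expressed as a short calculation. The sketch below is a minimal illustration that assumes the country-averaged daily precipitation is already available as a mapping from date to mm/day and that only 10-day windows lying entirely within July to October are considered; the function name and the synthetic data are hypothetical.

```python
from datetime import date, timedelta

def jaso_max_10day_mean(daily, year, window=10):
    """Maximum running-mean precipitation (mm/day) over Jul-Oct of one year.

    `daily` maps datetime.date to area-averaged precipitation in mm/day.
    """
    start, end = date(year, 7, 1), date(year, 10, 31)
    n_days = (end - start).days + 1
    values = [daily[start + timedelta(days=i)] for i in range(n_days)]
    # Evaluate every window that fits completely inside the season
    return max(sum(values[i:i + window]) / window
               for i in range(n_days - window + 1))

# Synthetic series: a constant background with one wet 10-day spell
daily_2010 = {date(2010, 1, 1) + timedelta(days=i): 5.0 for i in range(365)}
for i in range(10):
    daily_2010[date(2010, 10, 11) + timedelta(days=i)] = 15.0
event_value = jaso_max_10day_mean(daily_2010, 2010)
```

Applying the function to every year of a record yields the series of seasonal maxima to which an extreme value distribution can then be fitted.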

3.1.2 Observational analysis

The longest purely observational data set for investigating precipitation on a daily time scale over Thailand is the CPC 0.5° gridded analysis, available from 1979 to now. Whilst longer re-analysis data sets are available, they have poorer spatial resolution and are less suited to studying short precipitation events. To describe the JASO maxima of the 10-day precipitation, we use a GEV distribution.

The observations indicate that the 10-day precipitation extreme (11–20 Oct 2010) had an observed value of 13.1 mm/day with a return period in the event year of about 2.5 years (1.8 to 3.9). Compared to preindustrial times (1880), the frequency of such events has likely increased; however, due to the limited length of the observational series, the trend is not statistically distinguishable above natural climate variability (the RR encompasses unity).

3.1.3 Model analysis

The EC-Earth model validates well against the CPC observational analysis, with both σ/μ and ξ being well within the observational 95% confidence intervals, rendering the model suitable for a simple multiplicative bias correction on the mean (~ 27%). The best estimate for the change in probability since 1880 is a factor of 2.0, which agrees well with the observational estimate (1.8). Due to the large ensemble size, the increase in risk is clear (1.5 to 2.3).
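A simple bias correction of this kind can be sketched as follows. The example rescales a model series so that its mean matches the observed mean (multiplicative, as used here for precipitation) and also shows the additive counterpart that is appropriate for temperature. The function names and sample values are hypothetical, and correcting on the sample mean is an assumption made for illustration.

```python
from statistics import mean

def multiplicative_correction(model, obs):
    """Scale the model series so that its mean equals the observed mean."""
    factor = mean(obs) / mean(model)
    return [v * factor for v in model], factor

def additive_correction(model, obs):
    """Shift the model series so that its mean equals the observed mean."""
    offset = mean(obs) - mean(model)
    return [v + offset for v in model], offset

# Hypothetical seasonal maxima (mm/day) and seasonal minima (deg C)
obs_precip = [10.0, 12.5, 14.0, 11.5]
model_precip = [8.0, 9.5, 11.0, 9.0]
corrected_precip, factor = multiplicative_correction(model_precip, obs_precip)

obs_temp = [12.1, 11.8, 12.6]
model_temp = [10.9, 10.4, 11.2]
corrected_temp, offset = additive_correction(model_temp, obs_temp)
```

A multiplicative correction preserves the ratio σ/μ of the model series, which is why the validation above checks that ratio and the shape parameter before the correction is applied.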

The weather@home model also validates well according to the criteria. Using the rounded return time of the event, one in 3 years, as the event definition, weather@home also simulates a significant increase in risk (RR is 1.2 to 1.3), which is found to increase further in a warming world.

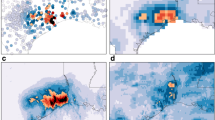

Risk ratios for both weather@home and EC-Earth are within the observed range and significantly larger than one, see Table S1. The averaged RR over observations and models is 1.6 (1–4.8), see Fig. 1a. Model results narrow the confidence intervals such that we can now exclude both a decrease in probability and an increase in probability by more than a factor of 4.8.

Fig. 1 Estimates of the change in risk, between preindustrial conditions (the year 1880 or ‘natural’ scenario) and the present conditions (event year or ‘current’ climate scenario) for the different methodologies, with observations in blue and model results in red for (a) Jul–Oct precipitation over Thailand, (b) minimum temperatures over Jun–Aug in Peru, (c) precipitation over Jan–Apr in the box 1–8°N, 72–79°W in Colombia, (d) precipitation in Somalia (excluding Somaliland) averaged over a full year Jul–Jun, (e) as (d) but for Jul–Dec and (f) as (d) but for Jan–Jun. The averaged synthesis result is shown in magenta. For each bar, the black marker indicates the best estimate and the shading depicts the range given by the 95% CI.

In agreement with results for RR, for the 1.5 °C and 2.0 °C scenarios, the trend in JASO 10-day precipitation extremes is significant and positive in both EC-Earth and weather@home, see Table S1 and Fig. S1.

3.2 Cold wave in Peru in 2013

In the last few years, Peru saw several winters with extreme cold that affected vulnerable populations and led to individual deaths; however, in 2013, the entire winter was so cold that 505 people died (EM-DAT). In previous attribution studies, we have seen that cold waves are, unsurprisingly, becoming less frequent (Peterson et al. 2012), but, as with all localised extreme events, the quantification of the expected decrease is crucial for resilience building and adaptation planning.

3.2.1 Event definition

The event is defined as the country average of the minimum temperature in the winter season June, July and August (JJA). Almost the whole country experienced a cold winter, but it was especially cold along the coast in the mountainous area, where temperatures are usually low. This naturally influenced the impact of the event.

3.2.2 Observational analysis

For this event, we use the CRU TS4.00 data set for monthly minimum temperatures. The data is used from 1950 onwards, as before that a visual inspection shows large inhomogeneities. These are probably caused by the large increase in the number of stations used in the data set just before 1950. The observed value of Jun–Aug averaged minimum temperatures in 2013 is 11.7 °C. Whilst both Gaussian and GPD distributions fit the data well, we use a Gaussian distribution, which uses all data, rather than the GPD, which samples only the cold tail (e.g. the lowest 20% of Jun–Aug temperatures). The 2013 cold event has a return period of 17 years (7–75 years). In the past, such cold events happened more often: the risk ratio is 0.08 (0.02–0.21).
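For a cold event described by a Gaussian that shifts with GMST, the probabilities and the risk ratio follow directly from the normal cumulative distribution function. The sketch below illustrates this under stated assumptions: the linear dependence of the mean on GMST, the function names and all parameter values are hypothetical, and only the 11.7 °C event value is taken from the text.

```python
import math

def normal_cdf(x, mu, sigma):
    """P(X <= x) for a Gaussian with mean mu and standard deviation sigma."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def cold_event_risk_ratio(threshold, mu_ref, sigma, alpha, gmst_now, gmst_past):
    """Probability of a season at least as cold as `threshold`, now and in the past.

    Assumed form: the mean shifts linearly with GMST while sigma stays fixed.
    """
    p1 = normal_cdf(threshold, mu_ref + alpha * gmst_now, sigma)
    p0 = normal_cdf(threshold, mu_ref + alpha * gmst_past, sigma)
    return p1, p0, p1 / p0

# Hypothetical fit parameters, chosen only for illustration
p1, p0, rr = cold_event_risk_ratio(threshold=11.7, mu_ref=12.0, sigma=0.5,
                                   alpha=1.0, gmst_now=0.9, gmst_past=0.0)
return_period_now = 1.0 / p1
```

Because the event sits in the cold tail, the relevant probability is that of falling at or below the threshold, so a warming shift of the mean gives a risk ratio below one.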

3.2.3 Model analysis

For the EC-Earth model validation we use a GPD with 20% threshold, as in EC-Earth we have a sufficiently large quantity of data and the variability (σ) of EC-Earth was too low in the Gaussian fit. For a GPD, σ for EC-Earth just fits in the observational CI, but the shape parameter has only overlapping error margins: the best estimate of ξ is below the 95% CI range of values from observations, indicating that the model distribution is flatter in the tail. We thus use the model with care. As the mean of the extremes is 1.38 °C too low, we use an additive bias correction of 1.38 °C to the model. The model shows a strong trend towards less severe cold extremes. From Table S2 and Fig. 2b, we see that an event such as the observed will virtually never happen again in the 2.0 °C scenario, and, thus, RR2.0 is not reliable anymore. This result could also be due to the tail of the EC-Earth distribution being flatter than that for the observed distribution. The weather@home simulations have a much smaller decrease in the risk than the observations and EC-Earth simulations, while the RR1.5 is comparable to that simulated by EC-Earth.

Fig. 2 Estimates of the change in risk of Jul–Oct 10-day precipitation over Thailand at least as wet as in 2010, between the present conditions (event year or ‘current’ climate scenario) and either the 1.5 °C scenario (magenta) or the 2.0 °C scenario (red), for the different model methodologies as labelled. For each bar, the black marker indicates the best estimate and the shading depicts the range of the 95% CI. All events are the same as in Fig. 1, but we do not give an assessment for the full year in Somalia.

The average RR for a cold wave like the one in Peru in 2013 is 0.06 (0.01–0.18). This indicates that there is a strong decrease in cold waves over Peru.

This decrease in cold events will continue in the future. The values for RR1.5 and RR2.0 are smaller than 1, see Table S2 and Fig. 2b.

3.3 Extreme rainfall in Colombia in 2006

According to EM-DAT and other sources of impact data (SI), the rainfall event associated with by far the most deaths in recent years in South America was an extreme rainfall event occurring during the first half of 2006 in Colombia. This event led to large scale flooding primarily in the Magdalena River basin and in the southeast of the country. From a meteorological point of view, the event (see SI for the spatial distribution) was not the most extreme in the series, if looking at monthly average rainfall, despite the large impacts in the first rainy season of the year.

3.3.1 Event definition

As in Thailand, the distribution of rainfall is bimodal and, given that the event occurred towards the end of the first rainy season, we focus on monthly rainfall over Jan–Apr. The region is defined as a box (1–8°N, 72–79°W) covering the central and western half of the country and including most of the Magdalena River basin (Fig. S7).

3.3.2 Observational analysis

We use the CRU TS4.00 monthly precipitation data set (1900–2015) for this event. Whilst the data set starts in 1900, we only use the years 1950–2015, as there is a large increase in the number of stations used in the data set just before 1950. The event has a magnitude of 8.0 mm/day, averaged over the months Jan–Apr 2006 and over the box. We fit the data to a GPD, using an 80% threshold. However, we find no significant trend in observations (95% CI on RR is 0.002 to 75) and we therefore calculate the return period assuming no trend. The return period of the 2006 event is 20 years (10 to 130 years, 95% CI).
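The stationary return period calculation can be sketched as follows. This example takes the values above the 80% quantile, fits a GPD to the excesses and converts the resulting exceedance probability into a return period. To stay self-contained it fits the GPD by the method of moments, which is a substitution made for this illustration and not necessarily the fitting method used in the analysis; the function names and synthetic data are hypothetical.

```python
import math
import random
from statistics import mean, variance

def fit_gpd_moments(excesses):
    """Method-of-moments estimates of the GPD scale and shape parameters."""
    m, v = mean(excesses), variance(excesses)
    ratio = m * m / v
    xi = 0.5 * (1.0 - ratio)
    sigma = 0.5 * m * (ratio + 1.0)
    return sigma, xi

def return_period(x, values, quantile=0.8):
    """Return period (in years) of exceeding x, given one value per year."""
    ordered = sorted(values)
    threshold = ordered[int(quantile * len(ordered))]
    if x < threshold:
        raise ValueError("event value must lie above the GPD threshold")
    excesses = [v - threshold for v in values if v > threshold]
    sigma, xi = fit_gpd_moments(excesses)
    rate = len(excesses) / len(values)  # chance of exceeding the threshold
    y = x - threshold
    if abs(xi) < 1e-9:
        survival = math.exp(-y / sigma)  # exponential limit of the GPD
    else:
        t = 1.0 + xi * y / sigma
        # t <= 0 means x lies beyond the fitted upper bound (xi < 0)
        survival = t ** (-1.0 / xi) if t > 0.0 else 0.0
    p = rate * survival
    return math.inf if p == 0.0 else 1.0 / p

# Synthetic 66-year series standing in for 1950-2015 seasonal means (mm/day)
rng = random.Random(1)
seasonal_means = [5.0 + rng.expovariate(1.0) for _ in range(66)]
rp = return_period(max(seasonal_means), seasonal_means)
```

With only about 13 values above the threshold in a 66-year record, the fitted parameters are poorly constrained, which is consistent with the wide confidence interval quoted above.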

3.3.3 Model analysis

The EC-Earth model validates satisfactorily when using a GPD fit that depends on GMST, so we use this for the analysis of the risk ratio, after a multiplicative bias correction of 1.57. The risk ratio (RR) is smaller than one and significant: 0.5 (0.3 to 0.8), which means that precipitation events of the observed magnitude or greater have become less common in this model.

The weather@home validation is satisfactory and, subsequently, using the model to perform the analysis, the RR is almost exactly the same as for EC-Earth. CMIP5 also agrees with the observed and modelled trend, although here the trend is not significant. See Table S3 for details.

The models narrow the margins in RR calculated from observations to a risk ratio of 0.5 (0.1–3.3), see Fig. 1d. Mainly due to the large uncertainty in the observations, the decrease in risk up to the present is not significant. We can, however, exclude risk ratios larger than 3.3 and smaller than 0.1.

For the future scenarios, the picture is less consistent, see Fig. 2d. EC-Earth still shows that events like this precipitation event become less and less common, while for weather@home, the trend under the 1.5 °C and 2 °C scenarios is less consistent between the two models, but still of the same sign. In contrast, CMIP5 simulates risk ratios centred around 1.

3.4 Somalia drought in 2010–2011

Droughts in East Africa are almost an annual occurrence, but the location and duration vary and, thus, so do the impacts (e.g. Philip et al. 2017). Unsurprisingly, impacts are highest in countries with unstable regimes, which thus have very high vulnerability. In terms of the death toll, the drought in 2010–2011 over Somalia holds a sad record. The drought extended over two rainy seasons, which have different characteristics and for which in previous attribution studies the influence of anthropogenic climate change was estimated independently (Lott et al. 2013). The results of that study are, however, based on a single model and over a larger region and, thus, difficult to compare to other studies. In Somalia, many scientific difficulties come together that make attribution studies challenging.

3.4.1 Event definition

In East Africa, the availability of station data is very limited. Gridded observational data sets CHIRPS (daily, 1981–now) and CenTrends (monthly, 1900–2014) provide the best option for observational analyses (Philip et al. 2017). We use the CenTrends monthly data for the observational analysis of the 2010–2011 drought in Somalia (excluding the Somaliland region). There are around four stations feeding into this data set in the period 1900–1920, about 20 stations from 1920 to 2000, and satellite data is used for the period after 2000. As for the rapid attribution analysis of the 2016–2017 Somalia drought performed by the WWA team and available at https://wwa.climatecentral.org/analyses/somalia-drought-2016-2017/, we choose to take the whole data set, starting in 1900. We take monthly rainfall over a full year (Jul 2010–Jun 2011) as basis, area-averaged over Somalia. The weather@home model, however, can only provide yearly analyses when starting in January. Therefore, we additionally analyse the two failed rainy seasons individually in the two half years, Jul–Dec 2010 and Jan–Jun 2011, to enable comparison.

3.4.2 Observational analysis

In this very dry region of Africa, the dry tail of the distribution is extremely dry and any small changes to the observed values can lead to large changes in the fitting parameters of the distribution. Therefore, the estimation of the return time of the event strongly depends on the fit.

For the full year period, the observations indicate a return period of the Jul 2010–Jun 2011 mean precipitation (0.46 mm/day) of 290 years (at least 140 years).

For the first half year, the precipitation was well below average but the anomaly was not as extreme as during the following half year. The return period of the Jul–Dec 2010 mean precipitation (0.49 mm/day) is about 11 years (8 to 23 years).

For the second half year, the observational estimate for the return period of the Jan–Jun 2011 mean precipitation (0.43 mm/day) is around 125 years (at least 70).

For the full year Jul–Jun, the risk ratios calculated from observations are consistent with no change in risk between preindustrial times and now. Also, for the first half year, we do not find evidence of a change in risk of such a drought in the observational series. For the second half year period, the risk ratio best estimate is larger than for the first half year and full year, but the 95% CI still encompasses no change since preindustrial times until now.

3.4.3 Model analysis

We validate and bias correct the EC-Earth model based on the period of overlap 1900–2014 with CenTrends. For the full year and half year Jul–Dec, the GPD fit parameters of EC-Earth are within the 95% CI of those from observations. For Jan–Jun, there are larger differences between the GPD fit parameters of the model and observations, with EC-Earth showing larger variability relative to μ (the 20% quantile). As the 95% CI of the model and observational σ/μ estimates only just overlap, the model results for the Jan–Jun half year should be treated with more caution. Multiplicative bias corrections on μ of − 30.2%, − 51.7% and + 28.1% were applied to the EC-Earth series for the Jul–Jun, Jul–Dec and Jan–Jun periods respectively.

For the full year Jul–Jun, as for the observations, we find no significant trend in the EC-Earth data. The best estimates for the risk ratios are around unity, with 95% CI of 0.7 to 4.5 (RR), 0.9 to 1.2 (RR1.5) and 0.97 to 1.8 (RR2.0). With weather@home unavailable for analyses in consecutive calendar years and CMIP5 not validating satisfactorily, we cannot give a synthesis figure for RR1.5 and RR2.0. The present-day change in risk (RR) is exactly 1, but with large uncertainty bounds, which only allow us to exclude changes in either direction larger than an order of magnitude.

For the half year Jul–Dec, we find no significant change in risk since preindustrial times, but with the central estimates being opposite in the two different models. A synthesised result provides a slightly positive change in risk of 1.3 and slightly smaller uncertainty bounds.

For the half year Jan–Jun, as with the observations, the EC-Earth risk ratio best estimates are larger than for the Jul–Dec half year and full year, but this time, weather@home gives the opposite sign to the observations and the other model, leading to an overall change in risk of 1.7, but the 95% CI still encompassing no change in risk since preindustrial times until now.

When estimating future changes in risk, both models provide the same sign for the best estimate in the change in risk for RR1.5 and RR2.0. However, while slightly positive for the Jan–Jun half years, it is negative for the Jul–Dec half year. This suggests that potentially different dynamical mechanisms influence the change in the different seasons. However, without further analysis and an understanding of these processes and their representations and in light of the very large uncertainty, no clear signal for future drought risk in Somalia emerges.

4 Discussion

The intention of this paper is to show that the assessment of changing hazard risks across timescales for events that are selected based on their impacts is possible in a comparable way by combining event attribution methods with projections into the future, although there are some issues to resolve.

This approach resulted in selecting some events for which the experienced or perceived impact was large, but the meteorological situation was not especially rare (e.g. Thailand 2010 floods), and others for which the meteorological event was exceptional (e.g. Somalia 2010–2011 drought).

The multi-method approach we apply has been adopted as the standard in the extreme event attribution literature (e.g. NAS report). Here, we show that extending such a study to the future can be done, but it also raises new issues.

Ideally, the models would perform well and could be considered to represent the observed event and even refine the confidence intervals (e.g. in the case of Colombia). We would then compare the present and future scenarios using only models. Frequently, however, this is not the case due to inadequacies in the observations, the models or both, and instead we find that a combined estimate from models and observations gives the most reliable synthesis for the historical period. When this is the case, we cannot directly compare the combined model-observational estimates for the historical period with the model-only estimates for the future period. While this might be unsatisfactory, combining attribution studies, which can take observations into account, with future predictions highlights the cases where, even when models validate favourably against observations, the drivers of the events might be more complex than simulated and, hence, projections might be more uncertain than would otherwise be apparent.

This study is very much a paper of opportunity, making use of available regional climate model simulations. As in all attribution studies, the event definition is crucial in determining the outcome, and there is always a trade-off between what caused the impact and what can realistically be expected to be reliably simulated by climate models. To make use of the political opportunities that open up after extreme events have struck and to facilitate the use of scientific information to shape adaptation measures, the connection to the real impact is vital. We try to stay reasonably objective by using country-wide averages or rectangular boxes, rather than basing the area entirely on the event. Ideally, impact models would be used in connection with climate models (Schaller et al. 2016), but the science is only beginning to explore how to do this, and well-tested methodologies, such as those we have for event attribution (NAS), are not yet available. Thus, failing an easy solution, testing the sensitivity of the results to arbitrary choices in the event definition seems a necessary ingredient in the cookbook of best practices for extreme event attribution.
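A sensitivity test of this kind can be as simple as recomputing the risk ratio for several alternative event definitions. The sketch below varies only the event threshold, defined as a quantile of the reference ensemble; the function name, the choice of quantiles and the empirical counting estimator are all assumptions made for illustration, and other choices such as the averaging region or season could be varied in the same way.

```python
import numpy as np

def rr_threshold_sensitivity(factual, counterfactual, quantiles=(0.05, 0.1, 0.2)):
    """Empirical risk ratio of a low-precipitation event for several thresholds.

    Each threshold is a quantile of the counterfactual ensemble (an assumption);
    the event is a value at or below that threshold.
    """
    factual = np.asarray(factual, dtype=float)
    counterfactual = np.asarray(counterfactual, dtype=float)
    results = {}
    for q in quantiles:
        threshold = np.quantile(counterfactual, q)
        p1 = np.mean(factual <= threshold)
        p0 = np.mean(counterfactual <= threshold)
        results[q] = p1 / p0
    return results
```

If the risk ratios differ strongly between definitions, or change sign relative to 1, the attribution statement depends on an arbitrary choice and should be reported with that caveat.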

We have deliberately chosen to include a drought in East Africa where previous attribution studies did not find a climate change signal. This can be the case for several reasons. One reason is that the thermodynamic and dynamic changes in the likelihood of extreme events genuinely lead to changes in occurrence frequency of an event that are in opposing directions and, thus, cancel each other out (Otto et al. 2016) so that the likelihood of the event does not change or might even decrease with rising global temperatures. Another reason for no change in the likelihood of an event occurring could be that drivers other than anthropogenic greenhouse gases are currently masking a change in the occurrence frequency. For risk assessment and in particular adaptation planning, these two different reasons have very different implications. And while our abilities to evaluate model fidelity for future hazard assessments are very limited compared to assessments of present-day simulations, including an assessment of potential future risk ratios in attribution studies could provide a test on whether the risk is not changing for dynamical reasons or whether thermodynamic effects are currently masking the signal.

5 Conclusions

The results presented above clearly highlight the value of putting present-day risk assessment and attribution studies into a seamless assessment with future risks. In two of the four events studied, the attribution result and the assessment of future risk ratios are in the same direction, which overall adds confidence to the results and suggests that anthropogenic climate change is indeed an important driver of changing risks. There are, however, important differences.

In the case of the Thailand rainfall, RR, RR1.5 and RR2.0 are of the same order of magnitude and in all cases have relatively small uncertainty bounds, arguably providing the clearest result. In the case of Peru, the risk of cold waves is unsurprisingly decreasing in the present, as well as in the future. The future risk ratio is, however, extremely difficult to quantify as the events become extremely rare.

In two of the four analysed cases, we find that the attribution result is not in line with the estimate of future risk ratios. In the case of Colombia, where all methodologies agree and, indeed, refine the result, the fact that the future change in risk is less clear suggests that what looks like a clear attribution result might not be an attribution primarily to greenhouse gas emissions, but also to other drivers. Only in the case of Somalia can we not say that a risk assessment across timescales helped to understand or contextualise the attribution or projection. It highlights, however, that without a detailed understanding of underlying processes, assessments of changing risks in East Africa are not possible.

These results show that, from a scientific perspective, looking at changing risks across timescales is clearly beneficial and helps to increase confidence in results (Thailand) or refine quantification of risk ratios (Peru) or point to sources of uncertainty that could potentially be overlooked if focussing only on past or present timescales (Colombia). Furthermore, information across timescales might make it easier for information to be used by different stakeholders and this study shows that we can provide such information in a seamless way.

References

Fischer EM, Knutti R (2015) Anthropogenic contribution to global occurrence of heavy-precipitation and high-temperature extremes. Nat Clim Chang 5:560–564. https://doi.org/10.1038/nclimate2617

Hansen J, Ruedy R, Sato M, Lo K (2010) Global surface temperature change. Rev Geophys 48:RG4004. https://doi.org/10.1029/2010RG000345

Harrington L (2017) Investigating differences between event-as-class and probability density-based attribution statements with emerging climate change. Clim Chang 141:641–654

Harrington LJ, Frame DJ, Hawkins E, Joshi M (2017) Seasonal cycles enhance disparities between low- and high-income countries in exposure to monthly temperature emergence with future warming. Env Res Lett. https://doi.org/10.1088/1748-9326/aa95ae

King AD, Karoly DJ, Henley BJ (2017) Australian climate extremes at 1.5 and 2 degrees of global warming. Nat Clim Chang. https://doi.org/10.1038/nclimate3296

Lott F, Christidis N, Stott P (2013) Can the 2011 East African drought be attributed to anthropogenic climate change? Geophys Res Lett 40:1177–1181

Mitchell D, AchutaRao K, Allen M, Bethke I, Forster P et al (2017) Half a degree additional warming, projections, prognosis and impacts (HAPPI): background and experimental design. Geosci Model Dev 10:571–583

NAS report. 2016. Attribution of extreme weather events in the context of climate change. Washington, DC: Natl Acad Sci Eng Med

Otto FEL, van Oldenborgh GJ, Eden J, Stott PA, Karoly DJ, Allen MR (2016) The attribution question. Nat Clim Chang 6:813–816

Otto FEL, van der Wiel K, van Oldenborgh GJ, Philip S, Kew SF, Uhe P, Cullen H (2017) Climate change increases the probability of heavy rains in Northern England/Southern Scotland like those of storm Desmond - a real-time event attribution revisited. Env Res Lett. https://doi.org/10.1088/1748-9326/aa9663

Peterson T, Stott P, Herring S (2012) Explaining extreme events of 2011 from a climate perspective. Bull Am Meteorol Soc 93:1041–1067

Philip S, Kew SF, van Oldenborgh GJ, Otto F, O’Keefe S, Haustein K et al (2017) Attribution analysis of the Ethiopian drought of 2015. J Clim. https://doi.org/10.1175/JCLI-D-17-0274.1

Schaller N, Otto FEL, van Oldenborgh GJ, Massey NR, Sparrow S, Allen MR (2014) The heavy precipitation event of May–June 2013 in the upper Danube and Elbe basins. Bull Am Meteorol Soc 95(9):S69–S72

Schaller N, Kay AL, Lamb R, Massey NR, van Oldenborgh GJ et al (2016) Human influence on climate in the 2014 southern England winter floods and their impacts. Nat Clim Chang 6:627–634

Uhe P, Otto FEL, Haustein K, van Oldenborgh GJ, King AD, Wallom DCH, Allen MR, Cullen H (2016) Comparison of methods: attributing the 2014 record European temperatures to human influences. Geophys Res Lett 43(16):8685–8693

Uhe P, Philip S, Kew S, Shah K, Kimutai J, Mwangi E, van Oldenborgh G, Singh R, Arrighi J, Jjemba E, Cullen H, Otto F (2018) Attributing drivers of the 2016 Kenyan drought. Int J Climatol. https://doi.org/10.1002/joc.5389

van Aalst M, Arrighi J, Coughlan E et al (2017) Bridging science, policy and practice report of the International Conference on Climate Risk Management Pre-scoping Meeting for the IPCC Sixth Assessment Report 5–7 April 2017, Nairobi

van der Wiel K, Kapnick SB, van Oldenborgh GJ et al (2017) Rapid attribution of the August 2016 flood-inducing extreme precipitation in South Louisiana to climate change. Hydrol Earth Syst Sci 21(2):897–921. https://doi.org/10.5194/hess-21-897-2017

van Oldenborgh GJ, Haarsma R, De Vries H, Allen MR (2015) Cold extremes in North America vs. mild weather in Europe: the winter of 2013–14 in the context of a warming world. Bull Am Meteorol Soc 96(5):707–714

van Oldenborgh GJ, Philip S, Kew S et al (2018) Extreme heat in India and anthropogenic climate change. Nat Hazards Earth Syst Sci Discuss. https://doi.org/10.5194/nhess-2017-107

Acknowledgements

We would like to thank the volunteers running the weather@home models as well as the technical team in OeRC for their support. We would furthermore like to acknowledge funding from the MacArthur Foundation as well as the NERC grant NE/K006479/1.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Otto, F.E.L., Philip, S., Kew, S. et al. Attributing high-impact extreme events across timescales—a case study of four different types of events. Climatic Change 149, 399–412 (2018). https://doi.org/10.1007/s10584-018-2258-3

DOI: https://doi.org/10.1007/s10584-018-2258-3