Abstract

Dementia affects cognitive functions of adults, including memory, language, and behaviour. Standard diagnostic biomarkers such as MRI are costly, whilst neuropsychological tests suffer from sensitivity issues in detecting dementia onset. The analysis of speech and language has emerged as a promising and non-intrusive technology to diagnose and monitor dementia. Currently, most work in this direction ignores the multi-modal nature of human communication and interactive aspects of everyday conversational interaction. Moreover, most studies ignore changes in cognitive status over time due to the lack of consistent longitudinal data. Here we introduce a novel fine-grained longitudinal multi-modal corpus collected in a natural setting from healthy controls and people with dementia over two phases, each spanning 28 sessions. The corpus consists of spoken conversations, a subset of which are transcribed, as well as typed and written thoughts and associated extra-linguistic information such as pen strokes and keystrokes. We present the data collection process and describe the corpus in detail. Furthermore, we establish baselines for capturing longitudinal changes in language across different modalities for two cohorts, healthy controls and people with dementia, outlining future research directions enabled by the corpus.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Over 50 million people in the world have dementia, a syndrome involving deterioration in memory and cognitive abilities, with an annual increase of 10 million (Organization et al., 2019). Earlier diagnosis can improve patients’ quality of life by enabling better planning and medical interventions at the most effective and appropriate stage (Prince et al., 2011, 2014; Association, 2016)–especially if diagnosis occurs before clinical symptoms onset in Ritchie et al. (2016); Mortamais et al. (2017).

Standard diagnostic biomarkers such as MRI, PET scans and cerebrospinal fluids are intrusive and expensive, and therefore unsuitable for early diagnosis. The other main family of diagnosis methods are cognitive tests such as the ADAS-Cog (Rosen et al., 1984), MMSE (Folstein et al., 1975) and ACE-III (Noone, 2015), widely used in clinical studies. As well as suffering from sensitivity issues (Schneider & Sano, 2009) and lagging behind biomarkers in their ability to detect dementia onset (Jack et al., 2013), they have several other caveats: they are usually administered manually; they are pencil-and-paper type tests requiring an expert, and therefore applied only after initial referral to a doctor; they are unsuited for testing across large populations or at home. For early diagnosis, pre-screening and condition monitoring we need methods that can be automated, complementary to biomarkers but easily observable within everyday life without intrusion.

1.1 Language datasets for dementia

The automatic analysis of patients’ spontaneous speech and language is a promising non-invasive, inexpensive approach to screening and monitoring dementia progression, as speech and language impairments caused by dementia can occur early in the course of the disease (Fraser et al., 2015; König et al., 2018). To allow progress in this field researchers have been working with a number of different datasets. Table 1 provides an overview of the most widely used datasets (along with a new datasets proposed by us) in terms of different aspects such as the population, the amount of data, the modality, the nature of the tasks and ability to elicit linguistic information, the duration of the elicited tasks, and the longitudinal aspect (if any). Most consist of speech obtained in a clinical setting; some include either partially (Hansebo & Kihlgren, 2002; Weiner & Schultz, 2016) or fully (Luz et al., 2020) transcribed text or text extracted through Automatic Speech Recognition (ASR) (Luz et al., 2021).

1.2 Language based tasks

The majority of research has focused on distinguishing people with Alzheimer’s Disease (AD) from cognitively normal controls, a.k.a., the AD classification task. The Pitt (Becker et al., 1994), ADReSS (Luz et al., 2020), and \(ADReSSo \) (Luz et al., 2021) datasets have been widely used for addressing this task; Among them Pitt is the largest dataset. The datasets include speech recordings (Luz et al., 2021) or speech and transcripts of verbal descriptions (Becker et al., 1994; Luz et al., 2020) obtained by asking subjects to describe either general-purpose pictures (e.g., pictures showing animals) or the Cookie Theft picture (CTP) from the Boston Diagnostic Aphasia Examination (Goodglass, 2013). Unlike the Pitt Corpus (Becker et al., 1994), which contains annual data for the same person up to five times, ADReSS (Luz et al., 2020) and \(ADReSSo \) (Luz et al., 2021) include a single speech sample per participant. ADReSSo also contains occasional interactions between instructors and participants.

The inference of patients’ cognitive scores, a.k.a., the score regression task, has seen less attention. Several studies have extracted different linguistic and acoustic features to predict cognitive scores using the Pitt dataset (Becker et al., 1994). In Luz et al. (2020, 2021), authors use acoustic features and features extracted from transcripts in a single and bimodal setting for predicting cognitive scores, showing the effectiveness of simple modality fusion.

The task of predicting changes in cognitive status per individual over time, a.k.a., disease progression, has received even less attention due to the lack of consistent longitudinal datasets. The Pitt (Becker et al., 1994) and Carolinas (Pope & Davis, 2011) datasets are the largest longitudinal datasets currently available for studying the language of individuals with dementia. An important limitation of the Pitt corpus is that the longitudinal aspect is limited, spanning up to 5 sessions maximum per individual with most participants having two narratives only. On the other hand, in the Carolinas dataset, healthy controls have up to two interviews over the longitudinal study while people with dementia have between 1 and 9 sessions. Preliminary work has addressed disease progression as a classification task (Weiner & Schultz, 2016; Clark et al., 2016; Luz et al., 2021) on the basis of longitudinal assessments in ADReSSo spanning two years from dementia onset. However, spontaneous speech is only collected once.

An important limitation of existing dementia datasets is that their longitudinal aspect is limited to data snapshots at either a fixed time (Luz et al., 2020, 2021) or a few points in time (Becker et al., 1994; Pope & Davis, 2011). Moreover, the language elicitation tasks are biased towards particular genres or domains via lab-based tasks, such as the description of a particular set of images (Becker et al., 1994; Luz et al., 2020, 2021). Some studies elicit speech from more natural spontaneous conversations (Pope & Davis, 2011; Weiner & Schultz, 2016). Yet, the tasks are restricted to particular topics, well known to participants and subject to learning effects (Goldberg et al., 2015). Due to the above limitations in dementia datasets, existing work in language and speech processing for discriminating across cohorts of healthy controls and people with dementia ignore changes in language and how these relate to changes in cognition over time.

Here we address the above limitations and make the following contributions as follows:

-

We introduce a novel multi-modal dementia corpus of rich longitudinal natural conversations collected over 2 phases, each spanning 28 sessions. This was obtained from people with various forms of dementia and healthy controls on the basis of reminiscence material and in a non-clinical setting. The corpus contains speech, transcriptions, and written language (i.e., pen and keyboard modalities). To the best of our knowledge, this is the first multi-modal longitudinal dataset with this range of modalities and covering such fine-grained longitudinal spans. We present the data collection process and the dataset itself in detail.

-

We establish longitudinal tasks and baselines across different modalities and investigate language changes across the cohorts of health controls and people with dementia over time.

-

We conduct a set of experiments that show significant discrimination between healthy controls and people with dementia across all modalities. Our tasks and baselines pave the way on future directions enabled by our new dataset.

2 Related work

2.1 Linguistic manifestation of dementia

Dementia is often associated with reduction in vocabulary size, syntactic complexity and information content (Maxim & Bryan, 1994; Croisile et al., 1996), as well as loss of coherence, both temporal (construction of logical time sequences) and thematic (continuity of topic) (Ellis, 1996). When conducting dialogue, adults with dementia show less coherence and cohesion and more disruptive topic shifts and empty phrases (Dijkstra et al., 2004), more topically irrelevant utterances (St-Pierre et al., 2005), and characteristic ways of responding to questions (Elsey et al., 2015). Dementia has also been associated with apathy (Nobis & Husain, 2018), emotional dysregulation and mood swings, even in people with mild to moderate dementia (Petry et al., 1989); such emotional aspects are known to surface and are detectable in language (e.g. Purver & Battersby, 2012).

2.2 Language tests

Interview-based tests have therefore been developed that assess the linguistic ability for diagnosis and disease progression (Taler & Phillips, 2008; Tarawneh & Holtzman, 2012). However these tests are vulnerable to practice effects (Goldberg et al., 2015), and are not applicable to everyday spontaneous speech. Promisingly, Forbes-McKay et al. (2013, 2014) showed that linguistic characteristics of spontaneous speech and writing can reliably discriminate healthy older controls from mild-moderate AD patients, and track aspects of decline over time; however, longitudinal findings were limited by infrequent (6-monthly) data collection and their results relied on manual linguistic analysis.

2.3 NLP for dementia

More recent work has used NLP approaches for dementia detection by analysing aspects of language such as lexical, grammatical, and semantic features (Ahmed et al., 2013; Orimaye et al., 2017; Kavé & Dassa, 2018), showing that people with dementia produce less lexical and semantic context and lower syntactic complexity compared to healthy controls. Lack of fluency through the study of paralinguistic features has also been shown to be indicative of people with dementia (de Ipiña et al., 2013). The semantics and pragmatics of language appear to be affected by dementia throughout the entire span of the disease, more so than syntax (Bayles & Boone, 1982). In particular, people with dementia talk more slowly with longer pauses (Gayraud et al., 2011; de Ipiña et al., 2013; Pistono et al., 2019).

Recent work has also investigated manually engineered acoustic features to recognize AD from spontaneous speech (Luz et al., 2020, 2021) while other work exploited non-linguistic features to distinguish people with AD from healthy controls (Nasreen et al., 2021). Neural models such as LSTM and CNN(Karlekar et al., 2018) or pre-trained language models (Yuan et al., 2020), have been used to analyze disfluency characteristics, such as filled pauses. Researchers have used neural approaches to extract either acoustic features (Pan et al., 2020, 2021) or linguistic information (Zhu et al., 2021) directly from the speech signal for dementia detection.

2.4 Longitudinal language changes

Existing work has focused on distinguishing people with dementia from healthy controls without considering language changes over time. Moreover, where present, longitudinal data in current datasets are sparse. For example, in the Carolinas Corpus (Pope & Davis, 2011), the largest available longitudinal dataset, subjects have up to 9 speech records across the longitudinal study. However, only 8 people (3 dementia and 5 controls) have 9 speech records across the entire collection. Our newly introduced corpus consists of 22 people, where each subject was asked to record 28 sessions of 15 mins of speech for each of the two study phases, as well as provide written logs (See Table 1 for the comparative benefits of our dataset). Additionally, ours is the first study to investigate longitudinal language changes across modalities and how these manifest in the dementia and control cohorts.

3 Collecting the longitudinal multimodal corpus

Our goal has been to collect a longitudinal multi-modal corpus including both spontaneous speech and writing, as well as extra-linguistic information associated with language production, and to focus on interactions occurring in a non-clinical setting. There are three important novel aspects in the corpus design: I) Conversations and written thoughts as well as associated paralinguistic information are obtained on the basis of reminiscence material, specifically images from past decades on topics of general interest. Reminiscence is a meaningful and useful activity for people with and without dementia that can improve cognition, mood, and quality of life (Pinquart & Forstmeier, 2012; Gonzalez et al., 2015). II) The corpus contains daily data over two phases each spanning around 4 weeks or 28 sessions. III) The corpus is collected in the participants’ own environment using a custom-built tablet application.

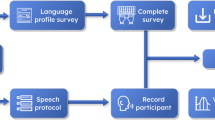

3.1 Corpus collection process

According to our protocol our corpus is collected in separate phases each lasting four weeks or 28 sessions, where phases are 14 weeks apart. In practice due to unforeseen delays the period between phases has been longer than 14 weeks (see Sect. 7). Participants are paired with a carer and asked to record daily sessions during each study phase, alternating between (a) 15 mins of conversation with their carers, and (b) typed or hand-written thoughts using a stylus pen.

Both spoken and written language are elicited using reminiscence material, i.e., images from the past, created by the dementia communication specialists Many Happy ReturnsFootnote 1. Images are presented using a bespoke Android Tablet application which records spoken and written data and sends it to a secure remote server for storage. The application was designed, developed, and tested together with our commercial clinical partner ClinvivoFootnote 2, in consultation with a stakeholder group from the Alzheimer’s Society. The application allows recording three modalities: speech, typed text (keyboard), and hand-written text (pen). For the latter two paralinguistic information such as key strokes and pen strokes, pen pressure and deletions are recorded respectively.

At the start of each 4-week phase, participants are given a tablet running the purpose built application which contains reminiscence material and allows recording language in the various modalities. A Mini Mental State Examination (MMSE) (Folstein et al., 1975) and an Addenbrooke’s Cognitive Examination-III (ACE-III) (Noone, 2015) are administered by a suitably qualified person at the start and end of each phase respectively as cognitive impairment benchmarks. While these tests provide only minimal cognitive data, they allow us to assess cognitive change at the comparison points needed to analyse the rich linguistic information collected.

The investigators monitor the submission of data via a remote server. If data have not been submitted for 48 hours, a research team member contacts the participant and carer to ensure they are not having difficulties. If a participant is unable to record data for longer than a week, the research team considers their withdrawal from the study. If it is deemed that a participant has lost capacity to consent, they are withdrawn from further data collection. Subject to consultation with the participants’ carers, any data collected for that participant up to that point are used in the analysis.

3.2 Reminiscence material & tablet application

The bespoke tablet application shows a participant four images every day, each representing a topic of general interest from the 50s, 60s and 70s. Each image/topic is accompanied by three questions to help initiate a conversation or thought process and provide memory “joggers”. The participant chooses one topic out of these four as well as the mode of interaction (recording a conversation, typing or writing thoughts). The four images are pseudo-randomly selected from a pool of images available in each of the 4-week phases. Figure 1 illustrates the table application once a subject has chosen a particular topic (here “Radio”).

The image material and corresponding questions were developed by an organisation specialising in dementia communicationFootnote 3 and have been used with people in care homes. The 50s and 60s material was adapted for use in the tablet application. The 70s material was created for the purpose of the study. The collected corpus covers a set of 67 images/topics. Phase 1 includes 26 topics from the 50s and Phase 2 includes 41 images from the 60s and 70s. By design topics were meant to be repeated every so often but based on individual’s feedback such repetition has been minimised and each phase includes different material. In particular, Phase 1 topics include: Goblin Teasmade, Washday and Smog, Keeping Warm, Household smells, Sundays, Housewives, Budgies, Radio, Television, The cinema and music, The Goons, Weekly children’s comics, The Coronation, Holiday Camps, The Modern Era - transport, Endless freedom, Toys and books, School, Knitting, Hair, Teddy boys and teenagers, Bikes, Fashion, Immigration, National Service, Sport. Phase 2 was augmented with more images from the 70s, which seems to better fit the memory bump of our participant cohort.

3.3 Participant recruitment

Our target was to recruit a cohort of people living with dementia or MCI (n=20) and age-matched controls (n=10). These numbers are appropriate for a pilot study. Previous longitudinal research examining language indicators in people with Alzheimer’s disease over a year (Forbes-McKay et al., 2013, 2014) have recruited similar numbers of participants, with assessments every six months. Participant inclusion and exclusion criteria as well as recruitment methods are described in Figure 2.

Eligibility: Participants are aged 65-80 years with mild to moderate dementia, MCI, or are age-matched healthy controls. They are resident in their own home or with family and are in daily contact with a carer or family member. They must have lived in the UK during the 50s-70s so they can relate to the reminiscence material. They must be able to conduct daily conversations and write their thoughts using the provided tablet application.

Recruitment: Primary means of recruiting participants has been through the Join Dementia Research platform (JDR), mailing lists, dementia networks, dementia cafes and memory clinics. Interested candidate participants are subsequently screened by qualified staff (NIHR research nurses) in terms of providing consent and meeting the study eligibility criteria.

3.4 Transcription

A subset of the spoken data (51 sessions from 8 participants spanning several weeks) were transcribed manually by experienced dialogue transcribers, using PRAAT.Footnote 4 As well as the words spoken, transcripts include significant non-verbal events such as utterance timings, pauses, laughter, crying, yawning, whispering, coughing as well as disfluencies including mis-speaking and reformulation. The transcription convention used was developed on the basis of the CHAT protocol (MacWhinney, 1992) and techniques for transcribers (Garrard et al., 2011).

3.5 Community support and dissemination

We have created a steering committee consisting of six Alzheimer’s society volunteers and the founder of Many Happy Returns/Real Comminication Works, an organisation working closely with people living with dementia to help with engagement and dialogue. We have had meetings every several months with the entire group and work closely with a subgroup on the design and usability of the data collection application as well as any concerns that may be faced by participants. This committee has had vital input into the design of the study, the identification of suitable participants as well as dissemination of findings at the end of the study.

The raw data itself cannot be made publicly available as this does not comply with our ethics. Yet, it could be made available to interested parties subject to an NDA agreement. In the future, we also aim to make publicly available pre-trained embeddings for linguistic and audio modalities.

4 Dataset description

4.1 Participant demographics

We have data from 22 participants (6 females and 4 males with no dementia diagnosis, and 3 females and 9 males with a dementia diagnosis). The average age at the time of recruitment for people with dementia was 70.9 years and for healthy controls 68.9 years. Most participants had at least 10 years of education, with about 25% having completed a University degree. Conditions represented in the collected corpus include Mild Cognitive Impairment (MCI), Alzheimer’s Disease (AD), Vascular Dementia (VD), Frontotemporal Dementia (FD), and Mixed Dementia (MD). Overall, the corpus includes 10 healthy controls, 5 people with AD, 2 people with MCI, 2 people with FD, 1 with VD, and 2 with MD (1 AD+VD, 1 AD + Lewy body Dementia), covering 816 sessions and different modalities. Table 2 summarizes dataset statistics across all modalities.

For Phase 1, the cohorts include 12 people with dementia or MCI and 10 controls. As managing the longitudinal data collection process is expensive, both in terms of human effort and in terms of the hardware, software and data storage our protocol catered for a small number of participants (20 people with dementia and 10 controls). Similar numbers have been used before in previous longitudinal studies (Forbes-McKay et al., 2013, 2014). While our study protocol targeted recruitment of twice as many people with dementia as healthy controls, since we expect greater homogeneity between controls while dementia can manifest in many different ways, in practice we only managed to recruit 12 people with dementia in the given timeframe. For delays primarily external to the study, discussed in Sect. 7, only 3 people with dementia and 6 controls were able to complete Phase 2 of the study Footnote 5.

4.2 Statistical overview of different data modalities

The speech modality is the most popular amounting for 490 sessions by 22 participants, that is 101:26 hours of audio data. The Typed/Key modality follows with 17 participants and 271 sessions while the Hand-written/Pen modality was selected by 12 participants in 104 sessions. In general, controls record a larger amount of sessions compared to participants with dementia (51.1 (STD=13.1) vs 29.1 (STD=11.4)). In each session, participants chose 2.5 topics on average (STD=2.6). In total, the sessions cover 66/67 unique topics. However, participants with dementia addressed fewer topics in the same number of sessions compared to controls (63 vs 65).

For speech, the mean duration of sessions is slightly shorter for the dementia group compared to controls (12:11 mins (STD=4:31) vs 12:39 mins (STD=4:07)). Figure 3 summarizes the number of recorded sessions per individual in the two groups together with the session duration in the speech modality. For the majority of sessions conversations last between 15 and 16 mins in both groups, although some topics seem harder for both groups, resulting in shorter sessions. On average, subjects choose the same topic 1.2 (STD=0.5) and 1.3 (STD=0.6) times over the longitudinal study in control and dementia cohorts, correspondingly. Overall, the duration of individual conversations/sessions is balanced across the two groups. In total, the duration of speech sessions is 50:49 hours for people with dementia and 50:36 hours for controls.

Table 3 summarizes statistics of other modalities included in the corpus, i.e., typed and hand-written daily logs, and transcribed daily conversations, along with their corresponding characteristics. For the typed daily logs, healthy controls spend 20.9 minutes writing a log, while the respective length of time to produce a written log for people with dementia is 35.9 minutes. By contrast, the average length of typed characters is 2,647 for healthy controls and 1,752 for people with dementia. We see a similar pattern for the hand-written logs, with the average length of a character sequence produced for this purpose being 529 for healthy controls and 392 for people with dementia. Therefore healthy controls are able to produce more written or typed text within a shorter amount of time. Yet the averaged recorded pen pressure is similar across the two cohorts.

Part of the spoken conversations (for 8 speakers, 6 people with dementia and 2 controls) has been manually transcribed. Most of the manually transcribed spoken conversations were chosen to be by participants with dementia (79/84 sessions). This allows us in the future to analyse linguistic patterns that characterise people with dementia and use the corresponding para-linguistic information to fine-tune pre-trained speech-to-text models for automatic speech recognition (ASR) specialising in speech by people with dementia.

5 Longitudinal multimodal language changes across dementia and control cohorts

5.1 Task

Here we showcase the utility of our newly proposed dataset by investigating longitudinal changes in language across different modalities (i.e., speech, transcribed conversation, and typed text) in relation to the two cohorts (i.e., healthy controls and people with dementia). Our goal is to identify subjects’ language variations over time. In particular, given a sequence of N sessions \(\{S_1, S_2, ..., S_N\}\) over the longitudinal study, we first map each of the sessions to a d-dimensional representation \(\{S_1^d, S_2^d, ..., S_N^d\}\) such as \(d \in \mathbb {I^{+}}\). We then compute the distance D across different sessions over the longitudinal study through cosine similarity for measuring changes in language within subjects. To this effect, we explore two tasks by calculating language changes: a) between adjacent sessions \(D(S_t^d,S_{t+1}^d)\) where \(t \in N \), called the consecutive task, and b) from the beginning of data collection up to time t, \(D(S_1^d,S_{t}^d)\) where \(t \in N \) and \(t>1\), called the non-consecutive task. For calculating the distance D, we consider different statistical functions (i.e., mean, median, std). To the best of our knowledge, this would be the first task to allow such fine-grained multimodal longitudinal analysis as previous work mostly considered modality-specific classification of disease progression at limited fixed time points.

5.2 Session-level representations

To obtain session-level representations for both linguistic (transcribed spoken conversations and typed logs) and audio modalities (acoustic aspects of spoken conversations), we first segment language into utterances, where an utterance is defined as an unbroken chain of spoken or written language. We then map each of the utterances into a pre-trained embedding representation. We finally construct session-level representations by averaging the utterance embeddings within sessions.

When working on the linguistic modality, segmentation is performed on an unbroken chain of spoken language for the transcriptions and on punctuation for the typed texts. Each segmented utterance is mapped onto a fixed-size sentence representation (Reimers & Gurevych, 2019). We chose sentence embedding representations as previous work has shown their effectiveness in assessing cognition through language for mental health (Iter et al., 2018; Voleti et al., 2019).

For the audio modality, we use an end-to-end voice activity detection model Footnote 6 to perform segmentation on speech. In line with the linguistic modality and as previous work showed the superiority of using neural representations over manual-engineered acoustic features (Zhu et al., 2021), we map speech segments to pre-trained speech embeddings. Here, we use TRIpLET Loss network (TRILL), which has resulted in a good performance in non-semantic speech tasks including AD classification on DementiaBank (Shor et al., 2020). We encode moments of silence by applying random initialization.

5.3 Results

We calculated the mean, median, and std cosine distance of session-level representations between consecutive and non-consecutive sessions for each speaker individually. We then averaged the obtained scores of speakers across the two cohorts. We chose cosine distance of sentence level representations as it has been shown in previous work to be a strong baseline for tasks in mental health (Iter et al., 2018).

For speech, we noticed that the mean and median cosine distance scores were different across the two cohorts for both the consecutive and non-consecutive tasks (see Table 4). However, the distance scores were significantly higher for the dementia group (\(p<0.05\)) when we calculated changes across non-consecutive sessions. That is changes in speech across sessions were particularly prominent in temporally distant sessions. We also investigated speech variations for people who participated in both phases of the longitudinal study. There are 9 such participants (6 controls and 3 people with dementia). Here, we averaged the session embeddings per participant within a phase and calculated the distance between the two phases. Again, participants in the dementia cohort exhibited substantial speech variations across phases (see Table 5). This justifies further the importance of collecting longitudinal language data for dementia monitoring.

We obtained similar results when conducting experiments with transcriptions and typed texts (see Table 4). Overall, we observed that transcribed speech is most informative in capturing longitudinal language changes across the two cohorts. Yet, speech is more useful when comparing people across phases (see Table 5). In the case of typed text, while distance scores are higher for the dementia cohort, the difference was not statistically significant. We assume this is because in planned, non-spontaneous texts, such as written thoughts, the planning going into writing the text makes it more coherent. However, the typed and written text modalities convey additional, currently unexplored, extra-linguistic information (number of deletions, pauses between keystrokes), that show corrections of one’s text and these may be better indicators of changes in cognition. In the future, we aim to investigate self-repair tasks (Rohanian & Hough, 2021) that are more appropriate for written discourse.

6 Conclusion

We introduce a novel fine-grained longitudinal multi-modal corpus containing data from healthy controls and people with dementia. The dataset covers audio and text, containing spoken and transcribed conversations, written and typed logs as well as associated extra-linguistic information such as pen and keystrokes. Conversations and written thoughts are elicited in a natural setting, in the participants own environment, triggered by reminiscence material. Specifically, people can record their thoughts via recorded audio, typed or written text through a bespoke tablet application. We present the data collection process and describe the corpus providing statistical information about the two cohorts across the different modalities collected. We also establish baselines to capture longitudinal language changes in relation to the two cohorts and across the audio and linguistic modalities. A set of initial experiments shows that longitudinal language variations are higher in people with dementia. This effect is even more pronounced across temporally distant sessions. In the future, we aim to investigate tasks that involve language-function variations, such as coherence and disfluency, that are particularly prominent in the progression of dementia.

7 Limitations

In this work, we introduced a multi-modal longitudinal corpus for monitoring changes in dementia progression. The corpus was collected in a natural setting from healthy controls and people with dementia over two phases, each spanning 28 sessions. Moreover, subjects could choose to hold conversations or write or type their thoughts on a variety of topics from reminiscence material provided by a bespoke tablet application. Despite the novel fine-grained longitudinal multimodal nature of the corpus, an important limitation is the relatively small-scale cohorts in the study. In particular there is only a small number of people who where able to participate in the second phase of the study. This was due to unforeseen disruptions to the study first via the introduction of GDPR regulation in 2018, which required pausing of the study to update software for data collection, and then COVID-19. This meant that in several cases 12 months or longer elapsed between phases 1 and 2 and as a result many of our participants were no longer able to participate, primarily due to a decline in their health or change in their personal circumstances. We aim to address this limitation by expanding the existing corpus with a new data collection spanning three phases within twelve months, by recruiting individuals from a collaborating memory clinic. Nevertheless the existing dataset is the first of its kind and has opened new avenues for research in longitudinal changes in language for people with dementia and across different modalities.

Indeed, we have introduced baselines to capture longitudinal changes in language across modalities in the two cohorts. In particular, we calculated the distance between adjacent and across non-adjacent sessions when those were mapped to fixed-size representations. A set of initial experiments showed promising results for monitoring dementia using fine-grained multi-modal longitudinal data. However these approaches are limited in capturing various linguistic functions associated with the progression of dementia (Tang-Wai & Graham, 2008; Klimova et al., 2015). In future work, we aim to use NLP techniques to characterise the language in terms of features likely to be associated with disease onset and progression and/or be suitable for detecting changes in use over time across all types of conversations, i.e., speech, transcriptions, typed and written thoughts. These will include analysis in terms of lexical, syntax and coherence features already identified in the literature (Fraser et al., 2015; Ellis, 1996); and in terms of recent approaches which infer vector-based representations of words or speakers (embeddings) from observed use and are well suited to tracking changes in language use over time (Hamilton et al., 2016; Tsakalidis et al., 2022).

8 Ethical considerations

The collection of the corpus involves ethical considerations especially as we are working with vulnerable individuals who have dementia. The study has received ethics approval from the NHS Research Ethics Committee (REC) and the Health Research Authority (HRA), with reference number 16/WS/0226. Participating individuals as well as their carers consented to permit data collection and analysis for research purposes. User identifying information was kept separate from the language data collected via the bespoke tablet application.

While data was collected anonymously, there are potential ethical concerns with using spoken language and computational approaches for monitoring changes in cognitive status and dementia. One concern is related to privacy and confidentiality, as language data may contain sensitive personal information. Other potential risks involve the misuse of models trained on the data for monitoring changes in cognition, which could be used carelessly or maliciously without considering the impact and social consequences in the broader community. To mitigate such risks, we apply strategies such as running software on authorised servers only, with encrypted data during transfer, anonymization of data prior to analysis. Data is only accessed by authorised individuals and interested parties can only obtain access subject to an NDA agreement which carefully states research goals.

For a real-world application, ethical concerns are related to the potential for misdiagnosis or overdiagnosis, which could lead to unnecessary treatment or psychological distress for patients and their families. Additionally, there may be issues related to access and equity, as some individuals may not have access to the necessary technology or resources for speech recognition and monitor through analysis of language. Finally, there may be concerns related to the accuracy and reliability of technology, as well as the potential for bias in the data or algorithms used for monitoring changes. It is important to consider these ethical concerns when developing and implementing technologies for dementia monitoring and diagnosis.

Notes

Now called Real communications https://realcommunicationworks.com

We discuss dropouts caused by unforeseen delays and how we aim to tackle the relatively small amount of subjects in the Limitations sect., 7

References

Ahmed, S., Haigh, A.-M., de Jager, C. A., & Garrard, P. (2013). Connected speech as a marker of disease progression in autopsy-proven Alzheimer’s disease. Brain, 136, 3727–3737.

Association, Alzheimer’s, et al. (2016). Alzheimer’s disease facts and figures. Alzheimer’s & Dementia, 12(4), 459–509.

Bayles, K. A., & Boone, D. R. (1982). The potential of language tasks for identifying senile Dementia. The Journal of Speech and Hearing Disorders, 47(2), 210–7.

Becker, J. T., Boller, F., Lopez, O. L., Saxton, J. A., & MCGonigle, K. L. (1994). The natural history of Alzheimer’s disease. Description of study cohort and accuracy of diagnosis. Archives of Neurology, 51(6), 585–94.

Clark, D. G., McLaughlin, P. M., Woo, E., Hwang, K. S., Hurtz, S., Ramirez, L. M., Eastman, J. A., Dukes, R.-M., Kapur, P., DeRamus, T. P., & Apostolova, L. G. (2016). Novel verbal fluency scores and structural brain imaging for prediction of cognitive outcome in mild cognitive impairment. Alzheimer’s & Dementia: Diagnosis, Assessment & Disease Monitoring, 2, 113–122.

Croisile, B., Ska, B., Brabant, M.-J., Duchêne, A., Lepage, Y., Aimard, G., & Trillet, M. (1996). Comparative study of oral and written picture description in patients with Alzheimer’s disease. Brain and Language, 53, 1–19.

Dijkstra, K., Bourgeois, M. S., Allen, R. S., & Burgio, L. (2004). Conversational coherence: Discourse analysis of older adults with and without Dementia. Journal of Neurolinguistics, 17, 263–283.

Ellis, D. G. (1996). Coherence patterns in Alzheimer’s discourse. Communication Research, 23, 472–495.

Elsey, C., Drew, P., Jones, D., Blackburn, D. J., Wakefield, S. J., Harkness, K., Venneri, A., & Reuber, M. (2015). Towards diagnostic conversational profiles of patients presenting with Dementia or functional memory disorders to memory clinics. Patient Education and Counseling, 98(9), 1071–7.

Folstein, M. F., Folstein, S. E., & McHugh, P. R. (1975). “Mini-mental state’’: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research, 12(3), 189–198.

Forbes-McKay, K. E., Shanks, M. F., & Venneri, A. (2013). Profiling spontaneous speech decline in Alzheimer’s disease: A longitudinal study. Acta Neuropsychiatrica, 25, 320–327.

Forbes-McKay, K. E., Shanks, M. F., & Venneri, A. (2014). Charting the decline in spontaneous writing in Alzheimer’s disease: A longitudinal study. Acta Neuropsychiatrica, 26, 246–252.

Fraser, K. C., Meltzer, J. A., & Rudzicz, F. (2015). Linguistic features identify Alzheimer’s disease in narrative speech. Journal of Alzheimer’s Disease: JAD, 49(2), 407–22.

Garrard, P., Haigh, A.-M., & de Jager, C. (2011). Techniques for transcribers: Assessing and improving consistency in transcripts of spoken language. Literary and Linguistic Computing, 26, 389–405.

Gayraud, F., Lee, H., & Barkat-Defradas, M. (2011). Syntactic and lexical context of pauses and hesitations in the discourse of Alzheimer patients and healthy elderly subjects. Clinical Linguistics & Phonetics, 25, 198–209.

Goldberg, T. E., Harvey, P. D., Wesnes, K. A., Snyder, P. J., & Schneider, L. S. (2015). Practice effects due to serial cognitive assessment: Implications for preclinical Alzheimer’s disease randomized controlled trials. Alzheimer’s & Dementia : Diagnosis, Assessment & Disease Monitoring, 1, 103–111.

Gonzalez, J., Mayordomo, T., Torres, M., Sales, A., & Mélendez, J. C. (2015). Reminiscence and Dementia: A therapeutic intervention. International Psychogeriatrics, 27, 1731–1737.

Goodglass, H. (2013). Boston diagnostic aphasia examination. Lea & Febiger.

Hamilton, W.L., Leskovec, J., & Jurafsky, D. (2016). Cultural shift or linguistic drift? comparing two computational measures of semantic change. In Proceedings of the Conference on Empirical Methods in Natural Language Processing. Conference on Empirical Methods in Natural Language Processing 2016 (pp. 2116–2121).

Hansebo, G., & Kihlgren, M. (2002). Carers’ interactions with patients suffering from severe Dementia: A difficult balance to facilitate mutual togetherness. Journal of Clinical Nursing, 11(2), 225–36.

de Ipiña, K. L., Alonso, J. B., Travieso-González, C. M., Solé-Casals, J., Eguiraun, H., Faúndez-Zanuy, M., Ezeiza, A., Barroso, N., Ecay, M., Martínez-Lage, P., & Martinez-de-Lizardui, U. (2013). On the selection of non-invasive methods based on speech analysis oriented to automatic Alzheimer disease diagnosis. Sensors (Basel, Switzerland), 13, 6730–6745.

Iter, D., Yoon, J.H., & Jurafsky, D. (2018). Automatic detection of incoherent speech for diagnosing schizophrenia. In CLPsych@NAACL-HTL.

Jack, C. R., Knopman, D. S., Jagust, W. J., Petersen, R. C., Weiner, M. W., Aisen, P. S., Shaw, L. M., Vemuri, P., Wiste, H. J., Weigand, S. D., Lesnick, T. G., Pankratz, V. S., Donohue, M. C., & Trojanowski, J. Q. (2013). Tracking pathophysiological processes in Alzheimer’s disease: An updated hypothetical model of dynamic biomarkers. The Lancet Neurology, 12, 207–216.

Karlekar, S., Niu, T., & Bansal, M. (2018). Detecting linguistic characteristics of Alzheimer’s Dementia by interpreting neural models. ArXiv: abs/1804.06440.

Kavé, G., & Dassa, A. (2018). Severity of Alzheimer’s disease and language features in picture descriptions. Aphasiology, 32, 27–40.

Klimova, B., Maresova, P., Valis, M., Hort, J., & Kuca, K. (2015). Alzheimer’s disease and language impairments: Social intervention and medical treatment. Clinical Interventions in Aging, 10, 1401–1408.

König, A., Linz, N., Tröger, J., Wolters, M. K., Alexandersson, J., & Robert, P. (2018). Fully automatic speech-based analysis of the semantic verbal fluency task. Dementia and Geriatric Cognitive Disorders, 45, 198–209.

Luz, S., Haider, F., de la Fuente, S., Fromm, D., & MacWhinney, B. (2020). Alzheimer’s Dementia recognition through spontaneous speech: The ADReSS challenge. ArXiv: abs/2004.06833.

Luz, S., Haider, F., de la Fuente, S., Fromm, D., & MacWhinney, B. (2021). Detecting cognitive decline using speech only: The ADReSSo challenge. In: medRxiv.

MacWhinney, B. (1992). The childes project: Tools for analyzing talk. Child Language Teaching and Therapy, 8, 217–218.

Maxim, J. & Bryan, K. (1994). Language of the elderly: A clinical perspective.

Mortamais, M., Ash, J. A., Harrison, J., Kaye, J., Kramer, J., Randolph, C., Pose, C., Albala, B., Ropacki, M., Ritchie, C. W., et al. (2017). Detecting cognitive changes in preclinical Alzheimer’s disease: A review of its feasibility. Alzheimer’s & Dementia, 13(4), 468–492.

Nasreen, S., Hough, J., & Purver, M. (2021). Detecting Alzheimer’s disease using interactional and acoustic features from spontaneous speech. In Interspeech.

Nobis, L., & Husain, M. (2018). Apathy in Alzheimer’s disease. Current Opinion in Behavioral Sciences, 22, 7–13.

Noone, P. (2015). Addenbrooke’s cognitive examination-iii. Occupational Medicine, 65(5), 418–420.

Organization, W.H., et al. (2019). Risk reduction of cognitive decline and Dementia: Who guidelines.

Orimaye, S. O., Wong, J.S.-M., Golden, K. J., Wong, C. P., & Soyiri, I. N. (2017). Predicting probable Alzheimer’s disease using linguistic deficits and biomarkers. BMC Bioinformatics, 18(1), 1–13.

Pan, Y., Mirheidari, B., Tu, Z.C., O’Malley, R., Walker, T., Venneri, A., Reuber, M., Blackburn, D.J., & Christensen, H. (2020). Acoustic feature extraction with interpretable deep neural network for neurodegenerative related disorder classification. In Interspeech.

Pan, Y., Mirheidari, B., Harris, J.M., Thompson, J.C., Jones, M., Snowden, J.S., Blackburn, D., & Christensen, H. (2021). Using the outputs of different automatic speech recognition paradigms for acoustic- and bert-based Alzheimer’s Dementia detection through spontaneous speech. In Interspeech.

Petry, S. D., Cummings, J. L., Hill, M. A., & Shapira, J. S. (1989). Personality alterations in Dementia of the Alzheimer type: A three-year follow-up study. Journal of Geriatric Psychiatry and Neurology, 2, 203–207.

Pinquart, M., & Forstmeier, S. (2012). Effects of reminiscence interventions on psychosocial outcomes: A meta-analysis. Aging & Mental Health, 16, 541–558.

Pistono, A., Pariente, J., Bézy, C., Lemesle, B., Men, J. L., & Jucla, M. (2019). What happens when nothing happens? an investigation of pauses as a compensatory mechanism in early Alzheimer’s disease. Neuropsychologia, 124, 133–143.

Pope, C., & Davis, B.H. (2011). Finding a balance: The carolinas conversation collection.

Prince, M., Bryce, R., Ferri, C., et al. (2011). The benefits of early diagnosis and intervention. World Alzheimer Report 2011.

Prince, M., Knapp, M., Guerchet, M., McCrone, P., Prina, M., Comas-Herrera, M., Adelaja, R., Hu, B., King, B., Rehill, D., et al. (2014). Dementia uk: update.

Purver, M. & Battersby, S.A. (2012). Experimenting with distant supervision for emotion classification. In Conference of the European Chapter of the Association for Computational Linguistics.

Reimers, N., & Gurevych, I. (2019). Sentence-bert: Sentence embeddings using siamese bert-networks. In Conference on Empirical Methods in Natural Language Processing.

Ritchie, K., Carrière, I., Berr, C., Amieva, H., Dartigues, J.-F., Ancelin, M.-L., & Ritchie, C. W. (2016). The clinical picture of Alzheimer’s disease in the decade before diagnosis: Clinical and biomarker trajectories. The Journal of Clinical Psychiatry, 77(3), 2907.

Rohanian, M., & Hough, J. (2021). Best of both worlds: Making high accuracy non-incremental transformer-based disfluency detection incremental. In Annual Meeting of the Association for Computational Linguistics.

Rosen, W. G., Mohs, R. C., & Davis, K. L. (1984). A new rating scale for Alzheimer’s disease. The American Journal of Psychiatry, 141(11), 1356–1364.

Schneider, L. S., & Sano, M. (2009). Current Alzheimer’s disease clinical trials: Methods and placebo outcomes. Alzheimer’s & Dementia, 5, 388–397.

Shor, J., Jansen, A., Maor, R., Lang, O., Tuval, O., de Chaumont Quitry, F., Tagliasacchi, M., Shavitt, I., Emanuel, D., & Haviv, Y.A. (2020). Towards learning a universal non-semantic representation of speech. ArXiv: abs/2002.12764.

St-Pierre, M.-C., Ska, B., & Béland, R. (2005). Lack of coherence in the narrative discourse of patients with Dementia of the Alzheimer’s type. Journal of Multilingual Communication Disorders, 3, 211–215.

Taler, V., & Phillips, N. A. (2008). Language performance in Alzheimer’s disease and mild cognitive impairment: A comparative review. Journal of Clinical and Experimental Neuropsychology, 30, 501–556.

Tang-Wai, D. F., & Graham, N. L. (2008). Assessment of language function in Dementia-Alzheimer. Geriatrics, 11(2), 103–110.

Tarawneh, R., & Holtzman, D. M. (2012). The clinical problem of symptomatic Alzheimer disease and mild cognitive impairment. Cold Spring Harbor Perspectives in Medicine, 2(5), 006148.

Tsakalidis, A., Nanni, F., Hills, A., Chim, J., Song, J., & Liakata, M. (2022). Identifying moments of change from longitudinal user text. arXiv preprint arXiv:2205.05593.

Voleti, R., Liss, J. M., & Berisha, V. (2019). A review of automated speech and language features for assessment of cognitive and thought disorders. IEEE Journal of Selected Topics in Signal Processing, 14, 282–298.

Weiner, J., & Schultz, T. (2016). Detection of intra-personal development of cognitive impairment from conversational speech. In ITG Symposium on Speech Communication.

Weiner, J., Herff, C. & Schultz, T. (2016). Speech-based detection of Alzheimer’s disease in conversational german. In Interspeech.

Yuan, J., Bian, Y., Cai, X., Huang, J., Ye, Z., & Church, K. W. (2020). Disfluencies and fine-tuning pre-trained language models for detection of Alzheimer’s disease. In Interspeech.

Zhu, Y., Obyat, A., Liang, X., Batsis, J. A., & Roth, R. M. (2021). Wavbert: Exploiting semantic and non-semantic speech using wav2vec and bert for Dementia detection. Interspeech, 2021, 3790–3794.

Acknowledgements

This work was supported by a UKRI/EPSRC Turing AI Fellowship to Maria Liakata (grant EP/V030302/1), the Alan Turing Institute (grant EP/N510129/1), and Wellcome Trust MEDEA (grant 213939). Matthew Purver acknowledges financial support from the UK EPSRC via the projects Sodestream (EP/S033564/1) and ARCIDUCA (EP/W001632/1), and from the Slovenian Research Agency grant for research core funding P2-0103.

Author information

Authors and Affiliations

Contributions

Dimitris Gkoumas: Wrote the main manuscript, Conducted the analysis in Sect. 3 and the experiments in Sect. 4. Maria Liakata: Wrote the main manuscript. Adam Tsakalidis: Conceptualised and wrote the longitudinal task in Sect. 5.1. Bo Wang: Contributed to the Introduction and feature extraction in Sect. 5.1. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gkoumas, D., Wang, B., Tsakalidis, A. et al. A longitudinal multi-modal dataset for dementia monitoring and diagnosis. Lang Resources & Evaluation 58, 883–902 (2024). https://doi.org/10.1007/s10579-023-09718-4

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10579-023-09718-4