Abstract

Several studies have shown that mouth movements related to the pronunciation of individual phonemes are represented in the sensorimotor cortex. In theory, this would allow brain-computer interfaces (BCIs) to decode continuous speech using classifiers trained on the sensorimotor activity related to the production of individual phonemes. To address this, we investigated the decodability of trials with individual and paired phonemes (pronounced consecutively with a one-second interval) using activity in the sensorimotor cortex. Fifteen participants pronounced 3 different phonemes and 3 combinations of two of the same phonemes in a 7T functional MRI experiment. We confirmed that support vector machine (SVM) classification of single and paired phonemes was possible. Importantly, by combining classifiers trained on single phonemes, we were able to classify paired phonemes with an accuracy of 53% (33% chance level), demonstrating that the activity of isolated phonemes is present and distinguishable in combined phonemes. An SVM searchlight analysis showed that the phoneme representations are widely distributed in the ventral sensorimotor cortex. These findings provide insights into the neural representations of single and paired phonemes. Furthermore, they support the notion that a speech BCI based on machine learning algorithms trained on individual phonemes may be feasible using intracranial electrode grids.



Introduction

Throughout the past decades the field of brain-computer interfaces (BCI) has undergone unprecedented advancements. One major goal of BCI research has been the development of communication devices. Whereas healthy people are able to communicate verbally and non-verbally in order to interact with their environment, people who suffer from locked-in syndrome (LIS) have lost this ability. This syndrome is characterized by a loss of motor function, while consciousness and cognition remain intact. Research on the development of a BCI for communication has the potential to give these patients a “voice” again.

Many current BCI solutions are based on activity related to the execution of hand movements. Previous studies using intracortical microelectrode arrays translated neural signals into point-and-click commands to control a computer (Pandarinath et al. 2017; Nuyujukian et al. 2018). Furthermore, attempted handwriting movements have been translated to text in real time (Willett et al. 2021). Other research used electrocorticography (ECoG) of the primary motor and sensory cortex to discriminate four complex hand gestures from the American Sign Language alphabet (Bleichner et al. 2016; Branco et al. 2017). One ECoG-BCI already enables patients with electrodes implanted over the hand motor area of the cortex to communicate at home using attempted hand movements (Vansteensel et al. 2016).

The use of motor activity related to speech production might offer an alternative, more intuitive target for a BCI implant. The neuronal networks involved in speech production operate at several processing levels, and ultimately the execution of coordinated, sequential movements of the articulators gives rise to speech. While decoding words from their pattern of articulator activity in the sensorimotor cortex should be possible in principle, the extensive vocabulary of any language makes this practically infeasible. Instead, decoding might be performed on the phonemes that make up a word. Since a language contains far fewer phonemes than words, phonemes could serve as the units for decoding continuous speech (Mugler et al. 2014a, b; Wilson et al. 2020). Although intracranial electrophysiology is the state of the art for the development of naturalistic speech BCIs, functional magnetic resonance imaging (fMRI) can offer valuable neuroscientific insights that can subsequently be verified using intracranial methods. Previous fMRI studies have successfully decoded articulator movements (Bleichner et al. 2015), consonant-vowel-consonant utterances (Correia et al. 2020), syllables (Otaka et al. 2008) and words (Grootswagers et al. 2013). Furthermore, invasive electrophysiological recordings have allowed the classification of isolated phonemes (Blakely et al. 2008; Ramsey et al. 2018), single phonemes within words from the ventral sensorimotor cortex (Mugler et al. 2014a, b) and single phonemes within continuous speech from the dorsal sensorimotor cortex (Wilson et al. 2020). These studies provide evidence that articulators and phonemes are represented in the sensorimotor cortex during speech production and that it should be possible to develop a BCI system for continuous speech based on phonemes.

In this study, we assess the decodability of single phonemes and combinations of phonemes based on blood-oxygen-level-dependent (BOLD) responses in the sensorimotor cortex using 7-Tesla fMRI. Importantly, we assess whether phonemes can be distinguished both in isolation and within combinations. The latter is relevant for eventually detecting phonemes within spoken words, which would validate the prospect of reconstructing words using classifiers trained on individual phonemes. In addition, we explore the topography of these representations in more detail, including laterality and presence in the adjacent non-motor language areas (pars triangularis and pars opercularis). Finally, we explore differences in the spatial properties of neuronal representations between phonemes.

Methods

Participants

Fifteen subjects (average age 23.07 ± 2.54 years; 8 male) participated in the study. The study was approved by the medical-ethical committee of the University Medical Center Utrecht and all subjects gave their written informed consent in agreement with the Declaration of Helsinki (World Medical Association, 2013).

Scan Protocol

The fMRI measurements were performed on a whole-body 7 Tesla MR system (Achieva, Philips Health Care, Best, Netherlands) using a 32-channel head coil (Nova Medical, MA, USA). Subjects wore hearing protection while in the scanner. Before the phoneme task, a whole-brain T1-weighted MP2RAGE image (Marques et al. 2010) was acquired. The functional data were recorded in transverse orientation using an EPI sequence with the following parameters: repetition time (TR) = 1400 ms, echo time (TE) = 25 ms, flip angle (FA) = 60°, voxel size = 1.586 × 1.586 × 1.75 mm (Willett et al. 2021), 30 slices, ascending interleaved slice acquisition order, field of view (FOV) = 226.462 (AP) × 52.5 (FH) × 184 (LR) mm (Willett et al. 2021) and anterior-posterior phase-encoding direction; the slice stack was rotated so that the FOV covered the left pre- and postcentral gyrus. A total of 1680 functional images were acquired for each subject. At the start of functional imaging, a single functional image was acquired with identical parameters except for a reversed phase-encoding blip. Prism glasses allowed subjects to view the task, which was projected onto a screen through a waveguide.

Stimuli and Task Design

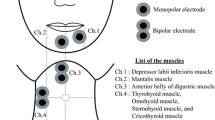

For the experiment, participants overtly pronounced three phonemes (/t/, /p/, /ə/). These phonemes were chosen because their pronunciation primarily engages individual articulators: the tongue for /t/ (alveolar consonant), the lips for /p/ (bilabial consonant) and the larynx for /ə/ (central vowel). During the task, seven stimulus classes were presented: three single phonemes (/t/, /p/, /ə/), three phoneme pairs (/p/ /t/, /ə/ /t/, /p/ /ə/) and one phoneme triplet (/ə/ /t/ /p/). The triplet class was excluded from the current analyses because we noticed that its discrimination was mainly driven by the larger number of phonemes being pronounced.

A slow event-related design was used for the task, with an inter-trial interval of 14 s. The production of each individual phoneme was cued by a visual stimulus. In paired phoneme trials, the individual phoneme cues were separated by 1 s (Fig. 1). A /%/ was added to the visual stimuli in trials with fewer than three phonemes to control for visual confounds. Participants were instructed to remain silent when /%/ was underlined. Each participant performed six runs of the task, each including four repetitions per class, providing a total of 28 trials per run, 24 of which belonged to the six classes analyzed here. The trial order per run was randomly generated, and the same sequence was used for all participants (trials were perfectly balanced across participants). Before the scanning session, participants were instructed on the proper pronunciation of the phonemes and on the importance of avoiding any other movements.
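The trial and image counts in this design can be cross-checked against the scan protocol (TR = 1400 ms, 1680 functional images per subject). The sketch below is a back-of-the-envelope check that assumes each run contained only the trials themselves, with no additional dummy scans or rest blocks; the text does not state this.

```python
# Back-of-the-envelope check of the trial timing against the scan protocol.
# Assumption: a run contains only the trials (no extra dummy scans or rest blocks).
TR_S = 1.4          # repetition time (s)
ITI_S = 14.0        # inter-trial interval (s)
N_CLASSES = 7       # 3 single, 3 paired and 1 triplet class
REPS_PER_CLASS = 4  # repetitions of each class per run
N_RUNS = 6

trials_per_run = N_CLASSES * REPS_PER_CLASS
images_per_run = round(trials_per_run * ITI_S / TR_S)
total_images = images_per_run * N_RUNS
# Trials left per run after excluding the triplet class from the analyses.
analysed_trials_per_run = (N_CLASSES - 1) * REPS_PER_CLASS

print(trials_per_run, images_per_run, total_images, analysed_trials_per_run)
# prints: 28 280 1680 24
```

Under this assumption, seven classes with four repetitions each reproduce the reported 1680 images exactly, while the six analyzed classes account for 24 trials per run.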

Preprocessing

Preprocessing was performed using SPM12 (http://www.fil.ion.ucl.ac.uk/spm/), FreeSurfer 7.0 (Fischl 2012) (https://surfer.nmr.mgh.harvard.edu) and FSL 6.0 (Jenkinson et al. 2012) (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/FSL). The functional images were slice-time corrected, realigned and unwarped using SPM12. Spatial distortions in the functional images were corrected with FSL’s topup, using the functional image that was acquired with a reversed phase-encoding blip (Andersson et al. 2003). The resulting functional images were coregistered to the anatomical image. The mean was then subtracted from the time series of each run, and the time series were high-pass filtered with a 70 s cut-off to remove low-frequency signal drifts. Subsequently, a cortical surface reconstruction was created from the anatomical image using the FreeSurfer recon-all pipeline. Regions of interest were created from the FreeSurfer parcellation according to the Desikan-Killiany atlas and included the sensorimotor cortex (precentral and postcentral gyrus) and the pars triangularis and pars opercularis (referred to as PTPO, comprising Broca’s area in the left hemisphere and its homologue in the right hemisphere). An additional region of interest (ROI) was created that included only the cerebral white matter. This area served as a control region, as it is assumed not to exhibit BOLD responses related to phoneme production. The white matter mask was slightly eroded to avoid including grey matter through partial-volume effects.
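The per-run demeaning and 70 s high-pass step can be illustrated with a discrete cosine transform (DCT) drift basis, which is the general approach behind SPM's temporal filtering. The sketch below is not SPM's code: the function name `dct_highpass` is hypothetical, and it assumes the data of a single run arranged as time × voxels.

```python
import numpy as np


def dct_highpass(ts: np.ndarray, tr: float, cutoff_s: float = 70.0) -> np.ndarray:
    """Demean a run (time x voxels) and remove drifts slower than `cutoff_s`.

    Drifts are modelled with an orthonormal DCT-II basis, in the spirit of
    SPM's temporal filtering (not a reproduction of SPM's code).
    """
    n_scans = ts.shape[0]
    demeaned = ts - ts.mean(axis=0, keepdims=True)  # per-run mean subtraction
    # Number of cosine functions (incl. the constant) with period > cutoff.
    n_basis = int(np.floor(2.0 * n_scans * tr / cutoff_s + 1))
    if n_basis <= 1:
        return demeaned
    t = np.arange(n_scans)[:, None]
    k = np.arange(1, n_basis)[None, :]  # skip k = 0: the constant is already removed
    basis = np.sqrt(2.0 / n_scans) * np.cos(np.pi * (2 * t + 1) * k / (2 * n_scans))
    # Columns are orthonormal, so basis @ basis.T projects onto the drift space.
    return demeaned - basis @ (basis.T @ demeaned)


# Example: one 280-image run (TR = 1.4 s) with a slow drift and a 14 s oscillation.
tr = 1.4
t = np.arange(280)
drift = np.cos(np.pi * (2 * t + 1) / (2 * 280))  # slowest DCT component
fast = np.sin(2 * np.pi * t * tr / 14.0)         # one cycle per trial
filtered = dct_highpass(np.column_stack([drift + 5.0, fast]), tr)
```

In this example, the drift column (including its offset) is removed entirely, while the oscillation at the trial rate passes almost unchanged, because its 14 s period is well below the 70 s cut-off.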

Classification

Machine learning classification was performed on the functional data in the predefined ROIs using a MATLAB implementation of a multiclass support vector machine (SVM) with a linear kernel (constraint parameter C = 1). SVMs are well suited to decoding high-dimensional fMRI activity patterns (Mitchell et al. 2004). We used the default regularization parameter because the number of voxels was substantially higher than the number of trials, which makes the training data linearly separable without the need to optimize C (Mourão-Miranda et al. 2006).
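The argument for leaving C at its default can be illustrated with scikit-learn's `SVC`, used here only as a stand-in for the MATLAB implementation in the study (its one-vs-one multiclass scheme may differ from the one used there). With 120 training trials (five runs of 24) and 1000 voxel features, even randomly labelled noise is linearly separable, and a linear SVM with C = 1 fits the training trials perfectly. A perfect training fit says nothing about generalization, which is why performance is assessed on a held-out run.

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(42)
n_trials, n_voxels, n_classes = 120, 1000, 6  # five training runs x 24 trials

# Pure noise features with random (balanced) labels: there is no real signal.
X = rng.standard_normal((n_trials, n_voxels))
y = rng.permutation(np.repeat(np.arange(n_classes), n_trials // n_classes))

clf = SVC(kernel="linear", C=1.0)  # default-like constraint parameter
clf.fit(X, y)
train_accuracy = clf.score(X, y)
print(train_accuracy)
```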

We used a leave-one-run-out cross-validation scheme. For each subject, the five steps described below were repeated for each of the six runs. In each iteration, one run was held out from training and subsequently used to test the model.

(1) A general linear model (GLM) was fitted on the training data using one regressor per class plus six head movement parameters, providing a single t-map for each trial class;

(2) A mask was created for each predefined ROI, including the 1000 voxels with the highest t-values across the six t-maps (Table 1 shows the percentage of each ROI's voxels that this represents);

(3) From these voxels, the BOLD signal was averaged across the 4th, 5th and 6th images (4.9–7.7 s) after each trial onset and extracted for the training and testing data of both isolated and paired phoneme trials (Bleichner et al. 2014, 2015; Bruurmijn et al. 2017). These images were chosen because they correspond to the peak of the BOLD response (Fig. 2), and no articulator movements that could confound the results were made during them;

(4) The resulting values per voxel were used as features for training and testing the SVM;

(5) Accuracies were calculated by classifying the trials in the test run. The resulting accuracies were averaged across runs and subsequently across subjects.
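The five steps can be sketched as a leave-one-run-out loop. This is a simplified illustration under stated assumptions, not the study's MATLAB code. Features are assumed to be already extracted per trial (the mean of images 4–6). The GLM t-maps of step (1) are replaced by a hypothetical stand-in: a per-class one-sample t-statistic computed on the training trials. "Highest t-values across the six t-maps" is read as ranking voxels by their maximum t across classes, and scikit-learn's `SVC` stands in for the multiclass SVM.

```python
import numpy as np
from sklearn.svm import SVC


def loro_accuracy(X, y, runs, n_select=1000):
    """Leave-one-run-out decoding with voxel selection on the training runs only.

    X: (n_trials, n_voxels) features, e.g. mean BOLD over images 4-6 per trial.
    y: class label per trial.  runs: run index per trial.
    """
    fold_acc = []
    for test_run in np.unique(runs):
        train, test = runs != test_run, runs == test_run
        X_tr, y_tr = X[train], y[train]
        # Hypothetical stand-in for the GLM t-maps: a one-sample t-statistic per
        # class and voxel, computed from the training trials only.
        tmaps = np.array([
            X_tr[y_tr == c].mean(axis=0)
            / (X_tr[y_tr == c].std(axis=0, ddof=1) / np.sqrt(np.sum(y_tr == c)))
            for c in np.unique(y_tr)
        ])
        # Keep the voxels with the highest t-value in any of the class maps.
        keep = np.argsort(tmaps.max(axis=0))[::-1][:n_select]
        clf = SVC(kernel="linear", C=1.0).fit(X_tr[:, keep], y_tr)
        fold_acc.append(clf.score(X[test][:, keep], y[test]))
    return float(np.mean(fold_acc))  # mean over the held-out runs


# Synthetic example: 6 runs x 6 classes x 4 repetitions, 3000 voxels of which
# the first 500 respond to the task with a class-specific pattern.
rng = np.random.default_rng(1)
n_runs, n_classes, reps, n_vox = 6, 6, 4, 3000
y = np.tile(np.repeat(np.arange(n_classes), reps), n_runs)
runs = np.repeat(np.arange(n_runs), n_classes * reps)
patterns = np.zeros((n_classes, n_vox))
patterns[:, :500] = 1.0 + 0.8 * rng.standard_normal((n_classes, 500))
X = patterns[y] + rng.standard_normal((y.size, n_vox))
print(loro_accuracy(X, y, runs))
```

Voxel selection sits inside the loop so that the held-out run never influences which features are used; selecting voxels on all runs first would inflate the accuracy.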

Fig. 2 The group-mean (n = 15) BOLD signal (A) and classification accuracy (B) at each time point after stimulus onset. Three ROIs were used: the sensorimotor cortex (blue), the pars triangularis and pars opercularis (green) and the cerebral white matter (yellow). The cerebral white matter served as a control, since a low BOLD signal and classification accuracy were expected there. The shaded areas directly surrounding the curves represent 95% confidence intervals. The grey shaded area marks the period during which participants were pronouncing the phonemes. The red shaded area marks the time interval used for feature extraction (mean across images 4, 5 and 6). The baseline of the responses in panel A is set to the signal amplitude during the last image, as it is least affected by motion artefacts or BOLD signal changes.

This approach was applied to five classification schemes: (i) all phoneme classes, (ii) only single phoneme trials, (iii) only paired phoneme trials, (iv) training on single phonemes and testing on paired phonemes, and (v) training on paired phonemes and testing on single phonemes. For the single phoneme classification, the training and testing data included only single phoneme trials; similarly, the paired phoneme classification included only paired phoneme trials for both training and testing. Scheme (iv) assessed whether paired phonemes could be predicted from the activity of isolated phonemes: the SVM was trained on activity during single phoneme trials and used to classify paired phoneme trials. Scheme (v) performed the opposite analysis, with activity during paired phoneme trials used to train the SVM and activity during single phoneme trials used for testing. Additional analyses were performed to address possible confounding of the classification results by motion artefacts due to phoneme production (see Results section).

To establish a significance threshold (one-sided, α = 0.05) for the classification accuracy of each subject, a Monte Carlo simulation was performed with 1000 permutations of the trial-class labels (Modarres and Good 1995; Nichols and Holmes 2002; Combrisson and Jerbi 2015). Group-level results were obtained by averaging classification accuracies and confusion matrices across subjects, with t-tests used for statistical inference. Bonferroni correction for multiple comparisons was applied to account for fifteen comparisons: (1) sensorimotor cortex (bilaterally), (2) precentral gyrus (bilaterally), (3) postcentral gyrus (bilaterally), (4) pars triangularis and opercularis (bilaterally), (5) cerebral white matter (bilaterally), (6) sensorimotor cortex left hemisphere, (7) precentral gyrus left hemisphere, (8) postcentral gyrus left hemisphere, (9) pars triangularis and opercularis left hemisphere, (10) cerebral white matter left hemisphere, (11) sensorimotor cortex right hemisphere, (12) precentral gyrus right hemisphere, (13) postcentral gyrus right hemisphere, (14) pars triangularis and opercularis right hemisphere and (15) cerebral white matter right hemisphere. To assess whether there were significant differences between phoneme classes, a Friedman test was conducted, followed by post-hoc pairwise comparisons with p-values adjusted for multiple comparisons.
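The per-subject chance threshold can be approximated as follows. As a cheap simplification, the sketch permutes the true labels against a fixed set of predictions instead of retraining the SVM for each of the 1000 permutations as in the study, so it only approximates that null distribution. `permutation_threshold` is a hypothetical helper. With 144 balanced trials (six runs of 24) and six classes, the threshold lands close to the 22% reported in the Results, and the Bonferroni-corrected level for fifteen comparisons is 0.05/15 ≈ 0.0033.

```python
import numpy as np


def permutation_threshold(y_true, y_pred, n_perm=1000, alpha=0.05, seed=0):
    """One-sided (1 - alpha) quantile of accuracy under shuffled labels.

    Simplification: labels are permuted against fixed predictions rather than
    retraining the classifier for every permutation.
    """
    rng = np.random.default_rng(seed)
    null_acc = np.array([
        np.mean(rng.permutation(y_true) == y_pred) for _ in range(n_perm)
    ])
    return float(np.quantile(null_acc, 1.0 - alpha))


# 144 balanced trials (6 runs x 24 trials) over 6 classes; chance = 1/6.
y_true = np.repeat(np.arange(6), 24)
y_pred = np.random.default_rng(3).permutation(y_true)  # stand-in predictions
threshold = permutation_threshold(y_true, y_pred)
alpha_bonferroni = 0.05 / 15  # group-level tests over the 15 ROI comparisons
print(round(threshold, 3), round(alpha_bonferroni, 4))
```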

Univariate Results

To obtain univariate (voxel-wise) results for the single phoneme trials, a GLM was created using a design matrix including factors for each condition and each run. The resulting beta-coefficient volumes representing single phoneme trials were averaged across runs, yielding three volumes per subject. These volumes were mapped to the surface using an enclosing voxel algorithm and smoothed across the surface with a 12 mm Gaussian kernel. The resulting surface-based activity maps were used as input for second-level analyses with one-sample and paired-samples t-tests, comparing the single phoneme conditions against rest and against each other. Correction for multiple comparisons was done using Random Field Theory.
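The voxel-wise GLM can be sketched with ordinary least squares. The sketch assumes an SPM-like double-gamma hemodynamic response function, one stick regressor per condition onset and a single run. The study's design matrix, with its condition and run factors (and the six motion regressors used in the classification GLM), would add further columns. All function names are hypothetical, and the surface mapping, smoothing and Random Field Theory correction are not shown.

```python
import math

import numpy as np


def canonical_hrf(tr, length_s=32.0):
    """Double-gamma HRF (peak shape 6, undershoot shape 16, ratio 1/6), sum = 1."""
    t = np.arange(0.0, length_s, tr)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    h = peak - undershoot / 6.0
    return h / h.sum()


def design_matrix(onsets_by_condition, n_scans, tr):
    """One HRF-convolved stick regressor per condition, plus a constant column."""
    hrf = canonical_hrf(tr)
    columns = []
    for onsets in onsets_by_condition:
        sticks = np.zeros(n_scans)
        sticks[np.round(np.asarray(onsets) / tr).astype(int)] = 1.0
        columns.append(np.convolve(sticks, hrf)[:n_scans])
    columns.append(np.ones(n_scans))
    return np.column_stack(columns)


def glm_contrast(Y, X, contrast):
    """OLS betas and t-values of one contrast for Y (scans x voxels)."""
    beta, *_ = np.linalg.lstsq(X, Y, rcond=None)
    residuals = Y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = (residuals ** 2).sum(axis=0) / dof
    c = np.asarray(contrast, dtype=float)
    var_c = c @ np.linalg.pinv(X.T @ X) @ c
    return beta, (c @ beta) / np.sqrt(sigma2 * var_c)


# Example run: 28 trials every 14 s, cycling over 3 single-phoneme conditions.
tr, n_scans = 1.4, 280
onsets = [[14.0 * i for i in range(28) if i % 3 == c] for c in range(3)]
X = design_matrix(onsets, n_scans, tr)
# Voxel 0 responds to condition 1 only; voxel 1 does not respond at all.
true_beta = np.array([[10.0, 0.0], [0.0, 0.0], [0.0, 0.0], [100.0, 100.0]])
Y = X @ true_beta + 0.2 * np.random.default_rng(4).standard_normal((n_scans, 2))
beta, t_vs_rest = glm_contrast(Y, X, [1, 0, 0, 0])  # condition 1 vs. baseline
```

Averaging the per-run betas of each condition would then give the three per-subject volumes described above.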

Surface-based Searchlight

A multivariate searchlight analysis (Kriegeskorte et al. 2006) was used to generate surface-based maps (Chen et al. 2011) indicating the presence of local information driving the classification results. The volumetric functional data of each run were mapped to the cortical surface using FreeSurfer, with an enclosing voxel (‘nearest neighbor’) algorithm mapping voxel values to the vertices. Vertices were included in each searchlight multivariate pattern analysis (MVPA) as follows:

1) Every vertex was chosen in turn as the seed vertex.

2) All other vertices were sorted by Euclidean distance to the seed vertex on the inflated surface.

3) If multiple vertices were sampled from the same voxel, only the vertex closest to the seed vertex was kept.

For each seed vertex, an SVM was trained and tested on the activity in the 200 closest vertices (across the inflated surface) that were sampled from separate voxels. The SVM procedure was similar to the one described in the Classification section and resulted in a classification accuracy and confusion matrix for every vertex of the surface. For the group-level analysis, the single-subject results were registered to FreeSurfer’s left/right symmetrical templates (fsaverage-sym) (Greve et al. 2013) and averaged across subjects. To correct for multiple comparisons, family-wise error correction based on Random Field Theory was applied (p < .05).
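The vertex selection for one searchlight can be sketched as below. The sketch assumes that inflated-surface coordinates and a vertex-to-voxel lookup are available as arrays. Both are hypothetical inputs; in practice they would come from the FreeSurfer surface and the volume-to-surface mapping. The text does not state whether the seed itself counts among the 200 vertices; this sketch includes it.

```python
import numpy as np


def searchlight_vertices(seed, coords, vertex_voxel, k=200):
    """Return the k vertices nearest to `seed`, keeping one vertex per voxel.

    coords: (n_vertices, 3) inflated-surface coordinates.
    vertex_voxel: (n_vertices,) index of the voxel each vertex was sampled from.
    The seed itself (distance 0) is included.
    """
    dist = np.linalg.norm(coords - coords[seed], axis=1)
    order = np.argsort(dist, kind="stable")  # nearest vertices first
    # return_index gives the first occurrence of each voxel in `order`,
    # i.e. the vertex of that voxel that lies closest to the seed.
    _, first = np.unique(vertex_voxel[order], return_index=True)
    return order[np.sort(first)][:k]  # restore distance order, keep k nearest


# Toy example: 10 vertices on a line, two vertices per voxel.
coords = np.column_stack([np.arange(10.0), np.zeros(10), np.zeros(10)])
vertex_voxel = np.array([0, 0, 1, 1, 2, 2, 3, 3, 4, 4])
print(searchlight_vertices(4, coords, vertex_voxel, k=3))  # prints [4 3 6]
```

In the toy example, vertex 5 is as close to the seed as vertex 3 but is skipped, because it was sampled from the same voxel as the seed.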

Results

Single and Paired Phonemes can be Decoded Bilaterally from both the Sensorimotor Cortex and pars Triangularis and Opercularis

First, we assessed whether it was possible to discriminate between all 6 phoneme trial classes. The classification accuracy for the bilateral sensorimotor cortex was significantly above the 16.7% chance level (α = 0.05, corresponding to a threshold of 22%) for each participant. The mean accuracy across the 15 participants for the bilateral sensorimotor cortex was 43% (SD = 9%; min-max = 33–63%; one-sample t(14) = 13.1; p < .001; see Table 2; Fig. 3).

Fig. 3 Group mean (n = 15) classification accuracy when including all 6 classes for activity in the sensorimotor cortex (blue) and pars triangularis and pars opercularis (green). Boxes span the 25th to 75th percentiles; the red line inside each box represents the mean. LR = left and right hemispheres combined; L = left hemisphere; R = right hemisphere. *** p < .001, Bonferroni corrected for multiple comparisons

To investigate the decodability of the distinct classes, we visually inspected the mean confusion matrix across subjects (Fig. 4). The percentage of correctly classified trials per class ranged from 41 to 54%. Misclassifications occurred significantly more often among single phoneme trials and among paired phoneme trials than between single and paired phoneme trials (paired t(14) = 12.4; p < .001).
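One way to quantify within-type versus between-type confusions from a 6 × 6 confusion matrix is sketched below. The class ordering (three single phoneme classes followed by three paired classes) and the normalization by the total number of trials are assumptions, not necessarily how the reported test was computed. Note that each row has two within-type but three between-type off-diagonal cells. A classifier that confuses all classes equally would therefore produce more between-type errors, which is worth keeping in mind when comparing the two proportions.

```python
import numpy as np

# Assumed class order: 0-2 single phonemes, 3-5 paired phonemes.
SINGLE, PAIRED = [0, 1, 2], [3, 4, 5]


def within_between_errors(conf):
    """Fractions of all trials misclassified within vs. between trial types.

    conf: (6, 6) confusion counts, rows = true class, columns = predicted class.
    """
    conf = np.asarray(conf, dtype=float)
    same_type = np.zeros(conf.shape, dtype=bool)
    for group in (SINGLE, PAIRED):
        same_type[np.ix_(group, group)] = True
    off_diagonal = ~np.eye(conf.shape[0], dtype=bool)
    total = conf.sum()
    within = conf[same_type & off_diagonal].sum() / total
    between = conf[~same_type].sum() / total
    return within, between


# A classifier that confuses every pair of classes equally often:
uniform = np.ones((6, 6)) + 9 * np.eye(6)
print(within_between_errors(uniform))  # more "between" errors by construction
```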

The classification was repeated using only the precentral or postcentral gyrus. The accuracies for the precentral and postcentral gyrus were 45% and 42%, respectively (precentral gyrus: SD = 8.33%; min-max = 29–60%; one-sample t(14) = 12.95; p < .001; postcentral gyrus: SD = 8.97%; min-max = 29–60%; one-sample t(14) = 10.79; p < .001). However, no significant difference was observed between the gyri (paired t(14) = 1.64; p = .12; Table 2). In addition, we assessed the potential of classifying phonemes based on activity in PTPO. The mean accuracy for PTPO (Table 2; Fig. 3) was 32% (SD = 8%; min-max = 20–42%; one-sample t(14) = 7.8; p < .001), which was significantly lower than the accuracy obtained for the sensorimotor cortex (Bonferroni corrected paired t(14) = 9.8; p < .001).

Visual inspection of the confusion matrix shows that, among single phoneme trials, trials with /ə/ are classified more reliably (52%) than trials with /t/ and /p/ (41% and 46%, respectively). Among paired phoneme trials, /p/ /ə/ trials are classified more accurately (54%) than /p/ /t/ and /ə/ /t/ trials (47% and 42%, respectively) (Fig. 4). However, a Friedman test followed by correction for multiple comparisons showed that the only significant difference was between the conditions /t/ and /p/ /ə/ (mean rank difference = -3.99; adjusted p = .02).

Phoneme Information is Not Lateralized

To assess hemispheric preference for phoneme decoding, we calculated the classification accuracy for the left and right hemisphere separately. There was no significant difference between the left and right hemispheres for either the sensorimotor cortex (paired t(14) = 2.3; p = .0356, Bonferroni corrected; Table 2; Fig. 3) or PTPO (paired t(14) = 1.7; p = .1078, Bonferroni corrected).

Classification accuracy with both hemispheres was significantly higher than with the right hemisphere alone, but not significantly higher than with the left hemisphere alone (Table 2; Fig. 3; both vs. left hemisphere: paired t(14) = 1.1; p = .3001, Bonferroni corrected; both vs. right hemisphere: paired t(14) = 4.7; p < .001, Bonferroni corrected). Similarly, in PTPO, the difference between both hemispheres and the left hemisphere was not significant, while the difference between both hemispheres and the right hemisphere was significant (Table 2; Fig. 3; both vs. left hemisphere: paired t(14) = 3; p = .0091, Bonferroni corrected; both vs. right hemisphere: paired t(14) = 3.6; p = .003, Bonferroni corrected).

Functional Images Used are Free of Head Movement Artifacts Associated with Phoneme Production

To check whether the observed classification scores truly reflected neural representations rather than head motion artifacts introduced by articulator movements, we repeated the classification analysis. This time, instead of using the mean of each voxel across three images as features, we used the voxel values of each single image after stimulus onset. For the results to be unaffected, effects of head movement on classification scores should be confined to the first 3.5 s after trial onset, so that classification on images from 4.9 s onward (the 4th image after stimulus onset) is free of head motion artifacts.
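The time points quoted in this section are consistent with labeling each image by the midpoint of its acquisition window, counting the image acquired at trial onset as the 1st image. Both are assumptions, since the text does not define the convention. The sketch below reproduces the 3.5 s and 4.9–7.7 s values. It also shows how per-trial features could be taken from a single image (time-resolved decoding) or averaged over images 4–6. The function names are hypothetical.

```python
import numpy as np

TR_S = 1.4  # repetition time (s)


def image_midpoints(n_images, tr=TR_S):
    """Midpoint (s) of each image after trial onset; image 1 spans [0, tr)."""
    return (np.arange(1, n_images + 1) - 0.5) * tr


def epoch_features(bold, onset_scans, images):
    """Per-trial features from 1-based image numbers after each trial onset.

    bold: (n_scans, n_voxels); onset_scans: scan index of each trial onset.
    images=[4, 5, 6] averages the feature window; images=[k] gives the
    single-image features used for time-resolved classification.
    """
    idx = np.asarray(onset_scans)[:, None] + np.asarray(images)[None, :] - 1
    return bold[idx].mean(axis=1)  # (n_trials, n_voxels)


print(np.round(image_midpoints(6), 2))  # image 3 -> 3.5 s, images 4-6 -> 4.9-7.7 s
```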

The BOLD signals and classification accuracy at each time point are shown in Fig. 2A and B. In the absence of confounding signal changes caused by motion artifacts, classification accuracy over time since stimulus onset should follow the shape of the BOLD response. Motion artifacts may be expected in the images directly following the stimulus because of the mouth movements. This is acceptable as long as there is no indication that they affect the images from which the features were extracted (i.e. images 4, 5 and 6).

In the sensorimotor cortex and PTPO, classification accuracy as a function of image number after onset followed a BOLD-like shape, peaking together with the BOLD response at 4.9 s and decreasing thereafter until the next stimulus. In contrast to the BOLD responses, classification accuracy increased around two seconds after stimulus onset in all ROIs (sensorimotor cortex, PTPO and cerebral white matter). These time points coincide with the period in which participants were producing the phonemes (grey highlighted area in Fig. 2B), so this increase in classification accuracy is likely driven by motion artifacts. The reduction in BOLD signal relative to baseline during the first 2 images (Fig. 2A) may indicate that these artefacts arise through imperfect shimming, because the positions of the articulators during phoneme production differ from those at rest.

Variations in classification accuracy after 3.5 s resemble the BOLD response, suggesting that they are driven by cerebrovascular rather than artifactual signal changes. Hence, it is unlikely that phoneme classification during images 4–6 is related to motion.

Paired Phonemes can be Decoded by Training the Classifiers with Single Phonemes

To establish whether phoneme combinations can be classified based on single phoneme activity, an SVM was trained on activity during the single phoneme trials and used to classify paired phoneme trials. The group-mean confusion matrix is shown in Fig. 5A. Results were tested by estimating the accuracy of correctly predicting the absence of a single phoneme in a paired phoneme trial (chance level 33%). The group-mean accuracy was 53% (SD = 9%; min-max = 38–71%; one-sample t(14) = 15.6; p < .001) (see Table 2), with 11 of 15 subjects having a classification accuracy significantly above chance level (p < .05). Visual inspection of the group-mean confusion matrix for this scheme suggested the highest accuracy for detecting the second phoneme produced; however, this difference was not significant (paired t(14) = 0.24; p = .81). The reversed classification scheme, in which isolated phoneme trials were predicted from the activity of paired phoneme trials, also yielded significant results (group-mean accuracy = 51%; SD = 7%; min-max = 39–61%; one-sample t(14) = 20.1; p < .001) (see Table 2; Fig. 5B). Importantly, for both schemes, the accuracy of predicting the phonemes present in a condition was significantly higher than that of predicting the absent phonemes (paired t(14) = -10.33; p < .001 for training on single and testing on paired phonemes; paired t(14) = -9.69; p < .001 for training on paired and testing on single phonemes).

Fig. 5 Group-mean classification matrices when training on single phoneme trials and classifying paired phoneme trials (A and C) and when training on paired phoneme trials and classifying single phoneme trials (B and D). Panels A and B show classification results using fMRI data from the sensorimotor cortex. Panels C and D show results using simulated data, assuming linear addition of single phoneme activity in paired phoneme trials. Note that the matrix is structured so that the cells where the predicted/true single phoneme is absent from the true/predicted paired phoneme trial lie on the diagonal (bottom-left to upper-right).

Classification of Simulated Data that Assumes Linear Addition of Phoneme Activity is Similar to fMRI Data

To verify that the obtained classification results were consistent with paired phonemes being linear additions of single phonemes, we performed an additional analysis based on simulated data. Simulated data, meant to represent the extracted BOLD signal of the 3 individual phonemes, were generated by creating 3 fixed, normally distributed patterns of 1000 features. Independent normally distributed noise was added to the pattern of every individual trial. The signal-to-noise ratio was adjusted until the classification accuracy for the simulated isolated phoneme trials was similar to the accuracy obtained when classifying individual phonemes from the BOLD signal (chance level 33%). To simulate the combined phoneme trials, the patterns representing 2 of the isolated phonemes were linearly combined. The decodability of the linearly combined simulated data was assessed by training an SVM on simulated isolated phoneme trials and using it to classify simulated combined trials, and vice versa. Data were randomly generated one hundred times, and classification accuracies and confusion matrices were averaged across the hundred repetitions. The pattern of results was similar to that obtained with the real data (see Fig. 5C and D). Note that these results indicate consistency with linear addition of phoneme activity, but other underlying mechanisms might produce similar results.
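The simulation can be sketched as follows under stated assumptions. scikit-learn's `SVC` stands in for the study's SVM, the noise level is set by hand rather than tuned to match the BOLD single-phoneme accuracy, and 24 trials per class is a hypothetical choice. Because the scoring rule behind the 33%-chance accuracy is not spelled out here, the sketch reports a simpler quantity: the fraction of simulated paired trials for which the single-phoneme classifier predicts one of the two phonemes actually present (2/3 under random guessing). Only the single-to-paired direction is shown.

```python
import numpy as np
from sklearn.svm import SVC

PAIRS = [(1, 0), (2, 0), (1, 2)]  # /p/ /t/, /ə/ /t/, /p/ /ə/ with 0=/t/, 1=/p/, 2=/ə/


def simulate_present_rate(noise_sd, n_features=1000, n_per_class=24, seed=0):
    """Train on simulated single phonemes, test on linearly summed pairs.

    Returns the fraction of paired trials whose predicted single phoneme is
    one of the two phonemes present in the pair (2/3 under random guessing).
    """
    rng = np.random.default_rng(seed)
    patterns = rng.standard_normal((3, n_features))  # fixed phoneme patterns
    y_single = np.repeat(np.arange(3), n_per_class)
    X_single = patterns[y_single] + noise_sd * rng.standard_normal((y_single.size, n_features))
    y_pair = np.repeat(np.arange(3), n_per_class)
    # Paired trials: linear sum of the two component patterns plus fresh noise.
    X_pair = np.array([patterns[list(PAIRS[i])].sum(axis=0) for i in y_pair])
    X_pair += noise_sd * rng.standard_normal(X_pair.shape)
    pred = SVC(kernel="linear", C=1.0).fit(X_single, y_single).predict(X_pair)
    return float(np.mean([p in PAIRS[i] for p, i in zip(pred, y_pair)]))


# Average over 100 random data sets, as in the text (noise level chosen by hand).
rates = [simulate_present_rate(5.0, seed=s) for s in range(100)]
print(np.mean(rates))
```

Raising `noise_sd` drives the rate toward the 2/3 guessing level. This is the knob that would be tuned to match the single-phoneme accuracy observed in the BOLD data.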

Local Information to Decode Phonemes is Localized around the Central Sulcus

Univariate and searchlight SVM approaches were used to gain more insight into the spatial distribution underlying phoneme activation and the information used for classification. Univariate results show significant activity in the bilateral ventral sensorimotor cortex when comparing each single phoneme condition with rest (Fig. 6). However, none of the comparisons between single phoneme conditions yielded voxels with significantly different activity (not shown). The group-level searchlight results including all 6 classes show that the most discriminative information is located in the mouth area of the ventral sensorimotor cortex (Fig. 7A and B). To investigate this distribution in more detail, three ROIs were manually defined, covering the superior, medial and inferior parts of the ventral sensorimotor cortex. Visual inspection of the mean confusion matrices in these ROIs (Fig. 7C, D, E) indicated that significant accuracy was primarily driven by classification between single and paired phoneme trials.

Fig. 6 Results of the univariate analysis comparing activity with rest on an inflated surface for the /p/ phoneme (A), the /t/ phoneme (B) and the /ə/ phoneme (C). The blue line marks the border of the area included in the group-level analysis. Family-wise error correction for multiple comparisons based on Random Field Theory (p < .05)

Fig. 7 Significant group-level (n = 15) searchlight classification results including all classes, superimposed on the inflated surface template of the left (A) and right (B) hemisphere. The panels on the right show the mean confusion matrices for significant (p < .05) vertices in the superior (C), medial (D) and inferior (E) part of the sensorimotor cortex. The blue line marks the border of the area included in the group-level analysis. Family-wise error correction for multiple comparisons based on Random Field Theory (p < .05)

Furthermore, we repeated the searchlight procedure including only single phoneme trials. This analysis revealed far fewer significant vertices, but most were still located in the ventral sensorimotor cortex (Fig. 8A and B). To assess whether the searchlight results contained evidence that discriminability was based on differential engagement of the somatotopic representation of the main articulator for each single phoneme class, the same three ROIs (superior, medial and inferior) were used to calculate mean confusion matrices. According to this rationale, the lip area (superior ROI), for example, might accurately identify a /p/ phoneme while confusing the other phonemes. However, the mean confusion matrices did not vary substantially between portions of the ventral sensorimotor cortex, suggesting that classification between single phonemes is not driven by differential somatotopic activity (see Fig. 8C, D and E).

Fig. 8 Significant group-level (n = 15) searchlight classification results including only single phoneme trials, superimposed on the inflated surface template of the left (A) and right (B) hemisphere. The panels on the right show the mean confusion matrices for significant (p < .05) vertices in the superior (C), medial (D) and inferior (E) part of the sensorimotor cortex. The blue line marks the border of the area included in the group-level analysis. Family-wise error correction for multiple comparisons based on Random Field Theory (p < .05)

Discussion

In this study, we demonstrated that phonemes pronounced in isolation and in pairs can be classified based on BOLD activity in the sensorimotor cortex and PTPO, with the sensorimotor cortex achieving the highest accuracy (see Table 2; Fig. 3). Additionally, we were able to classify phonemes pronounced in pairs using support vector machines trained on isolated phonemes (see Table 2; Fig. 5A), indicating that the activity patterns of single phonemes remain recognizable when phonemes are pronounced in combination. Simulation results indicate that this is consistent with the activity of combined phonemes being a linear combination of the activity of individual phonemes. However, more detailed searchlight analyses did not reveal evidence that this was due to a straightforward somatotopic addition of the activity of individual articulators. Our results are relevant for creating a speech BCI that works by detecting the presence of individual phonemes in spoken words.

Our results for the sensorimotor cortex are in line with previous fMRI experiments investigating the feasibility of classifying articulator activity. Bleichner et al. used 7T fMRI to classify 4 different mouth movements with 80% accuracy (Bleichner et al. 2015), and Correia et al. achieved significant classification based on BOLD responses for voiced and whispered phonatory gestures involving the tongue, lips and velum (Correia et al. 2020). Results from ECoG measurements further confirm the sensorimotor cortex as a suitable area for decoding speech-related brain activity, including the classification of phonemes (Ramsey et al. 2018), vowels and consonants from consonant-vowel syllables (Livezey et al. 2019), letter sequences using code words (Metzger et al. 2022) and sentences (Moses et al. 2021). In our data, there was no significant difference between the classification accuracies of the precentral and postcentral gyrus. Previous studies have demonstrated that somatosensory representations are still present in amputees and in patients with limited or no motor output (Bruurmijn et al. 2017), suggesting that they may also be preserved after paralysis and thus provide a target for BCIs.

Importantly, the main novel finding of our study is that single phonemes could be detected when pronounced in pairs, using classifiers trained on single-phoneme activity. This finding establishes a proof of principle for detecting the building blocks of language production, suggesting that classifiers trained on single phonemes could be used to classify words. This is in line with previous ECoG studies that demonstrated the feasibility of discriminating phonemes within words (Mugler et al. 2014a, b). Note, however, that the paired phonemes in our study were pronounced consecutively with a 1 s interval, which is not representative of word pronunciation during natural language production. For example, Salari et al. demonstrated, using repeated vowel production, that neural signatures are influenced by previous speech movements spaced a second or less apart (Salari et al. 2019), and that training a classifier on neural patterns of a vowel pronounced in different contexts (in isolation or preceded by another vowel) performs better than training a separate classifier for each context (Salari et al. 2018). The influence of such interactions appears to be limited at the pace of phoneme production we chose, as our results are roughly similar to those obtained when classifying simulated data in which paired-phoneme trials were created by linear addition of the activity of single phonemes. A speech BCI based on the principles applied in this experiment could thus be feasible at least up to a rate of one phoneme per second, the pace tested here.
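Under the linear-addition assumption behind the simulated paired-phoneme data, the BOLD response to two phonemes pronounced 1 s apart is simply the superposition of two single-phoneme responses. The sketch below illustrates this with an SPM-style double-gamma haemodynamic response function; the HRF shape and parameters are assumptions chosen for illustration and are not taken from this study.

```python
import math

import numpy as np


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """SPM-style canonical HRF (unit time scale), evaluated at times t in seconds."""
    t = np.asarray(t, dtype=float)

    def gamma_pdf(x, k):
        out = np.zeros_like(x)
        pos = x > 0  # the response is zero before the event
        out[pos] = x[pos] ** (k - 1) * np.exp(-x[pos]) / math.gamma(k)
        return out

    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)


def event_response(t, onset):
    """Response to a brief event at `onset` (s) under a linear time-invariant model."""
    return double_gamma_hrf(np.asarray(t, dtype=float) - onset)


t = np.arange(0.0, 30.0, 0.1)  # 30 s sampled every 100 ms
single = event_response(t, 0.0)
# Paired trial: second phoneme 1 s after the first; linearity means plain superposition.
paired = event_response(t, 0.0) + event_response(t, 1.0)
```

The paired response has a single merged peak, slightly later and almost twice as high as the single response (`paired.max() / single.max()` is a little below 2), so at this spacing the two phonemes cannot be separated in time from the BOLD signal and classification must rely on the spatial pattern.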

Using a searchlight SVM, we found that phoneme classification based on local information was possible primarily in the ventral sensorimotor cortex, particularly close to the central sulcus (Fig. 6A and B). However, the confusion matrices of the significant vertices indicated that the classifiers were mostly differentiating between single and paired phonemes (Fig. 6C, D and E). It is therefore likely that significant local classification was largely driven by trials with paired phonemes yielding higher activity than trials with single phonemes, since more articulatory movement per trial should produce larger BOLD responses. To account for this, the searchlight analysis was repeated using single phoneme trials only (Fig. 7A and B). While this analysis revealed a few locations in the ventral sensorimotor cortex where significant classification based on local information was possible, these areas were far smaller in extent than when including all classes. In addition, the confusion matrices for different portions of the ventral sensorimotor cortex were highly similar, indicating that the different phonemes did not map onto distinct Penfield (somatotopic) representations through differential use of articulators such as the lips, tongue or larynx (panels C, D and E of the same figure). Also, the univariate comparisons between the single phoneme conditions did not reveal any significant differences. The absence of clearly localized patterns is in line with reports of more variable articulator representations than previously assumed (Carey et al. 2017). ECoG studies have also reported broadly distributed and overlapping combinations of electrodes for decoding phonemes and syllables (Ramsey et al. 2018; Bouchard et al. 2013; Conant et al. 2014). These results suggest that, for the purpose of a speech BCI, it would be beneficial to cover a more extensive part of the ventral sensorimotor cortex.
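The argument that searchlight classifiers mostly separated single from paired phonemes can be made quantitative by splitting a six-class confusion matrix into a between-group part (single vs. paired) and a within-group part. A minimal sketch, assuming the first three classes are the single phonemes and the last three the paired phonemes; the function name and class ordering are illustrative assumptions.

```python
import numpy as np


def group_vs_within_accuracy(conf, n_single=3):
    """Split decoding performance of a confusion matrix into two components.

    conf: (n, n) counts, rows = true class, columns = predicted class; the first
          `n_single` classes are single phonemes, the rest paired phonemes.
    Returns (overall accuracy, single-vs-paired accuracy, within-group accuracy).
    """
    conf = np.asarray(conf, dtype=float)
    is_single = np.arange(conf.shape[0]) < n_single
    total = conf.sum()
    overall = np.trace(conf) / total
    # A trial lands in the correct group if true and predicted class are both
    # single phonemes or both paired phonemes.
    same_group = is_single[:, None] == is_single[None, :]
    group_acc = conf[same_group].sum() / total
    # Among trials assigned to the correct group, how often is the exact class right?
    within_acc = np.trace(conf) / conf[same_group].sum()
    return overall, group_acc, within_acc
```

For a block-diagonal matrix such as `np.kron(np.eye(2), np.full((3, 3), 10.0))`, where single and paired trials are never confused but classes within each group are indistinguishable, overall accuracy is 1/3, twice the six-class chance level of 1/6, while within-group accuracy stays at the three-class chance level of 1/3.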

Although most BCIs have focused on neural signals originating from the sensorimotor cortex because of its somatotopic organization, other brain regions may be suitable for BCI purposes as well (Gallego et al. 2022). Broca’s area has been shown to play a role in speech production, ranging from the representation of articulatory programs to higher linguistic mechanisms (Papitto et al. 2020; Fedorenko and Blank 2020). However, it has received far less attention in speech decoding studies than the sensorimotor cortex, especially among studies using intracranial recordings. The classification accuracy from PTPO was significant but lower than for the sensorimotor cortex (Table 2; Fig. 3), suggesting that PTPO is sub-optimal for a BCI implant. These results align with those of previous studies in which no electrodes significantly decoded covertly produced vowels (Ikeda et al. 2014), no activity was recorded in Broca’s area during articulation of single words using ECoG (Flinker et al. 2015), and no speech information was found during attempted speech using microelectrode arrays (Willett et al. 2023). On the other hand, there is evidence that overtly and covertly produced vowels and consonants within phonological sequences can be decoded (Pei et al. 2011). Studies comparing neural representations of overt, covert and attempted speech have so far observed substantial overlap (Palmer et al. 2001; Shuster and Lemieux 2005; Zhang et al. 2020; Brumberg et al. 2016; Soroush et al. 2023), and classifiers trained on attempted speech have been successfully used to classify overt speech based on features acquired using intracranial recordings (Metzger et al. 2023; Willett et al. 2023). Additionally, other intracranial studies have shown that neural activity in Broca’s area predicts speech onset (Delfino et al. 2021; Castellucci et al. 2022; Rao et al. 2017).
This evidence is further supplemented by studies establishing the involvement of Broca’s area in higher-level language processes such as sequencing, syntax and lexical selection, among others (Bohland and Guenther 2006; Riecker et al. 2008; Conner et al. 2019; Matchin and Hickok 2020; Deldar et al. 2020). The task used in our study most likely did not sufficiently engage these functions.
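Whether an accuracy such as that of PTPO counts as significant depends on the number of test trials, not only on the theoretical chance level (Combrisson and Jerbi 2015). The sketch below computes the smallest accuracy whose binomial upper-tail probability under chance falls at or below alpha. It assumes independent trials and a chance level of 1/n_classes, and it is not necessarily the statistical test used in this study.

```python
from math import comb


def binomial_chance_threshold(n_trials, n_classes, alpha=0.05):
    """Smallest accuracy whose binomial upper-tail p-value under chance is <= alpha.

    Assumes independent trials and a theoretical chance level of 1 / n_classes.
    Returns None if even a perfect score is not significant (too few trials).
    """
    p = 1.0 / n_classes
    for k in range(n_trials + 1):
        # P(X >= k) for X ~ Binomial(n_trials, p)
        tail = sum(comb(n_trials, j) * p ** j * (1 - p) ** (n_trials - j)
                   for j in range(k, n_trials + 1))
        if tail <= alpha:
            return k / n_trials
    return None
```

For example, with 10 test trials and two classes the threshold is 0.9, far above the nominal 50% chance level; the required margin above chance shrinks as the number of trials grows.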

Searchlight results using all phoneme classes revealed significant classification in the temporal lobe. As participants pronounced the phonemes overtly, this could have resulted from responses in the primary auditory cortices. Whether this was in fact the case is unclear, as subjects wore hearing protection and were in a noisy environment. Nevertheless, auditory stimulation could have occurred through bone conduction. If classification from the auditory cortex in our subjects was based on acoustic stimulation, it would not be feasible in locked-in patients, given that they are expected to produce attempted speech with limited or no auditory output. However, some studies observed activation of auditory regions during covert, imagined and attempted speech (Metzger et al. 2023; Zhang et al. 2020; Soroush et al. 2023; Martin et al. 2016), although whether such activity can be used to distinguish words needs further exploration. In addition, as our primary focus was on the sensorimotor cortex, we defined the field of view to maximize voxel coverage within this area. Consequently, not all subjects had complete coverage of the auditory regions in the temporal lobe.

Our results did not show a difference in phoneme classification between the left and right hemisphere in either the sensorimotor cortex or PTPO (Table 2; Fig. 3), suggesting that both hemispheres are suitable locations for a speech-BCI implant. Studies on motor planning of speech production have observed left lateralization in the inferior frontal gyrus and ventral premotor cortex (Riecker et al. 2005; Peeva et al. 2010; Ghosh et al. 2008; Kearney and Guenther 2019). In contrast, our results do not show a hemispheric preference for classification based on activity in PTPO, which might be explained by the aforementioned lack of syntactic and lexical processing in the task we used. The results for the sensorimotor cortex, on the other hand, are in line with studies that found bilateral activation in this region during speech-related movements (Ramsey et al. 2018).
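A hemispheric comparison of this kind is a paired, within-subject contrast of classification accuracies. One common non-parametric option is a sign-flip permutation test (see Nichols and Holmes 2002 for permutation tests in neuroimaging); the sketch below is a hypothetical illustration of that approach, not the test reported in Table 2.

```python
import numpy as np


def paired_sign_flip_test(acc_a, acc_b, n_perm=10000, seed=0):
    """Two-sided sign-flip permutation test on per-subject accuracy differences.

    Under H0 (no difference between, e.g., left and right hemisphere) each
    subject's difference is equally likely to be positive or negative, so the
    null distribution is built by randomly flipping the signs of the differences.
    Returns (mean difference, p-value).
    """
    diffs = np.asarray(acc_a, dtype=float) - np.asarray(acc_b, dtype=float)
    observed = abs(diffs.mean())
    rng = np.random.default_rng(seed)
    signs = rng.choice([-1.0, 1.0], size=(n_perm, diffs.size))
    null = np.abs((signs * diffs).mean(axis=1))
    # Adding 1 to numerator and denominator counts the observed labelling itself.
    p_value = (np.sum(null >= observed) + 1) / (n_perm + 1)
    return diffs.mean(), p_value
```

Usage with one accuracy per participant and hemisphere, e.g. 15 values each: `mean_diff, p = paired_sign_flip_test(acc_left, acc_right)`, where `acc_left` and `acc_right` are hypothetical arrays.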

Investigating articulator movements with fMRI comes with the challenge of motion artifacts. Displacement of the articulators affects functional images acquired during phoneme production, thereby confounding the measured BOLD responses. To account for this, we only included features from functional images acquired after phoneme production, which were shown to be unaffected by these artifacts (Fig. 2). Unfortunately, such speech-related artifacts are likely to limit the interpretability of BOLD signals acquired during continuous speech, constraining fMRI research on overt language production.
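Restricting features to post-production images amounts to selecting, for every trial, only the volumes whose acquisition starts after articulation has ended. A minimal sketch follows; the repetition time, the assumed end of articulation relative to trial onset and the window length are hypothetical parameters, not the values used in this study.

```python
import numpy as np


def post_production_volumes(onsets_s, tr_s, n_vols, speech_end_s, window_s):
    """Return, per trial, indices of volumes acquired after articulation ends.

    onsets_s:     trial onsets in seconds from the start of the run.
    tr_s:         repetition time in seconds.
    n_vols:       number of volumes in the run.
    speech_end_s: assumed time after onset by which articulation is over.
    window_s:     length of the post-production window to keep (seconds).
    """
    acq_times = np.arange(n_vols) * tr_s  # acquisition start time of each volume
    selected = []
    for onset in onsets_s:
        start = onset + speech_end_s
        # Keep volumes whose acquisition starts after articulation has finished.
        keep = (acq_times >= start) & (acq_times < start + window_s)
        selected.append(np.flatnonzero(keep))
    return selected
```

The selected volume indices can then be used to extract voxel or vertex features per trial, so that images distorted by articulator displacement never enter the classifier.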

A limitation of the current experimental design is that it is based on overt speech production, while the target population for a speech BCI is in a locked-in state and consequently unable to produce overt speech. However, intracranial electrodes (ECoG grids and microelectrode arrays) over the sensorimotor cortex have proven able to decode attempted hand movements (Pandarinath et al. 2017; Willett et al. 2021; Vansteensel et al. 2016; Hochberg et al. 2012). Moreover, sensorimotor activity patterns of actual and attempted hand movements are correlated (Bruurmijn et al. 2017). It is plausible that the same applies to activity patterns in the sensorimotor cortex during articulator movements. Nevertheless, a necessary next step is to assess whether the current findings replicate in locked-in patients.

While fMRI at 3T has repeatedly been shown to provide insightful information on speech dynamics (Correia et al. 2020; Grabski et al. 2012), high-field fMRI allows measurements at increased spatial resolution while maintaining adequate signal-to-noise ratios (Formisano and Kriegeskorte 2012). It is therefore expected that fMRI at 7T reveals details in activity patterns that are not accessible at 3T (Formisano and Kriegeskorte 2012; Chaimow et al. 2018), despite the fact that 7T is more susceptible to distortions and artifacts. However, the current fMRI design is not fully representative of an actual BCI, which would most likely be based on electrophysiological measurements. While 7T fMRI has substantially contributed to findings regarding brain activity related to speech production, and has been shown to correlate with ECoG in the sensorimotor cortex (Siero et al. 2013, 2014), the characteristics of hemodynamic responses inherently limit its temporal resolution. We were therefore only able to access spatial features of brain activity related to phoneme production, effectively ignoring variations in brain activity during the complex sequences of articulator movements. Such temporal features could, of course, be exploited by a BCI based on electrophysiological measurements. In addition, the need for a slow event-related fMRI design limited the total number of trials available to train the classifiers. This limitation would not apply to a BCI based on electrophysiology, which would allow a far greater number of training trials and thus potentially better performance.

In conclusion, we showed that it is possible to decode individual and paired phonemes pronounced overtly from the BOLD signal in the sensorimotor cortex. Notably, by demonstrating that paired phonemes can be classified by classifiers trained on single phonemes, we provided a proof of concept for a BCI that detects the phonemes present in speech. Future research is needed to assess the detection of phonemes in spoken words and to further explore the feasibility of a phoneme-based BCI using invasive electrophysiology.

Data Availability

Raw MRI data will be made available upon request to the authors. MRI data are considered personal data under the General Data Protection Regulation (GDPR) and can only be shared based on, and subject to, the policies of the Royal Netherlands Academy of Arts and Sciences (KNAW). Considering the requirements imposed by law and the sensitive nature of personal data, any requests will be addressed on a case-by-case basis, subject to a data usage agreement.

References

Andersson JLR, Skare S, Ashburner J (2003) How to correct susceptibility distortions in spin-echo echo-planar images: application to diffusion tensor imaging. NeuroImage 20(2):870–888. https://doi.org/10.1016/S1053-8119(03)00336-7

Basilakos A, Smith KG, Fillmore P, Fridriksson J, Fedorenko E (2018) Functional characterization of the Human Speech Articulation Network. Cereb Cortex 28(5):1816–1830. https://doi.org/10.1093/cercor/bhx100

Blakely T, Miller KJ, Rao RPN, Holmes MD, Ojemann JG (2008) Localization and classification of phonemes using high spatial resolution electrocorticography (ECoG) grids. In: Proceedings of the 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS’08 - Personalized Healthcare through Technology, pp 4964–4967. https://doi.org/10.1109/iembs.2008.4650328

Bleichner MG, Jansma JM, Sellmeijer J, Raemaekers M, Ramsey NF (2014) Give me a sign: Decoding complex coordinated hand movements using high-field fMRI. Brain Topogr 27(2):248–257. https://doi.org/10.1007/s10548-013-0322-x

Bleichner MG, Jansma JM, Salari E, Freudenburg ZV, Raemaekers M, Ramsey NF (2015) Classification of mouth movements using 7 T fMRI. J Neural Eng 12(6):066026. https://doi.org/10.1088/1741-2560/12/6/066026

Bleichner MG, Freudenburg ZV, Jansma JM, Aarnoutse EJ, Vansteensel MJ, Ramsey NF (2016) Give me a sign: decoding four complex hand gestures based on high-density ECoG. Brain Struct Function 221(1):203–216. https://doi.org/10.1007/s00429-014-0902-x

Bohland JW, Guenther FH (2006) An fMRI investigation of syllable sequence production. NeuroImage 32(2):821–841. https://doi.org/10.1016/j.neuroimage.2006.04.173

Bouchard KE, Mesgarani N, Johnson K, Chang EF (2013) Functional organization of human sensorimotor cortex for speech articulation. Nature 495(7441):327–332. https://doi.org/10.1038/nature11911

Branco MP, Freudenburg ZV, Aarnoutse EJ, Bleichner MG, Vansteensel MJ, Ramsey NF (2017) Decoding hand gestures from primary somatosensory cortex using high-density ECoG. NeuroImage 147:130–142. https://doi.org/10.1016/j.neuroimage.2016.12.004

Brown S, Laird AR, Pfordresher PQ, Thelen SM, Turkeltaub P, Liotti M (2009) The somatotopy of speech: Phonation and articulation in the human motor cortex. Brain Cogn 70(1):31–41. https://doi.org/10.1016/j.bandc.2008.12.006

Brumberg JS, Krusienski DJ, Chakrabarti S et al (2016) Spatio-temporal progression of cortical activity related to continuous overt and covert speech production in a reading task. PLoS ONE 11(11):e0166872. https://doi.org/10.1371/journal.pone.0166872

Bruurmijn MLCM, Pereboom IPL, Vansteensel MJ, Raemaekers MAH, Ramsey NF (2017) Preservation of hand movement representation in the sensorimotor areas of amputees. Brain 140(12):3166–3178. https://doi.org/10.1093/brain/awx274

Carey D, Krishnan S, Callaghan MF, Sereno MI, Dick F (2017) Functional and quantitative MRI mapping of somatomotor representations of human supralaryngeal vocal tract. Cereb Cortex 27(1):265–278. https://doi.org/10.1093/cercor/bhw393

Castellucci GA, Kovach CK, Howard MA, Greenlee JDW, Long MA (2022) A speech planning network for interactive language use. Nature 602(7895):117–122. https://doi.org/10.1038/s41586-021-04270-z

Chaimow D, Uğurbil K, Shmuel A (2018) Optimization of functional MRI for detection, decoding and high-resolution imaging of the response patterns of cortical columns. NeuroImage 164:67–99. https://doi.org/10.1016/j.neuroimage.2017.04.011

Chen Y, Namburi P, Elliott LT et al (2011) Cortical surface-based searchlight decoding. NeuroImage 56(2):582–592. https://doi.org/10.1016/j.neuroimage.2010.07.035

Cogan GB, Thesen T, Carlson C, Doyle W, Devinsky O, Pesaran B (2014) Sensory-motor transformations for speech occur bilaterally. Nature 507(7490):94–98. https://doi.org/10.1038/nature12935

Combrisson E, Jerbi K (2015) Exceeding chance level by chance: the caveat of theoretical chance levels in brain signal classification and statistical assessment of decoding accuracy. J Neurosci Methods 250. https://doi.org/10.1016/j.jneumeth.2015.01.010

Conant D, Bouchard KE, Chang EF (2014) Speech map in the human ventral sensory-motor cortex. Curr Opin Neurobiol 24:63–67. https://doi.org/10.1016/j.conb.2013.08.015

Conner CR, Kadipasaoglu CM, Shouval HZ, Hickok G, Tandon N (2019) Network dynamics of Broca’s area during word selection. PLoS ONE 14(12):e0225756. https://doi.org/10.1371/journal.pone.0225756

Correia JM, Caballero-Gaudes C, Guediche S, Carreiras M (2020) Phonatory and articulatory representations of speech production in cortical and subcortical fMRI responses. Sci Rep 10(1):1–14. https://doi.org/10.1038/s41598-020-61435-y

Deldar Z, Gevers-Montoro C et al (2020) The interaction between language and working memory: a systematic review of fMRI studies in the past two decades. AIMS Neurosci 8(1):1–32. https://doi.org/10.3934/NEUROSCIENCE.2021001

Delfino E, Pastore A, Zucchini E et al (2021) Prediction of Speech Onset by Micro-electrocorticography of the human brain. Int J Neural Syst 31(7):2150025. https://doi.org/10.1142/S0129065721500258

Fedorenko E, Blank IA (2020) Broca’s area is not a Natural Kind. Trends Cogn Sci 24(4):270–284. https://doi.org/10.1016/j.tics.2020.01.001

Fischl B (2012) FreeSurfer. NeuroImage 62(2):774–781. https://doi.org/10.1016/j.neuroimage.2012.01.021

Flinker A, Korzeniewska A, Shestyuk AY et al (2015) Redefining the role of Broca’s area in speech. Proc Natl Acad Sci USA 112(9):2871–2875. https://doi.org/10.1073/pnas.1414491112

Formisano E, Kriegeskorte N (2012) Seeing patterns through the hemodynamic veil - the future of pattern-information fMRI. NeuroImage 62(2):1249–1256. https://doi.org/10.1016/j.neuroimage.2012.02.078

Formisano E, De Martino F, Bonte M, Goebel R (2008) Who is saying what? Brain-based decoding of human voice and speech. Science 322(5903):970–973. https://doi.org/10.1126/science.1164318

Gallego JA, Makin TR, McDougle SD (2022) Going beyond primary motor cortex to improve brain–computer interfaces. Trends Neurosci 45(3):176–183. https://doi.org/10.1016/j.tins.2021.12.006

Ghosh SS, Tourville JA, Guenther FH (2008) A neuroimaging study of premotor lateralization and cerebellar involvement in the production of phonemes and syllables. J Speech Lang Hear Res 51(5):1183–1203. https://doi.org/10.1044/1092-4388(2008/07-0119)

Grabski K, Lamalle L, Vilain C et al (2012) Functional MRI assessment of orofacial articulators: neural correlates of lip, jaw, larynx, and tongue movements. Hum Brain Mapp 33(10):2306–2321. https://doi.org/10.1002/hbm.21363

Greve DN, Van der Haegen L, Cai Q et al (2013) A surface-based analysis of Language lateralization and cortical asymmetry. J Cogn Neurosci 25(9):1477–1492. https://doi.org/10.1162/jocn_a_00405

Grootswagers T, Dijkstra K, ten Bosch L, Brandmeyer A, Sadakata M (2013) Word identification using phonetic features: towards a method to support multivariate fMRI speech decoding. In: Proceedings of the Annual Conference of the International Speech Communication Association, INTERSPEECH, pp 3201–3205. https://doi.org/10.21437/interspeech.2013-710

Guan C, Aflalo T, Zhang CY et al (2022) Stability of motor representations after paralysis. eLife 11:e74478. https://doi.org/10.7554/eLife.74478

Hochberg LR, Bacher D, Jarosiewicz B et al (2012) Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 485(7398):372–375. https://doi.org/10.1038/nature11076

Hotz-Boendermaker S, Funk M, Summers P et al (2008) Preservation of motor programs in paraplegics as demonstrated by attempted and imagined foot movements. NeuroImage 39(1):383–394. https://doi.org/10.1016/j.neuroimage.2007.07.065

Ikeda S, Shibata T, Nakano N et al (2014) Neural decoding of single vowels during covert articulation using electrocorticography. Front Hum Neurosci 8:125. https://doi.org/10.3389/fnhum.2014.00125

Jenkinson M, Beckmann CF, Behrens TEJ, Woolrich MW, Smith SM (2012) FSL. NeuroImage 62(2):782–790. https://doi.org/10.1016/j.neuroimage.2011.09.015

Kearney E, Guenther FH (2019) Articulating: the neural mechanisms of speech production. Lang Cognition Neurosci 34(9):1214–1229. https://doi.org/10.1080/23273798.2019.1589541

Kriegeskorte N, Goebel R, Bandettini P (2006) Information-based functional brain mapping. Proc Natl Acad Sci USA 103(10):3863–3868. https://doi.org/10.1073/pnas.0600244103

Livezey JA, Bouchard KE, Chang EF (2019) Deep learning as a tool for neural data analysis: speech classification and cross-frequency coupling in human sensorimotor cortex. PLoS Comput Biol 15(9):e1007091. https://doi.org/10.1371/journal.pcbi.1007091

Marques JP, Kober T, Krueger G, van der Zwaag W, Van de Moortele PF, Gruetter R (2010) MP2RAGE, a self bias-field corrected sequence for improved segmentation and T1-mapping at high field. NeuroImage 49(2):1271–1281. https://doi.org/10.1016/j.neuroimage.2009.10.002

Martin S, Brunner P, Iturrate I et al (2016) Word pair classification during imagined speech using direct brain recordings. Sci Rep 6:25803. https://doi.org/10.1038/srep25803

Matchin W, Hickok G (2020) The Cortical Organization of Syntax. Cereb Cortex 30(3):1481–1498. https://doi.org/10.1093/cercor/bhz180

Metzger SL, Liu JR, Moses DA et al (2022) Generalizable spelling using a speech neuroprosthesis in an individual with severe limb and vocal paralysis. Nat Commun 13(1). https://doi.org/10.1038/s41467-022-33611-3

Metzger SL, Littlejohn KT, Silva AB et al (2023) A high-performance neuroprosthesis for speech decoding and avatar control. Nature 620(7976):1037–1046. https://doi.org/10.1038/s41586-023-06443-4

Mitchell TM, Hutchinson R, Niculescu RS et al (2004) Learning to decode cognitive states from brain images. Mach Learn 57(1):145–175. https://doi.org/10.1023/B:MACH.0000035475.85309.1b

Modarres R, Good P (1995) Permutation tests: a practical guide to Resampling methods for Testing hypotheses. J Am Stat Assoc 90(429). https://doi.org/10.2307/2291167

Moses DA, Metzger SL, Liu JR et al (2021) Neuroprosthesis for Decoding Speech in a paralyzed person with Anarthria. N Engl J Med 385(3):217–227. https://doi.org/10.1056/nejmoa2027540

Mourão-Miranda J, Reynaud E, McGlone F, Calvert G, Brammer M (2006) The impact of temporal compression and space selection on SVM analysis of single-subject and multi-subject fMRI data. NeuroImage 33(4):1055–1065. https://doi.org/10.1016/j.neuroimage.2006.08.016

Mugler EM, Patton JL, Flint RD et al (2014a) Direct classification of all American English phonemes using signals from functional speech motor cortex. J Neural Eng 11(3):035015. https://doi.org/10.1088/1741-2560/11/3/035015

Mugler EM, Goldrick M, Slutzky MW (2014b) Cortical encoding of phonemic context during word production. In: 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC 2014, pp 6790–6793. https://doi.org/10.1109/EMBC.2014.6945187

Nichols TE, Holmes AP (2002) Nonparametric permutation tests for functional neuroimaging: a primer with examples. Hum Brain Mapp 15(1). https://doi.org/10.1002/hbm.1058

Nuyujukian P, Albites Sanabria J, Saab J et al (2018) Cortical control of a tablet computer by people with paralysis. PLoS ONE 13(11):e0204566. https://doi.org/10.1371/journal.pone.0204566

Otaka Y, Osu R, Kawato M, Liu M, Murata S, Kamitani Y (2008) Decoding syllables from human fMRI activity. In: Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol 4985, pp 979–986. https://doi.org/10.1007/978-3-540-69162-4_102

Palmer ED, Rosen HJ, Ojemann JG, Buckner RL, Kelley WM, Petersen SE (2001) An event-related fMRI study of overt and covert word stem completion. NeuroImage 14(1 Pt 1):182–193. https://doi.org/10.1006/nimg.2001.0779

Pandarinath C, Nuyujukian P, Blabe CH et al (2017) High performance communication by people with paralysis using an intracortical brain-computer interface. eLife 6:e18554. https://doi.org/10.7554/eLife.18554

Papitto G, Friederici AD, Zaccarella E (2020) The topographical organization of motor processing: an ALE meta-analysis on six action domains and the relevance of Broca’s region. NeuroImage 206:116321. https://doi.org/10.1016/j.neuroimage.2019.116321

Peeva MG, Guenther FH, Tourville JA et al (2010) Distinct representations of phonemes, syllables, and supra-syllabic sequences in the speech production network. NeuroImage 50(2):626–638. https://doi.org/10.1016/j.neuroimage.2009.12.065

Pei X, Barbour DL, Leuthardt EC, Schalk G (2011) Decoding vowels and consonants in spoken and imagined words using electrocorticographic signals in humans. J Neural Eng 8:046028. https://doi.org/10.1088/1741-2560/8/4/046028

Pulvermüller F, Huss M, Kherif F, Del Prado Martin FM, Hauk O, Shtyrov Y (2006) Motor cortex maps articulatory features of speech sounds. Proc Natl Acad Sci USA 103(20):7865–7870. https://doi.org/10.1073/pnas.0509989103

Ramsey NF, Salari E, Aarnoutse EJ, Vansteensel MJ, Bleichner MG, Freudenburg ZV (2018) Decoding spoken phonemes from sensorimotor cortex with high-density ECoG grids. NeuroImage 180:301–311. https://doi.org/10.1016/j.neuroimage.2017.10.011

Rao VR, Leonard MK, Kleen JK, Lucas BA, Mirro EA, Chang EF (2017) Chronic ambulatory electrocorticography from human speech cortex. NeuroImage 153:273–282. https://doi.org/10.1016/j.neuroimage.2017.04.008

Riecker A, Mathiak K, Wildgruber D et al (2005) fMRI reveals two distinct cerebral networks subserving speech motor control. Neurology 64(4):700–706. https://doi.org/10.1212/01.WNL.0000152156.90779.89

Riecker A, Brendel B, Ziegler W, Erb M, Ackermann H (2008) The influence of syllable onset complexity and syllable frequency on speech motor control. Brain Lang 107(2):102–113. https://doi.org/10.1016/j.bandl.2008.01.008

Salari E, Freudenburg ZV, Vansteensel MJ, Ramsey NF (2018) The influence of prior pronunciations on sensorimotor cortex activity patterns during vowel production. J Neural Eng 15(6):066025. https://doi.org/10.1088/1741-2552/aae329

Salari E, Freudenburg ZV, Vansteensel MJ, Ramsey NF (2019) Repeated vowel production affects features of neural activity in Sensorimotor Cortex. Brain Topogr 32(1):97–110. https://doi.org/10.1007/s10548-018-0673-4

Shuster LI, Lemieux SK (2005) An fMRI investigation of covertly and overtly produced mono- and multisyllabic words. Brain Lang 93(1):20–31. https://doi.org/10.1016/j.bandl.2004.07.007

Siero JCW, Hermes D, Hoogduin H, Luijten PR, Petridou N, Ramsey NF (2013) BOLD consistently matches electrophysiology in human sensorimotor cortex at increasing movement rates: a combined 7T fMRI and ECoG study on neurovascular coupling. J Cereb Blood Flow Metab 33(9):1448–1456. https://doi.org/10.1038/jcbfm.2013.97

Siero JCW, Hermes D, Hoogduin H, Luijten PR, Ramsey NF, Petridou N (2014) BOLD matches neuronal activity at the mm scale: a combined 7T fMRI and ECoG study in human sensorimotor cortex. NeuroImage 101:177–184. https://doi.org/10.1016/j.neuroimage.2014.07.002

Soroush PZ, Herff C, Ries SK, Shih JJ, Schultz T, Krusienski DJ (2023) The nested hierarchy of overt, mouthed, and imagined speech activity evident in intracranial recordings. NeuroImage 269:119913. https://doi.org/10.1016/j.neuroimage.2023.119913

Vansteensel MJ, Pels EGM, Bleichner MG et al (2016) Fully implanted brain–computer interface in a Locked-In patient with ALS. N Engl J Med 375(21):2060–2066. https://doi.org/10.1056/nejmoa1608085

Willett FR, Avansino DT, Hochberg LR, Henderson JM, Shenoy KV (2021) High-performance brain-to-text communication via handwriting. Nature 593(7858):249–254. https://doi.org/10.1038/s41586-021-03506-2

Willett FR, Kunz EM, Fan C et al (2023) A high-performance speech neuroprosthesis. Nature 620(7976):1031–1036. https://doi.org/10.1038/s41586-023-06377-x

Wilson GH, Stavisky SD, Willett FR et al (2020) Decoding spoken English from intracortical electrode arrays in dorsal precentral gyrus. J Neural Eng 17(6):066007. https://doi.org/10.1088/1741-2552/abbfef

World Medical Association (2013) World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. JAMA 310(20):2191–2194. https://doi.org/10.1001/jama.2013.281053

Zhang W, Liu Y, Wang X, Tian X (2020) The dynamic and task-dependent representational transformation between the motor and sensory systems during speech production. Cogn Neurosci 11(4):194–204. https://doi.org/10.1080/17588928.2020.1792868

Funding

This work was funded by the Dutch Science Foundation SGW-406-18-GO-086.

Author information

Contributions

Maria Araújo Vitória performed the experiments and analysed the data, assisted by Mathijs Raemaekers. Maria Araújo Vitória wrote the manuscript with input from all authors. All authors provided critical feedback to the discussion and interpretation of the results. Mathijs Raemaekers supervised the project.

Ethics declarations

Conflict of Interest

The authors have no conflict of interest to declare.

Additional information

Communicated by Edmund Lalor.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vitória, M.A., Fernandes, F.G., van den Boom, M. et al. Decoding Single and Paired Phonemes Using 7T Functional MRI. Brain Topogr (2024). https://doi.org/10.1007/s10548-024-01034-6

DOI: https://doi.org/10.1007/s10548-024-01034-6