Abstract

Cross-modal learning requires the use of information from different sensory modalities. This study investigated how the prior visual experience of late blind individuals could modulate neural processes associated with learning of sound localization. Learning was realized through standardized training in sound localization, and experience was investigated by comparing brain activations elicited by a sound localization task in individuals with (late blind, LB) and without (early blind, EB) prior visual experience. After the training, EB showed decreased activation in the precuneus, which was functionally connected to a limbic-multisensory network. In contrast, LB showed increased activation of the precuneus. A subgroup of LB participants who demonstrated higher visuospatial working memory capabilities (LB-HVM) exhibited an enhanced precuneus-lingual gyrus network. This differential connectivity suggests that visuospatial working memory, developed through prior visual experience, enhanced learning of sound localization in LB-HVM. LB-HVM may have engaged active visuospatial navigation processes, whereas EB may have relied on the retrieval of previously bound information from long-term memory. The precuneus appears to play a crucial role in learning of sound localization regardless of prior visual experience. Prior visual experience, however, could enhance cross-modal learning by extending binding to the integration of unprocessed information, mediated by the cognitive functions that these experiences develop.

Introduction

Daily life requires the continuous integration of information obtained through multiple sensory modalities, which in turn depends on cross-modal learning and the use of information from various sensory modalities (Spence 2011). One example of cross-modal learning is visuoauditory spatial learning. This type of learning occurs when people use sensory-specific cues (i.e., visual and auditory) to infer multisensory representations of intrinsic properties such as location (Yamashita et al. 2015). For example, the contour of an approaching train appears larger as the sound of its whistle becomes louder, and both cues indicate that the train is arriving. As a result of visuoauditory spatial learning, individuals waiting inside the lounge can tell that a train is arriving simply by hearing its engine. Like other types of learning, cross-modal learning can be modulated by the experience that individuals have gained with the modalities involved. For example, individuals who have never heard the whistle would not recognize the sound but could still associate a louder whistle with the arrival of the train.

Cross-modal learning is mediated by several neural networks that specialize in sensory association and memory (Calvert 2001). These networks consist of polymodal association regions, such as the medial parietal cortices, prefrontal cortices, and superior temporal gyrus/sulcus (Watson et al. 2014), and memory-related regions, such as the hippocampus and parahippocampus (Tanabe et al. 2005). The specific network engaged largely depends on the sensory modalities involved and on an individual’s familiarity with the sensory information being processed (Tanabe et al. 2005). Fuster et al. (2000) proposed that cross-modal learning involves at least three steps: activation of the cross-modal network during long-term memory formation, sustained activation of that association during working memory, and reactivation of the network when one of the associates is presented. Activity in the superior temporal sulcus was found to increase during the initial association process but to decrease as learning proceeded (Tanabe et al. 2005), whereas prefrontal activity related to the integration of visual and auditory stimuli was sustained throughout the learning process (Fuster et al. 2000). This study investigated how the prior visual experience of late blind (LB) individuals modulates cross-modal learning. We focused on sound localization learning and compared the associated processes between early blind (EB) and LB individuals.

There is a vast literature on cross-modal learning in EB individuals based on the auditory (e.g., Chan et al. 2013; Halko et al. 2014; Striem-Amit et al. 2011) or tactile modality (Chebat et al. 2007, 2011; Kupers et al. 2010; Ptito and Kupers 2005). The common understanding among researchers is that EB individuals possess superior discrimination abilities compared with LB individuals, such as minimum-audible-angle discrimination in peripheral space (Voss et al. 2004) and high-resolution sound localization in a fan-shaped space (Tao et al. 2015). In two other studies (Gougoux et al. 2005; Voss et al. 2011), which used a same/different sound-position task and a pointing task toward sound sources, only the EB participants with superior performance outperformed their LB and normally sighted counterparts; the remaining EB participants performed at a level similar to that of the LB or normally sighted participants. Gori et al. (2014) tested congenitally blind participants on spatial and temporal bisection tasks, a minimum audible angle task, a pointing-to-sound-source task, and a slower version of the spatial bisection task. Compared with sighted participants, the blind participants were impaired only on the spatial bisection task. In contrast, Kupers et al. (2010) trained congenitally blind participants on a virtual navigation task with a tactile-to-vision sensory substitution device; the participants showed significant improvements comparable to those of sighted participants. Consistent with these mixed results, two reviews described both impaired and enhanced spatial skills in blind individuals across experimental tasks (Cuturi et al. 2016; Gori et al. 2016). A recent review by Schinazi et al. (2016) suggested that the observed differences could largely be due to variations in the abilities, strategies, and mental representations of blind individuals.
More importantly, that review stipulated that blind individuals tend to show patterns of performance that are progressively lower than (cumulative), consistently lower than (persistent), or approaching (convergent) those of sighted individuals (Schinazi et al. 2016). With this in mind, the task employed in this study was adapted from the low-resolution sound recognition task used in our previous study, in which EB individuals performed better than sighted participants (Chan et al. 2013). The present task was more challenging for EB, and perhaps LB, participants because it involved the localization of high-resolution sounds. Of the auditory and tactile modalities, this study chose to investigate sound localization learning for two reasons. First, focusing on the auditory modality extends our previous studies on sound localization processing (Chan et al. 2013; Tao et al. 2015) to further understand how prior visual experience modulates its learning. Second, previous brain imaging studies reported common neural substrates mediating auditory- and tactile-spatial processing (Renier et al. 2010). These substrates clustered around the medial parietal areas, including the precuneus, superior parietal lobule (SPL), and posterior parietal cortex (PPC), as well as the middle occipital gyrus (MOG) (Bonino et al. 2008; Collignon et al. 2011; Renier et al. 2010). The results of this study on sound localization learning may therefore shed light on its tactile-spatial counterpart in blind individuals.

Visual experience is important for the development of the multisensory integration necessary for spatial cognition (Pasqualotto and Proulx 2010). Such experience is more relevant to LB than to EB individuals when extracting spatial information from auditory signals. Neuroimaging studies have explored differences between EB and LB individuals in sound localization processing. Although the results vary across studies, occipital regions, particularly the MOG, have consistently been found to be activated in EB but not LB groups (Collignon et al. 2013; Tao et al. 2015; Voss et al. 2008). The MOG has been theorized to reflect blindness-related plasticity specialized for the spatial localization of sound. Our previous study revealed that audio-spatial learning in EB participants was associated with activation of the inferior parietal cortex, left hippocampus, and right cuneus (Chan et al. 2013). That study did not address how visual experience would modulate the learning processes, as no LB participants were involved. The present study aimed to investigate the neural processes associated with cross-modal learning using a pre- and post-training design. Visual experience was operationalized by comparing EB and LB individuals, whose visual deprivation occurred in the early and late developmental periods, respectively. The between-group comparison can also shed light on the learning ability of blind individuals. We proposed that prior visual experience in LB would facilitate sound localization learning and be associated with increased activation of extended multimodal association regions. Without prior visual experience, EB participants would gain less from the learning and show smaller increases in brain activation than LB participants. Prior visual experience was investigated by comparing EB and LB individuals on learning to localize “Bat-ears” sounds through a 7-day training program.
This study did not include a normally sighted control group because a pilot study (N = 3) revealed that the training yielded below-chance performance among sighted individuals. More intensive training (e.g., longer hours) for sighted participants would have biased the between-group comparison in the post-training scan. The “Bat-ears” is a sensory substitution device that uses ultrasonic echo technology to assist blind individuals with navigation (Chan et al. 2013; Tao et al. 2015). The sounds emitted by the device contain spatial information on distance and azimuth. Longitudinal functional magnetic resonance imaging (fMRI) data were collected before and after training while participants localized the “Bat-ears” sounds. Training-related changes in neural activation were investigated with region-of-interest (ROI) analysis and longitudinal contrast analysis; training-related changes in functional connectivity were investigated with psychophysiological interaction (PPI) analysis (Friston et al. 1997). PPI has been shown to be effective for studying integrative processes across sensory modalities (Kim and Zatorre 2011). We hypothesized that prior visual and multisensory spatial experience in LB would facilitate sound localization learning through visuo-spatial working memory. LB participants with high visuo-spatial working memory abilities would improve more than LB participants with low visuo-spatial working memory abilities. Sound localization learning in better learners would be associated with increased activation of the polymodal association regions. Without prior visual experience, EB participants would only be able to learn the pairing of the sound localization stimuli, and this learning would be associated predominantly with the memory network.
Because of the differences in the postulated learning processes, we further hypothesized that EB participants would not outperform LB participants.

Materials and Methods

Participants

Initially, 14 EB and 17 LB participants who had taken part in a previous cross-modal processing study (Tao et al. 2015) were recruited. Three EB and 4 LB participants dropped out during training for personal reasons unrelated to the training. The final sample consisted of 11 EB (6 male, age range = 19–31 y, mean age = 26.36 y) and 13 LB (13 male, age range = 27–49 y, mean age = 33.85 y) participants. The EB participants had congenital blindness that presented before the first year of age. In the LB group, the onset of blindness ranged from 5 to 39 years of age (mean = 21.23 y), and the duration of blindness ranged from 2 to 31 years (mean = 12.62 y). The demographics of the participants are summarized in Table 1. All participants reported no light perception and normal hearing. To ensure that all participants were able to discriminate auditory stimuli, we used a pitch discrimination test with a criterion of above 60% accuracy (Collignon et al. 2007). Each stimulus pair consisted of a reference sound (200 ms) and a discrimination sound (200 ms). The test was a one-back comparison task that required participants to determine whether the two stimuli were the same or different in identity (frequency or intensity). The EB (mean = 77.8%) and LB participants (mean = 75.5%) demonstrated comparable discrimination performance (t(22) = 0.64, P = 0.529). Furthermore, all participants had normal intelligence, as assessed by the Wechsler Adult Intelligence Scale-Revised for China (WAIS-RC) (Gong 1982; Wechsler 1955). A pilot study was conducted on three normally sighted individuals (2 male, age range = 25–29 y, mean age = 27.33 y), who were blindfolded throughout testing and training. Their mean accuracy on the pitch discrimination test was 62.3%, and their sound localization performance improved from 23.4 to 29.7%, which remained below the chance level of 33.3%.
This suggested that the sound localization task (15 sounds) constructed for this study was too difficult for normally sighted individuals to learn and perform. As a result, only EB and LB individuals were recruited for this study. Written informed consent was obtained from all participants, who understood the purpose and procedure of the study. The research protocol was approved by the Human Ethics Committees of Beijing Normal University, where the fMRI scans were carried out, and The Hong Kong Polytechnic University, where the study originated. The methods were carried out in accordance with the approved protocols and guidelines.

Behavioral Test–Matrix Test

The adapted matrix test (Cornoldi et al. 1991; Tao et al. 2015) was used to assess visuo-spatial working memory ability among the participants. There were two haptic subtests: a 2D matrix (3 × 3 squares) composed of nine wooden cubes (2 cm per side) and a 3D matrix (2 × 2 × 2 cubes) composed of eight wooden cubes (2 cm per side). Within each matrix, sandpaper pads were attached to the surface of one cube, which could easily be recognized by touch as the target. On each trial, the starting position of the target varied, and the participant was instructed to recognize and memorize the location of the target by touch. The participant was then instructed to mentally move the target over the matrix according to verbal scripts delivered using a tape recorder. The verbal scripts were instructions for relocating the target, such as forward–backward and right–left for the 2D matrix, or forward–backward, right–left, and up–down for the 3D matrix. Finally, the participant indicated the final location of the target on a blank 2D or 3D matrix. The task requires active visuo-spatial imagery operations on matrices and tests short-term memory for visuo-spatial material. Trials progressed from easy to difficult; on a difficult trial, two or three targets were moved in two to four relocation steps. There were 12 trials each for the 2D and 3D matrices. Performance was measured as the percentage of final locations correctly identified across the 24 trials.
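The mental relocation that the matrix test demands can be made concrete with a small simulation. This is an illustrative sketch only: the coordinate convention, the move names, and the helpers `relocate` and `percent_correct` are hypothetical and do not describe the materials or scoring procedure used in the study.

```python
# Hypothetical coordinate convention (not taken from the study):
# axis 0: left (0) -> right, axis 1: backward (0) -> forward, axis 2: down (0) -> up.
MOVES = {
    "right": (1, 0, 0), "left": (-1, 0, 0),
    "forward": (0, 1, 0), "backward": (0, -1, 0),
    "up": (0, 0, 1), "down": (0, 0, -1),
}


def relocate(start, instructions, shape):
    """Apply verbal relocation steps to one target and return its final cell.

    start: starting cell, e.g. (0, 0) on the 3 x 3 matrix or (0, 0, 0) on the 2 x 2 x 2 matrix.
    shape: number of cubes along each axis, (3, 3) or (2, 2, 2).
    """
    pos = list(start)
    for step in instructions:
        delta = MOVES[step]
        # "up"/"down" only exist on the 3D matrix.
        if any(delta[len(shape):]):
            raise ValueError(f"'{step}' is not defined for a {len(shape)}D matrix")
        for axis, size in enumerate(shape):
            pos[axis] += delta[axis]
            if not 0 <= pos[axis] < size:
                raise ValueError(f"'{step}' moves the target off the matrix")
    return tuple(pos)


def percent_correct(responses, answers):
    """Percentage of trials whose indicated final cell matches the correct one."""
    hits = sum(r == a for r, a in zip(responses, answers))
    return 100.0 * hits / len(answers)


print(relocate((0, 0), ["right", "forward", "right"], (3, 3)))  # (2, 1)
print(relocate((0, 0, 0), ["up", "right"], (2, 2, 2)))          # (1, 0, 1)
```

A difficult trial with two or three targets could be handled by calling `relocate` once per target.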

Stimuli and Apparatus

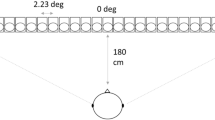

The auditory stimuli originated from an auditory substitution device called “Bat-ears.” The electronic “Bat-ears” device was used in two other studies by the authors and is described in detail elsewhere (Chan et al. 2013; Tao et al. 2015). In brief, it consists of one transmitter, two receivers, one demodulator, and two earphones. The transmitter emits ultrasonic pulses, which are reflected back as echoes when they hit an obstacle. The binaural receivers detect the reflected echoes, which the demodulator converts into audible signals (da-da-da sounds). Finally, the audible sounds are delivered to the listener through the earphones. The KEMAR Manikin (Burkhard and Sachs 1975) was used to record the “Bat-ears” stimuli reflected from an obstacle (30 × 30 cm cardboard) placed at designated locations in a soundproof chamber. Low-resolution stimuli were recorded at six locations [two distances: 1 and 4 m; paired with three azimuths: −30° (left side), 0°, and +30° (right side)], and high-resolution stimuli were recorded at 15 locations [three distances: 1.5, 2.5, and 3.5 m; paired with five azimuths: −30° (left side), −15°, 0°, +15°, and +30° (right side)] (Fig. 1). The auditory stimuli were presented bilaterally via MRI-compatible headphones, and the sound-pressure level was adjusted to 80–90 dB.

Fig. 1 Locations of the low- and high-resolution sound stimuli and definition of correct responses in the sound localization task. Neighboring locations are also regarded as correct responses. A neighboring location has the same distance or azimuth as the exact correct location but differs from it by one step in the other dimension. Each location indicated by a blue circle (e.g., 1.5 m/−30°) has two neighboring locations; each location indicated by a green triangle (e.g., 2.5 m/−30° or 1.5 m/−15°) has three; and each location indicated by an orange star (e.g., 2.5 m/+15°) has four. The chance levels of accuracy for the blue, green, and orange locations are 33.33, 25, and 20%, respectively. (Color figure online)
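The lenient scoring rule in the caption can be expressed compactly. The sketch below is a hypothetical illustration, not the study’s analysis code: it indexes the 15 high-resolution locations on a 3 × 5 grid, assumes that each novel stimulus is assigned to its nearest grid location, and counts a response as correct when it hits the exact location or one of its neighbors. All names are illustrative.

```python
DISTANCES_M = (1.5, 2.5, 3.5)
AZIMUTHS_DEG = (-30, -15, 0, 15, 30)

# Every high-resolution location as a (distance index, azimuth index) pair on the 3 x 5 grid.
LOCATIONS = [(d, a) for d in range(len(DISTANCES_M)) for a in range(len(AZIMUTHS_DEG))]


def nearest_location(distance_m, azimuth_deg):
    """Assumption: map a recorded stimulus position (e.g. 1.52 m, +13 deg) to its nearest grid location."""
    d = min(range(len(DISTANCES_M)), key=lambda i: abs(DISTANCES_M[i] - distance_m))
    a = min(range(len(AZIMUTHS_DEG)), key=lambda i: abs(AZIMUTHS_DEG[i] - azimuth_deg))
    return d, a


def neighbors(loc):
    """Locations with the same distance or azimuth, one step away in the other dimension."""
    d, a = loc
    candidates = [(d - 1, a), (d + 1, a), (d, a - 1), (d, a + 1)]
    return [(dd, aa) for dd, aa in candidates
            if 0 <= dd < len(DISTANCES_M) and 0 <= aa < len(AZIMUTHS_DEG)]


def is_correct(response, target):
    """Lenient scoring: the exact location or any neighboring location counts as correct."""
    return response == target or response in neighbors(target)


def accuracy(responses, targets):
    """Accuracy rate (%) over all completed trials."""
    hits = sum(is_correct(r, t) for r, t in zip(responses, targets))
    return 100.0 * hits / len(targets)


# Number of locations with 2, 3, and 4 neighbors (blue, green, and orange in Fig. 1).
counts = {}
for loc in LOCATIONS:
    k = len(neighbors(loc))
    counts[k] = counts.get(k, 0) + 1
print(counts)  # {2: 4, 3: 8, 4: 3}
```

With this encoding, the example given in the Behavioral Analysis (a target at 1.53 m, +30°) has exactly two accepted neighbors, at 2.46 m, +30° and 1.52 m, +13°, while a diagonal response is scored as incorrect.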

Sound Localization Training

The sound localization training proceeded from low-resolution (6 locations) to high-resolution (15 locations) stimuli, with 2 and 5 sessions (2 h per session), respectively. Training was conducted with the participant seated. A verbal description of each sound–location pair was given before the participant listened to the sound. A 2D fan-shaped model was constructed to facilitate communication between the participant and the trainer, who further explained the locations by guiding the participant’s hands to the corresponding physical locations on the model. The training was divided into distance and azimuth training blocks (Bedford 1993, 1995), and the order of the two block types was counterbalanced. An evaluation trial was conducted for each distance and azimuth training block, in which the participant received verbal feedback on their performance. The participant had to reach 80% accuracy in both the distance and the azimuth blocks before proceeding to the final training stage, which evaluated localization (distance and azimuth combined). The 80% criterion was set for two reasons. First, the sound localization task is relatively difficult for both EB and LB participants. To keep attrition low, the criterion allowed participants to complete the training without spending an excessive amount of time on it, which also minimized frustration. Second, the criterion helped to ensure an adequate number of correct trials for the fMRI analysis and hence a good signal-to-noise ratio. At the end of training, all participants obtained a performance level of 60% accuracy on high-resolution sound localization. The mean number of evaluation blocks was six (range 5–8) in both the EB and LB groups. The training blocks themselves consisted only of verbal instruction by the trainer and were not scored.
Performance was scored only in the evaluation trials, which used a correction-feedback procedure; both EB and LB participants had to achieve 80% accuracy in each distance and azimuth block and 80% accuracy for localization (distance plus azimuth).
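The progression rule of the training can be summarized as a small decision function. This is a hypothetical sketch: the function name, the returned labels, and the assumption that a failed combined evaluation is simply repeated are illustrative. Only the 80% criterion and the order (separate distance and azimuth blocks, then a combined evaluation) come from the procedure.

```python
CRITERION = 0.80  # accuracy criterion stated in the training procedure


def next_stage(distance_acc, azimuth_acc, combined_acc=None):
    """Suggest the next training step for one participant (hypothetical helper).

    distance_acc, azimuth_acc: proportion correct in the latest distance and azimuth evaluation trials.
    combined_acc: proportion correct in the combined (distance plus azimuth) evaluation, if already run.
    """
    failed = [name for name, acc in (("distance", distance_acc), ("azimuth", azimuth_acc))
              if acc < CRITERION]
    if failed:
        noun = "blocks" if len(failed) == 2 else "block"
        return f"repeat {' and '.join(failed)} {noun}"
    if combined_acc is None:
        return "run combined evaluation"
    if combined_acc < CRITERION:
        return "repeat combined evaluation"  # assumption: not specified in the text
    return "criterion reached"


print(next_stage(0.75, 0.90))        # repeat distance block
print(next_stage(0.85, 0.80))        # run combined evaluation
print(next_stage(0.85, 0.80, 0.90))  # criterion reached
```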

Experimental Design and fMRI Tasks

fMRI scans were conducted before and after training using an event-related design. Before the pre-training scan, participants completed a familiarization session (1 h), in which they practiced six sound-to-location trials using headphones and a joystick. Each scan session had four functional runs. Each run contained unequal numbers of localization (experimental, 17–20) and discrimination (control, 8–11) trials in pseudo-randomized order, and the order of the runs was counterbalanced across participants. This gave a total of 75 localization and 37 discrimination trials per scan session. On each trial, an auditory cue (750 ms) indicated the task type: localization (2000 Hz, 70 dB) or discrimination (500 Hz, 70 dB). The cue was followed by a 1750 ms delay, during which the participant was instructed to prepare for the task. The “Bat-ears” stimulus was then presented for 3000 ms, followed by a 500 ms auditory cue (2000 Hz, 70 dB), after which the participant responded with the joystick within a 4000 ms response window. Successive trials were separated by a jittered interval randomly selected from 2500, 5000, or 7500 ms. The sound localization task required the participant to listen to the “Bat-ears” sounds and identify their locations among the 15 combinations of azimuth and distance. The participant responded by moving the joystick to one particular location, which indicated both the distance and the azimuth of the sound source. The joystick was calibrated as follows: left/straight/right indicated −15°/0°/+15°, outer left/right indicated −30°/+30°, and backward/horizontal/forward indicated 1.5/2.5/3.5 m. The pitch discrimination task was designed as a control task and required participants to decide whether a deviant pitch (6000–8000 Hz, 70 dB) was inserted into the “Bat-ears” sounds. The participant responded “Yes” by pressing the joystick and “No” by not pressing it.
In both tasks, we used novel “Bat-ears” sounds recorded near the locations of the high-resolution sounds used in the training. The discrimination task was meant to produce baseline BOLD responses associated with non-spatial auditory processing.
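The trial timing above determines the event onsets used later in the GLM. The sketch below lays out one run under an explicit assumption: trial order is a plain random shuffle, because the study’s pseudo-randomization constraints are not described. The function `build_run` and its arguments are hypothetical.

```python
import random

# Trial timing (s), as described for the scanner task.
CUE = 0.75           # task cue: 2000 Hz = localization, 500 Hz = discrimination
DELAY = 1.75         # preparation delay
SOUND = 3.0          # "Bat-ears" stimulus
GO_CUE = 0.5         # cue signaling the participant to respond
RESPONSE = 4.0       # response window
ITI_CHOICES = (2.5, 5.0, 7.5)  # jittered interval between trials


def build_run(n_localization, n_discrimination, seed=None):
    """Return (task, cue_onset_s, sound_onset_s) for every trial of one run.

    Assumption: trial order is a plain random shuffle; the study's
    pseudo-randomization constraints are not described.
    """
    rng = random.Random(seed)
    tasks = ["localization"] * n_localization + ["discrimination"] * n_discrimination
    rng.shuffle(tasks)
    schedule, t = [], 0.0
    for task in tasks:
        sound_onset = t + CUE + DELAY
        schedule.append((task, t, sound_onset))
        trial_end = sound_onset + SOUND + GO_CUE + RESPONSE
        t = trial_end + rng.choice(ITI_CHOICES)
    return schedule


run = build_run(19, 9, seed=1)
print(run[0][1:])  # (0.0, 2.5)
```

Each trial occupies 10 s before the jitter, so successive cue onsets are 12.5, 15, or 17.5 s apart; the sound onsets are the times the event regressors would be built on.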

Behavioral Analysis

Response time for the localization task was not used as a behavioral measure because responses to stimuli presented at farther distances (e.g., 3.54 m) and at the outer left or right (e.g., ±31°) took longer to register than responses to stimuli at a closer distance (e.g., 1.47 m) and at the center (e.g., ±2°). Most participants reported that localizing the sounds and mapping the location onto the joystick required some effort, particularly when the task was carried out in the scanner. Therefore, more lenient criteria were adopted for defining response accuracy (Fig. 1): localization of an exact or neighboring location was regarded as a correct response. For instance, for a stimulus emitted from the nearest-distance outer-right location (1.53 m, +30°), responses at two neighboring locations were regarded as “correct”: the medium-distance outer-right location (2.46 m, +30°) and the nearest-distance right location (1.52 m, +13°). Accuracy rate was defined as the percentage of correct trials among all trials completed by the participant in each of the two scans. The effect of training on accuracy rate was tested using a Group (LB versus EB) × Training (pre-training versus post-training) repeated-measures ANOVA. Significant interaction effects were followed up with post-hoc comparisons. All tests adopted P < 0.05 as the threshold for statistical significance.
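With only two time points, the Group × Training interaction of such a mixed ANOVA is equivalent to comparing pre-to-post change scores between groups: for two groups, the interaction F(1, N − 2) equals the square of a pooled-variance two-sample t on the change scores (with three groups, it equals a one-way ANOVA F on the change scores). The sketch below illustrates the two-group case; the helper name is hypothetical and the data are made-up numbers, not study results.

```python
import math
from statistics import mean, variance


def change_score_interaction(group_a, group_b):
    """Group x Training interaction for a 2 (group) x 2 (pre/post) mixed design.

    group_a, group_b: lists of (pre, post) accuracy pairs, one per participant.
    Returns (t, df, F), where t is the pooled-variance t on change scores and F = t ** 2.
    """
    diff_a = [post - pre for pre, post in group_a]
    diff_b = [post - pre for pre, post in group_b]
    n_a, n_b = len(diff_a), len(diff_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(diff_a) + (n_b - 1) * variance(diff_b)) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(diff_a) - mean(diff_b)) / se
    return t, df, t ** 2


# Made-up illustration data (percent correct), not the study's results.
group_a = [(40, 41), (42, 44), (44, 47)]   # changes: 1, 2, 3
group_b = [(45, 49), (43, 48), (44, 50)]   # changes: 4, 5, 6
t, df, F = change_score_interaction(group_a, group_b)
print(f"t({df}) = {t:.3f}, F(1, {df}) = {F:.2f}")  # t(4) = -3.674, F(1, 4) = 13.50
```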

MRI Acquisition and Analysis

The method of data acquisition was comparable to that used in Tao et al. (2015). fMRI series were acquired on a 3-T Siemens machine with a 12-channel head coil. Functional T2*-weighted images were obtained with a gradient echo-planar sequence (repetition time [TR] = 2500 ms; echo time [TE] = 30 ms; flip angle [FA] = 90°; voxel size = 3.1 × 3.1 × 3.2 mm³). Structural T1-weighted images (TR = 2530 ms; TE = 3.39 ms; voxel size = 1.3 × 1.0 × 1.3 mm³) were also acquired.

Analyses were carried out using SPM8 (Wellcome Department of Imaging Neuroscience, London, UK) implemented in MATLAB R2008a (MathWorks). Preprocessing included slice-timing correction for differences in acquisition time between slices, realignment of the functional time series to correct for head motion, co-registration of functional and anatomical data, segmentation to extract gray matter, spatial normalization to the Montreal Neurological Institute (MNI) space, and spatial smoothing (Gaussian kernel, 6 mm FWHM).
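For reference, a Gaussian kernel specified by its full width at half maximum (FWHM) has a standard deviation σ given by the standard identity below, so a 6 mm FWHM kernel corresponds to σ of roughly 2.55 mm:

```latex
\sigma = \frac{\mathrm{FWHM}}{2\sqrt{2\ln 2}} \approx \frac{6~\mathrm{mm}}{2.355} \approx 2.55~\mathrm{mm}
```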

The preprocessed fMRI data were fitted to a general linear model (GLM) in SPM8 (Friston et al. 1994). We used four event-related regressors. Two of them modeled the BOLD signals corresponding to the 3000 ms “Bat-ears” sounds in localization and discrimination trials with correct responses. The other two were regressors of no interest that modeled the BOLD signals in localization and discrimination trials with incorrect responses. All regressors were constructed by convolving the onset times of the “Bat-ears” sounds with the canonical hemodynamic response function. The motion parameters detected by the Artifact Detection Tools (ART, developed by the Gabrieli Lab, Massachusetts Institute of Technology, available at: http://web.mit.edu/swg/software.htm) were included in the GLM to further regress out motion-dependent confounds (Mazaika et al. 2005). Slow drifts in the data were removed by applying a high-pass filter with a cut-off of 128 s, and a first-order autoregressive model was used to correct for autocorrelation of the GLM residuals. The linear contrast (Localization–Discrimination) was used to test the main effect of interest: sound localization processing. After single-subject analyses, we performed random-effects analyses at the group level for the EB and LB groups on the pre- and post-training sessions. The pre-training results partially overlap with those reported in Tao et al. (2015); the overlap is only partial because not all participants scanned before training completed the training. One-sample t tests were performed to investigate the main effect of sound localization. In addition, we computed the following contrasts at the individual level to investigate the training effect: (Localization_pre − Discrimination_pre) − (Localization_post − Discrimination_post) and (Localization_post − Discrimination_post) − (Localization_pre − Discrimination_pre).
The subtraction at the individual level was intended to control for the effects of repeated measurement and time (Poldrack 2000; Poldrack and Gabrieli 2001). One-sample t tests were conducted in the EB and LB groups to characterize the training effect.
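The event regressors and the two longitudinal contrasts can be sketched as follows. This is a simplified, hypothetical illustration in plain Python, not SPM8 code: the double-gamma kernel uses SPM-like default shape parameters (response peaking at about 5 s, undershoot at about 15 s, undershoot ratio 1/6) but is not guaranteed to match SPM’s canonical HRF exactly, regressors are sampled at the start of each TR, the regressors of no interest are omitted, and the column names are invented for the example.

```python
import math


def canonical_hrf(dt=0.1, length_s=32.0):
    """Double-gamma HRF (response minus 1/6 of a later undershoot), normalized to unit sum."""
    def gamma_pdf(t, shape):  # gamma density with unit scale
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

    kernel = [gamma_pdf(i * dt, 6) - gamma_pdf(i * dt, 16) / 6
              for i in range(int(length_s / dt) + 1)]
    total = sum(kernel)
    return [k / total for k in kernel]


def event_regressor(onsets_s, duration_s, n_scans, tr_s, dt=0.1):
    """Boxcar of duration_s at each onset, convolved with the HRF, sampled once per scan."""
    n = int(round(n_scans * tr_s / dt))
    boxcar = [0.0] * n
    for onset in onsets_s:
        for i in range(int(round(onset / dt)), min(n, int(round((onset + duration_s) / dt)))):
            boxcar[i] = 1.0
    hrf = canonical_hrf(dt)
    conv = [0.0] * n
    for i, b in enumerate(boxcar):
        if b:
            for j, h in enumerate(hrf[: n - i]):
                conv[i + j] += b * h
    return [conv[int(round(k * tr_s / dt))] for k in range(n_scans)]


# Hypothetical column order of the effects of interest (correct trials only):
# ["loc_pre", "disc_pre", "loc_post", "disc_post"]
# (Localization_post - Discrimination_post) - (Localization_pre - Discrimination_pre):
POST_MINUS_PRE = [-1, 1, 1, -1]
# (Localization_pre - Discrimination_pre) - (Localization_post - Discrimination_post):
PRE_MINUS_POST = [-w for w in POST_MINUS_PRE]


def apply_contrast(weights, betas):
    """Weighted sum of parameter estimates for one contrast."""
    return sum(w * b for w, b in zip(weights, betas))


# A 3 s "Bat-ears" sound starting at 10 s, TR = 2.5 s, 20 scans.
reg = event_regressor([10.0], 3.0, n_scans=20, tr_s=2.5)
print(apply_contrast(POST_MINUS_PRE, [1.0, 0.5, 2.0, 0.5]))  # 1.0
```

Because each contrast already subtracts the control condition within session, the two weight vectors are exact sign reversals of each other and both sum to zero.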

To address how visual experience could modulate sound localization training, exploratory ROI analyses were performed on the basis of the current results. The ROIs were defined by a conjunction analysis (Nichols et al. 2005) of the pre- and post-training session data, which identified BOLD responses common to all participants. The threshold was P < 0.001 (uncorrected) at the voxel level and P < 0.05 (FDR-corrected) at the cluster level. Each ROI was created as a spherical mask with a 9 mm radius centered at a local peak of an activated cluster. Then, REX, a stand-alone MATLAB-based toolbox, was used to extract the mean contrast value of each ROI, which was submitted to a 3 (Group: EB versus LB-LVM versus LB-HVM) × 2 (Training: pre- versus post-training) repeated-measures ANOVA. To assess the relationship with behavioral performance, Pearson correlation analyses were conducted between changes in the mean contrast values of the ROIs and performance on the sound localization task.
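The 9 mm spherical ROIs can be illustrated by enumerating the voxels whose centers lie within 9 mm of a peak and averaging a contrast map over them. This is a hypothetical sketch, not the REX toolbox: the voxel size (2 mm isotropic by default here) is an assumption because the resampled voxel size is not reported, and the contrast map is represented as a plain dictionary from voxel indices to values, with voxels missing from the map (e.g., outside the brain mask) skipped.

```python
import math


def sphere_offsets(radius_mm=9.0, voxel_mm=(2.0, 2.0, 2.0)):
    """Integer voxel offsets whose centers lie within radius_mm of the sphere center."""
    reach = [int(math.floor(radius_mm / v)) for v in voxel_mm]
    offsets = []
    for i in range(-reach[0], reach[0] + 1):
        for j in range(-reach[1], reach[1] + 1):
            for k in range(-reach[2], reach[2] + 1):
                dist2 = (i * voxel_mm[0]) ** 2 + (j * voxel_mm[1]) ** 2 + (k * voxel_mm[2]) ** 2
                if dist2 <= radius_mm ** 2 + 1e-9:  # tolerance for boundary voxels
                    offsets.append((i, j, k))
    return offsets


def roi_mean(contrast_map, peak_voxel, offsets):
    """Mean contrast value over the sphere; contrast_map maps (x, y, z) indices to values."""
    x0, y0, z0 = peak_voxel
    values = []
    for i, j, k in offsets:
        key = (x0 + i, y0 + j, z0 + k)
        if key in contrast_map:  # skip voxels outside the map (e.g. outside the brain mask)
            values.append(contrast_map[key])
    return sum(values) / len(values)


print(len(sphere_offsets(9.0, (3.0, 3.0, 3.0))))  # 123
```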

Finally, to further explore training-dependent changes in functional connectivity, PPI analyses (Friston et al. 1997) were performed. As in the ROI analysis, seed regions were defined by the conjunction analysis (Nichols et al. 2005) of the pre- and post-training session data. Separate PPIs were computed for the EB, LB-LVM, and LB-HVM groups. For each participant, BOLD time series were extracted from the seed regions (6 mm spheres) and entered into the PPI analysis. New GLMs were constructed with four regressors: (i) the psychological regressor, representing the main effect of auditory spatial processing (Localization–Discrimination); (ii) the physiological regressor, representing the original VOI eigenvariate in each session; (iii) the interaction of interest between the psychological and physiological regressors; and (iv) the movement regressor. The first-level GLM included both the pre- and post-training sessions, each with these four regressors (psychological, physiological, interaction, motion). Significant PPI results indicated that the reported regions were functionally connected with the seed region during auditory spatial processing. The individual summary images (fixed effects) were then spatially smoothed (6 mm FWHM Gaussian kernel) and entered into group-level one-sample t tests to test the auditory spatial processing effect for each session in each group (random effects). The threshold was P < 0.005 (uncorrected) at the voxel level and P < 0.05 (FDR-corrected) at the cluster level.
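The structure of the first-level PPI regressors for one session can be sketched as below. The sketch is a simplification under stated assumptions: the interaction is formed as the product of the mean-centered psychological and physiological regressors at the BOLD level, whereas SPM8 forms the product at an estimated neural level after deconvolving the seed signal. The function name and dictionary keys are hypothetical.

```python
from statistics import mean


def ppi_design(psych, seed_bold, motion):
    """Regressors of a simplified PPI model for one session.

    psych: Localization - Discrimination task regressor (already convolved), one value per scan.
    seed_bold: seed-region time series (e.g. the VOI eigenvariate), one value per scan.
    motion: list of motion regressors, each with one value per scan.
    """
    n = len(psych)
    if len(seed_bold) != n or any(len(m) != n for m in motion):
        raise ValueError("every regressor needs one value per scan")
    p_mean, s_mean = mean(psych), mean(seed_bold)
    # Interaction formed at the BOLD level from mean-centered inputs (simplification).
    interaction = [(p - p_mean) * (s - s_mean) for p, s in zip(psych, seed_bold)]
    return {
        "psychological": list(psych),
        "physiological": list(seed_bold),
        "interaction": interaction,
        "motion": [list(m) for m in motion],
    }


design = ppi_design([1.0, -1.0, 1.0, -1.0], [2.0, 0.0, 2.0, 0.0], [[0.0, 0.1, 0.0, -0.1]])
print(design["interaction"])  # [1.0, 1.0, 1.0, 1.0]
```

In this toy input, the seed signal is high exactly when the task regressor is positive, so every scan contributes positively to the interaction term; in a real analysis, a reliable positive weight on this column is what indicates task-dependent coupling with the seed.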

Results

Behavioral Results–Matrix Test

The 2D and 3D matrix tests were administered to assess the visuo-spatial working memory (VM) of the participants. Independent-samples t tests showed significantly better performance on the 2D test in the LB group (mean = 58.2 ± 15.3%, range 40.0–86.7%) than in the EB group (mean = 43.9 ± 12.7%, range 33.3–70.0%) (t(22) = 2.46, P = 0.022). On the 3D test, the LB group (mean = 49.2 ± 20.2%, range 26.7–90.0%) did not differ significantly from the EB group (mean = 37.3 ± 14.3%, range 26.7–66.7%) (t(22) = 1.64, P = 0.114). Given the large variance and potential heterogeneity in the LB group, we further divided the LB participants according to their performance on the 2D test, using a cut-off score of 50% to classify them into higher and lower ability groups. Participants with aggregated accuracy rates higher than 50% formed the higher VM group (LB-HVM; n = 7), whereas those with rates equal to or lower than 50% formed the lower VM group (LB-LVM; n = 6).
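As a consistency check, both t values can be recomputed from the reported group means, standard deviations, and sample sizes with a pooled-variance (Student) independent-samples t test, which has 13 + 11 − 2 = 22 degrees of freedom. The helper name `pooled_t` is illustrative.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Independent-samples (Student, pooled-variance) t and df from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# LB (n = 13) versus EB (n = 11), values as reported for the matrix test.
t_2d, df = pooled_t(58.2, 15.3, 13, 43.9, 12.7, 11)
t_3d, _ = pooled_t(49.2, 20.2, 13, 37.3, 14.3, 11)
print(f"2D: t({df}) = {t_2d:.2f}; 3D: t({df}) = {t_3d:.2f}")  # 2D: t(22) = 2.46; 3D: t(22) = 1.64
```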

Behavioral Results–fMRI Task

For the sound localization trials, all participants performed above the chance level of 33.3% in both the pre- and post-training scans. The first round of analysis included two groups: LB (n = 13) and EB (n = 11) participants. A significant Training effect was revealed (P < 0.001): both the EB (Pre-training: mean = 45.09 ± 5.62%; Post-training: mean = 53.21 ± 6.41%) and the LB (Pre-training: mean = 44.21 ± 5.22%; Post-training: mean = 53.23 ± 6.65%) groups performed better after the training. Neither the Group effect (F(1,22) = 0.034, P = 0.856) nor the Group × Training interaction (F(1,22) = 0.404, P = 0.531) was significant. The second round of analysis included three groups: LB-LVM (n = 6), LB-HVM (n = 7), and EB (n = 11) participants. In contrast to the first round, a significant Group × Training interaction was revealed (F(2,21) = 4.35, P = 0.026), suggesting that the three groups (EB, LB-HVM, and LB-LVM) benefited differently from the sound localization training (Fig. 2). Post-hoc analyses were based on the change in performance score. As expected, the LB-HVM group (Pre-training: mean = 44.95 ± 7.04%; Post-training: mean = 56.19 ± 7.03%) improved significantly more than the LB-LVM (P = 0.046; Pre-training: mean = 43.33 ± 2.02%; Post-training: mean = 49.78 ± 4.52%) and EB (P = 0.042; Pre-training: mean = 45.09 ± 5.62%; Post-training: mean = 53.21 ± 6.41%) groups. No significant difference was found between the LB-LVM and EB groups.

For the discrimination trials, the EB (Pre-training: mean = 79.61 ± 10.63%; Post-training: mean = 81.57 ± 10.17%) and LB (Pre-training: mean = 79.58 ± 9.41%; Post-training: mean = 79.79 ± 11.72%) groups performed comparably before and after the training. Neither the Training effect (F1,22 = 0.21, P = 0.651), the Group effect (F1,22 = 0.063, P = 0.804), nor their interaction (F1,22 = 0.139, P = 0.713) was significant.

Sound Localization Learning–Training Effect

The results of t-tests for the contrast (Localization − Discrimination) in the EB and LB groups (including the two subgroups) during the two scanning sessions are shown in Fig. 3 (P < 0.001 uncorrected). As expected, both the EB and LB groups showed significant BOLD responses in the bilateral occipital regions and parieto-frontal clusters, including the precentral gyrus, postcentral gyrus, and precuneus (Supplemental Table 1). However, longitudinal contrast analyses did not reveal significant results for the LB group as a whole. Further analyses revealed different patterns of post- versus pre-training change in the BOLD responses in the EB and LB-HVM groups (Fig. 4, P < 0.005 uncorrected). The LB-HVM group showed increased BOLD responses in the left lingual gyrus, whereas the EB group showed decreased BOLD responses in the left postcentral gyrus, left cingulate gyrus, left inferior temporal gyrus (ITG), right precuneus, and right middle temporal gyrus (MTG) (Table 2).

Sound Localization Learning–Experience Modulation

To test whether the modulation by sound localization training differed between the two groups, two-sample t-tests were performed on the longitudinal contrasts. The modulation effect for the contrast (Localizationpre − Discriminationpre) − (Localizationpost − Discriminationpost) was significantly stronger in the EB than in the LB-HVM group in the left inferior frontal gyrus (IFG) and left superior temporal gyrus (STG). In contrast, the modulation effect for the contrast (Localizationpost − Discriminationpost) − (Localizationpre − Discriminationpre) was significantly stronger in the LB-HVM than in the EB group in the left medial frontal gyrus (MeFG) and left STG.
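The longitudinal contrast and its between-group comparison can be sketched for a single voxel or ROI as follows. This is an illustration, not the authors’ SPM pipeline: it assumes one contrast estimate per condition and session for each participant, uses Student’s pooled-variance two-sample t-test, and the function names and values are hypothetical. The two contrast directions in the text are negatives of each other, so only one needs to be computed.

```python
from math import sqrt
from statistics import mean, variance
from typing import Sequence, Tuple


def training_contrast(loc_pre: float, dis_pre: float, loc_post: float, dis_post: float) -> float:
    """(Localization_post - Discrimination_post) - (Localization_pre - Discrimination_pre).

    Positive values mean the Localization-specific response grew after training;
    the opposite contrast in the text is simply the negative of this value.
    """
    return (loc_post - dis_post) - (loc_pre - dis_pre)


def two_sample_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Student's two-sample t-test with pooled variance; returns (t, df)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, df


# Hypothetical per-participant estimates: (loc_pre, dis_pre, loc_post, dis_post)
group_1 = [(1.0, 0.6, 1.6, 0.7), (0.8, 0.5, 1.3, 0.6), (1.1, 0.7, 1.5, 0.5)]
group_2 = [(1.2, 0.6, 1.0, 0.7), (0.9, 0.4, 0.8, 0.6), (1.0, 0.5, 0.9, 0.5)]
c1 = [training_contrast(*p) for p in group_1]
c2 = [training_contrast(*p) for p in group_2]
t_stat, dof = two_sample_t(c1, c2)
print(f"t({dof}) = {t_stat:.2f}")
```

In a whole-brain analysis the same computation would run at every voxel, followed by a thresholding step such as the uncorrected or FDR thresholds mentioned in this section.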

The ROIs were defined from conjunction analyses of the BOLD responses before and after sound localization training. Four ROIs were identified: the right precuneus (9, −69, 52), the right MeFG (6, 3, 52), the left precuneus (−7, −72, 46), and the left middle frontal gyrus (MFG; −28, 0, 65). Repeated-measures ANOVA based on the three-group model revealed a significant Group × Training effect on BOLD signal changes in the left precuneus (F2,21 = 3.75, P = 0.041) and a marginally significant effect in the right precuneus (F2,21 = 3.04, P = 0.069, η² = 0.224). The two precuneus ROIs showed opposite changes in contrast value in the LB and EB groups: contrast values increased in both the LB-HVM and LB-LVM groups but decreased in the EB group (Fig. 5). Furthermore, across the LB-HVM and LB-LVM participants, sound localization performance was moderately correlated with the increased activation of the right precuneus (r = 0.589, P = 0.034) and the left MFG (r = 0.599, P = 0.031) and marginally correlated with the increase in the left precuneus (r = 0.504, P = 0.079), whereas no significant correlation was observed for the increase in the right MeFG (r = 0.259, P = 0.393).
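The effect sizes reported with the F tests can be recovered from the F statistic and its degrees of freedom, assuming they are partial η² values computed as (F · df_effect) / (F · df_effect + df_error). The short sketch below applies that formula. For the right precuneus effect (F2,21 = 3.04) it gives about 0.2245, consistent with the reported 0.224 given rounding of F, and it reproduces η² = 0.121 for F1,22 = 3.037 in the better/poorer learner analysis. For the four-group left precuneus effect (F = 2.556, η² = 0.277), the reported value is reproduced with df = 3, 20, the error degrees of freedom of a four-group model with 24 participants, whereas df = 3, 21 would give about 0.267.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic and its degrees of freedom."""
    # eta_p^2 = SS_effect / (SS_effect + SS_error), rewritten in terms of F and the dfs.
    return (f * df_effect) / (f * df_effect + df_error)


# (F, df_effect, df_error) values taken from the text
for f, d1, d2 in [(3.04, 2, 21), (3.037, 1, 22), (2.556, 3, 20), (2.556, 3, 21)]:
    print(f"F({d1},{d2}) = {f}: partial eta^2 = {partial_eta_squared(f, d1, d2):.3f}")
```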

The four ROIs identified above were used as seed regions for the PPI analyses. For the LB-HVM group, enhanced connectivity was revealed between the right precuneus and the left lingual gyrus and between the left precuneus and the right lingual gyrus; no significant enhancement of connectivity was found in the LB-LVM group. For the EB group, the left precuneus was significantly connected to extensive limbic-temporo-parietal networks in both hemispheres, including the left hippocampus, right posterior cingulate gyrus, bilateral MTG, right STG, precuneus, and inferior parietal lobule (IPL) (Fig. 6; Supplemental Table 2).
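The PPI logic (Friston et al. 1997) can be illustrated with a simplified design: a target signal is modeled with a psychological regressor (task), a physiological regressor (the seed ROI time series), and their product, and the weight on the product indexes task-dependent coupling between seed and target. The sketch below is a deliberately minimal, pure-Python illustration under assumptions of our own: it codes Localization as +1 and Discrimination as −1, mean-centers the seed signal, fits ordinary least squares, and omits the hemodynamic deconvolution that standard implementations such as SPM’s apply before forming the interaction term. All function names and data are hypothetical.

```python
from typing import List, Sequence


def center(x: Sequence[float]) -> List[float]:
    """Subtract the mean so the interaction term is not confounded with the seed's offset."""
    m = sum(x) / len(x)
    return [v - m for v in x]


def ppi_design(seed: Sequence[float], psych: Sequence[float]) -> List[List[float]]:
    """One row per scan: [intercept, psychological, physiological (seed), interaction]."""
    s = center(seed)
    return [[1.0, p, sv, p * sv] for p, sv in zip(psych, s)]


def ols(X: List[List[float]], y: Sequence[float]) -> List[float]:
    """Ordinary least squares by solving the normal equations (X'X) b = X'y
    with Gauss-Jordan elimination and partial pivoting."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yv for row, yv in zip(X, y))]
        for i in range(k)
    ]
    for c in range(k):
        pivot = max(range(c, k), key=lambda r: abs(A[r][c]))
        A[c], A[pivot] = A[pivot], A[c]
        for r in range(k):
            if r != c:
                factor = A[r][c] / A[c][c]
                A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Hypothetical block design: +1 = Localization, -1 = Discrimination
psych = [1, 1, 1, 1, -1, -1, -1, -1]
seed = [0.1, 0.4, -0.3, 0.9, 0.2, -0.5, 0.7, -0.1]
s = center(seed)
# Noise-free hypothetical target: seed coupling is 0.3 + 0.8 = 1.1 in Localization
# blocks and 0.3 - 0.8 = -0.5 in Discrimination blocks
target = [0.5 + 0.2 * p + 0.3 * sv + 0.8 * p * sv for p, sv in zip(psych, s)]
betas = ols(ppi_design(seed, psych), target)
print("interaction (PPI) estimate:", round(betas[3], 3))  # 0.8
```

A positive interaction weight here means the seed-target coupling is stronger during Localization than during Discrimination, which is the sense in which "enhanced connectivity" is reported for the seed regions.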

Subgroup Analysis for EB and Total Participants

The modulating effect of visuospatial working memory on sound localization learning was further tested among the EB participants by replicating the analysis procedures used for the LB participants. A cut-off score of 40% on the 2D test was used to classify the EB participants into higher- and lower-ability subgroups. Those who obtained an aggregated accuracy rate equal to or higher than 40% formed the higher VM group (EB-HVM; n = 6), whereas those who obtained lower than 40% formed the lower VM group (EB-LVM; n = 5). Repeated-measures ANOVA on the participants’ sound localization performance revealed a significant Training effect (F1,20 = 180.385, P < 0.001), a non-significant Group effect (F3,20 = 1.419, P = 0.267), and a marginally significant Group × Training effect (F3,20 = 2.838, P = 0.064). At a corrected threshold (FDR P < 0.05), the longitudinal analysis showed no significant results in either the EB-LVM or the EB-HVM group. The PPI analyses revealed significantly enhanced functional connectivity between the left precuneus and left occipito-parietal regions in the EB-HVM group, and between the bilateral precuneus and parieto-temporo-frontal regions in the EB-LVM group. The ROI analyses based on the four-group model (all subgroups of EB and LB) revealed only a marginally significant Group × Training effect on BOLD signal changes in the left precuneus (F3,20 = 2.556, P = 0.084, η² = 0.277).
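The corrected threshold mentioned above (FDR P < 0.05) refers to false discovery rate control. The sketch below shows the generic Benjamini-Hochberg step-up procedure on a list of p-values. It is an illustration only: the text does not specify which FDR variant (for example voxel-, peak-, or cluster-level) the analysis software applied, and the function name `bh_fdr` and the p-values are hypothetical.

```python
from typing import List, Sequence


def bh_fdr(pvals: Sequence[float], q: float = 0.05) -> List[bool]:
    """Benjamini-Hochberg step-up procedure.

    Sort the m p-values, find the largest rank k with p_(k) <= (k / m) * q,
    and reject the null hypothesis for the k smallest p-values.
    Returns a rejection flag for each input p-value, in input order.
    """
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    k = 0
    for rank, idx in enumerate(order, start=1):
        if pvals[idx] <= rank / m * q:
            k = rank  # keep the largest passing rank (step-up)
    reject = [False] * m
    for idx in order[:k]:
        reject[idx] = True
    return reject


# Hypothetical p-values, e.g., from four voxels or regions
print(bh_fdr([0.02, 0.03, 0.035, 0.04]))  # [True, True, True, True]
```

In this example the first two ranks fail their own thresholds (0.0125 and 0.025), yet all four hypotheses are rejected because the fourth passes its threshold of 0.05; this step-up behavior is what distinguishes BH from testing each p-value against a fixed cut-off.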

To further investigate the role of visuospatial working memory in sound localization learning, we conducted correlation analyses between the participants’ matrix test performance and their improvement in sound localization performance for the EB and LB participants. For the LB participants, improvement correlated significantly with 2D matrix performance (r = 0.605, P = 0.028) and marginally with 3D matrix performance (r = 0.544, P = 0.055); neither correlation was significant for the EB participants (2D matrix, r = 0.098, P = 0.774; 3D matrix, r = 0.177, P = 0.602). To exclude the possibility that the significant results were driven by the participants’ learning rather than by visual experience, the EB and LB participants were pooled and then divided into better learner (n = 12, EB/LB = 6/6, improvement > 8%) and poorer learner (n = 12, EB/LB = 5/7, improvement ≤ 8%) groups. As expected, the behavioral results revealed significant Training (F1,22 = 367.559, P < 0.001) and Group × Training (F1,22 = 33.796, P < 0.001) effects and a non-significant Group effect (F1,22 = 3.037, P = 0.095, η² = 0.121). At a corrected threshold (FDR P < 0.05), the longitudinal analysis found significant decreases in BOLD responses only in the right IPL, right precentral gyrus, left insula, and right STG in the better learner group. The PPI and ROI analyses did not reveal significant results for the better versus poorer learner subdivision.
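The correlation and learner-split analyses above can be sketched as follows. `pearson_r` computes the sample Pearson correlation, `t_from_r` converts r to the t statistic used to test it (df = n − 2), and `split_learners` applies the 8% improvement cut-off (better: > 8; poorer: ≤ 8). The function names and the improvement values are hypothetical. For the reported LB 2D-matrix correlation (r = 0.605, n = 13), `t_from_r` gives t11 ≈ 2.52, consistent with the reported P = 0.028.

```python
from math import sqrt
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Sample Pearson correlation coefficient."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_from_r(r: float, n: int) -> float:
    """t statistic for H0: rho = 0, with df = n - 2."""
    return r * sqrt(n - 2) / sqrt(1 - r * r)


def split_learners(
    improvement: Dict[str, float], threshold: float = 8.0
) -> Tuple[List[str], List[str]]:
    """Better learners improved by more than `threshold` points; poorer learners by at most that."""
    better = sorted(p for p, d in improvement.items() if d > threshold)
    poorer = sorted(p for p, d in improvement.items() if d <= threshold)
    return better, poorer


print(round(t_from_r(0.605, 13), 2))  # 2.52 (reported LB 2D-matrix correlation)
print(split_learners({"P01": 9.5, "P02": 8.0, "P03": 3.1}))  # hypothetical improvements
```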

Discussion

There are three main findings in this study. First, visual experience appears to modulate sound localization learning in the LB but not the EB group. This effect appears to be unique to the LB group, as normal-vision individuals, despite their intact visual experience, did not benefit from the sound localization training. The precuneus was found to play significantly different roles in the EB and LB groups. The increased activation of the bilateral precuneus after training was correlated with the LB participants’ performance in the sound localization task, whereas the EB participants showed decreased activation of the precuneus. These results suggest that the modulating effect of the precuneus on sound localization is likely attributable to the prior visual experience encoded by the LB but not the EB participants. This speculation is further supported by the different results obtained for the LB-HVM and LB-LVM groups: the higher visuospatial working-memory ability of the LB-HVM participants could have modulated their neural processes and hence their sound localization performance. These observations appear robust, as repeating the analyses with other ways of subdividing the participants (i.e., EB-HVM vs. EB-LVM, and better vs. poorer learners) yielded non-significant or less meaningful results. Second, the role of the precuneus seems to depend on the functionality of the visual system and on prior visual experience. The enhanced connectivity revealed two distinct precuneus-related networks. Among LB-HVM participants, the precuneus was connected with the lingual gyrus, suggesting that sound localization in this group may tap into visuospatial working memory. Among EB participants, the precuneus was connected with a limbic-multisensory network, suggesting that sound localization in this group may rely predominantly on retrieval from spatial memory.
The difference between these networks offers a plausible explanation for the differences in task performance and in sound localization learning between the LB-HVM and EB participants.

The precuneus is part of the dorsal parietal cortex (DPC), located in the medial part of the posterior parietal cortex (PPC) (Cabeza et al. 2008). In this study, the increased activation in LB participants after sound localization training was localized to the left and right posterior precuneus. The PPC belongs to the dorsal visual processing stream. It is functionally related to spatial perception (Ungerleider and Mishkin 1982) and receives multisensory inputs for spatial processing and integration (Bremmer 2011). The PPC has also been found to combine the spatial information embedded in the visual and auditory modalities (Nardo et al. 2014), and TMS studies have further supported its functional role in multisensory spatial tasks (Azañón et al. 2010; Bolognini et al. 2009). Because the posterior precuneus is connected to the occipital and parietal cortices, it has been implicated in visuospatial processing (Leichnetz 2001), spatial navigation (Boccia et al. 2014), and the retrieval of remembered episodes (Bergström et al. 2013). In this study, the participants learned to associate 15 “Bat-ears” sounds with specific distance × azimuth locations during sound localization training. The “Bat-ears” sounds used for sound localization during the functional scans were recorded at neighboring locations and had therefore not been presented to the participants before. Successful localization of these sounds would require the participants to decode the embedded spatial information and to generalize the learned sound-to-location relationships to the unlearned sounds. The increased activation of the posterior precuneus in the post-training scan suggests that the LB participants employed active visuospatial navigation processes in sound localization learning. Previous studies of LB individuals focused on neural substrates in the occipital rather than the parietal cortex (Collignon et al. 2013; Voss et al. 2006) and therefore did not assess the precuneus. Our findings concur with those of Voss et al. (2008), who found increased activation of the precuneus in comparisons between LB and normal-vision groups.

One interesting finding of this study is that sound localization learning in the LB group was augmented by higher visuospatial working-memory ability, which was the case for the LB-HVM but not the LB-LVM group. This was further supported by the training-induced enhancement of connectivity between the precuneus and the lingual gyrus in the LB-HVM but not the LB-LVM group. Such connectivity is likely to have been enhanced by prior visual experience and hence by the development of visuospatial working memory in the LB-HVM participants. The presence of a precuneus-lingual gyrus network mediating sound localization after training in the LB-HVM group, but not in the LB-LVM group, is a new finding. It is consistent with and extends previous findings that the lingual gyrus is associated with the processing of spatial information embedded in sounds in EB (Collignon et al. 2011; Gougoux et al. 2005) and LB individuals (Voss et al. 2006). The precuneus and lingual gyrus were found to be neural correlates of landmark and path encoding during real-world route learning in people with normal vision (Schinazi and Epstein 2010), which corroborates the involvement of the precuneus-lingual gyrus network in navigation reported in a recent meta-analysis (Boccia et al. 2014). The identification of the precuneus-lingual gyrus network supports the notion that sound localization learning in the LB-HVM participants might involve integrating encoded prior visual experience with the spatial information embedded in the sounds. This integration is likely to be mediated by visuospatial working memory, which might have developed among the LB-HVM participants during the years in which they had intact vision. Visuospatial working memory appeared to augment sound localization learning in those with higher visuospatial working-memory ability (i.e., LB-HVM individuals), who also showed significantly greater improvement on the sound localization task.
Nevertheless, our results did not reveal significant relationships between the years of visual experience and performance on the matrix tests (measures of visuospatial working memory) in the LB group, which is consistent with the results reported by Cattaneo et al. (2007). Vision has previously been found to influence the development of spatial working memory and spatial imagery (Afonso et al. 2010; Cattaneo et al. 2008; Iachini and Ruggiero 2010). It is plausible that the LB participants gained very different types of visual exposure from their environments, such as at home, at school, and in the community. After the onset of blindness, these differences in prior visual experience may have led the LB participants to use different compensatory strategies and to engage in different modes of cross-modal learning. Without a significant relationship with the years of visual experience, our results can support only the notion that, among LB participants with higher visuospatial working-memory ability, prior visual experience appears to enhance the activation of the precuneus-lingual gyrus network in sound localization learning. By contrast, the effects of prior visual experience did not seem to be significant for the LB participants who had lower visuospatial working-memory ability. The extent to which visual experience and environmental exposure contribute to lower visuospatial working-memory ability among LB individuals calls for future studies. Future studies should also include a group of normal-vision individuals trained on sound localization to a level of task performance comparable to that of LB individuals. Comparisons between LB and normal-vision individuals would further shed light on the relationships among visual experience, visuospatial working-memory ability, and the precuneus-lingual gyrus network.

The precuneus-lingual gyrus network observed in the LB-HVM individuals differs from the lingual gyrus network reported in EB individuals by Collignon et al. (2011), who found enhanced connectivity between the lingual gyrus and the IPL in congenitally blind participants during sound localization processing. Because this study lacked a normal-vision control group, it cannot provide further evidence that the visuospatial working memory of the LB-HVM participants was gained from their prior visual experience. As a result, the notion that prior visual experience modulates sound localization learning in LB individuals remains speculative and needs to be tested in future research.

Studies of EB individuals have received much more attention than those of LB individuals. However, these studies have often compared EB participants with normal-vision participants. The current study revealed decreased activation in the precuneus after the EB participants received sound localization training, which points to an important role for the precuneus in differentiating the processes underlying sound localization learning in EB and LB individuals. First, the results obtained from the EB cohort were opposite to those obtained from the LB cohort, who exhibited increased activity in the precuneus. Second, the left precuneus in the EB group showed enhanced connectivity with an extended network, including the limbic system (the hippocampus and the cingulate gyrus) and multisensory regions (MTG, STG, IPL). This network differed from that of the LB-HVM group, whose enhanced connectivity was specific to the lingual gyrus. The decreased involvement of the precuneus in the EB cohort is not consistent with the activity reported in other studies, most likely because those studies focused on cross-modal spatial processing rather than on cross-modal learning. For instance, EB and normal-vision participants showed similar involvement of the precuneus during spatial processing (Collignon et al. 2013) and tactile-spatial navigation (Gagnon et al. 2012). Similarly, the precuneus has been reported to be involved in monaural (Voss et al. 2008) and binaural sound localization among EB participants (Gougoux et al. 2005; Tao et al. 2015). In fact, in our study, the level of precuneus activation during the sound localization task was comparable in the LB and EB participants before sound localization training; the different patterns of change in precuneus activation emerged only after the training.
The decreased involvement of the precuneus and its connections with the limbic-multisensory network suggest that the EB participants tended to rely on memory, particularly on retrieval processes, in sound localization learning. Previous studies have indicated that the medial and lateral parietal cortices as well as the posterior cingulate cortex are activated in association with memory retrieval (Wartman and Holahan 2013; Weible 2013). This agrees with the decreased activity observed in the current study in the precuneus, cingulate gyrus, ITG, MTG, and middle occipital gyrus (MOG). The widely distributed precuneus network largely overlaps with the cued memory retrieval network (Burianová et al. 2012). Other studies have indicated that the DPC, which contains the precuneus, is associated with the allocation of attention to strategic memory search (Cabeza et al. 2008; Ciaramelli et al. 2008). The left hippocampus has been shown to play a role in memory retrieval (Cabeza et al. 2004), particularly the retrieval of spatial information (DeMaster et al. 2013; Suthana et al. 2011). This is consistent with the finding that the learning of “Bat-ears” sounds by EB participants primarily involves hippocampus-mediated binding of sound and distance (Chan et al. 2013). Similarly, the concurrent activation of the posterior precuneus and posterior cingulate gyrus has been associated with retrieval effects (Elman et al. 2013). The MTG has been reported to mediate the retrieval of semantic (Martin and Chao 2001) and multimodal representations (Visser et al. 2012). Together, these data suggest that the major neural process associated with sound localization learning in the EB participants might be the top-down retrieval of the sound-to-distance relationships learned during training (Murray and Ranganath 2007). The localization of new sounds would therefore rely on associating incoming sounds with the sound-location pairs retrieved from memory and then approximating the location of each new sound.
This process could be quite different from the one the LB participants appear to have used, namely active visuospatial navigation enhanced by visuospatial working memory.

The precuneus is regarded as the neural substrate of several complex cognitive processes (Margulies et al. 2009), including visuospatial imagery and memory retrieval (Cavanna and Trimble 2006). Recent reports have revealed two distinct networks related to the precuneus: the right parieto-frontal network and the default-mode network (Utevsky et al. 2014). Our findings suggest that the precuneus plays different roles in EB and LB individuals during sound localization training, and its ability to form networks with different neural substrates may explain these different roles. Our findings also support the notion that the precuneus plays a central role in highly integrated tasks (Cavanna and Trimble 2006). Precuneus involvement has been found in blindfolded subjects with normal vision, as well as in blind individuals, during sound-to-distance (Chan et al. 2013), tactile-form (Ptito et al. 2012), and spatial navigation learning tasks (Kupers et al. 2010).

This study has several limitations. First, the findings were obtained with a high-resolution sound localization task designed to tap participants’ sound localization ability. As a result, they may not be directly comparable with those obtained with low-resolution sound localization tasks, such as the sound differentiation task (Collignon et al. 2013; Voss et al. 2006). Second, this study did not include a normal-vision control group. Normal-vision individuals were found to fail to achieve above-chance performance despite the 7-day training. This prevented us from verifying whether visuospatial working memory modulates sound localization learning in both LB individuals and those with normal vision. Future studies should include a normal-vision control group to test the robustness of this phenomenon. Third, this study subdivided the LB participants according to their visuospatial working-memory ability, which resulted in small samples for the two subgroups and compromised the statistical power of the analyses of both the brain activation and the behavioral data. Future studies should replicate these experiments with a larger LB sample.

The current findings shed light on how prior visual experience may modulate sound localization learning in individuals with early- and late-onset blindness. The precuneus appears to play important but different roles in learning to locate high-resolution sounds in LB and EB individuals. The learning processes of the LB participants are likely to be modulated by their visuospatial working memory, which would have developed before the onset of blindness. These learning processes were associated with an enhanced precuneus-lingual gyrus network, suggesting a transformation of the auditory information embedded in the stimuli into multisensory spatial representations. In contrast, the EB participants, who had poorer visuospatial working memory, appeared to learn to locate the sounds by encoding and associating the auditory and spatial information embedded in the stimuli without such a transformation. This binding process was reflected in the memory and precuneus-limbic-multisensory networks revealed in this study. The implication of these findings is that the differences in sound localization learning between LB and EB individuals are likely attributable to the poorer development of visuospatial working memory among the latter. Future research should explore ways to improve visuospatial working memory among EB and LB individuals, with the goal of enabling individuals with blindness to make better use of auditory information for spatial decisions, such as navigation with or without assistive devices.

References

Afonso A, Blum A, Katz BF, Tarroux P, Borst G, Denis M (2010) Structural properties of spatial representations in blind people: Scanning images constructed from haptic exploration or from locomotion in a 3-D audio virtual environment. Mem Cognit 38:591–604. doi:10.3758/MC.38.5.591

Azañón E, Longo MR, Soto-Faraco S, Haggard P (2010) The posterior parietal cortex remaps touch into external space. Curr Biol 20(14):1304–1309. doi:10.1016/j.cub.2010.05.063

Bedford FL (1993) Perceptual and cognitive spatial learning. J Exp Psychol Hum Percept Perform 19(3):517–530. doi:10.1037/0096-1523.19.3.517

Bedford FL (1995) Constraints on perceptual learning: objects and dimensions. Cognition 54(3):253–297. doi:10.1016/0010-0277(94)00637-Z

Bergström ZM, Henson RN, Taylor JR, Simons JS (2013) Multimodal imaging reveals the spatiotemporal dynamics of recollection. NeuroImage 68:141–153. doi:10.1016/j.neuroimage.2012.11.030

Boccia M, Nemmi F, Guariglia C (2014) Neuropsychology of environmental navigation in humans: review and meta-analysis of fMRI studies in healthy participants. Neuropsychol Rev 24(2):236–251. doi:10.1007/s11065-014-9247-8

Bolognini N, Miniussi C, Savazzi S, Bricolo E, Maravita A (2009) TMS modulation of visual and auditory processing in the posterior parietal cortex. Exp Brain Res 195(4):509–517. doi:10.1007/s00221-009-1820-7

Bonino D, Ricciardi E, Sani L et al (2008) Tactile spatial working memory activates the dorsal extrastriate cortical pathway in congenitally blind individuals. Arch Ital Biol 146:133–146

Bremmer F (2011) Multisensory space: from eye-movements to self-motion. J Physiol 589:815–823. doi:10.1113/jphysiol.2010.195537

Buckner RL, Raichle ME, Miezin FM, Petersen SE (1996) Functional anatomic studies of memory retrieval for auditory words and visual pictures. J Neurosci 16:6219–6235

Burianová H, Ciaramelli E, Grady CL, Moscovitch M (2012) Top-down and bottom-up attention-to-memory: Mapping functional connectivity in two distinct networks that underlie cued and uncued recognition memory. NeuroImage 63:1343–1352. doi:10.1016/j.neuroimage.2012.07.057

Burkhard MD, Sachs RM (1975) Anthropometric manikin for acoustic research. J Acoust Soc Am 58(1):214–222. doi:10.1121/1.380648

Cabeza R, Prince SE, Daselaar SM et al (2004) Brain activity during episodic retrieval of autobiographical and laboratory events: an fMRI study using a novel photo paradigm. J Cogn Neurosci 16:1583–1594

Cabeza R, Ciaramelli E, Olson IR, Moscovitch M (2008) The parietal cortex and episodic memory: an attentional account. Nat Rev Neurosci 9:613–625. doi:10.1038/nrn2459

Calvert GA (2001) Crossmodal processing in the human brain: Insights from functional neuroimaging studies. Cereb Cortex 11(12):1110–1123. doi:10.1093/cercor/11.12.1110

Cattaneo Z, Vecchi T, Monegato M, Pece A, Cornoldi C (2007) Effects of late visual impairment on mental representations activated by visual and tactile stimuli. Brain Res 1148:170–176. doi:10.1016/j.brainres.2007.02.033

Cattaneo Z, Vecchi T, Cornoldi C, Mammarella I, Bonino D, Ricciardi E, Pietrini P (2008) Imagery and spatial processes in blindness and visual impairment. Neurosci Biobehav Rev 32(8):1346–1360. doi:10.1016/j.neubiorev.2008.05.002

Cavanna AE, Trimble MR (2006) The precuneus: a review of its functional anatomy and behavioral correlates. Brain 129:564–583. doi:10.1093/brain/awl004

Chan CH, Wong AWK, Ting KH, Whitfield-Gabrieli S, He JF, Lee TM (2013) Cross auditory-spatial learning in early-blind individuals. Hum Brain Mapp 33(11):2714–2727. doi:10.1002/hbm.21395

Chebat DR, Chen JK, Schneider F, Ptito A, Kupers R, Ptito M (2007) Alterations in right posterior hippocampus in early blind individuals. Neuroreport 18(4):329–333. doi:10.1097/WNR.0b013e32802b70f8

Chebat DR, Schneider FC, Kupers R, Ptito M (2011) Navigation with a sensory substitution device in congenitally blind individuals. Neuroreport 22(7):342–347. doi:10.1097/WNR.0b013e3283462def

Ciaramelli E, Grady CL, Moscovitch M (2008) Top-down and bottom-up attention to memory: a hypothesis (AtoM) on the role of the posterior parietal cortex in memory retrieval. Neuropsychologia 46(7):1828–1851. doi:10.1016/j.neuropsychologia.2008.03.022

Collignon O, Lassonde M, Lepore F, Bastien D, Veraart C (2007) Functional cerebral reorganization for auditory spatial processing and auditory substitution of vision in early blind subjects. Cereb Cortex 17(2):457–465. doi:10.1093/cercor/bhj162

Collignon O, Vandewalle G, Voss P, Albouy G, Charbonneau G, Lassonde M, Lepore F (2011) Functional specialization for auditory-spatial processing in the occipital cortex of congenitally blind humans. Proc Natl Acad Sci USA 108(11):4435–4440. doi:10.1073/pnas.1013928108

Collignon O, Dormal G, Albouy G, Vandewalle G, Voss P, Phillips C, Lepore F (2013) Impact of blindness onset on the functional organization and the connectivity of the occipital cortex. Brain 136(9):2769–2783. doi:10.1093/brain/awt176

Cornoldi C, Cortesi A, Preti D (1991) Individual differences in the capacity limitations of visuospatial short-term memory: Research on sighted and totally congenitally blind people. Mem Cognit 19(5):459–468. doi:10.3758/BF03199569

Cuturi LF, Aggius-Vella E, Campus C, Parmiggiani A, Gori M (2016) From science to technology: Orientation and mobility in blind children and adults. Neurosci Biobehav Rev 71:240–251. doi:10.1016/j.neubiorev.2016.08.019

DeMaster D, Pathman T, Ghetti S (2013) Development of memory for spatial context: Hippocampal and cortical contributions. Neuropsychologia 51(12):2415–2426. doi:10.1016/j.neuropsychologia.2013.05.026

Dörfel D, Werner A, Schaefer M, Von Kummer R, Karl A (2009) Distinct brain networks in recognition memory share a defined region in the precuneus. Eur J Neurosci 30(10):1947–1959. doi:10.1111/j.1460-9568.2009.06973.x

Elman JA, Cohn-Sheehy BI, Shimamura AP (2013) Dissociable parietal regions facilitate successful retrieval of recently learned and personally familiar information. Neuropsychologia 51(4):573–583. doi:10.1016/j.neuropsychologia.2012.12.013

Friston KJ, Jezzard PJ, Turner R (1994) Analysis of functional MRI time-series. Hum Brain Mapp 1:153–171. doi:10.1002/hbm.460010207

Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ (1997) Psychophysiological and modulatory interactions in neuroimaging. NeuroImage 6:218–229. doi:10.1006/nimg.1997.0291

Fuster JM, Bodner M, Kroger JK (2000) Cross-modal and cross-temporal association in neurons of frontal cortex. Nature 405:347–351. doi:10.1038/35012613

Gagnon L, Schneider FC, Siebner HR, Paulson OB, Kupers R, Ptito M (2012) Activation of the hippocampal complex during tactile maze solving in congenitally blind subjects. Neuropsychologia 50(7):1663–1671. doi:10.1016/j.neuropsychologia.2012.03.022

Gong YX (1982) Manual of modified wechsler adult intelligence scale (WAIS-RC) (in Chinese). Hunan Med College, Changsha, China

Gori M, Sandini G, Martinoli C, Burr DC (2014) Impairment of auditory spatial localization in congenitally blind human subjects. Brain 137(Pt 1):288–293. doi:10.1093/brain/awt311

Gori M, Cappagli G, Tonelli A, Baud-Bovy G, Finocchietti S (2016) Devices for visually impaired people: High technological devices with low user acceptance and no adaptability for children. Neurosci Biobehav Rev 69:79–88. doi:10.1016/j.neubiorev.2016.06.043

Gougoux F, Zatorre RJ, Lassonde M, Voss P, Lepore F (2005) A functional neuroimaging study of sound localization: visual cortex activity predicts performance in early-blind individuals. PLoS Biol 3(2):e27. doi:10.1371/journal.pbio.0030027

Halko MA, Connors EC, Sánchez J, Merabet LB (2014) Real world navigation independence in the early blind correlates with differential brain activity associated with virtual navigation. Hum Brain Mapp 35:2768–2778. doi:10.1002/hbm.22365

Iachini T, Ruggiero G (2010) The role of visual experience in mental scanning of actual pathways: Evidence from blind and sighted people. Perception 39:953–969. doi:10.1068/p6457

Kim JK, Zatorre RJ (2011) Tactile-auditory shape learning engages the lateral occipital complex. J Neurosci 31(21):7848–7856. doi:10.1523/JNEUROSCI.3399-10.2011

Kupers R, Chebat DR, Madsen KH, Paulson OB, Ptito M (2010) Neural correlates of virtual route recognition in congenital blindness. Proc Natl Acad Sci USA 107(28):12716–12721. doi:10.1073/pnas.1006199107

Leichnetz GR (2001) Connections of the medial posterior parietal cortex (area 7m) in the monkey. Anat Rec 263:215–236

Margulies DS, Vincent JL, Kelly C et al (2009) Precuneus shares intrinsic functional architecture in humans and monkeys. Proc Natl Acad Sci USA 106(47):20069–20074. doi:10.1073/pnas.0905314106

Martin A, Chao LL (2001) Semantic memory and the brain: structure and processes. Curr Opin Neurobiol 11:194–201. doi:10.1016/S0959-4388(00)00196-3

Mazaika PK, Whitfield S, Cooper JC (2005) Detection and repair of transient artifacts in fMRI data. NeuroImage 26:S36

Murray LJ, Ranganath C (2007) The dorsolateral prefrontal cortex contributes to successful relational memory encoding. J Neurosci 27:5515–5522. doi:10.1523/JNEUROSCI.0406-07.2007

Nardo D, Santangelo V, Macaluso E (2014) Spatial orienting in complex audiovisual environments. Hum Brain Mapp 35:1597–1614. doi:10.1002/hbm.22276

Nichols T, Brett M, Andersson J, Wager T, Poline JB (2005) Valid conjunction inference with the minimum statistic. NeuroImage 25(3):653–660. doi:10.1016/j.neuroimage.2004.12.005

Pasqualotto A, Proulx MJ (2010) The role of visual experience for the neural basis of spatial cognition. Neurosci Biobehav Rev 36:1179–1187. doi:10.1016/j.neubiorev.2012.01.008

Poldrack RA (2000) Imaging brain plasticity: conceptual and methodological issues–a theoretical review. NeuroImage 12(1):1–13. doi:10.1006/nimg.2000.0596

Poldrack RA, Gabrieli JDE (2001) Characterizing the neural basis of skill learning and repetition priming: Evidence from mirror-reading. Brain 124:67–82. doi:10.1093/brain/124.1.67

Ptito M, Kupers R (2005) Cross-modal plasticity in early blindness. J Integr Neurosci 4(4):479–488

Ptito M, Matteau I, Wang AZ, Paulson OB, Siebner HR, Kupers R (2012) Crossmodal recruitment of the ventral visual stream in congenital blindness. Neural Plast 2012:304045. doi:10.1155/2012/304045

Renier LA, Anurova I, De Volder AG, Carlson S, VanMeter J, Rauschecker JP (2010) Preserved functional specialization for spatial processing in the middle occipital gyrus of the early blind. Neuron 68(1):138–148. doi:10.1016/j.neuron.2010.09.021

Schinazi VR, Epstein RA (2010) Neural correlates of real-world route learning. NeuroImage 53(2):725–735. doi:10.1016/j.neuroimage.2010.06.065

Schinazi VR, Thrash T, Chebat DR (2016) Spatial navigation by congenitally blind individuals. Wiley Interdiscip Rev Cogn Sci 7(1):37–58. doi:10.1002/wcs.1375

Spence C (2011) Crossmodal correspondences: a tutorial review. Atten Percept Psychophys 73(4):971–995. doi:10.3758/s13414-010-0073-7

Striem-Amit E, Dakwar O, Reich L, Amedi A (2011) The large-scale organization of “visual” streams emerges without visual experience. Cereb Cortex 22(7):1698–1709. doi:10.1093/cercor/bhr253

Suthana N, Ekstrom A, Moshirvaziri S, Knowlton B, Bookheimer S (2011) Dissociations within human hippocampal subregions during encoding and retrieval of spatial information. Hippocampus 21(7):694–701. doi:10.1002/hipo.20833

Tanabe HC, Honda M, Sadato N (2005) Functionally segregated neural substrates for arbitrary audiovisual paired-association learning. J Neurosci 25(27):6409–6418. doi:10.1523/JNEUROSCI.0636-05.2005

Tao Q, Chan CH, Luo YJ, Li JJ, Ting KH, Wang J, Lee TMC (2015) How does experience modulate auditory spatial processing in individuals with blindness? Brain Topogr 28(3):506–519. doi:10.1007/s10548-013-0339-1

Ungerleider LG, Mishkin M (1982) Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW (eds) Analysis of visual behavior. The MIT Press, Cambridge, pp 549–586

Utevsky AV, Smith DV, Huettel SA (2014) Precuneus is a functional core of the default-mode network. J Neurosci 34(3):932–940. doi:10.1523/JNEUROSCI.4227-13.2014

Visser M, Jefferies E, Embleton KV, Ralph MAL (2012) Both the middle temporal gyrus and the ventral anterior temporal area are crucial for multimodal semantic processing: distortion-corrected fMRI evidence for a double gradient of information convergence in the temporal lobes. J Cogn Neurosci 24(8):1766–1778. doi:10.1162/jocn_a_00244

Voss P, Lassonde M, Gougoux F, Fortin M, Guillemot JP, Lepore F (2004) Early- and late-onset blind individuals show supra-normal auditory abilities in far-space. Curr Biol 14:1734–1738. doi:10.1016/j.cub.2004.09.051

Voss P, Gougoux F, Lassonde M, Zatorre RJ, Lepore F (2006) A positron emission tomography study during auditory localization by late-onset blind individuals. NeuroReport 17(4):383–388. doi:10.1097/01.wnr.0000204983.21748.2d

Voss P, Gougoux F, Zatorre RJ, Lassonde M, Lepore F (2008) Differential occipital responses in early- and late-blind individuals during a sound-source discrimination task. NeuroImage 40(2):746–758. doi:10.1016/j.neuroimage.2007.12.020

Voss P, Lepore F, Gougoux F, Zatorre RJ (2011) Relevance of spectral cues for auditory spatial processing in the occipital cortex of the blind. Front Psychol 2:48. doi:10.3389/fpsyg.2011.00048

Wartman BC, Holahan MR (2013) The use of sequential hippocampal-dependent and -non-dependent tasks to study the activation profile of the anterior cingulate cortex during recent and remote memory tests. Neurobiol Learn Mem 106:334–342. doi:10.1016/j.nlm.2013.08.011

Watson R, Latinus M, Charest I, Crabbe F, Belin P (2014) People-selectivity, audiovisual integration and heteromodality in the superior temporal sulcus. Cortex 50:125–136. doi:10.1016/j.cortex.2013.07.011

Wechsler D (1955) Manual for the Wechsler adult intelligence scale. The Psychological Corporation, Oxford

Weible AP (2013) Remembering to attend: The anterior cingulate cortex and remote memory. Behav Brain Res 245:63–75. doi:10.1016/j.bbr.2013.02.010

Yamashita M, Kawato M, Imamizu H (2015) Predicting learning plateau of working memory from whole-brain intrinsic network connectivity patterns. Sci Rep 5:7622. doi:10.1038/srep07622

Acknowledgements

The authors thank the associations of people with blindness and all participants in this study. This study was supported by the Collaborative Research Fund awarded by the Research Grants Council of Hong Kong to CCH Chan and TMC Lee (PolyU9/CRF/09) and by a grant awarded by the National Natural Science Foundation of China to Q Tao (81601969). This study was partially supported by an internal research grant from the Department of Rehabilitation Sciences, The Hong Kong Polytechnic University, to CCH Chan.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Tao, Q., Chan, C.C.H., Luo, Yj. et al. Prior Visual Experience Modulates Learning of Sound Localization Among Blind Individuals. Brain Topogr 30, 364–379 (2017). https://doi.org/10.1007/s10548-017-0549-z

DOI: https://doi.org/10.1007/s10548-017-0549-z