Abstract

In this work, the Parareal algorithm is applied to evolution problems that admit good low-rank approximations and for which the dynamical low-rank approximation (DLRA) can be used as a time stepper. Many discrete integrators for DLRA have recently been proposed, based on splitting the projected vector field or on applying projected Runge–Kutta methods. The cost and accuracy of these methods are mostly governed by the rank chosen for the approximation. These properties are used in a new method, called low-rank Parareal, in order to obtain a time-parallel DLRA solver for evolution problems. The algorithm is analyzed on affine linear problems and the results are illustrated numerically.



1 Introduction

This work is concerned with the parallel-in-time integration of evolution problems for which the solution can be well approximated by a time-dependent low-rank matrix. In particular, we aim to solve approximately the evolution problem

\[ \dot{X}(t) = F(X(t)), \qquad X(0) = X_0, \qquad t \in [0,T], \tag{1} \]

where X(t) is a matrix of size \(m \times m\). When the dimension m is large, the numerical solution of (1) can be very expensive since the matrix X(t) is usually dense. One way to alleviate this curse of dimensionality is to use low-rank approximations where, for every t, we approximate X(t) by \(Y(t) \in {\mathbb {R}}^{m \times m}\) such that \({{\,\mathrm{rank}\,}}(Y(t)) = r \ll m\). The accuracy of this approximation will depend on the choice of the rank r. Here, X(t) is assumed to be square for notational convenience and all results can be easily formulated for rectangular X(t).

A popular paradigm to solve directly for the low-rank approximation Y(t) is the dynamical low-rank approximation (DLRA), first proposed in [25]. As defined later in Def. 2, DLRA leads to an evolution problem that is a projected version of (1). In the last decade, many discrete integrators for this projected problem have been proposed. One class of integrators consists of a clever splitting of the projector so that the resulting splitting method can be implemented efficiently. An influential example is the projector-splitting scheme proposed in [29]. Other methods that require integrating parts of the vector field can be found in [4, 22]. Another approach, proposed in [9, 24, 35], is based on projecting standard Runge–Kutta methods (sometimes including their intermediate stages). Most of these methods are formulated for constant rank r. Rank adaptivity can be incorporated without much difficulty for splitting and for projected schemes; see [3, 6, 9, 35]. Finally, given the importance of DLRA in problems from physics (like the Schrödinger and Vlasov equations), the integrators in [7, 29] also preserve certain invariants, like energy. However, none of these time integrators is formulated as a parallel-in-time scheme for DLRA, which would be particularly interesting in the large-scale setting.

Parallel computing can be very effective and is even necessary to solve large-scale problems. While parallelization in space is well known, the time direction can also be parallelized to some extent when solving evolution problems. Over the last decades, various parallel-in-time algorithms have been proposed; see, e.g., the overviews [12, 32]. Among these, the Parareal algorithm from [28] is one of the more popular algorithms for time parallelization. It is based on a Newton-like iteration, with inaccurate but cheap corrections performed sequentially, and accurate but expensive solves performed in parallel. This idea of solving in parallel an evolution problem as a nonlinear (discretized) system also appears in related methods like PFASST [8], MGRIT [11] and Space-Time Multi-Grid [17]. Theoretical results and numerical studies on large numbers of cores show that these parallel-in-time methods can have good parallel performance for parabolic problems; see, e.g., [16, 21, 37]. So far, these methods have not incorporated a low-rank compression of the space dimension, which is the main topic of this work.

2 Preliminaries and contributions

2.1 The Parareal algorithm

The Parareal iteration in Def. 1 below is given for constant time step h (hence \(T=Nh\)) and for autonomous F. Neither restriction is crucial, but both ease the presentation. The quantity \(X_n^k\) is an approximation for \(X(t_n)\) at time \(t_n = nh\) and iteration k. The accuracy of this approximation is expected to improve with increasing k. Here, and throughout the paper, we denote dependency on the iteration index k as \({\ }^k\), which should not be confused with the kth power.

Definition 1

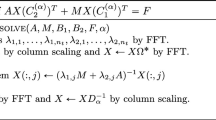

(Parareal) The Parareal algorithm is defined by the following double iteration on k and n,

Here, \({\mathscr {F}}^h(X)\) represents a fine (accurate) time stepper applied to the initial value X and propagated until time h. Similarly, \({\mathscr {G}}^h(X)\) represents a coarse (inaccurate) time stepper.

Given two time steppers, the Parareal algorithm is easy to implement. A remarkable property of Parareal is that it converges in a finite number of iterations: \(X_n^k\) coincides with the fine solution \(({\mathscr {F}}^h)^n(X_0)\) for all \(k \ge n\). It is well known that Parareal works well on parabolic problems but behaves worse on hyperbolic problems; see [18] for an analysis.
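To make the double iteration of Def. 1 concrete, here is a minimal Python sketch for a generic state (a scalar or a NumPy array). The function name `parareal` and the test problem are our own illustrative choices, not part of the method's description: the fine solver is the exact flow of \(x' = \lambda x\) over one step, and the coarse solver is one backward Euler step. The usage lines also exhibit the finite-step property: after k iterations, the first k+1 values coincide with the fine solution up to rounding.

```python
import numpy as np


def parareal(fine, coarse, x0, N, K):
    """Parareal iteration of Def. 1 on N time steps and K iterations.

    fine(x), coarse(x) propagate a state x over one time step h.
    Returns X with X[k][n] the approximation of x(t_n) at iteration k.
    """
    X = [[x0]]
    for n in range(N):                        # k = 0: sequential coarse sweep
        X[0].append(coarse(X[0][n]))
    for k in range(K):
        prev = X[k]
        # The N fine solves only use iteration k: they could run in parallel.
        F_prev = [fine(prev[n]) for n in range(N)]
        G_prev = [coarse(prev[n]) for n in range(N)]
        new = [x0]
        for n in range(N):                    # cheap sequential correction
            new.append(F_prev[n] + coarse(new[n]) - G_prev[n])
        X.append(new)
    return X


# Usage on x' = lam * x with exact fine flow and backward Euler coarse solver.
lam, h, N = -1.0, 0.1, 10


def fine(x):
    return np.exp(lam * h) * x


def coarse(x):
    return x / (1.0 - lam * h)


X = parareal(fine, coarse, 1.0, N, K=N)
```

Only the list comprehension over the fine solver is parallelizable; the correction loop is inherently sequential, which is why the coarse solver must be cheap.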

2.2 Dynamical low-rank approximation

Let \({\mathscr {M}}_r\) denote the set of \(m \times m\) matrices of rank r, which is a smooth embedded submanifold in \({\mathbb {R}}^{m \times m}\). Instead of solving (1), the DLRA solves the following projected problem:

Definition 2

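(Dynamical low-rank approximation) For a rank r, the dynamical low-rank approximation of problem (1) is the solution of

\[ \dot{Y}(t) = {\mathscr {P}}_{Y(t)} F(Y(t)), \qquad Y(0) = Y_0 \in {\mathscr {M}}_r, \qquad t \in [0,T], \tag{5} \]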

where \({\mathscr {P}}_{Y}\) is the \(l_2\)-orthogonal projection onto the tangent space \({\mathscr {T}}_{Y} {\mathscr {M}}_r\) of \({\mathscr {M}}_r\) at \(Y \in {\mathscr {M}}_r\). In particular, \(Y(t) \in {\mathscr {M}}_r\) for every \(t \in [0,T]\).

To analyze the approximation error of DLRA, we need the following standard assumptions from [23]. Here, and throughout the paper, \(\Vert \cdot \Vert \) denotes the Frobenius norm.

Assumption 1

(DLRA assumptions) The function F satisfies the following properties for all \(X,Y \in {\mathbb {R}}^{m \times m}\):

- Lipschitz with constant L: \(\left\Vert F(X)-F(Y)\right\Vert \le L \left\Vert X-Y\right\Vert \).
- One-sided Lipschitz with constant \(\ell \): \(\langle X-Y,F(X)-F(Y) \rangle \le \ell \left\Vert X-Y\right\Vert ^2\).
- Maps almost to the tangent bundle of \({\mathscr {M}}_r\): \(\left\Vert F(Y)-{\mathscr {P}}_Y F(Y)\right\Vert \le \varepsilon _r\) for all \(Y \in {\mathscr {M}}_r\).

In the analysis of Section 3, it is necessary to have \(\ell < 0\) for convergence. This holds when F is a discretization of certain parabolic PDEs, like the heat equation. In particular, for an affine function of the form \(F(X)=A(X)+B\), one has \(\ell = \tfrac{1}{2}\lambda _{\max }(A+A^T)\), where \(A^T\) denotes the adjoint of A; see [20, Ch. I.10]. The quantity \(\varepsilon _r\) is called the modeling error and decreases when the rank r increases. For our problems of interest, this quantity is typically very small. Finally, the Lipschitz constant L is only needed to guarantee the uniqueness of the solution of (1); it does not appear in our analysis. We can therefore allow L to be large, as is the case for discretized parabolic PDEs.
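As a small numerical illustration of the formula for \(\ell \), the sketch below represents a hypothetical linear operator A on \({\mathbb {R}}^{m \times m}\) by an \(m^2 \times m^2\) matrix M acting on the row-major vectorization of X (a representation chosen only for this example), computes \(\ell \) as the largest eigenvalue of the symmetric part of M, and checks the one-sided Lipschitz inequality on random pairs. The constant term B cancels in the difference \(F(X)-F(Y)\) and therefore does not affect \(\ell \).

```python
import numpy as np

rng = np.random.default_rng(0)
m = 4
# Hypothetical linear operator A on R^{m x m}, stored as an (m^2 x m^2) matrix M
# acting on the row-major vectorization X.reshape(-1); shifted so that ell < 0.
M = rng.standard_normal((m * m, m * m)) / m - 3.0 * np.eye(m * m)
B = rng.standard_normal((m, m))


def F(X):
    """Affine vector field F(X) = A(X) + B."""
    return (M @ X.reshape(-1)).reshape(m, m) + B


# One-sided Lipschitz constant: largest eigenvalue of the symmetric part of A.
ell = 0.5 * np.linalg.eigvalsh(M + M.T).max()


def one_sided_quotient(X, Y):
    """<X - Y, F(X) - F(Y)> / ||X - Y||^2 in the Frobenius inner product."""
    D = X - Y
    return np.sum(D * (F(X) - F(Y))) / np.sum(D * D)
```

The quotient is a Rayleigh quotient of the symmetric part of M, so it never exceeds \(\ell \), and the bound is attained for \(X - Y\) equal to a top eigenvector.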

Standard theory for perturbations of ODEs allows us to obtain the following error bound from the assumptions above:

Theorem 1

(Error of DLRA [23]) Under Assumption 1, the DLRA verifies

where \(\phi ^h\) is the flow of the original problem (1) and \(\psi ^h_r\) is the flow of its DLRA (5) for rank r.

The solution of DLRA (5) is quasi-optimal with respect to the best rank-r approximation. This can already be seen from Theorem 1 for short time intervals. Similar estimates exist for parabolic problems [5] and over longer time intervals when there is a sufficiently large gap in the singular values and their derivatives are bounded [25].

2.3 Contributions

In this paper, we propose a new algorithm, called low-rank Parareal. As far as we know, this is the first parallel-in-time integrator for low-rank approximations. We analyze the proposed algorithm when the function F in (1) is affine. To this end, we extend the analysis of the classical Parareal algorithm in [14] to a more general setup where the coarse problem is different from the fine problem. We prove that the method converges for big steps (large h) on diffusive problems (\(\ell <0\)), and we confirm this behavior in numerical experiments. In addition, the method performs well empirically under a less strict condition on h and on a non-affine problem.

3 Low-rank Parareal

We now present our low-rank Parareal algorithm for solving (1). Since the cost of most discrete integrators for DLRA scales quadratically with the approximation rank, we take the coarse time stepper as DLRA with a small rank q. Likewise, the fine time stepper is DLRA with a large rank r. We can even take \(r=m\), which corresponds to computing the exact solution as the fine time stepper since \(Y \in {\mathbb {R}}^{m \times m}\).

Definition 3

(Low-rank Parareal) Consider two ranks \(q < r\). The low-rank Parareal algorithm iterates

where \(\psi ^h_r(Z)\) is the solution of (5) at time h with initial value \(Y_0 = Z\), and \({\mathscr {T}}_r\) is the orthogonal projection onto \({\mathscr {M}}_r\), that is, the best rank-r approximation computed by the truncated SVD. The notations \(\psi ^h_q\) and \({\mathscr {T}}_q\) are similar but apply to rank q. The matrices \({\mathscr {E}}_n\) are small perturbations such that \({{\,\mathrm{rank}\,}}(Y_{n+1}^0) = r + 2q\) and can be chosen randomly.

Observe that the rank of \(Y_n^k\) is at most \(r+2q\) for all n, k, so the low-rank structure is preserved over the iterations. The matrices \({\mathscr {E}}_n\) ensure that each iterate has a rank between r and \(r+2q\). They affect only the initial error and, as the analysis below shows, play no role in the convergence of the algorithm. An efficient implementation should store the low-rank matrices in factored form; the truncated SVD can then be computed efficiently from the factors, without forming any \(m \times m\) matrix. The exact DLRA flows \(\psi ^h_r\) and \(\psi ^h_q\) can only be computed for relatively small problems. For larger problems, a suitable DLRA integrator must be used; see Sect. 4 for implementation details.
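A minimal sketch of the truncated SVD in factored form, assuming the low-rank matrices are stored as \(Y = U S V^T\) with thin factors (this storage format and the helper name `truncate_factored` are our assumptions). Two thin QR factorizations reduce the problem to an SVD of a small core matrix, so the cost is linear in m. The usage lines build a sum of three low-rank terms of ranks r, q and q, which has the shape of one low-rank Parareal update, simply by concatenating factors.

```python
import numpy as np


def truncate_factored(U, S, V, r):
    """Best rank-r approximation of Y = U @ S @ V.T without forming Y.

    U: (m, k), S: (k, k), V: (n, k) with k << m, n; cost O((m + n) k^2 + k^3).
    Returns (Ur, sr, Vr) such that Ur @ np.diag(sr) @ Vr.T is the truncated SVD.
    """
    QU, RU = np.linalg.qr(U)                 # thin QR: QU is (m, k), RU is (k, k)
    QV, RV = np.linalg.qr(V)
    P, s, Wt = np.linalg.svd(RU @ S @ RV.T)  # SVD of the small k x k core
    return QU @ P[:, :r], s[:r], QV @ Wt[:r, :].T


# Usage: Y = Y1 + Y2 - Y3 with ranks r, q, q, stored by concatenating the
# factors of the three terms (no m x m matrix is ever formed).
rng = np.random.default_rng(1)
m, r, q = 200, 6, 2
U = rng.standard_normal((m, r + 2 * q))
V = rng.standard_normal((m, r + 2 * q))
S = np.diag(np.r_[np.ones(r + q), -np.ones(q)])  # sign -1 for the subtracted term
Ur, sr, Vr = truncate_factored(U, S, V, r)
```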

3.1 Convergence analysis

Let \(X_{n} = X(t_{n})\) be the solution of the full problem (1) at time \(t_{n}\). Let \(Y_{n}^{k}\) be the corresponding low-rank Parareal solution at iteration k. We are interested in bounding the error of the algorithm,

\[ E_n^k = X_n - Y_n^k, \]

for all relevant n and k. To this end, we make the following assumption:

Assumption 2

(Affine vector field) The function F is affine linear and autonomous, that is,

\[ F(X) = A(X) + B, \]

with \(A:{\mathbb {R}}^{m \times m}\rightarrow {\mathbb {R}}^{m \times m}\) a linear operator and \(B \in {\mathbb {R}}^{m \times m}\).

The following lemma gives us a recursion for the Frobenius norm of the error. This recursion will be fundamental in deriving our convergence bounds later on when we generalize the proof for standard Parareal from [14].

Lemma 1

(Iteration of the error) Under the Assumptions 1 and 2, the error of low-rank Parareal verifies

The constants \(\ell , \varepsilon _q, \varepsilon _r\) are defined in Assumption 1. Moreover, \(C_{r,q}\) and \(C_q\) are the Lipschitz constants of \({\mathscr {T}}_{r} - {\mathscr {T}}_{q}\) and \({\mathscr {T}}_q\).

Proof

Our proof is similar to the one in [24], where the continuous version of the approximation error of DLRA is studied first. Denote by \(\phi ^h(Z)\) the solution of (1) at time h with initial value \(X_0 = Z\). By definition, the discrete error is

We can interpret each term above as a flow from \(t_n\) to \(t_{n+1}=t_n+h\). Denote these flows by X(t), Z(t), W(t), and V(t) with the initial values

Defining the continuous error as

we then get the identity \(E_{n+1}^{k+1}= E(t_{n}+h)\).

We proceed by bounding \(\left\Vert E(t)\right\Vert \). By definition of the flows above, we have (omitting the dependence on t in the notation)

where the last equality holds since the function F is affine. Using Assumption 1 and Cauchy–Schwarz, we compute

Since \(\frac{d}{dt} \left\Vert E(t)\right\Vert ^2 = 2 \left\Vert E(t)\right\Vert \frac{d}{dt} \left\Vert E(t)\right\Vert \), we therefore obtain the differential inequality

Grönwall’s lemma allows us to conclude

From (12), we get

Denoting \({\mathscr {T}}_r^{\perp }= I - {\mathscr {T}}_r\) and \({\mathscr {T}}_{r,q} = {\mathscr {T}}_r- {\mathscr {T}}_q\), we get after rearranging terms

Taking norms gives

where \(C_{r,q}\) and \(C_q\) are the Lipschitz constants of \({\mathscr {T}}_{r,q}\) and \({\mathscr {T}}_q\) respectively. Combining inequalities (13) and (14) gives the statement of the lemma.\(\square \)
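The Grönwall step in the proof of Lemma 1 can be made explicit. As a worked sketch, assume the differential inequality reads \(\frac{d}{dt}\Vert E(t)\Vert \le \ell \Vert E(t)\Vert + \varepsilon \), where \(\varepsilon \) collects the modeling errors of the three DLRA flows Z, W and V (our assumption: \(\varepsilon = \varepsilon _r + 2\varepsilon _q\), one modeling error per flow). Multiplying by the integrating factor \(e^{-\ell t}\) and integrating over \([0,h]\) gives:

```latex
\frac{d}{dt}\Bigl(e^{-\ell t}\,\Vert E(t)\Vert\Bigr)
  = e^{-\ell t}\Bigl(\frac{d}{dt}\Vert E(t)\Vert - \ell\,\Vert E(t)\Vert\Bigr)
  \le \varepsilon\, e^{-\ell t}
\quad\Longrightarrow\quad
\Vert E(h)\Vert \le e^{\ell h}\,\Vert E(0)\Vert + \varepsilon\,\frac{e^{\ell h}-1}{\ell}.
```

For \(\ell < 0\), the last factor equals \((1-e^{\ell h})/|\ell | \le 1/|\ell |\), so the modeling error stays bounded uniformly in h while the initial error \(\Vert E(0)\Vert \) is damped by \(e^{\ell h}\).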

We now study the error recursion (11) in more detail. To this end, let us slightly rewrite it as

with the non-negative constants

Our first result is a linear convergence bound, up to the DLRA approximation error. It is similar to the linear bound for standard Parareal.

Theorem 2

(Linear convergence) Under the Assumptions 1 and 2, and if \(\alpha + \beta < 1\), low-rank Parareal verifies for all \(k \in {\mathbb {N}}\) the linear bound

where \(\alpha , \beta , \kappa \) are defined in (16).

Proof

Define \(e_{\star }^k = \max _{n \ge 0} \left\Vert E_n^k\right\Vert \). Taking the maximum for \(n \ge 0\) of both sides of (15), we obtain

By assumption, \(0 \le \beta < 1\) and we can therefore obtain the recursion

with solution

By assumption, we also have \(0 \le \frac{\alpha }{1-\beta }<1\), which allows us to obtain the statement of the theorem.\(\square \)
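The linear bound can be checked numerically on the equality version of the error recursion. The sketch below assumes the setting of Lemma 2, \(e_{n+1}^{k+1} = \alpha e_n^k + \beta e_n^{k+1} + \kappa \) with \(e_0^k = 0\) and \(e_n^0 = \gamma \) for \(n \ge 1\) (our reading of (18), whose display is not reproduced here), and compares \(\max _n e_n^k\) with the bound \(\bigl (\tfrac{\alpha }{1-\beta }\bigr )^k \gamma + \tfrac{\kappa }{1-\alpha -\beta }\) obtained by solving the scalar recursion in the proof above. The helper names are hypothetical.

```python
import numpy as np


def error_recursion(alpha, beta, kappa, gamma, N, K):
    """Equality version of the double recursion, stored as e[k, n]:
    e[k+1, n+1] = alpha*e[k, n] + beta*e[k+1, n] + kappa,
    with e[k, 0] = 0 for all k and e[0, n] = gamma for n >= 1."""
    e = np.zeros((K + 1, N + 1))
    e[0, 1:] = gamma
    for k in range(K):
        for n in range(N):
            e[k + 1, n + 1] = alpha * e[k, n] + beta * e[k + 1, n] + kappa
    return e


def linear_bound(alpha, beta, kappa, gamma, k):
    """theta^k * gamma + kappa / (1 - alpha - beta), theta = alpha / (1 - beta)."""
    theta = alpha / (1.0 - beta)
    return theta**k * gamma + kappa / (1.0 - alpha - beta)


alpha, beta, kappa, gamma = 0.3, 0.2, 1e-10, 1.0   # illustrative, alpha + beta < 1
N, K = 30, 12
e = error_recursion(alpha, beta, kappa, gamma, N, K)
```

With \(\kappa = 0\), the same recursion also reproduces the finite-step property: \(e_n^k = 0\) for all \(n \le k\).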

Next, we present a more refined superlinear bound. To this end, we require the following technical lemma that solves the equality version of the double iteration (15). A similar result, but without the \(\kappa \) term and only as an upper bound, already appeared in [14, Thm. 1]. Our proof is therefore similar but more elaborate.

Lemma 2

Let \(\alpha , \beta , \gamma , \kappa \in {\mathbb {R}}\) be any non-negative constants such that \(\alpha < 1\) and \(\beta < 1\). Let \(e_n^k\) be a sequence depending on \(n, k \in {\mathbb {N}}\) such that

Then,

Proof

The idea is to use the generating function \(\rho _k(\xi ) = \sum _{n=1}^{\infty }e_n^k \xi ^n\) for \(k \ge 1\). Multiplying (18) by \(\xi ^{n+1}\) and summing over n, we obtain

Since \(e_0^k = 0\) for all k, this gives the relations

We can therefore obtain the linear recurrence

Its solution satisfies

It remains to compute the coefficients in the power series of the above formula since by definition of \(\rho _k(\xi ) = \sum _{n=1}^{\infty }e_n^k \xi ^n\) they equal the unknowns \(e_n^k\). The binomial series formula for \(|z|<1\),

together with the Cauchy product gives

Hence, the first term in \(\rho _k(\xi )\) satisfies

while the second term can be written as

Putting everything together, we have

Finally, we can identify the coefficient \(e_n^k\) in front of \(\xi ^n\) with those on the right-hand side. The coefficient for \(\xi ^n\) in the first term is clearly nonzero only when \(n \ge k+1\). In the second term, there is only one m for every j such that \(m+j=n\). Substituting \(m=n-j\) allows us to identify the coefficient of \(\xi ^n\).\(\square \)

Using the previous lemma, we can obtain a convergence bound that is superlinear in k.

Theorem 3

(Superlinear convergence) Under the Assumptions 1 and 2, and if \(\alpha + \beta < 1\), the error of low-rank Parareal satisfies for all \(n,k \in {\mathbb {N}}\) the bound

where \(\alpha , \beta , \kappa \) are defined in (16).

Proof

Define \(e_n^k = \left\Vert E_n^k\right\Vert \). By Lemma 1, the terms \(e_n^k\) verify the relation described in Lemma 2 with \(=\) replaced by \(\le \) in (18). Hence, the solution (19) from Lemma 2 will be an upper bound for \(e_n^k\).

Since \(0 \le \alpha + \beta < 1\) and using the binomial series formula (20), we bound the first term in (19) as

For \(0 \le i \le n-k-1\) and \(n \ge k+1\), observe that

Since \(0 \le \beta < 1\), we can therefore bound the second term as

The conclusion now follows by the definition of \(\gamma \).\(\square \)

The proof above can be modified to obtain a simple linear bound that is similar to, but different from, the one in Theorem 2:

Theorem 4

(Another linear convergence bound) Under Assumptions 1 and 2, and if \(\alpha + \beta < 1\), the error of low-rank Parareal satisfies for all \(n,k \in {\mathbb {N}}\) the bound

where \(\alpha , \beta , \kappa \) are defined in (16).

Proof

We repeat the proof for the superlinear bound but this time, the second term is bounded as

\(\square \)

Remark 1

In the proof above, yet another bound based on (20) is

This time we recover the linear bound from Theorem 2.

3.2 Summary of the convergence bounds

In the previous section, we have proven four upper bounds for the error of low-rank Parareal. The first is directly obtained from Lemma 2. It is the tightest bound but its expression is too unwieldy for practical use. The other three bounds can be summarized as

with

| \(B_{n,k}\) | Rate of (23) in k |
|---|---|
| \(\alpha ^k (1 - \beta )^{-k}\) | Linear |
| \(\alpha ^k (1+\beta )^{n-1}\) | Linear |
| \(\alpha ^k (1 - \beta )^{-1} \frac{\prod _{j=2}^k (n-j)}{(k-1)!}\) | Superlinear |

Each of these practical bounds describes different phases of the convergence, and none is always better than the others. In Fig. 1, we have plotted all four bounds for realistic values of \(\alpha \) and \(\beta \). We took \(\kappa = 10^{-15} \approx \varepsilon _{\text {mach}}\) since it only determines the stagnation of the error and would interfere with judging the transient behavior of the convergence plot. Furthermore, the errors \(e_n^0=\gamma =1\) at the start of the iteration \(k=0\) were chosen arbitrarily since they have little influence on the results.
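The three factors \(B_{n,k}\) in the table are cheap to evaluate, which makes it easy to see which one is smallest in a given regime. The helper `practical_bounds` below is a hypothetical name; it evaluates the table entries for \(k \ge 1\) and \(n \ge k+1\). For \(k = 1\) the first and third entries coincide, while in the illustrative case \(\alpha = 0.3\), \(\beta = 0.2\), \(n = 20\), the superlinear factor is the smallest at \(k = n-1\).

```python
from math import factorial, prod


def practical_bounds(alpha, beta, n, k):
    """Evaluate the three factors B_{n,k} of the table (k >= 1, n >= k + 1).

    Returns (linear_1, linear_2, superlinear) in the order of the table rows.
    """
    lin1 = alpha**k * (1.0 - beta) ** (-k)
    lin2 = alpha**k * (1.0 + beta) ** (n - 1)
    sup = alpha**k / (1.0 - beta) * prod(n - j for j in range(2, k + 1)) / factorial(k - 1)
    return lin1, lin2, sup


# Usage with illustrative values alpha = 0.3, beta = 0.2 on n = 20 intervals.
for k in (1, 5, 10, 19):
    lin1, lin2, sup = practical_bounds(0.3, 0.2, 20, k)
    print(f"k={k:2d}  lin1={lin1:.2e}  lin2={lin2:.2e}  superlinear={sup:.2e}")
```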

The bounds above depend on \(\alpha =e^{\ell h} C_{r,q}\) and \(\beta =e^{\ell h} C_q\), where \(C_q\) and \(C_{r,q}\) are the Lipschitz constants of \({\mathscr {T}}_q\) and \({\mathscr {T}}_{r,q}\) respectively; see (16). While it seems difficult to give a priori results on the sizes of \(C_q\) and \(C_{r,q}\), we can bound them up to first order, as shown in the theorem below. Note also that in the important case \(\ell <0\), the constants \(\alpha \) and \(\beta \) can be made as small as desired by taking h sufficiently large.
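When \(\ell < 0\), the condition \(\alpha + \beta = e^{\ell h}(C_q + C_{r,q}) < 1\) of Theorems 2 to 4 can be turned into an explicit lower bound on the step size, \(h > \log (C_q + C_{r,q})/|\ell |\). A small helper (hypothetical name) that computes this threshold from estimates of \(\ell \), \(C_q\) and \(C_{r,q}\):

```python
import math


def min_step_for_contraction(ell, C_q, C_rq):
    """Smallest h with alpha + beta = exp(ell * h) * (C_q + C_rq) <= 1 (needs ell < 0)."""
    if ell >= 0:
        raise ValueError("a step-size threshold only exists for ell < 0")
    # If C_q + C_rq <= 1, every h > 0 already gives alpha + beta < 1.
    return max(0.0, math.log(C_q + C_rq) / (-ell))


# Illustrative (not measured) values: ell of the order of -5 and
# Lipschitz constants of the order of 1.6.
h_min = min_step_for_contraction(-4.9, 1.6, 1.6)
```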

Theorem 5

(Lipschitz constants) Let \(A , {\tilde{A}} \in {\mathbb {R}}^{m \times n}\). Then

where \(\sigma _q\) is the qth singular value of A. Moreover,

Proof

For the first inequality, we refer to [2, Theorem 2] and [10, Theorem 24]. The second inequality follows from the first by the triangle inequality,

\(\square \)

In many applications with low-rank matrices, the singular values of the underlying matrix are rapidly decaying. In particular, when the singular values decay exponentially like \(\sigma _k \approx e^{-ck}\) for some \(c > 0\), we have

This last quantity decreases quickly to 1 when c grows. Even for \(c=1\), it is less than 1.6. We therefore see that the constants in Theorem 5 are not too large in this case.
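A quick numerical check of this claim. Since (26) is not reproduced here, we assume that the relevant quantity is the relative gap \(\sigma _q/(\sigma _q - \sigma _{q+1})\); for \(\sigma _k = e^{-ck}\) it equals \(1/(1-e^{-c})\) for every q, which is about 1.58 at \(c=1\), consistent with the value quoted above. The sketch also measures the ratio \(\Vert {\mathscr {T}}_q(A+E) - {\mathscr {T}}_q(A)\Vert /\Vert E\Vert \) for one small random perturbation E, which, on our reading of Theorem 5, should not exceed this gap up to higher-order terms. Matrix sizes and helper names are illustrative.

```python
import numpy as np


def truncate(Y, q):
    """Best rank-q approximation T_q(Y) computed with the SVD."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U[:, :q] * s[:q]) @ Vt[:q, :]


def relative_gap(s, q):
    """sigma_q / (sigma_q - sigma_{q+1}) for descending singular values s, 1-based q."""
    return s[q - 1] / (s[q - 1] - s[q])


rng = np.random.default_rng(0)
m, q, c = 50, 5, 1.0
s = np.exp(-c * np.arange(1, m + 1))            # sigma_k = e^{-ck}, k = 1..m
Q1, _ = np.linalg.qr(rng.standard_normal((m, m)))
Q2, _ = np.linalg.qr(rng.standard_normal((m, m)))
A = (Q1 * s) @ Q2.T                              # A = Q1 diag(s) Q2^T

# Empirical Lipschitz ratio of T_q for one small random perturbation E.
E = rng.standard_normal((m, m))
E *= 1e-8 / np.linalg.norm(E)
ratio = np.linalg.norm(truncate(A + E, q) - truncate(A, q)) / np.linalg.norm(E)
```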

Remark 2

In the analysis, a sufficiently large gap in the singular values is required at both the coarse rank and the fine rank. In our experiments, we observed that such a gap is indeed required at the coarse rank, but not at the fine rank. This suggests that the bound (25) can probably be improved.

4 Numerical experiments

We now show numerical experiments for our low-rank Parareal algorithm. We implemented the algorithm in Python 3.10 and all computations were performed on a MacBook Pro with an M1 processor and 16 GB of RAM. The complete code is available at GitHub so that all the experiments can be reproduced. The DLRA steps are solved by the second-order projector-splitting integrator from [29]. Since the problems considered are stiff, we used sufficiently many substeps of this integrator so that the coarse and fine solvers within low-rank Parareal can be considered exact.

4.1 Lyapunov equation

Consider the differential Lyapunov equation,

where \(A \in {\mathbb {R}}^{m \times m}\) is a symmetric matrix, and \(C \in {\mathbb {R}}^{m \times k}\) is a tall matrix for some \(k \le m\). This initial value problem admits a unique solution for \(t \in [0,T]\) for any \(T>0\). The most typical example of (27) is the heat equation on a square with separable source term. Other applications can be found in [31].

Assumption 3

The matrix \(A \in {\mathbb {R}}^{m \times m}\) is symmetric and strictly negative definite.

Under Assumption 3, the one-sided Lipschitz constant \(\ell \) for (27) is strictly negative. Indeed, the linear Lyapunov operator \({\mathscr {A}}(X) = AX + XA\) has the symmetric matrix representation \(A \otimes I + I \otimes A\) with eigenvalues \(\lambda _i(A) + \lambda _j(A)\) for \(1 \le i,j \le m\); see [19, Ch. 12.3]. As in [20, Ch. I.10], we therefore get immediately that \(\ell = 2 \max _i \lambda _i(A) < 0\). Moreover, since \({\mathscr {A}}\) is invertible, we can write the closed-form solution of (27) as

which can be easily verified by differentiation using properties of the matrix exponential \(e^{t {\mathscr {A}}}(Z) = e^{t A} Z e^{t A}\).
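Both facts above can be checked numerically on a small example. Since the displays (27) and (28) are not reproduced here, the sketch assumes the standard form \(\dot X = AX + XA + CC^T\), \(X(0) = X_0\), for which the closed form reads \(X(t) = e^{t{\mathscr {A}}}(X_0) + {\mathscr {A}}^{-1}\bigl (e^{t{\mathscr {A}}}(CC^T) - CC^T\bigr )\), consistent with the matrix \(M=e^{t {\mathscr {A}}} D - D\) used in the proof of Lemma 3. The sketch verifies the Kronecker-sum eigenvalues and \(\ell = 2\max _i \lambda _i(A)\), then compares the closed form (using SciPy's `solve_continuous_lyapunov` for \({\mathscr {A}}^{-1}\)) with a reference solution from `solve_ivp`. All sizes and names are illustrative.

```python
import numpy as np
from scipy.integrate import solve_ivp
from scipy.linalg import expm, solve_continuous_lyapunov

rng = np.random.default_rng(0)
m, k = 8, 2
G = rng.standard_normal((m, m))
A = -(G @ G.T) / m - np.eye(m)          # symmetric, strictly negative definite
C = rng.standard_normal((m, k))
Z = rng.standard_normal((m, 2))
X0 = Z @ Z.T                            # low-rank initial value

# Lyapunov operator X -> AX + XA as an m^2 x m^2 matrix on row-major vec(X).
I = np.eye(m)
L = np.kron(A, I) + np.kron(I, A)
lam = np.linalg.eigvalsh(A)
ell = np.linalg.eigvalsh(L).max()       # expected: 2 * max_i lambda_i(A) < 0


def lyapunov_closed_form(A, C, X0, t):
    """X(t) = e^{tA} X0 e^{tA} + Acal^{-1}(e^{tA} C C^T e^{tA} - C C^T)."""
    E = expm(t * A)
    D = C @ C.T
    # solve_continuous_lyapunov(a, q) returns X with a X + X a^H = q.
    return E @ X0 @ E + solve_continuous_lyapunov(A, E @ D @ E - D)


def rhs(t, x):
    """Assumed form of (27): X' = AX + XA + C C^T, on the flattened state."""
    X = x.reshape(m, m)
    return (A @ X + X @ A + C @ C.T).ravel()


sol = solve_ivp(rhs, (0.0, 1.0), X0.ravel(), rtol=1e-10, atol=1e-12)
X_num = sol.y[:, -1].reshape(m, m)
X_cf = lyapunov_closed_form(A, C, X0, 1.0)
```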

The following result shows that the solution of (27) can be well approximated by low rank. It is the analogue of a similar result for the algebraic Lyapunov equation \({\mathscr {A}}(X)=CC^T\). The latter result is well known, but we did not find a proof of the former in the literature.

Lemma 3

(Low-rank approximability of Lyapunov ODE) Let \(\sigma _i(X_0)\) be the ith singular value of \(X_0\) and likewise for \(\sigma _i(CC^T)\). Under Assumption 3, the solution X(t) of (27) has an approximation

for any \(0 \le r_0, r, \rho \le m\) with error

where \(\kappa _A = \left\Vert A\right\Vert _2 \Vert A^{-1}\Vert _2\) and \(\ell = 2 \max _i \lambda _i(A)\).

Proof

The aim is to approximate the following two terms that make up the closed-form solution X(t) in (28):

The first term \(X_1(t)\) can be treated directly. By the truncated SVD, the initial value satisfies

By Assumption 3, the operator \({\mathscr {A}}\) is full rank. We therefore obtain

where \({{\,\mathrm{rank}\,}}(Y_1(t)) = {{\,\mathrm{rank}\,}}(Y_0) = r_0\) and \(\Vert E_1(t)\Vert _2 \le e^{\ell t} \sigma _{r_0+1}(X_0)\) since \(\ell = 2 \max _i \lambda _i(A)\). Next, we focus on the second term \(X_2(t)\). Like above, the source term can be decomposed as

By linearity of the Lyapunov operator, we therefore obtain

Denote \(M=e^{t {\mathscr {A}}} D - D\). By definition of the Lyapunov operator \({\mathscr {A}}\), we have

As studied in [34] and then improved in [1], the singular values of the solution S are bounded as

where \(\kappa _A = \left\Vert A\right\Vert _2 \Vert A^{-1}\Vert _2\) and \( 0 \le \rho \le m\). Since \({{\,\mathrm{rank}\,}}(M) \le 2 {{\,\mathrm{rank}\,}}(D) = 2r\) by assumption on D, the bound (31) then implies that

where \({{\,\mathrm{rank}\,}}(Y_2(t)) \le 2 r \rho \) and

where the last inequality holds by properties of the logarithmic norm \(\mu \) of \({\mathscr {A}}\) which equals \(\ell \); see [36, Proposition 2.2]. Moreover, we can bound the last term in (30) as

Putting (29) and (30) together, we obtain

which proves the statement of the lemma.\(\square \)

The lemma shows that if \(X_0\) and \(CC^T\) have good low-rank approximations, then the solution X(t) of the differential Lyapunov equation has comparable low-rank approximations as well on [0, T]. Since \(\ell < 0\), we can even take \(T \rightarrow \infty \) and recover essentially the low-rank approximability of the Lyapunov equation \(X(\infty ) = {\mathscr {A}}^{-1}(CC^T)\). This is clearly visible when \(X_0\) and \(CC^T\) are exactly of low rank, which we state as a simple corollary for convenience.

Corollary 1

Under Assumption 3 and assuming that \({{\,\mathrm{rank}\,}}(X_0) = r_0, \, {{\,\mathrm{rank}\,}}(CC^T) = r,\) the solution X(t) of (28) has an approximation

for any \(0 \le \rho \le m\) with error

The corollary clearly shows that the approximation error decreases exponentially when the approximation rank increases linearly via \(\rho \). Furthermore, we see that the condition number of the matrix A has only a mild influence due to \(\log (\kappa _A)\).

Remark 3

Corollary 1 can be compared to a similar result in [26]. In that work, the authors solve (27) with exact low-rank \(X_0=ZZ^T\) and \(CC^T\) using a Krylov subspace method. More specifically, with \(U_k\) an orthonormal matrix that spans the block Krylov space \(K_k(A, [C\,Z])\), the projected Lyapunov equation

is used to define the approximation \(X_k(t) = U_k Y_k(t) U_k^T\). The approximation error of \(X_k(t)\) is studied in [26, Theorem 4.2]. Since \({{\,\mathrm{rank}\,}}(X_k(t)) \le k({{\,\mathrm{rank}\,}}(Z) + {{\,\mathrm{rank}\,}}(C))\), we therefore also get a result on the low-rank approximability of (27). This bound is, however, worse than ours since it does not give zero error for \(t=0\) and \(k=1\), for example. On the other hand, it is a bound for a discrete method whereas our Lemma 3 and Corollary 1 are statements about the exact solution.

We now apply the low-rank Parareal algorithm to the differential Lyapunov equation (27). Let \(A = \varDelta _{dx}\) be the \(n \times n\) discrete Laplacian with zero Dirichlet boundary conditions obtained by standard centered differences on \([-1, 1]\). The Lyapunov equation is therefore a model for the 2D heat equation on \(\varOmega = [-1,1]^2\). In the experiments, we used \(n=100\) spatial points and the time interval \([0,T] = [0,2]\). The matrix C for the source is generated randomly with singular values \(\sigma _i = 10^{-5(i-1)}\) where \(i=1,2,\ldots \) so that its numerical rank is 4. In order to have a realistic initial value, \(X_0\) is obtained as the exact solution at time \(t=0.01\) of the same ODE but with a random initial value \(\tilde{X_0}\) with singular values \(\sigma _i = 10^{-(i-1)}\).
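A sketch of this problem setup in NumPy. We assume that the "n = 100 spatial points" are interior grid points, so that the mesh width is \(2/(n+1)\), and we give C a hypothetical number of k = 5 columns, since the text does not fix k; the function names are ours. The sketch also evaluates \(\ell = 2\max _i \lambda _i(A)\), which for this Laplacian is close to the continuous value \(-2(\pi /2)^2 \approx -4.93\).

```python
import numpy as np


def laplacian_1d(n, a=-1.0, b=1.0):
    """Centered-difference Laplacian on (a, b) with zero Dirichlet BCs on n interior points."""
    dx = (b - a) / (n + 1)
    off = np.ones(n - 1)
    return (np.diag(-2.0 * np.ones(n)) + np.diag(off, 1) + np.diag(off, -1)) / dx**2


def random_with_singular_values(m, s, rng):
    """Random m x len(s) matrix whose singular values are the entries of s."""
    k = len(s)
    U, _ = np.linalg.qr(rng.standard_normal((m, k)))   # orthonormal columns
    V, _ = np.linalg.qr(rng.standard_normal((k, k)))   # orthogonal k x k
    return (U * s) @ V.T


n = 100
A = laplacian_1d(n)
rng = np.random.default_rng(0)
k = 5                                     # hypothetical number of columns of C
C = random_with_singular_values(n, 10.0 ** (-5.0 * np.arange(k)), rng)
ell = 2.0 * np.linalg.eigvalsh(A).max()   # one-sided Lipschitz constant of AX + XA
```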

Figure 2 is a 3D plot of the solution over time on \(\varOmega \) together with its singular values. As we can see, the solution becomes almost stationary at \(t=1.0\). In addition, it stays low-rank over time, in agreement with Lemma 3. Moreover, the singular values suggest taking the fine rank \(r=16\) for an error of the fine solver of order \(10^{-12}\).

Solution over time of the Lyapunov ODE (27) for the heat equation. Note the change of scale between \(t=0.0\) and \(t=1.0\)

The convergence of the error of the low-rank Parareal algorithm is shown in Fig. 3. The algorithm converges linearly from the coarse rank solution to the fine rank solution. Figure 3a suggests that the coarse rank does not influence the convergence rate and only reduces the initial error. This is consistent with our analysis. Indeed, since the singular values decay exponentially, the relative gap between consecutive singular values is approximately constant; see (26). Hence, the constants \(\alpha \) and \(\beta \) from (16) that determine the convergence rate do not depend on the coarse rank q, as shown up to first order in Theorem 5. Figure 3b shows that, similarly, the convergence rate does not depend on the fine rank either, although the fine rank limits the final error.

Convergence of the error of low-rank Parareal for the Lyapunov ODE (27) with \(n=100\) and \(T=2.0\). Influence of the coarse and fine ranks

In Fig. 4a, we investigate the convergence for several sizes n. Even though the problem is stiff, the convergence does not seem to be influenced by the size of the problem. Figure 4b shows the error of the algorithm applied to the problem with several step sizes. In agreement with our analysis, the convergence is faster when the step size h is large; see (16).

Convergence of the error of low-rank Parareal for the Lyapunov ODE (27) with coarse rank \(q=4\) and fine rank \(r=16\). Influence of size and final time

4.2 Parametric cookie problem

We now solve a simplified version of the parametric cookie problem from [27]. Consider the ODE

where the sparse matrices \(A_0, A_1 \in {\mathbb {R}}^{1580 \times 1580}\), \({\mathbf {b}} \in {\mathbb {R}}^{1580}\), and \(C_1 = {{\,\mathrm{diag}\,}}(c_1^1, c_1^2, \ldots ,c_1^p)\) are given in [27]. The aim of this problem is to solve a heat problem simultaneously with several heat coefficients, denoted by \(c_1^1, \ldots , c_1^p\).

In our experiments, we used \(p=101\) parameters with \(c_1^1 = 0, c_1^2 = 1, \ldots , c_1^{101} = 100\). The initial value \(X_0\) is obtained after computing the exact solution of (32) at time \(t=0.01\) with the zero matrix as initial value. The time interval is \([0,T] = [0, 0.1]\).

The singular values of the reference solution are shown in Fig. 5. The stationary solution has good low-rank approximations, as was proved in [27, Thm. 2.4]. The singular value decay suggests that a fine rank \(r=16\) leads to full numerical accuracy.

Singular values of the solution over time of the parametric cookie problem (32)

In Fig. 6, we applied the low-rank Parareal algorithm with several coarse ranks q and fine ranks r. As for the Lyapunov equation, the convergence rate does not seem to depend on the coarse rank q. In agreement with our analysis (see Fig. 1), the convergence is linear in the first iterations and superlinear in the last iterations. In addition, the convergence is not influenced by the fine rank r.

Convergence of the error of low-rank Parareal for the parametric cookie problem (32). Influence of the coarse and fine ranks

4.3 Riccati equation

The Riccati differential equation is given by

where \(X \in {\mathbb {R}}^{m \times m}\), \(A \in {\mathbb {R}}^{m \times m}\), \(C \in {\mathbb {R}}^{k \times m}\), and \(S \in {\mathbb {R}}^{m \times m}\). We note that this is no longer an ODE with an affine vector field and hence our theoretical results do not apply here. As already studied in [33], we take \(S = I\) and A is the spatial discretization of the diffusion operator

on the spatial domain \(\varOmega = [0,1]\). Furthermore, we take \( \alpha (x) = 2 + 2 \cos (2 \pi x) \) and \(\lambda = 1\). The discretization is done by the finite volume method, as described in [15]. The matrix \(C \in {\mathbb {R}}^{k \times m}\) is obtained from k independent vectors \(\{1, e_1, \ldots ,e_{(k-1)/2}, f_1, \ldots , f_{(k-1)/2} \}\), where

are evaluated at the grid points \(\{x_j \}_{j=1}^m\) with \(x_j = \frac{j}{m+1}\). The time interval is \([0, T] = [0, 0.1]\).

As for the other problems, the singular values of the solution (shown in Fig. 7) indicate that we can expect good low-rank approximations on [0, T]. We choose the fine rank \(r=18\). The convergence of low-rank Parareal is shown in Fig. 8. Unlike for the previous problems, the coarse rank q has a more pronounced influence on the convergence. While our theoretical results do not cover this nonlinear problem, we still see that low-rank Parareal converges linearly when the coarse rank q is sufficiently large (\(q=6\), \(q=8\)). The convergence is slower (but still superlinear) when \(q=4\). This could be due to the non-constant gaps in the singular values. The influence of the fine rank r is similar to that observed for the linear problems.

Singular values of the solution over time of the Riccati ODE (33)

Convergence of the error of low-rank Parareal for the Riccati problem (33). Influence of the coarse and fine ranks

4.4 Rank-adaptive algorithm

Since the approximation rank of the solution is usually not known a priori, it is more convenient for the user to supply an approximation tolerance than an approximation rank. The low-rank Parareal algorithm of Def. 3 can easily be reformulated for such a rank-adaptive setting, in which the rank may change during the truncation steps in order to satisfy the tolerance. The key idea is to fix the coarse rank to keep the cost of the coarse solver low, while the fine rank is determined by a fine tolerance.

Definition 4

(Adaptive low-rank Parareal) Consider a small fixed rank q and a fine tolerance \(\tau \). The adaptive low-rank Parareal algorithm iterates

where the notation is similar to that of Def. 3, except for \({\mathscr {T}}_{\tau }\), which denotes the rank-adaptive truncation. In particular, \({\mathscr {T}}_{\tau }(Y)\) is the best rank-\(r_\tau \) approximation of Y, where the rank \(r_\tau \) is chosen such that the \((r_\tau +1)\)st singular value of Y is at most the tolerance \(\tau \) while the \(r_\tau \)th singular value is larger than \(\tau \); that is, all singular values of Y larger than \(\tau \) are retained. The matrices \({\mathscr {E}}_n\) are small perturbations, randomly generated such that \({{\,\mathrm{rank}\,}}(Y_{n+1}^0) = {{\,\mathrm{rank}\,}}(Y_0)\) and its smallest singular value is larger than the fine tolerance \(\tau \).
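A minimal NumPy sketch of a rank-adaptive truncation in the spirit of \({\mathscr {T}}_{\tau }\) follows. It assumes the rule "keep every singular value strictly larger than \(\tau \)" and an absolute (not relative) tolerance; the function name `truncate_tol` is hypothetical and not taken from the paper.

```python
import numpy as np

def truncate_tol(Y, tau):
    """Rank-adaptive truncation (illustrative stand-in for T_tau).

    Assumed rule: keep every singular value strictly larger than tau, i.e.
    return the best rank-r approximation with sigma_r > tau >= sigma_{r+1}.
    """
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)  # s is sorted decreasingly
    r = int(np.sum(s > tau))
    # Scale the first r columns of U by the singular values, then recombine
    return (U[:, :r] * s[:r]) @ Vt[:r, :], r

# Usage: a 50 x 50 matrix with prescribed singular values 1, 0.1, ..., 1e-9
rng = np.random.default_rng(0)
Q1, _ = np.linalg.qr(rng.standard_normal((50, 10)))
Q2, _ = np.linalg.qr(rng.standard_normal((50, 10)))
sigma = 10.0 ** -np.arange(10)
Y = (Q1 * sigma) @ Q2.T
Y_tau, r = truncate_tol(Y, tau=5e-5)
print(r)                             # 5: singular values 1, ..., 1e-4 are kept
print(np.linalg.norm(Y - Y_tau, 2))  # approximately sigma_6 = 1e-5
```

By the Eckart–Young theorem, the spectral-norm error of this truncation is the largest discarded singular value, so it never exceeds \(\tau \).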

Figure 9 shows the numerical behavior of this rank-adaptive algorithm, which behaves as intended. Figure 9a shows the convergence for several tolerances and is comparable to Fig. 3b, which shows the convergence for several fine ranks. Figure 9b shows the rank of the iterates over time. Already after two iterations, the rank is reduced to nearly the numerical rank of the exact solution, and it changes little during the remaining iterations.

5 Conclusion and future work

We proposed the first parallel-in-time algorithm for integrating a dynamical low-rank approximation (DLRA) of a matrix evolution equation. The algorithm follows the traditional Parareal scheme but uses DLRA with a low rank as the coarse integrator and DLRA with a higher rank as the fine integrator. Taking into account the modeling error of DLRA, we analyzed the algorithm and showed, for affine linear vector fields and under common assumptions, linear as well as superlinear convergence up to the modeling error.
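The structure of low-rank Parareal can be illustrated with a small NumPy sketch. It is not the authors' implementation and not necessarily the exact update of Def. 3: as a simplifying assumption, both propagators apply the exact flow of the affine test problem \(\dot{X} = AX + XA^\top + B\) over one time slice (A a symmetric negative definite 1D Laplacian and B of rank 2, both our choices) and then truncate to rank r (fine) or q (coarse) with the SVD, in place of a genuine DLRA integrator such as the projector-splitting scheme [29]. Full m × m matrices are formed, so the sketch illustrates convergence only, not cost. The names `truncate`, `affine_flow` and `low_rank_parareal` are hypothetical.

```python
import numpy as np

def truncate(Y, rank):
    """Best rank-`rank` approximation of Y via the SVD (Eckart-Young)."""
    U, s, Vt = np.linalg.svd(Y, full_matrices=False)
    return (U[:, :rank] * s[:rank]) @ Vt[:rank, :]

def affine_flow(A, B, h):
    """Exact flow over a step h of X' = A X + X A^T + B, A symmetric negative definite."""
    lam, V = np.linalg.eigh(A)
    S = lam[:, None] + lam[None, :]   # eigenvalues of X -> A X + X A^T (all < 0)
    E = np.exp(h * S)
    Bt = V.T @ B @ V                  # B expressed in the eigenbasis of A
    def flow(X):
        Z = V.T @ X @ V
        Z = E * Z + (E - 1.0) / S * Bt  # variation of constants, entrywise
        return V @ Z @ V.T
    return flow

def low_rank_parareal(Y0, flow, N, r, q, K):
    """Parareal with a rank-r fine and a rank-q coarse propagator (illustrative)."""
    fine = lambda Y: truncate(flow(Y), r)
    coarse = lambda Y: truncate(flow(Y), q)
    Y = [Y0]
    for n in range(N):                # iteration 0: one sequential coarse sweep
        Y.append(coarse(Y[n]))
    history = [Y]
    for _ in range(K):
        F = [fine(Y[n]) for n in range(N)]       # independent: parallel over n
        G_old = [coarse(Y[n]) for n in range(N)]
        Y_new = [Y0]
        for n in range(N):                       # sequential coarse correction
            Y_new.append(F[n] + coarse(Y_new[n]) - G_old[n])
        Y = Y_new
        history.append(Y)
    return history

# Test problem: 1D Dirichlet Laplacian and a rank-2 source term
m, N, T, r, q = 40, 10, 0.1, 10, 2
x = np.arange(1, m + 1) / (m + 1)
A = (m + 1) ** 2 * (np.diag(-2.0 * np.ones(m)) + np.diag(np.ones(m - 1), 1)
                    + np.diag(np.ones(m - 1), -1))
C = np.column_stack([np.ones(m), x])
flow = affine_flow(A, C @ C.T, T / N)
Y0 = np.zeros((m, m))

history = low_rank_parareal(Y0, flow, N, r, q, K=N)
U = [Y0]                                         # sequential fine reference
for n in range(N):
    U.append(truncate(flow(U[n]), r))
errors = [max(np.linalg.norm(Yk[n] - U[n]) for n in range(N + 1)) for Yk in history]
print(errors)
```

In exact arithmetic, the first k+1 time points of iterate k coincide with the sequential fine solution (a standard Parareal property), so the last entry of `errors` is at roundoff level; the intermediate entries show how quickly the iteration converges for the chosen coarse rank q.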

In our numerical experiments, the algorithm behaved well on diffusive problems, as does the original Parareal algorithm. Given the significant difference in computational cost between the fine and coarse integrators, it is reasonable to expect a good speed-up in actual parallel implementations. Verifying this claim with a proper parallel implementation is natural future work. It may, however, be more appropriate to first generalize more efficient parallel-in-time algorithms, such as Schwarz waveform relaxation and multigrid methods [13], to DLRA.

Since DLRA can also be used to obtain low-rank tensor approximations [30], another direction for future work is to extend low-rank Parareal to tensor DLRA. Finally, our theoretical analysis assumes that the ODE has an affine vector field. Since this assumption was only needed in one step of the proof of Lemma 1, it may be possible to relax it to include certain nonlinear vector fields.

Notes

While the actual cost can be larger, at the very least the methods typically compute compact QR factorizations to normalize the approximations. This costs \(O(mr^2 + r^3)\) flops in our setting.
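To see where a count of \(O(mr^2 + r^3)\) comes from, here is a sketch of one common normalization step (an assumption; individual DLRA integrators differ in the details). A rank-r matrix stored as \(Y = LR^\top \) with non-orthonormal factors \(L, R \in {\mathbb {R}}^{m \times r}\) is brought to SVD form by two compact QR factorizations, \(O(mr^2)\) each, and one SVD of an \(r \times r\) core, \(O(r^3)\). The helper name `normalize_factors` is hypothetical.

```python
import numpy as np

def normalize_factors(L, R):
    """Return U, s, V with L @ R.T == U @ diag(s) @ V.T and U, V orthonormal.

    Cost: two compact QRs of m x r matrices, O(m r^2), plus an r x r SVD, O(r^3).
    """
    Q_L, T_L = np.linalg.qr(L, mode="reduced")  # Q_L: m x r, T_L: r x r
    Q_R, T_R = np.linalg.qr(R, mode="reduced")
    Uc, s, Vct = np.linalg.svd(T_L @ T_R.T)     # SVD of the small r x r core
    return Q_L @ Uc, s, Q_R @ Vct.T

# Usage with a tall m x r factorization
rng = np.random.default_rng(1)
m, r = 1000, 18
L = rng.standard_normal((m, r))
R = rng.standard_normal((m, r))
U, s, V = normalize_factors(L, R)
print(np.allclose(U @ np.diag(s) @ V.T, L @ R.T))  # True
```

The full \(m \times m\) matrix is formed here only to check the result; an actual integrator would work exclusively with the factors.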

One could even choose all initial values \(Y_{n+1}^0\) at random, as is sometimes done in standard Parareal. The important property is \({{\,\mathrm{rank}\,}}(Y_{n+1}^0) = r + 2q\), so that the low-rank Parareal iterations (9) are performed on the correct manifold.
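A random initial value of exact rank \(r + 2q\) can be generated, for example, as follows. This is a sketch: the helper name `random_rank_matrix`, the orthonormal random factors, and the choice of singular values in \([\text {scale}/2, \text {scale}]\) are our assumptions, made so that the rank is numerically unambiguous.

```python
import numpy as np

def random_rank_matrix(m, rank, rng, scale=1.0):
    """Random m x m matrix of exact rank `rank` with orthonormal random factors.

    Singular values are drawn uniformly from [scale/2, scale], so they stay
    well away from zero and the rank is numerically unambiguous.
    """
    U, _ = np.linalg.qr(rng.standard_normal((m, rank)))
    V, _ = np.linalg.qr(rng.standard_normal((m, rank)))
    s = rng.uniform(0.5 * scale, scale, size=rank)
    return (U * s) @ V.T

rng = np.random.default_rng(0)
r, q, m = 18, 4, 200
Y_init = random_rank_matrix(m, r + 2 * q, rng)
print(np.linalg.matrix_rank(Y_init))  # 26
```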

References

Beckermann, B., Townsend, A.: On the singular values of matrices with displacement structure. SIAM J. Matrix Anal. Appl. 38(4), 1227–1248 (2017). https://doi.org/10.1137/16M1096426

Breiding, P., Vannieuwenhoven, N.: Sensitivity of low-rank matrix recovery. arXiv:2103.00531 [cs, math] (2021)

Ceruti, G., Kusch, J., Lubich, C.: A rank-adaptive robust integrator for dynamical low-rank approximation. BIT Numer. Math. (2022). https://doi.org/10.1007/s10543-021-00907-7

Ceruti, G., Lubich, C.: An unconventional robust integrator for dynamical low-rank approximation. BIT Numer. Math. 62(1), 23–44 (2022). https://doi.org/10.1007/s10543-021-00873-0

Conte, D.: Dynamical low-rank approximation to the solution of parabolic differential equations. Appl. Numer. Math. 156, 377–384 (2020). https://doi.org/10.1016/j.apnum.2020.05.011

Dektor, A., Rodgers, A., Venturi, D.: Rank-adaptive tensor methods for high-dimensional nonlinear PDEs. arXiv:2012.05962 [physics] (2021)

Einkemmer, L., Lubich, C.: A quasi-conservative dynamical low-rank algorithm for the Vlasov equation. SIAM J. Sci. Comput. 41(5), B1061–B1081 (2019). https://doi.org/10.1137/18M1218686

Emmett, M., Minion, M.: Toward an efficient parallel in time method for partial differential equations. Commun. Appl. Math. Comput. Sci. 7(1), 105–132 (2012)

Feppon, F., Lermusiaux, P.F.J.: Dynamically orthogonal numerical schemes for efficient stochastic advection and Lagrangian transport. SIAM Rev. 60(3), 595–625 (2018). https://doi.org/10.1137/16M1109394

Feppon, F., Lermusiaux, P.F.J.: A geometric approach to dynamical model order reduction. SIAM J. Matrix Anal. Appl. 39(1), 510–538 (2018). https://doi.org/10.1137/16M1095202

Friedhoff, S., Falgout, R.D., Kolev, T.V., MacLachlan, S., Schroder, J.B.: A multigrid-in-time algorithm for solving evolution equations in parallel. Lawrence Livermore National Lab (LLNL), Livermore, CA (2012)

Gander, M.J.: 50 years of time parallel time integration. In: Multiple Shooting and Time Domain Decomposition Methods, pp. 69–113. Springer (2015)

Gander, M.J.: Time Parallel Time Integration. In: Time Parallel Time Integration, p. 90. University of Geneva (2018)

Gander, M.J., Hairer, E.: Nonlinear convergence analysis for the Parareal algorithm. In: Langer, U., Discacciati, M., Keyes, D.E., Widlund, O.B., Zulehner, W. (eds.) Domain Decomposition Methods in Science and Engineering XVII. Lecture Notes in Computational Science and Engineering, vol. 60, pp. 45–56. Springer, Berlin, Heidelberg (2008). https://doi.org/10.1007/978-3-540-75199-1_4

Gander, M.J., Kwok, F.: Numerical Analysis of Partial Differential Equations Using Maple and MATLAB. Society for Industrial and Applied Mathematics, Philadelphia, PA (2018). https://doi.org/10.1137/1.9781611975314

Gander, M.J., Lunet, T., Ruprecht, D., Speck, R.: A unified analysis framework for iterative parallel-in-time algorithms. arXiv:2203.16069 (2022)

Gander, M.J., Neumüller, M.: Analysis of a new space-time parallel multigrid algorithm for parabolic problems. SIAM J. Sci. Comput. 38(4), A2173–A2208 (2016)

Gander, M.J., Vandewalle, S.: Analysis of the parareal time-parallel time-integration method. SIAM J. Sci. Comput. 29(2), 556–578 (2007). https://doi.org/10.1137/05064607X

Golub, G.H., Van Loan, C.F.: Matrix Computations, 4th edn. Johns Hopkins Studies in the Mathematical Sciences. The Johns Hopkins University Press, Baltimore (2013)

Hairer, E., Nørsett, S.P., Wanner, G.: Solving Ordinary Differential Equations I. Springer Series in Computational Mathematics. Springer, Berlin, Heidelberg (1987). https://doi.org/10.1007/978-3-662-12607-3

Hofer, C., Langer, U., Neumüller, M., Schneckenleitner, R.: Parallel and robust preconditioning for space-time isogeometric analysis of parabolic evolution problems. SIAM J. Sci. Comput. 41(3), A1793–A1821 (2019)

Khoromskij, B.N., Oseledets, I.V., Schneider, R.: Efficient time-stepping scheme for dynamics on TT-manifolds. Preprint (2012). https://www.mis.mpg.de/preprints/2012/preprint2012_24.pdf

Kieri, E., Lubich, C., Walach, H.: Discretized dynamical low-rank approximation in the presence of small singular values. SIAM J. Numer. Anal. 54(2), 1020–1038 (2016)

Kieri, E., Vandereycken, B.: Projection methods for dynamical low-rank approximation of high-dimensional problems. Comput. Methods Appl. Math. 19(1), 73–92 (2019). https://doi.org/10.1515/cmam-2018-0029

Koch, O., Lubich, C.: Dynamical low-rank approximation. SIAM J. Matrix Anal. Appl. 29(2), 434–454 (2007). https://doi.org/10.1137/050639703

Koskela, A., Mena, H.: Analysis of Krylov subspace approximation to large-scale differential Riccati equations. Electron. Trans. Numer. Anal. 52, 431–454 (2020). https://doi.org/10.1553/etna_vol52s431

Kressner, D., Tobler, C.: Low-rank tensor Krylov subspace methods for parametrized linear systems. SIAM J. Matrix Anal. Appl. 32(4), 1288–1316 (2011). https://doi.org/10.1137/100799010

Lions, J.L., Maday, Y., Turinici, G.: Résolution d’EDP par un schéma en temps pararéel. Comptes Rendus de l’Académie des Sci. - Ser. I - Math. 332(7), 661–668 (2001). https://doi.org/10.1016/S0764-4442(00)01793-6

Lubich, C., Oseledets, I.V.: A projector-splitting integrator for dynamical low-rank approximation. BIT Numer. Math. 54(1), 171–188 (2014). https://doi.org/10.1007/s10543-013-0454-0

Lubich, C., Vandereycken, B., Walach, H.: Time integration of rank-constrained Tucker tensors. SIAM J. Numer. Anal. 56(3), 1273–1290 (2018). https://doi.org/10.1137/17M1146889

Mena, H., Ostermann, A., Pfurtscheller, L.M., Piazzola, C.: Numerical low-rank approximation of matrix differential equations. J. Comput. Appl. Math. 340, 602–614 (2018). https://doi.org/10.1016/j.cam.2018.01.035

Ong, B.W., Schroder, J.B.: Applications of time parallelization. Comput. Vis. Sci. 23(1), 1–15 (2020)

Ostermann, A., Piazzola, C., Walach, H.: Convergence of a low-rank Lie–Trotter splitting for stiff matrix differential equations. SIAM J. Numer. Anal. 57(4), 1947–1966 (2019). https://doi.org/10.1137/18M1177901

Penzl, T.: Eigenvalue decay bounds for solutions of Lyapunov equations: the symmetric case. Syst. Control Lett. 40(2), 139–144 (2000). https://doi.org/10.1016/S0167-6911(00)00010-4

Rodgers, A., Dektor, A., Venturi, D.: Adaptive integration of nonlinear evolution equations on tensor manifolds. arXiv:2008.00155 [physics] (2021)

Söderlind, G.: The logarithmic norm. History and modern theory. BIT Numer. Math. 46(3), 631–652 (2006). https://doi.org/10.1007/s10543-006-0069-9

Speck, R., Ruprecht, D., Krause, R., Emmett, M., Minion, M., Winkel, M., Gibbon, P.: A massively space-time parallel N-body solver. In: SC'12: Proceedings of the International Conference on High Performance Computing, Networking, Storage and Analysis, pp. 1–11. IEEE (2012)

Acknowledgements

This work was supported by the SNSF under research project 192363.

Funding

Open access funding provided by University of Geneva


Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare.

Additional information

Communicated by Antonella Zanna Munthe-Kaas.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Carrel, B., Gander, M.J. & Vandereycken, B. Low-rank Parareal: a low-rank parallel-in-time integrator. Bit Numer Math 63, 13 (2023). https://doi.org/10.1007/s10543-023-00953-3


DOI: https://doi.org/10.1007/s10543-023-00953-3