Abstract

We present two semidiscretizations of the Camassa–Holm equation in periodic domains based on variational formulations and energy conservation. The first is a periodic version of an existing conservative multipeakon method on the real line, for which we propose efficient computation algorithms inspired by works of Camassa and collaborators. The second method, which is of primary interest, is the periodic counterpart of a novel discretization of a two-component Camassa–Holm system based on variational principles in Lagrangian variables. Applying explicit ODE solvers to integrate in time, we compare the variational discretizations to existing methods over several numerical examples.


1 Introduction

The Camassa–Holm (CH) equation

\[ u_t - u_{txx} + 3 u u_x - 2 u_x u_{xx} - u u_{xxx} = 0 \tag{1} \]

was presented in [8] as a model for shallow water waves, where \(u = u(t,x)\) is the fluid velocity at position x at time t, and the subscripts denote partial derivatives with respect to these variables. Equation (1) can also be seen as a geodesic equation, see [22, 23, 46]. This paper focuses on numerical schemes that are inspired by this interpretation, and more specifically the flow map or Lagrangian point of view for the equation. We mention that the CH equation also turns up in models for hyperelastic rods [13, 26, 40], and that it is known to have appeared first in [29] as a member of a family of completely integrable evolution equations.

1.1 Background and setting

The CH equation (1) has been widely studied because of its rich mathematical structure and interesting properties. For instance, it is bi-Hamiltonian [29], has infinitely many conserved quantities, see, e.g., [47], and its solutions may develop singularities in finite time even for smooth initial data, see, e.g., [19, 20]. Moreover, several extensions and generalizations of the Camassa–Holm equation exist, but we will only consider one of them, which is now commonly referred to as the two-component Camassa–Holm (2CH) system. It was first introduced in [53, Eq. (43)], and can be written as

\[ \begin{aligned} u_t - u_{txx} + 3 u u_x - 2 u_x u_{xx} - u u_{xxx} + \rho \rho_x &= 0, \\ \rho_t + (\rho u)_x &= 0. \end{aligned} \tag{2} \]

That is, (1) has been augmented with a term accounting for the contribution of the fluid density \(\rho = \rho (t,x)\), and paired with a conservation law for this density.

Since the paper [8] by Camassa and Holm there have been numerous works on (1), and its extension (2) has also been widely studied. Naturally, a great variety of numerical methods has also been proposed with these equations in mind, and here we will mention just a handful of them. An adaptive finite volume method for peakons was introduced in [3]. In [14, 36] finite difference schemes were proved to converge to dissipative solutions of (1), while invariant-preserving finite difference schemes for (1) and (2) were studied numerically in [49]. Pseudospectral or Fourier collocation methods for the CH equation were studied in [44, 45], where in the latter paper the authors also proved a convergence result for the method. In [9, 10, 11, 37] the authors consider particle methods for (1) based on its Hamiltonian formulation, which are shown to converge under suitable assumptions on the initial data. On a related note, a numerical method based on the conservative multipeakon solution [38] of (1) was presented in [41]. Furthermore, several Galerkin finite element methods have been proposed for (1): an adaptive local discontinuous method was presented in [57], a Hamiltonian-conserving scheme was studied in [50], while [1] presented a Galerkin method with error estimates. More geometrically oriented methods have also been proposed, such as a geometric finite difference scheme based on discrete gradient methods [17], and multi-symplectic methods for both (1) and (2) in [15, 16]. Moreover, Cohen and Raynaud [18] present a numerical method for (1) based on direct discretization of the equivalent Lagrangian system of [39]. Such a list can never be exhaustive, and for more numerical schemes we refer to the most recent papers mentioned above and the references therein.

In this paper, however, we consider energy-preserving discretizations for (1) and (2), which are closely related to variational principles in [30, 38]. In particular, we are interested in studying how well the discretizations in [30, 38] serve as numerical methods. To this end, we will consider the initial value problem of (2), with periodic boundary conditions in order to obtain a computationally viable numerical scheme, i.e.,

Here \({\mathbb {T}}\) denotes some one-dimensional torus, and we assume \(u_0 \in {\mathbf {H}}^1({\mathbb {T}})\) and \(\rho _0 \in {\mathbf {L}}^{2}({\mathbb {T}})\). Observe that the choice \(\rho _{0}(x) \equiv 0\) in (3) yields the initial value problem for (1).

One of the hallmarks of the CH equation, and also the 2CH system, is the fact that even for smooth initial data, its solutions can develop singularities, also known as wave breaking. Specifically, this means that the wave profile u remains bounded, while the slope \(u_x\) becomes unbounded from below. At the same time energy may concentrate on sets of measure zero. This scenario is now well understood and has been described in [19, 20, 24, 31]. A fully analytical description of a solution which breaks is provided by the peakon–antipeakon example, see [38]. An important motivation for the discretizations derived in [30, 38] was for them to be able to handle such singularity formation, and we will see examples of this in our final numerical simulations.

1.2 Derivation of the semidiscretization

The variational derivation of the equation as a geodesic equation is based on Lagrangian variables, and the Lagrangian framework is an essential ingredient in the construction of global conservative solutions, see [6, 33, 39]. The other essential ingredient is the addition of an extra energy variable to the system of governing equations, which tracks the concentration of energy on sets of measure zero. Later we will see that these ingredients have all been accounted for in our discretization.

Next we will outline how the variational derivation of the CH equation is carried out in the periodic setting, before we turn to our discrete methods. In our setting, we take the period to be \(L> 0\) such that

for \(t \ge 0\). We introduce the characteristics \(y(t,\xi )\) and the Lagrangian variables

Furthermore, we require the periodic boundary conditions

Let us ignore r for the moment by setting \(r \equiv 0\), which corresponds to studying the CH equation. A rather straightforward discretization of the above variables comes from replacing the continuous parameter \(\xi \) by a discrete parameter \(\xi _i\) for i in a set of indices. The corresponding pairs \((y_i, U_i)\) can then be considered as position and velocity pairs for a set of discrete particles. We want to derive the governing equations of the discrete system from an Euler–Lagrange principle. The system of equations will thus be fully determined once we have a corresponding Lagrangian \({\mathcal {L}}(y, U)\).

We base the construction of the discrete Lagrangian on the continuous case. For the CH equation, a Lagrangian formulation is already available from the variational derivation of the equation. Let us briefly review this derivation. The motion of a particle, labeled by the variable \(\xi \), is described by the function \(y(t, \xi )\). The velocity of the particle is given by \(y_t(t, \xi ) = U(t, \xi )\). The Eulerian velocity u is given in the same reference frame through \(u(t, y(t,\xi )) = U(t,\xi )\), and the energy is given by a scalar product in the Eulerian frame. For the CH equation, the scalar product \(\left\langle \cdot ,\cdot \right\rangle \) is given by the \({\mathbf {H}}^1\)-norm

Other choices of the scalar product lead to the Burgers or Hunter–Saxton equations, see Table 1.

From the scalar product, we define the momentum m as the function which satisfies

for all v. Note that this scalar product is invariant with respect to relabeling of the particles, or right invariant in the terminology of [2]. This means that for any diffeomorphism, also called relabeling function, \(\phi (\xi )\), the transformation \(y\mapsto y\circ \phi \) and \(U\mapsto U\circ \phi \) leaves the energy invariant:

By Noether’s theorem, this invariance leads to the conserved quantity

which is presented as the first Euler theorem in [2]. We can recover the governing equation using the conserved quantity \(m_\text {c}\). We have

We use the definition of u as \(y_t = u\circ y\) and, after simplification, we obtain

which is exactly (1). Note that this derivation of the CH equation using Lagrangian variables and relabeling is similar to the derivations in [25, 43], which also can serve as starting points for multi-symplectic numerical methods.

One method for discretizing the CH equation comes from its multipeakon solution, as studied in [38]. This solution of (1) exists because the class of functions of the form

is preserved by the equation. By deriving an ODE system for \(y_i\) and \(U_i\) which define the position and height of the peaks, we can deduce their values at any time t. Then, given the points \((y_i,U_i)\) for \(i \in \{1,\dots ,n\}\), we can reconstruct the solution on the whole line by joining these points with linear combinations of the exponentials \(e^x\) and \(e^{-x}\). For the new scheme, we use instead a linear reconstruction, which is also the standard approach in finite difference methods. In this case we approximate the energy in Lagrangian variables using finite differences for \(y_i\) and \(U_i\), and then the corresponding Euler–Lagrange equation defines their time evolution. Finally, we apply a piecewise linear reconstruction to interpolate \((y_i,U_i)\) for \(i \in \{1,\dots ,n\}\).

Comparing these two reconstruction methods, we face a trade-off in how we interpolate the points \((y_i,U_i)\). Although the piecewise exponential reconstruction provides an exact solution of (1), one may, in absence of additional information on the initial data, consider it less natural to use these catenary curves to join the points instead of the more standard linear interpolation. On the other hand, linear reconstruction may approximate the initial data better, but an additional error is introduced since piecewise linear functions are not preserved by the equation, see Fig. 1.

Note that multipeakon solutions are not available in the case \(\rho \ne 0\), cf. [21], and so the method based on linear reconstruction is the only scheme presented here for the 2CH system which is based on variational principles in Lagrangian coordinates. We remark that for the Hunter–Saxton equation, the soliton-like solutions are piecewise linear, being solutions of \(u_{xx} =\sum _{i \in {\mathbb {Z}}}U_i\delta (x - y_i)\). Thus, the linear and the exact soliton reconstruction coincide for the Hunter–Saxton equation. As a matter of fact, a fully discrete numerical method for conservative solutions of the Hunter–Saxton equation was recently developed in [35]; it is primarily set in Eulerian coordinates, but employs characteristics to handle wave breaking.

The rest of this paper is organized as follows. In Sect. 2 we briefly recall the conservative multipeakon method introduced in [38], where a finite set of peakons serves as the particles discretizing the CH equation, and outline how the corresponding system is derived for the periodic case. Moreover, we present efficient algorithms for computing the right-hand sides of their respective ODEs, which are inspired by the fast summation algorithms of Camassa et al. for their particle methods [9, 10]. Section 3 describes the new variational scheme in detail, and some emphasis is put on deriving fundamental solutions for a discrete momentum operator, which in turn allows for collisions between characteristics and hence wave breaking. Finally, in Sect. 4 we very briefly describe the methods we have chosen to compare with, before turning to a series of numerical examples of both quantitative and qualitative nature.

2 Conservative multipeakon scheme

An interesting feature of (1) on the real line is that it admits so-called multipeakon solutions, that is, solutions of the form

defined by the ODE system

for \(i \in \{1,\dots ,n\}\), where \(q_i\) and \(p_i\) can respectively be seen as the position and momentum of a particle labeled i. In this sense, \(q_i\) is analogous to the discrete characteristic \(y_i\) in the previous section. Several authors have studied the discrete system (8), in particular Camassa and collaborators who named it an integrable particle method, see for instance [7, 9, 37]. This system is Hamiltonian, and one of its hallmarks is that for initial data satisfying \(q_i \ne q_j\) for \(i \ne j\) and all \(p_i\) having the same sign, one can find an explicit Lax pair, meaning the discrete system is in fact integrable. The Lax pair also serves as a starting point for studying general conservative multipeakon solutions with the help of spectral theory, see [27, 28].
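As a rough illustration of the particle system discussed above, the following sketch evaluates the classical multipeakon system naively. It assumes the standard Hamiltonian form \(\dot q_i = \sum _j p_j e^{-|q_i - q_j|}\), \(\dot p_i = p_i \sum _j p_j\, \mathrm {sgn}(q_i - q_j) e^{-|q_i - q_j|}\) with Hamiltonian \(\tfrac{1}{2}\sum _{i,j} p_i p_j e^{-|q_i - q_j|}\), which is taken from the general literature rather than from the displayed system. All function names are hypothetical, and the classical Runge–Kutta step is only one possible explicit integrator.

```python
import math


def sign(x):
    """Return -1, 0 or 1 according to the sign of x."""
    return (x > 0) - (x < 0)


def multipeakon_rhs(q, p):
    """Naive O(n^2) right-hand side of the assumed multipeakon system."""
    n = len(q)
    dq, dp = [], []
    for i in range(n):
        s_q = 0.0
        s_p = 0.0
        for j in range(n):
            e = math.exp(-abs(q[i] - q[j]))
            s_q += p[j] * e
            s_p += p[j] * sign(q[i] - q[j]) * e
        dq.append(s_q)
        dp.append(p[i] * s_p)
    return dq, dp


def hamiltonian(q, p):
    """Assumed Hamiltonian: one half of the double kernel sum."""
    n = len(q)
    return 0.5 * sum(p[i] * p[j] * math.exp(-abs(q[i] - q[j]))
                     for i in range(n) for j in range(n))


def rk4_step(q, p, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(a, da, s):
        return [x + s * dx for x, dx in zip(a, da)]

    k1q, k1p = multipeakon_rhs(q, p)
    k2q, k2p = multipeakon_rhs(shifted(q, k1q, dt / 2), shifted(p, k1p, dt / 2))
    k3q, k3p = multipeakon_rhs(shifted(q, k2q, dt / 2), shifted(p, k2p, dt / 2))
    k4q, k4p = multipeakon_rhs(shifted(q, k3q, dt), shifted(p, k3p, dt))
    q_new = [x + dt / 6 * (a + 2 * b + 2 * c + d)
             for x, a, b, c, d in zip(q, k1q, k2q, k3q, k4q)]
    p_new = [x + dt / 6 * (a + 2 * b + 2 * c + d)
             for x, a, b, c, d in zip(p, k1p, k2p, k3p, k4p)]
    return q_new, p_new


if __name__ == "__main__":
    # Two peakons with momenta of the same sign, so no collision occurs.
    q, p = [0.0, 1.0], [1.0, 0.5]
    h0 = hamiltonian(q, p)
    for _ in range(100):
        q, p = rk4_step(q, p, 0.01)
    print("energy drift:", abs(hamiltonian(q, p) - h0))
```

For momenta of mixed sign this formulation becomes unusable near collisions, because the momenta are unbounded there.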

System (8) is, however, not suited as a numerical method for extending solutions beyond the collision of particles, which for instance occurs for the two-peakon initial data with \(q_1 < q_2\), and \(p_2< 0 < p_1\). Indeed, as \(\left| q_2 - q_1\right| \rightarrow 0\), the momenta blow up as \((p_1,p_2) \rightarrow (+\infty ,-\infty )\), cf. [32, 56]. Even though this happens at a rate such that the associated energy remains bounded, unbounded solution variables are not well suited for numerical computations. One alternative way of handling this is to include an algorithm which transfers momentum between particles which are close enough according to some criterion, see for instance [12]. However, we prefer to use the method presented next, where a different choice of variables, which remain bounded at collision-time, is introduced.

2.1 Real line version

In [38] the authors propose a method for computing conservative multipeakon solutions of the CH equation (1), based on the observation that between the peaks located at \(q_i\) and \(q_{i+1}\) in (7), u satisfies the boundary value problem \(u-u_{xx} = 0\) with boundary conditions \(u(t,q_i) =:u_i(t)\) and \(u(t,q_{i+1}(t)) =:u_{i+1}(t)\). Moreover, from the transport equation for the energy density one can derive the time evolution of \(H_i\) which denotes the cumulative energy up to the point \(q_i\). Using \(y_i\) instead of \(q_i\) to denote the \(i^{\mathrm{th}}\) characteristic we then obtain the discrete system

for \(i \in \{1,\dots ,n\}\) with

We note that the solution u is of the form \(u(t,x) = A_i(t) e^x + B_i(t) e^{-x}\) between the peaks \(y_i\) and \(y_{i+1}\) with coefficients

and where we for any grid function \(\{v_i\}_{i=0}^{n}\) have defined

In order to compute the solution for \(x < y_1\) and \(x > y_n\), one introduces the convention \((y_0,u_0) = (-\infty ,0)\) and \((y_{n+1},u_{n+1}) = (\infty ,0)\). We also have the relation

which can be computed as

Here we emphasize that the total energy \(H_{n+1}\) is then given by

since \(H_0 = 0\). Due to the explicit form of u we may compute P and Q as

with \(Q_{ij} = -\sigma _{ij} P_{ij}\) and

where we have defined

For details on how such multipeakons can be used to obtain a numerical scheme for (1) we refer to [41].

2.1.1 Fast summation algorithm

Before presenting a periodic version of the latter method, we note that the above method can be computationally expensive if one naively computes (13) for each i and j, amounting to a complexity of \({\mathcal {O}}(n^2)\) for computing the right-hand side of (9). Inspired by [9] and borrowing their terminology we shall propose a fast summation algorithm for computing (12) with complexity \({\mathcal {O}}(n)\). Indeed, this can be done by noticing that our \(P_i\) and \(Q_i\) share a similar structure with the right-hand sides of (8). To this end we make the splittings

and note that \(f^{\mathrm{l}}_i\) and \(f^{\mathrm{r}}_i\) satisfy the recursions

and

for \(i \in \{1,\dots ,n-1\}\), where we have defined

Moreover we have the starting points for the recursions given by

Clearly, computing \(f^{\mathrm{l}}\) and \(f^{\mathrm{r}}\) recursively is of complexity \({\mathcal {O}}(n)\), while adding and subtracting them to produce P and Q is also of complexity \({\mathcal {O}}(n)\), which yields the desired result.
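The recursion idea can be illustrated on a simpler model problem, namely the kernel sum \(S_i = \sum _j p_j e^{-|q_i - q_j|}\) for sorted positions \(q_1 \le \dots \le q_n\). The sketch below is not the recursion for \(P_i\) and \(Q_i\) itself, whose coefficients are not reproduced here. It only shows the general left/right splitting technique under that simplifying assumption, and all names are hypothetical.

```python
import math


def kernel_sum_naive(q, p):
    """O(n^2) reference: S_i = sum_j p_j * exp(-|q_i - q_j|)."""
    n = len(q)
    return [sum(p[j] * math.exp(-abs(q[i] - q[j])) for j in range(n))
            for i in range(n)]


def kernel_sum_fast(q, p):
    """O(n) evaluation of the same sums, assuming q is sorted increasingly."""
    n = len(q)
    left = [0.0] * n    # left[i]  = sum_{j<i} p_j * exp(-(q_i - q_j))
    right = [0.0] * n   # right[i] = sum_{j>i} p_j * exp(-(q_j - q_i))
    for i in range(1, n):
        left[i] = math.exp(-(q[i] - q[i - 1])) * (left[i - 1] + p[i - 1])
    for i in range(n - 2, -1, -1):
        right[i] = math.exp(-(q[i + 1] - q[i])) * (right[i + 1] + p[i + 1])
    return [left[i] + p[i] + right[i] for i in range(n)]


if __name__ == "__main__":
    q = [0.0, 0.4, 1.1, 1.5, 2.7]
    p = [1.0, -0.5, 2.0, 0.3, -1.2]
    print(kernel_sum_naive(q, p))
    print(kernel_sum_fast(q, p))
```

Each recursion touches every index once, so the cost is linear in n. The contributions from the left and from the right are kept in separate arrays so that each involves only decaying exponentials of neighbour distances.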

2.2 Periodic version

Now for the periodic version of (9) there are only a few modifications needed. First of all we have to replace the “peakons at infinity” given by \((y_0, u_0) = (-\infty ,0)\) and \((y_{n+1},u_{n+1}) = (\infty ,0)\) which in some sense define the domain of definition for the solution. The new domain will instead be located between the “boundary peakons” \((y_0,u_0) = (y_n-L,u_n)\) and \((y_n,u_n)\). Thus, we are still free to choose n peakons, but we impose periodicity by introducing an extra peakon at \(y_n-L\) with height \(u_n\). We also have to redefine \(H_i\), which now will denote the energy contained between \(y_0\) and \(y_i\). Thus we have \(H_0 = 0\), \(H_n\) is the total energy of an interval of length L, while each \(H_i\) can be computed as

with \(\delta H_i\) defined in (11). In addition, since the energy is now integrated over the interval \([y_0, y_i]\) we have to replace the evolution equation for \(H_i\) in (9) with

where the last identity follows from the periodicity of \(P_i\) by virtue of \(\delta y_i\), \(u_i\), and \(\delta H_i\) being n-periodic.

Moreover, we have to replace \(e^{-\left| y-x\right| }\) in \(P_i\) and \(Q_i\) with its periodic counterpart

and we now integrate over \([y_0,y_n]\) instead of \({\mathbb {R}}\). This is analogous to the derivation of the periodic particle method in [10], and the numerical results of [37]. The courageous reader may verify that the calculations in [38] can be reused to a great extent. In the end we find that the expressions for \(P_i\) and \(Q_i\) are essentially the same; we only need to replace each occurrence of \(e^{-\sigma _{ij}(y_i - {\bar{y}}_j)}\) and its “derivative” with respect to \(y_i\), \(-\sigma _{ij} e^{-\sigma _{ij}(y_i - {\bar{y}}_j)}\), with

respectively. To be precise, \(P_i\) and \(Q_i\) are given by

for \(i \in \{1,\dots ,n\}\), with

and

for \(j \in \{1,\dots ,n-1\}\). To summarize, the periodic system reads

for \(i \in \{1,\dots ,n\}\), and \(P_i\) and \(Q_i\) defined by (17), (18), and (19).

Example 1

(i) Following [38, Ex. 4.2] we set \(n = 1\), and use the periodicity to find \({\bar{y}}_0 = y_1 - L/2\), \(\delta y_0 = L/2\), \({\bar{u}}_0 = u_1\), \(\delta u_1 = 0\), and \(\delta H_0 = u_1^2 \tanh (L/2)\). Plugging into (18) and (19) we find

Then (20) yields \({\dot{u}}_1 = 0\) and \({\dot{H}}_1 = 0\), and setting \(u_1(t) \equiv c\) we obtain \(y_1(t) = y_1(0) + c t\). This shows that for \(n = 1\), in complete analogy to the real line case, the evolution equation for \(H_1\) decouples from the other equations, and we find that a periodic peakon travels with constant velocity c, equal to its height at the peak.

(ii) With substantially more effort compared to (i) we could also consider \(n = 2\) with antisymmetric initial datum for u to recover the periodic peakon–antipeakon solution computed in [16, pp. 5505–5510].
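Part (i) of the example can be checked numerically with a small sketch. It assumes that the single periodic peakon has the profile \(u(t,x) = c \cosh (d - L/2)/\cosh (L/2)\), where d is the distance from x to the peak modulo L, and that the energy density is \(\tfrac{1}{2}(u^2 + u_x^2)\). Both are assumptions chosen to be consistent with the value \(u_1^2 \tanh (L/2)\) quoted above, and the function names are hypothetical.

```python
import math


def periodic_peakon(x, t, c, L, x0=0.0):
    """Assumed travelling periodic peakon with peak height c and speed c."""
    d = (x - x0 - c * t) % L  # distance to the peak on the left, in [0, L)
    return c * math.cosh(d - L / 2) / math.cosh(L / 2)


def peakon_energy(c, L, m=20000):
    """Midpoint-rule value of (1/2) * integral of (u^2 + u_x^2) over one period."""
    h = L / m
    total = 0.0
    for k in range(m):
        d = (k + 0.5) * h
        u = c * math.cosh(d - L / 2) / math.cosh(L / 2)
        ux = c * math.sinh(d - L / 2) / math.cosh(L / 2)
        total += 0.5 * (u * u + ux * ux) * h
    return total


if __name__ == "__main__":
    c, L = 1.5, 2.0
    print("quadrature :", peakon_energy(c, L))
    print("closed form:", c ** 2 * math.tanh(L / 2))
```

The two printed numbers should agree up to the quadrature error, and neither depends on t, in line with \({\dot{H}}_1 = 0\).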

2.2.1 Fast summation algorithm

Drawing further inspiration from [10] we propose a fast summation algorithm for the periodic scheme as well. Following their lead we use the infinite sum rather than the hyperbolic function representation of the periodic kernel. Using geometric series we find

valid for \(\left| x-y\right| \le L\). Then, replacing \(e^{-\sigma _{ij}(y_i-{\bar{y}}_j)}\) in (13) with

we find that the periodic \(P_i\) and \(Q_i\) can be written

where in analogy to the full line case we have defined

and in addition

with \(a_j\) and \(b_j\) defined in (14). Defining \(g^{\mathrm{l}}_i :=g^{-}_i + f^{\mathrm{l}}_i\) and \(g^{\mathrm{r}}_i :=g^{+}_i + f^{\mathrm{r}}_i\), these functions satisfy the recursions

and

for \(i \in \{1,\dots ,n-1\}\). Once more, the recursions allow us to compute \(P_i\) and \(Q_i\) with complexity \({\mathcal {O}}(n)\) rather than \({\mathcal {O}}(n^2)\) for the naive computation of each distinct \(P_{ij}\) and \(Q_{ij}\) in (18) and (19).
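The geometric-series identity behind the periodic kernel can be checked numerically. The sketch below compares a truncated version of the infinite sum \(\sum _{m \in {\mathbb {Z}}} e^{-|d + mL|}\) with the hyperbolic expression \(\cosh (|d| - L/2)/\sinh (L/2)\) for \(|d| \le L\). The normalization (no prefactor) and the function names are assumptions made for illustration and may differ from the convention used in the displayed formulas.

```python
import math


def periodized_kernel_sum(d, L, terms=60):
    """Truncated sum over m = -terms..terms of exp(-|d + m L|)."""
    return sum(math.exp(-abs(d + m * L)) for m in range(-terms, terms + 1))


def periodized_kernel_closed(d, L):
    """Hyperbolic form cosh(|d| - L/2) / sinh(L/2), valid for |d| <= L."""
    return math.cosh(abs(d) - L / 2) / math.sinh(L / 2)


if __name__ == "__main__":
    L = 1.0
    for d in (-1.0, -0.3, 0.0, 0.4, 1.0):
        print(d, periodized_kernel_sum(d, L), periodized_kernel_closed(d, L))
```

The infinite-sum form is the one that splits into decaying exponentials, which is why it is the more convenient starting point for recursions of the type used in the fast summation.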

3 Variational finite difference Lagrangian discretization

Here we describe the method which is based on a finite difference discretization in Lagrangian coordinates, as derived in [30].

Denoting the number of grid cells \(n \in {\mathbb {N}}\), we introduce the grid points \(\xi _i = i \varDelta \!\xi \) for \(i \in \{0,\dots ,n-1\}\) and step size \(\varDelta \!\xi > 0\) such that \(n \varDelta \!\xi = L\). These will serve as “labels” for our discrete characteristics \(y_i(t)\) which can be regarded as approximations of \(y(t,\xi _i)\). In a similar spirit we introduce \(U_i(t)\) and \(r_i(t)\). For our discrete variables, the periodicity in the continuous case (4) translates into

For a grid function \(f = \{f_i\}_{i \in {\mathbb {Z}}}\) we introduce the forward difference operator \(\mathrm {D}_+\) defined by

where we also have included the backward and central differences for future reference. We will use the standard Euclidean scalar product in \({\mathbb {R}}^n\) scaled by the grid cell size \(\varDelta \!\xi \) to obtain a Riemann sum approximation of the integral on \({\mathbb {T}}\). Moreover, we introduce the space \({\mathbb {R}}^n_{\text {per}}\) of sequences \(v = \{v_j\}_{j\in {\mathbb {Z}}}\) satisfying \(v_{j+n} = v_{j}\), and which is isomorphic to \({\mathbb {R}}^n\). For n-periodic sequences, the adjoint (or transpose) \(\mathrm {D}^\top \) of the discrete difference operator \(\mathrm {D}\) is defined by the relation

For instance, summation by parts shows that the differences in (22) satisfy \(\mathrm {D}_\pm ^{\top } = -\mathrm {D}_{\mp }\) and \(\mathrm {D}_0^{\top } = -\mathrm {D}_0\).
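The adjoint relations can be verified directly for n-periodic sequences. The sketch below assumes the standard definitions \(\mathrm {D}_+ f_i = (f_{i+1} - f_i)/\varDelta \!\xi \), \(\mathrm {D}_- f_i = (f_i - f_{i-1})/\varDelta \!\xi \), and \(\mathrm {D}_0 f_i = (f_{i+1} - f_{i-1})/(2\varDelta \!\xi )\), together with the scaled Euclidean scalar product. The function names are hypothetical.

```python
def d_plus(f, dxi):
    """Forward difference of an n-periodic sequence stored as a list of length n."""
    n = len(f)
    return [(f[(i + 1) % n] - f[i]) / dxi for i in range(n)]


def d_minus(f, dxi):
    """Backward difference; f[i - 1] wraps around for i = 0 via Python indexing."""
    n = len(f)
    return [(f[i] - f[i - 1]) / dxi for i in range(n)]


def d_zero(f, dxi):
    """Central difference, the average of the forward and backward differences."""
    n = len(f)
    return [(f[(i + 1) % n] - f[i - 1]) / (2 * dxi) for i in range(n)]


def inner(v, w, dxi):
    """Euclidean scalar product scaled by the grid cell size."""
    return dxi * sum(a * b for a, b in zip(v, w))


if __name__ == "__main__":
    dxi = 0.2
    v = [0.3, -1.0, 2.5, 0.7, -0.4]
    w = [1.1, 0.2, -0.6, 3.0, 0.9]
    # <D_+ v, w> + <v, D_- w> should vanish up to rounding.
    print(inner(d_plus(v, dxi), w, dxi) + inner(v, d_minus(w, dxi), dxi))
```

Periodicity is what removes the boundary terms. For sequences that are not n-periodic, such as \(y_i\) itself, the identities only hold after subtracting the linear part.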

The variational derivation of the scheme for the CH equation (1) is based on an approximation of the energy given by

which corresponds to (5) in the continuous case. For the 2CH system (2) one has the identity

in the continuous setting. Based on this we introduce the discrete identity

which allows us to express the discrete density \(\rho _i(t)\) as a function of \(\mathrm {D}_+ y_i(t)\) and the initial data. Accordingly, we have the approximate relation \(\rho (t,y(t,\xi _i)) \approx (\rho _0)_i \mathrm {D}_+(y_0)_i / \mathrm {D}_+y_i(t)\).

Furthermore, the energy of the discrete 2CH system contains an additional term compared to (23), and reads

Note that compared to [30], one has here set \(\rho _\infty = 0\) as there is no need to decompose \(\rho \) on a bounded periodic domain. Following the procedure in the aforementioned paper we obtain a semidiscrete system which is valid also in the periodic case, namely

for initial data \(y_i(0) = (y_0)_i\) and \(U_i(0) = (U_0)_i\), and indices \(i \in \{0,\dots ,n-1\}\). Observe that in solving (25) we obtain approximations of the fluid velocity in Lagrangian variables since \(y_i(t) \approx y(t,\xi _i)\) and \(U_{i}(t) \approx u(t,y(t,\xi _{i}))\).

Note that (25) does not give an explicit expression for the time derivative \({\dot{U}}\), as a solution dependent operator has been applied to it. For \(\mathrm {D}_+ y_i \in {\mathbb {R}}^n_{\text {per}}\) and an arbitrary sequence \(w = \{w_i\}_{i\in {\mathbb {Z}}} \in {\mathbb {R}}^n_{\text {per}}\), let us define the discrete momentum operator \(\mathrm {A}[{\mathrm {D}_+ y}] : {\mathbb {R}}^n_{\text {per}}\rightarrow {\mathbb {R}}^n_{\text {per}}\) by

Note that when \(\mathrm {D}_+ y_i = 1\), (26) is a discrete version of the Sturm–Liouville operator \(\mathrm {Id}-\partial _{xx}\). The name momentum operator comes from the fact that the discrete energy can be written as the scalar product of \(\mathrm {A}[{\mathrm {D}_+ y}] U\) and U,

which corresponds to (6). Moreover, as in [30] we find that (25) preserves the total momentum

where the final identity comes from telescopic cancellations and periodicity.
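The two statements above can be illustrated for the CH case (\(r \equiv 0\)) with a short sketch. It assumes that the momentum operator has the form \((\mathrm {A}[\mathrm {D}_+y] w)_i = (\mathrm {D}_+y_i) w_i - \mathrm {D}_-(\mathrm {D}_+w_i/\mathrm {D}_+y_i)\) and that the discrete energy is \(\tfrac{1}{2}\varDelta \!\xi \sum _i \bigl (U_i^2\, \mathrm {D}_+y_i + (\mathrm {D}_+U_i)^2/\mathrm {D}_+y_i\bigr )\). These forms are not reproduced in the text here, so they should be read as assumptions. The names are hypothetical, and the sketch requires \(\mathrm {D}_+y_i > 0\).

```python
def momentum_operator(dy, w, dxi):
    """Assumed operator (A w)_i = dy_i * w_i - D_-(D_+ w / dy)_i, with dy_i = D_+ y_i > 0."""
    n = len(w)
    flux = [(w[(i + 1) % n] - w[i]) / (dxi * dy[i]) for i in range(n)]
    # flux[i - 1] wraps around for i = 0 because the sequences are n-periodic.
    return [dy[i] * w[i] - (flux[i] - flux[i - 1]) / dxi for i in range(n)]


def discrete_energy(dy, U, dxi):
    """Assumed discrete energy for the CH case (no density term)."""
    n = len(U)
    return 0.5 * dxi * sum(
        U[i] ** 2 * dy[i] + ((U[(i + 1) % n] - U[i]) / dxi) ** 2 / dy[i]
        for i in range(n)
    )


if __name__ == "__main__":
    dxi = 0.25
    dy = [1.2, 0.8, 0.5, 1.5]      # values of D_+ y_i; they average to 1
    U = [0.3, -0.7, 1.1, 0.4]
    AU = momentum_operator(dy, U, dxi)
    print("energy as scalar product:", 0.5 * dxi * sum(a * u for a, u in zip(AU, U)))
    print("energy from definition  :", discrete_energy(dy, U, dxi))
    print("total momentum          :", dxi * sum(AU),
          "=", dxi * sum(d * u for d, u in zip(dy, U)))
```

Both identities follow from summation by parts, and the difference term drops out of the total momentum by telescoping.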

3.1 Presentation of the scheme for global in time solutions

To follow [30] in obtaining a scheme which allows for global in time solutions, we have to invert the discrete momentum operator (26), and in the aforementioned paper this is done by finding a set of summation kernels, or fundamental solutions, \(g_{i,j}\), \(\gamma _{i,j}\), \(k_{i,j}\), and \(\kappa _{i,j}\) satisfying

where \(\mathrm {D}_{j\pm }\) denotes differences with respect to the index j. Let us for the moment assume that we have a corresponding set of kernels for the periodic case, namely

and which are n-periodic in their index j for fixed i. The existence of such kernels will be justified in the next subsection.

In the end we want to derive a system which is equivalent to (25) for \(\mathrm {D}_+y_j > 0\) and which serves as a finite-dimensional analogue to [30, Eq. (4.42)]. Following the convention therein we decompose \(y_j = \zeta _j + \xi _j\), which by (21) implies that \(\zeta \) is n-periodic as well: \(\zeta _{j+n} = \zeta _j\). Then, with appropriate modifications of the approach in [30], our system for \(j \in \{0,\dots ,{n-1}\}\) reads

where we have defined

and \(h_j\) is defined to satisfy

We note that we could have included

in (30), but since r does not appear in any of the other equations, we choose to omit it. Note that when considering the CH equation, \(\rho \), and thus also r, vanishes identically. In the current setting, this only affects the presence of r in the identity (32).

Observe that \(R_j\) and \(Q_j\) in (31) are n-periodic by virtue of the kernels being n-periodic in j, and so it follows that (30) is of the form \({\dot{X}}_j(t) = F_j(X(t))\), where \(X_{j+n}(0) = X_j(0)\) and \(F_{j+n}(X) = F_j(X)\). Then the integral form of (30) shows that \(X_{j+n}(t) = X_j(t)\), and so any solution of this equation must be n-periodic.

We also note that we can equivalently formulate (30) more in the spirit of [30, Eq. (4.42)] by defining

and replacing (30a) and (30c) to obtain

where we have combined (30c) and (33) with the periodicity of U and R to get (34c). In this case we note that \(\mathrm {D}_+H_j = h_j\) for \(j \in \{0,\dots ,{n-1}\}\), \({\dot{H}}_{n}(t) = {\dot{H}}_0(t) \equiv 0\), and \(H_n(t) = H_n(0)\) is the total energy of the system. The energy \(H_n\) is a reformulation of (24) in Lagrangian variables. Equation (34) is in fact our preferred version of the scheme, as it more closely resembles (20) and preserves the discrete energy \(H_n\) identically.

An important observation is that the sequences defined in (31) solve

which is equivalent to

for the tridiagonal \(2n \times 2n\)-matrix

with corners. As shown in the next section, the matrix (36) is invertible whenever \(\mathrm {D}_+y_j \ge 0\) for \(j \in \{0,\dots ,n-1\}\). Thus, (35) provides a far more practical approach for computing the right-hand side of (30) than the identities (31), especially for numerical methods, as there is no need to compute the kernels in (29). Indeed, if one uses an explicit method to integrate in time, given y, U and h we can solve (35) to obtain the corresponding R and Q.

3.2 Inversion of the discrete momentum operator

The alert reader may wonder why we work with the \(2n \times 2n\) matrix (36) when inverting the operator (26) defined in only n points. This comes from the approach in [30] which enables the discretization to handle “discrete” wave breaking, i.e., \(\mathrm {D}_+y_i = 0\). By introducing a change of variables we rewrite the second order difference operator (26) as the first order matrix operator appearing in (29). Thus we avoid \(\mathrm {D}_+y\) in the denominator at the cost of increasing the size of the system.

When introducing the change of variables, we lose some desirable properties which would have made it easy to establish the invertibility of the matrix corresponding to (36) in the cases where \(\mathrm {D}_+y_i \ge c\) for some positive constant c. This would for instance be the case for discretizations of (3) where \(\rho _{0}^2(x) \ge d\) for a constant \(d > 0\), since it is then known that wave breaking cannot occur, see [34, Thm. 4.5]. In particular, we lose symmetry of the matrix which would have enabled us to use the standard argument involving diagonal dominance, as used for instance in [49] for a discrete Helmholtz operator. Our matrix (36) is clearly not diagonally dominant, but it is still invertible, as shown in the following proposition.

Proposition 1

Assume \(y_n - y_0 = L\) and \(\mathrm {D}_+y_i \ge 0\) for \(i \in \{0,\dots ,n-1\}\). Then \(A[\mathrm {D}_+y]\) defined in (36) is invertible for any \(n \in {\mathbb {N}}\).

Proof

We will prove that the determinant of \(A[\mathrm {D}_+y]\) is bounded from below by a strictly positive constant. Thus it is never singular.

First we recall the matrix

which played an essential part when inverting the discrete momentum operator on the full line in [30]. Below we will see that it plays a role in the periodic case as well, and we emphasize the property \(\det {A_j} = 1\).

Turning back to \(A[\mathrm {D}_+y]\), we consider the rescaled matrix \(\varDelta \!\xi A[\mathrm {D}_+y]\) in order to have the absolute values of the off-diagonal elements equal to one. We observe that this matrix is tridiagonal, with nonzero corners owing to the periodic boundary. Then, the clever argument in [52, Lem. 1] gives an identity for the determinant of a general matrix of this form, which in our case reads

with

and where the last identity in (38) comes from \(\det (\varPi _0) = 1\). Next, we note that each factor \(A_j\) in \(\varPi _0\) can be written as

for which we have

Then we may expand \(\varPi _0\) as

which means that its trace can be expanded as

Since all factors are nonnegative, we throw away most terms to obtain

where the final identity follows from \(y_n - y_0 = L\). Hence, combining the above with \(n \varDelta \!\xi = L\) we obtain the lower bound

which clearly shows \(A[\mathrm {D}_+y]\) to be nonsingular for any \(n \in {\mathbb {N}}\). \(\square \)
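The positivity argument in the proof can be explored numerically. The sketch below assumes that each factor has the form \(A_j = \bigl [{\begin{matrix} 1 + a_j^2 & a_j \\ a_j & 1 \end{matrix}}\bigr ]\) with \(a_j = \varDelta \!\xi \, \mathrm {D}_+y_j \ge 0\), which is inferred from the stated properties (symmetry, unit determinant) and not copied from (37). It then checks that the trace of the ordered product exceeds 2 by at least \((\sum _j a_j)^2 = L^2\), a bound that follows from discarding nonnegative terms and may differ in form from the constant used in the proof. All names are hypothetical.

```python
def transfer_matrix(a):
    """Assumed factor A_j for a = dxi * D_+ y_j >= 0; symmetric with determinant one."""
    return [[1.0 + a * a, a], [a, 1.0]]


def matmul2(X, Y):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def ordered_product(steps):
    """Product A_{n-1} ... A_1 A_0 over one period."""
    P = [[1.0, 0.0], [0.0, 1.0]]
    for a in steps:
        P = matmul2(transfer_matrix(a), P)
    return P


def trace(M):
    return M[0][0] + M[1][1]


def det(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


if __name__ == "__main__":
    # Zero entries mimic discrete wave breaking, D_+ y_j = 0.
    steps = [0.0, 0.3, 0.0, 0.5, 0.2]
    L = sum(steps)
    P = ordered_product(steps)
    print("det:", det(P), " trace - 2:", trace(P) - 2.0, " L^2:", L * L)
```

Since the bound depends only on the sum of the \(a_j\), it is unaffected by individual entries vanishing, which is the situation the change of variables is designed to handle.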

To prove the existence of global solutions to the governing equations (30) or (34) by a fixed point argument, we have to establish Lipschitz continuity of the right-hand side. However, Lipschitz bounds for the inverse operator of \(\mathrm {A}[{\mathrm {D}_+y}]\) are difficult to obtain directly. In particular, we see that the estimates in Proposition 1 rely on the positivity of the sequence \(\mathrm {D}_+y\), which is difficult to impose in a fixed-point argument. Therefore, we will have to follow the approach developed in [30] where we introduce the fundamental solutions for the operator \(\mathrm {A}[{\mathrm {D}_+y}]\) and propagate those in time together with the solution. Proposition 1 gives us the existence of the fundamental solutions in (29). Indeed, comparing the equations (29) with the matrix (36) one can verify that each of \(G_{i,j}\), \(\varGamma _{i,j}\), \(K_{i,j}\), and \({\mathcal {K}}_{i,j}\) for \(i,j \in \{0,\dots ,n-1\}\), \(4n^2\) in total, appears as a distinct entry in the inverse of \(\varDelta \!\xi A[\mathrm {D}_+y]\).

We do not detail here the argument developed in [30] which shows the existence of global solutions to the semidiscrete system (30). In fact, the periodic case is of finite dimension and therefore easier to treat than the case of the real line. Instead we will devote most of the remaining paper to numerical results. Before that, we present nevertheless some interesting properties of the fundamental solutions that can be derived in the periodic case, and which show the connection to the fundamental solutions on the real line. Readers more interested in numerical results may skip to Sect. 4.

3.2.1 Properties of the fundamental solutions

Here we present an alternative method for deriving the periodic fundamental solutions, more in line with the procedure in [30]. The construction is done in two steps, the first of which is to find the fundamental solutions on the infinite grid \(\varDelta \!\xi {\mathbb {Z}}\) as was done in [30]. Then it turns out that we can periodize these solutions to find fundamental solutions for the grid given by \(i \varDelta \!\xi \) for \(0 \le i < n\). In this endeavor we only assume the periodicity \(y_{i+n} = y_i + L\) and \(\mathrm {D}_+y_i \ge 0\), as was done in Proposition 1.

Due to the periodicity, we can think of the sequences \(\{\mathrm {D}_+y_j, U_j, h_j, r_j\}_{j \in {\mathbb {Z}}}\) being a repetition of \(\{\mathrm {D}_+y_j, U_j, h_j, r_j\}_{j = 0}^{n-1}\), such that \(\mathrm {D}_+y_{j+k n} = \mathrm {D}_+y_j\) for \(j,k \in {\mathbb {Z}}\), and similarly for the other entries. Furthermore, it enforces the relation

which together with \(n \varDelta \!\xi = L\) yields

This also leads to the upper bounds

but note that this bound can only be attained if \(\mathrm {D}_+y_i = 0\) for every index other than the one achieving the maximum.

To find a fundamental solution \(g_{i,j}\) for the operator (26) defined on the real line, that is \(g_{i,j}\) which satisfies

we consider the homogeneous operator equation

By introducing the quantity \(\gamma _i = \mathrm {D}_+g_i / \mathrm {D}_+y_i\) we can restate (42) as

Thus, if for any index i we prescribe values for \(g_i\) and \(\gamma _{i-1}\), the corresponding solution of (42) in any other index can be found by repeated multiplication with the matrix \(A_i\) from (37) and its inverse. The eigenvalues and eigenvectors of \(A_i\) are found in [30, Lem. 3.3], and we briefly state its eigenvalues

and underline that \(\lambda _i^+ \lambda _i^- = 1\). Using (41) and the inequality

we find that we may write

for some \((1+L/2)^{-1} \le c_i \le \sqrt{1+ (L/2 )^2} + L/2\).

To construct fundamental solutions for the operator we need to find the correct homogeneous solutions for our purpose, namely those with exponential decay. In [30] one used the asymptotic relation \(\lim _{i\rightarrow \pm \infty } \mathrm {D}_+y_i = 1\) to deduce the existence of limit matrices, and the correct values to prescribe for g and \(\gamma \) were given by the eigenvectors of these matrices. The periodicity of \(\mathrm {D}_+y\) prevents us from applying the same procedure to the problem at hand, but fortunately it turns out that a different argument can be applied in our case. In fact, we can draw much inspiration from [54, Chap. 7] which treats Jacobi operators with periodic coefficients, since the operator (26) can be regarded as a particular case of such operators. However, we make some modifications in this argument for our setting, such as introducing the variable \(\gamma _i\) from earlier, and using \([g_i, \gamma _{i-1}]^\top \) as the vector to be propagated instead of \([g_i, g_{i-1}]^\top \). The reason for this is to ensure the nice properties of the transition matrix \(A_i\), such as symmetry and determinant equal to one, and to avoid problems with dividing by zero when \(\mathrm {D}_+y_i = 0\). See also the discussion leading up to [30, Lem. 3.3].

Proposition 2

The solutions of the homogeneous operator equation are of the form

which corresponds to the Floquet solutions in [54, Thm. 7.3].

Proof

Let us follow [30, Eq. (3.23)] in defining the transition matrix

which satisfies

By the n-periodicity of \(A_i\) we find \(\varPhi _{j+n,i+n} = \varPhi _{j,i}\), and since \(\det {A_i} = 1\) it follows that \(\det {\varPhi _{j,i}} = 1\). Next, for any \(i_0 \in \{0,\dots ,n-1\}\) we define the generalization of (39)

which is clearly n-periodic and contains every possible instance of \(A_i\) as a factor. Now, if we can prove that (45) has two distinct eigenvalues, the proof essentially follows that of Case 1) in the proof of [54, Thm. 7.3], and so we omit the details.

One way of proving that the eigenvalues are distinct is to combine the identity \(\det (\varPi _{i_0}) = 1\) with a trace estimate analogous to that in the proof of Proposition 1, which shows that \({{\,\mathrm{tr}\,}}(\varPi _{i_0}) > 2\). Consequently, the eigenvalues are real, positive, distinct, and reciprocal. In particular, this implies the existence of a matrix Q with eigenvalues \(\pm q\) for some \(q > 0\) such that \(\varPi _{i_0} = \exp (n \varDelta \!\xi Q)\). Note that due to (43) we find it natural to include \(\varDelta \!\xi \) in the exponent to ensure that q can be bounded from above and below by constants depending only on the period \(L\) instead of the grid parameter \(\varDelta \!\xi \). \(\square \)
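The final step of the proof can be illustrated numerically. The following is a minimal sketch, not the construction used in the paper: since the matrix \(A_i\) from (37) is not reproduced here, the function `stand_in_factor` is a hypothetical replacement which only shares the properties used in the argument, namely symmetry, positive entries and determinant one. The names `monodromy`, `reciprocal_eigenvalues`, and the values of `dxi` and the parameters are likewise assumptions made for illustration.

```python
import math

def matmul2(A, B):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[A[0][0]*B[0][0] + A[0][1]*B[1][0], A[0][0]*B[0][1] + A[0][1]*B[1][1]],
            [A[1][0]*B[0][0] + A[1][1]*B[1][0], A[1][0]*B[0][1] + A[1][1]*B[1][1]]]

def monodromy(factors):
    """Multiply all one-step matrices over a single period."""
    P = [[1.0, 0.0], [0.0, 1.0]]
    for A in factors:
        P = matmul2(A, P)
    return P

def reciprocal_eigenvalues(P):
    """Eigenvalues of a 2x2 matrix with det = 1 and trace > 2.

    They solve lam^2 - tr*lam + 1 = 0, so they are real and reciprocal.
    """
    tr = P[0][0] + P[1][1]
    lam_plus = 0.5 * (tr + math.sqrt(tr * tr - 4.0))
    return lam_plus, 1.0 / lam_plus

def stand_in_factor(a):
    """HYPOTHETICAL stand-in for A_i: symmetric, positive entries, det = 1."""
    return [[1.0 + a * a, a], [a, 1.0]]

dxi = 0.25                                    # assumed grid parameter
factors = [stand_in_factor(a) for a in (0.1, 0.3, 0.2, 0.4)]
Pi = monodromy(factors)
lam_plus, lam_minus = reciprocal_eigenvalues(Pi)
# Exponent q such that the eigenvalues are exp(+-q * n * dxi)
q = math.log(lam_plus) / (len(factors) * dxi)
```

For such stand-in factors the product has positive entries and unit determinant, which forces the trace to exceed 2, so the sketch reproduces the situation of the proof without claiming anything about the actual entries of \(A_i\).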

Hence, to obtain a fundamental solution centered at some \(0 \le i \le n-1\), we need only combine the Floquet solutions (44) with decay in each direction in such a way as to satisfy the correct jump condition at i. As shown in [30], the solution is of the form

where \(W = W_j = g^-_j \gamma ^+_j - g^+_j \gamma ^-_j\) is the spatially constant Wronskian. Furthermore, the use of \(g_{i,j}\) and \(\gamma _{i,j}\) in [30] to construct \(k_{i,j}\) and \(\kappa _{i,j}\) from (28) carries over directly.

Using the fundamental solutions found before we introduce the periodized kernels

which are defined for \(i,j \in \{0,\dots ,n-1\}\) and n-periodic in j, e.g., \(G_{i,j+n} = G_{i,j}\). By our previous analysis, the summands are exponentially decreasing in |m|, and so the series in (47) are well-defined. For instance, using (44) and (46) we may compute \(G_{i,j}\) as the sum of two geometric series,

Compare this expression for \(i=0\) to the definition of \(g^p_j\) in [36, p. 1658], and note that they coincide for \(\mathrm {D}_+y_j \equiv 1\) with our \(q\varDelta \!\xi \) and \(p_0 p_j/W\) corresponding to their \(\kappa \) and c respectively. Using the fact that \(\mathrm {D}_+y_{j+m n} = \mathrm {D}_+y_j\) for \(m \in {\mathbb {Z}}\) we observe that these functions satisfy the fundamental solution identity (29). Moreover, the identity (29) imposes two symmetry conditions and an anti-symmetry condition on (47), namely

These can be derived in complete analogy to the proof of [30, Lem. 4.1], replacing the decay at infinity by periodicity to carry out the summation by parts without any boundary terms. Alternatively, one can use the structure of the matrix (36) and its inverse to show that (48) holds.
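The periodization step can be checked in the simplest setting. The sketch below assumes a uniform grid, for which the summand reduces, up to a constant factor, to \(r^{|j+mn|}\) with a decay factor \(0< r < 1\) playing the role of \(e^{-q\varDelta \!\xi }\). The periodic prefactors of the Floquet solutions and the Wronskian are deliberately left out, and the function names are hypothetical. It compares a truncated version of the two-sided sum with the closed form obtained from two geometric series.

```python
def periodized_truncated(r, n, j, M=200):
    """Truncated two-sided sum of r**|j + m*n| over m = -M, ..., M."""
    return sum(r ** abs(j + m * n) for m in range(-M, M + 1))

def periodized_closed_form(r, n, j):
    """Closed form for 0 <= j < n.

    The terms with m >= 0 sum to r**j / (1 - r**n), and the terms with
    m <= -1 sum to r**(n - j) / (1 - r**n).
    """
    return (r ** j + r ** (n - j)) / (1.0 - r ** n)

r, n = 0.8, 6                      # assumed illustrative values
pairs = [(periodized_truncated(r, n, j), periodized_closed_form(r, n, j))
         for j in range(n)]
```

The closed form is invariant under \(j \mapsto n-j\), which is the uniform-grid analogue of the symmetry of the periodized kernel.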

4 Numerical experiments

In this section we will test our numerical method presented in the previous section for both the CH equation (1) and the 2CH system (2), and compare it to existing methods. As these are only discretized in space, we just want to consider the error introduced by the spatial discretization. To this end we have chosen to use explicit solvers from the Matlab ODE suite to integrate in time, and in most cases this amounts to using ode45, the so-called go-to routine. Matlab’s solvers estimate absolute and relative errors, and the user may set corresponding tolerances for these errors, AbsTol and RelTol, to control the accuracy of the solution. Our aim is to make the errors introduced by the temporal integration negligible compared to the errors stemming from the spatial discretization, and thus be able to compare the spatial discretization error of our schemes to those of existing methods. All experiments were performed using Matlab R2018b on a 2015 Macbook Pro with a 3.1 GHz Dual-Core Intel Core i7 processor.

For the examples where we have an exact reference solution, we would like to compare convergence rates for some fixed time t. To compute the error we have then approximated the \({\mathbf {H}}^1\)-norm by a Riemann sum

where u(x) is the reference solution, and \(u_n(x)\) is the numerical solution for \(n = 2^k\). The norm in (49) is interpolated on a reference grid \(x_i = i \varDelta \! x\) for \(\varDelta \! x= 2^{-k_0}L\). Here we ensure that \(k_0\) is large enough compared to k for the approximation to be sufficiently close to the \({\mathbf {H}}^1\)-norm. In general we have found that if \(k^*\) is the greatest k used in an experiment, then \(k_0 \ge k^* + 2\) works well in our examples. We omit the second term of the summand in (49) to obtain the corresponding approximation of the \({\mathbf {L}}^{2}\)-norm.
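As a minimal sketch of this error computation, assume that the Riemann sum has the form \(\bigl (\varDelta \! x\sum _i [(u(x_i)-u_n(x_i))^2 + (u_x(x_i)-(u_n)_x(x_i))^2]\bigr )^{1/2}\) on the reference grid, which is our reading of the description above rather than a verbatim copy of (49). The function name and the use of callables for the solutions and their derivatives are assumptions made for illustration.

```python
import math

def riemann_norms(u, ux, un, unx, L, k0):
    """Approximate L2- and H1-norms of u - un on the grid x_i = i * 2**(-k0) * L.

    u, ux   : reference solution and its derivative (callables)
    un, unx : interpolated numerical solution and its derivative (callables)
    """
    N = 2 ** k0
    dx = L / N
    s0 = 0.0   # sum of squared differences of the function values
    s1 = 0.0   # sum of squared differences of the derivatives
    for i in range(N):
        x = i * dx
        s0 += (u(x) - un(x)) ** 2
        s1 += (ux(x) - unx(x)) ** 2
    # Dropping s1 gives the corresponding L2 approximation
    return math.sqrt(dx * s0), math.sqrt(dx * (s0 + s1))

# Usage: the error of the zero function against sin on [0, 2*pi)
zero = lambda x: 0.0
l2_err, h1_err = riemann_norms(math.sin, math.cos, zero, zero, 2 * math.pi, 6)
```

For this test pair the exact norms are \(\sqrt{\pi }\) and \(\sqrt{2\pi }\), which the sum reproduces up to rounding.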

Note that for the schemes (20) and (34) set in Lagrangian coordinates, traveling waves in an initial interval will move away from this interval along their characteristics. To compare their solutions to schemes set in fixed Eulerian coordinates we consider only the norm on the initial interval, and for the Lagrangian solution we use the periodicity to identify \(y(t,\xi )\) with a position on the initial interval. For instance, if the initial interval is \([0,L]\), we identify the solution at the positions y and y modulo \(L\).

4.1 Review of the discretization methods

Here we briefly review the discretization methods used in the coming examples, and in particular we specify how they have been interpolated on the reference grid. The schemes we use to compare with (34) can of course be just a small sample of existing methods, and we have chosen to compare with a subset of schemes which share some features with our variational scheme (34). As alluded to in the introduction, the conservative multipeakon scheme (9) from [38] shares much structure with (34), and so we found it natural to define its periodic version (20) for comparison. Furthermore, since the discrete energy (24) is defined using finite differences, we decided to implement some finite difference schemes, and here we included both conservative and dissipative methods to illustrate their features. Finally, we included a pseudospectral scheme, also known as Fourier collocation method, which has less in common with the other schemes. This is known to perform extremely well for smooth solutions, but we will see that it is less suited for solutions of peakon type.

We underline that even though these numerical schemes may have been presented with specific methods for integrating in time in their respective papers, for these examples we want to compare the error introduced by the spatial discretization only, and to treat all methods equally we choose a common explicit method as described before.

4.1.1 Conservative multipeakon scheme from Sect. 2

As mentioned in the introduction, the interpolant \(u_n(t,x)\) for the multipeakon scheme (20) between the peaks located at \(y_i\) is a piecewise combination of exponential functions. This in turn makes its derivative \((u_n)_x\) piecewise smooth, but discontinuous at the peaks. For the approximation of initial data, unless otherwise specified, we have chosen \(y_i(0) = \xi _i\), \(U_i(0) = u_0(\xi _i)\), and computed \(H_i(0)\) according to (15) for \(\xi _i = i \varDelta \!\xi \) and \(i \in \{0,\dots ,n-1\}\). In the subsequent figures this scheme goes by the acronym CMP.

4.1.2 Variational finite difference Lagrangian scheme from Sect. 3

We mentioned in Sect. 3 that it is computationally advantageous to solve the matrix system (35) when computing R and Q in the right-hand side of (34), or alternatively (30). Indeed, solving this nearly tridiagonal system should have a complexity close to \({\mathcal {O}}(n)\) when solved efficiently. In practice, we find that the standard Matlab backslash operator, or mldivide routine, is sufficient for our purposes, as it seems to scale approximately linearly with n in our experiments.
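To indicate why linear complexity is attainable for such systems, the following sketch solves a generic cyclic tridiagonal system in \({\mathcal {O}}(n)\) operations by combining the Thomas algorithm with the Sherman–Morrison formula. This is a standard textbook technique and not the solver used in our experiments. The exact structure of (35) is not assumed here, the function names are hypothetical, and no pivoting is performed, so the sketch is only suitable for well-conditioned, e.g., diagonally dominant, systems with \(n \ge 3\).

```python
def thomas(a, b, c, d):
    """Solve a tridiagonal system; a[0] and c[-1] are ignored."""
    n = len(b)
    cp = [0.0] * n
    dp = [0.0] * n
    cp[0] = c[0] / b[0]
    dp[0] = d[0] / b[0]
    for i in range(1, n):                      # forward elimination
        denom = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / denom
        dp[i] = (d[i] - a[i] * dp[i - 1]) / denom
    x = [0.0] * n
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):             # back substitution
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x

def cyclic_thomas(a, b, c, d):
    """Solve a[i]*x[i-1] + b[i]*x[i] + c[i]*x[i+1] = d[i], indices mod n."""
    n = len(b)
    gamma = -b[0]                              # free parameter, must be nonzero
    bb = list(b)
    bb[0] = b[0] - gamma
    bb[-1] = b[-1] - a[0] * c[-1] / gamma
    u = [0.0] * n                              # rank-one correction A = B + u v^T
    u[0] = gamma
    u[-1] = c[-1]
    y = thomas(a, bb, c, d)
    z = thomas(a, bb, c, u)
    # v = (1, 0, ..., 0, a[0]/gamma), so v.y and v.z need only two entries
    vy = y[0] + a[0] * y[-1] / gamma
    vz = z[0] + a[0] * z[-1] / gamma
    factor = vy / (1.0 + vz)
    return [y[i] - factor * z[i] for i in range(n)]

def cyclic_apply(a, b, c, x):
    """Multiply the cyclic tridiagonal matrix with x (for checking)."""
    n = len(b)
    return [a[i] * x[i - 1] + b[i] * x[i] + c[i] * x[(i + 1) % n] for i in range(n)]
```

Both tridiagonal solves cost a fixed number of operations per unknown, so the total work grows linearly with n.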

For the interpolant \(u_n(t,x)\) we solve (34) for a given n to find \(y_i(t)\) and \(U_i(t)\), and define a piecewise linear interpolation. This makes \((u_n)_x\) piecewise constant with value \(\mathrm {D}_+U_i / \mathrm {D}_+y_i\) for \(x \in [y_i, y_{i+1})\). Note that there is no trouble with dividing by zero as the corresponding intervals are empty. When applying the scheme to the 2CH system, we follow the convention in [30] with a piecewise constant interpolation \(\rho _n(t,x)\) for the density, setting it equal to \(r_i(0)\mathrm {D}_+y_i(0)/\mathrm {D}_+y_i(t)\) for \(x \in [y_i(t), y_{i+1}(t))\). For initial data, unless otherwise specified, we follow the multipeakon method in choosing \(y_i(0) = \xi _i\), \(U_i(0) = u_0(\xi _i)\), \((\rho _0)_i = \rho _0(\xi _i) = r_i(0)\), computing \(h_i(0)\) according to (32), and then compute \(H_i(0)\) as (33).
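The evaluation of this interpolant on a fixed grid can be sketched as follows, assuming ordered positions \(y_0 \le \dots \le y_{n-1}\), the periodicity \(y_{i+n} = y_i + L\) and \(U_{i+n} = U_i\), and an evaluation point which is first mapped into the window \([y_0, y_0 + L)\) by periodicity. The function name is hypothetical. Searching for the last node not exceeding the evaluation point automatically skips empty intervals, so no division by zero occurs.

```python
from bisect import bisect_right

def eval_lagrangian_interpolant(y, U, L, x):
    """Return (u_n(x), (u_n)_x(x)) for the piecewise linear interpolant.

    y : nondecreasing positions y_0, ..., y_{n-1} with y_{n-1} <= y_0 + L
    U : values U_0, ..., U_{n-1} at these positions
    """
    n = len(y)
    ye = list(y) + [y[0] + L]        # closing node from y_{i+n} = y_i + L
    Ue = list(U) + [U[0]]            # closing value from U_{i+n} = U_i
    xw = y[0] + (x - y[0]) % L       # wrap x into [y_0, y_0 + L)
    if xw >= ye[n]:                  # guard against rounding in the wrap
        xw = ye[0]
    i = bisect_right(ye, xw) - 1     # last node with ye[i] <= xw
    dy = ye[i + 1] - ye[i]           # positive, since ye[i+1] > xw >= ye[i]
    slope = (Ue[i + 1] - Ue[i]) / dy
    return Ue[i] + slope * (xw - ye[i]), slope

# Usage with a collapsed interval between the second and third node
y = [0.0, 0.5, 0.5, 1.5]
U = [0.0, 1.0, 1.0, 0.0]
val, der = eval_lagrangian_interpolant(y, U, 2.0, 0.25)
```

The same wrapping identifies a position y with y modulo \(L\) when comparing with schemes set in Eulerian coordinates.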

In the subsequent figures this scheme goes by the acronym VD for variational difference/discretization.

4.1.3 Finite difference schemes

As they remain a standard method for solving PDEs numerically, it comes as no surprise that several finite difference schemes have been proposed for the CH equation. We will consider the convergent dissipative schemes for (1) presented in [14, 36], and the energy-preserving scheme for (1) and (2) studied numerically in [49]. The schemes in [36, 49] are both based on the following reformulation of (1),

for \(u = u(t,x)\) and \(m = m(t,x)\). Here \(u(t,\cdot ) \in {\mathbf {H}}^1({\mathbb {T}})\) means that \(m(t,\cdot )\) corresponds to a Radon measure on \({\mathbb {T}}\). Then, with (50) as starting point, and grid points \(x_j = j\varDelta \! x, \varDelta \! x> 0\) we may apply finite differences, as defined in (22), to obtain various semidiscretizations, specifically

which is the discretization studied in [36] under the assumption of m initially being a positive Radon measure. In the same paper they also briefly mention three alternative evolution equations for \(m_j\) which for different reasons were troublesome in practice when integrating in time using the explicit Euler method. One of these evolution equations is used in [49] where the following discretization is used

The difference between (52) and the alternative method described in [36] is the use of a wider stencil when defining \(m_j\). A difference operator which approximates the rth derivative using exactly \(r+1\) consecutive grid points is called compact, cf. [4, Ch. 3]. Clearly, \(\mathrm {D}_{\pm }\) and \(\mathrm {D}_-\mathrm {D}_+\) are compact difference operators, while \(\mathrm {D}_0\) and \(\mathrm {D}_0\mathrm {D}_0\) are not. As pointed out in [4, Ch. 7], noncompact difference operators are notorious for producing spurious oscillations, and this is exactly the problem reported in [36] for the alternative formulation. Similarly, in [49] the authors remark that oscillations may appear when the solution of (1) becomes less smooth. In these cases they propose an adaptive strategy of adding numerical viscosity to the scheme with the drawback that the discrete energy is no longer conserved. We have not incorporated such a strategy here, as we would like an energy-preserving finite difference scheme to compare with our energy-preserving variational discretizations.
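One mechanism behind such oscillations can be seen directly on the sawtooth mode \(u_j = (-1)^j\), the highest frequency representable on a periodic grid with an even number of points. The sketch below assumes the standard forward, backward and central difference quotients for the operators in (22); the function names and grid parameters are chosen for illustration only.

```python
def d_plus(u, dx):
    """Forward difference on a periodic grid."""
    n = len(u)
    return [(u[(j + 1) % n] - u[j]) / dx for j in range(n)]

def d_minus(u, dx):
    """Backward difference on a periodic grid (u[-1] wraps around)."""
    return [(u[j] - u[j - 1]) / dx for j in range(len(u))]

def d_zero(u, dx):
    """Central difference on a periodic grid."""
    n = len(u)
    return [(u[(j + 1) % n] - u[j - 1]) / (2 * dx) for j in range(n)]

n, dx = 8, 0.5                                   # assumed illustrative values
saw = [(-1.0) ** j for j in range(n)]            # sawtooth mode
compact = d_minus(d_plus(saw, dx), dx)           # equals -(4 / dx**2) * saw
wide = d_zero(d_zero(saw, dx), dx)               # vanishes identically
```

The central difference annihilates the sawtooth, so \(m_j = u_j - \mathrm {D}_0\mathrm {D}_0u_j\) cannot distinguish it from a smooth mode and inverting this relation does not damp it, whereas the compact operator \(\mathrm {D}_-\mathrm {D}_+\) sees it with the largest possible magnitude.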

An invariant-preserving discretization of the 2CH system (2) is also presented in [49], and using the notation (10) we can write it as

still with \(m_j = u_j -\mathrm {D}_0\mathrm {D}_0u_j\). The semidiscretizations (52) and (53) are conservative in the sense that they preserve the invariants

which respectively correspond to momentum, energy, and mass of the system. The first two have counterparts in (27) and (23) for the system (25).

A somewhat more refined spatial discretization is employed in [14]. This method is based on yet another reformulation of (1) and reads

with

where an additional staggered grid \(x_{j+1/2} = (j+1/2)\varDelta \! x\) is used. Moreover, \(u \vee 0 = \max \{u,0\}\) and \(u \wedge 0 = \min \{u,0\}\) is used to achieve proper upwinding for the scheme. Contrary to [36], this dissipative scheme allows for initial data of any sign for u.

For \(u_n\) corresponding to (51), (52), (53), and (55) we have made a piecewise linear interpolation between the grid points, meaning \((u_n)_x\) is piecewise constant. For initial data we define \(u_i(0) = u_0(x_i)\) for \(x_i = i \varDelta \! x\), \(n\varDelta \! x= L\), and apply the corresponding discrete Helmholtz operator to produce \(m_i(0)\) for the schemes (51), (52), and (53).

We note that in [36], instead of solving a linear system, the authors employ a fast Fourier transform (FFT) technique to compute \(u_i\) from the corresponding \(m_i\). This is possible, as the matrix associated with the linear system is circulant, and so it is diagonalizable using discrete Fourier transform matrices, cf. [51, p. 379]. The matrix system involved in (52) is also circulant, and so the same method can be used there. In our examples, this technique was consistently faster than solving the linear systems, and so we have used it when computing \(u_i\) in (51) and (52), and when computing \(P_i\) in (55).
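The diagonalization behind this technique can be sketched for the compact discrete Helmholtz operator, assuming \(m_j = u_j - \mathrm {D}_-\mathrm {D}_+u_j\) with the standard second difference quotient. For brevity the sketch uses a plain \({\mathcal {O}}(n^2)\) discrete Fourier transform written with the standard library; in practice this would be replaced by an FFT routine. All function names are hypothetical.

```python
import cmath
import math

def dft(x, sign):
    """Unnormalized discrete Fourier sum with exponent sign * 2*pi*i*p*k/n."""
    n = len(x)
    return [sum(x[p] * cmath.exp(sign * 2 * math.pi * 1j * p * k / n)
                for p in range(n))
            for k in range(n)]

def helmholtz_apply(u, dx):
    """Compute m_j = u_j - (u_{j+1} - 2 u_j + u_{j-1}) / dx^2, periodic."""
    n = len(u)
    return [u[j] - (u[(j + 1) % n] - 2 * u[j] + u[j - 1]) / dx ** 2
            for j in range(n)]

def helmholtz_solve(m, dx):
    """Recover u from m by dividing by the eigenvalues of the circulant matrix."""
    n = len(m)
    m_hat = dft(m, -1)
    # Eigenvalue for mode k: 1 + 4 sin^2(pi k / n) / dx^2
    u_hat = [m_hat[k] / (1.0 + 4.0 * math.sin(math.pi * k / n) ** 2 / dx ** 2)
             for k in range(n)]
    # Inverse transform, keeping the real part of real-valued data
    return [(v / n).real for v in dft(u_hat, +1)]

n = 16
dx = 1.0 / n                                   # assumed period L = 1
u_ref = [math.exp(math.sin(2 * math.pi * j * dx)) for j in range(n)]
u_rec = helmholtz_solve(helmholtz_apply(u_ref, dx), dx)
```

For the wider stencil one would only replace the eigenvalues by those of the operator \(1 - \mathrm {D}_0\mathrm {D}_0\), since that matrix is circulant as well.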

In the subsequent figures these schemes go by abbreviations based on the authors introducing them, i.e., HR for (51), CKR for (55), and LP for (52) and (53).

4.1.4 Pseudospectral (Fourier collocation) scheme

Let us consider the so-called pseudospectral scheme used in the study of traveling waves for the CH equation in [44], see also [55] for an introduction to the general idea. This method is based on applying Fourier series to (1) and solving the resulting evolution equation in the frequency domain. Introducing the scaling factor \(a :=L/2\pi \) and assuming the discretization parameter n to be even, the pseudospectral method for (1) with period \(L\) can be written

with \(V(0,k) = {\mathcal {F}}_n[u_0](k)\) for \(k \in \{-\frac{n}{2},\dots ,\frac{n}{2}-1 \}\). Here \(\mathrm {i}k V\) means the pointwise product of the vectors \(\mathrm {i}[-\frac{n}{2},\dots ,\frac{n}{2}-1]\) and \([V(-\frac{n}{2}),\dots ,V(\frac{n}{2}-1)]\), while \({\mathcal {F}}_n\) and \({\mathcal {F}}_n^{-1}\) are the discrete Fourier transform and its inverse, see [44, 45] for details.

The right-hand side of (56) can be efficiently computed by applying FFT. Unfortunately, this scheme is prone to aliasing, and for this reason the authors of [45] propose a modified version of the scheme which employs the Orszag 2/3-rule, cf. [5, Ch. 11]. For this dealiased scheme, which they call a Fourier collocation method, the authors in [45] prove convergence in \({\mathbf {L}}^{2}\)-norm for sufficiently regular solutions of (1).

When interpolating \(u_n\) on a denser grid containing \(x_j\) we must use the corresponding real Fourier basis function for each frequency k to obtain the correct representation of the pseudospectral solution, which will always be smooth. For this we use the Matlab routine interpft, which interpolates using exactly these Fourier basis functions.

In the subsequent figures, (56) without dealiasing goes by the acronym PS, while the dealiased version is denoted by PSda.

4.2 Example 1: smooth traveling waves

To the best of our knowledge, there are no explicit formulae for smooth traveling wave solutions of either (1) or (2), and to obtain such solutions we make use of numerical integration in the spirit of [15, 16].

4.2.1 Numerical results for the CH equation

To compute a smooth reference solution for the CH equation we follow the experimental section of [16] with the same parameters, and integrate numerically. To integrate we used ode45 with very strict tolerances, namely \(\texttt {AbsTol}=\texttt {eps}\) and \(\texttt {RelTol} = 100\,\texttt {eps}\), where \(\texttt {eps} = 2^{-52}\) is the distance from 1.0 to the next double precision floating point number representable in Matlab. After integration we found the solution to have period \(L = 6.4695469424989\), where the first ten decimal digits agree with the period found in the experiments section of [16].

Figures 2 and 3 display numerical results for the smooth reference solution above after moving one period \(L\) to the right. As the traveling wave has velocity \(c = 3\), this corresponds to integrating over a time period \(L/3\). To integrate in time we have applied ode45 with parameters \(\texttt {AbsTol} = \texttt {RelTol} = 10^{-10}\).

To highlight the different properties of each scheme, Fig. 2 displays \(u_n\) and \((u_n)_x\) for the various schemes for the low number \(n = 2^4\) and interpolated on a reference grid with step size \(2^{-10}L\). It is apparent how the dissipative nature of the schemes HR (51) and CKR (55) reduces the height of the traveling wave such that it lags behind the true solution. This effect is particularly severe for (51), which probably explains why its error displayed in Fig. 3a is consistently the largest. The perhaps most obvious feature in Fig. 2b is the large-amplitude deviations introduced by the discontinuities for the multipeakon scheme CMP (20). As indicated by its decreasing \({\mathbf {H}}^1\)-error in Fig. 3a, the amplitudes of these discrepancies reduce as n increases.

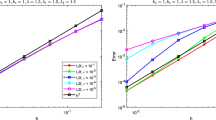

Smooth traveling wave for the CH equation. Errors in \({\mathbf {L}}^{2}\)- and \({\mathbf {H}}^1\)-norms after one period (a), and execution times for ode45 in seconds (b). The schemes are tested with step sizes \(2^{-k} L\) where \(3 \le k \le 14\), and evaluated on a reference grid with step size \(2^{-16}L\)

Figure 3a contains \({\mathbf {L}}^{2}\)- and \({\mathbf {H}}^1\)-errors of the interpolated solutions for \(n = 2^k\) with \(k \in \{3,\dots ,14\}\), evaluated on a reference grid with \(k_0 = 16\). Before commenting on these convergence results, we underline that the pseudospectral method (56) has not been included in the figure, as its superior performance for this example would make it hard to distinguish between the plots for the remaining methods. Indeed, this scheme displays so-called spectral convergence in both \({\mathbf {L}}^{2}\)- and \({\mathbf {H}}^1\)-norm, and exhibits an \({\mathbf {L}}^{2}\)-error close to rounding error already for \(n = 2^6\).

For the remaining methods it is perhaps not surprising that the finite difference scheme LP (52) based on central differences in general has the smallest error in \({\mathbf {H}}^1\)-norm for this smooth reference solution, exhibiting convergence orders of 2 and 1 for \({\mathbf {L}}^{2}\) and \({\mathbf {H}}^1\) respectively. However, we observe that the \({\mathbf {L}}^{2}\)-error of the multipeakon scheme is consistently the lowest, but its convergence in \({\mathbf {H}}^1\) is impeded by its irregular derivative. Moreover, it appears that for small n, i.e., \(n \le 2^5\) in this setting, the variational scheme VD (34) performs better.
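The convergence orders quoted here are observed rates between consecutive refinements. A minimal sketch, assuming errors \(e_k\) computed for \(n = 2^k\) with consecutive k so that each refinement halves the step size, is the following; the function name and the sample values are illustrative and not measured data.

```python
import math

def observed_rates(errors):
    """Observed convergence rates log2(e_k / e_{k+1}) for halved step sizes."""
    return [math.log2(errors[i] / errors[i + 1]) for i in range(len(errors) - 1)]

# Synthetic errors behaving like n**(-2); NOT results from the experiments
rates = observed_rates([1.0, 0.25, 0.0625])
```

A rate close to 2 then indicates second-order convergence, and a rate close to 1 first-order convergence.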

An observation regarding the execution time of ode45 is that the finite difference schemes (51), (52) and (55) seem to experience some tipping point around \(k = 11\) where their running times tend to be of complexity \({\mathcal {O}}(n^2)\) rather than \({\mathcal {O}}(n)\), see Fig. 3b. A closer look at the statistics for the time integrator in these cases reveals that from \(k = 11\) and onwards, the solver starts experiencing failed attempts at satisfying the specified error tolerances, thus increasing the execution time. This does not occur for the schemes in Lagrangian coordinates, which exhibit execution times aligning well with the \({\mathcal {O}}(n)\)-reference line. A possible explanation for this could be that the semidiscrete schemes based in Lagrangian coordinates are easier to handle for the time integrator. Indeed, the almost semilinear structure of the ODE system corresponding to (34) in [30] is key to its existence and uniqueness proofs, and perhaps this structure is advantageous also for the ODE solvers.

It should be emphasized that the multipeakon scheme is considerably faster than the other schemes, which likely comes from it being the only scheme where no matrix equations are solved. Thus, the fast summation algorithm appears to benefit the multipeakon scheme in this direction.

4.2.2 Numerical results for the 2CH system

Here we have only compared the variational scheme (30) to (53), as these are the only methods presented in Sect. 4.1 applicable to the 2CH system. To obtain a smooth solution, we followed the procedure in [15] with the same parameters, and computed the reference solution in the same way as for the CH equation. Both schemes performed similarly to the smooth CH case, i.e., for u we had convergence rates 2 and 1 in respectively \({\mathbf {L}}^{2}\)- and \({\mathbf {H}}^1\)-norm for the difference scheme (53), and convergence rate 1 in both norms for the variational scheme (34). We have therefore omitted figures for this case. However, for the interpolants \(\rho _n\) of the density, which were not present in the CH case, we mention that for the difference scheme a piecewise linear interpolation gave rate 2 convergence, while piecewise constant interpolation gave rate 1. On the other hand, for the variational scheme, there was little to gain in choosing a piecewise linear interpolation over a piecewise constant one, as both gave rate 1.

4.3 Example 2: periodic peakon

It is now well known, cf. [8], that a single peakon

is a weak solution of (1) with its peak at \(x = x_0 + c t\). The periodic counterpart of this solution is

valid for \(\left| x-x_0-ct\right| \le L\), and periodically extended outside this interval. This formula for the periodic peakon can in fact be deduced from (20) for \(n = 1\), or found in, e.g., [48, Eq. (8.5)]. Setting \(x_0 = \frac{1}{2}\), \(c = L= 1\), and \(t = 0\) we use this function as initial datum on [0, 1] for a numerical example. As the periodic multipeakon scheme reduces to exactly this peakon for \(n = 1\), \(y_1(0) = \frac{1}{2}\) and \(u_1(0) = 1\), we have chosen to omit this scheme for the experiment, and rather compare how well the other schemes approximate a peakon solution.

As one could expect, the schemes generally performed worse for this problem compared to the smooth traveling wave, and so we could reduce the tolerances for the time integrator to \(\texttt {AbsTol} = \texttt {RelTol} = 10^{-8}\) with no change in leading digits for the errors. However, as the finite difference schemes, and especially the noncompact scheme (52), were quite slow when using ode45 for large values of n, we instead used the solver ode113 which proved to be somewhat faster in this case. Moreover, when computing the approximate \({\mathbf {H}}^1\)-error (49) in this case, we encounter the problem of the reference solution derivative not being defined at the peak. To circumvent this issue, we measure the error at time \(t = L\) on a shifted reference grid. That is, we evaluate (49) on \(x_i = (i + \tfrac{1}{2}) 2^{-k_0}\) instead of \(x_i = i 2^{-k_0}\) for \(i \in \{0,\dots ,2^{k_0}-1\}\) to ensure \(x_i \ne \tfrac{1}{2}\). We plot the solutions again for the relatively small \(n = 2^4\) to highlight differences between the schemes in Fig. 4.
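The effect of the shift can be verified directly for the unit period used in this example. In the sketch below, the function name is hypothetical and a small value of \(k_0\) is used only to keep the example short; since all grid points are dyadic rationals, the comparisons are exact in floating point arithmetic.

```python
def reference_grid(k0, shifted=False):
    """Grid x_i = (i + offset) * 2**(-k0) on [0, 1), offset 1/2 if shifted."""
    h = 2.0 ** (-k0)
    offset = 0.5 if shifted else 0.0
    return [(i + offset) * h for i in range(2 ** k0)]

k0 = 4                                   # small value for illustration
plain = reference_grid(k0)
shifted = reference_grid(k0, shifted=True)
# Distance from the peak position 1/2 to the nearest shifted grid point
gap = min(abs(x - 0.5) for x in shifted)
```

The unshifted grid contains the peak position \(x = \tfrac{1}{2}\), while the shifted grid stays half a reference step away from it on either side.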

Once more we observe that the dissipativity of the schemes HR (51) and CKR (55) is quite severe for this step size and they fail to capture the shape of the peakon. The energy preserving difference scheme LP (52) is closer to the shape of the peakon, but exhibits oscillations which are particularly prominent in the derivative. On the other hand, the variational scheme VD (34) manages to capture the shape of the peakon very well, and manages far better than the other schemes to capture the derivative of the reference solution after one period.

Periodic peakon. Errors in \({\mathbf {L}}^{2}\)- and \({\mathbf {H}}^1\)-norms after one period (a) and execution times for ode113 in seconds (b). The schemes are tested with step sizes \(2^{-k}\) where \(3 \le k \le 13\), except for the pseudospectral schemes which have \(3 \le k \le 10\). All are evaluated on a reference grid with step size \(2^{-15}\)

The above observations are reflected in Fig. 5a which shows the rate of convergence. The errors for the dissipative schemes decrease, which is expected since both have been proven to converge in \({\mathbf {H}}^1\). However, this convergence is quite slow, with approximate rates of 0.6 and 0.25 for the \({\mathbf {L}}^{2}\)- and \({\mathbf {H}}^1\)-norms respectively.

The energy-preserving difference scheme (52) exhibits order 1 convergence in \({\mathbf {L}}^{2}\)-norm, but the oscillations in the derivative put an end to any hope of \({\mathbf {H}}^1\)-convergence. Indeed, the \({\mathbf {H}}^1\)-seminorm of the error is larger than 0.2 irrespective of the step size. The oscillations are of course even more severe for \(m_i\), the discrete version of \(u-u_{xx}\) which is actually solved for in the ODE.

The variational scheme (34) performs quite well, with convergence rate 1 in \({\mathbf {L}}^{2}\) and \({\mathbf {H}}^1\)-rates generally between 0.45 and 1. The exception is the transition from \(n = 2^9\) to \(n = 2^{10}\) where there was barely any decrease in the error, followed by a large decrease corresponding to a rate of 5 in \(n = 2^{11}\), and from here the \({\mathbf {H}}^1\)-error is comparable in magnitude to the \({\mathbf {L}}^{2}\)-error of (52). This jump is possibly connected to the discontinuity of the reference solution.

The pseudospectral schemes PS and PSda perform quite well for the \({\mathbf {L}}^{2}\)-norm, and for larger n they have the smallest error of all the methods, with the dealiased scheme showing a better convergence rate which approaches 1.5. However, in \({\mathbf {H}}^1\)-norm these schemes perform worse than the variational scheme, owing to the major oscillations close to the discontinuity in the reference solution. Note that we have only run these schemes for \(k \in \{3,\dots ,10\}\), as opposed to \(k \in \{3,\dots ,13\}\) for the other methods. The reason for this is that for larger n the pseudospectral method needs a finer reference grid to have consistent convergence rates, as opposed to the other schemes, and in addition the run times are very long for larger n.

Remark 1

Here we have only run the schemes over one period for the traveling wave, but an additional issue for the schemes in Lagrangian coordinates becomes apparent if they are run for a long time with initial data containing a derivative discontinuity, such as the traveling peakon. Then one typically observes a clustering of characteristics, or particles, at the front of the traveling discontinuity, leaving fewer particles to resolve the rest of the wave profile. Indeed, this is also reported in the numerical results of [9] for their particle method, and the authors suggest that a redistribution algorithm may be applied when particles come too close. Such redistribution algorithms would be useful for (20) and (34) when running them for long times, but development of such tools falls outside the scope of this paper. It is however important to be aware of this phenomenon, as the clustering can lead to artificial numerical collisions of the characteristics when they become too close for the computer to distinguish them. In the worst case this can lead to a breakdown of the initial ordering of the characteristics \(y_i\), which in turn ruins the structure of the ODE system, leading to wrong solutions or breakdown of the method.

4.4 Example 3: peakon–antipeakon example

In this example we consider the interval \([0,L]\) and peakon–antipeakon initial datum

for \(c = 1\) and \(L= 2\pi \). We want to evaluate the numerical solutions at \(t = 4.5\), which is approximately the time when the two peaks have returned to their initial positions \(x = \pi /2\) and \(x = 3\pi /2\) after colliding once. Since this is a multipeakon solution, we may use the conservative multipeakon scheme (20) to provide a reference solution. Setting \(n = 2\), choosing \(y_1 = \pi /2\), \(y_2 = 3\pi /2\), \(u_1 = -u_2 = 1\), and computing \(H_i\) for \(i = 1,2\) according to (11), we integrated in time using ode113 with the very stringent tolerances \(\texttt {AbsTol} = \texttt {eps}\) and \(\texttt {RelTol} = 100\,\texttt {eps}\). For the schemes in the comparison we used the same solver with \(\texttt {AbsTol} = \texttt {RelTol} = 10^{-9}\), and a reference grid with \(2^{16}\) equispaced points.

For this example we have omitted the dissipative schemes (51) and (55), as the former cannot handle initial data of this type, while the latter would produce an approximation of the dissipative solution which is identically zero after the collision. Figure 6a shows \(u_n\) for the variational scheme VD (34), the finite difference scheme LP (52), and the pseudospectral schemes PS (56) and PSda, all for \(n = 2^6\). This is a numerical example which is especially ill-suited for the pseudospectral method (56), and illustrates the necessity of the dealiasing to have any form of convergence. Indeed, from the convergence plots in Fig. 6b we observe that for the pseudospectral methods, only the \({\mathbf {L}}^{2}\)-norm of the dealiased scheme decreases. Its \({\mathbf {H}}^1\)-error and the errors of the scheme without dealiasing do not decrease. The apparent cause for the lack of \({\mathbf {L}}^{2}\)-convergence for the scheme without dealiasing is a persistent phase error in the solution after collision time, and this error does not decrease with increasing n. Unlike the dissipative and conservative methods we compare with, the pseudospectral schemes appear to increase the energy of the solution after singularity formation. In particular, for the scheme without dealiasing this leads to increased magnitude of \(u_n\) after collision time, which again leads to the persistent phase shift. On the other hand, for the dealiased version we do not observe this, and the magnitude of \(u_n\) approaches the exact u as n increases. For the \({\mathbf {H}}^1\)-norm one cannot expect convergence from any version of (56), as the collision introduces severe oscillations in the pseudospectral derivative.

Oscillatory behavior also explains the very slow decrease in \({\mathbf {H}}^1\)-error for the invariant-preserving difference scheme LP (52), displaying a rate fluctuating around 0.15. For the \({\mathbf {L}}^{2}\)-norm it exhibits a rate which approaches 0.5.

Meanwhile, the variational scheme VD (34) performs rather well for this example, having the smallest errors in both \({\mathbf {L}}^{2}\)- and \({\mathbf {H}}^1\)-norm, and displaying consistent rates of respectively 1 and 0.5.
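The rates quoted above are observed orders of convergence between successive resolutions. As a minimal sketch of how such rates can be computed, assuming errors \(e_k\) measured at \(n_k\) grid points and the usual estimate \(\log (e_k/e_{k+1})/\log (n_{k+1}/n_k)\), one may write the following. The function name `observed_rates` is ours, and the error values are synthetic, not data from the experiments.

```python
import math


def observed_rates(ns, errors):
    """Observed convergence orders between consecutive resolutions.

    rate_k = log(e_k / e_{k+1}) / log(n_{k+1} / n_k)
    """
    rates = []
    for k in range(len(ns) - 1):
        error_ratio = errors[k] / errors[k + 1]
        grid_ratio = ns[k + 1] / ns[k]
        rates.append(math.log(error_ratio) / math.log(grid_ratio))
    return rates


# Synthetic errors (NOT taken from the paper) decaying like n^(-1/2),
# mimicking the H^1 behaviour reported for peakon reference solutions.
ns = [2 ** k for k in range(6, 10)]
errors = [n ** -0.5 for n in ns]
rates = observed_rates(ns, errors)
```

With grids doubling in size, the denominator is simply \(\log 2\), so a rate of 1 corresponds to the error halving at each refinement and a rate of 0.5 to a reduction by a factor \(\sqrt{2}\).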

4.5 Example 4: collision-time initial datum

An interesting feature discussed in [30, Section 5.2] is that the variational discretization allows for irregular initial data. That is, pairs \((u, \mu )\), where \(\mu \) may be a positive finite Radon measure, provide a complete description of the initial data and the corresponding solution of (1) in Eulerian coordinates. In particular, for the absolutely continuous part of \(\mu \) one has

and the cumulative energy \(\mu ((-\infty ,x))\) can be a step function, which is connected to the well-studied peakon–antipeakon dynamics.

For example, at collision time, u may be identically zero and all energy is concentrated in the point of collision as a delta distribution, meaning the cumulative energy will be a step function centered at the collision. To be able to accurately represent the solution between the two peakons emerging from a collision, we have to “pack” sufficiently many characteristics into the collision point. To this end we introduce the initial characteristics \(y_0(\xi ) = y(0,\xi )\) and the initial cumulative energy \(H_0(\xi ) = H(0,\xi )\) similar to [42, Eq. (3.20)],

where \(F_\mu (x) = \mu ([0,x))\) for \(x \in [0,L]\) and \(E = \mu ([0,L))\) is the total energy of the system.

This feature inspired the following variation of peakon–antipeakon initial data, where we initially have a system with period \(L=8\) and total energy \(E = 6\) equally concentrated in the points \(x=2\) and \(x = 6\) on the interval [0, 8]. In Eulerian variables this reads

\[ u_0(x) \equiv 0, \qquad \mu_0 = 3\,\delta_{2} + 3\,\delta_{6}. \]

On the other hand, for the Lagrangian description we use (57) to compute

together with \(U_0(\xi ) \equiv 0\). From this we define the discrete initial data for (34) by \((y_0)_i = y_0(\xi _i)\), \((H_0)_i= H_0(\xi _i)\), and \((U_0)_i = 0\) in the grid points \(\xi _i=i2^{-k} L\) for \(k \in \{3,\dots ,14\}\). Using ode45 with \(\texttt {AbsTol} = \texttt {RelTol} = 10^{-8}\) we integrate from \(t = 0\) to \(t = 4\).
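To illustrate how characteristics can be "packed" into the points carrying concentrated energy, the sketch below samples one possible Lagrangian parametrization for the setting \(L = 8\), \(E = 6\) with energy 3 at each of \(x = 2\) and \(x = 6\). It assumes a map of the form \(y_0(\xi ) = \sup \{x : x + F_\mu (x) < (1 + E/L)\xi \}\) and \(H_0(\xi ) = (1 + E/L)\xi - y_0(\xi )\). This specific form is our assumption for the purpose of illustration and may differ from (57); the names `ATOMS` and `initial_characteristic` are likewise hypothetical.

```python
# Sketch: pack characteristics into points carrying concentrated energy.
# ASSUMPTION: y0(xi) = sup{x : x + F_mu(x) < (1 + E/L) * xi} and
# H0(xi) = (1 + E/L) * xi - y0(xi); formula (57) in the text may differ.

L = 8.0                            # period
E = 6.0                            # total energy
ATOMS = [(2.0, 3.0), (6.0, 3.0)]   # (position, energy) of each point mass


def initial_characteristic(xi):
    """Return (y0, H0) at the Lagrangian label xi in [0, L]."""
    s = (1.0 + E / L) * xi   # target value of x + F_mu(x)
    jumped = 0.0             # energy of the atoms already passed
    for pos, mass in ATOMS:
        if s <= pos + jumped:
            break            # on the sloped part before this atom
        if s <= pos + jumped + mass:
            return pos, s - pos   # inside the jump: y0 sits at the atom
        jumped += mass
    y0 = s - jumped
    return y0, s - y0


# Discrete initial data on the grid xi_i = i * 2^(-k) * L, here with k = 6.
k = 6
n = 2 ** k
data = [initial_characteristic(i * L / n) for i in range(n)]
clustered = sum(1 for y, _ in data if y == 2.0)   # labels mapped to x = 2
```

Under this assumption, every label \(\xi \) whose target value falls inside a jump of \(x + F_\mu (x)\) is mapped to the location of the corresponding point mass, so a fixed fraction of the grid points starts at each collision point while \(H_0\) increases linearly across them.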

As the conservative multipeakon method describes exactly the interaction of peakons, we may once more use it as reference solution. Setting \(n = 4\), \(L= 8\) in (20) we define initial data

corresponding to two pairs of peakons respectively placed at \(x = 2\) and \(x = 6\) with energy 6 contained between the peakons in each pair. Note that the energy is double that of the energy prescribed for the variational scheme (34), since the factor \(\frac{1}{2}\) is not present in the definition of the energy for the multipeakon scheme. This was then integrated using ode45 with the same tolerances as for the reference solution in the previous example, \(\texttt {AbsTol} = \texttt {eps}\) and \(\texttt {RelTol} = 100\, \texttt {eps}\).

Then we measured the errors using (49) on the reference grid \(x_i = i 2^{-16} L\), and the results are displayed in Fig. 7b. We found the decrease in error to be remarkably consistent, with rate 1 in the \({\mathbf {L}}^{2}\)-norm and approximately 0.5 in the \({\mathbf {H}}^1\)-norm, both at time \(t = 2\) before the collision and at time \(t = 4\) after the collision. Figure 7a displays the characteristics for the solution with \(n = 2^6\) together with the four trajectories of the peaks of the exact solution. Observe how the characteristics, initially clustered at the collision points \(x=2\) and \(x = 6\) in accordance with (57), spread out between the pairs of peaks in the reference solution.

In Fig. 8 we have plotted the solution for \(n = 2^6\) and interpolated on a reference grid with \(2^{10}\) grid points. We observe that the interpolants match the shape of the exact solution quite well, even for the derivative, and have only a slight phase error.

Fig. 7 Collision-time initial datum. (a) The \(n = 2^6\) characteristics for the variational scheme (solid red) and for the four reference peakons (dash-dotted black). (b) Error rates at times \(t = 2\) and \(t = 4\) for \(n = 2^k\) and \(3 \le k \le 14\) evaluated on a \(2^{16}\) point reference grid (Color figure online)

4.6 Example 5: sine initial datum for the CH equation

In the following example we will qualitatively compare how the variational scheme (34) and the conservative multipeakon scheme (20) handle smooth initial data which leads to wave breaking. We have chosen to consider \(u_0(x) = \sin (x)\) for \(x \in [0,2\pi ]\), since this is a simple, smooth periodic function which leads to singularity formation. Furthermore, it is antisymmetric around the point \(x = \pi \), which will highlight another difference between the methods. Since we do not have a reference solution in this case, the comparison will be of a more qualitative nature than in the preceding examples.

As usual we chose \(y_i(0) = \xi _i = i 2 \pi /n\) and \(U_i(0) = u_0(\xi _i)\) for both schemes, and computed their corresponding initial cumulative energies \(H_i(0)\) in their own respective ways. Then we have integrated from \(t = 0\) to \(t = 6\pi \) using ode45 with \(\texttt {AbsTol} = \texttt {RelTol} = 10^{-10}\), and evaluated the interpolated functions on a finer grid with step size \(\varDelta \! x= 2^{-10}L\).

A striking difference between the methods is seen in their characteristics, displayed in Fig. 9. We find that the multipeakon method preserves the symmetry of the characteristics, and we have observed this for all values of n that we tested, provided the \(y_i(0)\) were equally spaced for \(i \in \{0,\dots ,n-1\}\). In particular, the characteristics starting at \(\xi _0 = 0\) and \(\xi _{n/2} = \pi \) remain in the same position for all t. Indeed, this is a consequence of the fact that antisymmetry is preserved by (1), cf. [8, 19, Rem. 4.2]. On the other hand, for the variational scheme the characteristics have a slight drift which grows over time, see Fig. 9. This difference is especially pronounced for small n.
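One way to quantify the symmetry discussed above is to measure how far the characteristics deviate from the mirror relation \(y_i + y_{n-i} = L\), together with \(y_0 = 0\). The following is a minimal sketch of such a diagnostic. The function `symmetry_defect` is a hypothetical helper of ours, and the displaced point sets are artificial test data, not output from either scheme.

```python
import math


def symmetry_defect(y, period):
    """Largest deviation from y_0 = 0 and y_i + y_{n-i} = period."""
    n = len(y)
    defect = abs(y[0])   # the characteristic starting at 0 should stay there
    for i in range(1, n):
        defect = max(defect, abs(y[i] + y[n - i] - period))
    return defect


n = 16
L = 2 * math.pi
xi = [i * L / n for i in range(n)]    # y_i(0) = xi_i = i * 2*pi / n
u0 = [math.sin(x) for x in xi]        # U_i(0) = sin(xi_i)

# Displace each point by a small multiple of its antisymmetric velocity.
# This mimics a single explicit step and is NOT either scheme from the text.
y_sym = [x + 0.1 * u for x, u in zip(xi, u0)]

# Add a uniform drift, which breaks the mirror symmetry.
y_drift = [y + 0.01 for y in y_sym]

defect_sym = symmetry_defect(y_sym, L)       # at rounding-error level
defect_drift = symmetry_defect(y_drift, L)   # twice the imposed drift
```

A defect at the level of rounding error indicates that the symmetry is preserved, whereas a defect that grows in time would correspond to the drift seen for the variational scheme.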

Remark 2

A natural question arising from this example is whether one could have chosen a different discrete energy as a starting point for the variational discretization in order to obtain a scheme which respects the preservation of antisymmetry. One could for instance try to use symmetric differences such as the central difference from (22). However, there is the potential drawback of the oscillatory solutions associated with noncompact difference operators, cf. the discussion in [17, p. 1929]. In fact, an early prototype of the scheme (34), consisting only of (25) solved as an ODE system with solution-dependent mass matrix, exhibited severe oscillations in front of the peak when applied to the periodic peakon example after replacing \(\mathrm {D}_+\) by \(\mathrm {D}_0\). This indicates that some care has to be exercised when choosing the defining energy.
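A standard observation that may help explain such oscillations is that the central difference cannot see the highest-frequency grid mode, whereas the forward difference can. The sketch below demonstrates this on a periodic grid, assuming the usual definitions \(\mathrm {D}_+ f_i = (f_{i+1} - f_i)/h\) and \(\mathrm {D}_0 f_i = (f_{i+1} - f_{i-1})/(2h)\); whether this mechanism fully accounts for the behavior of the prototype is not claimed here.

```python
def forward_difference(f, h):
    """Periodic forward difference: (f[i+1] - f[i]) / h."""
    n = len(f)
    return [(f[(i + 1) % n] - f[i]) / h for i in range(n)]


def central_difference(f, h):
    """Periodic central difference: (f[i+1] - f[i-1]) / (2h)."""
    n = len(f)
    # f[i - 1] with i = 0 wraps to the last entry, which is the periodic neighbour.
    return [(f[(i + 1) % n] - f[i - 1]) / (2 * h) for i in range(n)]


n = 8
h = 1.0 / n
sawtooth = [(-1) ** i for i in range(n)]   # highest-frequency grid mode

d_plus = forward_difference(sawtooth, h)   # entries of magnitude 2 / h
d_zero = central_difference(sawtooth, h)   # identically zero
```

Since \(\mathrm {D}_0\) annihilates the sawtooth mode, a discrete energy built only on \(\mathrm {D}_0\) does not penalize grid-scale oscillations, while one built on \(\mathrm {D}_+\) does.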

5 Summary

We have applied the novel variational Lagrangian scheme (34) to several numerical examples. In general it performed well and displayed consistent convergence rates. In particular we saw rate 1 in both \({\mathbf {L}}^{2}\)- and \({\mathbf {H}}^1\)-norm for smooth reference solutions, while for the more irregular peakon reference solutions we observed rate 1 in \({\mathbf {L}}^{2}\)-norm and rate 0.5 in \({\mathbf {H}}^1\)-norm. Due to its rather simple discretization of the energy, it comes as no surprise that other higher-order methods outperform (34) for smooth reference solutions. However, it is for the more irregular examples involving wave breaking that this scheme stands apart, exhibiting consistent convergence even in \({\mathbf {H}}^1\)-norm where other methods may struggle with oscillations.

When it comes to extensions of this work there are several possible paths, and we mention those most apparent. An obvious question is whether the scheme could be improved by choosing a more refined discrete energy for the variational derivation, and if there are choices other than the multipeakons which lead to an integrable discrete system. Another extension is to make the method fully discrete, in the sense that one introduces a tailored method to integrate in time, preferably one that respects the conserved quantities of the system. Finally, one could consider developing a specific redistribution algorithm which can handle the potential clustering of characteristics and prevent artificial numerical collisions when such a Lagrangian method is run over long time intervals.

References

Antonopoulos, D.C., Dougalis, V.A., Mitsotakis, D.E.: Error estimates for Galerkin finite element methods for the Camassa–Holm equation. Numer. Math. 142(4), 833–862 (2019)

Arnold, V.I., Khesin, B.A.: Topological Methods in Hydrodynamics, Volume 125 of Applied Mathematical Sciences. Springer, New York (1998)

Artebrant, R., Schroll, H.J.: Numerical simulation of Camassa–Holm peakons by adaptive upwinding. Appl. Numer. Math. 56(5), 695–711 (2006)

Ascher, U.M.: Numerical Methods for Evolutionary Differential Equations, Volume 5 of Computational Science and Engineering. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (2008)

Boyd, J.P.: Chebyshev and Fourier Spectral Methods, 2nd edn. Dover Publications Inc., Mineola (2001)

Bressan, A., Constantin, A.: Global conservative solutions of the Camassa–Holm equation. Arch. Ration. Mech. Anal. 183(2), 215–239 (2007)

Camassa, R.: Characteristics and the initial value problem of a completely integrable shallow water equation. Discrete Contin. Dyn. Syst. Ser. B 3(1), 115–139 (2003)

Camassa, R., Holm, D.D.: An integrable shallow water equation with peaked solitons. Phys. Rev. Lett. 71(11), 1661–1664 (1993)

Camassa, R., Huang, J., Lee, L.: On a completely integrable numerical scheme for a nonlinear shallow-water wave equation. J. Nonlinear Math. Phys. 12(suppl. 1), 146–162 (2005)

Camassa, R., Lee, L.: Complete integrable particle methods and the recurrence of initial states for a nonlinear shallow-water wave equation. J. Comput. Phys. 227(15), 7206–7221 (2008)

Chertock, A., Liu, J.-G., Pendleton, T.: Convergence of a particle method and global weak solutions of a family of evolutionary PDEs. SIAM J. Numer. Anal. 50(1), 1–21 (2012)

Chertock, A., Liu, J.-G., Pendleton, T.: Elastic collisions among peakon solutions for the Camassa–Holm equation. Appl. Numer. Math. 93, 30–46 (2015)

Coclite, G.M., Holden, H., Karlsen, K.H.: Global weak solutions to a generalized hyperelastic-rod wave equation. SIAM J. Math. Anal. 37(4), 1044–1069 (2005)

Coclite, G.M., Karlsen, K.H., Risebro, N.H.: A convergent finite difference scheme for the Camassa–Holm equation with general \(H^1\) initial data. SIAM J. Numer. Anal. 46(3), 1554–1579 (2008)

Cohen, D., Matsuo, T., Raynaud, X.: A multi-symplectic numerical integrator for the two-component Camassa–Holm equation. J. Nonlinear Math. Phys. 21(3), 442–453 (2014)

Cohen, D., Owren, B., Raynaud, X.: Multi-symplectic integration of the Camassa–Holm equation. J. Comput. Phys. 227(11), 5492–5512 (2008)

Cohen, D., Raynaud, X.: Geometric finite difference schemes for the generalized hyperelastic-rod wave equation. J. Comput. Appl. Math. 235(8), 1925–1940 (2011)

Cohen, D., Raynaud, X.: Convergent numerical schemes for the compressible hyperelastic rod wave equation. Numer. Math. 122(1), 1–59 (2012)

Constantin, A., Escher, J.: Global existence and blow-up for a shallow water equation. Ann. Scuola Norm. Sup. Pisa Cl. Sci. (4) 26(2), 303–328 (1998)

Constantin, A., Escher, J.: Wave breaking for nonlinear nonlocal shallow water equations. Acta Math. 181(2), 229–243 (1998)

Constantin, A., Ivanov, R.I.: On an integrable two-component Camassa–Holm shallow water system. Phys. Lett. A 372(48), 7129–7132 (2008)

Constantin, A., Kolev, B.: Least action principle for an integrable shallow water equation. J. Nonlinear Math. Phys. 8(4), 471–474 (2001)

Constantin, A., Kolev, B.: Geodesic flow on the diffeomorphism group of the circle. Comment. Math. Helv. 78(4), 787–804 (2003)

Constantin, A., Molinet, L.: Global weak solutions for a shallow water equation. Commun. Math. Phys. 211(1), 45–61 (2000)

Cotter, C.J., Holm, D.D., Hydon, P.E.: Multisymplectic formulation of fluid dynamics using the inverse map. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 463(2086), 2671–2687 (2007)

Dai, H.-H., Huo, Y.: Solitary shock waves and other travelling waves in a general compressible hyperelastic rod. Proc. R. Soc. Lond. Ser. A Math. Phys. Eng. Sci. 456(1994), 331–363 (2000)

Eckhardt, J., Kostenko, A.: An isospectral problem for global conservative multi-peakon solutions of the Camassa–Holm equation. Commun. Math. Phys. 329(3), 893–918 (2014)