Abstract

This paper concerns a posteriori error analysis for the streamline diffusion (SD) finite element method for the one and one-half dimensional relativistic Vlasov–Maxwell system. The SD scheme yields a weak formulation that corresponds to adding extra diffusion to, e.g., systems of equations of hyperbolic nature or convection-dominated convection–diffusion problems. The a posteriori error estimates rely on dual formulations and yield error control in terms of computable residuals. The convergence estimates are derived in negative norms, where the error is split into an iteration error and an approximation error, and the iteration procedure is assumed to converge.


1 Introduction

This paper concerns a posteriori error analysis for the approximate solution of the Vlasov–Maxwell (VM) system by the streamline diffusion (SD) finite element method. Our main objective is to prove a posteriori error estimates for the SD scheme in the \(H_{-1}(H_{-1})\) and \(L_\infty (H_{-1})\) norms for the Maxwell equations and in the \(L_\infty (H_{-1})\) norm for the Vlasov part. Since the VM system lacks dissipativity, it exhibits severe stability drawbacks, and the usual \(L_2(L_2)\) and \(L_\infty (L_2)\) errors are bounded only by the residual norms. Thus, in order not to rely on the smallness of the residual errors, we employ negative norm estimates. These yield convergence rates that also involve powers of the mesh parameter h and are optimal with respect to the maximal available regularity of the exact solution. Both the Vlasov and the Maxwell equations are of hyperbolic type. For an exact solution in the Sobolev space \(H^{r+1}\), the classical finite element method for hyperbolic partial differential equations has, at best, a convergence rate of order \({\mathcal {O}}(h^r)\), where h is the mesh size. On the other hand, with the same regularity (\(H^{r+1}\)), the optimal convergence rate for elliptic and parabolic problems is of order \({\mathcal {O}}(h^{r+1})\). This gap has motivated the construction of modified finite element schemes that enhance stability and improve the convergence behavior for hyperbolic problems. A further motivation is the lack of diffusivity in hyperbolic equations, which causes oscillatory behavior in finite element schemes. In this regard, SD schemes correspond to adding a diffusion term to the hyperbolic equation. Compared to the classical finite element method, they are more stable and have an improved convergence rate, viz. \({\mathcal {O}}(h^{r+1/2})\). Roughly, the SD method is based on a weak formulation where a multiple of the convection term is added to the test function.
With this choice of test functions, the variational formulation resembles that of an equation which, compared to the original hyperbolic equation, has an additional diffusion term of the order of the multiplier.
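To make the "added diffusion" interpretation concrete, the following sketch applies the same idea to a stationary model problem. It is our own illustration, not the scheme of this paper. The problem is \(-\varepsilon u'' + a u' = 0\) on (0, 1), with u(0) = 0 and u(1) = 1, discretized by piecewise linear elements on a uniform mesh. The function name `solve_1d` and the choice \(\delta = h/(2a)\) are assumptions made for illustration. For piecewise linear elements, the term \(\delta a v'\) added to each test function v contributes exactly \(\delta a^2 (u', v')\). This is an extra diffusion of the order of the multiplier \(\delta\).

```python
import numpy as np

def solve_1d(eps, a, n, delta):
    """P1 finite elements on a uniform mesh of [0, 1] for
    -eps*u'' + a*u' = 0, u(0) = 0, u(1) = 1.

    delta = 0 gives the standard Galerkin method. For delta > 0 each test
    function v is replaced by v + delta*a*v' (streamline diffusion); for P1
    elements this adds exactly delta*a**2 * (u', v'), i.e. extra diffusion.
    """
    h = 1.0 / n
    k = eps + delta * a**2            # effective diffusion coefficient
    m = n - 1                         # number of interior nodes
    lower = -k / h - a / 2.0          # coefficient of u_{i-1}
    diag = 2.0 * k / h                # coefficient of u_i
    upper = -k / h + a / 2.0          # coefficient of u_{i+1}
    A = (np.diag(np.full(m, diag))
         + np.diag(np.full(m - 1, lower), -1)
         + np.diag(np.full(m - 1, upper), 1))
    rhs = np.zeros(m)
    rhs[-1] -= upper * 1.0            # move the boundary value u(1) = 1 to the right-hand side
    u_inner = np.linalg.solve(A, rhs)
    return np.concatenate(([0.0], u_inner, [1.0]))

eps, a, n = 1e-3, 1.0, 20
h = 1.0 / n
u_gal = solve_1d(eps, a, n, delta=0.0)
u_sd = solve_1d(eps, a, n, delta=h / (2 * a))
print("Galerkin minimum:", u_gal.min())
print("SD monotone:", bool(np.all(np.diff(u_sd) >= -1e-12)))
```

With \(\varepsilon = 10^{-3}\) and h = 0.05, the element Péclet number \(ah/(2\varepsilon)\) equals 25. In this regime the Galerkin solution oscillates and takes negative values. The SD solution is monotone. For P1 elements the choice \(\delta = h/(2a)\) reproduces the first-order upwind scheme.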

A difficulty arises in deriving gradient estimates for the dual problems. These estimates are crucial for the error analysis of the discrete models for both equation types in the VM system. The difficulty is due to the lack of dissipative terms in the equations. An elaborate discussion of this issue can be found in classical results, e.g., [10, 18, 24], as well as in more recent studies [11, 23].

We take advantage of the low spatial dimension: assuming sufficient regularity, it yields existence and uniqueness through the d’Alembert formula. This study can be extended to higher-dimensional geometries, where a different analytical approach to well-posedness is available in the studies by Glassey and Schaeffer, e.g., [13, 14]. Numerical implementations for this model will appear in [5]. We also mention the related finite element studies [19, 20] for Maxwell’s equations; in [20] a stabilized interior penalty method is used.

Problems of this type have been considered by several authors in various settings. Theoretical studies of the Vlasov–Maxwell system relevant to our work include:

- [9] for global weak solutions;
- [15] for global weak solutions with boundary conditions;
- more relevantly, [12, 13, 14] for relativistic models in different geometries.

SD methods for hyperbolic partial differential equations were suggested by Hughes and Brooks in [16]. Mathematical developments can be found in [17]. For SD studies relevant to our approach, see, e.g., [1, 2] and the references therein, some of which also treat discontinuous Galerkin schemes and their developments. A priori error estimates for a discontinuous Galerkin approach that is not based on the SD framework are derived in [8]. The study of [8] relies on an appropriate choice of numerical flux. Unlike our fully discrete scheme, it is split into separate spatial and temporal discretizations.

An outline of this paper is as follows. In the present Sect. 1, following the introduction, we comment on the interplay of the various quantities in the Maxwell equations. We then introduce the relativistic one and one-half dimensional model together with its well-posedness property. In Sect. 2 we introduce some notation and preliminaries. Section 3 is devoted to stability bounds and a posteriori error estimates for the Maxwell equations in both the \(H_{-1}(H_{-1})\) and \(L_\infty (H_{-1})\) norms. Section 4 is the counterpart of Sect. 3 for the Vlasov equation; there the analysis is carried out only in the \(L_\infty (H_{-1})\) norm.

Finally, in our concluding Sect. 5, we summarize the results of the paper and discuss some future plans.

Throughout this note C will denote a generic constant, not necessarily the same at each occurrence, and independent of the parameters in the equations, unless otherwise explicitly specified.

The Vlasov–Maxwell (VM) system, which describes the time evolution of a collisionless plasma, is formulated as

Here f is the phase-space density of particles with mass m, charge q and velocity

Further, the charge and current densities are given by

respectively. To prove existence and uniqueness of the solution to the VM system, one may mimic the treatment of the Cauchy problem for the Vlasov equation via the Schauder fixed point theorem. The procedure is:

1. Insert an assumed, given g for f in (1.2).
2. Compute \(\rho _g\) and \(j_g\), and insert the results in the Maxwell equations to get \(E_g\) and \(B_g\).
3. Insert the \(E_g\) and \(B_g\) so obtained in the Vlasov equation to get \(f_g\). This defines an operator \(\Lambda \) with \(f_g=\Lambda g\).

A fixed point of \(\Lambda \) yields a solution of the coupled VM system. For the discretized version one employs, instead, the Brouwer fixed point theorem. Both proofs are rather technical and non-trivial. The fixed point arguments rely on viewing the equations of Maxwell’s system as valid independently of each other. Physically, however, the quantities f, B, E, j and \(\rho \) are related to each other through the Vlasov–Maxwell system; it is not the case that some of them are given to determine the others. In the one and one-half dimensional geometry, the d’Alembert formula makes the Schauder/Brouwer fixed point approach unnecessary. The fixed point approach was first introduced by Ukai and Okabe in [25] for the Vlasov–Poisson system. It is carried out in full detail for the Vlasov–Maxwell system in [22] and is therefore omitted here.

1.1 Relativistic model in one and one-half dimensional geometry

Our objective is to construct and analyze SD discretization schemes for the relativistic Vlasov–Maxwell model in one and one-half dimensional geometry (\(x\in {\mathbb R}, v\in {\mathbb R}^2\)), which can then be generalized to higher dimensions:

The system (1.3) is supplemented with the Cauchy data

and with

This is the only initial data that leads to a finite-energy solution (see [12]). In (1.3) we have, for simplicity, set all constants equal to one. The background density \( \rho _b (x) \) is assumed to be smooth, compactly supported and neutralizing. This yields

To carry out the discrete analysis, we shall need the following global existence result for classical solutions, due to Glassey and Schaeffer [12].

Theorem 1.1

(Glassey, Schaeffer) Assume that the background density \( \rho _b \) is neutralizing and that

Then there exists a global \(C^1\) solution of the relativistic Vlasov–Maxwell system. Moreover, if \( 0\le f^0\in C_0^r({\mathbb R}^3)\) and \(E_2^0,\,\,\, B^0 \in C_0^{r+1}({\mathbb R}^1)\), then (f, E, B) is of class \(C^r\) over \({\mathbb R}^+\times {\mathbb R}\times {\mathbb R^2}\).

Note that, for the discrete problem, existence and uniqueness of the solution are established in [22]. The stability of the approximation scheme is established in Sects. 3 and 4.
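For the reader's convenience, we recall the one and one-half dimensional relativistic system in the form given in [12], with all constants set to one. This transcription is ours, and the notation of (1.3) may differ in details:

\[
\begin{aligned}
&\partial_t f + \hat v_1\,\partial_x f + (E_1 + \hat v_2 B)\,\partial_{v_1} f + (E_2 - \hat v_1 B)\,\partial_{v_2} f = 0,\\
&\partial_t E_1 = -j_1, \qquad \partial_x E_1 = \rho,\\
&\partial_t E_2 + \partial_x B = -j_2, \qquad \partial_t B + \partial_x E_2 = 0,\\
&\rho(t,x) = \int_{\mathbb R^2} f\,dv - \rho_b(x), \qquad j(t,x) = \int_{\mathbb R^2} \hat v\, f\,dv, \qquad \hat v = \frac{v}{\sqrt{1+|v|^2}}.
\end{aligned}
\]

In this form, the right-hand side \(b=(\rho , - j_1, - j_2, 0)^T\) of the compact Maxwell system in Sect. 3 collects the sources of the four field equations.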

2 Assumptions and notations

Let \( \Omega _x \subset {\mathbb {R}}\) and \( \Omega _v \subset {\mathbb {R}}^2 \) denote the space and velocity domains, respectively. We shall assume that f(t, x, v), \( E_2(t,x) \), B(t, x) and \( \rho _b (x) \) have compact supports in \( \Omega _x \) and that f(t, x, v) has compact support in \( \Omega _v \). Since we have assumed a neutralizing background density, i.e. \( \int \rho (0,x) dx=0 \), it follows that \( E_1 \) also has compact support in \( \Omega _x \) (see [12]).
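The compact support of \(E_1\) can be seen directly. We assume that \(E_1\) is normalized to vanish as \(x\to -\infty \), which is the finite-energy choice of [12]. Integrating the Vlasov equation over v gives the continuity equation, since the force term is divergence free in v. Hence the total charge is conserved and, by neutrality, remains zero:

\[
\partial_t\rho + \partial_x j_1 = 0
\;\Longrightarrow\;
\int_{\mathbb R}\rho(t,x)\,dx = \int_{\mathbb R}\rho(0,x)\,dx = 0,
\qquad
E_1(t,x) = \int_{-\infty}^{x}\rho(t,y)\,dy = -\int_{x}^{\infty}\rho(t,y)\,dy .
\]

Consequently, \(E_1(t,\cdot )\) vanishes outside the smallest interval containing the support of \(\rho (t,\cdot )\). It satisfies \(\partial _x E_1=\rho \), and, by the continuity equation, \(\partial _t E_1=-j_1\).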

We now introduce a finite element structure on \( \Omega _x \times \Omega _v \). Let \( T_h^x = \{ \tau _x \} \) and \( T_h^v = \{ \tau _v \} \) be finite element subdivisions of \( \Omega _x \) with elements \( \tau _x \) and of \( \Omega _v \) with elements \( \tau _v \), respectively. Then \( T_h = T_h^x \times T_h^v = \{ \tau _x \times \tau _v \} = \{ \tau \} \) is a subdivision of \( \Omega _x \times \Omega _v \). Let \( 0= t_0< t_1< \cdots< t_{M-1} < t_M=T \) be a partition of [0, T] into sub-intervals \( I_m = (t_{m-1}, t_m ] \), \( m= 1, 2, \ldots , M \). Further, let \( {\mathcal {C}}_h \) be the corresponding subdivision of \( Q_T = [0 ,T ] \times \Omega _x \times \Omega _v \) into elements \( K = I_m \times \tau \), with \( h= {\text {diam}} \, K \) as the mesh parameter. Introduce \( {\tilde{\mathcal {C}}}_h \) as the finite element subdivision of \( {\tilde{Q}}_T= [0 , T ] \times \Omega _x \). Before we define our finite dimensional spaces, we need to introduce some function spaces, viz

where

In the discretization part, for \( k=0,1,2, \ldots \), we define the finite element spaces

and

where \( P_k ( \cdot ) \) is the set of polynomials of degree at most k on the given set. We shall also use the notation

and

where \( S_m = I_m \times \Omega \) is the slab at the m-th level, \( m=1, 2, \ldots , M \).
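The tensor-product structure of the space–time elements \(K = I_m \times \tau _x \times \tau _v\) can be illustrated by a small sketch on uniform partitions. The helper `build_mesh` and the square velocity cells are hypothetical choices for illustration; the paper allows general subdivisions. Each K is then a box, so \({\text {diam}}\, K\) is the Euclidean norm of its side lengths. Here h is taken as the maximum of \({\text {diam}}\, K\) over all K.

```python
import itertools
import numpy as np

def build_mesh(T, M, x_range, nx, v_range, nv):
    """Uniform space-time mesh: time slabs I_m = (t_{m-1}, t_m], spatial cells
    tau_x in Omega_x, and square velocity cells tau_v in Omega_v (subset of R^2).
    Returns the time nodes, the list of elements K (tuples of four intervals)
    and the mesh parameter h = max diam K."""
    t = np.linspace(0.0, T, M + 1)
    x = np.linspace(*x_range, nx + 1)
    v = np.linspace(*v_range, nv + 1)
    slabs = list(zip(t[:-1], t[1:]))
    xcells = list(zip(x[:-1], x[1:]))
    vcells = list(itertools.product(zip(v[:-1], v[1:]), repeat=2))  # tau_v = interval x interval
    elements = [(I, tx, tv1, tv2)
                for I in slabs for tx in xcells for (tv1, tv2) in vcells]

    def diam(K):
        # diameter of a box = Euclidean norm of its side lengths
        return np.sqrt(sum((b - a) ** 2 for (a, b) in K))

    h = max(diam(K) for K in elements)
    return t, elements, h

t, elements, h = build_mesh(1.0, 4, (-1.0, 1.0), 8, (-2.0, 2.0), 4)
print(len(t) - 1, "slabs,", len(elements), "elements, h =", h)
```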

To proceed, we perform an iterative procedure. Starting with \(f^{h,0} \), we compute the fields \(E_{1}^{h,1}, E_2^{h,1} \) and \( B^{h,1} \) and insert them in the Vlasov equation to get the numerical approximation \( f^{h,1} \). This approximation is then inserted in the Maxwell equations to get the fields \(E_{1}^{h,2}, E_2^{h,2} \) and \( B^{h,2} \), and so on. Iteration step i thus yields a Vlasov equation for \( f^{h,i } \) with the fields \(E_{1}^{h,i}, E_2^{h,i} \) and \( B^{h,i }\). We assume that this iterative procedure converges to the analytic solution of the Vlasov–Maxwell system; more specifically, we assume that it generates Cauchy sequences.
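The iteration just described can be summarized by a short driver loop. This is a sketch under stated assumptions:

- `solve_maxwell` and `solve_vlasov` are hypothetical callables standing in for the SD solvers of Sects. 3 and 4.
- The iterates are represented as NumPy arrays.
- The stopping test only checks the Cauchy property of successive iterates. It does not certify convergence to the analytic solution, which the text assumes.

```python
import numpy as np

def coupled_iteration(f0, solve_maxwell, solve_vlasov, tol=1e-10, max_iter=100):
    """Alternate the two solves: f^{h,i-1} -> fields W^{h,i} -> f^{h,i}.

    solve_maxwell and solve_vlasov are hypothetical stand-ins for the SD
    solvers. The loop stops when two successive Vlasov iterates differ by
    less than tol (a Cauchy-type test) and returns (f, W, number of steps).
    """
    f = np.asarray(f0, dtype=float)
    for i in range(1, max_iter + 1):
        W = solve_maxwell(f)      # fields E_1, E_2, B computed from f^{h,i-1}
        f_new = solve_vlasov(W)   # f^{h,i} computed with these fields frozen
        if np.linalg.norm(f_new - f) < tol:
            return f_new, W, i
        f = f_new
    raise RuntimeError("successive iterates did not meet the Cauchy criterion")

# Toy contraction (not the VM system): the fixed point is f = 2c.
c = np.array([1.0, 2.0])
f, W, n_iter = coupled_iteration(np.zeros(2), lambda g: 0.5 * g, lambda W: W + c)
print(f, n_iter)
```

The toy maps are chosen only so that the composed map is a contraction. The actual coupled SD map need not have this property, which is why the convergence of the iteration is stated as an assumption.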

Finally, due to the lack of dissipativity, we shall consider negative norm estimates. Below we introduce the general form of the function spaces used in the stability studies; they provide an adequate setting for deriving error estimates with higher convergence rates. To this end, let \(\Omega \) be a bounded domain in \(\mathbb R^N\), \(N \ge 1\). For \(m \ge 0\) an integer, \(1 \le p \le \infty \) and \(G \subseteq \Omega \), \(W^m_p(G)\) denotes the usual Sobolev space of functions with distributional derivatives of order \(\le m\) which are in \(L_p(G)\). Define the seminorms

and the norms

If \(m\ge 0\), \(W_p^{-m}(G)\) is the completion of \(C^\infty _0(G)\) under the norm

We shall only use the \(L_2\)-version of the above norm.
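The sketch below illustrates why negative norms are forgiving towards oscillatory errors. It approximates by finite differences the \(L_2\)-based \(H^{-1}(0,1)\) norm for m = 1. We assume the usual dual-norm definition: the supremum over \(\varphi \in H^1_0\) with \(\Vert \varphi \Vert _{H^1}^2=\Vert \varphi \Vert ^2+\Vert \varphi '\Vert ^2\). By the Riesz representation, \(\Vert u\Vert _{H^{-1}}^2=(u,w)\), where w solves \(w-w''=u\) with \(w(0)=w(1)=0\). For \(u=\sin (k\pi x)\) the exact value is \(\Vert u\Vert _{H^{-1}}^2=\tfrac{1}{2}/(1+k^2\pi ^2)\). Thus the \(H^{-1}\) norm decays like 1/k, while the \(L_2\) norm stays \(1/\sqrt{2}\). The function name `hminus1_norm` and the grid size are illustrative choices.

```python
import numpy as np

def hminus1_norm(u_vals, h):
    """Finite-difference approximation of the H^{-1}(0,1) norm (dual to H^1_0
    with ||phi||_{H^1}^2 = ||phi||^2 + ||phi'||^2). u_vals holds u at the
    interior nodes x_i = i*h, i = 1..n-1. Solves (I - D2) w = u with
    homogeneous Dirichlet data and returns sqrt(h * (u, w))."""
    m = u_vals.size
    main = (1.0 + 2.0 / h**2) * np.ones(m)
    off = (-1.0 / h**2) * np.ones(m - 1)
    A = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
    w = np.linalg.solve(A, u_vals)
    return np.sqrt(h * np.dot(u_vals, w))

n = 400
h = 1.0 / n
x = np.linspace(h, 1.0 - h, n - 1)          # interior grid nodes
for k in (1, 4, 16):
    u = np.sin(k * np.pi * x)
    l2 = np.sqrt(h * np.dot(u, u))
    exact = np.sqrt(0.5 / (1 + (k * np.pi) ** 2))
    print(k, l2, hminus1_norm(u, h), exact)
```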

3 A posteriori error estimates for the Maxwell equations

Our main goal in this section is to find an a posteriori error estimate for the Maxwell equations. Let us first reformulate the relativistic Maxwell system, viz

Set now

Let \( W= (E_1, E_2, B)^T \), \( W^0=(E_1^0, E_2^0, B^0)^T \) and \( b=(\rho , - j_1, - j_2, 0)^T \). Then the Maxwell equations can be written in compact (matrix) form as

The streamline diffusion method on the ith step for the Maxwell equations can now be formulated as: find \( W^{h,i} \in \tilde{V}_h \) such that for \( m=1, 2, \ldots , M \),

where \( \hat{g} = (g_1, g_1, g_2, g_3)^T \), \( g_\pm (t,x) = \lim _{s \rightarrow 0^\pm } g(t+s, x) \) and \( \delta \) is a multiple of h (or of \( h^\alpha \) for some suitable \( \alpha \)); see [11] for the motivation behind this choice of \( \delta \).
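Written out, the compact form can be taken as \(M_0\,\partial _t W + M_1\,\partial _x W = b\) with the 4×3 matrices below. This is one arrangement consistent with \(W=(E_1,E_2,B)^T\), with \(b=(\rho ,-j_1,-j_2,0)^T\) and with the lift \(\hat g=(g_1,g_1,g_2,g_3)^T\). It is our reconstruction from the component equations of [12]: \(\partial _x E_1=\rho \), \(\partial _t E_1=-j_1\), \(\partial _t E_2+\partial _x B=-j_2\) and \(\partial _t B+\partial _x E_2=0\). The names \(M_0\) and \(M_1\) are assumptions:

\[
M_0 = \begin{pmatrix} 0&0&0\\ 1&0&0\\ 0&1&0\\ 0&0&1 \end{pmatrix},\qquad
M_1 = \begin{pmatrix} 1&0&0\\ 0&0&0\\ 0&0&1\\ 0&1&0 \end{pmatrix},\qquad
M_0\,\partial_t W + M_1\,\partial_x W = \begin{pmatrix} \partial_x E_1\\ \partial_t E_1\\ \partial_t E_2+\partial_x B\\ \partial_t B+\partial_x E_2 \end{pmatrix} = b .
\]

The first two rows both involve \(E_1\) and are tested with the same component \(g_1\). This is consistent with \(g_1\) appearing twice in \(\hat g\).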

Now we are ready to start the a posteriori error analysis. Let us decompose the error into two parts

where \( W^i \) is the exact solution to the approximated Maxwell equations at the ith iteration step:

3.1 \(H^{-1} (H^{-1})\) a posteriori error analysis for the Maxwell equations

We will start by estimating the numerical error \({\tilde{e}}^i\). To this end, we formulate the dual problem:

Here \( \chi \) is a function in \( [H^1 ( \tilde{Q}_T )]^3 \). The idea is to use the dual problem to get an estimate of the \( H^{-1} \)-norm of the error \( {\tilde{e}}^i\). Multiplying (3.4) by \( {\tilde{e}}^i\) and integrating over \( \tilde{Q}_T \), we obtain

where

Likewise, due to the fact that all involved functions have compact support in \(\Omega _x\), we can write

Inserting (3.6) and (3.7) into the error norm (3.5), we get

Now since both \(\varphi \) and W are continuous we have that \([\varphi ]=[W]\equiv 0\) and hence \( [{\tilde{e}}^i] =- [W^{h,i} ]\). Thus

Let now \( \tilde{\varphi } \) be an interpolant of \( \varphi \) and use (3.3) with \( g = \tilde{\varphi } \) to get

Now, to proceed we introduce the residuals

and

where the latter one is constant in time on each slab.

Further, we shall use two projections, P and \( \pi \), for our interpolants \( \tilde{\varphi } \). These projections will be constructed from the local projections

and

defined such that

and

Now we define P and \( \pi \), slab-wise, by the formulas

respectively. See Brezzi et al. [6] for the details on commuting differential and projection operators in a general setting. Now we may choose the interpolants as \(\tilde{\varphi }=P \pi \varphi = \pi P \varphi \), and write an error representation formula as

To estimate \( J_1 \) and \( J_2 \) we shall use the following identity

We estimate each term in the error representation formula separately:

where, in the last estimate, the piecewise time-constant residual is moved inside the time integral. As for the \(J_2\)-term, we have that

Thus we can derive the estimate

To estimate the second term in (3.9) we proceed in the following way

To get an estimate for the \( H^{-1} \)-norm we need to divide both sides by \( \Vert \chi \Vert _{H^1 ( {\tilde{Q}}_T)} \) and take the supremum over \( \chi \in [H^1 ( \tilde{Q}_T )]^3 \). We also need the following stability estimate.

Lemma 3.1

There exists a constant C such that

Proof

To estimate the \( H^1 \)-norm of \( \varphi \) we first write out the equations for the dual problem explicitly:

We start by estimating the \( L_2 \)-norm of \( \varphi \). Multiply the first equation by \( \varphi _1 \) and integrate over \( \Omega _x \) to get

Standard manipulations yield

The second integral vanishes because \( \varphi _1 \) is zero on the boundary of \( \Omega _x \). We therefore have the following inequality

Integrate over (t, T) to get

Applying Grönwall’s inequality and then integrating over (0, T) we end up with the stability estimate

Similarly, we estimate the second and third components of \( \varphi \) as follows. We multiply the second and third equations of (3.15) by \( \varphi _2 \) and \( \varphi _3 \), respectively. Adding the resulting equations and integrating over \( \Omega _x \) yields the equation

We may rewrite (3.16) as

Note that the third term on the left hand side of (3.17) is identically equal to zero because both \( \varphi _2 \) and \( \varphi _3 \) vanish at the boundary of \( \Omega _x \). We therefore have the following inequality

Integrating over (t, T) we get that

Applying Grönwall’s inequality and then integrating over (0, T) we end up with the stability estimate

Next we need to prove that the \( L_2 \)-norms of the derivatives of \( \varphi \) are bounded by \( \Vert \chi \Vert _{H^1 ( {\tilde{Q}}_T)} \). To do this, we first note that \( \varphi \) admits an explicit analytical representation, see [22],

Let us start by estimating the x-derivative of \( \varphi _1 \). By the above formula for \( \varphi _1 \) we have that

The Cauchy–Schwarz inequality and a suitable change of variables yield

Integrating both sides of the inequality over (0, T) gives the estimate

Now we can use this inequality together with the first equation in (3.15) to get an estimate for the time derivative of \( \varphi _1 \):

Similar estimates can be derived for the derivatives of \( \varphi _2 \) and \( \varphi _3 \). We omit the details, which follow those for the derivatives of \( \varphi _1 \). \(\square \)
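The factor h in Theorem 3.1 comes from combining two ingredients. The first is an interpolation bound of the form \(\Vert \varphi - \tilde{\varphi }\Vert \le C h \Vert \varphi \Vert _{H^1}\). The second is the stability estimate of Lemma 3.1, which bounds \(\Vert \varphi \Vert _{H^1}\) by \(\Vert \chi \Vert _{H^1}\). The sketch below checks the simplest one-dimensional instance of the interpolation bound numerically. For illustration only, we assume that the projection is the \(L_2\) projection onto piecewise constants on a uniform mesh of (0, 1), i.e. cell averages. By the Poincaré–Wirtinger inequality on each cell, the ratio \(\Vert \varphi - P\varphi \Vert /(h\Vert \varphi '\Vert )\) is at most \(1/\pi \). For smooth \(\varphi \) it tends to \(1/\sqrt{12}\) as h decreases.

```python
import numpy as np

def projection_error_ratio(phi, dphi, n_cells, n_quad=20):
    """Return ||phi - P phi|| / (h ||phi'||) on (0, 1), where P is the L2
    projection onto piecewise constants on n_cells uniform cells, so that
    P phi is the cell average. Integrals use Gauss-Legendre quadrature."""
    h = 1.0 / n_cells
    xg, wg = np.polynomial.legendre.leggauss(n_quad)   # nodes/weights on [-1, 1]
    err2 = 0.0
    grad2 = 0.0
    for c in range(n_cells):
        x = c * h + 0.5 * h * (xg + 1.0)                 # map nodes to cell c
        w = 0.5 * h * wg
        vals = phi(x)
        mean = np.dot(w, vals) / h                       # cell average = (P phi) on cell c
        err2 += np.dot(w, (vals - mean) ** 2)
        grad2 += np.dot(w, dphi(x) ** 2)
    return np.sqrt(err2) / (h * np.sqrt(grad2))

def phi(x):
    return np.sin(2 * np.pi * x)

def dphi(x):
    return 2 * np.pi * np.cos(2 * np.pi * x)

for n in (4, 8, 16, 32, 64):
    print(n, projection_error_ratio(phi, dphi, n), 1 / np.pi, 1 / np.sqrt(12))
```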

Summing up, we have proved the following estimate for the numerical error \( {\tilde{e}}^i\).

Theorem 3.1

(A posteriori error) There exists a constant C such that

As for the iteration error \( {\tilde{\mathcal {E}}}^i \), we assume that \( W^i \) converges to the analytic solution. Hence, for sufficiently large i, \({\tilde{e}}^i\) is the dominant part of the error \( W - W^{h, i} \); see [22] for the motivation of this assumption. Therefore, for large enough i, we have that

This together with Theorem 3.1 yields the following result:

Corollary 3.1

There exists a constant C such that

3.2 \(L_\infty (H^{-1})\) a posteriori error analysis for the Maxwell equations

In this part we derive an \( L_\infty (H^{-1} ) \) error estimate. Our interest in this norm is partly due to the fact that the Vlasov part is studied in the same setting. To proceed, we formulate a new dual problem as

where \( \chi \in [H^1 (\Omega _x)]^3 \). We multiply \( {\tilde{e}}^i(T, x) \) by \( \chi \) and integrate over \( \Omega _x \) to get

Using (3.6) and (3.7) the above identity can be written as

With similar manipulations as in (3.8), this equation simplifies to

Following the proof of Theorem 3.1 we end up with the following result:

Theorem 3.2

There exists a constant C such that

In the proof of this theorem we use the stability estimate:

Lemma 3.2

There exists a constant C such that

The proof is similar to that of Lemma 3.1 and therefore is omitted. With the same assumption on the iteration error \( {\tilde{\mathcal {E}}}^i \) as in (3.19), the numerical error \( {\tilde{e}}^i\) will be dominant and we have the following final result:

Corollary 3.2

There exists a constant C such that

4 A posteriori error estimates for the Vlasov equation

The study of the Vlasov part relies on a gradient estimate for the dual solution. Here, \(L_2\)-norm estimates would only yield error bounds depending on the size of the residuals, with no \(h^\alpha \)-rates. Even when the residual norms are small, this does not imply a concrete convergence rate. Moreover, making the residual norms smaller requires an unrealistically fine resolution. The remedy is to employ negative norm estimates in order to gain convergence rates of order \(h^\alpha \) for some \( \alpha >0.\) In this setting an \(H_{-1}(H_{-1})\)-norm is inappropriate, since the corresponding stability estimates for the dual problem are not available. Hence, this section is devoted to \(L_\infty (H_{-1})\)-norm error estimates for the Vlasov equation in the Vlasov–Maxwell system.
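The duality argument behind such an estimate can be sketched schematically. We suppress jump terms and the streamline diffusion contributions of size \(\delta = Ch\). Here R is a generic residual, \(\Psi ^i\) is the dual solution with data \(\chi \), and \(\tilde{\Psi }^i\) is its interpolant. This illustrates the mechanism rather than the precise statement proved below:

\[
\|e^i(T)\|_{H^{-1}} = \sup_{\chi\in H^1}\frac{(e^i(T),\chi)}{\|\chi\|_{H^1}},\qquad
(e^i(T),\chi) = (R,\Psi^i-\tilde\Psi^i)_{Q_T}
\le \|hR\|_{L_2(Q_T)}\,\|h^{-1}(\Psi^i-\tilde\Psi^i)\|_{L_2(Q_T)}
\le C\,\|hR\|_{L_2(Q_T)}\,\|\Psi^i\|_{H^1(Q_T)}
\le C\,\|hR\|_{L_2(Q_T)}\,\|\chi\|_{H^1}.
\]

Dividing by \(\Vert \chi \Vert _{H^1}\) and taking the supremum gives \(\Vert e^i(T)\Vert _{H^{-1}}\le C\Vert hR\Vert _{L_2(Q_T)}\), which carries an explicit power of h. An \(L_2\) estimate corresponds to data \(\chi \in L_2\). In that case the dual solution is not controlled in \(H^1\), and no interpolation gain is available.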

4.1 \(L_\infty (H_{-1})\) a posteriori error estimates for the Vlasov equation

The streamline diffusion method on the ith step for the Vlasov equation can be formulated as: find \( f^{h,i} \in V_h \) such that for \( m=1, 2, \dots , M \),

where the drift factor

is computed using the solutions of the Maxwell equations. As in the Maxwell part we decompose the error into two parts

where \( f^i \) is the exact solution of the approximated Vlasov equation at the ith iteration step:

To estimate the numerical error we formulate a corresponding dual problem as

where \( \chi \in H^1 ( \Omega _x \times \Omega _v ) \). Multiplying \( e^i (T, x, v) \) by \( \chi \) and integrating over \( \Omega _x \times \Omega _v\),

Since \( G( f^{h,i-1}) \) is divergence free, we may manipulate the sum above as in (3.6) and (3.7), ending up with

Adding and subtracting appropriate auxiliary terms, see (3.8), this simplifies to

where we have used (4.2). Let now \( \tilde{\Psi }^i \) be an interpolant of \( \Psi ^i \) and use (4.1) with \( g=\tilde{\Psi }^i \) to get

In the sequel we shall use the residuals

and

where \( R_2^i \) is constant in time on each slab.

Finally, we introduce the projections P and \( \pi \) defined in a similar way as in the Maxwell part, where the local projections

and

are defined such that

and

The main result of this section is as follows:

Theorem 4.1

There exists a constant C such that

Proof

We choose the interpolants so that \( \tilde{\Psi }^i = P \pi \Psi ^i = \pi P \Psi ^i \), then

The terms \( \tilde{J}_1 \) and \( \tilde{J}_2 \) are estimated in a similar way as \( J_1 \) and \( J_2 \), ending up with the estimate

Hence, it remains to bound the second and third terms in (4.4). To proceed, recalling the residuals \(R_1^i\) and \(R_2^i\), we have that

where we used that \(\delta = C h\). Summing up we have the estimate

Together with the following stability estimate this completes the proof. \(\square \)

Lemma 4.1

There exists a constant C such that

Proof

We start by estimating the \( L_2 \)-norm: multiply the dual equation (4.3) by \( \Psi ^i \) and integrate over \( \Omega \) to get

The second integral is zero, since \( \Psi ^i \) vanishes at the boundary of \( \Omega \), so that we have

Integrating over (t, T) yields

Once again integrating in time, we end up with

It remains to estimate \( \Vert \nabla \Psi ^i\Vert _{L_2(Q_T)} \). To this end, we rely on the characteristic representation of the solution of (4.3), see, e.g., [21]:

with X(s, t, x, v) and V(s, t, x, v) being the solutions to the characteristic system

where

Hence, we have

Thus, it suffices to estimate the gradients of X and V. Below we shall estimate the derivatives of X, \( V_1 \) and \( V_2 \) with respect to x. The estimates with respect to \( v_i, \,\, i = 1, 2 \) are done in a similar way. Differentiating (4.5) with respect to x we get

Integrating these equations over [s, t] and then taking absolute values gives

Summing up we have that

Now an application of Grönwall’s lemma yields

By similar estimates for derivatives with respect to velocity components we have

The estimates (4.6) and (4.7) result in the key inequalities

which together with the equation for \( \Psi \) gives

Summing up we have shown that

which proves the desired result. \(\square \)
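The characteristic representation used in the proof can be explored numerically. The sketch below makes the following assumptions:

- The characteristic system has the form of the one and one-half dimensional model of [12]: \(X'=\hat V_1\), \(V_1'=E_1+\hat V_2 B\) and \(V_2'=E_2-\hat V_1 B\).
- Hypothetical smooth fields `E1`, `E2` and `B` stand in for the discrete fields computed from \(f^{h,i-1}\).

The system is integrated with the classical fourth-order Runge–Kutta method. The Jacobian \(\partial (X,V)/\partial (x,v)\) is approximated by central differences. This force field is divergence free in (x, v), so the flow preserves phase-space volume. The Jacobian determinant should therefore equal one up to discretization error, while its entries stay bounded on bounded time intervals, in line with the Grönwall argument.

```python
import numpy as np

# Hypothetical smooth fields, chosen only for illustration; in the scheme they
# would come from the Maxwell solve with f^{h,i-1}.
def E1(t, x):
    return 0.3 * np.sin(x) * np.cos(t)

def E2(t, x):
    return 0.2 * np.cos(x + t)

def B(t, x):
    return 0.5 * np.exp(-x**2)

def rhs(s, y):
    """Right-hand side of the characteristic system for y = (X, V1, V2)."""
    x, v1, v2 = y
    gamma = np.sqrt(1.0 + v1**2 + v2**2)
    vh1, vh2 = v1 / gamma, v2 / gamma          # relativistic velocity v / sqrt(1 + |v|^2)
    return np.array([vh1,
                     E1(s, x) + vh2 * B(s, x),
                     E2(s, x) - vh1 * B(s, x)])

def flow(y0, s0, s1, n_steps=400):
    """Classical RK4 from time s0 to time s1 (s1 < s0 traces backwards)."""
    y = np.array(y0, dtype=float)
    dt = (s1 - s0) / n_steps
    s = s0
    for _ in range(n_steps):
        k1 = rhs(s, y)
        k2 = rhs(s + dt / 2, y + dt / 2 * k1)
        k3 = rhs(s + dt / 2, y + dt / 2 * k2)
        k4 = rhs(s + dt, y + dt * k3)
        y = y + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        s += dt
    return y

def jacobian(y0, s0, s1, eps=1e-5):
    """Central-difference approximation of d(X, V1, V2)/d(x, v1, v2)."""
    J = np.zeros((3, 3))
    for j in range(3):
        e = np.zeros(3)
        e[j] = eps
        J[:, j] = (flow(y0 + e, s0, s1) - flow(y0 - e, s0, s1)) / (2 * eps)
    return J

y0 = np.array([0.1, 0.4, -0.2])
J = jacobian(y0, 1.0, 0.0)
print("det J =", np.linalg.det(J))
print("max |J_kl| =", np.abs(J).max())
print("round trip error:", np.abs(flow(flow(y0, 1.0, 0.0), 0.0, 1.0) - y0).max())
```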

Using the assumption that the iteration error \( {\mathcal {E}}^i \) converges and is dominated by the numerical error \( e^i \), together with Theorem 4.1, we get the following result:

Corollary 4.1

There exists a constant C such that

5 Conclusions and future works

We have presented an a posteriori error analysis of the streamline diffusion (SD) scheme for the relativistic one and one-half dimensional Vlasov–Maxwell system. The motivation behind our choice of method is that the standard finite element method for hyperbolic problems is sub-optimal. The streamline diffusion discretization is performed slab-wise and allows jump discontinuities across the time grid points. The SD approach has a stabilizing effect: adding a multiple of the streaming term to the test function corresponds to automatically adding diffusion to the equation.

The numerical study of the VM system has drawbacks in both stability and convergence. The VM system lacks dissipativity, which, in general, affects stability. Further, \(L_2 (L_2)\) a posteriori error bounds would only be of the order of the norms of the residuals. In our study, we employ the \( H^{-1} (H^{-1})\) and \(L_\infty (H^{-1})\) settings in order to derive error estimates with convergence rates of order \( h^\alpha \), for some \( \alpha > 0 \). However, because of the lack of dissipativity, the \( H^{-1} (H^{-1})\)-norm analysis is not extended to the Vlasov part, where appropriate stability estimates are not available. Therefore the numerical study of the Vlasov part is restricted to the \(L_\infty (H^{-1})\) setting.

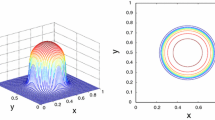

The computational aspects and implementations, which justify the theoretical results of this part, are the subject of a forthcoming study [5].

Future studies, in addition to considering higher dimensions and implementations, may include investigations of the assumption on the convergence of the iteration procedure; see the end of Sect. 2.

We also plan to extend this study to the Vlasov–Schrödinger–Poisson system. There we will rely on the theory developed by Ben Abdallah et al. in [3, 4] and consider a novel discretization procedure based on the mixed virtual element method, as in Brezzi et al. [7].

References

[1] Asadzadeh, M.: Streamline diffusion methods for the Vlasov–Poisson equation. Model. Math. Anal. Numer. 24, 177–196 (1990)

[2] Asadzadeh, M., Kowalczyk, P.: Convergence analysis of the streamline diffusion and discontinuous Galerkin methods for the Vlasov–Fokker–Planck system. Numer. Methods Partial Differ. Equ. 21(3), 472–495 (2005)

[3] Ben Abdallah, N., Mehats, F., Quinio, G.: Global existence of classical solutions for a Vlasov–Schrödinger–Poisson system. Indiana Univ. Math. J. 55(4), 1423–1448 (2006)

[4] Ben Abdallah, N., Mehats, F.: On a Vlasov–Schrödinger–Poisson model. Commun. Partial Differ. Equ. 29(1–2), 173–206 (2004)

[5] Bondestam-Malmberg, J., Standar, C.: Computational aspects of streamline diffusion schemes for the one and one-half dimensional relativistic Vlasov–Maxwell system (in preparation)

[6] Brezzi, F., Douglas Jr., J., Marini, L.D.: Two families of mixed finite elements for second order elliptic problems. Numer. Math. 47(2), 217–235 (1985)

[7] Brezzi, F., Falk, R.S., Marini, L.D.: Basic principles of mixed virtual element methods. ESAIM Math. Model. Numer. Anal. 48(4), 1227–1240 (2014)

[8] Cheng, Y., Gamba, I.M., Li, F., Morrison, P.J.: Discontinuous Galerkin methods for the Vlasov–Maxwell equations. SIAM J. Numer. Anal. 52(2), 1017–1049 (2014)

[9] DiPerna, R.J., Lions, P.L.: Global weak solutions of Vlasov–Maxwell systems. Commun. Pure Appl. Math. 42(6), 729–757 (1989)

[10] Friedrichs, K.O.: Symmetric positive linear differential equations. Commun. Pure Appl. Math. 11, 333–418 (1958)

[11] Johnson, C.: Adaptive finite element methods for conservation laws. In: Advanced Numerical Approximation of Nonlinear Hyperbolic Equations (Cetraro, 1997), Lecture Notes in Mathematics, vol. 1697, pp. 269–323. Springer, Berlin (1998)

[12] Glassey, R., Schaeffer, J.: On the one and one-half dimensional relativistic Vlasov–Maxwell system. Math. Methods Appl. Sci. 13, 169–179 (1990)

[13] Glassey, R., Schaeffer, J.: On global symmetric solutions to the relativistic Vlasov–Poisson equation in three space dimensions. Math. Methods Appl. Sci. 24(3), 143–157 (2001)

[14] Glassey, R., Schaeffer, J.: The relativistic Vlasov–Maxwell system in 2D and 2.5D. In: Nonlinear Wave Equations (Providence, RI, 1998), Contemporary Mathematics, vol. 263, pp. 61–69. American Mathematical Society, Providence, RI (2000)

[15] Guo, Y.: Global weak solutions of Vlasov–Maxwell systems with boundary conditions. Commun. Math. Phys. 154, 245–263 (1993)

[16] Hughes, T.J., Brooks, A.: A multidimensional upwind scheme with no crosswind diffusion. In: Hughes, T.J. (ed.) Finite Element Methods for Convection Dominated Flows, AMD vol. 34. ASME, New York (1979)

[17] Johnson, C., Saranen, J.: Streamline diffusion methods for the incompressible Euler and Navier–Stokes equations. Math. Comput. 47, 1–18 (1986)

[18] Lax, P.D., Phillips, R.S.: Local boundary conditions for dissipative symmetric linear differential operators. Commun. Pure Appl. Math. 13, 427–455 (1960)

[19] Monk, P., Sun, J.: Finite element methods for Maxwell’s transmission eigenvalues. SIAM J. Sci. Comput. 34(3), B247–B264 (2012)

[20] Perugia, I., Schötzau, D., Monk, P.: Stabilized interior penalty methods for the time-harmonic Maxwell equations. Comput. Methods Appl. Mech. Eng. 191(41–42), 4675–4697 (2002)

[21] Rein, G.: Generic global solutions of the relativistic Vlasov–Maxwell system of plasma physics. Commun. Math. Phys. 135(1), 41–78 (1990)

[22] Standar, C.: On streamline diffusion schemes for the one and one-half dimensional relativistic Vlasov–Maxwell system. Calcolo 53(2), 147–169 (2016)

[23] Süli, E., Houston, P.: Finite element methods for hyperbolic problems: a posteriori error analysis and adaptivity. In: The State of the Art in Numerical Analysis (York, 1996), Institute of Mathematics and its Applications Conference Series, vol. 63, pp. 441–471. Oxford Univ. Press, New York (1997)

[24] Tartakoff, D.S.: Regularity of solutions to boundary value problems of first order systems. Indiana Univ. Math. J. 21(12), 1113–1129 (1972)

[25] Ukai, S., Okabe, T.: On classical solutions in the large in time of two-dimensional Vlasov’s equation. Osaka J. Math. 15(2), 245–261 (1978)


Additional information

Communicated by Rolf Stenberg.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.


Cite this article

Asadzadeh, M., Standar, C. A posteriori error estimates for the one and one-half Dimensional Relativistic Vlasov–Maxwell system. Bit Numer Math 58, 5–26 (2018). https://doi.org/10.1007/s10543-017-0666-9


DOI: https://doi.org/10.1007/s10543-017-0666-9