Abstract

Machine learning techniques have gained attention in earthquake engineering for their accurate predictions, but their opaque black-box models create ambiguity in the decision-making process due to inherent complexity. To address this issue, numerous methods have been developed in the literature that attempt to elucidate and interpret black-box machine learning methods. However, many of these methods evaluate the decision-making processes of the relevant machine learning techniques based on their own criteria, leading to varying results across different approaches. Therefore, the critical significance of developing transparent and interpretable models, rather than describing black-box models, becomes particularly evident in fields such as earthquake engineering, where the interpretation of the physical implications of the problem holds paramount importance. Motivated by these considerations, this study aims to advance the field by developing a novel methodological approach that prioritizes transparency and interpretability in estimating the deformation capacity of non-ductile reinforced concrete shear walls based on an additive meta-model representation. Specifically, this model will leverage engineering knowledge to accurately predict the deformation capacity, utilizing a comprehensive dataset collected from various locations globally. Furthermore, the integration of uncertainty analysis within the proposed methodology facilitates a comprehensive investigation into the influence of individual shear wall variables and their interactions on deformation capacity, thereby enabling a detailed understanding of the relationship dynamics. The proposed model stands out by aligning with scientific knowledge, practicality, and interpretability without compromising its high level of accuracy.

1 Introduction

Shear walls are typically utilized as the primary elements to resist lateral loads in reinforced concrete buildings. Following capacity design principles, shear walls are designed to exhibit ductile behavior by providing adequate reinforcement and proper detailing. However, experimental studies have shown that walls with an aspect ratio smaller than 1.5 (i.e., squat walls) and those with poor reinforcement and detailing, despite their higher aspect ratio, end up showing brittle failure (e.g., diagonal tension, web crushing) (ASCE-41 2017; Massone and Wallace 2004; Sittipunt et al. 2001). Such walls are often observed in buildings not designed according to modern seismic codes and are prone to severe damage (Wallace et al. 2012; Arnold et al. 2006). As the performance-based design and assessment approach has gained importance in line with hazard mitigation efforts, there has been an increasing need and demand for reliable models to predict structural behavior under seismic actions. This objective is particularly important for walls that exhibit shear behavior, as the nonlinear deformation capacity of such walls is assumed to be zero, potentially leading to technical and economic over-conservatism. More realistic solutions can be achieved if their behavior is accurately estimated and considered in seismic performance evaluation.

The prediction of structural behavior has been achieved through the use of predictive equations or models that are developed based on available experimental data. Recently, machine learning (ML) methods have gained significant attention in the structural/earthquake engineering field, showing promising results even with limited data compared to other domains like computer vision and image processing. However, it should be noted that while black-box models often capture the input–output relationship more accurately in classification and regression tasks, they may not always align with the underlying physical behavior. This issue has been highlighted in various scientific and engineering applications, where black-box models have led to misleading conclusions (Lazer et al. 2014; Douglas and Aochi 2008; Karpatne et al. 2022). Consequently, despite their high accuracy, black-box models are not universally accepted within the earthquake engineering community. To leverage the benefits of advancements in artificial intelligence while considering the physical behavior, recent research efforts have focused on integrating black-box machine learning methods with domain-specific knowledge (Karpatne et al. 2022; Luo and Paal 2022; Zhou et al. 2022; Aladsani et al. 2022). This study takes a step further by employing an explainable machine learning approach, in contrast to black-box models, and incorporates the existing physics-based understanding of seismic behavior to estimate the deformation capacity of non-ductile shear walls.

2 Related research

Research efforts in the literature to estimate wall deformation capacity have produced empirical models, some recently adopted by building codes (Abdullah and Wallace 2019); however, they are relatively limited compared to other behavior features such as shear strength or failure mode. Earlier models were mainly developed using a limited number of experimental results (Paulay et al. 1982; Kazaz et al. 2012) or were trained using a single dataset; that is, they were not trained and tested on separate (unmixed) data (Abdullah and Wallace 2019; Grammatikou et al. 2015). Over time, as machine learning has been embraced in the earthquake engineering field (Mangalathu et al. 2020; Zhang et al. 2022; Deger and Taskin 2022; Deger and Taskin Kaya 2022; Aladsani et al. 2022) and new experiments have been conducted, more advanced models have been developed. Yet, two main issues are encountered: (i) Some models used simple approaches such as linear regression for the sake of interpretability (Deger and Basdogan 2019) and sacrificed overall accuracy (or had large dispersion). One might think that accurate models that predict relatively complicated behavior attributes can only be achieved by increasing model complexity; however, literature studies have shown that this may cause problems with the structure and distribution of the data (Johnson and Khoshgoftaar 2019; Kailkhura et al. 2019). More importantly, urging the model to develop complex relationships to achieve higher performance typically leads to black-box models where internal mechanisms include highly nonlinear, complex relations. (ii) Such black-box models achieve high overall accuracy at the cost of explainability (Zhang et al. 2022). Researchers who acknowledge the significance of interpretability have employed model-agnostic, local or global explanation methods, such as SHapley Additive exPlanation (SHAP) (Feng et al. 2021) and Local Interpretable Model-agnostic Explanations (LIME) (Ribeiro et al. 2016), to interpret the decision mechanism of their models (Aladsani et al. 2022). However, it should be noted that such algorithms are not fully verified (Kumar et al. 2020; Rudin 2019); besides, they are approximate approaches. In addition, due to the diverse criteria employed by each model-agnostic method, the interpretations derived from these methods can vary across approaches, leading to different conclusions and insights. Apart from all these, despite their broadening use and high accuracy, black-box models are not entirely accepted in the earthquake engineering community, primarily due to their opaque internal relationships and occasional lack of reliability (Molnar 2020).

Concerns regarding the trustworthiness and transparency of black-box models have led to the development of explainable artificial intelligence (XAI) (Adadi and Berrada 2018; Lipton 2018). The strategies in XAI can be classified into two main categories: post-hoc explainability, which involves using explanatory algorithms to explain existing black-box models, and the generation of transparent glass-box models (Deger and Taskin Kaya 2022) that are fully comprehensible and interpretable by humans. In fields where critical decisions are paramount, the adoption of transparent models is of utmost importance. However, their prevalence remains limited in comparison to black-box models (Doshi-Velez and Kim 2017). This discrepancy can be attributed to the superior decision-making capabilities demonstrated by black-box models. The use of highly complex functions during the training of these machine learning models allows them to capture the underlying nonlinear structure present in the data, thereby enabling them to make significantly better decisions than transparent models. On the other hand, transparent models aim to construct learning models with simpler functions in order to maximize performance while also facilitating human understanding and comprehension of the model (Selbst and Barocas 2018). Linear regression, logistic regression, and decision trees are widely recognized as transparent methods in the field of machine learning. However, due to the utilization of simpler functions or rules in such methods during the learning phase, the performance levels of transparent models are typically lower than black-box models (Linardatos et al. 2020). To improve performance, the structure of functions used to construct predictive models can be intentionally made complex. This can be achieved by leveraging the framework of generalized additive models (GAMs) (Hastie et al. 2009). 
The GAMs allow for flexible modeling by incorporating smooth and non-linear functions of the input variables while still maintaining interpretability. This flexibility enables the model to capture complex relationships and interactions between input variables. Some approaches developed based on the GAM framework include the Neural additive model (NAM) (Agarwal et al. 2021), GAMI-Net (Yang et al. 2021), and the Enhanced explainable neural network (ExNN) (Yang et al. 2020). Another method, the Explainable Boosting Machine (EBM), is an alternative tree-based method that integrates the interpretability of generalized additive models (GAMs) with the predictive capabilities of boosting (Nori et al. 2019). Notably, EBM has been reported in several studies, including detecting common flaws in data (Chen et al. 2021), diagnosing COVID-19 using blood test variables (Thimoteo et al. 2022), and predicting diseases such as Alzheimer's (Sarica et al. 2021) or Parkinson's (Sarica et al. 2022), as a competitive alternative to powerful boosting methods like XGBoost and LightGBM.

Understanding how the model makes the decision/estimation is critical to (i) verify that the model is physically meaningful, (ii) develop confidence in the predictive capabilities of the model, and (iii) broaden existing scientific knowledge with new insights. This study addresses this requirement and fills a crucial research gap in earthquake engineering by leveraging domain-specific knowledge to evaluate and validate the decisions made by machine learning methods. Unlike the existing ML-based predictive models (Aladsani et al. 2022; Zhang et al. 2022), the proposed model aims to estimate the deformation capacity of non-ductile reinforced concrete (RC) shear walls and inherently possesses transparency and interpretability. The methodology is developed based on the framework of generalized additive models and incorporates engineering knowledge to improve accuracy and reliability, providing valuable insights for structural analysis and design in earthquake engineering. The inputs of the predictive model are designated as the shear wall design properties (e.g., wall geometry, reinforcing ratio), whereas the output is one of the constitutive components of the nonlinear wall behavior, that is, the deformation capacity. The main contributions of this research are highlighted as follows:

- A fully transparent and interpretable predictive model is developed to estimate the deformation capacity of RC shear walls that fail in pure shear or shear-flexure interaction.
- The proposed model meets all desired properties, i.e., decomposability, algorithmic transparency, and simulatability, without compromising high performance.
- This study integrates novel computational methods and domain-based knowledge to formalize complex engineering knowledge. The proposed model’s overall consistency with a physics-based understanding of seismic behavior is verified.

3 The RC shear wall database

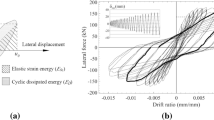

The experimental data used in this research is a subset of the wall test database utilized in Deger and Taskin (2022) with 30 additional specimens (Tokunaga and Nakachi 2012; Hirosawa 1975). As the main focus is to estimate the deformation capacity of walls governed by shear or shear-flexure interaction, walls that did not show so-called shear failure indications based on their reported failure modes are excluded from the database, resulting in 286 specimens used in this research. Shear failure indications are mainly identified as diagonal tension failure and web crushing damage, which imply reaching shear strength. All specimens were tested under quasi-static cyclic loading, and none was retrofitted and re-tested. The database consists of wall design parameters, depicted in Fig. 1, which are herein designated as the input variables of the machine learning problem, namely: wall geometry (\(t_w\), \(l_w\), \(h_w\)), shear span ratio (\(M/Vl_w\)), concrete compressive strength (\(f_c\)), yield strength of longitudinal and transverse reinforcing steel at web (\(f_{yl}\), \(f_{yt}\)), yield strength of longitudinal and transverse reinforcing steel at boundary elements (\(f_{ybl}\), \(f_{ysh}\)), longitudinal and transverse reinforcing ratios at web (\(\rho _{yl}\), \(\rho _{yt}\)), longitudinal and transverse reinforcing ratios at boundary elements (\(\rho _{ybl}\), \(\rho _{ysh}\)), axial load ratio (\(P/(A_gf_c)\)), shear demand (or strength) at the section (\(V_{max}\)), cross-section type (rectangular, barbell-shape, or flanged, XSec), and curvature type (single or double, CT). It is noted that single curvature and double curvature correspond to the end conditions of the specimen, i.e., cantilever and fixed-fixed, respectively. Distributions of the input variables are presented in Fig. 2 along with their box plots (shown in blue).

The output variable of the ML problem, the deformation capacity, is taken directly as the reported ultimate displacement prior to its failure if the specimen is tested until failure. Otherwise, it is assumed as the displacement corresponding to \(0.8V_{max}\) as suggested by Park (1989). It is noted that failure displacement was taken as the total wall top displacement and was not separated into shear and flexural deformation components.

4 Generalized additive models

The Generalized Additive Model (GAM) (Hastie and Tibshirani 1987) was initially proposed as a representation model that models an n-dimensional complex mathematical function or a black-box problem as a linear combination of first-order basis functions. To improve the model representation and capture additional complexities, second-order terms, including the pairwise effects between variables, were incorporated into the model. This extended version of GAM, known as GA\(^2\)Ms (Generalized Additive Models with Second-order terms) (Lou et al. 2012), is expressed in Eq. 1.

\[ f(x_1, \ldots , x_n) = f_0 + \sum _{i=1}^{n} f_i(x_i) + \sum _{k=1}^{K} f_k(x_{k_1}, x_{k_2}) \tag{1} \]

where \(f_0\) denotes the intercept term, calculated as the mean response of all the outputs, while each \(f_i\) represents the univariate shape function for the i-th variable, capturing the individual effect of \(x_i\) on the model output \(f(x_1, \ldots , x_n)\). Additionally, as mentioned earlier, the model incorporates K pairs of features \((k_1, k_2)\) to account for their combined effects (\(f_k(x_{k_1},x_{k_2})\)).

Notably, since the shape functions are trained independently for each input variable, the model is additive (decomposable), enabling the separate analysis of the effect of each input variable on the model output. Thus, the effect of each feature on the model’s predictions, which is captured by the corresponding univariate shape function, can be interpreted through visualization of these shape functions (algorithmically transparent). An example of such visualization can be observed in Fig. 3, where the shape functions provide insights into the influence of different features on the model’s output.

Similarly, the pairwise interaction \(f_k(x_{k_1},x_{k_2})\) could be rendered as a heatmap on the two-dimensional \((x_{k_1}, x_{k_2})\)-plane. In the inference phase, all the terms in Eq. 1 are added up, yielding a final prediction of model output, i.e., deformation capacity. Moreover, the GAM offers both local and global explanations of the learning model, as the importance of each variable is estimated as the average absolute value of its predicted score. It should be noted that for better performance, additional interactions can be incorporated; however, this may result in a more complex model with lower generalization performance due to the increased number of model parameters to be trained. This increased complexity can make the final predictive model less comprehensible as well (lower simulatability). Given this trade-off, the number of interactions is set to two for the proposed model.
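The additive inference and the importance measure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two features `a` and `b`, their bin edges, and every score are made-up values rather than the fitted model, and the helper names (`make_step_function`, `predict`, `global_importance`) are hypothetical.

```python
from bisect import bisect_right

def make_step_function(edges, scores):
    """Piecewise-constant shape function.

    `edges` are the sorted interior bin boundaries, so len(scores) == len(edges) + 1.
    """
    def f(x):
        return scores[bisect_right(edges, x)]
    return f

# Hypothetical terms (illustrative numbers only, not taken from the paper).
f0 = 10.0                                                # intercept
f_a = make_step_function([1.0, 2.0], [-3.0, 0.5, 4.0])   # univariate term for "a"
f_b = make_step_function([0.1], [2.0, -2.0])             # univariate term for "b"

def f_ab(a, b):
    """Hypothetical pairwise term, the kind usually drawn as a heatmap."""
    return 1.0 if (a >= 2.0 and b < 0.1) else 0.0

def predict(a, b):
    """Additive prediction: intercept + univariate terms + pairwise term."""
    terms = {"a": f_a(a), "b": f_b(b), "a x b": f_ab(a, b)}
    return f0 + sum(terms.values()), terms

def global_importance(samples):
    """Importance of each term as its mean absolute score over the samples."""
    totals = {}
    for a, b in samples:
        _, terms = predict(a, b)
        for name, score in terms.items():
            totals[name] = totals.get(name, 0.0) + abs(score)
    return {name: total / len(samples) for name, total in totals.items()}

samples = [(0.5, 0.05), (1.5, 0.2), (2.5, 0.05)]
prediction, contributions = predict(2.5, 0.05)   # local explanation for one sample
importance = global_importance(samples)          # global explanation over all samples
```

The per-sample `contributions` dictionary plays the role of a local explanation, while `importance` aggregates the same scores into a global ranking.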

The framework of Generalized Additive Models (GAMs) offers a flexible and interpretable approach for modeling complex relationships. It achieves this by decomposing the overall function into additive components represented by the shape functions. Each shape function captures a specific aspect of the relationship between the input variables, enabling the modeling of nonlinearity and interactions. The determination of these basis functions can be accomplished using various methods, resulting in different representation techniques. While alternative analytical functions like splines or orthogonal polynomials can be employed to define shape functions, they may yield less accurate representations of nonlinear models (McLean et al. 2014). Alternatively, shape functions can be modeled in such cases using black-box methods such as trees, boosting, or neural networks. This approach is particularly advantageous when the underlying relationship between variables is complex and nonlinear. Among these methods, Explainable Boosting Machine (EBM), developed based on the GAM framework, is a relatively new approach that employs random tree and boosting techniques to represent shape functions (Nori et al. 2019). The EBM method effectively models nonlinearity in the model and has demonstrated high performance in the literature, competing with other powerful boosting methods, such as LightGBM, XGBoost, etc. (Nori et al. 2021). The GAMs have certain advantages over tree-based models such as XGBoost, particularly in the context of interpretability, even when considering SHAP for tree models. The GAMs inherently provide a clear and direct understanding of how each feature influences the outcome, as they model the relationship between predictors and response in an additive and smooth manner. This smoothness is crucial for capturing non-linear trends in data, a feature that tree-based models, with their step-wise data splits, might not capture as effectively. 
Moreover, while tree models can handle complex interactions, GAMs maintain interpretability even when modeling the interactions between the model’s variables. Additionally, GAMs provide more stable predictions for minor variations in input data and can inherently model uncertainty, providing confidence intervals around predictions. This contrasts with tree-based models, where predictions can change more abruptly due to discrete data splits. Due to these strengths, the EBM, a model based on GAM, will be utilized in this study for modeling the deformation capacity, incorporating domain knowledge, and ensuring physical consistency with seismic behavior.
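To make the idea of tree-based shape functions concrete, the following is a minimal sketch of boosting an additive model one feature at a time. It rests on several assumptions that are not taken from the study: single-split stumps stand in for the small trees, features are visited in a fixed round-robin order, there is no bagging and no pairwise term, and the names `best_stump`, `fit_additive`, and `shape_value` are hypothetical. It is not the implementation used in the paper.

```python
def best_stump(xs, residuals):
    """Least-squares single-split stump on one feature; None if the feature is constant."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    best = None
    for pos in range(1, len(order)):
        lo, hi = xs[order[pos - 1]], xs[order[pos]]
        if lo == hi:
            continue  # no valid threshold between equal values
        left = [residuals[i] for i in order[:pos]]
        right = [residuals[i] for i in order[pos:]]
        lmean, rmean = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lmean) ** 2 for r in left) + sum((r - rmean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, (lo + hi) / 2.0, lmean, rmean)
    return best

def fit_additive(X, y, rounds=200, learning_rate=0.1):
    """Round-robin boosting: each stump only ever sees one feature."""
    n_features = len(X[0])
    intercept = sum(y) / len(y)
    stumps = [[] for _ in range(n_features)]  # per feature: (threshold, left, right)
    preds = [intercept] * len(y)
    for _ in range(rounds):
        for j in range(n_features):
            residuals = [yi - pi for yi, pi in zip(y, preds)]
            col = [row[j] for row in X]
            stump = best_stump(col, residuals)
            if stump is None:
                continue
            _, thr, left, right = stump
            left, right = learning_rate * left, learning_rate * right
            stumps[j].append((thr, left, right))
            preds = [p + (left if x <= thr else right) for p, x in zip(preds, col)]
    return intercept, stumps

def shape_value(stumps_j, x):
    """Shape function of one feature: the sum of all its stumps (piecewise constant)."""
    return sum(left if x <= thr else right for thr, left, right in stumps_j)

def predict(model, row):
    intercept, stumps = model
    return intercept + sum(shape_value(s, x) for s, x in zip(stumps, row))

# Synthetic additive target: a step in x0 plus a linear trend in x1.
X = [[i % 5, i // 5] for i in range(25)]
y = [3.0 * (x0 >= 2) + 0.5 * x1 for x0, x1 in X]
model = fit_additive(X, y)
mse = sum((yi - predict(model, row)) ** 2 for row, yi in zip(X, y)) / len(y)
```

Because every stump depends on a single feature, the fitted model remains a sum of per-feature step functions, which is why the learned shape functions look jump-like rather than smooth.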

5 Experimental analysis

To assess whether the method compromises accuracy for the sake of interpretability, the performance of the proposed model, specifically utilizing the EBM, is compared to three state-of-the-art black-box machine learning models, namely: XGBoost (Chen and Guestrin 2016), Gradient Boost (Friedman 2001), Random Forest (Breiman 2001), and two glass-box models, namely Ridge Linear Regression (Hastie et al. 2009), Decision Tree (Breiman et al. 2017). All the implementations are carried out in a Python environment. To ensure a fair comparison, the entire database, including all twelve input variables (ten variables from Fig. 2 and two binary coded variables for curvature type and cross-section type), is randomly split into training and test datasets with a ratio of 90% and 10%, respectively. The performance of each model is influenced by hyperparameters such as learning rate, number of leaves, and number of interactions. A tenfold cross-validation technique is employed to optimize these hyperparameters. This technique involves splitting the data into ten subsets, using one subset as the validation set, while training the model with different hyperparameter settings on the remaining nine subsets. This helps prevent overfitting on the training dataset during hyperparameter tuning. This procedure is not only standard but recommended in the machine learning community, as it provides a comprehensive assessment over multiple train-test splits, thus offering more accurate results of the model’s performance in practical scenarios. By integrating this well-established method, we aim to address potential concerns regarding the reliability of our analysis.
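The splitting and tuning procedure described above can be sketched as follows. The sketch assumes a toy one-dimensional dataset and a k-nearest-neighbour regressor as a stand-in model (the neighbour count plays the role of the tuned hyperparameter); neither is part of the study, and the function names are hypothetical.

```python
import random

def k_fold_indices(n, k, rng):
    """Shuffle the indices 0..n-1 and deal them into k disjoint folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]

def knn_predict(train, x, k):
    """Stand-in model: average the targets of the k nearest training points."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / len(nearest)

def cv_error(data, k_neighbors, folds):
    """Mean squared validation error, each fold held out once."""
    total, count = 0.0, 0
    for fold in folds:
        held_out = set(fold)
        train = [data[i] for i in range(len(data)) if i not in held_out]
        for i in fold:
            x, y = data[i]
            total += (y - knn_predict(train, x, k_neighbors)) ** 2
            count += 1
    return total / count

rng = random.Random(0)
data = [(x / 10.0, (x / 10.0) ** 2 + rng.gauss(0, 0.05)) for x in range(100)]
rng.shuffle(data)

# 90% / 10% train-test split; the test set is never touched during tuning.
n_test = len(data) // 10
test_set, train_set = data[:n_test], data[n_test:]

# Tenfold cross-validation on the training set to choose the hyperparameter.
folds = k_fold_indices(len(train_set), 10, rng)
candidates = [1, 3, 5, 15, 45]
scores = {k: cv_error(train_set, k, folds) for k in candidates}
best_k = min(scores, key=scores.get)

# Final, one-off evaluation on the unseen test set.
test_mse = sum((y - knn_predict(train_set, x, best_k)) ** 2 for x, y in test_set) / len(test_set)
```

Keeping the test set outside the cross-validation loop is what allows the reported test scores to be treated as performance on unseen data.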

For performance evaluations, the following three metrics are used over “unseen” (i.e., not used in the training process) test datasets of ten random train-test data splittings: coefficient of determination (\(R^2\)), relative error (RE), and prediction accuracy (PA), as given in Eqs. 2, 3, and 4, respectively.

where \(y_i\), \(\bar{y}\), \(\hat{y}_i\), and m refer to the actual output, the mean value of the \(y_i\), the predicted output of the corresponding regression model, and the number of samples in the test dataset, respectively.
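For readers who want to reproduce the three scores, a minimal sketch is given below. The coefficient of determination follows its standard definition. The forms used for RE (mean absolute error relative to the actual value) and PA (mean predicted-to-actual ratio) are assumptions inferred from how the scores are discussed in the text, so the exact normalisation in Eqs. 3 and 4 may differ.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((y - p) ** 2 for y, p in zip(y_true, y_pred))
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1.0 - ss_res / ss_tot

def relative_error(y_true, y_pred):
    """Assumed form: mean of |y - y_hat| / |y| (multiply by 100 for percent)."""
    return sum(abs(y - p) / abs(y) for y, p in zip(y_true, y_pred)) / len(y_true)

def prediction_accuracy(y_true, y_pred):
    """Assumed form: mean predicted-to-actual ratio; values above 1 mean overestimation."""
    return sum(p / y for y, p in zip(y_true, y_pred)) / len(y_true)

# Illustrative numbers only.
y_true = [10.0, 20.0, 40.0]
y_pred = [12.0, 18.0, 44.0]
r2 = r_squared(y_true, y_pred)
re = relative_error(y_true, y_pred)
pa = prediction_accuracy(y_true, y_pred)
```

Under these assumed definitions, a PA of about 1.2 corresponds to predictions that are on average roughly 20% higher than the measured values.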

The EBM method was initially employed on the dataset without considering any form of physical meaningfulness to evaluate its performance, especially compared to other boosting-based methods. Table 1 presents the mean performance scores (evaluation metrics) of all the machine learning models, while Fig. 4 illustrates their dispersion through box plots. The results demonstrate that the EBM achieves comparable performance to its black-box counterparts, with a coefficient of determination (\(R^2\)) of 0.83, a relative error of 0.41%, and a prediction accuracy (PA) of 1.21. The box plots in Fig. 4 also indicate that EBM exhibits low deviations in \(R^2\), relative error (RE), and prediction accuracy (PA), indicating the model’s reliability and robustness across different train-test splits. Moreover, the mean prediction accuracy (PA) reveals an overestimation of approximately 20% for both EBM and the black-box methods, suggesting the presence of potential noise in some input variables. In comparison to transparent models, EBM outperforms both the Decision Tree (DT) and Ridge Linear Regression (RLR) across all three metrics, demonstrating its superiority over traditional glass-box approaches.

Considering the achieved results, the most remarkable advantage of the EBM method over the others is that it provides full explainability without sacrificing accuracy. Unlike other methods, EBM enables the user to understand how the prediction is made and which parameters are essential in the decision-making process. Therefore, the EBM method is selected as the baseline algorithm for the rest of the analysis to propose a prediction model for estimating the deformation capacity based on the following criteria: developing a model with fewer input variables (high simulatability), achieving high accuracy, and ensuring physical consistency.

5.1 The proposed deformation capacity model

To reduce the number of variables in the prediction model without sacrificing prediction accuracy, it is necessary to identify the most influential variables through uncertainty analysis. This approach aims to propose a model that is not only simpler and more interpretable but also practical and easily implementable for potential users, owing to its reduced number of variables. Therefore, the importance of the wall properties in predicting the deformation capacity is evaluated based on additive term contributions visualized in Fig. 5. The results reveal that \(t_w\) and \(M/Vl_w\) (or \(h_w\)/\(l_w\)) have the greatest impact on individual predictions. This is consistent with the mechanics of the behavior, as walls with smaller thickness are shown to be more susceptible to lateral stiffness degradation due to concrete spalling, leading to a failure caused by lateral instabilities or out-of-plane buckling (Vallenas et al. 1979; Oesterle et al. 1976). The shear span ratio (or aspect ratio), on the other hand, has a significant impact on deformation capacity: the higher the shear span ratio, the more slender the wall is, and the higher the deformations it can typically reach prior to failure. The least important wall parameters are identified as curvature type, cross-section type, and concrete compressive strength.

In addition to accuracy and transparency, one objective of this research is to propose a practical model. To develop the predictive model with as few input variables as possible, various combinations of input features, selected based on engineering knowledge, are thoroughly assessed to achieve performance scores comparable to those obtained when using all twelve features. Domain knowledge-based feature selection emphasizes two key features: (i) the shear stress demand (\(V_{max}\)) and (ii) the axial load ratio (\(P/(A_gf_c)\)). These two features have been highlighted for their significant impact, as evidenced by various research studies [e.g., Abdullah and Wallace (2019), Lefas et al. (1990), Netrattana et al. (2017)], and are underscored by ASCE 41-17 (ASCE-41 2017) for accurately modeling wall deformation. Table 2 presents various input feature combinations along with the corresponding mean coefficient of determination (\(R^2\)) and mean prediction accuracy (PA) on the test datasets over ten random splittings. As shown in Table 2, EBM can achieve performance scores comparable to using all features by focusing on just four key features: \(V_{max}\), \(M/Vl_w\), \(P/(A_gf_c)\), and \(t_w\). Integrating additional features (e.g., \(\rho _l\), \(\rho _{bl}\), \(\rho _{sh}\)) deemed influential by experimental findings (Tasnimi 2000; Hube et al. 2014) has demonstrated only a marginal impact on the overall performance.

The proposed predictive model is selected to achieve the highest \(R^2\) with a prediction accuracy as close to 1.0 on the validation dataset as possible. The correlation plots are presented in Fig. 6 for training and test data sets, where scattered data are concentrated along the \(y=x\) line, demonstrating that the proposed model can make accurate predictions. Additionally, it is worth mentioning that the distribution of the residuals is concentrated around zero. It should be noted that overfitting occurs when a model is excessively complex and learns not only the underlying patterns in the training data but also its noise, leading to poor generalization on new, unseen data. As can be seen in Fig. 6a, the \(R^2\) of the model is 96% on the training data and 92% on the test data. The small gap between the two scores indicates that the model has not overfitted: it performs consistently across both training and test datasets, suggesting that it generalizes well to new, unseen data without being overly tailored to the specific patterns of the training set.

Following all of these analyses, the proposed model is illustrated in Fig. 7. As discussed above, the proposed model is an additive model in which each relevant feature is designated a quantitative term contribution, therefore enabling the user to examine the contribution of each feature through univariate (Fig. 7a–d) and bivariate shape functions (Fig. 7e–f). The values read from these functions, called scores, and those from the heat maps representing pairwise interactions (i.e., between two features) are summed up, together with the intercept, to calculate the prediction. The gray zones displayed along the shape functions represent error bars, indicating the model’s uncertainty and sensitivity to the data. These error bars are particularly visible in cases of sparsity or the presence of outliers within the corresponding region. Furthermore, it is observed that the resulting shape functions exhibit characteristics of jump-like, piece-wise constant functions instead of smooth curves with gradual inclines and declines. This behavior can be attributed to the fact that the shape functions of the proposed model are derived from multiple decision-tree learning models.

The shape functions in Fig. 7 also indicate their correlations with the output in a graphical representation. For example, nonlinear patterns that cannot be observed in linear approaches can be easily interpreted (Zschech et al. 2022), which provides new insights to broaden existing experiment-based knowledge. Experimental results (Corley et al. 1981) demonstrate that the shear strength \(V_{max}\) (Fig. 7d) reduces ductility and, thus, the deformation capacity, aligning with the ASCE 41-17 acceptance criteria, whereas the collection of relevant experimental data in the literature has revealed a highly nonlinear pattern (Aladsani et al. 2022; Deger and Basdogan 2019). This nonlinearity can be observed in the shape function suggested by the proposed method. Considering the other input variables (\(M/Vl_w\), \(t_w\), \(P/(A_gf_c)\)), the proposed model is also consistent with experimental results in the literature such that \(M/Vl_w\) (Fig. 7a) and \(t_w\) (Fig. 7b) have a positive impact, as discussed above, whereas \(P/(A_gf_c)\) (Fig. 7c) has an adverse influence (Lefas et al. 1990; Farvashany et al. 2008). The reason that \(M/Vl_w\) and \(t_w\) (Fig. 7b) suggest an inverse effect up to a certain point (\(M/Vl_w \approx 1.2\), \(t_w \approx 60\) cm, \(P/(A_gf_c) \approx 0.08\)) is that the model has an intercept value (\(f_0\), Eq. 1), and specimens with smaller deformation capacities (less than \(f_0 = 35.528\)) are predicted by adding up negative values. The unexpected spikes in \(t_w\) are likely due to a sudden increase in the number of data points at \(t_w = 100\) mm and \(t_w = 200\) mm (64 and 44 specimens, respectively). These particular thickness values are commonly employed in experiments. Consequently, when the output varies while the input remains the same across a significant number of specimens, it can pose challenges in decision-making processes.

As mentioned earlier, generalized additive models provide flexibility over the structure of the developed model by allowing modifications such as adjusting the number of pairwise interactions. This allows the GAM to suggest more than one model for the same input–output configuration for a particular train-test dataset. Reducing the number of interactions brings simplicity to the model; however, it typically loses accuracy as GAM relies on its automatically determined interactions in the decision-making process.

5.2 Sample-based verification of proposed model

To further examine the physical meaningfulness of the proposed model, the prediction of deformation capacity was validated based on two example specimens, one with a good and one with a relatively poor result. The prediction for one specimen showed excellent accuracy with almost zero error (Fig. 8a), while the prediction for the other specimen had an error of approximately 15% (Fig. 8b). The contribution of each feature to the prediction is presented for each specimen such that the intercept is constant and shown in gray, the additive terms with positive impact are marked in orange, and additive terms decreasing the output are shown in blue. Each contribution estimate is extracted from the shape functions and two-dimensional heat maps (Fig. 7) based on the input values of a specific specimen. Overall, the model is consistent with physical knowledge, except that \(V_{max}\) has an unexpected positive impact on the output for the relatively worse prediction (Fig. 8b). This is a key advantage of the proposed method; that is, the user can understand how the prediction is made for a new sample and develop confidence in the predictive model (versus blind acceptance in black-box models).

5.3 Comparisons with current code provisions

For validation and verification of the predictive model, performance scores are compared with those obtained using the current code provisions. ASCE 41-17 and ACI 369-17 (ACI-369 2017) provide recommended deformation capacities for nonlinear modeling purposes, where shear walls are classified into the following two categories based on their aspect ratio: shear-controlled (\(h_w/l_w < 1.5\)) and flexure-controlled (\(h_w/l_w > 3.0\)). The deformation capacity of shear-controlled walls is identified as drift ratio such that \(\Delta _u/h_w = 1.0\) if the wall axial load level is greater than 0.5 and \(\Delta _u/h_w = 2.0\) otherwise (ASCE-41 2017) (Table 3).
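The code-based estimate described in this paragraph is a simple lookup, sketched below under stated assumptions. The function names are hypothetical; shear-controlled walls are taken to be those with \(h_w/l_w\) below 1.5; the label "intermediate" for walls between the two limits is an assumption; and the axial load limit is passed in explicitly so that it can be checked against Table 3 and ASCE 41-17 rather than taken from this sketch.

```python
def classify_wall(aspect_ratio):
    """Classify a wall by its aspect ratio h_w/l_w using the two limits in the text."""
    if aspect_ratio < 1.5:
        return "shear-controlled"
    if aspect_ratio > 3.0:
        return "flexure-controlled"
    return "intermediate"  # assumption: label for walls between the two limits


def code_drift_capacity(axial_load_level, axial_limit):
    """Drift ratio capacity of a shear-controlled wall, as described in the text.

    axial_limit must be supplied by the caller (see Table 3 / ASCE 41-17).
    Returns 1.0 above the limit and 2.0 otherwise.
    """
    return 1.0 if axial_load_level > axial_limit else 2.0


# Usage with the limit stated in the paragraph above.
wall_type = classify_wall(1.0)
capacity_low_axial = code_drift_capacity(0.1, axial_limit=0.5)
capacity_high_axial = code_drift_capacity(0.6, axial_limit=0.5)
print(wall_type, capacity_low_axial, capacity_high_axial)
```

The code capacity depends on a single variable with one threshold, whereas the proposed model combines several continuous term contributions.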

Deformation capacity predictions based on the proposed model are compared to ASCE 41-17 provisions in Fig. 9 based on the test data set. Predicted-to-actual ratios are \(1.06\pm 0.49\) and \(6.42\pm 3.17\) for the proposed model and the code predictions, respectively. The results imply that traditional approaches may overestimate deformation capacities and lead to unsafe assessments.
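The predicted-to-actual statistic reported above can be computed as follows. This is a minimal sketch with invented numbers; whether the reported spread is a sample or a population standard deviation is not stated in the text, so the use of the sample standard deviation here is an assumption.

```python
from statistics import mean, stdev


def ratio_summary(predicted, actual):
    """Return (mean, sample standard deviation) of the predicted-to-actual ratios."""
    ratios = [p / a for p, a in zip(predicted, actual)]
    return mean(ratios), stdev(ratios)


# Hypothetical test-set values; NOT taken from the paper.
actual = [1.0, 2.0, 4.0, 5.0]
predicted = [1.5, 2.0, 3.0, 5.0]

ratio_mean, ratio_std = ratio_summary(predicted, actual)
print(f"predicted-to-actual ratio: {ratio_mean:.2f} +/- {ratio_std:.2f}")
```

A mean ratio above 1.0 indicates overestimation on average, which is the unsafe direction for deformation capacity.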

5.4 Conclusions

The utilization of machine learning techniques has gained significant attention in earthquake engineering, providing promising solutions to various challenges and yielding reasonably reliable and accurate predictions. However, the decision-making process of machine learning models remains ambiguous mainly due to their inherent complexity, thereby creating opaque black-box models. To deal with this issue, a fully transparent predictive model is developed in this study to estimate the deformation capacity of reinforced concrete shear walls that fail in pure shear or shear-flexure interaction. The proposed method is constructed based on a generalized additive model such that each relevant feature (wall parameters) is designated a quantitative term contribution. The input–output configuration of the model is designated as the shear wall design properties (e.g., wall geometry, axial load ratio) and ultimate wall displacement, respectively. The conclusions derived from this study are summarized as follows:

- The importance of the wall properties in predicting the deformation capacity is evaluated based on additive term contributions. The features \(t_w\) and \(M/Vl_w\) (or \(h_w\)/\(l_w\)) have the greatest effect on individual predictions, whereas the least relevant ones are identified as curvature type, cross-section type, and concrete compressive strength.

- Compared to three black-box models (XGBoost, Gradient Boost, Random Forest), the model constructed based on EBM achieves similar or better performance in terms of coefficient of determination (\(R^2\)), relative error (RE), and prediction accuracy (PA; the ratio of the predicted to the actual value). The EBM-based model achieves a mean \(R^2\) of 0.83 and a mean RE of 0.41% using twelve input variables based on ten random train-test splits.

- Compared to two glass-box methods (Decision Tree (DT) and Ridge Linear Regression (RLR)), the EBM outperforms both methods across all three metrics.

- The dispersion of performance metrics of the EBM-based model is small, implying that the model is robust and the performance is relatively less data-dependent.

- Compared to the EBM-based model when all the available features are used, the proposed method achieves competitive performance scores using only four input variables: \(M/Vl_w\), \(P/(A_gf_c)\), \(t_w\), and \(V_{max}\). Using these four features, the proposed model achieves \(R^2\) of 0.92 and PA of 1.05 based on the test dataset. Using fewer variables makes the model more simulatable, more practical, and more comprehensible, and reduces the computational cost.

- The decision-making process of the proposed EBM-based model is consistent overall with scientific knowledge, despite several exceptions detected in sample-based inferences. This is an important advantage of the proposed model: the user can assess and evaluate the prediction process before developing confidence in the result (versus blindly accepting it as in black-box models).

- This model delivers exact intelligibility, i.e., there is no need to use local explanation methods (e.g., SHAP, LIME) to interpret the learning model, which obviates the uncertainties associated with their approximations.
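The three performance metrics named in the list above can be sketched as follows. \(R^2\) and PA follow their usual definitions; the text does not define RE precisely, so the mean absolute relative error in percent used here is an assumption, and all numbers are invented for illustration.

```python
from statistics import mean


def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    avg = mean(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - avg) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


def relative_error(actual, predicted):
    """Mean absolute relative error in percent (assumed definition of RE)."""
    return 100.0 * mean([abs(p - a) / a for a, p in zip(actual, predicted)])


def prediction_accuracy(actual, predicted):
    """Mean ratio of the predicted to the actual value (PA)."""
    return mean([p / a for a, p in zip(actual, predicted)])


# Hypothetical values; NOT taken from the paper.
actual = [1.0, 2.0, 4.0, 5.0]
predicted = [2.0, 2.0, 4.0, 5.0]

r2 = r_squared(actual, predicted)
re = relative_error(actual, predicted)
pa = prediction_accuracy(actual, predicted)
print(f"R^2 = {r2:.2f}, RE = {re:.1f}%, PA = {pa:.2f}")
```

Repeating this calculation over several random train-test splits and reporting the mean and dispersion of each metric gives the kind of robustness check referred to in the list.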

The proposed model is valuable in that it is simultaneously accurate, explainable, and consistent with scientific knowledge. The proposed method’s ability to provide interpretable and transparent results would allow engineers to better understand the factors that affect the deformation capacity of non-ductile RC shear walls and make informed design decisions. The use of the EBM to estimate deformation capacity would improve the reliability and efficiency of structural analysis and design processes, leading to safer and more cost-effective buildings.

References

Abdullah SA, Wallace JW (2019) Drift capacity of reinforced concrete structural walls with special boundary elements. ACI Struct J 116(1):183

ACI-369 (2017) Standard Requirements for Seismic Evaluation and Retrofit of Existing Concrete Buildings and Commentary (ACI 369-17). American Concrete Institute, Farmington Hills

Adadi A, Berrada M (2018) Peeking inside the black-box: a survey on explainable artificial intelligence (XAI). IEEE Access 6:52138–52160

Agarwal R, Melnick L, Frosst N, Zhang X, Lengerich B, Caruana R, Hinton GE (2021) Neural additive models: interpretable machine learning with neural nets. Adv Neural Inf Process Syst 34:4699–4711

Aladsani MA, Burton H, Abdullah SA, Wallace JW (2022) Explainable machine learning model for predicting drift capacity of reinforced concrete walls. ACI Struct J 119(3):191

Arnold C, Bolt B, Dreger D, Elsesser E, Eisner R, Holmes W, McGavin G, Theodoropoulos C (2006) FEMA 454: design for earthquakes: a manual for architects. Federal Emergency Management Agency, Washington

ASCE-41 (2017) Seismic evaluation and retrofit of existing buildings (ASCE/SEI 41-17). American Society of Civil Engineers. https://books.google.com.tr/books?id=HoyatAEACAAJ

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Breiman L, Friedman JH, Olshen RA, Stone CJ (2017) Classification and regression trees. Routledge, London

Chen T, Guestrin C (2016) XGBoost: a scalable tree boosting system. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pp 785–794

Chen Z, Tan S, Nori H, Inkpen K, Lou Y, Caruana R (2021) Using explainable boosting machines (EBMs) to detect common flaws in data. In: Joint European conference on machine learning and knowledge discovery in databases. Springer, pp 534–551

Corley W, Fiorato A, Oesterle R (1981) Structural walls. Spec Publ 72:77–132

Deger ZT, Basdogan C (2019) Empirical expressions for deformation capacity of reinforced concrete structural walls. ACI Struct J. https://doi.org/10.14359/51716806

Deger ZT, Taskin Kaya G (2022) Glass-box model representation of seismic failure mode prediction for conventional reinforced concrete shear walls. Neural Comput Appl 34:1–13

Deger ZT, Taskin G (2022) A novel GPR-based prediction model for cyclic backbone curves of reinforced concrete shear walls. Eng Struct 255:113874

Doshi-Velez F, Kim B (2017) Towards a rigorous science of interpretable machine learning. arXiv preprint arXiv:1702.08608

Douglas J, Aochi H (2008) A survey of techniques for predicting earthquake ground motions for engineering purposes. Surv Geophys 29(3):187–220

Farvashany FE, Foster SJ, Rangan BV (2008) Strength and deformation of high-strength concrete shearwalls. ACI Struct J 105(1):21

Feng D-C, Wang W-J, Mangalathu S, Taciroglu E (2021) Interpretable XGBoost-SHAP machine-learning model for shear strength prediction of squat RC walls. J Struct Eng 147(11):04021173

Friedman JH (2001) Greedy function approximation: a gradient boosting machine. Ann Stat 29:1189–1232

Grammatikou S, Biskinis D, Fardis MN (2015) Strength, deformation capacity and failure modes of RC walls under cyclic loading. Bull Earthq Eng 13(11):3277–3300

Hastie T, Tibshirani R (1987) Generalized additive models: some applications. J Am Stat Assoc 82(398):371–386

Hastie T, Tibshirani R, Friedman J (2009) Additive models, trees, and related methods. Springer, New York

Hastie T, Tibshirani R, Friedman JH (2009) The elements of statistical learning, vol 2. Data Mining, Inference, and Prediction. Springer, New York

Hirosawa M (1975) Past experimental results on reinforced concrete shear walls and analysis on them. Kenchiku Kenkyu Shiryo 6:33–34

Hube M, Marihuén A, Llera JC, Stojadinovic B (2014) Seismic behavior of slender reinforced concrete walls. Eng Struct 80:377–388

Johnson JM, Khoshgoftaar TM (2019) Survey on deep learning with class imbalance. J Big Data 6(1):1–54

Kailkhura B, Gallagher B, Kim S, Hiszpanski A, Han T (2019) Reliable and explainable machine-learning methods for accelerated material discovery. npj Comput Mater 5(1):1–9

Karpatne A, Kannan R, Kumar V (2022) Knowledge guided machine learning: accelerating discovery using scientific knowledge and data. CRC Press, Boca Raton

Kazaz İ, Gülkan P, Yakut A (2012) Deformation limits for structural walls with confined boundaries. Earthq Spectra 28(3):1019–1046

Kumar IE, Venkatasubramanian S, Scheidegger C, Friedler S (2020) Problems with Shapley-value-based explanations as feature importance measures. In: International conference on machine learning. PMLR, pp 5491–5500

Lazer D, Kennedy R, King G, Vespignani A (2014) The parable of Google Flu: traps in big data analysis. Science 343(6176):1203–1205

Lefas ID, Kotsovos MD, Ambraseys NN (1990) Behavior of reinforced concrete structural walls: strength, deformation characteristics, and failure mechanism. ACI Struct J 87(1):23–31

Linardatos P, Papastefanopoulos V, Kotsiantis S (2020) Explainable AI: a review of machine learning interpretability methods. Entropy 23(1):18

Lipton ZC (2018) The mythos of model interpretability: in machine learning, the concept of interpretability is both important and slippery. Queue 16(3):31–57

Lou Y, Caruana R, Gehrke J (2012) Intelligible models for classification and regression. In: Proceedings of the 18th ACM SIGKDD international conference on knowledge discovery and data mining, pp 150–158

Luo H, Paal SG (2022) Artificial intelligence-enhanced seismic response prediction of reinforced concrete frames. Adv Eng Inform 52:101568. https://doi.org/10.1016/j.aei.2022.101568

Mangalathu S, Jang H, Hwang S-H, Jeon J-S (2020) Data-driven machine-learning-based seismic failure mode identification of reinforced concrete shear walls. Eng Struct 208:110331

Massone LM, Wallace JW (2004) Load-deformation responses of slender reinforced concrete walls. ACI Struct J 101(1):103–113

McLean MW, Hooker G, Staicu A-M, Scheipl F, Ruppert D (2014) Functional generalized additive models. J Comput Gr Stat 23(1):249–269

Molnar C (2020) Interpretable machine learning. Lulu.com

Netrattana C, Taleb R, Watanabe H, Kono S, Mukai D, Tani M, Sakashita M (2017) Assessment of ultimate drift capacity of RC shear walls by key design parameters. Bull N Z Soc Earthq Eng 50(4):482–493

Nori H, Caruana R, Bu Z, Shen JH, Kulkarni J (2021) Accuracy, interpretability, and differential privacy via explainable boosting. In: International conference on machine learning. PMLR, pp 8227–8237

Nori H, Jenkins S, Koch P, Caruana R (2019) InterpretML: a unified framework for machine learning interpretability. arXiv preprint arXiv:1909.09223

Oesterle R, Fiorato A, Johal L, Carpenter J, Russell H, Corley W (1976) Earthquake resistant structural walls-tests of isolated walls. Research and Development Construction Technology Laboratories, Portland Cement Association, Washington

Park R (1989) Evaluation of ductility of structures and structural assemblages from laboratory testing. Bull N Z Soc Earthq Eng 22(3):155–166

Paulay T, Priestley M, Synge A (1982) Ductility in earthquake resisting squat shearwalls. J Proc 79:257–269

Ribeiro MT, Singh S, Guestrin C (2016) “Why should I trust you?” Explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pp 1135–1144

Rudin C (2019) Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell 1(5):206–215

Sarica A, Quattrone A, Quattrone A (2022) Explainable machine learning with pairwise interactions for the classification of Parkinson’s disease and Swedd from clinical and imaging features. Brain Imaging Behav 16:1–11

Sarica A, Quattrone A, Quattrone A (2021) Explainable boosting machine for predicting Alzheimer’s disease from MRI hippocampal subfields. In: International conference on brain informatics. Springer, pp 341–350

Selbst AD, Barocas S (2018) The intuitive appeal of explainable machines. Fordham L Rev 87:1085

Sittipunt C, Wood SL, Lukkunaprasit P, Pattararattanakul P (2001) Cyclic behavior of reinforced concrete structural walls with diagonal web reinforcement. ACI Struct J 98(4):554–562

Tasnimi A (2000) Strength and deformation of mid-rise shear walls under load reversal. Eng Struct 22(4):311–322

Thimoteo LM, Vellasco MM, Amaral J, Figueiredo K, Yokoyama CL, Marques E (2022) Explainable artificial intelligence for COVID-19 diagnosis through blood test variables. J Control Autom Electr Syst 33(2):625–644

Tokunaga R, Nakachi T (2012) Experimental study on edge confinement of reinforced concrete core walls. In: Fifteenth world conference on earthquake engineering, Lisbon, pp 1–5

Vallenas JM, Bertero VV, Popov EP (1979) Hysteretic behavior of reinforced concrete structural walls. NASA STI/Recon Technical Report N 80, 27533

Wallace JW, Massone LM, Bonelli P, Dragovich J, Lagos R, Lüders C, Moehle J (2012) Damage and implications for seismic design of RC structural wall buildings. Earthq Spectra 28(1–suppl1):281–299

Yang Z, Zhang A, Sudjianto A (2020) Enhancing explainability of neural networks through architecture constraints. IEEE Trans Neural Netw Learn Syst 32(6):2610–2621

Yang Z, Zhang A, Sudjianto A (2021) GAMI-NET: an explainable neural network based on generalized additive models with structured interactions. Pattern Recognit 120:108192

Zhang H, Cheng X, Li Y, Du X (2022) Prediction of failure modes, strength, and deformation capacity of RC shear walls through machine learning. J Build Eng 50:104145

Zhou S, Liu S, Kang Y, Cai J, Xie H, Zhang Q (2022) Physics-based machine learning method and the application to energy consumption prediction in tunneling construction. Adv Eng Inform 53:101642. https://doi.org/10.1016/j.aei.2022.101642

Zschech P, Weinzierl S, Hambauer N, Zilker S, Kraus M (2022) Gam(e) changer or not? An evaluation of interpretable machine learning models based on additive model constraints. arXiv preprint arXiv:2204.09123

Acknowledgements

This research has been supported by funds from the Scientific and Technological Research Council of Turkey (TUBITAK) under Project No. 218M535. Opinions, findings, and conclusions in this paper are those of the authors and do not necessarily represent those of the funding agency.

Funding

Open access funding provided by the Scientific and Technological Research Council of Türkiye (TÜBİTAK). Funding was provided by Türkiye Bilimsel ve Teknolojik Arastirma Kurumu (Grant Number 218M535).

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Deger, Z.T., Taskin, G. & Wallace, J.W. No more black-boxes: estimate deformation capacity of non-ductile RC shear walls based on generalized additive models. Bull Earthquake Eng (2024). https://doi.org/10.1007/s10518-024-01968-z

DOI: https://doi.org/10.1007/s10518-024-01968-z