Abstract

Model updating procedures based on experimental data are commonly used in case of historic buildings to identify numerical models that are subsequently employed to assess their structural behaviour. The reliability of these models is closely related to their ability to account for all the uncertainties that are involved in the knowledge process. In this regard, to handle these uncertainties and quantify their propagation, Bayesian inference is frequently employed being able to deal with the effects of parameter uncertainty, observation errors and model inadequacy. The computation of the posterior distribution through Bayesian inference needs–however–the evaluation of the likelihood function, which requires solving complex multi-dimensional integration problems. To bridge this shortcoming, the paper compares two Bayesian inference approaches to show how different approximations affect the results of simulated inference: a discrete approach for the likelihood computation in the Bayesian Model Updating (BMU) and a Monte Carlo likelihood-free method known as Approximate Bayesian Computation (ABC) are reported. As reference, the typology of historic masonry towers was considered by using their natural frequencies as experimental data for model updating. The two procedures provide very similar results supporting the validity of both methods despite ABC turns out to be a more flexible approach.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In recent decades, the scientific community has paid increasing interest in identifying appropriate computational models for historic masonry buildings, with the aim of evaluating their structural behaviour and performance under exceptional loads. Several modelling strategies are proposed in literature, from block-based models to continuum models, and a recent detailed review of these approaches and a possible classification for masonry structures is shown in D’Altri et al. (2020).

Because of the uncertainties that may affect the geometry of the building, the mechanical response of the masonry and the restraint conditions, the identification of reliable numerical models (i.e. model updating) still is a critical issue which necessarily involves the use of experimental data. It is therefore essential the development of procedures for formally quantifying the uncertainty and for updating the knowledge on unobserved quantities as experimental data are collected. Bayesian inference represents a possible solution; it is a well-known approach in civil engineering and its application to existing buildings for model updating is motivated by the requirement to solve an inverse-problem, i.e. inferring the unobserved parameters governing a particular observed physics, when different sources of uncertainties has led to collect noisy and incomplete data. Furthermore, since different parameter values can lead to the same observed physics the problem becomes ill-conditioned and ill-posed. In this scenario, Bayes' theorem can be employed for addressing inverse problems by using evidence to update the probability of each unknown quantity. As a result, the obtained probabilistic solution is able to describe a whole interval of plausible model parameters.

However, while the approach is adequately formalized from the theoretical point of view, in practice some difficulties still need to be handled.

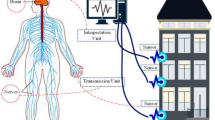

Firstly, the choice of experimental data and their acquisition procedure. Dynamic monitoring of the structural modal parameter under environmental vibration loading is widely recognized as a powerful procedure for investigating the evolution of structural health over time (Beck et al. 2001; Ubertini et al. 2018; Saisi et al. 2018; Standoli et al. 2021a, 2021b; Zini et al. 2022). The dynamic data provide information about the building global response, taking into account material properties as well as structural configuration, and variations in the dynamic response, compared to a reference configuration, can provide indication about damage detection (Pallarés et al. 2021). However, the need to adopt a limited number of sensors, or the necessity to employ no-contact techniques, can make these data unable of describing the structural complexity. Therefore, no experimental data is expected to provide an exact representation of how the system output behaves, as well as no model is expected to exactly represent the system input/output behaviour. Nevertheless, dynamic experimental data can be used to calibrate numerical models to describe not so much the single optimal parameters vector, as the family of all plausible values of model parameters based on the available data.

Secondly, the evaluation of the likelihood function in Bayesian inference. Indeed, despite Bayesian inference is often used in civil engineering for parameters estimation and model updating (Rocchetta et al. 2018; Huang et al. 2019; Pepi et al. 2020), in some cases the likelihood is analytically intractable, and an approximation is required. On the one hand, the likelihood can be computed by following a heuristic approach, using the deviousness of the probability distributions of measurement and modelling errors, when dealing with low-dimensional spaces and easy-to-evaluate distributions (Simoen et al. 2015). On the other hand, the difficulties in computing the likelihood function may be overtaken by using likelihood-free methods such as Approximate Bayesian Computation (ABC) (Tavarè et al. 1997; Pritchard et al. 1999). This latter solution is typically computationally intensive and often involves the employment of metamodels (Rutherford et al. 2005; Ren and Chen 2010; García-Macías et al. 2021; Teixeira et al. 2021) or the definition of empirical or synthetic likelihood functions (Mengersen et al. 2013; Wilkinson 2014; Price et al. 2018).

This paper aims to compare two Bayesian inference approaches for model updating by discussing a framework of realistic inference in case of inaccurate data. In particular, a discrete approach for the likelihood computation in the Bayesian Model Updating (BMU) and a Monte Carlo likelihood-free method are considered. The first, BMU, was proposed in Monchetti et al. (2022) based on Monte Carlo simulations producing an approximation of the continuous problem to a discrete one. The second, known as Approximate Bayesian Computation (ABC), was investigated in Kitahara et al. (2021), Feng et al. (2020). The case of a historic confined masonry tower is analysed as a reference study, and the natural periods are selected as observations employed to update the parameters of the numerical model.

The rest of the paper is organized as follows: Sect. 2 reports the related works. Section 3 outlines the main concepts of Bayesian inference and the two considered approaches are defined. The application of these approaches is shown in Sect. 4 by highlighting their advantages and limitations through discussion of the results. Section 5 closes this paper by reporting conclusions and possible future developments of this study.

2 Related works

The use of dynamic response data in the problem of updating a computational model using a Bayesian statistical framework was originally addressed in the late 1990s by Beck and Katafygiotis (1998). Their research has fostered in the following years a growing interest in the use of Bayesian inference for model updating in practical engineering applications and, at the same time, has been accompanied by an increasing development of computational modelling capabilities. Starting from these early studies, to date, a variety of Bayesian methods for model updating have been proposed in literature to obtain a family of plausible model parameters tuned based on the available experimental information with the aim of overcoming the drawbacks of the deterministic optimization procedure.

These approaches were initially applied to numerical case studies and, more recently, have been extended in the field of civil engineering to existing structures (e.g. Box and Tiao 1992; Beck et al. 2001; Beck 2010; Goller and Schuëller 2011; Arendt et al. 2012; Brynjarsdóttir and OʼHagan 2014; Cheung and Bansal 2017; Conde et al. 2018; Rocchetta et al. 2018; Huang et al. 2019; Pepi et al. 2020; Pepi et al. 2021). However, only a few contributions are available for masonry structures (e.g. Atamturktur et al. 2012; Beconcini et al. 2016; Campostrini et al. 2017; Conde et al. 2018; De Falco et al. 2018; Ierimonti et al. 2021).

In particular, Atamturktur et al. (2012) investigating the choir vaults of the Washington Cathedral employed a Bayesian approach in order to assess the sources of modelling error and to quantify their contribution to the overall structural response prediction. Beconcini et al. (2016) proposed a probabilistic reliability assessment of heritage buildings under seismic and wind loads based on Bayes’ theorem by discussing a practical application on a historical aqueduct in Italy. Campostrini et al. (2017), with the focus of the seismic vulnerability assessment at urban scale, proposed a probabilistic method based on a Bayesian approach, and applied it to a façade of a building structural unit in Italy. To investigate the causes that have originated damage in a masonry arch bridge, Conde et al. (2018) proposed an inverse analysis procedure based on the Bayesian approach formulating the damaged configuration as a parameter estimation problem. A comparison between two model updating procedures, one developed within a deterministic framework and the other based on a Bayesian probabilistic approach, was discussed by De Falco et al. (2018) for the model calibration of a masonry bridge in Italy. More recently, a transfer Bayesian learning methodology for structural health monitoring of an historic masonry palace has been developed in Ierimonti et al. (2021).

These studies show the effectiveness of the application of the Bayesian framework in the field of heritage structures with the purpose of model identification as well as damage detection. They also show how for historic constructions the updating process needs to be addressed by considering the (usual) scarce amount of information, therefore it becomes crucial to understand how limited data can affect model calibration. Recently Rocchetta et al. (2018), discussing a procedure for crack detection in mechanical components, outlined the importance of explicitly accounting for the relevant sources of uncertainties. They presented a comparison between different likelihood functions to improve the accuracy and robustness of the whole procedure.

A comprehensive classification of the various sources of uncertainty was originally proposed in Kennedy and O’Hagan (2001) where the model discrepancy was formally introduced as an uncertainty in the simulator prediction. The central role of the model discrepancy was then deepened in Brynjarsdóttir (2014). They showed that an analyst that does not account for model discrepancy may lead to biased and over-confident parameter estimates and predictions. Indeed, as reported in Beck (2010), since no model of the system is expected to give perfect predictions, it is important to explicitly quantify the uncertain prediction errors in the likelihood evaluation. However, despite in literature are proposed Bayesian methods based on the likelihood evaluation, most of them rely on strong assumptions of normality and independence to overcome the difficulties in writing down analytically the likelihood function. It is therefore becoming increasingly important to define a procedure allowing to easily take into account many different sources of uncertainty to provide a realistic description of the behaviour of the system. Observed data are often linked to mechanical properties through complex systems of differential equations, thus the introduction of the uncertainty produces very complex likelihood functions. Furthermore, each point-wise evaluation of such functions would require running finite element (FE) models to use its solution in complex integral evaluations.

To overcome these difficulties one may rely on likelihood-free methods such as Approximate Bayesian Computation (ABC) to bypass any likelihood definition. Recently, this issue has been attracting the attention of other research groups (among others, Chaudhuri et al. 2018; Feng et al. 2020; Kitahara et al. 2021). In particular, Chaudhuri et al. (2018) reviewed the empirical likelihood ABC methods investigated in literature and proposed an easy-to-use method applied to some illustrative examples. Feng et al. (2020) resorted to ABC methods, but they did not adopt a Bayesian perspective instead, they aimed at finding optimal values of the model parameters. More recently, Kitahara et al. (2021) developed a two-step ABC updating framework using dynamic response data, by using the adaptive Kriging model applied to a numerical example in order to reduce the computational effort. To the best of the authors’ knowledge, ABC methods have not yet been investigated in the model updating of existing buildings. A first attempt is provided in the present paper by overcoming the required computational effort through the definition of a metamodel, as shown in the following sections.

3 Bayesian inference

Bayesian inference is aimed to obtain the posterior probability density function \(p\left( {\overline{\theta }{|}D^{E} } \right)\), i.e. the distribution of the unknown quantities \(\left( {\overline{\theta }} \right)\) given knowledge of the data \(\left( {D^{E} } \right)\), by using Bayes’ theorem:

where \(p\left( {\overline{\theta }} \right)\) denotes the prior probability of the unknown quantities, and \(p\left( {D^{E} {|}\overline{\theta }} \right)\) is the probability of the data given the unknown quantities which is proportional to the likelihood. The denominator is a normalizing factor necessary to ensure that the integral of the posterior distribution equals one (Box and Tiao 1992). The prior distribution allows including the expert judgment in the analysis, and can be interpreted as a measure of relative plausibility of the initial hypothesis. The likelihood function results from the probabilistic model assumed for the data, and quantifies how likely are certain values of \(\overline{\theta }\) in the light of the observed data. The computation of the normalizing constant in Eq. (1) may be challenging, except for the special case of conjugacy, i.e. when the posterior distribution follows the same parametric form as the prior distribution (Gelman et al. (2013)). To solve this problem, a common strategy is to resort to Bayesian simulated inference, in particular to Monte Carlo (MC) and/or Markov Chain Monte Carlo (MCMC) methods (Robert and Casella (2013)). The computation of posterior quantities becomes even more complex when the likelihood function is intractable, i.e. its analytical form is elusive or it is computationally demanding to evaluate. The definition of a tractable likelihood function often requires simplification of the reality based on strong assumptions of independence and normality of the involved random variables. In general terms, it should be considered a numerical mechanical model M which reproduces the observed physical phenomenon. It requires, as an input, the vector of unknowns \(\overline{\theta } = \left( {\theta ,X} \right) \in {\mathbb{R}}^{m + n}\). It is characterized by m independent unknown random parameters \(\theta = (\theta_{1} , \ldots ,\theta_{m}\)), and n latent variables \(X = (X_{1} , \ldots ,X_{n}\)). Note that random parameters are unknown and unobservable characteristics, while latent variables appear as unknown but fixed quantities that are only observable in principle, not in practice.

In this framework, the experimental data represent observable random variables whose generative process can be reproduced through a numerical model, the so-called “simulator”. They, as reported in Kennedy and O’Hagan (2001); Arendt et al. (2012); Brynjarsdóttir (2014), can be expressed according to the following mathematical formulation:

where \({\text{D}}^{{\text{E}}} = \left( {{\text{D}}_{1}^{{\text{E}}} , \ldots ,{\text{D}}_{{\text{k}}}^{{\text{E}}} } \right)\) is the vector of k random variables representing experimental data, \({\text{d}}^{{\text{M}}} \left( {\overline{\theta }} \right)\) denotes the outputs of the computer model, i.e. the simulator, \({\text{b}}\) is a bias term, and \(\epsilon\) represents the random measurement error. Note that the input of the simulator is the vector of unknowns \(\overline{\theta } = \left( {\theta ,X} \right)\). Therefore, given a certain value of \(\overline{\theta }\), Eq. (2), combines two sources of uncertainties (Kennedy and O’Hagan (2001): the model inadequacy, i.e. the difference between the simulator and the real behaviour of the system, and the observation error. These terms represent the discrepancy between the reality and its approximations obtained from the simulator and from the acquisition of experimental data, respectively. From Eq. (2), it is apparent that \({\text{D}}^{{\text{E}}}\) is a random vector whose randomness is induced by the randomness of the observation error and the bias term (given certain values of \({\overline{\theta }}\)). This random vector has a probability density function, \(p\left( {D^{E} |\overline{\theta }} \right)\), that can be obtained as the convolution of two probability distributions according to the total probability theorem:

where, \(D = d^{M} \left( {\overline{\theta }} \right) + b\) is the actual behaviour of the system and, given \(D\), the values of the experimental data depend only on the measurement errors, being conditionally independent from \(\overline{\theta }\).

The two probability density functions (PDFs) \(p\left( {D^{E} |D} \right)\) and \(p\left( {D|\overline{\theta }} \right)\) derive from the distributional assumptions on \(\epsilon\) and \({\text{b}}\). It follows that the way in which the probability distributions for these two sources of uncertainty is specified affects the easiness of computation of the integral in Eq. (3). Alternatively, two additional hypotheses can be considered: (i) the object of the inference procedure is the vector of parameters (\(\theta )\) and, (ii) the bias term is equal to zero because the whole discrepancy between the output of the model and the real behaviour of the system may be led back to latent random variables \(X\). In this simplified scenario, the likelihood function is thus modelled by considering the PDF of the observation error.

Given the posterior distribution for \(\overline{\theta }\), the posterior distribution for θ is provided by marginalizing over \(X\), as shown in the following equation:

where \(c = \iint {p\left( \theta \right)p\left( x \right)p\left( {D^{E} |\theta ,x} \right)dxd\theta }\) is the normalizing constant and \(L\left( {\theta ,D^{E} } \right) = \smallint p\left( x \right)p\left( {D^{E} |\theta ,x} \right)dx\) is the likelihood. Note that both the computation of \(c\) and \(L\left( {\theta ,D^{E} } \right)\) require numerical approximations and may be challenging. In particular, multiple pointwise evaluation of \(L\left( {\theta ,D^{E} } \right)\) would be demanding because also the evaluation of \(p\left( {D^{E} |\theta ,x} \right)\) itself may require numerical approximation of the integral in Eq. (3) based on multiple runs of FE model. In this framework, the computational cost of these evaluations often makes the implementation of standard MCMC methods prohibitive and requires novel likelihood-free approaches (Huang et al. (2019)).

Hereinafter, the solution of Eq. (4) is obtained following two approaches which differ in the computation of \(L\left( {\theta ,D^{E} } \right)\): the first, a BMU approach, is based on a discrete approximation of the likelihood and the second, an ABC method, overcomes the problem of computing the likelihood through a likelihood-free approach. Their applicability is discussed next (in Sect. 4).

3.1 Bayesian model updating (BMU): a discrete approximation of likelihood

Model updating allows the identification of the unknown system properties whenever new information is acquired. Different techniques are proposed in the literature to compute the high-dimensional integrals which usually are involved in the definition of the posterior distributions. For small dimensional problems, when the number of involved latent variables and variables to be inferred is limited, their continuum joint-space can be discretized and the integrals involved in Eq. (4) can be solved via numerical solution. This approach is proposed in Monchetti et al. (2022) where the large number of required simulations necessary to reproduce the continuum joint-space is decreased by using Latin Hypercube sampling technique. Note that this procedure is a simplification of the problem which is applied when the dimension of the vector of unknowns is limited. Moreover, this approach is feasible only when one assumes known and tractable PDFs for \(\mathrm{b}\) and \(\epsilon\), otherwise the evaluation of the integral in Eq. (3) would become too challenging. However, in this simplified scenario, as discussed in Sect. 4, the aim is to obtain a prompt solution for the Bayesian inference, by taking the following actions:

To sum up, the discrete approximation of the likelihood function is workable when (i) a parametric setting of the errors and the latent variables is available and, (ii) the continuum joint-space of the parameters is simple enough to solve the integral \(L\left( {\theta ,D^{E} } \right)\) (i.e. Equation (4)) via numerical solutions. It is worth noticing that assuming the numerical model as perfect (\(b = 0\), with probability 1) and that there are not observation errors (\(\epsilon = 0\), with probability 1), the probability in Eq. (3) is equal to 1 only when \(D^{E}\) is exactly equal to the model output with \(\overline{\theta }\) given as an input. The posterior distribution thus becomes a point of mass over the exact solution of the inverse problem. This particular condition underlines that, even an exact solution to the deterministic optimization problem is reliable only when any discrepancies from the numerical model is completely impossible. In summary, the proposed BMU shows the benefit of not needing the use of metamodels but, as a deficiency, the number of involved variables to be inferred must necessarily be limited.

3.2 Approximate Bayesian computation (ABC): a likelihood-free approach

ABC (Sisson et al. (2018)) is a class of likelihood-free methods for drawing posterior inference when the likelihood function is unavailable, intractable or computationally demanding to be computed. The key idea of this method traces back to Rubin (1984) and is to convert samples from the prior distribution into samples from the posterior. The only requirement is the ability to produce samples from a simulator, beyond that from the prior. The method is based on the comparison between observetions and data produced by the simulator, the so-called pseudo-data,\({D}^{S}\). In particular, the primal ABC algorithm (Tavaré et al. 1997; Pritchard et al. 1999) proceeds by: (i) drawing \(N\) parameter proposals \(\theta_{1} , \ldots ,\theta_{N}\) from the prior distribution, (ii) giving each parameter proposal as input to the simulator to get \(N\) pseudo-dataset \(D_{1}^{S} , \ldots ,D_{N}^{S}\) and, (iii) accepting only parameters \(\theta_{j}\) such that \(D_{j}^{S} = D^{E}\).

The described procedure returns a sample from the exact posterior distribution. However, the sampling scheme described above may be very inefficient with high-dimensional data, or infeasible when dealing with continuous data. Thus, the exact equality constraint at the final step is usually relaxed by introducing a distance function, \(d\left( {D^{S} ,D^{E} } \right)\), and a positive threshold, \(\delta\).

The resulting algorithm can be summarized as follows:

The output of this algorithm is a sample from the following approximate posterior distribution:

where \(d\left( {D^{S} ,D^{E} } \right)\) and \(\delta\) are the two sources of approximation. Note that as long as \(d\left( {D^{S} ,D^{E} } \right)\) is a proper distance function, as \(\delta\) goes to zero the approximate posterior distribution converges to the true posterior distribution. The reader is referred to Sisson et al. (2018) for further details on the method.

This approach avoids the analytical (or numerical) evaluation of \(p\left( {D^{E} |\theta } \right)\) allowing to specify whatever probability distribution for each of the considered sources of uncertainty. Furthermore, many latent variables can be introduced in the model to take into account all the sources of uncertainty, thus making the simulator as close as possible to the reality. However, realistic simulators are often computationally demanding, and Algorithm 2 requires a huge number of simulations to get an adequate number of accepted parameter proposals. Hence, a possible strategy to reduce the computational burden is to approximate the simulator through an emulator, i.e. a metamodel. Note also that the procedure reported in Algorithm 2 represents the primal ABC algorithm, but more sophisticated sampling schemes can be implemented to improve the efficiency of the method (e.g. Sisson et al. (2018)).

3.3 Experiment

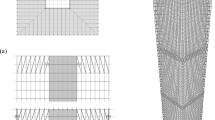

In this section, as illustrative example, a confined medieval masonry tower is considered to make a comparison between the two approximate Bayesian inference BMU and ABC approaches previously introduced. The considered tower has a quite regular geometry (see Fig. 1): the height is about 38 m and the base cross-section sizes 6.8 × 6.9 m which gradually narrows along the height. Geometric of the tower was acquired combining terrestrial laser scanning techniques and traditional surveys. The walls are constituted by a multi-leaf stone masonry whose thickness ranges from 2.3 to 1.5 m. The internal and external faces of the walls are made with a soft stone masonry, whereas the internal core (despite it is not visible) is likely composed of heterogeneous stone blocks tied by a good mortar. At the lower levels the tower is integrated to an urban context built in the same or later period. This characteristic architectural context interacts with the tower and affects its dynamic behaviour by introducing some complexities in the model identification. The effective height of the tower, i.e. the length of the portion of the tower that is free from the restraint offered by adjacent buildings, strongly affects the estimation of the natural periods as discussed in Bartoli et al. (2017a); Bartoli et al. (2019). However, its determination may not be straightforward since it involves the quality of the restraints provided by the adjacent buildings and this parameter is usually uncertain (additional information on this parameter could be provided by adding as information the mode shapes).

Available experimental data for this tower are the first two natural periods along the two main directions; they are \(T_{1} = 0.73 s\) and \(T_{2} = 0.60 s\) (Pieraccini 2017).

As shown in Fig. 1, a three dimensional FE model is built to represent the masonry tower and to reproduce its dynamic behaviour. Even though the numerical model is always a rough simplification of the reality, it is essential to understand the system behaviour and to evaluate the effect of the input parameters on the structure output. Indeed, there are quantities usually considered as known and fixed despite their uncertainty (e.g. some geometric data or the restraint conditions offered by the soil) and others whose uncertainty is directly modelled through probability function (e.g. the lateral buildings interaction and the mechanical properties of the masonry). The uncertainty around these parameters fosters the discrepancy between the model output and the reality and it is taken into account in the bias term \(b\). However, some of these variables may be explicitly modelled as latent variables allowing the definition of their posterior distributions. In such a case, as described in Sect. 3, to get the posterior distribution of the unobservable variables it is needed to marginalize with respect to the all the latent variables considered in the model. More in detail, by considering the specific application herein introduced, the elastic modulus (\(E\)) of the masonry and the effective height (\(H\)) of the tower (i.e. the height of the unrestrained part of the tower, Bartoli et al. 2017b) were chosen to represent the vector collecting the unknowns. In particular, the effective height is considered as the factor determining the discrepancy from the simulator and the reality. It is explicitly modelled as a latent variable, but its posterior distribution is not evaluated.

Looking at Eq. (4), \(\theta\) represents the elastic modulus of the masonry (\(E\)) and \(X\) the effective height (\(H\)). For these unknown quantities the chosen probability distributions are shown in Fig. 2 and Fig. 3. Note that, the prior probability distribution of the elastic modulus of the masonry is selected according to the reference values reported in NTC (2018) and CNR (2013) and a sensitivity analyses on the effect of different prior distributions on the posterior ones is reported in Monchetti et al. (2022).

Figure 2 shows the prior distribution of the elastic modulus of the masonry and the measurement uncertainty of the experimental observations. Note that the elastic modulus of the masonry is hypothesized as homogeneous along the height of the tower as a lognormal distribution with standard deviation equal to 273.98 MPa and mean value 1600 MPa. For both the natural periods the measurement error is considered as a zero mean Gaussian distribution with standard deviation equal to 0.01 s. Figure 3 shows the latent variables along the two main directions of the tower (North–South and East–West). In the North–South direction the effective height is simulated through a lognormal distribution with standard deviation corresponding to approximatively 1 m and mean equal to 25 m. In the East–West direction the urban context is different and, keeping standard deviation 0.98 m, the mean value is equal to 23 m.

In the following, BMU and ABC methods are applied and compared by considering the same probability distributions.

3.4 Bayesian model updating (BMU): application

BMU method has required the evaluation of the likelihood function and the run of the FE model, following the Algorithm 1 previously introduced. In this case, the limited number of parameters to be inferred allows to discretize the continuum joint-space of \(\theta\) and \(X\). In this manner, the integrals which define the likelihood function and the normalizing factor are solved via numerical solution. The high number of required simulations is reduced by using Latin Hypercube Sampling (LHS) technique to \(N=40\) thousand runs of the FE model. The results, in terms of posterior distributions of the elastic modulus are discussed next.

3.5 Approximate Bayesian computation (ABC): application

The ABC procedure is implemented as described in Algorithm 2. In principle, the simulator should be a function which (i) takes as input the elastic modulus of the masonry, \(\theta = E\); (ii) imputes the values of the latent variables (\(X = [H_{1} ,H_{2} ]\), the effective heights); (iii) run the FE model to compute the first two natural periods of the tower; (iv) returns the simulated data with an additive error of measurement. All the random variables are sampled from the probability distributions illustrated in Fig. 2 and 3. However, the computational burden of the FE model does not allow to run the high number of simulations necessary in the ABC method. Thus, a third-order cubic Response Surface Method (RSM) is used to build a metamodel or emulator (Myers et al. 2016) in order to reproduce the k-th natural period of the tower; see Eq. (6):

The polynomial metamodel is built by obtaining the coefficients \({p}_{ij,k}\) (Table 1) through the least-square estimator (Han and Zhang 2012); in this way the trend of the FE model performance is reproduced (Fig. 4) and \(\omega\) represents the error. This latter term, herein negligible, could be treated as a statistical error normally distributed with zero mean.

In order to assess the goodness of fit of the response surface in Eq. (6), the coefficient of determination is evaluated. For the present application it approaches 1 for both the two natural periods considered.

For the sake of comparison with BMU, the two posterior distributions are computed: the one based only on the first observed period, and the one based on the first and the second observed periods. The two distributions have been approximated running two independent ABC procedures. Recalling that the quality of the approximation of the posterior distributions provided by ABC algorithms depends on the tolerance threshold \(\delta\), the sensitivity of the results to different choices were also investigated. Each value of \(\delta\) was computed using the \(\alpha\)-quantile method (Beaumont et al. (2002)). Table 2 displays the value of the threshold and the number of retained simulations corresponding to three different choices of \(\alpha\). The absolute value of the difference between observed and simulated data was employed as distance metric when using only the first period. When considering both periods the Euclidean distance was employed. Figure 5 show the posterior distributions. All the results are obtained with \(N=10\) millions of iterations.

4 Results and discussion

The results of the application of the two BMU and ABC Algorithms introduced in Sect. 3 are shown in Fig. 6 where the posterior distributions of the elastic masonry (\(E\)) of the masonry are compared to the prior ones. The results of BMU are represented through a green line and the results of ABC with a yellow one. On the left, the figure shows the results of the two algorithms in the case of using the first observed period and, on the right, the figure represents the results in the case of using both the first and the second observed periods. Figure 6, as well as Tables 3 and 4, show that BMU is comparable to ABC in terms of median value and interquartile range (IQR). All the posterior distributions give evidence that the value of the elastic modulus is greater than what is suggested only by prior knowledge. Moreover, the introduction of the second period produces a further increase in the median value.

As already noted, the quality of the approximation of the ABC’s results depends both on the value of the tolerance threshold, \(\updelta\), and on the goodness of fit of the metamodel. The sensitivity of the results to different tolerance thresholds was investigated (see Table 2). Generally speaking, the lower is the threshold, the higher is the quality of the approximation. Looking at Fig. 5 and Table 2 it is possible to observe that the posterior distributions corresponding to lower \(\delta\)-values tend to be slightly less diffuse. On the other hand, a small threshold corresponds to a lower size of the sample involved in the computation of posterior quantities. In the case at hand, the results are only slightly affected by \(\updelta\) (see Table 2 and Fig. 5).

It is worth noting that the results of the two methods are very similar in terms of shape of the posterior distributions (see Fig. 6). This fact gives support to the validity of both the approaches and guarantees that the metamodel produces a good approximation of the FE model. Indeed, BMU does not involve any metamodel but rather uses simulations outputted by the FE model. Thus, ABC results that resemble BMU results give strong evidence that the error induced by the metamodel, i.e. discrepancies due to the term \(\upomega\) in Eq. (6), is negligible.

Both the methods are sensitive to the choice of the prior distributions and the parametric form of the bias, \(b\), and measurement error \(\epsilon\). An analysis of this sensitivity was performed for the BMU approach in Monchetti et al. (2022), but results are extendable to the ABC approach.

All the comparisons between the two methods are effective only because the number of parameters to be inferred is restricted and the model is simple enough to allow the computation via numerical solution of the integrals involved in the BMU algorithm. In more complex cases, BMU becomes infeasible, whereas ABC continues to be a very flexible solution. Indeed, even when introducing a greater number of latent variables in the model, ABC only requires the ability of imputing their values when running the simulator. This means also that one can assume whatever PDFs for these random variables, since no analytical evaluations of that functions are needed.

5 Conclusions and future research

This paper was aimed at investigating strengths and weaknesses of two different Bayesian methods: BMU and ABC. The comparison was carried out by considering a representative case study dealing with the structural typology of confined historic masonry towers. Both BMU and ABC were implemented to infer the posterior distribution of the elastic modulus by using natural period data. The results show the effectiveness of the two procedures that have led to very similar results.

Both methods provide approximate posterior distributions, but they have different sources of approximations. BMU relies on a numerical approximation of the integrals involved in the computation of the likelihood function and of the normalizing constant of the posterior distribution. This approximation is achieved through a discretization of the space on which the unknown quantities are defined and the more finely is the discretization the smaller is the approximation error. ABC avoids the computation of that integrals relying on a Monte Carlo approximation of the likelihood function based on a rejection scheme. Therefore, the quality of the posterior distribution depends on the value of the chosen threshold, \(\delta\). Both the methods require multiple evaluations of the FE model and a very low value of the threshold, as well as a very fine discretization, can be achieved at the cost of a high number of simulations. However, ABC algorithms require a huge number of iterations to get a proper sample size. Accordingly, to reduce the computational cost, the simulator involving the FE model was approximated through a statistical model: the emulator. This solution introduced a further source of approximation in the ABC procedure but the results show that (in the case at hand), the ABC procedure is only slightly affected by these sources of uncertainty as it leads to results consistent with those of the BMU approach.

It is worth noting that the comparison between the two methods is feasible in this framework only because the number of parameters and latent variables is restricted. Furthermore, the PDF assumed for all the unknown quantities are characterized by the easiness of evaluation. Otherwise, numerical solutions of the integrals involved in the BMU algorithm would be infeasible. More sophisticated models may be developed in order to reduce the discrepancy between the real behaviour of the system and the observations. One solution would be involving statistical models in the formalization of the generative process of data. On the one hand, it would allow to introduce additional sources of uncertainty besides the additive errors; on the other hand, replacing mathematical models (such as the FEM considered so far) with complex stochastic relationships may make the likelihood function more complex. This latter aspect needs to involve specific Bayesian simulated inference approaches and an example is provided by Approximate Bayesian Computation.

Data availability statement

Some or all data, models, or code that support the findings of this study are available from the corresponding author upon reasonable request.

References

Arendt PD, Apley DW, Chen W (2012) Quantification of model uncertainty: calibration, model discrepancy, and identifiability. J Mech Design 134(10):100908

Atamturktur S, Hemez FM, Laman JA (2012) Uncertainty quantification in model verification and validation as applied to large scale historic masonry monuments. Eng Struct 43:221–234. https://doi.org/10.1016/j.engstruct.2012.05.027

Bartoli G, Betti M, Monchetti S (2017a) Seismic risk assessment of historic masonry towers: comparison of four case studies. ASCE J Perform Constr Facil 31(5):04017039–04017041. https://doi.org/10.1061/(ASCE)CF.1943-5509.0001039

Bartoli G, Betti M, Marra AM, Monchetti S (2017b) Semiempirical formulations for estimating the main frequency of slender masonry towers. ASCE J Perform Constr Facil 31(4):04017025–04017031. https://doi.org/10.1061/(ASCE)CF.1943-5509.0001017

Bartoli G, Betti M, Marra AM, Monchetti S (2019) A Bayesian model updating framework for robust seismic fragility analysis of non-isolated historic masonry towers. Philosop Transact Royal Soc A 377:20190024. https://doi.org/10.1098/rsta.2019.0024

Beaumont MA, Wenyang Z, Balding DJ (2002) Approximate Bayesian computation in population genetics. Genetics 162(4):2025–2035. https://doi.org/10.1093/genetics/162.4.2025

Beck JL (2010) Bayesian system identification based on probability logic. Structural Control Health Monitoring 17(7):825–847. https://doi.org/10.1002/stc.424

Beck JL, Katafygiotis LS (1998) Updating models and their uncertainties I: Bayesian statistical framework. J Eng Mech 124(4):455–461

Beck JL, Au S, Vanik MW (2001) Monitoring Structural Health Using a Probabilistic Measure. Comput-Aided Civil Infrastruct Eng 16:1–11. https://doi.org/10.1111/0885-9507.00209

Beconcini ML, Croce P, Marsili F, Muzzi M, Rosso E (2016) Probabilistic reliability assessment of a heritage structure under horizontal loads. Probab Eng Mech 45:198–211. https://doi.org/10.1016/j.probengmech.2016.01.001

Box GEP, Tiao GC (1992) Bayesian Inference in Statistical Analysis. Wiley, NY

Brynjarsdóttir J (2014) Learning about physical parameters: the importance of model discrepancy. Inverse Prob 30(11):114007

Campostrini GP, Taffarel S, Bettiol G, Valluzzi MR, Da Porto F, Modena C (2017) A Bayesian approach to rapid seismic vulnerability assessment at urban scale. Int J Archit Heritage 12(1):36–46. https://doi.org/10.1080/15583058.2017.1370506

Chaudhuri S, Ghosh S, Nott D., Pham KC (2018) An easy-to-use empirical likelihood ABC method. arXiv preprint arXiv: 1810.01675. https://doi.org/10.48550/arXiv.1810.01675

Cheung SH, Bansal S (2017) A new Gibbs sampling based algorithm for Bayesian model updating with incomplete complex modal data. Mech Syst Signal Process 92:156–172. https://doi.org/10.1016/j.ymssp.2017.01.015

CNR (2013) CNR-DT 212/2013 – Guide for the probabilistic assessment of the seismic safety of existing buildings. Consiglio Nazionale delle Ricerche, Roma. https://www.cnr.it/en/node/2643 (In Italian).

Conde B, Eguìa P, Stavroulakis GE, Granada E (2018) Parameter identification for damaged condition investigation on masonry arch bridges using a Bayesian approach. Eng Struct 172:275–285. https://doi.org/10.1016/j.engstruct.2018.06.040

D’Altri AM, Sarhosis V, Milani G, Rots J, Cattari S, Lagomarsino S, Sacco E, Tralli A, Castellazzi G, de Miranda S (2020) Modeling strategies for the computational analysis of unreinforced masonry structures: review and classification. Arch Comput Methods Eng 27:1153–1185. https://doi.org/10.1007/s11831-019-09351-x

De Falco A, Girardi M, Pellegrini D, Robol L, Sevieri G (2018) Model parameter estimation using Bayesian and deterministic approaches: the case study of the Maddalena Bridge. Procedia Struct Int 11:210–217. https://doi.org/10.1016/j.prostr.2018.11.028

Feng Z, Lin Y, Wang W, Hua X, Chen Z (2020) Probabilistic updating of structural models for damage assessment using approximate bayesian computation. Sensors 20(11):3197. https://doi.org/10.3390/s20113197

García-Macías E, Ierimonti L, Vevanzi I, Ubertini F (2021) An innovative methodology for online surrogate-based model updating of historic buildings using monitoring data. Int J Archit Heritage 15(1):92–112. https://doi.org/10.1080/15583058.2019.1668495

Gelman A, Carlin JB, Stern HS, Rubin DB (2013) Bayesian data analysis (3rd Edition). Chapman and Hall/CRC, NY. https://doi.org/10.1201/b16018

Goller B, Schuëller GI (2011) Investigation of model uncertainties in Bayesian structural model updating. J Sound Vib 330(25):6122–6136. https://doi.org/10.1016/j.jsv.2011.07.036

Han ZH, Zhang KS (2012) Surrogate-based optimization. In: Roeva O (ed) Real-world applications of genetic algorithms. Rijeka, Croatia, IntechOpen, pp 343–362

Huang Y, Shao C, Wu B, Beck JL, Li H (2019) State-of-the-art review on Bayesian inference in structural system identification and damage assessment. Adv Struct Eng 22(6):1329–1351. https://doi.org/10.1177/1369433218811540

Ierimonti L, Cavalagli N, Venanzi I, Garcìa-Macìas E, Ubertini F (2021) A transfer Bayesian learning methodology for structural health monitoring of monumental structures. Eng Struct 247:113089

Katafygiotis LS, Beck JL (1998) Updating models and their uncertainties II: model identifiability. J Eng Mech 124(4):463–467

Kennedy MC, O’Hagan A (2001) Bayesian calibration of computer models. Journal of the Royal Statistical Society B 63(3):425–465. https://doi.org/10.1111/1467-9868.00294

Kitahara M, Bi S, Broggi M, Beer M (2021) Bayesian model updating in time domain with metamodel-based reliability method. ASCE-ASME J Risk and Uncertainty Eng Syst, Part a: Civil Eng 7(3):04021030. https://doi.org/10.1061/AJRUA6.0001149

Mengersen KL, Pudlo P, Robert CP (2013) Bayesian computation via empirical likelihood. Proc Natl Acad Sci 110(4):1321–1326. https://doi.org/10.1073/pnas.1208827110

Monchetti S, Viscardi C, Betti M, Bartoli G (2022) Bayesian-based model updating using natural frequency data for historic masonry towers. Probabilistic Eng Mech 70:103337

Myers RH, Montgomery DC, Anderson-Cook CM (2016) Response surface methodology: Process and product optimization using designed experiments, 4th edn. Wiley, New York

NTC (2018) Nuove Norme Tecniche per le Costruzioni. D.M. 17/01/2018 del Ministero delle Infrastrutture e dei Trasporti. Gazzetta Ufficiale 20/02/2018, No. 42 (In Italian).

Pallarés FJ, Betti M, Bartoli G, Pallarés L (2021) Structural health monitoring (SHM) and Nondestructive testing (NDT) of slender masonry structures: A practical review. Construct Build Mater 279:123768

Pepi C, Gioffrè M, Grigoriu MD (2020) Bayesian inference for parameters estimation using experimental data. Probabil Eng Mech 60:103025

Pepi C, Cavalagli N, Gusella V, Gioffrè M (2021) An integrated approach for the numerical modeling of severely damaged historic structures: Application to a masonry bridge. Adv Eng Soft 151:102935

Pieraccini M (2017) Extensive measurement campaign using interferometric radar. ASCE J Perform Constr Facil 31(3):04016113. https://doi.org/10.1061/(ASCE)CF.1943-5509.0000987

Price LF, Drovandi CC, Lee A, Nott DJ (2018) Bayesian synthetic likelihood. J Comput Graph Stat 27(1):1–11. https://doi.org/10.1080/10618600.2017.1302882

Pritchard JK, Seielstad MT, Perez-Lezaun A, Feldman MW (1999) Population growth of human y chromosomes: a study of Y chromosome microsatellites. Mol Biol Evol 16(12):1791–1798. https://doi.org/10.1093/oxfordjournals.molbev.a026091

Ren W, Chen H (2010) Finite element model updating in structural dynamics by using the response surface method. Eng Struct 32(8):2455–2465. https://doi.org/10.1016/j.engstruct.2010.04.019

Robert CP, Casella G (2013) Monte Carlo Statistical Methods, 2nd edn. Springer, New York, NY

Rocchetta R, Broggi M, Huchet Q, Patelli E (2018) On-line Bayesian model updating for structural health monitoring. Mech Syst Signal Process 103:174–195. https://doi.org/10.1016/j.ymssp.2017.10.015

Rubin DB (1984) Bayesianly justifiable and relevant frequency calculations for the applied statistician. Ann Stat 12(4):1151–1172. https://doi.org/10.1214/aos/1176346785

Rutherford AC, Inman DJ, Park G, Hemez FM (2005) Use of response surface metamodels for identification of stiffness and damping coefficients in a simple dynamic system. Shock Vib 12:317–331. https://doi.org/10.1155/2005/484283

Saisi A, Gentile C, Ruccolo A (2018) Continuous monitoring of a challenging heritage tower in Monza, Italy. J Civ Struct Heal Monit 8:77–90. https://doi.org/10.1007/s13349-017-0260-5

Simoen E, De Roeck G, Lombaert G (2015) Dealing with uncertainty in model updating for damage assessment: A review. Mech Syst Signal Process 56–57:123–149. https://doi.org/10.1016/j.ymssp.2014.11.001

Sisson SA, Fan Y, Beaumont M (2018) Handbook of Approximate Bayesian Computation. Chapman and Hall/CRC, New York

Standoli G, Giordano E, Milani G, Clementi F (2021a) Model Updating of Historical Belfries Based on Oma Identification Techniques. Int J Archit Heritage 15(1):132–156. https://doi.org/10.1080/15583058.2020.1723735

Standoli G, Salachoris GP, Masciotta MG, Clementi F (2021b) Modal-based FE model updating via genetic algorithms: Exploiting artificial intelligence to build realistic numerical models of historical structures. Construct Build Mater 303:124393

Tavaré S, Balding DJ, Griffiths RC, Donnelly P (1997) Inferring coalescence times from DNA sequence data. Genetics 145(2):505–518. https://doi.org/10.1093/genetics/145.2.505

Teixeira R, Nogal M, O’Connor A (2021) Adaptive approaches in metamodel-based reliability analysis: A review. Struct Saf 89:102019

Ubertini F, Cavalagli N, Kita A, Comanducci G (2018) Assessment of a monumental masonry bell-tower after 2016 Central Italy seismic sequence by long-term SHM. Bull Earthq Eng 16:775–801. https://doi.org/10.1007/s10518-017-0222-7

Wilkinson R (2014) Accelerating ABC methods using Gaussian processes. In: Kaski, S., Corander, J. (eds) Proceedings of the Seventeenth International Conference on Artificial Intelligence and Statistics. Reykjavik, Iceland.

Zini G, Betti M, Bartoli G (2022) A quality-based automated procedure for operational modal analysis. Mech Syst Signal Process 164:108173

Funding

Open access funding provided by Università Politecnica delle Marche within the CRUI-CARE Agreement. The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Monchetti, S., Viscardi, C., Betti, M. et al. Comparison between Bayesian updating and approximate Bayesian computation for model identification of masonry towers through dynamic data. Bull Earthquake Eng 22, 3491–3509 (2024). https://doi.org/10.1007/s10518-023-01670-6

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10518-023-01670-6