Abstract

The emergence of 6G networks initiates significant transformations in the communication technology landscape. The integration of quantum computing (QC) with 6G networks promises an array of benefits, particularly in secure communication, yet adapting QC to 6G requires rigorous attention to numerous critical variables. This study aims to identify key variables in secure quantum communication (SQC) in 6G and develop a model for predicting the success probability of 6G-SQC projects. To achieve these objectives, we identified key 6G-SQC variables from existing literature and collected training data through a questionnaire survey. We then analyzed these variables using an optimization model, i.e., a Genetic Algorithm (GA), with two different prediction methods: the Naïve Bayes Classifier (NBC) and Logistic Regression (LR). The results of the success probability prediction models indicate that as 6G-SQC matures, project success probability significantly increases and costs are notably reduced. Furthermore, the best fitness rankings for each 6G-SQC project variable determined using NBC and LR indicated a strong positive correlation (rs = 0.895). The t-test results (t = 0.752, p = 0.502 > 0.05) show no significant difference between the rankings calculated using the two prediction models (NBC and LR). The results reveal that the developed success probability prediction model, based on 15 identified 6G-SQC project variables, highlights the areas where practitioners need to focus more to facilitate the cost-effective and successful implementation of 6G-SQC projects.

1 Introduction

The dawn of the sixth generation of wireless technology, or 6G, is poised to bring transformative changes to the field of communication technology, laying the foundation for an even more interconnected and digitized world (Alraih et al. 2022). These advances, while promising, come with their own set of complex considerations, notably the integration of quantum computing (QC) within these 6G networks (Vista et al. 2021).

Quantum computing can solve certain classes of complex problems far faster than classical computers (Linke et al. 2017). This computational advantage promises substantial benefits for 6G networks (Nawaz et al. 2019). Among these potential benefits, secure quantum communication (SQC) emerges as a particularly exciting prospect (Chen et al. 2020). SQC leverages the computational power of QC to devise advanced encryption methods (Chen et al. 2020). The result is a significant enhancement in the security of communications within 6G networks (Chen et al. 2020; Bassoli et al. 2023). This could redefine the standard for secure data exchange, offering security and encryption far exceeding anything currently available in our communications infrastructure (Bassoli et al. 2023).

Nevertheless, the journey toward seamlessly integrating QC into 6G is riddled with intricate challenges; it is far from a straightforward endeavor (Wang and Rahman 2022), as it demands a comprehensive understanding and meticulous evaluation of an array of pivotal variables. 6G-SQC encompasses a spectrum of elements, from precise technical specifications and extensive infrastructural adaptations to an in-depth grasp of the fundamental principles of quantum physics (Prateek et al. 2023). These complexities accentuate the multidimensional and strenuous nature of incorporating SQC into 6G networks, making it a formidable task requiring expert navigation through a labyrinth of intricate factors (Prateek et al. 2023).

The promising potential of 6G-SQC networks, coupled with the intricate challenges they present, has captivated the attention of the academic and research communities (Abdel Hakeem et al. 1969). Numerous scholars and researchers have explored this dynamic and emerging field (Wang and Rahman 2022; Abdel Hakeem et al. 1969; Ulitzsch et al. 2022). Consequently, abundant research has been generated, and multiple predictive models have been proposed, each aiming to quantify and anticipate the successful combination of these novel technologies (Zaman et al. 2023; Okey et al. 2022). Yet, despite these extensive efforts and the multitude of proposed models, a comprehensive predictive model that homes in on secure quantum communication within 6G networks remains elusive (Okey et al. 2022). The development of such a model would mark a significant stride in our understanding and practical application of these transformative technologies.

In this uncharted terrain of research, the successful incorporation of SQC into 6G networks remains a complex problem whose variables are yet to be resolved. The intricate balance of technical, infrastructural, and quantum physics principles presents both a daunting challenge and an exciting opportunity for research and innovation (Zhang et al. 2019a; Porambage et al. 2021a). Indeed, resolving these variables could hold the key to a new era of secure, high-speed, and efficient communication networks.

To the best of our knowledge, no existing empirical study has identified the variables that potentially influence the success of 6G-SQC projects. Therefore, we were motivated to develop a success probability prediction model for 6G-SQC projects. To address the study objective, we first explored the potential variables reported in the literature that could impact the success of a 6G-SQC project. Next, we collected experts' insights on the identified variables using a survey questionnaire to develop a training dataset. Finally, we applied a Genetic Algorithm with the Naive Bayes Classifier (NBC) and Logistic Regression (LR) to develop the success probability prediction model for 6G-SQC projects by analyzing the data collected from experts. This model will enable practitioners to assess the success probability of a 6G-SQC project, and we believe these findings will significantly contribute to developing effective strategies for addressing critical aspects of 6G-SQC projects.

2 Background

The advent of the sixth generation of wireless technology, known as 6G, is poised to revolutionize communication technology, paving the way for a more interconnected and digitized world (Saad et al. 2019; Lu and Zheng 2020). However, integrating quantum computing (QC) into these 6G networks poses a set of complex considerations (Wang and Rahman 2022).

Quantum computing can operate exponentially faster than classical computers on certain problems, offering a remarkable computational advantage (Briegel et al. 2009). This capability holds tremendous potential for 6G networks. Of particular interest is the prospect of secure quantum communication (SQC) (Lu et al. 2019). Leveraging the computational power of QC, SQC enables the development of advanced encryption methods (Lu et al. 2019; Shi et al. 2012). It therefore brings about a significant enhancement in the security measures employed within 6G networks. This has the potential to redefine the standards of secure data exchange, surpassing the capabilities of the current communications infrastructure (Wang and Rahman 2021).

Nevertheless, the journey toward seamless integration of QC into 6G networks is fraught with intricate challenges and far from straightforward as it requires a comprehensive understanding and meticulous evaluation of a diverse set of pivotal variables (Wang and Rahman 2022). These variables encompass a wide spectrum of elements, including precise technical specifications, extensive infrastructural adaptations, and an in-depth grasp of the fundamental principles of quantum physics (Wang and Rahman 2022; Porambage et al. 2021a; Failed 2022a). The multidimensional and demanding nature of incorporating 6G-SQC networks underscores the formidable task, requiring expert navigation through a labyrinth of intricate factors (Chen et al. 2020; Failed 2023a).

The promising potential of 6G-SQC, alongside the complex challenges it presents, has captivated the attention of the academic and research communities (Failed 2022a). Several studies have explored this dynamic and emerging field (Wang and Rahman 2022; Failed 2022a; Duong et al. 2022; Porambage et al. 2021b; Nayak and Patgiri 2004). Consequently, a significant body of research has been generated, and several predictive models have been proposed, each striving to quantify and anticipate the successful convergence of these transformative technologies.

For instance, Liu et al. (Liu et al. 2022) examined the feasibility of implementing quantum key distribution protocols in 6G networks and evaluated their effectiveness in achieving secure communication. Their research highlighted the significance of robust key distribution methods to overcome security vulnerabilities and mitigate potential threats in 6G-SQC projects. Another study conducted by Porambage et al. (Porambage et al. 2021a) investigated the impact of quantum noise on the performance of SQC in 6G networks. They shed light on the critical role of error correction techniques in mitigating quantum noise effects and ensuring reliable and secure quantum communication within 6G infrastructure. Additionally, Mumtaz et al. (Mumtaz et al. 2022) proposed a novel optimization framework for resource allocation in 6G-SQC projects. They focused on optimizing the allocation of computational, communication, and quantum resources to enhance the overall efficiency and effectiveness of 6G-SQC networks. Furthermore, Ray (Ray 2021) conducted a comprehensive study on integrating QC into 6G networks from a regulatory perspective. The study explored the legal and policy challenges of implementing QC technology in 6G-SQC projects and proposed recommendations for addressing regulatory barriers.

Despite these notable research contributions and many proposed models, a comprehensive predictive model focusing on secure quantum communication within 6G networks remains elusive (Wang et al. 2020a; Kota and Giambene 2021; Lin et al. 2111). The development of such a model would signify a significant stride in our understanding and practical application of these transformative technologies.

In recent years, the prediction of software project success has emerged as a significant research area, aiming to identify critical features and develop models that enable real-world practitioners to manage complex development activities and mitigate risk factors cost-effectively. Various engineering industries have seen the development of project success prediction models (PSPMs) that utilize different risk factors (Li et al. 2016; Ko and Cheng 2007; Verner et al. 2007).

For example, Abe et al. (Abe et al. 2006) collected 29 factors across five categories, including development process, project management, company organization, human factors, and external factors, to build a PSPM using a Bayesian classifier and the k-means clustering algorithm. In another study, Cheng and Wu (Cheng et al. 2010) proposed an evolutionary support vector machine inference model that dynamically predicts project success based on a hybrid approach incorporating a support vector machine and a fast messy genetic algorithm. Reyes et al. (Reyes et al. 2011) conducted an empirical study investigating various software features and developed a model to evaluate project success/failure using a genetic algorithm. Shameem et al. (Shameem et al. 2023) developed a cost and success probability prediction model for agile methodology success factors in the context of global software development using genetic algorithms. These studies demonstrate the usefulness of predictive modeling in software project management, as it enables effective tracking and management of the development process towards successful project completion.

Despite the increasing prominence of 6G-SQC, there has been a lack of research on models that can predict the success or failure of projects that adopt quantum technology for secure 6G communication. To bridge this gap, our study uses a genetic algorithm to introduce an innovative, cost-effective 6G-SQC project success prediction model based on the various features of 6G-SQC projects. The model posits that the probability of success for a 6G-SQC project is strongly tied to specific features, which are briefly discussed in a later section.

The primary aim of our model is to help organizations gauge the influence of these identified variables on the success of 6G-SQC projects. To construct the success probability prediction model, we followed the series of generalized steps illustrated in Fig. 1. This research contributes to the field by presenting an approach for forecasting the success or failure of 6G-SQC projects and pinpointing the key variables that substantially impact a 6G-SQC project's success.

3 Research setting

To address the study objectives discussed in Sects. 1 and 2, we used the hybrid approach presented in Fig. 1. The research was divided into four phases, which are briefly described below.

Phase–1 Explore variables that could impact the success probability of 6G-SQC.

Phase–2 Collect training data for evaluating the success probability of 6G-SQC.

Phase–3 Determine the optimal fitness of variables for 6G-SQC.

Phase–4 Develop a success probability prediction model for cost-effective 6G-SQC.

3.1 Literature survey approach

To discern the variables influencing 6G-SQC, we conducted an extensive literature review by examining journal articles, conference proceedings, and other pertinent literature (Akbar et al. 2020, 2021a, 2021b; Shameem et al. 2020). We used Google Scholar as our search engine because of its user-friendly interface and comprehensive access to scholarly articles from various digital libraries, such as Springer Link, IEEE Xplore, and ACM Digital Library. This broad-based approach ensured that we didn't overlook any relevant digital libraries. We employed pertinent keywords to pinpoint suitable published articles. Moreover, we used snowballing data sampling to gather potential literature related to our research objective. This involved scrutinizing reference sections of relevant publications (backward snowballing) and exploring studies citing our selected literature (forward snowballing) (Wohlin 2014). This strategy resulted in a continuously expanding sample size as we delved deeper into references and citations (Wohlin 2014).

The survey incorporated literature studies that deliberated on the variables influencing quantum communication. We also considered studies that did not explicitly discuss these variables (Niazi 2012, 2015) as long as they offered pertinent lessons learned and experience reports related to quantum communication for smart cities. Extracting variables from such reports was challenging and necessitated meticulous, in-depth reviews (Niazi 2015; Khan et al. 2021). The study selection process was primarily undertaken by the first and second authors, with disagreements resolved through discussion and collaborative input from all authors. In total, through forward and backward snowballing, we shortlisted 65 studies.

These shortlisted studies informed the structure of this article and contributed to phase-1: the identification of 6G-SQC variables (Fig. 1). The first and second authors examined the chosen studies to recognize pivotal themes, concepts, or variables related to 6G-SQC. The third and fourth authors conducted a secondary review to refine initial findings and report any omitted information. Following Kitchenham and Charters' guidelines (Kitchenham and Charters 2007), we applied a quantitative approach to analyze the extracted themes and concepts. A coding scheme (Hsieh and Shannon 2005) was utilized to evaluate the obtained data quantitatively. Implementing the steps of the coding scheme, i.e., ‘code’, ‘sub-categories’, and ‘categories and theory’, we mapped and paraphrased the identified concepts, culminating in a final roster of 6G-SQC variables. Upon completing the data extraction process, we reworded the identified themes and concepts, yielding a list of 15 essential variables for adopting and enhancing 6G-SQC.

3.2 Training data collection

To assess the prediction of success and the associated costs for the successful execution of a 6G-SQC project, we collected data to train the model. The process used for data collection is elaborated upon below.

3.2.1 Designing the questionnaire

We conducted unstructured interviews with 11 professionals in quantum communication and IoT to collect the training data. Utilizing video conferencing platforms such as Google Meet and Zoom, these face-to-face interactions lasted approximately 30 min per session. We designed a comprehensive, closed-ended survey questionnaire for data collection purposes based on insights gleaned from the interviews and relevant variables identified in the 6G-SQC literature. This method was selected as it is a widely-accepted approach for efficiently acquiring information from a sizable and diverse population.

The questionnaire was divided into two categories, with the first focusing on demographic questions and the second consisting of closed-ended questions based on project attributes. We employed a 9-point rating scale, ranging from extremely low (EL) to extremely high (EH), to evaluate participants' responses. The questionnaire survey approach has been widely acknowledged as a useful method for obtaining information that is challenging to acquire using observational methods, as reported in various studies (Khan et al. 2017).

3.2.2 Pilot assessment of the questionnaire

To ensure the reliability and consistency of our survey instrument, we conducted a pilot evaluation following the questionnaire's design. Pre-testing the survey, as highlighted in prior research, is crucial for improving the questionnaire's quality and obtaining pertinent responses from the target population (Khan et al. 2021; Failed 2023b). For the pilot testing, we invited ten experts, including six from the initial unstructured interviews and four new participants. These experts represented institutions such as King Saud University in Saudi Arabia, the University of Oulu in Finland, Nanjing University in China, and Aligarh Muslim University in India.

After incorporating the experts' suggestions, we finalized the questionnaire into three sections: demographic information, closed-ended questions regarding attributes, and cost-related data for implementing specific attributes. We rephrased the questionnaire items to enhance readability, and one expert recommended presenting the survey questions in a tabular format. Additionally, another expert suggested that a project's alteration or termination should be considered a failure. The updated questionnaire instrument is given at: https://tinyurl.com/26ux5mse. This study examined Naive Bayes and Logistic Regression-based predictive models to generate success probabilities for given input sets.

3.3 Development of a predictive model for 6G-SQC

The proposed work seeks to determine the significance of the identified variables by finding the scale values at which a project achieves the highest probability of success. This will help software practitioners focus on the most important aspects of a 6G-SQC project for its effective execution. To achieve this objective, we used a Genetic Algorithm (GA) to maximize the project success probability while minimizing the associated cost. The GA generates states of factors and calculates their success probability with the help of predictive models. It also tries to balance success probability and cost to identify affordable scales of factors. The performance of the GA primarily relies on the following three components: (1) Naive Bayes and logistic regression-based predictive models that tell the GA the success probability corresponding to given risk factor values; (2) the cost associated with each scale value of a risk factor; and (3) a success probability and cost-based function that tells the GA the goodness of a given set of feature values. The predictive models give the GA the required probability once they are trained properly; we used the Naive Bayes and logistic regression algorithms for this purpose. The cost of process areas has already been gathered from experts and is mentioned in Table 1. An efficacy function based on success probability and cost is defined to fulfill the requirement of the third component.

3.3.1 Predictive models

In this study, we have utilized predictive models based on Naive Bayes and Logistic Regression. These models generate the probability of a class variable attaining a specific value, which signifies either success or failure in our case.

3.3.2 Naive Bayes classifier (NBC)

The NBC model calculates the likelihood of a particular outcome for a class variable, such as success or failure. Bayesian Networks provide a variety of probability-based classifiers (Kotsiantis et al. 2006). Among these, the Naive Bayes Classifier (NBC) stands out due to its simplicity and effectiveness (Berrar 2018).

The NBC operates under the assumption that the predictors or features are independent given the class. In this model, each independent variable has a single parent, namely the class or target variable. Owing to its solid mathematical foundation, the NBC algorithm is recognized for its speed, ease of implementation, and suitability for handling high-dimensional datasets. This efficiency is primarily due to NBC's methodology of estimating the probability of each feature independently (Berrar 2018; Cerpa et al. 2016).

Equation 1 represents the calculation of the posterior probability of the target variable T given the observation's attributes F, based on Bayes' rule:
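In its standard form, with T the target variable and F the attribute set, Bayes' rule can be written as follows. The notation is reconstructed from the surrounding definitions and may differ from the authors' typesetting:

```latex
\[
P(T \mid F) = \frac{P(F \mid T)\, P(T)}{P(F)} \tag{1}
\]
```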

NBC considers that all the elements of F = {f1, f2,…,fn}, given T, are conditionally independent, and therefore, the probability described in Eq. 1 can be computed according to Eq. 2:
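Under this conditional-independence assumption, the likelihood P(F | T) factorizes into per-attribute terms. A standard way to write the result, in assumed notation, is:

```latex
\[
P(T \mid F) = \frac{P(T) \prod_{i=1}^{n} P(f_i \mid T)}{P(F)} \tag{2}
\]
```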

Equation 2 can be rewritten as Eq. 3 in its expanded form:
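Writing out the product over the n attributes term by term gives the expanded form below, sketched here in assumed notation:

```latex
\[
P(T \mid F) = \frac{P(T)\, P(f_1 \mid T)\, P(f_2 \mid T) \cdots P(f_n \mid T)}{P(F)} \tag{3}
\]
```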

For classification tasks, Eq. 3 is typically sufficient to identify the most probable state of the target variable given a specific set of factors. However, in our research, we leverage the Naive Bayes approach, which uses input values of various factors to predict the probability of project success. Once trained, this model can estimate the likelihood of a successful outcome.
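To make the use of Naive Bayes as a probability estimator concrete, the following is a minimal sketch in plain Python. It assumes categorical features on the 1 to 9 rating scale and Laplace smoothing (`alpha`), neither of which is confirmed as the authors' configuration. The function names and the toy data are hypothetical.

```python
from collections import Counter

SCALE = range(1, 10)  # 9-point rating scale (1 = EL, 9 = EH)


def train_nbc(rows, labels, alpha=1.0):
    """Estimate P(T) and Laplace-smoothed P(f_i = v | T) from rated projects."""
    class_counts = Counter(labels)
    value_counts = Counter()  # key: (class, feature index, scale value)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(label, i, value)] += 1

    priors = {t: c / len(labels) for t, c in class_counts.items()}
    likelihood = {
        (t, i, v): (value_counts[(t, i, v)] + alpha) / (c + alpha * len(SCALE))
        for t, c in class_counts.items()
        for i in range(len(rows[0]))
        for v in SCALE
    }
    return priors, likelihood


def success_probability(model, row):
    """Return P(T = 1 | F): prior times likelihoods, normalised over both classes."""
    priors, likelihood = model
    scores = {}
    for t, prior in priors.items():
        score = prior
        for i, value in enumerate(row):
            score *= likelihood[(t, i, value)]
        scores[t] = score
    return scores.get(1, 0.0) / sum(scores.values())


# Hypothetical toy data: three factors per project, label 1 = success, 0 = failure.
rows = [(8, 9, 7), (7, 8, 9), (9, 7, 8), (2, 1, 3), (3, 2, 1), (1, 3, 2)]
labels = [1, 1, 1, 0, 0, 0]
model = train_nbc(rows, labels)
p_high = success_probability(model, (8, 8, 8))  # uniformly high ratings
p_low = success_probability(model, (2, 2, 2))   # uniformly low ratings
```

Normalising over both classes removes the need to compute P(F) explicitly, which is why the sketch returns a probability rather than only the most probable class.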

3.3.3 Logistic regression (LR)

In this research, we also employ logistic regression (LR) as a predictive algorithm, primarily to estimate the probability of a binary class (Cerpa et al. 2016). It's viewed as an enhancement of regression techniques designed for estimating continuous target variables. A limitation of traditional regression methods is their potential to yield predicted values of the target variable beyond the range (0,1), where 0 denotes a negative state (Failure, False, or No) and 1 signifies a positive state (Success, True, or Yes) (Wolpert and Macready 1997). To circumvent this problem, logistic regression uses a logistic function, as delineated in Eq. (4):
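The standard logistic (sigmoid) function maps any real input to the open interval (0, 1). The symbols σ and z below are assumed names:

```latex
\[
\sigma(z) = \frac{1}{1 + e^{-z}} \tag{4}
\]
```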

Initially, a function is defined based on independent variables as follows:
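A common choice for this function is a weighted linear combination of the attributes. The form below is a sketch in assumed notation. In particular, the intercept term β0 is an assumption and may not appear in the authors' formulation:

```latex
\[
z = \beta_0 + \sum_{i=1}^{n} \beta_i f_i \tag{5}
\]
```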

In Eq. (5), βi represents the weight of attribute fi, and the primary objective of the Logistic Regression (LR) algorithm is to ascertain the optimal values for each βi. Leveraging Eq. (6), LR generates probabilistic predictions, classifying the binary target variable T as 1 or 0.
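Passing the weighted sum of attributes through the logistic function yields the probability of the positive class. In assumed notation, with z the weighted sum and β0 an assumed intercept:

```latex
\[
P(T = 1 \mid F) = \frac{1}{1 + e^{-z}}, \qquad z = \beta_0 + \sum_{i=1}^{n} \beta_i f_i \tag{6}
\]
```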

Equation (7) can be used to calculate the probability that T = 0:
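Because T is binary, the two class probabilities sum to one, so the probability of the negative class is the complement of the positive-class probability:

```latex
\[
P(T = 0 \mid F) = 1 - P(T = 1 \mid F) \tag{7}
\]
```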

The LR algorithm carries out a sequence of steps to update the values of βi based on Eqs. (5) and (6) until the algorithm reaches a point of relative stability, where the values of βi no longer fluctuate significantly. At this point, the LR algorithm combines the attributes to maximize the probability of determining the state of T in probabilistic terms. For our study, we deployed two models, NBC and LR, which take the input values of various factors and use them to predict the probability of project success. After training, these models can estimate the probability of a successful outcome.
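The iterative weight update can be illustrated with a minimal sketch of logistic regression trained by batch gradient descent. This is one common fitting procedure, and the source does not state which optimizer was used. The fixed epoch count (a stand-in for a stability check), the rescaling of ratings to [0, 1], the intercept `beta[0]`, and the toy data are all assumptions.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def scale(row):
    """Map 1..9 ratings to [0, 1] so plain gradient descent behaves well."""
    return [(f - 1) / 8 for f in row]


def predict(beta, row):
    """P(T = 1 | F) for weights beta, where beta[0] is the assumed intercept."""
    z = beta[0] + sum(b * x for b, x in zip(beta[1:], scale(row)))
    return sigmoid(z)


def train_lr(rows, labels, learning_rate=0.5, epochs=2000):
    """Fit beta by batch gradient descent on the mean log-loss."""
    beta = [0.0] * (len(rows[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(beta)
        for row, t in zip(rows, labels):
            error = predict(beta, row) - t  # gradient of log-loss w.r.t. z
            grad[0] += error
            for i, x in enumerate(scale(row), start=1):
                grad[i] += error * x
        beta = [b - learning_rate * g / len(rows) for b, g in zip(beta, grad)]
    return beta


# Hypothetical toy data: three factors per project, label 1 = success, 0 = failure.
rows = [(8, 9, 7), (7, 8, 9), (9, 7, 8), (2, 1, 3), (3, 2, 1), (1, 3, 2)]
labels = [1, 1, 1, 0, 0, 0]
beta = train_lr(rows, labels)
p_high = predict(beta, (8, 8, 8))
p_low = predict(beta, (2, 2, 2))
```

A production model would normally rely on an established library and a convergence criterion instead of a fixed number of epochs.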

3.3.4 Optimization problem

This section elaborates on the mathematical formulation of the optimization problem that facilitates the application of the Genetic Algorithm (GA). The predictive models will be trained to calculate probability values based on the provided data. Both probability and cost will be defined, and an efficacy function will be subsequently derived from these values.

3.3.5 Probability of success

Based on a given set of attributes, a project's probability of success can be expressed as follows:
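In assumed notation, with S the candidate solution and p the resulting success probability, this relation can be sketched as:

```latex
\[
p = \mathrm{Prob}(S), \qquad 0 \le p \le 1 \tag{8}
\]
```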

Prob(S) accepts a potential solution S and computes the likelihood of its success p, which ranges from 0 to 1. The set S can be expressed in the following manner:
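A solution is a collection of n scale values, one per factor, each drawn from the 9-point rating scale. In assumed notation:

```latex
\[
S = \{ s_1, s_2, \ldots, s_n \}, \qquad s_i \in \{1, 2, \ldots, 9\} \tag{9}
\]
```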

In Eq. 9, the variable si signifies the magnitude of the ith factor, and n denotes the total number of factors under consideration. In this context, si can assume values within the range of 1 to 9. Equation 10 illustrates a specific instance of the solution set S, denoted S' (n = 15).
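Only the first two scale values of this instance are stated in the text, so the remaining entries are left elided in the sketch below:

```latex
\[
S' = \{ 6, 5, \ldots \} \tag{10}
\]
```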

In this instance, S' depicts a solution set where the first factor possesses a scale value of 6, the second factor carries a value of 5, and so forth. When S' is fed into the model as input, it yields the corresponding probability since the predictive models have already undergone training.

3.3.6 Cost calculation

A key component of problem formulation is pinpointing the costs associated with each factor's magnitude. Domain experts have manually assigned these costs. The goal is to augment the probability of project success while keeping the total costs to a minimum. The variable cij signifies the cost for a scale value j of variable i. The total cost of solution S can be computed using Eq. 11.
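Since each factor i contributes the cost of its selected scale value s_i, the total cost is a sum over factors. A reconstruction in assumed notation:

```latex
\[
C(S) = \sum_{i=1}^{n} c_{i, s_i} \tag{11}
\]
```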

Table 4 specifies the costs associated with various magnitudes of factors. When a new instance of S is generated, the total project cost is determined according to Table 4 and Eq. (11).
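The table lookup and summation can be sketched as follows. The cost values are hypothetical placeholders, not the expert-assigned costs from the paper, and only three factors are used for brevity.

```python
SCALE = range(1, 10)

# Hypothetical cost table: cost_table[i][j] is the cost of scale value j for factor i.
cost_table = [
    {j: j for j in SCALE},      # factor 1: cost grows linearly with scale
    {j: 2 * j for j in SCALE},  # factor 2: twice as expensive per scale step
    {j: j for j in SCALE},      # factor 3
]


def total_cost(solution, table):
    """Sum the cost of the chosen scale value for every factor."""
    return sum(table[i][s] for i, s in enumerate(solution))


example_cost = total_cost([6, 5, 1], cost_table)  # 6 + 10 + 1
```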

3.3.7 Efficacy

The efficacy of a project is ascertained by examining its success probability in tandem with the cost. This issue can also be viewed as a bi-objective optimization problem aiming to amplify the project success probability while concurrently minimizing operational costs. An efficacy function was developed to consolidate this dual-faceted challenge into a single optimization problem as described below:

A plausible method to establish the efficacy of a specific instance S is to calculate the difference between its success probability and its cost. This simple approach combines both criteria into one function. However, the cost C tends to dominate the result, since the probability Prob in Eq. 8 always falls within the range [0, 1], while C can reach a maximum value of max(C) when all attributes have a scale of 9. To mitigate this issue, we can employ the normalized cost provided in Eq. 13.
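The unnormalized difference and the min-max normalized cost described in this paragraph can be written as follows. The symbol names, including C_norm, are assumptions:

```latex
\begin{align}
\mathrm{Efficacy}(S) &= \mathrm{Prob}(S) - C(S) \tag{12} \\
C_{\mathrm{norm}} &= \frac{C - \min(C)}{\max(C) - \min(C)} \tag{13}
\end{align}
```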

In Eq. 13, C represents the cost to be normalized, while min(C) and max(C) correspond to a project's minimum and maximum costs, respectively. For our problem, the number of factors is 14 (i.e., n = 14). Therefore, max(C) will be 126 (when all factors have a magnitude of 9), and min(C) will be 14 (when all factors have a magnitude of 1). By applying Eq. 13, the cost is constrained to [0, 1], ensuring that it does not completely govern Eq. 12. The resulting efficacy of S is computed using Eq. 14.
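Substituting the normalized cost for the raw cost gives the efficacy in the form sketched below, in assumed notation:

```latex
\[
\mathrm{Efficacy}(S) = \mathrm{Prob}(S) - \frac{C(S) - \min(C)}{\max(C) - \min(C)} \tag{14}
\]
```

As an illustration with made-up numbers, a solution with C(S) = 70 and Prob(S) = 0.8, under min(C) = 14 and max(C) = 126, has a normalized cost of (70 − 14) / (126 − 14) = 0.5 and an efficacy of 0.8 − 0.5 = 0.3.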

It's worth noting that there are alternative methods to incorporate the cost into the problem statement. One method is to consider cost and success probability as distinct objectives, thereby transforming the current problem into a multi-objective optimization problem. Alternatively, we could view the cost as a constraint rather than an element of the fitness function. This means the goal is to find a solution with the highest success probability, provided the cost does not exceed a predefined maximum affordable cost, denoted as Cmax. Nevertheless, this study chose to employ Eq. 14 as the objective function due to its simplicity and clarity.

3.3.8 Mathematical modeling of the optimization problem

Having outlined the problem's crucial parts in the preceding sections, we now state the optimization problem as an objective function to be maximized.
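A form consistent with the surrounding description, in assumed notation, is a bounded maximization over the scale values:

```latex
\[
\max_{S} \; \mathrm{Efficacy}(S)
\quad \text{subject to} \quad
S_{\min} \le s_i \le S_{\max}, \quad i = 1, \ldots, n \tag{15}
\]
```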

In the above equation, Smax and Smin represent the maximum and minimum scale values, respectively. It can be inferred from Eq. 15 that we are searching for an instance S that produces the highest efficacy value, determined by a high probability of success and a low normalized cost.

3.3.8.1 Optimization problem, genetic algorithm, and its significance

Optimization problems can be solved using traditional methods like exhaustive search, but this approach becomes infeasible when the search space is vast (Kumar et al. 2023). In the current research scenario, there are fourteen features, and each feature can take on any value between 1 and 9, resulting in more than 22.8 trillion possible solutions (9^14 > 22.8 trillion). As a result, meta-heuristic-based approaches, such as GA, are preferred because they can produce near-optimal solutions within reasonable time limits. Although other meta-heuristics are available, according to the No-Free-Lunch Theorem (Wolpert and Macready 1997), no meta-heuristic is superior to all others when performance is averaged across all possible problems. GA is a popular meta-heuristic that many researchers have applied to combinatorial optimization problems in different fields with promising results (Komaki and Kayvanfar 2015; Hu et al. 2020; Mirjalili et al. 2014). Therefore, this research employs GA.
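The size of the search space is easy to check directly. The short sketch below computes it and, using a purely hypothetical evaluation rate, estimates how long an exhaustive search would take.

```python
N_FEATURES = 14   # number of features stated in the text
SCALE_VALUES = 9  # each feature takes a value from 1 to 9

search_space = SCALE_VALUES ** N_FEATURES  # 22,876,792,454,961 candidate solutions

# Hypothetical evaluation rate, chosen only to give a sense of scale.
EVALS_PER_SECOND = 1_000_000
days_exhaustive = search_space / EVALS_PER_SECOND / 86_400  # seconds per day
```

Even at one million model evaluations per second, enumerating every candidate would take roughly 265 days, which is why a meta-heuristic is a practical choice.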

3.3.8.2 Genetic algorithm

Genetic Algorithm (GA) is an evolutionary algorithm inspired by the theory of natural selection by Charles Darwin and developed by John Holland in the 1970s (Mahmoodabadi et al. 2013). It adopts the concept of natural selection to select potential parents from a population to produce better-quality offspring in future generations (Holland 1992). GA improves the population of solutions iteratively, gradually approaching an optimal solution. It is particularly effective when the objective function is stochastic, non-differentiable, discontinuous, or highly non-linear. GA comprises three fundamental operators, selection, crossover, and mutation, which determine how the optimal solution is generated after each iteration (Mirjalili and Mirjalili 2019). The standard GA algorithm's fundamental steps are illustrated in Algorithm 1 and Phase 3 of Fig. 1.
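The selection, crossover, and mutation loop can be sketched as below. This is a generic GA and not the authors' Algorithm 1. Tournament selection, single-point crossover, elitism, and all parameter values are common choices assumed for illustration. The function `toy_success_probability` is a hypothetical stand-in for a trained NBC or LR model, and the cost of scale value j is assumed to equal j, which matches the stated cost range of 14 to 126 for 14 factors.

```python
import random

N_FACTORS = 14
S_MIN, S_MAX = 1, 9


def toy_success_probability(solution):
    """Hypothetical stand-in for a trained model: saturates at a mean scale of 6."""
    mean = sum(solution) / len(solution)
    return min(1.0, (mean - S_MIN) / 5.0)


def normalized_cost(solution):
    """Min-max normalised cost, assuming the cost of scale value j equals j."""
    lo, hi = S_MIN * len(solution), S_MAX * len(solution)
    return (sum(solution) - lo) / (hi - lo)


def efficacy(solution):
    """Fitness: success probability minus normalised cost."""
    return toy_success_probability(solution) - normalized_cost(solution)


def tournament(population, rng, k=3):
    """Selection: the fittest of k randomly drawn individuals."""
    return max(rng.sample(population, k), key=efficacy)


def crossover(a, b, rng):
    """Single-point crossover producing one child."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def mutate(solution, rng, rate=0.1):
    """Resample each gene with probability `rate`."""
    return [rng.randint(S_MIN, S_MAX) if rng.random() < rate else s
            for s in solution]


def run_ga(pop_size=40, generations=60, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(S_MIN, S_MAX) for _ in range(N_FACTORS)]
                  for _ in range(pop_size)]
    history = []  # best efficacy per generation
    for _ in range(generations):
        elite = max(population, key=efficacy)
        history.append(efficacy(elite))
        children = [elite]  # elitism: the best solution always survives
        while len(children) < pop_size:
            child = crossover(tournament(population, rng),
                              tournament(population, rng), rng)
            children.append(mutate(child, rng))
        population = children
    best = max(population, key=efficacy)
    history.append(efficacy(best))
    return best, history


best, history = run_ga()
```

With this toy objective, the efficacy cannot exceed 0.375, reached when the scale values sum to 84. Elitism guarantees that the best efficacy never decreases from one generation to the next.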

The components of GA should be adjusted to the optimization problem to achieve good performance. However, since GA is a well-known algorithm, we will not explain its components extensively in this paper. For detailed information, the readers can refer to existing studies (Mirjalili and Mirjalili 2019; Mitchell 1998). Nonetheless, the algorithm must be adapted to fit the specific problem. The following sections will outline how we have adapted the standard GA for our particular scenario.

4 Results and analysis

The results and analysis of the study are discussed in this section. The identified key variables of 6G-SQC are discussed in sub-Sect. 4.1, and the success probability prediction model is detailed in Sect. 4.2.

4.1 Identified list of variables

Through a comprehensive review of the literature, we have pinpointed 15 significant variables that may impact 6G-SQC projects. The variables identified are elaborated upon in the following discussion.

V1 (Infrastructure implementation): Quantum communication networks in a 6G environment demand a specialized infrastructure that differs from traditional communication networks (Akyildiz et al. 2020). Quantum repeaters are crucial components needed to strengthen and transmit quantum signals over long distances, ensuring the integrity and quality of quantum information (Awschalom et al. 2021). However, implementing such a widespread infrastructure presents significant challenges (Awschalom et al. 2021). Firstly, the cost of deploying and maintaining quantum repeaters and associated equipment is high. Additionally, the complexity of these systems, including the need for precise control and synchronization, adds to the implementation challenges (Urgelles et al. 2022). Moreover, the requirement for supercooling quantum systems to maintain their delicate quantum states further complicates infrastructure deployment, especially in densely populated smart cities where space and logistics are constrained (Brin 2022). Overcoming these complications requires substantial investments, technical expertise, and careful planning to build a robust and efficient infrastructure for quantum communication in 6G networks.

V2 (Security in 6G quantum communication networks): Security has become a paramount concern with the increased volume and sensitivity of data from 6G networks. While quantum mechanics offers theoretically secure communication methods, practical implementations face vulnerabilities (Zhang et al. 2019b). These vulnerabilities can arise from weaknesses in hardware components, software systems, or even during the transition between quantum and classical data formats (Ball et al. 2021). Addressing these vulnerabilities and ensuring robust security in 6G quantum communication networks is crucial (Siriwardhana et al. 2021). It requires comprehensive security protocols, rigorous testing, and continuous monitoring to detect and mitigate potential risks and threats (Siriwardhana et al. 2021). Moreover, advancements in secure hardware design, software development, and encryption techniques are essential to safeguard the integrity and confidentiality of data in 6G networks (Siriwardhana et al. 2021).

V3 (Quantum computing resources): Quantum systems are notoriously sensitive, with quantum states being easily disturbed and challenging to maintain over time, especially when data processing needs are high, as expected in 6G networks (Ralegankar et al. 2021). This issue escalates as the quantum data volume and processing speed increase in the context of 6G, where a higher information transfer rate is required (Ralegankar et al. 2021). Therefore, managing these quantum systems, including maintaining the coherence of quantum states and precise control for efficient information processing and transfer, represents a significant challenge (Cory et al. 2000). This necessitates advanced quantum computing resources and techniques, further driving the need for research and development (Cory et al. 2000; Zoller et al. 2005).

V4 (Standardization): The push towards 6G heightens the need for standardized protocols in quantum communication to ensure compatibility across various systems and prevent network fragmentation (Chaoub et al. 2023). However, the field of quantum communication is still relatively new, and the development of universally accepted standards is a complex task (Länger and Lenhart 2009). It involves comprehensive understanding, cooperation, and consensus among researchers, developers, and industry stakeholders worldwide (Länger and Lenhart 2009; Acín et al. 2018). Achieving such global standardization in a rapidly evolving field presents a significant challenge, yet it is crucial for the widespread adoption and efficient operation of quantum communication within 6G networks (Acín et al. 2018).

V5 (Quantum key distribution (QKD)): QKD is a quantum communication method that allows two parties to generate a shared secret key. However, deploying QKD on the scale required for 6G networks is challenging (Wang and Rahman 2022). It presents significant hurdles, such as high implementation costs, limited key distribution over long distances, and specialized hardware requirements (Wang and Rahman 2022; Nguyen et al. 2021). This hardware must operate at the high speeds and capacities expected of 6G networks while maintaining the delicate quantum states, which is a difficult balance to achieve and represents a significant technical challenge (Liu et al. 2022).

V6 (Education and training): The emergence of quantum communication in the 6G landscape necessitates a skilled workforce, specifically quantum engineers and technicians, who can develop, maintain, and improve these systems (Mourtzis et al. 2021; Chavhan 2022). However, there is a noticeable market gap for such trained professionals (Li et al. 2018). Addressing this skills shortage requires substantial investment in education and training programs focused on quantum technologies (Li et al. 2018). This involves imparting technical knowledge and fostering an understanding of quantum communication's unique challenges and opportunities within a 6G framework (Li 2022).

V7 (Integration with existing networks): As the shift towards 6G occurs, it is crucial to integrate quantum communication networks with pre-existing 5G and legacy networks. This complex integration involves merging fundamentally different technologies while ensuring seamless performance and interoperability (Failed 2022b; Wang et al. 2020b). It requires thoughtful planning, innovative technical solutions, and a high level of expertise in conventional and quantum communication technologies to ensure a smooth and effective transition that doesn't disrupt current services or compromise network security (Saeed et al. 2021).

V8 (Cost management): Quantum technologies, being at the frontier of scientific research and application, are inherently costly to build, maintain, and operate, primarily due to their complexity and the need for specialized equipment (Aiello et al. 2021). Implementing QC within the framework of 6G networks, with their higher demands for speed, capacity, and extensive coverage, will likely drive these costs even higher (Prateek et al. 2023). Balancing the financial implications of adopting advanced quantum technologies to pursue superior network performance under 6G is a significant challenge that developers, carriers, and governments must address (Ahammed et al. 2023; Bhat et al. 2023).

V9 (Regulatory): The introduction of quantum communication with 6G technology represents a leap into largely uncharted territory. As these technologies are still emerging, many countries and regions lack established regulations to govern their use (Nguyen et al. 2021; Sandeepa et al. 2022; Soldani 2021). This leads to uncertainty and risk for organizations adopting or developing these technologies. It requires proactive collaboration between governments, regulatory bodies, and technology developers to establish a clear and beneficial regulatory framework to guide the evolution and application of quantum communication and 6G technology (Yrjola et al. 2005).

V10 (Data privacy): As 6G networks are expected to handle massive data volumes, and quantum encryption offers robust security, there is a risk of misuse leading to invasive surveillance (Porambage et al. 2021a). While strong encryption ensures data security, it could also enable unauthorized access to sensitive information if misused (Porambage et al. 2021b). The challenge lies in balancing robust security through quantum encryption with safeguards that protect individual privacy (Nguyen et al. 2021). This requires careful regulation and ethical practices in designing and deploying 6G quantum networks (Yrjola et al. 2005).

V11 (Energy consumption): Quantum systems operate at extremely low temperatures, often close to absolute zero, to maintain the delicate quantum states, leading to substantial energy usage (Abe et al. 2021). The expected high data rates and broader coverage of 6G networks will further drive up energy demands (Maraqa et al. 2020). Balancing this increasing energy consumption while maintaining sustainability is a critical challenge (Failed 2022c; Khorsandi et al. 2022). Innovative energy-efficient technologies and strategies will be necessary to ensure that the advancement toward quantum communication within 6G networks aligns with global environmental and sustainability goals (Matinmikko-Blue et al. 2004).

V12 (Error detection and correction): Within a 6G framework, the high data rates coupled with the delicate nature of quantum states can lead to frequent and potentially disruptive errors (Akyildiz et al. 2020). This is further complicated because quantum information can't be copied or amplified without altering its state, making traditional error correction methods ineffective (Head-Marsden et al. 2020). Therefore, creating effective quantum error correction methods suitable for 6G networks becomes paramount (Prateek et al. 2023). These methods will need to efficiently detect and correct errors without disturbing the quantum states, ensuring the integrity and reliability of quantum communication (Prateek et al. 2023; Moser 2021).

V13 (Network management): In a 6G environment, quantum communication networks pose unique challenges. The volume of quantum data will be significantly higher, requiring advanced methods for data management and error correction (Chong et al. 2017). The stability of quantum systems, which are notoriously sensitive, will also be crucial, necessitating advanced monitoring and maintenance protocols (Akhtar et al. 2020; Mahmoud et al. 2021). Additionally, the seamless transition between quantum and classical data forms an essential part of this network management, requiring sophisticated systems that can handle this complex interchange without compromising speed, security, or reliability (Zhao et al. 2023).

V14 (End-user devices): The advent of 6G and quantum communication brings a new level of complexity to end-user devices (Tariq et al. 2020). These devices must be designed to handle not only the increased speeds and capacities of 6G but also the intricacies of quantum data (Monserrat et al. 2020). This means incorporating technology that can process and interpret quantum information effectively. However, balancing the technical requirements with consumer factors like cost-effectiveness and user-friendliness is a significant challenge (Failed 2022d). The devices must be affordable for widespread use and user-friendly to promote adoption among the general public, despite the complex technology they incorporate (Failed 2022d).

V15 (Quantum resistance): As we move towards 6G, ensuring that communication remains secure even in the face of advancing decryption technologies, including quantum computers, becomes crucial (Prateek et al. 2023). Developing quantum-resistant algorithms for secure communication in a 6G setting is a significant challenge (Wang and Rahman 2022). Quantum computers have the potential to break many current encryption methods, which could compromise the network's security (Partala 2021). This threat increases the need for new encryption methods that can withstand attacks from quantum computers (Prateek et al. 2023; Liu et al. 2021).

4.2 Success probability model development

This section provides an overview of the participants' demographics who were invited and contributed to the training data collection (refer to Sect. 4.2.1). Additionally, it outlines the application procedure for Genetic Algorithms (GA) (see sub-Sect. 4.2.2), and the results are discussed in Sect. 4.2.3.

4.2.1 Experts demographics

We used a frequency analysis strategy to scrutinize our descriptive data, an effective technique for systematically evaluating multiple variables, encompassing numeric and ordinal data sets. Our study incorporated a diverse pool of 78 participants from 13 nations spanning four continents, encapsulating a broad range of 12 professional roles and a myriad of 12 distinct project experiences, as portrayed in Fig. 2a–c.

A substantial proportion of participants boast a significant experience level, falling within the 11–15 years bracket in their respective fields (Fig. 2d). Moreover, the majority of the research participants (34%) were associated with project teams consisting of 11–15 members (Fig. 2e).

We also examined gender balance within the industry using the participants' self-disclosed gender identities. The resulting data highlight a male-dominated industry landscape: men made up 69% of the 78 respondents, women 21%, and 10% chose not to disclose their gender (Fig. 2f).

To gain deeper insights into the professional personas of our participants, we employed thematic mapping, enabling us to categorize their roles across a spectrum of 12 distinct categories, as illustrated in Fig. 2b. The roles of Quantum Security Analyst and Quantum Software Developer were found to be the most frequently reported, with 12 and 10 participants, respectively, aligning with these categories. In addition, we classified 12 core working domain categories for the participants' organizations. Quantum-safe 6G Infrastructure emerged as the leading domain, accommodating the majority (11) of our study participants (Fig. 2c).

4.2.2 Genetic algorithm application process

This section formally presents the optimization problem of maximizing success probability in the presence of cost. The GA needs to be customized for this specific problem, and the necessary modifications to the GA and its components are discussed below. We employed the GA in the following steps:

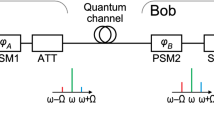

Step-1 Representation

In the current study, each potential solution, i.e., chromosome, comprises an ordered set of values. The order of the values in the set corresponds to the order of attributes described in Fig. 3. The first value in the set represents the scale value of the first attribute, the second value represents the scale value of the second attribute, and so on.

Step-2 Initialization

The study employed a population size of 50, with the initial population being generated randomly. Specifically, 50 chromosomes were created at the algorithm's beginning, and each chromosome element was assigned a random integer value between 1 and 9, inclusive.
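The representation and initialization steps can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes one gene per identified variable (15 in total) and treats the reported 1 to 9 range as the scale bounds; the names `NUM_VARIABLES`, `S_MIN`, `S_MAX`, `random_chromosome`, and `initial_population` are hypothetical.

```python
import random

NUM_VARIABLES = 15    # assumption: one gene per variable V1..V15
POPULATION_SIZE = 50  # population size reported in the text
S_MIN, S_MAX = 1, 9   # assumption: inclusive scale bounds taken from the 1..9 range


def random_chromosome(rng: random.Random) -> list[int]:
    """One candidate solution: an ordered list of scale values, one per variable."""
    return [rng.randint(S_MIN, S_MAX) for _ in range(NUM_VARIABLES)]


def initial_population(rng: random.Random) -> list[list[int]]:
    """Randomly generate the starting population of chromosomes."""
    return [random_chromosome(rng) for _ in range(POPULATION_SIZE)]


rng = random.Random(42)  # fixed seed only so the sketch is reproducible
population = initial_population(rng)
print(len(population), population[0])
```

Position `i` of each list holds the scale value for the `i`-th attribute, which mirrors the ordered-set representation described in Step 1.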

Step-3 Fitness function

The effectiveness of a chromosome is calculated using Eq. 15, and it represents the chromosome's fitness value. A higher fitness value indicates a greater success probability and lower associated costs.

Step-4 Constraint handling

Many real-world problems are constrained, so a candidate solution may not be feasible. New chromosomes created by the GA may occasionally fall in an infeasible region of the search space, meaning they do not satisfy the conditions stated in Eq. 15. To avoid this, the chromosomes are constructed to comply with the constraints and to remain compliant after being modified over generations. The factor range was limited to values between Smin and Smax, and the GA operators were written to create new chromosomes only within the feasible region.
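A feasibility check for the bound constraint might look like the sketch below. The paper keeps chromosomes feasible by construction; the `repair` function here is an added safety net (clamping), which is an assumption and not something the study reports. The bounds 1 and 9 are likewise assumed from the initialization range, and any further conditions in Eq. 15 are not modeled.

```python
S_MIN, S_MAX = 1, 9  # assumption: bounds taken from the reported 1..9 range


def is_feasible(chromosome: list[int]) -> bool:
    """True when every gene lies inside the allowed scale range."""
    return all(S_MIN <= gene <= S_MAX for gene in chromosome)


def repair(chromosome: list[int]) -> list[int]:
    """Hypothetical repair step: clamp out-of-range genes back to the bounds."""
    return [min(max(gene, S_MIN), S_MAX) for gene in chromosome]


print(is_feasible([0, 5, 12]), repair([0, 5, 12]))
```

When the crossover and mutation operators only ever emit values inside the bounds, `repair` is never triggered; it simply documents the invariant.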

Step-5 Selection & reproduction

The current study utilized roulette-wheel selection from the available choices because of its effectiveness and simplicity.
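Roulette-wheel selection picks each parent with probability proportional to its fitness. The sketch below is a generic version of that technique under the assumption that fitness values are non-negative; the function name and signature are illustrative.

```python
import random


def roulette_wheel_select(population, fitnesses, rng: random.Random):
    """Pick one individual with probability proportional to its fitness.

    Assumes all fitness values are non-negative.
    """
    total = sum(fitnesses)
    if total <= 0:
        # Degenerate case: no fitness information, fall back to a uniform pick.
        return rng.choice(population)
    pick = rng.random() * total  # a point on the wheel in [0, total)
    running = 0.0
    for individual, fit in zip(population, fitnesses):
        running += fit
        if pick < running:
            return individual
    return population[-1]  # guard against floating-point round-off


rng = random.Random(0)
print(roulette_wheel_select(["a", "b", "c"], [0.2, 0.3, 0.5], rng))
```

Individuals with larger fitness occupy a larger slice of the wheel, so they are selected more often without excluding weaker candidates entirely.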

Step-6 Crossover

A pre-determined probability value of 0.8 was used for chromosome crossover, and a single-point crossover method was selected due to its simplicity.
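Single-point crossover with the reported probability of 0.8 can be sketched as follows. The function name is hypothetical, and the choice to return unchanged copies when no crossover occurs is a common convention assumed here.

```python
import random


def single_point_crossover(parent_a, parent_b, rng: random.Random, crossover_prob=0.8):
    """Swap the tails of two parents at one random cut point."""
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same length")
    # With probability (1 - crossover_prob) the parents pass through unchanged.
    if len(parent_a) < 2 or rng.random() >= crossover_prob:
        return parent_a[:], parent_b[:]
    point = rng.randint(1, len(parent_a) - 1)  # cut strictly inside the chromosome
    child_a = parent_a[:point] + parent_b[point:]
    child_b = parent_b[:point] + parent_a[point:]
    return child_a, child_b


rng = random.Random(1)
print(single_point_crossover([1] * 15, [9] * 15, rng))
```

Because both parents already lie within the scale bounds, the children do as well, so this operator preserves feasibility.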

Step-7 Mutation

We opted for the random mutation approach with a probability of 0.1. This means there is a 10% chance for each chromosome element to be substituted with a random number between Smin and Smax.
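A random mutation operator could be sketched as below. It reads the 10% probability as applying independently to each chromosome element, which is an interpretation and not a confirmed detail of the study; the bounds 1 and 9 are assumed from the initialization range.

```python
import random


def random_mutation(chromosome, rng: random.Random, mutation_prob=0.1, s_min=1, s_max=9):
    """Replace each gene, with probability mutation_prob, by a random in-range value."""
    return [
        rng.randint(s_min, s_max) if rng.random() < mutation_prob else gene
        for gene in chromosome
    ]


rng = random.Random(7)
print(random_mutation([5] * 15, rng))
```

Drawing the replacement from the same bounds keeps every mutated chromosome inside the feasible region.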

Step-8 Stopping criteria

A maximum number of 100 generations was chosen as the stopping criterion for the study. All of the key components and their respective values used in the current optimization problem described in Eq. 15 are listed in Table 2.

Execution of GA: In the preceding sections, we have provided details on all the components and parameter values necessary for implementing GA. Algorithm 2 summarizes all the steps of the algorithm comprehensively.

Once Algorithm 2 has been executed successfully, the chromosome SB with the highest fitness value is saved. By utilizing the scale values of each variable within SB, a favorable solution for project success can be generated.
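Putting the steps together, the overall loop might be sketched as follows. This is not Algorithm 2 itself: the fitness function of Eq. 15 is not reproduced here, so `toy_fitness` is a stand-in used only to make the sketch runnable, and shifting the roulette-wheel weights to keep them positive is an added assumption. The parameter defaults follow the values reported in the text.

```python
import random
from typing import Callable, Sequence


def run_ga(fitness: Callable[[Sequence[int]], float], n_genes=15, pop_size=50,
           generations=100, crossover_prob=0.8, mutation_prob=0.1,
           s_min=1, s_max=9, seed=0):
    """Generic GA loop; returns the best chromosome seen and its fitness."""
    rng = random.Random(seed)
    population = [[rng.randint(s_min, s_max) for _ in range(n_genes)]
                  for _ in range(pop_size)]
    best, best_fit = None, float("-inf")
    for _ in range(generations):
        fits = [fitness(c) for c in population]
        for chrom, fit in zip(population, fits):
            if fit > best_fit:  # remember the best chromosome (S_B in the text)
                best, best_fit = chrom[:], fit
        # Roulette wheel: weights shifted to stay strictly positive (assumption).
        low = min(fits)
        weights = [f - low + 1e-9 for f in fits]
        next_pop = []
        while len(next_pop) < pop_size:
            a, b = (p[:] for p in rng.choices(population, weights=weights, k=2))
            if rng.random() < crossover_prob:  # single-point crossover
                point = rng.randint(1, n_genes - 1)
                a, b = a[:point] + b[point:], b[:point] + a[point:]
            for child in (a, b):  # per-gene random mutation within bounds
                next_pop.append([rng.randint(s_min, s_max)
                                 if rng.random() < mutation_prob else g
                                 for g in child])
        population = next_pop[:pop_size]
    for chrom in population:  # also score the final generation
        fit = fitness(chrom)
        if fit > best_fit:
            best, best_fit = chrom[:], fit
    return best, best_fit


def toy_fitness(chromosome: Sequence[int]) -> float:
    """Placeholder objective (mean normalized scale value); NOT Eq. 15."""
    return sum(chromosome) / (9 * len(chromosome))


best_chromosome, best_value = run_ga(toy_fitness)
print(best_chromosome, round(best_value, 3))
```

In the study's setting, `fitness` would be replaced by the Eq. 15 objective evaluated through the NBC or LR success-probability model.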

Results and discussions

This section discusses the final results obtained by implementing the GA. The results obtained using the Naive Bayes classifier are discussed in Sect. 5.1, and the Logistic regression model results are explained in Sect. 5.2.

Naive Bayes classifier

Table 3 and Fig. 4 present the Naive Bayes Classifier (NBC) model results for 6G-SQC project success. The results demonstrate the performance of the NBC-based Genetic Algorithm (GA) for implementing QC practices. Initially, the success probability and cost were 45.17% and 0.499, respectively. After 100 generations, the best fitness value was determined to be 0.623. The optimal solution yielded a cost of 0.411 and a success probability of 98.68%, an improvement of 53.51 percentage points in success probability (98.68 − 45.17) and a cost decrease of 0.088 (reported as 8.80%), as illustrated in Fig. 4.

The results from the NBC and GA analyses indicate the initial cost of implementing a 6G-SQC project was relatively high.

This circumstance could be attributed to the limited Infrastructure Implementation, the lack of standardized 6G-SQC methodologies, and insufficient technological infrastructure. Furthermore, a scarcity of skilled resources played a role in the elevated initial cost. Nevertheless, as time progressed, 6G-SQC methodologies matured, and the participation of highly skilled professionals enhanced the effective management and utilization of available resources. Consequently, this led to an increased project success probability and reduced costs.

The analysis provided in Table 4 suggests that, in the context of 6G-SQC, the variable V6 (Education and Training) has the most significant impact on the success of these projects. It implies that the availability of skilled professionals directly influences the success rate, making it the most critical variable. V10 (Data Privacy) and V11 (Energy Consumption) follow closely as the second most critical variables for success. The former relates to safeguarding citizens' sensitive data, which is crucial for maintaining public trust and legal compliance. The latter represents the project's efficiency and sustainability in energy usage, indicative of the city's overall performance. Balancing these variables is key to optimizing the success probability of 6G-SQC projects, thereby emphasizing their central role within the 6G-SQC framework.

Logistic regression models

A logistic regression (LR) model was employed to calculate the success probability of a 6G-SQC project. Table 5 and Fig. 5 detail the performance of LR in conjunction with the genetic algorithm (GA). Initially, the probability of success was 56.42%, with a corresponding cost of 0.492. After running the GA for 100 generations, the best fitness value achieved was 0.596. This evolution resulted in an optimal solution with a success probability of 99.29% at a reduced cost of 0.418, signifying a 42.87% increase in the likelihood of success and a 7.4% cost reduction, as depicted in Fig. 5. Therefore, utilizing the most impactful variables can boost the success rate of a 6G-SQC project by 42.87% while concurrently decreasing the costs.

Table 6 displays the optimal blend of variables for successful 6G-SQC project implementation, as determined by applying logistic regression and a genetic algorithm. The analysis revealed that V1 (Infrastructure Implementation), V10 (Data Privacy), and V11 (Energy Consumption) are of utmost importance in shaping the project's success. Thus, project managers should give these variables equal consideration when planning for the 6G-SQC project. Moreover, V2 (Security in 6G Quantum Communication Networks) and V6 (Education and Training) are identified as the influential secondary variables for augmenting success and curtailing costs in the 6G-SQC project.

Comparison between NBC and LR based best fitness of variables

In this section, we evaluated the ranking of variables in the 6G-SQC project based on their significance. We compared the outcomes of the Naïve Bayes Classifier (NBC) and Logistic Regression (LR) methods for a comprehensive understanding of their performance.

Variable best fitness order—Our proposed model for predicting the success probability of 6G-SQC projects yields two primary advantages. First, it offers an optimal cost distribution across various variables to maximize project success probability. Second, it sheds light on the relative importance of these variables.

Table 4 outlines the variable costs needed to achieve close to 100% project success. This indicates that certain variables might be less crucial, as high success rates can be sustained even at lower cost values. For instance, variable V7 (Integration with Existing Networks) requires only 2 units to reach a success probability of 98.68% with the Naive Bayes prediction model. However, variable V6 (Education and Training) demands a cost of 9 units, suggesting a higher monetary and effort investment for maximizing the 6G-SQC project's success probability.

A similar analysis used Logistic Regression to determine the cost per variable needed to optimize project success probability. Table 6 shows that some variables have minimal impact on success and maintain high success rates even with lower allocated costs. For instance, variable V3 (Quantum Computing Resources) demands a cost of only 2 units, while variables V1 (Infrastructure Implementation), V10 (Data Privacy), and V11 (Energy Consumption) each require 8 units. As per these results, prioritizing V1, V10, and V11 while limiting the budget for V3 allows for the highest success probability (99.29%).

Evaluating the correlation between each variable's cost and the project success probability allows us to rank the variables based on their cost-to-success ratio and overall significance. Using the results from Naive Bayes Classifier (Table 4) and Logistic Regression (Table 6), we assign rankings to each variable, with higher costs suggesting higher rankings. Table 7 presents these rankings.

For instance, V6 (Education and Training), with a cost of 9 units in the NBC analysis and 7 units in the LR analysis, ranks first and second, respectively, indicating its high significance. Employing a similar approach for all variables, we generate a complete ranking (Table 7), aiding practitioners in prioritizing the critical variables for 6G-SQC projects that significantly enhance the probability of project success.
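The cost-to-rank step can be illustrated with a small sketch. It assumes dense ranking (tied costs share a rank and the next distinct cost takes the next rank), which is inferred from the text and not stated explicitly. Only the LR cost values quoted in the text are used, so the ranks below hold within this subset and are not the Table 7 ranks.

```python
def dense_rank_by_cost(costs: dict[str, int]) -> dict[str, int]:
    """Rank variables by cost, highest cost first; ties share the same rank."""
    distinct = sorted(set(costs.values()), reverse=True)
    rank_of = {cost: position + 1 for position, cost in enumerate(distinct)}
    return {variable: rank_of[cost] for variable, cost in costs.items()}


# Partial LR costs quoted in the text; the remaining variables are omitted.
lr_costs_subset = {"V1": 8, "V10": 8, "V11": 8, "V6": 7, "V3": 2}
lr_ranks_subset = dense_rank_by_cost(lr_costs_subset)
print(lr_ranks_subset)
```

Under this reading, V1, V10, and V11 share first place and V6 comes second, matching the LR ranks quoted above.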

Statistical analysis: We conducted a non-parametric statistical examination to identify differences and parallels between the Naive Bayes Classifier (NBC) and Logistic Regression (LR) results (Failed 2004; Winter et al. 2016). Numerous analogous studies have employed this non-parametric statistical approach (Khan et al. 2017; Akbar et al. 2022a; Khan and Akbar 2020). To develop a consistent metric, we used the non-parametric Spearman's Rank-Order Correlation Coefficient, ranking each variable involved in the 6G-SQC project based on the optimal fitness values produced by NBC and LR (Table 7). This coefficient measures the strength and direction of the monotonic relationship between two sets of ranks, with possible values ranging from +1 to −1. A value of +1 indicates perfect agreement between the two rankings.

A statistically significant Spearman's Rank-order correlation coefficient value was obtained (rs = 0.895, p = 0.001), as indicated in Table 8. This elevated coefficient value, further substantiated by the scatter plot shown in Fig. 6, emphasizes a robust correlation between the ranks generated from Naive Bayes Classifier (NBC) and Logistic Regression (LR) analyses. This compelling concordance suggests that professionals can employ either of these algorithms to identify the most impactful variables for a 6G-SQC project.
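For readers who want to reproduce this kind of check, Spearman's coefficient can be computed as the Pearson correlation of the two rank vectors, with tied values receiving their average rank. The sketch below uses made-up rankings, not the Table 7 data, and the function names are illustrative.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n; tied values receive the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman_rs(x, y):
    """Spearman's rank correlation: Pearson correlation of the rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical rankings for five items (illustrative only).
print(spearman_rs([1, 2, 3, 4, 5], [2, 1, 3, 5, 4]))
```

The function is undefined when either input is constant, since the rank variance is then zero.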

Despite the substantial correlation, we observed subtle differences in the ranks assigned to specific variables by NBC and LR. For instance, V1 (Infrastructure Implementation) was ranked 3rd using NBC, but it climbed to 1st with LR, whereas V2 (Security in 6G Quantum Communication Networks) and V4 (Standardization) also exhibited minor variations (Table 8). These discrepancies suggest a degree of divergence in the rank assignment between the two algorithms, which should be considered when identifying the most influential variables for 6G-SQC projects.

In addition to Spearman's Rank-order correlation, we used an independent t-test to compare the mean rank differences produced by both algorithms (Table 9). The result from Levene's Test was non-significant (p = 0.745 > 0.05), indicating equal variances, and we continued with this assumption. The outcome of the t-test (t = 0.752, p = 0.502 > 0.05) indicated no substantial differences between the ranks generated by the two algorithms. This consistency affirms that practitioners can reliably use either NBC or LR to determine variable rankings, facilitating resource allocation efficiency and boosting the likelihood of 6G-SQC project success.
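The equal-variance independent t statistic used here can be sketched as below. The input values are hypothetical, and the p-value step is left out because it needs the t distribution; in practice a statistics library would typically be used for that (for example SciPy's `ttest_ind`, and `levene` for the variance check).

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(sample_a, sample_b):
    """Student's two-sample t statistic assuming equal variances.

    Returns the t value and the degrees of freedom.
    """
    n_a, n_b = len(sample_a), len(sample_b)
    dof = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / dof
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / standard_error, dof


# Hypothetical rank samples (illustrative only, not the study's data).
t_value, dof = pooled_t_statistic([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])
print(t_value, dof)
```

A t value close to zero relative to its degrees of freedom corresponds to a large p-value, that is, no detectable difference between the mean ranks.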

6G-SQC project success probability model—The ultimate aim of this study was to create a predictive model to estimate the likelihood of success for 6G-SQC projects, providing practitioners with an innovative strategy augmentation tool. The model was built upon thoroughly examining critical variables for 6G-SQC projects, using data gathered from industry professionals through a questionnaire survey.

In our methodology, we harnessed a genetic algorithm (GA) in conjunction with the Naive-Bayes Classifier (NBC) and Logistic Regression (LR) to calculate the success probability of 6G-SQC projects. This process factored in project costs, human resources, financial investments, and other crucial resources. We also ascertained the optimal fitness rankings of the identified variables, emphasizing their role in steering project success.

As illustrated in Fig. 7, our approach proved effective. With GA and NBC, for instance, the 6G-SQC project's success probability increased from an initial 45.17% to 98.68% upon project maturity, while the cost fell from 0.499 to 0.411. Similarly, with GA and LR, the success probability rose from 56.42% to 99.29%, with the cost decreasing from 0.492 to 0.418 after 100 generations.
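The before-and-after figures can be differenced directly. The short sketch below does this for both models using only the start and end values reported in the text; the relative cost reduction is an extra derived quantity that the paper does not report, and the dictionary keys are illustrative.

```python
reported = {
    "GA + NBC": {"p_start": 45.17, "p_end": 98.68, "c_start": 0.499, "c_end": 0.411},
    "GA + LR": {"p_start": 56.42, "p_end": 99.29, "c_start": 0.492, "c_end": 0.418},
}


def summarize(values):
    """Return (gain in percentage points, absolute cost drop, relative cost drop in %)."""
    gain = round(values["p_end"] - values["p_start"], 2)
    cost_drop = round(values["c_start"] - values["c_end"], 3)
    relative_drop = round(100 * cost_drop / values["c_start"], 1)
    return gain, cost_drop, relative_drop


summary = {model: summarize(values) for model, values in reported.items()}
for model, (gain, cost_drop, relative_drop) in summary.items():
    print(f"{model}: +{gain} points, cost -{cost_drop} ({relative_drop}% of the initial cost)")
```

Separating percentage-point gains from relative changes avoids ambiguity when a quantity such as cost is not itself a percentage.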

Furthermore, we ranked the variables related to 6G-SQC projects using GA, NBC, and LR. The findings demonstrated a strong positive correlation between both analyses, thus supplying practitioners with a prioritization guide for impactful variables and formulating strategies based on their influence on project success. Significant variables such as Education and Training, Data Privacy, Quantum Computing Resources, Integration with Existing Networks, Error Detection and Correction, and Network Management were highlighted. Hence, practitioners can focus on these areas, tailoring their approach to specific project demands. In conclusion, our study offers a valuable instrument for practitioners aiming to amplify the success likelihood of their 6G-SQC projects.

13 Study implications and threats to validity

We now summarize the implications of this study in Sect. 13.1. The potential threats to the validity of study findings are presented in Sect. 13.2.

13.1 Implications

The implications of this research are multi-fold and can significantly contribute to the practical, theoretical, and policy aspects of 6G-SQC projects:

13.1.1 Practical implications

This research has developed a robust prediction model for the success of 6G-SQC projects, which is a crucial tool for industry professionals, decision-makers, and stakeholders. This model can help practitioners identify and prioritize key variables impacting project success, enhancing strategic planning, resource allocation, and overall project management. Using Naive-Bayes Classifier (NBC) and Logistic Regression (LR) in the model adds flexibility, allowing practitioners to select an approach that aligns best with their available resources and strategic goals.

13.1.2 Theoretical implications

This study advances our understanding of the role and significance of different variables in predicting 6G-SQC project success. It brings new insights into the dynamics of these variables and how they interact, contributing valuable knowledge to the Quantum Computing and Project Management field. The high correlation between the rankings obtained from NBC and LR also validates the reliability of these methodologies in this context.

13.1.3 Policy implications

This research underlines the need for policymakers to focus on variables such as Infrastructure Implementation, Security in 6G Quantum Communication Networks, Education and Training, and Data Privacy, among others, as they significantly influence the success probability of 6G-SQC projects. The prediction model and the variable ranking can guide the development of effective policies and regulations to foster a conducive environment for successful 6G-SQC project implementation.

13.1.4 Cost implications

The research demonstrates a significant reduction in cost as 6G-SQC projects mature, which has substantial implications for budget planning and financial management within these projects. The model could be an essential tool for cost optimization, helping organizations achieve higher success rates at reduced costs.

13.1.5 Implications for future research

The methodology adopted in this research, combining genetic algorithm with NBC and LR, provides a novel approach that future research could leverage for predicting project success in other fields or contexts. The minor discrepancies in variable rankings between the two methodologies also open avenues for further investigation into the underpinning computational processes of these algorithms.

13.2 Threats to validity

Various threats could impact the validity of this study. To limit them, we adopted the hybrid research methodology used in existing studies (Akbar et al. 2022b, 2023). The potential threats are analyzed based on the four core types of validity threats: internal validity, external validity, construct validity, and conclusion validity (Wohlin et al. 2012; Zhou et al. 2016).

13.2.1 Internal validity

Internal validity refers to the extent to which the observed effects can be attributed to the studied variables and not influenced by confounding factors. In this research, potential threats to internal validity may arise from biases in the data collection process, such as response biases or sample biases. To mitigate these threats, we employed a comprehensive survey questionnaire and ensured the anonymity of respondents to encourage honest and unbiased responses. Additionally, statistical analysis techniques, such as Genetic Algorithm, Naïve Bayes Classifier, and Logistic Regression, help control for potential confounding variables.

13.2.2 External validity

External validity concerns the generalizability of the research findings to broader populations and settings. Since our study focused on 6G-SQC projects, the external validity of our findings may be limited to similar contexts. The sample size and characteristics of the participants in the survey questionnaire also influence external validity. To enhance external validity, we used a diverse sample of experts with varied backgrounds and experiences in the field. However, it is important to acknowledge that the generalizability of our findings may be limited to similar settings, and further validation with larger and more diverse samples is recommended.

13.2.3 Construct validity

Construct validity refers to the extent to which the variables and measures used in the study accurately capture the intended constructs. In our research, construct validity could be threatened by potential measurement biases or limitations in the operationalization of the variables. To address this, we conducted an extensive literature review to identify key variables in 6G-SQC projects and utilized a questionnaire survey to collect data on these variables. Using established measurement scales and statistical analyses helps ensure the validity of the constructs under investigation.

13.2.4 Conclusion validity

Conclusion validity pertains to the accuracy and reliability of the conclusions drawn from the data analysis. In our study, threats to conclusion validity may arise from potential errors in statistical analyses, interpretation of results, or limitations in the sample size. We mitigated these threats by employing robust statistical methods, including Genetic Algorithm, Naïve Bayes Classifier, and Logistic Regression, to analyze the data. Using statistical significance tests, such as t-tests, helped assess the reliability of the results. However, it is important to acknowledge that there may be limitations inherent in any statistical analysis, and caution should be exercised when drawing definitive conclusions.

14 Related work

Many researchers have explored the integration of quantum computing (QC) into 6G networks, and several studies have proposed predictive models to quantify the success of such integration. Our research differs from these studies in several respects. For instance, Xu et al. (2022) focused on the feasibility of implementing quantum key distribution (QKD) protocols in 6G networks to enhance secure communication. While their research shed light on the importance of key distribution methods, our study goes further by identifying and analyzing a broader range of variables that influence the success of 6G-SQC projects. By incorporating insights from experts and utilizing a predictive model, our research provides a comprehensive framework to evaluate the overall success probability of 6G-SQC projects.

Similarly, Tanizawa and Futami (2023) investigated the impact of quantum noise on secure quantum communication in 6G networks. Their findings emphasized the significance of error correction techniques to mitigate quantum noise effects. While their research focused on one specific aspect of 6G-SQC projects, our study takes a broader scope by examining multiple critical variables. Our success probability prediction model considers a comprehensive set of factors, enabling practitioners to assess the overall success probability of 6G-SQC projects.

Furthermore, Khan et al. (2020) proposed an optimization framework for resource allocation in 6G-SQC projects to enhance the overall efficiency of 6G-SQC networks. While their research primarily concentrated on resource allocation, our study covers a wider set of variables, including technical specifications, infrastructural adaptations, and quantum physics principles. By employing the Genetic Algorithm with two different prediction methods, our research provides a success probability prediction model that considers the interplay of the factors crucial to 6G-SQC project success. Additionally, Abdel Hakeem et al. (2022) investigated the regulatory challenges and policy considerations surrounding the integration of QC into 6G networks. While their study focused on the legal and policy aspects, our research complements this work by concentrating on the technical and practical variables influencing the success of 6G-SQC projects. By incorporating empirical data from experts, our study provides a more practical understanding of the critical variables affecting the successful implementation of 6G-SQC projects.

In summary, while existing studies have significantly contributed to quantum computing integration in 6G networks, our research distinguishes itself by developing a comprehensive success probability prediction model for 6G-SQC projects. By considering a wide range of variables and utilizing an advanced analysis methodology, our study offers practitioners a valuable tool to assess and enhance the success probability of 6G-SQC projects. This comprehensive approach bridges the gap in existing research and contributes to developing effective strategies for addressing critical aspects of 6G-SQC projects.

15 Conclusion and future directions

This study delivers an innovative prediction model for 6G-SQC, designed to estimate project success probabilities. Using a Genetic Algorithm (GA) with two machine learning techniques, the Naïve Bayes Classifier (NBC) and Logistic Regression (LR), the model shows a considerable increase in project success probability and a simultaneous reduction in overall costs as the project advances. It further outlines the importance of the key variables involved in 6G-SQC, offering a clear ranking that helps practitioners prioritize these variables based on their influence on project success. While there were slight disparities between the NBC and LR analyses, the strong overall correlation between their rankings suggests that practitioners can choose either method or a combination of both, depending on their resources and strategic requirements. The research emphasizes variables such as Education and Training, Data Privacy, Quantum Computing Resources, Integration with Existing Networks, Error Detection and Correction, and Network Management, indicating their pivotal role in enhancing the success of 6G-SQC projects.
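To make the combination of a GA with a prediction method more concrete, the sketch below evolves binary masks that indicate which variables a project addresses and scores each mask with a logistic-style success probability minus a cost penalty. It is a toy illustration under stated assumptions, not the model developed in this study: the weights, costs, bias, penalty, GA settings, and all function names are hypothetical, and the hand-set logistic function merely stands in for a trained NBC or LR predictor.

```python
import math
import random

# Hypothetical per-variable weights and costs; NOT taken from the study.
WEIGHTS = [1.2, 0.8, 0.5, 1.0, 0.3, 0.9]
COSTS = [0.30, 0.10, 0.20, 0.40, 0.05, 0.25]
BIAS = -2.0

def success_probability(mask):
    """Logistic-style success probability given which variables are addressed."""
    z = BIAS + sum(w for w, bit in zip(WEIGHTS, mask) if bit)
    return 1.0 / (1.0 + math.exp(-z))

def fitness(mask, cost_penalty=0.5):
    """Reward predicted success and penalize the total cost of selected variables."""
    cost = sum(c for c, bit in zip(COSTS, mask) if bit)
    return success_probability(mask) - cost_penalty * cost

def run_ga(n_vars, pop_size=20, generations=30, mutation_rate=0.1, seed=0):
    """Return the best mask found and the best fitness recorded per generation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_vars)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        next_gen = [population[0][:]]  # elitism: carry the best mask over unchanged
        while len(next_gen) < pop_size:
            # Tournament selection: the fitter of three random masks becomes a parent.
            p1 = max(rng.sample(population, 3), key=fitness)
            p2 = max(rng.sample(population, 3), key=fitness)
            cut = rng.randint(1, n_vars - 1)  # one-point crossover
            child = p1[:cut] + p2[cut:]
            # Bit-flip mutation applied independently to each gene.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            next_gen.append(child)
        population = next_gen
    best = max(population, key=fitness)
    history.append(fitness(best))
    return best, history

best_mask, fitness_history = run_ga(len(WEIGHTS))
print("best mask:", best_mask, "fitness:", round(fitness(best_mask), 3))
```

Because the best mask of each generation is carried over unchanged, the recorded best fitness never decreases from one generation to the next. In a real setting, `success_probability` would be replaced by a classifier trained on survey data.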

In future research, we plan to extend this study by identifying further variables that affect 6G-SQC projects and by uncovering key motivators. We will also employ empirical methods to determine the most effective practices for improving the success probability of 6G-SQC projects.

Data availability

The code and data are available upon request from the authors.

References

Abdel Hakeem, S.A., Hussein, H.H., Kim, H.: Security requirements and challenges of 6G technologies and applications. Sensors 22, 1969 (2022)

Abe, M., Adamson, P., Borcean, M., Bortoletto, D., Bridges, K., Carman, S.P., et al.: Matter-wave atomic gradiometer interferometric sensor (MAGIS-100). Quant. Sci. Technol. 6, 044003 (2021)

Abe, S., Mizuno, O., Kikuno, T., Kikuchi, N., Hirayama, M.: Estimation of project success using Bayesian classifier. In: Proceedings of the 28th international conference on Software engineering 600–603 (2006)

Acín, A., Bloch, I., Buhrman, H., Calarco, T., Eichler, C., Eisert, J., et al.: The quantum technologies roadmap: a European community view. New J. Phys. 20, 080201 (2018)

Ahammed, T.B., Patgiri, R., Nayak, S.: A vision on the artificial intelligence for 6G communication. ICT Exp. 9, 197–210 (2023)

Aiello, C.D., Awschalom, D.D., Bernien, H., Brower, T., Brown, K.R., Brun, T.A., et al.: Achieving a quantum smart workforce. Quant. Sci. Technol. 6, 030501 (2021)

Akbar, M.A., Shameem, M., Khan, A.A., Nadeem, M., Alsanad, A., Gumaei, A.: A fuzzy analytical hierarchy process to prioritize the success factors of requirement change management in global software development. J. Softw.: Evol. Process 33, e2292 (2021a)

Akbar, M.A., Khan, A.A., Mahmood, S., Alsanad, A., Gumaei, A.: A robust framework for cloud-based software development outsourcing factors using analytical hierarchy process. J. Softw.: Evol. Process 33, e2275 (2021b)

Akbar, M.A., Smolander, K., Mahmood, S., Alsanad, A.: Toward successful DevSecOps in software development organizations: A decision-making framework. Inf. Softw. Technol. 147, 106894 (2022b)

Akbar, M.A., Khan, A.A., Huang, Z.: Multicriteria decision making taxonomy of code recommendation system challenges: A fuzzy-AHP analysis. Inf. Technol. Manage. 24, 115–131 (2023)

Akbar, M. A., Naveed, W., Alsanad, A. A., Alsuwaidan, L., Alsanad, A., Gumaei, A., et al.: Requirements change management challenges of global software development: An empirical investigation. IEEE Access 8, 203070–203085 (2020)

Akbar, M. A., Khan, A. A., Mahmood, S., Mishra, A.: SRCMIMM: the software requirements change management and implementation maturity model in the domain of global software development industry. Inf. Technol. Manag. 1–25 (2022)

Akhtar, M.W., Hassan, S.A., Ghaffar, R., Jung, H., Garg, S., Hossain, M.S.: The shift to 6G communications: vision and requirements. HCIS 10, 1–27 (2020)

Akyildiz, I.F., Kak, A., Nie, S.: 6G and beyond: The future of wireless communications systems. IEEE Access 8, 133995–134030 (2020)

Ali, M. Z., Abohmra, A., Usman, M., Zahid, A., Heidari, H., Imran, M. A., et al.: Quantum for 6G communication: A perspective. IET Quant. Commun. (2023)

Alraih, S., Shayea, I., Behjati, M., Nordin, R., Abdullah, N.F., Abu-Samah, A., et al.: Revolution or evolution? Technical requirements and considerations towards 6G mobile communications. Sensors 22, 762 (2022)

Awschalom, D., Berggren, K.K., Bernien, H., Bhave, S., Carr, L.D., Davids, P., et al.: Development of quantum interconnects (quics) for next-generation information technologies. PRX Quantum 2, 017002 (2021)

Azari, M. M., Solanki, S., Chatzinotas, S., Kodheli, O., Sallouha, H., Colpaert, A., et al.: Evolution of non-terrestrial networks from 5G to 6G: A survey. IEEE Commun. Surv. Tutor. (2022)

Ball, H., Biercuk, M.J., Carvalho, A.R., Chen, J., Hush, M., De Castro, L.A., et al.: Software tools for quantum control: Improving quantum computer performance through noise and error suppression. Quant. Sci. Technol. 6, 044011 (2021)

Bassoli, R., Fitzek, F.H., Boche, H.: Quantum Communication Networks for 6G. Photonic Quantum Technol.: Sci. Appl. 2, 715–736 (2023)

Berrar, D.: Bayes’ theorem and naive Bayes classifier. Encyclopedia Bioinf. Comput. Biol.: ABC Bioinf. 403–412 (2018)

Bhat, J.R., AlQahtani, S.A., Nekovee, M.: FinTech enablers, use cases, and role of future internet of things. J. King Saud Univ.-Comput. Inf. Sci. 35, 87–101 (2023)

Briegel, H.J., Browne, D.E., Dür, W., Raussendorf, R., Van den Nest, M.: Measurement-based quantum computation. Nat. Phys. 5, 19–26 (2009)

Brin, D.: Convergence: Artificial Intelligence and Quantum Computing: Social, Economic, and Policy Impacts: John Wiley & Sons. Hoboken, New Jersey (2022)

Cerpa, N., Bardeen, M., Astudillo, C.A., Verner, J.: Evaluating different families of prediction methods for estimating software project outcomes. J. Syst. Softw. 112, 48–64 (2016)

Chaoub, A., Mämmelä, A., Martinez-Julia, P., Chaparadza, R., Elkotob, M., Ong, L., et al.: Hybrid self-organizing networks: Evolution, standardization trends, and a 6G architecture vision. IEEE Commun. Stand. Mag. 7, 14–22 (2023)

Chavhan, S.: Shift to 6G: Exploration on trends, vision, requirements, technologies, research, and standardization efforts. Sustain. Energy Technol. Assess. 54, 102666 (2022)

Chen, H., Tu, K., Li, J., Tang, S., Li, T., Qing, Z.: 6G wireless communications: security technologies and research challenges. Int. Conf. Urban Eng. Manag. Sci. (ICUEMS) 2020, 592–595 (2020)

Cheng, M.-Y., Wu, Y.-W., Wu, C.-F.: Project success prediction using an evolutionary support vector machine inference model. Autom. Constr. 19, 302–307 (2010)

Chong, F.T., Franklin, D., Martonosi, M.: Programming languages and compiler design for realistic quantum hardware. Nature 549, 180–187 (2017)

Cory, D.G., Laflamme, R., Knill, E., Viola, L., Havel, T., Boulant, N., et al.: NMR based quantum information processing: Achievements and prospects. Fortschritte Der Physik: Progress of Physics 48, 875–907 (2000)

De Winter, J.C., Gosling, S.D., Potter, J.: Comparing the Pearson and Spearman correlation coefficients across distributions and sample sizes: A tutorial using simulations and empirical data. Psychol. Methods 21, 273 (2016)

Duong, T.Q., Ansere, J.A., Narottama, B., Sharma, V., Dobre, O.A., Shin, H.: Quantum-inspired machine learning for 6G: fundamentals, security, resource allocations, challenges, and future research directions. IEEE Open J. Veh. Technol. 3, 375–387 (2022)

Duong, T. Q., Nguyen, L. D., Narottama, B., Ansere, J. A., Van Huynh, D., Shin, H.: Quantum-inspired real-time optimisation for 6G networks: Opportunities, challenges, and the road ahead. IEEE Open J. Commun. Soc. (2022)

Li, J., Liu, X., Han, G., Cao, S., Wang, X.: TaskPOI priority based energy balanced multi-UAVs cooperative trajectory planning algorithm in 6G networks. IEEE Trans. Green Commun. Netw. (2022)

Khan, A. A., Akbar, M. A., Fahmideh, M., Liang, P., Waseem, M., Ahmad, A., et al.: AI Ethics: An empirical study on the views of practitioners and lawmakers. IEEE Trans. Comput. Soc. Syst. (2023)

Hakeem, S. A. A., Hussein, H. H., Kim, H.: Vision and research directions of 6G technologies and applications. J. King Saud Univ.-Comput. Inf. Sci. (2022)

Head-Marsden, K., Flick, J., Ciccarino, C.J., Narang, P.: Quantum information and algorithms for correlated quantum matter. Chem. Rev. 121, 3061–3120 (2020)

Holland, J.H.: Genetic algorithms. Sci. Am. 267, 66–73 (1992)

Hsieh, H.-F., Shannon, S.E.: Three approaches to qualitative content analysis. Qual. Health Res. 15, 1277–1288 (2005)

Hu, P., Pan, J.-S., Chu, S.-C.: Improved binary grey wolf optimizer and its application for feature selection. Knowl.-Based Syst. 195, 105746 (2020)

Khan, A.A., Akbar, M.A.: Systematic literature review and empirical investigation of motivators for requirements change management process in global software development. J. Softw.: Evol. Process 32, e2242 (2020)

Khan, A.A., Keung, J., Niazi, M., Hussain, S., Ahmad, A.: Systematic literature review and empirical investigation of barriers to process improvement in global software development: Client–vendor perspective. Inf. Softw. Technol. 87, 180–205 (2017)

Khan, L.U., Yaqoob, I., Imran, M., Han, Z., Hong, C.S.: 6G wireless systems: A vision, architectural elements, and future directions. IEEE Access 8, 147029–147044 (2020)

Khan, A.A., Shameem, M., Nadeem, M., Akbar, M.A.: Agile trends in Chinese global software development industry: Fuzzy AHP based conceptual mapping. Appl. Soft Comput. 102, 107090 (2021)

Khorsandi, B.M., Bassoli, R., Bernini, G., Ericson, M., Fitzek, H.F., Gati, A., et al.: 6G E2E architecture framework with sustainability and security considerations. IEEE Globecom Workshops (GC Wkshps) 2022, 1–6 (2022)

Kitchenham, B., Charters, S.: Guidelines for performing systematic literature reviews in software engineering. ed: UK (2007)