Abstract

This paper focuses on inverse reinforcement learning for autonomous navigation using distance and semantic category observations. The objective is to infer a cost function that explains demonstrated behavior while relying only on the expert’s observations and state-control trajectory. We develop a map encoder that infers semantic category probabilities from the observation sequence, and a cost encoder, defined as a deep neural network over the semantic features. Since the expert cost is not directly observable, the model parameters can only be optimized by differentiating the error between demonstrated controls and a control policy computed from the cost estimate. We propose a new model of expert behavior that enables error minimization using a closed-form subgradient computed only over a subset of promising states via a motion planning algorithm. Our approach allows generalizing the learned behavior to new environments with new spatial configurations of the semantic categories. We analyze the different components of our model in a minigrid environment. We also demonstrate that our approach learns to follow traffic rules in the autonomous driving CARLA simulator by relying on semantic observations of buildings, sidewalks, and road lanes.



1 Introduction

Autonomous systems operating in unstructured, partially observed, and changing real-world environments need an understanding of semantic meaning to evaluate the safety, utility, and efficiency of their performance. For example, while a bipedal robot may navigate along sidewalks, an autonomous car needs to follow the road lanes and traffic signs. Designing a cost function that encodes such rules by hand is impractical for complex tasks. It is, however, often possible to obtain demonstrations of desirable behavior that indirectly capture the role of semantic context in the task execution. Semantic labels provide rich information about the relationship between object entities and their utility for task execution. In this work, we consider an inverse reinforcement learning (IRL) problem in which observations containing semantic information about the environment are available.

Fig. 1 Autonomous vehicle in an urban street, simulated via the CARLA simulator (Dosovitskiy et al., 2017). The vehicle is equipped with a LiDAR scanner, four RGB cameras, and a segmentation algorithm, providing a semantically labeled point cloud. An expert driver demonstrates lane keeping (green) and avoidance of sidewalks (pink) and buildings (gray). This paper considers inferring the expert’s cost function and generating behavior that can imitate the expert’s response to semantic observations in new operational conditions.

Consider imitating a driver navigating in an unknown environment as a motivating scenario (see Fig. 1). The car is equipped with sensors that can reveal information about the semantic categories of surrounding objects and areas. An expert driver can reason about a course of action based on this contextual information. For example, staying on the road relates to making progress, while hitting the sidewalk or a tree should be avoided. One key challenge in IRL is to infer a cost function when such expert reasoning is not explicit. If reasoning about semantic entities can be learned from the expert demonstrations, the cost model may generalize to new environment configurations. To this end, we propose an IRL algorithm that learns a cost function from semantic features of the environment. Simultaneously recognizing the environment semantics and encoding costs over them is a very challenging task. While other works learn a black-box neural network parametrization to map observations directly to costs (Wulfmeier et al., 2016; Song, 2019), we take advantage of semantic segmentation and occupancy mapping before inferring the cost function. A metric-semantic map is constructed from causal partial semantic observations of the environment to provide features for cost function learning. Contrary to most IRL algorithms, which are based on the maximum entropy expert model (Ziebart et al., 2008; Wulfmeier et al., 2016), we propose a new expert model allowing bounded rational deviations from optimal behavior (Baker et al., 2007). Instead of dynamic programming over the entire state space, our formulation allows efficient deterministic search over a subset of promising states. A key advantage of our approach is that this deterministic planning process can be differentiated in closed-form with respect to the parameters of the learnable cost function.

This work makes the following contributions:

1. We propose a cost function representation composed of a map encoder, capturing semantic class probabilities from online, first-person distance and semantic observations, and a cost encoder, defined as a deep neural network over the semantic features.
2. We propose a new expert model that enables cost parameter optimization with a closed-form subgradient of the cost-to-go, computed only over a subset of promising states.
3. We evaluate our model in autonomous navigation experiments in a 2D minigrid environment (Chevalier-Boisvert et al., 2018) with multiple semantic categories (e.g., wall, lawn, lava), as well as in an autonomous driving task that respects traffic rules in the CARLA simulator (Dosovitskiy et al., 2017).

2 Related work

2.1 Imitation learning

Imitation learning (IL) has a long history in reinforcement learning and robotics (Ross et al., 2011; Atkeson & Schaal, 1997; Argall et al., 2009; Pastor et al., 2009; Zhu et al., 2018; Rajeswaran et al., 2018; Pan et al., 2020). The goal is to learn a mapping from observations to a control policy that mimics expert demonstrations. Behavioral cloning (Ross et al., 2011) is a supervised learning approach that directly maximizes the likelihood of the expert demonstrated behavior. However, it typically suffers from distribution mismatch between training and testing and does not consider long-horizon planning. Another view of IL is through inverse reinforcement learning, where the learner recovers a cost function under which the expert is optimal (Neu & Szepesvári, 2007; Ng & Russell, 2000; Abbeel & Ng, 2004). Recently, Ghasemipour et al. (2020) and Ke et al. (2020) independently developed a unifying probabilistic perspective for common IL algorithms, using various f-divergence metrics between the learned and expert policies as minimization objectives. For example, behavioral cloning minimizes the Kullback–Leibler (KL) divergence between the learner and expert policy distributions, while adversarial training methods, such as AIRL (Fu et al., 2018) and GAIL (Ho & Ermon, 2016), minimize the KL divergence and the Jensen–Shannon divergence, respectively, between state-control distributions under the learned and expert policies.

2.2 Inverse reinforcement learning

Learning a cost function from demonstration requires a control policy that is differentiable with respect to the cost parameters. Computing policy derivatives has been addressed by several successful IRL approaches (Neu & Szepesvári, 2007; Ratliff et al., 2006; Ziebart et al., 2008). Early works assume that the cost is linear in the feature vector and aim at matching the feature expectations of the learned and expert policies. Ratliff et al. (2006) compute subgradients of planning algorithms to guarantee that the expected reward of an expert policy is better than that of any other policy by a margin. Value iteration networks (VIN) by Tamar et al. (2016) show that the value iteration algorithm can be approximated by a series of convolution and maxpooling layers, allowing automatic differentiation to learn the cost function end-to-end. Ziebart et al. (2008) develop a dynamic programming algorithm to maximize the likelihood of observed expert data and learn a policy with maximum entropy (MaxEnt). Many works (Levine et al., 2011; Wulfmeier et al., 2016; Song, 2019) extend MaxEnt to learn a nonlinear cost function using Gaussian processes or deep neural networks. Finn et al. (2016) use a sampling-based approximation of the MaxEnt partition function to learn the cost function under unknown dynamics for high-dimensional continuous systems. However, the cost in most existing work is learned offline using full observation sequences from the expert demonstrations. A major contribution of our work is to develop cost representations and planning algorithms that rely only on causal partial observations. When demonstrations are suboptimal with respect to the true cost function, a cost function can still be learned with preference-based comparisons (Brown et al., 2020; Jeon et al., 2020), self-supervision (Chen et al., 2021), or human corrections and improvements (Bajcsy et al., 2017; Jain et al., 2015).
In this work, we assume that only the demonstrations are provided and we cannot assess the demonstrator’s suboptimality with respect to the unknown true cost.

2.3 Mapping and planning

There has been significant progress in semantic segmentation techniques, including deep neural networks for RGB image segmentation (Papandreou et al., 2015; Badrinarayanan et al., 2017; Chen et al., 2018) and point cloud labeling via spherical depth projection (Wu et al., 2018; Dohan et al., 2015; Milioto et al., 2019; Cortinhal et al., 2020). Maps that store semantic information can be generated from segmented images (Sengupta et al., 2012; Lu et al., 2019). Gan et al. (2020) and Sun et al. (2018) generalize binary occupancy mapping (Hornung et al., 2013) to multi-class semantic mapping in 3D. In this work, we parameterize the navigation cost of an autonomous vehicle as a nonlinear function of such semantic map features to explain expert demonstrations.

Achieving safe and robust navigation is directly coupled with the quality of the environment representation and the cost function specifying desirable behaviors. Traditional approaches combine geometric mapping of occupancy probability (Hornung et al., 2013) or distance to the nearest obstacle (Oleynikova et al., 2017) with hand-specified planning cost functions. Recent advances in deep reinforcement learning have demonstrated that control inputs may be predicted directly from sensory observations (Levine et al., 2016). However, special model designs (Khan et al., 2018) that serve as a latent map are needed in navigation tasks where simple reactive policies are not feasible. Gupta et al. (2017) explicitly decompose visual navigation into two separate stages: mapping the environment from first-person RGB images in local coordinates and planning through the constructed map with VIN (Tamar et al., 2016). Our model instead constructs a global map, yet remains scalable with the size of the environment due to our sparse tensor implementation.

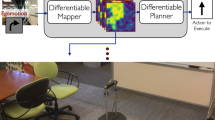

This paper is a revised and extended version of our previous conference publications (Wang et al., 2020a, b). In our previous work (Wang et al., 2020a), we proposed differentiable mapping and planning stages to learn the expert cost function. The cost function is parameterized as a neural network over binary occupancy probabilities, updated from local distance observations. An A* motion planning algorithm computes the policy at the current state and backpropagates the gradient in closed-form to optimize the cost parameterization. We proposed an extension of the occupancy map from binary to multi-class in Wang et al. (2020b), which allows the cost function to capture semantic features from the environment. This paper unifies our results in a common differentiable multi-class mapping and planning architecture and presents an in-depth analysis of the various model components via experiments in a minigrid environment and the CARLA simulator. This work also introduces a new sparse tensor implementation of the multi-class occupancy mapping stage to enable its use in large environments.

3 Problem formulation

3.1 Environment and agent models

Consider an agent aiming to reach a goal in an a priori unknown environment with different terrain types. Figure 2 shows a grid-world illustration of this setting. Let \(\varvec{x}_t \in \mathcal {X}\) denote the agent state (e.g., pose, twist, etc.) at discrete time t. In this work, we will consider \(\varvec{x}_t \in SE(2)\) composed of 2D position and orientation. Let \(\varvec{x}_g \in \mathcal {X}\) be the goal state. The agent state evolves according to known deterministic dynamics, \(\varvec{x}_{t+1} = f(\varvec{x}_t, \varvec{u}_t)\), with control input \(\varvec{u}_t \in \mathcal {U}\). The control space \(\mathcal {U}\) is assumed finite. Let \(\mathcal {K} = \left\{ 0,1,2,\dots ,K\right\} \) be a set of class labels, where 0 denotes “free” space and \(k \in \mathcal {K} {\setminus } \left\{ 0\right\} \) denotes a particular semantic class such as road, sidewalk, or car. Let \(m^*: \mathcal {X} \rightarrow \mathcal {K}\) be a function specifying the true semantic occupancy of the environment by labeling states with semantic classes. We implicitly assume that \(m^*\) assigns labels to agent positions rather than to other state variables. We do not introduce an output function, mapping an agent state to its position, to simplify the notation. Let \(\mathcal {M}\) be the space of possible environment realizations \(m^*\). Let \(c^*: \mathcal {X} \times \mathcal {U} \times \mathcal {M} \rightarrow \mathbb {R}_{\ge 0}\) be a cost function specifying desirable agent behavior in a given environment, e.g., according to an expert user or an optimal design. We assume that the agent does not have access to either the true semantic map \(m^*\) or the true cost function \(c^*\). However, the agent is able to obtain point-cloud observations \(\varvec{P}_t = \left\{ \left( \varvec{p}_l, \varvec{y}_l\right) \right\} _l \in \mathcal {P}\) at each step t, where \(\varvec{p}_l\) is the measurement location. 
In the following sections, we consider \(\varvec{p}_l \in \mathbb {R}^2\) for MiniGrid experiments in Sect. 7 and \(\varvec{p}_l \in \mathbb {R}^3\) for CARLA experiments in Sect. 8. The vector of weights \(\varvec{y}_l = \left[ y_l^1, \dots , y_l^K\right] ^\top \), where \(y_l^k \in \mathbb {R}\), indicates the likelihood that semantic class \(k \in \mathcal {K} {\setminus } \left\{ 0\right\} \) was observed. For example, \(\varvec{y}_l \in \mathbb {R}^K\) can be obtained from the softmax output of a semantic segmentation algorithm (Papandreou et al., 2015; Badrinarayanan et al., 2017; Chen et al., 2018) that predicts the semantic class of the corresponding measurement location \(\varvec{p}_l\) in an RGBD image. The observed point cloud \(\varvec{P}_t\) depends on the agent state \(\varvec{x}_t\) and the environment realization \(m^*\).

Fig. 2 A \(9\times 9\) grid environment with cells from four semantic classes: empty, wall, lawn, and lava. An autonomous agent (red triangle, facing down) starts from the top-left corner and heads towards the goal in the bottom-right corner. The agent prefers traversing the lawn but dislikes lava. LiDAR points detect the semantic labels of the corresponding tiles (gray on empty, white on wall, purple on lawn, and cyan on lava).

Fig. 3 Architecture for cost function learning from demonstrations with semantic observations. Our main contribution is a cost representation combining a probabilistic semantic map encoder, with recurrent dependence on semantic observations \(\varvec{P}_{1:t}\), and a cost encoder defined over the semantic features \(\varvec{h}_t\). Efficient forward policy computation and closed-form subgradient backpropagation are used to optimize the cost representation parameters \(\varvec{\theta }\) in order to explain the expert behavior.

3.2 Expert model

We assume that the expert chooses controls according to a Boltzmann-rational policy (Ramachandran & Amir, 2007; Neu & Szepesvári, 2007), given the true cost \(c^*\) and the true environment \(m^*\).

We assume that an expert user or algorithm demonstrates desirable agent behavior in the form of a training set \(\mathcal {D}:= \left\{ (\varvec{x}_{t,n},\varvec{u}_{t,n}^*,\varvec{P}_{t,n}, \varvec{x}_{g,n})\right\} _{t=1, n=1}^{T_n, N}\). The training set consists of N demonstrated executions with different lengths \(T_n\) for \(n \in \left\{ 1,\ldots ,N\right\} \). Each demonstration trajectory contains the agent states \(\varvec{x}_{t,n}\), expert controls \(\varvec{u}_{t,n}^*\), and sensor observations \(\varvec{P}_{t,n}\) encountered during navigation to a goal state \(\varvec{x}_{g,n}\).

The design of an IRL algorithm depends on a model of the stochastic control policy \(\pi ^*(\varvec{u} \mid \varvec{x}; c^*, m^*)\) used by the expert to generate the training data \(\mathcal {D}\), given the true cost \(c^*\) and environment \(m^*\). The state of the art relies on the MaxEnt model (Ziebart et al., 2008), which assumes that the expert minimizes the weighted sum of the stage cost \(c^*(\varvec{x}, \varvec{u}; m^*)\) and the negative policy entropy over the agent trajectory.

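We propose a new model of expert behavior to explain bounded rational deviations from optimality. We assume that the expert is aware of the optimal value function:

\[ Q^*(\varvec{x}_t,\varvec{u}_t) := \min_{T,\, \varvec{u}_{t+1:T-1}} \sum_{k=t}^{T-1} c^*(\varvec{x}_k,\varvec{u}_k; m^*) \quad \text{s.t.} \quad \varvec{x}_{k+1} = f(\varvec{x}_k,\varvec{u}_k),\;\; \varvec{x}_T = \varvec{x}_g, \]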

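but does not always choose strictly rational actions. Instead, the expert behavior is modeled as a Boltzmann policy over the optimal value function:

\[ \pi^*(\varvec{u} \mid \varvec{x}; c^*, m^*) = \frac{\exp\left(-\frac{1}{\alpha} Q^*(\varvec{x},\varvec{u})\right)}{\sum_{\varvec{u}' \in \mathcal{U}} \exp\left(-\frac{1}{\alpha} Q^*(\varvec{x},\varvec{u}')\right)}, \]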

where \(\alpha \) is a temperature parameter. The Boltzmann policy stipulates an exponential preference for controls that incur low long-term costs. We will show in Sect. 5 that this expert model allows very efficient policy search as well as computation of the policy gradient with respect to the stage cost, which is needed for inverse cost learning. In contrast, the MaxEnt policy requires either value iteration over the full state space (Ziebart et al., 2008) or sampling-based estimation of a partition function (Finn et al., 2016). Appendix A provides a comparison between our model and the MaxEnt formulation.
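To make the role of the temperature \(\alpha \) concrete, the following minimal Python sketch evaluates a Boltzmann policy over a handful of cost-to-go values. The \(Q^*\) values are made up for illustration, and the function name `boltzmann_policy` is hypothetical. Subtracting the minimum value before exponentiating is a standard numerical-stability step that leaves the distribution unchanged.

```python
import math

def boltzmann_policy(q_values, alpha):
    """Return pi(u | x) proportional to exp(-Q(x, u) / alpha).

    q_values: cost-to-go Q(x, u), one entry per control u.
    alpha: temperature (> 0); small alpha -> nearly greedy, large -> nearly uniform.
    """
    if alpha <= 0:
        raise ValueError("temperature alpha must be positive")
    # Shifting every Q by the same constant does not change the softmax,
    # but keeps exp() from underflowing for large costs.
    q_min = min(q_values)
    weights = [math.exp(-(q - q_min) / alpha) for q in q_values]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical cost-to-go values for four controls at one state.
q = [4.0, 5.0, 7.0, 4.5]
for alpha in (0.1, 1.0, 10.0):
    print(alpha, [round(p, 3) for p in boltzmann_policy(q, alpha)])
```

As \(\alpha \) shrinks, the policy concentrates on the control with the lowest cost-to-go; as \(\alpha \) grows, it approaches the uniform distribution, which is how the model accommodates bounded rational deviations.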

3.3 Problem statement

Given the training set \(\mathcal {D}\), our goal is to:

- learn a cost function estimate \(c_t: \mathcal {X} \times \mathcal {U} \times \mathcal {P}^t \times \Theta \rightarrow \mathbb {R}_{\ge 0}\) that depends on an observation sequence \(\varvec{P}_{1:t}\) from the true latent environment and is parameterized by \(\varvec{\theta } \in \Theta \),
- design a stochastic policy \(\pi _t\) from \(c_t\) such that the agent behavior under \(\pi _t\) matches the demonstrations in \(\mathcal {D}\).

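The optimal value function corresponding to a stage cost estimate \(c_t\) is:

\[ Q_t(\varvec{x}_t,\varvec{u}_t) := \min_{T,\, \varvec{u}_{t+1:T-1}} \sum_{k=t}^{T-1} c_t(\varvec{x}_k,\varvec{u}_k) \quad \text{s.t.} \quad \varvec{x}_{k+1} = f(\varvec{x}_k,\varvec{u}_k),\;\; \varvec{x}_T = \varvec{x}_g. \tag{3} \]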

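Following the expert model proposed in Sect. 3.2, we define a Boltzmann policy corresponding to \(Q_t\):

\[ \pi_t(\varvec{u} \mid \varvec{x}) = \frac{\exp\left(-\frac{1}{\alpha} Q_t(\varvec{x},\varvec{u})\right)}{\sum_{\varvec{u}' \in \mathcal{U}} \exp\left(-\frac{1}{\alpha} Q_t(\varvec{x},\varvec{u}')\right)}, \tag{4} \]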

and aim to optimize the stage cost parameters \(\varvec{\theta }\) to match the demonstrations in \(\mathcal {D}\).

Problem 1

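Given demonstrations \(\mathcal {D}\), optimize the cost function parameters \(\varvec{\theta }\) so that the log-likelihood of the demonstrated controls \(\varvec{u}_{t,n}^*\) is maximized by policy functions \(\pi _{t,n}\) obtained according to (4):

\[ \min_{\varvec{\theta}} \; \mathcal{L}(\varvec{\theta}) := -\sum_{n=1}^{N} \sum_{t=1}^{T_n} \log \pi_{t,n}(\varvec{u}_{t,n}^* \mid \varvec{x}_{t,n}). \tag{5} \]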

The problem setup is illustrated in Fig. 3. An important consequence of our expert model is that the computation of the optimal value function corresponding to a given stage cost estimate is a standard deterministic shortest path (DSP) problem (Bertsekas, 1995). However, the challenge is to make the value function computation differentiable with respect to the cost parameters \(\varvec{\theta }\) in order to propagate the loss in (5) back through the DSP problem to update \(\varvec{\theta }\). Once the parameters are optimized, the associated agent behavior can be generalized to navigation tasks in new partially observable environments by evaluating the cost \(c_t\) based on the observations \(\varvec{P}_{1:t}\) iteratively and re-computing the associated policy \(\pi _t\).

4 Cost function representation

We propose a cost function representation with two components: a semantic occupancy map encoder with parameters \(\varvec{\Psi }\) and a cost encoder with parameters \(\varvec{\phi }\). The model is differentiable by design, so its parameters can be optimized by backpropagating the loss through the planning algorithm described in Sect. 5.

4.1 Semantic occupancy map encoder

We develop a semantic occupancy map that stores the likelihood of the different semantic categories in \(\mathcal {K}\) in different areas of the map. We discretize the state space \(\mathcal {X}\) into J cells and let \(\varvec{m} = \left[ m^1, \dots , m^J\right] ^\top \in \mathcal {K}^J\) be an a priori unknown vector of true semantic labels over the cells. Given the agent states \(\varvec{x}_{1:t}\) and observations \(\varvec{P}_{1:t}\) over time, our model maintains the semantic occupancy posterior \(\mathbb {P}(\varvec{m} = \varvec{k} \mid \varvec{x}_{1:t}, \varvec{P}_{1:t})\), where \(\varvec{k} = \left[ k^1, \dots , k^J\right] ^\top \in \mathcal {K}^J\). The representation complexity may be reduced significantly if one assumes independence among the map cells \(m^j\): \(\mathbb {P}(\varvec{m} = \varvec{k} \mid \varvec{x}_{1:t}, \varvec{P}_{1:t}) = \prod _{j=1}^J \mathbb {P}(m^j = k^j \mid \varvec{x}_{1:t}, \varvec{P}_{1:t})\).

We generalize the binary occupancy grid mapping algorithm (Thrun et al., 2005; Hornung et al., 2013) to obtain incremental Bayesian updates for the multi-class probability at each cell \(m^j\). In detail, at time \(t-1\), we maintain a vector \(\varvec{h}_{t-1,j}\) of class log-odds at each cell and update it given the observation \(\varvec{P}_{t}\) obtained from state \(\varvec{x}_{t}\) at time t.

Definition 1

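The vector of class log-odds associated with cell \(m^j\) at time t is \(\varvec{h}_{t,j} = \left[ h_{t,j}^0, \dots , h_{t,j}^K\right] ^\top \) with elements:

\[ h_{t,j}^k := \log \frac{\mathbb{P}(m^j = k \mid \varvec{x}_{1:t}, \varvec{P}_{1:t})}{\mathbb{P}(m^j = 0 \mid \varvec{x}_{1:t}, \varvec{P}_{1:t})}, \quad k \in \mathcal{K}. \tag{6} \]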

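Note that, by definition, \(h_{t,j}^0 = 0\). Applying Bayes’ rule to (6) leads to a recursive Bayesian update for the log-odds vector:

\[ h_{t,j}^k = h_{t-1,j}^k + \log \frac{p(\varvec{P}_t \mid m^j = k, \varvec{x}_t)}{p(\varvec{P}_t \mid m^j = 0, \varvec{x}_t)}, \quad k \in \mathcal{K}, \tag{7} \]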

where \(p(\varvec{P}_t \mid m^j = k, \varvec{x}_t)\) is the likelihood of observing \(\varvec{P}_t\) from agent state \(\varvec{x}_t\) when cell \(m^j\) has semantic label k. Here, we assume that the observations \(\left( \varvec{p}_l, \varvec{y}_l\right) \in \varvec{P}_{t}\) at time t, given the cell \(m^j\) and state \(\varvec{x}_t\), are independent of each other and of the previous observations \(\varvec{P}_{1:t-1}\). The semantic class posterior can be recovered from the log-odds vector \(\varvec{h}_{t,j}\) via a softmax function \(\mathbb {P}(m^j = k \mid \varvec{x}_{1:t}, \varvec{P}_{1:t}) = \sigma ^k(\varvec{h}_{t,j})\), where \(\sigma : \mathbb {R}^{K+1} \rightarrow \mathbb {R}^{K+1}\) satisfies:

\[ \sigma^k(\varvec{z}) = \frac{\exp(z^k)}{\sum_{k'=0}^{K} \exp(z^{k'})}, \qquad \log \frac{\sigma^k(\varvec{z})}{\sigma^{k'}(\varvec{z})} = z^k - z^{k'}. \tag{8} \]
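The recursive log-odds update and the softmax recovery of the posterior can be checked numerically on a single cell. In this minimal sketch, the likelihood values \(p(\varvec{P}_t \mid m^j = k, \varvec{x}_t)\) for \(K = 2\) are assumed and made up for illustration, and the helper names are hypothetical; the recursive result is compared against a direct application of Bayes’ rule with a uniform prior.

```python
import math

def log_odds_update(h_prev, likelihoods):
    """Recursive log-odds update for one cell.

    h_prev: log-odds [h^0, ..., h^K] relative to class 0, so h^0 = 0.
    likelihoods: p(P_t | m^j = k, x_t) for k = 0..K (assumed given here).
    """
    return [h + math.log(lk / likelihoods[0])
            for h, lk in zip(h_prev, likelihoods)]

def softmax(z):
    """sigma^k(z) = exp(z^k) / sum_k' exp(z^k'), shifted by max(z) for stability."""
    z_max = max(z)
    e = [math.exp(v - z_max) for v in z]
    total = sum(e)
    return [v / total for v in e]

# Hypothetical likelihoods of two successive observations for classes 0, 1, 2.
obs_likelihoods = [[0.2, 0.7, 0.1], [0.3, 0.6, 0.1]]

h = [0.0, 0.0, 0.0]  # all-zero log-odds encode a uniform prior over 3 classes
for lk in obs_likelihoods:
    h = log_odds_update(h, lk)
posterior = softmax(h)

# Direct Bayes' rule with a uniform prior, for comparison.
unnorm = [obs_likelihoods[0][k] * obs_likelihoods[1][k] for k in range(3)]
direct = [u / sum(unnorm) for u in unnorm]
print([round(p, 4) for p in posterior], [round(p, 4) for p in direct])
```

The free-class entry \(h^0\) stays at zero throughout, so only \(K\) numbers per cell actually need to be stored.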

To complete the Bayesian update in (7), we propose a parametric inverse observation model, \(\mathbb {P}(m^j = k \mid \varvec{x}_t, (\varvec{p}_l, \varvec{y}_l))\), relating the class likelihood of map cell \(m^j\) to a labeled point \(\left( \varvec{p}_l, \varvec{y}_l\right) \) obtained from state \(\varvec{x}_t\).

Definition 2

Consider a labeled point \(\left( \varvec{p}_l, \varvec{y}_l\right) \) observed from state \(\varvec{x}_t\). Let \(\mathcal {J}_{t,l} \subset \left\{ 1,\ldots ,J\right\} \) be the set of map cells intersected by the sensor ray from \(\varvec{x}_t\) toward \(\varvec{p}_l\). Let \(m^j\) be an arbitrary map cell and \(d(\varvec{x}, m^j)\) be the distance between \(\varvec{x}\) and the center of mass of \(m^j\). Define the inverse observation model of the class label of cell \(m^j\) as:

where \(\varvec{\Psi }_l\in \mathbb {R}^{(K+1)\times (K+1)}\) is a learnable parameter matrix, \(\delta p_{t,l,j}:= d(\varvec{x}_t, m^j) - \left\Vert \varvec{p}_l-\varvec{x}_t\right\Vert _2\), \(\epsilon > 0\) is a hyperparameter (e.g., set to half the size of a cell), and \(\bar{\varvec{y}_l}:= \left[ 0, \varvec{y}_l^\top \right] ^\top \) is augmented with a trivial observation for the “free” class.

Intuitively, the inverse observation model specifies that cells intersected by the sensor ray are updated according to their distance to the ray endpoint and the detected semantic class probability, while the class likelihoods of other cells remain unchanged and equal to the prior. For example, if \(m^j\) is intersected, the likelihood of the class label is determined by a softmax squashing of a linear transformation of the measurement vector \(\varvec{y}_l\) with parameters \(\varvec{\Psi }_l\), scaled by the distance \(\delta p_{t,l,j}\). Otherwise, Definition 2 specifies an uninformative class likelihood in terms of the prior log-odds vector \(\varvec{h}_{0,j}\) of cell \(m^j\) (e.g., \(\varvec{h}_{0,j} = \varvec{0}\) specifies a uniform prior over the semantic classes).
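Definition 2 relies on the set \(\mathcal {J}_{t,l}\) of cells crossed by a sensor ray and on the distance differential \(\delta p_{t,l,j}\). The sketch below computes both on a 2D grid under assumptions that the text does not state: cells are axis-aligned squares indexed by integer coordinates, only the sensor position (not its orientation) matters, and the ray is traversed by dense sampling rather than by an exact grid-traversal algorithm. The function names `ray_cells` and `delta_p` are hypothetical.

```python
import math

def ray_cells(sensor, endpoint, cell_size=1.0, step_fraction=0.1):
    """Approximate J_{t,l}: grid cells crossed by the ray sensor -> endpoint.

    Cells are indexed by (floor(x / cell_size), floor(y / cell_size)).
    The segment is sampled every step_fraction * cell_size, so cells that
    the ray only clips near a corner can be missed (exact traversal avoids this).
    """
    (sx, sy), (ex, ey) = sensor, endpoint
    length = math.hypot(ex - sx, ey - sy)
    n = max(1, math.ceil(length / (step_fraction * cell_size)))
    cells = []
    for s in range(n + 1):
        a = s / n
        cell = (math.floor((sx + a * (ex - sx)) / cell_size),
                math.floor((sy + a * (ey - sy)) / cell_size))
        if not cells or cells[-1] != cell:  # a segment enters each cell once
            cells.append(cell)
    return cells

def delta_p(sensor, endpoint, cell, cell_size=1.0):
    """delta p = d(x_t, m^j) - ||p_l - x_t||_2, measuring d to the cell center."""
    cx, cy = (cell[0] + 0.5) * cell_size, (cell[1] + 0.5) * cell_size
    d_cell = math.hypot(cx - sensor[0], cy - sensor[1])
    d_hit = math.hypot(endpoint[0] - sensor[0], endpoint[1] - sensor[1])
    return d_cell - d_hit

sensor, endpoint = (0.5, 0.5), (3.5, 0.5)  # hypothetical horizontal ray
cells = ray_cells(sensor, endpoint)
print([(c, delta_p(sensor, endpoint, c)) for c in cells])
```

Cells between the sensor and the hit point have negative \(\delta p_{t,l,j}\), while the cell containing the endpoint has \(\delta p_{t,l,j}\) close to zero, which is the quantity the inverse observation model scales by.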

Definition 3

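The log-odds vector of the inverse observation model associated with cell \(m^j\) and point observation \((\varvec{p}_l, \varvec{y}_l)\) from state \(\varvec{x}_t\) is \(\varvec{g}_j(\varvec{x}_t, (\varvec{p}_l, \varvec{y}_l))\) with elements:

\[ g_j^k(\varvec{x}_t, (\varvec{p}_l, \varvec{y}_l)) := \log \frac{\mathbb{P}(m^j = k \mid \varvec{x}_t, (\varvec{p}_l, \varvec{y}_l))}{\mathbb{P}(m^j = 0 \mid \varvec{x}_t, (\varvec{p}_l, \varvec{y}_l))}, \quad k \in \mathcal{K}. \]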

The log-odds vector of the inverse observation model, \(\varvec{g}_j\), specifies the increment for the Bayesian update of the cell log-odds \(\varvec{h}_{t,j}\) in (7). Using the softmax properties in (8) and Definition 2, we can express \(\varvec{g}_j\) as:

Note that the inverse observation model definition in (9) resembles a single neural network layer. One can also specify a more expressive multi-layer neural network that maps the observation \(\varvec{y}_l\) and the distance differential \(\delta p_{t,l,j}\) along the l-th ray to the log-odds vector:

Fig. 4 Illustration of the log-odds update in (13) for a single point observation. The sensor ray (blue) hits an obstacle (black) in cell \(m^j\). The log-odds increment \(\varvec{g}_j-\varvec{h}_{0,j}\) on each cell is shown in grayscale.

Proposition 1

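Given a labeled point cloud \(\varvec{P}_{t} = \left\{ \left( \varvec{p}_l, \varvec{y}_l\right) \right\} _l\) obtained from state \(\varvec{x}_t\) at time t, the Bayesian update of the log-odds vector of any map cell \(m^j\) is:

\[ \varvec{h}_{t,j} = \varvec{h}_{t-1,j} + \sum_{l} \left( \varvec{g}_j(\varvec{x}_t, (\varvec{p}_l, \varvec{y}_l)) - \varvec{h}_{0,j} \right). \tag{13} \]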

Figure 4 illustrates the increment of the log-odds vector \(\varvec{h}_{t,j}\) for a single point \(\left( \varvec{p}_l, \varvec{y}_l\right) \). The log-odds increase more at \(m^j\) than at cells far away from the observed point. When \(\epsilon \) in (12) is set to half the cell size, the log-odds of cells beyond the observed point remain unchanged. Figure 5 shows the semantic class probability prediction for the example in Fig. 2 using the inverse observation model in Definition 2 and the log-odds update in (13).
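Because every cell outside \(\mathcal {J}_{t,l}\) receives the uninformative \(\varvec{g}_j = \varvec{h}_{0,j}\), its increment in (13) is zero, so an implementation only needs to touch the cells crossed by rays. The sketch below illustrates this with a dictionary-based sparse map. It is not the paper’s sparse tensor implementation, and the toy inverse model `toy_g` is a hypothetical stand-in for Definition 2.

```python
def update_map(h, h0, observations, cells_hit, inverse_log_odds):
    """Apply the log-odds update (13) to a sparse map, in place.

    h: dict mapping cell -> list of K+1 log-odds; absent cells are at prior h0.
    h0: prior log-odds [h^0, ..., h^K] (shared by all cells here, h0[0] == 0).
    observations: labeled points (p_l, y_l) received at time t.
    cells_hit(point): cells J_{t,l} crossed by the ray toward point.
    inverse_log_odds(cell, point): g_j for a crossed cell.
    Cells outside J_{t,l} have g_j = h0, i.e. a zero increment, so they are skipped.
    """
    for point in observations:
        for cell in cells_hit(point):
            g = inverse_log_odds(cell, point)
            current = h.get(cell, list(h0))
            h[cell] = [hk + gk - h0k for hk, gk, h0k in zip(current, g, h0)]
    return h

# Toy 1D setting (hypothetical): the sensor sits in cell 0 and each point is
# (hit_cell, [y^1, y^2]) with K = 2 semantic classes plus "free".
def toy_cells_hit(point):
    return range(point[0] + 1)

def toy_g(cell, point):
    hit_cell, y = point
    if cell == hit_cell:
        return [0.0, 2.0 * y[0], 2.0 * y[1]]  # evidence for the detected class
    return [0.0, -1.0, -1.0]                   # traversed cells look free

h0 = [0.0, 0.0, 0.0]
h = update_map({}, h0, [(3, [0.9, 0.1]), (3, [0.8, 0.2])], toy_cells_hit, toy_g)
print(h)
```

Since the increments are additive, processing the points of \(\varvec{P}_t\) one at a time gives the same result as the sum in (13).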

4.2 Cost encoder

We also develop a cost encoder that uses the semantic occupancy log-odds \(\varvec{h}_{t}\) to define a cost function estimate \(c_t(\varvec{x},\varvec{u})\) at a given state-control pair \((\varvec{x},\varvec{u})\). A convolutional neural network (CNN) (Goodfellow et al., 2016) with parameters \(\varvec{\phi }\) can extract cost features from the multi-class occupancy map: \(c_t = {\textbf {CNN}}(\varvec{h}_t; \varvec{\phi })\). We adopt a fully convolutional network (FCN) architecture (Badrinarayanan et al., 2017) to parameterize the cost function over the semantic class probabilities. The model is a multi-scale architecture that downsamples and upsamples its input to extract feature maps at different layers. Features from multiple scales ensure that the cost function is aware of both local and global context from the semantic map posterior. FCNs are also translation equivariant (Cohen & Welling, 2016), ensuring that map regions with the same semantic class and surroundings are assigned the same cost, irrespective of their specific locations. Our model architecture (illustrated in Fig. 7) consists of a series of convolutional layers with 32 channels, batch normalization (Ioffe & Szegedy, 2015) and ReLU layers, followed by a max-pooling layer with a \(2\times 2\) window and stride 2. The feature maps go through another series of convolutional layers with 64 channels, batch normalization, ReLU and max-pooling layers before they are upsampled by reusing the max-pooling indices. The feature maps then go through two series of upsampling, convolution, batch normalization and ReLU layers to produce the final cost function \(c_t\). We add a small positive constant to the ReLU output to ensure that \(c_t > 0\), so that there are no negative cycles or cost-free paths during planning.
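The two properties emphasized above, translation equivariance and a strictly positive output, can be checked on a much smaller stand-in for the FCN. The NumPy sketch below uses a single \(3\times 3\) convolution, a ReLU, and an added constant; it omits batch normalization, pooling, and index-based upsampling, and its weights are random rather than learned. Circular padding is an assumption made so that equivariance holds exactly at the map borders, and the function names are hypothetical.

```python
import numpy as np

def conv2d_circular(x, w):
    """Cross-correlate x of shape (C, H, W) with w of shape (C, 3, 3).

    Circular (wrap-around) padding is used, so the layer commutes exactly
    with np.roll shifts of the input.
    """
    out = np.zeros(x.shape[1:])
    for di in range(3):
        for dj in range(3):
            # After this roll, shifted[c, i, j] == x[c, i + di - 1, j + dj - 1] (mod size).
            shifted = np.roll(x, shift=(1 - di, 1 - dj), axis=(1, 2))
            out += np.tensordot(w[:, di, dj], shifted, axes=(0, 0))
    return out

def cost_head(h, w, bias, eps=1e-3):
    """Map class log-odds h (K+1, H, W) to a strictly positive cost map (H, W)."""
    return np.maximum(conv2d_circular(h, w) + bias, 0.0) + eps  # ReLU + constant

rng = np.random.default_rng(0)
h = rng.normal(size=(4, 9, 9))  # stand-in for log-odds of 4 classes on a 9x9 map
w = rng.normal(size=(4, 3, 3))  # random, untrained weights
c = cost_head(h, w, bias=0.1)

shifted_cost = cost_head(np.roll(h, shift=(2, 3), axis=(1, 2)), w, bias=0.1)
print(c.min() > 0, np.allclose(shifted_cost, np.roll(c, shift=(2, 3), axis=(0, 1))))
```

Shifting the semantic map shifts the cost map by the same amount, and the added constant keeps every cost at least `eps`, which is what rules out cost-free paths during planning.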

In summary, the semantic map encoder (parameterized by \(\left\{ \varvec{\Psi }_l\right\} _l\)) takes the agent state history \(\varvec{x}_{1:t}\) and point cloud observation history \(\varvec{P}_{1:t}\) as inputs to encode a semantic map probability as discussed in Sect. 4.1. The FCN cost encoder (parameterized by \(\varvec{\phi }\)) in turn defines a cost function from the extracted semantic features. The learnable parameters of the cost function, \(c_t(\varvec{x}, \varvec{u}; \varvec{P}_{1:t}, \varvec{\theta })\), are \(\varvec{\theta } = \left\{ \left\{ \varvec{\Psi }_l\right\} _l, \varvec{\phi }\right\} \).

5 Cost learning via differentiable planning

We focus on optimizing the parameters \(\varvec{\theta }\) of the cost representation \(c_t(\varvec{x},\varvec{u}; \varvec{P}_{1:t}, \varvec{\theta })\) developed in Sect. 4. Since the true cost \(c^*\) is not directly observable, we need to differentiate the loss function \(\mathcal {L}(\varvec{\theta })\) in (5), which, in turn, requires differentiating through the DSP problem in (3) with respect to the cost function estimate \(c_t\).

Previous works rely on dynamic programming to solve the DSP problem in (3). For example, the VIN model (Tamar et al., 2016) approximates T iterations of the value iteration algorithm by a neural network with T convolutional and minpooling layers. This allows VIN to be differentiable with respect to the stage cost \(c_t\), but it scales poorly with the size of the problem due to the full Bellman backups (convolutions and minpooling) over the state and control space. We observe that it is not necessary to determine the optimal cost-to-go \(Q_t(\varvec{x}, \varvec{u})\) at every state \(\varvec{x}\in \mathcal {X}\) and control \(\varvec{u} \in \mathcal {U}\). Instead of dynamic programming, a motion planning algorithm, such as a variant of A* (Likhachev et al., 2004) or RRT (LaValle, 1998; Karaman & Frazzoli, 2011), may be used to solve problem (3) efficiently and determine the optimal cost-to-go \(Q_t(\varvec{x},\varvec{u})\) only over a subset of promising states. The subgradient method (Shor, 2012; Ratliff et al., 2006) may then be employed to obtain the subgradient of \(Q_t(\varvec{x}_t,\varvec{u}_t)\) with respect to \(c_t\) along the optimal path.
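A closed-form subgradient exists because \(Q_t\) is a minimum over path costs, each of which is linear in the stage costs. A subgradient of such a pointwise minimum is the gradient of any minimizing term, i.e., the number of times each state-control pair appears on an optimal path. The minimal sketch below illustrates this with an explicitly enumerated, hypothetical set of candidate paths instead of a planner; fixing the first control \(\varvec{u}_t\) simply restricts the candidate set.

```python
def path_cost(path, cost):
    """Total stage cost of a path given as a list of (state, control) pairs."""
    return sum(cost[step] for step in path)

def q_and_subgradient(paths, cost):
    """Q = min over candidate paths of the summed stage costs, plus a subgradient.

    The subgradient w.r.t. each stage cost c(x, u) is the number of times
    (x, u) appears on a minimizing path (ties: the first minimizer is used).
    """
    best = min(paths, key=lambda p: path_cost(p, cost))
    grad = {step: 0.0 for step in cost}
    for step in best:
        grad[step] += 1.0
    return path_cost(best, cost), grad

# Hypothetical stage costs and the two candidate paths from state "a" to the goal.
cost = {("a", "right"): 1.0, ("b", "down"): 1.0,
        ("a", "down"): 1.5, ("c", "right"): 1.5}
paths = [[("a", "right"), ("b", "down")],
         [("a", "down"), ("c", "right")]]
q, grad = q_and_subgradient(paths, cost)

# Perturbing an on-path cost moves Q by the same amount; an off-path cost does not.
for step in (("a", "right"), ("a", "down")):
    bumped = dict(cost)
    bumped[step] += 0.1
    print(step, q_and_subgradient(paths, bumped)[0] - q, 0.1 * grad[step])
```

Only stage costs on the optimal path receive a nonzero subgradient, which is why backpropagation needs nothing beyond the path that the planner already returns.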

5.1 Deterministic shortest path

Given a cost estimate \(c_t\), we use the A* algorithm (Algorithm 1) to solve the DSP problem in (3) and obtain the optimal cost-to-go \(Q_t\). The algorithm starts the search from the goal state \(\varvec{x}_g\) and proceeds backwards towards the current state \(\varvec{x}_t\). It maintains an OPEN set of states, which may potentially lie along a shortest path, and a CLOSED set of states, whose optimal value \(\min _{\varvec{u}} Q_t(\varvec{x},\varvec{u})\) has been determined exactly. At each iteration, the algorithm pops the state \(\varvec{x}\) from OPEN with the smallest \(g(\varvec{x}) + \epsilon h(\varvec{x}_t, \varvec{x})\) value, where \(g(\varvec{x})\) is an estimate of the cost-to-go from \(\varvec{x}\) to \(\varvec{x}_g\) and \(h(\varvec{x}_t, \varvec{x})\) is a heuristic function that does not overestimate the true cost from \(\varvec{x}_t\) to \(\varvec{x}\) and satisfies the triangle inequality. We find all predecessor states \(\varvec{x}'\) and their corresponding controls \(\varvec{u}'\) that lead to \(\varvec{x}\) under the known dynamics model \(\varvec{x} = f(\varvec{x}', \varvec{u}')\) and update their g values if there is a lower-cost trajectory from \(\varvec{x}'\) to \(\varvec{x}_g\) through \(\varvec{x}\). The algorithm terminates when all neighbors of the current state \(\varvec{x}_t\) are in the CLOSED set. The following relations are satisfied at any time throughout the search:

\[ g(\varvec{x}) \ge \min_{\varvec{u} \in \mathcal{U}} Q_t(\varvec{x},\varvec{u}) \;\; \forall \varvec{x} \in \mathcal{X}, \qquad g(\varvec{x}) = \min_{\varvec{u} \in \mathcal{U}} Q_t(\varvec{x},\varvec{u}) \;\; \forall \varvec{x} \in \text{CLOSED}. \]

The algorithm terminates only after all neighbors \(f(\varvec{x}_t, \varvec{u})\) of the current state \(\varvec{x}_t\) are in CLOSED to guarantee that the optimal cost-to-go \(Q_t(\varvec{x}_t,\varvec{u})\) at \(\varvec{x}_t\) is exact. A simple choice of heuristic that guarantees the above relations is \(h(\varvec{x},\varvec{x}') = 0\), which reduces A* to Dijkstra’s algorithm. Alternatively, the cost encoder output may be designed to ensure that \(c_t(\varvec{x}, \varvec{u}) \ge 1\), which allows using Manhattan distance, \(h(\varvec{x},\varvec{x}') = \Vert \varvec{x}-\varvec{x}'\Vert _1\), as the heuristic.
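The backward search can be illustrated with a short Python sketch on a 4-connected grid. It is not the authors' Algorithm 1 verbatim: it assumes unit grid moves, that a move off the grid leaves the state unchanged, and a stage cost \(c_t \ge 1\) so that the Manhattan heuristic can be used with \(\epsilon = 1\). The helper names (`step`, `predecessors`, `backward_astar`) are hypothetical.

```python
import heapq
import math

# Hypothetical control encoding for a 4-connected grid: (row, col) offsets.
CONTROLS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def step(x, u, shape):
    """Known dynamics x' = f(x, u); a move off the grid leaves x unchanged."""
    r, c = x[0] + CONTROLS[u][0], x[1] + CONTROLS[u][1]
    return (r, c) if 0 <= r < shape[0] and 0 <= c < shape[1] else x


def predecessors(x, shape):
    """Yield (x', u') with f(x', u') = x; self-loops are skipped since they never lower g."""
    for u, (dr, dc) in CONTROLS.items():
        xp = (x[0] - dr, x[1] - dc)
        if 0 <= xp[0] < shape[0] and 0 <= xp[1] < shape[1]:
            yield xp, u


def backward_astar(c, x_t, x_g, shape, eps=1.0):
    """Search from x_g back toward x_t for a stage cost c(x, u) >= 1.

    eps = 1 keeps the search exact; a larger eps inflates the heuristic.
    Returns g (cost-to-go to x_g), CHILD pointers child[x'] = (u', x), and CLOSED.
    """
    def h(x):  # Manhattan distance from x_t to x; admissible because c >= 1
        return abs(x[0] - x_t[0]) + abs(x[1] - x_t[1])

    g, child, closed = {x_g: 0.0}, {}, set()
    open_heap = [(eps * h(x_g), x_g)]
    nbrs_t = {step(x_t, u, shape) for u in CONTROLS}
    while open_heap and not nbrs_t <= closed:  # stop once all neighbors of x_t are closed
        _, x = heapq.heappop(open_heap)
        if x in closed:
            continue  # stale heap entry
        closed.add(x)
        for xp, up in predecessors(x, shape):
            new_g = c(xp, up) + g[x]
            if new_g < g.get(xp, math.inf):
                g[xp], child[xp] = new_g, (up, x)
                heapq.heappush(open_heap, (new_g + eps * h(xp), xp))
    return g, child, closed


# Toy example: arriving at the center cell costs 5, every other move costs 1.
shape, x_t, x_g = (3, 3), (0, 0), (2, 2)
cost = lambda x, u: 5.0 if step(x, u, shape) == (1, 1) else 1.0
g, child, closed = backward_astar(cost, x_t, x_g, shape)
Q = {u: cost(x_t, u) + g[step(x_t, u, shape)] for u in CONTROLS}
# Q == {"up": 5.0, "down": 4.0, "left": 5.0, "right": 4.0}
```

The search stops as soon as every neighbor of \(\varvec{x}_t\) is closed, so \(Q_t(\varvec{x}_t,\cdot )\) is exact even though states far from the path between \(\varvec{x}_t\) and \(\varvec{x}_g\) may never be expanded.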

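Finally, a Boltzmann policy \(\pi _t(\varvec{u} \mid \varvec{x})\) can be defined using the g values returned by A* for any \(\varvec{x} \in \mathcal {X}\):
\[ \pi _t(\varvec{u} \mid \varvec{x}) = \frac{\exp \left( -c_t(\varvec{x},\varvec{u}) - g(f(\varvec{x},\varvec{u}))\right) }{\sum _{\varvec{u}' \in \mathcal {U}} \exp \left( -c_t(\varvec{x},\varvec{u}') - g(f(\varvec{x},\varvec{u}'))\right) }. \tag{14} \]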

The policy discourages controls that lead to states outside of CLOSED because, for such states, \(c_t(\varvec{x},\varvec{u}) + g(f(\varvec{x},\varvec{u}))\) overestimates \(Q_t(\varvec{x}, \varvec{u})\). At a state whose successors have not been visited, the policy is uniform because their g values are initialized to infinity. In practice, we only need to query the policy at the current state \(\varvec{x}_t\), whose neighbors are always in CLOSED when the search terminates, both for the loss function \(\mathcal {L}(\varvec{\theta })\) in (5) during training and for policy inference during testing.
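A minimal sketch of the Boltzmann policy (14), assuming it has the form \(\pi _t(\varvec{u} \mid \varvec{x}) \propto \exp (-c_t(\varvec{x},\varvec{u}) - g(f(\varvec{x},\varvec{u})))\) with unit temperature, and representing unvisited states by their absence from the `g` dictionary so that their g value is treated as infinite. The function `boltzmann_policy` and the 1-D chain example are illustrative only.

```python
import math


def boltzmann_policy(x, controls, step, c, g):
    """pi_t(u | x) proportional to exp(-(c(x, u) + g(f(x, u)))).

    g is a dict of A* values; states missing from it have g = +inf.
    """
    scores = {u: c(x, u) + g.get(step(x, u), math.inf) for u in controls}
    best = min(scores.values())
    if best == math.inf:
        # All successors unvisited: every score is infinite, so the policy is uniform.
        return {u: 1.0 / len(scores) for u in scores}
    # Shift by the minimum for numerical stability; exp(-inf) evaluates to 0.0.
    w = {u: math.exp(-(s - best)) for u, s in scores.items()}
    z = sum(w.values())
    return {u: wu / z for u, wu in w.items()}


# 1-D chain example: states are integers and the goal is state 2.
controls = ["left", "right"]
move = lambda x, u: x + (1 if u == "right" else -1)
unit = lambda x, u: 1.0
pi = boltzmann_policy(1, controls, move, unit, {0: 1.0, 2: 0.0})
```

Here "right" has score 1 and "left" has score 2, so `pi["right"]` equals \(1/(1+e^{-1}) \approx 0.73\).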

5.2 Backpropagation through planning

Having solved the DSP problem in (3) for a fixed cost function \(c_t\), we now discuss how to optimize the cost parameters \(\varvec{\theta }\) such that the planned policy in (14) minimizes the loss in (5). Our goal is to compute the gradient \(\frac{d \mathcal {L}(\varvec{\theta })}{d \varvec{\theta }}\), using the chain rule, in terms of \(\frac{\partial \mathcal {L}(\varvec{\theta })}{\partial Q_t(\varvec{x}_t,\varvec{u}_t)}\), \(\frac{\partial Q_t(\varvec{x}_t,\varvec{u}_t)}{\partial c_t(\varvec{x},\varvec{u})}\), and \(\frac{\partial c_t(\varvec{x},\varvec{u})}{\partial \varvec{\theta }}\). The first gradient term can be obtained analytically from (5) and (4), as we show later, while the third one can be obtained via backpropagation (automatic differentiation) through the neural network cost model \(c_t(\varvec{x},\varvec{u}; \varvec{P}_{1:t}, \varvec{\theta })\) developed in Sect. 4. We focus on computing the second gradient term.
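For a single demonstrated step, the first gradient term has a simple closed form if one assumes that the loss in (5) is the negative log-likelihood of the expert control \(\varvec{u}_t^*\) and that the policy is a softmax over \(-Q_t\) with unit temperature: \(\partial \mathcal {L}/\partial Q_t(\varvec{x}_t,\varvec{u}) = \mathbf {1}\{\varvec{u} = \varvec{u}_t^*\} - \pi _t(\varvec{u} \mid \varvec{x}_t)\). The sketch below computes it; the function name `nll_and_grad_q` is hypothetical.

```python
import math


def nll_and_grad_q(q, u_star):
    """Loss -log pi(u* | x) and its gradient w.r.t. Q(x, u), with pi = softmax(-Q)."""
    q_min = min(q.values())
    w = {u: math.exp(-(v - q_min)) for u, v in q.items()}  # stable softmax of -Q
    z = sum(w.values())
    pi = {u: wu / z for u, wu in w.items()}
    loss = -math.log(pi[u_star])
    # dL/dQ(x, u) = 1[u == u*] - pi(u | x): raising the expert's Q increases the loss.
    grad = {u: (1.0 if u == u_star else 0.0) - pi[u] for u in q}
    return loss, grad


q = {"up": 5.0, "down": 4.0, "left": 5.0, "right": 4.0}
loss, grad = nll_and_grad_q(q, "right")
```

The gradient entries sum to zero, and the expert control receives a positive entry, so gradient descent lowers its cost-to-go relative to the alternatives.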

We rewrite \(Q_t(\varvec{x}_t,\varvec{u}_t)\) in a form that makes its subgradient with respect to \(c_t(\varvec{x},\varvec{u})\) explicit. Let \(\mathcal {T}(\varvec{x}_t,\varvec{u}_t)\) be the set of trajectories, \(\varvec{\tau } = \varvec{x}_t, \varvec{u}_t, \varvec{x}_{t+1}, \varvec{u}_{t+1}, \dots , \varvec{x}_{T-1}, \varvec{u}_{T-1}, \varvec{x}_{T}\), of length \(T-t+1\) that start at \(\varvec{x}_t\), \(\varvec{u}_t\), satisfy the transitions \(\varvec{x}_{k+1} = f(\varvec{x}_k, \varvec{u}_k)\) for \(k = t, \dots , T-1\), and terminate at \(\varvec{x}_{T} = \varvec{x}_g\). Let \(\varvec{\tau }^* \in \mathcal {T}(\varvec{x}_t,\varvec{u}_t)\) be an optimal trajectory corresponding to the optimal cost-to-go \(Q_t(\varvec{x}_t,\varvec{u}_t)\). Define a state-control visitation function, which counts the number of times the transition \((\varvec{x},\varvec{u})\) appears in \(\varvec{\tau }\):
\[ \mu _{\varvec{\tau }}(\varvec{x},\varvec{u}) := \sum _{k=t}^{T-1} \mathbf {1}\{(\varvec{x}_k,\varvec{u}_k) = (\varvec{x},\varvec{u})\}. \tag{15} \]

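The optimal cost-to-go \(Q_t(\varvec{x}_t,\varvec{u}_t)\) can be viewed as a minimum over trajectories \(\mathcal {T}(\varvec{x}_t,\varvec{u}_t)\) of the inner product between the cost function \(c_t\) and the visitation function \(\mu _{\varvec{\tau }}\):
\[ Q_t(\varvec{x}_t,\varvec{u}_t) = \min _{\varvec{\tau } \in \mathcal {T}(\varvec{x}_t,\varvec{u}_t)} \sum _{\varvec{x} \in \mathcal {X}} \sum _{\varvec{u} \in \mathcal {U}} \mu _{\varvec{\tau }}(\varvec{x},\varvec{u})\, c_t(\varvec{x},\varvec{u}), \tag{16} \]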

where \(\mathcal {X}\) can be assumed finite because both T and \(\mathcal {U}\) are finite. We use the subgradient method (Shor, 2012; Ratliff et al., 2006) to compute a subgradient of \(Q_t(\varvec{x}_t,\varvec{u}_t)\) with respect to \(c_t\).

Learned cost function for the example in Fig. 2. The cost of control “right” is the smallest at the agent’s location after training. The agent correctly predicts that it should move right and step on the lawn.

A fully convolutional encoder-decoder neural network similar to that in Badrinarayanan et al. (2017) is used as the cost encoder to map the semantic map \(\varvec{h}_t\) to the cost function \(c_t\).

Subgradient of the optimal cost-to-go \(Q_t(\varvec{x}_t,\varvec{u}_t)\) for each control \(\varvec{u}_t\) with respect to the cost \(c_t(\varvec{x},\varvec{u})\) in Fig. 6

Lemma 1

Let \(f(\varvec{x},\varvec{y})\) be differentiable and convex in \(\varvec{x}\). Then, \(\nabla _{\varvec{x}} f(\varvec{x}, \varvec{y}^*)\), where \(\varvec{y}^*:= \arg \min _{\varvec{y}} f(\varvec{x},\varvec{y})\), is a subgradient of the piecewise-differentiable convex function \(g(\varvec{x}):= \min _{\varvec{y}} f(\varvec{x},\varvec{y})\).

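Applying Lemma 1 to (16) leads to the following subgradient of the optimal cost-to-go function:
\[ \frac{\partial Q_t(\varvec{x}_t,\varvec{u}_t)}{\partial c_t(\varvec{x},\varvec{u})} = \mu _{\varvec{\tau }^*}(\varvec{x},\varvec{u}), \tag{17} \]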

which can be obtained along the optimal trajectory \(\varvec{\tau }^*\) by tracing the CHILD relations returned by Algorithm 1. Figure 8 shows an illustration of this subgradient computation with respect to the cost estimate in Fig. 6 for the example in Fig. 2. The result in (17) and the chain rule allow us to obtain a complete subgradient of \(\mathcal {L}(\varvec{\theta })\).
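Obtaining the subgradient (17) amounts to counting state-control pairs while following CHILD pointers from \(\varvec{x}_t\) to the goal. The sketch assumes a hypothetical `child` dictionary that maps each state to its best control and successor, as a backward search such as Algorithm 1 would record; the 1-D chain and its costs are a toy example.

```python
from collections import Counter


def q_subgradient(x_t, u_t, step, child, x_g):
    """Visitation counts mu_{tau*}(x, u) of the optimal trajectory from (x_t, u_t).

    child[x] = (u, x_next) is the best control at x and its successor. By (17),
    mu is a subgradient of Q_t(x_t, u_t) with respect to the stage costs c_t(x, u).
    """
    mu = Counter({(x_t, u_t): 1})
    x = step(x_t, u_t)
    while x != x_g:
        u, x_next = child[x]
        mu[(x, u)] += 1
        x = x_next
    return mu


# 1-D chain with goal 3; the first control "left" is not the greedy one.
move = lambda x, u: x + (1 if u == "right" else -1)
child = {0: ("right", 1), 1: ("right", 2), 2: ("right", 3)}
mu = q_subgradient(1, "left", move, child, x_g=3)
cost = {(1, "left"): 2.0, (0, "right"): 1.0, (1, "right"): 1.0, (2, "right"): 1.0}
q = sum(n * cost[k] for k, n in mu.items())  # Q_t(1, "left") = 5.0
```

For the fixed trajectory \(\varvec{\tau }^*\), the inner product of these counts with the cost reproduces the trajectory cost, which is why the counts serve as the (sparse) derivative.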

Proposition 2

A subgradient of the loss function \(\mathcal {L}(\varvec{\theta })\) in (5) with respect to \(\varvec{\theta }\) can be obtained as:

5.3 Algorithms

The computation graph implied by Proposition 2 is illustrated in Fig. 3. The graph consists of a cost representation layer and a differentiable planning layer, allowing end-to-end minimization of \(\mathcal {L}(\varvec{\theta })\) via stochastic subgradient descent. The training algorithm for solving Problem 1 is shown in Algorithm 2. The testing algorithm that enables generalizing the learned semantic mapping and planning behavior to new sensory data in new environments is shown in Algorithm 3.

6 Sparse tensor implementation

In this section, we propose a sparse tensor implementation of the map and cost variables introduced in Sect. 4. The region explored during a single navigation trajectory is usually a small subset of the full environment due to the agent’s limited sensing range. The map and cost variables \(\varvec{h}_t\), \(\varvec{g}_t\), \(c_t(\varvec{x},\varvec{u})\) thus contain many 0 elements corresponding to “free” space or unexplored regions, and only a small subset of the states in \(c_t(\varvec{x},\varvec{u})\) is queried during planning and parameter optimization in Sect. 5. Representing these variables as dense matrices therefore wastes both computation and memory. Instead, we propose an implementation of the map encoder and cost encoder that exploits the sparse structure of these matrices. Choy et al. (2019) developed the Minkowski Engine, an automatic differentiation neural network library for sparse tensors. This library suits our setting because we require automatic differentiation for operations among the variables \(\varvec{h}_t\), \(\varvec{g}_t\), \(c_t\) in order to learn the cost parameters \(\varvec{\theta }\).

During training, we pre-compute the variable \(\delta p_{t,l,j}\) over all points \(\varvec{p}_l\) from a point cloud \(\varvec{P}_t\) and all grid cells \(m^j\). This results in a matrix \(\varvec{R}_t \in \mathbb {R}^{K\times J}\) where the entry corresponding to cell \(m^j\) stores the vector \(\varvec{y}_l \delta p_{t,l,j}\) (see Note 1). The matrix \(\varvec{R}_t\) is then converted to COOrdinate list (COO) format (Tew, 2016), specifying the nonzero indices \(\varvec{C}_t \in \mathbb {R}^{N_{nz} \times 1}\) and their feature values \(\varvec{F}_t \in \mathbb {R}^{N_{nz} \times K}\), where \(N_{nz} \ll J\) if \(\varvec{R}_t\) is sparse. To construct \(\varvec{C}_t\) and \(\varvec{F}_t\), we append the nonzero features \(\varvec{y}_l\delta p_{t,l,j}\) to \(\varvec{F}_t\) and their coordinates j in \(\varvec{R}_t\) to \(\varvec{C}_t\). The inverse observation model log-odds \(\varvec{g}_t\) can be computed from \(\varvec{C}_t\) and \(\varvec{F}_t\) via (11) and represented in COO format as well. Hence, a sparse representation of the semantic occupancy log-odds \(\varvec{h}_t\) can be obtained by accumulating \(\varvec{g}_t\) over time via (13).
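The COO construction can be sketched in plain Python. The function name `coo_from_points` is hypothetical, and summing the contributions of several points that fall in the same cell is an assumption made here (sparse tensor libraries differ in how they merge duplicate coordinates); the paper builds these arrays for the Minkowski Engine instead.

```python
from collections import defaultdict


def coo_from_points(contribs, num_classes):
    """Return coordinates C_t and features F_t for the nonzero cells of R_t.

    contribs: iterable of (j, feature) pairs, where feature is the length-K
    vector y_l * delta p_{t,l,j} contributed by point l to grid cell j.
    Contributions that land in the same cell are summed (an assumption).
    """
    acc = defaultdict(lambda: [0.0] * num_classes)
    for j, feat in contribs:
        for k, v in enumerate(feat):
            acc[j][k] += v
    coords = sorted(j for j, row in acc.items() if any(row))  # drop all-zero rows
    feats = [acc[j] for j in coords]
    return coords, feats


# Three points hit two cells of a grid that may have millions of cells.
C, F = coo_from_points([(42, [1.0, 0.0]), (7, [0.0, 1.0]), (42, [1.0, 0.0])], num_classes=2)
# C == [7, 42], F == [[0.0, 1.0], [2.0, 0.0]]
```

Only \(N_{nz}\) rows are stored, independently of the number of grid cells J.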

We use the sparse tensor operations (e.g., convolution, batch normalization, pooling, etc.) provided by the Minkowski Engine in place of their dense tensor counterparts in the cost encoder defined in Sect. 4.2. For example, the convolution kernel does not slide sequentially over each entry in a dense tensor but is defined only over the indices in \(\varvec{C}_t\), skipping computations at the 0 elements. To ensure that the sparse tensors are compatible in the backpropagation step of the cost parameter learning (Sect. 5.2), the analytic subgradient in (18) should also be provided in sparse COO format. We implement a custom operation in which the forward function computes the cost-to-go \(Q_t(\varvec{x}_t, \varvec{u}_t)\) from \(c_t(\varvec{x}, \varvec{u})\) via Algorithm 1 and the backward function multiplies the sparse matrix \(\frac{\partial Q_t(\varvec{x}_t,\varvec{u}_t)}{\partial c_t(\varvec{x},\varvec{u})}\) with the previous gradient in the computation graph, \(\frac{\partial \mathcal {L}(\varvec{\theta })}{\partial Q_t(\varvec{x}_t,\varvec{u}_t)}\), to get \(\frac{\partial \mathcal {L}(\varvec{\theta })}{\partial c_t(\varvec{x},\varvec{u})}\). The output gradient \(\frac{\partial \mathcal {L}(\varvec{\theta })}{\partial c_t(\varvec{x},\varvec{u})}\) is then backpropagated through the operations defined in Sects. 4.2 and 4.1 to update the cost parameters \(\varvec{\theta }\).
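What the backward function of the custom planning operation computes can be shown without any framework: the sparse subgradient \(\partial Q_t(\varvec{x}_t,\varvec{u})/\partial c_t\) (visitation counts, one sparse row per control \(\varvec{u}\)) is multiplied by the upstream gradient \(\partial \mathcal {L}/\partial Q_t(\varvec{x}_t,\varvec{u})\). The dictionary-based function `planning_backward` below is a hypothetical stand-in for the paper's Minkowski Engine implementation.

```python
from collections import defaultdict


def planning_backward(grad_q, mu):
    """Backward pass of the planning layer, kept sparse.

    grad_q[u]: upstream gradient dL/dQ_t(x_t, u) for each control u at x_t.
    mu[u]:     sparse subgradient dQ_t(x_t, u)/dc_t as {(x, u'): visit count}.
    Returns dL/dc_t(x, u') only for pairs on some optimal trajectory; every
    other entry of the gradient is implicitly zero.
    """
    grad_c = defaultdict(float)
    for u, upstream in grad_q.items():
        for key, count in mu[u].items():
            grad_c[key] += upstream * count
    return dict(grad_c)


# Two controls whose optimal trajectories share the pair (1, "r").
grad_q = {"a": 0.5, "b": -0.5}
mu = {"a": {(0, "a"): 1, (1, "r"): 1}, "b": {(0, "b"): 1, (1, "r"): 1}}
grad_c = planning_backward(grad_q, mu)
```

Costs shared by both optimal trajectories receive contributions of opposite sign, which cancel here, so only the first transitions are pushed apart.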

7 MiniGrid experiment

We first demonstrate our inverse reinforcement learning approach in a synthetic minigrid environment (Chevalier-Boisvert et al., 2018). We consider a simplified setting to help visualize and understand the differentiable semantic mapping and planning components (see Note 2). A more realistic autonomous driving setting is demonstrated in Sect. 8.

7.1 Experiment setup

7.1.1 Environment

Grid environments of sizes \(16\times 16\) and \(64\times 64\) are generated by sampling a random number of rectangles of random length with semantic labels from \(\mathcal {K}:= \left\{ \textit{empty}, \textit{wall}, \textit{lava}, \textit{lawn}\right\} \). One such environment is shown in Fig. 9. The agent motion is modeled over a 4-connected grid such that a control \(\varvec{u}_t\) from \(\mathcal {U}:= \left\{ \textit{up}, \textit{down}, \textit{left}, \textit{right}\right\} \) causes a transition from \(\varvec{x}_t\) to one of the four neighboring tiles \(\varvec{x}_{t+1}\). A wall tile is not traversable, and a transition into it does not change the agent’s position.

7.1.2 Sensor

At each step t, the agent receives 72 labeled points \(\varvec{P}_t = \left\{ \varvec{p}_l,\varvec{y}_l\right\} _l\), obtained by ray-tracing a \(360^{\circ }\) field of view at an angular resolution of \(5^{\circ }\) with a maximum range of 3 grid cells and returning the grid location \(\varvec{p}_l\) of the hit point and its semantic class encoded in a one-hot vector \(\varvec{y}_l\). See Fig. 2 for an illustration. The sensing range is smaller than the environment size, making the environment only partially observable at any given time.

7.1.3 Demonstrations

Expert demonstrations are obtained by running a shortest path algorithm on the true map \(\varvec{m}^*\), where the cost of arriving at an empty, wall, lava, or lawn tile is 1, 100, 10, and 0.5, respectively. We generate 10,000, 1000, and 1000 random map configurations for training, validation, and testing, respectively. Start and goal locations are randomly assigned, and maps without a feasible path are discarded. To avoid overfitting, we test with the model parameters that perform best on the validation set.
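A sketch of how such demonstrations could be generated with Dijkstra's algorithm on the true map, charging the arrival cost of the target tile for each control. Because lawn tiles cost 0.5, the Manhattan heuristic would not be admissible here, so no heuristic is used. The function `expert_controls` and the grid encoding are hypothetical; off-grid moves are simply skipped (an assumption), and bumping into a wall costs 100 while leaving the agent in place, so such moves never lie on a shortest path.

```python
import heapq

ARRIVAL_COST = {"empty": 1.0, "wall": 100.0, "lava": 10.0, "lawn": 0.5}
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def expert_controls(grid, start, goal):
    """Shortest control sequence from start to goal on the true map, or None."""
    rows, cols = len(grid), len(grid[0])
    dist, parent = {start: 0.0}, {}
    heap = [(0.0, start)]
    while heap:
        d, x = heapq.heappop(heap)
        if x == goal:
            break
        if d > dist[x]:
            continue  # stale heap entry
        for u, (dr, dc) in MOVES.items():
            r, c = x[0] + dr, x[1] + dc
            if not (0 <= r < rows and 0 <= c < cols):
                continue  # off-grid moves are never useful
            nxt = x if grid[r][c] == "wall" else (r, c)  # walls block motion
            nd = d + ARRIVAL_COST[grid[r][c]]
            if nd < dist.get(nxt, float("inf")):
                dist[nxt], parent[nxt] = nd, (x, u)
                heapq.heappush(heap, (nd, nxt))
    if goal not in dist:
        return None  # infeasible map: discarded, as in the text
    controls, x = [], goal
    while x != start:
        x, u = parent[x]
        controls.append(u)
    return controls[::-1]


grid = [["empty", "lava", "empty"],
        ["empty", "empty", "empty"]]
print(expert_controls(grid, (0, 0), (0, 2)))  # ['down', 'right', 'right', 'up']
```

The expert detours around the lava tile because four empty-tile moves (cost 4) are cheaper than crossing lava (cost 11).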

7.2 Models

7.2.1 DeepMaxEnt

We use the DeepMaxEnt IRL algorithm of Wulfmeier et al. (2016) as a baseline. DeepMaxEnt is an extension of the MaxEnt IRL algorithm (Ziebart et al., 2008) that uses a deep neural network to learn a cost function directly from LiDAR observations. In contrast to our model, DeepMaxEnt does not have an explicit map representation. The cost representation is a multi-scale FCN (Wulfmeier et al., 2016) adapted to the \(16\times 16\) and \(64\times 64\) domains. Value iteration over the cost matrix is approximated by a finite number of Bellman backup iterations, equal to the number of map cells. The original experiments in Wulfmeier et al. (2016) use the mean and variance of the height of 3D LiDAR points in each cell, as well as a binary indicator of cell visibility, as input features to the FCN. Since our synthetic experiments are in 2D, we use the point count in each grid cell instead of the height mean and variance. We consider this a fair adaptation: Wulfmeier et al. (2016) argued that obstacles generally correspond to areas of larger height variance, which in our 2D observations corresponds to more points within obstacle cells. We compare against the original DeepMaxEnt model in Sect. 8.

7.2.2 Ours

Our model takes as inputs the semantic point cloud \(\varvec{P}_t\) and the agent position \(\varvec{x}_t\) at each time step and updates the semantic map probabilities as described in Sect. 4.1. The cost encoder uses two scales of convolution and down(up)-sampling, as introduced in Sect. 4.2. The models are trained using the Adam optimizer (Kingma & Ba, 2014) in PyTorch (Paszke et al., 2019). The neural network model training and online inference during testing are performed on an Intel i7-7700K CPU and an NVIDIA GeForce GTX 1080Ti GPU.

7.3 Evaluation metrics

The following metrics are used for evaluation: negative log-likelihood (NLL) and control accuracy (Acc) for the validation set and trajectory success rate (TSR) and modified Hausdorff distance (MHD) for the test set. Given learned cost parameters \(\varvec{\theta }^*\) and a validation set \(\mathcal {D} = \left\{ (\varvec{x}_{t,n},\varvec{u}_{t,n}^*,\varvec{P}_{t,n}, \varvec{x}_{g,n})\right\} _{t=1, n=1}^{T_n, N}\), policies \(\pi _{t,n}(\cdot \mid \varvec{x}_{t,n}; \varvec{P}_{1:t,n}, \varvec{\theta }^*)\) are computed online via Algorithm 1 at each demonstrated state \(\varvec{x}_{t,n}\) and are evaluated according to:

In the test set, the agent is initialized at the starting pose and iteratively applies control inputs \(\varvec{u}_{t,n} = \mathop {\arg \max }\limits _{\varvec{u}} \pi _{t,n}(\varvec{u} \mid \varvec{x}_{t,n}; \varvec{P}_{1:t,n}, \varvec{\theta }^*)\) as described in Algorithm 3. The agent trajectories can deviate from the expert trajectories, and the agent has to recover from states that were not encountered by the expert. To assess whether the agent eventually reaches the goal, we report the success rate TSR, where success is defined as reaching the goal state \(\varvec{x}_{g,n}\) within twice the number of steps of the expert trajectory. In addition, MHD compares how far the agent trajectories \(\varvec{\tau }_n^A\) deviate from the expert trajectories \(\varvec{\tau }_n^E\):

where \(d(\varvec{\tau }_{t,n}^A, \varvec{\tau }_n^E)\) is the minimum Euclidean distance from the state \(\varvec{\tau }_{t,n}^A\) at time t in \(\varvec{\tau }_n^{A}\) to any state in \(\varvec{\tau }_n^{E}\).
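Both test metrics can be computed per trajectory. The text above only defines the agent-to-expert distance \(d\); this sketch assumes the common symmetric form of the modified Hausdorff distance (the maximum of the two directed mean distances), which may differ from the paper's exact normalization. Trajectories are lists of grid states, and the function names are hypothetical.

```python
import math


def directed_mean(a, b):
    """Mean over states in a of the Euclidean distance to the closest state in b."""
    return sum(min(math.dist(p, q) for q in b) for p in a) / len(a)


def mhd(agent, expert):
    """Modified Hausdorff distance, symmetric form (an assumption here)."""
    return max(directed_mean(agent, expert), directed_mean(expert, agent))


def reached_goal(agent, goal, expert_steps):
    """TSR criterion: the goal appears within twice the expert's number of steps."""
    return goal in agent[: 2 * expert_steps + 1]


expert = [(0, 0), (0, 1), (1, 1)]
agent = [(0, 0), (1, 0), (1, 1)]
```

In this example each directed mean is 1/3, so the MHD is 1/3; TSR is the fraction of test trajectories for which `reached_goal` holds.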

Examples of probabilistic multi-class occupancy estimation, cost encoder output, and subgradient computation. The first column shows the agent in the true environment at different time steps. The second column shows the semantic occupancy estimates of the different cells. The third column shows the predicted cost of arriving at each cell. Note that the learned cost function correctly assigns higher costs (in brighter scale) to wall and lava cells and lower costs (in darker scale) to lawn cells. The last column shows subgradients obtained via (17) during backpropagation to update the cost parameters (Color figure online)

7.4 Results

The results are shown in Table 1. Our model outperforms DeepMaxEnt in every metric. Specifically, the low NLL on the validation set indicates that the map encoder and cost encoder in our model are capable of learning a cost function that matches the expert demonstrations. During testing in unseen environment configurations, our model also achieves a higher trajectory success rate (TSR). In addition, the difference between the agent trajectory and the expert trajectory, as measured by the MHD metric, is smaller.

The outputs of our model components, i.e., map encoder, cost encoder and subgradient computation, are visualized in Fig. 9. The map encoder integrates past observations and holds a correct estimate of the semantic probability of each cell. The subgradients in the last column enable us to propagate the negative log-likelihood of the expert controls back to the cost model parameters. The cost visualizations indicate that the learned cost function correctly assigns higher costs to wall and lava cells (in brighter scale) and lower costs to lawn cells (in darker scale).

7.5 Inference speed

The problem setting in this paper requires the agent to replan at each step when a new observation \(\varvec{P}_t\) arrives and updates the cost function \(c_t\). Our planning algorithm is computationally efficient because it searches only through a subset of promising states to obtain the optimal cost-to-go \(Q_t(\varvec{x}_t,\varvec{u}_t)\). On the other hand, the value iteration in DeepMaxEnt has to perform Bellman backups on the entire state space even though most of the environment is not visited and the cost in these unexplored regions is inaccurate. Table 2 shows the average inference speed to predict a new control \(\varvec{u}_t\) at each step during testing.

8 CARLA experiment

Building on the insights developed in the 2D minigrid environment in Sect. 7, we design an experiment in a realistic autonomous driving simulation.

8.1 Experiment setting

8.1.1 Environment

We evaluate our approach using the CARLA simulator (0.9.9) (Dosovitskiy et al., 2017), which provides high-fidelity autonomous vehicle simulation in urban environments. Demonstration data is collected from maps \(\left\{ Town01, Town02, Town03\right\} \), while Town04 is used for validation and Town05 for testing. Town05 includes different street layouts (e.g., intersections, buildings and freeways) and is larger than the training and validation maps.

8.1.2 Sensors

The vehicle is equipped with a LiDAR sensor that has a maximum range of 20 m and a \(360^{\circ }\) horizontal field of view. The vertical field of view ranges from \(0^{\circ }\) (facing forward) to \(-40^{\circ }\) (facing down) with \(5^{\circ }\) resolution. A total of 56000 LiDAR rays are generated per scan \(\varvec{P}_t\), and point measurements are returned only if a ray hits an obstacle (see Fig. 10). The vehicle is also equipped with 4 semantic segmentation cameras that detect 13 different classes, including road, road line, sidewalk, vegetation, car, building, etc. The cameras face front, left, right, and rear, each capturing a \(90^{\circ }\) horizontal field of view (see Fig. 10). The semantic label of each LiDAR point is retrieved by projecting the point into the image plane of a camera and querying the pixel value in the segmented image.

8.1.3 Demonstrations

In each map, we collect 100 expert trajectories by running an autonomous navigation agent provided by the CARLA Python API. On the graph of all available waypoints, the expert samples two waypoints as start and goal and searches for the shortest path as a list of waypoints. The expert uses a PID controller to generate a smooth and continuous trajectory connecting the waypoints on the shortest path. The expert respects traffic rules, such as staying on the road and keeping to the current lane. The ground plane is discretized into a \(256\times 256\) grid of 0.5 m resolution. Expert trajectories that do not fit in the given grid size are discarded. For planning purposes, the agent motion is modeled over a 4-connected grid with control space \(\mathcal {U}:= \left\{ \textit{up}, \textit{down}, \textit{left}, \textit{right}\right\} \). A planned sequence of such controls is followed using the CARLA PID controller. Simulation features not related to the experiment are disabled, including spawning other vehicles and pedestrians, changing traffic signals and weather conditions, etc. Designing an agent that understands more complicated environment settings with other moving objects and changing traffic lights is left for future research.

8.2 Models and metrics

8.2.1 DeepMaxEnt

We again use the DeepMaxEnt IRL algorithm (Wulfmeier et al., 2016) with a multi-scale FCN cost encoder as a baseline. Unlike in the 2D experiment in Sect. 7, we use the input format from the original paper. Specifically, observed 3D point clouds are mapped into a 2D grid with three channels: the mean and variance of the height of the points, and a cell visibility indicator. This model does not use the point cloud semantic labels.

8.2.2 DeepMaxEnt + Semantics

The input features are augmented with additional channels that contain the number of points in a cell of each particular semantic class. This model uses the additional semantic information but does not explicitly map the environment over time.

8.2.3 Ours

We ignore the height information in the 3D point clouds \(\varvec{P}_{1:t}\) and maintain a 2D semantic map. The cost encoder is a two-scale convolution and down(up)-sampling neural network, described in Sect. 4.2. Additionally, our model is implemented using sparse tensors, described in Sect. 6, to take advantage of the sparsity in the map \(\varvec{h}_t\) and cost \(c_t\). The models are implemented using the Minkowski Engine (Choy et al., 2019) and the PyTorch library (Paszke et al., 2019) and are trained with the Adam optimizer (Kingma & Ba, 2014). The neural network training and the online inference during testing are performed on an Intel i7-7700K CPU and an NVIDIA GeForce GTX 1080Ti GPU.

8.2.4 Metrics

The metrics, NLL, Acc, TSR, and MHD, introduced in Sect. 7.3, are used for evaluation.

Examples of semantic occupancy estimation and cost encoding during different steps in a test trajectory marked in red (also see Extension 1). The left column shows the most probable semantic class of the map encoder and the right column shows the cost to arrive at each state. Our model correctly distinguishes the road from other categories (e.g., sidewalk, building, etc.) and assigns lower cost to the road than to sidewalks (Color figure online)

8.3 Results

Table 3 shows the performance of our model in comparison to DeepMaxEnt and DeepMaxEnt + Semantics. Our model generates the policies closest to the expert demonstrations in the validation map Town04, scoring best in the NLL and Acc metrics. During testing in map Town05, the models predict controls online at each step to generate the agent trajectory. Ours achieves the highest success rate of reaching the goal without hitting sidewalks and other obstacles. Among the successful trajectories, Ours is also closest to the expert, achieving the minimum MHD. The results demonstrate that the map encoder captures both geometric and semantic information, allowing accurate cost estimation and generation of trajectories that match the expert behavior. Figure 11 shows an example of a generated trajectory during testing in the previously unseen Town05 environment (also see Extension 1). The map encoder predicts correct semantic class labels for each cell and the cost encoder assigns higher costs to sidewalks than to the road. We notice that the addition of semantic information actually degrades the performance of DeepMaxEnt. We conjecture that the increase in the number of input channels, due to the addition of the number of LiDAR points per category, makes the convolutional layers prone to overfitting the training set and generalizing poorly to the validation and test sets. Additional examples of agent trajectories and semantic mapping predictions are given in Online Resource 1. We also report a runtime analysis for test-time model inference in Table 4. Each time step is divided into (1) simulator update, where the agent is set at new states and image and LiDAR observations are generated, (2) data preprocessing, where semantic labels are retrieved for point clouds and data are moved to the GPU, and (3) model inference.

8.4 Evaluation with dynamic obstacles

Two scenarios with dynamic obstacles. Left column (scenario 1): the agent vehicle (blue) stays in its own lane when overtaking the NPC vehicle (red) in the left lane. Right column (scenario 2): when the NPC and agent vehicles are spawned in the same lane, the agent switches to the left lane to overtake (Color figure online)

In this section, we study the effects of dynamic obstacles in the scene on our model’s map and cost encoders. We create three different scenarios in which the agent vehicle has to overtake a slower non-player character (NPC) vehicle. In Scenario 1, the NPC is spawned in the lane to the left of the agent and 20 m ahead. The agent is expected to stay in its own lane when overtaking the NPC. In Scenario 2, the NPC is spawned in the same lane as the agent and 20 m ahead. The agent has to move to the left lane to overtake the NPC. Scenario 3 is a mixture of the first two, where the NPC could be either in the same lane as the agent or in the lane to its left. A visualization of the first two scenarios is shown in Fig. 12. Training and evaluation are conducted in the Town05 map since it contains multi-lane streets, while the other maps contain mostly single-lane streets. We sample 100 trajectories for testing within the top-left quadrant of the map and 200 trajectories for training from the other quadrants, as illustrated in Fig. 13. We train our model in each of the three scenarios and test it in the same scenario in which it was trained. Each trajectory is discretized on a \(128\times 128\) grid of 1 m resolution.

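To capture the most recent information about the dynamic NPC vehicle, we multiply the grid log-odds by a decay rate \(\gamma \in \left\{ 1.0, 0.9, 0.8, 0.7\right\} \) at each update, i.e., the previous log-odds \(\varvec{h}_{t-1,j}\) in (13) are replaced by \(\gamma \varvec{h}_{t-1,j}\) before the new observation log-odds \(\varvec{g}_{t,j}\) are accumulated.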

The map encoder is the same as in previous experiments when \(\gamma = 1.0\), while for \(\gamma < 1\) information from past observations is gradually forgotten. Note that we use the same decay rate across all semantic classes since we do not assume prior knowledge of which classes are dynamic or static. Alternatively, it is possible to use a different decay rate for each class, \(h_{t-1,j}^k \rightarrow \gamma ^k h_{t-1,j}^k\), or to set \(\gamma ^k\) as a learnable parameter optimized with the overall objective in (5). The semantic probabilities and cost encoder outputs of the same trajectory with different decay rates are shown in Fig. 14.
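The effect of the decay rate on a single cell can be illustrated numerically. This sketch assumes that (13) is the additive log-odds update \(\varvec{h}_{t,j} = \varvec{h}_{t-1,j} + \varvec{g}_{t,j}\), that a cell no longer observed receives zero new evidence (\(\varvec{g}_{t,j} = \varvec{0}\)), and that class probabilities are a softmax of the log-odds vector; these are simplifications for illustration, not the paper's exact map encoder.

```python
import math


def decay_update(h_prev, g_t, gamma):
    """Per-class update h_t = gamma * h_{t-1} + g_t (assumed form of (13) with decay)."""
    return [gamma * a + b for a, b in zip(h_prev, g_t)]


def probs(h):
    """Class probabilities from a log-odds vector via a softmax (assumed normalization)."""
    m = max(h)
    e = [math.exp(v - m) for v in h]
    s = sum(e)
    return [v / s for v in e]


# A cell with classes [free, car] was seen once with evidence for "car"; the NPC
# then drives away and the cell receives no new evidence for five steps.
h_static, h_decay = [0.0, 2.0], [0.0, 2.0]
for _ in range(5):
    h_static = decay_update(h_static, [0.0, 0.0], gamma=1.0)
    h_decay = decay_update(h_decay, [0.0, 0.0], gamma=0.7)
```

With \(\gamma = 1\) the cell keeps a "car" probability of about 0.88, whereas with \(\gamma = 0.7\) it decays to about 0.58, moving toward the uninformed value of 0.5.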

We report the results of our model with different decay rates in each scenario in Table 5. In addition to the TSR and MHD metrics, we report the collision rate (CR) between the agent and the NPC vehicles in the test trajectories. We find that CR is higher in Scenario 2 than in Scenario 1, which is expected as lane changing is a harder task when a moving NPC vehicle is present. The performance in the mixed scenario is on par with that in Scenario 2, suggesting that our policy class in (4) may not effectively capture a multi-modal distribution in the demonstrated behaviors. Within each scenario, we find that the model generally works better when the decay rate \(\gamma \) is close to 1.0. We suspect that since both vehicles are moving forward in the same direction, it does not hurt to map the NPC’s past locations. However, when \(\gamma \) is small, forgetting the NPC’s history makes its semantic probability smaller (as shown in Fig. 14), and thus the agent has a higher chance of colliding with the NPC vehicle. Finally, we find that MHD is consistent across all settings, which shows that successful agent trajectories stay close to the expert’s.

8.5 Evaluation with noisy semantic observations

In this section, we study how noisy semantic observations can affect downstream cost prediction and policy inference. First, we consider noise added to the contours of each segmentation region. We replace each pixel value in the original \(600\times 800\) semantic segmentation image with a random pixel within its local \(5\times 5\) pixel window. This makes the segment boundaries blurry while the interior of each semantic region is unchanged (see Fig. 15b). With this noise model, only \(0.2\%\) of the LiDAR points are labeled incorrectly. We also consider two additional noise models in which \(2\%\) and \(20\%\) of all pixels are randomly changed to an incorrect label chosen among the remaining semantic labels (see Fig. 15c, d).
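The two perturbations can be sketched on a label image stored as a list of rows. Clamping the \(5\times 5\) window at the image border and the function names `boundary_noise` and `relabel_noise` are assumptions of this sketch; in the experiments the images are \(600\times 800\) CARLA segmentation outputs.

```python
import random


def boundary_noise(img, window=5, rng=random):
    """Replace every pixel by a random pixel from its window x window neighborhood.

    Pixels farther than window // 2 from a segment boundary keep their label.
    Indices are clamped at the image border (an assumption).
    """
    h, w, r = len(img), len(img[0]), window // 2
    return [[img[min(max(i + rng.randint(-r, r), 0), h - 1)]
                [min(max(j + rng.randint(-r, r), 0), w - 1)]
             for j in range(w)] for i in range(h)]


def relabel_noise(img, frac, labels, rng=random):
    """Assign a different, uniformly chosen label to a fraction of all pixels."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for idx in rng.sample(range(h * w), round(frac * h * w)):
        i, j = divmod(idx, w)
        out[i][j] = rng.choice([k for k in labels if k != img[i][j]])
    return out


# Two vertical regions: label 0 on the left half, label 1 on the right half.
rng = random.Random(0)
img = [[0] * 5 + [1] * 5 for _ in range(10)]
blurred = boundary_noise(img, rng=rng)
flipped = relabel_noise(img, 0.2, labels=[0, 1, 2], rng=rng)
```

Only the columns within two pixels of the boundary can change under the first model, while the second model corrupts exactly 20 of the 100 pixels regardless of their location.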

We find that these noise models have very little influence on the policy inference. To understand this, we study how much the map encoder output changes when using noisy semantic images. We calculate the total variation distance between the semantic map probabilities obtained from the original and perturbed semantic images. Specifically, let \(\mathbb {P}_a:= \mathbb {P}(m^j = k \mid \varvec{x}_{1:T}, \varvec{P}_{1:T})\) be the semantic posterior probability of a trajectory using the original semantic segmentation images and, correspondingly, let \(\mathbb {P}_b\), \(\mathbb {P}_c\), \(\mathbb {P}_d\) denote the posteriors using the perturbed images from Fig. 15. The total variation distance between two discrete probability measures \(\mathbb {P}\) and \(\mathbb {P}'\) over the semantic classes is
\[ \delta (\mathbb {P}, \mathbb {P}') = \frac{1}{2} \sum _{k} \left| \mathbb {P}(k) - \mathbb {P}'(k) \right| , \]
which we evaluate at every grid cell \(m^j\) between \(\mathbb {P}_a\) and each of \(\mathbb {P}_b\), \(\mathbb {P}_c\), \(\mathbb {P}_d\).

Table 6 and Fig. 16 show the maximum and a histogram, respectively, of the total variation across all grid cells. These results show that our map encoder is robust to noise even when \(20\%\) of the labels in the semantic images are wrong.
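The robustness statistic reduces to a per-cell total variation distance followed by a maximum over cells. A minimal sketch, assuming each semantic map is stored as a dictionary from cell to class probability vector (a hypothetical layout):

```python
def total_variation(p, q):
    """delta(P, P') = 1/2 * sum_k |P(k) - P'(k)| for two pmfs over the same classes."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def max_tv(map_a, map_b):
    """Largest per-cell TV distance between two semantic maps {cell: class pmf}."""
    return max(total_variation(map_a[j], map_b[j]) for j in map_a)


clean = {(0, 0): [0.9, 0.1, 0.0], (0, 1): [0.2, 0.8, 0.0]}
noisy = {(0, 0): [0.8, 0.1, 0.1], (0, 1): [0.2, 0.8, 0.0]}
worst = max_tv(clean, noisy)
```

The distance lies in \([0, 1]\): it is 0 for identical distributions and 1 when they put their mass on disjoint classes, so a small maximum means every cell's class distribution barely moved.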

9 Conclusion

This paper introduced an inverse reinforcement learning approach for inferring navigation costs from demonstrations with semantic category observations. Our cost model consists of a probabilistic multi-class occupancy map and a deep fully convolutional cost encoder defined over the class likelihoods. The cost function parameters are optimized by computing the optimal cost-to-go of a deterministic shortest path problem, defining a Boltzmann control policy over the cost-to-go, and backpropagating the log-likelihood of the expert controls with a closed-form subgradient. Experiments in simulated minigrid environments and the CARLA autonomous driving simulator show that our approach outperforms methods that do not encode semantic information probabilistically over time. Our work offers a promising solution for learning complex behaviors from visual observations that generalize to new environments.

Notes

In our experiments, we found that storing only \(\varvec{y}_l\) at the cell \(m^j\) where \(\varvec{p}_l\) lies, instead of along the sensor ray, does not degrade performance.

Our code for the minigrid experiments is open-sourced at https://github.com/tianyudwang/sirl.

References

Abbeel, P., & Ng, A. Y. (2004). Apprenticeship learning via inverse reinforcement learning. In International conference on machine learning (p. 1).

Argall, B. D., Chernova, S., Veloso, M., & Browning, B. (2009). A survey of robot learning from demonstration. Robotics and Autonomous Systems, 57(5), 469–483.

Atkeson, C. G., & Schaal, S. (1997). Robot learning from demonstration. In International conference on machine learning (Vol. 97, pp. 12–20).

Badrinarayanan, V., Kendall, A., & Cipolla, R. (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 39(12), 2481–2495.

Bajcsy, A., Losey, D. P., O’Malley, M. K., & Dragan, A. D. (2017). Learning robot objectives from physical human interaction. In Conference on robot learning.

Baker, C. L., Tenenbaum, J. B., & Saxe, R. R. (2007). Goal inference as inverse planning. In Annual meeting of the cognitive science society (Vol. 29).

Bertsekas, D. (1995). Dynamic programming and optimal control. Athena Scientific.

Brown, D. S., Goo, W., & Niekum, S. (2020). Better-than-demonstrator imitation learning via automatically-ranked demonstrations. In Conference on robot learning (pp. 330–359).

Chen, L., Paleja, R., & Gombolay, M. (2021). Learning from suboptimal demonstration via self-supervised reward regression. In Conference on robot learning.

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., & Adam, H. (2018). Encoder–decoder with atrous separable convolution for semantic image segmentation. In European conference on computer vision (pp. 801–818).

Chevalier-Boisvert, M., Willems, L., & Pal, S. (2018). Minimalistic grid-world environment for OpenAI Gym. https://github.com/maximecb/gym-minigrid. GitHub.

Choy, C., Gwak, J., & Savarese, S. (2019). 4D spatio-temporal convnets: Minkowski convolutional neural networks. In IEEE conference on computer vision and pattern recognition (pp. 3075–3084).

Cohen, T., & Welling, M. (2016). Group equivariant convolutional networks. In International conference on machine learning (pp. 2990–2999).

Cortinhal, T., Tzelepis, G., & Aksoy, E. E. (2020). SalsaNext: Fast, uncertainty-aware semantic segmentation of lidar point clouds for autonomous driving. arXiv preprint arXiv:2003.03653.

Dohan, D., Matejek, B., & Funkhouser, T. (2015). Learning hierarchical semantic segmentations of lidar data. In International conference on 3D vision (pp. 273–281).

Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A., & Koltun, V. (2017). CARLA: An open urban driving simulator. In Proceedings of the 1st annual conference on robot learning (pp. 1–16).

Finn, C., Christiano, P., Abbeel, P., & Levine, S. (2016). A connection between generative adversarial networks, inverse reinforcement learning, and energy-based models. arXiv preprint arXiv:1611.03852.

Finn, C., Levine, S., & Abbeel, P. (2016). Guided cost learning: Deep inverse optimal control via policy optimization. In International conference on machine learning (pp. 49–58).

Fu, J., Luo, K., & Levine, S. (2018). Learning robust rewards with adverserial inverse reinforcement learning. In International conference on learning representations.

Gan, L., Zhang, R., Grizzle, J. W., Eustice, R. M., & Ghaffari, M. (2020). Bayesian spatial kernel smoothing for scalable dense semantic mapping. IEEE Robotics and Automation Letters, 5(2), 790–797.

Ghasemipour, S. K. S., Zemel, R., & Gu, S. (2020). A divergence minimization perspective on imitation learning methods. In Conference on robot learning (pp. 1259–1277).

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. MIT Press.

Gupta, S., Davidson, J., Levine, S., Sukthankar, R., & Malik, J. (2017). Cognitive mapping and planning for visual navigation. In Computer vision and pattern recognition (CVPR).

Haarnoja, T., Tang, H., Abbeel, P., & Levine, S. (2017). Reinforcement learning with deep energy-based policies. In International conference on machine learning (pp. 1352–1361).

Ho, J., & Ermon, S. (2016). Generative adversarial imitation learning. In Advances in neural information processing systems (pp. 4565–4573).

Hornung, A., Wurm, K. M., Bennewitz, M., Stachniss, C., & Burgard, W. (2013). OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Autonomous Robots, 34(3), 189–206.

Ioffe, S., & Szegedy, C. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. In International conference on machine learning (Vol. 37, pp. 448–456).

Jain, A., Sharma, S., Joachims, T., & Saxena, A. (2015). Learning preferences for manipulation tasks from online coactive feedback. The International Journal of Robotics Research, 34, 1296–1313.

Jeon, H. J., Milli, S., & Dragan, A. (2020). Reward-rational (implicit) choice: A unifying formalism for reward learning. Advances in Neural Information Processing Systems, 33, 4415–4426.

Karaman, S., & Frazzoli, E. (2011). Sampling-based algorithms for optimal motion planning. The International Journal of Robotics Research, 30(7), 846–894.

Ke, L., Choudhury, S., Barnes, M., Sun, W., Lee, G., & Srinivasa, S. (2020). Imitation learning as f-divergence minimization. In International workshop on the algorithmic foundations of robotics.

Khan, A., Zhang, C., Atanasov, N., Karydis, K., Kumar, V., & Lee, D. D. (2018). Memory augmented control networks. In International conference on learning representations.

Kingma, D. P., & Ba, J. (2014). Adam: A method for stochastic optimization. In International conference on learning representations.

LaValle, S. (1998). Rapidly-exploring random trees: A new tool for path planning (TR 98-11). Department of Computer Science, Iowa State University.

Levine, S. (2018). Reinforcement learning and control as probabilistic inference: Tutorial and review. arXiv preprint arXiv:1805.00909.

Levine, S., Popovic, Z., & Koltun, V. (2011). Nonlinear inverse reinforcement learning with Gaussian processes. In Advances in neural information processing systems (pp. 19–27).

Levine, S., Finn, C., Darrell, T., & Abbeel, P. (2016). End-to-end training of deep visuomotor policies. The Journal of Machine Learning Research, 17(1), 1334–1373.

Likhachev, M., Gordon, G., & Thrun, S. (2004). ARA*: Anytime A* with provable bounds on sub-optimality. In Advances in neural information processing systems (pp. 767–774).

Lu, C., van de Molengraft, M. J. G., & Dubbelman, G. (2019). Monocular semantic occupancy grid mapping with convolutional variational encoder–decoder networks. IEEE Robotics and Automation Letters, 4(2), 445–452.

Milioto, A., Vizzo, I., Behley, J., & Stachniss, C. (2019). RangeNet++: Fast and accurate lidar semantic segmentation. In IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 4213–4220).

Neu, G., & Szepesvári, C. (2007). Apprenticeship learning using inverse reinforcement learning and gradient methods. In Conference on uncertainty in artificial intelligence (pp. 295–302).

Ng, A. Y., & Russell, S. (2000). Algorithms for inverse reinforcement learning. In International conference on machine learning (pp. 663–670).

Oleynikova, H., Taylor, Z., Fehr, M., Siegwart, R., & Nieto, J. (2017). Voxblox: Incremental 3D Euclidean signed distance fields for onboard MAV planning. In IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 1366–1373).

Pan, Y., Cheng, C.-A., Saigol, K., Lee, K., Yan, X., Theodorou, E. A., & Boots, B. (2020). Imitation learning for agile autonomous driving. The International Journal of Robotics Research, 39(2–3), 286–302.

Papandreou, G., Chen, L.-C., Murphy, K. P., & Yuille, A. L. (2015). Weakly- and semi-supervised learning of a deep convolutional network for semantic image segmentation. In IEEE international conference on computer vision (pp. 1742–1750).

Pastor, P., Hoffmann, H., Asfour, T., & Schaal, S. (2009). Learning and generalization of motor skills by learning from demonstration. In IEEE international conference on robotics and automation (pp. 763–768).

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., & Desmaison, A. (2019). PyTorch: An imperative style, high-performance deep learning library. In Advances in neural information processing systems (pp. 8026–8037).

Rajeswaran, A., Kumar, V., Gupta, A., Vezzani, G., Schulman, J., Todorov, E., & Levine, S. (2018). Learning complex dexterous manipulation with deep reinforcement learning and demonstrations. In Proceedings of robotics: Science and systems (RSS).

Ramachandran, D., & Amir, E. (2007). Bayesian inverse reinforcement learning. In International joint conferences on artificial intelligence organization (Vol. 7, pp. 2586–2591).

Ratliff, N. D., Bagnell, J. A., & Zinkevich, M. A. (2006). Maximum margin planning. In International conference on machine learning (pp. 729–736).

Ross, S., Gordon, G., & Bagnell, D. (2011). A reduction of imitation learning and structured prediction to no-regret online learning. In International conference on artificial intelligence and statistics (pp. 627–635).

Sengupta, S., Sturgess, P., Ladický, L., & Torr, P. H. (2012). Automatic dense visual semantic mapping from street-level imagery. In IEEE/RSJ international conference on intelligent robots and systems (pp. 857–862).

Shor, N. Z. (2012). Minimization methods for nondifferentiable functions (Vol. 3). Springer.

Song, Y. (2019). Inverse reinforcement learning for autonomous ground navigation using aerial and satellite observation data (unpublished master’s thesis). Carnegie Mellon University.

Sun, L., Yan, Z., Zaganidis, A., Zhao, C., & Duckett, T. (2018). Recurrent-OctoMap: Learning state-based map refinement for long-term semantic mapping with 3D-Lidar data. IEEE Robotics and Automation Letters, 3(4), 3749–3756.

Tamar, A., Wu, Y., Thomas, G., Levine, S., & Abbeel, P. (2016). Value iteration networks. In Advances in neural information processing systems (pp. 2154–2162).

Tew, P. A. (2016). An investigation of sparse tensor formats for tensor libraries (unpublished doctoral dissertation). Massachusetts Institute of Technology.

Thrun, S., Burgard, W., & Fox, D. (2005). Probabilistic robotics. MIT Press.

Wang, T., Dhiman, V., & Atanasov, N. (2020a). Learning navigation costs from demonstration in partially observable environments. In IEEE international conference on robotics and automation.

Wang, T., Dhiman, V., & Atanasov, N. (2020b). Learning navigation costs from demonstrations with semantic observations. In Conference on learning for dynamics and control.

Wu, B., Wan, A., Yue, X., & Keutzer, K. (2018). SqueezeSeg: Convolutional neural nets with recurrent CRF for real-time road-object segmentation from 3D lidar point cloud. In International conference on robotics and automation (pp. 1887–1893).

Wulfmeier, M., Wang, D. Z., & Posner, I. (2016). Watch this: Scalable cost-function learning for path planning in urban environments. In IEEE/RSJ international conference on intelligent robots and systems (IROS) (pp. 2089–2095).

Zhu, Y., Wang, Z., Merel, J., Rusu, A., Erez, T., Cabi, S., Tunyasuvunakool, S., Kramar, J., Hadsell, R., de Freitas, N., & Heess, N. (2018). Reinforcement and imitation learning for diverse visuomotor skills. In Robotics: Science and systems.

Ziebart, B. D., Maas, A., Bagnell, J., & Dey, A. K. (2008). Maximum entropy inverse reinforcement learning. In AAAI conference on artificial intelligence (pp. 1433–1438).

Funding

We gratefully acknowledge support from NSF CRII IIS-1755568, ONR SAI N00014-18-1-2828 and NSF No. 2218063.

Author information

Contributions

Conceptualization: TW, NA; Methodology: TW, NA; Software, investigation, and analysis: TW, VD; Writing, review and editing: TW, VD, NA; Supervision: NA.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary file 1 (mp4 86650 KB)

Appendix A: Comparison between Boltzmann and maximum entropy policies

Value functions corresponding to the MaxEnt and Boltzmann policies in the infinite-horizon setting with discount \(\gamma =0.95\). The environment has obstacles only around its outer boundary. The start state is marked in green and the goal in red. The controls at each state are \(\left\{ \textit{right}, \textit{down}, \textit{left}, \textit{up}\right\} \), and the true cost is constant: 0 for arriving at the goal (an absorbing state), 1 for arriving at any other grid state, and infinity for moving into an obstacle on the outer boundary. Darker color indicates a higher cost-to-go to reach the goal (top two rows) or a higher probability of choosing a control (bottom two rows). Although the absolute values of \(Q_{ME}\) and \(Q_{BM}\) differ, both exhibit similar relative differences across the controls, yielding well-performing policies \(\pi _{ME}\) and \(\pi _{BM}\) (Color figure online)
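The grid setting in the caption can be approximated with a short tabular sketch. It iterates the "soft" (log-sum-exp) and "hard" (min) Bellman operators discussed below and forms both policies as \(\pi (\varvec{u} \mid \varvec{x}) \propto \exp (-Q(\varvec{x},\varvec{u}))\). The grid size (5 × 5), the goal location, unit temperature, and the choice that a blocked move leaves the agent in place are assumptions for illustration, not values taken from the figure; the names `transition`, `soft_min`, and `value_iteration` are hypothetical helpers.

```python
import numpy as np

N = 5              # assumed interior grid size (the caption does not state it)
GAMMA = 0.95       # discount factor from the caption
GOAL = (0, N - 1)  # assumed goal cell (top-right corner)
# Controls in the caption's order: right, down, left, up, as (row, col) offsets.
CONTROLS = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def transition(r, c, k):
    """Deterministic next cell and stage cost for control k at cell (r, c)."""
    if (r, c) == GOAL:
        return (r, c), 0.0                    # absorbing goal, zero cost
    nr, nc = r + CONTROLS[k][0], c + CONTROLS[k][1]
    if not (0 <= nr < N and 0 <= nc < N):
        return (r, c), np.inf                 # boundary obstacle: infinite cost
    return (nr, nc), (0.0 if (nr, nc) == GOAL else 1.0)


def build_model():
    """Tabulate next-cell indices and stage costs for every (cell, control)."""
    nxt = np.zeros((N, N, len(CONTROLS), 2), dtype=int)
    cost = np.zeros((N, N, len(CONTROLS)))
    for r in range(N):
        for c in range(N):
            for k in range(len(CONTROLS)):
                nxt[r, c, k], cost[r, c, k] = transition(r, c, k)
    return nxt, cost


def soft_min(q):
    """Numerically stable soft minimum -log sum_u exp(-q) over the last axis."""
    m = q.min(axis=-1)
    return m - np.log(np.exp(-(q - m[..., None])).sum(axis=-1))


def value_iteration(soft, iters=1000):
    """Iterate the soft (MaxEnt) or hard (Boltzmann) Bellman operator."""
    nxt, cost = build_model()
    q = np.zeros((N, N, len(CONTROLS)))
    for _ in range(iters):
        v = soft_min(q) if soft else q.min(axis=-1)
        q = cost + GAMMA * v[nxt[..., 0], nxt[..., 1]]
    return q


def energy_policy(q):
    """Policy proportional to exp(-Q(x, u)) over controls."""
    z = np.exp(-(q - q.min(axis=-1, keepdims=True)))
    return z / z.sum(axis=-1, keepdims=True)


Q_ME = value_iteration(soft=True)
Q_BM = value_iteration(soft=False)
pi_ME, pi_BM = energy_policy(Q_ME), energy_policy(Q_BM)
```

In this sketch the soft values are never larger than the hard ones, since the soft minimum of a vector never exceeds its minimum. At the absorbing goal the soft backup subtracts \(\log 4\) at every step, so \(V_{ME}(\text{goal}) = -\log 4/(1-\gamma )\) while \(V_{BM}(\text{goal}) = 0\). This is one concrete source of the difference in absolute values noted in the caption.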

This appendix compares the MaxEnt expert model of Ziebart et al. (2008) to the expert model proposed in Sect. 3.2. The MaxEnt model has been widely studied in the context of reinforcement learning and inverse reinforcement learning (Haarnoja et al., 2017; Finn et al., 2016; Levine, 2018). In contrast, while a Boltzmann policy is a well-known exploration strategy in reinforcement learning, to the best of our knowledge it has not been used to model expert or learner behavior in inverse reinforcement learning.

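The work of Haarnoja et al. (2017) shows that both a Boltzmann policy and the MaxEnt policy are special cases of an energy-based policy:

\[ \pi (\varvec{u} \mid \varvec{x}) \propto \exp \left( -E(\varvec{x},\varvec{u}) \right) , \tag{A1} \]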

with appropriate choices of the energy function E. We study the two policies in the discounted infinite-horizon setting, as this is the most widely used setting for the MaxEnt model. Extensions to first-exit and finite-horizon formulations are possible. Consider a Markov decision process with finite state space \(\mathcal {X}\), finite control space \(\mathcal {U}\), transition model \(p(\varvec{x}' \mid \varvec{x}, \varvec{u})\), stage cost \(c(\varvec{x},\varvec{u})\), and discount factor \(\gamma \in (0,1)\).
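Because only the energy function distinguishes the two policies, one routine that normalizes \(\exp (-E)\) over the controls covers both. The sketch below, a minimal illustration with hypothetical Q-values at a single state, also checks the standard identity that, for \(\pi \propto \exp (-Q)\), the log-sum-exp soft minimum \(-\log \sum _{\varvec{u}} \exp (-Q(\varvec{x},\varvec{u}))\) equals \(\mathbb {E}_{\pi }[Q] - \mathcal {H}(\pi )\). This identity is what ties the soft backup to an entropy-regularized objective. The helper names are illustrative, not from the paper.

```python
import numpy as np


def energy_policy(energy):
    """pi(u|x) proportional to exp(-E(x,u)), normalized over the last axis."""
    shifted = energy - energy.min(axis=-1, keepdims=True)  # numerical stability
    z = np.exp(-shifted)
    return z / z.sum(axis=-1, keepdims=True)


def soft_min(q):
    """Log-sum-exp soft minimum: -log sum_u exp(-q(u))."""
    m = q.min(axis=-1)
    return m - np.log(np.exp(-(q - m[..., None])).sum(axis=-1))


def entropy(pi):
    """Shannon entropy H(pi) = -sum_u pi(u) log pi(u)."""
    return -(pi * np.log(pi)).sum(axis=-1)


# Hypothetical Q-values for four controls at one state.
q = np.array([1.0, 2.0, 0.5, 3.0])
pi = energy_policy(q)                 # choosing E = Q
expected_minus_entropy = (pi * q).sum() - entropy(pi)
print(soft_min(q), expected_minus_entropy)  # equal up to floating-point error
```

Passing \(Q_{ME}\) or \(Q_{BM}\) as the energy yields \(\pi _{ME}\) or \(\pi _{BM}\), respectively; the lowest-cost control always receives the highest probability.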

Proposition 3

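(Haarnoja et al. (2017, Thm. 1)) Define the maximum entropy Q-value as:

\[ Q_{ME}(\varvec{x},\varvec{u}) = c(\varvec{x},\varvec{u}) + \min _{\pi } \mathbb {E}_{p,\pi }\left[ \sum _{t=1}^{\infty } \gamma ^{t} \Big ( c(\varvec{x}_t,\varvec{u}_t) - \mathcal {H}\big (\pi (\cdot \mid \varvec{x}_t)\big ) \Big ) \,\Big |\, \varvec{x}_0 = \varvec{x}, \varvec{u}_0 = \varvec{u} \right] , \]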

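where \(\mathcal {H}(\pi (\cdot \mid \varvec{x})) = -\sum _{\varvec{u} \in \mathcal {U}} \pi (\varvec{u} \mid \varvec{x}) \log \pi (\varvec{u} \mid \varvec{x})\) is the Shannon entropy of \(\pi (\cdot \mid \varvec{x})\). Then, the maximum entropy policy satisfies:

\[ \pi _{ME}(\varvec{u} \mid \varvec{x}) = \frac{\exp \left( -Q_{ME}(\varvec{x},\varvec{u}) \right) }{\sum _{\varvec{u}' \in \mathcal {U}} \exp \left( -Q_{ME}(\varvec{x},\varvec{u}') \right) }. \]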

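Similarly, define the usual Q-value as:

\[ Q_{BM}(\varvec{x},\varvec{u}) = c(\varvec{x},\varvec{u}) + \min _{\pi } \mathbb {E}_{p,\pi }\left[ \sum _{t=1}^{\infty } \gamma ^{t} c(\varvec{x}_t,\varvec{u}_t) \,\Big |\, \varvec{x}_0 = \varvec{x}, \varvec{u}_0 = \varvec{u} \right] , \]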

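and the Boltzmann policy associated with it as:

\[ \pi _{BM}(\varvec{u} \mid \varvec{x}) = \frac{\exp \left( -Q_{BM}(\varvec{x},\varvec{u}) \right) }{\sum _{\varvec{u}' \in \mathcal {U}} \exp \left( -Q_{BM}(\varvec{x},\varvec{u}') \right) }. \]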

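The value functions \(Q_{ME}\) and \(Q_{BM}\) can be seen as the fixed points of the following Bellman contraction operators:

\[ (\mathcal {T}_{ME} Q)(\varvec{x},\varvec{u}) = c(\varvec{x},\varvec{u}) - \gamma \sum _{\varvec{x}' \in \mathcal {X}} p(\varvec{x}' \mid \varvec{x},\varvec{u}) \log \sum _{\varvec{u}' \in \mathcal {U}} \exp \left( -Q(\varvec{x}',\varvec{u}') \right) , \]

\[ (\mathcal {T}_{BM} Q)(\varvec{x},\varvec{u}) = c(\varvec{x},\varvec{u}) + \gamma \sum _{\varvec{x}' \in \mathcal {X}} p(\varvec{x}' \mid \varvec{x},\varvec{u}) \min _{\varvec{u}' \in \mathcal {U}} Q(\varvec{x}',\varvec{u}'). \]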

In the latter, the Q-values are bootstrapped with a “hard” min operator, while in the former they are bootstrapped with a “soft” min operator given by the log-sum-exp operation. The form of these Bellman equations resembles the on-policy SARSA update and the off-policy Q-learning update in reinforcement learning. Consider temporal difference control with transitions \((\varvec{x}, \varvec{u}, c, \varvec{x}', \varvec{u}')\) using SARSA backups:

\[ Q(\varvec{x},\varvec{u}) \leftarrow Q(\varvec{x},\varvec{u}) + \eta \left( c + \gamma Q(\varvec{x}',\varvec{u}') - Q(\varvec{x},\varvec{u}) \right) , \]

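and Q-learning backups:

\[ Q(\varvec{x},\varvec{u}) \leftarrow Q(\varvec{x},\varvec{u}) + \eta \left( c + \gamma \min _{\varvec{u}'' \in \mathcal {U}} Q(\varvec{x}',\varvec{u}'') - Q(\varvec{x},\varvec{u}) \right) , \]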

where \(\eta \) is a step-size parameter. If we additionally assume that the controls are sampled from the energy-based policy in (A1) defined by Q, the SARSA algorithm specifies the MaxEnt policy, while the Q-learning algorithm specifies the Boltzmann policy.
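The two temporal difference backups differ only in how \(Q(\varvec{x}',\cdot )\) is bootstrapped: SARSA uses the control \(\varvec{u}'\) actually drawn from the energy-based policy, while Q-learning takes the hard minimum. The tabular sketch below applies one backup of each kind to the same transition. The 2-state, 2-control table, the transition, and the values \(\eta = 0.5\), \(\gamma = 0.9\) are hypothetical choices for illustration only.

```python
import numpy as np


def energy_policy(q_row):
    """pi(u|x) proportional to exp(-Q(x,u)) for one state's Q-values."""
    z = np.exp(-(q_row - q_row.min()))
    return z / z.sum()


def sarsa_backup(Q, x, u, c, x_next, u_next, eta, gamma):
    """SARSA: bootstrap with the control u' actually sampled at x'."""
    td_target = c + gamma * Q[x_next, u_next]
    Q[x, u] += eta * (td_target - Q[x, u])


def q_learning_backup(Q, x, u, c, x_next, eta, gamma):
    """Q-learning: bootstrap with the hard min over controls at x'."""
    td_target = c + gamma * Q[x_next].min()
    Q[x, u] += eta * (td_target - Q[x, u])


rng = np.random.default_rng(0)
Q0 = np.array([[1.0, 2.0],
               [0.5, 3.0]])          # hypothetical table: 2 states x 2 controls
x, u, c, x_next = 0, 0, 1.0, 1       # one observed transition (x, u, c, x')
eta, gamma = 0.5, 0.9

u_next = rng.choice(2, p=energy_policy(Q0[x_next]))  # u' ~ pi(. | x')

Q_sarsa, Q_ql = Q0.copy(), Q0.copy()
sarsa_backup(Q_sarsa, x, u, c, x_next, u_next, eta, gamma)
q_learning_backup(Q_ql, x, u, c, x_next, eta, gamma)
```

Since \(Q(\varvec{x}',\varvec{u}') \ge \min _{\varvec{u}''} Q(\varvec{x}',\varvec{u}'')\) for any sampled \(\varvec{u}'\), the SARSA estimate after one backup is never below the Q-learning estimate in this example; only the entry for the visited pair \((\varvec{x},\varvec{u})\) changes.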