Abstract

Modeling law search and retrieval as prediction problems has recently emerged as a predominant approach in legal intelligence. Focusing on the law article retrieval task, we present a deep learning framework named LamBERTa, which is designed for civil-law codes and specifically trained on the Italian civil code. To our knowledge, this is the first study proposing an advanced approach to law article prediction for the Italian legal system based on BERT (Bidirectional Encoder Representations from Transformers). BERT is a learning framework that has recently attracted increasing attention among deep learning approaches, showing outstanding effectiveness in several natural language processing and learning tasks. We define LamBERTa models by fine-tuning an Italian pre-trained BERT on the Italian civil code or on portions of it, treating law article retrieval as a classification task. One key aspect of our LamBERTa framework is that we conceived it to address an extreme classification scenario. This scenario is characterized by a high number of classes, by the few-shot learning problem, and by the lack of test query benchmarks for Italian legal prediction tasks. To solve such issues, we define different methods for the unsupervised labeling of the law articles, which can in principle be applied to any law article code system. We provide insights into the explainability and interpretability of our LamBERTa models. We also present an extensive experimental analysis over query sets of different types, for single-label as well as multi-label evaluation tasks. Empirical evidence has shown the effectiveness of LamBERTa. It has also shown LamBERTa's superiority over widely used deep-learning text classifiers and over a few-shot learner conceived for an attribute-aware prediction task.



1 Introduction

The general purpose of law search is to identify legal authorities that are relevant to a question expressing a legal matter (Dadgostari et al. 2021). Interpretative uncertainty in law has prompted many to model law search as a prediction problem (Dadgostari et al. 2021). This is particularly true of jurisprudential uncertainty, which can directly affect citizens. Ultimately, this would allow lawyers and legal practitioners to explore the possibility of predicting the outcome of a judgment with the aid of computational methods (e.g., the probable sentence in a specific case). This idea is sometimes referred to as predictive justice (Viola 2017). Predictive justice is currently being developed mostly following a statistical-jurisprudential approach: jurisprudential precedents are verified, and future decisions are predicted on their basis. However, legal professionals (Viola 2017) have pointed out several reasons why this approach should not be preferred. Chief among them is that its scope is limited to cases with numerous precedents. This excludes unprecedented cases relating to new regulations that are not yet subject to consolidated jurisprudential guidelines. In fact, the jurisprudential approach is not in line with civil law systems, which are adopted in most European Union states and non-Anglophone countries in the world. It therefore carries a high risk of fallacy (i.e., repetition of errors based on precedents). It also carries a risk of standardization (i.e., if a lawsuit is contrary to many precedents, then no one will propose such a lawsuit).

Viewed as a data-driven artificial-intelligence task, predicting judicial decisions is carried out exclusively on the basis of the available legal corpora and the selected algorithms, as remarked by Medvedeva et al. (2020). The artificial intelligence and law research community has also shown increasing interest in this direction. A key perspective in legal analysis and problem solving lies in the opportunities offered by advanced, data-driven computational approaches based on natural language processing (NLP), data mining, and machine learning (Conrad and Branting 2018).

Predictive tasks in legal information systems have often been addressed as text classification problems. These range from case classification and legal judgment prediction (Nallapati and Manning 2008; Liu and Hsieh 2006; Lin et al. 2012; Aletras et al. 2016; Sulea et al. 2017; Wang et al. 2018; Medvedeva et al. 2020) to legislation norm classification (Boella et al. 2011) and statute prediction (Liu et al. 2015). Early studies focused on statistical textual features and machine learning methods. Subsequently, progress in deep learning methods for text classification (Goodfellow et al. 2016; Goldberg 2017) prompted the development of deep neural network frameworks, such as recurrent neural networks. These frameworks have been used for single-task learning [e.g., charge prediction (Luo et al. 2017; Ye et al. 2018), sentence modality classification (O’Neill et al. 2017; Chalkidis et al. 2018), legal question answering (Do et al. 2017)] or even multi-task learning (e.g., Yang et al. (2019), Zhou et al. (2019)).

More recently, deep pre-trained language models have emerged, showing outstanding effectiveness in several NLP tasks; a prominent example is the Bidirectional Encoder Representations from Transformers (BERT) (Devlin et al. 2019). These models learn contextual language representations, and thus overcome the need for feature engineering, upon which classic sparse vector representation models rely. Nonetheless, they are originally trained on generic-domain corpora, so they should not be directly applied to a domain-specific corpus. The distributional representations (embeddings) of their lexical units may diverge significantly from the nuances and peculiarities expressed in domain-specific texts. This certainly holds for the legal domain as well, where interpreting and relating documents is particularly challenging.

Developing BERT models for legal texts has very recently attracted increasing attention, mostly concerning classification problems (e.g., Rabelo et al. 2019; Chalkidis et al. 2019a; Sanchez et al. 2020; Shao et al. 2020; Chalkidis et al. 2020; Yoshioka et al. 2021; Nguyen et al. 2021). Our research falls into this context, as we propose a BERT-based framework for law article retrieval on civil-law corpora. More specifically, we wanted to benefit from the essential consultation provided by law professionals in our country. For this reason, our proposed framework is fully specified using the Italian Civil Code (ICC) as the target legal corpus. Notably, only a few works have been developed for Italian BERT-based models. Examples are a BERT retrained for various NLP tasks on Italian tweets (Polignano et al. 2019) and a BERT-based masked language model for spelling correction (Puccinelli et al. 2019). However, no study leveraging BERT for the Italian civil-law corpus has been proposed so far.

Our main contributions in this work are summarized as follows:

-

We push forward research on law document analysis for civil law systems, focusing on the modeling, learning and understanding of logically coherent corpora of law articles, using the Italian Civil Code as a case in point.

-

We study the law article retrieval task as a prediction problem based on the deep machine learning paradigm. More specifically, following the latest advances in research on deep neural network models for text data, we propose a deep pre-trained contextualized language model framework, named LamBERTa (Law article mining based on BERT architecture). LamBERTa is designed to fine-tune an Italian pre-trained BERT on the ICC corpora for law article retrieval as prediction, i.e., given a natural language query, predict the most relevant ICC article(s).

-

Notably, we deal with a very challenging prediction task. First, it is characterized by a high number of classes (i.e., hundreds), as many as the number of articles. Second, suitable training sets must be built, since there are no test query benchmarks for Italian legal article retrieval/prediction tasks. This also leads to few-shot learning issues, i.e., learning models that predict the correct class of instances when only a few training examples per class are available. Few-shot learning has been recognized as one of the so-called extreme classification scenarios (Bengio et al. 2019; Chalkidis et al. 2019b). We design our LamBERTa framework to solve such issues based on different schemes of unsupervised training-instance labeling. We originally define these schemes for the ICC corpus, although they can easily be generalized to other law code systems.

-

We address one crucial aspect that typically arises in deep/machine learning models, namely explainability, which is clearly of interest in artificial intelligence and law as well (e.g., Branting et al. 2019; Hacker et al. 2020). In this regard, we investigate the explainability of our LamBERTa models, focusing on how they form complex relationships between textual tokens. We further provide insights into the patterns generated by LamBERTa models through a visual exploratory analysis of the learned representation embeddings.

-

We present an extensive, quantitative experimental analysis of LamBERTa models by considering:

-

six different types of test queries, which vary by originating source, length and lexical characteristics, and include comments about the ICC articles as well as case law decisions from the civil section of the Italian Court of Cassation that contain significant jurisprudential sentences associated with the ICC articles;

-

single-label evaluation as well as multi-label evaluation tasks;

-

different sets of assessment criteria.

The obtained results have shown the effectiveness of LamBERTa and its superiority over two kinds of competitors:
(i) widely used deep-learning text classifiers, which we tested on our different query sets for the article prediction tasks;
(ii) a few-shot learner conceived for an attribute-aware prediction task, which we newly designed based on the availability of ICC metadata.


The remainder of the paper is organized as follows. Section 2 overviews recent works that address legal classification and retrieval problems based on deep learning methods. Section 3 describes the ICC corpus, and Sect. 4 presents our proposed framework for the civil-law article retrieval problem. Sections 5 and 6 are devoted to qualitative investigations on the explainability and interpretability of LamBERTa models. Quantitative experimental evaluation methodology and results are instead presented in Sects. 7 and 8. Finally, Sect. 9 concludes the paper.

2 Related work

Our work belongs to the body of studies that reflect the recent revival of interest in machine learning for text data. In particular, these studies consider the role that deep neural network models can play in artificial intelligence applications across a variety of domains, including the legal one. In this regard, we overview here recent research works that employ deep learning methods to address computational problems in the legal domain, with a focus on classification and retrieval tasks. Note that the latter are major categories in the literature on data-driven legal analysis. Other major categories are entailment and NLP-based information extraction (e.g., named entity recognition, relation extraction, tagging). Chalkidis and Kampas (2019) extensively review these categories, and we refer the interested reader to their work for a broader overview.

Most existing works on deep-learning-based law analysis exploit recurrent neural networks (RNNs) and convolutional neural networks (CNNs), along with the classic multi-layer perceptron (MLP). For instance, O’Neill et al. (2017) use all the above methods for classifying deontic modalities in regulatory texts. They demonstrate the superiority of neural network models over competitive classifiers, including ensemble-based decision trees and large-margin classifiers. Focusing on obligation and prohibition extraction as a particular case of deontic sentence classification, Chalkidis et al. (2018) employ a hierarchical attention-based bidirectional LSTM model. They show the benefits of a model that considers both the sequence of words in each sentence and the sequence of sentences. Branting et al. (2017) consider administrative adjudication prediction in three settings: motion rulings, Board of Veterans' Appeals issue decisions, and World Intellectual Property Organization domain name dispute decisions. They evaluate three prediction approaches: maximum entropy over token n-grams, SVM over token n-grams, and a hierarchical attention network (Yang et al. 2016) applied to the full text. No absolute winner was observed. Nonetheless, the study highlights the benefit of using the feature weights or network attention weights of these predictive models to identify salient phrases in motions or contentions and case facts. Nguyen et al. (2017, 2018) propose several approaches to train long short-term memory (LSTM) and conditional random field (CRF) models. Their goal is to identify two key portions of legal documents, i.e., requisite and effectuation segments, with evaluation on the Japanese civil code and the Japanese National Pension Law dataset. In Chalkidis and Kampas (2019), a major contribution is the development of word2vec skip-gram embeddings trained on large legal corpora (mostly from European, UK, and US legislation).

Note that our work clearly differs from the aforementioned ones in two respects. First, they focus on legal corpora other than Italian civil law articles. Second, they consider machine learning and neural network models that lack the capabilities of pre-trained deep language models.

Hu et al. (2018) address the problem of predicting the final charges from the fact descriptions in criminal cases. They propose to exploit a set of categorical attributes to discriminate among charges (e.g., violence, death, profit purpose, buying and selling). By leveraging these annotations of charges based on representative attributes, the proposed learning framework aims to predict the attributes and charges of a case simultaneously. An attribute attention mechanism is first applied to select, from the facts, the information that is relevant to each particular attribute. This generates attribute-aware fact representations that can be used to predict the label of an attribute under a binary classification task. Then, for the task of charge prediction, the attribute-aware fact representations are aggregated by average pooling. They are then concatenated with the attribute-free fact representations produced by a conventional LSTM neural network. The training objective is twofold, as it minimizes both the cross-entropy loss of charge prediction and the cross-entropy loss of attribute prediction.

It should be noted that the above study was especially designed to deal with two issues. The first is the typical imbalance in the number of cases across charges. The second is distinguishing related or “confusing” charges. In particular, the first aspect corresponds to the challenge of insufficient training data for some charges, as some charges have only a few cases. This is analogous to the few-shot learning scenario in law article prediction. Therefore, in Sect. 8.3, we shall present a comparative evaluation against the method in Hu et al. (2018), adapted to the ICC law article prediction task.

Also in the context of legal judgment prediction, Li et al. (2019) propose a multichannel attention-based neural network model, dubbed MANN. It exploits not only the case facts but also information on the defendant persona. This includes traits that determine criminal liability (e.g., age, health condition, mental status) and criminal records. A two-tier structure enables attention-based sequence encoders to hierarchically model the semantic interactions between different parts of the case description. On datasets of criminal cases in mainland China, MANN has shown improvements over other neural network models for judgment prediction. However, MANN may suffer from the imbalanced classes of prison terms, and it cannot deal with criminal cases with multiple defendants. More recently, Gan et al. (2021) have proposed to inject legal knowledge into a neural network model to improve the performance and interpretability of legal judgment prediction. The key idea is to model declarative legal knowledge as a set of first-order logic rules. These logic rules are integrated end-to-end into a co-attention network based model, i.e., one with bidirectional information flows between facts and claims. The method has been evaluated on a collection of private loan law cases, where each instance consists of a fact description and the plaintiff’s multiple claims. It has shown some advantage over AutoJudge (Long et al. 2019), which models the interactions between claims and fact descriptions via pair-wise attention in a judgment prediction task.

The above two works differ from ours in target corpora and in the problems addressed. Moreover, we do not use any information other than the text of the articles, nor any injected knowledge base.

In the last few years, the Competition on Legal Information Extraction/Entailment (COLIEE) has been an important venue for studies focused on case/statute law retrieval, entailment, and question answering. Most works that appeared in the recent COLIEE editions tackle the retrieval task by using CNNs and RNNs in the entailment phase. These are possibly combined with additional features produced by classic term relevance weighting methods (e.g., TF-IDF, BM25) or statistical topic models (e.g., Latent Dirichlet Allocation). For instance, Kim et al. (2015) propose a binary CNN-based classifier for answering legal queries in the entailment phase. The entailment model introduced by Morimoto et al. (2017) is instead based on an MLP incorporating the attention mechanism. Nanda et al. (2017) adopt a combination of partial string matching and topic clustering for the retrieval task, while combining LSTM and CNN models for the entailment phase. Do et al. (2017) propose a binary CNN model with additional TF-IDF and statistical latent semantic features.

The aforementioned studies differ from ours as they mostly focus on CNN and RNN based neural network models, which are indeed used as competing methods against our proposed deep-pretrained language model framework (cf. Sect. 8.3).

Exploiting BERT for law classification tasks has recently attracted much attention. Besides a study on Japanese legal term correction by Yamakoshi et al. (2019), a few very recent works address sentence-pair classification problems in legal information retrieval and entailment scenarios. Rabelo et al. (2019) propose to combine similarity-based features with BERT fine-tuned to the task of case law entailment on the COLIEE data. In this task, the input is an entailed fragment from a case coupled with a candidate entailing paragraph from a noticed case. Sanchez et al. (2020) employ BERT in its regression form to learn complex relevance criteria to support legal search over news articles. Specifically, the input consists of a query-document pair, and the output is a predicted relevance score. They train BERT either on a combined title-and-summary field of the documents or on the documents' contents. In both cases, BERT outperforms a learning-to-rank approach based on LambdaMART. LambdaMART is equipped with features engineered upon three groups of relevance criteria, namely topical relevance, factual information, and language quality. However, in legal case retrieval, the query case is typically much longer and more complex than common keyword queries. Moreover, relevance between a query case and a supporting case may go beyond general topical relevance. Both factors make it difficult to build a large-scale case retrieval dataset. To address this challenge, Shao et al. (2020) propose a BERT framework that models semantic relationships to infer the relevance between two cases. It does so by aggregating paragraph-level dependencies. To adapt it to the legal scenario, the BERT model is fine-tuned on a relatively small-scale case law entailment dataset. Experiments on the benchmark of the relevant case retrieval task in COLIEE 2019 have shown the effectiveness of the proposed BERT model.

We notice that the above two works require classifying query-document pairs, whereas our models are trained using articles only. These pairs are query and news article in Sanchez et al. (2020), and pairs of case law documents in Shao et al. (2020). At the time of submission of this article, we also became aware of a small number of works presented at COLIEE-2020 (Rabelo et al. 2020). They use BERT to compete in the statute law retrieval and question answering (statute entailment) tasks (Footnote 1). In the statute law retrieval task, the goal is to read a legal bar exam question and retrieve a subset of Japanese civil code articles. These articles are used to judge whether the question is entailed or not. The BERT-based approach has been shown to improve overall retrieval performance. However, a number of questions remain difficult to retrieve, even with BERT. COLIEE-2021 was held in June 2021 (Footnote 2). It saw increased attention to, and development of, BERT-based methods for the statute and case law processing tasks (Yoshioka et al. 2021; Nguyen et al. 2021).

Again, it should be noted that the above works at the COLIEE competitions assume that the training data are pairs of questions and relevant articles. By contrast, our training data instances are derived from articles only. Nonetheless, we recognize that some of the techniques introduced at COLIEE-2021 are worthy of investigation, and we shall delve into them in our future research. These include weighted aggregation of models' predictions and the iterated self-labeling and fine-tuning process.

In addition to the COLIEE competitions, it is worth mentioning the study by Chalkidis et al. (2019a), which provides a threefold contribution. First, they release a new dataset of cases from the European Court of Human Rights (ECHR) for legal judgment prediction, which is larger (about 11.5k cases) than earlier datasets. In this dataset, each case, along with its list of facts, is mapped to the articles violated (if any) and is assigned an ECHR importance score. Second, they use the dataset to evaluate a selection of neural network models on three tasks. These are binary classification (i.e., whether a case violates a human rights article or not), multi-label classification (i.e., which types of violation, if any), and case importance detection. The neural network models outperform an SVM model with bag-of-words features, which was previously used in related work such as Aletras et al. (2016). Third, they propose a hierarchical version of BERT, dubbed HIER-BERT, to overcome BERT's maximum length limitation. HIER-BERT first generates fact embeddings. It then combines them through a self-attention mechanism to produce case embeddings, similarly to a hierarchical attention network model (Yang et al. 2016).

This use of a hierarchical attention mechanism is very interesting, especially when integrated into BERT. It is useful for long legal documents, such as case law documents, since pre-trained language models like BERT are designed with constraints on the tokenized text length. Nonetheless, as we shall discuss later in Sect. 4.4, this limitation does not represent an issue in our proposed framework. One reason is the typical length of ICC articles. Another is the design of our unsupervised training-instance labeling schemes.

3 Data

The Italian Civil Code (ICC) is divided into six, logically coherent books, each in charge of providing rules for a particular civil law theme:

-

Book-1, on Persons and the Family (articles 1-455), contains the rules on the legal capacity of persons, on personality rights, on collective organizations, and on the family;

-

Book-2, on Successions (articles 456-809), contains the rules on succession upon death and on the donation contract;

-

Book-3, on Property (articles 810-1172), contains the rules on ownership and other real rights;

-

Book-4, on Obligations (articles 1173-2059), contains the rules on obligations and their sources, that is, mainly contracts and unlawful acts (the so-called civil liability);

-

Book-5, on Labor (articles 2060-2642), contains the rules on the company in general, on subordinate and self-employed work, on profit-making companies, and on competition;

-

Book-6, on the Protection of Rights (articles 2643-2969), contains the rules on transcription, on evidence, on the debtor’s financial liability and the causes of pre-emption, and on prescription.
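The six books partition the article numbering into contiguous ranges. As a result, mapping an article to its book reduces to a binary search over the first article number of each book. The following is a minimal sketch. The identifier format is an assumption made for illustration: a number, optionally followed by a hyphenated Latin suffix such as "2645-bis". The function name `book_of` is likewise hypothetical.

```python
import bisect

# First article number of Books 2-6, taken from the article ranges listed above.
BOOK_STARTS = [456, 810, 1173, 2060, 2643]
LAST_ARTICLE = 2969


def book_of(article_id: str) -> int:
    """Return the ICC book (1-6) of an article id such as '2043' or '2645-bis'.

    The hyphenated-suffix id format is a hypothetical convention for this sketch.
    """
    number = int(article_id.split("-")[0])  # drop any Latin suffix (bis, ter, ...)
    if not 1 <= number <= LAST_ARTICLE:
        raise ValueError(f"article number out of range: {article_id}")
    # bisect_right counts how many book starts are <= number; Book-1 has index 0.
    return bisect.bisect_right(BOOK_STARTS, number) + 1
```

For example, `book_of("2043")` falls in the 1173-2059 range and therefore returns 4. Variants with Latin suffixes inherit the book of their base article.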

The articles of each book are internally organized into a hierarchical structure based on four levels of division, namely (from top to bottom in the hierarchy): “titoli” (i.e., chapters), “capi” (i.e., subchapters), “sezioni” (i.e., sections), and “paragrafi” (i.e., paragraphs). It should however be emphasized that this hierarchical classification was not meant as a crisp, ground-truth organization of the articles’ contents. Indeed, the topical boundaries of contiguous chapters and subchapters are often blurred. Articles in the same group not only vary in length but can also provide dispositions that are more closely related to articles in other groups.

The ICC is publicly available in various digital formats. From one such source, we extracted the id, title, and content of each article. We then cleaned the text as follows:
- we removed non-ASCII characters;
- we removed numbers and dates;
- we normalized all variants and abbreviations of frequent keywords such as “articolo” (i.e., article), “decreto legislativo” (i.e., legislative decree), and “Gazzetta Ufficiale” (i.e., Official Gazette);
- finally, we lowercased all letters.
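The cleaning steps above can be sketched as a small function. This is a minimal illustration under several assumptions, none of which come from the paper:
- The variant map (`art.`, `d.lgs.`, `g.u.`) is hypothetical, since the exact list of normalized variants is not given.
- The date pattern is hypothetical as well.
- Accented letters are transliterated to their ASCII base letter via Unicode NFKD decomposition rather than deleted outright, which is one possible reading of "cleaned up the text from non-ASCII characters".

```python
import re
import unicodedata

# Hypothetical variant map: the exact abbreviations normalized in the paper are not listed.
KEYWORD_VARIANTS = {
    r"\bart(?:t)?\.\s*": "articolo ",             # "art." / "artt." -> "articolo"
    r"\bd\.\s*lgs\.\s*": "decreto legislativo ",  # "d.lgs." -> "decreto legislativo"
    r"\bg\.\s*u\.\s*": "gazzetta ufficiale ",     # "G.U." -> "gazzetta ufficiale"
}

# Hypothetical date pattern such as 10/03/2005 or 16.3.1942.
DATE_RE = re.compile(r"\b\d{1,2}[/.-]\d{1,2}[/.-]\d{2,4}\b")
NUMBER_RE = re.compile(r"\d+")


def clean_article(text: str) -> str:
    # Decompose accented letters (e.g. "è" -> "e" + combining accent), then drop non-ASCII.
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    # Expand keyword variants case-insensitively, before numbers are stripped.
    for pattern, replacement in KEYWORD_VARIANTS.items():
        text = re.sub(pattern, replacement, text, flags=re.IGNORECASE)
    # Remove dates first (so their separators go too), then any remaining numbers.
    text = DATE_RE.sub(" ", text)
    text = NUMBER_RE.sub(" ", text)
    # Lowercase and collapse the whitespace left behind by the removals.
    text = text.lower()
    return re.sub(r"\s+", " ", text).strip()
```

For instance, `clean_article("Art. 5 del D.Lgs. 10/03/2005 è abrogato.")` yields `"articolo del decreto legislativo e abrogato."`.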

The ICC currently in force was enacted by Royal Decree no. 262 of 16 March 1942, and it consists of 2969 articles. This number actually corresponds to 3225 articles when considering all variants and subsequent insertions, which are designated by Latin-term suffixes (e.g., “bis”, “ter”, “quater”). However, over its history, the ICC was revised several times and subjected to amendments and repeals, i.e., per-article partial or total insertions, modifications, and removals. To date, 2294 articles have been repealed. Table 1 summarizes the main statistics on the preprocessed ICC books.

4 The proposed LamBERTa framework

In this section we present our proposed learning framework for civil-law article retrieval. We first formulate the problem statement in Sect. 4.1 and overview the framework in Sect. 4.2. We present our devised learning approaches in Sect. 4.3. We describe the data preparation and preprocessing in Sect. 4.4, then in Sect. 4.5 we define our unsupervised training-instance labeling methods for the articles in the target corpus. Finally, in Sect. 4.6, we discuss major settings of the proposed framework.

4.1 Problem setting

Our study is concerned with law article retrieval, i.e., finding articles of interest out of a legal corpus that can be recommended as an appropriate response to a query expressing a legal matter.

To formalize this problem, we assume that any query is expressed in natural language and discusses a legal subject that is in principle covered by the target legal corpus (i.e., the ICC, in our context). Moreover, a query is assumed to be free of references to any article identifier in the ICC.

We address the law article retrieval task based on the supervised machine learning paradigm. Given a new, user-provided instance, i.e., a legal question, the goal is to automatically predict the category associated with the posed question. More precisely, we deal with the more general case in which a probability distribution over all the predefined categories is computed in response to a query. The prediction is carried out by a machine learning system trained on a target legal corpus. The documents of this corpus, i.e., the articles, are annotated with the category they belong to. From them, the system learns a computational model, also called a classifier. The classifier is then used to perform predictions on legal queries, exclusively utilizing the textual information contained in the annotated documents.
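To make "a probability distribution over all the predefined categories" concrete, the sketch below turns one score (logit) per category into probabilities with a softmax. It then returns the top-k categories as the retrieved articles. The logits are toy values, and the article ids and the function names `softmax` and `rank_articles` are hypothetical; in practice, the scores would come from the trained classifier.

```python
import math


def softmax(logits):
    """Numerically stable softmax: exponentiate shifted scores and normalize."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def rank_articles(logits, article_ids, k=3):
    """Return the k (article_id, probability) pairs with the highest probability."""
    probs = softmax(logits)
    ranked = sorted(zip(article_ids, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]
```

Returning a ranked list, rather than a single label, also accommodates the multi-label evaluation setting, where more than one article may be relevant to a query.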

4.1.1 Motivations for BERT-based approach

In this respect, our objective is to leverage deep neural-network-based, pre-trained language modeling to solve the law article retrieval task. This has a number of key advantages, summarized as follows.
- First, like other deep neural network models, it totally avoids manual feature engineering. Hence it removes the need for feature selection methods and for feature relevance measures (e.g., TF-IDF).
- Second, like sophisticated recurrent and convolutional neural networks, it models language semantics and non-linear relationships between terms. Unlike those networks and their combinations, however, it can capture subtle and complex lexical patterns, including sequential structure and long-term dependencies. It thus obtains more comprehensive local and global feature representations of a text sequence.
- Third, it incorporates the so-called attention mechanism, which allows a learning model to assign higher weights to text features according to their informativeness or relevance to the learning task.
- Fourth, being a deeply bidirectional Transformer model, it overcomes the main limitations of two kinds of earlier models. The first are early deep contextualized models like ELMo (Peters et al. 2018), whose left-to-right and right-to-left language models are trained independently. The second are decoder-based Transformer models like OpenAI GPT (Radford and Sutskever 2018), which condition only on the left context.
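To make the attention mechanism mentioned in the third point concrete, the snippet below sketches scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. This is the building block of Transformer self-attention. It is a plain-Python illustration with toy vectors. It deliberately omits the learned projections, multiple heads, masking, and everything else a real BERT layer does.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, weight the value vectors by softmax(q . k / sqrt(d_k)).

    Returns the attended outputs and the attention weights for each query.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # attention weights sum to 1
        # Weighted sum of the value vectors, component by component.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights
```

A query vector that is more similar to one key receives a larger weight on that key's value, which is what "assigning higher weight to more relevant features" amounts to in this mechanism.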

4.1.2 Challenges

It should however be noted that using deep pre-trained models like BERT for classification tasks normally requires data annotated with class labels, as for any other supervised machine learning method. Such data are needed to design independent training and testing phases for the classifier. However, such annotated data are not available in our context.

As previously mentioned, in this work we face three main challenges:

-

the first challenge refers to the high number (i.e., hundreds) of classes, which correspond to the number of articles in the ICC corpus, or portion of it, that is used to train a LamBERTa model;

-

the second challenge corresponds to the so-called few-shot learning problem, i.e., dealing with a small number of per-class examples to train a machine learning model, which Bengio et al. recognize as one of the “extreme classification” scenarios (Bengio et al. 2019);

-

the third challenge derives from the unavailability of test query benchmarks for Italian legal article retrieval/prediction tasks. This has prompted us to define appropriate methods for data annotation, and thus for building training sets for the LamBERTa framework. To address this problem, we define novel schemes of unsupervised training-instance labeling. Notably, these schemes are not defined ad hoc for the ICC corpus; rather, they can be adapted to any other law code corpus.

In the following, we overview our proposed deep pre-trained model based framework that is designed to address all the above challenges.

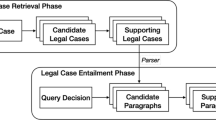

4.2 Overview of the LamBERTa framework

Figure 1 shows the conceptual architecture of our proposed LamBERTa (Law article mining based on BERT architecture). The starting point is a pre-trained Italian BERT model. Its source data consist of a recent Wikipedia dump, various texts from the OPUS corpora collection, and the Italian part of the OSCAR corpus. The final training corpus has a size of 81GB and contains 13,138,379,147 tokens (Footnote 3).

LamBERTa models are generated by fine-tuning the pre-trained Italian BERT model on a sequence classification task (i.e., BERT with a single linear classification layer on top), taking as input the articles of the ICC or a portion of it.

It is worth noting that the LamBERTa architecture is versatile w.r.t. the adopted learning approach and the training-instance labeling scheme for a given corpus of ICC articles. These aspects are elaborated on next.

4.3 Global and local learning approaches

We consider two learning approaches, here dubbed global and local learning, respectively. A global model is trained on the whole ICC, whereas a local model is trained on a particular book of the ICC.Footnote 4 Our rationale underlying this choice is as follows:

-

on the one hand, local models are designed to embed the logical coherence of the articles within a particular book and, although limited to the topical boundaries of that book, they are expected to leverage the multi-faceted semantics underlying a specific civil law theme (e.g., inheritance);

-

on the other hand, books are themselves part of the same law code, and hence a global model might be useful to capture possible interrelations between the individual books; however, by embedding different topic signals from different books (e.g., inheritance in Book-2 vs. labor law in Book-5), it could incur the risk of topical dilution over the entire ICC.

Either type of model is designed to be a classifier at article level, i.e., class labels correspond to the articles in the book(s) covered by the model. Given the one-to-one association between classes and articles, the question arises of how to create suitable training sets for our LamBERTa models. Our key idea is to adopt an unsupervised annotation approach, which is discussed in Sect. 4.5.

4.4 Data preparation

To tailor the ICC articles to the BERT input format, we initially carried out segmentation of the content of each article into sentences. This was then followed by tokenization of the sentences and text encoding, which are described next.

4.4.1 Domain-specific terms injection and tokenization

BERT was trained using WordPiece tokenization. This is an effective way to alleviate the open-vocabulary problem, e.g., in neural machine translation, since a word can be broken down into sub-word units, which are constructed at training time and depend on the corpus the model was initially trained on.

However, when retraining BERT on the ICC articles to learn global as well as local models, some important terms occurring in the legal texts are likely to be missing from the pre-trained vocabulary. Therefore, to make BERT aware of the domain-specific (i.e., legal) terms and to avoid splitting such terms into sub-words, which would disrupt their semantics, we injected a selection of terms from the ICC articles into the pre-existing BERT vocabulary before tokenization.Footnote 5

To select the domain-specific terms to be added, we carried out the following steps: denoting by D the input ICC text (or portion thereof) for the model to learn, we first preprocessed D as described in Sect. 3, then removed Italian stopwords, and finally filtered out overly frequent terms (i.e., those occurring in more than 50% of the articles in D) as well as hapax terms (i.e., terms occurring only once in D). Table 2 reports the number of added tokens and the final number of tokens for each input corpus to our LamBERTa local and global models.
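The term-selection steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: it assumes each article of D is already preprocessed into a list of lowercase tokens, uses a tiny illustrative stopword set in place of a full Italian list, treats a hapax as a term with a single occurrence in D, and adds a hypothetical `existing_vocab` parameter to skip terms the tokenizer already knows. The function name `select_domain_terms` and the toy articles are likewise hypothetical.

```python
from collections import Counter

# Tiny illustrative subset of Italian stopwords (assumption: a real run uses a full list).
ITALIAN_STOPWORDS = {"il", "la", "di", "e", "che", "si", "a", "del", "della", "al", "in"}


def select_domain_terms(articles, stopwords=ITALIAN_STOPWORDS, max_df=0.5, existing_vocab=()):
    """Return candidate legal terms to inject into the tokenizer vocabulary.

    articles: list of articles of D, each a list of preprocessed lowercase tokens.
    """
    n_articles = len(articles)
    term_freq = Counter()  # total occurrences of each term in D
    doc_freq = Counter()   # number of articles containing each term
    for tokens in articles:
        kept = [t for t in tokens if t not in stopwords]
        term_freq.update(kept)
        doc_freq.update(set(kept))

    selected = []
    for term, tf in term_freq.items():
        if tf == 1:                                # hapax: a single occurrence in D
            continue
        if doc_freq[term] / n_articles > max_df:   # overly frequent: in > 50% of articles
            continue
        if term in existing_vocab:                 # already known to the tokenizer
            continue
        selected.append(term)
    return sorted(selected)


# Invented toy articles, not quoted from the ICC.
articles = [
    "la successione si apre al momento della morte".split(),
    "la successione legittima e testamentaria".split(),
    "il testamento e la revocazione del testamento".split(),
    "il legatario e il testamento".split(),
    "il testamento olografo".split(),
]
print(select_domain_terms(articles))
```

If the pipeline is built on Hugging Face `transformers` (the text does not name the toolkit), the selected terms could then be registered with `tokenizer.add_tokens(terms)` followed by `model.resize_token_embeddings(len(tokenizer))`, so that the new vocabulary entries receive embedding rows that are learned during fine-tuning.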

4.4.2 Text encoding

BERT uses a fixed maximum sequence length (usually 512 tokens) for each tokenized text, which implies both padding of shorter sequences and truncation of longer sequences. While padding has no side effects, truncation may cause loss of information.

In learning our models, we wanted to avoid truncation; therefore, we investigated any condition causing it in our input data. We found that truncation is very rare in each book of the ICC, even when the maximum length is reduced to 256 tokens: the only situation that would lead to truncation corresponds to an article sentence that is logically organized in multiple clauses separated by semicolons. In these cases, we treated each of the sub-sentences as one training unit associated with the same article class label.
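The semicolon rule can be illustrated as follows. This is a minimal sketch under stated assumptions: `to_training_units` is a hypothetical helper, a whitespace split stands in for the actual WordPiece tokenizer, two positions are reserved for the CLS and SEP special tokens, and the example sentence is invented rather than quoted from the ICC.

```python
def to_training_units(sentence, tokenize, max_len=256):
    """Return the training units for one article sentence.

    If the sentence fits within max_len tokens (two positions are reserved for
    the [CLS] and [SEP] special tokens), it is kept whole; otherwise it is split
    at semicolons, and each clause becomes a separate unit that keeps the same
    article class label. Clauses are assumed to fit, since the text reports no
    other source of truncation.
    """
    budget = max_len - 2
    if len(tokenize(sentence)) <= budget:
        return [sentence]
    return [clause.strip() for clause in sentence.split(";") if clause.strip()]


# Whitespace splitting stands in for the real WordPiece tokenizer (assumption).
sentence = ("il creditore può chiedere il pagamento; "
            "il debitore può opporsi; il giudice decide")
print(to_training_units(sentence, str.split, max_len=8))
```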

4.5 Methods for unsupervised training-instance labeling

As previously discussed, our LamBERTa classification models are trained so that a one-to-one correspondence holds between the articles in a target corpus and the class labels. Moreover, the entire corpus must be used to train the model so as to embed all the knowledge therein. However, since each article is unique, the general problem we face is how to create as many training instances as possible for each article to train the models effectively.

Our key idea is to select and combine portions of each article to generate its training units. To this aim, we devise different unsupervised schemes for labeling the ICC articles to create the training sets of LamBERTa models. Our schemes adopt different strategies for selecting and combining portions of each article, but they share the requirement of generating a minimum number of training units per article, here denoted as minTU. Moreover, since each article usually consists of a few sentences, and minTU needs to be relatively large (we chose 32 as default value), each scheme implements a round-robin (RR) method that iterates over replicas of the same group of training units per article until at least minTU units are generated.

In the following, we define our methods for unsupervised training-instance labeling of the ICC articles (in square brackets, we indicate the notation that will be used throughout the remainder of the paper):

-

Title-only [T]. This is the simplest, albeit lossy, scheme, which keeps an article’s title while discarding its content; the round-robin block is just the title of the article.Footnote 6

-

n-gram. Each training unit corresponds to n consecutive sentences of an article; the round-robin block starts with the n-gram containing the title and ends with the n-gram containing the last sentence of the article. We set \(n \in \{1,2,3\}\), i.e., we consider a unigram [UniRR], a bigram [BiRR], and a trigram [TriRR] model, respectively.

-

Cascade [CasRR]. The article’s sentences are cumulatively selected to form the training units; the round-robin block starts with the first sentence (i.e., the title), then the first two sentences, and so on until all the article’s sentences are considered to form a single training unit.

-

Triangle [TglRR]. Each training unit is either a unigram, a bigram, or a trigram, i.e., the round-robin block contains all n-grams, with \(n \in \{1,2,3\}\), that can be extracted from the article’s title and description.

-

Unigram with parameterized emphasis on the title [UniRR.T\(^+\)]. The set of training units consists of one subset containing the article’s sentences with round-robin selection, and another subset containing only replicas of the article’s title. More specifically, the two subsets are formed as follows:

-

The first subset has size equal to the maximum of the number of the article’s sentences and the quantity \(m \times mean\_s\), where m is a multiplier (set to 4 as default) and \(mean\_s\) denotes the average number of sentences per article, excluding the title. As reported in Table 1 (sixth column), this mean value lies between 3 and 4 (recall that the title is excluded from the count); therefore, we set \(mean\_s \in \{3,4\}\).

-

The second subset finally contains \(minTU - m \times mean\_s\) replicas of the title.

-

Cascade with parameterized emphasis on the title [CasRR.T\(^+\)] and Triangle with parameterized emphasis on the title [TglRR.T\(^+\)]. These two schemes follow the same approach as UniRR.T\(^+\) except for the composition of the round-robin block, which corresponds to CasRR and TglRR, respectively, with the title left out from this block and replicated in the second block, for each article.
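The labeling schemes can be sketched as follows, treating an article as its title followed by a list of sentences. This is an illustrative reading of the definitions above, not the authors' implementation: the round-robin step replicates the whole block until at least minTU units exist (so no unit of the block is dropped), the helper names are hypothetical, and for CasRR.T\(^+\) and TglRR.T\(^+\) the size of the first subset uses the block length in place of the sentence count, which is our assumption.

```python
import math
from itertools import cycle, islice


def round_robin(block, min_tu=32):
    """Replicate the whole block until at least min_tu training units exist."""
    return block * math.ceil(min_tu / len(block))


def ngram_block(sentences, n):
    """Units of n consecutive sentences, from the n-gram with the title to the last one."""
    if not sentences:
        return []
    if len(sentences) < n:
        return [" ".join(sentences)]
    return [" ".join(sentences[i:i + n]) for i in range(len(sentences) - n + 1)]


def cascade_block(sentences):
    """Cumulative units: first sentence, first two sentences, ..., all sentences."""
    return [" ".join(sentences[:i]) for i in range(1, len(sentences) + 1)]


def triangle_block(sentences):
    """All unigrams, bigrams and trigrams of consecutive sentences."""
    units = []
    for n in (1, 2, 3):
        units.extend(" ".join(sentences[i:i + n]) for i in range(len(sentences) - n + 1))
    return units


def label_article(title, body, scheme, min_tu=32, m=4, mean_s=4):
    """Training units for one article; every unit gets that article's class label.

    title: the article title; body: list of the article's sentences (title excluded).
    """
    sentences = [title] + body
    if scheme == "T":
        return round_robin([title], min_tu)
    if scheme in ("UniRR", "BiRR", "TriRR"):
        n = {"UniRR": 1, "BiRR": 2, "TriRR": 3}[scheme]
        return round_robin(ngram_block(sentences, n), min_tu)
    if scheme == "CasRR":
        return round_robin(cascade_block(sentences), min_tu)
    if scheme == "TglRR":
        return round_robin(triangle_block(sentences), min_tu)
    if scheme in ("UniRR.T+", "CasRR.T+", "TglRR.T+"):
        # The title is left out of the round-robin block and replicated separately.
        block = {"UniRR.T+": ngram_block(body, 1),
                 "CasRR.T+": cascade_block(body),
                 "TglRR.T+": triangle_block(body)}[scheme]
        # For UniRR.T+, len(block) equals the number of body sentences.
        first = list(islice(cycle(block), max(len(block), m * mean_s)))
        second = [title] * max(0, min_tu - m * mean_s)
        return first + second
    raise ValueError(f"unknown scheme: {scheme}")


for scheme in ("T", "UniRR", "BiRR", "TriRR", "CasRR", "TglRR",
               "UniRR.T+", "CasRR.T+", "TglRR.T+"):
    print(scheme, len(label_article("T", ["s1", "s2", "s3"], scheme)))
```

With a toy article made of a title plus three sentences and the default minTU = 32, the title-emphasis variants yield 16 title replicas for \(mean\_s = 4\) and 20 for \(mean\_s = 3\), matching \(minTU - m \times mean\_s\) with m = 4.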

4.6 Learning configuration

An input to LamBERTa is of the form [CLS, \(\langle\)text\(\rangle\), SEP], where CLS (standing for ‘classification’) and SEP are special tokens placed, respectively, at the beginning of each sequence and at the separation of two parts of the input. These two special tokens are associated with two dense vector representations, or embeddings, denoted as \(\hbox {E}_{[\mathrm {CLS}]}\) and \(\hbox {E}_{[\mathrm {SEP}]}\), respectively; analogously, there are as many embeddings \(\hbox {E}_i\) as there are tokens in the input text. Note also that, as discussed in Sect. 4.4, each sequence in a mini-batch is padded to the maximum length (e.g., 256 tokens) in the batch. For each input embedding \(\hbox {E}_i\), an output embedding \(\hbox {T}_i\) is produced, which can be seen as the contextual representation of the i-th token of the input text. By contrast, the final hidden state corresponding to the CLS token, i.e., the output embedding C, captures a high-level representation of the entire text, to be used as input to further layers for any sentence-level task, such as sentence classification. This vector, which by design has size 768, is fed to a single-layer neural network followed by softmax activation, whose output represents the probability distribution over the law articles covered by the model (i.e., those of a book, or of the whole ICC for the global model).
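The classification head described above can be sketched in plain Python. This is a minimal illustration, assuming random stand-in values for C and for the layer weights (in LamBERTa they come from the fine-tuned model); since the output is meant to be a probability distribution over the articles, the logits are normalized with a softmax. The function names and the toy number of classes are hypothetical.

```python
import math
import random


def softmax(logits):
    """Normalize logits into probabilities (max subtracted for numerical stability)."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_cls(c, weights, bias):
    """Single linear layer on the [CLS] output embedding C, followed by softmax.

    c: list of floats (768 values for BERT-base); weights: one row per article class.
    Returns one probability per article class.
    """
    logits = [sum(w * x for w, x in zip(row, c)) + b for row, b in zip(weights, bias)]
    return softmax(logits)


random.seed(0)
hidden, n_articles = 768, 5                                  # toy number of article classes
c = [random.gauss(0.0, 1.0) for _ in range(hidden)]          # stand-in for C
weights = [[random.gauss(0.0, 0.02) for _ in range(hidden)] for _ in range(n_articles)]
bias = [0.0] * n_articles
probs = classify_cls(c, weights, bias)
print("predicted article index:", probs.index(max(probs)))
```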

LamBERTa models were trained using a typical configuration of BERT for masked language modeling, with 12 attention heads and 12 hidden layers, and initial (i.e., pre-trained) vocabulary of 32 102 tokens. Each model was trained for 10 epochs, using cross-entropy as loss function, Adam optimizer and initial learning rate selected within [1e-5, 5e-5] on batches of 256 examples.

It should be noted that our LamBERTa models can be seen as relatively light in terms of computational requirements, since we developed them under the Google Colab GPU-based environment with a limited memory of 12 GB and 12-hour limit for continuous assignment of the virtual machine.Footnote 7

5 Explainability of LamBERTa models based on Attention Patterns

In this section, we begin to look inside the “black box” of LamBERTa models. One important aspect is the explainability of LamBERTa models, which we investigate here by focusing on how complex relationships between tokens are formed.

Like any BERT-based architecture, LamBERTa leverages the Transformer paradigm, which allows for processing all elements simultaneously by forming direct connections between individual elements through a mechanism known as attention. Attention is a way for a model to assign weights to input features (i.e., parts of the text) based on their informativeness or importance to some task. In particular, attention enables the model to understand how words relate to each other in the context of the sentence, by forming composite representations that the model can reason about.
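For reference, the core computation behind each attention head in the standard Transformer formulation is scaled dot-product attention: every token scores all tokens by query-key dot products divided by the square root of the vector size, and a softmax turns those scores into weights. The sketch below is a minimal single-head version in plain Python; for brevity it uses the token vectors directly as queries, keys, and values, whereas BERT first applies learned projection matrices, and the toy vectors are made up. Weight matrices of this kind are what the bertviz views described below draw as lines.

```python
import math


def softmax(row):
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for a single head.

    Q, K, V: lists of d-dimensional vectors, one per token.
    Returns (outputs, weights), where weights[i][j] is how much token i attends to token j.
    """
    d = len(K[0])
    weights = []
    for q in Q:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        weights.append(softmax(scores))
    # Each output is the attention-weighted average of the value vectors.
    outputs = [[sum(w * v[dim] for w, v in zip(w_row, V)) for dim in range(len(V[0]))]
               for w_row in weights]
    return outputs, weights


# Three toy tokens with 2-dimensional vectors (made-up numbers).
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(X, X, X)
for row in w:
    print([round(x, 3) for x in row])
```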

BERT’s attention patterns can take several forms, such as delimiter-focused, bag-of-words, and next-word patterns. Using the bertviz visualization tool,Footnote 8 we show how LamBERTa forms its distinctive attention patterns. For this purpose, we present a selection of examples built upon sentences from Book-2 of the ICC (i.e., relevant to key concepts in inheritance law), which are reported below in both their Italian and English-translated versions.

The attention-head view in bertviz visualizes attention patterns as lines connecting the word being updated (left) with the word(s) being attended to (right), for any given input sequence, where color intensity reflects the attention weight. Figure 2a–c shows noteworthy examples focused on the word “successione” (“succession”), from the following sentences:

from Art. 456: “la successione si apre al momento della morte nel luogo dell’ultimo domicilio del defunto” (i.e., “the succession opens at the moment of death in the place of the last domicile of the deceased person”)

from Art. 737: “i figli e i loro discendenti ed il coniuge che concorrono alla successione devono conferire ai coeredi tutto ciò che hanno ricevuto dal defunto per donazione” (i.e., “the children and their descendants and the spouse who contribute to the succession must give to the co-heirs everything they have received from the deceased person as a donation”)

In Fig. 2a, we observe how the source word is connected to a meaningful, non-contiguous set of words, in particular “apre” (“opens”), “morte” (“death”), and “defunto” (“deceased person”). In Fig. 2b, we observe how “successione” is related to “coniuge” (“spouse”) and “donazione” (“donation”); this set is further enriched in the two-head attention patterns shown in Fig. 2c with “coeredi” (“co-heirs”), “conferire” (“give”), and “concorrono” (“contribute”); moreover, “successione” is still connected to “defunto”. Remarkably, these patterns highlight the model’s ability not only to mine semantically meaningful patterns that are more complex than next-word or delimiter-focused patterns, but also to build patterns that consistently hold across different sentences sharing words. Note that, as shown in our examples, these sentences can belong to different contexts (i.e., different articles) and can vary significantly in length. The latter point is particularly evident in the following example sentences:

from Art. 457: “l’eredità si devolve per legge o per testamento. Non si fa luogo alla successione legittima se non quando manca, in tutto o in parte, quella testamentaria” (i.e., “the inheritance is devolved by law or by will. There is no place for legitimate succession except when the testamentary succession is missing, in whole or in part”)

from Art. 683: “la revocazione fatta con un testamento posteriore conserva la sua efficacia anche quando questo rimane senza effetto perché l’erede istituito o il legatario è premorto al testatore, o è incapace o indegno, ovvero ha rinunziato all’eredità o al legato” (i.e., “the revocation made with a later will retains its effectiveness even when the latter remains without effect because the instituted heir or the legatee predeceased the testator, or is incapable or unworthy, or has renounced the inheritance or the legacy”)

Focusing now on “eredità” (“inheritance”), in Art. 457 we found attention links to “testamento” (“testament”), “legittima” (“legitimate”), and “testamentaria” (“testamentary”), whereas in the long sentence from Art. 683 we again found a link to “testamento” (“testament”) as well as to the related concept “testatore” (“testator”), in addition to connections with “premorto” (“predeceased”), “indegno” (“unworthy”), and “rinunziato” (“renounced”).

We point out that the attention patterns we observed were found to persist across different input sequences, beyond the examples discussed above. This has revealed the ability of LamBERTa models to form different types of patterns, including complex bag-of-words and next-word patterns.

6 Visualization of ICC LamBERTa Embeddings

Fig. 4 Visualization of the ICC article embeddings produced by the LamBERTa global model, transformed onto a 3D t-SNE space. Color coding is the same as in Fig. 3.

Our qualitative evaluation of LamBERTa models is also concerned with the core outcome of the models, that is, the learned embeddings of the input text.

As previously mentioned in Sect. 4.6, for any given input text, a real-valued vector C of length 768 is produced downstream of the 12 layers of a BERT model, corresponding to the input embedding \(\hbox {E}_{[\mathrm {CLS}]}\) of the special token CLS. This output embedding C can be seen as an encoded representation of the input text supplied to the model.

In the following, we present a visual exploratory analysis of LamBERTa embeddings based on a powerful, widely recognized method for the visualization of high-dimensional data, namely t-Distributed Stochastic Neighbor Embedding (t-SNE) (van der Maaten and Hinton 2008). t-SNE is a non-linear dimensionality reduction technique that is highly effective at conveying how the data are arranged in a high-dimensional space. The t-SNE algorithm computes a similarity measure between pairs of instances both in the high-dimensional space and in the low-dimensional space (usually 2D or 3D). It then converts the pairwise similarities into joint probabilities and minimizes the Kullback-Leibler divergence between the joint probabilities of the low-dimensional embedding and those of the high-dimensional data.
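The objective just described can be made concrete with a small sketch. It is a simplified illustration, not a full t-SNE implementation: it uses a single fixed Gaussian bandwidth `sigma` instead of calibrating one per point to a target perplexity, and it only evaluates the cost (there is no gradient-descent optimization). The Student-t kernel for the low-dimensional similarities follows van der Maaten and Hinton (2008); the toy points and all function names are hypothetical.

```python
import math


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def joint_p(X, sigma=1.0):
    """High-dimensional joint probabilities p_ij (symmetrized Gaussian affinities).

    Simplification: one fixed bandwidth sigma for every point, whereas t-SNE
    tunes a per-point bandwidth to match a user-chosen perplexity.
    """
    n = len(X)
    cond = []
    for i in range(n):
        row = [0.0 if j == i else math.exp(-sq_dist(X[i], X[j]) / (2 * sigma ** 2))
               for j in range(n)]
        total = sum(row)
        cond.append([v / total for v in row])  # p_{j|i}
    return [[(cond[i][j] + cond[j][i]) / (2 * n) for j in range(n)] for i in range(n)]


def joint_q(Y):
    """Low-dimensional joint probabilities q_ij (Student-t kernel, 1 degree of freedom)."""
    n = len(Y)
    num = [[0.0 if j == i else 1.0 / (1.0 + sq_dist(Y[i], Y[j])) for j in range(n)]
           for i in range(n)]
    z = sum(map(sum, num))
    return [[v / z for v in row] for row in num]


def kl_divergence(P, Q):
    """KL(P || Q) = sum_ij p_ij * log(p_ij / q_ij), the cost that t-SNE minimizes."""
    return sum(p * math.log(p / q)
               for p_row, q_row in zip(P, Q) for p, q in zip(p_row, q_row) if p > 0)


# Two tight pairs of points in 3D (toy data).
X = [[0.0, 0.0, 0.0], [0.1, 0.0, 0.0], [5.0, 5.0, 5.0], [5.1, 5.0, 5.0]]
P = joint_p(X)
keeps_pairs = [[0.0, 0.0], [0.1, 0.0], [3.0, 3.0], [3.1, 3.0]]
splits_pairs = [[0.0, 0.0], [3.0, 3.0], [0.1, 0.0], [3.1, 3.0]]
print(kl_divergence(P, joint_q(keeps_pairs)), kl_divergence(P, joint_q(splits_pairs)))
```

A 2D layout that keeps the tight pairs together yields a much lower cost than one that separates them, which is the neighbor-preserving behavior discussed next. In practice, a library implementation such as scikit-learn's `sklearn.manifold.TSNE(n_components=3)` handles perplexity calibration and optimization; the text does not state which implementation produced the figures.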

t-SNE is known to provide more interpretable visualizations than Principal Component Analysis (PCA) and other linear dimensionality reduction methods, which cannot capture complex non-linear relationships between features. In particular, by seeking to maximize variance and preserve large pairwise distances, classic PCA focuses on placing dissimilar data points far apart in a lower-dimensional representation; however, to represent high-dimensional data lying on a low-dimensional, non-linear manifold, it is important that similar data points be represented close together. This is ensured by t-SNE, which preserves small pairwise distances or local similarities (i.e., nearest neighbors), so that similar points on the manifold are mapped to nearby points in the low-dimensional representation.

Figure 3 displays 3D t-SNE representations of the article embeddings generated by LamBERTa local models, for each book of the ICC. (Each point represents the t-SNE transformation of the embedding of an article onto a 3D space, while colors distinguish the various books.) In the figure, we show progressive combinations of the results from different books,Footnote 9 while the last chart corresponds to the whole ICC. This presentation is meant to ease the readability of each book’s article embeddings. It can be noted that the LamBERTa local models generate embeddings that allow t-SNE to effectively place nearest neighbors together, with a certain tendency to distribute the points from different books in different subspaces.

Let us now compare the above results with those in Fig. 4, which shows the 3D t-SNE representations of the article embeddings generated by the LamBERTa global model.Footnote 10 From the comparison, we observe a less compact and localized representation in the global model embeddings w.r.t. the local model ones. This is interesting yet expected, since global models are designed to go beyond the boundaries of a book, whereas local models focus on the interrelations between articles in the same book.

It should be noted that while the above remark would suggest preferring local models over global models, at least in terms of interpretability based on visual exploratory analysis, we shall deepen our understanding of the effectiveness of the two learning approaches in the next section, which presents and discusses our extensive, quantitative experimental evaluation of LamBERTa models.

7 Experimental evaluation

In this section we present the methodology and results of an experimental evaluation that we have thoroughly carried out on LamBERTa models. In the following, we first state our evaluation goals in Sect. 7.1, then we define the types of test queries and select the datasets in Sect. 7.2, finally we describe our methodology and assessment criteria in Sect. 7.3.

7.1 Evaluation goals

Our main evaluation goals can be summarized as follows:

-

To validate and measure the effectiveness of LamBERTa models for law article retrieval tasks: how do local and global models perform on different evaluation contexts, i.e., against queries of different type, different length, and different lexicon? (Sect. 8.1)

-

To evaluate LamBERTa models in single-label as well as multi-label classification tasks: how do they perform w.r.t. different assumptions on the article relevance to a query, particularly depending on whether a query is originally associated with or derived from a particular article, or by-definition associated with a group of articles? (Sect. 8.1)

-

To understand how a LamBERTa model’s behavior is affected by varying and changing its constituents in terms of training-instance labeling schemes and learning parameters (Sect. 8.2).

-

To demonstrate the superiority of our classification-based approach to law article retrieval by comparing LamBERTa to other deep-learning-based text classifiers (Sect. 8.3.1) and to a few-shot learner conceived for an attribute-aware prediction task that we have newly designed based on the ICC heading metadata (Sect. 8.3.2).

7.2 Query sets

A query set is here meant as a collection of natural language texts that discuss legal subjects relating to the ICC articles. For evaluation purposes, each query as a test instance is associated with one or more article-class labels.

It should be emphasized that, on the one hand, we cannot devise a training-test split or cross-validation of the target ICC corpus (since we want our LamBERTa models to embed the knowledge from all articles therein), and, on the other hand, there is a lack of query evaluation benchmarks for Italian civil law documents. Therefore, we devise different types of query sets, which are aimed at representing testbeds of varying difficulty for evaluating our LamBERTa models:

-

QType-1 (book-sentence-queries) refers to a set of queries that correspond to randomly selected sentences from the articles of a book. Each query is derived from a single article, and multiple queries may come from the same article.

-

QType-2 (paraphrased-sentence-queries) shares the same composition as QType-1 but differs from it in that the sentences of a book’s articles are paraphrased. To this purpose, we adopt a simple approach based on back-translation via English (i.e., an original sentence in Italian is first translated into English, then the obtained English sentence is translated back into Italian).Footnote 11

-

QType-3 (comment-queries) is defined to leverage the publicly available comments on the ICC articles provided by legal experts through the platform “Law for Everyone”.Footnote 12 Such comments provide annotations about the interpretation of the meanings and legal implications associated with an article, or with particular terms occurring in an article. Each query corresponds to a comment available about one article, which is a paragraph consisting of about 5 sentences on average.

-

QType-4 (comment-sentence-queries) refers to the same source as QType-3, but the comments are split into sentences, so that each query contains a single sentence of a comment. Therefore, each query is associated with a single article, and multiple queries refer to the same article.

-

QType-5 (case-queries) refers to a collection of case law decisions from the civil section of the Italian Court of Cassation, which is the highest court in the Italian judicial system. These case law decisions are selected from publicly available corpora of the most significant judgments associated with the ICC articles, spanning the period 1977–2015.

-

QType-6 (ICC-heading-queries) is defined by extracting the headings of chapters, subchapters, and sections of each ICC book. Such headings are very short, ranging from one to a few keywords used to describe the topic of a particular division of a book.

7.2.1 Characteristics and differences of the query-sets

It should be noted that while QType-1 and QType-6 query sets have the same lexicon as the corresponding book articles, this does not necessarily hold for QType-2 due to the paraphrasing process, and the difference becomes more evident with QType-3, QType-4, and QType-5 query sets. Indeed, the latter not only originate from a corpus different from the ICC, but also have the typical verbosity of annotations or comments about law articles, as well as of case law decisions; moreover, they often provide cross-book references, and therefore QType-3, QType-4, and QType-5 query contents are not necessarily bounded by a book’s context.

Moreover, QType-3, QType-5, and QType-6 differ from the other types in terms of query length, which corresponds to multiple sentences or a paragraph in the case of QType-3 and QType-5, and to one or a few keywords in the case of QType-6. Note that, although derived from the ICC books, the contents of the QType-6 queries were entirely discarded when training our LamBERTa models; moreover, unlike the other types of queries, each QType-6 query is by definition associated with a group of articles (i.e., according to the book divisions) rather than a single article, and therefore we use QType-6 queries for the multi-label evaluation task only. Also, it should be emphasized that QType-5 queries represent a particularly difficult testbed, as they contain real-life, heterogeneous descriptions of case facts and judicial precedents.

Table 3 summarizes the main statistics of the query sets. In addition, note that the percentage of QType-1 queries that were paraphrased (i.e., to produce QType-2 queries) was above 85% for each of the books. Also, the number of sentences in a book’s query set (last column of the upper subtable) corresponds to the number of QType-4 queries for that book.

It is also worth emphasizing that all query sets were validated by legal experts. This is important to ensure not only generic linguistic requirements but also meaningfulness of the query contents from a legal viewpoint.

7.3 Evaluation methodology and assessment criteria

Let us denote with \({\mathcal {C}}\) either a portion (i.e., a single book) of the ICC, or the whole ICC. We specify an evaluation context in terms of a test set (i.e., query set) Q pertinent to \({\mathcal {C}}\) and a LamBERTa model \({\mathcal {M}}\) learnt from \({\mathcal {C}}\). Note that the pertinence of Q w.r.t. \({\mathcal {C}}\) is differently determined depending on the type of query set, as previously discussed in Sect. 7.2.

We consider two multi-class evaluation contexts: single-label and multi-label. In the single-label context, each query is pertinent to only one article, therefore there is only one relevant result for each query. In the multi-label context, each query can be pertinent to more than one article, therefore there can be multiple relevant results for each query.

7.3.1 Single-label evaluation context

We consider a confusion matrix in which the article ids correspond to the classes. Upon this matrix, we measure the following standard statistics for each article \(A_i \in {\mathcal {C}}\): the precision for \(A_i\) (\(P_i\)), i.e., the number of times (queries) \(A_i\) was correctly predicted out of all predictions of \(A_i\), the recall for \(A_i\) (\(R_i\)), i.e., the number of times (queries) \(A_i\) was correctly predicted out of all queries actually pertinent to \(A_i\), the F-measure for \(A_i\) (\(F_i\)), i.e., \(F_i = 2P_iR_i/(P_i+R_i)\). Then, we averaged over all articles to obtain the per-article average precision (P), recall (R), and two types of F-measure: micro-averaged F-measure (\(F^\mu\)) as the actual average over all \(F_i\)s, and macro-averaged F-measure (\(F^M\)) as the harmonic mean of P and R, i.e., \(F^M = 2PR/(P+R)\).
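The single-label statistics can be computed as in the following sketch, which follows the definitions given above, including their naming of \(F^\mu\) (mean of the per-article \(F_i\)) and \(F^M\) (harmonic mean of P and R). Assumptions: one gold and one predicted article id per query, per-article scores set to 0 when undefined, and averaging over the articles that occur as a gold label or a prediction; the function name and the toy labels are invented.

```python
def per_article_scores(gold, pred):
    """Single-label evaluation: one gold and one predicted article id per query.

    Returns (P, R, F_mu, F_M) as defined in the text.
    """
    articles = sorted(set(gold) | set(pred))
    precisions, recalls, fs = [], [], []
    for a in articles:
        tp = sum(1 for g, p in zip(gold, pred) if g == a and p == a)
        n_pred = sum(1 for p in pred if p == a)  # queries for which a was predicted
        n_gold = sum(1 for g in gold if g == a)  # queries actually pertinent to a
        p_i = tp / n_pred if n_pred else 0.0
        r_i = tp / n_gold if n_gold else 0.0
        f_i = 2 * p_i * r_i / (p_i + r_i) if p_i + r_i else 0.0
        precisions.append(p_i)
        recalls.append(r_i)
        fs.append(f_i)
    P = sum(precisions) / len(articles)
    R = sum(recalls) / len(articles)
    F_mu = sum(fs) / len(articles)            # average of the per-article F_i
    F_M = 2 * P * R / (P + R) if P + R else 0.0  # harmonic mean of P and R
    return P, R, F_mu, F_M


gold = ["a1", "a1", "a2", "a3"]   # toy gold article labels, one per query
pred = ["a1", "a2", "a2", "a3"]   # toy top-1 predictions
print(per_article_scores(gold, pred))
```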

In addition, we consider two other criteria that account, respectively, for the top-k predictions and for the position (rank) of the correct article in the predictions. The first aspect is captured as the fraction of correct article labels found in the top-k predictions (i.e., the top-k-probability results in response to each query), averaged over all queries, which is the recall@k (R@k). The second aspect is measured as the mean reciprocal rank (MRR), considering for each query the rank of the correct prediction in the classification probability distribution and averaging over all queries.
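Recall@k and MRR can be sketched as follows, given each query's predicted distribution as a mapping from article id to probability. The helper names are hypothetical, ties are broken by the order of the mapping, and the toy distributions are made up.

```python
def rank_of(gold, probs):
    """1-based rank of the gold article when classes are sorted by probability."""
    order = sorted(probs, key=probs.get, reverse=True)
    return order.index(gold) + 1


def recall_at_k(golds, dists, k):
    """Fraction of queries whose gold article is among the top-k predictions."""
    return sum(1 for g, d in zip(golds, dists) if rank_of(g, d) <= k) / len(golds)


def mean_reciprocal_rank(golds, dists):
    """Average over queries of 1 / rank of the gold article."""
    return sum(1 / rank_of(g, d) for g, d in zip(golds, dists)) / len(golds)


dists = [
    {"a1": 0.7, "a2": 0.2, "a3": 0.1},  # gold a1 ranked first
    {"a1": 0.5, "a2": 0.3, "a3": 0.2},  # gold a2 ranked second
]
golds = ["a1", "a2"]
print(recall_at_k(golds, dists, 1), recall_at_k(golds, dists, 2), mean_reciprocal_rank(golds, dists))
```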

Moreover, we wanted to understand the uncertainty of prediction of LamBERTa models when evaluated over each query: to this purpose, we measured the entropy of the classification probability distribution obtained for each query evaluation, and averaged over all queries; in particular, we distinguished the entropy of each entire distribution (E) from the entropy of the distribution corresponding to the top-k-probability results (E@k).
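The entropy measures can be sketched as follows. Two assumptions are made here because the text does not fix them: the natural logarithm is used, and for E@k the top-k probabilities are renormalized to sum to 1 before computing the entropy. Per-query values would then be averaged over all queries; the function names and toy distributions are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (natural log) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def entropy_at_k(probs, k):
    """Entropy of the top-k probabilities, renormalized to sum to 1 (assumption)."""
    top = sorted(probs, reverse=True)[:k]
    total = sum(top)
    return entropy([p / total for p in top])


confident = [0.97, 0.01, 0.01, 0.01]   # a peaked prediction: low uncertainty
uncertain = [0.25, 0.25, 0.25, 0.25]   # a flat prediction: maximal uncertainty
print(round(entropy(confident), 3), round(entropy(uncertain), 3), round(entropy_at_k(uncertain, 2), 3))
```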

7.3.2 Multi-label evaluation context

The basic requirement for the multi-label evaluation context is that, for each test query, a set of articles is regarded as relevant to the query. In this respect, we consider two different perspectives, depending on whether a query is (i) originally associated with or derived from a particular article, or (ii) by definition associated with a group of articles. We will refer to the former scenario as article-driven multi-label evaluation, and to the latter as topic-driven multi-label evaluation.

Article-driven multi-label evaluation. For this stage of evaluation, we require that, for each test query associated with article \(A_i\), a set of articles related to \(A_i\) is to be selected as the set of articles relevant to the query, together with \(A_i\). We adopt two approaches to determine the article relatedness, which rely on a supervised and an unsupervised grouping of the articles, respectively. The supervised approach refers to the logical organization of the articles of each book originally available in the ICC (cf. Sect. 3), whereas the unsupervised approach leverages a content-similarity-based grouping of the articles produced by a document clustering method.

ICC-classification-based. We exploit the ICC-provided classification metadata of each book to label each article with the terminal division it belongs to. Since a division usually contains a few articles, the same label will be associated with multiple articles. It should be noted that the book hierarchies are quite different from each other, both in terms of maximum branching (e.g., from 5 chapters in Book-2 and Book-6 to 14 chapters in Book-1) and total number of divisions (e.g., from 51 in Book-2 to 135 in Book-4); moreover, sections, subchapters, or even chapters can be leaf nodes in the corresponding hierarchy, i.e., they contain articles without any further division. This leads to the following number of labels for the various books: 50 in Book-1, 40 in Book-2, 47 in Book-3, 112 in Book-4, 94 in Book-5, and 56 in Book-6.

Clustering-based. As concerns the unsupervised, similarity-based approach, we compute a clustering of the set of articles of a given book, so that each cluster corresponds to a group of articles that are similar by content. Each of the produced clusters is regarded as the set of relevant articles for each query whose original label article belongs to that set. Therefore, if a cluster contains n articles, each of the queries originally labeled with any of these n articles will share the same set of n relevant articles.

To perform the clustering of the article set of a given book, we resort to a widely used, well-known document clustering method, which consists of applying a centroid-based partitional clustering algorithm (Jain and Dubes 1988) over a document-term matrix modeling a vectorial bag-of-words representation of the documents over the term feature space, using TF-IDF (term frequency-inverse document frequency) as the term relevance weighting function (Jones 2004) and cosine similarity for document comparison. This method is also known as spherical k-means (Dhillon and Modha 2001). We used a particularly effective and optimized version of this method, called bisecting k-means (Zhao and Karypis 2004), which is a de facto standard among document clustering tools based on the classic bag-of-words model.Footnote 13
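The clustering step can be illustrated with a compact, dependency-free sketch of bisecting spherical k-means over unit-length TF-IDF vectors. It is not the tool referenced in Footnote 13: the idf variant (log of N over document frequency), the choice of always bisecting the largest cluster, the seeding heuristic (a random document plus the document least similar to it), and the fixed number of refinement iterations are all our assumptions, and the function names and toy documents are hypothetical.

```python
import math
import random
from collections import Counter


def tfidf_vectors(docs):
    """Unit-length TF-IDF vectors, stored as sparse {term: weight} dicts."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        weights = {t: c * math.log(n / df[t]) for t, c in Counter(doc).items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        vectors.append({t: w / norm for t, w in weights.items()})
    return vectors


def cosine(u, v):
    """Cosine similarity of unit-length sparse vectors (a plain dot product)."""
    return sum(w * v.get(t, 0.0) for t, w in u.items())


def centroid(vectors):
    """Normalized sum of unit vectors, i.e., a spherical k-means centroid."""
    total = Counter()
    for v in vectors:
        total.update(v)
    norm = math.sqrt(sum(w * w for w in total.values())) or 1.0
    return {t: w / norm for t, w in total.items()}


def split_in_two(idx, vectors, rng, iters=10):
    """Split the documents in idx into two groups with spherical 2-means."""
    first = rng.choice(idx)
    # Second seed: the document least similar to the first (heuristic, assumption).
    second = min((i for i in idx if i != first),
                 key=lambda i: cosine(vectors[i], vectors[first]))
    centroids = [vectors[first], vectors[second]]
    groups = ([first], [i for i in idx if i != first])  # fallback split
    for _ in range(iters):
        new = ([], [])
        for i in idx:
            closer_to_first = cosine(vectors[i], centroids[0]) >= cosine(vectors[i], centroids[1])
            new[0 if closer_to_first else 1].append(i)
        if not new[0] or not new[1]:
            break  # keep the last split with two non-empty groups
        groups = new
        centroids = [centroid([vectors[i] for i in g]) for g in groups]
    return groups


def bisecting_kmeans(docs, k, seed=0):
    """Bisect the largest cluster until k clusters exist (split criterion: size)."""
    vectors = tfidf_vectors(docs)
    rng = random.Random(seed)
    clusters = [list(range(len(docs)))]
    while len(clusters) < k:
        clusters.sort(key=len)
        largest = clusters.pop()
        if len(largest) < 2:  # nothing left to split
            clusters.append(largest)
            break
        left, right = split_in_two(largest, vectors, rng)
        clusters.extend([list(left), list(right)])
    return clusters


docs = [
    "successione testamento erede".split(),
    "testamento erede legato".split(),
    "contratto vendita prezzo".split(),
    "vendita prezzo consegna".split(),
]
print(sorted(sorted(c) for c in bisecting_kmeans(docs, k=2)))
```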

It should be emphasized that our goal here is to induce an organization of the articles that is based on content affinity while discarding any information on the ICC article labeling. In this regard, we have deliberately exploited a representation model that, despite its known inability to capture latent semantic aspects underlying correlations between words, is still an effective baseline, and one that is unbiased with respect to the deep language modeling ability of LamBERTa.

Computation of relevant sets. Let \({\mathcal {P}}\) denote a partitioning of \({\mathcal {C}}\) that corresponds to either the ICC classification of the articles in \({\mathcal {C}}\) (i.e., supervised organization) or a clustering of \({\mathcal {C}}\) (i.e., unsupervised organization). For either approach, given a test query \(q_i \in Q\) with article label A, we take as the relevant article set for \(q_i\) the partition \(P \in {\mathcal {P}}\) that contains A; we then match this set to the set of top-|P| predictions of \({\mathcal {M}}\) to compute precision, recall, and F-measure for \(q_i\). Finally, we average over all queries to obtain overall precision, recall, micro-averaged F-measure, and macro-averaged F-measure.
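The matching procedure can be sketched in Python as follows, assuming that the model returns a ranked list of article identifiers for each query. Since exactly |P| predictions are matched against a relevant set of size |P|, per-query precision and recall coincide whenever the model returns at least |P| distinct articles. The split between micro- and macro-averaged F-measure shown here (pooling hit counts over queries versus averaging per-query F-measures) is one common convention and an assumption of this sketch; the function names are hypothetical.

```python
from typing import Dict, List, Sequence, Set


def relevant_set(partition: List[Set[str]], label: str) -> Set[str]:
    """Return the block P of the partitioning that contains the query's label A."""
    for block in partition:
        if label in block:
            return block
    raise ValueError(f"article {label!r} is not covered by the partitioning")


def query_scores(ranked: Sequence[str], relevant: Set[str]) -> Dict[str, float]:
    """Match the top-|P| predictions against P; return P, R, F and raw counts."""
    top = set(ranked[: len(relevant)])
    hits = len(top & relevant)
    precision = hits / len(top) if top else 0.0
    recall = hits / len(relevant)
    f = 2 * precision * recall / (precision + recall) if hits else 0.0
    return {"P": precision, "R": recall, "F": f,
            "hits": hits, "n_pred": len(top), "n_rel": len(relevant)}


def aggregate(scores: List[Dict[str, float]]) -> Dict[str, float]:
    """Average over queries; micro-F pools counts, macro-F averages per-query F."""
    n = len(scores)
    hits = sum(s["hits"] for s in scores)
    pred_total = sum(s["n_pred"] for s in scores)
    micro_p = hits / pred_total if pred_total else 0.0
    micro_r = hits / sum(s["n_rel"] for s in scores)
    return {
        "P": sum(s["P"] for s in scores) / n,
        "R": sum(s["R"] for s in scores) / n,
        "macro_F": sum(s["F"] for s in scores) / n,
        "micro_F": 2 * micro_p * micro_r / (micro_p + micro_r) if hits else 0.0,
    }
```

The same code serves both the ICC-classification-based and the clustering-based settings; only the `partition` passed to `relevant_set` changes.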

Topic-driven multi-label evaluation. Unlike the previously discussed evaluation stage, here we consider queries that are expressed by one or a few keywords describing the topic associated with a set of articles.

To this end, we again exploit the metadata on article organization available in the ICC books, so that the articles belonging to the same division of a book (i.e., chapter, subchapter, or section) are regarded as the set of articles relevant to the query corresponding to the description of that division. Depending on the type of division chosen, i.e., the level of the book's ICC-classification hierarchy, a different query set is produced for the book.
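A minimal Python sketch of how such a query set could be derived from a book's hierarchy is given below. It assumes a hypothetical `Division` tree whose nodes carry a division type, a heading (used as the query text), and the articles they directly contain; choosing a different `kind` yields a different query set for the same book.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Division:
    """Hypothetical node of a book's ICC hierarchy."""
    kind: str           # e.g. "chapter", "subchapter", "section"
    description: str    # division heading, used as the query text
    articles: List[str] = field(default_factory=list)
    children: List["Division"] = field(default_factory=list)

    def all_articles(self) -> List[str]:
        """Articles contained in this division, including its sub-divisions."""
        out = list(self.articles)
        for child in self.children:
            out.extend(child.all_articles())
        return out


def topic_queries(root: Division, kind: str) -> List[Tuple[str, List[str]]]:
    """One (query text, relevant articles) pair per division of the chosen type."""
    queries = []
    stack = [root]
    while stack:
        node = stack.pop()
        if node.kind == kind:
            queries.append((node.description, node.all_articles()))
        stack.extend(reversed(node.children))  # visit children in document order
    return queries
```

Queries built at chapter level cover more articles each than those built at section level, which is why the query sets differ in granularity.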

Given a test query \(q_i \in Q\) with article labels \(A_{i_1}, \ldots , A_{i_n}\), we match this set to the set of top-n predictions of \({\mathcal {M}}\) to compute precision, recall, and F-measure for \(q_i\). Finally, we average over all queries to obtain overall precision, recall, micro-averaged F-measure, and macro-averaged F-measure. Moreover, we measure the fraction of top-k predictions that are relevant to a query, averaged over all queries, i.e., precision@k (P@k).
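The two measures can be sketched as follows in Python, assuming a ranked list of predicted article identifiers per query; the function names are hypothetical. Note that P@k divides by k, so a query with fewer than k relevant articles cannot reach P@k = 1.

```python
from typing import List, Sequence, Set, Tuple


def top_n_precision(ranked: Sequence[str], relevant: Set[str]) -> float:
    """Match the top-n predictions (n = number of labels) against the labels.

    With n distinct predictions and n labels, precision, recall and
    F-measure all equal hits / n.
    """
    n = len(relevant)
    return len(set(ranked[:n]) & relevant) / n


def precision_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k predicted articles that are relevant to the query."""
    return sum(1 for a in ranked[:k] if a in relevant) / k


def mean_precision_at_k(runs: List[Tuple[Sequence[str], Set[str]]], k: int) -> float:
    """P@k averaged over all queries."""
    return sum(precision_at_k(r, rel, k) for r, rel in runs) / len(runs)
```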

8 Results

We organize the presentation of results into three parts. Section 8.1 describes the comparison between global and local models under both the single-label and multi-label evaluation contexts; note that, for any given test query set, we use the notations G and \(L_i\) to refer to the global model and the local model corresponding to the i-th book, respectively. Section 8.2 is devoted to an ablation study of our models, focusing on the analysis of the various unsupervised data labeling methods and the effect of the training size on the models’ performance. Finally, Sect. 8.3 presents results obtained by competing deep-learning methods.

8.1 Global vs. local models

8.1.1 Single-label evaluation

Table 4 and Fig. 5 compare global and local models, for all books of the ICC, according to the criteria selected for the single-label evaluation context. For the sake of brevity, here we show results for the UniRR.T\(^+\) labeling scheme as a representative case: as we shall discuss in Sect. 8.2, this training-instance labeling scheme is in general the best-performing one for the query sets; nonetheless, analogous findings were drawn using other types of queries and labeling schemes.

Looking at the table, let us first consider the results obtained by the LamBERTa models on QType-1 queries. At first sight, we observe the outstanding scores achieved by all models; this was expected, since QType-1 queries are directly extracted from sentences of the book articles used to train the models. More interesting is the comparison between the local models and the global model: for any query set from the i-th book, the corresponding local model performs significantly better than the global model in most cases. It should be noticed that the slightly better precision of the global model is actually determined by its occasional wrong predictions of articles from other books in place of articles of Book-i.

The superiority of local models over the global one is also confirmed by the entropy results, which are always lower, and hence better, for the local models than for the global model; this indicates that the predictions made by a local model are generally more certain than those made by the global model. Moreover, while the plots in Fig. 5 are representative of QType-1 and QType-4 queries, the above result on the entropy behavior of local versus global models holds equally regardless of the type of query set. Note that, in Fig. 5a and d, the values of entropy are normalized within the interval [0, 1] to get a fair comparison between the local models and the global model (Footnote 14).
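The exact normalization is not detailed in this section; a common choice, assumed in the following Python sketch, divides the Shannon entropy of a model's output distribution by its maximum value log C, where C is the number of classes of that model. This makes entropies comparable between a local model and the global model, which predict over different numbers of articles. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a model's output logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def normalized_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy divided by log(C): 0 = fully certain, 1 = uniform."""
    c = len(probs)
    if c < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(c)
```

For example, a distribution that puts half of the mass on each of two out of four classes gets a normalized entropy of 0.5, whatever the total number of classes would have made the raw entropy.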

Turning back to the results reported in Table 4, we observe that high performance scores are still obtained for the paraphrased-sentence-based queries, which indicates a remarkable robustness of LamBERTa models w.r.t. lexical variants of queries.

Considering now the comment-based queries, both in the paragraph-size (i.e., QType-3) and sentence-size (i.e., QType-4) versions, we observe a much lower performance of the models. This is nonetheless not surprising, since QType-3 and QType-4 queries are lexically and linguistically different from the ICC contents (cf. Sect. 7.2). Besides that, local models prove to be consistently better than the global model, despite the cross-corpus learning ability of the latter which, in principle, could be beneficial for queries that may contain references to articles of different books. One exception corresponds to Book-5: as confirmed by a qualitative inspection by the legal experts who assisted us, this is due to the queries for Book-5 showing a more pronounced tendency to broadly cover subjects of articles from other books. This is also supported by quantitative statistics on the uniqueness of the terms in a book's vocabulary: in fact, we found that the percentage of a book's vocabulary terms that are unique to that book is lowest for Book-5, whereas it is 39% for Book-1 and Book-2, 48% for Book-3, 43% for Book-4, and 36% for Book-6. A further interesting remark can be drawn from the comparison of the QType-3 scores with the QType-4 scores: these appear to be generally higher for the former queries, which provide more topical context than sentence-size queries. This would hence suggest that relatively long queries can be handled effectively by LamBERTa models.
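The uniqueness statistic can be computed as sketched below, assuming each book's vocabulary is already available as a set of terms (how terms are extracted, e.g., tokenization and stop-word removal, is not specified here). The function name is hypothetical.

```python
from typing import Dict, Set


def unique_vocabulary_share(vocabularies: Dict[str, Set[str]]) -> Dict[str, float]:
    """Percentage of each book's vocabulary that appears in no other book."""
    shares: Dict[str, float] = {}
    for book, vocab in vocabularies.items():
        # Union of the vocabularies of all the other books.
        others = set().union(*(v for b, v in vocabularies.items() if b != book))
        shares[book] = 100.0 * len(vocab - others) / len(vocab) if vocab else 0.0
    return shares
```

A low value means that most of a book's terms also occur elsewhere in the code, which is consistent with queries on that book touching subjects of other books.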

Notably, the above finding on the ability to handle long, contextualized queries is also confirmed by inspecting the results obtained on the queries stating case law decisions (i.e., QType-5 queries). Indeed, despite the particular difficulty of such a testbed, LamBERTa models show performance scores that are generally comparable to those obtained for the QType-3 comment queries; moreover, once again, local models behave better than the corresponding global ones according to all assessment criteria (and, unlike for the QType-3 comment queries, without exceptions).

8.1.2 Multi-label evaluation

We further investigated the effectiveness of global and local models under the different multi-label evaluation contexts we devised.

Article-driven multi-label evaluation. Tables 5 and 6 report results according to the article-driven clustering-based and ICC-classification-based analyses, respectively. For the clustering-based approach, the number of clusters over the articles of each book was set so as to obtain a mean cluster size close to three.

At first glance, the superiority of local models stands out, thus confirming the findings drawn from the single-label evaluation results. Upon closer inspection, we observe a few exceptions in Table 5, corresponding to the results on Book-5 (as we already found in Table 4) for QType-1, QType-3, and QType-4 queries, and on Book-4 for QType-2 queries; however, this does not occur in Table 6. We tend to ascribe this to the fact that, being driven by a content-similarity-based approach, the clustering of the articles is clearly conditioned on the higher topic variety observable in Book-5 or Book-4, which may favor a global model over a local one in better capturing topics that are outside the boundaries of that particular book. By contrast, this does not hold for the ICC-classification-based grouping of the articles, as it relies on an externally provided organization of the articles in a particular book that is not constrained by the topic patterns (e.g., word co-occurrences) that might be distinctive of that book.

Notably, looking at the QType-5 results, the performance scores obtained by local and global models are generally comparable to or even higher than those respectively obtained on QType-3 (or QType-4) queries, which is particularly evident for the ICC-classification-based grouping of the articles (Table 6).

As a further point of investigation, we explored whether the article-driven clustering-based multi-label evaluation is sensitive to how the query relevant sets (i.e., clusters of articles) are formed, focusing on the content representation model of the articles. In particular, we replaced the TF-IDF vectorial representation of the articles with the article embeddings generated by our LamBERTa models, while keeping the same clustering methodology and setting as in our previous analysis. From the comparison results reported in the Appendix, Table 13, it stands out that using the embeddings generated by LamBERTa models to represent the articles in the clustering process is always beneficial to the multi-label classification performance of LamBERTa local models, according to all criteria and query sets. As expected, the improvements are generally more evident for the QType-1 and QType-2 query sets, since these queries have lexicons close to the training data. More interestingly, the performance gain from LamBERTa embeddings tends to be higher for the largest books, i.e., Book-4 and Book-5, with peaks of about 300% increase reached with the QType-2 query set for Book-4. Nonetheless, apart from these particular cases, clustering the articles represented by their LamBERTa embeddings does not, in general, yield an outstanding performance boost over the TF-IDF vectorial representation. This can be ascribed to the very small size of the clusters produced: in such a setting, especially within relatively small collections of articles (i.e., ICC books), the clusters detected over the TF-IDF representation can closely approximate those detected over the LamBERTa embeddings.

Topic-driven multi-label evaluation. Let us now focus on the topic-driven multi-label evaluation results, based on QType-6 queries, which are shown in Table 7 (precision and recall values are omitted for the sake of presentation, but this does not change the remarks being discussed). Three major remarks arise here. The first is again the higher effectiveness of local models compared to the global one, for each of the book query sets, with the usual exception of Book-5, which becomes more evident as the type of division becomes finer-grained. The second remark concerns a comparison of the methods' performance across types of book division: the chapter, subchapter, and section cases are indeed quite different from each other, as reflected by the different values of the average number of articles “covered” by each query label-class; in general, higher values correspond to more abstract divisions. Moreover, the roughly monotonic variation of this statistic over all books is not matched by a monotonic variation in performance: for instance, the highest F-measure values correspond to the division at section level in Book-1, whereas they correspond to the chapter level in Book-2, Book-3, Book-4, and Book-6. Finally, it is worth noticing that the performance increases significantly when precision@3 scores are considered, often reaching very high values: this would suggest that, despite the intrinsic complexity of this evaluation task, the models are able to guarantee a high fraction of top-3 predictions that correspond to articles relevant to each query.

8.2 Ablation study

8.2.1 Training-instance labeling schemes

Besides the settings of BERT learning parameters, our LamBERTa models are expected to behave differently depending on the choice of training-instance labeling scheme (cf. Sect. 4.5). Understanding how this aspect relates to performance is fundamental, as it affects the complexity of the induced model. In this respect, we compared our defined labeling schemes, using the induced local model of Book-2 (i.e., \(L_2\)) as a case in point.

Table 8 shows single-label evaluation results for all types of query sets. As expected, we observe that the scheme based on an article's title only (T) is clearly the worst-performing method, with the exception of QType-2, where, owing to the small impact of paraphrasing on the article titles, T performs slightly better than CasRR. More interestingly, smaller n-gram sizes seem to be beneficial to the effectiveness of the model, especially on QType-3, QType-4, and QType-5 queries: indeed, UniRR always outperforms both BiRR and TriRR. UniRR also behaves better than the cascade scheme (CasRR), and again the gap is more evident on the comment-based queries. The combination of n-grams of varying size reflected by the TglRR scheme leads to a significant increase in performance over all previously mentioned schemes for QType-1, QType-2, and QType-5 queries. This would suggest that more sophisticated labeling schemes can lead to higher effectiveness in the learned model. Nonetheless, superior performance is obtained by the schemes with emphasis on the title, which all improve upon the corresponding schemes not emphasizing the title, with UniRR.T\(^+\) being by far the best-performing method according to all criteria.