Abstract

Document-level relation extraction (RE) aims to predict the relational facts between two given entities from a document. Unlike widespread research on document-level RE in English, Korean document-level RE research is still at the very beginning due to the absence of a dataset. To accelerate the studies, we present TREK (Toward Document-Level Relation Extraction in Korean) dataset constructed from Korean encyclopedia documents written by the domain experts. We provide detailed statistical analyses for our large-scale dataset and human evaluation results suggest the assured quality of TREK . Also, we introduce the document-level RE model that considers the named entity-type while considering the Korean language’s properties. In the experiments, we demonstrate that our proposed model outperforms the baselines and conduct qualitative analysis.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Relation extraction (RE) is a task that captures relational facts between entities from plain text and serves as the basis of large-scale knowledge base construction and population research [1,2,3]. Previous RE studies focus on sentence-level RE which is to extract relational facts from a sentence [4,5,6], but the sentence-level RE models only concentrate on the local context within the single sentence [7, 8].

For the comprehensive context understanding capability of the RE model, one research [9] builds document-level RE dataset in English, and it derives widespread research [10,11,12,13]. However, in Korean, document-level RE research is hardly conducted due to the absence of a document-level RE dataset. Although the Korean cross-sentence-level RE dataset is collected [14], it is built on the paragraph-level so that the relations spread across the multiple paragraphs in a document are not considered. In other words, they constructed the dataset considering inter-sentence relations and intra-sentence relations only within paragraphs. Therefore, a document-level RE dataset is required to accelerate the document-level RE research in Korean.

Simply translating English document-level RE as an alternative to the absence of a dataset is inappropriate. The reason is that Korean is an agglutinative language, whereas English belongs to an inflectional language [15]. As an agglutinative language, one of the characteristics of Korean language is the relatedness between lexical morpheme and functional morpheme [16, 17]. As the complete meaning of the word in Korean depends on the type of functional morpheme, it is essential to identify the functional morpheme’s role in the sentence. For example, in the sentence “

. (An apple is at home.)”, the word “

. (An apple is at home.)”, the word “

” is a combination of the entity “

” is a combination of the entity “

(apple)” and functional morpheme “

(apple)” and functional morpheme “

[-neun]Footnote 1”. “

[-neun]Footnote 1”. “

” serves the subject role because “

” serves the subject role because “

[-neun]” is a subjective functional morpheme. Meanwhile, in “

[-neun]” is a subjective functional morpheme. Meanwhile, in “

. (I like the apple at home.)”, “

. (I like the apple at home.)”, “

” indicates the object as an objective functional morpheme “

” indicates the object as an objective functional morpheme “

[-reul]” is combined. Likewise, simply translating the English dataset is not desirable for collecting Korean dataset. Therefore, building Korean-based RE resources from scratch regarding the property is crucial.

[-reul]” is combined. Likewise, simply translating the English dataset is not desirable for collecting Korean dataset. Therefore, building Korean-based RE resources from scratch regarding the property is crucial.

An example from TREK dataset. For clarity, named entity involved in relations are colored in blue or green and other named entities are underlined. The relational triple (

, org:founded,

, org:founded,

) is an inter-sentence relation extracted from multiple sentences and (

) is an inter-sentence relation extracted from multiple sentences and (

, org:members,

, org:members,

) is an intra-sentence relation extracted from a single sentence

) is an intra-sentence relation extracted from a single sentence

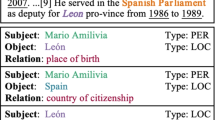

In this paper, we present a TREK (Toward document-level Relation Extraction in Korean) dataset from Korean encyclopedia documents written by the domain experts. We construct the dataset with distant-supervision manner and conduct an additional human inspection to consider the missing or noisy annotations. As shown in Fig. 1, TREK dataset consists of a document and relational triples, i.e., (head, relation, tail). Along with the dataset construction, we analyze TREK dataset regarding named entity-types and relation classes. Furthermore, the human evaluation results suggest that our document RE dataset is quality assured. We also propose a Korean document-level RE model that employs named entity-type information and reflects the characteristics of the Korean language. Since the role of the word changes according to the type of functional morpheme, we adopt special tokens surrounding the entity to let the model distinguish the entity from the functional morpheme. We show the improved performance of our model on TREK and conduct qualitative analysis as well.

Our contributions are as follows:

-

We introduce a large-scale Korean document-level RE dataset, TREK (Toward document-level Relation Extrac-tion in Korean), based on an encyclopedia with distant-supervision and human inspection. TREK dataset contains a total of 285,696 examples with 15,703 documents, 329,099 sentences, and 100,776 entities. Our human evaluation also shows the ensured quality of our dataset.

-

We propose the document-level RE model that considers named entity-type information within the documents while taking into account of Korean language’s characteristics.

-

We show our model’s effectiveness on TREK dataset in the experiments and demonstrate its enhanced understanding capability through qualitative analysisFootnote 2

2 Related work

2.1 Document-level Relation Extraction

Document-level RE is challenging since entities are spread within multiple sentences [9]. To solve this problem, diverse studies have been conducted regarding mentions and entities. The method that uses the selective attention network, which regards the same entities with different positions as distinct mention representations to capture the relations between the scattered entities, is proposed [18]. Also, a study [19] considers various kinds of mention dependencies exhibited in documents, while another study [20] introduces mention-level RE model to extract the key mention pairs in the document. The method that utilizes relation information is also proposed. A study [21] uses relation embedding to capture the co-occurrence correlation of relations.

On the other hand, the research that focuses on an entity also appears. One research mitigates the sparsity of the entity-pair representation and achieves a holistic understanding of the document[22]. In addition, the method that enriches the entity-pair representation by introducing an axial attention mechanism with adaptive focal loss is proposed [23]. By exploiting the entity-types that provide additional information on the relatedness between each entity pair, various research shows improved performance on document-level RE task [24]. Likewise, diverse branches of research are widely conducted in document-level RE in English.

The process of TREK dataset construction. For comprehension, we provide an example in English. The original example in Korean is described in Appendix B. In phase 1 and 2, the entity “

(the Holy See)” marked in blue indicates the subject inserted by pseudo-subject insertion. The highlighted texts and solid green lines are the named entities and relations, respectively. In phase 3, the dashed gray indicates deleted relation and the red lines indicate re-assigned relations

(the Holy See)” marked in blue indicates the subject inserted by pseudo-subject insertion. The highlighted texts and solid green lines are the named entities and relations, respectively. In phase 3, the dashed gray indicates deleted relation and the red lines indicate re-assigned relations

2.2 Relation extraction in korean

In previous RE research in Korean, sentence-level RE studies have been conducted actively [25]. There exists research that considers Korean characteristics by exploiting dependency structures to find proper relation in a sentence [25,26,27]. Moreover, entity position tokens are utilized to capture contextual information in a sentence [28]. These studies are promoted by the publicly available datasets to build sentence-level RE models. A sentence-level RE dataset, KLUE [29], is published to overcome the lack of up-to-date Korean resources. Additionally, a Korean cultural heritage corpus [30] for entity-related tasks is disclosed. Even though the Korean cross-sentence-level RE dataset, Crowdsourcing [14], is presented, this dataset only considers the paragraph-level, not the document-level. In other words, the relations spread across the multiple paragraphs in a document do not exist, which limits the capability of document-level REFootnote 3. Another research [31] presents HistRED constructed from Yeonhaengnok, which is a collection of records written in Korean and Hanja, classical Chinese writing. Due to the limited scope of HistRED to specific domains, history, its applicability to general domains is constrained. Furthermore, all existing datasets rely on human annotation, making it challenging and costly to construct large-scale data. Therefore, document-level RE resources are limited, and this leads to a lack of studies on document-level RE in Korean.

3 TREK dataset

We first describe how we build our TREK (Toward document-level Relation Extraction in Korean) dataset in Section 3.1. In Section 3.2, we analyze the TREK dataset and describe the statistics on the types of named entities and relation classes. We conduct human evaluation on our dataset in Section 3.3.

3.1 Data construction

We first annotate both entities and relations in a distant supervision manner from NAVER encyclopedia documentsFootnote 4, which consists of refined documents on diverse topics. Then, we additionally conduct a human inspection to consider missing or noisy annotations. To build a document-level RE dataset, we follow four steps; 1) named entity annotation, 2) sentence-level relation annotation, 3) document-level relation construction, and 4) human inspection.

Named entity annotation (NEA) We annotate the named entity to the documents as illustrated in the first phase of Fig. 2. Owing to the characteristic of the NAVER encyclopedia, most of the sentences in the raw documents do not have a subject which is a document’s titleFootnote 5

Thus, we manually insert the subject and annotate the named entities in the document. In detail, we first find the sentences where the subject is missing by utilizing a pre-trained dependency parser library [32] and then insert the document’s title as the subject. Also, we concatenate the subjective functional morpheme at the end of the title-entity to indicate the subject in the sentence. This pseudo-subject insertion process clarifies the structure of the sentence, and the named entity recognition (NER) model predicts the position and type of each entity from the clarified sentence.

Since Korean belongs to an agglutinative language, distinguishing entity and functional morpheme is critical in NER. Therefore, we let the NER model annotate being aware of the functional morpheme.

In addition to defined entity types, we define the named entity-type ‘TITLE’ and assign it to the entities which are equal to the title due to its importance in the document. We use pre-trained model which is trained with Korean documentsFootnote 6 based on the ELECTRA [33] architecture and fine-tune the pre-trained model with KLUE-NER [29] datasetFootnote 7.

Sentence-level Relation Annotation (SRA) After annotating the named entity in the documents, we assign the relation between the two entities in a sentence.

We implement the sentence-level RE models by considering the characteristics of the Korean language. Since Korean is an agglutinative language that forms a word with lexical morpheme and functional morpheme, capturing the scope of the entity in the sentence is significant [15].

Therefore, we employ entity position tokens to denote the position of subject and object entities in a sentence following previous Korean research [28]. Start position tokens are inserted at the beginning of subject and object entities, while end position tokens are appended at the end of subject and object entities. We conduct experiments on sentence-level RE performance utilizing different types of representations as presented in Table 1.

From the result, it is shown that the KLUE-RoBERTa utilizing [END] token embeddings shows the best performance among the others. It implies that distinguishing the borderline between the entity and functional morpheme is critical for predicting the relation. Consequently, we annotate intra-relation utilizing the KLUE-RoBERTa with [END] token, which shows the best performance.

We train the sentence-level RE model with KLUE-RoBERTa based on KLUE-RE [29] datasetFootnote 8.

Document-level Relation Construction (DRC) For inter-sentence relations, we remove the pseudo-inserted subject, re-assigning the relation of the removed entity to the existing subject in the documents. For example, as depicted in phase 3 of Fig. 2, the red line indicates the re-assigned relation. When “

(the Holy See)” inserted by the pseudo-subject insertion in sentence [2] is removed, the relation “r2: org:member_of” is re-assigned to “

(the Holy See)” inserted by the pseudo-subject insertion in sentence [2] is removed, the relation “r2: org:member_of” is re-assigned to “

(the Holy See)” of sentence [1] and “

(the Holy See)” of sentence [1] and “

(Italy)” from sentence [2].

(Italy)” from sentence [2].

Human Inspection Our human inspection process is divided into annotation and filtering to handle missing and noisy annotations. First, when assigning inter-sentence relations based on subjects in phase 3, the relation between non-subject entities may exist in the document. To consider these relations, we additionally annotate all possible relations in the document with humans and collect missing relations between non-subject entities. As a result, 63,482 examples are additionally annotated.

Afterward, we exclude incorrect examples with the human-filtering process to remove the noises from the automatically annotated examples following previous research [34]. In detail, human workers are instructed to decide whether the given example is valid or invalid. We delete the examples that are determined as invalid, and 14,663 examples are removed. The details of annotation and filtering are described in Appendix C.

3.2 Data statistics

We compare TREK dataset with existing Korean RE datasets [14, 29, 30] as in Table 2.

TREK dataset has approximately three times as many examples at a minimum compared to the other datasets. Our dataset covers both intra-sentence and inter-sentence relations at a document-level, and the ratios of intra-relation and inter-relation are 25.31% and 74.69%, respectively. This ratio is reasonable for a document-level RE dataset, considering DocRED [9], which has 18.49% and 81.51% of intra and inter-relation. Our dataset encompasses a general domain and is rich in diverse aspects, including the number of documents, examples, sentences, and entities compared to the existing datasets. Table 3 shows the statistics of train, development, and test sets in our TREK dataset. The dataset contains a total of 285,696 examples with 15,703 documents, 329,099 sentences, and 100,776 entities. The samples of TREK dataset are also described in Appendix D.

Types of Named Entity Figure 3 depicts the distribution of the named entity in TREK dataset. The distributions of each dataset split are depicted in Appendix E.

Out dataset has 11 named entity-types including LOCATION (30.65 %), CIVILIZATION (17.18 %), PERSON (13.44 %), TITLE (12.90 %), etc. The descriptions of each entity-type are indicated in Appendix F.

The overall architecture of the TREKER. TREKER reconstructs a given input document and performs three subtasks, i.e., coreference resolution, named entity prediction, and relation extraction in a multi-tasking manner. The reconstructed document D’ is fed in to the pre-trained language model (PLM) and we obtain the document embedding H. m is the mention embeddings from H. Also, v is the entity mention embedding for NEP while V is the entity embedding for bilinear operation in RE prediction. \(\mathcal {L}\) indicates the loss

Relation Classes Figure 4 illustrates the distribution of relation classes in TREK dataset. The relation class distributions of the dataset splits are depicted in Appendix E. Our dataset consists of 26 various relation types, including org:member_of (31.40 %), org:founded_by (16.32 %), per:colleagues (9.12 %), org:place_of_headquarters (7.69 %), etc. The descriptions of each relation class are shown in Appendix G. We also consider the inverse relation type such as ‘org:members’ and ‘org:member_of’. For example, when the head is a higher organization, and the tail is affiliated with the head, the relation is tagged as ‘org:members’. On the other hand, ‘org:member_of’ means that the head belongs to the higher-level organization, which is the tail. Therefore, the relation classes cover diverse relations between entities, including inverse relations.

3.3 Human evaluation

We conduct a human evaluation on our dataset, TREK . We collect 29 workers who are Korean undergraduate students. We randomly chose 485 documents from the dataset. We asked the workers to evaluate whether the given head and tail are mapped to the given relation appropriately based on the document. The score is scaled from 1 to 3, and a higher score indicates that the relation is more appropriately annotated. As a result, 40.73% of the examples are evaluated as appropriate and 35.26% as plausible. Only 24.00% of the examples are regarded as less appropriate relations. The agreement between the annotators is calculated with Fleiss’ Kappa coefficient [35] and is 0.4763, implying moderate agreement. In this result, we demonstrate the assured quality of TREK dataset.

4 Model

We implement our Korean document-level RE model based on TREK dataset considering named entity-type information and Korean language characteristics, and entitled as TREKER. As illustrated in Fig. 5, our model aims to predict the relation when the document and two entities, head and tail, are given. We first reconstruct a document input to reflect the properties of the Korean language. Then, the model extracts embeddings of the entity mention which represent the same entity scattered in the documents. With the extracted entity mention embeddings, the model trains on coreference resolution, named entity prediction, and relation extraction tasks in a multi-tasking manner.

4.1 Input document reconstruction

In TREK dataset, a document D has a set of entities \(E = \left\{ e_1, e_2, \cdots , e_{|E|}\right\} \). With the given documents and entities, we reconstruct the input document. We mark the special token with an asterisk (*) at the start and the end of every entity mention allowing the model to recognize and comprehend the functional morphemes and entity. Subsequently, the [CLS] token is inserted at the start of the document and the [SEP] token is placed at the end of every sentence. The reconstructed document D’ is then fed into the pre-trained language model and we obtain document embedding H \(\in \) \(\mathrm {\mathbb {R}}^{T \times dim}\) . T and dim indicate the maximum length of tokens in D’ and the dimension of the embeddings, respectively. From the document embedding H, we define the mention embedding \(M_k = \left\{ m_k^1, m_k^2, \cdots , m_k^{|M_k|}\right\} \) of k-th entity \(e_k\) by extracting the embeddings of the start special token of every entity in the H.

4.2 Coreference resolution

Our model performs the coreference resolution (CR) to capture the interactions between the long-distance mentions in multiple sentences. We define \(\mathbf {C^{\mathcal {Z}_E}}\), the set of all possible pair-combinations from all entity mention embeddings \(\mathcal {Z}_E = \left\{ M_1 \cup M_2 \cup ... \cup M_{|E|} \right\} \).

We obtain the probability of whether the two mention embeddings represent the same entity:

where \(\textbf{C}\) is the entity mention embedding pair in \(\mathbf {C^{\mathcal {Z}_E}}\) and \((\overline{ \cdot })\) is the concatenation operation. \(\textbf{W}^{CR}\) \(\in \) \(\mathrm {\mathbb {R}}^{dim \times 2}\) is a weight matrix for binary classification. \(\textbf{b}^{CR}\) is the bias of CR.

Since entities have a small number of mentions, each mention pairs from the combinations is rarely co-referencedFootnote 9. Due to the class imbalance, we apply the focal loss [36] which is known as a loss function that is robust to the class imbalance issue for \(\mathcal {L}^{CR}\) :

where \(y^{CR}\) is 1 if mention pair refers to the same entity, otherwise 0. \(Q^{CR}\) is the class weight vector obtained by reversing the ratio in \(y^{CR}\), which is introduced in previous research[36]. \(\gamma ^{CR}\) is the hyperparameter.

4.3 Named Entity Prediction

We perform the named entity prediction (NEP) task to let the model comprehend head and tail with named entity-type information. We integrate all mention embeddings of the entity with logsumexp pooling [37] to obtain head and tail embeddings as in (4):

Since head and tail are given, the model calculates the probability \(P^{NEP}\) of each named entity-type with the corresponding entity mention embeddings \(v (\texttt{head})\) and \(v (\texttt{tail})\):

where \(\textbf{W}^{NEP} \in \mathrm {\mathbb {R}}^{dim \times |nep|}\) and |nep| is the number of named entity-type set. \({\textbf{b}}^{NEP}\) is the bias of NEP task.

We compute the NEP loss \(\mathcal {L}^{NEP}\) through cross-entropy loss:

where \(y_{\texttt{head}}^{NEP}\) and \(y_{\texttt{tail}}^{NEP}\) are a ground-truth named entity-type of head and tail, respectively.

4.4 Relation extraction

For the relation extraction task, we model the interactions between the mention embeddings as in (7).

Inspired by previous research[10], we employ group bilinear classifier [38, 39] to reduce the number of parameters when utilizing vanilla bilinear classifier. Therefore, we divide the entity embeddings into block size \(\alpha \) as in (8):

Afterward, we apply group bilinear operation as indicated in (9):

where \({\textbf{W}}_i^{RE}\in \mathrm {\mathbb {R}}^{dim/\alpha \times dim/\alpha }\) is the weight matrix of the operation and \(\alpha \) is the hyperparameter. \({\textbf{b}}^{RE}\) is the bias of RE task.

Additionally, we utilize adaptive threshold loss [10] to consider the multiple relations that can exist between two entities in prediction. The adaptive threshold loss is used to obtain an entity-dependent threshold value with the learnable threshold class TH. It is tuned to maximize the evaluation scores in the inference stage, thereby returning predicted relations label above the threshold TH, otherwise deciding there is no relation.

For the loss function \(\mathcal {L}^{RE}\), we apply binary cross entropy loss for training as below and split the relation labels into \(\mathcal {O}^+\) and \(\mathcal {O}^-\):

where \(\mathcal {O}^+\) are positive relation classes that exist between head and tail and \(\mathcal {O}^-\) are negative relation classes that do not exist between two entities.

4.5 Final training objective

Consequently, we obtain the final loss \(\mathcal {L}^{total}\) by integrating losses with task-specific weights, \(\eta ^{CR}\), \(\eta ^{NEP}\), and \(\eta ^{RE}\).

5 Experiments

5.1 Experimental Setup

The evaluation metrics are the F1 and Ign F1 scores. Ign F1 score is an evaluation metric that is computed by excluding the triples which are included in the train set, supplementing the F1 score in unseen data.

For hyperparameters, we set the learning rate as 5e-5 with AdamW [40] optimizer, and then the learning rate is linearly decayed. The batch size and sequence length are set as 2 and 512, respectively. The whole model was trained for 30 epochs on 4 RTX A6000 GPUs. The average training time of TREKER for each PLM is 21 hours. Following previous document-level RE research in English [24], we set \(\alpha \) as 64 and \(\gamma ^{CR}\) as 2. The task weight \(\eta ^{CR}\), \(\eta ^{ET}\), and \(\eta ^{RE}\) are set as 0.1, 0.1, and 1, respectively and we manually searched for the parameters.

As baselines, we adopt the base version of BERT-multilin-gual [41], KoBERT(SKT Brain, 2019), KLUE-BERT [29], and KLUE-RoBERTa [29] from huggingface [42]. The baseline models were presented with the previous Korean language understanding evaluation benchmark.

5.2 Main results

We compare TREKER models with the baselines and they all show improved performance as demonstrated in Table 4. TREKER\(_{\text {RoBERTa}}\) achieves the best performance among other TREKER models. In particular, TREKER\(_{\text {KoBERT}}\) achieves the largest improvements of 6.02%p and the result implies that our method can be applied regardless of the type of language model. Also, these substantial improvements show that the learning objectives with consideration of the named entity-types lead to the effective relation prediction in given documents.

We observe the performance differences between Korean and multilingual language models. TREKER\(_{\text {KLUE-RoBERTa}}\) and TREKER\(_{\text {KLUE-BERT}}\) outperform performances of TREKER\(_{\text {BERT-multilingual}}\), respectively. The gaps demonstrate that the TREKER\(_{\text {KLUE-RoBERTa}}\) and TREKER\(_{\text {KLUE-BERT}}\) models generally understand better the Korean context than TREKER\(_{\text {BERT-multilingual}}\) model which is trained in various languages.

Meanwhile, it is shown that TREKER\(_{\text {KoBERT}}\) model demonstrate lower performances than those of TREKER\(_{\text {BERT-multilingual}}\). We assume that the size of the vocabulary and pre-trained corpus of the model affect performances. KLUE-BERT and KLUE-RoBERTa [29] are trained on 63G sentences with the size of 32K vocabulary, whereas KoBERT (SKT Brain, 2019) is trained on 5M sentences has the size of 8,002 vocabulary. Therefore, our TREK dataset is challenging if the language model’s understanding capability is limited.

5.3 Ablation studies

Table 5 presents the ablation results of the proposed model. TREKER\(_{\text {KLUE-RoBERTa}}\) is validated by excluding the CR and NEP tasks. When the CR and NEP are both removed, the performance decreases by 3.49% on average in the Ign F1 and F1, respectively. When the CR task is removed, there is an average decrease of 0.79%, respectively. Similarly, the score drops when the TREKER is not trained on NEP task. These findings indicate that considering the named entity-type information significantly contributes to the accurate prediction of relations.

6 Qualitative analysis

We conduct the qualitative analysis to demonstrate how each model understands the relation between entities in Korean document-level RE task. Figure 6 shows the relation prediction results obtained from KLUE-RoBERTa and TREKER\(_{\text {KLUE-RoBERTa}}\). In sentences [1] and [2],

(Natural IT Industry Promotion Agency) and

(Natural IT Industry Promotion Agency) and

(Seoul) are given. The gold relation of the enti-ties is ‘org:member_of’. In the prediction of KLUE-RoBERTa, incorrect relation ‘org:founded_by’ is suggested, while TREKER\(_{\text {KLUE-RoBERTa}}\) correctly finds ‘org:member_of’.

(Seoul) are given. The gold relation of the enti-ties is ‘org:member_of’. In the prediction of KLUE-RoBERTa, incorrect relation ‘org:founded_by’ is suggested, while TREKER\(_{\text {KLUE-RoBERTa}}\) correctly finds ‘org:member_of’.

We attribute these results to that TREKER\(_{\text {KLUE-RoBERTa}}\) understands the characteristics of the Korean language more precisely than KLUE-RoBERTa does. TREKER\(_{\text {KLUE-RoBERTa}}\) is more effective in distinguishing the functional morpheme “

[-eun]” and

[-eun]” and

(National IT Industry Promotion Agency) and is shown to predict the ground-truth relation. The result is compatible with the experiments in Section 5.2.

(National IT Industry Promotion Agency) and is shown to predict the ground-truth relation. The result is compatible with the experiments in Section 5.2.

7 Limitation

In this work, we introduce the document-level RE dataset in Korean. We believe that our TREK dataset will contribute to the RE research in Korean, but there are still some limitations that can be improved in future work. Since we did not use all of the documents from NAVER encyclopedia, the scalability of the dataset is relatively lower than that of DocRED [9]. However, our dataset is the largest document-level RE dataset in Korean, and we plan to build a larger dataset in future work. Another limitation of our data construction process is the necessity of human inspection. We aim to address this by either using documents where the subject missing sentence does not exist or refining our NER and RE modules to reduce error cases, ultimately aiming to construct a quality-ensured dataset without the need for human inspection.

8 Conclusion

In this paper, we introduced the TREK dataset, which is the first large-scale Korean document-level RE dataset built from high-quality Korean encyclopedia documents. We constructed TREK dataset in a distantly-supervised manner for labor- and cost-effectiveness and conducted a human inspection for ensured quality. Moreover, we showed the detailed analyses on our TREK dataset and conduct the human evaluation. Furthermore, we proposed a model that utilizes named entity-type information and adopts the properties of the Korean language. In experiments, our proposed models outperformed the baselines, and ablation studies were also conducted to show our method’s effectiveness. The qualitative results showed the model’s enhanced capability. It is expected that our TREK dataset can contribute to the field of Korean document-level RE, bringing out various research in regard to both data construction and the development of ultimate Korean RE models in the future. requirements.

Data availability and access

The TREK dataset generated during the current study is available at https://github.com/sonsuhyune/TREKER. Other data that support the findings of this study are available from the corresponding author upon reasonable request.

Notes

‘()’ is a translation of the word and ‘[]’ indicates the pronunciation of functional morpheme. The functional morphemes such as “

[-neun]” are cannot be translated directly to the English word.

[-neun]” are cannot be translated directly to the English word.Our code and the dataset are publicly accessible at https://github.com/sonsuhyune/TREKER.

The details about the characteristic of NAVER encyclopedia is in Appendix A.

Our NER model achieves F1 score of 88.64.

Our sentence-level RE model shows F1 score of 82.88.

In TREK dataset, each entity has 1.61 mentions on average.

References

Hendrickx I, Kim SN, Kozareva Z, Nakov P, Séaghdha DÓ, Padó S, Pennacchiotti M, Romano L, Szpakowicz S (2010) Semeval-2010 task 8: Multi-way classification of semantic relations between pairs of nominals. In: Proceedings of the 5th International Workshop on Semantic Evaluation, pp. 33–38

Shen Y, Huang XJ (2016) Attention-based convolutional neural network for semantic relation extraction. In: Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, pp. 2526–2536

Zhang Y, Zhong V, Chen D, Angeli G, Manning CD (2017) Position-aware attention and supervised data improve slot filling. In: Conference on Empirical Methods in Natural Language Processing

Zeng D, Liu K, Lai S, Zhou G, Zhao J (2014) Relation classification via convolutional deep neural network. In: Proceedings of COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers, pp. 2335–2344. Dublin City University and Association for Computational Linguistics, Dublin, Ireland. https://aclanthology.org/C14-1220

Soares LB, FitzGerald N, Ling J, Kwiatkowski T (2019) Matching the blanks: Distributional similarity for relation learning. arXiv:1906.03158

Ye D, Lin Y, Li P, Sun M (2022) Packed levitated marker for entity and relation extraction. In: Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 4904–4917

Ru D, Sun C, Feng J, Qiu L, Zhou H, Zhang W, Yu Y, Li L (2021) Learning logic rules for document-level relation extraction. arXiv:2111.05407

Yu J, Yang D, Tian S (2022) Relation-specific attentions over entity mentions for enhanced document-level relation extraction. arXiv:2205.14393

Yao Y, Ye D, Li P, Han X, Lin Y, Liu Z, Liu Z, Huang L, Zhou J, Sun M (2019) Doc RED: A large-scale document-level relation extraction dataset. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pp. 764–777. Association for Computational Linguistics, Florence, Italy. https://doi.org/10.18653/v1/P19-1074, https://aclanthology.org/P19-1074

Zhou W, Huang K, Ma T, Huang J (2021) Document-level relation extraction with adaptive thresholding and localized context pooling. Proceedings of the AAAI Conference on Artificial Intelligence 35:14612–14620

Xu B, Wang Q, Lyu Y, Zhu Y, Mao Z (2021) Entity structure within and throughout: Modeling mention dependencies for document-level relation extraction. Proceedings of the AAAI Conference on Artificial Intelligence 35:14149–14157

Giorgi J, Bader GD, Wang B (2022) A sequence-to-sequence approach for document-level relation extraction. arXiv:2204.01098

Sun Q, Zhang K, Huang K, Xu T, Li X, Liu Y (2023) Document-level relation extraction with two-stage dynamic graph attention networks. Knowl-Based Syst 267:110428

Nam S, Lee M, Kim D, Han K, Kim K, Yoon S, Kim Ek, Choi KS (2020) Effective crowdsourcing of multiple tasks for comprehensive knowledge extraction. In: Proceedings of the 12th Language Resources and Evaluation Conference, pp. 212–219

Jung J, Jung S, Roh Yh (2022) Sequential alignment methods for ensemble part-of-speech tagging. In: 2022 IEEE International Conference on Big Data and Smart Computing (BigComp), pp. 175–181. IEEE

Ss Oh (1998) A Syntactic and Semantic Study of Korean Auxiliaries: A Grammaticalization Perspective. University of Hawai’i at Manoa, Honolulu, HI

Lee S, Jang TY, Seo J (2002) The grammatical function analysis between korean adnoun clause and noun phrase by using support vector machines. In: COLING 2002: The 19th International Conference on Computational Linguistics

Yu J, Yang D, Tian S (2022) Relation-specific attentions over entity mentions for enhanced document-level relation extraction. In: Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 1523–1529. Association for Computational Linguistics, Seattle, United States. https://doi.org/10.18653/v1/2022.naacl-main.109, https://aclanthology.org/2022.naacl-main.109

Xu W, Chen K, Zhao T (2021) Document-level relation extraction with reconstruction. Proceedings of the AAAI Conference on Artificial Intelligence 35:14167–14175

Jiang F, Niu J, Mo S, Fan S (2022) Key mention pairs guided document-level relation extraction. In: Proceedings of the 29th International Conference on Computational Linguistics, pp. 1904–1914. International Committee on Computational Linguistics, Gyeongju, Republic of Korea. https://aclanthology.org/2022.coling-1.165

Han R, Peng T, Wang B, Liu L, Tiwari P, Wan X (2024) Document-level relation extraction with relation correlations. Neural Netw 171:14–24

Huang X, Yang H, Chen Y, Zhao J, Liu K, Sun W, Zhao Z (2022) Document-level relation extraction via pair-aware and entity-enhanced representation learning. In: Proceedings of the 29th International Conference on Computational Linguistics, pp. 2418–2428. International Committee on Computational Linguistics, Gyeongju, Republic of Korea. https://aclanthology.org/2022.coling-1.213

Tan Q, He R, Bing L, Ng HT (2022) Document-level relation extraction with adaptive focal loss and knowledge distillation. In: Findings of ACL. https://aclanthology.org/2022.findings-acl.132

Xiao Y, Zhang Z, Mao Y, Yang C, Han J (2022) SAIS: Supervising and augmenting intermediate steps for document-level relation extraction. In: Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pp. 2395–2409. Association for Computational Linguistics, Seattle, United States . https://doi.org/10.18653/v1/2022.naacl-main.171, https://aclanthology.org/2022.naacl-main.171

Jeong M, Suh H, Lee H, Lee JH (2022) A named entity and relationship extraction method from trouble-shooting documents in korean. Appl Sci 12(23):11971

Kwak S, Kim B, Lee JS (2013) Triplet extraction using korean dependency parsing result. In: Annual Conference on Human and Language Technology, pp. 86–89. Human and Language Technology

Kim B, Lee JS (2016) Extracting spatial entities and relations in Korean text. In: Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, pp. 2389–2396. The COLING 2016 Organizing Committee, Osaka, Japan. https://aclanthology.org/C16-1225

Hur Y, Son S, Shim M, Lim J, Lim H (2021) K-epic: Entity-perceived context representation in korean relation extraction. Appl Sci 11(23):11472

Park S, Kim S, Moon J, Cho WI, Cho K, Han J, Park J, Song C, Kim J, Song Y et al (2021) Klue: Korean language understanding evaluation. In: Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2021). Advances in Neural Information Processing Systems

Kim G, Kim J, Son J, Lim HS (2022) Kochet: A korean cultural heritage corpus for entity-related tasks. In: Proceedings of the 29th International Conference on Computational Linguistics, pp. 3496–3505

Yang S, Choi M, Cho Y, Choo J (2023) Histred: A historical document-level relation extraction dataset. arXiv:2307.04285

Heo H, Ko H, Kim S, Han G, Park J, Park K (2021) PORORO: Platform Of neuRal mOdels for natuRal language prOcessing. https://github.com/kakaobrain/pororo

Clark K, Luong MT, Le QV, Manning CD (2019) Electra: Pre-training text encoders as discriminators rather than generators. In: International Conference on Learning Representations

Chia YK, Bing L, Aljunied SM, Si L, Poria S (2022) A dataset for hyper-relational extraction and a cube-filling approach. arXiv:2211.10018

Fleiss JL (1971) Measuring nominal scale agreement among many raters. Psychol Bull 76(5):378

Lin TY, Goyal P, Girshick R, He K, Dollár P (2017) Focal loss for dense object detection. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2980–2988

Jia R, Wong C, Poon H (2019) Document-level \( n \)-ary relation extraction with multiscale representation learning. arXiv:1904.02347

Zheng H, Fu J, Zha ZJ, Luo J (2019) Learning deep bilinear transformation for fine-grained image representation. Advances in Neural Information Processing Systems. 32

Tang Y, Huang J, Wang G, He X, Zhou B (2020) Orthogonal relation transforms with graph context modeling for knowledge graph embedding. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pp. 2713–2722

Loshchilov I, Hutter F (2017) Decoupled weight decay regularization. arXiv:1711.05101

Devlin J, Chang MW, Lee K, Toutanova K (2019) Bert: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pp. 4171–4186

Wolf T, Debut L, Sanh V, Chaumond J, Delangue C, Moi A, Cistac P, Rault T, Louf R, Funtowicz M, Davison J, Shleifer S, Platen P, Ma C, Jernite Y, Plu J, Xu C, Scao TL, Gugger S, Drame M, Lhoest Q, Rush AM (2020) Transformers: State-of-the-art natural language processing. In: Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, pp. 38–45. Association for Computational Linguistics, Online. https://www.aclweb.org/anthology/2020.emnlp-demos.6

Acknowledgements

This work was supported by Institute for Information & communications Technology Promotion(IITP) grant funded by the Korea government(MSIT) (RS-2024-00398115, Research on the reliability and coherence of outcomes produced by Generative AI). This work was supported by ICT Creative Consilience Program through the Institute of Information & Communications Technology Planning & Evaluation(IITP) grant funded by the Korea government(MSIT)(IITP-2024-2020-0-01819). This research was supported by Basic Science Research Program through the National Research Foundation of Korea(NRF) funded by the Ministry of Education(NRF-2021R1A6A1A03045425)

Author information

Authors and Affiliations

Contributions

All authors contributed to the study’s conception and design. Suhyune Son performed material preparation, experiments, and writing the manuscript. Jungwoo Lim and Seonmin Koo performed the experiments and analyzed the results. Jinsung Kim developed the model. Younghoon Kim, Youngsik Lim, and Dongseok Hyun performed data collection and material preparation. Heuiseok Lim commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Ethical and informed consent for data used

The authors declare that they have no conflict of interest regarding the research carried out and the data produced. The authors are aware of and consent to the publication of data resulting from this research.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A: Examples of NAVER Encyclopedia Documents

As illustrated in Table 6, most of the sentences in the raw documents do not have a subject which is a document’s title. Since NAVERFootnote 10 encyclopedia documents concentrate the title of the document directly, most of the sentences naturally omit the subjects. The red tag indicates the place for the subject.

Appendix: B TREK dataset construction process in korean

The process of TREK dataset construction is in Fig. 7.

The process of TREK dataset construction. In phase 1 and 2, the word “

(the Holy See)” marked in blue indicates the subject inserted by pseudo-subject insertion. The highlighted texts and solid green lines are the named entities and relations, respectively. In phase 3, the dashed gray indicate deleted relation and the red lines indicate re-assigned relations

(the Holy See)” marked in blue indicates the subject inserted by pseudo-subject insertion. The highlighted texts and solid green lines are the named entities and relations, respectively. In phase 3, the dashed gray indicate deleted relation and the red lines indicate re-assigned relations

Appendix: C Details of human inspection

Human Annotation We employed 32 individuals who are native speakers of Korean and hold at least a bachelor’s degree. Workers annotate relations of non-subject entities and are provided sufficient explanations on the task. Each worker has been fairly compensated at a rate of $4.5 per single document. The expected productivity is completing 2-3 documents per hour, ensuring a minimum compensation of $10 per hour. It is worth noting that the minimum hourly wage in South Korea for 2023 is $8. The user interface can be found in Fig. 8.

Human Filtering In order to enhance the quality of the dataset, we conducted human filtering. To eliminate incorrect examples, we employed 29 workers under the same conditions as mentioned earlier. Workers evaluated the validity of the examples, and three individuals assessed each example. All workers have received reasonable monetary compensation; $4 per single document. All workers are expected to finish 2 3 documents in one hour, resulting in the minimum compensation is $8.5 per hour. The user interface can be found in Fig. 9.

Appendix: D Examples of TREK dataset

Appendix: E Distribution of each dataset split in TREK dataset

Appendix: F Named Entity-Type

Appendix: G Relation type

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Son, S., Lim, J., Koo, S. et al. A large-scale dataset for korean document-level relation extraction from encyclopedia texts. Appl Intell 54, 8681–8701 (2024). https://doi.org/10.1007/s10489-024-05605-9

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10489-024-05605-9

[-neun]” are cannot be translated directly to the English word.

[-neun]” are cannot be translated directly to the English word.