Abstract

One of the main challenges in the industry is having trained and efficient operators in manufacturing lines. Smart adaptive guidance systems are developed that offer assistance to the operator during assembly. Depending on the operator’s level of execution, the system should be able to serve a different guidance response. This paper investigates the assessment and classification of the operator’s functional state using observed task execution times. Five different classifiers are studied for operator functional state classification on task execution time series. The experiments are based on an industry case and the ground truth is provided by an expert rule-based system. Three classification scenarios are defined that segment the problem on the level of the task, the individual, or the team. Furthermore, the investigation includes the evaluation of four distinct window-size configurations. The examination of how these scenarios and window-sizes influence the studied dataset across diverse classifiers reveals that achieving enhanced accuracy necessitates a larger input dimension. In this context, Convolutional Neural Networks predominantly exhibit superior performance compared to alternative classifiers. Careful attention needs to be paid to performance over classes and skills, but results confirm the validity of the approach for data-driven operator functional state classification.

1 Introduction

Over the last years, manufacturing companies have experienced an ever-increasing demand for more complex products with a growing number of product variants. As a result, the number of tasks assembly operators need to master is growing accordingly. The introduction of Industry 4.0 concepts and technologies also requires operators to acquire new sets of skills (e.g., robot assistance, AR/VR) linked to their role within the production environment. The impact of this change should not be underestimated and is, therefore, one of the main focus points in the recently defined Industry 5.0 concept [7].

The fast adjustment of operators’ skills to produce new and complex products whilst decreasing human errors can benefit the industry [5]. Traditionally, corrective actions such as quality checkpoints, training, operating procedures and guidelines have been used to improve the readiness of operators and mitigate these human errors [16]. Current technological advances allow tracking the operator’s performance during the assembly operation with the help of sensors and cameras. They monitor behavior at the assembly workstation according to defined procedures such as hand tracking, object detection or change occurrences in zones. This raw data is then processed to detect the task performed and support or evaluate the execution of the assembly step [44]. Projection techniques using light guide systems or augmented reality can show the relevant instruction to support the operator. As a result, companies improve the operators’ performance and efficiency while controlling product quality [41].

For operator support, it is important to acknowledge that operators perceive instructions differently depending on their background and mental state. The concept of Operator Functional State (OFS) has been introduced to define the variable capacity of the operator for effective task performance in response to the task and environmental demands, and under the constraints imposed by cognitive and physiological processes that control and energize behavior [25]. OFS classes are introduced to classify the operator’s performance. The OFS classes can be determined based on task performance, subjective evaluation, and psycho-physiological measurements [8,9,10, 43]. In our work, we focus on OFS classification based on observed task performance, where this performance is a proxy for the skill level of the operator. The OFS classification is used to balance between the detail of support and the operator’s skill level for that task and to adapt the support depending on the OFS class. This allows the instructions to evolve and adapt while the operator improves according to the rate of experience [26].

One of the performance features that is significant in OFS classification is the execution time of each task per operator [39]. The industrial case considered in this study, taken from assembly manufacturing, includes a dataset of the timing of assembly steps by human operators, classified into four skill levels by a commercial system, namely the smart guidance system from the company [3]. This system processes observed task execution times and classifies operator experience levels based on time averages and pre-defined thresholds and parameters.

The ARKITE system is an example of a rule-based system for automated OFS classification based on observed task execution times. Such rule-based algorithms, which rely on human knowledge to apply predefined rules and parameters, are transparent and explainable, but they have weaknesses: rules can be inconsistent when different people set them up, and maintenance costs are high when conditions change. Specifically, in the case of OFS classification, the ARKITE system has more than 20 parameters to assess per experience level and assembly task, which means that a typical assembly line with 10 steps and 4 experience levels would require more than 800 parameters (\(20 \times 10 \times 4\)) to be determined.

To handle the complexity of tuning thresholds and parameters, we study data-driven OFS classifiers based on machine learning, that can learn from collected data. We aim to determine if the proposed classifiers can approach the existing rule-based ARKITE system with high accuracy. The current rule-based system needs careful setup and calibration of all parameters when installed in a new or changed context. With this data-driven approach, we are exploring alternatives to this manual calibration. The data-driven approach can be built on benchmark data sets of observed task execution times of experienced and inexperienced personnel for a company. Constructing such a data set only requires domain knowledge of skill level and can be done by the operations manager. This contrasts with the parameters of the rule-based system, which are more related to signal processing and require a data scientist background to determine correctly. So, considering the series of recorded times as time series and labeled by the rule-based system, we propose five state-of-the-art classifiers to examine if they can learn OFS classification based on the operators’ task execution times. The ARKITE system is used as ground truth data in our experiments. The main objective of this study is to assess if data-driven approaches are able to mimic this performance under different scenarios.

2 Literature review

Supporting operators with sensor technology allows for a higher degree of automation in optimizing process control in manufacturing [45]. Additionally, sensors enable quality improvements by tracking the assembly steps with minimal effort and providing guidelines for operators to decrease the rate of errors [4]. Knoch et al. [28] also showed that sensor detection could increase the accuracy of operators.

As mentioned before, guidelines should be provided when the operators need them. Hoon et al. [26] showed that balancing the amount of guidance shown at the workstation against the operators’ expertise level can lead to clearer assistance for operators and, in addition, better quality control. So, being aware of the operators’ experience level in each activity can be helpful. Ghazarian and Noorhosseini [20] presented an automatic skill classifier based on a machine-learning algorithm to predict human expertise levels, considering the dynamic change in expertise levels over multiple repetitions of a specific task. Hockey [25] introduced OFS, which refers to the multidimensional pattern of processes that mediate task performance under different situations. They show that the relationship between the OFS and operator performance can lead to an OFS classification of operators into different categories related to one specific feature (e.g., junior/senior or capable/incapable). Based on [29], most of the works on OFS address binary OFS classification, and just a few investigate multi-class classification considering a single signal or several signals in OFS classifiers.

Thus, this research addresses finding an efficient algorithm to predict the operators’ skill level. As the sensor detects the presence of a hand or object for each required activity and operator in the assembly line, the captured data is a time series of start and stop times of each activity; mining such time series is one of the interesting problems in data mining [13]. Because the operators’ experience level on the assembly line needs to be categorized, the problem to be considered is time series classification (TSC), which has attracted significant attention in data mining research due to its wide range of real-life application domains [15, 31]. In recent decades, much research has focused on machine learning algorithms for classification problems, with both supervised and unsupervised models.

Different algorithms exist in the machine learning domain. Muirhead and Puff [33] offered a simple Bayesian method (NB) for the TSC problem on human heart rate data to distinguish the heart’s normal function from the symptoms of congestive heart failure. Furthermore, cancer classification, one of the vital problems in TSC, was done through an NB algorithm by [30], which reported good accuracy and area under the curve. Tran et al. [40] proposed a K-Nearest Neighbor (KNN) method with the help of time warping for TSC. Their experimental results show a significant improvement in TSC performance. Geler et al. [17] continued their investigation of the KNN classifier on time series data and the impact of various classic distance-based vote weighting schemes by considering constrained versions of distance measures. According to [6, 34], Random Forest Classifiers (RFC) and Support Vector Machines (SVM) can be efficient in classification problems. Aamir and Zaidi [1] applied SVM and RFC to obtain trained models for TSC problems and reported good accuracy on their time series traffic database.

According to [15], researchers have recently moved to deep learning techniques. Deep CNNs are among the efficient algorithms for TSC problems with high non-linearity. CNN algorithms have recently been used to support the deep learning process [11]. They are able to learn features without any need for manual feature engineering. A CNN automatically takes the input, learns the features, assigns weights and biases, and classifies the output. The main objective of a CNN is to reduce the input dimension for easier and faster processing while keeping the critical features that lead to a good prediction. In short, a CNN extracts the image’s features and converts them into a lower dimension without losing its characteristics [35]. Many researchers show the good performance of the CNN approach in different TSC problems [2, 42, 46]. Iwana and Uchida [27] solved the TSC problem with two different types of inputs using the 1D CNN method. Sinanc et al. [38] used a novel approach that converts the time series into images and feeds these images to a CNN classifier. They used the gradient-weighted class activation mapping method to explain the efficiency of their CNN.

In our previous paper [32], the research focused more on the CNN technique. In this research, we aim to extend the existing expertise on classification techniques for smart assistance in manufacturing assembly lines and focus more on evaluating the different techniques, considering precision, recall and training time metrics. Compared to the previous research, we now use more values in tuning the parameters of the considered classifiers and analyze the proposed classifiers in more depth. Three different scenarios are introduced to tackle this problem in Section 3. In the studied case, the operators’ skill levels range from level 1 as a non-expert level to level 4 as a high-expert one. The results show that CNN performs more accurately than the other classifiers on a real case study data set from the assembly manufacturing line. The rest of this paper is organized as follows. In Section 3, we introduce our methodology and framework. Section 4 discusses the proposed methods and results. Finally, the conclusions and future work are presented in Section 5.

3 Materials and methods

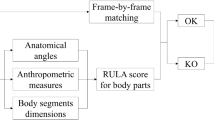

In this paper, experiments are performed on data from an industrial manual assembly environment where the activity of one assembly workstation is monitored. One operator is active per workstation, and their operations are supported by a smart guidance system from the company [3]. This system is installed above the workstation and consists of a smart 3D camera and a light projector. Through the smart camera, the execution of picking and assembly tasks is monitored and validated (Fig. 1a). Based on the observed pace of the operator, the platform provides the operator with real-time visual picking and assembly instructions through projected Augmented Reality (Fig. 1c). This technology helps operators to reduce the rate of errors and to improve the execution time [12].

3.1 Modelling of the time series

Because sensors and cameras used for quality control can help increase production efficiency, we use these devices to collect the duration of each activity in the assembly line as input. According to the Methods-Time Measurement (MTM) system description [18], the activities in the assembly line can be called micro-steps (such as the activities “take” and “reach”). By relying on installed sensors and cameras, ARKITE can monitor these micro-steps.

A micro-step involves two phases: comprehension and execution. The comprehension phase is the time the operator needs to figure out the assembly sequence of the execution phase, and the execution phase refers to performing the micro-step. The sensor detects the presence of the operator’s hand or an object in a specific area of the workstation based on a configured threshold, and it records ON and OFF triggers for hand or object present or absent (see Fig. 1b). Two timestamps are linked to these triggers: ON-time and OFF-time. The ON-time \(T_{m,ON}\) is the start time of the execution phase for micro-step m, and the OFF-time \(T_{m,OFF}\) marks the end of the execution phase for micro-step m. The recorded duration of micro-step m is \(T_{m,OFF} - T_{m-1,OFF}\), meaning the difference between the execution end time of micro-step m and that of the previous one.

However, for further calculation we introduce the concept of a step. The step is an unfinished kind of activity in an assembly line, and each step includes a number of different micro-steps. Figure 2 shows the duration of one step considering the micro-steps inside it. Instead of the duration of each micro-step, the input of the algorithm for calculating the OFS classes of each operator is the recorded duration of each step, defined as \(T_{m,ON} - T_{1,ON}\) for a step consisting of m micro-steps, as shown in Fig. 2. Thus, with the help of vision sensor detection, which relies on the movement and presence of the operator’s hand or an object, the recorded duration of a step is stored in a data set.
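The two duration definitions above can be illustrated with a minimal sketch. The `MicroStep` class, the function names, the `t_prev_off` argument, and the timestamps are all hypothetical and chosen only for illustration; the formulas are the ones stated in the text.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MicroStep:
    t_on: float   # T_{m,ON}: start of the execution phase, in seconds
    t_off: float  # T_{m,OFF}: end of the execution phase, in seconds


def micro_step_durations(micro_steps: List[MicroStep], t_prev_off: float) -> List[float]:
    """Recorded duration of each micro-step m: T_{m,OFF} - T_{m-1,OFF}.

    t_prev_off is the OFF-time that precedes the first micro-step
    (hypothetical argument; the text does not say how m = 1 is handled).
    """
    durations = []
    previous_off = t_prev_off
    for ms in micro_steps:
        durations.append(ms.t_off - previous_off)
        previous_off = ms.t_off
    return durations


def step_duration(micro_steps: List[MicroStep]) -> float:
    """Recorded duration of a step of m micro-steps: T_{m,ON} - T_{1,ON}."""
    return micro_steps[-1].t_on - micro_steps[0].t_on


# Made-up timestamps for a step with three micro-steps.
example_step = [MicroStep(1.0, 2.5), MicroStep(3.0, 4.0), MicroStep(5.5, 6.0)]
example_micro = micro_step_durations(example_step, t_prev_off=0.0)
example_total = step_duration(example_step)
```

Note that each micro-step duration includes the comprehension phase, because it is measured from the previous OFF trigger rather than from the micro-step's own ON trigger.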

The ARKITE system has an engineered rule-based approach that classifies operators to a certain OFS level based on the observed execution times of assembly steps. Based on this classification, the operators are given instructions with a different detail level, depending on a number of parameters and different types of thresholds. Each operator with their specific identification number should connect to the interface. The system will detect the duration of each step with the help of a vision sensor, record all the duration times, and then use several rules to estimate the OFS classes of the operators based on the recorded historical data as time series. Table 1 contains the features used for calculating the micro-step and step duration. Our ground truth is determined by ARKITE’s rule-based system and includes four OFS classes, starting from class 1 as the junior operator to class 4 as the senior operator.

The rule-based system used by ARKITE for OFS classification is proven in the commercial setting. This research tries to improve the solution’s scalability by replacing the rule-based system with an automated, data-driven one, in order to avoid having to set the parameters and thresholds manually.

The ground truth used in this research, collected and calculated by the rule-based system, consists of 34900 recorded times for 11 operators over 16 steps, labeled with four different OFS classes. Figure 3 shows the recorded time series for two different operators in one specific step. The OFS classes in the ground truth are shown with different colors changing over time. Here, we can see that in the ground truth the patterns of some classes (e.g., classes 3 and 4) appear to conflict with each other. We will discuss this in Section 4.

We introduce a significant parameter in this research. The time-window is the fixed number of most recent observation entries used as our classifier’s input. In other words, if TW is the size of the time-window, then in each training step we take the last TW recorded times as the new input to the classifier. So, with N samples in the data set and a time-window of length TW, we have \(N-TW+1\) sub-samples as the initial input for the considered algorithms. The sub-sample moves forward along the series according to the TW value. The sub-sample definition is explained in more detail in the next section. The effect of the time-window as a parameter in our case study is shown in Section 4.
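The windowing described above can be sketched as follows. Two points are assumptions rather than statements from the text: the window advances one observation at a time (which is what yields \(N-TW+1\) sub-samples), and each sub-sample takes the ground-truth label of its most recent entry. The function name and the sample values are hypothetical.

```python
from typing import List, Sequence, Tuple


def make_sub_samples(
    times: Sequence[float], labels: Sequence[int], tw: int
) -> Tuple[List[List[float]], List[int]]:
    """Cut one series of recorded step durations into overlapping windows.

    Assumptions: stride of 1, and the label of a window is the OFS class
    of its last (most recent) observation.
    """
    if len(times) != len(labels):
        raise ValueError("times and labels must have the same length")
    if tw < 1 or tw > len(times):
        raise ValueError("tw must be between 1 and the series length")
    windows, targets = [], []
    for end in range(tw, len(times) + 1):
        windows.append(list(times[end - tw:end]))  # the TW latest durations
        targets.append(labels[end - 1])            # label of the latest one
    return windows, targets


# Made-up durations (seconds) and OFS classes for one operator and one step.
durations = [42.0, 40.5, 39.0, 35.0, 33.5, 30.0]
ofs = [1, 1, 2, 2, 3, 3]
windows, targets = make_sub_samples(durations, ofs, tw=3)
```

With N = 6 and TW = 3 this produces 6 - 3 + 1 = 4 sub-samples. Larger windows give the classifier more history per prediction but leave fewer sub-samples per series and delay the first possible prediction.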

3.2 Functional scenarios

Taking into account an assembly manufacturing workstation with different operators and steps, we consider three functional scenarios (Table 2). Each scenario yields a different classification model, trained either on an individual basis or on a population basis.

The OFS class of the operator in each step is determined from their recorded durations. As each step is repeated by each operator, we obtain a series of recorded times as a time series vector \(X_{ijk}\), where i is the operator ID (\(i=1,...,n_O\), with \(n_O\) the number of operators), j is the step (\(j=1,...,n_S\), with \(n_S\) the number of steps), and k is the repetition number for step j by operator i. The ground truth label of \(X_{ijk}\) is \(Y_{ijk}\in \{1,2,3,4\}\). Following the window-size definition, a sub-sample of TW values of \(X_{ijk}\) is the input that a trained function maps to one of the classes. Therefore, our problem is a TSC problem. In addition, every operator is planned to do the steps as a sequence of the entire assembly process. Our main goal is OFS classification based on time data for an individual assembly step, independent of the other steps.

For a better understanding of the input in each scenario, the input sub-samples and the labels are: (1), (2):

According to Table 2, we describe the scenarios as follows:

Scenario 1, based on the level of the individual: we implement the classification algorithm for each operator, considering one step at a time. In this scenario, as shown in Fig. 4a, the processed recorded times of one operator gathered by the sensor for one specific step are the input for the algorithm. Therefore, the operator’s OFS class is determined relative to their own history. That is, to classify the OFS of operator i for step j, the classifier is trained by (1)-(2) for operator i and step j only.

Scenario 2, based on the level of the team: as in Fig. 4b, the classifier is trained on all the data at once. The input covers all the operators and all the steps, and the classifier is trained once with the entire data set, i.e., (1)-(2) for all \(i=1,\ldots ,n_O\) and \(j=1,\ldots ,n_S\).

Scenario 3, based on the level of the task: according to Fig. 4c, we train the algorithm with all the operators for one specific step. In this scenario, the model considers all the operators for a specific step and classifies them into different OFS classes relative to each other. That is, j is fixed while \(i=1,\ldots ,n_O\) ranges over all operators in the sub-samples based on (1)-(2).
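The three scenarios differ only in which (operator, step) series are pooled into one training set. A minimal sketch of that grouping is given below; the `model_key` and `count_models` helpers and the operator and step identifiers are hypothetical, not part of the described system.

```python
from typing import Hashable, Iterable


def model_key(operator: str, step: str, scenario: int) -> Hashable:
    """Return the identifier of the model that a series is assigned to."""
    if scenario == 1:   # individual level: one model per operator and step
        return (operator, step)
    if scenario == 2:   # team level: a single model for all data
        return "all"
    if scenario == 3:   # task level: one model per step, all operators pooled
        return step
    raise ValueError("scenario must be 1, 2 or 3")


def count_models(operators: Iterable[str], steps: Iterable[str], scenario: int) -> int:
    """Number of distinct classifiers that have to be trained."""
    steps = list(steps)
    return len({model_key(o, s, scenario) for o in operators for s in steps})


# Hypothetical identifiers matching the data set size (11 operators, 16 steps).
operators = [f"op{i}" for i in range(1, 12)]
steps = [f"step{j}" for j in range(1, 17)]
models_per_scenario = {s: count_models(operators, steps, s) for s in (1, 2, 3)}
```

For pooled scenarios it seems safest to build the time-window sub-samples per (operator, step) series first and pool afterwards, so that no window mixes durations from different operators or steps; this ordering is an assumption, as the text does not spell it out.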

3.3 Classification techniques

As explained before, several classifiers from the state of the art are suitable for the TSC problem. In this research, we investigate the performance of five different techniques: Naive Bayes (NB), K-nearest neighbor (KNN), Random Forest Classifier (RFC), Support Vector Machine (SVM), and Convolutional Neural Network (CNN).

NB is a technique that has remained popular over the years due to good performance in different types of classification problems [34]. The NB model assumes that given a class j, the features \(x_i\) are independent, so the joint probability density of these features can be factorized:

\[f_j(x) = \prod_{i=1}^{p} f_{ji}(x_i)\]

where p is the number of features and \(f_{ji}\) is the density of feature \(x_i\) within class j.

KNN is a model-free method for classification; it is highly unstructured, but as a black-box prediction engine it can be effective in real data problems [24]. The critical parameter for this classifier is the number of neighbors, which is the core deciding factor. The algorithm classifies each point by calculating its similarity to the other points in the data set. A distance metric (e.g., Euclidean, Manhattan, Minkowski) measures the similarity.
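As a rough illustration of the KNN idea, the sketch below classifies a window of durations by majority vote among its k nearest training windows. It is a plain-Python toy and not the implementation used in the experiments; the function names and data are made up. The Minkowski distance covers the Manhattan (p = 1) and Euclidean (p = 2) cases.

```python
from collections import Counter
from typing import List, Sequence


def minkowski(a: Sequence[float], b: Sequence[float], p: float = 2.0) -> float:
    """Minkowski distance; p=1 is Manhattan, p=2 is Euclidean."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def knn_predict(
    train_x: List[Sequence[float]],
    train_y: List[int],
    query: Sequence[float],
    k: int = 3,
    p: float = 2.0,
) -> int:
    """Majority vote among the k training windows closest to the query."""
    ranked = sorted((minkowski(x, query, p), y) for x, y in zip(train_x, train_y))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Made-up windows (TW = 3): short durations labeled class 4, long ones class 1.
train_x = [[10.0, 11.0, 10.0], [10.0, 10.0, 12.0], [30.0, 31.0, 29.0], [29.0, 30.0, 30.0]]
train_y = [4, 4, 1, 1]
predicted = knn_predict(train_x, train_y, query=[11.0, 10.0, 11.0], k=3)
```

Because distances are computed on raw feature values, KNN is sensitive to feature scale, which is one reason to standardize the inputs first.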

The essential idea of the RFC technique is to average many noisy but approximately unbiased models in order to reduce the variance. The main parameter is the number of decision trees T in the forest [24]. In RFC models, the importance of each feature in each decision tree is calculated first. Then the final feature importance at the random forest level is the average over all the decision trees, as

\[RFfi_i = \frac{\sum_{j=1}^{T} norm fi_{ij}}{T}\]

where T is the total number of trees, \(RFfi_i\) the importance of feature i calculated from all trees and \(norm fi_{ij}\) the normalized feature importance for feature i in tree j.
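The averaging of normalized per-tree importances described above amounts to a column mean over trees. The following minimal sketch uses made-up importance values; the function name and the nested-list layout (`norm_fi[j][i]` for tree j and feature i) are assumptions for illustration.

```python
from typing import List


def forest_feature_importance(norm_fi: List[List[float]]) -> List[float]:
    """Average the normalized importance of each feature over all T trees.

    norm_fi[j][i] is the normalized importance of feature i in tree j.
    """
    n_trees = len(norm_fi)
    n_features = len(norm_fi[0])
    return [sum(tree[i] for tree in norm_fi) / n_trees for i in range(n_features)]


# Made-up example with T = 3 trees and 2 features (each row sums to 1).
per_tree = [[0.5, 0.5], [0.25, 0.75], [0.75, 0.25]]
rf_importance = forest_feature_importance(per_tree)
```

In a windowed setting, each feature is one position in the time window, so the result would indicate which lags of the duration series the forest relies on most.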

SVM models try to maximize the margin between points of one class and those of another, and they can be easily kernelized to solve complex nonlinear classification problems [24, 37]. SVM fits using input features as h(x) and produces the nonlinear function

\[f(x) = h(x)^T \beta + \beta_0\]

where the vector \(\beta \) holds the coefficients and \(\beta_0\) is the intercept. Here, \(x=(x_1,\dots ,x_p)\) are the features of a data point, and h(x) is a non-linear kernel vector, typically with a higher dimension than p. For binary classification, the classifier is then

\[G(x) = \operatorname{sign}(f(x))\]

CNN models perform efficiently in the TSC problem. A CNN consists of an input layer, one or more hidden layers, and an output layer. In any feed-forward neural network, the middle layers are referred to as hidden because their inputs and outputs are masked by the activation function and the final convolution. In other words, convolutional neural networks are composed of multiple layers of artificial neurons. Artificial neurons, a rough imitation of their biological counterparts, are mathematical functions that calculate the weighted sum of multiple inputs and output an activation value [35].
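To make the notion of an artificial neuron and a one-dimensional convolution concrete, here is a small plain-Python sketch. It is not the network architecture used in this study; the ReLU activation, the kernel values, and the sample durations are assumptions chosen for illustration.

```python
from typing import List, Sequence


def relu(x: float) -> float:
    """Rectified linear activation."""
    return max(0.0, x)


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return relu(sum(w * x for w, x in zip(weights, inputs)) + bias)


def conv1d(series: Sequence[float], kernel: Sequence[float], bias: float = 0.0) -> List[float]:
    """Slide one neuron (the kernel) along the series without padding."""
    k = len(kernel)
    return [neuron(series[i:i + k], kernel, bias) for i in range(len(series) - k + 1)]


# Made-up step durations; the kernel [-1, 1] responds only when the
# duration increases from one repetition to the next.
series = [12.0, 11.0, 9.0, 9.5, 8.0]
feature_map = conv1d(series, kernel=[-1.0, 1.0])
```

The same kernel weights are reused at every position, which is why a convolutional layer can pick up a local pattern (here, a slowdown between consecutive repetitions) wherever it occurs in the window. In a trained CNN the kernel values are learned rather than set by hand.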

3.4 Experiment setup

In the existing data set as our ground truth, we have \(n_S=16\) steps and \(n_O=11\) different operators who work in a manual assembly line. For splitting the train and test set, we used the train-test split utility from the sklearn library [36], with stratification to achieve the same proportions of class labels as in the input data set. Also, we use 5-fold cross-validation to obtain a well-rounded evaluation metric and to use all our data in testing the model. Moreover, we used the standard scaler technique for normalization [19], which standardizes a feature by subtracting the mean and then scaling to unit variance, i.e., dividing all the values by the standard deviation.
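One plausible way to combine the stratified split, standard scaling, and 5-fold cross-validation with scikit-learn is sketched below. The synthetic data, the 80/20 split ratio, the random seeds, and the choice of a random forest as the example classifier are assumptions made for this sketch; the text does not state these details.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic stand-in data: 400 windows of TW = 20 durations, 4 OFS classes,
# where higher classes have shorter durations on average.
y = np.repeat([1, 2, 3, 4], 100)
X = rng.normal(loc=40.0 - 5.0 * y[:, None], scale=3.0, size=(400, 20))

# Stratified split keeps the class proportions equal in train and test sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0
)

# Scaling inside the pipeline means the mean and standard deviation are
# estimated on the training part of each fold only.
model = make_pipeline(
    StandardScaler(),
    RandomForestClassifier(n_estimators=50, random_state=0),
)

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
cv_scores = cross_val_score(model, X_train, y_train, cv=cv, scoring="accuracy")

model.fit(X_train, y_train)
test_accuracy = model.score(X_test, y_test)
```

Placing the scaler in the pipeline rather than scaling the full data set up front is a design choice that avoids leaking test-fold statistics into training.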

In machine learning, parameter tuning is essential for choosing optimal values for parameter sets in a learning algorithm. In Table 3, we introduce the different parameters we used in the considered classifiers based on the literature review.

In addition, we use categorical cross-entropy as the loss function in the CNN, which performs well in multi-class classification models [22]. The Adam optimizer is used, as the literature shows that it performs adequately for most linear and non-linear problems [37]. We have used two model types [23], a sequential one for Scenario 1 and Scenario 3 and a functional one for Scenario 2. For tuning the parameters, we used grid search for the considered classifiers at the level of the task (Scenario 3) and then used the tuned parameters to implement Scenario 1 and Scenario 2 for each classifier.
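For reference, categorical cross-entropy for a single sample is the negative log of the probability that the model assigns to the true class. The sketch below computes it by hand for the four OFS classes; the helper names, the clipping constant `eps`, and the probabilities are illustrative assumptions, not values from the experiments.

```python
import math
from typing import List, Sequence


def one_hot(label: int, n_classes: int = 4) -> List[float]:
    """Encode an OFS class in {1, ..., n_classes} as a one-hot vector."""
    return [1.0 if c == label else 0.0 for c in range(1, n_classes + 1)]


def categorical_cross_entropy(
    y_true: Sequence[float], y_pred: Sequence[float], eps: float = 1e-12
) -> float:
    """Loss for one sample: -sum_c y_true[c] * log(y_pred[c])."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_pred))


# Made-up predicted class probabilities for a sample whose true class is 3.
target = one_hot(3)
loss_confident = categorical_cross_entropy(target, [0.1, 0.1, 0.7, 0.1])
loss_confused = categorical_cross_entropy(target, [0.1, 0.1, 0.3, 0.5])
```

The loss grows as probability mass moves from the true class to another class, which is the kind of class 3 versus class 4 confusion the classifier is penalized for during training.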

The computing system used for these experiments was configured to ensure good performance and efficiency. It features an Intel Core i7-8700k CPU running at a base clock speed of 3.70GHz. Additionally, the system was equipped with 64GB of DDR4 RAM.

4 Results and discussion

In this section, we examine the performance of the proposed algorithms by assessing their ability to replicate the expert classes in close approximation to the rule-based system. Specifically, we investigate the performance of the introduced five state-of-the-art systems as the data-driven approaches, under three distinct scenarios, aiming to determine which system achieves the closest resemblance to the output of the rule-based system.

4.1 Classification performance in three scenarios

We consider three different scenarios to define the OFS classification problem. Each scenario aggregates the data on a different level. In Scenario 1, data is segmented per operator and per step on which individual classification models are built. In total, there are 176 models, i.e., 11 operators times 16 steps. In Scenario 2, we train them one time with all the operators and steps, and for Scenario 3, we will put the eleven operators in the pool for each step and start training our classifiers. For the window-size, we considered four values for the \(TW = [3,10,20,25]\). Table 4 shows the accuracy, precision, and recall of selected classifiers on the test sets, compared to the ground truth for four different time windows.

As is clear in Table 4, across the scenarios, all the classifiers perform approximately the same for a small window-size. However, as the window-size grows, CNN achieves better accuracy than the other classifiers. This difference is more evident in Scenario 2, where the classifier is trained over the entire data set, and in Scenario 3, where it is trained for one step but all the operators. So, by choosing a larger window-size, the accuracy of the classifiers in our case study can be increased. Moreover, the recall is in most cases equal to the accuracy, and the difference between precision and accuracy tends to be small, especially for larger time windows and for the CNN classifier, indicating a balanced model. Here, approximately equal values for the accuracy, precision, and recall metrics mean that the model is making accurate predictions for both positive and negative cases.
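The observation that recall often equals accuracy is what one would expect if the multi-class metrics are support-weighted averages, since weighted recall is then identical to accuracy by construction. Whether this averaging was used here is an assumption; the sketch below only demonstrates the identity on made-up labels, with hypothetical function names.

```python
from collections import Counter
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_scores(y_true: Sequence[int], y_pred: Sequence[int]):
    """Support-weighted precision and recall over all classes."""
    true_n, pred_n, tp = Counter(y_true), Counter(y_pred), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
    n = len(y_true)
    precision = sum(
        (true_n[c] / n) * (tp[c] / pred_n[c] if pred_n[c] else 0.0) for c in true_n
    )
    recall = sum((true_n[c] / n) * (tp[c] / true_n[c]) for c in true_n)
    return precision, recall


# Made-up labels in which two class 3 samples are predicted as class 4.
y_true = [1, 1, 2, 2, 3, 3, 3, 4, 4, 4]
y_pred = [1, 1, 2, 2, 3, 4, 4, 4, 4, 4]
acc = accuracy(y_true, y_pred)
w_precision, w_recall = weighted_scores(y_true, y_pred)
```

Here the accuracy and weighted recall are both 0.8, while the weighted precision is 0.88. Under this averaging, equal recall and accuracy carry no extra information about class balance, so per-class results such as a confusion matrix remain the more informative check.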

In Table 4, we present the results of the classifiers on the test data. It can be observed that the CNN algorithm demonstrates higher accuracy in replicating the output of the rule-based system across the various time-windows in the three introduced scenarios. Moreover, when examining each algorithm individually, Fig. 5 illustrates the influence of the time window in both individual and population-based scenarios. The impact of the window-size varies among the classifiers based on the introduced scenarios, as evidenced by Fig. 5. For Scenario 1, which represents the individual-based scenario, the KNN and NB classifiers exhibit a slight increase in accuracy values as the time-window increases. This effect of the window-size is relatively consistent across population-based Scenarios 2 and 3 for the same classifiers. However, the RFC and SVM classifiers demonstrate a more pronounced impact of the window-size in the individual-based scenario than the population-based scenarios. Notably, the CNN algorithm displays a significant impact of the window size across all the scenarios.

The accuracy for \(TW=20\) is shown in Fig. 6. In Scenario 1, the accuracy of CNN is higher than that of the other classifiers. However, RFC and SVM also show approximately 60% accuracy on average in Scenario 1. In Scenario 2, it is clear that CNN has the highest accuracy compared to the other classifiers. The other methods perform poorly in comparison to CNN (less than 50% accuracy). Moreover, Scenario 3 shows the same behavior as Scenario 2 in the considered data set. As is apparent in Fig. 6 and Table 4, the CNN algorithm performs more accurately than the other classifiers in all three scenarios and for all investigated window-sizes. In addition, it is the only classifier that performs better on average in Scenario 2 (trained on the measurements aggregated across all operators and all steps) than in Scenario 1 (trained on the measurements for the specific operator and step). Therefore, CNN seems to favor more training data (but less specific) over less training data (but more specific). The opposite seems to hold for the other classifiers, which may indicate that they are less generalizable.

As demonstrated in Table 4, larger time window-sizes increase the accuracy of the result. According to the results, for Scenario 1 and Scenario 2, the accuracy rises as the time window increases. However, the relative accuracy increase between \(TW=10\) and \(TW=20\) is much higher than the relative increase between \(TW=20\) and \(TW=25\). Additionally, in Scenario 3, as Table 4 shows, \(TW=20\) can be the best choice for the considered data set, with the most significant improvement in accuracy.

As Table 4 shows that CNN is the best choice in terms of accuracy, we now go deeper into the results of each scenario for the CNN method with \(TW=20\).

In Scenario 1, after training the classifier \(16\times 11\) times on the 5 validation folds, the average accuracy is 86% on the training sets and 71% on the test sets. In Fig. 7, we can see that the accuracy over operators and steps ranges from 58% to 87%, with an average of 71% for the entire data set. The low accuracy in some cases may be due to the limited amount of data for some of the operators doing one specific step. In addition, due to this lack of data, we used the tuned parameters from Scenario 3 for implementing Scenario 1, which may also explain the low accuracy of this scenario in comparison to the other scenarios.

For Scenario 2, after training the CNN classifier on the full training set in each fold, the average accuracy is 94% on the training sets and 78% on the test sets. For Scenario 3, with the CNN trained sixteen times in each fold, the average accuracy on the test sets is 86%. In Fig. 8, we show the accuracy for each of the sixteen steps for our CNN in Scenario 3. As the figure shows, the lowest accuracy is 77% for step KE, and the highest is 92% for step EE. The average test accuracy over all the steps is 86%.

In Fig. 9, we show the confusion matrices for three steps in Scenario 3 to illustrate the performance for each OFS class in these steps. Each matrix shows whether the predictions match the ground truth. As the figure shows, classes 1, 2, and 4 are predicted well, but class 3 is sometimes misclassified as class 4: operators who belong to class 3 in the ground truth tend to be predicted as class 4. Operators can easily switch between class 3 and class 4, even in the ground truth (Fig. 3).
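As an illustration of how a confusion matrix and per-class accuracy of this kind can be computed, the sketch below builds both from label lists. The helper names `confusion_matrix` and `per_class_accuracy` and the label vectors are hypothetical and chosen only to mimic the class 3 versus class 4 confusion described above; they are not data from the case study.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, classes):
    """Rows are ground-truth classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    cm = np.zeros((len(classes), len(classes)), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[index[t], index[p]] += 1
    return cm


def per_class_accuracy(cm):
    """Fraction of each ground-truth class that is predicted correctly."""
    row_totals = cm.sum(axis=1)
    # Classes with no samples get accuracy 0 instead of a division error.
    return np.divide(np.diag(cm), row_totals,
                     out=np.zeros(len(cm)), where=row_totals > 0)


# Hypothetical labels: class 3 is partly predicted as class 4.
y_true = [1, 1, 2, 2, 3, 3, 3, 4, 4, 4]
y_pred = [1, 1, 2, 2, 3, 4, 4, 4, 4, 4]

cm = confusion_matrix(y_true, y_pred, classes=[1, 2, 3, 4])
acc = per_class_accuracy(cm)
print(cm)
print(acc)
```

In this toy example, the off-diagonal entry in the row of class 3 and the column of class 4 captures exactly the kind of confusion discussed for Fig. 9, while the overall accuracy alone would hide which class is affected.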

To give a deeper view of CNN performance, Table 5 shows the test-set accuracy for the different classes in Scenario 2 and Scenario 3. The table shows that the accuracy of the four classes in these scenarios is acceptable on the test set. On the other hand, for efficient OFS classes in the data set, the OFS classification definition should be dimensional. The ground truth used in this case study has four OFS classes. Based on Figs. 3 and 9, we also implement CNN in Scenario 2 and Scenario 3 with three OFS classes. The last columns of Table 5 show the results for three OFS classes. As the table shows, considering three OFS classes instead of four yields higher accuracy for each class and on average: the average increases from 78% to 91% in Scenario 2 and from 86% to 92% in Scenario 3.
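The effect of reducing the number of classes can be sketched as a relabeling step applied to both the ground truth and the predictions. The text does not state which classes are combined; the mapping `FOUR_TO_THREE` below assumes that classes 3 and 4 are merged, since these are the classes confused in Fig. 9. The helper names and label vectors are hypothetical and serve only as an illustration.

```python
# Assumption: classes 3 and 4 are merged into one class; the paper does not
# specify the mapping, so this is only one plausible choice.
FOUR_TO_THREE = {1: 1, 2: 2, 3: 3, 4: 3}


def merge_classes(labels, mapping):
    """Relabel a sequence of class labels according to mapping."""
    return [mapping[c] for c in labels]


def accuracy(y_true, y_pred):
    """Share of positions where prediction and ground truth agree."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical labels in which class 3 is partly predicted as class 4.
y_true = [1, 1, 2, 2, 3, 3, 3, 4, 4, 4]
y_pred = [1, 1, 2, 2, 3, 4, 4, 4, 4, 4]

acc_four = accuracy(y_true, y_pred)
acc_three = accuracy(merge_classes(y_true, FOUR_TO_THREE),
                     merge_classes(y_pred, FOUR_TO_THREE))
print(acc_four, acc_three)
```

In this toy example every error is a confusion between the two merged classes, so the accuracy can only rise after merging. The sketch relabels the outputs of an existing classifier, whereas the results in Table 5 come from a CNN implemented with three classes, so the gain on real data also depends on retraining.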

To further assess how well the proposed CNN approach overcomes the start-up phase challenge of the rule-based algorithm, a series of training experiments was conducted in Scenario 3 with different entry values. In the rule-based algorithm, an initial expert level of 1 is assigned to an operator for the first n iterations of each task without computational evaluation, which necessitates such evaluation in new shifts. The entry values represent the initial number of repetitions of each operator’s step required to obtain a reliable estimate of their expert level. Figure 10 demonstrates that even with as few as 25 entries, the proposed algorithm achieves a 70% similarity to the rule-based algorithm, indicating its robust prediction capability.

Besides the classification accuracy, the training time is another important factor in choosing a classifier for a specific data set. Table 6 lists the training time of the CNN method in each scenario for window-sizes 3, 10, 20 and 25. As expected, the training time increases with the window-size. For the other classifiers, the average training time is less than one second for NB and KNN, and less than 100 seconds for SVM and RFC.

As the tables and plots above show, for our specific case study in an assembly environment, the proposed CNN algorithm outperforms the other methods in terms of accuracy in each of the three scenarios under consideration. In terms of training time, however, KNN and NB perform best, followed by RFC and SVM with training times of less than 100 s.

5 Conclusion

In this research, the operator’s skill level is classified based on the recorded time for performing each step. Integrating the proposed technique with augmented reality (to provide virtual/visual instructions in the workstation) creates innovative workstations that provide smart adaptive guidance for operators. By taking recorded time into account as a factor of task performance in OFS classification, and by knowing the different skill levels of operators, instructions and guidelines are shown in the working space only when necessary to guide expert and non-expert operators. This adaptive guidance can therefore support the development of skills and knowledge in operators while increasing the accuracy of manual tasks and reducing assembly error rates. Given the challenges of tuning thresholds and parameters in the existing rule-based system, we studied the capability of the proposed algorithms to mimic the output of the existing system across various scenarios.

Our results show that the proposed CNN method can serve as a more accurate classifier for the introduced scenarios at the individual, team, or task level, with specific input. Based on Scenario 1 as the individual-level analysis, Scenario 2 as the team-level analysis, and Scenario 3 as the task-level analysis, we find that CNN, as an automatic classifier, can learn and mimic the data prepared by the rule-based system more effectively than the alternatives. In comparison, the other classifiers (NB, KNN, RFC, SVM) do not classify the operator classes accurately in the OFS classification problem. On the other hand, the training time of CNN is longer than that of the other classifiers.

We examined the impact of time window-size on classifier performance, finding that a window-size of 20 improved metrics in Scenario 1 and Scenario 3, while a size of 25 was optimal for Scenario 2. The findings revealed that the window-size had distinct effects on the classifiers when analyzed in the context of individual-level, team-level, and task-level scenarios. Additionally, considering three OFS classes instead of four showed promising results, enhancing both individual class accuracy and overall classification performance.

The findings clearly demonstrate the challenging nature of replicating the mentioned type of classification, with only the CNN classifier capable of effectively handling the non-linearity present in the data set to achieve high accuracy in the TSC problem. Particularly in population-based approaches, such as Scenario 2 and Scenario 3, the CNN in the proposed system successfully addresses the limitations observed in the current rule-based algorithm, which involves extensive parameter and threshold tuning. By producing results closely aligned with the rule-based algorithm, the proposed system presents a viable alternative.

Future work can analyze the CNN layers and interpret the CNN method in order to turn the black-box model into a more interpretable white-box model. In addition, given the high ability of the smart classifier to adapt to the automated system, the classifier can be used to audit the system and to find inconsistencies between the two algorithms.

Availability of data and material

Because the data were collected from a real case study, and in accordance with the agreement with that specific production line, the data used in this paper are confidential.

Code Availability

The code can be made available to the reviewers on request. However, the code can only be shared without the data used in the case study.

Funding

This work was supported by the VLAIO-funded project ADAPT, in collaboration with the company ARKITE.

Contributions

All authors contributed to the study conception and design. The first draft of the manuscript was written by Fatemeh Besharati Moghaddam and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflicts of interest/Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Ethics approval, Consent to participate, Consent for publication

All authors participated in preparing the paper and read and approved the final manuscript to be sent to the journal for publication.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Besharati Moghaddam, F., Lopez, A.J., Van Gheluwe, C. et al. Data-driven operator functional state classification in smart manufacturing. Appl Intell 53, 29140–29152 (2023). https://doi.org/10.1007/s10489-023-05059-5

DOI: https://doi.org/10.1007/s10489-023-05059-5