Abstract

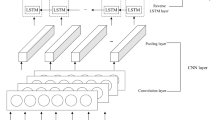

Time series analysis is the process of exploring and analyzing past trends to predict future events for any given time interval. Powered by recent advances in convolutional, recurrent and self-attention mechanisms, many deep learning methods have advanced the study of time series forecasting. Despite their effectiveness, however, intricate temporal irregularities still make it difficult to predict future trends accurately. Moreover, time series frequently exhibit features at multiple time scales, which existing approaches do not adequately take into account. To address these issues, this paper proposes Multi-scale Adaptive attention-based Time-Variant neural Networks (MATVN) for multi-step-ahead time series forecasting. Specifically, we contribute a novel framework that uses a Time-Variant architecture to capture the irregular dynamic behaviors observed in temporal data over time. Furthermore, we introduce a new Multi-scale Multi-head Adaptive attention module into the Time-Variant architecture to encode temporal dependencies from various pre-defined, scale-aware ranges. Additionally, we design a new Adaptive Window-aware Mask strategy that gives the module more flexible individual representation learning and a scale-aware attention scope for each token, so that it better captures multi-scale temporal patterns. Experimental results on datasets from several application scenarios, including climatology and energy consumption, demonstrate that the proposed model outperforms many recent state-of-the-art methods on multi-step time series forecasting tasks.
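The abstract describes attention restricted to several pre-defined, scale-aware ranges. The sketch below illustrates only that general idea, under loudly stated assumptions: each head is given one fixed, causal window (a step attends to itself and the `window - 1` preceding steps), the feature dimension is split evenly across heads, and learned query/key/value projections are omitted. It does not reproduce MATVN's Adaptive Window-aware Mask or its Time-Variant architecture, and the function names (`window_mask`, `masked_attention`, `multi_scale_attention`) are hypothetical; the authors' released code linked under Notes is the authoritative reference.

```python
import math
from typing import List

Matrix = List[List[float]]


def window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when step i may attend to step j.

    Assumption (not from the paper): a causal window covering steps
    i - window + 1 .. i, with window >= 1.
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def masked_attention(q: Matrix, k: Matrix, v: Matrix, mask: List[List[bool]]):
    """Scaled dot-product attention where masked-out positions get zero weight."""
    d = len(q[0])
    outputs, weights = [], []
    for i, qi in enumerate(q):
        # Disallowed positions get a score of -inf, so exp() maps them to 0.0.
        scores = [
            sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) if mask[i][j] else -math.inf
            for j, kj in enumerate(k)
        ]
        top = max(scores)  # finite, because step i can always see itself (window >= 1)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps]
        weights.append(row)
        # Each output is the attention-weighted sum of the value vectors.
        outputs.append([sum(row[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return outputs, weights


def multi_scale_attention(x: Matrix, windows: List[int]) -> Matrix:
    """Hypothetical multi-scale, multi-head attention: one fixed window per head."""
    n_heads = len(windows)
    d_model = len(x[0])
    if d_model % n_heads != 0:
        raise ValueError("feature dimension must be divisible by the number of heads")
    d_head = d_model // n_heads
    head_outputs = []
    for h, window in enumerate(windows):
        # Each head sees its own slice of the features (no learned projections here).
        xs = [row[h * d_head:(h + 1) * d_head] for row in x]
        out, _ = masked_attention(xs, xs, xs, window_mask(len(x), window))
        head_outputs.append(out)
    # Concatenate the head outputs step by step.
    return [[val for out in head_outputs for val in out[t]] for t in range(len(x))]


if __name__ == "__main__":
    x = [[float(t), float(t % 2), 1.0, -1.0] for t in range(6)]
    y = multi_scale_attention(x, windows=[1, 3])
    print(y[0])  # → [0.0, 0.0, 1.0, -1.0]
```

With `windows=[1, 3]`, the first head can only see the current step, so its half of each output row reproduces the input unchanged, while the second head forms an attention-weighted average over up to three recent steps. Giving different heads different windows is one simple way to let small windows focus on short-range patterns and larger ones on longer-range patterns.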


Data Availability

We use real-world datasets that are collected by [45].

Notes

The code has been released at https://github.com/chxiag/MATVN.

References

Xu C, Zhang A, Xu C, Chen Y (2022) Traffic speed prediction: spatiotemporal convolution network based on long-term, short-term and spatial features. Appl Intell 52(2):2224–2242

Banerjee S, Lian Y (2022) Data driven covid-19 spread prediction based on mobility and mask mandate information. Appl Intell 52(2):1969–1978

Peng Y, Gong D, Deng C, Li H, Cai H, Zhang H (2022) An automatic hyperparameter optimization DNN model for precipitation prediction. Appl Intell 52(3):2703–2719

Ben Taieb S, Bontempi G, Atiya A, Sorjamaa A (2012) A review and comparison of strategies for multi-step ahead time series forecasting based on the NN5 forecasting competition. Expert Syst Appl 39(8):7067–7083

Box G, Jenkins G (1976) Time series analysis: Forecasting and control (2nd ed)

Guin A (2006) Travel time prediction using a seasonal autoregressive integrated moving average time series model. In: IEEE Intelligent Transportation Systems Conference 493–498

Nawaz M, Fournier-Viger P, Shojaee A, Fujita H (2021) Using artificial intelligence techniques for COVID-19 genome analysis. Appl Intell 51(5):3086–3103

Rumelhart D, Hinton G, Williams R (1986) Learning representations by back-propagating errors. Nature 323(6088):533–536

Acharya U, Fujita H, Oh S, Hagiwara Y, Tan J, Adam M, Tan R (2019) Deep convolutional neural network for the automated diagnosis of congestive heart failure using ECG signals. Appl Intell 49(1):16–27

Vaswani A, Shazeer N, Parmar N, Uszkoreit J, Jones L, Gomez A, Kaiser L, Polosukhin I (2017) Attention is all you need. In: Advances in neural information processing systems 6000–6010

Dabrowski J, Zhang Y, Rahman A (2020) A time-variant deep feed-forward neural network architecture for multi-step-ahead time series forecasting. In: International Conference on Neural Information Processing 579–591

Oh J, Wang J, Tang S, Sjoding M, Wiens J (2019) Relaxed parameter sharing: Effectively modeling time-varying relationships in clinical time-series. PMLR 27–52

Kag A, Saligrama V (2021) Training recurrent neural networks via forward propagation through time. In: International Conference on Machine Learning 139:5189–5200

Yue X, Zhang C, Fujita H, Lv Y (2021) Clothing fashion style recognition with design issue graph. Appl Intell 51(6):3548–3560

LeCun Y, Bengio Y (1995) Convolutional networks for images, speech, and time series. In: The handbook of brain theory and neural networks 3361(10)

Gao C, Zhang N, Li Y, Bian F, Wan H (2022) Self-attention-based time-variant neural networks for multi-step time series forecasting. Neural Comput Appl 34(11):8737–8754

Mozer MC (1991) Induction of multiscale temporal structure. In: Advances in neural information processing systems 275–282

Wang M, Deng Z (2018) Densely connected CNN with multi-scale feature attention for text classification. In: International Joint Conference on Artificial Intelligence 4468–4474

Zhang Z, Zhao T, Gay H, Zhang W, Sun B (2021) Weaving attention u-net: A novel hybrid CNN and attention-based method for organs-at-risk segmentation in head and neck CT images. Med Phys 48(11):7052–7062

Ma Q, Yan J, Lin Z, Yu L, Chen Z (2021) Deformable self-attention for text classification. IEEE/ACM Trans Audio Speech Lang Process 29:1570–1581

Guo Q, Qiu X, Liu P, Xue X, Zhang Z (2020) Multi-scale self-attention for text classification. In: Association for the Advancement of Artificial Intelligence 34(5):7847–7854

Shen T, Zhou T, Long G, Jiang J, Pan S, Zhang C (2018) Directional self-attention network for RNN/CNN-free language understanding. In: Association for the Advancement of Artificial Intelligence 32(1):5446–5455

Li S, Jin X, Xuan Y, Zhou X, Chen W, Wang Y, Yan X (2019) Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. In: Neural Information Processing Systems 32:5244–5254

Li Y, Zhang K, Cao J, Timofte R, Van Gool L (2021) LocalViT: Bringing locality to vision transformers. In: Conference on Computer Vision and Pattern Recognition. arXiv:2104.05707

Hajirahimi Z, Khashei M (2023) Hybridization of hybrid structures for time series forecasting: a review. Artif Intell Rev 56(2):1201–1261

Wu Y, Zhao X, Li Y, Guo L, Zhu X, Fournier-Viger P, Wu X (2022) OPR-Miner: Order-preserving rule mining for time series. arXiv:2209.08932

Chen H, Rossi RA, Mahadik K, Kim S, Eldardiry H (2023) Graph deep factors for probabilistic time-series forecasting. ACM Trans Knowl Discov Data 17(2):26:1–26:30

Ilhan F, Karaahmetoglu O, Balaban I, Kozat S (2021) Markovian RNN: An adaptive time series prediction network with HMM-based switching for nonstationary environments. IEEE Trans Neural Netw Learn Syst, 1–14

Cirstea R, Kieu T, Guo C, Yang B, Pan S (2021) EnhanceNet: Plugin neural networks for enhancing correlated time series forecasting. In: International Conference on Data Engineering 1739–1750

Bai S, Kolter J, Koltun V (2018) An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv:1803.01271

Liu S, Ji H, Wang M (2020) Nonpooling convolutional neural network forecasting for seasonal time series with trends. IEEE Trans Neural Netw Learn Syst 31(8):2879–2888

Tang W, Long G, Liu L, Zhou T, Blumenstein M (2022) Omni-scale CNNs: a simple and effective kernel size configuration for time series classification. In: International Conference on Learning Representations

Choromanski K, Likhosherstov V, Dohan D, Song X, Gane A, Sarlos T, Hawkins P, Davis J, Mohiuddin A, Kaiser L, Belanger D, Colwell L, Weller A (2021) Rethinking attention with performers. In: International Conference on Learning Representations

Fan Z, Liu Z, Wang A, Nazari Z, Zheng L, Peng H, Yu P (2022) Sequential recommendation via stochastic self-attention. In: Proceedings of the ACM Web Conference 2036–2047

Lin Y, Koprinska I, Rana M (2021) SSDNet: State space decomposition neural network for time series forecasting. In: International Conference on Data Mining 370–378

Wu H, Xu J, Wang J, Long M (2021) Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. In: Advances in Neural Information Processing Systems 34:22419–22430

Wu N, Green B, Ben X, O'Banion S (2020) Deep transformer models for time series forecasting: The influenza prevalence case. arXiv:2001.08317

Zhou T, Ma Z, Wen Q, Wang X, Sun L, Jin R (2022) FEDformer: Frequency enhanced decomposed transformer for long-term series forecasting. In: International Conference on Machine Learning. arXiv:2201.12740

Shih SH, Tsokos CP (2008) A weighted moving average process for forecasting. J Mod Appl Stat Meth 7(1):15

Oppenheim A, Schafer R, Buck J (2009) Discrete-time signal processing (2nd ed). Pearson Education signal processing series

Bahdanau D, Cho K, Bengio Y (2015) Neural machine translation by jointly learning to align and translate. In: International Conference on Learning Representations. arXiv:1409.0473

Salinas D, Flunkert V, Gasthaus J, Januschowski T (2020) DeepAR: Probabilistic forecasting with autoregressive recurrent networks. Int J Forecasting 36(3):1181–1191

Bai S, Kolter J, Koltun V (2018) An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv:1803.01271

Kitaev N, Kaiser L, Levskaya A (2020) Reformer: The efficient transformer. In: International Conference on Learning Representations. arXiv:2001.04451

Zhou H, Zhang S, Peng J, Zhang S, Li J, Xiong H, Zhang W (2021) Informer: Beyond efficient transformer for long sequence time-series forecasting. In: Association for the Advancement of Artificial Intelligence 35(12):11106–11115

Acknowledgements

This research was supported by the National Key R&D Program for the Core Technology of Innovation and Entrepreneurship based on AI under Grant 2019YFB1405202.


Ethics declarations

Conflicts of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Changxia, G., Ning, Z., Youru, L. et al. Multi-scale adaptive attention-based time-variant neural networks for multi-step time series forecasting. Appl Intell 53, 28974–28993 (2023). https://doi.org/10.1007/s10489-023-05057-7


DOI: https://doi.org/10.1007/s10489-023-05057-7