Abstract

Meta-reinforcement learning (Meta-RL) exploits structure shared among tasks to enable rapid adaptation to new tasks from only a small amount of experience. However, most existing Meta-RL algorithms either lack theoretical generalization guarantees or offer them only under restrictive assumptions (e.g., strong assumptions on the data distribution). This paper presents the first theoretical analysis that estimates the generalization performance of a Meta-RL learner using PAC-Bayesian theory. Applying PAC-Bayesian theory to Meta-RL is challenging because dependencies in the training data invalidate the independent and identically distributed (i.i.d.) assumption. To address this challenge, we propose a dependency graph-based offline decomposition (DGOD) approach, which uses offline sampling and graph decomposition to decompose non-i.i.d. Meta-RL data into multiple offline i.i.d. datasets. With the DGOD approach, we derive practical PAC-Bayesian offline Meta-RL generalization bounds and design an algorithm with generalization guarantees that optimizes them, called PAC-Bayesian Offline Meta-Actor-Critic (PBOMAC). Experiments on several challenging Meta-RL benchmarks show that our algorithm is effective at avoiding meta-overfitting and outperforms recent state-of-the-art Meta-RL algorithms that lack generalization bounds.

References

Amit R, Meir R (2018) Meta-learning by adjusting priors based on extended pac-bayes theory. In: International Conference on Machine Learning, PMLR, pp 205–214

Arriba-Pérez F, García-Méndez S, González-Castaño FJ, et al (2022) Automatic detection of cognitive impairment in elderly people using an entertainment chatbot with natural language processing capabilities. J Ambient Intell Human Comput pp 1–16

Belkhale S, Li R, Kahn G et al (2021) Model-based meta-reinforcement learning for flight with suspended payloads. IEEE Robot Autom Lett 6(2):1471–1478

Brockman G, Cheung V, Pettersson L, et al (2016) Openai gym. arXiv e-prints pp arXiv–1606

Catoni O (2007) Pac-bayesian supervised classification: the thermodynamics of statistical learning. Stat 1050:3

Dhanaseelan FR, Sutha MJ (2021) Detection of breast cancer based on fuzzy frequent itemsets mining. Irbm 42(3):198–206

Duan Y, Schulman J, Chen X, et al (2016) Rl\(^{2}\): Fast reinforcement learning via slow reinforcement learning. arXiv:1611.02779

Fakoor R, Chaudhari P, Soatto S, et al (2019) Meta-q-learning. In: ICLR 2019: Proceedings of the Seventh International Conference on Learning Representations

Fard M, Pineau J (2010) Pac-bayesian model selection for reinforcement learning. Adv Neural Inf Process Syst 23

Fard MM, Pineau J, Szepesvári C (2011) Pac-bayesian policy evaluation for reinforcement learning. In: Proceedings of the Twenty-Seventh Conference on Uncertainty in Artificial Intelligence, pp 195–202

Finn C, Levine S (2019) Meta-learning: from few-shot learning to rapid reinforcement learning. In: ICML

Finn C, Abbeel P, Levine S (2017) Model-agnostic meta-learning for fast adaptation of deep networks. In: International conference on machine learning, PMLR, pp 1126–1135

Fujimoto S, Gu SS (2021) A minimalist approach to offline reinforcement learning. Adv Neural Inf Process Syst 34:20,132-20,145

Fujimoto S, Hoof H, Meger D (2018a) Addressing function approximation error in actor-critic methods. In: International conference on machine learning, PMLR, pp 1587–1596

Fujimoto S, Hoof H, Meger D (2018b) Addressing function approximation error in actor-critic methods. In: International conference on machine learning, PMLR, pp 1587–1596

Germain P, Lacasse A, Laviolette F, et al (2009) Pac-bayesian learning of linear classifiers. In: Proceedings of the 26th Annual International Conference on Machine Learning, pp 353–360

Guan J, Lu Z (2022) Fast-rate pac-bayesian generalization bounds for meta-learning. In: International Conference on Machine Learning, PMLR, pp 7930–7948

Guo S, Yan Q, Su X et al (2021) State-temporal compression in reinforcement learning with the reward-restricted geodesic metric. IEEE Trans Pattern Anal Mach Intell 44(9):5572–5589

Haarnoja T, Zhou A, Abbeel P, et al (2018) Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In: International conference on machine learning, PMLR, pp 1861–1870

Hoeffding W (1994) Probability inequalities for sums of bounded random variables. In: The collected works of Wassily Hoeffding. Springer, p 409–426

Hsu KC, Ren AZ, Nguyen DP et al (2023) Sim-to-lab-to-real: safe reinforcement learning with shielding and generalization guarantees. Artif Intell 314(103):811

Huang B, Feng F, Lu C, et al (2021) Adarl: What, where, and how to adapt in transfer reinforcement learning. In: International Conference on Learning Representations

Humplik J, Galashov A, Hasenclever L, et al (2019) Meta reinforcement learning as task inference. arXiv:1905.06424

Janson S (2004) Large deviations for sums of partly dependent random variables. Random Structures & Algorithms 24(3):234–248

Langford J, Shawe-Taylor J (2002) Pac-bayes & margins. Adv Neural Inf Process Syst 15:439–446

Lee DD, Pham P, Largman Y, et al (2009) Advances in neural information processing systems 22. Tech. rep., Tech. Rep., Tech. Rep

Levine S, Kumar A, Tucker G, et al (2020) Offline reinforcement learning: Tutorial, review, and perspectives on open problems

Li J, Vuong Q, Liu S, et al (2020a) Multi-task batch reinforcement learning with metric learning. In: Larochelle H, Ranzato M, Hadsell R, et al (eds) Advances in Neural Information Processing Systems, vol 33. Curran Associates, Inc., pp 6197–6210

Li L, Yang R, Luo D (2020b) Focal: Efficient fully-offline meta-reinforcement learning via distance metric learning and behavior regularization. In: International Conference on Learning Representations

Lin Z, Thomas G, Yang G et al (2020) Model-based adversarial meta-reinforcement learning. Adv Neural Inf Process Syst 33:10,161-10,173

Liu T, Huang J, Liao T et al (2022) A hybrid deep learning model for predicting molecular subtypes of human breast cancer using multimodal data. Irbm 43(1):62–74

Majumdar A, Farid A, Sonar A (2021) Pac-bayes control: learning policies that provably generalize to novel environments. Int J Robot Res 40(2–3):574–593

McAllester DA (1999a) Pac-bayesian model averaging. In: Proceedings of the twelfth annual conference on Computational learning theory. Citeseer, pp 164–170

McAllester DA (1999) Some pac-bayesian theorems. Mach Learn 37(3):355–363

Mitchell E, Rafailov R, Peng XB, et al (2021a) Offline meta-reinforcement learning with advantage weighting. In: International Conference on Machine Learning, PMLR, pp 7780–7791

Mitchell E, Rafailov R, Peng XB, et al (2021b) Offline meta-reinforcement learning with advantage weighting. In: International Conference on Machine Learning, PMLR, pp 7780–7791

Mnih V, Kavukcuoglu K, Silver D et al (2015) Human-level control through deep reinforcement learning. Nature 518(7540):529–533

Mnih V, Badia AP, Mirza M, et al (2016) Asynchronous methods for deep reinforcement learning. In: International conference on machine learning, PMLR, pp 1928–1937

Mubarak D et al (2022) Classification of early stages of esophageal cancer using transfer learning. IRBM 43(4):251–258

Nagabandi A, Clavera I, Liu S, et al (2019) Learning to adapt in dynamic, real-world environments through meta-reinforcement learning. In: ICLR 2019: Proceedings of the Seventh International Conference on Learning Representations

Neyshabur B, Bhojanapalli S, McAllester D, et al (2017a) Exploring generalization in deep learning. Adv Neural Inf Process Syst 30

Neyshabur B, Bhojanapalli S, Srebro N (2017b) A pac-bayesian approach to spectrally-normalized margin bounds for neural networks. In: International Conference on Learning Representations

Pentina A, Lampert CH (2015) Lifelong learning with non-iid tasks. Adv Neural Inf Process Syst 28:1540–1548

Pong VH, Nair AV, Smith LM, et al (2022) Offline meta-reinforcement learning with online self-supervision. In: International Conference on Machine Learning, PMLR, pp 17,811–17,829

Rahman MM, Ghasemi Y, Suley E et al (2021) Machine learning based computer aided diagnosis of breast cancer utilizing anthropometric and clinical features. Irbm 42(4):215–226

Rajasenbagam T, Jeyanthi S, Pandian JA (2021) Detection of pneumonia infection in lungs from chest x-ray images using deep convolutional neural network and content-based image retrieval techniques. J Ambient Intell Human Comput pp 1–8

Rakelly K, Zhou A, Finn C, et al (2019) Efficient off-policy meta-reinforcement learning via probabilistic context variables. In: International conference on machine learning, PMLR, pp 5331–5340

Ralaivola L, Szafranski M, Stempfel G (2009) Chromatic pac-bayes bounds for non-iid data. In: Artificial Intelligence and Statistics, PMLR, pp 416–423

Ralaivola L, Szafranski M, Stempfel G (2010) Chromatic pac-bayes bounds for non-iid data: Applications to ranking and stationary \(\beta \)-mixing processes. J Mach Learn Res 11(65):1927–1956

Rezazadeh A (2022) A unified view on pac-bayes bounds for meta-learning. In: International Conference on Machine Learning, PMLR, pp 18,576–18,595

Rothfuss J, Lee D, Clavera I, et al (2019) Promp: Proximal meta-policy search. In: ICLR 2019: Proceedings of the Seventh International Conference on Learning Representations

Rothfuss J, Fortuin V, Josifoski M, et al (2021) Pacoh: Bayes-optimal meta-learning with pac-guarantees. In: International Conference on Machine Learning, PMLR, pp 9116–9126

Scheinerman ER, Ullman DH (2011) Fractional graph theory: a rational approach to the theory of graphs. Courier Corp

Seeger M (2002) Pac-bayesian generalisation error bounds for gaussian process classification. J Mach Learn Res 3(oct):233–269

Shawe-Taylor J, Williamson RC (1997) A pac analysis of a bayesian estimator. In: Proceedings of the tenth annual conference on Computational learning theory, pp 2–9

Sutton RS, Barto AG (1998) Introduction to Reinforcement Learning. MIT Press

Sutton RS, Barto AG (2018) Reinforcement learning: An introduction. MIT press

Todorov E, Erez T, Tassa Y (2012) Mujoco: A physics engine for model-based control. In: 2012 IEEE/RSJ international conference on intelligent robots and systems, IEEE, pp 5026–5033

Wang H, Zheng S, Xiong C, et al (2019) On the generalization gap in reparameterizable reinforcement learning. In: International Conference on Machine Learning, PMLR, pp 6648–6658

Williams RJ (1992) Simple statistical gradient-following algorithms for connectionist reinforcement learning. Mach learn 8(3):229–256

Yang Y, Caluwaerts K, Iscen A, et al (2019) Norml: No-reward meta learning. In: Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems, pp 323–331

Yu T, Quillen D, He Z, et al (2020) Meta-world: A benchmark and evaluation for multi-task and meta reinforcement learning. In: Kaelbling LP, Kragic D, Sugiura K (eds) Proceedings of the Conference on Robot Learning, Proceedings of Machine Learning Research, vol 100. PMLR, pp 1094–1100

Zhang T, Guo S, Tan T, et al (2022) Adjacency constraint for efficient hierarchical reinforcement learning. IEEE Trans Pattern Anal Mach Intell

Zintgraf L, Shiarlis K, Igl M, et al (2020) Varibad: A very good method for bayes-adaptive deep rl via meta-learning. In: ICLR 2020: Proceedings of the Eighth International Conference on Learning Representations

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grants 62206151 and 62072355, the China National Postdoctoral Program for Innovative Talents under Grant BX20220167, the Shuimu Tsinghua Scholar Program, the Key Research and Development Program of Shaanxi Province of China under Grant 2022KWZ-10, and the Natural Science Foundation of Guangdong Province of China under Grant 2022A1515011424. Finally, we express our gratitude to Bo Wan for his contributions to refining the language and conducting a segment of the new experiments.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflicts of interest related to this work.

Appendices

Appendix A: Proof and derivation

A.1 Auxiliary Results

We introduce Lemma 1 prior to deriving our result.

Lemma 1

(Change of Measure Inequality) Let \(\mathcal {F}\) be a set of random variables f. Let \({{\mathcal {S}}^{*}}=\{{{X}_{k}}\}_{k=1}^{K}\) be a sequence of random variables with each component \({{X}_{k}}(k\in [K])\) drawn independently according to the measure \({{u}_{k}}\) over the set \({{A}_{k}}\). Then, for any functions R(f), r(f) over \(\mathcal {F}\), either of which may be a statistic of \({{\mathcal {S}}^{*}}\), any reference measure \(\pi \) over \(\mathcal {F}\), any \(\lambda > 0\), and any convex function \(\mathcal {C}:\mathbb {R}\times \mathbb {R}\rightarrow \mathbb {R}\), the following holds for any measure \(\rho \) over \(\mathcal {F}\):

where \(KL(\rho \Vert \pi )\) denotes the KL-divergence between the distributions \(\rho \) and \(\pi \).
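The displayed inequality of Lemma 1 is not reproduced in this version of the text. For orientation only, the standard change of measure (Donsker-Varadhan) inequality, written in the notation of Lemma 1, reads as follows. This is the textbook form and is an assumption about what the lemma states; the authors' exact statement may differ, for example by moving the expectation over \(\rho \) inside \(\mathcal {C}\).

```latex
\[
\mathbb{E}_{f\sim \rho}\bigl[\lambda\,\mathcal{C}(R(f), r(f))\bigr]
\;\le\;
KL(\rho \Vert \pi)
+ \ln \mathbb{E}_{f\sim \pi}\Bigl[e^{\lambda\,\mathcal{C}(R(f), r(f))}\Bigr].
\]
% Since \mathcal{C} is convex, Jensen's inequality further gives
\[
\lambda\,\mathcal{C}\bigl(\mathbb{E}_{f\sim \rho}R(f),\,\mathbb{E}_{f\sim \rho}r(f)\bigr)
\;\le\;
\mathbb{E}_{f\sim \rho}\bigl[\lambda\,\mathcal{C}(R(f), r(f))\bigr].
\]
```

Chaining the two lines bounds \(\lambda \,\mathcal {C}\) evaluated at the \(\rho \)-averaged quantities by the KL term plus a log-moment term under the reference measure \(\pi \), which is the shape of bound that the proofs below rely on.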

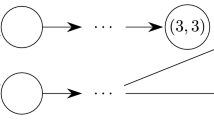

Next, we state a fundamental lemma [24] on exact proper fractional covers of the dependence graph.

Lemma 2

If \(C=\{({{C}_{j}},{{w}_{j}})\}_{j=1}^{J}\) is an exact fractional cover of the dependence graph \(\Gamma =(V,E)\), with \(V=[K]\), then

Further, \(K=\sum \limits _{j=1}^{J}{{{w}_{j}}\vert {{C}_{j}}\vert }\), where \(\vert {{C}_{j}}\vert \) is the size of \({{C}_{j}}\).
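The counting identity in Lemma 2 can be checked numerically on a small example. The sketch below is illustrative only: the graph, the greedy coloring routine, and the function names are hypothetical and not taken from the paper. It builds a proper cover (all weights equal to 1) of a toy dependence graph and verifies \(K=\sum _{j}{{w}_{j}}\vert {{C}_{j}}\vert \). It also checks the identity \(\sum _{k}{{t}_{k}}=\sum _{j}{{w}_{j}}\sum _{k\in {{C}_{j}}}{{t}_{k}}\), which is the form this lemma usually takes in the chromatic PAC-Bayes literature; that this matches the omitted display is an assumption.

```python
from itertools import combinations


def greedy_proper_cover(num_vertices, edges):
    """Greedy coloring: split the vertices into independent sets, each with weight 1."""
    neighbors = {v: set() for v in range(num_vertices)}
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    classes = []
    for v in range(num_vertices):
        for cls in classes:
            if not (cls & neighbors[v]):  # v has no neighbor in this class
                cls.add(v)
                break
        else:
            classes.append({v})
    return [(frozenset(cls), 1) for cls in classes]


def is_exact_proper_cover(cover, num_vertices, edges):
    """Check that every set is independent and every vertex has total weight 1."""
    edge_set = {frozenset(e) for e in edges}
    independent = all(
        frozenset(pair) not in edge_set
        for cls, _ in cover
        for pair in combinations(sorted(cls), 2)
    )
    exact = all(
        sum(w for cls, w in cover if v in cls) == 1 for v in range(num_vertices)
    )
    return independent and exact


# Hypothetical dependence graph on K = 6 variables: path 0-1-2, edge 3-4, vertex 5 isolated.
K = 6
edges = [(0, 1), (1, 2), (3, 4)]
cover = greedy_proper_cover(K, edges)

# Arbitrary per-variable values t_k used to test the summation identity.
t = [0.5, -1.0, 2.0, 3.0, 0.25, 1.5]
total = sum(t)
total_via_cover = sum(w * sum(t[k] for k in cls) for cls, w in cover)
size_via_cover = sum(w * len(cls) for cls, w in cover)
```

Because every vertex receives total weight exactly 1, regrouping any sum over the \(K\) variables by cover element leaves it unchanged; this is what lets the proof of Proposition 1 treat each independent block separately.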

A.2 Proof of Theorem 1

Before giving the proof, we present Proposition 1, which is used to obtain our result.

Proposition 1

In the setting of Lemma 1, set \(\mathcal {C}(q,p)=q-p\). Let \(R(f,{{\mathcal {S}}^{*}})=\frac{1}{K}\sum \nolimits _{k=1}^{K}{{{E}_{{{X}_{k}}}}{{g}_{k}}(f,{{X}_{k}})}\) and \(r(f,{{\mathcal {S}}^{*}})=\frac{1}{K}\sum \nolimits _{k=1}^{K}{{{g}_{k}}(f,{{X}_{k}})}\), where \({{g}_{k}}:\mathcal {F}\times {{A}_{k}}\rightarrow [{{a}_{k}},{{b}_{k}}]\) is a bounded function. Then, for any reference measure \(\pi \) over \(\mathcal {F}\), with probability at least \(1-\delta \) over the draw of \({{\mathcal {S}}^{*}}\), the following holds for any measure \(\rho \):

where \(W=\sum \limits _{j=1}^{J}{{{w}_{j}}}\) denotes the fractional chromatic number of the dependence graph of \({{\mathcal {S}}^{*}}\).

Proof

A cover can be viewed as a special case of a fractional cover in which every \({{w}_{j}}\) equals 1; thus, all results for fractional covers also apply to covers. Let \(C=\{({{C}_{j}},{{w}_{j}})\}_{j=1}^{J}\) be an exact proper fractional cover of the dependence graph \(\Gamma ({{\mathcal {S}}^{*}})\). Defining \({{\pi }_{j}}=\frac{{{w}_{j}}\vert {{C}_{j}}\vert }{K}\), we obtain \(\sum \limits _{j}{{{\pi }_{j}}=1}\) from the identity \(K=\sum \limits _{j=1}^{J}{{{w}_{j}}\vert {{C}_{j}}\vert }\) in Lemma 2. Based on Definition 2, we define \({{\mathcal {S}}_{j}}^{*}={{\{{{X}_{k}}\}}_{k\in {{C}_{j}}}}\) and observe that the elements of each \({{\mathcal {S}}_{j}}^{*}\) are mutually independent. In other words, viewing \({{\mathcal {S}}^{*}}=\{{{\mathcal {S}}_{j}}^{*}\}_{j=1}^{J}\) as a set of random variables, selecting a (fractional) proper cover of \(\Gamma ({{\mathcal {S}}^{*}})\) divides \({{\mathcal {S}}^{*}}\) into subsets of independent random variables. This property plays a crucial role in establishing our results. By applying Lemma 2, we have

Meanwhile, we also have

Since \(\mathcal {C}(q,p)=q-p\), we have

For any fixed f, the factors are independent. Hoeffding's lemma can therefore be applied to each factor, since each \({{g}_{k}}:\mathcal {F}\times {{A}_{k}}\rightarrow [{{a}_{k}},{{b}_{k}}]\) is bounded. Thus, we have:

By taking the expectation over \(f\sim \pi \), we have

where the second equality uses Fubini’s theorem. Then, with a probability of at least \(1-\delta \), we have

where the first inequality holds by the change of measure inequality in Lemma 1, the second inequality uses Markov’s inequality, and the last inequality was proved above. Letting \({{a}_{1}}=\cdots ={{a}_{\vert {{C}_{j}}\vert }}=a\) and \({{b}_{1}}=\cdots ={{b}_{\vert {{C}_{j}}\vert }}=b\), we have:

where \(W=\sum \limits _{j=1}^{J}{{{w}_{j}}}\) denotes the total weight of the fractional cover of the dependence graph of \({{\mathcal {S}}^{*}}\).

The right-hand side of the above inequality is increasing in W, so it is minimized when W equals the fractional chromatic number \({{X}^{*}}({{\mathcal {S}}^{*}})\), which can be taken as a measure of the amount of dependence within \({{\mathcal {S}}^{*}}\). This completes the proof.
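To make the role of the fractional chromatic number concrete, the following sketch compares an ordinary proper cover with an exact proper fractional cover on a toy dependence graph. The 5-cycle is a hypothetical example chosen for illustration and does not come from the paper; its chromatic number is 3, while its fractional chromatic number is 5/2, so the fractional cover attains a smaller total weight W.

```python
from fractions import Fraction
from itertools import combinations


def is_exact_proper_fractional_cover(cover, num_vertices, edges):
    """Each set must be independent and each vertex must receive total weight 1."""
    edge_set = {frozenset(e) for e in edges}
    independent = all(
        frozenset(pair) not in edge_set
        for cls, _ in cover
        for pair in combinations(sorted(cls), 2)
    )
    exact = all(
        sum(w for cls, w in cover if v in cls) == 1 for v in range(num_vertices)
    )
    return independent and exact


def total_weight(cover):
    """W = sum of the cover weights w_j."""
    return sum(w for _, w in cover)


# Hypothetical dependence graph: a cycle on K = 5 variables.
K = 5
cycle_edges = [(i, (i + 1) % K) for i in range(K)]

# Ordinary proper cover (a 3-coloring), all weights equal to 1.
coloring = [({0, 2}, 1), ({1, 3}, 1), ({4}, 1)]

# Exact fractional cover: five independent pairs, each with weight 1/2.
half = Fraction(1, 2)
fractional = [({i, (i + 2) % K}, half) for i in range(K)]

W_coloring = total_weight(coloring)      # total weight of the 3-coloring
W_fractional = total_weight(fractional)  # total weight of the fractional cover
```

Here both covers satisfy \(K=\sum _{j}{{w}_{j}}\vert {{C}_{j}}\vert \), but the fractional cover has the smaller total weight, which is why a bound that increases with W is tightest when stated with the fractional chromatic number.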

Next, we apply Proposition 1 to derive Propositions 2 and 3.

Step 1: Bounding \(\mathcal {C}(L(\mathcal {Q},\Im ),\hat{L}(\mathcal {Q},{{\mathcal {D}}_{1}},\cdots ,{{\mathcal {D}}_{n}}))\) for Meta-RL, we obtain:

Proposition 2

For any \(\delta \in (0,1)\), with a probability of at least \(1-\delta \) over the drawing of n distributions \(D=\{{{\mathcal {D}}_{i}}\}_{i=1}^{n}\), the following holds for any hyper-posterior \(\mathcal {Q}\):

where \({{X}^{*}}(D)\) denotes the fractional chromatic number of the dependence graph of D.

Proof

Notice that

Recalling Proposition 1, we set \(K=n\), \(f=P\), \(\pi =\mathcal {P}\), \(\rho =\mathcal {Q}\), \({{X}_{k}}=({{\mathcal {D}}_{i}},{{S}_{i}})\), \({{g}_{k}}(f,{{X}_{k}})={{E}_{h\sim Q({{S}_{i}},P)}}{{E}_{z\sim {{\mathcal {D}}_{i}}}}l(h,z)\in [a,b]\). Thus, we have

where \(W={{X}^{*}}(D)\). Thus, the proof of Proposition 2 is completed.

Step 2: Bounding \(\mathcal {C}(\hat{L}(\mathcal {Q},{{\mathcal {D}}_{1}},\cdots ,{{\mathcal {D}}_{n}}),\hat{L}(\mathcal {Q},{{S}_{1}},\cdots ,{{S}_{n}}))\) for Meta-RL, we have:

Proposition 3

For any hyper-prior \(\mathcal {P}\), any \(\delta \in (0,1)\), with a probability of at least \(1-\delta \) over the drawing of the training sample \(S=\{{{S}_{i}}\}_{i=1}^{n}\), the following holds for any hyper-posterior \(\mathcal {Q}\):

where \({{X}^{*}}(S)\) denotes the fractional chromatic number of the dependence graph of \(S=\{{{S}_{i}}\}_{i=1}^{n}=\{{{z}_{ij}}\}_{i=1,j=1}^{n,m}\).

Proof

Notice that

Recalling Proposition 1, we set \(f=(P,{{h}_{1}},\cdots ,{{h}_{n}})\), \(\pi =\mathcal {P}\times {{P}^{n}}\), \(\rho =\mathcal {Q}\times \prod \limits _{i=1}^{n}{{{Q}_{i}}}\), where \({{Q}_{i}}=Q({{S}_{i}},P)\), \({{X}_{k}}={{z}_{ij}}\), and \({{g}_{k}}(f,{{X}_{k}})=l({{h}_{i}},{{z}_{ij}})\). With probability at least \(1-\delta \), we have:

where \(W={{X}^{*}}(S)\). Further, note that

which completes the proof of Proposition 3.

Finally, the proof of Theorem 1 is provided.

Proof

We complete the proof by applying the union bound to the two high-probability inequalities in Propositions 2 and 3, which lower-bounds the probability that both events hold simultaneously.
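The union bound step can be spelled out as follows. This is a sketch under one assumption that the text does not state explicitly: each proposition is invoked at confidence level \(\delta /2\), so that the combined statement holds with probability at least \(1-\delta \). Here \(A\) and \(B\) denote the events that the inequalities of Propositions 2 and 3 hold, respectively.

```latex
\[
\Pr(A \cap B)
= 1 - \Pr(A^{c} \cup B^{c})
\;\ge\; 1 - \Pr(A^{c}) - \Pr(B^{c})
\;\ge\; 1 - \tfrac{\delta}{2} - \tfrac{\delta}{2}
= 1 - \delta .
\]
% With \mathcal{C}(q,p) = q - p, the two steps telescope on the event A \cap B:
\[
L(\mathcal{Q},\Im) - \hat{L}(\mathcal{Q},S_{1},\cdots,S_{n})
=
\bigl[L(\mathcal{Q},\Im) - \hat{L}(\mathcal{Q},\mathcal{D}_{1},\cdots,\mathcal{D}_{n})\bigr]
+
\bigl[\hat{L}(\mathcal{Q},\mathcal{D}_{1},\cdots,\mathcal{D}_{n}) - \hat{L}(\mathcal{Q},S_{1},\cdots,S_{n})\bigr].
\]
```

On \(A\cap B\), the first bracket is controlled by Proposition 2 and the second by Proposition 3, so the total gap is at most the sum of the two right-hand sides.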

Appendix B: Pseudo code

Appendix C: Experiment details

C.1 Overview of the meta environments

- Ant-Fwd-Back: Train an ant simulator with 8 articulated joints to move forward or backward, with feedback rewards related to the walking direction and loss of control. In our experiments, the two tasks correspond to the forward and backward directions, respectively.

- Half-Cheetah-Fwd-Back: Train a cheetah simulator to move forward or backward, with feedback rewards related to the direction of movement and loss of control. In our experiments, the two tasks correspond to the forward and backward directions, respectively.

- Half-Cheetah-Vel: Train a cheetah simulator to achieve a desired velocity while moving forward, with feedback rewards related to the target velocity and control loss. In our experiments, a fixed seed of 1337 is set, and 15 velocities are sampled uniformly from the interval [0, 3] as our meta-tasks, of which 10 are used as meta-training tasks and the remaining 5 as meta-testing tasks.

- Point-Robot-Wind: A variant of the 2D navigation problem Sparse-Point-Robot, in which the target positions of all tasks are fixed but a distinct “wind” is sampled uniformly from \([-0.05, 0.05]^2\) for each task. Every step the agent takes is affected by the “wind”, and the feedback reward is related to the Euclidean distance between the current position and the target position. In our experiments, a fixed seed of 1337 is set, and 15 tasks are randomly sampled, of which 10 are used as meta-training tasks and the remaining 5 as meta-testing tasks.

In the first three MuJoCo domains, every trajectory consists of 200 time steps, while in Point-Robot-Wind, each trajectory consists of 20 time steps.
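The task-sampling procedure described for Half-Cheetah-Vel and Point-Robot-Wind can be sketched as below. The seed (1337), the number of tasks (15), the 10/5 split, and the sampling ranges come from the descriptions above; the use of Python's `random` module, the function names, and the choice of the first 10 samples as training tasks are assumptions, so this sketch will not reproduce the exact task values used in the experiments.

```python
import random


def sample_velocity_tasks(seed=1337, num_tasks=15, num_train=10, low=0.0, high=3.0):
    """Sample target velocities uniformly from [low, high] and split train/test."""
    rng = random.Random(seed)  # local generator, so the global RNG state is untouched
    velocities = [rng.uniform(low, high) for _ in range(num_tasks)]
    # Assumption: the first num_train samples are the meta-training tasks.
    return velocities[:num_train], velocities[num_train:]


def sample_wind_tasks(seed=1337, num_tasks=15, num_train=10, bound=0.05):
    """Sample a 2D wind vector uniformly from [-bound, bound]^2 for each task."""
    rng = random.Random(seed)
    winds = [
        (rng.uniform(-bound, bound), rng.uniform(-bound, bound))
        for _ in range(num_tasks)
    ]
    return winds[:num_train], winds[num_train:]


train_velocities, test_velocities = sample_velocity_tasks()
train_winds, test_winds = sample_wind_tasks()
```

Fixing the seed makes the task set deterministic across runs, which keeps the meta-training and meta-testing tasks disjoint and repeatable.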

C.2 System configuration

- System version: Ubuntu 18.04.6;

- Processor: Intel64 Family 6 Model 165 Stepping 5 GenuineIntel, 2904 MHz;

- Graphics card: NVIDIA GeForce GTX 1660 SUPER;

- Memory: 16384 MB RAM.

C.3 Hyperparameter settings and offline data collection

Table 4 lists the hyperparameters used to reproduce the experimental results on the three continuous-control tasks and the one 2D navigation task (Fig. 3).

Table 5 shows the offline data information used in the four comparative experiments. For each task, we used a pre-trained expert policy to collect samples, which fixes the sample distribution so that the training samples for each task satisfy the i.i.d. assumption.

C.4 Robotic manipulation experiment details

MetaWorld [62] provides a diverse set of challenging robotic manipulation tasks. To validate the performance of our method in a more complex task environment and on a wider range of task distributions, we also adopt MetaWorld’s robotic manipulation tasks as simulation benchmarks.

C.4.1 Overview of robotic manipulation tasks

We use three manipulator simulation environments from MetaWorld: Reach, Door-Close, and Drawer-Open.

- Reach: Train the manipulator simulator to reach a target position, with feedback rewards based on the current position of the manipulator’s front end and the target position. In our experiment, the target position is randomly sampled from the range low=(-0.1, 0.8, 0.05), high=(0.1, 0.9, 0.3).

- Door-Close: Train the manipulator to close a door by rotating the joint, with feedback rewards based on the door’s current position and target position. In our experiment, we set a fixed seed of 1234 and randomly sample door positions as our meta-learning tasks from the range low=(0.2, 0.65, 0.1499), high=(0.3, 0.75, 0.1501).

- Drawer-Open: Train the manipulator to open a drawer, with feedback rewards based on the drawer’s current position and target position. In our experiment, we set a fixed seed of 1234 and randomly sample drawer positions as our meta-learning tasks from the range low=(-0.5, 0.40, 0.05), high=(0.5, 1, 0.5).
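The target sampling described for the three MetaWorld tasks amounts to drawing a 3D position from an axis-aligned box. A minimal sketch is given below. The low/high ranges and the seed 1234 (stated for Door-Close and Drawer-Open) come from the descriptions above; the uniform distribution, the reuse of the same seed for Reach, the number of tasks, the use of Python's `random` module, and all names are assumptions made for illustration, and this is not MetaWorld's own API.

```python
import random

# (low, high) corners of the sampling box for each task, as listed above.
TARGET_RANGES = {
    "Reach": ((-0.1, 0.8, 0.05), (0.1, 0.9, 0.3)),
    "Door-Close": ((0.2, 0.65, 0.1499), (0.3, 0.75, 0.1501)),
    "Drawer-Open": ((-0.5, 0.40, 0.05), (0.5, 1.0, 0.5)),
}


def sample_targets(task_name, num_tasks, seed=1234):
    """Sample num_tasks 3D positions uniformly from the task's box (assumed uniform)."""
    low, high = TARGET_RANGES[task_name]
    rng = random.Random(seed)  # seed for Reach is an assumption; the text gives none
    return [
        tuple(rng.uniform(lo, hi) for lo, hi in zip(low, high))
        for _ in range(num_tasks)
    ]


# Hypothetical task count; the number of sampled positions is not stated above.
door_targets = sample_targets("Door-Close", num_tasks=5)
drawer_targets = sample_targets("Drawer-Open", num_tasks=5)
```

Note that the Door-Close box is only 0.0002 wide along its third coordinate, so the sampled door positions vary essentially in the first two coordinates.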

C.4.2 Hyperparameter settings

Table 7 lists the hyperparameters used to reproduce the results of the MetaWorld robotic manipulation experiments.

C.4.3 Offline data collection

Table 8 details how the offline data were generated for the three MetaWorld robotic manipulation environments.

About this article

Cite this article

Sun, Z., Jing, C., Guo, S. et al. PAC-Bayesian offline Meta-reinforcement learning. Appl Intell 53, 27128–27147 (2023). https://doi.org/10.1007/s10489-023-04911-y

DOI: https://doi.org/10.1007/s10489-023-04911-y